Abstract

The design of optimum filters is a fundamental task in signal processing applications. It entails calculating the filter coefficients that yield a flat passband response and the highest possible attenuation in the stopband. The objective of this work is to solve the FIR filter design problem and to compare the optimal solutions obtained from evolutionary algorithms. Optimal FIR low pass (LP), high pass (HP), and band stop (BS) filters are designed using nature-inspired optimization approaches, namely gray wolf optimization, cuckoo search, particle swarm optimization, and the genetic algorithm. The filters are evaluated in terms of their stopband attenuation, passband ripple, and deviation from the desired response. In addition, this study compares the optimization strategies in terms of algorithm execution time and their ability to reach a globally optimal design of digital finite impulse response (FIR) filters. The results indicate that the gray wolf algorithm outperforms the other approaches in FIR filter design, as supported by enhanced design precision, decreased execution time, and achievement of an optimal solution.

Introduction

Filtering is among the most intricate procedures in signal processing. In digital signal processing, filters modify the spectrum of the input signal so that the output signal has the desired spectral properties. Digital filters are widely used because they can provide linear phase characteristics, operate accurately, remain stable at high temperatures, can be reconfigured on a programmable processor, support multiplexing and data logging, and are reusable. They can work with both real-time and stored data and can be built in both hardware and software1. There are two types of digital filters: finite impulse response (FIR) filters and infinite impulse response (IIR) filters. When phase distortion must be avoided, FIR filters are the best choice. FIR filters are also inherently stable and less affected by finite word length effects. On the other hand, IIR filters have fewer coefficients, use less memory, and are used when a sharp cut-off is needed2. Unlike the design of IIR filters, the design of FIR filters is not related to the design of analogue filters. It focuses on directly approximating the given magnitude response, usually with the additional requirement that the phase response be linear. The transfer function H(z) of a causal FIR filter of length \(N+1\) is a polynomial of degree N in \(z^{-1}\), the backward shift operator, as shown in Eq. (1): \(H(z)=\sum _{n=0}^{N}h(n)z^{-n}\).

A sequence x(n) of finite duration and length N+1 is fully described by N+1 samples of its Fourier transform \(X(e^{j\omega })\). Therefore, an FIR filter of length N+1 can be designed by determining N+1 samples of either its frequency response or its impulse response h(n). For a linear phase design, the impulse response must be symmetric or anti-symmetric about its midpoint, that is, \(h(n)=h(N-n)\) or \(h(n)=-h(N-n)\) for \(0\le n\le N\).

Researchers have devised and implemented heuristic evolutionary optimization algorithms3 based on the principles of natural selection and evolution. Genetic algorithm optimization (GAO), introduced in 1975, is a class of general-purpose probabilistic search algorithms. It draws inspiration from natural genetic populations and aims to evolve solutions to optimization and search problems. Substantial effort has been dedicated to the construction of optimum filters using GAO and its variants4. Particle swarm optimization (PSO) can handle functions that are non-differentiable and have multiple objectives5,6, and it searches for global rather than local solutions. Differential evolution (DE), developed in 1996, is another stochastic, population-based optimization technique7,8. Methods for designing FIR filters with the DE algorithm are detailed in ref. 9. A further bio-inspired algorithm, introduced in 2007, gains versatility by incorporating the intelligent foraging behavior of a honey bee swarm in its search for food10,11. Cuckoo search optimization (CSO), created in 2009, is one of the more recent nature-inspired metaheuristic techniques12,13. Recent research suggests that, among several nature-inspired algorithms, CSO is the most efficient optimization tool for solving structural engineering problems14,15.

Mirjalili et al.16 introduced GWO, a novel metaheuristic algorithm that emulates the hunting mechanism and social hierarchy of grey wolves in nature. The algorithm consists of three primary stages: encircling, pursuing, and attacking the prey. In the mathematical model of the leadership hierarchy, the best solution is designated alpha, and the second and third best solutions are designated beta and delta, respectively. The remaining candidate solutions are assumed to be omega17.

In this article, GWO is examined comprehensively and used to design FIR LP, HP, and BS filters. With a comparable design methodology, these designs can be adapted to produce additional FIR filters such as band pass filters. Designing an optimal filter entails determining the filter coefficients that yield a minimal passband ripple and a large stopband attenuation; here these coefficients are computed using GWO17. The resulting designs are compared with those of alternative bio-inspired optimization techniques, namely PSO, CSO, and GAO.

The remainder of this paper is structured as follows. Section 2 presents the mathematical formulation of the FIR filter design problem together with the cost function estimation. Section 3 covers the algorithms used in the design of the FIR filter and their problem-specific implementation. Section 4 describes the simulation results and their analysis, including a detailed assessment of the effectiveness of the FIR filters and of the improvement achieved by GWO compared with the other algorithms. Section 5 provides the concluding remarks.

Classification of finite discrete length sequences

Derived from the principles of geometric symmetry

Geometric symmetry is a significant factor in digital signal processing applications that operate on finite discrete sequences. Two types of geometric symmetry are used: (i) N-point symmetric and (ii) N-point anti-symmetric18,19. A symmetric response of length N must satisfy the following condition: \(h(n)=h(N-1-n)\), \(0\le n\le N-1\).

An anti-symmetric response of length N must satisfy the following condition: \(h(n)=-h(N-1-n)\), \(0\le n\le N-1\),

where N can be even or odd. Combining the two symmetry conditions with the parity of N gives four types of geometric symmetry. Figure 1 shows the four types together with their centers of symmetry.

As Fig. 1 shows, for type 1 and type 3 sequences the point of symmetry coincides with one of the samples, which is why this is called “whole-sample symmetry.” For type 2 and type 4 sequences, the point of symmetry lies midway between the two center samples, which is why this is called “half-sample symmetry.” Thus, sequences of types 1, 2, 3, and 4 are called whole-sample symmetric, half-sample symmetric, whole-sample anti-symmetric, and half-sample anti-symmetric, respectively20.
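The four-way classification above can be checked numerically. The following Python sketch is illustrative only: the function names `linear_phase_type` and `compensated_response` are hypothetical and do not come from the paper, and N is taken to be the sequence length. It classifies a sequence by symmetry and parity, then removes the linear phase term \(e^{-j\omega (N-1)/2}\) from the Fourier transform. Under these assumptions the remainder should be purely real for symmetric sequences and purely imaginary for anti-symmetric ones.

```python
import cmath


def linear_phase_type(h, tol=1e-12):
    """Return 1, 2, 3 or 4 for a linear-phase sequence h, or None otherwise."""
    n = len(h)
    symmetric = all(abs(h[k] - h[n - 1 - k]) <= tol for k in range(n))
    antisymmetric = all(abs(h[k] + h[n - 1 - k]) <= tol for k in range(n))
    if symmetric == antisymmetric:
        # Neither condition holds, or both do (all-zero or empty sequence).
        return None
    odd_length = n % 2 == 1
    if symmetric:
        return 1 if odd_length else 2
    return 3 if odd_length else 4


def compensated_response(h, w):
    """Fourier transform of h at frequency w with the linear phase removed."""
    centre = (len(h) - 1) / 2  # point of symmetry, may fall between samples
    return sum(c * cmath.exp(-1j * w * (k - centre)) for k, c in enumerate(h))


if __name__ == "__main__":
    examples = ([1, 2, 3, 2, 1], [1, 2, 2, 1], [1, 2, 0, -2, -1], [1, 2, -2, -1])
    for h in examples:
        value = compensated_response(h, 0.7)
        print(linear_phase_type(h), round(value.real, 6), round(value.imag, 6))
```

Centering the exponent on the point of symmetry is what makes the whole-sample and half-sample cases fall out of the same formula.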

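Type 1: symmetric sequence with N=odd

For a type 1 linear phase sequence of odd length N, the corresponding Fourier transform is given in Eq. (4): \(H(e^{j\omega })=e^{-j\omega (N-1)/2}\left[ h\left( \frac{N-1}{2}\right) +2\sum _{n=1}^{(N-1)/2}h\left( \frac{N-1}{2}-n\right) \cos (\omega n)\right]\).

Type 2: symmetric sequence with N=even

For a type 2 linear phase sequence of even length N, the corresponding Fourier transform is given in Eq. (5): \(H(e^{j\omega })=e^{-j\omega (N-1)/2}\left[ 2\sum _{n=1}^{N/2}h\left( \frac{N}{2}-n\right) \cos \left( \omega \left( n-\frac{1}{2}\right) \right) \right]\).

Type 3: anti-symmetric sequence with N=odd

For a type 3 linear phase sequence of odd length N, the corresponding Fourier transform is given in Eq. (6): \(H(e^{j\omega })=je^{-j\omega (N-1)/2}\left[ 2\sum _{n=1}^{(N-1)/2}h\left( \frac{N-1}{2}-n\right) \sin (\omega n)\right]\).

Type 4: anti-symmetric sequence with N=even

For a type 4 linear phase sequence of even length N, the corresponding Fourier transform is given in Eq. (7): \(H(e^{j\omega })=je^{-j\omega (N-1)/2}\left[ 2\sum _{n=1}^{N/2}h\left( \frac{N}{2}-n\right) \sin \left( \omega \left( n-\frac{1}{2}\right) \right) \right]\).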

Consequently, the FIR filter design error function possesses L+2 or L+3 extrema, where L denotes the upper summation limit set by the symmetry type. Filters whose error function has more than L+2 alternations, or ripples, are usually called extra-ripple (side lobe) filters. The ripples can be reduced by raising the filter order, but a higher order makes the structure slower and more difficult to implement. We therefore propose reducing the ripples and improving the performance of the FIR filter design using metaheuristic optimization21,22.

Cost function estimation for high pass and band stop FIR filter

In this paper, the cost function of ref. 23 is modified for the high pass filter as follows24:

where \(\alpha =\frac{N-1}{2}\), and \(E_p\) and \(E_s\) are the passband and stopband errors of the low pass FIR filter, which can be formulated as:

where b is the symmetric sequence. The stopband error has two coefficients, P and Q, which are calculated by:

For the passband error, C is calculated by:

For the band stop filter, the FIR filter cost function can be defined as:

where \(E_{s1}\) and \(E_{s2}\) are defined as discussed previously, with only the limits changed. The goal of this work is to reduce the side lobes and narrow the transition band25.

Grey wolf optimization

Grey wolf optimization (GWO) is a metaheuristic algorithm first proposed in 2014 by Mirjalili et al.16. It locates the optimal solution by imitating the social hierarchy and foraging strategies of grey wolves17. Grey wolves are loyal to pack members, cooperate within the pack, and follow a dominant pack leader. The leading wolves guide the decision-making of the pack toward the optimal solution. The GWO algorithm mimics the leadership and hunting mechanism of grey wolves, as shown in Algorithm 1.

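The mathematical model of the GWO algorithm comprises the following steps: (i) model the social hierarchy and hunting strategy; (ii) take the fittest solution as the alpha \((\alpha )\) wolf; (iii) take the second and third best solutions as the beta \((\beta )\) and delta \((\delta )\) wolves, respectively; (iv) treat the remaining solutions as omega \((\omega )\) wolves, which follow these three16. Grey wolves encircle the prey during hunting, and this encircling behavior is modeled in Eqs. (15) and (16): \(\left\{ D\right\} =\left| \left\{ C\right\} \cdot \left\{ X_p\right\} (t)-\left\{ X\right\} (t)\right|\) and \(\left\{ X\right\} (t+1)=\left\{ X_p\right\} (t)-\left\{ A\right\} \cdot \left\{ D\right\}\),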

where t is the iteration, \(\left\{ X_p\right\}\) is the location of the prey, \(\left\{ X\right\}\) is the position of the wolf, and \(\left\{ A\right\}\) and \(\left\{ C\right\}\) are coefficient vectors given by \(A=2 \times a \times \left\{ r_1\right\} -a\) and \(C=2 \times \left\{ r_2\right\}\), respectively. Here \(\left\{ r_1\right\}\) and \(\left\{ r_2\right\}\) are random vectors with components in the range 0 to 1, and the component a decreases linearly from 2 to 0 over the iterations, enabling the wolf to reach any location between the two points14.

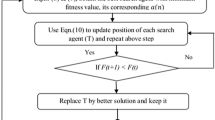

Finally, the updated position of the grey wolves is given by Eq. (17), and the flow chart of GWO is shown in Fig. 2.
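The encircling and position-update model described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `gwo_minimize` and its parameters are hypothetical, the new position is taken as the average of the three positions guided by the alpha, beta, and delta wolves (the usual GWO formulation, assumed here because the paper's position equation is not reproduced in this text), and positions are clipped to the search bounds.

```python
import random


def gwo_minimize(cost, dim, lower, upper, n_wolves=20, n_iter=200, seed=0):
    """Minimise cost over [lower, upper]^dim with a basic grey wolf optimizer."""
    if n_wolves < 3:
        raise ValueError("at least three wolves are needed (alpha, beta, delta)")
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_wolves)]

    def rank(leaders):
        # Keep the three best (cost, position) pairs seen so far.
        scored = leaders + [(cost(w), list(w)) for w in wolves]
        return sorted(scored, key=lambda pair: pair[0])[:3]

    leaders = rank([])
    for t in range(n_iter):
        a = 2.0 * (1.0 - t / n_iter)  # decreases linearly from 2 towards 0
        for wolf in wolves:
            for j in range(dim):
                guided = 0.0
                for _, leader in leaders:
                    A = 2.0 * a * rng.random() - a    # A = 2*a*r1 - a
                    C = 2.0 * rng.random()            # C = 2*r2
                    D = abs(C * leader[j] - wolf[j])  # distance to the leader
                    guided += leader[j] - A * D
                # Average the three leader-guided positions, then clip to bounds.
                wolf[j] = min(upper, max(lower, guided / 3.0))
        leaders = rank(leaders)
    best_cost, best_position = leaders[0]
    return best_position, best_cost


if __name__ == "__main__":
    def sphere(x):
        return sum(v * v for v in x)

    position, value = gwo_minimize(sphere, dim=3, lower=-1.0, upper=1.0)
    print(position, value)
```

Keeping the three leaders across iterations means the best solution found so far is never lost when the pack moves.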

Proposed methodology

This section summarizes the procedure followed to address the filter design problem using gray wolf optimization (GWO). In designing the FIR LP, HP, and BS filters, the error functions (fitness functions) specified in Eqs. (6) and (7) are used to evaluate each candidate solution. Designing an Nth-order FIR filter requires N+1 filter coefficients, and imposing the symmetry condition on the coefficients to be optimized ensures a linear phase. With n ranging from 0 to N, \(h(n)=h(N-n)\) for symmetric coefficients and \(h(n)=-h(N-n)\) for anti-symmetric coefficients. The resulting filter therefore has a linear phase response2.

Filter design using GWO

The procedure for applying the Grey Wolf Optimization (GWO) algorithm to the design of finite impulse response (FIR) filters is outlined below. The cost functions in Eqs. (8) and (14) serve as objective functions and are evaluated at each iteration for the candidate solutions. The adaptive values of a and A govern the balance between exploration and exploitation in the gray wolf population. They are adjusted dynamically, which gives a smooth transition between the two phases: as A decreases, the first half of the iterations is dedicated to exploration and the remaining half to exploitation. The proposed flow is described in Fig. 3. GWO performance can be tuned through only two parameters, a and C. The following steps are followed to find the optimal FIR filter coefficients.

Step 1: Initialize a gray wolf population of 100 with a = [0, 1], c = [0, 2], and A = [0, 1]; the limits of the filter coefficients are fixed at −1 and +1 for the LPF and BSF. The number of iterations is set to 4000.

Step 2: Generate an initial population randomly, where each individual represents a set of filter coefficients x=[x0, x1, x2…xN]. The vector x denotes the N+1 filter coefficients to be optimized.

Step 3: Calculate the fitness of the initial, randomly created wolves using the objective function in Eqs. (8) and (14) at iteration i.

Step 4: Produce a new solution using the hunting position model in Eqs. (9) and (10).

Step 5: Remove the wolves with the lowest fitness values based on the probability and create new wolves.

Step 6: Calculate the fitness of all the new wolves and update the best wolves (best filter coefficients).

Step 7: Repeat steps 2 to 6 until the last iteration of the cost function; the best wolves then give the optimal filter coefficients, denoted x.
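Steps 2 and 3 above map each wolf to a filter and score it. The sketch below shows one way this could look in Python; it is not the paper's cost function. The helper names are hypothetical, the cost is an assumed stand-in (maximum passband deviation plus maximum stopband magnitude on a frequency grid), and the band edges \(0.28\pi\) and \(0.40\pi\) around an assumed cut-off of \(0.34\pi\) are chosen only for illustration. Only half of the coefficients are optimized; the other half follows from the symmetry condition.

```python
import cmath
import math


def expand_symmetric(half, length):
    """Mirror the first ceil(length / 2) coefficients into a symmetric filter."""
    if len(half) != (length + 1) // 2:
        raise ValueError("half must hold ceil(length / 2) coefficients")
    tail = list(half[: length // 2])
    return list(half) + tail[::-1]


def magnitude(h, w):
    """Magnitude of the FIR frequency response at angular frequency w."""
    return abs(sum(c * cmath.exp(-1j * w * n) for n, c in enumerate(h)))


def lowpass_cost(half, length, w_pass, w_stop, grid=128):
    """Assumed cost: worst passband deviation plus worst stopband magnitude."""
    h = expand_symmetric(half, length)
    freqs = [math.pi * k / (grid - 1) for k in range(grid)]
    e_pass = max(abs(magnitude(h, w) - 1.0) for w in freqs if w <= w_pass)
    e_stop = max(magnitude(h, w) for w in freqs if w >= w_stop)
    return e_pass + e_stop


if __name__ == "__main__":
    length, centre, wc = 21, 10, 0.34 * math.pi
    # Truncated ideal low pass response, used here only as a reference candidate.
    half = [wc / math.pi if n == centre
            else math.sin(wc * (n - centre)) / (math.pi * (n - centre))
            for n in range(centre + 1)]
    print(lowpass_cost(half, length, 0.28 * math.pi, 0.40 * math.pi))
```

Searching over the half-length vector halves the dimension of the problem and guarantees a linear phase for every candidate, whatever the optimizer proposes.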

Configuring algorithm control parameters is a formidable challenge. It is itself an optimization problem, and an algorithm’s efficacy can depend strongly on the specific problem being addressed. The literature offers no specific, clearly defined procedure for optimizing the parameters; researchers instead run comprehensive simulations over several sets of parameter values within a recommended range. This paper uses a similar methodology13: numerous simulations were conducted with minor adjustments to the values of the control parameters, while adhering to the ranges specified in the literature18, and the parameters for each algorithm were selected after this analysis and evaluation. The parameter settings used in the filter design are outlined in Table 1.

In the genetic algorithm (GAO), selection is carried out with a tournament operator of size 6. This provides selection pressure and so helps identify the fittest individual. The recommended crossover probability lies in the range 0.6 to 0.95. Here it is set to 0.8, because the algorithm consistently needed a large number of iterations to converge when the crossover probability was below this value, whereas a higher crossover rate resulted in premature convergence. The mutation probability varies between 0.001 and 0.05. Low values disturb the solution less, whereas a high mutation rate provides more diversity. In this study, a fixed mutation rate of 0.01 is employed to strengthen exploration.

To achieve efficient exploration, the PSO learning parameters are typically set to 2, and the particle velocity is kept within the interval [0, 1].

The higher success rate of CSO can be attributed to its reliance on a limited number of parameters. The study reveals that no fine-tuning is necessary and that the CSO method is not affected by changes in the parameter pa or in the dimensions14. When pa is equal to 0.25, one-fourth of the time is allocated to exploitation, while the remaining three-fourths is dedicated to exploring the search space in pursuit of a global solution. This makes it more likely that the global optimum is reached.

For GWO, the mutation rate is fixed at 2 based on the literature13, with a discovery rate of 0.5. In terms of learning parameters, A lies in [0, 2] and C is fixed at 1. In all cases, the limits of the filter coefficients are fixed at −1 and +1 for the LPF and BSF.
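The size-6 tournament selection described for GAO can be sketched as follows. This is an illustrative Python fragment under stated assumptions: the function name `tournament_select` is hypothetical, contestants are drawn without replacement, and a lower cost is treated as fitter, in line with the minimization setting of this paper.

```python
import random


def tournament_select(population, cost, size=6, rng=random):
    """Pick `size` distinct individuals at random and return the fittest one."""
    contestants = rng.sample(population, size)
    return min(contestants, key=cost)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical population of 30 one-coefficient individuals in [-1, 1].
    population = [[rng.uniform(-1.0, 1.0)] for _ in range(30)]
    winner = tournament_select(population, cost=lambda x: abs(x[0]), rng=rng)
    print(winner)
```

A larger tournament raises the selection pressure, because the winner is the best of more contestants; with `size` equal to the population size the overall best individual always wins.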

Experimental Results

The performance of the FIR filters designed with the various evolutionary algorithms (EA) is evaluated by analyzing the filter response, the efficiency, and the execution time of the algorithms. The simulations were performed in MATLAB R2023a on a computer with an Intel Core i3 CPU running at 2.3 GHz and 8 GB of RAM. The results presented in this study were derived from approximately 30 simulation trials in which the parameters were randomly varied. To assess the filters designed with Grey Wolf Optimization (GWO) and the other metaheuristic techniques, the standard Parks-McClellan (PM) technique, which is commonly used for designing equiripple filters, is also adopted for comparison. The solution that attains the lowest fitness value is recorded as the optimal solution. Table 1 presents the governing parameters for the four algorithms, namely CSO, PSO, GAO, and GWO13.

Low pass FIR filter

The FIR design specifications are as follows: the filter order is N = 18 and 19, and the cut-off frequency is \(\omega _c = 0.34\). The cost function for the algorithms employed in this work is given by Eqs. (1) to (4). The optimal filter coefficients obtained for the 20th and 21st order FIR low pass filters using Particle Swarm Optimization (PSO), Cuckoo Search Optimization (CSO), Genetic Algorithm Optimization (GAO), and Grey Wolf Optimization (GWO) are listed in Tables 2 and 3, respectively. Table 4 shows the qualitative analysis for the 21st order low pass FIR filter in comparison with six different metaheuristic optimization algorithms.

Figures 4 and 5 give a graphical comparison of the magnitude responses of the designed FIR low pass filters for the 20th and 21st order, respectively. The plots clearly show that GWO has the greatest capacity to attenuate the signal in the stopband region. The filters are characterized principally by their minimum stopband attenuation (Astop) and maximum passband attenuation (Apass). These measures are given in Table 4 for the 20th order and Table 5 for the 21st order FIR low pass filters. Table 4 shows that GWO produces the lowest stopband attenuation of −36.59 dB, compared with PSO (−16.06 dB), GAO (−14.40 dB), and CSO (−12.20 dB). Moreover, the maximum passband attenuation of GWO is 0.03 dB, the smallest among all the methods; the same holds for Table 5. In terms of execution time, GWO computes the filter coefficients in much less time than the other methods. This is because the GWO algorithm has better computing performance and its wolves work together as a pack on the symmetric coefficients, so it converges quickly.

The stopband attenuation coefficients of the designed FIR low pass filters are presented in Tables 6 and 7. As seen in Table 6, the average value for the GWO-based FIR low pass filter is significantly low, which indicates consistent performance in the stopband region. The same observation applies to Table 7. The magnitude response of the proposed filter is depicted in Figs. 4 and 5. In these figures, GWO shows better performance with very little discontinuity in the FIR filter response, whereas PSO, GAO, and CSO show moderate discontinuity, and almost all the optimizers have the same magnitude of overshoot. The maximum normalized passband ripple of the GWO FIR low pass filter is 0.0129 for the 21st order filter and 0.0769 for the 20th order filter, which corresponds to an overshoot of 0.0041% above the ideal response.
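Measures such as Astop and Apass can be read off a coefficient vector by sampling its magnitude response. The Python sketch below is illustrative and makes its own assumptions: the function names are hypothetical, the stopband figure is taken as the largest level (in dB) found in the stopband, and the passband figure as the largest deviation from 0 dB in the passband. The paper's tables may use a different definition or frequency grid.

```python
import cmath
import math


def magnitude_db(h, w, floor=1e-12):
    """Magnitude of the FIR frequency response at w in dB, floored to avoid log(0)."""
    gain = abs(sum(c * cmath.exp(-1j * w * n) for n, c in enumerate(h)))
    return 20.0 * math.log10(max(gain, floor))


def band_grid(w_low, w_high, points=256):
    """Evenly spaced frequencies covering [w_low, w_high], end points included."""
    return [w_low + (w_high - w_low) * k / (points - 1) for k in range(points)]


def stopband_attenuation_db(h, w_low, w_high):
    """Worst-case (largest) stopband level in dB; more negative is better."""
    return max(magnitude_db(h, w) for w in band_grid(w_low, w_high))


def passband_ripple_db(h, w_low, w_high):
    """Largest deviation from 0 dB inside the passband."""
    return max(abs(magnitude_db(h, w)) for w in band_grid(w_low, w_high))


if __name__ == "__main__":
    # Two-tap averager, used only because its response cos(w / 2) is known exactly.
    h = [0.5, 0.5]
    print(stopband_attenuation_db(h, 0.75 * math.pi, math.pi))
    print(passband_ripple_db(h, 0.0, 0.25 * math.pi))
```

Reporting the largest stopband level rather than the average makes the figure a guarantee over the whole band, which is why a single poorly suppressed side lobe dominates it.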

High pass FIR filter

The design specifications are as follows: the filter order is N = 18 and 19, and the cut-off frequency is \(\omega _c = 0.34\). The cost function for the FIR high pass filter design algorithms used in this study is given by Eqs. (1) to (4). The function is evaluated at each iteration to find an optimal solution8.

Figures 6 and 7 clearly show that GWO has the greatest ability to attenuate the signal in the stopband region. The corresponding measures are given in Table 8 for the 20th order and Table 9 for the 21st order FIR high pass filters. Table 9 shows that GWO yields the minimum stopband attenuation of −31.80 dB, compared with PSO (−6.58 dB), GAO (−7.66 dB), and CSO (−5.40 dB). Moreover, the maximum passband attenuation of GWO is 0.07 dB, the smallest among all the methods; the same holds for Table 8. Tables 10 and 11 show the stopband attenuation coefficients of the designed FIR high pass filters. As indicated in Table 10, the mean value for the GWO-based FIR high pass filter is significantly low, which indicates consistent performance in the stopband region. The same observation applies to Tables 4 and 7. The magnitude response of the designed filter is depicted in Figs. 6 and 7. These figures show that GWO performs better, with little discontinuity in the FIR filter response, whereas PSO, GAO, and CSO show moderate levels of discontinuity. Additionally, all optimization methods exhibit similar levels of overshoot. The GWO FIR high pass filter has a maximum normalized passband ripple of 1.8343 for the 21st order filter and 0.04378 for the 20th order filter. This results in an overshoot of 0.0037% above the ideal response.

Band stop FIR filter

The band stop FIR filter design specifications are as follows: the filter order is 20 and 21, and the cut-off frequencies are fixed at \(\omega _{c1} =0.35\pi\) and \(\omega _{c2} =0.73\pi\). The objective (fitness) function of GWO is defined in Eqs. (13) and (14). The optimal filter coefficients are limited to a maximum of +1 and a minimum of −1.

Figures 8 and 9 give a graphical comparison of the magnitude responses of the designed FIR band stop filters for the 20th and 21st order, respectively. The corresponding measures are given in Table 12 for the 20th order and Table 13 for the 21st order FIR band stop filters. Table 13 shows that GWO produces the minimum stopband attenuation of −28.65 dB, compared with PSO (−20.37 dB), GAO (−15.05 dB), and CSO (−17.52 dB). Moreover, the maximum passband attenuation of GWO is 0.05 dB, the smallest among all the methods; the same holds for Table 12. Tables 14 and 15 show the stopband attenuation coefficients of the designed FIR band stop filters. As seen in Table 14, the mean value for the GWO-based FIR band stop filter is very low, which indicates consistent performance in the stopband region; the same holds for Table 15. The magnitude response of the designed filter is shown in Figs. 8 and 9. In these figures, GWO shows better performance with very little discontinuity in the FIR filter response, whereas PSO, GAO, and CSO show moderate discontinuity, and almost all the optimizers have the same magnitude of overshoot. The maximum normalized passband ripple of the GWO FIR band stop filter is 1.357 for the 21st order filter and 1.496 for the 20th order filter, which corresponds to an overshoot of 0.0046% above the ideal response.

This section provides a comprehensive analysis of the designed finite impulse response (FIR) filters, highlighting the improvements achieved in the low pass, high pass, and band stop designs using GWO compared with PSO, GAO, and CSO.

For the low pass filter, the GWO design shows a higher percentage improvement in filter characteristics than the other designs, as illustrated in Figs. 10 and 11. The GWO-based low pass filter coefficients improve the minimum stopband attenuation by 39.18%, 88.13%, and 5.93% compared with the filters based on PSO, GAO, and CSO, respectively. The maximum ripple improves by 51.49%, 11.07%, and 5.09% compared with the PSO, GAO, and CSO designs, respectively. The execution time of the low pass filter design process also improves significantly, as depicted in Figs. 10 and 11: the GWO algorithm converges rapidly and terminates once it identifies an optimal set of coefficients.

For the high pass filter, the GWO design again shows a higher percentage improvement than the other designs, as illustrated in Figs. 12 and 13. The GWO-based high pass filter coefficients outperform those based on PSO, GAO, and CSO by 18.8%, 83.83%, and 11.80%, respectively, in terms of the minimum stopband attenuation. The maximum ripple improves by 23.23%, 16.45%, and 9.7% compared with the PSO, GAO, and CSO designs, respectively. The execution time of the high pass filter design process also improves significantly, as depicted in Fig. 12, because the GWO algorithm converges rapidly and terminates when it finds the optimal coefficients.

For the band stop filter, the GWO design shows a higher percentage improvement than the other designs, as illustrated in Fig. 14. The GWO-based band stop filter coefficients improve the minimum stopband attenuation by 28.88%, 81.32%, and 11.41% compared with the filters based on PSO, GAO, and CSO, respectively. The maximum ripple improves by 38.83%, 4.88%, and 42.88% compared with the PSO, GAO, and CSO designs, respectively. The execution time of the band stop filter design process also improves significantly, as depicted in Fig. 15, because the GWO algorithm converges more rapidly to an optimal set of coefficients.

The results demonstrate a substantial enhancement in the design of HP and BS filters through the use of gray wolf optimization. These filters exhibit a high level of accuracy and can be implemented effectively in many applications. The comparative analysis reveals that the proposed filter, designed using the grey wolf optimizer (GWO), performs better than the genetic algorithm optimizer (GAO)7, the particle swarm optimizer (PSO)11,23,26, and the cuckoo search optimizer (CSO), moth flame optimization (MFO), whale optimization (WAO), and binary bat optimization (BBA)13,24.

Conclusion

This study evaluates the performance of finite impulse response (FIR) filters, namely lowpass, highpass, and bandstop filters, designed with several metaheuristic algorithms: PSO, GAO, CSO, and GWO. The goal of the design is to determine the filter coefficients that minimize the absolute relative error between the filter’s response and the desired output. The solution obtained is an effective design that achieves a high level of attenuation in the stopband and a uniform response in the passband of a digital filter. The design of lowpass, highpass, and bandstop filters can also be carried out by applying transformations to the provided design methodology. A comparative analysis against three other optimization algorithms, on a standardized platform and with comparable specifications, showed that grey wolf optimization (GWO) yielded the best solution. The GWO-based filter had the lowest design error and the best magnitude response, with strong attenuation in the stopband, minimal ripples in the passband and stopband, and a nearly identical transition width. It also had the shortest execution time. Moreover, GWO offers greater flexibility when building the FIR filter, since there is no need for meticulous adjustment of parameters. It can therefore be inferred that GWO is the best of the heuristic algorithms considered within the scope of this research. Our current work focuses on system identification using the GWO algorithm, with the objective of reducing the filter length.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Antoniou, A. Digital Filters: Analysis, Design, and Signal Processing Applications (McGraw-Hill Education, 2018).

Mitra, S. K. Digital Signal Processing: A Computer-based Approach Vol. 1221 (McGraw-Hill New York, 2011).

De Jong, K. Evolutionary computation: A unified approach. In Proc. of the Genetic and Evolutionary Computation Conference Companion, 373–388 (2017).

Suckley, D. Genetic algorithm in the design of fir filters. IEE Proc. G (Circuits, Devices Syst.) 138, 234–238 (1991).

Yang, X.-S., Cui, Z., Xiao, R., Gandomi, A. H. & Karamanoglu, M. Swarm Intelligence and Bio-inspired Computation: Theory and Applications (Newnes, 2013).

Yang, X.-S. Nature-Inspired Optimization Algorithms (Academic Press, 2020).

Boudjelaba, K., Ros, F. & Chikouche, D. Potential of particle swarm optimization and genetic algorithms for fir filter design. Circuits Syst. Signal Process. 33, 3195–3222 (2014).

Kacelenga, R., Graumann, P. & Turner, L. Design of digital filters using simulated annealing. In IEEE International Symposium on Circuits and Systems, 642–645 (IEEE, 1990).

Liang, J. J., Qin, A. K., Suganthan, P. N. & Baskar, S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evolut. Comput. 10, 281–295 (2006).

Ababneh, J. I. & Bataineh, M. H. Linear phase fir filter design using particle swarm optimization and genetic algorithms. Digit. Signal Process. 18, 657–668 (2008).

Mandal, S., Ghoshal, S. P., Kar, R. & Mandal, D. Design of optimal linear phase fir high pass filter using craziness based particle swarm optimization technique. J. King Saud Univ.-Comput. Inf. Sci. 24, 83–92 (2012).

Yang, X.-S. & Deb, S. Cuckoo search via Lévy flights. In 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), 210–214 (IEEE, 2009).

Aggarwal, A., Rawat, T. K. & Upadhyay, D. K. Design of optimal digital fir filters using evolutionary and swarm optimization techniques. AEU-Int. J. Electron. Commun. 70, 373–385 (2016).

Rashidi, K., Mirjalili, S. M., Taleb, H. & Fathi, D. Optimal design of large mode area photonic crystal fibers using a multiobjective gray wolf optimization technique. J. Lightw. Technol. 36, 5626–5632 (2018).

Anand, R., Samiappan, S., Veni, S., Worch, E. & Zhou, M. Airborne hyperspectral imagery for band selection using moth-flame metaheuristic optimization. J. Imaging 8, 126 (2022).

Nadimi-Shahraki, M. H., Taghian, S. & Mirjalili, S. An improved grey wolf optimizer for solving engineering problems. Expert Syst. Appl. 166, 113917 (2021).

Yadav, S., Kumar, M., Yadav, R. & Kumar, A. A novel approach for optimal digital FIR filter design using hybrid grey wolf and cuckoo search optimization. In Proc. of First International Conference on Computing, Communications, and Cyber-Security (IC4S 2019), 329–343 (Springer, 2020).

Prakash, M. B., Sowmya, V., Gopalakrishnan, E. & Soman, K. Noise reduction of ECG using Chebyshev filter and classification using machine learning algorithms. In 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), 434–441 (IEEE, 2021).

Sanjana, K., Sowmya, V., Gopalakrishnan, E. & Soman, K. Explainable artificial intelligence for heart rate variability in ECG signal. Healthc. Technol. Lett. 7, 146 (2020).

Chen, X. & Parks, T. Design of FIR filters in the complex domain. IEEE Trans. Acoust. Speech Signal Process. 35, 144–153 (1987).

Kumar, K. P. & Kanhe, A. FPGA architecture to perform symmetric extension on signals for handling border discontinuities in FIR filtering. Comput. Electr. Eng. 103, 108307 (2022).

Ye, J., Yanagisawa, M. & Shi, Y. Scalable hardware efficient architecture for parallel FIR filters with symmetric coefficients. Electronics 11, 3272 (2022).

Rajasekhar, K. L1-norm and LMS based digital FIR filters design using evolutionary algorithms. J. Electr. Eng. Technol. 19, 753–762 (2024).

Chauhan, S., Singh, M. & Aggarwal, A. K. Designing of optimal digital IIR filter in the multi-objective framework using an evolutionary algorithm. Eng. Appl. Artif. Intell. 119, 105803 (2023).

Anand, R., Veni, S. & Aravinth, J. Robust classification technique for hyperspectral images based on 3D-discrete wavelet transform. Remote Sens. 13, 1255 (2021).

Siddiqui, L., Mani, A. & Singh, J. Improving design accuracy of a finite impulse response fractional order digital differentiator filter using quantum-inspired evolutionary algorithm. In 2024 14th International Conference on Cloud Computing, Data Science & Engineering (Confluence), 142–147 (IEEE, 2024).

Author information

Contributions

This manuscript reflects the collective efforts of three authors. R.A. conceptualized the research design, formulated the research questions and experimental analysis, and drafted the manuscript. S.S. conducted the primary data collection. M.P. performed the statistical analysis and interpreted the results.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

R, A., Samiappan, S. & Prabukumar, M. Fine-tuning digital FIR filters with gray wolf optimization for peak performance. Sci Rep 14, 12675 (2024). https://doi.org/10.1038/s41598-024-62403-6

DOI: https://doi.org/10.1038/s41598-024-62403-6

- Springer Nature Limited