Abstract

Quality control and quality assurance are challenges in direct metal laser melting (DMLM). Intermittent machine diagnostics and downstream part inspections catch problems only after undue cost has been incurred processing defective parts. In this paper we demonstrate two methodologies for in-process fault detection and part quality prediction that leverage existing commercial DMLM systems with minimal hardware modification. Novel features were derived from the time series of common photodiode sensors along with standard machine control signals. In the first methodology, a Bayesian approach attributes measurements to one of multiple process states as a means of classifying process deviations. In the second, a least squares regression model predicts the severity of certain material defects.


Introduction

Direct metal laser melting (DMLM) is an additive manufacturing process where complex parts are built by directing a laser into a bed of powdered metal in a pattern defined by computer control. This process can exhibit significant variability from layer to layer and across the build area. The lack of sufficient quality control and assurance limits the potential of DMLM for high-performance components and high-volume production. Conventional approaches include between-build machine diagnostics and downstream part inspection, but these processes catch problems only after undue cost has been incurred processing defective parts.

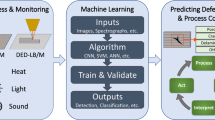

The need for in-process monitoring and control has been the subject of ongoing reviews [1–4, 32]. Recent work has explored in-process monitoring and defect detection using many different sensing and imaging modalities [5–32]. The majority of these methods employ custom instrumentation and produce high-resolution and high-speed data streams, making them difficult to deploy on commercial machines in a production setting.

This paper describes new in-process monitoring methods, developed under the guiding principle of easy deployment on original equipment manufacturers’ machines with minimal modifications to the available sensor packages. Two techniques are proposed: one classifies process shifts using time-dependent models of photodiode signals, and the other predicts the severity of part defects using machine learning models.

Methods

Machine configuration

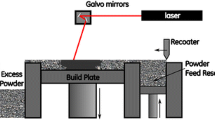

Figure 1 shows a representative system configuration of a commercially available DMLM machine with a standard melt pool monitoring package. An industrial PC interprets input design files to control a galvanometric scanner and the laser. Additional optics direct light emitted from the melt pool to in-line sensors, including photodiodes and CMOS cameras. A data acquisition system collects the sensor signals and synchronizes them with a laser trigger signal. The methods described in this paper were evaluated on Concept Laser M2 machines with the QMMeltpool 3D melt pool monitoring system, which includes a down-beam photodiode and a CMOS camera, and on EOS M290 machines with the EOSTATE melt pool monitoring system, which includes a down-beam photodiode and an off-axis photodiode. The M2 machine used in this study has a build envelope of 250 × 250 × 350 mm³ (x, y, z) and a laser system with power of up to 400 W and scanning speeds of up to 7 m/s; it supports layer thicknesses between 20 and 80 μm. The EOS M290 has a build envelope of 250 × 250 × 325 mm³ (x, y, z) and is equipped with a 400 W Yb-fiber laser allowing a layer thickness of 100 μm.

Process fault detection

One aim of this study was to investigate whether and how the transient melt pool behavior can be used to detect off-normal process conditions. As described in the “Machine configuration” section, the additive machines are equipped with multiple sensors (photodiodes and/or cameras) that monitor the melt pool during the build process. We consider the response of the melt pool as measured by \(S\) sensors (where \(S\) denotes the number of sensors on the machine). We define a laser strike as the interaction between the laser and the material being melted, from the time the laser turns on until it turns off, together with the corresponding melt pool emissions. The beginning of each laser strike can be identified in the sensor signals because the data acquisition system synchronizes the sensor measurements with the laser trigger signal. The sensor signals are referenced to the moment the laser turns on for each strike, at sample \(k = 1\). Samples up to \(k = {k}_{T}\) are considered transient, and samples \(k > {k}_{T}\) are considered steady state. The parameter \({k}_{T}\) was chosen heuristically: the initial choice was based on a qualitative judgement of when the process response to each laser strike settled close to a steady value across a variety of process conditions. \({k}_{T}\) was later refined iteratively based on the sensitivity of the results to this parameter, as well as on computational considerations.
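The strike segmentation and transient/steady-state split described above can be sketched as follows. This is a minimal illustration, assuming the laser trigger is available as one boolean on/off value per sensor sample and that consecutive strikes are separated by at least one laser-off sample; the function names `strike_sample_index` and `split_transient` are hypothetical and not part of any vendor's software.

```python
import numpy as np


def strike_sample_index(laser_on):
    """Return the 1-based sample index k within each laser strike (0 while the laser is off).

    laser_on: per-sample boolean laser trigger, synchronized with the sensor samples.
    """
    laser_on = np.asarray(laser_on, dtype=bool)
    k = np.zeros(laser_on.shape[0], dtype=int)
    count = 0
    for i, on in enumerate(laser_on):
        # Restart the count at k = 1 on every rising edge; reset to 0 when the laser is off.
        count = count + 1 if on else 0
        k[i] = count
    return k


def split_transient(k, k_T):
    """Boolean masks for transient samples (1 <= k <= k_T) and steady-state samples (k > k_T)."""
    k = np.asarray(k)
    transient = (k >= 1) & (k <= k_T)
    steady = k > k_T
    return transient, steady


# Example: two strikes in an 8-sample trigger record, with k_T = 2.
k = strike_sample_index([0, 1, 1, 1, 0, 0, 1, 1])
transient, steady = split_transient(k, k_T=2)
```

Here `k` is `[0, 1, 2, 3, 0, 0, 1, 2]`, so only the fourth sample falls in the steady-state regime.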

For process fault detection we employed a multiple model hypothesis test (MMHT), a Bayesian approach that compares sensor measurements to multiple pre-estimated models to indicate a deviation from normal conditions. A model of the normal process is required; models of one or more off-normal processes are optional. While we describe a particular form of model here, the framework applies equally to other model types (e.g., auto-regressive, PCA-based, or physics-based). At each sample \(k = 1, 2, \ldots\), and for a given process condition, the measurements from \(S\) sensors are modeled as a jointly Gaussian random vector. The set of process models (for example, processes with different shifts in laser power level) is indexed by \(m = 1, 2, \ldots, M\). The likelihood of a set of sensor measurements \({\varvec{x}}\in {\mathbb{R}}^{S}\) under model \(m\) is

\[ p({\varvec{x}} \mid m, k) = \frac{1}{\sqrt{(2\pi)^{S} \lvert {\Sigma }_{k} \rvert}} \exp\left(-\frac{1}{2} ({\varvec{x}} - {\mu }_{k})^{\top} {\Sigma }_{k}^{-1} ({\varvec{x}} - {\mu }_{k})\right) \qquad (1) \]

where the covariance matrix \({\Sigma }_{k}\) and the mean vector \({\mu }_{k}\) of model \(m\) are constant for \(k > {k}_{T}\). Time-dependent correlation between measurement samples is neglected in this analysis. Better classification performance may be possible if such correlation were accounted for, but this assumption provides the computational flexibility to aggregate points arbitrarily in space.
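A minimal NumPy sketch of the per-sample Gaussian likelihood, assuming the model for one process state is stored as one mean/covariance pair per transient sample plus a single shared steady-state pair (reflecting that \({\mu }_{k}\) and \({\Sigma }_{k}\) are constant for \(k > {k}_{T}\)). The storage layout and the function names are illustrative assumptions.

```python
import numpy as np


def gaussian_loglik(x, mu, cov):
    """Log-density of the multivariate normal N(mu, cov) at x (length-S vectors, S x S covariance)."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    d = x - mu
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance matrix must be positive definite")
    maha = d @ np.linalg.solve(cov, d)  # squared Mahalanobis distance
    return -0.5 * (x.size * np.log(2.0 * np.pi) + logdet + maha)


def model_loglik(x, k, means, covs):
    """log p(x | m, k) for one process model m.

    means: (k_T + 1, S) and covs: (k_T + 1, S, S). Row j holds the Gaussian for
    sample k = j + 1 during the transient; the last row is shared by every
    steady-state sample k > k_T.
    """
    if k < 1:
        raise ValueError("k counts from 1 at laser-on")
    k_T = means.shape[0] - 1
    j = min(k, k_T + 1) - 1
    return gaussian_loglik(x, means[j], covs[j])
```

Evaluating `model_loglik` once per candidate model and per kernel sample gives the terms that the kernel likelihood multiplies together (or, in log space, sums).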

Next, we define a kernel as a set of points aggregated in either space or time. Let \(I\) be the set of \(N\) indices defining the kernel, and let \({X}_{I}\in {\mathbb{R}}^{S\times N}\) be the matrix of measurements from \(S\) sensors corresponding to the indices in the kernel. Because samples are assumed independent in time, the likelihood of \({X}_{I}\) can be computed easily for arbitrary kernels. This flexibility is important for spatially aggregating measurements that may not have occurred adjacently in time. A square kernel is illustrated in the top right of Fig. 2a, with indices that identify the set of measurement points within that square. We apply Bayes’ rule to compute a posterior probability for each process model as

\[ \mathrm{Pr}(m \mid {X}_{I}) = \frac{p({X}_{I} \mid m)\, \mathrm{Pr}(m)}{\sum_{j} p({X}_{I} \mid j)\, \mathrm{Pr}(j)} \qquad (2) \]

Figure 2. (a) Multiple model hypothesis test using a point-wise model of the melt pool response during laser strikes. The left side of the figure shows actual models for the on-axis photodiode for the normal process and representative faulted processes. The right side illustrates a square spatial kernel that aggregates points across multiple laser strikes; these points are then analyzed as described in the text. (b) Four nominal models used to analyze the Concept Laser data. Solid lines represent the mean intensity models, and dashed lines represent one-standard-deviation bounds.

where \(\mathrm{Pr}(m)\) is the prior probability of model \(m\). The likelihood of \({X}_{I}\) is

\[ p({X}_{I} \mid m) = \prod_{n=1}^{N} p({x}_{n} \mid m, {k}_{n}) \qquad (3) \]

where \({x}_{n}\) is the \(n\)th column of \({X}_{I}\), and \({k}_{n}\) is the laser-on sample index associated with the \(n\)th sample in the kernel. We also introduce an “unknown” hypothesis with a constant likelihood \(p({X}_{I} \mid \mathrm{unk}) = {p}_{\mathrm{unk}}\),

where \({p}_{\mathrm{unk}}\) can be chosen to prevent measurements from being attributed to an existing model when the associated likelihood is too low. In this study, \({p}_{\mathrm{unk}}\) was set equal to the likelihood, evaluated 2.3 standard deviations from the mean (approximately the one-sided 99% level), of the average of \(S\times N\) Gaussian-distributed measurements with an assumed standard deviation. The standard deviation was based on an approximate signal-to-noise ratio and is assumed to be comparable in all process states. Finally, we use a maximum a posteriori (MAP) decision rule to attribute the measurements to the model (or to “unknown”) with the highest posterior probability. Figure 2a illustrates the MMHT process. The left side of the figure shows example models for the normal process and representative faulted processes. For ease of illustration, a one-dimensional model with only on-axis photodiode data is shown, with markers and error bars representing the mean and variance, respectively, of the model at each sample time. (With multiple sensors, the model would instead include a mean vector and covariance matrix.) The right side illustrates a square spatial kernel that aggregates points across multiple laser strikes; these points are then analyzed as described above.
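The kernel aggregation, Bayes’ rule, and MAP decision can be combined in a few lines. The sketch below works in log space to avoid numerical underflow when many per-sample likelihoods are multiplied and, as an assumption, treats “unknown” as one more hypothesis with a constant kernel log-likelihood `log_p_unk` and its own prior; how the prior on “unknown” was set in the study is not specified, and the function name `mmht_map` is hypothetical. The per-sample log-likelihoods for each model are taken as input.

```python
import numpy as np


def mmht_map(sample_logliks, log_priors, log_p_unk, log_prior_unk):
    """MAP decision over M process models plus an "unknown" hypothesis for one kernel.

    sample_logliks: (M, N) array; entry [m, n] is log p(x_n | m, k_n).
    log_priors:     length-M log prior probabilities log Pr(m).
    log_p_unk:      constant kernel log-likelihood of "unknown" (assumption).
    log_prior_unk:  log prior of the "unknown" hypothesis (assumption).
    Returns (decision, posterior): decision is a model index or "unknown";
    posterior has length M + 1, with the last entry for "unknown".
    """
    ll = np.asarray(sample_logliks, dtype=float)
    # Independence across samples: log p(X_I | m) = sum_n log p(x_n | m, k_n).
    kernel_ll = ll.sum(axis=1)
    log_joint = np.append(kernel_ll + np.asarray(log_priors, dtype=float),
                          log_p_unk + log_prior_unk)
    # Bayes' rule, normalized in log space (log-sum-exp) to avoid underflow.
    log_post = log_joint - np.logaddexp.reduce(log_joint)
    posterior = np.exp(log_post)
    best = int(np.argmax(posterior))
    decision = "unknown" if best == kernel_ll.size else best
    return decision, posterior
```

Raising `log_p_unk` makes the rule more willing to declare “unknown”, which mirrors the role of \({p}_{\mathrm{unk}}\) as a floor on acceptable model likelihood.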

The primary experiments were conducted on the EOS M290 system, with measurements collected at \(60 \; \mathrm{kHz}\) from a down-beam photodiode and an off-axis photodiode during the hatch scan strategy. Raw measurements were normalized to remove fixed trends across the build plate area caused by machine-specific optical artifacts. The measurements from both sensors were then combined into a vector (i.e., \(S=2\) in Eq. (1)). The mean vector and covariance matrix for the two sensors at each sample time were estimated by compiling intensity measurements from representative training builds, including a normal process and a series of processes in which laser power, scan speed, or hatch spacing was shifted by different increments. The parts in these builds included a variety of geometries, such as holes, thin walls, and sharp corners.
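Estimating a time-dependent model from training data could look like the following sketch. It assumes the normalized training strikes for one process state have already been aligned at laser-on and truncated to a common length \(K > {k}_{T}\); it fits one Gaussian per transient sample and a single pooled Gaussian for all steady-state samples. The function name and array layout are hypothetical.

```python
import numpy as np


def fit_time_dependent_model(strikes, k_T):
    """Fit per-sample Gaussians for one process state.

    strikes: (n_strikes, K, S) array of normalized sensor samples aligned so that
    index 0 is k = 1 (laser on); requires K > k_T.
    Returns means (k_T + 1, S) and covs (k_T + 1, S, S): one Gaussian for each
    transient sample k = 1..k_T plus one pooled Gaussian for all k > k_T.
    """
    strikes = np.asarray(strikes, dtype=float)
    n, K, S = strikes.shape
    if K <= k_T:
        raise ValueError("strikes must extend past the transient (K > k_T)")
    means = np.empty((k_T + 1, S))
    covs = np.empty((k_T + 1, S, S))
    for j in range(k_T):
        samples = strikes[:, j, :]  # every strike's measurement vector at k = j + 1
        means[j] = samples.mean(axis=0)
        # np.cov squeezes a single-variable result, so restore the (S, S) shape.
        covs[j] = np.atleast_2d(np.cov(samples, rowvar=False))
    steady = strikes[:, k_T:, :].reshape(-1, S)  # pool all samples with k > k_T
    means[k_T] = steady.mean(axis=0)
    covs[k_T] = np.atleast_2d(np.cov(steady, rowvar=False))
    return means, covs
```

Fitting this once per training build (normal, power-shifted, speed-shifted, and so on) yields one candidate model per process state.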

Finally, validation builds were run with different process parameter shifts to test the ability of the algorithm to classify deviations from the normal process accurately. In addition, builds that experienced shifted gas flow and damaged optics were considered to evaluate the detection of localized process anomalies. For the process shifts, training and validation builds were produced on the same machine. In the case of optic defects, the data came from a real occurrence in a production setting, so training and validation were performed on different layers of the same build.

Although the primary experiments for process fault detection were performed on EOS machines, the analysis was later expanded, in a limited scope, to Concept Laser (CL) M2 machines. The default scan strategy for the builds used in this analysis divided each part into skin and core areas; together, these make up the regions of the part that are labeled as hatch in an EOS system. Our analysis focused on the hatch scan strategy, since most of the volume of a typical part is processed under those conditions. The M2 machine used in this program had a dual scan head configuration, with the heads situated one in front of the other: the scan head toward the back of the build plate is Head 0 (LH0) and the front scan head is Head 1 (LH1). M2 machines are equipped with both on-axis photodiode and camera sensors. However, the camera was not properly configured on the machine used for this analysis, so we considered only the on-axis photodiode. To classify nominal conditions for the Concept Laser parameter set, four models were built from the on-axis photodiode, one per scan head per scan parameter set (skin/core): LH0-Skin, LH0-Core, LH1-Skin and LH1-Core (Fig. 2b). The core parameter set uses a higher laser power and a larger spot size than the skin parameter set. The goal of this analysis was to use a part-wise kernel (within the same MMHT framework) with the nominal CL models to classify parts built with the different parameter sets. Note that in practice all four head/parameter combinations would be considered “normal”; they were used in this study to demonstrate classification of different process states.

Part quality prediction

In addition to displaying signatures of process anomalies, melt pool behavior is strongly related to the resulting part quality. Process deviations that cause porosity, lack of fusion, and cracks are observable in the melt pool response. Here we develop a statistical model relating sensor data to the severity of different material defects, as measured from optical micrographs of representative CoCr pins. Several solid pins were built across the build plate on a Concept Laser M2 machine by varying the laser power, speed, and spot size by up to 50% from their nominal values (details of the nominal values are withheld because the process parameter set is proprietary), in order to simulate defects caused by both higher and lower energy densities. A lower energy density tends to cause lack of fusion, while a higher energy density tends to cause boiling porosity. Cracks are typically formed by rapid cooling of the melt pool. These expected relationships between defects and process observables are accounted for through the choice of features in the regression model, some of which are laser control parameters:

\[ {D}_{\mathrm{defect}} = F\left(p,\; s,\; f,\; p/s,\; h,\; {\mu }_{\mathrm{part}},\; {\sigma }_{\mathrm{part}},\; {\sigma }_{\mu \mathrm{layer}}\right) \qquad (5) \]

where \({D}_{\mathrm{defect}}\) is the area fraction of a given type of defect, which can be obtained from optical micrographs of sectioned parts, CT scans of the part, or physical testing of the part. The defect score of each type is calculated as the ratio of the total area belonging to that defect class in a cross section to the total area of the cross section. Laser parameters are set at the beginning of the build process: \(p\) is power, \(s\) is speed, \(f\) is focus, \(p/s\) is linear energy density, and \(h\) is hatch spacing. Sensor data are aggregated into part-level variables: \({\mu }_{\mathrm{part}}\) is the part-wise average intensity, \({\sigma }_{\mathrm{part}}\) is the standard deviation of the part-wise intensity, and \({\sigma }_{\mu \mathrm{layer}}\) is the standard deviation of the layer-wise average intensity. The transfer function (Eq. 5) was obtained for each defect type using stepwise ordinary least squares (OLS) regression. Because the features are collinear, we constrained the variance inflation factor during stepwise OLS to determine the significant model features.
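The part-level sensor variables and the defect area fraction defined above reduce to simple aggregations. In this sketch the standard deviations use the population form (`ddof=0`), and the defect and part masks are assumed to come from an upstream segmentation of a cross-section image; both are assumptions, since the segmentation used in the study is proprietary. The function names are hypothetical.

```python
import numpy as np


def part_features(intensity, layer_id):
    """Part-level sensor features from all intensity samples belonging to one part.

    intensity: 1-D array of sensor intensities; layer_id: layer number of each sample.
    """
    intensity = np.asarray(intensity, dtype=float)
    layer_id = np.asarray(layer_id)
    # Average intensity of each layer, then the spread of those layer averages.
    layer_means = np.array([intensity[layer_id == layer].mean()
                            for layer in np.unique(layer_id)])
    return {
        "mu_part": float(intensity.mean()),           # part-wise average intensity
        "sigma_part": float(intensity.std()),         # std of all part-wise intensities
        "sigma_mu_layer": float(layer_means.std()),   # std of layer-wise averages
    }


def defect_area_fraction(defect_mask, part_mask):
    """Area of one defect class divided by the total cross-section area of the part."""
    defect_mask = np.asarray(defect_mask, dtype=bool)
    part_mask = np.asarray(part_mask, dtype=bool)
    part_area = part_mask.sum()
    if part_area == 0:
        raise ValueError("cross section contains no part pixels")
    return float((defect_mask & part_mask).sum() / part_area)
```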

Results

Process fault detection

We found that the transient response of the melt pool provided better discriminatory information than the steady-state response. This can be seen in Fig. 2a, where the steady-state melt pool intensity from a build with \(10\%\) higher power overlaps with the intensity from a build with \(10\%\) lower speed, while the transient responses to these two shifts are distinct. A transient length of \({k}_{T} = 45\) samples (0.75 ms at the 60 kHz sampling rate) gave strong classification performance while keeping the computational load manageable.

Analysis of shifts in bulk parameters (laser power, speed, and hatch spacing) was performed using a kernel that captured all points in a layer. To detect localized process anomalies, such as optic damage and artifacts of low gas flow, we used a square spatial kernel \(0.39\) mm on a side; this size was tuned to balance classification accuracy against spatial resolution.
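One simple way to form square spatial kernels is to tile the build plate with a fixed grid and group measurement indices by cell, regardless of when they were acquired. The sketch assumes each sample has an (x, y) scan position in millimetres and uses a non-overlapping grid; whether the kernels in the study overlap is not stated, so this tiling, like the function name `square_kernels`, is an assumption. The indices in each cell define a kernel \(I\) whose likelihood can then be evaluated with the MMHT.

```python
import numpy as np
from collections import defaultdict


def square_kernels(x_mm, y_mm, side_mm=0.39):
    """Group sample indices into non-overlapping square cells of side `side_mm`.

    Returns a dict mapping (column, row) cell coordinates to the list of sample
    indices falling in that cell; the samples need not be adjacent in time.
    """
    ix = np.floor(np.asarray(x_mm, dtype=float) / side_mm).astype(int)
    iy = np.floor(np.asarray(y_mm, dtype=float) / side_mm).astype(int)
    cells = defaultdict(list)
    for n, cell in enumerate(zip(ix.tolist(), iy.tolist())):
        cells[cell].append(n)
    return dict(cells)
```

Because the grouping depends only on position, samples from different laser strikes (and different hatch lines) that land in the same 0.39 mm cell are aggregated together, which is exactly what the time-independence assumption permits.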

Figure 3a shows the classification results on the EOS M290 for bulk parameter shifts, using data from validation builds distinct from the training build. Extending the model set to incorporate more failure modes comes at a cost in classification accuracy: with more candidate process models, there are more opportunities to confuse their output signatures. The most frequent confusion was between processes with shifted hatch spacing and the normal process, suggesting that the melt pool is relatively insensitive to this parameter shift. Another prominent confusion was between wider hatch spacing and lower power, indicating a possible thermal effect that could be added to the model to improve classification. It might be reasonable to exclude hatch spacing to improve results, since this parameter is defined by the build strategy, is unlikely to deviate during a process, and could be verified by other means upstream of the build.
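A confusion matrix like the one in Fig. 3a tabulates true process states against MMHT decisions: its diagonal gives the per-state rate of correct classification, and off-diagonal entries expose confusions such as shifted hatch spacing versus normal. The sketch below is a generic NumPy version; the function names and any class labels passed to it (for example, an "unknown" column) are illustrative.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, classes):
    """Counts with rows = true process state and columns = MMHT decision."""
    index = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        cm[index[t], index[p]] += 1
    return cm


def per_class_accuracy(cm):
    """Fraction of each true state classified correctly (0 for states with no samples)."""
    totals = cm.sum(axis=1)
    return np.divide(np.diag(cm), totals, out=np.zeros(len(cm)), where=totals > 0)
```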

Figure 3. (a) Classification of process defects using MMHT on the EOS M290. On the left is a confusion matrix for bulk parameter shifts; on the right are examples of detection of localized defects. (b) Classification of process shifts using MMHT on the Concept Laser M2. The plot shows skin sections from a layer of a 36-cylinder build. The top three rows of parts, built using laser head 0, were correctly classified by the LH0-Skin model (green, left) and were classified as unknown (yellow) by the LH0-Core model (right). The table shows classification results aggregated over 20 layers. Models from builds with the two parameter sets (skin and core) correctly classify parts built with the corresponding parameter set with high accuracy (> 90%).

The image on the top right of Fig. 3a shows detection of a real optic defect that occurred in a production setting. The signature of this anomalous condition was relatively dramatic, so local classification of the defect was unambiguous. The bottom right image in Fig. 3a shows anomalies induced by low gas flow, including unknown classifications for unmodeled behavior. The model for the low-gas-flow defect was trained on regions of the sensor data thought to be associated with smoke occluding the laser beam. However, when the classification algorithm was applied, it also identified large areas of a different anomalous condition. This demonstrates the utility of the “unknown” model for detecting off-normal conditions even when no explicit model of the condition exists.

Figure 3b shows results from the limited analysis performed on the Concept Laser M2 machine. Using the same MMHT framework, we show that models built for a given combination of scan head and build parameters are highly successful at distinguishing parts built with the same scan head but different build parameters. For instance, the LH0-Skin model correctly classified all the parts built with LH0 and skin parameters as true positives, while the same parts were (correctly) classified as true negatives when analyzed with the LH0-Core model.

The proposed fault detection methodology was computationally optimized to complete its analysis within the layer cycle time, providing an ongoing process monitoring diagnostic during a build.

Part quality prediction

As previously mentioned, the training builds for this study included pins with varying parameter shifts, intended to produce parts with correspondingly varying defect severity, defined as the percent area observed in standard optical micrographs. The micrographs of the samples were obtained from cross-sectional views of an STL file generated by a standard micro-CT module. A proprietary image processing algorithm was applied to the cross-sectional views to identify pores, cracks, and lack-of-fusion defects and to measure their dimensions at micron resolution. Figure 4 shows the fits of the respective regression models and lists the significant factors for each. Predictions for pores and lack of fusion had relatively high R² values, a measure of the model’s goodness of fit. Cracks, on the other hand, were harder to predict, since our models were trained primarily on parts made of CoCr, a material that is typically less prone to cracking. For all defects, predictive factors were found among both the photodiode signal features and certain laser control parameters. Part quality predictions were made on a part-wise basis at the end of a build.
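The stepwise OLS with a variance inflation factor (VIF) constraint described in the Methods can be sketched with NumPy alone, including the R² used to judge the fits. This is a simplified forward-selection variant that adds, at each step, the feature giving the largest gain in adjusted R² while keeping every VIF of the selected set below a cap; the authors’ actual entry and exit criteria (for example, p-value thresholds or backward elimination) are not given, so the criterion, the cap `vif_max=5.0`, the stopping threshold `min_gain`, and all function names are assumptions.

```python
import numpy as np


def ols_r2(X, y):
    """Least-squares fit of y on [1, X]; returns (coefficients, R^2)."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    ss_tot = np.sum((y - y.mean()) ** 2)
    return beta, 1.0 - np.sum(resid ** 2) / ss_tot


def max_vif(X):
    """Largest variance inflation factor, VIF_j = 1 / (1 - R_j^2), over the columns of X."""
    if X.shape[1] < 2:
        return 1.0
    vifs = []
    for j in range(X.shape[1]):
        _, r2 = ols_r2(np.delete(X, j, axis=1), X[:, j])
        vifs.append(np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2))
    return max(vifs)


def adjusted_r2(r2, n, p):
    """R^2 penalized for the number of features p given n observations."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def forward_stepwise(X, y, names, vif_max=5.0, min_gain=1e-3):
    """Greedy forward selection on adjusted R^2, rejecting feature sets whose max VIF > vif_max."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    selected, best_adj = [], -np.inf
    while True:
        best = None
        for j in range(X.shape[1]):
            cols = selected + [j]
            if j in selected or n - len(cols) - 1 <= 0:
                continue
            if max_vif(X[:, cols]) > vif_max:
                continue  # collinearity constraint
            _, r2 = ols_r2(X[:, cols], y)
            adj = adjusted_r2(r2, n, len(cols))
            if best is None or adj > best[1]:
                best = (j, adj)
        if best is None or best[1] - best_adj < min_gain:
            break  # no admissible feature improves the fit enough
        selected.append(best[0])
        best_adj = best[1]
    return [names[j] for j in selected], best_adj
```

Because the VIF check rejects near-duplicate features, only one of two strongly correlated candidates tends to enter the model, which keeps the fitted coefficients interpretable.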

Conclusion

We successfully implemented in situ analytics that leverage the process monitoring packages available on two commercial DMLM machines. In one experiment on an EOS system, process defect detection resolved 10–20% shifts in bulk process parameters and detected other localized defects with sub-millimeter accuracy, all within the layer cycle time. This was accomplished by extracting discriminatory information from the transient response of the melt pool in the first several hundred microseconds after the laser turns on at the beginning of each strike. There are several opportunities for further refinement of this method. First, classification performance may be improved by removing the hatch spacing variation models, as discussed in the “Process fault detection” section. Second, while normalization of the raw sensor measurements effectively removed gross trends due to optical artifacts, additional systematic variations observed in the sensor measurements limited the system’s resolution. Ongoing work is focused on understanding these variations and creating parametric predictive models against which the sensor signals can be compared. Third, performance could potentially be refined by a more systematic selection of the length of the “transient” (the parameter \({k}_{T}\)). Finally, incorporating other available sensor signals, such as the CMOS camera in the Concept Laser system, could provide process signatures with better discriminatory power.

Next steps would also address several limitations of the study described here. Detection of bulk parameter shifts was tested on builds that included a variety of part geometries within the build, but the training and validation builds had the same parts. Further studies are needed to characterize classification performance when the training build differs in geometry from the build being evaluated. Also, unlike the bulk parameter shift test, localized anomalies were not evaluated with all available candidate models together. A more systematic study of localized defect detection is recommended. The main challenge with local defects is that the process conditions associated with them tend to be more difficult to induce in a controlled manner in order to train reliable models.

The results of the second study provide a proof of concept that, for defects of sufficient severity caused by variations in input parameters, part quality can be predicted without resorting to time-consuming optical micrograph analysis. This was accomplished through the selection of relevant features derived from both the sensor signals and the machine control parameters. The scope of this study was limited to CoCr, so future evaluations should extend to other materials.

Despite the limitations discussed here, the results show that sensor data can be used to model nominal processes and identify in situ process shifts, and that there is a high correlation between down-beam photodiode sensor data and as-built material defect severity. These early, nondestructive diagnostics enable cost savings and, with adequate validation, could potentially serve as quality assurance metrics. Note, however, that because of the aforementioned limitations, the analytics described in this paper are limited to research applications, and further development along the suggested lines will be required to mature them into a commercial offering.

References

Spears, T. G. & Gold, S. A. In-process sensing in selective laser melting (SLM) additive manufacturing. Integr. Mater. Manuf. Innov. 5(1), 16–40 (2016).

Chua, Z. Y., Ahn, I. H. & Moon, S. K. Process monitoring and inspection systems in metal additive manufacturing: Status and applications. Int. J. Precision Eng. Manuf. Green Technol. 4(2), 235–245 (2017).

Grasso, M. & Colosimo, B. M. Process defects and in situ monitoring methods in metal powder bed fusion: A review. Meas. Sci. Technol. 28(4), 044005 (2017).

Malekipour, E. & El-Mounayri, H. Common defects and contributing parameters in powder bed fusion AM process and their classification for online monitoring and control: A review. Int. J. Adv. Manuf. Technol. 95(1–4), 527–550 (2018).

Grasso, M., Demir, A., Previtali, B. & Colosimo, B. In situ monitoring of selective laser melting of zinc powder via infrared imaging of the process plume. Robot. Comput. Integr. Manuf. 49, 229–239 (2018).

Imani, F. et al. Process mapping and in-process monitoring of porosity in laser powder bed fusion using layerwise optical imaging. J. Manuf. Sci. Eng. 140(10) (2018).

Khanzadeh, M. et al. Dual process monitoring of metal-based additive manufacturing using tensor decomposition of thermal image streams. Addit. Manuf. 23, 443–456 (2018).

Lu, Q., Nguyen, N., Hum, A., Tran, T. & Wong, C. Optical in-situ monitoring and correlation of density and mechanical properties of stainless steel parts produced by selective laser melting process based on varied energy density. J. Mater. Process. Technol. 271, 520–531 (2019).

Ye, D., Hong, G. S., Zhang, Y., Zhu, K. & Fuh, J. Y. H. Defect detection in selective laser melting technology by acoustic signals with deep belief networks. Int. J. Adv. Manuf. Technol. 96(5–8), 2791–2801 (2018).

Yuan, B. et al. Machine-learning-based monitoring of laser powder bed fusion. Adv. Mater. Technol. 3(12), 1800136 (2018).

Zhang, Y., Hong, G. S., Ye, D., Zhu, K. & Fuh, J. Y. Extraction and evaluation of melt pool, plume and spatter information for powder-bed fusion AM process monitoring. Mater. Des. 156, 458–469 (2018).

Zhang, B., Liu, S. & Shin, Y. C. In-process monitoring of porosity during laser additive manufacturing process. Addit. Manuf. 28, 497–505 (2019).

Bisht, M., Ray, N., Verbist, F. & Coeck, S. Correlation of selective laser melting-melt pool events with the tensile properties of Ti-6Al-4V ELI processed by laser powder bed fusion. Addit. Manuf. 22, 302–306 (2018).

Kolb, T., Müller, L., Tremel, J. & Schmidt, M. Melt pool monitoring for laser beam melting of metals: Inline-evaluation and remelting of surfaces. Procedia CIRP 74, 111–115 (2018).

Coeck, S., Bisht, M., Plas, J. & Verbist, F. Prediction of lack of fusion porosity in selective laser melting based on melt pool monitoring data. Addit. Manuf. 25, 347–356 (2019).

Montazeri, M. & Rao, P. Sensor-based build condition monitoring in laser powder bed fusion additive manufacturing process using a spectral graph theoretic approach. J. Manuf. Sci. Eng. 140(9) (2018).

Grünberger, T. & Domröse, R. Direct metal laser sintering: Identification of process phenomena by optical in-process monitoring. Laser Technik J. 12(1), 45–48 (2015).

Sampson, R. et al. An improved methodology of melt pool monitoring of direct energy deposition processes. Optics Laser Technol. 127, 106194 (2020).

Scime, L. & Beuth, J. Using machine learning to identify in-situ melt pool signatures indicative of flaw formation in a laser powder bed fusion additive manufacturing process. Addit. Manuf. 25, 151–165 (2019).

Zhang, J., Wang, P. & Gao, R. X. Modeling of layer-wise additive manufacturing for part quality prediction. Procedia Manuf. 16, 155–162 (2018).

Seifi, S. H., Tian, W., Doude, H., Tschopp, M. A. & Bian, L. Layer-wise modeling and anomaly detection for laser-based additive manufacturing. J. Manuf. Sci. Eng. 141, 081013 (2019).

Gaikwad, A. C. et al. Heterogeneous sensing and scientific machine learning for quality assurance in laser powder bed fusion—a single-track study. Addit. Manuf. (accepted, in press, 2020).

Li, R., Jin, M. & Paquit, V. C. Geometrical defect detection for additive manufacturing with machine learning models. Mater. Des. 206, 109726 (2021).

Mohammadi, M. G., Mahmoud, D. & Elbestawi, M. On the application of machine learning for defect detection in L-PBF additive manufacturing. Opt. Laser Technol. 143, 107338 (2021).

Gobert, C., Reutzel, E. W., Petrich, J., Nassar, A. R. & Phoha, S. Application of supervised machine learning for defect detection during metallic powder bed fusion additive manufacturing using high resolution imaging. Addit. Manuf. 21, 517–528 (2018).

Williams, J., Dryburgh, P., Clare, A., Rao, P. & Samal, A. Defect detection and monitoring in metal additive manufactured parts through deep learning of spatially resolved acoustic spectroscopy signals. Smart Sustain. Manuf. Syst. 2(1) (2018).

Chen, L., Yao, X., Xu, P., Moon, S. K. & Bi, G. Rapid surface defect identification for additive manufacturing with in-situ point cloud processing and machine learning. Virtual Phys. Prototyping 16(1), 50–67 (2021).

Snow, Z., Diehl, B., Reutzel, E. W. & Nassar, A. Toward in-situ flaw detection in laser powder bed fusion additive manufacturing through layerwise imagery and machine learning. J. Manuf. Syst. 59, 12–26 (2021).

Khan, M. F. et al. Real-time defect detection in 3D printing using machine learning. Mater. Today Proc. 42, 521–528 (2021).

Gaikwad, A. et al. Heterogeneous sensing and scientific machine learning for quality assurance in laser powder bed fusion—A single-track study. Addit. Manuf. 36, 101659 (2020).

Carter, W. et al. An open-architecture multi-laser research platform for acceleration of large-scale additive manufacturing (ALSAM). In 2019 International Solid Freeform Fabrication Symposium. University of Texas at Austin (2019).

Blom, R. S., John, F., Dean M. R., Subhrajit, R., & Harry, K. M. Jr. Systems and method for advanced additive manufacturing. U.S. Patent 10,747,202, issued August 18, 2020.

Acknowledgements

This material is based upon work performed at GE supported by the Air Force Research Laboratory under contract number FA8650-14-C-5702.

Author information


Contributions

S.R.M., S.F., X.P. and S.R. wrote the main manuscript. S.R.M., S.F., K.M., G.L., M.L. and T.S. contributed to the development of the process fault detection techniques. X.P. and S.R. contributed to the development of the part quality prediction techniques.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Felix, S., Ray Majumder, S., Mathews, H.K. et al. In situ process quality monitoring and defect detection for direct metal laser melting. Sci Rep 12, 8503 (2022). https://doi.org/10.1038/s41598-022-12381-4


DOI: https://doi.org/10.1038/s41598-022-12381-4

