Abstract

Human gait data have traditionally been recorded in controlled laboratory environments focusing on single aspects in isolation. In contrast, the database presented here provides recordings of everyday walk scenarios in a natural urban environment, including synchronized IMU, FSR, and gaze data. Twenty healthy participants (five females, fifteen males, between 18 and 69 years old, 178.5 ± 7.64 cm, 72.9 ± 8.7 kg) wore a full-body Lycra suit with 17 IMU sensors, insoles with eight pressure sensing cells per foot, and a mobile eye tracker. They completed three different walk courses, where each trial consisted of several minutes of walking, including a variety of common elements such as ramps, stairs, and pavements. The data is annotated in detail to enable machine-learning-based analysis and prediction. We anticipate that the data set will provide a foundation for research that considers natural everyday walk scenarios with transitional motions and the interaction between gait and gaze during walking.

Measurement(s) | Full-body motion data including positional skeleton data, joint angles, acceleration and velocity of 23 body segments • Foot pressure • Eye gaze position including first-person scene video |

Technology Type(s) | Xsens body suit with 17 IMU sensors • IEE ActiSense Smart Footwear Sensor with 8 sensor segments per foot • Pupil Invisible Glasses Eyetracker |

Independent variable(s) | pressure values per foot • eye gaze X, Y position |

Background & Summary

The scientific assessment and modeling of human locomotion has been a central topic in various domains such as medicine, ergonomics, robotics, and sports1,2,3,4,5,6,7,8. Traditionally, human gait data have been recorded in controlled laboratory settings9,10,11, e.g., on catwalks or treadmills. However, based on these data, it is impossible to model human gait in more natural and challenging environments where people exhibit a richer gait behavior. Such models are necessary, e.g., for a satisfactory user experience with assist devices that can be used in a clinical environment and in daily life.

Often gait data are available as part of human activity data sets12,13,14,15 and hence they rarely contain ground truth information for the segmentation of single steps. These data sets provide lower body IMU data and sometimes include FSR data to provide ground truth for step segmentation. There are only a few dedicated human walk data sets that show walking in natural outdoor environments16,17,18. The focus is on walking speed variation and measurement of different walk patterns in isolation.

We aimed to create a richly annotated gait data set of natural, everyday walk scenarios requiring continuous walking of 5 to 15 minutes that naturally contain diverse gait patterns such as level walking, walking up/down ramps and stairs, as well as the corresponding transitions in between. In particular, the recordings include the natural interaction with other pedestrians and cyclists that affect the subjects’ gait behavior. We provide whole-body data from 17 IMU sensors to enable a wide variety of motion modeling. Additionally, we include plantar foot pressure data that yield accurate foot contact information and may be used independently from the IMU data.

The data set consists of 9 hours of gait data recorded from 20 healthy subjects. They walked across three different courses in a public area around a suburban train station. A single repetition of each course required several minutes of walking and captured many common elements such as straight and curvy passages, slopes, stairs, and pavements. We annotated the walking mode, e.g., regular walk, climb/descend stairs, ascend/descend slopes, interactions with other pedestrians/cyclists, curves and turnarounds, as well as terrain segments. The timings of heel strike and toe-off events are provided as well.

Another unique feature of our data set is the usage of a mobile eye tracker to record the gaze behavior of our participants during walking. Humans extensively use visual information of the environment for strategic control planning19. For instance, they adapt their gait speed and gaze angle to the complexity of the environment20. Spatio-temporal visual information is essential for proper foot positioning on complex surfaces21. Thus gaze may serve as an indicator of human intention during walking22 as well as an estimator of fall risk23,24,25,26. To our knowledge, this data set is the first publicly available database that provides gait motion data together with the corresponding visual behavior. In particular, the data allows the estimation of the gaze trajectory by combining the gaze position with the head orientation. As gaze is known to be an early predictor of human intention27,28, we think that the analysis of gaze patterns as a predictive signal for the anticipation of walk mode transitions provides an exciting research opportunity.

In summary, we anticipate that this data set will provide a foundation for future research exploring machine learning for real-time motion recognition and prediction, potentially incorporating visual behavior and analyzing its benefits.

Methods

Participants

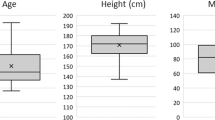

Twenty-five healthy adults with normal or corrected-to-normal vision volunteered to take part in the study. The data from five participants were incomplete due to sensor failures and are not included in the data set. The anthropometry of the remaining 20 participants, 5 females and 15 males, is given in Table 1. The participants’ average height of 178.55 ± 7.6 cm corresponds to the average height in central Europe, while their average weight of 72.95 ± 8.7 kg was slightly below the central European average, i.e. all participants were slim, cf. Figure 1.

All participants provided written informed consent, including written permission to publish the data of this study. The study was approved by the Bioethics Committee in Honda’s R&D (97HM-036H, Dec. 14, 2020).

Experimental tasks

The participants were asked to complete different walking courses in the area of a suburban train station that included walking on level ground, ascending and descending stairs, walking up and down ramps, and stepping up and down a curb. Figure 2 shows maps of the three walking courses.

Courses A and B include level walking, walking up and down ramps, and up and down stairs. Figure 3(a–d) illustrate the walking tasks A and B: Fig. 3(a) shows a level area. Figure 3(b) shows typical stairs. They consist of one or two groups of 8 to 13 steps that are separated by landings. Typical ramps, as shown in Fig. 3(c), have a slope of 6% and a length of 50 m to 70 m. There is one short and steep ramp in course A as shown in Fig. 3(d). Here the slope is 15%, and the length is approx. 3 m. The walking distance for each course is roughly 500 m.

Course C includes straight level walking, walking a 90-degree curve, stepping up and down a curb in a lay-by, and turning by 180 degrees. The lay-by is shown in Fig. 3(e). The curb height here is 10 cm. The walking distance in course C is roughly 200 m.

Sensors

The participants were equipped with the following sensors, cf. Figure 4:

- Inertial measurement units (IMUs). For tracking of motion and posture, we used a full-body inertial kinematic measurement system, the Xsens motion capture suit29 consisting of the MVN-Link BIOMECH full-body system and the MVN Link lycra suit. The system consists of 17 IMU sensors with 3D rate gyroscopes for measuring angular velocity, 3D linear accelerometers measuring accelerations including gravitational acceleration, 3D magnetometers for measuring the Earth’s magnetic field, and a barometer to measure the atmospheric pressure. The IMUs are placed on the head, sternum, sacrum, and on the shoulders, upper arms, forearms, hands, upper legs, lower legs, and feet.

- Force sensitive resistors (FSRs). Foot pressure data were recorded using the IEE ActiSense Smart Footwear Sensor insole30 (IEE S.A., Luxembourg). The measurement system consists of thin, foil-based, removable pressure insoles with eight high dynamic pressure sensing cells that are inserted into the shoes below the shoes’ insoles. The FSR sensor cells are located below the hallux, the toes, the heads of the first, third, and fifth metatarsal, respectively, the arch, and the left and right side of the heel. The pressure insoles are controlled by ECUs that are clipped to the participants’ shoes and come with IMUs consisting of a 3D accelerometer, a 3D gyroscope, and a magnetometer. Note that here the accelerometer and gyroscope axes coincide, while the magnetometer orientation is rotated by 180 degrees around the accelerometer/gyroscope x-axis.

- Eye tracker. Eye-tracking data were recorded with a mobile eye tracker, the Pupil Invisible Glasses31. The eye tracker is worn like a regular pair of glasses. Two small cameras on the bottom rim of the glasses capture the wearer’s eye movements, using infrared (IR) LEDs to track the pupil, and map the wearer’s gaze point into a scene video captured by a scene camera attached to the spectacle frame.

The sensory equipment of the participants (left). They wore a mobile eye tracker, the Pupil Invisible Glasses31 (top right), an Xsens full-body motion suit with 17 IMU sensors (middle right), and the IEE ActiSense Smart Footwear Sensor insoles (IEE S.A., Luxembourg) to record foot pressure data (bottom right). Note that the ECUs of the pressure insoles, the small black boxes attached to the shoes, also contain an IMU each. This means that there are two IMUs from different measurement systems attached to each foot: the IMU from the motion capture suit is located below the shoe tongue in the middle of the top of the instep and the pressure insole IMU is located on the shoe on the side of the top of the instep. The participant shown in this figure provided permission for their likeness to be used.
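The 180-degree rotation between the insole magnetometer and the accelerometer/gyroscope frame noted in the FSR sensor description can be undone with a fixed rotation matrix. The following is a minimal sketch, assuming magnetometer samples are available as an (N, 3) array in x, y, z order; the function name `align_magnetometer` is hypothetical, and whether the exported CSV files already apply this correction is not stated here.

```python
import numpy as np

# Rotation by 180 degrees about the x-axis: x is kept, y and z change sign.
R_X_180 = np.array([
    [1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
])


def align_magnetometer(mag_xyz):
    """Rotate (N, 3) magnetometer samples by 180 degrees about the x-axis.

    Hypothetical helper: assumes columns are ordered x, y, z.
    """
    mag_xyz = np.asarray(mag_xyz, dtype=float)
    return mag_xyz @ R_X_180.T


# Made-up sample values, for illustration only.
sample = np.array([[10.0, -20.0, 35.0]])
aligned = align_magnetometer(sample)
```

Because a 180-degree rotation is its own inverse, the same function maps in either direction between the two frames.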

Data collection

Hardware set-up

The participants were asked to bring tightly fitting clothes and comfortable, flat, lace-up shoes with removable insoles. They wore the Xsens suit over their clothes. The FSR insoles were inserted in their shoes below the shoe insoles. The Xsens suit requires a separate calibration recording before the actual data recording. This calibration consisted of the participant standing in a neutral pose for 5 seconds, then walking forward for 5 to 10 meters, making a U-turn, walking back to the starting position, turning, and again standing in a neutral pose for 5 seconds. The other sensors did not need a calibration procedure. Their proper functioning was checked using their associated smartphone applications. To facilitate the synchronization of the different sensors, we asked the participants to look at their feet for the first few and last few steps of each recording.

Experimental tasks

The participants were asked to complete three repetitions of walking courses A and B, resp., and five repetitions of walking course C. They were instructed to walk at their preferred, normal speed and to take a break whenever necessary. All participants completed each walking task without taking a break. One experimenter followed them at a distance to give directions and support the participant if necessary.

The experiments took place in dry weather conditions either in the late morning or the early afternoon to avoid busy commuting times at the train station. However, all participants encountered commuters and passers-by during the experiments so that the data contains side-stepping maneuvers. Note also that some participants chose to take two steps at a time when climbing stairs.

The average time for completing one repetition of courses A, B, and C was 235 ± 22 s, 198 ± 19 s, and 77 ± 7 s, respectively. Figure 5 illustrates the time it took each participant to complete one repetition of each task. Note that the participant with ID 7 completed six instead of five repetitions of course C. The total recording time of the complete data set amounts to 9:22 hrs with 3:55 hrs for course A, 3:17 hrs for course B, and 2:10 hrs for course C.
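The reported totals can be roughly cross-checked against the mean durations. The sketch below assumes that the means are taken over all repetitions and that every participant completed the nominal number of repetitions, apart from the extra repetition of course C by participant 7; neither assumption is confirmed in the text, so small deviations from the reported totals are expected.

```python
def hhmm(seconds):
    """Format a duration in seconds as h:mm, rounded to the nearest minute."""
    minutes = round(seconds / 60)
    return f"{minutes // 60}:{minutes % 60:02d}"


participants = 20
mean_duration_s = {"A": 235, "B": 198, "C": 77}

# Nominal repetitions: 3 for courses A and B, 5 for course C,
# plus one extra repetition of course C by participant 7.
repetitions = {
    "A": participants * 3,
    "B": participants * 3,
    "C": participants * 5 + 1,
}

estimate_s = {c: repetitions[c] * mean_duration_s[c] for c in mean_duration_s}

for course, seconds in estimate_s.items():
    print(course, hhmm(seconds))
print("total", hhmm(sum(estimate_s.values())))
```

The estimates land within about a minute of the reported per-course totals, which is consistent with rounding of the mean durations.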

Data processing

The data were recorded on-device and transferred to a desktop computer for post-processing. For each sensor, we used the post-processing software provided by the respective manufacturer.

- IMU Data. The Xsens IMU data were recorded with a sampling frequency of 240 Hz. The raw sensor data was post-processed with the Xsens MVN software29 (MVN Studio 4.97.1 rev 62391) that computes full-body kinematic data based on a biomechanical model of the participant and sensor fusion algorithms. We provide the full data as post-processed by MVN Studio. Magnetometer data are subject to magnetic distortion from the environment and should be used with care. The resulting data were saved in MVNX file format for further processing.

- FSR Data. FSR data were recorded with a sampling frequency of 200 Hz. The IEE ActiSense Smart Footwear Sensor insoles30 come with a tool to convert the raw digital values to voltages and to convert the raw accelerometer, gyroscope, and magnetometer data to accelerations, angular rates and magnetic flux density. Additionally, the tool synchronizes the data from both feet. The resulting data is saved in CSV file format.

- Eye Tracking Data. The gaze data were recorded with a sampling rate of 66 Hz. We used the open-source software Pupil Player32 (v3.4) to export the gaze position data to CSV file format and to create a scene video with a gaze position overlay. In a second step, we blurred passers-by and license plates in the resulting scene video for data protection reasons.

The data of all three sensors have been down-sampled to 60 Hz. In the case of the IMU data, we kept every fourth data point, whereas we did a linear interpolation of the FSR and eye-tracking data values. All three modalities have been synchronized manually by one experimenter and validated by the other, using the visualization and labeling tool shown in Fig. 6 that is provided with the software related to this data set. The participants were asked to look at their feet during the first and last few steps of each recording to facilitate the post-synchronization. This is required in particular to synchronize the eye tracker recordings with the other two modalities. Some example videos showing all sensor modalities after the synchronization procedure are available in the code repository related to the data set.
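The two down-sampling strategies described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' processing code: the function names are hypothetical, the signals are synthetic, and the time grid of the resampled data is assumed to start at the first source timestamp.

```python
import numpy as np

TARGET_HZ = 60.0


def decimate_every_nth(samples, n=4):
    """Keep every n-th sample, e.g. 240 Hz -> 60 Hz for n=4."""
    return np.asarray(samples)[::n]


def resample_linear(t_src, values, target_hz=TARGET_HZ):
    """Linearly interpolate a 1-D signal onto a uniform target_hz time grid."""
    t_src = np.asarray(t_src, dtype=float)
    values = np.asarray(values, dtype=float)
    # Small epsilon so the final timestamp is included despite rounding.
    t_new = np.arange(t_src[0], t_src[-1] + 1e-9, 1.0 / target_hz)
    return t_new, np.interp(t_new, t_src, values)


# Synthetic one-second IMU-like signal at 240 Hz.
t_imu = np.arange(240) / 240.0
imu_60 = decimate_every_nth(np.sin(2 * np.pi * t_imu))

# Synthetic FSR-like ramp at 200 Hz, covering 0 s to 1 s inclusive.
t_fsr = np.arange(201) / 200.0
t_60, fsr_60 = resample_linear(t_fsr, 2.0 * t_fsr)
```

Plain slicing works for the IMU data because 240 Hz is an integer multiple of 60 Hz, whereas 200 Hz and 66 Hz are not, which is why interpolation is needed for the other two modalities.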

Visualization tool that jointly displays all three sensor modalities. The body posture is based on the Xsens segment positions. In the case of the insoles, eight pressure segments are shown for each foot as well as a binary state that indicates whether the foot is on the ground. The scene video including the current fixation as well as the recent gaze trajectory is visualized from the eye tracker recordings.

Using the same tool, the data was labeled by walk mode and walk orientation: The walk modes are ‘walk’, ‘stairs_down’, ‘stairs_up’, ‘slope_down’, ‘slope_up’, and ‘pavement_up’, ‘pavement_down’ to indicate stepping up or down a curb. The walk orientations are ‘straight’, ‘curve_right’, ‘curve_left’, ‘turn_around_clockwise’, and ‘turn_around_counterclockwise’.

Additionally, we label whether or not the participant interacts with passers-by, i.e., whether or not the participant’s motion trajectory is affected by the motion of other persons in their surroundings. It has been shown that gaze is the main source of information used by pedestrians to control their motion trajectory33. Therefore, we annotated encounters with other persons as interactions based on the gaze position that was overlaid on the eye tracker’s world video. We defined an interaction to start as soon as other persons were visually fixated by the participants and to end when the persons left the field of vision.

To easily identify identical course segments over participants and repetitions, the walk courses were segmented by walk mode and consecutively numbered, cf. Figure 8. Since each task was recorded in one go, we included a counter to indicate the repetition of the walking task.

Step detection

To simplify gait analysis, we determine the heel strike and toe-off events by the pressure outputs of the insole sensors. Similar to the approach of Hassan et al.34, we use a heuristic based on two thresholds. We normalize the measured values of each sensor cell for each recording between the first and 99th percentile to remove outliers and achieve an invariance against different body weights, shoe characteristics etc. A foot is assumed to be on the ground if its maximum sensor output surpasses the threshold αstep and lifted if it falls below the threshold αlift. We consider all sensor cells because the most relevant ones can vary, particularly for stairs and slopes or when subjects perform evasive motions due to other pedestrians or cyclists. A heel strike or toe-off event is given for the first moment the foot switches from being lifted to being on the ground and vice versa. An illustrative result of the detected events is depicted in Fig. 7.
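The two-threshold heuristic can be sketched as below. This is a minimal illustration, not the authors' implementation: the function names and the threshold values 0.4 and 0.2 are hypothetical placeholders (the optimized values are not given here), and the input is assumed to be an (N, 8) array of pressure values for one foot.

```python
import numpy as np


def normalize_cells(pressure, lo=1, hi=99):
    """Scale each sensor cell (column) to [0, 1] between its lo-th and hi-th percentile."""
    pressure = np.asarray(pressure, dtype=float)
    p_lo = np.percentile(pressure, lo, axis=0)
    p_hi = np.percentile(pressure, hi, axis=0)
    # Avoid division by zero for cells with a constant signal.
    span = np.where(p_hi > p_lo, p_hi - p_lo, 1.0)
    return np.clip((pressure - p_lo) / span, 0.0, 1.0)


def detect_events(pressure, alpha_step=0.4, alpha_lift=0.2):
    """Return (heel_strike_indices, toe_off_indices) for one foot.

    alpha_step and alpha_lift are placeholder values, not the optimized ones.
    """
    # Maximum over all cells, since the most relevant cell can vary.
    peak = normalize_cells(pressure).max(axis=1)
    on_ground = bool(peak[0] > alpha_step)  # initial state from the first sample
    heel_strikes, toe_offs = [], []
    for i in range(1, len(peak)):
        if not on_ground and peak[i] > alpha_step:
            on_ground = True
            heel_strikes.append(i)
        elif on_ground and peak[i] < alpha_lift:
            on_ground = False
            toe_offs.append(i)
    return heel_strikes, toe_offs


# Synthetic example: only cell 0 carries load, in two stance phases.
pattern = np.array([0.0] * 10 + [1.0] * 10 + [0.0] * 10 + [1.0] * 10 + [0.0] * 10)
pressure = np.zeros((50, 8))
pressure[:, 0] = pattern
heel_strikes, toe_offs = detect_events(pressure)
```

Using two thresholds rather than one gives hysteresis, so that small fluctuations around a single threshold do not produce spurious events.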

Both thresholds αstep and αlift are optimized using a random search on a small set of annotated recordings, minimizing the mean absolute error between the ground truth events and the detected ones. The set of annotated recordings contains five different subjects. For each of those the heel-strike and toe-off events for 60 steps were annotated, thereby solely using the insole visualization shown in Fig. 6. The average temporal difference between detected and annotated events of a test subject is approx. 3.5 ms for heel strikes and approx. 5.5 ms for toe-offs, which is accurate considering the signal frequency of 60 Hz.
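A random search over the two thresholds might look like the following sketch. It is an assumption-laden illustration: the search ranges, the number of trials, the constraint that αlift does not exceed αstep, and the pairing of detected with annotated events in order are all choices made here and are not taken from the source. The detector is repeated in compact form, operating on an already normalized per-sample maximum.

```python
import random


def detect(peak, alpha_step, alpha_lift):
    """Two-threshold detector on a normalized 1-D peak-pressure signal."""
    on_ground, strikes, offs = False, [], []
    for i, value in enumerate(peak):
        if not on_ground and value > alpha_step:
            on_ground = True
            strikes.append(i)
        elif on_ground and value < alpha_lift:
            on_ground = False
            offs.append(i)
    return strikes, offs


def mean_abs_error(detected, annotated):
    """MAE in samples, pairing events in order; infinite if the counts differ."""
    if len(detected) != len(annotated):
        return float("inf")
    return sum(abs(d - a) for d, a in zip(detected, annotated)) / len(annotated)


def random_search(peak, true_strikes, true_offs, n_trials=200, seed=0):
    """Return (error, alpha_step, alpha_lift) of the best sampled threshold pair."""
    rng = random.Random(seed)
    best = (float("inf"), None, None)
    for _ in range(n_trials):
        alpha_lift = rng.uniform(0.0, 1.0)
        alpha_step = rng.uniform(alpha_lift, 1.0)  # assumed: lift <= step
        strikes, offs = detect(peak, alpha_step, alpha_lift)
        err = mean_abs_error(strikes, true_strikes) + mean_abs_error(offs, true_offs)
        if err < best[0]:
            best = (err, alpha_step, alpha_lift)
    return best


# Synthetic signal: two identical stance phases with ramped loading.
cycle = [0.0] * 5 + [0.1, 0.3, 0.6, 0.9, 1.0, 1.0, 1.0, 0.9, 0.6, 0.3, 0.1] + [0.0] * 5
peak = cycle * 2
best_err, best_step, best_lift = random_search(peak, [7, 28], [14, 35])
```

To convert an error in samples to milliseconds at 60 Hz, multiply by 1000/60.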

We also provide the estimated foot contact data calculated by the Xsens software. However, in our experience they are often inaccurate, particularly for slopes and stairs, and we suggest treating them with caution.

Data Records

We provide the data on the figshare data-sharing platform35. The repository contains a folder with detailed documentation on the walk courses, including photos to illustrate the area, length, and slope of the ramps and the number, height, and width of the steps in the stairs. The processed data is provided in a file structure that is organized hierarchically by experimental task and participant. Each participant folder contains synchronized CSV data files with (i) eye tracker data (8 columns), (ii) pressure insoles data (91 columns), (iii) full-body Xsens data (757 columns), (iv) labels (22 columns) and (v) the eye tracker scene video in MP4 format. Detailed lists and explanations of all data columns in each file are given in Tables 2–5 and are also provided in the documentation folder on the data-sharing platform. Note that all files contain columns with the experiment time, the participant ID, and the experimental task. The experiment time is synchronized over all sensors and may be used to join data from several files.
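Since all files share the experiment time, participant ID, and experimental task, the modalities can be joined on these columns. The sketch below uses small in-memory frames with hypothetical column names (`time`, `participant_id`, `task`, `gaze_x`, and so on); the actual column names are listed in the documentation folder, and in practice the frames would be loaded with `pandas.read_csv`.

```python
import pandas as pd

# Hypothetical key column names; check the documentation for the real ones.
KEYS = ["time", "participant_id", "task"]


def join_modalities(frames):
    """Inner-join a list of data frames on the shared key columns."""
    merged = frames[0]
    for frame in frames[1:]:
        merged = merged.merge(frame, on=KEYS, how="inner")
    return merged


# Three 60 Hz samples with identical time stamps in every frame (made-up values).
t = [i / 60 for i in range(3)]
base = {"time": t, "participant_id": [1, 1, 1], "task": ["A", "A", "A"]}

gaze = pd.DataFrame({**base, "gaze_x": [0.1, 0.2, 0.3], "gaze_y": [0.5, 0.5, 0.4]})
insoles = pd.DataFrame({**base, "left_pressure_1": [0.0, 0.7, 0.9]})
labels = pd.DataFrame({**base, "walk_mode": ["walk", "walk", "stairs_up"]})

merged = join_modalities([gaze, insoles, labels])
```

An exact join on a floating-point time column only works because the time stamps are identical across files after synchronization; otherwise a tolerance-based join would be needed.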

Related Data Sets

An overview of related data sets is given by Table 7. Our database differs from the available ones in multiple aspects. The main difference is the extensive sensory setup that combines full-body IMU data with foot pressure and gaze data. In particular, this is the first data set providing natural gait data that includes the visual behavior of the subjects. Another significant difference lies in the trial design. In most data sets, trials aim to capture specific effects such as the influence of the terrain complexity on the gait in an isolated manner. In contrast, our scenarios were designed to capture traits of natural everyday walks, including transitions between various gait patterns. Each trial consists of several minutes of walking in a public space covering common elements such as straight and curvy passages, slopes, stairs, and pavements. All these elements are annotated.

Technical Validation

The sensors were validated before each recording session in the following way: The Xsens suit was calibrated in the lab before going to the experiment location. The validity of the calibration was checked by visualizing the resulting modeled skeleton in the lab using the Xsens software. The calibration was repeated at the experiment location directly before the recording to account for potential shifts of the IMU sensors. The insoles were validated for each participant by inspecting the pressure signals using the manufacturer’s live-streaming app during a short practice walk of approximately 30 seconds. The eye tracker does not require manual calibration. However, we ensured a reasonable accuracy of the estimated gaze point by letting participants fixate four objects in the vicinity and inspected the estimated gaze point on the world camera video.

Usage Notes

Each sensor modality is stored in a separate CSV file for each walking task and participant and can be imported into any software framework for further analysis. The labels are also available in separate CSV files. We provide a Python script that generates a single pandas data frame from the CSV files, which can be directly used within standard machine-learning libraries such as Scikit-learn36, Pandas37, PyTorch38 or Tensorflow39.

The data provides natural walking behavior annotated by different walking modes and heel strike and toe-off timings. One concrete application could be to train real-time machine learning models to classify and/or predict the walk modes and/or the heel strike and toe-off timings in order to enhance the control of walk assist systems such as exoskeletons40 or prostheses41.
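As a starting point for such a real-time classification task, the synchronized 60 Hz data can be cut into fixed-length windows, each labeled with the walk mode at its final sample so that only past data is used. This is one possible setup, sketched with synthetic data; the window length, stride, and function name are arbitrary choices made here.

```python
import numpy as np


def make_windows(features, labels, window=60, stride=30):
    """Cut an (N, D) feature array into (M, window, D) windows.

    Each window is labeled with the label of its last sample (causal labeling).
    """
    features = np.asarray(features, dtype=float)
    X, y = [], []
    for start in range(0, len(features) - window + 1, stride):
        stop = start + window
        X.append(features[start:stop])
        y.append(labels[stop - 1])
    return np.stack(X), np.array(y)


# Synthetic example: 3 seconds at 60 Hz with 3 feature channels.
rng = np.random.default_rng(0)
features = rng.normal(size=(180, 3))
labels = ["walk"] * 90 + ["stairs_up"] * 90

X, y = make_windows(features, labels)
```

The resulting arrays can be flattened or passed directly to sequence models in the libraries mentioned above. Splitting by participant rather than by window avoids leakage between training and test sets.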

Code availability

To streamline the processing of the data, we provide various tools and scripts that are accessible at https://github.com/HRI-EU/multi_modal_gait_database. In particular, a Python script is available to join the CSV files into one single pandas data frame, which also supports filtering for specific tasks, participants, and data columns. Furthermore, we provide a visualization tool that jointly displays all three sensor modalities as illustrated by Fig. 6. The tool allows the adjustment of current labels and the creation of custom labels or tags, enabling the generation of additional machine learning tasks.

References

Chen, S., Lach, J., Lo, B. & Yang, G.-Z. Toward pervasive gait analysis with wearable sensors: A systematic review. IEEE Journal of Biomedical and Health Informatics 20, 1521–1537 (2016).

DeLisa, J. A. (ed.) Gait analysis in the science of rehabilitation (Diane Publishing, 1998).

Larsen, P. K., Simonsen, E. B. & Lynnerup, N. Gait analysis in forensic medicine. Journal of Forensic Sciences 53, 1149–1153 (2008).

Lima, R., Fontes, L., Arezes, P. & Carvalho, M. Ergonomics, anthropometrics, and kinetic evaluation of gait: A case study. Procedia Manufacturing 3, 4370–4376 (2015).

Tao, W., Liu, T., Zheng, R. & Feng, H. Gait analysis using wearable sensors. Sensors 12, 2255–2283 (2012).

Muro-De-La-Herran, A., Garcia-Zapirain, B. & Mendez-Zorrilla, A. Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications. Sensors 14, 3362–3394 (2014).

Barbareschi, G., Richards, R., Thornton, M., Carlson, T. & Holloway, C. Statically vs dynamically balanced gait: Analysis of a robotic exoskeleton compared with a human. In 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 6728–6731 (2015).

Gouwanda, D. & Senanayake, S. M. N. A. Emerging trends of body-mounted sensors in sports and human gait analysis. In 4th Kuala Lumpur International Conference on Biomedical Engineering 2008, 715–718 (2008).

Loose, H. & Lindström Bolmgren, J. GaitAnalysisDataBase - short overview. Technische Hochschule Brandenburg, University of Applied Sciences, http://gaitanalysis.th-brandenburg.de/static/files/GaitAnalysisDataBaseShortOverview.f883b62f9e67.pdf (2019).

Truong, C. et al. A data set for the study of human locomotion with inertial measurements units. Image Processing On Line 381–390 (2019).

Ngo, T. T., Makihara, Y., Nagahara, H., Mukaigawa, Y. & Yagi, Y. The largest inertial sensor-based gait database and performance evaluation of gait-based personal authentication. Pattern Recognition 47, 228–237 (2014).

Reiss, A. & Stricker, D. Introducing a new benchmarked dataset for activity monitoring. In 2012 16th International Symposium on Wearable Computers, 108–109 (2012).

Reiss, A. & Stricker, D. Creating and benchmarking a new dataset for physical activity monitoring. In PETRA ‘12: Proceedings of the 5th International Conference on PErvasive Technologies Related to Assistive Environments, Article No. 40 (2012).

Chavarriaga, R. et al. The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognition Letters 34, 2033–2042 (2013).

Chereshnev, R. & Kertész-Farkas, A. HuGaDB: Human gait database for activity recognition from wearable inertial sensor networks. In van der Aalst, W. M. P. E. A. (ed.) Analysis of Images, Social Networks and Texts - 6th International Conference, AIST 2017, 131–141 (2017).

Casale, P., Pujol, O. & Radeva, P. Personalization and user verification in wearable systems using biometric walking patterns. Personal and Ubiquitous Computing 16, 563–580 (2012).

Khandelwal, S. & Wickström, N. Evaluation of the performance of accelerometer-based gait event detection algorithms in different real-world scenarios using the MAREA gait database. Gait & Posture 51, 84–90 (2017).

Luo, Y. et al. A database of human gait performance on irregular and uneven surfaces collected by wearable sensors. Scientific Data 7, 219 (2020).

Tucker, M. R. et al. Control strategies for active lower extremity prosthetics and orthotics: A review. Journal of NeuroEngineering and Rehabilitation 12, 1 (2015).

Thomas, N. D., Gardiner, J. D., Crompton, R. H. & Lawson, R. Look out: An exploratory study assessing how gaze (eye angle and head angle) and gait speed are influenced by surface complexity. PeerJ 8, e8838 (2020).

Matthis, J. S., Yates, J. L. & Hayhoe, M. M. Gaze and the control of foot placement when walking in natural terrain. Current Biology 28, 1224–1233.e5 (2018).

Miyasike-daSilva, V., Allard, F. & McIlroy, W. E. Where do we look when we walk on stairs? Gaze behaviour on stairs, transitions, and handrails. Experimental Brain Research 209, 73–83 (2011).

Li, W. et al. Outdoor falls among middle-aged and older adults: A neglected public health problem. American Journal of Public Health 96, 1192–1200 (2006).

Khanna, T. & Singh, S. Effect of gaze stability exercises on balance in elderly. IOSR Journal of Dental and Medical Sciences (IOSR-JDMS) 13, 41–48 (2014).

Garg, H. et al. Gaze stability, dynamic balance and participation deficits in people with multiple sclerosis at fall-risk. The Anatomical Record 301, 1852–1860 (2018).

Yamada, M. et al. Maladaptive turning and gaze behavior induces impaired stepping on multiple footfall targets during gait in older individuals who are at high risk of falling. Archives of Gerontology and Geriatrics 54, e102–e108 (2012).

Ravichandar, H. C., Kumar, A. & Dani, A. Gaze and motion information fusion for human intention inference. International Journal of Intelligent Robotics and Applications 2, 136–148 (2018).

Singh, R. et al. Combining gaze and AI planning for online human intention recognition. Artificial Intelligence 284, 103275 (2020).

Schepers, M., Giuberti, M. & Bellusci, G. Xsens MVN: Consistent tracking of human motion using inertial sensing. Tech. Rep., Xsens Technologies B. V. (2018).

IEE S.A. Smart footwear sensing solutions fact sheet. https://www.iee-sensing.com/media/5df9d3fb484ec_191217-fs-actisense-web.pdf (2019).

Tonsen, M., Baumann, C. K. & Dierkes, K. A high-level description and performance evaluation of pupil invisible. arXiv:2009.00508 [cs.CV] (2020).

Pupil Labs. Pupil. https://github.com/pupil-labs/pupil (2021).

Moussaïd, M., Helbing, D. & Theraulaz, G. How simple rules determine pedestrian behavior and crowd disasters. Proceedings of the National Academy of Sciences 108, 6884–6888 (2011).

Hassan, M., Daiber, F., Wiehr, F., Kosmalla, F. & Krüger, A. Footstriker: An EMS-based foot strike assistant for running. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 1 (2017).

Losing, V. & Hasenjäger, M. A multi-modal gait database of natural everyday-walk in an urban environment, figshare, https://doi.org/10.6084/m9.figshare.c.5758997.v1 (2022).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011).

The pandas development team. pandas-dev/pandas: Pandas 1.4.3 (v1.4.3). Zenodo https://doi.org/10.5281/zenodo.6702671 (2022).

Paszke, A. et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32, 8024–8035 (2019).

Abadi, M. et al. TensorFlow: Large-scale machine learning on heterogeneous systems (2015). Software available from tensorflow.org.

Jang, J., Kim, K., Lee, J., Lim, B. & Shim, Y. Online gait task recognition algorithm for hip exoskeleton. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 5327–5332 (2015).

Maqbool, H. F. et al. A real-time gait event detection for lower limb prosthesis control and evaluation. IEEE Transactions on Neural Systems and Rehabilitation Engineering 25, 1500–1509 (2017).

Xsens Technologies B.V., Enschede, Netherlands. MVN User Manual, Revision Z, 10 04 2020 edn. (2020).

Horst, F., Slijepcevic, D., Simak, M. & Schöllhorn, W. I. Gutenberg Gait Database, a ground reaction force database of level overground walking in healthy individuals. Scientific Data 8, 232 (2021).

Fukuchi, C. A., Fukuchi, R. K. & Duarte, M. A public dataset of overground and treadmill walking kinematics and kinetics in healthy individuals. PeerJ 6, e4640 (2018).

Acknowledgements

The authors would like to thank Deutsche Bahn AG for kindly permitting video recordings on their premises, Tobias Meyer from IEE S.A. for technical support with the ActiSense Smart Footwear Sensors, Jonathan Jakob for his help in labeling and blurring the eye tracker scene videos, and Taizo Yoshikawa for sharing his visualization of the Xsens data.

Author information

Contributions

V.L. and M.H. conceived, conducted, and analysed the experiments. Both authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Losing, V., Hasenjäger, M. A Multi-Modal Gait Database of Natural Everyday-Walk in an Urban Environment. Sci Data 9, 473 (2022). https://doi.org/10.1038/s41597-022-01580-3

DOI: https://doi.org/10.1038/s41597-022-01580-3

- Springer Nature Limited
