Abstract

How the brain processes information accurately despite stochastic neural activity is a longstanding question1. For instance, perception is fundamentally limited by the information that the brain can extract from the noisy dynamics of sensory neurons. Seminal experiments2,3 suggest that correlated noise in sensory cortical neural ensembles is what limits their coding accuracy4,5,6, although how correlated noise affects neural codes remains debated7,8,9,10,11. Recent theoretical work proposes that how a neural ensemble’s sensory tuning properties relate statistically to its correlated noise patterns is a greater determinant of coding accuracy than is absolute noise strength12,13,14. However, without simultaneous recordings from thousands of cortical neurons with shared sensory inputs, it is unknown whether correlated noise limits coding fidelity. Here we present a 16-beam, two-photon microscope to monitor activity across the mouse primary visual cortex, along with analyses to quantify the information conveyed by large neural ensembles. We found that, in the visual cortex, correlated noise constrained signalling for ensembles with 800–1,300 neurons. Several noise components of the ensemble dynamics grew proportionally to the ensemble size and the encoded visual signals, revealing the predicted information-limiting correlations12,13,14. Notably, visual signals were perpendicular to the largest noise mode, which therefore did not limit coding fidelity. The information-limiting noise modes were approximately ten times smaller and concordant with mouse visual acuity15. Therefore, cortical design principles appear to enhance coding accuracy by restricting around 90% of noise fluctuations to modes that do not limit signalling fidelity, whereas much weaker correlated noise modes inherently bound sensory discrimination.

Main

The sensitivity and noise fluctuations of primary sensory neurons, such as photoreceptors or mechanoreceptors, limit the perception of weak stimuli16,17,18, although disagreement persists about which downstream noise sources limit perceptual discriminations when sensory inputs exceed detection thresholds4,5,6,7,8,9,10,11,12,13,14. A groundbreaking experiment spurred this debate by identifying individual visual cortical neurons that signal visual attributes nearly as reliably as an animal’s perceptual reports2,3. One proposed explanation is that similarly tuned cortical neurons might share positively correlated noise fluctuations that limit the perceptual improvements attainable by averaging signals from multiple cells with similar response properties2,4 (Extended Data Fig. 1a–c).

Theoretical studies show that positively correlated noise limits the information that cells with similar sensory-evoked responses can encode4,5,7, but this is not necessarily the case for ensembles of cells with diverse tuning properties8,9,10 (Extended Data Fig. 1d–f). A recent framework based on a feedforward neural network asserts that, in the space of all possible neural ensemble dynamics, it is only noise in the dimensions of sensory representations that constrains coding fidelity13,14 (Extended Data Fig. 1g–m). Previous experiments have examined noise in cell pairs, but this approach incurs substantial measurement errors13,19,20 and the results were conflicting4,6,21,22,23. To our knowledge, no previous study has recorded neural ensemble noise patterns, related these to sensory signals, and tested the idea that only specific noise patterns confine the information encoded by large neural populations13,14.

A multi-beam two-photon microscope

To make such measurements, we built a laser-scanning two-photon microscope with a 4-mm2 field of view for imaging across the span of the mouse primary visual cortex (V1). The microscope has 16 photodetectors and 16 corresponding beams, which originate from one laser and are focused 500 μm apart in the specimen in a 4 × 4 array (Fig. 1). Four beams are active at any instant, and switching to a different four beams takes about 50 ns; this enables scanning of a larger area per unit time than would be feasible with one beam and the same optics (Extended Data Figs. 2–4). Compared to 16 active beams, our approach yields fourfold greater fluorescence for any given time-averaged illumination power and delivers fourfold less heat to the brain for an equivalent rate of fluorescence emission (Supplementary Note). The active laser foci are ≥1 mm apart, so fluorescence scattering between the four active image tiles is <2%; scattering into inactive tiles can be corrected computationally using the 16 photocurrents (Extended Data Fig. 4). Our system images neocortical activity down to layer 5 with full-frame acquisition rates of 7.23–17.5 Hz (Supplementary Videos 1–3), whereas other two-photon microscopes with large fields of view attain similar imaging rates over smaller sub-fields24,25,26,27 (Extended Data Fig. 2j, k).

a, Schematic of the microscope. Sixteen laser beams converge on a pair of galvanometer mirrors (X- and Y-galvos). Sixteen photomultiplier tubes (PMTs) detect fluorescence. b, Two-photon image (greyscale, mean of 1,000 frames taken at 7.23 Hz) of GCaMP6f-expressing layer 2/3 pyramidal neurons in the visual cortex of an awake mouse. Overlaid are >2,000 neuronal somata (green) identified in the Ca2+ video. Boxed areas are magnified in c. c, Magnifications of the boxed areas in b. d, Example Ca2+-activity sources (computationally identified), revealing dendrites. Images in b–d are representative of results from 10 mice. Scale bars: b, 250 μm; c, d, 100 μm.

Imaging studies across cortical area V1

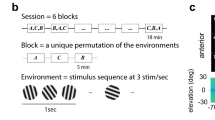

We studied layer 2/3 pyramidal neurons, which project extensive connections from V1 to higher visual areas. In awake mice expressing the Ca2+-indicator GCaMP6f in these neurons, we imaged around 1,000–2,000 cells concurrently as mice viewed, with one eye, a random sequence of moving gratings. Each grating was oriented at either +30° or −30° from vertical, lasted 2 s and spanned the central ~50 deg of the eye’s visual field (Fig. 2a–c). There were 350 trials with each stimulus, but because locomotion modulates vision28 we analysed only trials with locomotor speeds of less than 0.2 mm s−1 (217–332 trials per stimulus). From these recordings we extracted 8,029 neurons, mainly in V1 (1,031–2,191 cells in each of 5 mice; Extended Data Figs. 5, 6).

a, Image of visual cortex, processed as in Fig. 1b. The coordinate system indicates anterior (A) and lateral (L) directions. The red line marks the area V1 boundary, found by retinotopic mapping. Scale bar, 500 μm. b, Top, in each trial, one of two randomly chosen stimuli (A or B) appeared for 2 s, followed by a uniform background for 2 s. Bottom, each stimulus was a drifting grating, oriented at either +30° or −30° from vertical. The analyses in d–g used 217–332 trials per stimulus in each of 5 mice. c, Example Ca2+ activity traces. F, fluorescence intensity. d, Histograms of noise correlation coefficients (Pearson’s r) for concurrently imaged cell pairs (6,946,280 cell pairs; 5 mice), computed using the estimated spike count of each cell within [0.5 s, 2 s] of stimulus onset. r values are averages across both stimuli for real and trial-shuffled datasets. The latter histogram was Gaussian (R2 = 0.9982 ± 0.0005 (95% confidence interval)) with a variance around 50% of that of the real data, showing the difficulty of accurately determining pairwise noise correlations with hundreds of trials. Error bars estimated as counting errors are too small to see. e, Histograms of noise correlation coefficients differed significantly for cell pairs with similarly or differently tuned mean responses to the two stimuli, computed for the top 10% most active cells and by grouping cell pairs into those with positively or negatively correlated mean responses to the two stimuli. (***P < 1.3 × 10−6 for all 5 mice; two-tailed Kolmogorov–Smirnov test; 901 cells, 43,887 positively and 43,768 negatively correlated pairs). For exact P values for this and all subsequent figures, see Supplementary Information. f, g, Box plots of mean (f) and full width at half maximum (FWHM) (g) values of the colour-corresponding distributions in d, e. Circles indicate data points for 5 individual mice. 
Noise correlations in f were greater for cell pairs with similarly tuned responses (one-tailed Wilcoxon rank sum test, ***P < 0.001 for all 5 mice). Extended Data Fig. 6g–i shows results for all cell pairs. Boxes cover the middle 50% of values, horizontal lines denote medians, and whiskers span the full range of the data.

A total of 5,008 cells responded at least weakly to the stimuli, with activity rates and stimulus preferences consistent with those found in previous studies28,29 (Extended Data Fig. 6a–d). These neurons likely had substantially overlapping inputs, because mouse V1 neurons respond to large portions of the visual field that are comparable in size to our stimuli29. Noise correlation coefficients in pairs of concurrently recorded cells were widely distributed, with positive mean values (r = 0.06 ± 0.01; mean ± s.d.; 5 mice) as in most previous reports6 (Fig. 2d–g, Extended Data Fig. 6e–i). Active cell pairs that on average responded similarly to the two stimuli had, on average, noise correlation coefficients about twice as large as those that responded dissimilarly (Fig. 2f, g).

To evaluate the significance of these correlations, we created trial-shuffled datasets in which the responses of each cell were permuted across different trials, thereby mimicking cells with statistically identical individual responses as in the real data but with uncorrelated noise fluctuations. Non-zero noise correlations in trial-shuffled datasets merely reflect the finite number of trials. Indeed, noise correlation coefficients were more narrowly distributed than in real data, although many deviated substantially from zero (Fig. 2d, g). This confirms the difficulty of measuring noise correlations given limited trials13,19 and likely explains why previous studies of cell pairs yielded divergent results4,6,21,22,23.
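The trial-shuffling control described above can be sketched in a few lines. This is a minimal illustration on synthetic data, not the authors' pipeline: the function names (`trial_shuffle`, `mean_pairwise_r`), the array layout (trials × cells for a single stimulus) and the simulated shared-noise model are all assumptions made for the example.

```python
import numpy as np

def trial_shuffle(responses, rng):
    """Permute each cell's responses independently across trials.

    responses: array of shape (n_trials, n_cells) for ONE stimulus.
    Every column keeps its own set of values (so its mean and variance),
    but trial-by-trial co-fluctuations between columns are destroyed.
    """
    shuffled = np.empty_like(responses)
    for cell in range(responses.shape[1]):
        shuffled[:, cell] = rng.permutation(responses[:, cell])
    return shuffled

def mean_pairwise_r(x):
    """Mean Pearson correlation over all distinct cell pairs."""
    c = np.corrcoef(x, rowvar=False)
    return c[np.triu_indices_from(c, k=1)].mean()

# Synthetic stand-in for real data: private noise plus a shared fluctuation.
rng = np.random.default_rng(0)
n_trials, n_cells = 300, 50
shared = rng.normal(size=(n_trials, 1))
responses = 0.5 * shared + rng.normal(size=(n_trials, n_cells))

shuffled = trial_shuffle(responses, rng)
r_real = mean_pairwise_r(responses)      # clearly positive
r_shuffled = mean_pairwise_r(shuffled)   # close to zero
```

Individual pairwise coefficients in the shuffled data still scatter around zero because the number of trials is finite, which is the effect the shuffled histogram in Fig. 2d illustrates.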

Evaluations of cortical coding fidelity

To study visual coding, we represented the dynamics using a population vector (one cell per dimension) and used the discriminability index, d′, to assess the statistical confidence in distinguishing the stimuli on the basis of their evoked neural responses30. (d′)2 relates to the Fisher information that the cell ensembles convey about stimulus identity8,13,30, which even for binary classifications (≤1 bit of Shannon entropy) can be infinite—that is, 100% confidence31. Theories of noise correlations and neural coding have largely examined pairwise discriminations, as error rates discriminating more than two stimuli are well approximated using d′ values from all the pairwise comparisons31.

To enable us to determine d′ accurately despite having about 5- to 10-fold fewer trials than cells recorded per mouse, we created analyses to extract the primary, ensemble noise modes without measuring noise in cell pairs (Appendix). First, we performed a dimensional reduction by using partial least squares (PLS) analysis to identify and retain only five population vector dimensions in which the stimuli were highly distinguishable; retaining more than five dimensions only added noise and decreased the ability to distinguish the stimuli (Fig. 3a, b, Extended Data Figs. 5b, 7a–c). In this five-dimensional representation, the neural dynamics evoked by the two stimuli became distinguishable over the first ~0.5 s of stimulus presentation (Fig. 3b–d). Using an optimal linear decoder of the ensemble activity, d′ values rose to a plateau within ~0.5 s of the stimulus onset; the optimal decoder then remained stable until stimulus offset (Extended Data Fig. 7d). In shuffled datasets the stimuli were even more distinguishable, as d′ values attained greater values than in real datasets, indicating that correlated noise degrades stimulus representations in the real data.

a, Schematic of neural ensemble dynamics in a population vector representation of reduced dimensionality. Trajectories, rA(t) and rB(t), depict single-trial responses to different (red, blue) stimuli. At a fixed time after stimulus onset, the sets of responses to the two stimuli form two distributions of points (ellipses). At the bottom left are projections of these distributions onto a subspace, found by PLS analysis, in which responses to the two stimuli are most distinct. The green line indicates the optimal linear boundary for classifying stimuli in this subspace. The stimulus discriminability, d′, equals the separation, Δμ, of the two distributions along the dimension orthogonal to this boundary, divided by the s.d., σ, of each distribution along this dimension. b, c, Neural ensemble responses, 0.15 s (left) and 1 s (right) after stimulus onset, in the two-dimensional space in which the sets of responses to the two stimuli are most distinct, for real (b) or trial-shuffled (c) datasets. The blue and red crosses denote individual trials (220 trials per stimulus); the green and orange lines mark the classification boundaries for real and trial-shuffled data, respectively; and the vertical black line in b is the classification boundary for diagonal discrimination, which ignores correlations in the responses of the cells. woptimal, wshuffled and wdiagonal represent directions normal to the classification boundaries. wshuffled = wdiagonal, as the corresponding classification boundaries are identical. d, Mean values of d′ (coloured data points; N = 5 mice) plotted as a function of time after stimulus onset, for the classifiers in b, c. Error bars represent the standard deviation. Coloured lines show the d′ values for individual mice, computed using the protocol of Extended Data Fig. 5b and averaged over 100 different randomly chosen subsets of 1,000 cells and randomly chosen assignments of trials to decoder training sets and test sets in each mouse. 
d′ values are normalized by those obtained for trial-shuffled data (averaged across 0.83–1.11 s). e, Same as d but using cumulative decoding, which considers the full time-course of the activity of each cell up to time t. For each mouse, d′ values in e–h have the same normalizations as in d. f, (d′)2 values during the interval 0.83–1.11 s from stimulation onset, plotted against the number of cells, n, used for analysis. Data points in f–i are averages over 100 different subsets of cells, and the shading in f, g indicates the standard deviation. For real data, (d′)2 values were well fit by the expression (d′)2 = (d′)2shuffled/(1 + ε × n) (green curves; R2 = 0.88 ± 0.03 (s.d.); ε = 0.0019 ± 0.0007; 5 mice), where ε is the fit parameter and (d′)2shuffled is the (d′)2 value for n cells in a linear regression to the shuffled data (orange lines). g, Same as f, but for (d′)2 values computed using cumulative decoding for the interval 0–1.11 s. h, i, Asymptotic d′ values in the limit of many cells (h) and the number of cells at which (d′)2 attains half its asymptotic value (i) determined from curve fits as in f, g for instantaneous (open boxes) and cumulative (filled boxes) decoding. Optimal linear decoders (green) slightly but significantly outperformed diagonal decoders (black) (**P < 10−11; one-tailed Wilcoxon rank sum test; N = 100 different assignments to decoder testing and training sets using all cells recorded in each mouse; dots are mean values from individual mice). Boxes cover the middle 50% of values, horizontal lines denote medians, and whiskers span the full range of the data. Analyses in d–i are based on 217–332 trials per stimulus in each of 5 mice and time bins of 0.275 s.

We also evaluated decoders that ignore noise correlations. ‘Diagonal decoders’, which neglect off-diagonal elements of the noise covariance matrix30, performed nearly as well as optimal linear decoders, although the decrement was statistically significant (Fig. 3d–h). Thus, although correlated neural noise degraded stimulus encoding, using the noise structure to improve decoding brought only modest benefit.
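To make the comparison between optimal and diagonal decoders concrete, the sketch below computes d′ for both on synthetic five-dimensional data. It uses the standard textbook expressions (optimal weights proportional to Σ⁻¹Δμ; diagonal weights that use only the variances on the diagonal of Σ) and evaluates d′ in-sample, whereas the study used separate training and test sets. All function names and the simulated data are assumptions for illustration.

```python
import numpy as np

def d_prime_along(delta_mu, sigma, w):
    """d' along direction w: mean separation divided by noise s.d."""
    return (w @ delta_mu) / np.sqrt(w @ sigma @ w)

def optimal_and_diagonal_d_prime(resp_a, resp_b):
    """resp_a, resp_b: arrays of shape (n_trials, n_dims), one per stimulus."""
    delta_mu = resp_a.mean(axis=0) - resp_b.mean(axis=0)
    # Noise covariance averaged across the two stimuli.
    sigma = 0.5 * (np.cov(resp_a, rowvar=False) + np.cov(resp_b, rowvar=False))
    w_opt = np.linalg.solve(sigma, delta_mu)   # uses the full covariance
    w_diag = delta_mu / np.diag(sigma)         # ignores off-diagonal terms
    return (d_prime_along(delta_mu, sigma, w_opt),
            d_prime_along(delta_mu, sigma, w_diag))

# Synthetic responses with correlated noise (hypothetical values).
rng = np.random.default_rng(1)
n_trials, n_dims = 400, 5
mixing = rng.normal(size=(n_dims, n_dims))     # induces noise correlations
signal = np.array([1.0, 0.5, 0.0, 0.0, 0.0])
resp_a = rng.normal(size=(n_trials, n_dims)) @ mixing + signal
resp_b = rng.normal(size=(n_trials, n_dims)) @ mixing - signal

d_opt, d_diag = optimal_and_diagonal_d_prime(resp_a, resp_b)
```

For a fixed Δμ and Σ, the optimal direction maximizes the ratio, so `d_opt` can never fall below `d_diag`; how large the gap is depends on how the noise covariance is oriented relative to Δμ.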

The stability of the optimal decoder across most of the stimulus duration suggested that, by integrating neural activity across the stimulus presentation, the brain might in principle average out noise in its sensory representations to improve discrimination. To test this, we examined the optimal linear decoder of the time-integrated neural responses over each trial, which indeed yielded greater d′ values (Extended Data Fig. 7e). For comparison, we examined decoders of the cumulative set of neural responses that had occurred up to each moment in the stimulation trial (Fig. 3e–h). Cumulative decoders surpassed those using individual time-bins of neural activity, but not the simple decoder of time-integrated activity (Extended Data Fig. 7e). This suggests that there was little temporal structure in the sustained neural responses that might improve decoding beyond that attained using time-integrated activity, at least as reported by Ca2+ imaging.

We next examined how decoding varied with n, the number of cells analysed. In the absence of correlated noise, each additional cell used should linearly increase the Fisher information that is conveyed about the identity of the stimulus5,12. Trial-shuffled datasets confirmed this, as (d′)2 increased linearly with n (Fig. 3f, g). In real data, (d′)2 reached a plateau when n exceeded ~1,000 cells, for both instantaneous and cumulative decoders (Fig. 3f–i). This constitutes direct evidence of information saturation in large neural populations, without extrapolations from cell pairs.

Several control analyses bolstered these conclusions. First, we validated linear decoding as a way of assessing Fisher information. The noise covariance matrix was stimulus-independent, with similar matrix elements for both stimuli (r = 0.81 ± 0.16; mean ± s.d.; 20 off-diagonal matrix elements for each of 5 mice). Thus, nonlinear decoders should have accuracy similar to that of the optimal linear decoder, which we confirmed by quantifying the additional information that an optimal quadratic decoder could extract from the data (Extended Data Fig. 7f–h). Second, we verified that there were enough trials to estimate d′ accurately. In every mouse the empirically determined values of d′ approached a stable estimate with increasing numbers of trials and were stationary across the imaging session (Extended Data Fig. 7g, i, j). Third, we confirmed that alternative decoding methods using regularized regression yielded similar d′ values and identical conclusions to those from PLS analysis (Extended Data Fig. 8a, b). Further, we used regularized regression to analyse publicly available neural activity datasets32, which also showed that d′ reached a plateau (Appendix). Fourth, we used simulations to verify that our decoders were robust to potential large sources of neural variability, such as common mode noise and gain modulation of visual responses (Extended Data Fig. 8c–h). Fifth, we mathematically derived the accuracy of d′ determinations made via PLS analysis (Appendix). Altogether, numerous analyses and derivations upheld the information saturation that we found in ensembles of ~1,000 neurons or more.

The data also enabled us to test a framework for understanding cortical noise fluctuations based on a feedforward network12,13. In this framework, the encoded information, I, as a function of the ensemble size, n, obeys I(n) = (I0n)/[1 + εn], where the constant I0 is the mean encoded information per cell in the shuffled data and the parameter ε characterizes the strength of information-limiting correlations13. Our data matched this prediction (Fig. 3f, g), verifying the existence of information-limiting correlations and establishing the effect size. The minimum set of cells needed to detect information saturation is approximately 2ε−1, which is around 800–1,500 cells for the instantaneous decoders (Fig. 3h, i). This shows the importance of large recordings to adjudicate whether correlated noise limits coding accuracy, and likely explains why previous recordings of fewer than 350 cells did not observe information saturation19,21.
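The saturation law I(n) = I0n/(1 + εn) has two simple consequences: the asymptote is I0/ε, and half of it is reached at n = 1/ε. The sketch below evaluates the curve and recovers ε from noiseless synthetic points through the linearized form I0n/I − 1 = εn. The value of `i0` is hypothetical, `eps_true` is set to the mean ε reported in the Fig. 3 caption, and the fitting routine is a simple stand-in rather than the authors' curve-fitting procedure.

```python
import numpy as np

def saturating_information(n, i0, eps):
    """I(n) = i0 * n / (1 + eps * n)."""
    return i0 * n / (1.0 + eps * n)

def fit_eps(n, info, i0):
    """Least-squares eps from the linearized relation i0*n/info - 1 = eps*n."""
    y = i0 * n / info - 1.0
    return float(np.sum(n * y) / np.sum(n * n))

i0 = 0.01            # hypothetical information per cell (arbitrary units)
eps_true = 0.0019    # mean value quoted in the Fig. 3 caption
n = np.arange(100, 2001, 100, dtype=float)
info = saturating_information(n, i0, eps_true)

eps_hat = fit_eps(n, info, i0)
asymptote = i0 / eps_hat   # limit of I(n) for large n
n_half = 1.0 / eps_hat     # ensemble size at half the asymptote
```

With ε = 0.0019, 1/ε is roughly 526 cells and 2/ε roughly 1,053 cells, which falls within the 800–1,500 range quoted in the text for detecting saturation.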

Comparing neural coding to visual acuity

An additional benefit of recordings across V1 is that they enable estimates of the attainable perceptual acuity given only the information encoded in the early visual cortex, which is important for fine discriminations of grating stimuli33. To approximate conditions more representative of the perceptual threshold, we examined another 5 mice that viewed the same grating stimuli as before but with ±6° orientations, which are closer to the discriminability limits.

As expected, these stimuli were harder to distinguish on the basis of their evoked neural activity (Extended Data Fig. 9). The asymptotic d′ value (~2.5) for large n suggests that gratings presented at ±2.4° under otherwise identical viewing conditions would have the minimal, perceptibly distinct orientations (d′ ≈ 1). Behavioural studies of mouse visual spatial acuity under photopic illumination15 yield similar predictions of ±2.3° (Methods). Direct measurements of mouse visual orientation sensitivity have been sparse and used different stimuli from ours, but yielded similar values34. The close agreement in these numbers is probably fortuitous, but the similar values estimated from cortical responses and behavioural studies15,34 suggest that the information signalling limits of visual cortical coding likely have an important role in setting perceptual bounds.
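The ±2.4° figure is consistent with a simple proportional scaling, sketched here under the assumption (not stated explicitly in this section) that d′ grows linearly with the orientation offset for small offsets. The symbols θ_test and θ_thresh are introduced only for this illustration.

```latex
\theta_{\mathrm{thresh}}
  \approx \theta_{\mathrm{test}} \cdot \frac{d'_{\mathrm{thresh}}}{d'_{\mathrm{asymptotic}}}
  = 6^{\circ} \times \frac{1}{2.5}
  = 2.4^{\circ}
```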

Origins of information-limiting noise

To identify why the information saturates, we analysed the neural noise structure by finding the principal eigenvectors of the neural noise covariance matrix and the mean amplitudes of visual signals encoded along each of these eigenvectors. This allowed us to decompose (d′)2 into a sum of signal-to-noise ratios, one for each eigenvector13 (Methods). Although visual signal amplitudes increase linearly with ensemble size, n (Fig. 4a, b), certain noise eigenvalues might also increase with n, which could offset the greater signalling capacity of a larger ensemble and cause the information saturation.
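The decomposition of (d′)2 into per-eigenvector signal-to-noise ratios can be illustrated with a toy covariance matrix. In this sketch, (d′)2 = Σα (Δμ·eα)2/λα is checked against the direct expression ΔμᵀΣ⁻¹Δμ. The three-dimensional example, in which the largest noise mode is orthogonal to the signal, is constructed by hand; the numbers and the function name are assumptions, not values from the recordings.

```python
import numpy as np

def snr_by_noise_mode(delta_mu, sigma):
    """Return eigenvalues (descending), squared signal projections, and SNRs."""
    eigvals, eigvecs = np.linalg.eigh(sigma)      # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    signal_sq = (eigvecs.T @ delta_mu) ** 2       # (delta_mu . e_alpha)^2
    return eigvals, signal_sq, signal_sq / eigvals

# Toy noise covariance: one large mode and two much smaller ones.
sigma = np.diag([10.0, 1.0, 0.5])
# Signal with no component along the largest noise mode.
delta_mu = np.array([0.0, 1.0, 0.2])

eigvals, signal_sq, snr = snr_by_noise_mode(delta_mu, sigma)
d_prime_sq = snr.sum()
direct = delta_mu @ np.linalg.solve(sigma, delta_mu)
```

Here the largest mode contributes nothing to the sum because the signal has no projection onto it, so the discriminability is set entirely by the smaller modes.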

a, Schematics of trial-to-trial variability in ensemble neural responses with increasing numbers of cells, n. Ellipsoids represent 1 s.d. fluctuations around mean ensemble responses, r(s), to two similar stimuli parameterized by a variable s (here the stimulus orientation). For large n, response variability along the tuning curve, r(s), increases proportionally to the separation, Δμ, between the two mean responses, leading to a saturation of d′. w is the normal to the optimal linear classification boundary between the two response sets. e1,2,3 are three eigenvectors, eα, of the noise covariance matrix, averaged across both stimuli. The eigenvalues, λα, are the noise variances along each eigenvector. b, Mean values of (Δμ)2 plotted against n for 5 mice in units of the variance in the shuffled datasets, which have isotropic noise covariance matrices. Analyses in b–h used instantaneous decoding in the five-dimensional space found by PLS analysis and 100 different randomly chosen subsets of cells and assignments of trials to decoder training sets and test sets. Given these 100 sets of results, lines and shading in b–f denote mean ± s.d., g shows 100 individual results, and h has box plots. c, Cosine of the angle between Δμ and w plotted against n, for 5 individual mice in real and trial-shuffled datasets. Because Δμ is nearly collinear with w, optimal linear decoding—which accounts for noise correlations—only modestly outperforms diagonal decoding, which does not (Fig. 3h). d, Eigenvalues, λα, for the eigenvectors best-aligned with Δμ in 5 individual mice (green lines) increase linearly with n, revealing the origin of information-limiting correlations. For trial-shuffled data (25 orange lines, 5 eigenvalues for each of 5 mice), the noise variance along Δμ is independent of n and is uniform for all eigenvectors of the noise covariance matrix. 
e, f, The geometric relationships between visual signals and noise indicate that the largest noise mode is not the one that is information-limiting. Each colour denotes a different eigenvector, eα, of the noise covariance matrix in the reduced five-dimensional space, α∈{1,2,3,4,5}. In each individual mouse (e) there were multiple eigenvalues, λα, of the noise covariance matrix that increased with n. Extended Data Fig. 10 shows results for all mice. Visual signals (f) also increased with n, as shown by decomposing Δμ into components along the five eigenvectors, eα. In each mouse the eigenvector with the largest eigenvalue, e1, was the least well aligned with the visual encoding direction, Δμ (compare the red curves in e, f). g, A plot of noise values computed as in e against signal values computed as in f, using all recorded cells from mouse 1. The largest noise mode (red points) is an order of magnitude greater than the noise modes that limit neural ensemble signalling (green and yellow points), yet it is the least aligned with the signal direction. h, Signal-to-noise ratios for all five eigenvectors, computed using the values in g. (d′)2 equals the sum of these five signal-to-noise ratios. Boxes cover the middle 50% of values for the same 100 data subsets used in e–g, horizontal lines denote medians, and whiskers span 1.5 times the interquartile range. Analyses in b–h are based on 217–332 trials per stimulus in each of 5 mice.

We developed methods to determine the principal eigenvectors of the noise covariance matrix without needing accurate estimates of its matrix elements—a key distinction from previous analyses13,19,20. Contravening prevailing thinking, with our approach recordings of more cells enable accurate estimates of these eigenvectors and of d′ using fewer trials (Extended Data Fig. 10). As n increased, mean ensemble responses to the two stimuli became increasingly distinct while staying aligned to the dimensions important for optimal decoding (Fig. 4b, c). In real but not in shuffled datasets the noise covariance matrix had 2–3 eigenvalues that also increased linearly with n (Fig. 4d, e). We examined how these particular noise eigenmodes related to the dimensions in which the neural ensembles represented visual signals.

In every mouse the visual signalling dimensions were nearly orthogonal to the largest noise mode, which therefore had almost no effect on coding fidelity even though it was around tenfold greater than any other noise mode (Fig. 4e–h; Extended Data Fig. 10). Instead, it was the third-largest noise mode that primarily aligned with the visual coding dimensions and thereby limited coding accuracy (Fig. 4f–h). These properties were sometimes seen, to a lesser extent, in the second-largest mode. The existence of noise eigenvectors that closely align to the dimensions used for visual representations and have eigenvalues that grow with n explains the information saturation for large n and why there was little performance decrement for decoders that did not account for correlated noise. Although these inferences rely on Ca2+ signals, not electrical recordings, this is unlikely to affect the conclusions, as variability in how spikes produce Ca2+ signals arises mainly from fluctuations in Ca2+ levels, photon emission and detection, which are statistically independent across cells and are not information-limiting.

A key question is how information-limiting noise arises. Recent work examines this issue in a two-layer, feedforward network model with sensory inputs and intrinsic noise in both its input and its output layers12. As more cells are added to the output layer, the encoded information approaches a plateau, the value of which depends on the noise levels and synaptic weights12 (Extended Data Fig. 1j–m). Our re-analysis of this model12 revealed that the dimensionality of the space of receptive fields in the output layer equals the number of noise covariance matrix eigenvectors for which the eigenvalues increase linearly with the number of output cells (Appendix). This shows that information-limiting correlations arise even in rudimentary networks, and reflect the co-propagation of signals and noise through the same synaptic connections.

Discussion

Our findings address longstanding questions about how the brain computes accurately despite neural noise1, and help to resolve a 30-year-old puzzle by providing direct evidence that correlated noise limits cortical coding accuracy2,3,4. These results adjudicate against models in which noise correlations do not limit—or even improve—cortical ensemble coding7,8. Encoded visual signals in our recordings were orthogonal to the largest noise eigenmode, enhancing coding accuracy by restricting ~90% of noise fluctuations to dimensions that did not impede signalling. This strategy allows cortical codes to evade a majority of noise, although coding fidelity is ultimately bounded by the weaker correlated noise patterns that cannot be disambiguated from signal. (This strategy might not apply to sensory variables, such as full-field luminance, that animals rarely use for fine discriminations.) In support of these conclusions, mouse visual acuity measured using stimuli similar to ours15,34 is around tenfold better than would be predicted from the total noise amplitude in the visual cortex, but fits with the amplitudes of the information-limiting noise modes.

Nevertheless, rigorous comparisons between the accuracies of sensory cortical coding and psychophysical discriminations will require concurrent evaluations in individual animals, using identical stimuli. Visual stimuli of greater size can increase d′ values32 by decreasing the mean level of shared inputs among responsive cells and thereby reducing ε, whereas stimuli of greater saliency should increase d′ by increasing I0. The recent history of sensory stimuli will also influence d′ owing to sensory adaptation. Although specific values of d′ will vary across stimulus types, information-limiting noise correlations and the saturation of information for large n arise generically from the propagation of signals and noise through common circuitry and place fundamental constraints on coding accuracy. Therefore, our experimental results likely reflect basic attributes of hierarchical networks and should generalize to diverse stimuli and sensory modalities.

The brain probably cannot learn its own correlated noise structure to decode sensory features optimally, as any particular sensory scene almost never repeats precisely. Nonetheless, decoders that ignore noise correlations can still be near optimal (Fig. 3d, e, h, Extended Data Fig. 9c), as predicted for large networks with information-limiting noise correlations14. Therefore, information-limiting cortical noise might help downstream circuits to read out diverse sensory features nearly optimally.

Future work should extend our experiments to different stimuli, sensory modalities and behavioural conditions. Together with our analyses tailored for large-scale recordings, microscopes that image multiple brain regions concurrently24,25,26,35 will enable studies of noise correlations and information flow across successive cortical areas. Such measurements will help to address longstanding questions about the decoding strategies that the brain uses for perception, and the effect of attention on perceptual sensitivity and neural ensemble noise.

Methods

Microscope design

We used a systems-engineering approach to design the two-photon microscope. To simulate its optical performance and assess component suitability, we used optical design software (ZEMAX) to simulate both ray and wave propagation through the optical pathway. To validate the multiplexing strategy (Extended Data Fig. 2b–d) and the computational un-mixing of crosstalk between image tiles (Extended Data Figs. 3c–e, 4a–c), we simulated fluorescence scattering in brain tissue using the non-sequential mode of ZEMAX. We created an optomechanical design of the microscope using CREO Parametric 3.0 CAD mechanical design software.

Laser source and control of illumination

We used an ultrashort-pulsed Ti:sapphire laser (MaiTai eHP DeepSee; Spectra Physics) with an 80 MHz repetition rate. We tuned the emission wavelength to 910 nm and used the laser’s built-in pre-chirping module to attain pulses of 130 ± 20 fs duration (FWHM) at the sample plane. For general purpose routeing of the laser light to and within the microscope we used broadband dielectric mirrors (BB1-E03, Thorlabs). A computer-driven rotating half-wave (λ/2) plate (WP, AHWP05M-980; Thorlabs) controlled the laser beam polarization and hence the power transmitted through a polarizing beam splitter (PBS) (PBS102, Thorlabs) and into the microscope’s illumination pathway (Extended Data Fig. 2d). To block all laser illumination to the microscope during the turnaround portion of the fast galvanometer mirror’s scanning cycle, we used a custom laser chopper wheel (90:10 duty ratio), positioned after the PBS and synchronized in frequency and phase with the fast-axis galvanometer cycle.

Multiplexing of the 16 illumination pathways

Owing to the powerful ultrafast lasers that are now commercially available, past users of two-photon microscopy have often had more than enough illumination power at their disposal but remained limited with regard to the imaging speeds and the fields of view that were attainable with a single beam and existing scanning hardware. We therefore developed a multi-beam, two-photon microscope that puts the (previously) excess laser power to good use, by using multiple beam paths that enable the coverage of larger fields of view at faster image-frame acquisition rates. The Supplementary Note, Extended Data Fig. 2j, k and Supplementary Fig. 1 quantitatively compare our imaging system to other recent approaches to large-scale two-photon microscopy.

To steer laser illumination into four different sets of four beam paths, we used three pairs of electro-optic modulators (EOM) (LM0202 3 × 3 mm 5W, LIV20 pulse amplifier; QIOptic) and PBS cubes (PBS102, Thorlabs) (Extended Data Fig. 2d). We drove each EOM with a high-voltage (310 V amplitude) square wave oscillation, with the period matched to that of the microscope’s pixel clock. When imaging using the 4 × 4 set of beams, the square waves driving the second and third EOMs were both phase-shifted by ¼ period relative to the square wave driving the first EOM (Extended Data Fig. 2c). By toggling the beam exiting each EOM between the two linear orthogonal polarization states (the transition time between polarizations was around 50 ns), these three square-wave signals steered the beam from the laser successively into each of the four sets of four beam paths (that is, 16 total), with each set of four illuminated for ¼ of each pixel clock cycle (Extended Data Fig. 2b–d). Within each set, three beamsplitters (10RQ00UB.2 and 10RQ00UB.4, respectively, for S and P polarizations; Newport) divided the beam power equally between four different paths corresponding to four non-neighbouring image tiles in the 4 × 4 array (Extended Data Fig. 2b). Because the efficiency of two-photon fluorescence excitation increases as the square of the peak illumination intensity, this temporal multiplexing scheme enabled fourfold greater fluorescence excitation compared with an otherwise identical, 4 × 4 set of beams that were not multiplexed in time.
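
The fourfold figure can be checked with a short worked calculation. This is a sketch under stated assumptions rather than the authors' own derivation: the total laser power P is the same in both schemes, power is divided equally among the active beams, pulse and focus properties are identical, and the roughly 50-ns EOM switching time is ignored. The symbols F_cw and F_mux are introduced here only for illustration and denote the time-averaged two-photon signal per beam without and with temporal multiplexing.

```latex
\begin{align*}
F_{\mathrm{cw}}  &\propto \left(\frac{P}{16}\right)^{2} = \frac{P^{2}}{256}
  && \text{(16 beams, each on continuously)} \\
F_{\mathrm{mux}} &\propto \frac{1}{4}\left(\frac{P}{4}\right)^{2} = \frac{P^{2}}{64}
  && \text{(4 beams at a time, each on for 1/4 of the pixel clock cycle)} \\
\frac{F_{\mathrm{mux}}}{F_{\mathrm{cw}}} &= \frac{256}{64} = 4
\end{align*}
```

Each beam is on for only a quarter of the time but carries four times the power while it is on, so the quadratic dependence of excitation on intensity yields a net fourfold gain.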

Illumination pathways

Each of the 16 beam pathways contained a pair of kinematically mounted mirrors, a 1:2 telescope implemented using a pair of lenses (AC254-500-B-ML, LA1464-B; Thorlabs), and a gimbal-mounted mirror (GMB1/M; Thorlabs). The 16 beam paths converged on a 6-mm-diameter, Ag-coated mirror mounted on a galvanometer scanner (6215HSM40B scanner, 671215HHJ-1HP driver; Cambridge Technology). This galvanometer served as our slow-axis scanner.

To image the 16 beams striking the first scanning mirror onto an identical galvanometer scanning mirror serving as the fast-axis scanner, we used a pair of telecentric f-theta lenses designed to induce minimal group velocity dispersion with ultrashort-pulsed illumination (S4LFT0089/094; Sill Optics) in a 1:1 telescope configuration (Fig. 1a). A third, identical f-theta lens and a tube lens (f = 300 mm, G322-372-525, Linos) imaged all 16 beams striking the second scanning mirror onto the back aperture of the microscope objective. The objective focused the 16 beams to a square array of 4 × 4 foci, which together scanned a 2 mm × 2 mm specimen area at image frame acquisition rates up to around 8 Hz.

Alternatively, to enable image frame acquisition rates up to 20 Hz over a 2 mm × 2 mm specimen area, we used a resonant galvanometer scanner (6SC08KA040-02Y, Cambridge Technology, 8 kHz, 7 mm clear aperture) as the fast-axis scanner. The 8 kHz rate of resonant line-scanning allowed us to use a data acquisition scheme based on line multiplexing instead of pixel multiplexing. In this mode we used EOM3 to direct the laser illumination into one of its two optical output paths (Extended Data Fig. 2d, phase I and phase IV). During the resonant scanner turnaround times, we used EOM1 to redirect the laser illumination towards EOM2, the output pathway of which was blocked. During both the forward and backward motion of the resonant scanner a set of 4 laser beams scanned across a total of 8 image tiles, that is, 2 tiles per beam. By using a different set of 4 beams during the forward and backward scanning motions, we sampled one image line in all 16 image tiles during each cycle of the resonant scanner while using only 8 of the 16 beam paths. As with the pixel-multiplexing approach, only 4 beams were active at any instant in time.

For the microscope objective lens, we used either an air objective lens (Leica, 5.0 × Planapo 0.5 NA; 19 mm working distance; anti-reflection (AR) coated for 400–1,000 nm light; transmission >90% at 520 nm, >75% at 910 nm) or a water-immersion lens optimized for large-scale two-photon imaging26 (1.0 numerical aperture (NA) fluorescence collection; Jenoptik; 2.5 mm working distance). The illumination beams underfilled the back aperture of the microscope objective lens, leading to an optical resolution of approximately 1.2 μm and 8 μm in the lateral and axial dimensions, respectively, as determined from the FWHM values of the microscope’s optical point-spread function.

Fluorescence collection pathway

Fluorescence emanating from the sample returned through the objective lens, reflected from a dichroic mirror (FF735-Di02-58x82, Semrock) and passed through a collection lens (AC508-180-A, Thorlabs) and a fluorescence emission filter (FF02-525/40-25, Semrock).

The objective and the collection lens project a magnified image of the fluorescence foci in the sample. To optimize the efficiency of fluorescence detection, we designed a custom 4 × 4 lens array (4.5 mm pitch; plano-convex lenslets; custom injection-moulded in poly(methyl methacrylate); AR-coated: reflectivity <0.5%, 450–650 nm) that efficiently coupled fluorescence emissions into a 4 × 4 array of 3-mm diameter (0.5 NA) plastic optical fibres (FF-CK-120, AR-coated, FibreFin) (Fig. 1a).

To capture the maximum amount of fluorescence near the edges of the large field of view, the outer lenslets in the array were slightly larger than the others, extending outward from the perimeter of the array. Because even the outer lenslets had a maximum numerical aperture (0.19 NA) much lower than that of the plastic fibres (0.5 NA), this lenslet design yielded a theoretical efficiency of >97% for coupling fluorescence into the array of 16 optical fibres. The fibre array delivered the fluorescence to a set of 16 GaAsP photomultiplier tubes (PMT) (H10770PA-40, Hamamatsu). Each 400-mm-long fibre had a specified transmission efficiency of >98%, yielding an overall design efficiency of >95% for conveying fluorescence into the photomultiplier tubes.

Optomechanics

We custom-fabricated the majority of the structural components of the microscope at our laboratory’s machine shop using high-strength 7075-aluminium alloy and computer numeric control machining. We used three-dimensional (3D) printing to create a cover for the microscope objective lens and a mount for the dichroic mirror. The optomechanical components were generally catalogue parts from standard vendors, mainly Thorlabs, Newport and Linos.

Data acquisition electronics

Owing to the unique multiplexing scheme of our microscope, data acquisition differs from that in a conventional two-photon microscope (Extended Data Fig. 3a). A major concern was to ensure that the signals from each of the four phases per pixel clock cycle were correctly assigned. This necessitated sampling the 16 PMTs sufficiently rapidly to ensure that the signals corresponding to different pixels and phases were not conflated. Hence, we chose a sampling rate of 50 MHz for each PMT. Because the duration of each of the four multiplexing phases was 400 ns, this sampling rate yielded 20 samples per pixel per multiplexing phase (Extended Data Fig. 3b).

To implement data sampling at this rate, we first converted the photocurrents from the 16 PMTs into voltage signals using a set of four trans-impedance amplifiers, each with four input channels (SR445A, Stanford Research Systems). We then sampled the resulting voltage signals using a 16-channel, 50 MS/s analogue-to-digital converter (ADC; 14-bit-samples encoded in 2 bytes) module (NI 5751, National Instruments). The ADC connected to the NI FlexRIO field programmable gate array (FPGA) Module for PXI Express, which was controlled by a host computer (Win 64-bit, 2 Intel E5-2630 processors, 32 GB RAM, Lenovo) through a PCIe-PXIe link (NI PXIe-7962R, NI PXIe-1082 chassis, PXIe-PCIe8381 link, National Instruments) (Extended Data Fig. 3a). For each multiplexing phase, the FPGA module summed the digitally sampled values of the photocurrents into pixel intensities. All subsequent data manipulations involved only the pixel intensities, yielding a total data throughput rate of 60 MB s−1 or 105 MB s−1, for image frame acquisition at 7.23 Hz or 17.5 Hz, respectively, as opposed to the 1.6 GB s−1 raw data stream. To eliminate any residual crosstalk between pixels resulting from the approximately 50-ns switching time of the EOMs, the software interface gave the user the flexibility to discard the first few samples of each pixel.
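
The sampling figures quoted above can be reproduced with integer arithmetic. The following is a minimal sketch; the constant and function names are illustrative and are not taken from the authors' LabVIEW or FPGA code.

```python
# Acquisition parameters quoted in the text.
N_PMT_CHANNELS = 16
SAMPLE_RATE_HZ = 50_000_000   # 50 MS/s per PMT channel
BYTES_PER_SAMPLE = 2          # 14-bit samples stored in 2 bytes
PHASE_DURATION_NS = 400       # duration of one multiplexing phase
EOM_SWITCH_NS = 50            # approximate EOM transition time
NS_PER_S = 1_000_000_000


def samples_per_phase(sample_rate_hz: int, phase_ns: int) -> int:
    """Number of ADC samples that fall inside one multiplexing phase."""
    return sample_rate_hz * phase_ns // NS_PER_S


def raw_rate_bytes_per_s(n_channels: int, sample_rate_hz: int,
                         bytes_per_sample: int) -> int:
    """Raw ADC data rate before the FPGA sums samples into pixel intensities."""
    return n_channels * sample_rate_hz * bytes_per_sample


def samples_spanning(duration_ns: int, sample_rate_hz: int) -> int:
    """Smallest number of samples whose combined duration covers duration_ns."""
    sample_period_ns = NS_PER_S // sample_rate_hz
    return -(-duration_ns // sample_period_ns)  # ceiling division


print(samples_per_phase(SAMPLE_RATE_HZ, PHASE_DURATION_NS))      # 20
print(raw_rate_bytes_per_s(N_PMT_CHANNELS, SAMPLE_RATE_HZ,
                           BYTES_PER_SAMPLE))                    # 1600000000
print(samples_spanning(EOM_SWITCH_NS, SAMPLE_RATE_HZ))           # 3
```

At 20 ns per sample, at least three samples overlap the roughly 50-ns EOM transition, which is consistent with discarding the first few samples of each pixel.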

Instrument control

When imaging in pixel-multiplexing mode, we used ScanImage36 software (version 3.8) to generate the analogue signals driving the galvanometer scanners and the digital line-clock and frame-clock signals (Extended Data Fig. 3a). Using the clock signals from ScanImage, the FPGA module generated signals to drive the EOMs. We created custom LabVIEW (National Instruments, version 2012 SP1, 32 bit) code to initiate the imaging sessions and control the data acquisition parameters. When imaging in line-multiplexing mode, we controlled the instrumentation fully using custom software written in LabVIEW. We synchronized laser line-scanning and data acquisition by using the clock of the resonant scanner as a master clock.

In both imaging modes, the FPGA module continually transmitted to the host computer the imaging data in packets of pixels, combined into image lines, via a high-speed direct memory access first-in first-out (DMA FIFO) data link. The host computer constructed image tiles from the image line data, accounting for the number of photodetection channels and temporal multiplexing phases. The computer then streamed the image data onto its hard drive (Extended Data Fig. 3a).

Mice

The Stanford Administrative Panel on Laboratory Animal Care (APLAC) approved all procedures involving animals, and we complied with all of the panel’s ethical regulations. We analysed data acquired from 6 male and 4 female Ai93 triple transgenic GCaMP6f-tTA-dCre mice from the Allen Institute (Rasgrf2-2A-dCre/CaMK2a-tTA/Ai93), which expressed the Ca2+-indicator GCaMP6f in layer 2/3 pyramidal cells37. Mice resided on a 12-h reverse light cycle in standard plastic disposable cages. Experiments occurred during the dark cycle. All animals in the experiment belonged to the same group, so blinding and random assignments were neither needed nor feasible.

For illustrative purposes only, we imaged a single tetO-GCaMP6s/CaMK2a-tTA mouse38, which expressed the Ca2+-indicator GCaMP6s in a subset of neocortical pyramidal neurons (Supplementary Video 3).

Surgical procedures

At the start of surgery we gave adult mice (12–17 weeks old) buprenorphine (0.1 mg kg−1) and carprofen (5 mg kg−1) and anaesthetized them with 1–2% isoflurane in O2. We implanted a glass window within a 5-mm-diameter craniotomy positioned over the right visual cortical area V1 and surrounding cortical tissue. The window was a round #1 cover glass (5 mm diameter, 0.15 ± 0.02 mm thickness, Warner Instruments) that we attached to a circular steel annulus (1 mm thick, 4.9 mm outer diameter, 4.4 mm inner diameter) using adhesive cured with ultraviolet light (NOA81, Norland Products). To fill the gap between skull and glass window we applied 1.5% agarose. We secured the window on the cranium with dental acrylic. We also implanted an aluminium metal bar atop the cranium, allowing the mice to be head-restrained during in vivo brain imaging. For two days after surgery, we gave the mice buprenorphine (0.1 mg kg−1) and carprofen (5 mg kg−1) to reduce post-surgical discomfort. Mice recovered for at least one month before any imaging experiments began.

Visual stimulation

Mice viewed visual stimuli on a gamma-corrected computer monitor (Lenovo LT2323p; 58.4 cm diagonal extent) that was 10 cm away from the left eye and spanned around 142° of this eye’s accessible, angular field of view. We generated visual stimuli using the psychophysics toolbox libraries of the MATLAB (Mathworks; version 2017b) programming environment. Stimuli were sinusoidal drifting gratings (spatial frequency, 0.04 cycles per degree; stimulus angular diameter, 50 deg; drifting rate, 50 deg s−1, centred on the left eye’s visual field; stimulation duration, 2 s; amplitude modulation depth, 100%; screen background intensity, 50%; Fig. 2b). During each experiment, we presented the gratings at two different angles, ±30° or ±6° to the vertical, in a random sequence. Between successive stimuli, the monitor was uniformly illuminated at the background intensity for a 2-s inter-trial interval. To prevent light from the visual stimuli from entering the fluorescence collection pathway of the microscope, the stimuli used only the blue component of the RGB colour model, which was blocked by the fluorescence emission filter. We also placed a colour filter (Rosco, 382 Congo Blue) on the monitor screen. The mean luminance from the stimulus at the mouse eye was approximately 5 × 10^10 photons mm−2 s−1, which is more than two orders of magnitude higher than the transition threshold to photopic vision in mice15.

Imaging sessions

To reduce the stress of head restraint, we head-fixed mice on a 100-mm-diameter Styrofoam ball that could rotate in two angular dimensions. We tracked the movement of the ball with an optical computer mouse. Because running or walking is known to alter visual processing in rodents28, we ensured that all visual stimulation trials used for analysis were those when the mice were passively viewing the video monitor, without locomotion, by excluding all trials during which the mice had an ambulatory speed of greater than 0.2 mm s−1. We imaged the Ca2+ activity of neocortical layer 2/3 pyramidal neurons, 150–250 μm below the cortical surface. The pixel clock cycle duration was 1.6 μs, hence the pixel dwell time in each of the four multiplexing phases was 400 ns. Owing to the ~50-ns switching time of the EOMs, we discarded four samples at the start of each phase, removing any crosstalk between phases. Across the full duration of each imaging session, fluorescence intensities decreased by ~9% owing to photobleaching. The total laser illumination power was 280–320 mW, divided evenly amongst the 4 beams that were active at any instant in time. Hence, each of the 16 image tiles (each 500 μm × 500 μm in size) received a time-averaged power of 17.5–20 mW, for a time-averaged illumination intensity of 70–80 mW mm−2. Previous Ca2+ imaging studies of layer 2/3 neocortical neurons with conventional two-photon microscopy39,40,41,42 have used mean illumination intensities of 89–1,800 mW mm−2.
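
The per-tile power and intensity values follow from simple bookkeeping. The sketch below assumes that the total power is split equally among the four beams active at any instant and that each tile is illuminated for one quarter of the time, as in pixel-multiplexing mode. The function names are illustrative only.

```python
def time_averaged_tile_power_mw(total_power_mw: float,
                                beams_active: int = 4,
                                duty_cycle: float = 0.25) -> float:
    """Power in one beam while it is on, scaled by the fraction of time it is on."""
    return total_power_mw / beams_active * duty_cycle


def mean_intensity_mw_per_mm2(tile_power_mw: float,
                              tile_side_mm: float = 0.5) -> float:
    """Time-averaged power divided by the area of one square image tile."""
    return tile_power_mw / (tile_side_mm * tile_side_mm)


for total_mw in (280.0, 320.0):
    tile_mw = time_averaged_tile_power_mw(total_mw)
    print(total_mw, tile_mw, mean_intensity_mw_per_mm2(tile_mw))
# 280.0 17.5 70.0
# 320.0 20.0 80.0

# Timing: a 1,600-ns pixel clock cycle split into four phases, and the
# time removed by discarding four samples at 20 ns per sample.
print(1600 // 4, 4 * 20)  # 400 80
```

The 80 ns removed at the start of each phase exceeds the roughly 50-ns EOM switching time, leaving 16 of the 20 samples per phase.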

For studies in which the visual stimulation comprised moving gratings oriented at ±30°, we used the air objective lens and the pixel-multiplexing approach to image acquisition. We acquired images with 1,024 × 1,024 pixels at a 7.23 Hz frame rate across the 2 mm × 2 mm field of view. The total imaging duration per session was 2,800 s (about 20,000 two-photon image frames), resulting in 700 visual stimulation trials, 350 for each of the two visual stimuli.
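
As a quick consistency check on these session parameters, the frame and trial counts can be recomputed from the session duration, the frame rate and the 2-s stimulus plus 2-s inter-trial interval described under Visual stimulation. The function names below are illustrative.

```python
def n_frames(duration_s: int, frame_rate_hz: float) -> int:
    """Approximate number of image frames acquired in one session."""
    return round(duration_s * frame_rate_hz)


def n_trials(duration_s: int, stimulus_s: int = 2, interval_s: int = 2) -> int:
    """Number of complete stimulus-plus-interval cycles that fit in the session."""
    return duration_s // (stimulus_s + interval_s)


print(n_frames(2800, 7.23))   # 20244, that is, about 20,000 frames
print(n_trials(2800))         # 700 trials, 350 per stimulus if split equally
```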

For studies in which the moving grating stimuli were oriented at ±6° to vertical, we used the water-immersion objective lens and line-multiplexing to acquire images with 1,728 × 1,728 pixels at 17.5 Hz across the 2 mm × 2 mm field of view, which we averaged and downsampled on the FPGA module to 864 × 864 pixels (Extended Data Fig. 9a–c, e, Supplementary Videos 2, 3). The total imaging duration per session was around 1,500 s.

Image reconstruction

We wrote custom MATLAB (Mathworks; version 2017b) scripts to manipulate the experimental datasets directly from the computer hard drive, without loading all the data into the computer’s random-access memory.

The first step of image reconstruction accounted for the differences in the gain values of the 16 PMTs. We determined the gain values by imaging a static fluorescence sample and then analysing the statistics of the photon shot-noise limited fluorescence detection. Specifically, we performed a linear regression between the mean signal from each PMT and its variance. In the shot-noise limited regime, the slope of this relationship equals the combined gain of the PMT, pre-amplifier and ADC. Knowledge of the pre-amplifier and ADC gain values enabled us to determine the PMT gain. Given these empirically determined PMT gain values, the first step of image reconstruction was normalization of the fluorescence signals from each PMT channel by its gain.
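
The reasoning behind the mean–variance regression is that, if the digitized signal equals a gain g times a Poisson-distributed photon count with mean λ, then its mean is gλ and its variance is g²λ, so the variance grows linearly with the mean with slope g. The following sketch fits that slope by ordinary least squares; it is not the authors' MATLAB code, and the function name and the synthetic numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic calibration points for one channel: mean signal and variance
# measured at several brightness levels of a static fluorescent sample.
# The gain (3.0) and the signal-independent noise floor (5.0) are made up.
mean_signals = [10.0, 20.0, 40.0, 80.0]
variances = [3.0 * m + 5.0 for m in mean_signals]

combined_gain, noise_floor = fit_line(mean_signals, variances)
print(combined_gain, noise_floor)
```

In this picture the slope is the combined gain of the detection chain and the intercept absorbs any signal-independent noise. Recovering the PMT gain alone would require dividing out the known pre-amplifier and ADC gains, which assumes that the stages multiply.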

The second step in image reconstruction was un-mixing of the crosstalk between the different PMT channels (Extended Data Fig. 3). In principle, when using laser-scanning microscopes with multiple illumination beams, one can apply to the set of PMT signal traces an un-mixing matrix that represents the inverse of a pre-calibrated, empirically determined matrix of crosstalk coefficients between the different photodetection channels43. However, this approach assumes that the biological sample is uniform and hence that a single un-mixing matrix will apply equally well across the entire specimen. In practice, brain tissue is not optically uniform, and it is challenging to precisely determine the crosstalk matrix in image sub-regions with low fluorescence levels, such as in blood vessels. Furthermore, two-photon neural Ca2+ imaging routinely involves modest signal-to-noise ratios and consequently the application of the inverse crosstalk matrix introduces additional error, analogous to the errors introduced by deconvolution methods when applied to weak signals.

For these reasons, we used a more straightforward, conservative and computationally efficient method of image reconstruction. Because crosstalk was only present in our microscope near the boundaries between image tiles, for each of the four sub-frames per image we computationally reassigned the signals from the boundary regions between tiles to the nearest neighbour source tile from which the crosstalk signals originated according to Extended Data Fig. 3c. We empirically determined that boundary regions 50 pixels wide contained ~75% of the scattered fluorescence photons from each laser focus. Hence, computational re-assignment of the photons from these boundary regions enabled conservative estimates of cells’ fluorescence signals, near continuous stitching of the images (Extended Data Fig. 3d, e), and high-fidelity extraction of neural activity (Extended Data Fig. 4).

Beyond each 50-pixel-wide boundary region, there were generally residual scattered fluorescence photons. Thus, for purposes of visual display only (Fig. 1b, c; Supplementary Video 1), we removed boundary artefacts left over after computational re-assignment (Extended Data Fig. 3c) by parameterizing the boundary with a smoothly decaying function:

where x is the distance from the tile edge, d = 70 pixels is the width of the boundary region, and a = 25 pixels characterizes the smoothness of the boundary decay.

Image pre-processing

After image reconstruction, each dataset comprised 16 videos, each 256 pixels × 256 pixels × 21,000 frames for a typical experiment, corresponding to the 16 tiles of each image frame. To correct for lateral displacements of the brain during image acquisition, we applied a rigid image registration algorithm (Turboreg44; http://bigwww.epfl.ch/thevenaz/turboreg/) to each of the individual video tiles. We chose this approach because the application of a single, rigid image registration algorithm over the entire 2 mm × 2 mm field of view did not account for variations in tissue motion between the different image tiles. After image registration, for display purposes only we merged the 16 motion-corrected video tiles into images or videos of the entire field of view (Supplementary Videos 1–3). We performed all further analysis on individual tiles.

For display purposes only (Supplementary Video 2, 3), to minimize stitching artefacts during video playback we applied to each image frame a linear-blending stitching algorithm45,46. We then computationally corrected the movie for lateral displacements of the brain by using a piecewise rigid image registration algorithm47. To highlight the details for viewers using a typical computer monitor, we saved the processed video using a contrast (γ) value of 0.75.

Computational extraction of neural activity traces

To identify individual neurons in the Ca2+ imaging data, we separately analysed the 16 individual video tiles in each movie and applied an established algorithm for cell sorting based on the successive application of principal component and independent component analyses35,48 (Mosaic software, version 0.99.17; Inscopix). We visually screened the resulting set of putative cells and removed any that were clearly not neurons (about 50% of candidate cells were removed). For the resulting set of cells, we created a corresponding set of truncated spatial filters that were localized to the cell bodies by setting to zero all pixels in the filter with values <5% of the peak amplitude of the filter. After thresholding, we removed any connected components containing fewer than 30 pixels. To obtain traces of neural Ca2+ activity, we applied the truncated spatial filters to the (F(t) − F0)/F0 movies (Extended Data Fig. 5), where F(t) denotes the time-dependent fluorescence intensity of each pixel and F0 is its mean intensity value, time-averaged over the entire movie.
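
Two of the steps above, the (F(t) − F0)/F0 normalization and the 5% truncation of a spatial filter, can be sketched in a few lines. This is an illustrative outline on plain Python lists, not the Mosaic implementation. The function names are hypothetical, and the removal of connected components with fewer than 30 pixels is not shown.

```python
def delta_f_over_f(trace):
    """Return (F(t) - F0) / F0 for one pixel, with F0 the time-averaged intensity."""
    f0 = sum(trace) / len(trace)
    return [(f - f0) / f0 for f in trace]


def truncate_filter(spatial_filter, fraction=0.05):
    """Set to zero every pixel whose value is below fraction * peak amplitude."""
    peak = max(max(row) for row in spatial_filter)
    threshold = fraction * peak
    return [[value if value >= threshold else 0.0 for value in row]
            for row in spatial_filter]


print(delta_f_over_f([1.0, 2.0, 3.0]))              # [-0.5, 0.0, 0.5]
print(truncate_filter([[1.0, 0.04], [0.5, 0.06]]))  # [[1.0, 0.0], [0.5, 0.06]]
```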

For each cell, we used fast non-negative deconvolution to estimate the number of spikes fired in each time bin49. We then temporally down-sampled twofold the resulting traces by summing the estimated numbers of spikes in pairs of adjacent time bins, yielding time bins of 0.276 s. We performed all subsequent analysis on the down-sampled traces.
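
The twofold down-sampling amounts to summing adjacent pairs of bins. A minimal sketch follows, with illustrative function names; dropping a trailing unpaired bin is an assumption made here, not something the text specifies.

```python
def downsample_by_two(spike_counts):
    """Sum estimated spike counts in non-overlapping pairs of adjacent time bins."""
    n_pairs = len(spike_counts) // 2  # a trailing unpaired bin is dropped
    return [spike_counts[2 * i] + spike_counts[2 * i + 1] for i in range(n_pairs)]


def bin_width_s(frame_rate_hz: float, frames_per_bin: int = 2) -> float:
    """Duration of one down-sampled time bin, in seconds."""
    return frames_per_bin / frame_rate_hz


print(downsample_by_two([1, 0, 2, 3, 4]))  # [1, 5]
print(round(bin_width_s(7.23), 3))         # 0.277
```

At the 7.23 Hz frame rate each original bin lasts about 138 ms, so a pair of bins spans roughly 0.28 s.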

Moreover, previous work has shown that the activity of mouse visual cortical neurons differs substantially between behavioural states of passive viewing and viewing during active locomotion28,35. To ensure that all visual stimulation trials used for analysis were those when the mice were passively viewing the video monitor, we excluded from analysis all trials during which the mice were running or walking (at speeds greater than 0.2 mm s−1). The resulting set of trials retained for data analysis in each mouse was 217–332 for each stimulus condition, except for the analysis of Extended Data Fig. 9a–c, e, which involved 122–167 trials per stimulus condition.

Trial-shuffled datasets

To create trial-shuffled datasets, we randomly permuted the activity traces of each cell across the full set of trials in which the same stimulus was presented, using a different random permutation for each individual cell. Thus, the trial-shuffled datasets preserved the statistical distributions of each cell’s responses to the two stimuli, but any temporally correlated fluctuations in different cells’ stimulus-evoked responses were scrambled. For analyses of trial-shuffled data, we averaged results over 100 different randomly chosen subsets of cells and/or stimulation trials, each of which was trial-shuffled with its own distinct permutations; exceptions to this statement are the analyses of Extended Data Figs. 8c–h, 10a, b, for which we averaged results over 30 such calculations instead of 100.
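
The shuffling procedure can be sketched as follows for the trials of a single stimulus. This is a hedged illustration rather than the authors' code: the `responses[cell][trial]` layout and the function name are assumptions, and each entry may be a single number or a whole per-trial activity trace.

```python
import random


def trial_shuffle(responses, seed=None):
    """Permute each cell's responses across trials of ONE stimulus.

    responses[cell][trial] holds that cell's response on that trial.
    Every cell receives its own independent permutation, so each cell's
    response distribution is preserved while trial-by-trial co-fluctuations
    between cells are scrambled. The input is left unmodified.
    """
    rng = random.Random(seed)
    shuffled = []
    for cell_trials in responses:
        permuted = list(cell_trials)
        rng.shuffle(permuted)
        shuffled.append(permuted)
    return shuffled


data = [[1, 2, 3, 4], [5, 6, 7, 8]]
out = trial_shuffle(data, seed=0)
print([sorted(cell) for cell in out] == data)  # True: per-cell values are unchanged
```

The function would be applied separately to the trials of each stimulus, so that responses are never mixed across stimulus conditions.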

Noise correlations in the visual stimulus-evoked responses of pairs of cells

To compute correlation coefficients for the noise in the visual responses of a pair of neurons, we first integrated the estimated spike count of each cell over the interval [0.5 s, 2 s] from the start of visual stimulation. After separating the trials for each of the two visual stimuli, we subtracted from each trace the mean stimulus-evoked response of the cell and then calculated the Pearson correlation coefficient, r, for the resulting set of responses from the two cells. We then averaged these noise correlation coefficients over the two stimulus conditions. Figure 2d, e and Extended Data Fig. 6e, g show statistical distributions of the resulting mean correlation coefficients across many cell pairs.
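
The pairwise computation described above can be outlined as below. This is a minimal sketch with hypothetical names and data layout (a dictionary mapping each stimulus label to a list of integrated spike counts, one per trial), not the authors' analysis code.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length lists after removing their means."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    numerator = sum(a * b for a, b in zip(dx, dy))
    # Raises ZeroDivisionError if either cell has zero response variance.
    denominator = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return numerator / denominator


def noise_correlation(cell1_by_stim, cell2_by_stim):
    """Average, over stimuli, of the within-stimulus correlation of two cells."""
    r_values = [pearson_r(cell1_by_stim[stim], cell2_by_stim[stim])
                for stim in cell1_by_stim]
    return sum(r_values) / len(r_values)


cell1 = {"A": [1.0, 2.0, 3.0], "B": [1.0, 2.0, 3.0]}
cell2 = {"A": [2.0, 4.0, 6.0], "B": [3.0, 2.0, 1.0]}
print(noise_correlation(cell1, cell2))  # r = +1 for A and -1 for B, so the mean is 0.0
```

Subtracting each cell's mean response separately within each stimulus is what removes the signal component, so that only trial-to-trial fluctuations contribute to r.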

We compared the statistical distributions of mean correlation coefficients for two different sets of cell pairs, those with positive and those with negative covariance of their mean stimulus responses (that is, cell pairs with similar or dissimilar visual tuning) (Extended Data Fig. 6e, g). To visually highlight the differences between the two distributions (Fig. 2e), we also analysed only the most responsive cells, defined as those cells with the top 10% values of \(\sqrt{{\langle {r}_{{\rm{A}}}\rangle }^{2}+{\langle {r}_{{\rm{B}}}\rangle }^{2}}\), where \({r}_{{\rm{A}}}\) and \({r}_{{\rm{B}}}\) are the mean responses to the two stimuli.

Dimensionality reduction and computation of d′ for neural responses to visual stimuli

To estimate how much information the neural activity conveyed about the stimulus identity, we used the metric d′, which characterizes how readily the distributions of the neural responses to the two different sensory stimuli can be distinguished50. The quantity (d′)2 is the discrete analogue of Fisher information30. We evaluated three different approaches to computing d′ values for the discrimination of the two different visual stimuli (Fig. 3).

In the first approach, which we termed ‘instantaneous decoding’ (Fig. 3d, f, Extended Data Figs. 7a, 9a), we chose for analysis a specific time bin relative to the onset of visual stimulation. To examine the time-dependence of d′, we used the instantaneous decoding approach and varied the selected time bin from t = 0 s to t = 2 s relative to the start of the trial. The number of dimensions of the neural ensemble activity evoked in response to the visual stimulus was No, the number of recorded neurons (No ≈ 1,500). Said differently, the set of estimated spike traces provided an No-dimensional population vector response to each stimulus presentation.

In the second approach, termed ‘cumulative decoding’ (Fig. 3e, g, Extended Data Figs. 7b, 9b), we concatenated the responses of each neuron over time, from the start of the trial up to a chosen time, t. In this case, the dimensionality of the population activity vector was No × Nt, where Nt is the number of time bins spanning the interval [0 s, t].

In the third approach, termed ‘integrated decoding’ (Extended Data Fig. 7c), we examined the neural ensemble responses integrated over the interval [0.5 s, 2 s] relative to stimulation onset. In the plots of d′ against time as computed by instantaneous decoding, the interval [0.5 s, 2 s] is when the d′ values have already reached an approximate plateau (Extended Data Fig. 7e). With integrated decoding, the dimensionality of the population vector response was No, the number of recorded neurons, as in the instantaneous decoding approach.

In each of the three decoding approaches, we arranged the traces of estimated spike counts into three-dimensional data structures (number of neurons × number of time bins × number of trials), for each of the two visual stimuli (Extended Data Fig. 5b).

A challenge was that calculation of d′ in an No-dimensional population vector space would have involved estimation of a No × No noise covariance matrix with over a million matrix elements. Direct estimation of the covariance matrix would have been unreliable, because the typical number of cells per dataset, No ≈ 1,500, was much larger than the typical number of trials P ≈ 600. This issue was even more severe in the case of cumulative decoding, for which the population activity vector had No × Nt dimensions. However, we found mathematically that by reducing the dimensionality of the space used to represent the ensemble neural responses, one can reliably estimate eigenvalues for the largest eigenvectors of the noise covariance matrix, which govern how well the two visual stimuli can be discriminated based on the neural responses (Appendix).

Our approach to dimensional reduction relied on a PLS discriminant analysis51. The PLS analysis enabled us to find the dimensions of the population vector space that were most informative about which visual stimulus was shown. To determine how many dimensions were important for discriminating the two stimuli, we constructed an orthonormal projection operator, which projected the No-dimensional (or No × Nt dimensional) ensemble neural responses onto a truncated set of the NR dimensions identified by the PLS analysis as being the most informative about the identity of the visual stimulus.

In the reduced space with NR dimensions, we calculated the (d′)2 value of the optimal linear discrimination strategy as:

\({({d}^{{\prime} })}^{2}=\Delta {\mu }^{{\rm{T}}}{\Sigma }^{-1}\Delta \mu =\Delta {\mu }^{{\rm{T}}}{w}_{{\rm{opt}}},\)

where \(\Sigma =\frac{1}{2}({\Sigma }_{{\rm{A}}}+{\Sigma }_{{\rm{B}}})\) is the noise covariance matrix averaged across the two stimulation conditions, \(\Delta \mu ={\mu }_{{\rm{A}}}-{\mu }_{{\rm{B}}}\) is the vector difference between the mean ensemble neural responses to the two stimuli and wopt = Σ−1 Δμ, which is normal to the optimal linear discrimination hyperplane in the response space30.
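
Given the definitions of Σ, Δμ and wopt, the optimal linear (d′)2 equals the inner product of Δμ with wopt = Σ−1 Δμ. The sketch below evaluates it for a small example using only the Python standard library. The linear solver and all function names are illustrative, and in practice a numerical library would be used in the reduced NR-dimensional space.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def d_prime_squared(mu_a, mu_b, sigma_a, sigma_b):
    """(d')^2 = delta_mu . w_opt, with w_opt solving Sigma @ w_opt = delta_mu."""
    n = len(mu_a)
    sigma = [[0.5 * (sigma_a[i][j] + sigma_b[i][j]) for j in range(n)]
             for i in range(n)]
    delta_mu = [a - b for a, b in zip(mu_a, mu_b)]
    w_opt = solve_linear(sigma, delta_mu)
    return sum(d * w for d, w in zip(delta_mu, w_opt))


# Two neurons with equal tuning differences and unit variances.
independent = [[1.0, 0.0], [0.0, 1.0]]
correlated = [[1.0, 0.5], [0.5, 1.0]]   # positive noise correlation of 0.5
print(d_prime_squared([1.0, 1.0], [0.0, 0.0], independent, independent))  # 2.0
print(d_prime_squared([1.0, 1.0], [0.0, 0.0], correlated, correlated))    # about 1.33
```

In this toy case the positively correlated noise lies along the signal direction Δμ and therefore lowers (d′)2 relative to independent noise of the same variance.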

To determine the optimal value of NR for these computations of d′, we split the data into three sets, each comprising a third of all trials. We used the first set to identify the PLS dimensions, the second ‘training’ set to find the optimal discrimination boundary defined by wopt, and the third ‘test’ set to estimate the discrimination performance d′. We then varied NR and plotted the resulting d′ values for both the training and test datasets (Extended Data Fig. 7a–c).

For all three decoding strategies, we chose NR = 5 for all subsequent determinations of d′, because the addition of further dimensions led to overfitting, as shown by the increase in discrimination performance using the training set and the decline in performance (that is, poorer generalization to previously unseen data) using the test set (Extended Data Fig. 7a–c).

After picking NR = 5, for all further computations of d′ we first chose a subset of neurons and divided the set of stimulation trials into two groups of equal size. We used the first group of trials to conduct the PLS analysis and the second group to determine d′ and the eigenvalue spectrum of the noise covariance matrix (Extended Data Fig. 5b). To make plots of d′ (Fig. 3d–g), we averaged d′ values across 100 different randomly chosen subsets of cells, which we analysed independently for every time bin. For each subset of cells and every time bin, we randomly split the set of visual stimulation trials into two halves, one half for determination of the five-dimensional sub-space and decoder training, and the other half for decoder testing. In Fig. 3d, e, we kept constant the number of cells per subset. In Fig. 3f, g, we varied the number of cells per subset. For instantaneous and cumulative decoders in the experiment with visual gratings oriented at ±30°, we used [0.83 s, 1.11 s] and [0 s, 1.11 s] time intervals, respectively (Fig. 3f–i). For the experiment with gratings oriented at ±6°, the time intervals used for instantaneous and cumulative decoding were respectively [0.70 s, 0.94 s] and [0 s, 0.94 s] (Extended Data Fig. 9a–c).

To determine the asymptotic value of d′ in the limit of many neurons, and the number of cells, \(n_{1/2}\), at which (d′)² attains half of its asymptotic value (Fig. 3h, i), we performed a two-parameter fit to the growth of d′ with increasing numbers of neurons, n: (d′)² = sn/(1 + εn). We determined the asymptotic value of d′ as \((s/\varepsilon)^{1/2}\) and \(n_{1/2}\) as \(\varepsilon^{-1}\).
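The following minimal sketch illustrates one way such a two-parameter fit could be carried out. It is not the fitting code used in the study: the linearization n/(d′)² = 1/s + (ε/s)n, the use of `np.polyfit`, and the synthetic values of s and ε are all assumptions chosen for illustration.

```python
import numpy as np

def fit_saturation(n, dprime_sq):
    """Fit (d')^2 = s*n / (1 + eps*n) via the linear form n/(d')^2 = 1/s + (eps/s)*n."""
    n = np.asarray(n, dtype=float)
    y = n / np.asarray(dprime_sq, dtype=float)
    slope, intercept = np.polyfit(n, y, 1)    # y = intercept + slope * n
    s = 1.0 / intercept
    eps = slope * s
    return s, eps

# Synthetic, noise-free data with hypothetical parameter values.
n = np.array([50, 100, 200, 400, 800, 1600])
s_true, eps_true = 0.04, 0.002
d2 = s_true * n / (1 + eps_true * n)

s, eps = fit_saturation(n, d2)
d_asymptote = np.sqrt(s / eps)    # asymptotic d' = (s/eps)^(1/2)
n_half = 1.0 / eps                # ensemble size at which (d')^2 is half its asymptote
```

With noisy estimates of d′, a direct nonlinear least-squares fit may weight the data points more appropriately than this linearized form.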

To verify that linear decoding is a near optimal decoding strategy, we confirmed that the noise covariance matrix Σ was stimulus-independent in the reduced, five-dimensional space used to calculate d′ (Extended Data Fig. 7f). We found that the matrix elements of the noise covariance matrix were highly correlated across the two stimulus conditions (r: 0.81 ± 0.16, mean ± s.d., N = 5 mice). This indicates that other more complex, nonlinear decoding strategies are unlikely to substantially surpass the accuracy of the linear strategy, which we further confirmed via an analysis of quadratic decoding (Extended Data Fig. 7h).

We also verified that we had sufficient numbers of visual stimulation trials to estimate d′ accurately (Extended Data Fig. 7g). For every mouse, d′ approached an asymptote as the number of stimulation trials used for analysis was increased; this indicates that beyond a certain point the computed value of d′ is insensitive to the number of trials. Moreover, we developed an analytic theory describing how the accuracy of our estimates of d′ depends jointly on the numbers of neurons and experimental trials (Extended Data Fig. 10f–k, Appendix).

In addition to our analyses of real data, we also calculated \({({d}_{{\rm{shuffled}}}^{{\prime} })}^{2}\) (Fig. 3b–g), the optimal linear discrimination performance using trial-shuffled datasets, which we created by shuffling the responses of each cell across stimulation trials of the same type. Owing to this shuffling procedure, the off-diagonal elements of \({\Sigma }_{{\rm{A}}}\) and \({\Sigma }_{{\rm{B}}}\) become near zero.
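As a hedged illustration of this shuffling procedure, the sketch below permutes each cell's responses independently across trials of a single stimulus type. The array layout (trials × cells), the function name `shuffle_trials` and the synthetic correlated data are assumptions made for the example.

```python
import numpy as np

def shuffle_trials(responses, rng):
    """Independently permute each cell's responses across trials of one stimulus.

    responses: array of shape (n_trials, n_cells) for a single stimulus type.
    """
    shuffled = np.empty_like(responses)
    for cell in range(responses.shape[1]):
        shuffled[:, cell] = rng.permutation(responses[:, cell])
    return shuffled

rng = np.random.default_rng(0)
n_trials, n_cells = 2000, 4
shared = rng.normal(size=(n_trials, 1))                    # common fluctuation -> correlated noise
responses = shared + 0.5 * rng.normal(size=(n_trials, n_cells))

shuffled = shuffle_trials(responses, rng)
corr_before = np.corrcoef(responses, rowvar=False)         # strong off-diagonal correlations
corr_after = np.corrcoef(shuffled, rowvar=False)           # off-diagonal terms near zero
```

Each cell's own response distribution is unchanged by the shuffle; only the trial-by-trial pairing between cells is destroyed.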

We further calculated the performance of a ‘diagonal’ discrimination strategy (Fig. 3b, d, e) that was blind to the noise correlations between neurons, using the actual (unshuffled) datasets30. For this sub-optimal strategy, \({({d}_{{\rm{diagonal}}}^{{\prime} })}^{2}\) determines the separation of two response distributions obtained when the vector of decoding weights w is collinear with Δμ (Fig. 3), which we calculated according to:

where Σd is the diagonal covariance matrix.
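To make the comparison between correlation-blind and optimal decoding concrete, the sketch below evaluates the discriminability of projections onto an arbitrary weight vector w, (w·Δμ)²/(wᵀΣw). It assumes that the correlation-blind weights take the form Σd⁻¹Δμ and that the optimal weights are Σ⁻¹Δμ; both choices, and the random covariance matrix, are illustrative assumptions rather than a reproduction of the study's equation.

```python
import numpy as np

def dprime_sq(w, dmu, sigma):
    """Squared discriminability of projections onto w: (w.dmu)^2 / (w' Sigma w)."""
    return float((w @ dmu) ** 2 / (w @ sigma @ w))

rng = np.random.default_rng(1)
n = 5                                        # e.g. a five-dimensional reduced space
a = rng.normal(size=(n, n))
sigma = a @ a.T + n * np.eye(n)              # hypothetical positive-definite noise covariance
dmu = rng.normal(size=n)                     # hypothetical difference of mean responses

w_opt = np.linalg.solve(sigma, dmu)          # optimal linear weights, Sigma^{-1} dmu
sigma_d = np.diag(np.diag(sigma))            # off-diagonal (correlation) terms discarded
w_diag = np.linalg.solve(sigma_d, dmu)       # assumed correlation-blind weights

d2_opt = dprime_sq(w_opt, dmu, sigma)
d2_diag = dprime_sq(w_diag, dmu, sigma)      # can never exceed d2_opt
```

Because the optimal weights maximize this ratio, any correlation-blind choice of w yields a value no larger than the optimal one.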

Eigenvalues of the noise covariance matrix

To examine how the statistical structure of neural noise affects the ability to discriminate neural responses to the two different visual stimuli (Fig. 4, Extended Data Fig. 10a–e), we expressed (d′)² in terms of the eigenvalues λα and eigenvectors eα of the noise covariance matrix Σ:

$$(d')^{2} = \Delta\mu^{\rm T}\,\Sigma^{-1}\,\Delta\mu = \sum_{\alpha}\frac{(\Delta\mu\cdot e_{\alpha})^{2}}{\lambda_{\alpha}},$$

which can be viewed as a sum of signal-to-noise ratios, one for each eigenvector. Clearly, the eigenvectors well aligned with Δμ are the most important for discriminating between the two distributions of neural responses. Noting that λα equals the noise variance along eα, our data revealed noise modes that were well aligned with Δμ and for which the variance increased linearly with the number of cells. The combination of these two attributes is what leads to the saturation of d′ as the number of cells in the ensemble becomes large (Fig. 4). Notably, our analysis also uncovered noise modes with much larger variance that are not information-limiting, as they do not align well with Δμ.
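The decomposition into per-mode signal-to-noise ratios can be checked numerically. The sketch below, which uses a random covariance matrix and signal vector as stand-ins for real data, confirms that summing (Δμ·eα)²/λα over all eigenvectors reproduces ΔμᵀΣ⁻¹Δμ.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 6
a = rng.normal(size=(n, n))
sigma = a @ a.T + np.eye(n)              # hypothetical noise covariance matrix
dmu = rng.normal(size=n)                 # hypothetical signal direction

lam, e = np.linalg.eigh(sigma)           # eigenvalues lam[k], eigenvectors e[:, k]
proj = e.T @ dmu                         # projection of dmu onto each eigenvector
snr_per_mode = proj ** 2 / lam           # one signal-to-noise term per noise mode

d2_from_modes = snr_per_mode.sum()
d2_direct = dmu @ np.linalg.solve(sigma, dmu)
```

A mode with large variance contributes little to the total only if its projection onto Δμ is small, which is the situation described for the largest noise modes.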

Calculation of decoding weights

We calculated the vector of optimal linear decoding weights, wopt, in the reduced space identified by PLS analysis:

$$w_{\rm opt} = \Sigma^{-1}\,\Delta\mu.$$

For moving grating visual stimuli oriented at ±30°, wopt was generally well aligned to Δμ, indicating that correlation-blind decoding performed near optimally (Figs. 3b, h, 4a, c). This was somewhat less the case with moving gratings oriented at ±6° (Extended Data Fig. 9c). To assess the contributions of individual cells to the optimal decoder, we estimated the vector of decoding weights in the space of all neurons as:

where T is a transformation matrix from the high-dimensional population vector space, in which the responses of each cell occupy an individual dimension, into the five-dimensional space identified by PLS analysis. Starting around 0.4 s after the onset of visual stimulation, wdecoding was largely time-invariant (Extended Data Fig. 7d).

L2-regularized regression

Because our method for computing d′ via PLS analysis involved a dimensional reduction, we compared the d′ values found with PLS analysis to those determined via a different method, L2-regularized regression52, which does not depend on dimensional reduction (Extended Data Fig. 8a, b). This form of regression uses a regression vector, b, that lies within the high-dimensional space of all ensemble neural activity patterns, but its length is limited by the use of an adjustable regularization parameter, k. For each subset of neurons considered, we randomly chose 90% of the visual stimulation trials for the determination of b. We projected the neural responses from the remaining 10% of trials onto the dimension determined by b. We then computed d′ with the same formula as used with PLS analysis, except with b replacing wopt, the optimal linear discrimination hyperplane. Using this approach, we found the maximum value of d′ across all values of k within the range [1, 10⁵]. We averaged these maximal d′ values across 100 different subsets of neurons and visual stimulation trials (Extended Data Fig. 8a).
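A minimal sketch of this procedure on synthetic data is given below. The closed-form ridge solution, the ±1 stimulus labels, the pooled-variance definition of d′ and the simulated responses are assumptions for illustration; the study's own d′ formula is defined elsewhere in the Methods.

```python
import numpy as np

def ridge_vector(x, y, k):
    """Closed-form L2-regularized regression vector b = (X'X + k I)^{-1} X'y."""
    n_cells = x.shape[1]
    return np.linalg.solve(x.T @ x + k * np.eye(n_cells), x.T @ y)

def held_out_dprime(b, x_a, x_b):
    """d' of held-out responses projected onto b (variance pooled across stimuli)."""
    p_a, p_b = x_a @ b, x_b @ b
    pooled_var = 0.5 * (p_a.var(ddof=1) + p_b.var(ddof=1))
    return abs(p_a.mean() - p_b.mean()) / np.sqrt(pooled_var)

rng = np.random.default_rng(3)
n_trials, n_cells = 400, 30                       # trials per stimulus; synthetic sizes
dmu = 0.3 * rng.normal(size=n_cells)
x_a = rng.normal(size=(n_trials, n_cells)) + dmu / 2
x_b = rng.normal(size=(n_trials, n_cells)) - dmu / 2

n_train = int(0.9 * n_trials)                     # 90% of trials determine b
x_train = np.vstack([x_a[:n_train], x_b[:n_train]])
x_train = x_train - x_train.mean(axis=0)          # centre so that no intercept is needed
y_train = np.concatenate([np.ones(n_train), -np.ones(n_train)])

ks = np.logspace(0, 5, 11)                        # k swept over [1, 1e5]
dprimes = [held_out_dprime(ridge_vector(x_train, y_train, k),
                           x_a[n_train:], x_b[n_train:]) for k in ks]
best_dprime = max(dprimes)                        # maximum over the regularization sweep
```

In practice this would be repeated over many random subsets of cells and trials and the maximal values averaged, as described above.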

Kullback–Leibler divergence

To assess the extent to which quadratic decoding might surpass the optimal linear decoder, we computed the Kullback–Leibler (KL) divergence31 between the two distributions of ensemble neural responses to the two different visual stimuli (Extended Data Fig. 7h). The KL divergence is a generalization of d′ to arbitrary distributions and, like d′, provides an assessment of the statistical differences between two distributions. When the two distributions are Gaussians with equal covariance matrices, the KL divergence reduces to (d′)², and linear decoding methods suffice to optimally discriminate between the two distributions52. By comparison, for two Gaussian distributions with different means and covariance matrices, (d′)² is not equivalent to the KL divergence, and quadratic decoding methods are required to optimally discriminate between the two distributions52.

To assess the potential benefits of quadratic decoding, we fit multivariate Gaussians to the two stimulus response distributions without assuming they had equal covariance matrices. We computed the KL divergence of the response distribution to stimulus A relative to the response distribution to stimulus B according to:

where ΣA, ΣB are the noise covariance matrices for the two stimulation conditions, Δμ = μA − μB is the vector difference between the mean ensemble neural responses to the two stimuli, and N is the dimensionality of the response distribution (that is, the number of cells in the ensemble). The KL divergence saturated as N increased and was generally not much greater than (d′)² (Extended Data Fig. 7h). This result was consistent with the finding that the noise covariance matrix was similar for the two different visual stimuli (Extended Data Fig. 7f) and supported the conclusion that quadratic decoding would achieve little performance gain beyond that of the optimal linear decoder.
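For reference, the sketch below implements the textbook KL divergence between two multivariate Gaussians in terms of ΣA, ΣB, Δμ and N. This is an assumption about the general form only: the textbook expression carries a prefactor of ½, so with equal covariance matrices it evaluates to (d′)²/2, and the normalization used in the study may differ from it by a constant factor.

```python
import numpy as np

def gaussian_kl(mu_a, sigma_a, mu_b, sigma_b):
    """Textbook KL divergence D(A || B) between two multivariate Gaussians."""
    n = mu_a.size
    dmu = mu_a - mu_b
    inv_b_sigma_a = np.linalg.solve(sigma_b, sigma_a)     # Sigma_B^{-1} Sigma_A
    _, logdet_a = np.linalg.slogdet(sigma_a)
    _, logdet_b = np.linalg.slogdet(sigma_b)
    return 0.5 * (np.trace(inv_b_sigma_a)
                  + dmu @ np.linalg.solve(sigma_b, dmu)
                  - n + logdet_b - logdet_a)

rng = np.random.default_rng(4)
n = 5
a = rng.normal(size=(n, n))
sigma = a @ a.T + np.eye(n)                               # hypothetical shared covariance
mu_a, mu_b = rng.normal(size=n), rng.normal(size=n)

kl_equal = gaussian_kl(mu_a, sigma, mu_b, sigma)          # equal-covariance case
d2 = (mu_a - mu_b) @ np.linalg.solve(sigma, mu_a - mu_b)  # (d')^2 for the same Gaussians
```

When the covariance matrices differ, the trace and log-determinant terms no longer cancel, and the divergence exceeds what the mean difference alone would give.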

Computational studies of the robustness of empirically determined d′ values

To verify that our decoding methods were robust to the potential presence of effects such as common mode fluctuations and multiplicative gain modulation that could increase the trial-to-trial variability of neural responses, we compared the d′ values obtained from PLS analysis versus L2-regularized regression using computationally simulated datasets of neural population responses (Extended Data Fig. 8c–h).

First, to examine the combined effects of information-limiting correlations and common mode fluctuations (Extended Data Fig. 8c–f), we studied a model of the neural ensemble responses in which the noise covariance matrix exhibited information-limiting noise correlations via a single eigenvector, f, the eigenvalue of which grew linearly with the number of cells in the ensemble. In addition to this rank 1 component, we included a noise term that was uncorrelated between different cells, as well as a common mode fluctuation, yielding a noise covariance matrix with the form

where σ² = 1 is the amplitude of uncorrelated noise, I is the identity matrix, J is a rank 1 matrix of all ones, and f is the information-limiting direction, a vector that we chose randomly in each individual simulation from a multi-dimensional Gaussian distribution with unity variance in each dimension. The amplitude of information-limiting correlations was ε = 0.002, approximately matching the level observed in the experimental data. In the model version without common mode fluctuations, we set εcommon to zero. In the version with common mode fluctuations, we set εcommon = 0.02, ten times the value of ε. We chose the difference in the means of the two stimulus response distributions, Δμ, to be aligned with f (Fig. 3a) and to have a magnitude of 0.2, so that the asymptotic value of d′ for large numbers of cells approximately matched that of the data. We compared the decoding results attained with and without the presence of common mode fluctuations in the neural responses.
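The sketch below builds a covariance matrix from the three ingredients just described. The specific form σ²I + ε f fᵀ + εcommon J and the convention Δμ = 0.2 f are assumptions inferred from the definitions in the text, not a transcription of the study's equation; the ensemble size of 500 cells is likewise arbitrary.

```python
import numpy as np

def model_covariance(f, sigma2=1.0, eps=0.002, eps_common=0.0):
    """Assumed form: sigma2 * I + eps * f f' + eps_common * J (J = all-ones matrix)."""
    n = f.size
    return sigma2 * np.eye(n) + eps * np.outer(f, f) + eps_common * np.ones((n, n))

rng = np.random.default_rng(5)
n = 500                                  # hypothetical ensemble size
f = rng.normal(size=n)                   # information-limiting direction, unit variance per dimension
dmu = 0.2 * f                            # assumed meaning of "aligned with f, magnitude 0.2"

results = {}
for eps_common in (0.0, 0.02):
    sigma = model_covariance(f, eps_common=eps_common)
    results[eps_common] = float(np.sqrt(dmu @ np.linalg.solve(sigma, dmu)))   # optimal d'
```

Under these assumptions and without the common mode, (d′)² = 0.04|f|²/(1 + ε|f|²) with |f|² ≈ n, so the eigenvalue along f grows linearly with the number of cells and d′ saturates near (0.04/ε)^(1/2).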

Second, to study the possible effects of multiplicative gain modulation (Extended Data Fig. 8g, h), we compared two versions of a model in which the responses of the V1 neural population either were or were not subject to a multiplicative stochastic gain modulation but were otherwise statistically equivalent. We modelled the V1 cell population as a set of linear Gabor filters (see Appendix section 5). In the version with gain modulation, on each visual stimulation trial we multiplied the output of the Gabor filter by a randomly chosen factor, uniformly distributed between 50% and 150%, the value of which was the same for every cell but varied from trial to trial.

Estimates of perceptual acuity

We used the empirical determinations of d′ based on visual cortical activity and the parameters of the moving grating visual stimuli to estimate the minimum perceptible orientation difference between the two stimuli. We compared the resulting values to those estimated from past behavioural measurements of visual acuity in mice15,34 and found that they agree well.

One behavioural study assessed how well three individual mice could discriminate the orientations of visual gratings34. The best trained of these three mice—that is, the mouse that performed the most sessions and had the smallest error bars in the threshold determination—had a behavioural threshold for orientation discrimination (4.6° ± 0.1°; n = 7 sessions) close to the value estimated from our neural data (4.8°). The second mouse had a 5.7° ± 0.6° threshold (n = 4 sessions), and the third mouse had a threshold of 6.9° (n = 1 session).

Another behavioural study examined visual acuity in 13 mice and determined the highest visual spatial frequencies the mice could discern15. To compare our results to this study, we used the fact that our grating stimuli had a low spatial frequency (0.04 cycles per degree) to approximate the perceptual challenge of estimating the grating orientation as being equivalent to that of estimating the orientation of the line of peak illumination intensity over the same viewing diameter. In the behavioural study of acuity15, the mice used both eyes to view the stimulus, whereas in our studies mice viewed the stimulus with one eye, and we recorded neural activity from only one cerebral hemisphere. To account for these differences, we posited that neural noise fluctuations should be nearly independent across the visual streams from the two eyes, which would boost d′ values by about a factor of √2 over those achievable with one eye. However, our determinations of d′ from neural activity concern the discrimination of two distinct visual stimuli, which should also increase d′ values by a factor of about √2 over those for a single stimulus viewed with one eye. Given these counterbalancing factors, we used the d′ values to estimate the highest perceptible spatial frequency as \(f\approx d{\prime} (\theta )/(D\,\sin \,\theta )\), where D is the diameter of the visual stimuli (50 deg; Fig. 2b) presented at orientations of ±θ. For the grating stimuli oriented at ±30° to vertical, d′ ≈ 6, yielding f ≈ 0.3 cycles per degree. For the grating stimuli oriented at ±6°, which are more representative of the perceptual threshold, d′ ≈ 2.5 and thus f ≈ 0.48 cycles per degree, comparable to the value of f ≈ 0.5 cycles per degree attained from the behavioural studies at a unity d′ value for the behavioural performance15. We converted values of f into the minimum perceptible orientation difference, 2θmin, between two grating stimuli oriented at ±θmin by using \({\theta }_{\min }={\sin }^{-1}(1/(Df))\). This conversion yielded a prediction of θmin ≈ 2.3° based on the behavioural studies of mouse visual acuity15, as compared to θmin ≈ 2.4° based on our neural data.
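The arithmetic behind these estimates can be reproduced in a few lines. The sketch below reads the two expressions as f ≈ d′/(D sin θ) and θmin = sin⁻¹(1/(Df)) with D = 50°; the function names are hypothetical.

```python
import math

def spatial_frequency(dprime, theta_deg, diameter_deg=50.0):
    """f ~ d'(theta) / (D * sin(theta)), in cycles per degree."""
    return dprime / (diameter_deg * math.sin(math.radians(theta_deg)))

def theta_min_deg(f, diameter_deg=50.0):
    """theta_min = arcsin(1 / (D * f)), returned in degrees."""
    return math.degrees(math.asin(1.0 / (diameter_deg * f)))

f_neural = spatial_frequency(2.5, 6.0)        # gratings at +/-6 deg with d' ~ 2.5
theta_neural = theta_min_deg(f_neural)        # neural estimate of theta_min
theta_behaviour = theta_min_deg(0.5)          # from the behavioural acuity of 0.5 cycles per degree

print(round(f_neural, 2), round(theta_neural, 1), round(theta_behaviour, 1))
# prints: 0.48 2.4 2.3
```

Substituting one expression into the other gives sin θmin = sin θ / d′(θ), so the estimate of θmin depends on D only through the behavioural value of f.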

Computational simulations of activity in a two-layer neural network

To illustrate that cells whose receptive fields overlap exhibit shared noise correlations, we simulated a simple two-layer feed-forward network of linear neurons, with 14 input neurons and 3 output neurons (Extended Data Fig. 1j–m). The neurons in each layer were equally spaced along a linear axis. We defined the strengths of the connections, wi, between the input and output neurons such that the receptive field profiles of the different output neurons were spatially overlapping Gaussian functions of the linear separation between each output neuron and the input neurons (Extended Data Fig. 1j).
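A minimal sketch of such a network follows. Only the layer sizes (14 inputs, 3 outputs), the linearity and the Gaussian receptive field profiles come from the description above; the neuron positions, the receptive field width, the unit-variance independent input noise and the number of simulated trials are hypothetical choices.

```python
import numpy as np

n_in, n_out = 14, 3
x_in = np.arange(n_in, dtype=float)                        # input positions, equally spaced
x_out = np.linspace(x_in[0], x_in[-1], n_out + 2)[1:-1]    # output positions (hypothetical placement)
rf_width = 3.0                                             # hypothetical receptive-field width

# weights[o, i]: Gaussian function of the separation between output o and input i
weights = np.exp(-0.5 * ((x_out[:, None] - x_in[None, :]) / rf_width) ** 2)

rng = np.random.default_rng(6)
n_trials = 5000
input_noise = rng.normal(size=(n_trials, n_in))            # independent noise in each input neuron
output_noise = input_noise @ weights.T                     # linear feed-forward outputs

cov_theory = weights @ weights.T                           # output covariance for unit-variance inputs
corr_empirical = np.corrcoef(output_noise, rowvar=False)   # positive wherever receptive fields overlap
```

Because every output neuron pools some of the same noisy inputs, the off-diagonal entries of the output covariance are positive even though the input noise is independent.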