Abstract

According to management by objectives (MBO) theory, the significance of management objectives must be considered as a reference point in a performance evaluation. Cross efficiency evaluation has always been considered to be one of the important performance evaluation methods. However, few studies to date have considered the impact of management objectives on cross efficiency. According to prospect theory, the choice of reference point will cause irrational psychology in decision makers. A management objective is a natural reference point, which will cause a ‘gain and loss’ psychology in enterprises and may create irrational psychology. Performance level is an important index by which to evaluate resource allocation, which in turn can be regarded as an important enterprise management objective. This paper proposes a cross efficiency evaluation method based on performance level. Cross efficiency evaluation models are constructed, based on the irrational psychology that occurs under organization objectives, personal objectives and composite objectives. This method not only considers the bounded rational behavior of enterprises, but is also more flexible. A numerical example is given to illustrate the application of the bounded rational cross efficiency evaluation method in data envelopment analysis (DEA) ranking.


1 Introduction

Data envelopment analysis (DEA), first proposed by Charnes et al. [1], is a non-parametric method used to evaluate decision-making unit (DMU) performance based on input and output data. In this method, the weights of the input and output indexes do not need to be given subjectively; they are objectively obtained through model optimization. The advantages of the DEA method have been generally recognized by scholars [9, 11, 20]. A traditional DEA model, such as the CCR model, often admits multiple optimal weight solutions, which makes a full ranking of DMU performance problematic. In this regard, Sexton et al. [15] proposed cross-efficiency. Their study regarded CCR efficiency as the self-evaluation efficiency of a decision-making unit, and achieved peer evaluation by introducing secondary objectives to optimize input and output weights. Doyle and Green [7] further proposed aggressive and benevolent cross-efficiency methods under three secondary objectives. As a performance evaluation tool, cross-efficiency evaluation has been widely studied and applied worldwide [5, 6].

Cross-efficiency evaluation is, in fact, a relative efficiency evaluation method whose reference points may be efficient or inefficient frontiers [8, 12, 17, 21], a DMU such as the ideal DMU (IDMU) or anti-ideal DMU (ADMU) ([13, 18, 19]; Shi et al., 2020), or interval reference points, which take the best production state and the worst production state as reference states (Huang et al. [10]). In performance evaluation, management objectives serve as an important measure of the degree of achievement of decision-making units. As a significant performance evaluation method, cross-evaluation should therefore consider management objectives as a reference point. Many existing studies have considered reference points in cross-evaluation, but few have taken management objectives as the reference point.

According to management by objectives (MBO) as proposed by Drucker, management objectives are diverse, but regardless of their type, they should abide by the SMART (specific, measurable, achievable, relevant and time-bound) principle. To be clear, “SMART” means that management objectives must be specific (the objectives must be clear and easy to understand), measurable (the reference standards used in the evaluation must be consistent with the management objectives), attainable (the objectives must be neither too low nor too high, and must be achievable through hard work), relevant (the sub-objectives must be related to the enterprise’s overall objectives) and time-based (the completion of the objective must be time-limited). The reference points in existing research do not meet these principles. For example, when an IDMU or an ADMU is used as a reference point, the point varies as the set of evaluated DMUs changes, and can only reflect the best and worst states of the evaluation criteria. Obviously, this type of reference point is neither clear, measurable nor achievable; the efficient and inefficient frontiers have the same limitations. This study proposes to use the relevant performance level as a reference point. The performance level is defined according to the application requirements (see definition), and is not limited to the optimal performance level. Thus, this approach conforms to the SMART principle of management objectives.

The theory of MBO was further developed by Odiorne in [14], and holds that management objectives should not only focus on the relationship between performance evaluation and management objectives, but should also consider the preferences (for example, attitude, rationality, irrationality, etc.) of decision makers. Prospect theory was put forward by Kahneman and Tversky in [14], and maintains that people will have bounded rational psychology when facing a reference point. When the performance is better than the reference point, the degree of excellence will be underestimated. Conversely, when the performance is worse than the reference point, the degree of poor performance will be enlarged (Shi et al., 2020). Judgments of value vary due to changes in the reference points; similarly, performance will vary due to changes in management objectives. Therefore, performance evaluation should not only consider the significance of management objectives as reference points, but also consider the bounded rationality of the evaluated object when facing management objectives.

In conclusion, traditional cross-efficiency evaluation methods often use specific DMU or virtual DMU as the reference point for performance evaluation, rarely consider the role and significance of performance level as the reference point, and ignore the influence of bounded rationality on the behavior of decision-making units. In reality, decision makers are often not entirely rational, and their decision-making processes are influenced by cognitive limitations, information asymmetry, and other factors. Therefore, it is essential to consider the bounded rationality of decision makers and adopt performance level as the management objective in cross-efficiency evaluation methods, which holds significant theoretical and practical value.

Performance evaluation is the ranking of evaluated DMUs based on performance level. High level performance is the pursuit of all DMUs, so a high performance level is a natural management objective. The performance level value under the DEA method is between 0 and 1, and the value can be solved by a DEA model. When the performance of the DMU is good enough, the value can reach the highest level of 1, which conforms to the three principles of specificity, measurability and reachability. Obviously, for the cross efficiency evaluation model, the performance level is the ideal management objective. This paper considers the bounded rationality psychology of DMUs when performance level is taken as the management objective, constructs a cross efficiency model, realizes the full ranking of each DMU, and provides effective technical means for decision-makers to make scientific decisions.

2 Theory Premise

2.1 Prospect Theory

Prospect theory describes the decision-making process of decision-makers in the face of risk, and it departs from traditional expected value theory and expected utility theory. The risk preference of decision-makers is inconsistent in the face of loss and profit; they become risk-seeking in the face of loss and risk-averse in the face of profit. The choice and change of a reference point affect the decision-maker’s perception of gain and loss, and thereby affect the decision-making results. The value function of prospect theory is as follows:

\[
v(\Delta z) = \begin{cases} (\Delta z)^{\alpha}, & \Delta z \ge 0 \\ -\lambda \left( -\Delta z \right)^{\beta}, & \Delta z < 0 \end{cases} \qquad (1)
\]

where \(\Delta z\) is the deviation from the reference point. When \(\Delta z \ge 0\), there is a gain; when \(\Delta z < 0\), there is a loss. The parameters \(\alpha\) and \(\beta\) determine the concavity of the value function in the gain domain and its convexity in the loss domain, respectively. The loss aversion coefficient \(\lambda \ge 1\) indicates that the value function is steeper in the loss domain than in the gain domain. The value function has an inflection point, the so-called “reference point”.

As shown in Fig. 1, the reference point is the inflection point of the value curve, which divides the curve into a gain domain and a loss domain. The curve is steeper in the loss domain than in the gain domain, which shows that decision makers are more sensitive to losses than to gains. In addition, the prospect value curve is concave in the gain domain and convex in the loss domain. This reflects the decision makers’ risk-averse tendency toward gains and their risk-seeking tendency toward losses.
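The shape of the value curve can be checked numerically. The following is a minimal sketch, not the authors' implementation; the function name `prospect_value` is hypothetical, and the default parameters are the Tversky and Kahneman estimates (0.88, 0.88, 2.25) commonly used in the literature.

```python
def prospect_value(delta_z, alpha=0.88, beta=0.88, lam=2.25):
    """Prospect value of a deviation delta_z from the reference point.

    alpha, beta: curvature in the gain and loss domains (assumed defaults).
    lam: loss aversion coefficient (assumed default).
    """
    if delta_z >= 0:
        # Gain domain: concave power function.
        return delta_z ** alpha
    # Loss domain: convex and scaled by the loss aversion coefficient.
    return -lam * (-delta_z) ** beta


# A gain and a loss of the same size are valued asymmetrically.
gain = prospect_value(0.2)
loss = prospect_value(-0.2)
```

With equal curvature parameters, the magnitude of `loss` is `lam` times `gain`, which is the steeper loss branch described above.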

2.2 Efficiency Evaluation

2.2.1 Self-Evaluation

Suppose there are n DMUs to be evaluated with m inputs and s outputs. Denote by \(x_{ij} (i = 1, \ldots ,\,m)\) and \(y_{rj} \left( {r = 1, \ldots ,\,s} \right)\) the input and output values of DMUj \(\left( {j = 1, \ldots ,\,n} \right)\). Consider a DMU, say, DMUk, \(k \in \{ 1, \ldots ,\,n\}\), whose efficiency relative to the other DMUs can be measured by the following CCR model [1]:

\[
\begin{aligned}
\max \quad & \theta_{kk} = \sum\nolimits_{r = 1}^{s} {u_{rk} y_{rk} } \\
\text{s.t.} \quad & \sum\nolimits_{i = 1}^{m} {v_{ik} x_{ik} } = 1, \\
& \sum\nolimits_{r = 1}^{s} {u_{rk} y_{rj} } - \sum\nolimits_{i = 1}^{m} {v_{ik} x_{ij} } \le 0, \quad j = 1, \ldots ,n, \\
& u_{rk} \ge \varepsilon ,\; r = 1, \ldots ,s; \quad v_{ik} \ge \varepsilon ,\; i = 1, \ldots ,m,
\end{aligned} \qquad (2)
\]

where ε is an infinitesimal. Let \(u_{rk}^{*} \,(r = 1, \ldots ,s)\) and \(v_{ik}^{*} \,(i = 1, \ldots ,m)\) be the optimal solution to Model (2). Then, \(\theta_{kk}^{*} = \sum\nolimits_{r = 1}^{s} {u_{rk}^{*} y_{rk} }\) is referred to as the CCR-efficiency of DMUk, which also reflects the self-evaluated efficiency of DMUk. As such, \(\theta_{jk} = \sum\nolimits_{r = 1}^{s} {u_{rk}^{*} y_{rj} /\sum\nolimits_{i = 1}^{m} {v_{ik}^{*} x_{ij} } }\) is referred to as a cross-efficiency of DMUj and reflects the peer evaluation of DMUj by DMUk \(\left( {j = 1,...,n;j \ne k} \right)\).
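Once each DMU has chosen its weights, the self-evaluation and peer-evaluation scores are simple ratios. The sketch below illustrates this under stated assumptions: the weights are taken as given (in practice they would come from a linear programming solver), and the function name and the two-DMU data are hypothetical.

```python
def cross_efficiency_matrix(X, Y, V, U):
    """Return theta, where theta[j][k] is the score of DMU j under DMU k's weights.

    X[j][i]: input i of DMU j;  Y[j][r]: output r of DMU j.
    V[k][i], U[k][r]: input and output weights chosen by DMU k (assumed given).
    """
    n = len(X)
    theta = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in range(n):
            weighted_output = sum(u * y for u, y in zip(U[k], Y[j]))
            weighted_input = sum(v * x for v, x in zip(V[k], X[j]))
            theta[j][k] = weighted_output / weighted_input
    return theta


# Hypothetical data: two DMUs, two inputs, one output.
X = [[2.0, 4.0], [4.0, 2.0]]
Y = [[2.0], [2.0]]
# Hypothetical weights, normalized so each DMU's own weighted input equals 1.
V = [[0.25, 0.125], [0.125, 0.25]]
U = [[0.5], [0.5]]

theta = cross_efficiency_matrix(X, Y, V, U)
```

The diagonal entries `theta[k][k]` are the self-evaluated efficiencies, and the off-diagonal entries are the peer evaluations.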

2.2.2 Peer Evaluation

Scholars have proposed a variety of cross-evaluation models for different application problems, considering either the preference or the psychology of DMUs (Chen, [2,3,4]). However, few studies to date have considered the irrational psychology of DMUs. A study by Liu et al. [13] proposed one of the few cross-evaluation methods that do so. That method considers the risk aversion of decision makers, with the best and worst performing decision units as reference points. The best performing decision unit (IDMU) and the worst performing decision unit (ADMU) are defined in Definition 1 and Definition 2. Cross-evaluation models are then constructed taking the IDMU alone, the ADMU alone, and both the IDMU and the ADMU together as reference points.

Definition 1:

(IDMU): Assume a virtual DMU is the ideal DMU, which can use the least input \(x_{i}^{\min }\) to generate the most outputs \(y_{r}^{\max }\); where \(x_{i}^{\min } = \mathop {\min }\limits_{j} \left\{ {x_{ij} } \right\},j = 1,2,...,n\), \(y_{r}^{\max } = \mathop {\max }\limits_{j} \left\{ {y_{rj} } \right\},\) \(j = 1,2,...,n\), denoted by IDMU.

Definition 2:

(ADMU): Assume a virtual DMU is the anti-ideal DMU, which would use the most input \(x_{i}^{\max }\) to generate the least outputs \(y_{r}^{\min }\); where \(x_{i}^{\max } = \mathop {\max }\limits_{j} \left\{ {x_{ij} } \right\},\) \(j = 1,2,...,n\), \(y_{r}^{\min } = \mathop {\min }\limits_{j} \left\{ {y_{rj} } \right\},\) \(j = 1,2,...,n\), denoted by ADMU.
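Definitions 1 and 2 amount to column-wise minima and maxima over the observed data. A minimal sketch, with a hypothetical function name and hypothetical data:

```python
def ideal_and_anti_ideal(X, Y):
    """Return (IDMU, ADMU), each as an (inputs, outputs) pair.

    X[j][i]: input i of DMU j;  Y[j][r]: output r of DMU j.
    """
    n_inputs, n_outputs = len(X[0]), len(Y[0])
    x_min = [min(row[i] for row in X) for i in range(n_inputs)]
    x_max = [max(row[i] for row in X) for i in range(n_inputs)]
    y_min = [min(row[r] for row in Y) for r in range(n_outputs)]
    y_max = [max(row[r] for row in Y) for r in range(n_outputs)]
    idmu = (x_min, y_max)  # least inputs, most outputs
    admu = (x_max, y_min)  # most inputs, least outputs
    return idmu, admu


# Hypothetical data: three DMUs, two inputs, one output.
X = [[2.0, 4.0], [4.0, 2.0], [3.0, 3.0]]
Y = [[2.0], [3.0], [1.0]]
idmu, admu = ideal_and_anti_ideal(X, Y)
```

Because both virtual units are computed from the current sample, adding or removing a DMU can move them, which is the instability of this kind of reference point noted in the Introduction.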

Shi et al. [16] further expanded ADMU and IDMU into interval DMU, which is defined in Definition 3.

Definition 3:

Assume that there is a production possibility set which can use m interval inputs \(\left[ {a_{i} x_{i}^{\min } ,b_{i} x_{i}^{\max } } \right]\,\left( {i = 1,2,...,m} \right)\), to generate s interval outputs \(\left[ {c_{r} y_{r}^{\min } ,d_{r} y_{r}^{\max } } \right]\,\,\left( {r = 1,2,...,s} \right)\), where \(a = \left( {a_{1} ,a_{2} ,...,a_{m} } \right)\), \(b = \left( {b_{1} ,b_{2} ,...,b_{m} } \right)\), \(c =\)\(\left( {c_{1} ,c_{2} ,...,c_{s} } \right)\) and \(d = \left( {d_{1} ,d_{2} ,...,d_{s} } \right)\) are all vectors, and \(1 \le a_{i} \le \frac{{x_{i}^{\max } }}{{x_{i}^{\min } }}\), \(\frac{{x_{i}^{\min } }}{{x_{i}^{\max } }} \le b_{i} \le 1,i = 1,2,...,m\), \(1 \le c_{r} \le \frac{{y_{r}^{\max } }}{{y_{r}^{\min } }}\), \(\frac{{y_{r}^{\min } }}{{y_{r}^{\max } }} \le d_{r} \le 1\), \(r = 1,2,...,s\), and \(x_{i}^{\min } > 0\), \(x_{i}^{\max } > 0\),\(y_{r}^{\max } > 0\), \(y_{r}^{\min } > 0\). This is called an interval-DMU.

The cross-evaluation model taking interval-DMU as a reference point is shown as follows:

where

and \(\theta_{kk}^{*}\) is the self-evaluation efficiency of DMUk obtained using Model (2). Also, \(u_{rk} \,\,(r = 1, \ldots ,s)\) and \(v_{ik} \,(i = 1, \ldots ,m)\) are decision variables. The objective function is the prospect value of DMUk, based on interval-DMU. The model unifies multiple data types of reference points, but the model is too complex.

3 Cross-Efficiency Evaluation Models Based on Management Objectives

The DEA method is used to evaluate the performance of DMUs. According to the modeling ideas and meaning of the DEA method, each inefficient DMU can achieve improved efficiency through the adjustment of inputs and outputs. The DMU’s efficiency reflects the reasonable degree of resource allocation of the DMU, and DEA efficiency ranges from 0 to 1. That is, when the DEA efficiency is 1, the resource allocation of the DMU is optimal. Obviously, the DEA efficiency, as a management objective, is attainable, specific and measurable; therefore, this study uses DEA efficiency as the performance management objective.

In practical applications, management objectives have multiple levels, multiple angles and diversity. As a reference point for DMUs, an objective may be for all DMUs or a single DMU. When the management objective is the performance evaluation criterion of all DMUs, that objective is an organizational objective, which arises from the needs of either the industry or society. When the management objective is the evaluation criterion of a DMU, that objective is a personal objective, which is subjectively formulated by the DMU according to the status quo and needs. In fact, the performance evaluation of a DMU often has to simultaneously face both personal objectives and organizational objectives. We regard this management objective system (i.e., one that considers both organizational objectives and personal objectives) as a composite objective. The cross-efficiency evaluation models based on personal objectives, organizational objectives and composite objectives are constructed in consideration of bounded rationality.

Assumption:

(1) Organizational objectives and personal objectives are consistent; that is, both organizational objectives and personal objectives urge the DMU to try its best to improve resource allocation (that is, to increase efficiency). (2) All DEA models in this study assume constant returns to scale.

3.1 Profit and Loss Model Under Management Objectives

Objectives management is divided into the objective setting stage and the performance evaluation stage. This study focuses on performance evaluation, with management objectives as the reference points, rather than the formulation of management objectives. In the management objective setting stage, the management objective should be higher than the performance level of most DMUs. However, in the performance evaluation stage, the performance of some DMUs may meet or even exceed the management objective. For a DMU, say DMUj (j = 1, 2,…, n), the management objective is θMO (0 < θMO < 1), and its self-evaluation efficiency (CCR efficiency) is θj (j = 1, 2, …, n) in the performance evaluation stage. DMUk, being the evaluated DMU, takes θMO as a reference level to evaluate peer DMUj. There is redundancy in inputs and deficiencies in output, which are all losses for DMUj (j = 1, 2,…, n). The prospect value of DMUj can be defined by the redundancy of inputs and the deficiencies of outputs as described below.

Definition 4:

There will be an equation [see Formula (4)], when DMUk is evaluated with θMO as a reference point to evaluate its peer DMUj (j = 1, 2,…, n) and θMO > θjj:

where \({ - }\Delta x_{j}\) and \({ - }\Delta y_{j}\) represent the redundancies in inputs and deficiencies in output, respectively, such that \(S_{j1}^{k} =\)\(- \lambda \left( { - \left( { - \Delta y_{j} } \right)} \right)^{\beta } - \lambda \left( { - \left( { - \Delta x_{j} } \right)} \right)^{\beta }\) is defined as the loss of DMUj, taking θMO as the reference point.

Definition 5:

There is an equation, shown as Formula (5), when θMO ≤ θjj

where \(\Delta x_{j}\) and \(\Delta y_{j}\) represent the savings in inputs and profits in output, respectively, such that \(S_{j2}^{{\text{k}}} =\)\(\left( {\Delta y_{j} } \right)^{\alpha } + \left( {\Delta x_{j} } \right)^{\alpha }\) is defined as the gain of DMUj, taking θMO as the reference point.
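The two prospect values in Definitions 4 and 5 can be evaluated directly once the input and output deviations are known. The sketch below assumes the deviations are passed as non-negative magnitudes; the function names are hypothetical and the default parameters are the Tversky and Kahneman estimates.

```python
def loss_value(input_redundancy, output_deficiency, beta=0.88, lam=2.25):
    """Prospect value when the objective exceeds the DMU's efficiency (a loss).

    Both arguments are non-negative magnitudes (an assumption of this sketch).
    """
    return -lam * output_deficiency ** beta - lam * input_redundancy ** beta


def gain_value(input_saving, output_profit, alpha=0.88):
    """Prospect value when the DMU's efficiency meets or exceeds the objective."""
    return output_profit ** alpha + input_saving ** alpha


# Hypothetical deviations of equal size on the input and output sides.
s_loss = loss_value(0.1, 0.1)
s_gain = gain_value(0.1, 0.1)
```

For deviations of the same size, the loss is larger in magnitude than the gain, reflecting loss aversion.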

Models (6)–(7), which take \(S_{j1}^{k}\) and \(S_{j2}^{k}\) as objective functions, are both nonlinear programs and may have multiple optimal solutions, which would undermine the usability of the models. To show that the objective functions of these models have unique optimal values, the following propositions are stated and proved.

Proposition 1:

There is a function \(S = \left( {\Delta y} \right)^{\alpha } + \left( {\Delta x} \right)^{\alpha }\), where \(\alpha \left( {0 < \alpha < 1} \right)\) is a constant, and \(\Delta x \ge 0,\,\Delta y \ge 0\). Then, the function \(S\) is monotonically increasing and will have the unique extreme values.

Proof:

Let \({\text{B}}\left( {x,y} \right)\) and \({\text{B}}_{1} \left( {x_{1} ,y_{1} } \right)\) be two points in two dimensional space, and let l be the direction of the vector \(\mathop {{\text{BB}}_{1} }\limits^{ \to } { = }\left( {\Delta x,\Delta y} \right)\left( {\Delta x{ = }x_{1} - x,\,\Delta y = y_{1} - y} \right)\), and \(\left( {\Delta x \ge 0,\,\Delta y \ge 0} \right)\).

A unit vector in the same direction as l is denoted as \(e_{j}\)

The partial derivatives of the function \(S\) are \(\frac{\partial S}{{\partial \Delta x}}{ = }\alpha \left( {\Delta x} \right)^{{\alpha { - }1}}\) and \(\frac{\partial S}{{\partial \Delta y}}{ = }\alpha \left( {\Delta y} \right)^{{\alpha { - }1}}\).

The derivative of \(S\) in the direction of l is

such that \(S\) is monotonically increasing in the direction of \(\mathop {{\text{BB}}_{1} }\limits^{ \to }\) and has the unique extreme values.
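The directional-derivative step can be sketched as follows. This is a reconstruction under stated assumptions, not necessarily the authors' notation: it takes \(\Delta x > 0\) and \(\Delta y > 0\), and writes the unit vector in the direction of l as \((\cos \varphi ,\sin \varphi )\) with \(\varphi \in [0,\pi /2]\).

\[
\frac{\partial S}{\partial l} = \frac{\partial S}{\partial \Delta x}\cos \varphi + \frac{\partial S}{\partial \Delta y}\sin \varphi = \alpha \left( {\Delta x} \right)^{\alpha - 1} \cos \varphi + \alpha \left( {\Delta y} \right)^{\alpha - 1} \sin \varphi > 0
\]

Both terms are non-negative because \(\alpha > 0\), \(\cos \varphi \ge 0\) and \(\sin \varphi \ge 0\), and they cannot vanish together because \(\cos \varphi\) and \(\sin \varphi\) are never both zero.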

Proposition 2:

There is a function \(S = - \lambda \left( {\Delta y} \right)^{\beta } - \lambda \left( {\Delta x} \right)^{\beta }\), where \(\beta \left( {0 < \beta < 1} \right)\) is a constant, and \(\Delta x \ge 0,\,\Delta y \ge 0\). Then, the function \(S\) is monotonically decreasing and has unique extreme values.

Proof:

The proof is similar to that of Proposition 1.

3.2 Cross-Efficiency Evaluation Model Under Organizational Objectives

Organizational objectives, as one of the management objectives, are the reference points for all DMUs. Based on self-interest, when a DMU faces organizational objectives, the DMU will pay more attention to the prospect value of peers with organizational objectives as the reference point. This enlarges the disadvantages and degrades the advantages of peers to achieve an increase in a DMU’s ranking.

According to prospect theory, the prospect value is divided into a gain domain (advantage) and a loss domain (disadvantage) (see Fig. 1); the value is positive in the gain domain and negative in the loss domain. In other words, minimizing the prospect value of peers means minimizing the gains of peers and maximizing the losses of peers. Therefore, when a DMU, say, DMUk, \(k \in \{ 1, \ldots ,\,n\}\), faces the organizational objective \(\left( {\theta^{{{\text{OO}}}} } \right)\) as a reference point and has the opportunity to evaluate each peer (DMUj), the DMU will choose a set of weights from its optimal self-evaluation weights, so that its own self-evaluation score stays high. This set of weights is obtained by minimizing the prospect value \(\left( {S_{j1}^{k} } \right)\) and maximizing the prospect value \(\left( {S_{j2}^{k} } \right)\) of DMUj.

When the organizational objective \(\left( {\theta^{{{\text{OO}}}} } \right)\) is less than the self-evaluation efficiency \(\left( {\theta_{{_{jj} }}^{*} } \right)\) of DMUj, the cross-evaluation model with \(\theta^{{{\text{OO}}}}\) as reference point, taking the \(S_{j1}^{k}\) as the objective function, is shown as Model (6):

where \(\Delta x_{j1}\) and \(\Delta y_{j1}\) are, respectively, the savings in inputs and profits in outputs of DMUj, with the \(\theta^{{{\text{OO}}}}\) as the reference point, when DMUk evaluates its peer, DMUj.

When the organizational objective \(\left( {\theta^{{{\text{OO}}}} } \right)\) is greater than the self-evaluation efficiency \(\left( {\theta_{jj}^{*} } \right)\) of DMUj (peer of DMUk), the cross-evaluation model, taking the prospect value \(\left( {S_{j2}^{k} } \right)\) of DMUj as the objective function, is shown as Model (7):

where \(- \Delta x_{j2}\) and \(- \Delta y_{j2}\) are, respectively, redundancies in the inputs and deficiencies in the outputs of DMUj, with the \(\theta^{{{\text{OO}}}}\) as the reference point.

3.3 Cross-Efficiency Evaluation Model Under Personal Objectives

The DMU pays more attention to its own interests, rather than the interests of peers, when faced with personal objectives. This occurs to such an extent that the DMU often takes personal objectives as a reference point to maximize its own prospect values by maximizing gains and minimizing losses. Therefore, this study’s idea of cross-efficiency modeling under personal objectives is that each DMU will try to select a set of input and output weights to maximize its own prospect value, and this set of weights is also used to evaluate the DMU’s peers.

When the personal objective \(\theta^{{{\text{PO}}}}\) is less than the self-evaluation efficiency \(\left( {\theta_{kk}^{*} } \right)\) of DMUk, there are savings in inputs and profits in outputs, which are all gains for DMUk. The prospect value \(\left( {S_{k1}^{k} } \right)\) can be defined by the gains of DMUk. Each DMUk, \(k \in \{ 1, \ldots ,\,n\}\), will try to choose, from its multiple optimal self-evaluation weights, a group of weights that maximizes its own prospect value when facing its personal objective \(\left( {\theta^{{{\text{PO}}}} } \right)\). The cross-evaluation model with \(\theta^{{{\text{PO}}}}\) as the reference point takes \(S_{k1}^{k}\) as the objective function; this is shown as Model (8):

where \(\Delta x_{k1}\) and \(\Delta y_{k1}\) are, respectively, savings in the inputs and profits in the outputs of DMUk, with the \(\theta^{{{\text{PO}}}}\) as the reference point.

Conversely, when the personal objective \(\theta^{{{\text{PO}}}}\) is greater than the self-evaluation efficiency \(\left( {\theta_{kk}^{*} } \right)\) of DMUk, there are redundancies in inputs and deficiencies in outputs, which are all losses for DMUk. The prospect value \(\left( {S_{k2}^{k} } \right)\) is defined by the losses of DMUk. The cross-efficiency evaluation model that minimizes this loss is shown as Model (9):

where \(- \Delta x_{k2}\) and \(- \Delta y_{k2}\) are, respectively, redundancies in the inputs and deficiencies in the outputs of DMUk, with the \(\theta^{{{\text{PO}}}}\) as the reference point.

3.4 Calculation of Cross Efficiency

The parameters λ, α and β (see Sect. 2.1) reflect the bounded rationality of DMUs, and their values are determined through empirical research on human psychology (Rieger et al., 2017; Tversky and Kahneman, 1992). This study focuses on the cross evaluation method and relies on existing research results for the psychological parameters. The parameters directly use the results of Tversky and Kahneman; that is, α = β = 0.88, and λ = 2.25. In this study, management objectives are divided into organizational objectives, personal objectives and composite objectives. The formation and calculation process of cross efficiency based on these three types of management objectives is shown below.

3.4.1 The Steps of Cross Evaluation Based on Organizational Objectives

Step 1: The organizational objective \(\left( {\theta^{{{\text{OO}}}} } \right)\) is formulated according to the self-evaluation efficiency of the evaluated DMUs and macro control policies. See Fig. 2 for the organizational objective setting process linked by the blue arrow.

Step 2: When DMUk evaluates DMUj (j = 1, 2,…, n; j ≠ k), DMUk compares the self-evaluation efficiency \(\left( {\theta_{jj}^{*} } \right)\) of DMUj with the value of the organizational objective \(\left( {\theta^{{{\text{OO}}}} } \right)\). If \(\theta^{{{\text{OO}}}}\) is larger, the prospect value \(S_{j1}^{k}\) is a loss for DMUj. Conversely, if \(\theta^{{{\text{OO}}}}\) is not larger, the prospect value \(S_{j2}^{k}\) is a gain. For its own benefit, DMUk then selects, from its self-evaluation weights, a group of weights that maximizes the loss \(S_{j1}^{k}\) or minimizes the gain \(S_{j2}^{k}\), and uses that group of weights to evaluate DMUj. See Fig. 2 for the performance evaluation process linked by the red arrow.

Step 3: Here, DMUk not only evaluates its peers DMUj (j = 1, 2,…, n; j ≠ k), but also receives cross evaluations from these peers. The process of cross evaluation is as described in Step 2. The DMU’s self-evaluation efficiency \(\theta_{kk}^{*}\) is solved by Model (2), and the cross evaluation scores \(\left( {\theta_{jk} } \right)\) from peers are solved by Models (6)–(7). There are n − 1 peers for DMUk. Therefore, the cross efficiency \(\theta_{{{\text{OO}} - k}}^{{\text{cross-efficiency}}}\) of DMUk is the combination of \(\theta_{kk}^{*}\) and \(\theta_{jk}\) (j = 1, 2,…, n; j ≠ k).
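The comparison in Step 2 and the aggregation in Step 3 can be sketched as follows. The function names are hypothetical, the score matrix is assumed to be already available from the optimization models, and the "combination" is assumed here to be the arithmetic mean, as stated for composite objectives in Sect. 3.4.3.

```python
def evaluation_domain(theta_objective, theta_self):
    """Classify a DMU against the objective used as the reference point.

    Returns "loss" when the objective exceeds the self-evaluated efficiency,
    and "gain" otherwise.
    """
    return "loss" if theta_objective > theta_self else "gain"


def average_cross_efficiency(theta, k):
    """Mean of the scores DMU k receives from all evaluators, itself included.

    theta[k][e] is the score of DMU k under evaluator e's weights (assumed
    layout); theta[k][k] is the self-evaluation.
    """
    return sum(theta[k]) / len(theta[k])


# Hypothetical 2 x 2 score matrix.
theta = [[1.0, 0.8], [0.8, 1.0]]
cross_eff_0 = average_cross_efficiency(theta, 0)
```

The domain returned by `evaluation_domain` determines which of the two models is solved for a given peer.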

3.4.2 The Steps of Cross Evaluation Based on Personal Objectives

Step 1: The personal objective \(\left( {\theta^{{{\text{PO}}}} } \right)\) of DMUk (k = 1, 2,…, n) is generally related to the DMU’s current level and expectations for the future. See Fig. 3 for the personal objective setting process linked by the blue arrow.

Step 2: DMUk compares its own self-evaluation efficiency \(\left( {\theta_{kk}^{*} } \right)\) with the personal objective \(\left( {\theta^{{{\text{PO}}}} } \right)\). If \(\theta^{{{\text{PO}}}}\) is larger, the prospect value \(S_{k1}^{k}\) is a loss for DMUk. Conversely, if \(\theta^{{{\text{PO}}}}\) is not larger, the prospect value \(S_{k2}^{k}\) is a gain. DMUk then selects, from its self-evaluation weights, a group of weights that minimizes the loss \(S_{k1}^{k}\) or maximizes the gain \(S_{k2}^{k}\), and this group of weights is used to evaluate DMUj. See Fig. 3 for the performance evaluation process linked by the red arrow.

Step 3: Here, DMUk’s self-evaluation efficiency \(\theta_{kk}^{*}\) is solved by Model (2), and the cross evaluation score \(\theta_{kj}\) from peers is solved by Models (8)–(9). Similarly, the cross efficiency \(\theta_{{{\text{PO}} - k}}^{{\text{cross-efficiency}}}\) of DMUk is the combination of \(\theta_{kk}^{*}\) and \(\theta_{jk}\) (j = 1, 2,…, n; j ≠ k).

3.4.3 The Steps of Cross Evaluation Based on Composite Objectives

DMUk may receive cross evaluations from peers based on either organizational objectives or personal objectives. The type of management objective directly affects the ranking of DMUs. Therefore, the importance of the organizational objectives and of the personal objectives should be determined first.

Step 1: Determine the importance (μ) of the organizational objective according to the actual application, as well as the importance (1 − μ) of the personal objective. The composite objective is equal to \(\mu \,\theta^{{{\text{OO}}}} + (1 - \mu )\,\theta_{k}^{{{\text{PO}}}}\).

Step 2: Calculate the cross efficiency based on composite objectives. The cross efficiency is the average value of the self-evaluation and the peer cross evaluations, which are based on the composite objective.
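The composite objective is a convex combination of the two objectives. A minimal sketch, with a hypothetical function name and hypothetical values:

```python
def composite_objective(theta_oo, theta_po_k, mu):
    """Weighted combination of the organizational and personal objectives.

    mu is the importance of the organizational objective and must lie in [0, 1].
    """
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    return mu * theta_oo + (1.0 - mu) * theta_po_k


# Hypothetical objectives for one DMU, weighted equally.
theta_co = composite_objective(theta_oo=0.8, theta_po_k=0.6, mu=0.5)
```

Setting `mu` to 1 recovers the organizational objective and setting it to 0 recovers the personal objective.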

4 Illustration Example

In this section, an example in the energy industry is used to illustrate the effectiveness and rationality of the cross efficiency evaluation method proposed in this study. The data used in this example comes from the 2018 Annual Performance Statistics Report of energy enterprises, provided by the Fujian Science and Technology Bureau. The source data involves 23 enterprises. To avoid disclosing enterprise information, the data has been anonymized (with enterprise names hidden) and represented as DMUs. Description of source data is shown in Table 1.

The source data present the following issues: (1) there are invalid data points, such as a “product sales revenue” value of 0, indicating that the enterprise has no product sales, and consequently, no new product sales or profits; (2) there is strong endogeneity between “total profit” and “net profit”, as well as between “product sales revenue” and “new product sales revenue”; and (3) there are many negative values in “total profit” and “net profit” whereas the method proposed in this paper (DEA method) is only applicable to positive data.

Before processing the data using the model proposed in this paper, the following steps were taken: (1) preprocessing the source data by deleting invalid data points, resulting in the removal of 2 invalid entries and leaving 21 valid entries; (2) because of the strong endogeneity between “net profit” and “total profit”, and because their small difference makes them interchangeable, only one was selected, with “net profit” chosen as one of the output indicators; (3) using the ratio of “new product sales revenue” to “product sales revenue” as a new output indicator, “new product sales revenue rate”, to eliminate the endogeneity between these two indicators; (4) converting the units of “net profit” and “R&D expenses” from “thousand” to “million”; and (5) standardizing the “net profit” data to address the issue of negative values. The preprocessed indicators and data are shown in Table 2.
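The preprocessing steps above can be sketched as follows. This is an illustration under loud assumptions: the field names and records are hypothetical, and because the text does not state which standardization was used, min-max scaling onto [0.1, 1] is assumed purely to obtain positive values.

```python
def preprocess(records):
    """Apply the preprocessing steps to a list of enterprise records."""
    # Step 1: drop invalid entries with zero product sales revenue.
    valid = [r for r in records if r["sales"] > 0]
    # Step 4: convert net profit from thousands to millions.
    profits = [r["net_profit"] / 1000.0 for r in valid]
    lowest, highest = min(profits), max(profits)
    span = (highest - lowest) or 1.0  # avoid division by zero
    cleaned = []
    for record, profit in zip(valid, profits):
        cleaned.append({
            "name": record["name"],
            "rd_expenses": record["rd_expenses"] / 1000.0,
            # Step 3: new product sales revenue rate.
            "new_product_rate": record["new_sales"] / record["sales"],
            # Step 5: ASSUMED min-max scaling onto [0.1, 1].
            "net_profit_std": 0.1 + 0.9 * (profit - lowest) / span,
        })
    return cleaned


# Hypothetical records (values in thousands), not taken from Table 1.
records = [
    {"name": "DMU_A", "sales": 2000.0, "new_sales": 500.0,
     "net_profit": -300.0, "rd_expenses": 1500.0},
    {"name": "DMU_B", "sales": 0.0, "new_sales": 0.0,
     "net_profit": 0.0, "rd_expenses": 200.0},
    {"name": "DMU_C", "sales": 4000.0, "new_sales": 3000.0,
     "net_profit": 700.0, "rd_expenses": 2500.0},
]
cleaned = preprocess(records)
```

Any monotone transformation that yields strictly positive values could replace the assumed scaling, although the choice may affect the resulting efficiency scores.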

This example is divided into four parts. The first three take organizational objectives, personal objectives and composite objectives as reference points, respectively, to illustrate the effectiveness of the cross efficiency evaluation method. In the fourth, the method proposed in this study is compared with the traditional method. All psychological parameters related to cross efficiency are α = β = 0.71, λ = 2.25.

4.1 Cross Evaluation Based on Organizational Objectives

To reflect the impact of organizational objectives on cross efficiency, seven different organizational objectives are selected for the example, namely \(\theta^{{OO{ - }1}}\) = 0.4, \(\theta^{{OO{ - }2}}\) = 0.5, \(\theta^{{OO{ - 3}}}\) = 0.6, \(\theta^{{OO{ - }4}}\) = 0.7, \(\theta^{{OO{ - 5}}}\) = 0.8, \(\theta^{{OO{ - 6}}}\) = 0.9 and \(\theta^{{OO{ - 7}}}\) = 1. Solve the self-evaluation efficiency of each DMU according to Model (2) and obtain the DMU’s peer evaluation efficiency according to Models (6) or (7). Then, calculate the cross efficiency of each DMU according to the self-evaluation score and peer-evaluation scores. The cross efficiencies of these 21 enterprises under the seven organizational objectives are shown in Table 3.

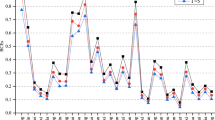

To show intuitively how the cross efficiency of the 21 DMUs changes under the seven organizational objectives, the 21 DMUs are ranked according to the cross efficiency values in Table 3. The ranking of the DMUs is also illustrated graphically in Fig. 4. Combined with Table 2, it is not difficult to find that the relationship between the self-evaluation scores of DMUs and the values of organizational objectives affects the ranking of DMUs. On the one hand, except for DMU19, the ranking of DMUs with self-evaluation scores of less than 1 (such as DMU2, DMU3, DMU7, DMU11, DMU12, DMU16 and DMU20) is greatly affected by the value of organizational objectives. On the other hand, the cross efficiency and the ranking of a DMU would be lower than under other organizational objectives when the DMU’s self-evaluation efficiency is close to the organizational objective. For example, DMU12, whose self-evaluation efficiency is 0.6976, ranks the lowest and the sixth under θOO−6 (θOO−6 = 0.9). Similar situations can also be seen in DMU17, DMU3 and other DMUs.
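Ranking under each objective is a sort on the cross efficiency values. The sketch below uses hypothetical scores for three DMUs under two objectives, not values from Table 3, and the function name is an assumption.

```python
def rank_dmus(cross_efficiency):
    """Return DMU labels ordered from highest to lowest cross efficiency."""
    return sorted(cross_efficiency, key=cross_efficiency.get, reverse=True)


# Hypothetical cross efficiencies under two organizational objectives.
scores_by_objective = {
    "OO=0.6": {"DMU_A": 0.82, "DMU_B": 0.91, "DMU_C": 0.75},
    "OO=0.9": {"DMU_A": 0.88, "DMU_B": 0.70, "DMU_C": 0.74},
}
rankings = {
    objective: rank_dmus(scores)
    for objective, scores in scores_by_objective.items()
}
```

Comparing the lists in `rankings` across objectives shows which DMUs change position as the reference point moves.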

The above results show that a sampled enterprise receives benevolent evaluations from its peers when its resource allocation level is higher than the organizational objective, and faces aggressive evaluations from its peers when its level is lower. The resource allocation levels of many enterprises in the example are always higher than the organizational objectives. Therefore, their cross efficiencies change little, and their rankings are relatively stable. By contrast, enterprises whose resource allocation still needs to be optimized are sometimes above and sometimes below the organizational objective, so they sometimes receive benevolent evaluations from their peers and sometimes face aggressive ones. This leads to greater changes in their cross efficiency under different organizational objectives, as well as large fluctuations in their rankings.
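The stability of a ranking across objectives can be quantified with a short sketch such as the one below. It assumes that rank 1 goes to the largest cross efficiency and measures stability as the difference between a DMU's worst and best rank. The helper names `rank_descending` and `rank_range` and the sample values are hypothetical.

```python
def rank_descending(values):
    """Return 1-based ranks, rank 1 for the largest value (ties keep input order)."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def rank_range(table):
    """table[t][j] is the cross efficiency of DMU j under objective t."""
    ranks_per_objective = [rank_descending(row) for row in table]
    n = len(table[0])
    return [
        max(r[j] for r in ranks_per_objective) - min(r[j] for r in ranks_per_objective)
        for j in range(n)
    ]


# Made-up cross efficiencies of three DMUs under two objectives.
table = [
    [0.90, 0.60, 0.70],
    [0.88, 0.75, 0.65],
]
print(rank_range(table))
```

A rank range of zero corresponds to a DMU whose position does not move as the objective changes; larger values correspond to the fluctuating rankings described above.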

4.2 Cross Evaluation Results Based on Personal Objectives

To reflect the role of personal objectives in cross evaluation, this part considers various personal objectives for each enterprise. For example, seven groups of personal objectives are selected for each enterprise, as shown in Table 4. The cross efficiency of these 21 DMUs is calculated according to “the steps of cross evaluation based on personal objectives”, which is shown in Table 5.

As can be seen from Table 5, the cross efficiencies of each DMU differ under different personal objectives. This finding shows that personal objectives, as reference points, have an impact on the cross efficiency of the 21 DMUs. To present the trend of the cross efficiency of the 21 sampled enterprises under the seven personal objectives (ΘPO−t, t = 1, 2,…, 7) more intuitively, the cross efficiency ranking of the 21 DMUs is shown in Fig. 5. As can be seen from Fig. 5, only a few of the 21 DMUs, such as DMU1, DMU5, DMU7, DMU10 and DMU20, change rank under personal objectives. The cross efficiency of each DMU varies with the personal objectives, but this variation has little impact on the ranking trend of the DMUs. This finding indicates that the cross efficiencies of the DMUs change in a consistent direction under personal objectives. A comparison of Figs. 4 and 5 shows that both personal objectives and organizational objectives affect enterprise ranking. However, organizational objectives have a greater impact on ranking than personal objectives, which is consistent with macro-control having a stronger effect than micro adjustment in management applications.

4.3 Evaluation Results Under Composite Objectives

Enterprises do not always face only organizational objectives or only personal objectives; they may face both at the same time, that is, composite objectives. An important problem for composite objectives is determining the importance of organizational objectives relative to personal objectives, which depends on the practical application. For the sake of brevity, organizational objectives are assumed here to be as important as personal objectives. The composite objective is calculated by the following formula:

The cross efficiency of the 21 DMUs based on seven composite objectives is shown in Table 6.
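As an illustration of equal importance, a composite objective can be sketched as an equally weighted combination of an organizational objective and a personal objective. This convex-combination form, the weight `w`, the function name `composite_objective` and the sample values are assumptions made for illustration only and are not taken from the formula used in this paper.

```python
def composite_objective(organizational, personal, w=0.5):
    """Convex combination of an organizational and a personal objective (assumed form)."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    return w * organizational + (1.0 - w) * personal


# Hypothetical values: organizational objective 0.8, personal objective 0.6.
value = composite_objective(0.8, 0.6)
print(value)
```

Under this assumption, changing `w` would shift the composite reference point toward the organizational objective (w close to 1) or toward the personal objective (w close to 0).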

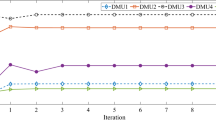

As can be seen from Table 6, the cross efficiency of the 21 DMUs is clearly affected by the composite objectives. To show the trend of cross efficiency under the composite objectives visually, the ranking of the 21 DMUs in Table 6 is plotted in Fig. 6. According to Fig. 6 and Table 6, the rankings of DMUs with CCR efficiency equal to 1 (such as DMU1, DMU9, DMU10, DMU14, DMU15, etc.) are less affected by composite objectives. In addition, their change of ranking is within three places, which indicates that DMUs with higher performance levels maintain their ranking advantage under an objectives incentive. As can be seen from Fig. 6, the influence of the seven composite objectives on the ranking of the 21 DMUs differs significantly. The ranking of DMUs changes greatly under ΘCO−4, which clearly indicates that the value of the composite objective also affects the ranking of DMUs. In addition, the ranking under composite objectives is similar to that under organizational objectives, which again shows that organizational objectives have a greater impact on enterprise ranking.

4.4 Method Comparison

This part shows the advantages and significance of the proposed method through a comparison with classical methods, including the aggressive, benevolent and neutral cross efficiency evaluation methods.

The cross efficiencies of the 21 DMUs under the organizational objective, personal objective and composite objective are compared in Fig. 7. The performance levels of each DMU under the organizational objective and the composite objective are similar. The performance levels under the personal objective are relatively dispersed: the cross efficiency of DMUs with lower CCR efficiency is lower, whereas the cross efficiency of DMUs with higher CCR efficiency is higher.

The methods proposed in this paper are also compared with the classical methods. As can be seen from Fig. 7, the cross efficiency of the DMUs based on management objectives is more consistent with the aggressive cross efficiency evaluation, and is lower than the benevolent cross efficiency and more centralized than the neutral cross efficiency.
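One simple way to check whether one set of cross efficiencies is more centralized than another is to compare their standard deviations. The sketch below does this with made-up scores for two hypothetical methods; the numbers are not taken from Fig. 7, and the choice of the population standard deviation as the dispersion measure is an assumption.

```python
from statistics import pstdev


def spread(scores):
    """Population standard deviation of a list of cross efficiencies."""
    return pstdev(scores)


# Made-up scores for four DMUs under two hypothetical methods.
objective_based = [0.62, 0.65, 0.60, 0.63]
neutral = [0.45, 0.80, 0.55, 0.90]
print(spread(objective_based) < spread(neutral))
```

A smaller spread means the scores cluster more tightly, which is the sense in which a method is described as more centralized.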

According to management theory, organizational objectives are generally higher than the current performance levels of all DMUs. Faced with a higher organizational objective, each DMU receives aggressive evaluations from its peers, which results in lower cross efficiency under the organizational objective. The effect is therefore closer to that of the aggressive cross evaluation method. In the neutral cross efficiency evaluation method, a DMU considers only its own interests and is indifferent to peer performance, so the evaluation results are relatively scattered. Personal objectives are generally higher than a DMU’s current level, and may be higher or lower than the levels of its peers, so peers may in turn receive a higher or lower evaluation from the DMU. Therefore, the cross efficiency based on personal objectives lies between the benevolent and aggressive cross efficiencies. This study shows that the cross evaluation method based on organizational objectives is more suitable for performance evaluation under market macro-control, where organizational objectives can be adjusted to meet different performance evaluation needs. Compared with the aggressive cross evaluation method, the method based on organizational objectives breaks the practice of blindly suppressing peers, while still allowing the strength and extent of regulation to be controlled. The cross evaluation method based on personal objectives can also increase the differentiation between DMUs, and is applicable to related evaluations, such as qualification or clearance evaluations.

5 Conclusions

Cross-efficiency evaluation is an important performance evaluation method for ranking DMUs. Most existing studies have taken virtual or specific DMUs as the evaluation criteria. However, as the management objective, the evaluation criteria are representative and one-sided. In this paper, performance level is selected as the management objective to reflect the bounded rational psychology of DMUs when facing that objective. Organizational objectives, personal objectives and composite objectives serve as the reference points for performance evaluation. DMUs whose performance levels are higher than the management objectives receive expanded positive scores from peers, while DMUs whose performance levels are lower than the management objectives receive expanded negative scores. The corresponding management methods proposed in this paper should be chosen according to the application background, which increases the flexibility of cross efficiency evaluation. To account for the risk avoidance psychology of DMUs, cross efficiency evaluation models based on prospect theory are constructed in this study to improve the applicability of the method. The impact and significance of this method at the policy level are as follows:

The cross-efficiency evaluation method, which accounts for bounded rationality, incorporates actual performance levels as management objectives, better aligning with real-world conditions. This approach enables policymakers to more accurately assess the actual performance of various decision-making units, thereby avoiding biases from overly idealized frontier settings and enhancing the scientific and rational foundation of policy formulation.

By accurately assessing the performance levels of DMUs, policymakers can allocate resources more effectively. For instance, in distributing public resources, the government can prioritize allocation based on each unit’s performance, optimizing efficiency and enhancing social welfare.

By using cross-efficiency evaluation with performance levels as management objectives, policymakers can assess how decision-making units perform under various performance targets. This helps identify which units excel and which fall short, enabling policymakers to develop targeted measures to improve overall management performance.

Data Availability

No datasets were generated or analysed during the current study.

References

Charnes, A., Cooper, W.W., Rhodes, E.: Measuring the efficiency of decision making units. Eur. J. Oper. Res. 2(6), 429–444 (1978)

Chen, L., Wang, Y.M.: DEA target setting approach within the cross efficiency framework. Omega Int. J. Manag. Sci. 96, 102072 (2020)

Chen, L., Wang, Y.M., Huang, Y.: Cross-efficiency aggregation method based on prospect consensus process. Ann. Oper. Res. 288, 115–135 (2020)

Chen, L., Huang, Y., Li, M.J., Wang, Y.M.: Meta-frontier analysis using cross-efficiency method for performance evaluation. Eur. J. Oper. Res. 280(1), 219–229 (2020)

Cook, W.D., Seiford, L.M.: Data envelopment analysis (DEA)–thirty years on. Eur. J. Oper. Res. 192(1), 1–17 (2009)

Cummins, J.D., Xie, X.: Mergers and acquisitions in the US property-liability insurance industry: productivity and efficiency effects. J. Bank. Finance 32(1), 30–55 (2008)

Doyle, J., Green, R.: Efficiency and cross-efficiency in DEA: derivations, meanings and uses. J. Oper. Res. Soc. 45(5), 567–578 (1994)

Ganji, S.R.S., Rassafi, A.A., Xu, D.L.: A double frontier DEA cross efficiency method aggregated by evidential reasoning approach for measuring road safety performance. Measurement 136, 668–688 (2019)

Hakim, S., Seifi, A., Ghaemi, A.: A bi-level formulation for DEA-based centralized resource allocation under efficiency constraints. Comput. Ind. Eng. 93, 28–35 (2016)

Huang, Y., He, X., Dai, Y., et al.: Hybrid game cross efficiency evaluation models based on interval data: a case of forest carbon sequestration. Expert Syst. Appl. 204(10), 117521 (2022)

Kwon, H.B., Lee, J.: Two-stage production modeling of large US banks: a DEA-neural network approach. Expert Syst. Appl. 42(19), 6758–6766 (2015)

Lim, S., Zhu, J.: Primal-dual correspondence and frontier projections in two-stage network DEA models. Omega 83, 236–248 (2019)

Liu, H.H., Song, Y.Y., Yang, G.L.: Cross-efficiency evaluation in data envelopment analysis based on prospect theory. Eur. J. Oper. Res. 273, 364–375 (2019)

Odiorne, G.S.: Management by objectives: a system of managerial leadership. Pitman Press, Marshfield, Massachusetts (1965)

Sexton, T.R., Silkman, R.H., Hogan, A.J.: Data envelopment analysis: critique and extensions. New Dir. Progr. Eval. 1986(32), 73–105 (1986)

Shi, H.L., Chen, S.Q., Chen, L., Wang, Y.M.: A neutral cross-efficiency evaluation method based on interval reference points in consideration of bounded rational behavior. Eur. J. Oper. Res. 290(3), 1098–1110 (2021)

Shi, H.L., Wang, Y.M., Chen, L.: Neutral cross-efficiency evaluation regarding an ideal frontier and anti-ideal frontier as evaluation criteria. Comput. Ind. Eng. 132, 385–394 (2019)

Wang, Y.M., Chin, K.S.: A neutral DEA model for cross-efficiency evaluation and its extension. Expert Syst. Appl. 37(5), 3666–3675 (2010)

Wang, Y.M., Chin, K.S., Luo, Y.: Cross-efficiency evaluation based on ideal and anti-ideal decision making units. Expert Syst. Appl. 38(8), 10312–10319 (2011)

Zha, Y., Zhao, L., Bian, Y.: Measuring regional efficiency of energy and carbon dioxide emissions in China: a chance constrained DEA approach. Comput. Oper. Res. 66, 351–361 (2016)

Zhou, Z., Xiao, H., Jin, Q., Liu, W.: DEA frontier improvement and portfolio rebalancing: an application of China mutual funds on considering sustainability information disclosure. Eur. J. Oper. Res. 269(1), 111–131 (2017)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 71801048), the Social Science Foundation of Fujian Province (No. FJ2022B059; FJ2021B145) and the Natural Science Foundation of Fujian Province (No. 2021J011229; 2023J011095).

Ethics declarations

Conflict of Interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shi, HL., Wang, YM. & Zhang, XM. Cross-Efficiency Evaluation Method with Performance Level as a Management Objective in Consideration of Bounded Rationality. Int J Comput Intell Syst 17, 244 (2024). https://doi.org/10.1007/s44196-024-00650-1

DOI: https://doi.org/10.1007/s44196-024-00650-1