Abstract

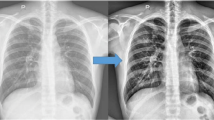

Chest X-ray (CXR) images can convey a great deal about a patient’s condition; hence, the standard chest radiograph should be reconsidered. Interpreting radiographs is challenging and requires skilled readers to diagnose lung disease without false positives or false negatives. This paper presents a detailed investigation of three lung diseases, COVID-19, Pneumonia, and Tuberculosis, with the goal of assisting investigators in constructing models that identify lung diseases automatically. The paper has three parts. The first explores how research has progressed from classic feature engineering approaches to deep learning methods; the second describes how these methods are used to identify the listed diseases from radiology images such as CXRs; and the third outlines the future research pathway for detecting these diseases.

Introduction

Automated medical image analysis began when the first medical images were digitized. From the 1970s to the 1990s, it combined low-level pixel processing (edge and line detector filters, region extraction) with computational analysis (fitting lines, circles, and ellipses) to build compound rule-based systems that performed complex operations [90]. Any condition that impairs lung function is referred to as lung disease. Lung diseases fall into three categories [73]: airway, lung tissue, and lung circulation disorders. Asthma, pneumothorax, lung cancer, lung infection, and pulmonary edema are prevalent lung illnesses. In all of these, patients have breathing problems such as shortness of breath, an inability to breathe deeply, and difficulty exhaling. In late 2002, a pneumonia-like disease was reported in Guangdong Province, China, and was officially named “severe acute respiratory syndrome” (SARS) in 2003 [146]. In 2019, another severe respiratory disease, COVID-19, was identified in Wuhan, Hubei Province, China [161].

This review examines techniques for recognizing chest ailments such as COVID-19, Pneumonia, and Tuberculosis (TB) and how the underlying technology has changed. The paper has three parts. The first explores how research has progressed from classic feature engineering approaches to deep learning methods. The second describes how these methods are used to identify the listed diseases from radiology images such as chest X-rays (CXRs), and the third outlines the future research pathway for detecting these diseases.

Chest X-ray (CXR) images can convey a great deal about a patient’s condition; hence, the standard chest radiograph should be reconsidered [151]. Early detection of lung disorders is crucial for effective treatment and may reduce stress on the healthcare system. CXR images and computed tomography (CT) scans are the standard imaging tests for diagnosing lung diseases. Although CT scans are the gold standard, CXRs remain valuable because they are less expensive, faster, and widely available. Detailed literature reviews of techniques for identifying lung diseases are presented in [90, 132]. Although [20] discussed various deep learning methods published between 2015 and February 2023, we emphasize how feature extraction is migrating from traditional (geometry-based) approaches to deep learning methods.

Ginneken [46] described how machine and deep learning surpassed prior rule-based approaches to become the most common ways to identify lung illness. Deep learning builds on the Convolutional Neural Network (CNN), whose origins lie in the “Cognitron” presented by Fukushima [41, 42], later improved as the “Neocognitron,” a more robust way of finding visual patterns based on geometrical similarities. Although Artificial Neural Networks (ANNs) were used to diagnose interstitial lung disorders in 1999 [13], they were not used to predict Pneumonia until 2003 [58]. CXR images were used to predict Pneumonia in infants in 2004 [49]; later, researchers argued that a mix of pattern recognition and clinical experience is the best way to diagnose lung conditions [45]. In [105], image wavelet transform coefficients were predominantly used to generate feature vectors, and a 15-nearest-neighbor classifier with distance-dependent weighting identified Pneumonia from CXR images. The authors of [35] employed ANNs to diagnose Pneumonia and TB from patient epicrisis reports, whereas [103] identified Pneumonia by encoding the region of interest from CXR images. Fig. 1 shows the number of publications over the years and how COVID-19 has pushed research on other lung diseases.
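The wavelet-plus-nearest-neighbor pipeline of [105] can be illustrated with a minimal sketch. The source does not specify the wavelet family, decomposition depth, or feature summary used, so this sketch assumes a one-level 2-D Haar transform, summarizes each sub-band by its mean absolute coefficient, and uses inverse-distance voting as one common form of distance-dependent weighting with k = 15. All function names are hypothetical.

```python
import math
from collections import defaultdict


def haar_level1(img):
    """One level of a 2-D Haar transform for an image with even height and width.

    Returns four sub-bands: the approximation and three detail bands.
    """
    bands = ([], [], [], [])
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2.0)  # approximation
            rows[1].append((a + b - d - e) / 2.0)  # top-bottom difference
            rows[2].append((a - b + d - e) / 2.0)  # left-right difference
            rows[3].append((a - b - d + e) / 2.0)  # diagonal difference
        for band, row in zip(bands, rows):
            band.append(row)
    return bands


def wavelet_features(img):
    """Mean absolute coefficient of each sub-band (a 4-value feature vector)."""
    feats = []
    for band in haar_level1(img):
        vals = [abs(v) for row in band for v in row]
        feats.append(sum(vals) / len(vals))
    return feats


def knn_predict(train_x, train_y, query, k=15, eps=1e-9):
    """Distance-weighted k-NN: each of the k nearest neighbours votes with weight 1/distance."""
    nearest = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))[:k]
    votes = defaultdict(float)
    for dist, label in nearest:
        votes[label] += 1.0 / (dist + eps)
    return max(votes, key=votes.get)
```

Inverse-distance weights let a few very close neighbours outvote many distant ones, which is the point of distance-dependent weighting when k is as large as 15.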

In [75], texture and shape features were extracted from lung fields segmented from CXR images, and a Support Vector Machine (SVM) was trained on top of them to classify TB and Pneumonia. In contrast, [33] introduced a fuzzy inference system to detect TB from CXR images. The machine learning framework of [100] identifies TB by combining Computer-Aided Detection (CAD) scores from CXR images with the clinical features of each subject. Finally, [80] employed a neural network to identify TB and Pneumonia from geometrical features derived from segmented CXR images.

A customized CNN was developed to detect interstitial lung diseases from lung image patches [89], trained with the Adam optimizer and a learning rate of 0.001. Feature extraction through transfer learning has since been adopted to classify lung diseases [60, 87, 91, 94, 102, 162]. Common transfer learning feature extractors include AlexNet [84], GoogLeNet, Inception [141, 142], ResNet [56], VGG16 [135], and DenseNet [63]. CheXNet [117], a 121-layer DenseNet used to identify Pneumonia, outperformed average radiologist performance and became the state-of-the-art method and a benchmark model. However, the tailored CNNs of [114, 140] should not be overlooked. The authors of [163] extracted region-of-interest features through an attention-based mechanism to identify TB from CXR images.
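Since Adam with a learning rate of 0.001 recurs throughout this literature, a minimal sketch of its update rule may help. It minimizes a one-dimensional function given its gradient, using the default moment decay rates (0.9 and 0.999) and epsilon usually paired with Adam; the function name and the toy objective are hypothetical, and real training applies the same update element-wise to every network weight.

```python
import math


def adam_minimize(grad, x0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function with Adam, given a function returning its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias corrections for zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_star = adam_minimize(lambda x: 2 * (x - 3), x0=0.0, lr=0.1, steps=2000)
```

Because the step is normalized by the root of the second-moment estimate, the first update moves the parameter by roughly `lr` regardless of the gradient's scale.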

Several review papers [3, 9, 83, 129, 137] provide detailed accounts of various deep learning methods from their own perspectives and compare them with human readers, adding further value to state-of-the-art transfer learning techniques.

Despite these studies, COVID-19 still holds many unknowns that need further attention. Studies that detect COVID-19 include COVID-Net [157]; DarkCovidNet [106], inspired by DarkNet-19 [145]; COVIDX-Net [59]; COVID-ResNet [39], trained with input images at different scales; Deep-COVID [101], which employed SqueezeNet [67] and DenseNet; CoVNet-19 [76], an ensemble of VGG19 and DenseNet121; and CoroDet [65], a custom CNN trained from scratch. Similarly, other works used a modified version of Extreme Inception (Xception) [24, 78], a modified EfficientNet [95], a VGG-19 with five additional max pooling layers [107], a patch-based CNN with a limited set of trainable parameters [104], and fuzzy tree-based feature extraction with ensembles [131]. The authors of [149] proposed Distance-Based Naive Bayes (DBNB), which uses a computational method called Advanced Particle Swarm Optimization (APSO) to extract the most compelling and relevant features from clinical findings and then applies Naive Bayes. In [32], the pre-trained models InceptionV3, DenseNet121, and VGG19 were used to extract features, and their individual predictions were combined with the Choquet fuzzy integral to obtain the final labels. Apostolopoulos [12] experimented with VGG19, MobileNetV2 [119], Inception, Xceptionv2 [24], and InceptionResNet-v2 under different parameter settings.

In [147], CXR images were pre-processed with the fuzzy color technique, stacked with the original images to reduce noise, fed to SqueezeNet and MobileNet, and classified with an SVM. In [43], a state-of-the-art fully connected CNN with an adversarial critic model, Attention U-Net, was used to segment the lungs. Meanwhile, Rajaraman et al. [115] focused on reducing network complexity by iteratively pruning an ensemble of deep learning models to classify COVID-19. Hassantabar et al. [55] used fractal methods to extract features and then defined a deep convolutional neural network to classify COVID-19.
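The source does not say which fractal method [55] used. As an illustration only, the sketch below shows the widely used box-counting estimate of fractal dimension on a binary mask: count the boxes of side s that contain foreground pixels, N(s), and take the slope of log N(s) against log(1/s). The function names and the box sizes are assumptions.

```python
import math


def box_count(mask, size):
    """Number of size x size boxes that contain at least one foreground pixel."""
    h, w = len(mask), len(mask[0])
    count = 0
    for r in range(0, h, size):
        for c in range(0, w, size):
            if any(mask[i][j] for i in range(r, min(r + size, h))
                   for j in range(c, min(c + size, w))):
                count += 1
    return count


def box_counting_dimension(mask, sizes=(1, 2, 4, 8)):
    """Least-squares slope of log N(s) versus log(1/s); the mask must be non-empty."""
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(mask, s)) for s in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den
```

A filled region gives a dimension close to 2 and a thin line close to 1; irregular, textured structures fall in between, which is what makes the value usable as a feature.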

A deep convolutional generative adversarial network was employed for augmentation in [116]. The proposed deep CNN model was trained to find pneumonia infections in CXR images from the Chest X-ray8 dataset and achieved 99.34% accuracy on unseen CXR images. The deep CNN was based on VGG19, with a few modifications for the binary classification problem. It was trained for 500 epochs with a batch size of 16 and a learning rate varied from 0.2 to 0.8, using mini-batch gradient descent as the optimizer, on an NVIDIA DGX-1 deep learning server. In [68], COVID-19, bacterial pneumonia, and non-COVID-19 viral pneumonia infections were classified using the AlexNet model, with a wide variety of models built for problems ranging from two to four classes. AlexNet was trained for 20 epochs with an initial learning rate of 0.0001 in MATLAB on a personal computer.

A multi-image augmentation mechanism [44] was proposed to detect COVID-19: the images were preprocessed with a median filter, a binary mask, and image enhancement, and Inception v3 was used to extract features. However, the images are few in number (87 COVID and 100 non-COVID), which raises questions about training for 500 epochs to obtain good accuracy.
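Median filtering, one of the preprocessing steps mentioned above, is the standard remedy for salt-and-pepper noise. A minimal 3 × 3 sketch follows; it is not the implementation of [44], and it handles borders by taking the median of only the neighbours that fall inside the image (upper median for even-sized windows), which is one of several possible border conventions.

```python
def median_filter3(img):
    """3 x 3 median filter on a 2-D image given as a list of rows."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Collect the neighbourhood, clipped at the image border.
            window = sorted(img[i][j]
                            for i in range(max(r - 1, 0), min(r + 2, h))
                            for j in range(max(c - 1, 0), min(c + 2, w)))
            out[r][c] = window[len(window) // 2]
    return out
```

Unlike a mean filter, the median ignores isolated extreme values, so a single white (255) or black (0) pixel is removed without blurring the surrounding intensities.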

The evolution of CAD and deep learning methods is shown in Fig. 2. The rest of the paper is structured as follows: Sect. “Methodology” covers data, image processing methods, disease-specific materials and methods, and various machine learning and deep learning methods; Sect. “Performance Evaluation” discusses evaluation metrics for classification and segmentation, model validation, data imbalance, and results; Sect. “Discussion” presents a discussion; and Sect. “Conclusion and Future Research Perspective” gives the conclusion and future research perspective.

Methodology

Datasets

Dr. J. P. Cohen of the University of Montreal collected a dataset [30] of CXR and CT scan images; to the best of the authors’ knowledge, it was the first COVID-19 dataset shared on GitHub.

The COVIDx dataset [158] comprises 13,975 CXR images across 13,870 patient cases. Its authors integrated and modified five publicly available data repositories to create it: (i) the COVID-19 image collection of Dr. J. P. Cohen, (ii) the COVID-19 Chest X-ray Dataset Initiative [28], (iii) the Actualmed COVID-19 Chest X-ray Dataset Initiative [27], (iv) the RSNA Pneumonia Detection Challenge dataset [124], and (v) the COVID-19 Radiography Database [123]. The dataset can be reproduced with the code from [159]. The data distribution is shown in Table 1.

The COVID-19 dataset includes non-COVID and COVID instances of both CXR and CT images; a total of 17,099 X-ray and CT images were generated from it using various techniques [34]. The COVID-R dataset [65] consists of 2843 COVID-19, 3108 Normal, and 1439 Pneumonia CXR images. Some of the publicly available datasets are shown in Table 2.

The National Institutes of Health released one of the largest publicly available labeled datasets in this field, Chest X-ray8 [160], containing 108,948 images of 32,717 patients classified into eight categories; the labels were extracted from the radiologists’ reports using natural language processing techniques. CheXpert [69] is a large dataset containing 224,316 CXRs from 65,240 patients divided into 14 classes.

The Tuberculosis Chest X-ray database [10] consists of 3500 TB and 3500 Normal CXR images. The Montgomery County X-ray Set [139], from the Department of Health and Human Services of Montgomery County, MD, USA, consists of 80 Normal and 58 TB images. The Shenzhen Hospital X-ray Set contains 336 TB and 326 Normal CXR images collected by Shenzhen No. 3 Hospital in Shenzhen, Guangdong Province, China. The authors of [22] introduced two datasets, Dataset A (DA) and Dataset B (DB). DA consists of a training set (52 non-TB and 52 TB CXRs) and a test set (26 non-TB and 26 TB CXRs); DB consists of 50 non-TB and 50 TB CXRs, with a test set of 25 non-TB and 25 TB CXRs. In [134], chest X-rays were collected from four hospitals between 2015 and 2017 by two radiologists at each center (BWC, with 19 years of experience in chest radiology; MJC, with 18 years of experience; and six chest radiologists with more than 10 years of experience). This dataset consists of 200 Abnormal and 800 Normal images. The US National Library of Medicine [71] has released two datasets with a significant focus on Tuberculosis: one contains 80 normal and 58 TB cases, and the other 326 normal and 336 TB cases. In [1], 154 CXR images with lung nodules and 93 without a nodule were selected from 14 medical centers. Some of the datasets available to the public are shown in Table 3.

Image Preprocessing Methods

Image preprocessing is a crucial step before feeding images to CNNs. Real-world image acquisition yields varying resolutions, so images must be resized without losing pixel quality; occlusions or noise may also need to be addressed with noise removal methods. In addition, images must be normalized to bring pixel values into the same range. Most of the literature includes resizing, normalization, image enhancement [44, 52, 117, 126], and image augmentation as preprocessing steps [5] before classification. Normalization is typically mean or z-score normalization, while image enhancement includes histogram equalization methods. The importance of image processing methods is explained in more detail in [125]. Noise artifacts typically seen in radiographs include Poisson, Gaussian, and salt-and-pepper noise, especially when images are acquired in large quantities from public sources such as the internet [74]. Careful denoising is increasingly needed, in part because eliminating one form of noise can sometimes affect another. General preprocessing involves enhancement and subtraction [151]. A brief description of the preprocessing stage is shown in Table 4.
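Two of the preprocessing steps named above, z-score normalization and global histogram equalization, can be sketched in a few lines. The sketch assumes 8-bit integer grayscale images stored as lists of rows; library implementations (for example in OpenCV or scikit-image) add options such as CLAHE's tiling and clipping that are not shown here.

```python
def z_score(img):
    """Shift pixels to zero mean and unit standard deviation."""
    vals = [v for row in img for v in row]
    mean = sum(vals) / len(vals)
    std = (sum((v - mean) ** 2 for v in vals) / len(vals)) ** 0.5
    std = std or 1.0  # avoid division by zero on constant images
    return [[(v - mean) / std for v in row] for row in img]


def equalize_histogram(img, levels=256):
    """Global histogram equalization for integer images with values in [0, levels - 1]."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    cdf, running = [], 0
    for count in hist:  # cumulative distribution of intensities
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = cdf[-1]
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in img]
    # Map each level through the normalized CDF onto the full output range.
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[v] for v in row] for row in img]
```

Equalization spreads a narrow band of intensities (here 50 to 70) across the full 0 to 255 range, while z-scoring only rescales values and leaves their relative spacing unchanged.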

Diseases, Materials, and Methods

Nonmedical researchers cannot be expected to match the knowledge of expert radiologists; however, anyone analyzing CXR images algorithmically should have a basic knowledge of chest anatomy and common abnormalities, which can be studied from Chapter 1 of [120]. The following sections discuss techniques used over the years to detect COVID-19, Pneumonia, and TB. Disease detection is primarily framed as a classification problem consisting of several stages, as shown in Fig. 3: (i) image acquisition; (ii) image preprocessing and segmentation; (iii) feature extraction; and (iv) classifier training.

COVID-19

Since the first case of COVID-19 in 2019, a great deal of research has taken place worldwide. We selected heterogeneous articles published by Elsevier, IEEE, and other leading publishers, as well as articles found through Google Scholar. Researchers have used a variety of image processing approaches, ranging from hand-crafted techniques to cutting-edge transfer learning and adversarial networks that create synthesized images.

COVID-Net [158] was presented as one of the first AI architectures for detecting COVID-19, and a slew of studies based on the COVIDx dataset and diverse sampling methodologies has followed. COVID-Net employs lightweight residual projection–expansion–projection–extension (PEPX) design patterns composed of several stages of projections with 1 × 1 convolutions and 3 × 3 depth-wise convolutions. According to its authors, such a tailored, lightweight, machine-driven design pattern had not been seen before. COVID-Net also exhibits architectural diversity in terms of long-range connectivity, with kernel sizes ranging from 1 × 1 to 7 × 7. It was trained for 22 epochs with a batch size of 64, a learning rate of 0.0002, and a patience of five.
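Why depth-wise convolutions make a design such as PEPX lightweight can be shown with simple parameter arithmetic. The sketch below does not reproduce PEPX; it only compares a standard k × k convolution with a k × k depth-wise convolution followed by a 1 × 1 point-wise convolution. The channel counts are illustrative assumptions.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights (and biases) of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(k, c_in, c_out, bias=True):
    """k x k depth-wise convolution (one filter per input channel) plus a 1 x 1 point-wise mix."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


standard = conv_params(3, 256, 256, bias=False)                   # 589,824
separable = depthwise_separable_params(3, 256, 256, bias=False)   # 67,840
```

For 256 input and output channels, the separable version needs roughly 8.7 times fewer weights, and the 1 × 1 projections in PEPX shrink the channel count before the depth-wise step to save even more.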

Several architectures were trained to detect COVID-19. For example, [106] implemented DarkCovidNet, a 17-layer architecture inspired by DarkNet-19 [119], with a flatten layer, a dense layer, and a softmax output to classify three classes: COVID-19, Pneumonia, and healthy lungs. The network was trained for 100 epochs to improve the results, and the authors observed a higher loss at the start of training due to the small number of samples in the COVID-19 class. Fivefold cross-validation was used to assess and guard against overfitting. Similarly, [26] applied a variety of transfer learning networks and concluded that DenseNet201 [63] produced the best results.
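Fivefold cross-validation, mentioned above, partitions the data into five folds and trains five models, each validated on a different held-out fold. A minimal index-splitting sketch follows; the function name is hypothetical, and with class imbalance as severe as the COVID-19 case, a stratified split (sampling each class separately) would usually be preferable to this plain shuffle.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k folds of nearly equal size
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(j for f in folds[:i] + folds[i + 1:] for j in f)
        yield train, val


splits = list(k_fold_indices(23, k=5))
```

Every sample appears in exactly one validation fold, so averaging the five validation scores estimates performance on unseen data instead of preventing overfitting by itself.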

In [12], VGG19 and MobileNetV2 served as feature extractors, with the rectified linear unit (ReLU) as the activation function in the hidden layers. Khan [78] used the Xception network [24] to classify COVID-19, bacterial Pneumonia, viral Pneumonia, and Normal images; trained for 80 epochs with a batch size of 10, it generated an output of shape 5 × 5 × 2048 for each CXR image. The authors of [39] used ResNet-50 in three stages with input images of shape 128 × 128 × 3, 224 × 224 × 3, and 229 × 229 × 3; the first two stages, each divided into head and body, were trained for (3, 5) and (3, 5) epochs, respectively, and the last stage for 25 epochs, with discriminative learning rates [61]. By progressively resizing the input images, the authors aimed for better generalization and reported a recall of 100% on the COVID-19 class.

The authors of [52] developed COVID-CXNet, whose backbone is DenseNet-121, and applied image enhancement techniques such as histogram equalization (HE), adaptive histogram equalization (AHE), and contrast-limited AHE (CLAHE). It is inspired by CheXNet [117] and adapted to binary classification with a fully connected layer of ten nodes, followed by a dropout of 0.2 to prevent overfitting, and a sigmoid activation function in the last layer. In [130], an SVM classifier was used to classify features acquired from multiple CNN models; according to that study, the ResNet50 model provided discriminative features that aided COVID-19 detection.

The authors of [147] worked on three classes, namely coronavirus, Pneumonia, and normal X-ray images. They restructured the images with the Fuzzy Color technique [109], stacked the output image with the original image using Yotam’s code (Footnote 1), extracted features with SqueezeNet [67] and MobileNetV2, and classified them with a linear SVM. The authors of [95] used the EfficientNet family [145], which scales depth, width, and resolution together during training. The family’s models take inputs of 224 × 224 × 3, 240 × 240 × 3, 260 × 260 × 3, 300 × 300 × 3, 380 × 380 × 3, and 456 × 456 × 3, to which the authors added a fully connected layer and a softmax output layer. They used Swish [40] as the activation function, which yields a smooth curve throughout loss minimization with gradient descent.
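The Swish activation referred to above is defined as x · sigmoid(βx). The sketch below compares it with ReLU; the numerically stable sigmoid and the default β = 1 (the common parameter-free form) are implementation choices made here, not details taken from [95].

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x, beta=1.0):
    """Swish activation: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


def relu(x):
    return max(0.0, x)
```

Unlike ReLU, Swish is smooth at zero and lets small negative inputs through (`swish(-1.0)` is about -0.27), while for large positive inputs it approaches the identity, just as ReLU does.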

In [104], FC-DenseNet101 was employed to segment the lung regions from CXR images, and a patch-based network was proposed: 224 × 224 pixel patches, cropped randomly from the image at various positions, were fed to ResNet-18, trained with the Adam optimizer [82] at an initial learning rate of 0.0001 for 100 epochs. The authors investigated potential biomarkers in CXRs and found that globally distributed localized intensity variation can be discriminatory for COVID-19. The two-dimensional curvelet transform used by [8] represents the input as a linear, weighted combination of basis functions called curvelets. A curvelet is defined by a set of radial and angular windows specified in polar coordinates in the frequency domain. A Fast Discrete Curvelet Transform (FDCT) implemented via wrapping was applied after converting the RGB images to grayscale, and the resulting data were fed to EfficientNet-B0 [145]. The authors of [101] used several transfer learning models, including ResNet18, ResNet50, SqueezeNet, and DenseNet-121, on smaller datasets to identify COVID-19. The models were trained for 100 epochs with a batch size of 20, the Adam optimizer with a learning rate of 0.0001, and 224 × 224 input images. To the best of its authors’ knowledge, CovidGAN [155] is the first paper to generate synthesized CXR images using Generative Adversarial Networks. With CovidGAN, the authors generated normal and COVID-positive CXR images, training with a batch size of 64, a learning rate of 0.0002, and a beta of 0.5 for 2000 epochs.
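The random patch cropping used in the patch-based approach above can be sketched as follows. This is an illustration under stated assumptions: patch corners are drawn uniformly over the whole image, whereas a real pipeline would likely restrict them to the segmented lung area, and the function name and seed handling are hypothetical.

```python
import random


def random_patches(img, patch=224, n=4, seed=None):
    """Crop n random patch x patch windows from a 2-D image given as a list of rows."""
    h, w = len(img), len(img[0])
    if h < patch or w < patch:
        raise ValueError("image is smaller than the requested patch")
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        r = rng.randint(0, h - patch)  # top-left corner, inclusive bounds
        c = rng.randint(0, w - patch)
        out.append([row[c:c + patch] for row in img[r:r + patch]])
    return out
```

Training on many crops per image multiplies the effective number of samples, which is one reason patch-based networks can work with relatively few trainable parameters on small datasets.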

Chauhan [23] experimented with DenseNet-121 under various combinations of loss functions, optimizers, numbers of epochs, and learning rates, and reported good metrics with the Adamax optimizer, the cross-entropy loss function, and a StepLR scheduler. The authors of [32] implemented an ensemble of Inception [142], VGG19, and DenseNet121 and aggregated the classifiers’ predictions with the Choquet fuzzy integral [154], which weights the individual classifiers according to their calculated fuzzy scores. Similarly, the authors of [76] proposed CoVNet-19, an ensemble of VGG19 and DenseNet-121 feature extractors with an SVM as the meta-learner. The authors of [38] employed multikernel-size spatial-channel attention (MKSC) modules to extract feature maps for COVID-19 classification. COVID-RENet-1 and COVID-RENet-2 [79] introduced an averaging layer and region- and edge-based operations between convolution blocks. The two networks differ in architecture but achieved the same score.
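The Choquet fuzzy integral aggregation described above can be sketched for the scores that several classifiers assign to a single class. The sketch assumes a Sugeno λ-measure built from per-classifier fuzzy densities, a common construction that [32] may or may not follow exactly; the densities are hypothetical inputs, and λ is found by bisection on ∏(1 + λgᵢ) = 1 + λ.

```python
def sugeno_lambda(densities, iters=200):
    """Solve prod(1 + lam * g_i) = 1 + lam for the Sugeno lambda-measure (lam > -1)."""
    s = sum(densities)
    if abs(s - 1.0) < 1e-9:
        return 0.0  # densities already form an additive measure

    def f(lam):
        prod = 1.0
        for g in densities:
            prod *= 1.0 + lam * g
        return prod - (1.0 + lam)

    if s < 1.0:  # the non-trivial root is positive
        lo, hi = 1e-9, 1.0
        while f(hi) < 0:
            hi *= 2.0
    else:        # the non-trivial root lies in (-1, 0)
        lo, hi = -1.0 + 1e-9, -1e-9
    f_lo = f(lo)
    for _ in range(iters):  # plain bisection on the sign change
        mid = (lo + hi) / 2.0
        f_mid = f(mid)
        if (f_mid < 0) == (f_lo < 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def choquet(scores, densities):
    """Discrete Choquet integral of classifier scores w.r.t. a Sugeno lambda-measure."""
    lam = sugeno_lambda(densities)
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    total, g_prev = 0.0, 0.0
    for rank, i in enumerate(order):
        # Measure of the set holding the (rank + 1) highest-scoring classifiers.
        g_curr = g_prev + densities[i] + lam * g_prev * densities[i]
        h_next = scores[order[rank + 1]] if rank + 1 < len(order) else 0.0
        total += (scores[i] - h_next) * g_curr
        g_prev = g_curr
    return total
```

In an ensemble, `choquet` would be evaluated once per class on the models’ probabilities for that class, and the class with the largest integral taken as the label. When the densities already sum to 1, the result reduces to a weighted average.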

A hybrid learning approach [85] combines a CNN with Recurrent Neural Networks (RNNs). Each RNN step has a length of 64, totaling 700. However, the paper gives too little detail about how the RNNs extract features and how these are fused with the CNN to justify calling it a hybrid model. Interestingly, the authors of [62] focused on real-time detection by combining LeNet-5, Extreme Learning Machines (ELMs) [64], and the Chimp Optimization Algorithm (ChoA) [Khishe2020]. LeNet-5, after being trained on a large dataset, serves as the feature extractor whose output is fed to the ELM, and ChoA handles the rest. ChoA is designed to overcome slow convergence and entrapment in local minima, which makes the ELM network stable and reliable in real-time operation.

The authors of [143] proposed a systematic and consistent approach for lung segmentation and COVID-19 localization with infection quantification, in which lung segmentation is learned by training UNet [122] and UNet++ on 3000 annotated images. Balaha [17] segmented the images with the watershed approach, which separates the foreground from the background, and fed them to transfer learning models for feature extraction, classifying with a weighted sum metric over the models. The authors of [153] emphasized preprocessing the images before applying several transfer learning models, from VGG16 to SqueezeNet. Image enhancement methods such as Adaptive Histogram Equalization (AHE) and Contrast Limited Adaptive Histogram Equalization (CLAHE) improved the image quality.

The authors of [164] proposed a content-based image retrieval (CBIR) model that uses a multi-similarity loss [156] with a sophisticated mining sampling approach and an attention mechanism to discover the optimal embedding space. The optimized embedding space is simply the low-dimensional feature space learned by a ResNet50 backbone; in addition, a spatial attention module extracts local embeddings to provide further guidance. A lightweight convolutional model trained on Gaussian-blurred images was proposed in [99]. The architecture consists of four convolutional layers with 32, 32, 64, and 64 filters, each with a 3 × 3 kernel, followed by a fully connected layer of 64 neurons with a dropout rate of 0.5 and a final layer with a softmax activation function.
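Once an embedding space has been learned, the retrieval step of a CBIR system such as the one above reduces to ranking stored embeddings by similarity to the query. The sketch assumes embeddings have already been computed (the ResNet50 backbone and multi-similarity training are not shown) and uses cosine similarity as the ranking measure; the database keys and vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query, database, top_k=3):
    """Return the ids of the top_k stored embeddings most similar to the query."""
    ranked = sorted(database.items(), key=lambda kv: cosine(query, kv[1]), reverse=True)
    return [key for key, _ in ranked[:top_k]]


db = {
    "covid_1": [1.0, 0.1, 0.0],
    "covid_2": [0.9, 0.2, 0.0],
    "normal_1": [0.0, 1.0, 0.2],
    "normal_2": [0.1, 0.9, 0.3],
}
```

A well-trained embedding places images with the same diagnosis close together, so the labels of the retrieved neighbours can serve both as a prediction and as visual evidence for the reader.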

In contrast to all the approaches above, [96] obtained similar accuracy after completely masking out the lungs in the CXR images, which raises questions about these deep learning approaches. Nevertheless, the authors proposed new protocols to automate the detection of COVID-19. Observations and feature extractors from some of the papers are shown in Table 5.

The authors of [118] employed the DarkCOVIDNet model, formulated as binary and three-class problems and trained on more than 10,000 CXR images, and achieved average accuracies of 99.53% and 94.18% for binary and multi-class classification, respectively. DarkCOVIDNet uses DarkNet-19, the backbone of the YOLO (You Only Look Once) real-time object detection system, as its base model. Images of 256 × 256 pixels are fed to the network, which was trained for 100 epochs for the binary problem and 50 epochs for the multi-class problem.

A Concat CNN [126] was developed to detect COVID-19, viral pneumonia, and normal cases. The authors used four CNN models as feature extractors and concatenated their feature maps to improve network efficiency. Class imbalance was addressed by sampling an equal number of images (500) from each of the three classes. Each feature extractor consists of two convolution layers with 32 and 64 filters, 2 × 2 max pooling, a dropout of 0.5, and ReLU activations between the layers. The results were compared with the state-of-the-art models VGG16, InceptionV3, ResNet50, and DenseNet121 under fivefold cross-validation.
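The class balancing described above (an equal number of images drawn from each class) amounts to undersampling. A minimal sketch follows; the function name is hypothetical, the per-class count of 500 comes from the text, and the class sizes in the usage example are made up.

```python
import random
from collections import defaultdict


def balanced_sample(labels, per_class=500, seed=0):
    """Return indices of per_class randomly chosen samples from every class."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)
    chosen = []
    for y, idxs in sorted(by_class.items()):
        if len(idxs) < per_class:
            raise ValueError(f"class {y!r} has only {len(idxs)} samples")
        chosen.extend(rng.sample(idxs, per_class))  # sampling without replacement
    return chosen


labels = ["covid"] * 600 + ["viral"] * 1300 + ["normal"] * 1400
selected = balanced_sample(labels)
```

Undersampling is simple and avoids duplicated images, at the cost of discarding most of the majority-class data; oversampling or class-weighted losses are the usual alternatives.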

The researchers of [51] focused on three classes: COVID-19, viral pneumonia, and healthy images. The authors trained four pre-trained deep neural networks to find the best one. Because transfer learning is used, a relatively small dataset suffices: it consists of 2905 images, with 219, 1341, and 1345 images in the COVID-19, healthy, and viral pneumonia classes, respectively. AlexNet gave the best results among the applied models, which also included VGG16, MobileNetV2, and ResNet18. A deep convolutional neural network architecture, Focus Covid [6], was proposed for COVID-19 detection from chest radiographs. It is a modified version of FocusNet in which some layers were removed, reducing the number of parameters (16, 32, 64, 128, and 256) and thereby the training time.

A lightweight CNN architecture for detecting COVID-19 [14] was shown to be robust in a comparison study between transfer learning and a shallow CNN. A total of 2541 samples were taken from two public databases of Normal and COVID-infected images. The transfer learning models compared were Inception-v2, Xception, MobileNet, and DenseNet201. The shallow six-layer network was trained with batch sizes of 16, 20, 25, 32, 50, and 64 and a learning rate of 0.001 for 20 epochs. The authors of [86] proposed a trained output-based transfer learning (TOTL) approach for COVID-19 detection from CXRs. Preprocessing methods such as noising, contrasting, and segmentation were applied before feeding the images into pre-trained models such as Inception, Xception, MobileNet, ResNet, and VGG. The features extracted from the pre-trained models were then fed to a shallow model with layers of 64, 128, 256, 128, and 64 neurons, ReLU activations, and a dropout rate of 0.2.

A total of 18 models were implemented and evaluated in [25], and majority voting was built on top of them, including the top four models with above 93% accuracy. Two certified radiologists analyzed the Grad-CAM outputs, and their decisions resembled the SqueezeNet output. The performance of a CNN classifier can be improved by tuning the CNN model’s parameters with the nature-inspired Hill Climbing optimization algorithm (CNN-HCA) [110]. Hill climbing is a local search optimization technique that, after evaluating the current state, moves to a better neighboring solution. However, the algorithm was applied to only two hyperparameters, the kernel size and the number of neurons in the first dense layer, which certainly adds more parameters as it evolves.
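The hill-climbing search described above, over a kernel size and the number of neurons in the first dense layer, can be sketched as a steepest-ascent loop on a discrete grid. The candidate values, the neighbourhood definition, and especially `toy_score` are hypothetical; in practice the score would come from training and validating the CNN for each configuration.

```python
KERNELS = [3, 5, 7]
UNITS = [32, 64, 128, 256]


def grid_neighbours(cfg):
    """Configurations one grid step away in kernel size or dense-layer width."""
    ki, ui = KERNELS.index(cfg[0]), UNITS.index(cfg[1])
    out = []
    for dk, du in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nk, nu = ki + dk, ui + du
        if 0 <= nk < len(KERNELS) and 0 <= nu < len(UNITS):
            out.append((KERNELS[nk], UNITS[nu]))
    return out


def toy_score(cfg):
    """Hypothetical stand-in for validation accuracy; peaks at (5, 128)."""
    k, u = cfg
    return 0.9 - 0.01 * abs(k - 5) - 0.0001 * abs(u - 128)


def hill_climb(score, start, neighbours, max_iters=100):
    """Move to the best neighbour while it improves the score (steepest ascent)."""
    current, best = start, score(start)
    for _ in range(max_iters):
        candidates = [(score(n), n) for n in neighbours(current)]
        if not candidates:
            break
        cand_score, cand = max(candidates)
        if cand_score <= best:
            break  # local optimum: no neighbour is better
        current, best = cand, cand_score
    return current, best
```

Because it only ever accepts improvements, hill climbing is cheap but can stop at a local optimum; this is the weakness that the swarm-based optimizers discussed next try to address.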

A multi-level image segmentation method based on a swarm intelligence algorithm (SIA) [111] was proposed to improve the segmentation of COVID-19 X-rays. Ant colony optimization was incorporated, with directional crossover used to speed up the algorithm’s convergence and a directional mutation strategy used to escape local optima. The method determines suitable threshold values for segmenting the CXR image.
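Threshold-based segmentation needs an objective that scores candidate thresholds. As an illustration, and not the method of [111], the sketch below uses Otsu’s between-class variance and finds a single threshold by exhaustive search over 8-bit levels. With several thresholds, the number of combinations grows combinatorially, which is where swarm-based searches become attractive.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t maximizing between-class variance (class 0 holds values <= t)."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w0 += hist[t]          # pixels at or below t
        sum0 += t * hist[t]
        w1 = total - w0        # pixels above t
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2  # unnormalized; same arg-max
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

For a bimodal image, every threshold between the two modes separates the classes equally well; the sketch returns the first such value.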

Multi COVIDNet [48] is a deep two-step learning (DL) architecture for detecting COVID-19. Its novelty lies in an optimization algorithm called the Multi-Objective Grasshopper Optimization Algorithm (MOGOA), which optimizes the DL network layers; the underlying Grasshopper Optimization Algorithm (GOA), inspired by the swarming behavior of grasshoppers, balances exploration and exploitation. MOGOA generates multiple solutions and picks the best one using the Pareto optimality (PO) operator.
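The Pareto optimality step mentioned above keeps only the non-dominated candidate solutions. A minimal sketch follows, assuming every objective is to be minimized; the two objectives in the usage example (validation error and model size in millions of parameters) are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(solutions):
    """Keep the solutions that no other solution dominates."""
    return [s for s in solutions if not any(dominates(o, s) for o in solutions)]


# (validation error, parameters in millions) for hypothetical candidate networks
candidates = [(0.05, 20), (0.04, 30), (0.06, 10), (0.07, 25), (0.05, 25)]
front = pareto_front(candidates)
```

The front contains the trade-offs a designer might reasonably pick from; choosing a single “best” point from it still requires a preference, such as a weighting of the objectives.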

Pneumonia

In [98], a U-Net architecture was used to segment the lung field from CXRs, and ResNet-50, InceptionV3, and InceptionResNetV2 were trained with the Adam and SGD optimizers and batch sizes of 16 and 32, respectively, to obtain the best results for detecting pneumonia. Ayan et al. [15] used Xception (an extreme version of Inception) and VGG16 as feature extractors, followed by a dense output layer with two neurons and a softmax activation function, trained for 50 epochs. In [114], discriminative localization with Class Activation Maps addressed the black-box nature of deep learning models to an extent, using a modified VGG16 on pediatric CXR images. That work employed an algorithm based on anatomical atlases to detect lung borders automatically, and the parameters tuned by grid search settled at a learning rate of 0.0004, a momentum of 0.99, and an L2 regularization of 0.000001 for VGG16. In [108], the unsupervised fuzzy c-means clustering algorithm was used to extract features and classify pneumonia by grouping the data into five clusters. In [103], the region of interest is encoded into a vector of wavelet texture measures, from which statistical features are derived to detect pneumonia from CXR images; other wavelet texture measures are used to extract features for the same task in [105]. The thorax disease classification approach of [50] is a three-branch attention-guided CNN (AG-CNN). Pneumonia detection was achieved with ResNet-50 and DenseNet121. CheXLocNet [157] segmented CXR images using Mask R-CNN [57] and achieved better localization. Observations and feature extractors from some of the papers are shown in Table 5.
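The fuzzy c-means clustering used in [108] can be illustrated on scalar features. In this sketch, memberships follow the standard rule uᵢⱼ = 1 / Σₗ (dᵢⱼ / dᵢₗ)^(2/(m−1)) and centres are membership-weighted means. The fuzzifier m = 2, the evenly spread initial centres, and the fixed iteration count are assumptions; the source grouped into five clusters (c = 5), while the usage example uses two clusters for brevity.

```python
def fuzzy_c_means(xs, c=2, m=2.0, iters=100, eps=1e-9):
    """Fuzzy c-means on scalar features.

    Returns (centres, memberships); the memberships are those computed in the
    final iteration, i.e. from the centres just before the last centre update.
    """
    lo, hi = min(xs), max(xs)
    centres = [lo + (hi - lo) * (k + 0.5) / c for k in range(c)]  # spread initial centres
    for _ in range(iters):
        u = []
        for x in xs:
            d = [abs(x - v) + eps for v in centres]  # eps avoids division by zero
            u.append([1.0 / sum((d[j] / d[l]) ** (2.0 / (m - 1.0)) for l in range(c))
                      for j in range(c)])
        # Each centre is the mean of all points weighted by membership ** m.
        centres = [
            sum((u[i][j] ** m) * xs[i] for i in range(len(xs)))
            / sum(u[i][j] ** m for i in range(len(xs)))
            for j in range(c)
        ]
    return centres, u


centres, memberships = fuzzy_c_means([1.0, 1.2, 0.9, 5.0, 5.1, 4.8], c=2)
```

Unlike k-means, every point keeps a graded membership in every cluster, which can be useful when image regions do not fall cleanly into one tissue type.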

Tuberculosis

In [112], ResNet101, VGG19, and DenseNet201 were employed independently to extract features from the dataset without further fine-tuning. The features were taken from the last convolution block, before the global average pooling layer, where the output shapes of ResNet101, VGG19, and DenseNet201 for a sample image are (7, 7, 2048), (7, 7, 512), and (7, 7, 1920), respectively. These outputs were then flattened and passed to an XGBoost classifier to classify Tuberculosis.

The authors of [113] put forth an ensemble of the pre-trained models Inception-V3, InceptionResNet-V2, and DenseNet-121 with average stacking and showed better results. In addition, they built a three-layer custom CNN with 64, 128, and 256 filters in the respective layers, trained for 100 epochs with a 5 × 5 kernel in each layer. The authors of [152] designed a custom architecture of four convolutional layers with 32, 32, 32, and 64 filters, followed by a flatten layer and a fully connected layer of 128 neurons; the model was trained for 200 epochs with a 3 × 3 kernel, the ReLU activation function, and a batch size of 32. A Convolutional Block Attention Module (CBAM) [163] was proposed and placed at every bottleneck of a residual network. CBAM gives more weight to essential features and extracts complex, in-depth features as training progresses; it aims to ensure nonlinear interaction with an emphasis on multiple channel features. The authors of [60] were the first to combine demographic data with deep learning features. A deep CNN architecture [66] consists of blocks with 1, 1, 3, and 1 convolution and max pooling layers, followed by two fully connected layers and a dropout layer. The authors stated that obtaining optimized weights with this architecture is challenging, so they moved to transfer learning approaches, which gave better results. On the other hand, [100] combined the CAD score and clinical features of patients to detect TB with machine learning. The authors of [87, 91, 94] used AlexNet, GoogLeNet, VGGNet, and ResNet as feature extractors with a meta-learner for classification. AlexNet and GoogLeNet were trained with learning rates of 0.01 and 0.001 using a stochastic gradient descent optimizer with a momentum of 0.9 and an exponential decay of 0.002.
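The channel-attention half of CBAM can be sketched with plain lists: average- and max-pool each channel, pass both descriptors through a shared two-layer MLP, add the results, and apply a sigmoid to obtain per-channel weights that rescale the feature map. This is a simplified sketch that omits biases and CBAM’s subsequent spatial-attention step; the tiny weight matrices in the usage example are hypothetical, whereas in a real network they are learned.

```python
import math


def channel_attention(fmap, w1, w2):
    """CBAM-style channel attention (biases omitted).

    fmap: C channels, each an H x W list of lists.
    w1:   (C // r) x C weights of the shared MLP's hidden layer.
    w2:   C x (C // r) weights of the shared MLP's output layer.
    Returns the rescaled feature map and the per-channel weights.
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]  # global average pool
    mx = [max(map(max, ch)) for ch in fmap]                            # global max pool

    def mlp(vec):
        hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    logits = [a + b for a, b in zip(mlp(avg), mlp(mx))]
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]  # sigmoid
    scaled = [[[v * wt for v in row] for row in ch] for ch, wt in zip(fmap, weights)]
    return scaled, weights


fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
out, wts = channel_attention(fmap, [[1.0, 1.0]], [[1.0], [-1.0]])
```

Here the informative first channel keeps almost its full magnitude, while the empty second channel is suppressed, which is the re-weighting of essential features described above.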

In [71], the lung fields were segmented from CXR images using a graph cut technique. Object-detection-inspired shape and texture descriptors were extracted using various histogram methods, such as the Intensity Histogram (IH), Gradient Magnitude Histogram (GM), Shape Descriptor Histogram (SD), and Curvature Descriptor Histogram (CD). Low-level features, such as intensity, edge, texture, and shape moments, were extracted using Content-Based Image Retrieval (CBIR) algorithms, giving 594 features in total. An SVM classifier was trained to classify the feature sets as normal or abnormal.
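Two of these descriptors, the intensity histogram and the gradient magnitude histogram, can be sketched in a few lines for a grayscale image stored as a list of rows. The bin count, the forward-difference gradient, and the normalization by pixel count are assumptions made for illustration. They do not reproduce the exact descriptors of [71]. The image must be at least 2 × 2 pixels.

```python
import math


def intensity_histogram(img, bins=8, max_value=256):
    """Normalized histogram of gray levels in [0, max_value)."""
    hist = [0] * bins
    for row in img:
        for v in row:
            hist[min(v * bins // max_value, bins - 1)] += 1
    total = sum(hist)
    return [h / total for h in hist]


def gradient_magnitude_histogram(img, bins=8):
    """Normalized histogram of gradient magnitudes (forward differences)."""
    h, w = len(img), len(img[0])
    mags = []
    for y in range(h - 1):
        for x in range(w - 1):
            gx = img[y][x + 1] - img[y][x]  # horizontal difference
            gy = img[y + 1][x] - img[y][x]  # vertical difference
            mags.append(math.hypot(gx, gy))
    peak = max(mags) or 1.0  # avoid division by zero on flat images
    hist = [0] * bins
    for m in mags:
        hist[min(int(m / peak * bins), bins - 1)] += 1
    return [c / len(mags) for c in hist]


if __name__ == "__main__":
    img = [[0, 0, 255, 255] for _ in range(4)]
    # Concatenate both descriptors into one feature vector for a classifier.
    features = intensity_histogram(img, bins=2) + gradient_magnitude_histogram(img, bins=2)
    print(features)
```

Concatenating such normalized histograms with the other descriptors yields a fixed-length feature vector per image, which is the kind of input an SVM classifier expects.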

The authors of [11] presented a reliable automated CXR image-based screening system for detecting pulmonary diseases, with a significant focus on TB. The approach mainly focused on localizing the lung boundaries in the CXR. The feature sets were derived from the segmented lung fields through object detection methods, content-based image retrieval, and standard MATLAB region proposals. Bandyopadhyay [54] proposed an algorithm for CXR image enhancement consisting of two sub-steps: segmentation and enhancement. Image segmentation is achieved by applying a sequence of membership and fuzzy distance-based operations on a one-dimensional function, and image enhancement is achieved through a fuzzy-based contrast enhancement technique. Table 6 gives an overview of selected papers.
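The specific fuzzy operations of [54] are not reproduced here. As a generic illustration of fuzzy contrast enhancement, the sketch below applies the classic fuzzy intensification (INT) operator. Gray levels are mapped to memberships in [0, 1]. Memberships below 0.5 are decreased and those above 0.5 are increased, and the result is mapped back to gray levels. The linear membership function and the iteration count are assumptions.

```python
def fuzzy_intensify(img, max_value=255, iterations=1):
    """Fuzzy contrast enhancement with the intensification (INT) operator.

    img: 2-D list of gray levels in [0, max_value].
    Returns a new image with contrast stretched around the mid-gray level.
    """
    out = []
    for row in img:
        new_row = []
        for v in row:
            mu = v / max_value  # linear membership function (assumption)
            for _ in range(iterations):
                if mu <= 0.5:
                    mu = 2 * mu * mu  # push dark memberships toward 0
                else:
                    mu = 1 - 2 * (1 - mu) ** 2  # push bright memberships toward 1
            new_row.append(round(mu * max_value))
        out.append(new_row)
    return out


if __name__ == "__main__":
    print(fuzzy_intensify([[0, 51, 204, 255]]))
```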

Convolutional Neural Nets (CNN), Machine learning, and Segmentation

A CNN is employed to obtain feature vectors during the feature extraction phase, as shown in Fig. 4. Deep CNNs comprise convolutional, max-pooling, and dense layers. These layers are configured by parameters such as the number of kernels, kernel size, hidden and output activation functions, dropout rate, and number of neurons. Different combinations of these form different model architectures. Finding the proper architecture is a laborious process that demands more data and the tuning of hyperparameters, such as batch size, learning rate, momentum, number of epochs, and whether to use batch normalization.
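To make the role of kernel size, stride, and padding concrete, the sketch below applies the standard output-size formula for a convolution or pooling layer, floor((n + 2p − k) / s) + 1, where n is the input size, k the kernel size, p the padding, and s the stride. It then traces this size through the five convolutional blocks of VGG16. The block layout is the published VGG16 configuration: 2, 2, 3, 3, and 3 convolutions with 64 to 512 filters, each block ending in 2 × 2 max pooling with stride 2. The 224 × 224 input is the usual assumption.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def vgg16_feature_shape(input_size=224):
    """Trace the feature-map shape through VGG16's five convolutional blocks.

    Each block: several 3x3 convolutions with padding 1 (size-preserving),
    then a 2x2 max pooling with stride 2 (size-halving).
    """
    convs_per_block = [2, 2, 3, 3, 3]
    channels_per_block = [64, 128, 256, 512, 512]
    size = input_size
    for n_convs, channels in zip(convs_per_block, channels_per_block):
        for _ in range(n_convs):
            size = conv_output_size(size, kernel=3, stride=1, padding=1)
        size = conv_output_size(size, kernel=2, stride=2)
    return size, size, channels


if __name__ == "__main__":
    h, w, c = vgg16_feature_shape()
    print((h, w, c), h * w * c)
```

The resulting 7 × 7 × 512 map flattens to 25088 values. This matches the size of the VGG16 flatten layer that is often used as a feature vector.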

A CNN is categorized as shallow or deep based on its number of layers. A custom CNN is a deep network built and trained for a specific task. Alternatively, a deep CNN that has been trained on an extensive dataset and achieved excellent results can be reused. The caveat is that the original network may have been trained on different data. Such a network is used to extract feature vectors, on which a meta-learner is then built; this reuse is known as transfer learning (TL). Many architectures are available as pre-trained models for transfer learning, including AlexNet, DenseNet, EfficientNet, GoogleNet, Inception, MobileNet, ResNet, SqueezeNet, VGGNet, and Xception. AlexNet, ResNet, VGGNet, and GoogleNet are the TL models most often used in medical image analysis [83], while SVM is the most commonly used meta-learner. For example, feature vectors taken from the flatten layer, of shape (None, 25088), or from the fully connected layers fc1 or fc2, of shape (None, 4096), as illustrated in Fig. 5, can be fed into a softmax classifier or an SVM.
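As a minimal sketch of the meta-learner step, the code below trains a linear SVM on feature vectors with Pegasos-style stochastic sub-gradient descent. In practice, the inputs would be the 25088- or 4096-dimensional vectors extracted from a pre-trained network, and a library SVM implementation would normally be used. Here, hypothetical two-dimensional vectors stand in for them. For simplicity, the bias is folded into the weight vector and is therefore also regularized.

```python
import random


def train_linear_svm(X, y, lam=0.1, epochs=200, seed=0):
    """Pegasos-style stochastic sub-gradient training of a linear SVM.

    X: list of feature vectors (e.g. flattened CNN features); y: labels in {-1, +1}.
    A constant 1.0 is appended to each vector so the bias is learned as a weight.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)  # decreasing step size
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            # Shrink the weights (L2 regularization) ...
            w = [(1 - eta * lam) * wi for wi in w]
            # ... and add the hinge-loss sub-gradient if the margin is violated.
            if margin < 1:
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Sign of the linear score, with the bias weight as the last entry of w."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    # Hypothetical 2-D "feature vectors"; real ones have thousands of dimensions.
    X = [[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -3], [-2, -3]]
    y = [1, 1, 1, -1, -1, -1]
    w = train_linear_svm(X, y)
    print([predict(w, x) for x in X])
```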

Transfer learning method: VGG16 model summary [135]

Machine learning models, such as logistic regression, k-nearest neighbors, and SVMs, and ensembles such as bagging and boosting (AdaBoost, XGBoost) can be trained on structured data. Structured data could be clinical features such as age, smoking (y/n), and diabetes (y/n), or texture, shape, intensity, color, statistical, and other features of CXR images. The geometrical, machine learning, and ensemble methodologies used to classify CXRs are discussed in detail in [74]. Various CXR segmentation methods could be used, ranging from edge detection [21] and active shape modeling [47] to modern methods such as U-Net [122] and U-Net++ [17, 43, 138, 165].
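A minimal k-nearest-neighbor sketch on structured data follows. The features (age divided by 100, smoking y/n, diabetes y/n), the labels, and every training row are hypothetical. They were chosen only to show how such tabular features feed a classical classifier. With real data, features would normally be scaled more carefully and k would be chosen by validation.

```python
import math
from collections import Counter


def encode(age, smoker, diabetic):
    """Hypothetical encoding: age scaled to roughly [0, 1], binary flags as 0/1."""
    return [age / 100, int(smoker), int(diabetic)]


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training rows."""
    dists = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Hypothetical training rows: (age, smoker, diabetic) -> label.
TRAIN_X = [
    encode(25, False, False),
    encode(30, False, False),
    encode(35, False, True),
    encode(60, True, True),
    encode(65, True, False),
    encode(70, True, True),
]
TRAIN_Y = ["normal", "normal", "normal", "abnormal", "abnormal", "abnormal"]

if __name__ == "__main__":
    print(knn_predict(TRAIN_X, TRAIN_Y, encode(62, True, True)))
```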

Comparison of Materials and Methods

In the previous sections, we introduced several techniques employed for the prediction of pulmonary ailments. Among these, using convolutional neural networks (CNNs) to extract features from images is more efficient than using manually crafted features. Nevertheless, extracting features from CXR images is challenging because all lung images are inherently similar, and only a limited number of significant patterns differentiate lung illnesses. Transfer learning approaches proved to be highly beneficial in this context. However, these TL models were pre-trained on a set of 1000 broad categories (ImageNet). To adapt them to a specific task, the topmost dense layers of the original structure must be removed and replaced with layers suited to the target classes. It can also be noted that researchers have employed various transfer learning techniques, and occasionally a combination of them, to predict lung disease. The majority of studies have examined different image sizes, image enhancement techniques, and feature extraction methods. With the exception of [97, 157], all of these studies used a limited number of images. To achieve generalization in a deep learning model, the network must be trained on a diverse set of sample images that includes a large number of examples. Furthermore, the model should be rigorously tested to confirm its applicability for real-time deployment.

Performance Evaluation

Evaluation Metrics

A disease detection problem using CXR images can often be formulated as a classification problem, either binary or multi-class. Such problems are evaluated with classification metrics, such as accuracy, sensitivity, specificity, precision, F1 score, and area under the curve. In the multi-class case, each metric is computed per class and then averaged over the classes.
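The averaging over classes is usually called macro-averaging. Each class is treated in turn as the positive class (one-vs-rest), the metric is computed, and the per-class values are averaged with equal weight. A minimal sketch for precision, recall, and F1, with illustrative class names:

```python
def macro_metrics(y_true, y_pred):
    """Macro-averaged precision, recall and F1 for a multi-class problem."""
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        # One-vs-rest counts for class c.
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(classes)
    # Equal weight per class, regardless of how many samples each class has.
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


if __name__ == "__main__":
    y_true = ["covid", "covid", "normal", "normal", "pneumonia", "pneumonia"]
    y_pred = ["covid", "normal", "normal", "normal", "pneumonia", "covid"]
    print(macro_metrics(y_true, y_pred))
```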

Confusion Matrix

The confusion matrix, shown in Fig. 6, tabulates the predicted classes against the actual classes. The metrics below are derived from its cells and indicate how close the predictions are to the actual values.

These metrics can be expressed in terms of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Recall (sensitivity) is the number of correct predictions out of all positive-class samples, TP/(TP + FN). Specificity is the number of correct predictions out of all negative-class samples, TN/(TN + FP). Precision is the number of correct predictions out of all predicted positives, TP/(TP + FP); it tells how precise the model is. The F1 score is the harmonic mean of precision P and recall R, 2PR/(P + R), with a minimum value of 0 and a maximum of 1. The area under the ROC curve (AUC) is computed from the curve of the true-positive rate against 1 − specificity (the false-positive rate) as the decision threshold varies. This curve also guides the choice of the threshold used to classify the samples. AUC values range from 0 to 1. An example of ROC curves is shown in Fig. 7; depending on the requirement, precision–recall curves may be plotted instead.
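These definitions translate directly into code. The sketch below works from the four cells of a binary confusion matrix and returns 0.0 where a denominator would be zero; this is a convention chosen here, not a universal rule. The counts in the usage line are made up.

```python
def binary_metrics(tp, fp, tn, fn):
    """Metrics derived from the four cells of a binary confusion matrix."""

    def safe_div(a, b):
        return a / b if b else 0.0

    sensitivity = safe_div(tp, tp + fn)  # recall, true-positive rate
    specificity = safe_div(tn, tn + fp)  # true-negative rate
    precision = safe_div(tp, tp + fp)
    f1 = safe_div(2 * precision * sensitivity, precision + sensitivity)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "fpr": safe_div(fp, fp + tn),  # false-positive rate = 1 - specificity
    }


if __name__ == "__main__":
    print(binary_metrics(tp=40, fp=10, tn=45, fn=5))
```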

ROC curve for three classifiers: A, B and random [19]
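A ROC curve is traced by sweeping the decision threshold over the classifier's scores and recording the (false-positive rate, true-positive rate) pair at each step. The AUC is then the area under that piecewise-linear curve, computed here with the trapezoidal rule. The sketch below assumes binary labels (1 = positive, 0 = negative), both classes present, and higher scores meaning "more positive". The example labels and scores are invented.

```python
def roc_curve(labels, scores):
    """ROC points (FPR, TPR), starting at (0, 0), from sweeping the threshold.

    Samples with tied scores are processed together, so the curve does not
    depend on their order.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Lower the threshold to this score: all samples at or above it are positive.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a piecewise-linear curve using the trapezoidal rule."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


if __name__ == "__main__":
    pts = roc_curve([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.6])
    print(pts, auc(pts))
```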

Intersection over Union

Intersection over Union (IoU), also known as the Jaccard index [70], is the most widely used metric for comparing the similarity between two arbitrary shapes. It summarizes how well the ground truth overlaps with the object predicted by the model, as illustrated in Fig. 8. An IoU threshold of 0.5 is commonly used [119]. Predicted bounding boxes are filtered by confidence score before IoU is computed. The confidence threshold is varied to calculate Average Precision (AP) and Average Recall (AR). The IoU threshold is varied from 0.5 to 1 to calculate mean Average Precision (mAP) and mean Average Recall (mAR) [127].
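For axis-aligned bounding boxes, IoU is the area of the intersection divided by the area of the union. The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) in pixel coordinates. It uses the common 0.5 threshold to decide whether a prediction matches the ground truth.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def matches(pred_box, gt_box, threshold=0.5):
    """True if the predicted box overlaps the ground truth at or above the threshold."""
    return box_iou(pred_box, gt_box) >= threshold


if __name__ == "__main__":
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(matches((0, 0, 10, 10), (1, 0, 11, 10)))
```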

(A) Intersection over union; (B) examples of different IoU values [128]

Model Validation

Model validation needs two datasets: one to train the model and the other to validate it. Both train and test metrics need to be considered to evaluate model performance, because an algorithm that performs well on training data may not perform well on test data. High bias causes underfitting and high variance causes overfitting, and either must be addressed. Before bringing a model into production, it is common to regularize it.
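One common way to obtain training and validation sets repeatedly from the same data is k-fold cross-validation, in which every sample is used for validation exactly once. The index-splitting sketch below is illustrative. The function name and the fixed shuffle seed are choices made here, and for imbalanced data a stratified split would usually be preferred.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Indices are shuffled once, split into k nearly equal folds, and each fold
    serves exactly once as the validation set.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


if __name__ == "__main__":
    for train, val in k_fold_indices(10, k=3):
        print(len(train), sorted(val))
```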

Data Imbalance

A pervasive challenge in many classification problems is data imbalance. Consider a binary classification problem, such as predicting whether a patient has COVID-19. For a variety of reasons, the COVID-19-positive class will have fewer samples. Patients are reluctant to reveal personal information, and it is difficult to gather all relevant data and make it available to appropriate organizations. As a result, one class has fewer data points. Assume that there are 90 COVID-19-negative samples and 10 positive samples. An accuracy of 90% is deceptive, because a model that labels every sample as negative achieves it. Additional metrics must therefore be considered along with accuracy. Since the positive class is the minority, recall and precision should be reported. Recall measures how many COVID-19-positive cases are predicted correctly out of all actual positives. Precision measures how many of the predicted positives are truly positive.
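The 90/10 example can be checked numerically. The sketch below uses the label counts from the example (0 = COVID-19 negative, 1 = positive) and a hypothetical model that always predicts negative. This model reaches 90% accuracy while its recall and precision on the positive class are both zero.

```python
def accuracy_recall_precision(y_true, y_pred, positive=1):
    """Accuracy plus recall and precision for the given positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    recall = tp / (tp + fn) if tp + fn else 0.0  # out of all actual positives
    precision = tp / (tp + fp) if tp + fp else 0.0  # out of all predicted positives
    return accuracy, recall, precision


# 90 COVID-19-negative (0) and 10 COVID-19-positive (1) samples, as in the text.
y_true = [0] * 90 + [1] * 10
always_negative = [0] * 100  # a trivial model that never predicts COVID-19

if __name__ == "__main__":
    print(accuracy_recall_precision(y_true, always_negative))
```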

Result Analysis

Ensembles of transfer learning methods [7, 16, 29, 32] consistently give good results in identifying lung diseases, including COVID-19. For example, some models built early in the COVID-19 pandemic on fewer than 100 samples reported reasonable metrics.

Maguolo and Nanni [96] obtained similar results even after completely masking the lung region. This raises questions about these deep learning methods and suggests that more explanation is needed to support the reported accuracy of the models. Deep learning models applied to other diseases, such as pneumonia and tuberculosis, have also shown promising results. For example, the authors of [134] incorporated DCNN methods into software that detects lung nodules with a sensitivity exceeding the average of a group of radiologists while reducing false positives. The metrics of some selected papers are shown in Table 7. Some authors have used various preprocessing methods to improve classification accuracy. For example, [147] preprocessed images with the fuzzy color technique and achieved 97.78% accuracy. Likewise, [52, 153] used Adaptive Histogram Equalization (AHE) and Contrast Limited Adaptive Histogram Equalization (CLAHE) for image enhancement and achieved 99% and 98.68% accuracy, respectively. Transfer learning methods also produced promising results in detecting TB [60, 87, 91, 94, 102, 112, 162]. Among these, [112] achieved 99% accuracy with ResNet and XGBoost, and [87] obtained 98.8% with a weighted ensemble of AlexNet and GoogleNet. Others have incorporated lung segmentation as a preprocessing step. For instance, [98, 114] scored 93.06% and 91.8% accuracy, respectively, in detecting pneumonia, and [104, 115] obtained 95.63% and 88.90%, respectively, in detecting pneumonia, COVID-19, and TB. This raises questions about the benefit of segmentation, since in these studies the models without lung segmentation report higher results than those with segmentation.
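To illustrate what the contrast limiting in CLAHE (contrast-limited adaptive histogram equalization) does, the sketch below equalizes a single image tile with a clipped histogram. It is a simplified version under stated assumptions. Full CLAHE splits the image into many tiles and blends the per-tile mappings with bilinear interpolation, which is omitted here. Spreading the clipped excess evenly (with the remainder going to the lowest bins) is only one of several variants in use. The sketch does not reproduce the preprocessing of [52, 153].

```python
def clip_limited_equalize(tile, clip_limit=4, levels=256):
    """Contrast-limited histogram equalization of one tile (simplified CLAHE).

    tile: 2-D list of integer gray levels in [0, levels). clip_limit caps each
    histogram bin; the clipped excess is spread over all bins before the
    cumulative distribution is used as the gray-level mapping.
    """
    hist = [0] * levels
    for row in tile:
        for v in row:
            hist[v] += 1
    # Clip tall bins and collect the excess counts.
    excess = sum(max(0, h - clip_limit) for h in hist)
    hist = [min(h, clip_limit) for h in hist]
    # Redistribute the excess evenly; the remainder goes to the first bins.
    share, remainder = divmod(excess, levels)
    hist = [h + share + (1 if i < remainder else 0) for i, h in enumerate(hist)]
    # Cumulative distribution -> lookup table onto [0, levels - 1].
    cdf, running = [], 0
    for h in hist:
        running += h
        cdf.append(running)
    total = cdf[-1]
    lut = [round((levels - 1) * c / total) for c in cdf]
    return [[lut[v] for v in row] for row in tile]


if __name__ == "__main__":
    tile = [[100, 101, 100, 101] for _ in range(4)]  # a low-contrast tile
    print(clip_limited_equalize(tile, clip_limit=16)[0])
    print(clip_limited_equalize(tile, clip_limit=4)[0])
```

In this low-contrast example, the unclipped mapping spreads the two adjacent gray levels to 128 and 255, while a clip limit of 4 limits the stretch to 191 and 255. This restraint is what keeps CLAHE from over-amplifying noise in nearly uniform regions.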

Discussion

Early models in medical research leveraged structured data, such as patient demographics and health records, including blood, urine, and other lab tests, to diagnose various disorders. Due to the complex nature of lung disorders, medical imaging plays a more significant role in diagnosing them than other clinical data. Even where practitioners are available and proficient in evaluating CXR images based on their experience, computer-aided methods should be explored as an alternative. Practitioners' conclusions are based on two factors: the results of computer-assisted procedures and the frequency of positive cases observed over their overall experience. The latter is based solely on expert knowledge that cannot be disputed. A few researchers built models on segmented CXRs and produced promising results, pointing out the importance of segmentation methods. For example, [71] to detect TB, [98, 114] to detect pneumonia, and [104, 115] to detect COVID-19 applied segmentation methods such as graph cut and U-Net. Lung segmentation is one of the preprocessing steps that can be included in the image classification pipeline. However, lung segmentation is time-consuming and needs a well-trained model that suits all kinds of images.

Deep learning algorithms may outperform expert radiologist judgment on a given metric in many instances. However, a black-box methodology cannot be relied on even if it reports 100% accuracy. Multiple combinations of image processing, feature extraction, and classification with ensembles may achieve better results.

For example, explainable AI (XAI) [136] techniques, such as Guided Grad-CAM, gradient backpropagation, and class activation maps (CAM), could be used to show which image features drive a prediction, so that these can be compared with expert knowledge. Because experts are limited in their ability to analyze every image, the region of interest could be selected by combining XAI with a transfer learning model pre-trained on a massive set of medical images, analogous to AlexNet for natural images. However, radiologist expertise remains vital to confirm the prediction performance and to further investigate the ROIs detected by the models. That goal may not be far off. The future is likely to see deep learning procedures combined with older approaches to diagnose illnesses. A challenge that lies ahead is for XAI methods to produce precise areas of interest that an expert group can validate. Looking at the diseases since the 2000s, there is a shift toward lung-related infections with more severe health repercussions. Nobody predicted COVID-19 and its spread, even though pneumonia has been prevalent since 460 BC [72].
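As a concrete illustration of how such heatmaps are formed, the sketch below computes a class activation map. The last convolutional feature maps are combined with class-specific weights; in CAM, these are the weights of the dense layer that follows global average pooling. Negative values are then clipped and the map is scaled to [0, 1]. The toy feature maps and weights are hypothetical, and upsampling the map to the input resolution is omitted. Grad-CAM follows the same pattern but derives each channel weight by spatially averaging the gradients of the class score with respect to that feature map.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W) using class-specific weights."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [
        [sum(wk * fmap[y][x] for wk, fmap in zip(class_weights, feature_maps))
         for x in range(w)]
        for y in range(h)
    ]
    # Keep only positive evidence for the class (the ReLU step used by Grad-CAM).
    cam = [[max(0.0, v) for v in row] for row in cam]
    # Scale to [0, 1] so the map can be overlaid on the image as a heatmap.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


if __name__ == "__main__":
    # Two hypothetical 2 x 2 feature maps and their weights for one class.
    maps = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
    print(class_activation_map(maps, [2.0, -1.0]))
```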

Conclusion and Future Research Perspective

Most CAD practitioners believed that CAD with hand-crafted features would be the primary technique for automated image analysis until 2009 [46]. Determining which features are appropriate for solving a particular problem is a time-consuming and labor-intensive process requiring significant human engagement and expertise. One may therefore wonder why computers could not learn to translate images into features themselves. Even though the first deep learning network was defined by [88], the success of deep learning methods began with [84], the winner of the 2012 ImageNet challenge. Despite the black-box nature of the findings, researchers began experimenting with deep learning methodologies [18, 31, 89]. CheXNet [117], a 121-layer DenseNet, made a significant impact on the medical community. Since then, deep learning techniques have been effectively employed in a wide range of applications, including skin cancer diagnosis [29, 36], arrhythmia detection [2, 53], fundus image segmentation [144], and lung segmentation [138]. Since the COVID-19 pandemic, the use of deep learning methods has grown exponentially. This growth has compelled the community to thoroughly investigate all image processing methodologies, from Professor Lodwick's research to contemporary image processing and analysis models with explainable AI (XAI).

The success of deep learning has already been proven by a large number of publications and by the deployment of models in practice. However, traditional image preprocessing methods should be reconsidered for preparing images before they are input into deep learning models. In the authors' view, upcoming research will be a hybrid technology that mixes traditional preprocessing with state-of-the-art deep learning models. Here, one should agree that deep learning alone is unable to explain why an image is classified as showing a disease. Expert knowledge is required to evaluate the heatmaps generated by XAI techniques. XAI methods provide local explanations, i.e., a part of the image can be studied further to get accurate results. Annotating infections in any medical image is a big challenge. Recently, one research group conducted an in-depth study [81] on auto-labeling CXR images through an XAI-based method and achieved promising results. Another future perspective is to generate localized annotations of infections and validate the results back and forth until a reliable result is obtained.

Data availability

The authors did not use any datasets, as no experiments were conducted. However, citations of relevant datasets are provided for conducting research on lung disorders through CXR images.

References

Abe H, MacMahon H, Engelmann R, et al. Computer-aided diagnosis in chest radiography: results of large-scale observer tests at the 1996–2001 rsna scientific assemblies. Radiographics. 2003;23:255–65. https://doi.org/10.1148/rg.231025129.

Acharya UR, Oh SL, Hagiwara Y, et al. A deep convolutional neural network model to classify heartbeats. Comput Biol Med. 2017;89:389–96. https://doi.org/10.1016/j.compbiomed.2017.08.022.

Adamidi ES, Mitsis K, Nikita KS. Artificial intelligence in clinical care amidst covid-19 pandemic: A systematic review. Comput Struct Biotechnol J. 2021;19:2833–50. https://doi.org/10.1016/j.csbj.2021.05.010.

Adedigba AP, Adeshina SA, Aina OE, et al. Optimal hyperparameter selection of deep learning models for covid-19 chest X-ray classification. Intell Based Med. 2021;5:100034. https://doi.org/10.1016/j.ibmed.2021.100034.

Agrawal S, Honnakasturi V, Nara M, et al. Utilizing deep learning models and transfer learning for covid-19 detection from X-ray images. SN Comput Sci. 2023;4:326. https://doi.org/10.1007/s42979-022-01655-3.

Agrawal T, Choudhary P. Focuscovid: automated covid-19 detection using deep learning with chest x-ray images. Evol Syst. 2022;13:519–33. https://doi.org/10.1007/s12530-021-09385-2.

Alshazly H, Linse C, Barth E, et al. Ensembles of deep learning models and transfer learning for ear recognition. Sensors (Switzerland). 2019;19:1–26. https://doi.org/10.3390/s19194139.

Altan A, Karasu S. Recognition of covid-19 disease from x-ray images by hybrid model consisting of 2d curvelet transform, chaotic salp swarm algorithm and deep learning technique. Chaos Solitons Fractals. 2020;140: 110071. https://doi.org/10.1016/j.chaos.2020.110071.

Alzubaidi M, Zubaydi HD, Bin-Salem AA, et al. Role of deep learning in early detection of covid-19: Scoping review. Comput Methods Prog Biomed Update. 2021;1: 100025. https://doi.org/10.1016/j.cmpbup.2021.100025.

Amith K, T Rahman, Muhammad Enamul Hoque C. Tuberculosis-tb-chest-x-ray-database. 2020. https://www.kaggle.com/tawsifurrahman/tuberculosis-tb-chest-xray-dataset

Antani S, Candemir S, Jaeger PFS, et al. Automated detection of lung diseases in chest x-rays a report to the board of scientific counselors. Technical Report to the LHNCBC Board of Scientific Counselors. 2015.

Apostolopoulos ID, Mpesiana TA. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020;43:635–40. https://doi.org/10.1007/S13246-020-00865-4.

Ashizawa K, Ishida T, MacMahon H, et al. Artificial neural networks in chest radiography: application to the differential diagnosis of interstitial lung disease. Acad Radiol. 1999;6(1):2–9. https://doi.org/10.1016/s1076-6332(99)80055-5.

Asif S, Zhao M, Tang F, et al. A deep learning-based framework for detecting covid-19 patients using chest x-rays. Multimed Syst. 2022;28:1495–513. https://doi.org/10.1007/s00530-022-00917-7.

Ayan E, Ünver HM. Diagnosis of pneumonia from chest x-ray images using deep learning. In: 2019 Scientific Meeting on Electrical-Electronics and Biomedical Engineering and Computer Science (EBBT) 2019. p. 1–5.

Ayaz M, Shaukat F, Raja G. Ensemble learning based automatic detection of tuberculosis in chest x-ray images using hybrid feature descriptors. Phys Eng Sci Med. 2021;44:183–94. https://doi.org/10.1007/s13246-020-00966-0.

Balaha HM, Balaha MH, Ali HA. Hybrid covid-19 segmentation and recognition framework (hmb-hcf) using deep learning and genetic algorithms. Artif Intell Med. 2021;119: 102156. https://doi.org/10.1016/j.artmed.2021.102156.

Bar Y, Diamant I, Wolf L, et al. Deep learning with non-medical training used for chest pathology identification. In: Proceedings Volume 9414, Medical Imaging 2015: Computer-Aided Diagnosis. 2015.

Branco P, Torgo L, Ribeiro R. A survey of predictive modelling under imbalanced distributions. 2015. arXiv:1505.01658

Çallı E, Sogancioglu E, van Ginneken B, et al. Deep learning for chest x-ray analysis: A survey. Med Image Anal. 2021;72: 102125. https://doi.org/10.1016/j.media.2021.102125.

Carreira MJ, Cabello D, Mosquera A, et al. Medical images segmentation using region and edges information. In: Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS. 1992. p. 1909–10.

Chauhan A, Chauhan D, Rout C. Role of gist and phog features in computer-aided diagnosis of tuberculosis without segmentation. PLoS ONE. 2014;9:e112980. https://doi.org/10.1371/journal.pone.0112980.

Chauhan T, Palivela H, Tiwari S. Optimization and fine-tuning of densenet model for classification of covid-19 cases in medical imaging. Int J Inf Manage Data Insights. 2021;1:100020. https://doi.org/10.1016/j.jjimei.2021.100020.

Chollet F. Xception: deep learning with depthwise separable convolutions. 2017.

Chow LS, Tang GS, Solihin MI, et al. Quantitative and qualitative analysis of 18 deep convolutional neural network (cnn) models with transfer learning to diagnose covid-19 on chest x-ray (cxr) images. SN Comput Sci. 2023;4:141. https://doi.org/10.1007/s42979-022-01545-8.

Chowdhury ME, Rahman T, Khandakar A, et al. Can AI help in screening viral and covid-19 pneumonia? IEEE Access. 2020;8:132665–76. https://doi.org/10.1109/ACCESS.2020.3010287.

Chung. Actualmed covid-19 chest x-ray data initiative. 2020a. https://github.com/agchung/Actualmed-COVID-chestxray-dataset.

Chung. Covid-19 chest X-ray data initiative. 2020b. https://github.com/agchung/Figure1-COVID-chestxray-dataset.

Codella NCF, Nguyen QB, Pankanti S, et al. Deep learning ensembles for melanoma recognition in dermoscopy images 1. IBM J Res Dev. 2017;61:5.

Cohen JP, Morrison P, Dao L. Covid-19 image data collection. 2020. https://github.com/ieee8023/covid-chestxray-dataset

Cruz-Roa A, Basavanhally A, González F, et al. Automatic detection of invasive ductal carcinoma in whole slide images with convolutional neural networks. 2014.

Dey S, Bhattacharya R, Malakar S, et al. Choquet fuzzy integral-based classifier ensemble technique for covid-19 detection. Comput Biol Med. 2021;135: 104585. https://doi.org/10.1016/j.compbiomed.2021.104585.

Ekata, Tyagi PK, Gupta NK, et al. Diagnosis of pulmonary tuberculosis using fuzzy inference system. In: 2016 Second International Innovative Applications of Computational Intelligence on Power, Energy and Controls with their Impact on Humanity (CIPECH). 2016. p. 3–7.

El-Shafai W, Abd El-Samie F. Extensive covid-19 X-ray and CT chest images dataset. 2020. https://data.mendeley.com/datasets/8h65ywd2jr/3. Accessed 28 Feb 2022.

Er O, Yumusak N, Temurtas F. Chest diseases diagnosis using artificial neural networks. Expert Syst Appl. 2010;37:7648. https://doi.org/10.1016/j.eswa.2010.04.078.

Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–8. https://doi.org/10.1038/nature21056.

Falco ID, Pietro GD, Sannino G. Classification of covid-19 chest x-ray images by means of an interpretable evolutionary rule-based approach. Neural Comput Appl. 2022;35:16061. https://doi.org/10.1007/s00521-021-06806-w.

Fan Y, Liu J, Yao R, et al. Covid-19 detection from x-ray images using multi-kernel-size spatial-channel attention network. Pattern Recogn. 2021;119:108055. https://doi.org/10.1016/J.PATCOG.2021.108055.

Farooq M, Hafeez A. Covid-resnet: A deep learning framework for screening of covid19 from radiographs. 2020. arXiv:2003.14395

Fisher Y, VladlenKoltun PR, Zoph B, Brain QVLG. Searching for activation functions. In: 6th International Conference on Learning Representations, ICLR 2018 - Workshop Track Proceedings. 2018.

Fukushima K. Cognitron: A self-organizing multilayered neural network. Biol Cybern. 1975;20:121–36.

Fukushima K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol Cybern. 1980;36:202.

Gaál G, Maga B, Lukács A. Attention u-net based adversarial architectures for chest x-ray lung segmentation. 2020. arXiv:2003.10304

Geetha R, Balasubramanian M, Devi KR. Covidetection: deep convolutional neural networks-based automatic detection of covid-19 with chest x-ray images. Res Biomed Eng. 2022;38:955–64. https://doi.org/10.1007/s42600-022-00230-2.

Gevenois A, Bankier A, Sibille Y, et al. Imaging of pneumonia: trends and algorithms. Eur Respir J. 2001;18:196–208. https://doi.org/10.1183/09031936.01.00213501.

Ginneken BV. Fifty years of computer analysis in chest imaging: rule-based, machine learning, deep learning. Radiol Phys Technol. 2017;10:23–32. https://doi.org/10.1007/s12194-017-0394-5.

Ginneken BV, Romeny BMTH. Automatic segmentation of lung fields in chest radiographs. Med Phys. 2000;27:2445–55. https://doi.org/10.1118/1.1312192.

Goel T, Murugan R, Mirjalili S, et al. Multi-covid-net: Multi-objective optimized network for covid-19 diagnosis from chest x-ray images. Appl Soft Comput. 2022;115: 108250. https://doi.org/10.1016/j.asoc.2021.108250.

Grafakou O, Moustaki M, Tsolia M, et al. Can chest x-ray predict pneumonia severity? Pediatr Pulmonol. 2004;38:465–9. https://doi.org/10.1002/ppul.20112.

Guan Q, Huang Y, Zhong Z, et al. Thorax disease classification with attention guided convolutional neural network. Pattern Recogn Lett. 2020;131:38–45. https://doi.org/10.1016/j.patrec.2019.11.040.

Gupta V, Jain N, Sachdeva J, et al. Improved covid-19 detection with chest x-ray images using deep learning. Multimed Tools Appl. 2022;81:37657–80. https://doi.org/10.1007/s11042-022-13509-4.

Haghanifar A, Majdabadi MM, Choi Y, et al. Covid-cxnet: Detecting covid-19 in frontal chest x-ray images using deep learning. Multimed Tools Appl. 2020;81:30615. https://doi.org/10.1007/s11042-022-12156-z.

Hannun AY, Rajpurkar P, Haghpanahi M, et al. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nature Med. 2019;25:65–9. https://doi.org/10.1038/s41591-018-0268-3.

Hariharan S, Ray A, Ghosh M. An algorithm for the enhancement of chest x-ray images of tuberculosis patients. In: Proceedings of IEEE International Conference on Industrial Technology 2000 (IEEE Cat. No.00TH8482), 2000. p. 107–112

Hassantabar S, Ahmadi M, Sharifi A. Diagnosis and detection of infected tissue of covid-19 patients based on lung x-ray image using convolutional neural network approaches. Chaos Solitons Fractals. 2020;140: 110170. https://doi.org/10.1016/j.chaos.2020.110170.

He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. 2016.

He K, Gkioxari G, Dollár P, et al. Mask r-cnn. In: 2017 IEEE International Conference on Computer Vision (ICCV). 2017. p. 2980–2988

Heckerling PS, Gerber BS, Tape TG, et al. Prediction of community-acquired pneumonia using artificial neural networks. Med Decis Making. 2003;23:112–21. https://doi.org/10.1177/0272989X03251247.

Hemdan EED, Shouman MA, Karar ME. Covidx-net: A framework of deep learning classifiers to diagnose covid-19 in x-ray images. 2020. arXiv:2003.11055

Heo SJ, Kim Y, Yun S, et al. Deep learning algorithms with demographic information help to detect tuberculosis in chest radiographs in annual workers’ health examination data. Int J Environ Res Public Health. 2019;16:250.

Howard J, Ruder S. Universal language model fine-tuning for text classification. 2018. arXiv preprint arXiv:1801.06146

Hu T, Khishe M, Mohammadi M, et al. Real-time covid-19 diagnosis from x-ray images using deep cnn and extreme learning machines stabilized by chimp optimization algorithm. Biomed Signal Process Control. 2021;68: 102764. https://doi.org/10.1016/j.bspc.2021.102764.

Huang G, Liu Z, Maaten LVD, et al. Densely connected convolutional networks. 2017.

Huang GB, Wang DH, Lan Y. Extreme learning machines: A survey. Int J Mach Learn Cybern. 2011;2:107–22. https://doi.org/10.1007/s13042-011-0019-y.

Hussain E, Hasan M, Rahman MA, et al. Corodet: A deep learning based classification for covid-19 detection using chest x-ray images. Chaos Solitons Fractals. 2021;142: 110495. https://doi.org/10.1016/j.chaos.2020.110495.

Hwang S, Kim HE, Jeong J, et al. A novel approach for tuberculosis screening based on deep Convolutional Neural Network. 2016.

Iandola FN, Han S, Moskewicz MW, et al. Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <0.5mb model size. Appl Soft Comput. 2016.

Ibrahim AU, Ozsoz M, Serte S, et al. Pneumonia classification using deep learning from chest x-ray images during covid-19. Cogn Comput. 2021. https://doi.org/10.1007/s12559-020-09787-5.

Irvin J, Rajpurkar P, Ko M, et al. Chexpert: A large chest radiograph dataset with uncertainty labels and expert comparison. In: Proceedings of the AAAI conference on artificial intelligence. 2019. p. 590–597

Jaccard P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bulletin de la Société Vaudoise des Sciences Naturelles. 1901;37:547–79. https://doi.org/10.5169/seals-266450.

Jaeger S, Karargyris A, Candemir S, et al. Automatic tuberculosis screening using chest radiographs. IEEE Trans Med Imaging. 2013;33:233. https://doi.org/10.1109/TMI.2013.2284099.

James Cherry GJDSRDF. Textbook of Pediatric Infectious Diseases, vol. 1. 5th ed. W.B: Saunders; 2004.

Johns Creek (GA):Ebix Inc. A Lung disease. 2022. https://medlineplus.gov/ency/article/000066.htm

Joshi D, Singh TP. A survey of fracture detection techniques in bone x-ray images. Artif Intell Rev. 2020;53:4475–517. https://doi.org/10.1007/s10462-019-09799-0.

Karargyris A, Siegelman J, Tzortzis D, et al. Combination of texture and shape features to detect pulmonary abnormalities in digital chest x-rays. Int J CARS. 2016;11:99–106. https://doi.org/10.1007/s11548-015-1242-x.

Kedia P, Anjum, Katarya R. Covnet-19: A deep learning model for the detection and analysis of covid-19 patients. Appl Soft Comput. 2021;104: 107184. https://doi.org/10.1016/j.asoc.2021.107184.

Kermany DS, Goldbaum M, Cai W, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172:1122-1131.e9. https://doi.org/10.1016/j.cell.2018.02.010.

Khan AI, Shah JL, Bhat MM. Coronet: A deep neural network for detection and diagnosis of covid-19 from chest x-ray images. Comput Methods Progr Biomed. 2020;196: 105581. https://doi.org/10.1016/j.cmpb.2020.105581.

Khan SH, Sohail A, Khan A, et al. Covid-19 detection in chest x-ray images using deep boosted hybrid learning. Comput Biol Med. 2021;137: 104816. https://doi.org/10.1016/j.compbiomed.2021.104816.

Khobragade RN, Kelkar RU, Sunilkumar M, et al. Health system resilience: Ensuring tb services during covid-19 pandemic in kerala, india. Indian J Tuberc. 2021;69:427. https://doi.org/10.1016/j.ijtb.2021.10.004.

Kim D, Chung J, Choi J, et al. Accurate auto-labeling of chest x-ray images based on quantitative similarity to an explainable ai model. Nat Commun. 2022;13(1):1867. https://doi.org/10.1038/s41467-022-29437-8.

Kingma DP, Ba JL. Adam: A method for stochastic optimization. 2015.

Kora P, Ooi CP, Faust O, et al. Transfer learning techniques for medical image analysis: A review. Biocybern Biomed Eng. 2022;42:79–107.

Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Pereira F, Burges CJC, Bottou L, et al (eds) Advances in Neural Information Processing Systems, vol 25. Curran Associates, Inc., 2012. https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

Kumar M, Shakya D, Kurup V, et al. Covid-19 prediction through x-ray images using transfer learning-based hybrid deep learning approach. Mater Today Proc. 2021;51:2520.

Kumar S, Mallik A. Covid-19 detection from chest x-rays using trained output based transfer learning approach. Neural Process Lett. 2022;55:2405. https://doi.org/10.1007/s11063-022-11060-9.

Lakhani P, Sundaram B. Deep learning at chest radiography: Automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology. 2017;284:574–82. https://doi.org/10.1148/radiol.2017162326.

LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–324. https://doi.org/10.1109/5.726791.

Li Q, Cai W, Wang X, et al. Medical image classification with convolutional neural network. In: 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), 2014. p. 844–848.

Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. https://doi.org/10.1016/j.media.2017.07.005.

Liu C, Cao Y, Alcantara M, et al. Tx-cnn: Detecting tuberculosis in chest x-ray images using convolutional neural network. In: 2017 IEEE International Conference on Image Processing (ICIP). 2017. p. 2314–2318.

Lodwick GS. Computer-aided diagnosis in radiology. Invest Radiol. 1966;1(1):72–80.

Lodwick GS, Keats TE, Dorst JP. The coding of roentgen images for computer analysis as applied to lung cancer. Radiology. 1963;81(2):185–200.

Lopes UK, Valiati JF. Pre-trained convolutional neural networks as feature extractors for tuberculosis detection. Comput Biol Med. 2017;89:135–43. https://doi.org/10.1016/j.compbiomed.2017.08.001.

Luz E, Silva P, Silva R, et al. Towards an effective and efficient deep learning model for covid-19 patterns detection in x-ray images. Res Biomed Eng. 2021. https://doi.org/10.1007/s42600-021-00151-6.

Maguolo G, Nanni L. A critic evaluation of methods for covid-19 automatic detection from x-ray images. Inf Fusion. 2021;76:1–7. https://doi.org/10.1016/j.inffus.2021.04.008.

Mahmud T, Rahman MA, Fattah SA. Covxnet: A multi-dilation convolutional neural network for automatic covid-19 and other pneumonia detection from chest x-ray images with transferable multi-receptive feature optimization. Comput Biol Med. 2020;122: 103869. https://doi.org/10.1016/j.compbiomed.2020.103869.

Manickam A, Jiang J, Zhou Y, et al. Automated pneumonia detection on chest x-ray images: A deep learning approach with different optimizers and transfer learning architectures. Meas J Int Meas Confed. 2021;184: 109953. https://doi.org/10.1016/j.measurement.2021.109953.

Masud M. A light-weight convolutional neural network architecture for classification of covid-19 chest x-ray images. Multimed Syst. 2022;28:1165.

Melendez J, Sánchez CI, Philipsen RHHM, et al. An automated tuberculosis screening strategy combining x-ray-based computer-aided detection and clinical information. Nature Publishing Group. 2016.

Minaee S, Kafieh R, Sonka M, et al. Deep-covid: predicting covid-19 from chest x-ray images using deep transfer learning. Med Image Anal. 2020;65: 101794. https://doi.org/10.1016/j.media.2020.101794.

Nguyen QH, Nguyen BP, Dao SD, et al. Deep learning models for tuberculosis detection from chest x-ray images. In: 2019 26th International Conference on Telecommunications (ICT). 2019. p. 381–385.

Noor NM, Rijal OM, Yunus A, et al. A discrimination method for the detection of pneumonia using chest radiograph. Comput Med Imaging Graph. 2010;34:160–6. https://doi.org/10.1016/j.compmedimag.2009.08.005.

Oh Y, Park S, Ye JC. Deep learning covid-19 features on cxr using limited training data sets. IEEE Trans Med Imaging. 2020;39:2688–700. https://doi.org/10.1109/TMI.2020.2993291.

Oliveira LLG, Silva SA, Ribeiro LHV, et al. Computer-aided diagnosis in chest radiography for detection of childhood pneumonia. Int J Med Inf. 2008;77:555–64. https://doi.org/10.1016/j.ijmedinf.2007.10.010.

Ozturk T, Talo M, Yildirim EA, et al. Automated detection of covid-19 cases using deep neural networks with x-ray images. Comput Biol Med. 2020;121: 103792. https://doi.org/10.1016/j.compbiomed.2020.103792.

Panwar H, Gupta PK, Siddiqui MK, et al. A deep learning and grad-cam based color visualization approach for fast detection of covid-19 cases using chest x-ray and ct-scan images. Chaos Solitons Fractals. 2020;140: 110190. https://doi.org/10.1016/j.chaos.2020.110190.

Parveen NRS, Sathik MM. Detection of pneumonia in chest x-ray images. J X-Ray Sci Technol. 2011;19:423–8. https://doi.org/10.3233/XST-2011-0304.

Patrascu V. Color image enhancement using the support fuzzification in the framework of the logarithmic model. 2005.

Pradhan AK, Mishra D, Das K, et al. A covid-19 x-ray image classification model based on an enhanced convolutional neural network and hill climbing algorithms. Multimed Tools Appl. 2022;82:14219. https://doi.org/10.1007/s11042-022-13826-8.

Qi A, Zhao D, Yu F, et al. Directional mutation and crossover boosted ant colony optimization with application to covid-19 x-ray image segmentation. Comput Biol Med. 2022;148: 105810. https://doi.org/10.1016/j.compbiomed.2022.105810.

Rahman M, Cao Y, Sun X, et al. Deep pre-trained networks as a feature extractor with xgboost to detect tuberculosis from chest x-ray. Comput Electr Eng. 2021;93: 107252. https://doi.org/10.1016/j.compeleceng.2021.107252.

Rajaraman S, Antani SK. Modality-specific deep learning model ensembles toward improving TB detection in chest radiographs. IEEE Access. 2020;8:27318–26. https://doi.org/10.1109/ACCESS.2020.2971257.

Rajaraman S, Candemir S, Kim I, et al. Visualization and interpretation of convolutional neural network predictions in detecting pneumonia in pediatric chest radiographs. Appl Sci (Switzerland). 2018;8:1715. https://doi.org/10.3390/app8101715.

Rajaraman S, Siegelman J, Alderson PO, et al. Iteratively pruned deep learning ensembles for covid-19 detection in chest x-rays. IEEE Access. 2020;8:115041–50. https://doi.org/10.1109/ACCESS.2020.3003810.

Rajasenbagam T, Jeyanthi S, Pandian JA. Detection of pneumonia infection in lungs from chest x-ray images using deep convolutional neural network and content-based image retrieval techniques. J Ambient Intell Hum Comput. 2021. https://doi.org/10.1007/s12652-021-03075-2.

Rajpurkar P, Irvin J, Zhu K, et al. Chexnet: Radiologist-level pneumonia detection on chest x-rays with deep learning. 2017. arXiv preprint arXiv:1711.05225.

Redie DK, Sirko AE, Demissie TM, et al. Diagnosis of covid-19 using chest x-ray images based on modified darkcovidnet model. Evol Intell. 2022;16:729. https://doi.org/10.1007/s12065-021-00679-7.

Redmon J, Farhadi A. Yolo9000: Better, faster, stronger. 2017. https://doi.org/10.1109/CVPR.2017.690.

Rieumont M, Wittenberg J, Weissleder R. Primer of diagnostic imaging. 5th ed. Mosby, Inc., an affiliate of Elsevier Inc.; 1997.

Rohmah RN, Susanto A, Soesanti I. Lung tuberculosis identification based on statistical feature of thoracic x-ray. In: 2013 International Conference on QiR. 2013. p. 19–26.

Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2015. p. 234–41.

RSNA. Radiological society of north america, covid-19 radiography database. https://www.kaggle.com/tawsifurrahman/covid19-radiography-database. 2019a.

RSNA. Radiological society of north america pneumonia detection challenge. https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data. 2019b.

Russ JC, Russ JC. Introduction to image processing and analysis. CRC Press; 2017.

Saha P, Neogy S. Concat_cnn: A model to detect covid-19 from chest x-ray images with deep learning. SN Comput Sci. 2022;3(4):305. https://doi.org/10.1007/s42979-022-01182-1.

Salau J, Krieter J. Instance segmentation with mask r-cnn applied to loose-housed dairy cows in a multi-camera setting. Animals. 2020;10:2402. https://doi.org/10.3390/ani10122402.

Salehi AW, Baglat P, Gupta G, et al. Review on machine and deep learning models for the detection and prediction of coronavirus. Mater Today Proc. 2020;33:3896–901. https://doi.org/10.1016/j.matpr.2020.06.245.

Sethy PK, Behera SK, Ratha PK, et al. Detection of coronavirus disease (covid-19) based on deep features and support vector machine. Int J Math Eng Manag Sci. 2020;5:643–51.

Shaban WM, Rabie AH, Saleh AI, et al. Accurate detection of covid-19 patients based on distance biased naïve bayes (dbnb) classification strategy. Pattern Recogn. 2021;119: 108110. https://doi.org/10.1016/j.patcog.2021.108110.

Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Ann Rev Biomed Eng. 2017;19:221–48. https://doi.org/10.1146/annurev-bioeng-071516.

Shiraishi J, Katsuragawa S, Ikezoe J, et al. Development of a digital image database for chest radiographs with and without a lung nodule: Receiver operating characteristic analysis of radiologists’ detection of pulmonary nodules. Am J Roentgenol. 2000;174:71–4.

Sim Y, Chung MJ, Kotter E, et al. Deep convolutional neural network-based software improves radiologist detection of malignant lung nodules on chest radiographs. Radiology. 2020;294:199–209. https://doi.org/10.1148/radiol.2019182465.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings. 2015. http://www.robots.ox.ac.uk/

Singh A, Sengupta S, Lakshminarayanan V. Explainable deep learning models in medical image analysis. J Imaging. 2020;6:1–19. https://doi.org/10.3390/JIMAGING6060052.

Singhal T. Review on covid19 disease so far. Indian J Pediatr. 2020;87:281–6.

Souza JC, Diniz JOB, Ferreira JL, et al. An automatic method for lung segmentation and reconstruction in chest x-ray using deep neural networks. Comput Methods Progr Biomed. 2019;177:285–96. https://doi.org/10.1016/j.cmpb.2019.06.005.

Jaeger S, Candemir S, Antani S, et al. Two public chest x-ray datasets for computer-aided screening of pulmonary diseases. Quant Imaging Med Surg. 2014;4:475–7.

Stephen O, Sain M, Maduh UJ, et al. An efficient deep learning approach to pneumonia classification in healthcare. J Healthc Eng. 2019. https://doi.org/10.1155/2019/4180949.

Szegedy C, Liu W, Sermanet P, et al. Going deeper with convolutions. 2014.

Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision. 2016.

Tahir AM, Chowdhury ME, Khandakar A, et al. Covid-19 infection localization and severity grading from chest x-ray images. Comput Biol Med. 2021;139: 105002. https://doi.org/10.1016/j.compbiomed.2021.105002.

Tan JH, Fujita H, Sivaprasad S, et al. Automated segmentation of exudates, haemorrhages, microaneurysms using single convolutional neural network. Inf Sci. 2017;420:66–76. https://doi.org/10.1016/j.ins.2017.08.050.

Tan M, Le QV. Efficientnet: Rethinking model scaling for convolutional neural networks. 2019. arXiv preprint arXiv:1905.11946.

Ksiazek TG, Erdman D, Goldsmith CS, et al. A novel coronavirus associated with severe acute respiratory syndrome. N Engl J Med. 2003;348:1953–66.

Toğaçar M, Ergen B, Cömert Z, et al. Covid-19 detection using deep learning models to exploit social mimic optimization and structured chest x-ray images using fuzzy color and stacking approaches. Comput Biol Med. 2020;121: 103805. https://doi.org/10.1016/j.compbiomed.2020.103805.

Toriwaki JI, Suenaga Y, Negoro T, et al. Pattern recognition of chest x-ray images. Comput Graph Image Process. 1973;2(3–4):252–71.

Tuncer T, Ozyurt F, Dogan S, et al. A novel covid-19 and pneumonia classification method based on f-transform. Chemometr Intell Lab Syst. 2021;210: 104256. https://doi.org/10.1016/j.chemolab.2021.104256.

Ucar F, Korkmaz D. Covidiagnosis-net: Deep bayes-squeezenet based diagnosis of the coronavirus disease 2019 (covid-19) from x-ray images. Med Hypotheses. 2020;140: 109761. https://doi.org/10.1016/j.mehy.2020.109761.

Van Ginneken B, Ter Haar Romeny B, Viergever M. Computer-aided diagnosis in chest radiography: a survey. IEEE Trans Med Imaging. 2001;20(12):1228–41. https://doi.org/10.1109/42.974918.

Verma D, Bose C, Tufchi N, et al. An efficient framework for identification of tuberculosis and pneumonia in chest x-ray images using neural network. Procedia Comput Sci. 2020;171:217–24. https://doi.org/10.1016/j.procs.2020.04.023.

Vieira P, Sousa O, Magalhães D, et al. Detecting pulmonary diseases using deep features in x-ray images. Pattern Recogn. 2021;119: 108081. https://doi.org/10.1016/j.patcog.2021.108081.

Vu HQ, Beliakov G, Li G. A choquet integral toolbox and its application in customer preference analysis. Data Mining Applications with R. 2014. p. 247–272.

Waheed A, Goyal M, Gupta D, et al. Covidgan: Data augmentation using auxiliary classifier gan for improved covid-19 detection. IEEE Access. 2020;8:91916–23. https://doi.org/10.1109/ACCESS.2020.2994762.

Wang C, Elazab A, Wu J, et al. Lung nodule classification using deep feature fusion in chest radiography. Comput Med Imaging Graph. 2017;57:10–8. https://doi.org/10.1016/j.compmedimag.2016.11.004.

Wang H, Gu H, Qin P, et al. Chexlocnet: Automatic localization of pneumothorax in chest radiographs using deep convolutional neural networks. PLoS ONE. 2020;15: e0242013. https://doi.org/10.1371/journal.pone.0242013.

Wang L, Lin ZQ, Wong A. Covid-net: a tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Sci Rep. 2020;10:19549. https://doi.org/10.1038/s41598-020-76550-z.

Wang L, Lin ZQ, Wong A. Covidx dataset. 2020. https://github.com/lindawangg/COVID-Net

Wang X, Peng Y, Lu L, et al. ChestX-ray: hospital-scale chest X-ray database and benchmarks on weakly supervised classification and localization of common thorax diseases. Springer International Publishing; 2019. p. 369–392.