Abstract

Adopting digital twins (DTs) in smart factories, which simulate actual manufacturing systems and conditions and update them in real time, has increased output and reduced costs and energy use. Fast-changing consumer demands have sharply increased the frequency of factory reconfigurations and shortened product life cycles. Conventional simulation and modeling techniques cannot handle such scenarios, so we propose a general framework for automating the creation of data-driven simulation models as the foundation for smart factory DTs. The framework stands out for its data-driven methodology, which takes advantage of recent advances in machine learning and process mining together with continuous model validation and updating. Its objective is to clearly define, and reduce, the specialist knowledge required to obtain appropriate simulation models. A case study is used to demonstrate the framework.


Introduction

To remain competitive internationally, businesses today must adapt to a rapidly changing environment by changing the way they operate. In response to the rising demand for customization, a growing number of businesses are organizing themselves as project-based organizations. Such organizations can deliver high-quality, highly customized products, but at the expense of added complexity. Project managers in these companies must handle the difficulties of project planning, including scheduling, resource allocation, and capacity planning [1, 2], and the need to satisfy individual customer requirements makes these tasks even more difficult. In practice, project plans frequently suffer delays and deviations caused by human involvement. Consequently, project managers' decisions must account for a variety of factors across different departments, such as resource availability, disruptions, repairs, maintenance, and customer demand [3]. To anticipate disruptions and take preventive action, project managers must track and review the progress of their projects and use other departments' data in real time to meet deadlines and stay within budget.

Recently developed devices for communicating and sharing information, together with innovative technologies such as cloud computing, big data, and the Internet of Things (IoT), have sparked the fourth industrial revolution [4].

The advent of Industry 4.0 has brought the concept of intelligent interactions between manufacturing systems and people. A fundamental requirement of Industry 4.0 is the transformation of conventional resources, through intelligence and digitalization, into smart resources that can sense and act in an intelligent environment. Fusing Industry 4.0 enablers such as artificial intelligence, the IoT, big data, and cloud computing with production systems creates a cyber-physical system that integrates the virtual and physical worlds [5]. As a result, businesses benefit from the greater flexibility of smart manufacturing systems, together with improved quality, shorter lead times, and higher productivity under mass customization. Project-based organizations should implement Industry 4.0 and smart manufacturing systems that give project managers real-time access to data on project status and customers, allowing tighter monitoring and control of projects and better decisions. In this paper, we develop a framework for data-driven DTs for smart manufacturing, which offers numerous benefits for addressing current issues in the manufacturing of smart products.

Literature Review

Several researchers have proposed frameworks for smart manufacturing in various manufacturing systems, fusing manufacturing and digital systems with the aim of creating a cyber-physical system [6]. Researchers have also presented a framework for a knowledge-based diagnosis system in smart manufacturing, and a conceptual framework for implementing a smart manufacturing system [7]. A DT-based framework for smart production management in an assembly shop has been proposed [8], as has a structure for business transformation toward smart service systems [9]. However, although architectures for smart manufacturing systems have been proposed, a framework specifically for project-based organizations is still required. Such a framework must support decisions on issues such as project prioritization based on multiple criteria, scheduling, planning, monitoring and control, capacity planning, allocating and transferring resources, estimating costs and risks, purchasing, shipping, and handling customer orders and suggestions, so that deadlines are met and budgets respected [10,11,12].

The creation of DTs can be viewed as a logical progression from conventional simulation modeling, driven by improved connectivity, greater data availability, and changing end-user needs [11]. Like simulation modeling, a DT helps in understanding, monitoring, and experimenting with complex physical systems. In addition, DTs use the information gleaned from simulation to provide feedback to the physical system and control part of it to achieve a set of end-user-specified goals [12]. This study considers data-driven DT modeling for smart manufacturing [1].

Simulation modeling is regarded as an excellent tool for evaluating the design, operation, and control of complex systems. Its benefits include the capacity to simulate causal relationships between events, the ability to validate models against collected data, and support for planning, control, and prediction. However, these models frequently base their assumptions on idealized notions of the systems they represent [13]. Complex systems are typically modeled with discrete-event simulation models that experts create manually, choosing appropriate abstractions to produce a bespoke simulation model of the relevant system [14,15,16]. This technique is inappropriate for reconfigurable manufacturing systems, whose software and physical architecture change constantly to keep up with shifting market demands [17]. Custom models therefore become out of date soon after they are created, and new models must be built or existing ones updated manually [18]. In a rapidly changing sector such as manufacturing, this situation is clearly not ideal.

Proposed System

Data-Driven Simulation Modeling

Data-driven simulation models are simulation models that are created and parametrized using data. Compared with intuition-driven or representational approaches, data-driven strategies offer the following benefits:

- Accuracy: compared with conventional methods, data-driven models are frequently more accurate representations of the systems they model. This is because data capture the behaviors and characteristics of the systems, including phenomena that could not have been foreseen.

- The capacity to benefit from advances in artificial intelligence (AI) and machine learning (ML): using data to better understand how systems behave and to make informed decisions creates opportunities to apply advances in AI and ML.

Because of these benefits, and several instances of successful adoption of data-driven strategies, the main focus has shifted from conventional simulation modeling to more data-driven techniques [19]. This shift is driven by improvements in data generation and storage, by advances in algorithms, and by the ever-evolving requirements of the manufacturing market.

Data collection: In this task, the data continuously produced by the identified entities, that is, the manufacturing systems used in the factory, are stored in a database. The main sources of information [20] include traces and logs, diagnostic data, market and user data and needs, and end-user inputs.

Data validation: Data-related issues mainly revolve around uncertainty, multiple modalities (structured data, text, audio, and images), large volumes, and missing values. Data preprocessing is a crucial task that must be completed to guarantee the validity of the data; preprocessing activities include, for example, data integration and the imputation of missing values.
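The imputation step can be illustrated with a minimal sketch, assuming a uniformly sampled sensor series in which missing readings are marked as `None`. The helper `impute_linear` is hypothetical and not part of the proposed framework; it interpolates linearly between the nearest valid readings and copies the nearest value at either end of the series.

```python
from typing import List, Optional


def impute_linear(values: List[Optional[float]]) -> List[float]:
    """Fill None gaps by linear interpolation; gaps at either end copy the nearest reading.

    Hypothetical helper for illustration; assumes uniformly spaced samples.
    """
    known = {i: float(v) for i, v in enumerate(values) if v is not None}
    if not known:
        raise ValueError("series contains no valid readings")
    filled: List[float] = []
    for i in range(len(values)):
        if i in known:
            filled.append(known[i])
            continue
        # Nearest valid readings to the left and right of the gap.
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled.append(known[right])  # leading gap
        elif right is None:
            filled.append(known[left])  # trailing gap
        else:
            frac = (i - left) / (right - left)
            filled.append(known[left] + frac * (known[right] - known[left]))
    return filled


print(impute_linear([None, 1.0, None, None, 4.0, None]))  # [1.0, 1.0, 2.0, 3.0, 4.0, 4.0]
```

Linear interpolation is only one simple choice; for multimodal or strongly autocorrelated data, model-based imputation would usually be preferred.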

Knowledge extraction: This task extracts knowledge from the validated data obtained in the previous step. It consists of the following sub-tasks.

Event identification: An essential component of data-driven simulation modeling is identifying the events occurring in the smart factory that are pertinent to the simulation's goals. Discrete-event simulation models are driven primarily by events, so the success of simulation modeling depends on the model's capacity to capture them. Prior research on smart manufacturing has already addressed measuring devices, while reliability assurance in small factories remains a challenging task. A crucial task is the automatic detection of specific events that indicate the presence of faults, since this affects how accurate and responsive the factory's model is.
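One simple way to derive discrete fault events from condition-monitoring data is a threshold detector with hysteresis, sketched below. It is a stand-in chosen for illustration rather than the detection technique of the framework; the function name `detect_fault_events`, the thresholds, and the vibration values are all hypothetical.

```python
from typing import List, Tuple


def detect_fault_events(
    samples: List[Tuple[float, float]], on: float, off: float
) -> List[Tuple[float, str]]:
    """Turn (timestamp, value) samples into (timestamp, event) pairs.

    A 'fault_start' fires when the value rises above `on`; a 'fault_end' fires
    when it drops below `off`. Using off < on avoids rapid toggling (chattering).
    """
    if off >= on:
        raise ValueError("off threshold must be below on threshold")
    events: List[Tuple[float, str]] = []
    in_fault = False
    for t, value in samples:
        if not in_fault and value > on:
            in_fault = True
            events.append((t, "fault_start"))
        elif in_fault and value < off:
            in_fault = False
            events.append((t, "fault_end"))
    return events


# Hypothetical vibration amplitudes sampled once per second.
signal = [(0, 0.2), (1, 0.9), (2, 0.75), (3, 0.85), (4, 0.3), (5, 0.95)]
print(detect_fault_events(signal, on=0.8, off=0.5))
# [(1, 'fault_start'), (4, 'fault_end'), (5, 'fault_start')]
```

The value 0.75 at t = 2 does not end the fault because it is still above the lower threshold, which is the point of the hysteresis band.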

Process discovery: The knowledge extraction component also benefits from learning about the relevant processes that take place in the smart factory, because processes convey significant information about how the factory operates. Process mining algorithms take as input the event logs gathered during the data collection phase and automatically identify the primary processes from the data.
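Many process discovery algorithms start from the directly-follows relation of an event log. The sketch below assumes that each event is a hypothetical `(case_id, activity, timestamp)` tuple; it groups events into cases, orders each case by time, and counts how often one activity immediately follows another. The activity names are invented for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, str, float]  # (case_id, activity, timestamp): an assumed log format


def traces_from_log(events: List[Event]) -> Dict[str, List[str]]:
    """Group events by case and order each case's activities by timestamp."""
    by_case: Dict[str, List[Tuple[float, str]]] = defaultdict(list)
    for case_id, activity, ts in events:
        by_case[case_id].append((ts, activity))
    return {case: [a for _, a in sorted(evts)] for case, evts in by_case.items()}


def directly_follows(traces: Dict[str, List[str]]) -> Counter:
    """Count how often activity a is immediately followed by activity b."""
    dfg: Counter = Counter()
    for trace in traces.values():
        for a, b in zip(trace, trace[1:]):
            dfg[(a, b)] += 1
    return dfg


event_log = [
    ("part1", "pick", 0.0), ("part1", "transport", 1.0), ("part1", "assemble", 2.5),
    ("part2", "pick", 3.0), ("part2", "assemble", 5.0), ("part2", "transport", 4.0),
]
print(directly_follows(traces_from_log(event_log)))
```

Note that the events of `part2` are listed out of order; sorting by timestamp restores the sequence pick, transport, assemble before the relation is counted.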

Model development: The next essential part of the proposed framework is the creation of a comprehensive data-driven model. The inputs to this step are the events and processes identified in the previous step. Initially, this information is used, with some degree of human involvement, to create the simulation model; this initial model is then updated automatically to account for changes in the smart factory [21]. It is crucial to identify the connections between the model and the data streams coming from the smart factory. Additionally, algorithms for model extraction and model updating will need to be developed to support semi-automatic and automatic simulation modeling.
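To make the idea of a data-parameterized simulation model concrete, the sketch below is a small discrete-event simulation of a strictly sequential line in which production halts whenever any asset is down. It assumes exponentially distributed failure and repair times that occur independently of whether the line is producing; the asset names and mean times are hypothetical. Under these assumptions the long-run fraction of productive time should approach the product of the individual availabilities MTTF/(MTTF + MTTR), which gives a quick sanity check.

```python
import heapq
import random
from typing import Dict, List, Tuple


def simulate_line_availability(
    assets: Dict[str, Tuple[float, float]], horizon: float, seed: int = 0
) -> float:
    """Fraction of [0, horizon] during which every asset of a sequential line is up.

    `assets` maps a (hypothetical) asset name to (MTTF, MTTR). Failure and repair
    times are drawn from exponential distributions, an assumption for this sketch.
    """
    rng = random.Random(seed)
    up = {name: True for name in assets}
    # Event queue of (time of next state change, asset name).
    queue: List[Tuple[float, str]] = [
        (rng.expovariate(1.0 / mttf), name) for name, (mttf, _) in assets.items()
    ]
    heapq.heapify(queue)
    now, productive = 0.0, 0.0
    while queue:
        t, name = heapq.heappop(queue)
        t_end = min(t, horizon)
        if all(up.values()):  # no buffers or redundancy: any failure stops the line
            productive += t_end - now
        now = t_end
        if t >= horizon:
            break
        up[name] = not up[name]  # the asset fails or finishes its repair
        mttf, mttr = assets[name]
        mean = mttf if up[name] else mttr
        heapq.heappush(queue, (t + rng.expovariate(1.0 / mean), name))
    return productive / horizon


line = {"robot_arm": (100.0, 5.0), "assembly_track": (200.0, 10.0)}
simulated = simulate_line_availability(line, horizon=1_000_000.0, seed=42)
analytic = (100 / 105) * (200 / 210)  # product of MTTF / (MTTF + MTTR)
print(round(simulated, 3), round(analytic, 3))
```

In a data-driven DT, the MTTF and MTTR values would come from distributions fitted to the state log rather than being set by hand.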

Model validation: Model validation is another essential part of the proposed framework. Like model development, it takes the identified processes and events as input [22] and relies on the connections between the model and the data streams from the smart factory. Because model validation depends heavily on data, the data themselves must be rigorously validated, for example to ensure that the models are derived from high-quality data.

DT Data-Driven Framework

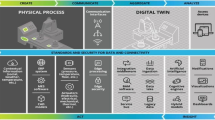

Figure 1 illustrates our proposed framework for a data-driven DT of a smart factory. The modeled real-world entity, the smart factory, continuously generates data through sensors and IoT devices, and the data-driven modeling methodology is founded on these data. Data extraction involves identifying the pertinent entities and storing their data in databases [23]. Entity identification means explicitly naming the pertinent entities, such as production systems and controls. The Haystack standard is an interesting approach for gathering and interpreting manufacturing data: this open-source initiative provides common semantic data models that make it simpler to collect the information produced by IoT devices in various manufacturing facilities. The next logical step is data cleaning through general data validation, integration, and preprocessing. Significant factory-related events are recorded only implicitly in the data.

Making these events explicit supports the model development process. Event labeling is carried out semi-automatically with human assistance: stakeholders or experts with knowledge of the smart factory manually identify and categorize a number of pertinent events, and ML models trained on these labeled data then detect further events automatically. The resulting event logs are also used for process discovery by passing them through process mining algorithms. Both the validated events and the discovered processes are then used to create a simulation model of the specific smart factory. Ongoing validation of the model is an essential step in the model development process: if the model is found to be accurate, the relevant model output measurements are saved for later use, and stakeholders can then make informed decisions about how the smart factory will operate.
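The semi-automatic labeling loop can be sketched as follows: experts label a few feature windows, a classifier is trained on them, and the remaining windows are labeled automatically. A nearest-centroid classifier stands in for whatever ML model would be used in practice; the features (mean motor current, peak vibration) and event labels are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Features = Tuple[float, ...]


def train_centroids(labeled: List[Tuple[Features, str]]) -> Dict[str, Features]:
    """Average the expert-labeled feature vectors for each event label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, label in labeled:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: tuple(s / counts[lab] for s in acc) for lab, acc in sums.items()}


def classify(x: Features, centroids: Dict[str, Features]) -> str:
    """Assign the label of the nearest centroid (Euclidean distance)."""
    return min(centroids, key=lambda lab: math.dist(x, centroids[lab]))


# Hypothetical windows described by (mean current [A], peak vibration [g]).
expert_labels = [
    ((1.0, 0.1), "normal"), ((1.1, 0.2), "normal"),
    ((2.5, 0.9), "gripper_jam"), ((2.7, 1.1), "gripper_jam"),
]
centroids = train_centroids(expert_labels)
print([classify(w, centroids) for w in [(1.05, 0.15), (2.4, 1.0)]])  # ['normal', 'gripper_jam']
```

The automatically labeled windows become new events in the event log; uncertain predictions could be routed back to the experts, which keeps human intervention at clearly defined points.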

Case Study

The case study facility offers infrastructure and resources that support a variety of Industry 4.0 technologies. Its objective is to establish a dynamic and creative environment in which representatives of production and robotics companies collaborate closely with students and researchers to develop cutting-edge, efficient manufacturing techniques [24].

The lab currently supports a production line that assembles a sub-assembly of a quadcopter drone. Fig. 2 depicts the sub-assembly and its three parts: when assembled, the drone component consists of a rotor, a chassis, and a motor, and four of these components are required to build a quadcopter drone. The production line consists of five resources: (1) a storage facility with automatic order entry and picking; (2) an autonomous mobile robot with a robotic arm that can load, move, and unload objects; (3) a fast assembly line with magnetic transport; (4) two assembly cells with cooperative robot arms that can perform specific tasks; and (5) a human–machine interface for managing and observing the production process. Fig. 3 summarizes the manufacturing process.

Results and Discussion

The Petri net in Fig. 4 shows that the process is strictly sequential. Because the production line is currently configured without buffers or redundancy, the entire production stops when any one of the assets fails [25]. Potential failures that could occur during asset runtime were modeled with inhibitor arcs, which prevent certain transitions from firing.
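Inhibitor arcs change the usual Petri net firing rule: a transition is enabled only if each of its input places holds a token and each of its inhibitor places is empty. The sketch below implements that rule for unit-weight arcs; the place and transition names (`robot_ok`, `robot_failed`, `assemble`, and so on) are hypothetical and only mimic the failure and repair loop described for the case study.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Transition:
    inputs: List[str]  # each input place loses one token when the transition fires
    outputs: List[str]  # each output place gains one token
    inhibitors: List[str] = field(default_factory=list)  # must be empty to fire


@dataclass
class PetriNet:
    marking: Dict[str, int]
    transitions: Dict[str, Transition]

    def enabled(self, name: str) -> bool:
        t = self.transitions[name]
        return all(self.marking.get(p, 0) > 0 for p in t.inputs) and all(
            self.marking.get(p, 0) == 0 for p in t.inhibitors
        )

    def fire(self, name: str) -> None:
        if not self.enabled(name):
            raise RuntimeError(f"transition {name!r} is not enabled")
        t = self.transitions[name]
        for p in t.inputs:
            self.marking[p] -= 1
        for p in t.outputs:
            self.marking[p] = self.marking.get(p, 0) + 1


# Hypothetical fragment: one assembly step that uses a robot with a failure/repair loop.
net = PetriNet(
    marking={"part_waiting": 1, "robot_ok": 1},
    transitions={
        "assemble": Transition(["part_waiting"], ["part_done"], inhibitors=["robot_failed"]),
        "robot_fail": Transition(["robot_ok"], ["robot_failed"]),
        "robot_repair": Transition(["robot_failed"], ["robot_ok"]),
    },
)
net.fire("robot_fail")
print(net.enabled("assemble"))  # False: the inhibitor arc blocks assembly
net.fire("robot_repair")
net.fire("assemble")
print(net.marking["part_done"])  # 1
```

Because the robot's `robot_ok` place is not an input of `assemble`, the asset is never consumed by production; the inhibitor arc alone expresses that a failed asset halts the step.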

The assembly cells could, for instance, allow humans and robots to collaborate, or be based solely on interactions between people. Buffers and redundancy could be added to make production more tolerant of failures. The ongoing stability of the system would also benefit from routine asset maintenance. With the conceptual framework and methodology proposed in this paper, models such as the reliability Petri net in Fig. 4 can be extracted from sensor data using only a small amount of expert knowledge.

Simulation Modeling

The manually created Petri net in Fig. 4 serves as a benchmark for what our data-driven DT framework should be able to produce, and realizing the proposed approach requires automated data extraction. We now describe in more detail how a reliability-focused Petri net can be extracted automatically using process and data mining techniques. Because many hardware reliability models can be built from data rather than from expert input, we investigated the data requirements of each model. We found that the data generated by smart factories to support data-driven reliability modeling can be divided into three types: condition monitoring data, event data, and state data. All three have a time-series format, so each record contains a timestamp as well as information specific to its data type. Figure 5 shows the identified data types using a schematic diagram and an example data set.
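The three data types can be represented as timestamped record types. The field names below are assumptions made for illustration, not the schema used in the case study; since all three types are time series, they can be merged into one chronologically ordered stream.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Union


@dataclass(frozen=True)
class ConditionRecord:
    """Condition monitoring: one sensor reading of an asset (fields assumed)."""
    timestamp: datetime
    asset: str
    sensor: str
    value: float


@dataclass(frozen=True)
class EventRecord:
    """Event data: one activity executed for one product case (fields assumed)."""
    timestamp: datetime
    case_id: str
    activity: str
    asset: str


@dataclass(frozen=True)
class StateRecord:
    """State data: an asset entering a new operational state (fields assumed)."""
    timestamp: datetime
    asset: str
    state: str  # hypothetical labels such as "operational" or "failed"


Record = Union[ConditionRecord, EventRecord, StateRecord]


def merge_streams(*streams: List[Record]) -> List[Record]:
    """Merge several time series into one list ordered by timestamp (stable for ties)."""
    return sorted((r for stream in streams for r in stream), key=lambda r: r.timestamp)


t0 = datetime(2023, 1, 1, 8, 0, 0)
merged = merge_streams(
    [EventRecord(t0.replace(second=5), "part1", "assemble", "cell1")],
    [StateRecord(t0.replace(second=2), "cell1", "failed")],
    [ConditionRecord(t0, "cell1", "vibration", 0.4)],
)
print([type(r).__name__ for r in merged])  # ['ConditionRecord', 'StateRecord', 'EventRecord']
```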

Process discovery techniques can be used to extract, from the event data, the process model describing how the drone sub-assembly was produced, while the operational state changes of the assets can be modeled and extracted from the state data. Both modeling facets are represented in the Petri net. After extraction, appropriate criteria must be used to assess the quality of the reliability-focused Petri net [26]. One option is to compare the extracted net with the data log; another is to compare it with the ground-truth Petri net on which the data are based. For the former, fitness and appropriateness criteria are used. For the latter, three measures are used: precision, recall, and F1 score. Precision is the proportion of correctly identified edges among all edges of the extracted model, and recall is the proportion of correctly identified edges among all edges of the ground-truth model. These measures can be expressed mathematically as follows:

\[\mathrm{Precision}=\frac{|{T}_{\mathrm{P}}|}{|{T}_{\mathrm{P}}|+|{F}_{\mathrm{P}}|},\qquad \mathrm{Recall}=\frac{|{T}_{\mathrm{P}}|}{|{T}_{\mathrm{P}}|+|{F}_{\mathrm{N}}|},\qquad {F}_{1}=\frac{2\cdot \mathrm{Precision}\cdot \mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}}\]

where \({T}_{\mathrm{P}}\) is the set of edges present in both the ground-truth and the extracted model, \({F}_{\mathrm{P}}\) is the set of edges present in the extracted model but absent from the ground-truth model, and \({F}_{\mathrm{N}}\) is the set of edges present in the ground-truth model but absent from the extracted model.
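Computing these edge-based measures is a matter of set arithmetic. The sketch assumes that both models use the same node labels, so that an edge can be compared as a `(source, target)` pair; in practice places are often unlabeled and would first have to be matched between the two nets. The example edges are hypothetical.

```python
from typing import Set, Tuple

Edge = Tuple[str, str]


def edge_scores(ground_truth: Set[Edge], extracted: Set[Edge]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) of the extracted edges against the ground truth."""
    tp = len(ground_truth & extracted)  # |T_P|: edges in both models
    fp = len(extracted - ground_truth)  # |F_P|: edges only in the extracted model
    fn = len(ground_truth - extracted)  # |F_N|: edges missing from the extracted model
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical edges between places (p*) and transitions.
truth = {("p0", "pick"), ("pick", "p1"), ("p1", "assemble"), ("assemble", "p2")}
found = {("p0", "pick"), ("pick", "p1"), ("p1", "transport")}
print(edge_scores(truth, found))
```

Here two of the three extracted edges are correct (precision 2/3) and two of the four true edges are recovered (recall 1/2), giving F1 = 4/7. An extracted model identical to the ground truth scores 1.0 on all three measures.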

The reliability function complements the cumulative distribution function \(F(t)\) of the estimated distribution: \(R(t)=1-F(t)\) gives the probability that a production asset survives longer than a specified time \(t\). Besides Petri nets, fault trees are a popular tool for evaluating system reliability. To create fault trees, the events that cause system failures in the manufacturing setting must first be identified. When fault events cannot be captured at the current granularity of the event data, they could be identified from the production plant's condition monitoring data using the fault detection and classification techniques described in [27].
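As a worked example, assume that an asset's time to failure follows a Weibull distribution with shape \(\beta\) and scale \(\eta\), so that \(F(t)=1-e^{-(t/\eta)^{\beta}}\) and therefore \(R(t)=e^{-(t/\eta)^{\beta}}\). The parameter values below are hypothetical; note that at \(t=\eta\) the reliability equals \(e^{-1}\approx 0.368\) regardless of the shape.

```python
import math


def weibull_cdf(t: float, shape: float, scale: float) -> float:
    """F(t) = 1 - exp(-(t/scale)^shape) for t >= 0, and 0 for t < 0."""
    if t < 0:
        return 0.0
    return 1.0 - math.exp(-((t / scale) ** shape))


def reliability(t: float, shape: float, scale: float) -> float:
    """R(t) = 1 - F(t): probability that the asset survives beyond time t."""
    return 1.0 - weibull_cdf(t, shape, scale)


# Hypothetical assembly-track parameters: shape 1.5, scale 400 h.
for hours in (100.0, 400.0, 800.0):
    print(hours, round(reliability(hours, 1.5, 400.0), 3))
```

A shape above 1 describes wear-out (an increasing failure rate); with shape exactly 1 the Weibull reduces to the exponential distribution.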

Demonstration

We manufactured a thousand drone parts to collect data and logged the relevant events and the changes in the production assets' operational states. Tables 1 and 2 show portions of the resulting event log and state log, respectively. The pm4py project aims to make state-of-the-art process mining algorithms accessible to the general public and to encourage industry–university cooperation. Along with implementations of various process discovery and conformance checking algorithms, pm4py provides a sizable code base for modeling and simulating Petri nets. "reliability" is a Python library for survival analysis and reliability engineering. Fig. 5 displays the complete Petri net extracted using these Python libraries.

To extract the production line's material flow model from the generated event log, we first applied the Alpha Miner algorithm. The failure and repair loops of each asset were then extracted from the state log and connected to the relevant asset operating transitions. For each asset, this was done by first creating two places and two transitions and connecting them with arcs; second, an inhibitor arc was used to connect the newly created "failed" place to each transition that uses the corresponding asset. The extracted model is identical to the ground-truth model. This is attributable to the comparatively simple design of the sample production line: the process is sequential, so there are no parallel operations or rework, and there is only one product type.
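The core of the Alpha algorithm can be summarized in a compact, from-scratch sketch. This is the textbook version without short-loop handling, written for illustration; it is not the library implementation used in the study. It derives the causal (\(a \rightarrow b\)) and choice (\(a \# b\)) relations from the directly-follows relation, enumerates pairs of activity sets, and keeps the maximal pairs as places. The activity names in the example are hypothetical.

```python
from itertools import combinations
from typing import FrozenSet, Iterator, List, Set, Tuple

Place = Tuple[FrozenSet[str], FrozenSet[str]]  # (input transitions, output transitions)


def alpha_miner(traces: List[List[str]]) -> Tuple[Set[str], Set[str], List[Place]]:
    """Basic Alpha algorithm: returns start activities, end activities, and inner places."""
    activities = sorted({a for t in traces for a in t})
    follows = {(t[i], t[i + 1]) for t in traces for i in range(len(t) - 1)}
    starts = {t[0] for t in traces if t}
    ends = {t[-1] for t in traces if t}

    def causal(a: str, b: str) -> bool:  # a -> b: a is followed by b, never the reverse
        return (a, b) in follows and (b, a) not in follows

    def unrelated(a: str, b: str) -> bool:  # a # b: neither directly follows the other
        return (a, b) not in follows and (b, a) not in follows

    def choice_sets() -> Iterator[FrozenSet[str]]:
        # Non-empty activity sets whose members are pairwise unrelated (no self-loops).
        for r in range(1, len(activities) + 1):
            for combo in combinations(activities, r):
                if all(unrelated(x, y) for x in combo for y in combo):
                    yield frozenset(combo)

    sets = list(choice_sets())
    pairs = [
        (a_set, b_set)
        for a_set in sets
        for b_set in sets
        if all(causal(a, b) for a in a_set for b in b_set)
    ]
    # Keep only maximal pairs: those not strictly contained in another candidate pair.
    places = [
        (a_set, b_set)
        for a_set, b_set in pairs
        if not any(
            a_set <= a2 and b_set <= b2 and (a_set, b_set) != (a2, b2)
            for a2, b2 in pairs
        )
    ]
    return starts, ends, places


seq_log = [["pick", "transport", "assemble", "inspect"]] * 3
starts, ends, places = alpha_miner(seq_log)
print(starts, ends, [(sorted(a), sorted(b)) for a, b in places])
```

A complete net adds a source place connected to the start activities and a sink place fed by the end activities. For a purely sequential log, each inner place simply links two consecutive activities, which mirrors the straightforward structure of the sample production line. Subset enumeration is exponential in the number of activities, so this sketch only suits small logs.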

We then calculated the failure and repair distributions for each asset from the state log, which required calculating the duration of each failure and repair. Failure times were determined as the interval between a repair and the next failure or, for the first failure, as the time elapsed since the production line started. Repair times were determined as the time taken to complete each repair after a failure. The failure and repair distributions were estimated by maximum likelihood estimation (MLE): we fitted several probability distributions commonly used in reliability engineering and evaluated the likelihood of each for every asset. Fig. 6a, b shows the probability density function and cumulative distribution function of each fitted distribution for the assembly track failures. After fitting, the extracted Petri net was updated with the most likely candidate distributions and their parameters. Finally, the reliability function of each asset was calculated; see Fig. 7a–c.
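The duration calculation and likelihood-based selection can be sketched for a single asset as follows. The state labels `failed` and `operational`, the example timestamps, and the choice of candidates (exponential and lognormal, both of which have closed-form maximum likelihood estimates) are assumptions for illustration; the study fitted a wider set of distributions with dedicated libraries, which this sketch does not reproduce.

```python
import math
from typing import Dict, List, Optional, Tuple


def durations(
    state_log: List[Tuple[float, str]], start: float = 0.0
) -> Tuple[List[float], List[float]]:
    """Split one asset's state log into times to failure and times to repair.

    Assumes states alternate between 'failed' and 'operational' and that the
    asset is operational when the line starts at `start`.
    """
    ttf: List[float] = []
    ttr: List[float] = []
    last_up: float = start
    last_down: Optional[float] = None
    for t, state in sorted(state_log):
        if state == "failed" and last_down is None:
            ttf.append(t - last_up)  # since the line start or the last repair
            last_down = t
        elif state == "operational" and last_down is not None:
            ttr.append(t - last_down)  # time taken to complete the repair
            last_up, last_down = t, None
    return ttf, ttr


def fit_exponential(x: List[float]) -> Tuple[Dict[str, float], float]:
    """Closed-form MLE rate = n / sum(x); returns (parameters, log-likelihood)."""
    rate = len(x) / sum(x)
    return {"rate": rate}, len(x) * math.log(rate) - rate * sum(x)


def fit_lognormal(x: List[float]) -> Tuple[Dict[str, float], float]:
    """Closed-form MLE on log-values (needs at least two distinct positive values)."""
    logs = [math.log(v) for v in x]
    mu = sum(logs) / len(logs)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in logs) / len(logs))
    loglik = sum(
        -v - math.log(sigma * math.sqrt(2 * math.pi)) - (v - mu) ** 2 / (2 * sigma**2)
        for v in logs
    )
    return {"mu": mu, "sigma": sigma}, loglik


def best_fit(x: List[float]) -> Tuple[str, Dict[str, float]]:
    """Pick the candidate distribution with the highest log-likelihood."""
    fits = {"exponential": fit_exponential(x), "lognormal": fit_lognormal(x)}
    name = max(fits, key=lambda n: fits[n][1])
    return name, fits[name][0]


# Hypothetical state log of one asset (timestamps in minutes).
example_log = [(120.0, "failed"), (130.0, "operational"), (300.0, "failed"),
               (304.0, "operational"), (500.0, "failed"), (512.0, "operational")]
ttf, ttr = durations(example_log)
print(ttf, ttr)  # [120.0, 170.0, 196.0] [10.0, 4.0, 12.0]
print(best_fit(ttf), best_fit(ttr))
```

Comparing raw log-likelihoods favors candidates with more parameters; a penalized criterion such as the Akaike information criterion (AIC) is a common alternative when the candidates differ in complexity.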

Discussions

In this paper, we investigated the requirements for, and potential applications of, data-driven DTs in smart manufacturing. Creating DTs in real time from IoT data in smart factories has many benefits: even with more adaptable and reconfigurable factory layouts, the models remain reliable and always up to date, and ongoing model validation can be incorporated. However, many improvements are still needed to achieve data-driven DTs. Some steps will always require a degree of human intervention and may never be fully automated, so the challenge is to minimize human intervention and integrate it effectively. To address the requirements of data-driven DT development, we have also created an advanced conceptual framework. We anticipate growing interest in data-driven simulation modeling and the development of more mechanisms for ensuring data quality. The challenges and opportunities are discussed below.

The proposed framework for data-driven DTs in smart factories comes with several challenges, the first of which is determining and describing in detail the appropriate level of human intervention. The challenge is to use a primarily data-driven strategy that relies on human input only where it is needed to make automation possible. Data-driven simulation modeling will be set up properly if the points for human input are clearly defined and if the modeling is effective and interpretable. Interpretability is the aspect of a data-driven or intelligent approach that offers guiding principles for understanding, from a stakeholder's viewpoint, the choices provided by the DT. Effectiveness refers to two components of the data-driven DT: accuracy and timeliness.

Real-time decision-making: guided by various performance indicators, DTs can help stakeholders reach advantageous decisions. Data-driven DTs can extract relevant information from all the collected data, enabling decision support that stakeholders may find extremely useful. Timeliness requirements should be matched to the criticality and weight of the associated decisions, and the level of detail of the data being gathered should reflect this. For example, safety-related decisions, especially in safety-critical systems, must be made more quickly than production-related decisions.

Smart manufacturing systems aim at stable and affordable production. This goal is closely related to other characteristics, such as the reliability of manufacturing systems and the reduction of energy use and waste production. In addition, environmental objectives, such as reducing greenhouse gas emissions, are crucial factors to be taken into account. Building and updating simulation models that reflect and serve such a broad range of shifting goals is extremely difficult. The data-driven approach of the proposed framework makes high-fidelity DT development possible: the models provide accurate depictions of the corresponding production systems and processes in smart factories and reflect their current behavior. This accuracy is continuously maintained through ongoing validation of both models and data. For a better understanding of processes and decisions, our proposed method includes process discovery as a key component.

This is accomplished by mining the collected event logs. Besides improving decision-making, this contributes to a better understanding of how the system's processes flow, and stakeholders can use these process flows to decide on the future of the DT and of the system. In conclusion, implementing DTs offers several advantages for the smart factory while also posing various challenges; these opportunities can be seen as the motivation for adopting data-driven DTs in manufacturing systems.

Conclusion

Data-driven DTs are essential for successful Industry 4.0 adoption. Manually modeling simulations is not an option for today's manufacturing systems, which experience frequent and rapid reconfigurations throughout their lifetimes. In light of this, we examined what is needed to develop simulation models from data as the foundation of DTs for smart manufacturing systems. When DTs are created automatically from real-time data collected by IoT devices in smart factories, significant needs can be met, and the simulation models are continuously updated to reflect changes in factory layouts accurately. Data-driven DTs will enable integrated, ongoing model validation, which can begin once specific model components have been extracted; this is possible because data from the actual system are accessible. Many challenges and requirements must also be addressed to take advantage of these opportunities. Even once all possible tasks have been automated, some processes will still require human involvement, and identifying and clearly delimiting these processes will help data-driven DTs become mainstream. For example, experts will be required to set the goal of the simulation and to identify pertinent events, including exceptional events in the recorded data log.

References

Huang Z, Shen Y, Li J, Fey M, Brecher C. A survey on AI-driven digital twins in Industry 4.0: smart manufacturing and advanced robotics. Sensors. 2021;21(19):6340.

Singh T, Solanki A, Sharma SK, Nayyar A, Paul A. A decade review on smart cities: paradigms, challenges and opportunities. IEEE Access. 2022.

Ozturk GB. Digital twin research in the AECO-FM industry. Journal of Building Engineering. 2021;40:102730.

Garg G, Kuts V, Anbarjafari G. Digital twin for FANUC robots: industrial robot programming and simulation using virtual reality. Sustainability. 2021;13(18):10336.

Palensky P, Cvetkovic M, Gusain D, Joseph A. Digital twins and their use in future power systems. Digital Twin. 2022;1(4):4.

Bamunuarachchi D, Georgakopoulos D, Banerjee A, Jayaraman PP. Digital twins supporting efficient digital industrial transformation. Sensors. 2021;21(20):6829.

Hassani H, Huang X, MacFeely S. Impactful digital twin in the healthcare revolution. Big Data and Cognitive Computing. 2022;6(3):83.

Latchoumi TP, Swathi R, Vidyasri P, Balamurugan K. Develop new algorithm to improve safety on WMSN in health disease monitoring. In: 2022 International Mobile and Embedded Technology Conference (MECON). IEEE; 2022. p. 357–62.

Sepasgozar SM, Mair DF, Tahmasebinia F, Shirowzhan S, Li H, Richter A, et al. Waste management and possible directions of utilising digital technologies in the construction context. Journal of Cleaner Production. 2021;324:129095.

Baghalzadeh Shishehgarkhaneh M, Keivani A, Moehler RC, Jelodari N, Roshdi Laleh S. Internet of Things (IoT), Building Information Modeling (BIM), and Digital Twin (DT) in construction industry: a review, bibliometric, and network analysis. Buildings. 2022;12(10):1503.

Garikapati P, Balamurugan K, Latchoumi TP, Malkapuram R. A cluster-profile comparative study on machining AlSi 7/63% of SiC hybrid composite using agglomerative hierarchical clustering and K-means. Silicon. 2021;13:961–72.

Delgado JMD, Oyedele L. Digital twins for the built environment: learning from conceptual and process models in manufacturing. Adv Eng Inform. 2021;49:101332.

Sneha P, Balamurugan K. Investigation on wear characteristics of a PLA-14% bronze composite filament. In: Recent Trends in Product Design and Intelligent Manufacturing Systems. Singapore: Springer; 2023. p. 453–61.

Wang Y, Xu R, Zhou C, Kang X, Chen Z. Digital twin and cloud-side-end collaboration for intelligent battery management system. J Manuf Syst. 2022;62:124–34.

Ma S, Ding W, Liu Y, Ren S, Yang H. Digital twin and big data-driven sustainable smart manufacturing based on information management systems for energy-intensive industries. Appl Energy. 2022;326:119986.

Bu L, Zhang Y, Liu H, Yuan X, Guo J, Han S. An IIoT-driven and AI-enabled framework for smart manufacturing system based on three-terminal collaborative platform. Adv Eng Inform. 2021;50:101370.

ElZahed M, Marzouk M. Smart archiving of energy and petroleum projects utilizing big data analytics. Autom Constr. 2022;133:104005.

Latchoumi TP, Ezhilarasi TP, Balamurugan K. Bio-inspired weighed quantum particle swarm optimization and smooth support vector machine ensembles for identification of abnormalities in medical data. SN Appl Sci. 2019;1:1137. https://doi.org/10.1007/s42452-019-1179-8.

Wang K, Hu Q, Zhou M, Zun Z, Qian X. Multi-aspect applications and development challenges of digital twin-driven management in global smart ports. Case Studies on Transport Policy. 2021;9(3):1298–312.

Cai W, Wang L, Li L, Xie J, Jia S, Zhang X, et al. A review on methods of energy performance improvement towards sustainable manufacturing from perspectives of energy monitoring, evaluation, optimization and benchmarking. Renewable and Sustainable Energy Reviews. 2022;159:112227.

Martinez EM, Ponce P, Macias I, Molina A. Automation pyramid as constructor for a complete digital twin, case study: a didactic manufacturing system. Sensors. 2021;21(14):4656.

Anand M, Balaji N, Bharathiraja N, Antonidoss A. A controlled framework for reliable multicast routing protocol in mobile ad hoc network. Materials Today: Proceedings. 2021. ISSN 2214-7853.

Wang Y, Kang X, Chen Z. A survey of digital twin techniques in smart manufacturing and management of energy applications. Green Energy and Intelligent Transportation. 2022:100014.

Gao Y, Chang D, Chen CH, Xu Z. Design of digital twin applications in automated storage yard scheduling. Adv Eng Inform. 2022;51:101477.

Bhandal R, Meriton R, Kavanagh RE, Brown A. The application of digital twin technology in operations and supply chain management: a bibliometric review. Supply Chain Management: An International Journal. 2022.

Gutiérrez R, Rampérez V, Paggi H, Lara JA, Soriano J. On the use of information fusion techniques to improve information quality: taxonomy, opportunities and challenges. Information Fusion. 2022;78:102–37.

Liu Z, Shi G, Jiao Z, Zhao L. Intelligent safety assessment of prestressed steel structures based on digital twins. Symmetry. 2021;13(10):1927.

Luo D, Thevenin S, Dolgui A. A state-of-the-art on production planning in Industry 4.0. International Journal of Production Research. 2022:1–31.

Funding

The authors declare that no funding has been received to carry out the work.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information


This article is part of the topical collection “Research Trends in Communication and Network Technologies” guest edited by Anshul Verma, Pradeepika Verma and Kiran Kumar Pattanaik.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yadav, R., Roopa, Y.M., Lavanya, M. et al. Smart Production and Manufacturing System Using Digital Twin Technology and Machine Learning. SN COMPUT. SCI. 4, 561 (2023). https://doi.org/10.1007/s42979-023-01976-x
