Abstract

High-order strong stability preserving (SSP) time discretizations are often needed to ensure the nonlinear (and sometimes non-inner-product) strong stability properties of spatial discretizations specially designed for the solution of hyperbolic PDEs. Multi-derivative time-stepping methods have recently been increasingly used for evolving hyperbolic PDEs, and the strong stability properties of these methods are of interest. In our prior work we explored time discretizations that preserve the strong stability properties of spatial discretizations coupled with forward Euler and a second-derivative formulation. However, many spatial discretizations do not satisfy strong stability properties when coupled with this second-derivative formulation, but rather with a more natural Taylor series formulation. In this work we demonstrate sufficient conditions for an explicit two-derivative multistage method to preserve the strong stability properties of spatial discretizations in a forward Euler and Taylor series formulation. We call these strong stability preserving Taylor series (SSP-TS) methods. We also prove that the maximal order of SSP-TS methods is \(p=6\), and define an optimization procedure that allows us to find such SSP methods. Several types of these methods are presented and their efficiency compared. Finally, these methods are tested on several PDEs to demonstrate the benefit of SSP-TS methods, the need for the SSP property, and the sharpness of the SSP time-step in many cases.

1 Introduction

The solution to a hyperbolic conservation law

$$\begin{aligned} U_t + f(U)_x = 0 \qquad (1) \end{aligned}$$

may develop sharp gradients or discontinuities, which results in significant challenges to the numerical simulation of such problems. To ensure that it can handle the presence of a discontinuity, a spatial discretization is carefully designed to satisfy some nonlinear stability properties, often in a non-inner-product sense, e.g., total variation diminishing, maximum norm preserving, or positivity-preserving properties. The development of high-order spatial discretizations that can handle discontinuities is a major research area [4, 6, 15, 28, 30, 35, 46, 47, 48, 49, 54].

When the partial differential equation (1) is semi-discretized we obtain the ordinary differential equation (ODE)

$$\begin{aligned} u_t = F(u), \qquad (2) \end{aligned}$$

where u is a vector of approximations to U. This formulation is often referred to as a method of lines (MOL) formulation, and has the advantage of decoupling the spatial and time discretizations. The spatial discretizations designed to handle discontinuities ensure that when the semi-discretized equation (2) is evolved using the forward Euler method

$$\begin{aligned} u^{n+1} = u^n + \Delta t F(u^n), \qquad (3) \end{aligned}$$

where \(u^n\) is a discrete approximation to U at time \(t^n\), the numerical solution satisfies the desired strong stability property, such as total variation stability or positivity. Let the desired nonlinear stability property, such as a norm, semi-norm, or convex functional, be represented by \(\Vert \cdot \Vert\), and suppose that the spatial discretization satisfies the monotonicity property

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) \Vert \le \Vert u^n \Vert \qquad (4) \end{aligned}$$

under the time-step restriction

$$\begin{aligned} 0 \le \Delta t \le \Delta t_{\text{FE}}. \qquad (5) \end{aligned}$$

In practice, in place of the first-order time discretization (3), we typically require a higher order time integrator that preserves the strong stability property

$$\begin{aligned} \Vert u^{n+1} \Vert \le \Vert u^n \Vert , \qquad (6) \end{aligned}$$

perhaps under a modified time-step restriction. For this purpose, time discretizations with good linear stability properties or even with nonlinear inner-product stability properties are not sufficient. Strong stability preserving (SSP) time discretizations were developed to address this need. SSP multistep and Runge-Kutta methods satisfy the strong stability property (6) for any function F, any initial condition, and any convex functional \(\Vert \cdot \Vert\) under some time-step restriction, provided only that (4) is satisfied.

Recently, there has been interest in exploring the SSP properties of multi-derivative Runge-Kutta methods, also known as multistage multi-derivative methods. Multi-derivative Runge-Kutta (MDRK) methods were first considered in [3, 21, 22, 31, 33, 34, 40, 41, 45, 51], and later explored for use with partial differential equations (PDEs) [9, 29, 36, 39, 50]. These methods have a form similar to Runge-Kutta methods but use an additional derivative \({\dot{F}} = u_{tt} \approx U_{tt}\) to allow for higher order. The SSP properties of these methods were discussed in [5, 32]. In [5], a method is defined as SSP-SD if it satisfies the strong stability property (6) for any function F, any initial condition, and any convex functional \(\Vert \cdot \Vert\) under some time-step restriction, provided that (4) is satisfied for any \(\Delta t \le \Delta t_{\text {FE}}\) and an additional condition of the form

$$\begin{aligned} \Vert u^n + \Delta t^2 {\tilde{F}}(u^n) \Vert \le \Vert u^n \Vert \end{aligned}$$

is satisfied for any \(\Delta t \le {\tilde{K}} \Delta t_{\text {FE}}\). These conditions allow us to find a wide variety of time discretizations, called SSP-SD time-stepping methods, but they limit the type of spatial discretization that can be used in this context.

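In this paper, we present a different approach to the SSP analysis, which is more along the lines of the idea in [32]. For this analysis, we use, as before, the forward Euler base condition (4), but add to it a Taylor series condition of the form

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) + \frac{1}{2} \Delta t^2 {\tilde{F}}(u^n) \Vert \le \Vert u^n \Vert \end{aligned}$$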

that holds for any \(\Delta t \le K \Delta t_{\text {FE}}\). Compared to those studied in [5], this pair of base conditions allows for more flexibility in the choice of spatial discretizations (such as the methods that satisfy a Taylor series condition in [7, 10, 37, 39]), at the cost of more limited variety of time discretizations. We call the methods that preserve the strong stability properties of these base conditions strong stability preserving Taylor series (SSP-TS) methods. The goal of this paper is to study a class of methods that is suitable for use with existing spatial discretizations, and present families of such SSP-TS methods that are optimized for the relationship between the forward Euler time step \(\Delta t_{\text{FE}}\) and the Taylor series time step \(K \Delta t_{\text {FE}}\).

In the following subsections we describe SSP Runge-Kutta time discretizations and present explicit multistage two-derivative methods. We then motivate the need for methods that preserve the nonlinear stability properties of the forward Euler and Taylor series base conditions. In Sect. 2 we formulate the SSP optimization problem for finding explicit two-derivative methods which can be written as the convex combination of forward Euler and Taylor series steps with the largest allowable time step, which we will later use to find optimized methods. In Sect. 2.1 we explore the relationship between SSP-SD methods and SSP-TS methods. In Sect. 2.2 we prove that there are order barriers associated with explicit two-derivative methods that preserve the properties of forward Euler and Taylor series steps with a positive time step. In Sect. 3 we present the SSP coefficients of the optimized methods we obtain. The methods themselves can be downloaded from our GitHub repository [14]. In Sect. 4 we demonstrate how these methods perform on specially selected test cases, and in Sect. 5 we present our conclusions.

1.1 SSP Methods

It is well known [13, 42] that some multistep and Runge-Kutta methods can be decomposed into convex combinations of forward Euler steps, so that any convex functional property satisfied by (4) will be preserved by these higher order time discretizations. If we re-write the s-stage explicit Runge-Kutta method in the Shu-Osher form [43],

it is clear that if all the coefficients \(\alpha _{ij}\) and \(\beta _{ij}\) are non-negative, and provided \(\alpha _{ij}\) is zero only if its corresponding \(\beta _{ij}\) is zero, then each stage can be written as a convex combination of forward Euler steps of the form (3), and be bounded by

under the condition \(\frac{\beta _{ij}}{\alpha _{ij}} \Delta t\le \Delta t_{\text{FE}}\). By the consistency condition \(\sum _{j=0}^{i-1} \alpha _{ij}=1\), we now have \(\Vert u^{n+1}\Vert \le \Vert u^{n}\Vert\), under the condition

where if any of the \(\beta\)s are equal to zero, the corresponding ratios are considered infinite.
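As a small illustration of how the SSP coefficient is read off a Shu-Osher representation, the sketch below computes the smallest ratio \(\alpha _{ij}/\beta _{ij}\), treating ratios with \(\beta _{ij}=0\) as infinite. The function name `ssp_coefficient` and the nested-list layout are illustrative assumptions, and the coefficients are those of the classical three-stage third-order SSP Runge-Kutta method as it is usually stated in the literature.

```python
import math

def ssp_coefficient(alpha, beta):
    """Smallest ratio alpha_ij / beta_ij over all entries with beta_ij > 0.

    Returns 0.0 when a coefficient is negative or when beta_ij > 0 while
    alpha_ij == 0, because the convex-combination argument then fails.
    Returns math.inf if every beta_ij is zero.
    """
    c = math.inf
    for a_row, b_row in zip(alpha, beta):
        for a, b in zip(a_row, b_row):
            if a < 0 or b < 0:
                return 0.0
            if b == 0:
                continue  # ratio is considered infinite
            if a == 0:
                return 0.0
            c = min(c, a / b)
    return c

# Row i holds the coefficients of stage i (lower-triangular layout).
alpha_ssprk33 = [[1.0], [3 / 4, 1 / 4], [1 / 3, 0.0, 2 / 3]]
beta_ssprk33 = [[1.0], [0.0, 1 / 4], [0.0, 0.0, 2 / 3]]

c_ssprk33 = ssp_coefficient(alpha_ssprk33, beta_ssprk33)
print(c_ssprk33)
```

For this method every finite ratio equals one, so the allowable time step coincides with the forward Euler time step.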

If a method can be decomposed into such a convex combination of (3), with a positive value of \(\mathcal{{C}}>0\) then the method is called strong stability preserving (SSP), and the value \(\mathcal{{C}}\) is called the SSP coefficient. SSP methods guarantee the strong stability properties of any spatial discretization, provided, only, that these properties are satisfied when using the forward Euler method. The convex combination approach guarantees that the intermediate stages in a Runge-Kutta method satisfy the desired strong stability property as well. The convex combination approach clearly provides a sufficient condition for preservation of strong stability. Moreover, it has also been shown that this condition is necessary [11, 12, 16, 17].

Second- and third-order explicit Runge-Kutta methods [43] and later fourth-order methods [23, 44] were found that admit such a convex combination decomposition with \(\mathcal{{C}}>0\). However, it has been proven that explicit Runge-Kutta methods with positive SSP coefficient cannot be more than fourth-order accurate [27, 38].

The time-step restriction (8) consists of two distinct factors: (1) the term \(\Delta t_{\text{FE}}\) that is a property of the spatial discretization, and (2) the SSP coefficient \(\mathcal{{C}}\) that is a property of the time discretization. Research on SSP time-stepping methods for hyperbolic PDEs has primarily focused on finding high-order time discretizations with the largest allowable time step \(\Delta t\le \mathcal{{C}}\Delta t_{\text{FE}}\) by maximizing the SSP coefficient \(\mathcal{{C}}\) of the method.

High-order methods can also be obtained by adding more steps (e.g., linear multistep methods) or more derivatives (Taylor series methods). Multistep methods that are SSP have been found [13], and explicit multistep SSP methods exist of very high order \(p>4\), but have severely restricted SSP coefficients [13]. These approaches can be combined with Runge-Kutta methods to obtain methods with multiple steps and stages. Explicit multistep multistage methods that are SSP and have order \(p>4\) have been developed as well [1, 24].

1.2 Explicit Multistage Two-Derivative Methods

Another way to obtain higher order methods is to use higher derivatives combined with the Runge-Kutta approach. An explicit multistage two-derivative time integrator is given by:

where \(y^{(1)} = u^n\).

The coefficients can be put into matrix-vector form, where

We also define the vectors \(c = A \mathbf{e}\) and \({\hat{c}} = {\hat{A}} \mathbf{e}\), where \(\mathbf{e}\) is a vector of ones.
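To make the structure of such an integrator concrete, here is a minimal sketch of one step. It assumes the method has the usual form \(y^{(i)} = u^n + \Delta t \sum _{j<i} a_{ij} F(y^{(j)}) + \Delta t^2 \sum _{j<i} {\hat{a}}_{ij} {\dot{F}}(y^{(j)})\) and \(u^{n+1} = u^n + \Delta t \sum _j b_j F(y^{(j)}) + \Delta t^2 \sum _j {\hat{b}}_j {\dot{F}}(y^{(j)})\). The function name `two_derivative_step` and the test problem \(u' = -u\) are illustrative choices, and the coefficients are those of the two-stage fourth-order method as commonly stated in the literature.

```python
import math
import numpy as np

def two_derivative_step(u, dt, F, Fdot, A, Ahat, b, bhat):
    """One step of an explicit multistage two-derivative method (assumed form)."""
    u = np.asarray(u, dtype=float)
    s = len(b)
    f_vals, fd_vals = [], []
    for i in range(s):
        # Stage i uses only earlier stages, so the method is explicit.
        y = u.copy()
        for j in range(i):
            y = y + dt * A[i][j] * f_vals[j] + dt**2 * Ahat[i][j] * fd_vals[j]
        f_vals.append(F(y))
        fd_vals.append(Fdot(y))
    u_new = u.copy()
    for j in range(s):
        u_new = u_new + dt * b[j] * f_vals[j] + dt**2 * bhat[j] * fd_vals[j]
    return u_new

# Two-stage fourth-order two-derivative method (coefficients assumed from the literature).
A = [[0.0, 0.0], [0.5, 0.0]]
Ahat = [[0.0, 0.0], [0.125, 0.0]]
b = [1.0, 0.0]
bhat = [1.0 / 6.0, 1.0 / 3.0]

# Linear test problem u' = -u, for which F(u) = -u and Fdot(u) = u.
z = 0.1
u1 = two_derivative_step([1.0], z, lambda y: -y, lambda y: y, A, Ahat, b, bhat)
taylor4 = 1 - z + z**2 / 2 - z**3 / 6 + z**4 / 24
print(u1[0], taylor4, math.exp(-z))
```

On this linear problem the step reproduces the degree-four Taylor polynomial of \(e^{-\Delta t}\), which is what fourth-order accuracy requires.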

As in our prior work [5], we focus on using explicit multistage two-derivative methods as time integrators for evolving hyperbolic PDEs. For our purposes, the operator F is obtained by a spatial discretization of the term \(U_t= -f(U)_x\) to obtain the system \(u_t = F(u)\). Instead of computing the second-derivative term \({\dot{F}}\) directly from the definition of the spatial discretization F, we approximate \({\tilde{F}} \approx {\dot{F}}\) by employing the Cauchy-Kovalevskaya procedure which uses the PDE (1) to replace the time derivatives by the spatial derivatives, and discretize these in space.

If the term F(u) is computed using a conservative spatial discretization \(D_x\) applied to the flux:

then we approximate the second derivative

where a (potentially different) spatial differentiation operator \({\tilde{D}}_x\) is used. Although these two approaches are different, the differences between them are of high order in space, so that in practice, as long as the spatial errors are smaller than the temporal errors, we see the correct order of accuracy in time, as shown in [5].
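As a worked version of the Cauchy-Kovalevskaya step, and assuming a smooth solution of \(U_t = -f(U)_x\), the second time derivative can be rewritten purely in terms of spatial derivatives:

$$\begin{aligned} U_{tt} = -\left( f(U)\right) _{xt} = -\left( f'(U)\, U_t \right) _x = \left( f'(U)\, f(U)_x \right) _x . \end{aligned}$$

Under this assumption, one consistent discrete analogue is \({\tilde{F}}(u) = {\tilde{D}}_x\left( -f'(u)\, F(u) \right)\) with \(F(u) = D_x(-f(u))\). For the linear flux \(f(U)=U\) this reduces to applying the differentiation operators twice in succession.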

1.3 Motivation for the New Base Conditions for SSP Analysis

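In [5] we considered explicit multistage two-derivative methods and developed sufficient conditions for a type of strong stability preservation for these methods. We showed that explicit SSP-SD methods within this class can break the well-known fourth-order barrier for explicit SSP Runge-Kutta methods. In that work we considered two-derivative methods that preserve the strong stability property satisfied by a function F under a convex functional \(\Vert \cdot \Vert\), provided that the following conditions hold:

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) \Vert \le \Vert u^n \Vert \quad \text {for} \quad \Delta t \le \Delta t_{\text{FE}} \qquad (12) \end{aligned}$$

and

$$\begin{aligned} \Vert u^n + \Delta t^2 {\tilde{F}}(u^n) \Vert \le \Vert u^n \Vert \quad \text {for} \quad \Delta t \le {\tilde{K}} \Delta t_{\text{FE}}, \qquad (13) \end{aligned}$$

where \({\tilde{K}}\) is a scaling factor that compares the stability condition of the second-derivative term to that of the forward Euler term. While the forward Euler condition is characteristic of all SSP methods (and has been justified by the observation that it is the circle contractivity condition in [11]), the second-derivative condition was chosen over the Taylor series condition:

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) + \frac{1}{2} \Delta t^2 {\tilde{F}}(u^n) \Vert \le \Vert u^n \Vert \quad \text {for} \quad \Delta t \le K \Delta t_{\text{FE}} \qquad (14) \end{aligned}$$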

because it is more general. If the forward Euler (12) and second-derivative (13) conditions are both satisfied, then the Taylor series condition (14) will be satisfied as well. Thus, a spatial discretization that satisfies (12) and (13) will also satisfy (14), so that the SSP-SD concept in [5] allows for the most general time discretizations. Furthermore, some methods of interest and importance in the literature cannot be written using a Taylor series decomposition, most notably the unique two-stage fourth-order method (Footnote 1)

$$\begin{aligned} y^*&= u^n + \frac{\Delta t}{2} F(u^n) + \frac{\Delta t^2}{8} {\tilde{F}}(u^n), \\ u^{n+1}&= u^n + \Delta t F(u^n) + \frac{\Delta t^2}{6} \left( {\tilde{F}}(u^n) + 2 {\tilde{F}}(y^*) \right) , \qquad (15) \end{aligned}$$

which appears commonly in the literature on this subject [9, 29, 36]. For these reasons, it made sense to first consider the SSP-SD property which relies on the pair of base conditions (12) and (13).

However, as we will see in the example below, there are spatial discretizations for which the second-derivative condition (13) is not satisfied but the forward Euler condition (12) and the Taylor series condition (14) are both satisfied. In such cases, the SSP-SD methods derived in [5] may not preserve the desired strong stability properties. The existence of such spatial discretizations is the main motivation for the current work, in which we re-examine the strong stability properties of the explicit two-derivative multistage method (9) using the base conditions (12) and (14). Methods that preserve the strong stability properties of (12) and (14) are called, herein, SSP-TS methods. The SSP-TS approach increases our flexibility in the choice of spatial discretization over the SSP-SD approach. Of course, this enhanced flexibility in the choice of spatial discretization is expected to result in limitations on the time discretization (e.g., the two-stage fourth-order method is SSP-SD but not SSP-TS).

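To illustrate the need for time discretizations that preserve the strong stability properties of spatial discretizations that satisfy (12) and (14), but not (13), consider the one-way wave equation

$$\begin{aligned} U_t + U_x = 0 \qquad (16) \end{aligned}$$

(here \(f(U) = U\)) where F is defined by the first-order upwind method

$$\begin{aligned} F(u^n)_j := -\frac{1}{\Delta x} \left( u^n_j - u^n_{j-1} \right) \approx -U_x(x_j). \qquad (17) \end{aligned}$$

When solving the PDE, we compute the operator \({\tilde{F}}\) by simply applying the differentiation operator twice (note that \(f'(U) = 1\))

$$\begin{aligned} {\tilde{F}}(u^n)_j := \frac{1}{\Delta x^2} \left( u^n_j - 2 u^n_{j-1} + u^n_{j-2} \right) \approx U_{xx}(x_j). \end{aligned}$$

We note that when computed this way, the spatial discretization F coupled with the forward Euler method satisfies the total variation diminishing (TVD) condition:

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) \Vert _{TV} \le \Vert u^n \Vert _{TV} \quad \text {for} \quad \Delta t \le \Delta x, \end{aligned}$$

while the Taylor series term using F and \({\tilde{F}}\) satisfies the TVD condition

$$\begin{aligned} \Vert u^n + \Delta t F(u^n) + \frac{1}{2} \Delta t^2 {\tilde{F}}(u^n) \Vert _{TV} \le \Vert u^n \Vert _{TV} \quad \text {for} \quad \Delta t \le \Delta x. \end{aligned}$$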

In other words, these spatial discretizations satisfy the conditions (12) and (14) with \(K=1\), in the total variation semi-norm. However, (13) is not satisfied, so the methods derived in [5] cannot be used. Our goal in the current work is to develop time discretizations that will preserve the desired strong stability properties (e.g., the total variation diminishing property) when using spatial discretizations such as the upwind approximation (17) that satisfy (12) and (14) but not (13).
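The behaviour described in this example can be checked numerically. The sketch below assumes a periodic grid and a square-pulse initial profile (both illustrative choices) and uses the first-order upwind difference for F and the same difference applied twice for \({\tilde{F}}\). It compares the total variation after a forward Euler update, a Taylor series update, and a pure second-derivative update.

```python
import numpy as np

def total_variation(u):
    """Total variation semi-norm on a periodic grid."""
    return np.sum(np.abs(u - np.roll(u, 1)))

def F(u, dx):
    """First-order upwind approximation of -U_x."""
    return -(u - np.roll(u, 1)) / dx

def F_tilde(u, dx):
    """Upwind difference applied twice, approximating U_xx."""
    return (u - 2.0 * np.roll(u, 1) + np.roll(u, 2)) / dx**2

n = 100
dx = 1.0 / n
u = np.zeros(n)
u[30:60] = 1.0          # square pulse with total variation 2
dt = 0.9 * dx           # within the restriction dt <= dx

tv0 = total_variation(u)
tv_fe = total_variation(u + dt * F(u, dx))
tv_ts = total_variation(u + dt * F(u, dx) + 0.5 * dt**2 * F_tilde(u, dx))
tv_sd = total_variation(u + dt**2 * F_tilde(u, dx))
tv_sd_small = total_variation(u + (0.1 * dx) ** 2 * F_tilde(u, dx))

print(tv0, tv_fe, tv_ts, tv_sd, tv_sd_small)
```

In this experiment the forward Euler and Taylor series updates do not increase the total variation, while the second-derivative update increases it even for the much smaller time step, which is consistent with condition (13) failing for this discretization.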

Remark 1

This simple first-order motivating example is chosen because these spatial discretizations are provably TVD and allow us to see clearly why the Taylor series base condition (14) is needed. In practice, we use higher order spatial discretizations such as WENO that do not have a theoretical guarantee of TVD, but perform well in practice. Such methods are considered in Examples 2 and 4 in the numerical tests, and provide us with similar results.

In this work we develop explicit two-derivative multistage SSP-TS methods of the form (9) that preserve the convex functional properties of forward Euler and Taylor series terms. When the spatial discretizations F and \({\tilde{F}}\) that satisfy (12) and (14) are coupled with such a time-stepping method, the strong stability condition

will be preserved, perhaps under a different time-step condition

If a method can be decomposed in such a way, with \(\mathcal{{C}}_{\text {TS}} > 0\) we say that it is SSP-TS. In the next section, we define an optimization problem that will allow us to find SSP-TS methods of the form (9) with the largest possible SSP coefficient \(\mathcal{{C}}_{\text {TS}}\).

2 SSP Explicit Two-Derivative Runge-Kutta Methods

We consider the system of ODEs

resulting from a semi-discretization of the hyperbolic conservation law (1) such that F satisfies the forward Euler (first derivative) condition (12)

for the desired stability property indicated by the convex functional \(\Vert \cdot \Vert\).

The methods we are interested in also require an appropriate approximation to the second derivative in time

We assume in this work that F and \({\tilde{F}}\) satisfy an additional condition of the form (14)

in the same convex functional \(\Vert \cdot \Vert\), where K is a scaling factor that compares the stability condition of the Taylor series term to that of the forward Euler term.

We wish to show that given conditions (12) and (14), the multi-derivative method (9) satisfies the desired monotonicity condition under a given time step. This is easier if we re-write the method (9) in an equivalent matrix-vector form

where \(\mathbf{y}= \left( y^{(1)}, y^{(2)}, \ldots , y^{(s)}, u^{n+1}\right) ^{\text {T}}\),

and \(\mathbf{e}\) is a vector of ones. As in prior SSP work, all the coefficients in S and \({\hat{S}}\) must be non-negative (see Lemma 3).

We can now easily establish sufficient conditions for an explicit method of the form (22) to be SSP:

Theorem 1

Given spatial discretizations F and \({\tilde{F}}\) that satisfy (12) and (14), an explicit two-derivative multistage method of the form (22) preserves the strong stability property \(\Vert u^{n+1} \Vert \le \Vert u^n \Vert\) under the time-step restriction \(\Delta t \le r \Delta t_{\text{FE}}\) if it satisfies the conditions

for some \(r>0\). In the above conditions, the inequalities are understood component-wise.

Proof

We begin with the method

and add the terms \(r S \mathbf{y}\) and \(2{\hat{r}}({\hat{r}}-r) {\hat{S}} \mathbf{y}\) to both sides to obtain the canonical Shu-Osher form of an explicit two-derivative multistage method:

where

If the elements of P, Q, and \(R \mathbf{e}\) are all non-negative, and if \(\left( R + P + Q\right) \mathbf{e}= \mathbf{e}\), then \(\mathbf{y}\) is a convex combination of strongly stable terms

and so is also strongly stable under the time-step restrictions \(\Delta t\le r \Delta t_{\text{FE}}\) and \(\Delta t\le K {\hat{r}} \Delta t_{\text{FE}}\). In such cases, the optimal time step is given by the minimum of the two. In the cases we encounter here, this minimum occurs when these two values are set equal, so we require \(r= K {\hat{r}}\). Conditions (23a)–(23c) now ensure that \(P \ge 0\), \(Q\ge 0\), and \(R \mathbf{e}\ge 0\) component-wise for \({\hat{r}} = \frac{r}{K}\), and so the method preserves the strong stability condition \(\Vert u^{n+1} \Vert \le \Vert u^n \Vert\) under the time-step restriction \(\Delta t \le r \Delta t_{\text {FE}}\). Note that if this fact holds for a given value of \(r>0\) then it also holds for all smaller positive values.
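The argument in this proof can be turned into a numerical check. The sketch below is a reconstruction from the steps described above, not the authors' code. It assumes \(S\) and \({\hat{S}}\) are the \((s+1)\times (s+1)\) matrices formed by stacking \(A\) over \(b^{\text {T}}\) (and \({\hat{A}}\) over \({\hat{b}}^{\text {T}}\)) with a final zero column, and takes \(R = (I + rS + 2{\hat{r}}({\hat{r}}-r){\hat{S}})^{-1}\), \(P = rR(S - 2{\hat{r}}{\hat{S}})\), \(Q = 2{\hat{r}}^2 R{\hat{S}}\) with \({\hat{r}} = r/K\). The function names and the bisection search are illustrative.

```python
import numpy as np

def ssp_ts_feasible(A, Ahat, b, bhat, r, K, tol=1e-12):
    """Check R e >= 0, P >= 0, Q >= 0 for a given r, with r_hat = r / K."""
    s = len(b)
    S = np.zeros((s + 1, s + 1))
    S_hat = np.zeros((s + 1, s + 1))
    S[:s, :s], S[s, :s] = np.asarray(A, float), np.asarray(b, float)
    S_hat[:s, :s], S_hat[s, :s] = np.asarray(Ahat, float), np.asarray(bhat, float)
    r_hat = r / K
    R = np.linalg.inv(np.eye(s + 1) + r * S + 2.0 * r_hat * (r_hat - r) * S_hat)
    P = r * (R @ (S - 2.0 * r_hat * S_hat))   # weights of forward Euler terms
    Q = 2.0 * r_hat**2 * (R @ S_hat)          # weights of Taylor series terms
    Re = R @ np.ones(s + 1)                   # weights of u^n
    return bool((Re >= -tol).all() and (P >= -tol).all() and (Q >= -tol).all())

def ssp_ts_coefficient(A, Ahat, b, bhat, K, r_hi=10.0, iters=60):
    """Bisection for the largest feasible r, assuming feasibility is monotone in r."""
    lo, hi = 0.0, r_hi
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ssp_ts_feasible(A, Ahat, b, bhat, mid, K):
            lo = mid
        else:
            hi = mid
    return lo

# One-stage Taylor series method: u^{n+1} = u^n + dt F(u^n) + (dt^2 / 2) F~(u^n).
c_taylor_k1 = ssp_ts_coefficient([[0.0]], [[0.0]], [1.0], [0.5], K=1.0)
c_taylor_k2 = ssp_ts_coefficient([[0.0]], [[0.0]], [1.0], [0.5], K=2.0)
# Forward Euler written in the same format (no second-derivative term).
c_euler = ssp_ts_coefficient([[0.0]], [[0.0]], [1.0], [0.0], K=1.0)
print(c_taylor_k1, c_taylor_k2, c_euler)
```

For the one-stage Taylor series method the search returns a value close to K for these two values of K, as one would expect from condition (14) itself. An optimization code would maximize r over the method coefficients subject to the order conditions rather than evaluate a fixed method.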

Definition 1

A method that satisfies the conditions in Theorem 1 for values \(r \in (0, r_{\max }]\) is called a Strong Stability Preserving Taylor Series (SSP-TS) method with an associated SSP coefficient

Remark 2

Theorem 1 gives us the conditions for the method (22) to be SSP-TS for any time step \(\Delta t\le \mathcal{{C}}_{\text {TS}} \Delta t_{\text{FE}}\). We note, however, that while the corresponding conditions for Runge-Kutta methods have been shown to be necessary as well as sufficient, for the multi-derivative methods we only show that these conditions are sufficient. This is a consequence of the fact that we define this notion of SSP based on the conditions (12) and (14), but if a spatial discretization also satisfies a different condition (for example, (13)) many other methods of the form (22) also give strong stability preserving results. Notable among these is the two-derivative two-stage fourth-order method (15) which is SSP-SD but not SSP-TS. This means that solutions of (15) can be shown to satisfy the strong stability property \(\Vert u^{n+1} \Vert \le \Vert u^n \Vert\) for positive time steps, for the appropriate spatial discretizations, even though the conditions in Theorem 1 are not satisfied.

This result allows us to formulate the search for optimal SSP-TS methods as an optimization problem, as in [5, 13, 23, 25].

However, before we present the optimal methods in Sect. 3, we present the theoretical results on the allowable order of multistage multi-derivative SSP-TS methods.

2.1 SSP Results for Explicit Two-Derivative Runge-Kutta Methods

In this paper, we consider explicit SSP-TS two-derivative multistage methods that can be decomposed into a convex combination of (12) and (14), and thus preserve their strong stability properties. In our previous work [5] we studied SSP-SD methods of the form (9) that can be written as convex combinations of (12) and (13). The following lemma explains the relationship between these two notions of strong stability.

Lemma 1

Any explicit method of the form (9) that can be written as a convex combination of the forward Euler formula (12) and the Taylor series formula (14) can also be written as a convex combination of the forward Euler formula (12) and the second-derivative formula (13).

Proof

We can easily see that any Taylor series step can be rewritten as a convex combination of the forward Euler formula (12) and the second-derivative formula (13):

for any \(0< \alpha < 1\). Clearly then, if a method can be decomposed into a convex combination of (12) and (14), and in turn (14) can be decomposed into a convex combination of (12) and (13), then the method itself can be written as a convex combination of (12) and (13).
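For concreteness, one way to write the decomposition used in this proof, under the stated conditions (12) and (13), is

$$\begin{aligned} u^n + \Delta t F(u^n) + \frac{1}{2} \Delta t^2 {\tilde{F}}(u^n) = \alpha \left( u^n + \frac{\Delta t}{\alpha } F(u^n) \right) + (1-\alpha ) \left( u^n + \frac{\Delta t^2}{2(1-\alpha )} {\tilde{F}}(u^n) \right) . \end{aligned}$$

The first bracket is a forward Euler step of size \(\Delta t/\alpha\), and the second is a second-derivative step whose squared step size is \(\Delta t^2/(2(1-\alpha ))\). Both are bounded by \(\Vert u^n \Vert\) provided \(\Delta t \le \alpha \Delta t_{\text{FE}}\) and \(\Delta t \le \sqrt{2(1-\alpha )}\, {\tilde{K}} \Delta t_{\text{FE}}\), so the Taylor series step is bounded by \(\Vert u^n \Vert\) under the smaller of the two restrictions.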

This result recognizes that the SSP-TS methods we study in this paper are a subset of the SSP-SD methods in [5]. This allows us to use results about SSP-SD methods when studying the properties of SSP-TS methods.

The following lemma establishes the Shu-Osher form of an SSP-SD method of the form (9). This form allows us to directly observe the convex combination of steps of the form (12) and (13), and thus easily identify the SSP coefficient \(\mathcal{{C}}_{\text {SD}}\).

Lemma 2

If an explicit method of the form (9) written in the Shu-Osher form

has the properties that

- (i) all the coefficients are non-negative,
- (ii) \(\beta _{ij}=0\) whenever \(\alpha _{ij}=0,\)
- (iii) \({\hat{\beta }}_{ij}=0\) whenever \({\hat{\alpha }}_{ij}=0,\)

then this method preserves the strong stability properties of (12) and (13) (i.e., is SSP-SD) for \(\Delta t\le \mathcal{{C}}_{\text {SD}} \Delta t_{\text{FE}}\) with

Proof

For each stage we have

assuming only that for each one of these \(\Delta t\frac{\beta _{ij}}{\alpha _{ij}} \le \Delta t_{\text{FE}}\) and \(\Delta t\frac{{\hat{\beta }}_{ij}}{{\hat{\alpha }}_{ij}} \le {\tilde{K}} \Delta t_{\text{FE}}\). The result immediately follows from the fact that for each i we have \(\sum _{j=1}^{i-1} \left( \alpha _{ij} + {\hat{\alpha }}_{ij} \right) =1\) for consistency.

When a method is written in the block Butcher form (22), we can decompose it into a canonical Shu-Osher form,

This allows us to define an SSP-SD method directly from the Butcher coefficients.

Definition 2

Given spatial discretizations F and \({\tilde{F}}\) that satisfy (12) and (13), an explicit two-derivative multistage method of the form (22) is called a Strong Stability Preserving Second Derivative (SSP-SD) method with an associated SSP coefficient \(\mathcal{{C}}_{\text {SD}} = \min \{r_{\max }, {\tilde{K}}{\hat{r}}_{\max } \}\) if it satisfies the conditions

for all \(r \in (0, r_{\max }]\) and \({\hat{r}} \in (0, {\hat{r}}_{\max }]\). In the above conditions, the inequalities are understood component-wise.

The relationship between the coefficients in (9) and (24) allows us to conclude that the matrices S and \({\hat{S}}\) must contain only non-negative coefficients.

Lemma 3

If an explicit method of the form (9) can be converted to the Shu-Osher form (24) with all non-negative coefficients \(\alpha _{ij}, \beta _{ij}, {\hat{\alpha }}_{ij}, {\hat{\beta }}_{ij},\) for all i, j, then the coefficients \(a_{ij}, b_j, {\hat{a}}_{ij}, {\hat{b}}_j\) must be all non-negative as well.

Proof

The transformation between (9) and (24) is given by \(a_{21} = \beta _{21}\) and \({\hat{a}}_{21} = {\hat{\beta }}_{21}\) and, recursively,

Clearly then,

and

From there we proceed recursively: given that all \(\alpha _{ij} \ge 0\) and \(\beta _{ij} \ge 0\) for all i, j, and that \(a_{kj} \ge 0\) and \({\hat{a}}_{kj} \ge 0\) for all \(1 \le j < k \le i-1\), then by the formulae (26a) and (26b) we have \(a_{ij} \ge 0\) and \({\hat{a}}_{ij} \ge 0\).

Now given \(\alpha _{ij} \ge 0\) and \(\beta _{ij} \ge 0\) for all i, j and \(a_{kj} \ge 0\) and \({\hat{a}}_{kj} \ge 0\) for all \(1 \le j < k \le s\), the formulae (26c) and (26d) give the result \(b_{j} \ge 0\) and \({\hat{b}}_{j} \ge 0\). Thus, the coefficients \(a_{ij}, {\hat{a}}_{ij}, b_j, {\hat{b}}_j\) must all be non-negative.

We wish to study only those methods for which the Butcher form (9) is unique. To do so, we follow Higueras [18] in extending the reducibility definition of Dahlquist and Jeltsch [19]. Other notions of reducibility exist, but for our purposes it is sufficient to define irreducibility as follows:

Definition 3

A two-derivative multistage method of the form (9) is DJ-reducible if there exist sets \(T_1\) and \(T_2\) such that \(T_1 \ne \varnothing\), \(T_1 \cap T_2 = \varnothing\), \(T_1 \cup T_2 = \{1,2, \ldots , s\}\), and

We say a method is irreducible if it is not DJ-reducible.

Lemma 4

An irreducible explicit SSP-SD method of the form (22) must satisfy the (component-wise) condition

Proof

An SSP-SD method in the block form (22), must satisfy conditions (25a)–(25c) for \(0 < r \le r_{\max }\) and \(0 < {\hat{r}} \le {\hat{r}}_{\max }\). The non-negativity of (25b) and (25c) requires their sum to be non-negative as well,

Note that these matrices commute, so we have

Recalling the definition of the matrix S, we have

Now, we can expand the inverse as

Because the positivity must hold for arbitrarily small \(r \ll 1\) and \({\hat{r}} \ll 1\) we can stop our expansion after the linear term, and require

which is

Now we are ready to address the proof. Assume that \(j=J\) is the largest value for which we have \(b_J = {\hat{b}}_J=0\),

Clearly, then we have

Since the method is explicit, the matrices A and \({\hat{A}}\) are lower triangular (i.e., \(a_{iJ}={\hat{a}}_{iJ} = 0\) for \(i \le J\)), so this condition becomes

By the assumption above, we have \((b_i+{\hat{b}}_i) > 0\) for \(i > J\), and \(r>0, {\hat{r}}>0\). Clearly, then, for (27) to hold we must require

which, together with \(b_J={\hat{b}}_J =0\), makes the method DJ-reducible. Thus we have a contradiction.

We note that this same result, in the context of additive Runge-Kutta methods, is due to Higueras [18].

2.2 Order Barriers

Explicit SSP Runge-Kutta methods with \(\mathcal{{C}}>0\) are known to have an order barrier of four, while the implicit methods have a barrier of six [13]. This follows from the fact that the order p of irreducible methods with non-negative coefficients depends on the stage order q such that

$$\begin{aligned} p \le 2q + 2. \end{aligned}$$

For explicit Runge-Kutta methods the first stage is a forward Euler step, so \(q=1\) and thus \(p \le 4\), whereas for implicit Runge-Kutta methods the first stage is at most of order two, so that \(q=2\) and thus \(p \le 6\).

For two-derivative multistage SSP-TS methods, we find that similar results hold. A stage order of \(q=2\) is possible for explicit two-derivative methods (unlike explicit Runge-Kutta methods) because the first stage can be second order, i.e., a Taylor series method. However, since the first stage can be no greater than second order we have a bound on the stage order \(q \le 2\), which results in an order barrier of \(p \le 6\) for these methods. In the following results we establish these order barriers.

Lemma 5

Given an irreducible SSP-TS method of the form (9), if \(b_j=0,\) then the corresponding \({\hat{b}}_j =0\).

Proof

In any SSP-TS method the appearance of a second-derivative term \({\tilde{F}}\) can only happen as part of a Taylor series term. This tells us that \({\tilde{F}}\) must be accompanied by the corresponding F, meaning that whenever we have a non-zero \({\hat{a}}_{ij}\) or \({\hat{b}}_j\) term, the corresponding \({a}_{ij}\) or \({b_j}\) term must be non-zero.

Lemma 6

Any irreducible explicit SSP-TS method of the form (9) must satisfy the (component-wise) condition

Proof

Any irreducible method (9) that can be written as a convex combination of (12) and (14) can also be written as a convex combination of (12) and (13), according to Lemma 1. Applying Lemma 4 we obtain the condition \(b+{\hat{b}} > 0\), component-wise. Now, Lemma 5 tells us that if any component \({b}_j =0\) then its corresponding \({\hat{b}}_j =0\), so that \(b_j + {\hat{b}}_j > 0\) for each j implies that \(b_j >0\) for each j.

Theorem 2

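Any irreducible explicit SSP-TS method of the form (9) with order \(p\ge 5\) must satisfy the stage order \(q=2\) condition

$$\begin{aligned} \tau _2 = A c +{\hat{c}} - \frac{1}{2} c^2 = 0, \qquad (28) \end{aligned}$$

where the term \(c^2\) is a component-wise squaring.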

Proof

A method of order \(p \ge 5\) must satisfy the 17 order conditions presented in Appendix 1. Three of those necessary conditions are (Footnote 2)

From this, we find that the following linear combination of these equations gives

(once again, the squaring here is component-wise). Given the strict component-wise positivity of the vector b according to Lemma 6 and the non-negativity of \(\tau _2^2\), this condition becomes \(\tau _2 ={\mathbf {0}}\).

Theorem 3

Any irreducible explicit SSP-TS method of the form (9) cannot have order \(p=7\).

Proof

This proof is similar to the proof of Theorem 2. The complete list of additional order conditions for seventh order is lengthy and beyond the scope of this work. However, only three of these conditions are needed for this proof. These are

Combining these three equations we have

From this we see that any seventh order method of the form (9) which admits a decomposition of a convex combination of (12) and (14), must satisfy the stage order \(q=3\) condition

However, as noted above, the first stage of the explicit two-derivative multistage method (9) has the form

which can be at most of second order. This means that the stage order of explicit two-derivative multistage methods can be at most \(q=2\), and so the \(\tau _3=0\) condition cannot be satisfied. Thus, the result of the theorem follows.

Note that the order barriers do not hold for SSP-SD methods, because SSP-SD methods do not require that all components of the vector b must be strictly positive.

3 Optimized SSP Taylor Series Methods

In Sect. 2 we formulated the search for optimal SSP two-derivative methods as:

To accomplish this, we develop and use a MATLAB optimization code [14] (similar to Ketcheson’s code [26]) for finding optimal two-derivative multistage methods that preserve the SSP properties (12) and (14). The SSP coefficients of the optimized SSP explicit multistage two-derivative methods of order up to \(p=6\) (for different values of K) are presented in this section.

We considered three types of methods:

- (M1) Methods that have the general form (9) with no simplifications.

- (M2) Methods that are constrained to satisfy the stage order two (\(q=2\)) requirement (28),

  $$\begin{aligned} \tau _2 = A c +{\hat{c}} - \frac{1}{2} c^2 = 0. \end{aligned}$$

- (M3) Methods that satisfy the stage order two (\(q=2\)) requirement (28) and require only \({\dot{F}}(u^n)\), so they have only one second-derivative evaluation. This is equivalent to requiring that all values in \({\hat{A}}\) and \({\hat{b}}\), except those in the first column of the matrix and the first element of the vector, be zero.
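The two structural requirements above can be checked mechanically for a given tableau. The following is a minimal sketch, assuming the usual abscissa definitions \(c = A e\) and \({\hat{c}} = {\hat{A}} e\) (with e the vector of ones); the function names and the small two-row tableau are hypothetical illustrations and are not one of the optimized methods of this paper.

```python
import numpy as np

def stage_order_two_residual(A, A_hat):
    """Return tau_2 = A c + c_hat - c^2 / 2, with c = A e and c_hat = A_hat e (assumed)."""
    A = np.asarray(A, dtype=float)
    A_hat = np.asarray(A_hat, dtype=float)
    e = np.ones(A.shape[0])
    c = A @ e
    c_hat = A_hat @ e
    return A @ c + c_hat - 0.5 * c**2   # squaring is component-wise

def has_m3_structure(A_hat, b_hat, tol=1e-14):
    """True if only the first column of A_hat and first entry of b_hat are nonzero."""
    A_hat = np.asarray(A_hat, dtype=float)
    b_hat = np.asarray(b_hat, dtype=float)
    return bool(np.all(np.abs(A_hat[:, 1:]) <= tol) and np.all(np.abs(b_hat[1:]) <= tol))

# Hypothetical illustration: second row is a two-term Taylor step of size 1/2.
A = [[0.0, 0.0], [0.5, 0.0]]
A_hat = [[0.0, 0.0], [0.125, 0.0]]
tau_2 = stage_order_two_residual(A, A_hat)
```

For this illustrative tableau the residual vanishes, so the (M2) requirement holds; setting \({\hat{A}}\) to zero instead leaves a nonzero second entry in \(\tau _2\).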

We refer to the methods by type, number of stages, order of accuracy, and value of K. For example, an SSP-TS method of type (M1) with \(s=5\) and \(p=4\), optimized for the value of \(K=1.5\), would be referred to as SSP-TS M1(5,4,1.5) or simply as M1(5,4,1.5). For comparison, we refer to methods from [5] that are SSP in the sense that they preserve the properties of the spatial discretization coupled with (12) and (13) as SSP-SD MDRK(s, p, K) methods.

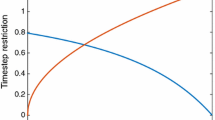

The SSP-TS coefficient \(\mathcal{{C}}_{\text {TS}}\) (on the y-axis) of fourth-order SSP-TS M1 and M2 methods with \(s={3},{4},{5}\) stages plotted against the value of K (on the x-axis). The open stars indicate methods of type (M1) while the filled circles are methods of type (M2). Filled stars are (M1) markers overlaid with (M2) markers indicating close if not equal SSP coefficients

3.1 Fourth-Order Methods

Using the optimization approach described above, we find fourth-order methods with \(s=3,4,5\) stages for a range of \(K=0.1, \ldots , 2.0\). In Fig. 1 we show the SSP coefficients of SSP-TS methods of type (M1) and (M2) with \(s=3,4,5\) (in blue, red, green) plotted against the value of K. The open stars indicate methods of type (M1) while the filled circles are methods of type (M2). Filled stars are (M1) markers overlaid with (M2) markers indicating close if not equal SSP coefficients.

Three Stage Methods Three-stage SSP-TS methods with fourth-order accuracy exist, and all these have stage order two (\(q=2\)), so they are all of type (M2). Figure 1 shows the SSP coefficients of these methods in blue. The (M3) methods have an SSP coefficient

For the case where \(K\ge 1\) we obtain the following optimal (M3) scheme with an SSP coefficient \(\mathcal{{C}}_{\text {TS}} =1\):

When \(K\le 1\) we have to modify the coefficients accordingly to obtain the maximal value of \(\mathcal{{C}}_{\text {TS}}\) as defined above. Here we provide the non-zero coefficients for this family of M3(3,4,K) as a function of K:

In Table 1 we compare the SSP coefficient of three-stage fourth-order SSP-TS methods of type (M2) and (M3) for a selection of values of K. Clearly, the (M3) methods have a much smaller SSP coefficient than the (M2) methods. However, a better measure of efficiency is the effective SSP coefficient computed by normalizing for the number of function evaluations required, which is 2s for the (M2) methods, and \(s+1\) for the (M3) methods. If we consider the effective SSP coefficient, we find that while the (M2) methods are more efficient for the larger values of K, for smaller values of K the (M3) methods are more efficient.
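The normalization described above is simple arithmetic; as a minimal sketch (the function name is hypothetical), it can be written with the evaluation counts stated in the text, 2s for (M2) methods and \(s+1\) for (M3) methods:

```python
def effective_ssp_coefficient(c_ts, stages, method_type):
    """Divide the SSP-TS coefficient by the number of function evaluations per step."""
    if method_type == "M2":
        evaluations = 2 * stages   # F and F-dot are evaluated at every stage
    elif method_type == "M3":
        evaluations = stages + 1   # F at every stage, F-dot only at u^n
    else:
        raise ValueError("method_type must be 'M2' or 'M3'")
    return c_ts / evaluations

# Values quoted in the text: the K >= 1 three-stage (M3) scheme has C_TS = 1,
# and the limiting four-stage (M2) method has C_TS = 4.
c_eff_m3 = effective_ssp_coefficient(1.0, 3, "M3")
c_eff_m2 = effective_ssp_coefficient(4.0, 4, "M2")
```

The second call gives the effective coefficient of one half quoted for the limiting four-stage method.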

Four Stage SSP-TS Methods While four-stage fourth-order explicit SSP Runge-Kutta methods do not exist, four-stage fourth-order SSP-TS explicit two-derivative Runge-Kutta methods do. Four-stage fourth-order methods do not necessarily satisfy the stage order two (\(q=2\)) condition. These methods have a more nuanced behavior: for very small \(K<0.2\), the optimized SSP methods have stage order \(q=1\). For \(0.2< K< 1.6\) the optimized SSP methods have stage order \(q=2\). Once K becomes larger again, for \(K \ge 1.6\), the optimized SSP methods are once again of stage order \(q=1\). However, the difference in the SSP coefficients is very small (so small it does not show on the graph) so the (M2) methods can be used without significant loss of efficiency.

As seen in Table 2, the methods with the special structure (M3) have smaller SSP coefficients. But when we look at the effective SSP-TS coefficient we notice that, once again, for smaller K they are more efficient. Table 2 shows that the (M3) methods are more efficient when \(K \le 1.5\), and remain competitive for larger values of K.

It is interesting to consider the limiting case, SSP-TS M2(\(4,4,\infty\)), in which the Taylor series formula is unconditionally stable (i.e., \(K=\infty\)). This provides us with an upper bound of the SSP coefficient for this class of methods by ignoring any time-step constraint coming from condition (14). A four-stage fourth-order method that is optimal for \(K=\infty\) is

This method has an SSP coefficient \(\mathcal{{C}}_{\text {TS}}=4\), with an effective SSP coefficient \(\mathcal{{C}}_{\text{eff}}= \frac{1}{2}\). This method also has stage order \(q=2\). This method is not intended to be useful in the SSP context but gives us an idea of the limiting behavior: i.e., what the best possible value of \(\mathcal{{C}}_{\text {TS}}\) could be if the Taylor series condition imposed no constraint (\(K=\infty\)). We observe in Table 2 that the SSP coefficient of the M2(4,4,K) method is within 10% of this limiting \(\mathcal{{C}}_{\text {TS}}\) for \(K=2\).

Five-Stage Methods The optimized five-stage fourth-order methods have stage order \(q=2\) for the values of \(0.5 \le K \le 7\), and otherwise have stage order \(q=1\). The SSP coefficients of these methods are shown in the green line in Fig. 1, and the SSP and effective SSP coefficients for all three types of methods are compared in Table 3. We observe that these methods have higher effective SSP coefficients than the corresponding four-stage methods.

3.2 Fifth-Order SSP-TS Methods

While fifth-order explicit SSP Runge-Kutta methods do not exist, the addition of a second derivative which satisfies the Taylor Series condition allows us to find explicit SSP-TS methods of fifth order. For fifth order, we have the result (in Sect. 2.2 above) that all methods must satisfy the stage order \(q=2\) condition, so we consider only (M2) and (M3) methods. In Fig. 2 we show the SSP-TS coefficients of M2(s,5,K) methods for \(s=4,5,6\).

Four-Stage Methods Four-stage fifth-order methods exist, and their SSP-TS coefficients are shown in blue in Fig. 2. We were unable to find M3(4,5,K) methods, possibly due to the paucity of available coefficients for this form.

Five-Stage Methods The SSP coefficient of the five-stage M2 methods can be seen in red in Fig. 2. We observe that the SSP coefficient of the M2(5,5,K) methods plateaus with respect to K. As shown in Table 4, methods with the form (M3) have a significantly smaller SSP coefficient than that of (M2). However, the effective SSP coefficient is more informative here, and we see that the (M3) methods are more efficient for small values of \(K \le 0.5\), but not for larger values.

Six-Stage Methods The SSP coefficient of the six-stage M2 methods can be seen in green in Fig. 2. In Table 4 we compare the SSP coefficients and effective SSP coefficients of (M2) and (M3) methods. As in the case above, the methods with the form (M3) have a significantly smaller SSP coefficient than that of (M2), and the SSP coefficient of the (M3) methods plateaus with respect to K. However, the effective SSP coefficient shows that the (M3) methods are more efficient for small values of \(K \le 0.7\), but not for larger values.

3.3 Sixth-Order SSP-TS Methods

As shown in Sect. 2.2, the highest order of accuracy this class of methods can obtain is \(p=6\), and these methods must satisfy (28). We find sixth-order methods with \(s=5,6,7\) stages of type (M2). As to methods with the special structure M3, we are unable to find methods with \(s \le p\), but we find M3(7,6,K) methods and M3(8,6,K) methods. In the first six rows of Table 5 we compare the SSP-TS and effective SSP-TS coefficients of the (M2) methods with \(s=5,6,7\) stages. In the last four rows of Table 5 we compare the SSP coefficients and effective SSP coefficients for sixth-order methods with \(s=7,8\) stages. Figure 3 shows the SSP-TS coefficients of the optimized (M3) methods for seven and eight stages, which clearly plateau with respect to K (as can be seen in the tables as well). For the sixth-order methods, it is clear that M3(8,6,K) methods are most efficient for all values of K.

3.4 Comparison with Existing Methods

First, we wish to compare the methods in this work to those in our prior work [5]. If a spatial discretization satisfies the forward Euler condition (12) and the second-derivative condition (13) it will also satisfy the Taylor series condition (14), with

$$\begin{aligned} K = {\tilde{K}} \left( \sqrt{{\tilde{K}}^2 +2 } - {\tilde{K}} \right) . \end{aligned}$$

In this case, it is preferable to use the SSP-SD MDRK methods in [5]. However, in the case that the second-derivative condition (13) is not satisfied for any value of \({\tilde{K}} >0\), or if the Taylor series condition is independently satisfied with a larger K than would be established from the two conditions, i.e., \(K > {\tilde{K}} \left( \sqrt{{\tilde{K}}^2 +2 } - {\tilde{K}} \right)\), then it may be preferable to use one of the SSP-TS methods derived in this work.
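The value \({\tilde{K}} \left( \sqrt{{\tilde{K}}^2 +2 } - {\tilde{K}} \right)\) can be recovered from a convex splitting of the Taylor series step. The sketch below assumes that the second-derivative condition has the form \(\Vert u + \Delta t^2 {\dot{F}}(u) \Vert \le \Vert u \Vert\) for \(\Delta t \le {\tilde{K}} \Delta t_{\text {FE}}\); writing \(r = \Delta t / \Delta t_{\text {FE}}\) and splitting the Taylor step with weights r and \(1-r\) then requires \(r^2 = 2 {\tilde{K}}^2 (1-r)\). The function name is hypothetical.

```python
import math

def taylor_series_K(K_tilde):
    """K implied by the forward Euler and (assumed form of the) second-derivative condition.

    Algebraically equal to K_tilde * (sqrt(K_tilde**2 + 2) - K_tilde), written in a
    form that avoids cancellation when K_tilde is large.
    """
    return 2.0 * K_tilde / (math.sqrt(K_tilde**2 + 2.0) + K_tilde)

K_one = taylor_series_K(1.0)      # equals sqrt(3) - 1
K_large = taylor_series_K(1.0e8)  # approaches 1 from below
```

Under this assumption the implied K never exceeds one, which is consistent with the remark that an independently established larger K favors the SSP-TS methods.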

Next, we wish to compare the methods in this work to those in [32], which was the first paper to consider an SSP property based on the forward Euler and Taylor series base conditions. The approach used in our work is somewhat similar to that in [32], where the authors build time integration schemes that can be written as convex combinations of forward Euler and Taylor series time steps and aim to find methods with the largest SSP coefficients. However, there are several differences between our approach and that of [32]. As a result, in this paper we are able to find more methods, of higher order, and with better SSP coefficients. In addition, in the present work we find and prove an order barrier for SSP-TS methods.

The first difference between our approach and the approach in [32] is that we allow computations of \({\dot{F}}\) of the intermediate values, rather than only \({\dot{F}}(u^n)\). Another way of saying this is that we consider SSP-TS methods that are not of type M3, while the methods considered in [32] are all of type M3. In some cases, when we restrict our search to M3 methods and \(K=1\), we find methods with the same SSP coefficient as in [32]. For example, HBT34 matches our SSP-TS M3(3,4,1) method with an SSP coefficient of \(\mathcal{{C}}_{\text {TS}}=1\), HBT44 matches our SSP-TS M3(4,4,1) method with \(\mathcal{{C}}_{\text {TS}}=\frac{20}{11}\), HBT54 matches our SSP-TS M3(5,4,1) method with \(\mathcal{{C}}_{\text {TS}}=2.441\), and HBT55 matches our SSP-TS M3(5,5,1) method with an SSP coefficient of \(\mathcal{{C}}_{\text {TS}}=1.062\). While methods of type M3 have their advantages, they are sometimes sub-optimal in terms of efficiency, as we point out in the tables.

The second difference between the SSP-TS methods in this paper and the methods in [32] is that in [32] only one method of order \(p>4\) is reported, while we have many fifth- and sixth-order methods of various types and stages, optimized for a variety of K values.

The most fundamental difference between our approach and the approach in [32] is that our methods are optimized for the relationship between the forward Euler restriction and the Taylor series restriction, while the time-step restriction in the methods of [32] is defined as the most restrictive of the forward Euler and Taylor series time-step conditions. Respecting the minimum of the two cases will still satisfy the nonlinear stability property, but this approach does not allow for a balance between the restrictions considered, which can lead to much more restrictive conditions. In our approach we use the relationship between the two time-step restrictions to select optimal methods. For this reason, the methods we find have larger allowable time steps in many cases.

To understand this a little better, consider the case where the forward Euler condition is \(\Delta t_{\text {FE}} \le \Delta x\) and the Taylor series condition is \(\Delta t_{\text {TS}} \le \frac{1}{2}\Delta x\). In the approach used in [32], the base time-step restriction is then \(\Delta t_{\max } = \min \{ \Delta t_{\text {FE}}, \Delta t_{\text {TS}} \} \le \frac{1}{2}\Delta x\). The HBT23 method in [32] is a third-order scheme with two stages which has an SSP coefficient of \(\mathcal{{C}}_{\text {TS}}=1\), so the allowable time step with this scheme will be the same, \(\Delta t\le \mathcal{{C}}_{\text {TS}} \Delta t_{\max } \le \frac{1}{2}\Delta x\). On the other hand, using our optimal SSP-TS M2(2,3,0.5) scheme, which has an SSP coefficient \(\mathcal{{C}}_{\text {TS}}=0.75\), the allowable time step is \(\Delta t\le \mathcal{{C}}_{\text {TS}} \Delta t_{\text {FE}} \le \frac{3}{4} \Delta x\), a 50% increase.

This is not only true when \(K<1\): consider the case where \(\Delta t_{\text {FE}}\le \frac{1}{2}\Delta x\) and \(\Delta t_{\text {TS}} \le \Delta x\). Once again the HBT23 method in [32] will have a time-step restriction of \(\Delta t\le \mathcal{{C}}_{\text {TS}} \Delta t_{\max } \le \frac{1}{2}\Delta x\), while our M2(2,3,2) method has an SSP coefficient \(\mathcal{{C}}_{\text {TS}}=1.88\), so that the overall time-step restriction would be \(\Delta t\le \frac{1.88}{2} \Delta x=0.94 \Delta x\), which is 88% larger. Even when the two base conditions are the same (i.e., \(K=1\)) and we have \(\Delta t_{\text {FE}} \le \Delta x\) and \(\Delta t_{\text {TS}} \le \Delta x\), the HBT23 method in [32], with \(\mathcal{{C}}_{\text {TS}}=1\), gives an allowable time step of \(\Delta t \le \Delta x\), while our SSP-TS M2(2,3,1) has an SSP coefficient \(\mathcal{{C}}_{\text {TS}}=1.5\), so that our method allows a time step that is 50% larger (see Footnote 3). These simple cases demonstrate that our methods, which are optimized for the value of K, will usually allow a larger SSP coefficient than the methods obtained in [32].
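The three comparisons above reduce to a few lines of arithmetic. The sketch below uses the SSP coefficients quoted in the text, with time steps measured in units of \(\Delta x\); the function names are hypothetical.

```python
def dt_most_restrictive_rule(c_ts, dt_fe, dt_ts):
    """Allowable step when the base step is the more restrictive of the two conditions."""
    return c_ts * min(dt_fe, dt_ts)

def dt_k_optimized(c_ts, dt_fe):
    """Allowable step for a method whose coefficient was optimized for K = dt_ts / dt_fe."""
    return c_ts * dt_fe

# (dt_FE, dt_TS, coefficient of the K-optimized M2(2,3,K) method), in units of dx
cases = [(1.0, 0.5, 0.75), (0.5, 1.0, 1.88), (1.0, 1.0, 1.5)]
ratios = []
for dt_fe, dt_ts, c_opt in cases:
    baseline = dt_most_restrictive_rule(1.0, dt_fe, dt_ts)  # HBT23 has C_TS = 1
    ratios.append(dt_k_optimized(c_opt, dt_fe) / baseline)
```

The resulting ratios correspond to the 50%, 88%, and 50% gains stated above.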

4 Numerical Results

4.1 Overview of Numerical Tests

We wish to test our methods on what are now considered standard benchmark tests in the SSP community. In this subsection we preview our results, which we then present in more detail throughout the remainder of the section.

First, in the tests in Sect. 4.3 we focus on how the strong stability properties of these methods are observed in practice, by considering the total variation of the numerical solution. We focus on two scalar PDEs: the linear advection equation and Burgers’ equation, using simple first-order spatial discretizations which are known to satisfy a total variation-diminishing property over time for the forward Euler and Taylor series building blocks. We want to ensure that our numerical approximations to these solutions exhibit similar properties as long as the predicted SSP time-step restriction, \(\Delta t \le \mathcal{{C}}_{\text {TS}} \Delta t_{\text {FE}}\), is respected. These scalar one-dimensional partial differential equations are chosen for their simplicity so we may understand the behavior of the numerical solution, but the discontinuous initial conditions may lead to instabilities if standard time discretization techniques are employed. Our tests show that the methods we design here preserve these properties as expected by the theory.

In Example 2, we extend the results from Example 1 to the case where we use the higher order weighted essentially non-oscillatory (WENO) method, which is not provably TVD but gives results that have very small increases in total variation. We demonstrate that our methods out-perform other methods, such as the SSP-SD MDRK methods in [5], and that non-SSP methods that are standard in the literature do not preserve the TVD property for any time step.

In many of these examples we are concerned with the total variation-diminishing property. To measure the sharpness of the SSP condition we compute the maximal observed rise in total variation over each step, defined by

as well as the maximal observed rise in total variation over each stage, defined by

where \(y^{(s+1)}\) corresponds to \(u^{n+1}\). The quantity of interest is the time step \(\Delta t_{\text {obs}}\), or the SSP coefficient \(\mathcal{{C}}_{\text {TS}}^{\text {obs}} = \frac{\Delta t_{\text {obs}}}{\Delta t_{\text{FE}}}\) at which this rise becomes significant, as defined by a maximal increase of \(10^{-10}\).
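As a minimal sketch of how such a measurement might be carried out, the code below assumes that the rise over a step is \(\Vert u^{n+1} \Vert _{TV} - \Vert u^n \Vert _{TV}\) with a periodic discrete total variation, and that the observed coefficient is read off from a scan over increasing \(\lambda\). The function names and the scan logic are hypothetical; the threshold \(10^{-10}\) is the one stated in the text.

```python
import numpy as np

def total_variation(u):
    """Discrete total variation on a periodic grid."""
    u = np.asarray(u, dtype=float)
    return float(np.sum(np.abs(np.roll(u, -1) - u)))

def max_tv_rise(states):
    """Largest increase in total variation between consecutive states."""
    tv = [total_variation(s) for s in states]
    return max(later - earlier for earlier, later in zip(tv, tv[1:]))

def observed_coefficient(lambdas, rises, tol=1e-10):
    """Largest scanned lambda reached before the rise first exceeds tol."""
    last_ok = None
    for lam, rise in zip(lambdas, rises):
        if rise > tol:
            break
        last_ok = lam
    return last_ok

rise = max_tv_rise([[0.0, 1.0, 1.0, 0.0], [0.0, 1.5, 1.0, 0.0]])
```

The same `max_tv_rise` helper can be applied to the sequence of stage values within a step to measure the rise over each stage.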

It is important to notice that the SSP-TS methods we designed depend on the value of K in (14). However, in practice we often do not know the exact value of K. In Example 3 we investigate what happens when we use spatial discretizations with a given value of K with time discretization methods designed for an incorrect value of K. We conclude that although in some cases a smaller step size is required, for methods of type M3 there is generally no adverse result from selecting the wrong value of K.

In Example 4 we investigate the increased flexibility in the choice of spatial discretization that results from relying on the (12) and (14) base conditions. The only constraint in the choice of differentiation operators \(D_x\) and \({\tilde{D}}_x\) (described at the end of Sect. 1.2) is that the resulting building blocks must satisfy the monotonicity conditions (12) and (14) in the desired convex functional \(\Vert \cdot \Vert\). As noted above, this constraint is less restrictive than requiring that (12) and (13) are satisfied: any spatial discretizations for which (12) and (13) are satisfied will also satisfy (14). However, there are some spatial discretizations that satisfy (12) and (14) that do not satisfy (13). In Example 4 we find that choosing spatial discretizations that satisfy (12) and (14) but not (13) allows for larger time steps before the rise in total variation. And finally, in Example 5, we demonstrate the positivity-preserving behavior of our methods when applied to a nonlinear system of equations.

4.2 On the Numerical Implementation of the Second Derivative

In the following numerical test cases the spatial discretization is performed as follows: at each iteration we take the known value \(u^n\) and compute the flux \(f(u^n) = - u^n\) in the linear case and \(f(u^n) = \frac{1}{2} \left( u^n \right) ^2\) for Burgers’ equation. Now to compute the spatial derivative \(f(u^n)_x\) we use an operator \(D_x\) and compute

In the numerical examples below the differential operator \(D_x\) will represent, depending on the problem, either a first-order upwind finite difference scheme or the fifth-order finite difference WENO method [20]. In our scalar test cases \(f'(u)\) does not change sign, so we avoid flux splitting.

Now we have the approximation to \(U_t\) at time \(t^n\), and wish to compute the approximation to \(U_{tt}\). For the linear advection problem, this is very straightforward as \(U_{tt} = U_{xx}\). To compute this, we take \(u_x\) as computed before, and differentiate it again. For Burgers’ equation, we have \(U_{tt} = \left( - U U_t \right) _x\). We take the approximation to \(U_t\) that we obtained above, and we multiply it by \(u^n\), then differentiate in space once again. In pseudocode, the calculation takes the form

Using these, we can now construct our two building blocks

In choosing the spatial discretizations \(D_x\) and \({\tilde{D}}_x\) it is important that these building blocks satisfy (12) and (14) in the desired convex functional \(\Vert \cdot \Vert\).
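The procedure of this subsection can be sketched as follows, assuming periodic first-order difference operators in place of \(D_x\) and \({\tilde{D}}_x\). All function names are hypothetical, and the choice of operator for each problem is an assumption of this sketch rather than the discretization used in the experiments.

```python
import numpy as np

def forward_diff(v, dx):
    """First-order forward difference with periodic wrap-around."""
    return (np.roll(v, -1) - v) / dx

def backward_diff(v, dx):
    """First-order backward difference with periodic wrap-around."""
    return (v - np.roll(v, 1)) / dx

def first_and_second_time_derivatives(u, flux, dflux, D, D_tilde, dx):
    """Approximate U_t and U_tt for the conservation law U_t + f(U)_x = 0."""
    F = -D(flux(u), dx)                  # U_t  = -f(U)_x
    F_dot = -D_tilde(dflux(u) * F, dx)   # U_tt = -(f'(U) U_t)_x
    return F, F_dot

def building_blocks(u, F, F_dot, dt):
    """Forward Euler step and two-term Taylor series step."""
    forward_euler = u + dt * F
    taylor_series = u + dt * F + 0.5 * dt**2 * F_dot
    return forward_euler, taylor_series

# Linear case f(u) = -u, and Burgers' flux f(u) = u^2 / 2, on sample data.
rng = np.random.default_rng(0)
u = rng.random(16)
dx, dt = 0.1, 0.05
F_lin, Fdot_lin = first_and_second_time_derivatives(
    u, lambda w: -w, lambda w: -np.ones_like(w), forward_diff, forward_diff, dx)
F_bur, Fdot_bur = first_and_second_time_derivatives(
    u, lambda w: 0.5 * w**2, lambda w: w, backward_diff, backward_diff, dx)
fe, ts = building_blocks(u, F_lin, Fdot_lin, dt)
```

For the linear flux with the forward difference used twice, the Taylor series block reduces to a three-point stencil in \(u_j\), \(u_{j+1}\), and \(u_{j+2}\).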

4.3 Example 1: TVD First-Order Finite Difference Approximations

In this section we use first-order spatial discretizations that are provably total variation diminishing (TVD), coupled with a variety of time-stepping methods. We look at the maximal rise in total variation.

Example 1a: Linear advection As a first test case, we consider a linear advection problem

on a domain \(x \in [-1,1]\), with step-function initial conditions

and periodic boundary conditions. This simple example is chosen as our experience has shown [13] that this problem often demonstrates the sharpness of the SSP time step.

For the spatial discretization we use a first-order forward difference for the first and second derivative:

These spatial discretizations satisfy

- Forward Euler condition: \(u^{n+1}_j = u^n_j + \frac{\Delta t}{\Delta x} \left( u^n_{j+1} - u^n_j \right)\) is TVD for \(\Delta t \le \Delta x\), and

- Taylor series condition: \(u^{n+1}_j = u^n_j + \frac{\Delta t}{\Delta x} \left( u^n_{j+1} - u^n_j \right) + \frac{1}{2} \left( \frac{\Delta t}{\Delta x} \right) ^2 \left( u^n_{j+2} - 2 u^n_{j+1} + u^n_{j} \right)\) is TVD for \(\Delta t \le \Delta x\).

Thus \(\Delta t_{\text{FE}}= \Delta x\), and in this case we have \(K=1\) in (14). Note that the second-derivative discretization used above does not satisfy the second-derivative condition (13), so that most of the methods we devised in [5] do not guarantee strong stability preservation for this problem.
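The two TVD thresholds above can be checked numerically. The sketch below applies the forward Euler and Taylor series stencils with \(\lambda = \Delta t / \Delta x\) to a step profile on a periodic grid and records the largest rise in total variation over 50 steps. The particular step profile, the grid, and the function names are assumptions made for illustration.

```python
import numpy as np

def tv(u):
    """Discrete total variation on a periodic grid."""
    return float(np.sum(np.abs(np.roll(u, -1) - u)))

def fe_step(u, lam):
    """Forward Euler with a first-order forward difference."""
    return u + lam * (np.roll(u, -1) - u)

def ts_step(u, lam):
    """Taylor series step: the forward difference applied once and twice."""
    up1, up2 = np.roll(u, -1), np.roll(u, -2)
    return u + lam * (up1 - u) + 0.5 * lam**2 * (up2 - 2.0 * up1 + u)

def max_rise(step, lam, u0, n_steps=50):
    """Largest one-step increase in total variation over n_steps steps."""
    u, worst = u0.copy(), -np.inf
    for _ in range(n_steps):
        new = step(u, lam)
        worst = max(worst, tv(new) - tv(u))
        u = new
    return worst

x = np.linspace(-1.0, 1.0, 600, endpoint=False)
u0 = np.where(np.abs(x) <= 0.5, 1.0, 0.0)   # hypothetical step-function profile

rise_fe_ok, rise_ts_ok = max_rise(fe_step, 1.0, u0), max_rise(ts_step, 1.0, u0)
rise_fe_bad, rise_ts_bad = max_rise(fe_step, 1.2, u0), max_rise(ts_step, 1.2, u0)
```

Both stencils are convex combinations of shifted copies of \(u^n\) exactly when \(\lambda \le 1\), which is why the rise stays below the \(10^{-10}\) threshold at \(\lambda = 1\) and exceeds it at \(\lambda = 1.2\).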

For all of our simulations for this example, we use a fixed grid of \(M=601\) points, for a grid size \(\Delta x = \frac{1}{600}\), and a time step \(\Delta t = \lambda \Delta x\) where we vary \(\lambda\) from \(\lambda = 0.05\) until beyond the point where the TVD property is violated. We step each method forward by \(N=50\) time-steps and compare the performance of the various time-stepping methods constructed earlier in this work, for \(K = 1\). We define the observed SSP coefficient \(\mathcal{{C}}_{\text {TS}}^{\text {obs}}\) as the multiple of \(\Delta t_{\text{FE}}\) for which the maximal rise in total variation exceeds \(10^{-10}\).

We verify that the observed values of \(\Delta t_{\text{FE}}\) and K match the predicted values, and test this problem to see how well the observed SSP coefficient \(\mathcal{{C}}_{\text {TS}}^{\text {obs}}\) matches the predicted SSP coefficient \(\mathcal{{C}}_{\text {TS}}^{\text {pred}}\) for the fourth-, fifth-, and sixth-order methods. The results are listed in the upper half of Table 6.

Example 1b: Burgers’ equation We repeat the example above with all the same parameters but for the problem

on \(x \in (-1,1)\). Here we use the spatial derivatives

and

Using Harten’s lemma we can easily show that these definitions of F and \({\tilde{F}}\) cause the Taylor series condition to be satisfied for \(\Delta t \le \Delta x\). The results are quite similar to those of the linear advection equation in Example 1a, as can be seen in the lower half of Table 6.

The results from these two studies show that the SSP-TS methods provide a reliable guarantee of the allowable time step for which the method preserves the strong stability condition in the desired norm. For methods of order \(p=4\), we observe that the SSP coefficient is sharp: the predicted and observed values of the SSP coefficient are identical for all the fourth-order methods tested. For methods of higher order (\(p=5,6\)) the observed SSP coefficient is often significantly higher than the minimal value guaranteed by the theory.

4.4 Example 2: Weighted Essentially Non-oscillatory (WENO) Approximations

In this section we re-consider the nonlinear Burgers’ equation (35)

on \(x \in (-1,1)\). We use the step function initial conditions (34), and periodic boundaries. We use \(M=201\) points in the spatial domain, so that \(\Delta x =\frac{1}{100}\), and we step forward for \(N=50\) time steps and measure the maximal rise in total variation for each case.

For the spatial discretization, we use the fifth-order finite difference WENO method [20] in space, as this is a high-order method that can handle shocks. We describe this method in Appendix 3. Recall that the motivation for the development of SSP multistage multi-derivative time-stepping is for use in conjunction with high-order methods for problems with shocks. Ideally, the specially designed spatial discretizations satisfy (12) and (14). Although the weighted essentially non-oscillatory (WENO) methods do not have a theoretical guarantee of this type, in practice we observe that these methods do control the rise in total variation, as long as the step-size is below a certain threshold.

Below, we refer to the WENO method on a flux with \(f'(u) \ge 0\) as \(\hbox {WENO}^+\) defined in (41) and to the corresponding method on a flux with \(f'(u) \le 0\) as \(\hbox {WENO}^-\) defined in (42). Because \(f'(u)\) is non-negative in this example, we do not need to use flux splitting, and use \(D_x =\hbox {WENO}^+\). For the second derivative we have the freedom to use \({\tilde{D}}_x=\hbox {WENO}^+\) or \({\tilde{D}}_x=\hbox {WENO}^-\). In this example, we use \({\tilde{D}}_x=D_x=\hbox {WENO}^+\). In Example 4 below we show that this is more efficient.

In Fig. 4(a), we compare the performance of our SSP-TS M3(7,5,1) and SSP-TS M2(4,5,1) methods, which both have eight function evaluations per time step, and our SSP-TS M3(5,5,1), which has six function evaluations per time step, to the SSP-SD MDRK(3,5,2) in [5] and non-SSP RK(6,5) Dormand-Prince method [8], which also have six function evaluations per time step. We note that we use the SSP-SD MDRK(3,5,2) (designed for \(K=2\)) because this method performs best compared to other explicit two-derivative multistage methods designed for different values of K. Clearly, the non-SSP method is not safe to use on this example. The M3 methods are most efficient, allowing the largest time step per function evaluation before the total variation begins to rise.

The same conclusion holds for the sixth-order methods. In Fig. 4(b), we compare our SSP-TS M3(9,6,1) and M2(5,6,1) methods, which both have ten function evaluations per time step, and our M3(7,6,1), which has eight function evaluations per time step, to the SSP-SD MDRK(4,6,1) and the non-SSP RK(8,6) method given in Verner’s paper [52], which also have eight function evaluations per time step. Clearly, the non-SSP method is not safe to use on this example. The M3 methods are most efficient, allowing the largest time step per function evaluation before the total variation begins to rise.

This example demonstrates the need for SSP methods: classical non-SSP methods do not control the rise in total variation. We also observe that the methods of type M3 are efficient, and may be the preferred choice of methods for use in practice.

Example 2: Comparison of the maximal rise in total variation (on the y-axis) as a function of \(\lambda =\frac{\Delta t}{\Delta x}\) (on the x-axis) for a selection of time-stepping methods for evolving Burgers’ equation with WENO spatial discretizations. (a) Fifth-order methods. (b) Sixth-order methods

4.5 Example 3: Testing Methods Designed with Various Values of K

In general, the value of K is not exactly known for a given problem, so we cannot choose a method that is optimized for the correct K. We wish to investigate how methods with different values of K perform for a given problem. In this example, we re-consider the linear advection Eq. (33)

with step function initial conditions (34), and periodic boundary conditions on \(x \in (-1,1)\). We use the fifth-order WENO method with \(M=201\) points in the spatial domain, so that \(\Delta x =\frac{1}{100}\), and we step forward for \(N=50\) time steps and measure the maximal rise in total variation for each case. Using this example, we investigate how time-stepping methods optimized for different K values perform on the linear advection with finite difference spatial approximation test case above, where it is known that \(K=1\). We use a variety of fifth- and sixth-order methods, designed for \(0.1 \le K \le 2\) and give the value of \(\lambda = \frac{\Delta t}{\Delta x}\) for which the maximal rise in total variation becomes large, when applied to the linear advection problem.

In Fig. 5(a) we give the observed value (solid lines) of \(\lambda\) for a number of SSP-TS methods, M2(4,5,K), M2(5,5,K), M2(6,5,K), M3(5,5,K), and M3(6,5,K), and the corresponding predicted value (dotted lines) that a method designed for \(K=1\) should give. In Fig. 5(b) we repeat this study with sixth-order methods M2(5,6,K), M2(6,6,K), M3(7,6,K), and M3(8,6,K). We observe that while choosing the correct K value can be beneficial, and is certainly important theoretically, in practice using methods designed for different K values often makes little difference, particularly when the method is optimized for a value close to the correct K value. For the sixth-order methods in particular, the observed values of the SSP coefficient are all larger than the predicted SSP coefficient.

Example 3: The observed value of \(\lambda =\frac{\Delta t}{\Delta x}\) such that the method is TVD (y-axis) when methods designed for different K values (on the x-axis) are applied to the problem with \(K=1\). For each method, the observed value (solid line) is higher than the predicted value (dashed line)

4.6 Example 4: The Benefit of Different Base Conditions

In [5] we use the choice of \(D_x= {\text {WENO}}^{+}\) defined in (41), followed by \({\tilde{D}}_x={\text {WENO}}^{-}\) defined in (42), by analogy to the first-order finite difference for the linear advection case \(U_t = U_x\), where we use a differentiation operator \(D_x^{+}\) followed by the downwind differentiation operator \(D_x^{-}\) to produce a centered difference for the second derivative. In fact, this approach makes sense for these cases because it respects the properties of the flux for the second derivative and consequently satisfies the second-derivative condition (13). However, if we simply wish the Taylor series formulation to satisfy a TVD-like condition, we are free to use the same operator (\({\text {WENO}}^{+}\) or \({\text {WENO}}^{-}\), as appropriate) twice, and indeed this gives a larger allowable \(\Delta t\).

In Fig. 6 we show how using the repeated upwind discretization \(D_x= {\text {WENO}}^{-}\) and \({\tilde{D}}_x = {\text {WENO}}^{-}\) (solid lines), which satisfies the Taylor series condition (14) but not the second-derivative condition (13), to approximate the higher order derivative allows for a larger time step than the spatial discretizations (dashed lines) used in [5]. We see that for the fifth-order methods the rise in total variation always occurs for larger \(\lambda\) for the solid lines (\({\tilde{D}}_x=D_x={\text {WENO}}^{-}\)) than for the dashed lines (\(D_x={\text {WENO}}^{-}\) and \({\tilde{D}}_x= {\text {WENO}}^{+}\)), even for the method designed in [5] to be SSP for the second case but not the first case. For the sixth-order methods the results are almost the same, though the SSP-SD MDRK(4,6,1) method that is SSP for base conditions of the type in [5] performs identically in both cases. These results demonstrate that requiring that the spatial discretizations only satisfy (12) and (14) (but not necessarily (13)) results in methods with larger allowable time steps.

Example 4: The maximal rise in total variation (on the y-axis) for values of \(\lambda\) (on the x-axis). Simulations using the repeated upwind discretization \(D_x= {\text {WENO}}^{-}\) and \({\tilde{D}}_x = {\text {WENO}}^{-}\) (solid lines) are more efficient than those using \(D_x={\text {WENO}}^{-}\) and \({\tilde{D}}_x= {\text {WENO}}^{+}\) (dashed lines). This demonstrates the enhanced allowable time step afforded by the SSP-TS methods

4.7 Example 5: Nonlinear Shallow Water Equations

As a final test case we consider the shallow water equations, where we are concerned with the preservation of positivity in the numerical solution. The shallow water equations [2] are a nonlinear system of hyperbolic conservation laws defined by

where h(x, t) denotes the water height at location x and time t, v(x, t) the water velocity, g is the gravitational constant, and \(U = (h, h v)^{\text {T}}\) is the vector of unknown conserved variables. In our simulations, we set \(g=1\). To discretize this problem, we use the standard Lax-Friedrichs splitting

and define the (conservative) approximation to the first derivative as

We discretize the spatial grid \(x\in (0,1)\) with \(M=201\) points. To approximate the second derivative, we start with element-wise first derivative \(u_{j,t} := -\frac{1}{ {\Delta x} } \left( {\hat{f}}_{j+1/2} - {\hat{f}}_{j-1/2} \right)\), and then approximate the second derivative (consistent with (11)) as

where \(f'(u_{j\pm 1})\) is the Jacobian of the flux function evaluated at \(u_{j\pm 1}\). A simple first-order spatial discretization is chosen here because it enables us to show that positivity is preserved for forward Euler and Taylor series for \(\lambda ^+_{\text {FE}} = \lambda ^+_{\text {TS}}= \alpha \frac{\Delta t}{\Delta x} \le 1\).
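As a sketch of why the forward Euler bound holds for the water height, suppose the splitting takes the usual Lax-Friedrichs form \(f^{\pm }(u) = \frac{1}{2}\left( f(u) \pm \alpha u \right)\) with numerical flux \({\hat{f}}_{j+1/2} = f^{+}(u_j) + f^{-}(u_{j+1})\). These two forms are an assumption of this sketch, chosen to match the description above. With \(\lambda = \alpha \frac{\Delta t}{\Delta x}\) and the first flux component \(f^{(1)} = h v\), the height update under forward Euler can be rearranged as

\[ h_j^{n+1} = h_j^{n} - \frac{\Delta t}{\Delta x} \left( {\hat{f}}^{(1)}_{j+1/2} - {\hat{f}}^{(1)}_{j-1/2} \right) = (1-\lambda )\, h_j^{n} + \frac{\lambda }{2}\, h_{j+1}^{n} \left( 1 - \frac{v_{j+1}^{n}}{\alpha } \right) + \frac{\lambda }{2}\, h_{j-1}^{n} \left( 1 + \frac{v_{j-1}^{n}}{\alpha } \right) . \]

If \(\alpha \ge \max _j |v_j^{n}|\) and \(0 \le \lambda \le 1\), every coefficient on the right-hand side is non-negative, so \(h^{n} \ge 0\) implies \(h^{n+1} \ge 0\). The corresponding argument for the Taylor series step is not reproduced here.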

In problems such as the shallow water equations, the non-negativity of the numerical solution is important as a height of \(h<0\) is not physically meaningful, and the system loses hyperbolicity when the height becomes negative. For a positivity-preserving test case, we consider a Riemann problem with zero initial velocity, but with a wet and a dry state [2, 53]:

In our numerical simulations, we focus on the impact of the numerical scheme on the positivity of the computed water height h(x, t). This quantity is of interest from a numerical perspective because if the height \(h(x,t) < 0\) for any x or t, the code will crash when it takes the square root of the height.

First, we investigate the behavior of the base methods in terms of the positivity-preserving time step; that is, we seek numerical values for \(\Delta t_{\text{FE}}\) and \(K\). To do so, we evolve the solution forward with the forward Euler and Taylor series approaches for an increasing number of time steps and for different values of \(\lambda = \alpha \frac{\Delta t}{\Delta x}\), and identify the largest positivity-preserving value \(\lambda ^{\text {pred}}\). Using this approach, we see that as we increase the number of steps, \(\lambda ^{\text {pred}}_{\text {FE}} \rightarrow 1\) and \(\lambda ^{\text {pred}}_{\text {TS}} \rightarrow 1\). We are not able to numerically identify \({\tilde{K}}\) resulting from the second-derivative condition, because that condition cannot be evolved forward: it does not approximate the solution to the ODE at all.
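The search for \(\lambda ^{\text {pred}}_{\text {FE}}\) described above can be sketched as follows. This is a minimal illustration, not the code used for the reported results. The function names, the Lax-Friedrichs flux form, the copied ghost cells at the boundaries, the convention \(v=0\) in dry cells, the round-off tolerance, and the wet-state height of 10 are all assumptions of this sketch. Only the forward Euler step is shown; the Taylor series step would additionally need the second-derivative approximation.

```python
import math

G = 1.0  # gravitational constant, g = 1 as in the text


def velocity(h, q):
    # v = (hv)/h; dry cells are assigned v = 0 (assumed convention).
    return q / h if h > 0.0 else 0.0


def flux(h, q):
    # Shallow water flux f(U) = (hv, hv^2 + g h^2 / 2).
    v = velocity(h, q)
    return h * v, h * v * v + 0.5 * G * h * h


def forward_euler_step(H, Q, lam, dx):
    # Maximal wave speed |v| + sqrt(g h) over the domain.
    alpha = max(abs(velocity(h, q)) + math.sqrt(G * max(h, 0.0))
                for h, q in zip(H, Q))
    dt = lam * dx / alpha
    # Ghost cells copy the end values (assumed boundary treatment).
    Hg = [H[0]] + H + [H[-1]]
    Qg = [Q[0]] + Q + [Q[-1]]
    F = [flux(h, q) for h, q in zip(Hg, Qg)]
    n = len(H)
    # Interface flux: f^+(u_left) + f^-(u_right), with f^+/- = (f +/- alpha u)/2.
    fh = [0.5 * (F[i][0] + alpha * Hg[i]) + 0.5 * (F[i + 1][0] - alpha * Hg[i + 1])
          for i in range(n + 1)]
    fq = [0.5 * (F[i][1] + alpha * Qg[i]) + 0.5 * (F[i + 1][1] - alpha * Qg[i + 1])
          for i in range(n + 1)]
    H_new = [H[j] - dt / dx * (fh[j + 1] - fh[j]) for j in range(n)]
    Q_new = [Q[j] - dt / dx * (fq[j + 1] - fq[j]) for j in range(n)]
    return H_new, Q_new


def stays_nonnegative(lam, n_steps=60, m=201, tol=1e-12):
    # Wet/dry Riemann data with zero velocity; the height 10 is illustrative.
    dx = 1.0 / (m - 1)
    H = [10.0 if j * dx <= 0.5 else 0.0 for j in range(m)]
    Q = [0.0] * m
    for _ in range(n_steps):
        H, Q = forward_euler_step(H, Q, lam, dx)
        if min(H) < -tol:  # tol absorbs round-off only
            return False
    return True


def largest_positive_lambda(candidates, **kwargs):
    # Largest candidate below the first value at which positivity is lost.
    best = None
    for lam in sorted(candidates):
        if not stays_nonnegative(lam, **kwargs):
            break
        best = lam
    return best
```

Calling `largest_positive_lambda` on a fine grid of candidates, and repeating with larger `n_steps`, mimics the procedure in the text. A linear scan is used rather than bisection so that no monotonicity in \(\lambda\) is assumed beyond the first failure.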

In Table 7 we compare the positivity-preserving time step of a variety of numerical time integrators. We consider the fifth-order SSP-TS methods M2(4,5,1), M3(5,5,1), and M3(6,5,1), and compare their performance to the SSP-SD MDRK(3,5,2) method in [5], and the non-SSP Dormand-Prince method. We also consider the sixth-order SSP-TS methods M2(5,6,1), M3(7,6,1), and M3(9,6,1), as well as the SSP-SD MDRK(4,6,1) from [5] and the non-SSP RK(8,6) method. Positivity of the water height is measured at each stage for a total of \(N=60\) time steps. We report the largest allowable value of \(\lambda = \alpha \frac{\Delta t}{\Delta x}\) (\(\alpha\) is the maximal wavespeed for the domain) for which the solution remains positive. For each method, the predicted values \(\lambda ^{\text {pred}}\) are obtained by multiplying the SSP coefficient \(\mathcal{{C}}_{\text {TS}}\) of that method by \(\lambda ^{\text {pred}}_{\text {FE}} = \lambda ^{\text {pred}}_{\text {TS}} = 1\). For the SSP-SD MDRK methods we do not make a prediction as we are not able to identify \({\tilde{K}}\) resulting from the second-derivative condition.

In Table 7 we show that all of our SSP-TS methods preserve the positivity of the solution for values larger than those predicted by the theory, \(\lambda ^{\text {obs}} > \lambda ^{\text {pred}}\), and that even for the SSP-SD MDRK methods there is a large region of values \(\lambda ^{\text {obs}}\) for which the solution remains positive. However, the non-SSP methods permit no positive time step that retains positivity of the solution, highlighting the importance of SSP methods.

5 Conclusions

In [5] we introduced a formulation and base conditions to extend the SSP framework to multistage multi-derivative time-stepping methods, and the resulting SSP-SD methods. While the choice of base conditions we used in [5] gives us more flexibility in finding SSP time-stepping schemes, it limits the flexibility in the choice of the spatial discretization. In the current paper we introduce an alternative SSP formulation based on the conditions (12) and (14) and investigate the resulting explicit two-derivative multistage SSP-TS time integrators. These base conditions are relevant because some commonly used spatial discretizations may not satisfy the second-derivative condition (13) which we required in [5], but do satisfy the Taylor series condition (14). This approach decreases the flexibility in our choice of time discretization because some time discretizations that can be decomposed into convex combinations of (12) and (13) cannot be decomposed into convex combinations of (12) and (14). However, it increases the flexibility in our choice of spatial discretizations, as we may now consider spatial methods that satisfy (12) and (14) but not (13). In the numerical tests we showed that this increased flexibility allowed for more efficient simulations in several cases.

In this paper, we proved that explicit SSP-TS methods have a maximum obtainable order of \(p=6\). Next we formulated the proper optimization procedure to generate SSP-TS methods. Within this new class we were able to organize our schemes into three subcategories that reflect the different simplifications used in the optimization. We obtained methods up to and including order \(p=6\), thus breaking the SSP order barrier for explicit SSP Runge-Kutta methods. Our numerical tests show that the SSP-TS explicit two-derivative methods perform as expected, preserving the strong stability properties satisfied by the base conditions (12) and (14) under the predicted time-step conditions. Our simulations demonstrate the sharpness of the SSP-TS condition in some cases, and the need for SSP-TS time-stepping methods. Furthermore, the numerical results indicate that the added freedom in the choice of spatial discretization results in larger allowable time steps. The coefficients of the SSP-TS methods described in this work can be downloaded from [14].

Notes

Note that here we use \({\dot{F}}\) to indicate that these methods are designed for the exact time derivative of F. However, in practice we use the approximation \({\tilde{F}}\) as explained above.

In this work we use \(\odot\) to denote component-wise multiplication.

These efficiency measures do not account for the fact that the methods in [32] are of type SSP-TS M3 and so require fewer function evaluations. Correcting for this, our methods are still 10%–40% more efficient.

References

Bresten, C., Gottlieb, S., Grant, Z., Higgs, D., Ketcheson, D.I., Németh, A.: Strong stability preserving multistep Runge-Kutta methods. Math. Comput. 86, 747–769 (2017)

Bunya, S., Kubatko, E.J., Westerink, J.J., Dawson, C.: A wetting and drying treatment for the Runge-Kutta discontinuous Galerkin solution to the shallow water equations. Comput. Methods Appl. Mech. Eng. 198, 1548–1562 (2009)

Chan, R.P.K., Tsai, A.Y.J.: On explicit two-derivative Runge-Kutta methods. Numer. Algorithms 53, 171–194 (2010)

Cheng, J.B., Toro, E.F., Jiang, S., Tang, W.: A sub-cell WENO reconstruction method for spatial derivatives in the ADER scheme. J. Comput. Phys. 251, 53–80 (2013)

Christlieb, A., Gottlieb, S., Grant, Z., Seal, D.C.: Explicit strong stability preserving multistage two-derivative time-stepping schemes. J. Sci. Comput. 68, 914–942 (2016)

Cockburn, B., Shu, C.-W.: TVB Runge-Kutta local projection discontinuous Galerkin finite element method for conservation laws II: general framework. Math. Comput. 52, 411–435 (1989)

Daru, V., Tenaud, C.: High order one-step monotonicity-preserving schemes for unsteady compressible flow calculations. J. Comput. Phys. 193, 563–594 (2004)

Dormand, J.R., Prince, P.J.: A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 6, 19–26 (1980)

Du, Z., Li, J.: A Hermite WENO reconstruction for fourth order temporal accurate schemes based on the GRP solver for hyperbolic conservation laws. J. Comput. Phys. 355, 385–396 (2018)

Dumbser, M., Zanotti, O., Hidalgo, A., Balsara, D.S.: ADER-WENO finite volume schemes with space-time adaptive mesh refinement. J. Comput. Phys. 248, 257–286 (2013)

Ferracina, L., Spijker, M.N.: Stepsize restrictions for the total-variation-diminishing property in general Runge-Kutta methods. SIAM J. Numer. Anal. 42, 1073–1093 (2004)

Ferracina, L., Spijker, M.N.: An extension and analysis of the Shu-Osher representation of Runge-Kutta methods. Math. Comput. 249, 201–219 (2005)

Gottlieb, S., Ketcheson, D.I., Shu, C.-W.: Strong Stability Preserving Runge-Kutta and Multistep Time Discretizations. World Scientific Press, London (2011)

Gottlieb, S., Grant, Z.J., Seal, D.C.: Explicit SSP multistage two-derivative methods with Taylor series base conditions. https://github.com/SSPmethods/SSPTSmethods. Accessed 1 Mar 2018

Harten, A.: High resolution schemes for hyperbolic conservation laws. J. Comput. Phys. 49, 357–393 (1983)

Higueras, I.: On strong stability preserving time discretization methods. J. Sci. Comput. 21, 193–223 (2004)

Higueras, I.: Representations of Runge-Kutta methods and strong stability preserving methods. SIAM J. Numer. Anal. 43, 924–948 (2005)

Higueras, I.: Characterizing strong stability preserving additive Runge-Kutta methods. J. Sci. Comput. 39(1), 115–128 (2009)

Jeltsch, R.: Reducibility and contractivity of Runge-Kutta methods revisited. BIT Numer. Math. 46(3), 567–587 (2006)

Jiang, G.-S., Shu, C.-W.: Efficient implementation of weighted ENO schemes. J. Comput. Phys. 126, 202–228 (1996)

Kastlunger, K., Wanner, G.: On Turan type implicit Runge-Kutta methods. Computing (Arch. Elektron. Rechnen) 9, 317–325 (1972)

Kastlunger, K.H., Wanner, G.: Runge Kutta processes with multiple nodes. Computing (Arch. Elektron. Rechnen) 9, 9–24 (1972)

Ketcheson, D.I.: Highly efficient strong stability preserving Runge-Kutta methods with low-storage implementations. SIAM J. Sci. Comput. 30, 2113–2136 (2008)

Ketcheson, D.I., Gottlieb, S., Macdonald, C.B.: Strong stability preserving two-step Runge-Kutta methods. SIAM J. Numer. Anal. 2618–2639 (2012)

Ketcheson, D.I., Macdonald, C.B., Gottlieb, S.: Optimal implicit strong stability preserving Runge-Kutta methods. Appl. Numer. Math. 52, 373 (2009)

Ketcheson, D.I., Parsani, M., Ahmadia, A.J.: RK-Opt: software for the design of Runge-Kutta methods, version 0.2. https://github.com/ketch/RK-opt. Accessed 15 Feb 2018

Kraaijevanger, J.F.B.M.: Contractivity of Runge-Kutta methods. BIT 31, 482–528 (1991)

Kurganov, A., Tadmor, E.: New high-resolution schemes for nonlinear conservation laws and convection-diffusion equations. J. Comput. Phys. 160, 241–282 (2000)

Li, J., Du, Z.: A two-stage fourth order time-accurate discretization for Lax-Wendroff type flow solvers I. hyperbolic conservation laws. SIAM J. Sci. Comput. 38, 3046–3069 (2016)

Liu, X.-D., Osher, S., Chan, T.: Weighted essentially non-oscillatory schemes. J. Comput. Phys. 115, 200–212 (1994)

Mitsui, T.: Runge-Kutta type integration formulas including the evaluation of the second derivative. I. Publ. Res. Inst. Math. Sci. 18, 325–364 (1982)

Nguyen-Ba, T., Nguyen-Thu, H., Giordano, T., Vaillancourt, R.: One-step strong-stability-preserving Hermite–Birkhoff–Taylor methods. Sci. J. Riga Tech. Univ. 45, 95–104 (2010)

Obreschkoff, N.: Neue Quadraturformeln. Abh. Preuss. Akad. Wiss. Math.-Nat. Kl. 1940(4), 20 (1940)

Ono, H., Yoshida, T.: Two-stage explicit Runge-Kutta type methods using derivatives. Jpn. J. Ind. Appl. Math. 21, 361–374 (2004)

Osher, S., Chakravarthy, S.: High resolution schemes and the entropy condition. SIAM J. Numer. Anal. 21, 955–984 (1984)

Pan, L., Xu, K., Li, Q., Li, J.: An efficient and accurate two-stage fourth-order gas-kinetic scheme for the Euler and Navier-Stokes equations. J. Comput. Phys. 326, 197–221 (2016)

Qiu, J., Dumbser, M., Shu, C.-W.: The discontinuous Galerkin method with Lax-Wendroff type time discretizations. Comput. Methods Appl. Mech. Eng. 194, 4528–4543 (2005)

Ruuth, S.J., Spiteri, R.J.: Two barriers on strong-stability-preserving time discretization methods. J. Sci. Comput. 17, 211–220 (2002)

Seal, D.C., Guclu, Y., Christlieb, A.J.: High-order multiderivative time integrators for hyperbolic conservation laws. J. Sci. Comput. 60, 101–140 (2014)

Shintani, H.: On one-step methods utilizing the second derivative. Hiroshima Math. J. 1, 349–372 (1971)

Shintani, H.: On explicit one-step methods utilizing the second derivative. Hiroshima Math. J. 2, 353–368 (1972)

Shu, C.-W.: Total-variation diminishing time discretizations. SIAM J. Sci. Stat. Comput. 9, 1073–1084 (1988)

Shu, C.-W., Osher, S.: Efficient implementation of essentially non-oscillatory shock-capturing schemes. J. Comput. Phys. 77, 439–471 (1988)

Spiteri, R.J., Ruuth, S.J.: A new class of optimal high-order strong-stability-preserving time discretization methods. SIAM J. Numer. Anal. 40, 469–491 (2002)

Stancu, D.D., Stroud, A.H.: Quadrature formulas with simple Gaussian nodes and multiple fixed nodes. Math. Comput. 17, 384–394 (1963)

Sweby, P.K.: High resolution schemes using flux limiters for hyperbolic conservation laws. SIAM J. Numer. Anal. 21, 995–1011 (1984)

Tadmor, E.: Approximate solutions of nonlinear conservation laws. In: Advanced Numerical Approximation of Nonlinear Hyperbolic Equations. Lecture Notes from CIME Course Cetraro, Italy, 1997, Number 1697 in Lecture Notes in Mathematics. Springer, Berlin (1998)

Toro, E., Titarev, V.A.: Solution of the generalized Riemann problem for advection-reaction equations. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 458, 271–281 (2002)

Toro, E.F., Titarev, V.A.: Derivative Riemann solvers for systems of conservation laws and ADER methods. J. Comput. Phys. 212, 150–165 (2006)

Tsai, A.Y.J., Chan, R.P.K., Wang, S.: Two-derivative Runge-Kutta methods for PDEs using a novel discretization approach. Numer. Algorithms 65, 687–703 (2014)