Abstract

This study considers multiple values-inflated bivariate integer-valued autoregressive (MV-inflated BINAR) models. It develops the inferential procedures for parameter estimation on this model, which apply to constructing a change point test and outlier detection rule. We first introduce the MV-inflated BINAR model with one parameter exponential family and Poisson-Lindley innovations. Then, we propose a quasi-maximum likelihood estimator (QMLE) and divergence-based estimator featuring minimum density power divergence estimator (MDPDE) for robust estimation. To evaluate the performance of these estimators, we conduct Monte Carlo simulations and demonstrate the adequacy of MDPDE in zero–one inflated models. Real data analysis is also carried out using the number of monthly earthquake cases in the United States.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this study, we introduce a multiple values-inflated bivariate integer-valued autoregressive (MV-inflated BINAR) model and investigate the asymptotic behavior of the quasi-maximum likelihood estimator (QMLE) and the divergence-based estimator for robust estimation that includes minimum density power divergence estimator (MDPDE). Time series of counts appear in diverse research fields related to social, physical, medical, and engineering sciences. Some specific examples are a weekly number of airline passengers, yearly deaths from lung diseases, and the volume of stocks transacted over a finite minute period. To model these kinds of time series, McKenzie (1985) and Al-Osh and Alzaid (1987) coined the integer-valued autoregressive (INAR) models. As with the INAR models, the integer-valued generalized autoregressive conditional heteroscedastic (INGARCH) model was also proposed by Ferland et al. (2006) and Fokianos et al. (2009). For the past decades, these two models have gained great popularity among researchers in the integer-valued time series context. We refer to Weiß (2018) for a general overview.

Overdispersion is a frequently-observed phenomenon in time series of counts, and one of the reasons is zero inflation due to a high frequency of no incidences as seen in the manufacturing process, epidemiology, and insurance. When this phenomenon is not appropriately treated, it can cause a significant bias in inferences. Thus, to circumvent this, zero-inflated models have been taken into account on research problems, refer to Lambert (1992), Chen et al. (2019), and Jo and Lee (2023). Subsequently, as a task of extension of the zero-inflated models, multiple values-inflated INGARCH models have been developed to model time series of counts with excessive zeros and other values, refer to Lee and Kim (2023), who considered INGARCH models whose conditional probability mass function is a multiple values (MV)-inflated one parameter exponential family. In a similar spirit, here we extend the zero-inflated INAR models to more general multiple values-inflated BINAR models. Compared to the MV-inflated INGARCH model of Lee and Kim (2023), its INAR counterpart has the merits to have a simpler model structure and requires much less technically demanding conditions in deriving the asymptotic properties of parameter estimators, which renders the MV-inflated BINAR models to be more tractable and preferential in practical usages.

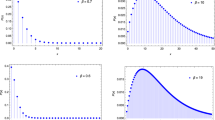

Historically, Poisson innovations have been utilized in modeling with an INAR scheme by many researchers because of its simplicity in applications, refer to Pedeli and Karlis (2013), Weiß (2015), and Lu and Wang (2022). However, the Poisson distribution is not always suitable for modeling as its mean and variance concur with each other and this property is not occasionally acceptable in real situations. Several alternatives to the Poisson innovations have been proposed in the literature, for example, geometric innovations (Jazi et al. 2012), negative binomial innovations (Pedeli and Karlis 2011), Poisson-weighted exponential innovations (Altun 2020), and Poisson-Lindley innovations (Mohammadpour et al. 2018; Livio et al. 2018). In particular, the Poisson-Lindley distribution belongs to the compound Poisson family and has good properties like unimodality and infinite divisibility. Also, unlike the Poisson distribution, the Poisson-Lindley distribution can capture well the overdispersion of datasets.

For parameter estimation for the proposed MV-inflated BINAR models, we consider the quasi-maximum likelihood estimator (QMLE) and the Brègman divergence-based estimator featuring minimum power density divergence estimator (MDPDE), and substantiate their consistency and asymptotic normality under regularity conditions. These estimators are applicable to identifying change points and outliers in time series of counts, as seen in Lee and Jo (2023b), who used MDPDE in handling those. Developing change point tests and outlier detection methods are critical issues in time series analysis as ignoring those may lead to false conclusions. A brief summary of those methods is provided for easy access to our empirical analysis. The change point tests based on the scores and residuals were established for AR and GARCH models (Gombay (2008), Berkes et al. (2004), Lee (2020)), as well as for INAR and INGARCH models (Lee and Lee 2019; Lee and Jo 2023a). We refer to Csörgő & Horváth, (1997) and Lee et al. (2003) for an overview. For assessing the performance of QMLE and MDPDE, we conduct Monte Carlo simulations and confirm the functionality of MDPDE in the presence of outliers. Real data analysis is performed for illustration using the monthly earthquake datasets in the United States, which validates our methods.

The remainder of this paper is organized as follows. Section 2 introduces the bivariate multiple values-inflated INAR model. Section 3 establishes the asymptotic results of QMLE and MDPDE for the proposed model. Sections 3.2 and 5 conduct a simulation study and real data analysis to demonstrate the validity of our methods. Finally, Sect. 6 provides the concluding remarks.

2 MV-inflated BINAR(1) model

Let \(\{Y_t\}\) be a bivariate time series of counts following the bivariate INAR(1) (BINAR(1)) model:

wherein \(\alpha _{ij}\circ Y_{t-1,i}= \sum _{k=1}^{Y_{t-1,i}}w_{t-1,ijk}\) for \(i,j = 1, 2\), \(\{w_{tijk}\}\) are mutually independent iid Bernoulli random variables with success probability \(\alpha _{ij}\in [0, 1)\), and \(Z_t=(Z_{t1}, Z_{t2})^T\) are iid nonnegative integer-valued random vectors independent of all \(w_{tij}\) with \(E\Vert Z_t\Vert ^2 < \infty \). Here, \(\Vert \cdot \Vert \) denotes the \(L_2\)-norm for vectors and matrices. We rewrite (2.1) as

with

Darolles et al. (2019) and Lee and Jo (2023a) verified that the BINAR(1) process in (2.1) has a unique strictly stationary and ergodic solution under certain conditions, such as \(E||Z_1||<\infty \) and

Moreover, when (2.3) holds, Lee and Jo (2023a) showed that \(E\Vert Z_1 \Vert ^r < \infty \) for some \(r\ge 2\) if and only if \(\Vert Y_1 \Vert ^r < \infty \). Also, \(Y_t\) is shown to be adapted to all the random variables comprising \(Y_s\), \(s\le t\), which particularly indicates that \(Y_s\), \(s\le t\), are independent of all \(Z_s\), \(s>t\).

As an estimate of A, Lee and Jo (2023a) employed conditional least squares estimator (CLSE), modified quasi-likelihood estimator (MQLE), and exponential family quasi-likelihood estimator (EQLE). However, instead of these estimators, we consider the quasi-maximum likelihood estimator (QMLE) as QMLE is in general more efficient than those, and the minimum density power divergence estimator (MDPDE) in Lee and Jo (2023b) as MDPDE is robust against possible outliers and model misspecifications. Results on QMLE and MDPDE will be appended to specify their consistency and asymptotic normality.

In what follows, \(\{Y_t\}\) is a strictly stationary and ergodic process following Model (2.1) with \(E||Z_1||^4<\infty \). Also, we assume that the support of \(Z_t\) includes (i, j) with \(i,j=0,1\). We set the conditional mean equation as

where A is the matrix in (2.3) and \(\lambda =(\lambda _1,\lambda _2)^T=EZ_t\). This equation enables practitioners to construct the CLSE of A and \(\lambda \). However, CLSE is not so inefficient as QMLE, and in the presence of multiple inflation parameters, it is not feasible to construct, see Remark 1 below.

Modeling MV-inflated BINAR(1) time series merely requires a parametric modeling procedure for the innovational distributions in (2.1). For this task, one can consider \(Z_{ti}\) whose underlying distribution belongs to the MV-inflated one-parameter exponential family \(\{f_{\varrho _i, \psi _i}: 0< \phi _i:=\sum _{j=1}^{M_i}\rho _{ij}<1, \psi _i>0\}\), \(\varrho _{i}=(\rho _{i1},\ldots , \rho _{iM_i})^T\), with pmf:

where \(M_i\) is a natural number and \(f_{\psi _i}\) is a discrete pmf with parameter \(\psi _i>0\). The number \(M_i\) denotes the number of the inflated values; for example, it is one if \(\{Y_t\}\) is zero-inflated. For \(f_{\psi _i}\), one can employ one-parameter exponential family distribution with pmf:

wherein \(\eta _i\) is the natural parameter, \({{{\mathcal {A}}}}_i\) and \(h_i\) are known functions, both \({{{\mathcal {A}}}}_i\) and \({{{\mathcal {B}}}}_i:={{{\mathcal {A}}}}_i^{'}\) are strictly increasing, where \(\psi _i={{{\mathcal {B}}}}_i (\eta _i)\) is the mean of the distribution. Also, one can alternatively use the Poisson-Lindley distribution with pmf:

Poisson-Lindley distribution merits to accommodate various types of distributions due to its skewness and kurtosis features, well describing all under-, equi-, and over-dispersion phenomena of time series, and is well known to have more desirable properties over Poisson and negative binomial distributions. For the basic properties of Poisson-Lindley INAR(1) models, we refer to Mohammadi et al. (2022).

In what follows, we assume that \(\{f_{\psi _i}\}\) is either the one in (2.6) or (2.7). Furthermore, we assume that \(f_{\varrho _i,\psi _i}\) in (2.5) satisfies the identifiability condition: \(f_{\varrho _i,\psi _i}= f_{\varrho _i^{'},\psi _i^{'}}\) implicates \(\psi _i=\psi _i^{'}\) and \(\varrho _i=\varrho _i^{'}\). Provided \(\phi _i=\sum _{j=1}^{M_i} \rho _{ij} <1\), this condition is fulfilled for frequently used distributions, for example, Poisson, negative binomial, and Poisson-Lindley distributions. Notice that the probability generating function (pgf) of the last distribution with parameter \(\psi \) is \(\frac{\psi ^2 (2+\psi -s)}{(1+\psi )(1+\psi -s)^2}\). More generally, this identifiability condition holds if the pgf \(\pi (s|\psi _i)\) of \(f_{\psi _i}(s)\), \(s\in (0,1]\), is \((M_i+1)\) times differentiable and if \(g(s|\psi _i):=\frac{\pi ^{(M_i+1)}(s|\psi _i)}{\pi ^{(M_i)}(s|\psi _i)}\) satisfies that \(g(s|\psi _i)=g(s|\psi _i^{'})\) for all \(s\in (0,1]\) implies \(\psi _i=\psi _i^{'}\), because this will ultimately lead to \(\phi = \phi _{i}^{'}\) and so \(\varrho _i=\varrho _i^{'}\). See Proposition 2.1 of Lee and Kim (2023).

Herein, we do not intend to model the joint distribution of \(Z_t\), only focusing on its components’ marginal distributions as our inferential procedure will rely on a quasi-likelihood method comprising the sum of the two marginal likelihoods, as described below. This approach has been taken by Lee and Jo (2023a) and Lee et al. (2023) and has proven to remarkably lessen the efforts invested for modeling and inferences.

3 Inference for MV-inflated BINAR(1) model

3.1 QMLE

We set \(\theta = (\alpha ^T, \varrho ^T,\psi ^T)^T\) with \(\alpha =(\alpha _{11}, \alpha _{12}, \alpha _{21}, \alpha _{22})^T\), \(\varrho =(\varrho _1^T,\varrho _2^T)^T\), \(\varrho _i= (\rho _{i1}, \ldots , \rho _{iM_i})^T\), and \(\psi =(\psi _1, \psi _2)^T\), and \(f_{\theta , i}\) denotes the conditional pmf of \(Y_{ti}\) given \(Y_{t-1}\) when \(\theta \) is a model parameter. We denote by \(\theta _0=(\alpha _0, \rho _0, \psi _0)^T= (\alpha _{110}, \alpha _{120}, \alpha _{210}, \alpha _{220}, \varrho _{0}^T, \psi _{0}^T)^T\) the true parameter, which is assumed to be an interior of a compact subset \(\Theta \) of \({\mathbb {R}}^{M_1+M_2+6}\), wherein

for some constants \(0<\kappa _i<1\), \(i=1,\ldots , 4\), and \(\kappa _5>1\).

The conditional pmf of \(Y_{ti}\), \(i=1,2\), is then expressed as

where \(J(m,n,p_1,p_2, k)= P( B_1 (m, p_1)+ B_2 (n, p_2)= k)\), \(k\ge 0\), and \(B_1 (m, p_1)\) and \(B_2 (n, p_2)\) two independent binomial random variables with success probabilities \(p_1\) and \(p_2\) (\(B_i (0, p_i)=0\)).

Note that the model identifiability holds for Model (2.1), due to the following. We rewrite (2.1) as \(Y_t=A\circ Y_{t-1}+Z_t (\varrho ,\psi )\), which becomes \(Y_t=A_0 \circ Y_{t-1}+Z_t (\varrho _0, \psi _0)\) when \(\theta \) equals \(\theta _0\). Note that if both \(\theta \) and \(\theta _0\) generate the same \(\{Y_t\}\), we get \(f_{\theta ,i}(y|Y_{t-1})=f_{\theta _0,i}(y|Y_{t-1})\) for all \(y\ge 0\), \(i=1,2\), so that \(X_{t}(\theta )=X_{t}(\theta _0)\) holds true, namely, \(AY_{t-1}+\lambda =A_0 Y_{t-1}+\lambda _0\), owing to (2.4). This implicates \(A=A_0\) and \(\lambda =\lambda _0\) as \(Y_t\) can take values of (i, j) with \(i,j=0,1\) owing to our assumption. This in turn implies \(Z_t(\varrho ,\psi ){\mathop {=}\limits ^{d}} Z_t (\varrho _0, \psi _0)\), which leads to \(\varrho =\varrho _{0}\) and \(\psi =\psi _0\), due to the model identifiability of \(f_{ \varrho _i,\psi _i,}\), so that we finally have \(\theta =\theta _0\).

QMLE is defined by

where

In Section 7, we verify the consistency and asymptotic normality of QMLE, as this verification was not explicitly addressed in the precedent studies even for the univariate cases. The following theorem states the consistency and asymptotic normality of QMLE under the regular conditions.

Theorem 1

Suppose that (2.3) holds, \(\theta _0\) is an interior of a compact parameter space \(\Theta \), and \(Z_t\) satisfies \(E||Z_1||^4<\infty \) and has a pmf of the form in (2.5) with \(f_{\psi _i}\) in (2.6) with \(E|\log h (Y_{ti}) |<\infty , \ i=1,2,\) or in (2.7). Then, \( {{\hat{\theta }}}_{n}\longrightarrow \theta _0 \) a.s. as \(n\rightarrow \infty \). Moreover, if

and \({{{\mathcal {J}}}}_0\) is non-singular, then, as \(n\rightarrow \infty \),

3.2 Divergence-based robust estimation

As QMLE is sensitive to outliers, we consider a robust estimator based on density power divergence (DPD), as DPD scheme is well known to supply an excellent estimating method in practice. Herein, we consider the DPD scheme within the Brègman divergence paradigm, aiming at providing a more general theory. Brègman divergence is defined for two densities (or probability mass functions) g and h as follows:

where \(\varphi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) is a strictly convex function, named the divergence generator, as this divergence family accommodates Kullback–Leibler divergence, Brègman-exponential divergence (BED), proposed by Mukherjee et al. (2019), and DPD, which respectively correspond to \(\varphi (y)=y\log y-y\), \(2(e^{a y}-a y-1)/a^2\), \(a\in {\mathbb {R}}\), and \((y^{1+\gamma }-y)/\gamma \), \(\gamma >0\). The consistency and asymptotic normality of the estimators based on those divergences are commonly obtainable within the general framework of Brègman divergences.

Let \({{{\mathcal {G}}}}=\{g_\theta : \theta \in \Theta ^*\}\) be a family of densities, where \(\Theta ^*\) is a compact parameter space in an Euclidean space, and assume that \({{{\mathcal {G}}}}\) satisfies the identifiability condition, namely, \(\theta =\theta ^{'}\) if and only if \(g_{\theta }=g_{\theta ^{'}}\) for any \(\theta \) and \(\theta ^{'}\in \Theta ^*\). Given an iid random sample \(Y_1,\ldots ,Y_n\) following density \(g_\theta \in {{{\mathcal {G}}}}\), the minimum Brègman divergence estimator \({{\hat{\theta }}}_n^B\) for true parameter \(\theta _0\) is attained as the minimizer of the objective function:

Notice that \(l_i (\theta )\) has the property that

which ensures the strong convergence of \({{\hat{\theta }}}_n^B\) to \(\theta _0\) if \(E(\sup _{\theta \in \Theta ^*}|l_i (\theta )|)<\infty \).

The aforementioned scheme can be directly applied to the BINAR(1) models. For Brègman divergence with generator \(\varphi _\eta \), where \(\eta \) belongs to a space \({{{\mathcal {N}}}}\subset {\mathbb {R}}^m\), \(m\ge 1\), similarly to (3.10), we define the minimum Brègman divergence estimator as follows:

where \(\ell _{\eta , t}=\sum _{i=1}^2\ell _{\eta ,i, t}(\theta )\) with

Particularly, the minimum power density divergence estimator (MDPDE), with divergence generator \(\varphi _\gamma (y)=(y^{1+\gamma }-y)/\gamma \), \(\gamma \ge 0\), is given as

where \(\ell _{\gamma , t}=\sum _{i=1}^2\ell _{\gamma ,i, t}(\theta )\) with

MDPDE is well known to be a competent robust estimator, controlling the degree of robustness with the tuning parameter \(\gamma \). When \(\gamma =0\), it becomes QMLE, and when \(\gamma =1\), it is an \(L_2\) estimator.

By virtue of (3.11) and the model identifiability of BINAR(1) models, provided

we can readily see that \({{\hat{\theta }}}_{\eta ,n}\) in (3.12) is strongly consistent to \(\theta _0\), as (3.13) implicates that almost surely,

due to the uniform strong law of large numbers for stationary and ergodic processes.

Moreover, if the following additionally holds,

which lead to the following theorem, the proof of which is similar to that of Theorem 1 and is omitted for brevity. Particularly, it can be shown that MDPDE satisfies the conditions in (3.13) and (3.14), refer to Lee and Jo (2023b).

Theorem 2

Suppose that (2.3) holds, \(\theta _0\) is an interior of a compact parameter space \(\Theta \), and \(Z_t\) has a pmf of the form either in (2.5) with \(f_{\psi _i}\) in (2.6) or (2.7) and satisfies \(E||Z_1||^4<\infty \). In addition, if (3.13) and (3.14) are satisfied, as \(n\rightarrow \infty \), \({{\hat{\theta }}}_{n,\eta }\longrightarrow \theta _0\) a.s., and

where

and \({{{\mathcal {J}}}}_\eta \) is assumed to be non-singular.

Remark 1

Using a convex combination of the \(\varphi \)-functions of BED and DPD with tuning parameter a and \(\gamma \), respectively, Singh et al. (2021) proposed the exponential power divergence (EPD) family with \(\varphi \) in (3.9) of the form:

with \(\eta =(a,b,\gamma )^T\), \(a\in {\mathbb {R}}\), \(b\in [0,1]\), and \(\gamma \ge 0\). Kim and Lee (2024) recently applied this to INGARCH(1,1) models. From (3.15), the minimum exponential power divergence estimator (MEPDE) is obtained as the minimizer of \(\ell _{\eta ,t}(\theta )=\sum _{i=1}^2 \ell _{\eta ,i,t}(\theta )\) with

Any Brègman divergence estimators satisfying (3.13) and (3.14), including MDPDE and MEPDE, can be harnessed potentially for robust estimation. In our study, though, we employ MDPDE as its performance excels in general and compares well with others as reported in the simulation study of Kim and Lee (2024), while it has much simpler algorithms in computing.

Remark 2

The optimal choice of \(\gamma \) in MDPDE can be a crucial issue. A larger \(\gamma \) is recommended when the portion of outliers is large or the robustness takes precedence over efficiency. Hong and Kim (2001), Warwick (2005), and Warwick and Jones (2005), Fujisawa and Eguchi (2006), Toma and Broniatowski (2011), and Durio and Isaia (2011) developed a procedure for choosing an optimal \(\gamma \). One can instead use Pearson’s correlation as a criterion to choose an optimal \(\gamma \) by comparing the correlations of \(Y_{ti}\) and \({\hat{Y}}_{ti}\) obtained from MDPDE. Lee and Na (2005) conservatively proposed to use a small \(\gamma \), say, 0.1 to 0.3, in practice, considering the presence of a possible structural change in the dataset.

Though Theorems 1 and 2 hold for general MV-inflated models, in our empirical studies of Sections 4 and 5, we focus on the Poisson-Lindley zero–one inflated BINAR(1) models with the innovational distribution in (3.16) because this kind of time series of counts with zero–one inflation frequently occurs in real situations. If \(f_{\psi _i}\) is Poisson-Lindley in (2.7), we can write

which composes the pmf in (3.8). Note that the corresponding mean value of (3.16) is as follows:

As \(\mu _{\varrho _i, \psi _i}=\mu _{\varrho _i^{'}, \psi _i^{'}}\) does not necessarily guarantee the coincidence of \((\varrho _i, \psi _i)\) and \((\varrho _i^{'}, \psi _i^{'})\), Mohammadi et al. (2022) proposed to use a two-step CLSE using the conditional variance formula of \(Y_t\). However, such a method is not directly applicable to more general multiple inflation cases and requires more laborious efforts. This inconvenience also supports the use of QMLE.

Remark 3

The result of Theorems 1 and 2 can be applied to the change point test. The change point test is critical in the analysis of time series of counts, as the previous studies frequently reveal its existence in practical applications. For performing a test, we set up the hypotheses:

and then perform a test using the score vector-based test statistic:

where \({{\hat{\theta }}}_{\gamma ,n}\) is MDPDE and

which is consistent to \({{{\mathcal {K}}}}_\gamma \) in (3.15) under \({{{\mathcal {H}}}}_0\). Then, provided \({{{\mathcal {K}}}}_\gamma \) is nonsingular, under the conditions in Theorem 2, we can check that under \({{{\mathcal {H}}}}_0\), (3.13) and (3.14) hold true, and

where \({\textbf{B}}^\circ _{d}(s)\) denotes a d-dimensional Brownian bridge. For the proof, we refer to Lee and Jo (2023b). The critical value of the test can be obtained through a Monte Carlo simulation, using the result in (3.18). For example, for \(d=10\), \(c = 4.435\) is used as the critical value at the significance level 0.05. The location of the change point at the rejection of the null hypothesis is estimated as the point k that maximizes \(\hat{{{\mathcal {T}}}}_n (k)\) in (3.17).

Remark 4

In practice, detecting outliers is important in modeling and inference because outliers can hamper their correct behaviors. Since Fox (1972), there have been many studies on this subject in time series analysis, refer to Chang et al. (1988) and Tsay et al. (2000). Lee and Jo (2023b) described the procedure for correcting the innovational outliers (IO) and additional outliers (AO) in bivariate random coefficient INAR(1) (BRCINAR(1)) models. Below we describe the AO case that will be used in our empirical study of Section 5. We reexpress Model (2.1) as \(Y_t = AY_{t - 1} + \mu + \epsilon _t,\) where \(\mu =E Z_t\) and \(\{\epsilon _t\}\) forms a martingale difference sequence. To express the AO-contamination of \(\{Y_t\}\) with magnitude \(\omega \) at time \(\tau \), we write \(Y_t^A = Y_t + \omega \xi _t^{(\tau )}\), with \(\xi _t^{(\tau )} = I (t=\tau )\), which is an actual observation. Then, using \({{\hat{\epsilon }}}_t^A=Y_t^A-{\hat{A}}_n Y_{t-1}^A -{{\hat{\mu }}}_n\), where \({\hat{A}}_n\) and \({{\hat{\mu }}}_n\) are MDPDEs of A and \(\mu \) obtained from observations \(Y_1^A,\ldots , Y_n^A\), we obtain the estimates of \(\omega \) and \(\tau \) as follows:

Subsequently, to test the existence of AO at time \({{\hat{\tau }}}_n\), we use the chi-square statistic \({{\hat{\omega }}}_n^T {{\hat{\Sigma }}}_n^{-1}{{\hat{\omega }}}_n\), where

and \(I_2\) is a \(2 \times 2\) identity matrix. In case an outlier is detected, the corrected \(Y_t\) is obtained by subtracting \({\hat{w}}_n\) from \(Y_t^A\) at \(t={{\hat{\tau }}}_n\).

Remark 5

In the analysis of count time series models, forecasting is a noteworthy topic to consider. To perform the forecasting through our model, one can employ the conditional mean equation in (2.4). First, the target parameters \(\theta \) are estimated using MDPDE or QMLE from the training data \(Y_1, \dots , Y_n\). Then, the observed values and the estimated parameters are input into the conditional mean equation in (2.4) and (2.5), and the obtained values are rounded to complete the prediction. To perform multi-step ahead forecasting, the predicted values obtained through the one step prediction procedure are recursively utilized for the subsequent forecasting as follows: for \(i = 1, 2\),

where [x] denotes the greatest integer not exceeding x, and \({{\hat{\alpha }}}_{ij}\), \({{\hat{\rho }}}_{ij}\), and \({{\hat{\mu }}}_i\) are estimators like MDPDE or QMLE based on \(Y_1,\ldots ,Y_n\), and \({\hat{Y}}_{t-1, j}\) are obtained predicted values with initial values \({\hat{Y}}_{n j}=Y_{nj}\). Specifically, \({{\hat{\mu }}}_i\) is the corresponding estimate of \(\mu _i=\sum _{y=0}^\infty y f_{\psi _i}(y)\), which equals \(\psi _i\) in the cases of the one-parameter exponential families. This method is referred to in Jung and Tremayne (2006), and the actual analysis for this forecasting method is referenced in Lee et al. (2023).

4 Simulation study

In this section, we evaluate the performance of QMLE and MDPDE for the zero–one inflated BINAR(1) model (namely, \(M_1=M_2=2\)) with Poison-Lindley innovations. In this experiment, we use the samples of size \(n = 200\) and the parameter settings as follows:

The repetition number for calculating empirical sizes and powers in each simulation is 1000. We compare the performance of MDPDE (\(\gamma = 0.1, 0.2, 0.3, 0.5, 1\)) with that of QMLE (\(\gamma = 0\)). For this, we examine the estimators’ sample mean, variance, and mean squared error (MSE). In Tables 1, 2, 3, 4, 5 and 6, the bold figures represent minimal MSEs for each parameter setting. To generate \(Z_t\), we first generate \(U_t=(\Phi (V_{t1}), \Phi (V_{t2}))^T\), where \(V_t =(V_{t1}, V_{t2})^T\) are iid bivariate normal random variables with \(EV_{ti}=0\), \(Var(V_{ti})=1\), \(i=1,2\), and \(Corr(V_{t1}, V_{t2})= \rho \), then \(Z_t = (F_{\psi _1}^{-1}(U_{t1}),F_{\psi _2}^{-1}(U_{t2}))^T\), where \(F_\psi \) denotes the Poisson-Lindley distribution function with parameter \(\psi \). Here, we take account of \(\rho =-0.5, 0, 0.5\).

We first report the simulation result in the case of \(\rho =0\). Tables 1 and 2 show the results when the data is not contaminated by outliers, which shows that QMLE has the minimal MSE for all cases and the MSEs of MDPDE with small \(\gamma \) look similar to those of QMLE. As \(\gamma \) increases, the MSE of MDPDE also increases, which confirms that MDPDE with a large \(\gamma \) has a loss of efficiency as observed in Kim and Lee (2020).

Next, to evaluate the robustness of the estimators against outliers, we generate the contaminated data \(Y_{c,t}\) as follows (cf. Fried et al. 2015; Kim and Lee 2020; Lee and Jo 2023b):

where \(Y_t\) are generated from (2.1) with the Poisson-Lindley innovation \(Z_t\), \(P_t\) are iid Bernoulli random variables with success probability \(p = 0.05\), and \(Y_{o,t}\) are iid Poisson Lindley random variables with \(\psi = 0.5\). Tables 3 and 4 exhibit that MDPDE produces smaller MSEs than QMLE, which supports the superiority of MDPDE over QMLE when outliers contaminate the data.

Tables 5 and 6 exhibit the MSEs of MDPDE and QMLE when a significant outlier exists. Herein, the big outlier is generated from a Poisson-Lindley distribution with \(\psi = 0.1\) and its location is generated from a uniform distribution over \([\frac{n}{3}, \frac{2n}{3}]\) with \(n=200\). The result shows that the MDPDE has smaller MSEs than QMLE for all cases, confirming the validity of MDPDE as well.

Tables 7, 8, 9 and Tables 10, 11, 12 respectively portray the results on the cases of \(\rho = 0.5\) and \(-0.5\), showing a pattern similar to Tables 1, 2, 3, 4, 5 and 6. Thus far, we have conducted experiments to assess the performance of MDPDE in the presence of additive outliers and a big single outlier. Overall, our findings consolidate the excellent performance of MDPDE against outliers.

Finally, Tables 13, 14, 15, 16 display the empirical sizes and powers of the change point tests in Remark 3. The empirical sizes and powers are calculated as the ratios of the rejection numbers of the null hypothesis out of 1000 repetitions. In particular, to assess the empirical power, we assume that the change point occurs at [n/2]. Tables 13 and 14 present that there is no significant distortion in the size of the CUSUM test, regardless of the presence of outliers generated from (4.19), but still \(\gamma =0.1, 0.2\) produce more stable results by a slight margin in the presence of outliers. Tables 15 and 16 present that all the tests produce very high powers. Our findings in this specific parameter setting demonstrate the effectiveness of the change point tests.

5 Real data analysis

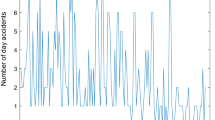

In this section, we illustrate a real data example using the number of monthly earthquake cases (magnitude more than 3) in the United States from January 2000 to December 2019. Time series data can be obtained from the earthquake catalog in the USGS earthquake hazards program (http://usgs.gov). This earthquake data has been intensively studied by researchers on the topics including earthquake forecasting, hazard assessment to seismicity analysis, and identifying temporal and spatial patterns in earthquake datasets, refer to Thomas (1994), Gerstenberger et al. (2005), and Goebel et al. (2017) for a general background. Among the earthquake datasets, we select the bivariate time series of the monthly number of earthquake cases in Washington (WA) and Nevada (NE), with 240 observations. The sample mean and variance are 2.99 and 87.48 for WA and 2.15 and 11.75 for NE. Also, the zero and one portions are 0.33 and 0.26 for WA and 0.27 and 0.27 for NE, indicating a high frequency of zero and one observations in both time series. Figures 1 and 2 plot the monthly time series of WA and NE, particularly showing a possibility of outliers in each series. The autocorrelation function (ACF) and partial autocorrelation function (PACF) of the time series, and the cross-correlation function (CCF) are displayed in Figs. 3 and 4, respectively.

To analyze this bivariate time series data, we fit a 0,1-inflated BINAR(1) model with Poisson-Lindley innovations and choose an optimal \(\gamma \) among \(\{0.1, 0.2\ldots , 1\}\) for MDPDE using the criterion of Hong and Kim (2001), who proposed to select \(\gamma \) that minimizes the trace of the estimated asymptotic variance of \({{\hat{\theta }}}_{\gamma , n}\)(cf. Lee et al. (2023)). The criterion chooses \(\gamma = 0.2\) and we obtain MDPDEs with this choice as:

The test \(\hat{{{\mathcal {T}}}}_n^\gamma \) with \(\gamma =0.2\) in (3.17) detects one change point at \(t=60\), indicated by the red vertical lines in Figs. 1 and 2. However, this result is unreliable as the outliers exist before and after the change point.

To identify and correct the outliers, we use the method described in Remark 4. As a result, three AO’s are identified at \(t=58, 100\) and 136, specified by the red points in Figs. 1 and 2. After the correction of these three outliers, MDPDEs with \(\gamma =0.2\) are obtained as

which significantly differ from what we have obtained previously. In particular, \(\rho \) and \(\psi \)’s estimators appear to experience a dramatic change compared to the others. This result implies that the outliers significantly influences on the estimation of the zero–one inflation and Poisson-Lindley parameters. Unlike before, \(\hat{{{\mathcal {T}}}}_n^\gamma \) with \(\gamma =0.2\) is revealed to detect a change point at \(t=91\) after the correction of outliers, as seen in Figs. 5 and 6, which looks more reasonable compared to the previously obtained change point of \(t=60\). This result reaffirms that outliers can severely impact both the parameter estimation and change point test and decrease their accuracies.

MDPDEs with \(\gamma =0.2\) obtained from the two subseries before and after the change point are as follows:

and

The MDPDEs before and after the change point exhibit a significant difference, particularly in \({{\hat{\alpha }}}_{11,n}\), \({{\hat{\alpha }}}_{12,n}\), \({{\hat{\rho }}}_{11,n}\), \({{\hat{\rho }}}_{22,n}\), and \({{\hat{\psi }}}_{2,n}\). Detecting the change point in the model is crucial due to the significant difference in MDPDEs before and after the change point, and this is also discussed in Lee and Jo (2023b). All our findings strongly confirm the functionality of our proposed methods in real applications.

6 Concluding remarks

In this study, we introduced an MV-inflated BINAR(1) model and substantiated the strong consistency and asymptotic normality of QMLE and MDPDE under regularity conditions. Moreover, the change point test and outlier detection method based on MDPDE were outlined for a practical application. To evaluate the performance of MDPDE in the presence of outliers, we conducted Monte Carlo simulations and real data analysis using the number of monthly earthquake cases in the United States. All the acquired results affirmed the validity of our proposed methods. While we focused on stationary time series models here, as mentioned by a referee, one can also consider an extension of our methods to time series of counts with more complicated characteristics, for example, time series with a (stochastic) trend or periodicity (seasonality). As this problem extends beyond the scope of our current study, it is deferred as a subject for future research projects.

Data availability

The data can be obtained from the earthquake catalog in the USGS earthquake hazards program (http://usgs.gov).

References

Al-Osh, M. A., & Alzaid, A. A. (1987). First-order integer-valued autoregressive INAR(1) process. Journal of Time Series Analysis, 8, 261–275.

Altun, E. (2020). A new generalization of geometric distribution with properties and applications. Communications in Statistics-Simulation and Computation, 49, 793–807.

Berkes, I., Horvath, L., & Kokoszaka, P. (2004). Testing for parametric constancy in GARCH(p, q) models. Statistics & Probability Letters, 70, 263–273.

Chang, I., Tiao, G., & Chen, C. (1988). Estimation of time series parameters in the presence of outliers. Technometrics, 30, 193–204.

Chen, C., Khamthong, K., & Lee, S. (2019). Markov switching integer-valued generalized auto-regressive conditional heteroscedastic models for dengue counts. Journal of the Royal Statistical Society: Series C: Applied Statistics, 68, 963–983.

Csörgő, M. and Horváth, L. (1997). Limit Theorems in Change-Point Analysis. John Wiley & Sons Inc. New York., 18:ISBN:978–0–471–95522–1.

Darolles, S., Fol, G., Lu, Y., & Sun, R. (2019). Bivariate integer-autoregressive process with an application to mutual fund flows. Journal of Multivariate Analysis, 173, 181–203.

Durio, A., & Isaia, E. (2011). The minimum density power divergence approach in building robust regression models. Informatica, 22, 43–56.

Ferland, R., Latour, A., & Oraichi, D. (2006). Integer-valued GARCH process. Journal of Time Series Analysis, 27, 923–942.

Fokianos, K., Rahbek, A., & Tjøstheim, D. (2009). Poisson autoregression. Journal of American Statistical Association, 104, 1430–1439.

Fox, A. J. (1972). Outliers in time series. Journal of the Royal Statistical Society, Series B: Statistical Methodology, 34, 350–363.

Fried, R., Agueusop, I., Bornkamp, B., Fokianos, K., Fruth, J., & Ickstadt, K. (2015). Retro spective bayesian outlier detection in INGARCH series. Statistics and Computing, 25, 365–374.

Fujisawa, H., & Eguchi, S. (2006). Robust estimation in the normal mixture model. Journal of Statistical Planning & Inferences, 136, 3989–4011.

Gerstenberger, M., Wiemer, S., Jones, L., & Reasenberg, P. (2005). Real-time forecasts of tomorrow’s earthquakes in California. Nature, 435, 328–331.

Goebel, T., Weingarten, M., Chen, X., Haffener, J., & Brodsky, E. (2017). The 2016 Mw5.1 Fairview, Oklahoma earthquakes: Evidence for long-range poroelastic triggering at \(>\) 40 km from fluid disposal wells. Earth and Planetary Science Letters, 472, 50–61.

Gombay, E. (2008). Change detection in autoregressive time series. Journal of Multivariate Analysis, 99, 451–464.

Hong, C., & Kim, Y. (2001). Automatic selection of the tuning parameter in the minimum density power divergemce estimation. Journal of the Korean Statistical Society, 30, 453–465.

Jazi, M., Jones, G., & Lai, C. (2012). Integer valued AR(1) with geometric innovations. Journal of the Iranian Statistical Society, 11, 173–190.

Jo, M., & Lee, S. (2023). Inference for integer valued AR model with zero inflated poisson innovations. Journal of the Korean Data & Information Science Society, 34, 1031–1039.

Jung, R., & Tremayne, A. (2006). Coherent forecasting in integer time series models. International Journal of Forecasting, 22, 223–238.

Kim, B., & Lee, S. (2020). Robust estimation for general integer-valued time series models. Annals of the Institute of Statistical Mathematics, 72, 1371–1396.

Kim, B., & Lee, S. (2024). Robust estimation for general integer-valued time series models based on the exponential-polynomial divergence. Journal of Statistical Computation and Simulation, 94, 1300–1316.

Lambert, D. (1992). Zero inflated poisson regression, with an application to defects in manufacturing. Technometrics, 34, 1–14.

Lee, S. (2020). Location and scale-based CUSUM test with application to autoregressive models. Journal of Statistical Computation and Simulation, 90, 2309–2328.

Lee, S., Ha, J., Na, O., & Na, S. (2003). The CUSUM test for parameter change in time series models. Scandinavian Journal of Statistics, 30, 781–796.

Lee, S., & Jo, M. (2023). Bivariate random coefficient integer-valued autoregressive models: Parameter estimation and change point test. Journal of Time Series Analysis, 44, 644–666.

Lee, S., & Jo, M. (2023). Robust estimation for bivariate integer valued autoregressive models based on minimum density power divergence. Journal of Statistical Computation and Simulation, 93, 3156–3184.

Lee, S., & Kim, D. (2023). Multiple values-inflated exponential family integer-valued GARCH models. Journal of Statistical Computation and Simulation, 93, 1297–1317.

Lee, S., Kim, D., & Kim, B. (2023). Modeling and inference for multivariate time series of counts based on the INGARCH scheme. Computational Statistics & Data Analysis, 177, 107579.

Lee, S., & Na, O. (2005). Test for parameter change based on the estimator minimizing density-based divergence measures. Annals of the Institute of Statistical Mathematics, 57, 553–573.

Lee, Y., & Lee, S. (2019). CUSUM test for general nonlinear integer-valued GARCH models. Annals of the Institute of Statistical Mathematics, 71, 1033–1057.

Livio, T., Khan, N., Bourguignon, M., and Bakouch, H. (2018). An INAR(1) model with poisson-lindley innovations. Economics Bulletin, 38.

Lu, F., & Wang, D. (2022). A new estimation for INAR (1) process with poisson distribution. Computational Statistics, 37, 1185–1201.

McKenzie, E. (1985). Some simple models for discrete variate time series. Journal of American Water Resource Association, 21, 645–650.

Mohammadi, Z., Sajjadnia, Z., Bakouch, H., & Sharafi, M. (2022). Zero-and-one inflated poisson-lindley INAR(1) process for modelling count time series with extra zeros and ones. Journal of Statistical Computation and Simulation, 92, 2018–2040.

Mohammadpour, M., Bakouch, H., & Shirozhan, M. (2018). Poisson-lindley INAR(1) model with applications. Brazilian Journal of Probability and Statistics, 32, 262–280.

Mukherjee, T., Mandal, A., & Basu, A. (2019). The B-exponential divergence and its generalizations with applications to parametric estimation. Statistical Methods & Applications, 28, 241–257.

Pedeli, X., & Karlis, D. (2011). A bivariate INAR(1) process with application. Statistical Modelling, 11, 325–349.

Pedeli, X., & Karlis, D. (2013). On estimation of the bivariate Poisson INAR process. Communications in Statistics - Simulation and Computation, 42, 514–533.

Singh, P., Mandal, A., & Basu, A. (2021). Robust inference using the exponential-polynomial divergence. Journal of Statistical Theory and Practice, 15, 1–22.

Thomas, H. (1994). The uniform california earthquake rupture forecast,. Version 1.

Toma, A., & Broniatowski, M. (2011). Dual divergence estimators and tests: Robustness results. Journal of Multivariate Analysis, 102, 20–36.

Tsay, R., Peña, D., & Pankratz, A. (2000). Outliers in multivariate time series. Biometrika, 87, 789–804.

Warwick, J. (2005). A data-based method for selecting tuning parameters in minimum distance estimators. Computational Statistics & Data Analysis, 48, 571–585.

Warwick, J., & Jones, M. C. (2005). Choosing a robustness tuning parameter. Journal of Statistical Computation and Simulation, 75, 581–588.

Weiß, C. H. (2015). A poisson INAR(1) model with serially dependent innovations. Metrika, 78, 829–851.

Weiß, C. H. (2018). An Intorduction to Discrete-Valued Time Series. Wiley, New York., ISBN-13:978-1119096962.

Acknowledgements

The authors are grateful to the Editor, an AE, and two anonymous referees for their careful reading and valuable comments. This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2021R1A2C1004009).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest.

The authors declare no Conflict of interest. Sangyeol Lee is an Associate Editor of Journal of the Korean Statistical Society. Associate Editor status has no bearing on editorial consideration.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proof

Proof

Proof of Theorem 1

From (3.8), one can see that

due to the fact: \(J(m,n,p_1,p_2, 0)=(1-p_1)^m (1-p_2)^n\) for any \(m,n\ge 0\). Then, provided \(f_{\psi _i}\) is the one in (2.6), satisfying \( E|\log h (Y_{ti}) |<\infty , \ i=1,2, \) which holds particularly true for the zero-inflated Poisson innovation case, we have

This can be shown to hold for the Poisson-Lindley case as well. Then, using the continuity of \(\ell _t (\theta )\) in \(\theta \) and the uniform strong law of large numbers for stationary ergodic processes, we can get

which implies the strong convergence of \({{\hat{\theta }}}_n\) to \(\theta _0\), as \(E\ell _t (\theta )<E \ell _t (\theta _0 )\) for all \(\theta \ne \theta _0\), due to the Kullback–Leibler divergence property and the model identifiability discussed earlier.

Moreover, using simple algebras, we can easily check that

which results in

Then, applying the martingale central limit theorem to \(\{\frac{\partial \ell _{t}(\theta _0)}{\partial \theta }\}\), we obtain

Moreover, owing to (7.20), using the uniform law of strong large numbers combined with the ergodicity of \(\{Y_t\}\) and the dominated convergence theorem, we can have

where \({\tilde{\theta }}_n\) is an intermediate point between \(\theta _0\) and \({{\hat{\theta }}}_n\), and \(||A||_1 =\sum _{i,j=1}^d |A_{ij}|\) for any \(d\times d\) matrix \(A=(A_{ij})\), so that

which asserts the theorem. \(\Box \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lee, S., Jo, M. Multiple values-inflated bivariate INAR time series of counts: featuring zero–one inflated Poisson-Lindly case. J. Korean Stat. Soc. 53, 815–843 (2024). https://doi.org/10.1007/s42952-024-00269-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42952-024-00269-0