Abstract

Purpose

Developing a computer-assisted speech training/recognition system for recognizing the speeches of dysarthric speakers has become necessary because their speeches are highly distorted due to the motor disorder in their articulatory mechanism.

Methods

In this work, two-dimensional spectrograms in BARK and MEL scale and Gammatonegram are used as features to tune the convolutional neural network (CNN) architecture designed to perform the dysarthric speech recognition.

Results

Overall recognition accuracy is 88%, 97.9%, and 98% for the CNN-based dysarthric speech recognition system using Gammatonegram, spectrogram, and Melspectrogram, respectively. However, decision-level fusion of these features results has yielded 99.72% overall accuracy with 100% individual accuracy for some of the dysarthric isolated digits. This work is extended to have a phase spectrum compensation technique to improve the intelligibility of dysarthric speeches, and the decision-level fusion classifier provides relatively better accuracy of 99.92% for classifying isolated digits spoken by dysarthric speakers.

Conclusion

This work can be utilized to recognize the distorted speeches of dysarthric speakers like normal speeches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Speech is the sequence of sounds being considered an output of the time-varying vocal tract system. Articulators are moving in response to the neural signals for producing regular speech. Dysarthric speeches are distorted because persons with dysarthria are affected by a motor speech disorder, and they cannot control the movement of articulators. As a result, dysarthric speakers experience impediments in speaking properly. Articulators and muscles involved in speech production mechanisms are damaged or paralyzed for dysarthric speakers, and they find difficulty in conveying information to others through speech. The speech intelligibility of dysarthric speakers (Kim et al. 2008) considered in our work ranges between 2 and 95%. A dysarthria severity level (Gupta et al. 2021) is assessed using short speech segments based on residual neural networks. Dysarthric severity classification (Joshy and Rajan 2021) uses deep neural networks with speech utterances from Torgo and UA-speech databases. Articulatory features and deep CNN (Emre et al. 2019) are used for developing speaker-independent speech recognition systems for dysarthric speakers.

The dysarthric speech recognition system (Kim et al. 2018) is implemented using Mel frequency cepstral coefficients (MFCC) and assessed using GMM-HMM, DNN-HMM, CNN-HMM, and CLASM-HMM classifiers. Dysarthric speech recognition (Albaqshi and Sagheer 2020) is done using MFCC and convolutional recurrent neural networks for the Torgo database. Listen, Attend and Spell (LAS) model (Takashima et al. 2019) is investigated for dysarthric speech recognition, and the performance metric used is character error rate (CER). The intelligibility of dysarthric speeches (Chen et al. 2020) is enhanced using a gated CNN-based voice conversion system. An automatic speech recognition system is developed for dysarthric speakers. The accuracy of dysarthric speech recognition (Sidi Yakoub et al. 2020) is improved using empirical mode decomposition and CNN. This work mainly uses the speech enhancement technique to improve the accuracy of the dysarthric isolated digit recognition system. It utilizes deep machine learning neural network models for template creation and testing for original dysarthric speeches and intelligibility-enhanced speeches using phase spectrum compensation (PSC) as a speech enhancement mechanism. This paper is organized as follows. ‘Development of dysarthric speech recognition system’ section describes the database used in our work and analyses normal and dysarthric speech in time, frequency, and time–frequency domains. ‘Implementation of the CNN-based dysarthric speech recognition’ section describes the methods for implementing the system with feature extraction, CNN-based model development, and the speech enhancement technique used. Experimental results based on the proposed features and CNN-based system are presented in the ‘Results of the dysarthric speech recognition system based on experiments conducted’ section. ‘Discussion based on the outcome of the experiments’ section illustrates the discussion on the experimental results. The conclusion of the work is summarized in the ‘Conclusions’ section.

Development of dysarthric speech recognition system

Dysarthric speech recognition is developed to recognize the speeches uttered by dysarthric speakers. Since a dysarthric speaker’s speeches are highly distorted, developing a speech recognition system is gaining paramount importance, and it is quite challenging to develop a robust system. This section illustrates the details of the database used and analysis of dysarthric speeches in time and frequency domains.

Details of the database used—dysarthric speaker information (Kim et al. 2008)

Table 1 indicates the speaker information considered in our study. An isolated digit recognition system is developed to recognize the digits uttered by dysarthric speakers. These speakers are diagnosed as patients with spastic. Intelligibility levels vary between 2 and 95%.

Analysis of speech in time, frequency, and time–frequency domains

Speeches uttered by normal and dysarthric speakers are analysed in time, frequency, and time–frequency domains. For example, Fig. 1 presents the analysis of speech signals uttered by a normal speaker in the time, frequency, and time–frequency domain. He takes less than a second (0.84 s) to utter this word.

Analysis of speech uttered by dysarthric speaker M09 with 86% speech fluency in time, frequency, and time–frequency domain is indicated in Fig. 2. Since this dysarthric speaker with 86% intelligibility, there are more similarities in signal characteristics as compared to the speech uttered by the normal speaker. For example, this speaker takes 2.15 s to utter the digit ‘one’.

Figure 3 indicates the analysis of speech uttered by the dysarthric speakers with 6% intelligibility in the time, frequency, and time–frequency domain. A dysarthric speaker utters this isolated digit with 6% intelligibility, and this fact is demonstrated in signal characteristics as compared to that of the normal speaker. This speaker takes 2.6 s to utter the isolated digit ‘one’.

This analysis indicates that dysarthric speakers take more time to speak simple words, and the severity level of the dysarthric speakers affects their ability to speak to a larger extent. As a result, it takes longer for them to speak. So, there is a need to develop an automated system to recognize their speeches and become a translator.

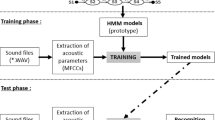

Implementation of the CNN-based dysarthric speech recognition

Dysarthric speech recognition is implemented by considering two phases: training and testing. During the training phase, features are extracted from the speeches uttered by the dysarthric speakers, the application of features to the modelling techniques to create templates as representative models of speeches, and models are fine-tuned for speech recognition. During testing, features are extracted from the speeches earmarked for testing. These features are applied to the models. Depending on the classifier used, speech is recognized as associated with the model in a pertinent isolated digit.

Feature extraction phase

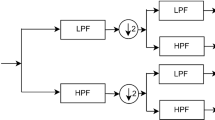

In this work on CNN-based dysarthric speech recognition, time–frequency representational features are used to fine-tune the CNN models. Features extracted should have high discriminating capability among the speeches considered. Speech utterances in a pertinent isolated digit are concatenated, and spectrogram, Melspectrogram, and Gammatonegram are extracted for the speech frames containing 8192 samples, and this process is repeated for every 256 samples. The block schematic used for feature extraction is depicted in Fig. 4.

Eighty percent of the features are used for training and the remaining 20% for testing. Size of the spectrogram, Melspectrogram, and Gammatonegram feature is [129,127], [64,127], and [64,49], respectively. For one frame, spectrogram, Melspectrogram, and Gammatonegram are plotted as in Figs. 5, 6, and 7.

Development of CNN templates

Spectrogram, Melspectrogram, and Gammatonegram two-dimensional feature sets for each isolated digit are applied to the CNN network. Network models are fine-tuned to perform speech recognition for dysarthric speakers. Table 2 describes the CNN layered architecture (Soliman et al. 2021 J. Zhang et al. 2017, Arias-Vergara et al. 2021, Vavrek et al. 2021, Sangwan et al. 2020, Chen et al. 2020, P. H. Binh et al. 2021) for Gammatonegram-based dysarthric isolated digit recognition implemented as a work.

Similar CNN architecture is implemented with variations in the image input size [129, 127, and 1] for spectrogram and [64, 127, and 1] for Melspectrogram-based CNN networks. Figure 8 indicates the modules used for creating group CNN templates for the proposed features.

Phase spectrum compensation-based speech enhancement (Stark et al. 2008)

In this method, the modified phase response is combined with a magnitude response to get the changed frequency response for the noisy speech. Analysing the relation between spectral-domain and time-domain during the synthesis process makes it possible to cancel out the high-frequency components, thus producing a signal with a reduced noise component. The STFT of the noisy signal is computed as in (1).

The compensated short-time phase spectrum is computed by using Eqs. (2) and (3).

The process obtains phase spectrum compensation function as in Eq. (2).

\(\left|{D}_{n}(k)\right|\) specifies magnitude response of the noise signal\(\lambda\)—constant

The anti-symmetry function \(\psi (k)\) is defined as in (3).

Multiplication of symmetric magnitude spectra of the noise signal with anti-symmetric function \(\psi \left(k\right)\) produces an anti-symmetric \({\wedge }_{n}\left(k\right)\). Noise cancellation is made during the synthesis process by utilization of the anti-symmetry property of the phase spectrum compensation function. The complex spectrum of noisy speech is computed as in Eq. (4).

The compensated phase spectrum of the noisy signal is derived as in Eq. (5).

Recombination of the compensated phase response with magnitude response of the noisy signal is done to get the modified spectrum, from which enhanced speech is derived by performing inverse transform as in (7) on the modified spectral response given in (6).

Figure 9 indicates the performance of the speech enhancement technique by phase compensation.

Results of the dysarthric speech recognition system based on experiments conducted

Speech utterances in pertinent isolated digits are concatenated, and spectrogram, Melspectrogram, and Gammatonegram two-dimensional features are extracted after voice activity detection for original raw dysarthric speeches. Similarly, speech intelligibility is improved using phase spectrum compensation as a speech enhancement technique on raw dysarthric speeches. The proposed time–frequency representational features are extracted for the enhanced dysarthric speeches. Eighty percent of the features have been used for training. Twenty percent of the features are considered for testing. These features are given to the CNN models. Based on the matching, each row of the test vectors is associated with one of the groups in training. Group can be categorical string of indices of isolated digits ‘1’, ‘2’, ‘3’, ‘4’, ‘5’, ‘6’, ‘7’, ‘8’, ‘9’, ‘0’. The confusion graph in Fig. 10 indicates the performance of the Gammatonegram and CNN-based dysarthric isolated digit recognition without applying PSC for speech enhancement. The overall average system accuracy is 88.3%, with a relatively low accuracy of 83% for recognizing the digit ‘2’.

The confusion chart shown in Fig. 11 depicts spectrogram and CNN-based dysarthric isolated digit recognition system without PSC. The average accuracy is 97.8%.

The confusion chart depicted in Fig. 12 demonstrates the performance of the dysarthric isolated digit recognition for the Melspectrogram and CNN-based system. The average accuracy is 98%.

Decision-level fusion of results of three spectrograms for the CNN-based system is done, and Table 3 reveals the performance of the decision-level fusion system. Figure 13 indicates the proposed decision-level fusion system. The overall accuracy of the decision-level fusion classifier is 99.72%.

Figures 14, 15, and 16 indicate the performance of the spectrogram, Melspectrogram and Gammatonegram, and CNN-based system by using PSC as an enhancement technique on the raw dysarthric speeches for improving Intelligibility.

The average recognition accuracy for Gammatonegram, spectrogram and Melspectrogram, and CNN-based system with PSC as speech enhancement technique is 96.67%, 99.46%, and 98.76%, respectively. Table 4 indicates the performance of the dysarthric isolated digit recognition system for PSC as a speech enhancement technique with a decision-level fusion of results corresponding to the two-dimensional features such as spectrogram, Melspectrogram, and Gammatonegram.

Overall average accuracy is 99.92%. Figure 17 indicates the comparative performance of the system with and without the speech enhancement technique.

This work on isolated digit recognition is extended to perform connected word recognition. Twenty related words spoken by dysarthric speakers are taken, and Fig. 18 indicates the confusion charts for connected word recognition using the proposed features and CNN-based systems. The performance of the decision-level fusion of correct indices on the features and CNN-based systems is indicated in Fig. 19. This system is evaluated using PSC for speech enhancement, and the accuracy is good.

Discussion based on the outcome of the experiments

In this work on dysarthric speech recognition, speech utterances of dysarthric speakers are split into two sets, each for training and testing. Spectrogram, Melspectrogram, and Gammatonegram features are extracted from basic training speeches, and CNN templates are created for each isolated digit based on the pertinent input features. Test sets of utterances in each isolated digit are tested, and the system’s performance is analysed based on three different two-dimensional spectrogram features with CNN for modelling and classification. Out of the features used, the overall accuracy of the system for spectrogram and Melspectrogram is the same. Another experiment is conducted on the intelligibility improved speeches of dysarthric speakers with an application of the phase spectrum compensation technique for speech enhancement. Spectrogram-based feature selection is better in terms of attaining good accuracy as compared to other features. Decision-level fusion of outcome of the experiments for the features with and without speech enhancement technique proves to be good in attaining the very good accuracy of 99.92% for all the isolated digits uttered by dysarthric speakers. Table 5 gives the comparative analysis of the proposed work with existing works mentioned in the literature.

Conclusions

In this paper, the development of a speech recognition system for recognizing the isolated digits uttered by dysarthric speakers is analysed and assessed using two-dimensional spectrogram features and deep CNN. Spectrogram, Melspectrogram, and Gammatonegram features are extracted from the speech utterances corresponding to the speech utterances of isolated digits [‘1’, ‘2’, ‘3’, ‘4’, ‘5’, ‘6’, ‘7’, ‘8’, ‘9’, ‘0’]. Eighty percent of the derived two-dimensional time–frequency representational features are applied to the CNN layered architecture, and combined group CNN models are created. The remaining 20% of the features are applied to the CNN group models, and classification is done based on the association of feature frames with one of the groups in training. Gammatonegram-, spectrogram-, and Melspectrogram-based CNN have an overall accuracy of 88.3%, 97.89%, and 98%, respectively. Decision-level fusion of correct classification indices of three CNN-based systems has yielded a good overall accuracy of 99.72%, with 100% individual accuracy for some isolated digits. The system is evaluated by applying the PSC speech enhancement technique to the raw dysarthric speeches, and a 9% increase in overall accuracy is ensured for Gammatonegram and CNN-based systems. The speech enhancement technique ensures a 1% increase in accuracy for spectrogram- and Melspectrogram-based recognition systems with marginal improvement for decision-level fusion of all CNN-based systems. If there is a system to recognize the distorted speeches of dysarthric persons, this automated system would be useful for caretakers to provide required help/assistance to the persons affected with dysarthria.

Data availability

All relevant data are within the paper.

References

Albaqshi H, Sagheer A. Dysarthric speech recognition using convolutional recurrent neural networks. Int J Intell Eng Syst. 2020;13(6):384–92. https://doi.org/10.22266/ijies2020.1231.34.

Arias-Vergara T, Klumpp P, Vasquez-Correa JC, et al. Multi-channel spectrograms for speech processing applications using deep learning methods. Pattern Anal Applic. 2021;24:423–31. https://doi.org/10.1007/s10044-020-00921-5.

Binh PH, Hoang PV, Ba DX. A high-performance speech-recognition method based on a nonlinear neural network, 2021 international conference on system science and engineering (ICSSE). 2021:96–100. https://doi.org/10.1109/ICSSE52999.2021.9537942.

Chen C-Y, Zheng W-Z, Wang S-S, Tsao Y, Li P-C, Lai Y-H. Enhancing intelligibility of dysarthric speech using gated convolutional based voice conversion system. Interspeech. 2020;2022:4686–90. https://doi.org/10.21437/Interspeech.2020-1367.

Emre Y, Vikramjit M, Sivaramand G, Franco H. Articulatory and bottleneck features for speaker-independent ASR of dysarthric speech. Comput Speech Lang. 2019;58:319–34. https://doi.org/10.1016/j.csl.2019.05.002.

Gupta S, Patil AT, Purohit M, Parmar M, Patel M, Patil HA, Capobianco Guido R. Residual neural network precisely quantifies dysarthria severity-level based on short-duration speech segments. 2021;139:105–117. https://doi.org/10.1016/j.neunet.2021.02.008.

Joshy AA, Rajan R. Automated dysarthria severity classification using deep learning frameworks. In: 2020 28th European Signal Processing Conference (EUSIPCO). IEEE; 2021. pp. 116–20.

Kim M, Cao B, An K, Wang J. Dysarthric speech recognition using convolutional LSTM neural network. Proc Interspeech. 2018;2018:2948–52. https://doi.org/10.21437/Interspeech.2018-2250.

Kim H, Hasegawa-Johnson M, Perlman A, Gunderson J, Huang T, Watkin K, Frame S. Dysarthric speech database for universal access research. INTERSPEECH 2008, 9th annual International Speech Communication Association conference, Brisbane, Australia. (2008). https://www.isca-speech.org/archive/archive_papers/interspeech_2008/i08_1741.pdf.

Sangwan P, Deshwal D, Kumar D, Bhardwaj S. Isolated word language identification system with hybrid features from a deep belief network. Int J Commun Syst. 2020;e4418.

Sidi Yakoub M, Selouani S, Zaidi BF, et al. Improving dysarthric speech recognition using empirical mode decomposition and convolutional neural network. J Audio Speech Music Proc. 2020;2020(1). https://doi.org/10.1186/s13636-019-0169-5.

Soliman A, Mohamed S, Abdelrahman IA. Isolated word speech recognition using convolutional neural network, 2020 international conference on computer, control, electrical, and electronics engineering (ICCCEEE). 2021:1–6. https://doi.org/10.1109/ICCCEEE49695.2021.9429684.

Stark AP, Wójcicki KK, Lyons JG, Paliwal KK. Noise driven short-time phase spectrum compensation procedure for speech enhancement. In: Ninth annual conference of the international speech communication association. 2008.

Takashima Y, Takiguchi T, Ariki Y. End-to-end dysarthric speech recognition using multiple databases, ICASSP 2019 - 2019 IEEE international conference on acoustics, speech, and signal processing (ICASSP). 2019:6395–6399. https://doi.org/10.1109/ICASSP.2019.8683803.

Vavrek L, Hires M, Kumar D, Drotár P. Deep convolutional neural network for detection of pathological speech. In 2021 IEEE 19th world symposium on applied machine intelligence and informatics (SAMI) (pp. 000245–000250). IEEE. 2021.

Zhang J, Xiao S, Zhang H, Jiang L. Isolated word recognition with audio derivation and CNN, 2017 IEEE 29th international conference on tools with artificial intelligence (ICTAI). 2017:336–341. https://doi.org/10.1109/ICTAI.2017.00060.

Acknowledgements

The authors thank the Department of Science & Technology, New Delhi, for the FIST funding (SR/FST/ET-I/2018/ 221(C)). In addition, the authors wish to express their sincere thanks to the SASTRA Deemed University, Thanjavur, India, for extending infrastructural support to carry out this work.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethics approval

This article does not contain any studies with human participants or animals performed by any authors.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Revathi, A., Sasikaladevi, N. & Arunprasanth, D. Development of CNN-based robust dysarthric isolated digit recognition system by enhancing speech intelligibility. Res. Biomed. Eng. 38, 1067–1079 (2022). https://doi.org/10.1007/s42600-022-00239-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42600-022-00239-7