Abstract

Purpose

Breast cancer is still one of the deadliest forms of cancer for women, both in developed countries and in underdeveloped and developing nations. Mammography is currently the most validated imaging technique to support the differential diagnosis of malignant and benign lesions. Radiologists often only need to clarify doubts about regions of interest that correspond to suspected lesions. Deep-wavelet neural networks are convolutional neural networks that do not necessarily learn, as they can have predefined filter banks as their neurons.

Methods

In this work, we propose a deep hybrid architecture to support the diagnosis of regions of interest in digital mammography. The architecture uses a six-layer deep-wavelet neural network to extract attributes from the regions of interest of mammograms and a support vector machine with a second-degree polynomial kernel for final classification.

Results

Classical classifiers such as Bayesian classifiers, single hidden layer multilayer perceptrons, decision trees, random forests, and support vector machines were tested. The results showed that it is possible to detect and classify lesions with an average accuracy of 94% and an average kappa of 0.91, employing a six-layer deep-wavelet network and a support vector machine with a second-degree polynomial kernel as the final classifier.

Conclusion

Using a deep neural network with weights fixed in advance from the wavelet transform filter bank, it was possible to extract attributes and thus take the problem to a space where it can be solved with relatively simple decision boundaries, such as those produced by support vector machines with a second-degree polynomial kernel. This shows that deep networks that do not learn can be important in building complete solutions to support mammographic imaging diagnosis.

Introduction

Motivation and problem characterization

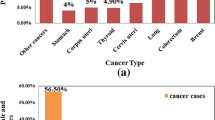

Cancer, in all its forms, has become one of the greatest public health problems of the 20th century worldwide, regardless of the levels of social and economic development of different nations around the globe (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018). Of all the forms of cancer, breast cancer is the most dangerous cancer for older and middle-aged women (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018), and it is also the most common form of cancer among women (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018). Breast cancer is among the five most common types of cancer in the world (Shrivastava et al. 2017). In Brazil alone, it corresponds to about 28% of new cancer cases per year. Although, in general, there is a good prognosis, this disease is still responsible for the highest cancer mortality rate in the female population (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018). According to the Brazilian Ministry of Health (MS), the early detection of tumors, which consists of identifying cancer in its early stages, is essential to reduce mortality from the disease (Gonçalves et al. 2016).

Breast cancer has been proliferating both in so-called developed countries and in underdeveloped and developing countries, accompanying the increase in the average life expectancy of the population, the swelling of cities, the gradual emptying of rural areas, and the adoption of new and more aggressive consumption forms (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018). Even though the risk of breast cancer can be reduced through preventive strategies, such as carrying out educational campaigns that encourage visual inspection and touch of the breasts, even a good and well-accepted prevention campaign cannot eliminate most types of breast cancer, as they end up being diagnosed too late (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018). Therefore, the existence and availability of technologies for early detection of breast cancer in public health systems can contribute to increase the chances of cure and treatment options (Bidard et al. 2018; Gonçalves et al. 2016; Pilevarzadeh et al. 2019; Wang et al. 2018).

Currently, the main method used to identify breast lesions is mammography, which consists of a breast scan using x-rays (Maitra and Bandyopadhyay 2017). However, despite technological advances that have resulted in improved technique and image quality, there are still situations in which mammography is insufficient to identify lesions, especially in their early stages, whether due to limitations of the method itself or inconsistencies in the specialists’ diagnosis due to the great variability of clinical cases (Bandyopadhyay 2010). For this reason, investigations using methods such as ultrasonography, magnetic resonance, and clinical examinations, in general, have been associated with the results obtained through mammography, in order to make the diagnosis more robust (Gonçalves et al. 2016). Even with the combination of these techniques, the Ministry of Health of Brazil still states that the majority of correctly identified cases are currently advanced-stage lesions, which makes treatment difficult, when it is possible at all, and increases the need to perform invasive procedures such as biopsies and mastectomies (total or partial removal of the breast) (Gonçalves et al. 2016). In fact, the international scenario is not very different (Schattner 2020).

In this sense, the combination of specialist knowledge with methods of digital analysis of mammography images can contribute to improving diagnosis, prognosis, and treatment of breast cancer (Bandyopadhyay 2010; Nordin et al. 2008; Salmeri et al. 2009). As with other approaches to supporting diagnostic imaging (Santos et al. 2006a, b, 2007, 2008a, b, c, d, 2009a, b, c, d, e), the extraction of attributes is an essential aspect in obtaining high accuracy results in breast image analysis (Bandyopadhyay 2010; Boquete et al. 2012; Boujelben et al. 2009; Mascaro et al. 2009; Nordin et al. 2008). The use of CBIR (content-based image retrieval) techniques in feature representation can contribute to more accurate analyses (Lew et al. 2006). Several studies have explored these aspects and achieved high results (Azevedo et al. 2015a, b; Cordeiro et al. 2013; Cordeiro et al. 2016a, b, 2017a, b; Cruz et al. 2018; de Lima et al. 2016; de Vasconcelos et al. 2018; Lima et al. 2015; Rodrigues et al. 2019).

Overall, the accuracy of diagnosis using conventional techniques is around 70 to 90%, a percentage that decreases to less than 60% when dealing with women under 40 years old (Urbain 2005). These factors, associated with the vast variability of clinical cases, make the identification and differentiation of breast lesions based on images a difficult task for human eyes, especially when it comes to small lesions or lesions that are difficult to access. Faced with these challenges, several works have been dedicated to the study and development of intelligent classification systems to be used as assistants to specialists, to optimize the accuracy of the diagnosis.

Several works have been developed to support the diagnosis of breast lesions in mammographic images using deep learning. Most of these works are based on convolutional neural network architectures (Hamidinekoo et al. 2018; Shen et al. 2019; Wang et al. 2016; Yala et al. 2019; Yoon and Kim 2021). Although these classifier architectures are capable of modeling very complex decision boundaries, training deep networks is usually quite expensive from the point of view of computational complexity, demanding a lot of processing time and memory. Computing architectures with a high degree of parallelism are often required, such as servers equipped with graphics processing units (GPUs) (Fang et al. 2019; Mittal and Vaishay 2019). This tends to greatly increase the costs of acquiring and maintaining solutions for training deep neural network architectures. One way to overcome this problem is to use hybrid deep architectures based on pre-trained deep networks and shallow machine learning models. This approach has been called deep transfer learning: a deep neural network trained for another problem is used to extract implicit features from images; the feature vector thus obtained is then presented to a shallow machine learning model (Hua et al. 2021; Talo et al. 2019).

However, the features extracted from images using models based on convolutional neural networks are not invariant to translation and rotation, which makes the shallow models of the output layer not very robust regarding data variability. Thus, to overcome this problem further, it is necessary to train a model with a very large database, commonly in the big data domain. This again raises the need for architectures with a high degree of parallelism to deal with the computational complexity involved. Furthermore, models based on CNNs require that the input images have a standardized resolution, which requires that images of higher resolution than expected for a given convolutional neural network have their dimensions reduced and aspect ratios changed. This can significantly affect the recognition of images where texture features are preponderant, as is the case of samples from regions of interest in mammograms.

In this work, we investigate the use of convolutional neural networks based on the wavelet transform, the deep-wavelet neural networks (DWNN). These deep networks, in the form adopted in this work, were proposed by Barbosa et al. (2020). These networks do not learn: once the number of layers and the type of pixel neighborhood are set, the filter bank is determined. The filter bank is based on the approximation of the discrete wavelet transform by the Mallat algorithm: a set of high-pass filters oriented according to the pixel neighborhood and an approximation low-pass filter. Features are extracted using statistics (synthesis blocks) obtained from the sub-images of the output layer. Thus, once the number of layers and the type of pixel neighborhood are fixed, the dimension of the feature vector is fixed, and it is not necessary to change the dimensions or the aspect ratio of the input images. Given that the wavelet transform has been used successfully in texture recognition applications, we adopted the DWNN in the model proposed in this work, hoping to obtain good results for the problem of supporting the diagnosis of breast lesions considering different textures of breast tissue. Although mammography is more suitable for women over 45 years of age, with predominantly fatty breast tissue, solutions that achieve good results with images of denser breasts can optimize diagnosis with mammographic images, allowing for the inclusion of younger women in mammographic examinations when necessary.

Therefore, taking into account the relative success of approaches related to artificial intelligence and the need for solutions that enable the early diagnosis of breast cancer, this paper proposes a study of computational approaches for the automatic classification of lesions in mammography images. The study also used a new computational tool for image attribute extraction, the deep-wavelet neural network (DWNN) (Barbosa et al. 2020), which consists of a deep and untrained architecture, inspired by Wavelet decomposition at multiple levels. The shallow methods in the final layer we investigated were empirically chosen, trying to cover the most popular machine learning models: multi-layer perceptrons, support vector machines, decision trees, random forests, extreme learning machines, and Bayesian methods.

Initially, we present relevant works in the field of breast cancer diagnosis using computational tools and mammography images. Then, we present the proposal of this study. In the next section, we show and discuss the results obtained, carrying out a quantitative and qualitative evaluation of the explored techniques. Finally, we conclude the article by highlighting the main findings and limitations, in addition to proposing some possibilities for future work.

Related works

In their work, W. Azevedo et al. (2015a, b) proposed using extreme learning machines (ELMs) with different kernels to distinguish healthy breasts from those with benign lesions and those with malignant lesions. For this, the authors used the Image Retrieval in Medical Applications (IRMA) database, with 2796 images (128×128) of regions of interest (ROI) from mammograms, organized according to the predominant type of tissue in the breast, which may be adipose (I), fibrous (II), heterogeneously dense (III), or extremely dense (IV) tissue.

The authors employed 5 different evaluation scenarios to verify the performance of the classifier configurations. Four of these scenarios used images associated with a single type of tissue constitution. In such cases, each image must be classified into one of the following three classes: no lesion, benign lesion, or malignant lesion. Finally, the fifth scenario used all the images, combining all types of tissue, so that there are 12 possible classes, three for each type of tissue. Therefore, in the fifth evaluation scenario, the authors sought to verify whether the ELM would be able to classify the images not only by the type of tissue, but also by the diagnosis associated with the image.

For attribute extraction, the authors also evaluated different combinations of the Haralick, Zernike, and Wavelet methods, all tested individually and associated in pairs. The ELM kernels were the sigmoidal, dilatation, and erosion kernels, the last two being proposed in the same study. One hundred neurons in the hidden layer of the classifier and the 10-fold cross-validation method were used.

Overall, the association of Wavelet and Haralick attributes resulted in the best performances. Results close to the best were obtained when only the Haralick attributes were used. The Zernike attributes and the association of Zernike and Wavelet resulted in the least satisfactory performances. Regarding the result for each of the databases, it was observed that the use of tissue III favored the classification, reaching up to 95% of accuracy, with a kappa index of 0.96, using the erosion kernel. Tissues I, II, and IV had very similar results, all with 90% accuracy and kappa indices of 0.92, 0.91, and 0.93, respectively, the first result being obtained with the erosion kernel, and the other two with the dilatation kernel. For the database with all tissues and 12 possible classes, as expected, there was a considerable decrease in classification performance, so that a maximum accuracy of 65% was obtained, with a kappa of 0.66, for both the erosion and the dilatation kernels.

In the work by Becker et al. (2017), a self-constructed binary database (with cancer versus without cancer) was used, from which two studies were carried out. The first was a longitudinal study, which used exams of patients followed for 7 years (2008-2015), resulting in 178 exams per class. The second study was cross-sectional, whose data were from 2012 cases, with 143 exams from cancer patients and 1003 from healthy individuals.

Image analysis was performed using the ViDi SuiteVersion software. Initially, the ROI of each image was selected by specialists in the field. The ROI heatmap was used to represent the images and a dANN (deep Artificial Neural Network) was used as a classifier. This method resulted in an accuracy of 81%, with a sensitivity of 59.8% and specificity of 84.4%, for the group of the first study. In the second study, the performance was 85% correct, with 73.7% and 72% of sensitivity and specificity, respectively.

In the study by S. Wang et al. (2017), the authors used the Mammographic Image Analysis Society (mini-MIAS) database, containing 322 mammograms (1024×1024), also divided by tissue type, but with 3 possible tissues: adipose, adipose-glandular, and dense-glandular. The main objective of this work was to investigate the performance of the proposed classification method, Jaya-FNN, using the Jaya algorithm to train a feed-forward neural network (FNN). For this, 200 images were randomly chosen, 100 of the healthy breasts class and 100 of the cancerous breasts class. Then, the images were filtered for noise attenuation and segmented, isolating the ROI.

For feature extraction, the weighted fractional Fourier transform (WFRFT) was used, resulting in the fractional Fourier spectrum, which consists of a large set of attributes. Therefore, the principal component analysis (PCA) method was used to reduce the dimensionality of these attributes. The work also proposes the Jaya-FNN classification method, which consists of an FNN whose weights and biases are trained using the Jaya algorithm. For validation, the k-fold method was used, with 10 folds. In the study, the performance of Jaya-FNN was compared with that of other algorithms widely explored in the literature, and the authors observed that the proposed method presented the best results. Using Jaya-FNN resulted in the lowest standard deviation and a mean square error of 0.0093, which was more than 70% smaller than the second-best error. As for the metrics of accuracy, sensitivity, and specificity, values around 92% were obtained for all.

Magna et al. (2016) used the A2INET network to identify asymmetries between breasts, using mammographic images from two public domain databases, the Digital Dataset for Screening Mammography (DDSM) and the mini-MIAS. The A2INET, proposed in the work, consists of a semi-supervised model of an artificial immune network. Ninety-four images were used, which were divided equally into two groups: healthy breasts and asymmetrical breasts. To represent the images, the authors used 24 attributes extracted from a measure of quantification of structural similarity between regions, to describe relevant differences between the two breasts. In addition, the PCA method was also applied to reduce the dimensionality of the attributes.

The performance of A2INET was compared with that of three other methods, kNN, Partial Least Squares Discriminant Analysis (PLS-DA), and a backpropagation neural network. The proposed network outperformed the results of the other classifiers tested, as the accuracy of up to 90% was obtained using A2INET, while accuracies of the other methods were around 70%.

In the study by Rodriguez-Ruiz et al. (2019), the authors sought to identify breast cancer in mammography images from 9 different databases, totaling 9,000 images of breasts with cancer, 3,000 of them with calcification and 180,000 images of healthy breasts. For classification, a convolutional neural network (CNN) was used, whose results were compared to the diagnoses provided by 101 radiologists. Network performance was analyzed for each of the bases separately. Based on the work, the authors verified that the computational method used had a performance close to, but inferior to, the specialized knowledge of radiologists, with specificity between 49 and 79% and maximum sensitivity of 86%.

Although in the work by Rodriguez-Ruiz et al. (2019), the authors discuss the results in order to investigate the possibility of replacing radiologists’ knowledge with computational intelligence, it is important to mention that this is not the scope of the present work. In the study proposed here, our focus is on the development of new learning models that can be used in the development of solutions to support differential diagnosis. These solutions could be made available in the form of web microservices that, in turn, can be connected to expert systems that integrate various information from different databases to support the process of diagnosing breast cancer or breast lesions.

Materials and methods

Proposal

In this study, we propose an approach for identifying and classifying breast lesions from mammography images (Fig. 1). The images used are from the Image Retrieval in Medical Applications (IRMA) database and consist of regions of interest in mammograms. In the database, there are images of different breast tissues, both healthy and with benign and malignant lesions.

Fig. 1 Proposed method for the identification and classification of breast lesions in mammography images. Initially, we extract features through the DWNN method. Then, the feature vectors are assessed by different classification algorithms to identify the existence of a breast lesion in the image and indicate the probable type of lesion.

Initially, we analyzed the dataset in two different ways: in the first, we used only images of breasts with a predominance of adipose tissue (tissue I). In a second step, the analysis was performed using all the images of the different breast compositions, combining the breast images with all possible compositions: tissues I (adipose), II (fibroglandular), III (heterogeneously dense), and IV (extremely dense). Then, we use the DWNN method to extract attributes from the images. Finally, we evaluated the performance of different algorithms in positioning the images in their respective classes (i.e. normal, benign, and malignant). Figure 1 illustrates this method.

Dataset

The mammography imaging database adopted is the Image Retrieval in Medical Applications (IRMA) database, with 2796 images (128×128 pixels) of regions of interest (ROI), developed at Aachen University of Technology, Germany, and given to the Research Group on Biomedical Computing at UFPE (Brazil), for academic use, by Prof. Thomas Deserno (Deserno et al. 2012). The IRMA repository was built from four other public databases:

- 150 images from the MIAS (Mammographic Image Analysis Society) database (Suckling et al. 1994);
- 2576 images from the DDSM (Digital Database for Screening Mammography) database (Heath et al. 2000);
- 1 image extracted from the LLN database (Lawrence Livermore National Laboratory, USA);
- 69 images from the Department of Radiology at Aachen University of Technology (RWTH), Germany.

In this database, the images are organized in two ways: by the type of tissue density predominant in the breast and by the diagnosis associated with the patient’s clinical case. As for the type of tissue, they are divided into four, according to the BIRADS (Breast Imaging Reporting and Data System) (D’Orsi et al. 1998) classification: adipose (type I), fibroglandular (type II), heterogeneously dense (type III), and extremely dense tissue (type IV). Figure 2 shows examples of each of these classes of breast tissue. As for the diagnosis associated with each image, there are three possible classes: healthy breasts, malignant lesions, and benign lesions. Considering the complete image base, we have 233 images for each of the three possible diagnoses, considering the four tissue types. Thus, there are 12 classes with 233 images each, totaling 2796 images. All diagnoses were previously established by specialists and used to resize the mammography images, isolating only the ROI, as shown in Fig. 3.

In this work, all images from the IRMA database were used, which were organized into 2 databases, the first with images of predominantly adipose breasts and the second with images of breasts with a predominance of all types of tissues. Table 1 details the organization of these bases. It is noteworthy that the IRMA base used was previously balanced, so the number of images is evenly distributed among the classes. Furthermore, it was not necessary to carry out any type of conversion, since the images are already acquired in gray levels.

Deep-wavelet neural network

Deep-wavelet neural network (DWNN) is a deep-learning method of attribute extraction for pattern recognition, based on Mallat’s algorithm (Mallat 1989) for multilevel wavelet decomposition. The “Deep” in the name arises from the possibility of using multiple layers, making the method deeper as new layers are added. Furthermore, as with conventional deep learning methods, such as convolutional neural networks (CNN), the process consists of two basic steps: in the first, the images are submitted to filters, and in the second, pooling occurs. However, while filters are not fixed in conventional methods, the filters used in the DWNN are fixed and refer to families of Haar wavelets.

In the Wavelet decomposition based on Mallat’s algorithm, low-pass and high-pass filters are applied to an image, resulting in a set of other images. Images resulting from low-pass and high-pass filters are called approximations and details, respectively (Mallat 1989). The smoothness of the original image is highlighted in the approximations, while in details, the edges (or discontinuity regions) are highlighted. This strategy is used in pattern recognition as it allows analyzing images in both the spatial and frequency domains (Mallat 1989).
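To make the notions of approximation and detail concrete, the sketch below computes one level of a standard 2-D orthonormal Haar decomposition with NumPy. It is only an illustration of the general idea, not the DWNN filter bank itself; the function name `haar_level` and the test images are assumptions introduced here, and the input is assumed to have even side lengths.

```python
import numpy as np

def haar_level(image):
    """One level of a 2-D orthonormal Haar decomposition (even-sized input)."""
    x = np.asarray(image, dtype=float)
    # The four pixels of every non-overlapping 2x2 block.
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2.0     # low-pass in both directions
    detail_h = (a + b - c - d) / 2.0   # top minus bottom: horizontal edges
    detail_v = (a - b + c - d) / 2.0   # left minus right: vertical edges
    detail_d = (a - b - c + d) / 2.0   # diagonal structure
    return approx, detail_h, detail_v, detail_d

# A flat image has no discontinuities, so all details vanish.
flat = np.full((4, 4), 3.0)
print([float(np.abs(p).max()) for p in haar_level(flat)[1:]])

# A vertical edge between columns 0 and 1 shows up only in detail_v.
step = np.hstack([np.zeros((4, 1)), np.ones((4, 3))])
print(haar_level(step)[2])
```

Each output image has half the side length of the input, which is why the decomposition can be repeated on its own outputs to obtain multiple levels.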

In the DWNN approach, a neuron is formed by combining a filter, $g_i$, with a downsampling operator (↓2), as shown in Fig. 4. In the figure, the matrices X and Y represent the input and output images, respectively. The resolution of Y is lower than that of X solely because of the downsampling.

All filters used in DWNN form a filter bank, which is kept fixed throughout the process. Suppose the bank has n filters. Thus, an input image will be submitted to n neurons that form the first intermediate layer of the neural network. In the second layer, each image resulting from the first will be submitted individually to the same filter bank and to downsampling, in the same way as was done for the input image. The process repeats for the third and subsequent intermediate layers. In the DWNN output layer, there is the synthesis block, which is responsible for extracting information from the images resulting from the process. This approach is outlined in Fig. 5; in the figure, the abbreviation “SB” means synthesis block, and m represents the number of layers of the DWNN. The filter bank, downsampling, and the synthesis block are detailed below.

The filter bank used in DWNN is fixed and composed of orthogonal filters. Considering S the domain of the image (called the support) and $\mathbb{R}$ the set of real numbers, we can say that the orthogonal filters are of the type $g_k : S \to \mathbb{R}$, for $1 \le k \le n$. So, mathematically, the filter bank (G) can be represented by the set:

$$G = \{g_1, g_2, \ldots, g_n\}$$

Before determining the DWNN filter bank, it is necessary to define which neighborhood will be considered during the filtering process. Let $\vec{u}$ be a pixel of coordinates (i, j). The 8 neighboring pixels (also called the 8-neighborhood) of $\vec{u}$ are: (i + 1, j), (i − 1, j), (i, j + 1), (i, j − 1), (i + 1, j + 1), (i + 1, j − 1), (i − 1, j + 1), and (i − 1, j − 1). In other words, the lateral, vertical, and diagonal pixels of $\vec{u}$ are considered neighbors, as shown in Fig. 6a.
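The neighborhood definition can be written down as a small helper. This is a minimal sketch: the function name `neighborhood` and its `radius` parameter are hypothetical, introduced only for illustration, and image borders are not handled. With radius 1 it lists the 8-neighborhood; with radius 2 it gives the 24-pixel neighborhood that larger masks would use.

```python
def neighborhood(i, j, radius=1):
    """Coordinates of the (2*radius + 1)**2 - 1 neighbors of pixel (i, j)."""
    return [(i + di, j + dj)
            for di in range(-radius, radius + 1)
            for dj in range(-radius, radius + 1)
            if (di, dj) != (0, 0)]  # the pixel itself is not its own neighbor

print(len(neighborhood(5, 5, radius=1)))  # 8
print(len(neighborhood(5, 5, radius=2)))  # 24
```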

Considering an 8-neighborhood, it is possible to form an orthonormal filter basis containing a total of five filters, of which four are bandpass filters with a specific orientation selectivity (Mallat 1989). That is, each filter will highlight details in a given orientation. Figure 6b shows the orientations of such filters for an 8-neighborhood. In the figure, $g_1$ is the vertical high-frequency filter, responsible for highlighting horizontal edges; $g_2$ is the horizontal high-frequency filter, which highlights vertical edges; and $g_3$ and $g_4$ are the diagonal filters, which highlight the corners of the image. Therefore, the filters $g_1$, $g_2$, $g_3$, and $g_4$ form the set of high-pass filters, also classified as derivative filters because they highlight the discontinuities of the input image.

In addition to the high-pass filters, the DWNN filter bank includes a low-pass filter ($g_5$), acting as a smoother. Its function is to highlight the homogeneous areas of the image. This filter is considered an integrator filter. An example of a normalized low-pass filter for the 8-neighborhood is given in Equation 2, where the center of the mask (2,2) is the reference position used during the convolution of the image with the filter.

$$g_5 = \frac{1}{9}\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix} \tag{2}$$

The filters shown in Fig. 6b and in Equation 2 are valid for an 8-neighborhood. A different neighborhood requires other filters. For example, consider a neighborhood of 24 pixels, as shown in Fig. 7a. The orientation-selective high-pass filters are then those shown in Fig. 7b. In this case, there are 8 high-pass filters ($g_1, g_2, \ldots, g_8$). For the 24-neighborhood, the low-pass filter would be a 5×5 matrix formed by 1/25 terms, following the same filter principle given in Equation 2 for an 8-neighborhood. So, for a neighborhood of 24 pixels, the DWNN filter bank must be the orthonormal set formed by the filters shown in Fig. 7b plus one low-pass filter, totaling 9 filters.
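As a quick illustration of the low-pass masks described above, the sketch below builds the averaging mask for a square neighborhood. The helper name `lowpass_filter` and its `radius` parameter are assumptions introduced here; the 3×3 mask with 1/9 entries is inferred from the statement that the 5×5 case is formed by 1/25 terms under the same principle.

```python
import numpy as np

def lowpass_filter(radius=1):
    """Averaging mask covering a pixel and its (2*radius + 1)**2 - 1 neighbors."""
    size = 2 * radius + 1
    return np.full((size, size), 1.0 / size ** 2)

low3 = lowpass_filter(1)  # 8-neighborhood: 3x3 mask with entries 1/9
low5 = lowpass_filter(2)  # 24-neighborhood: 5x5 mask with entries 1/25

# Each mask sums to 1, so a homogeneous region keeps its gray level.
print(low3.shape, low3.sum())
print(low5.shape, low5.sum())

# Bank sizes quoted in the text: high-pass filters plus one low-pass filter.
print(4 + 1, 8 + 1)  # 5 9
```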

The second component process of a DWNN neuron is the downsampling, responsible for reducing the image resolution, in order to reduce the data complexity for the next layers of the network.

The downsampling is done by replacing four pixels of the image, arranged in a 2×2 window, with just one. Figure 8 shows a scheme of how the downsampling works. The symbol φ↓2 is used to represent this operation.

Consider any function $\phi_{\downarrow 2} : \mathbb{R}^4 \to \mathbb{R}$, where $\phi_{\downarrow 2}(\cdot)$ can be the maximum (returns the largest of the input values), the minimum (returns the smallest value), the arithmetic mean, or the median of the pixel values, among other possible functions of the type $\mathbb{R}^4 \to \mathbb{R}$. Then each of the pixels a′, b′, c′, and d′ identified in Fig. 8 takes the value of $\phi_{\downarrow 2}$ applied to the four pixels of its corresponding 2×2 window.

In the case shown in Fig. 8, a 10×10 image (100 pixels) yields a 5×5 image (25 pixels), reducing the image resolution by a factor of 4. It is also possible to see in Fig. 8 that the 2×2 pooling window moves by skipping two pixels, which means that the pooling is performed using a stride of 2 (Albawi et al. 2017).
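The downsampling step can be sketched in a few lines of NumPy. The function name `downsample` and the `reducer` parameter are hypothetical names used only for this illustration; the reducer may be any function that collapses four values into one, and the image is assumed to have even side lengths.

```python
import numpy as np

def downsample(image, reducer=np.max):
    """Replace each non-overlapping 2x2 window (stride 2) by one value."""
    x = np.asarray(image, dtype=float)
    h, w = x.shape
    if h % 2 or w % 2:
        raise ValueError("image sides must be even")
    # Regroup so that the last axis holds the four pixels of each window.
    windows = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    windows = windows.reshape(h // 2, w // 2, 4)
    return reducer(windows, axis=-1)

image = np.arange(100, dtype=float).reshape(10, 10)  # 10x10, 100 pixels
for reducer in (np.max, np.min, np.mean, np.median):
    out = downsample(image, reducer)
    print(reducer.__name__, out.shape, out[0, 0])
```

For the top-left window, which holds the values 0, 1, 10, and 11, the maximum is 11, the minimum is 0, and both the mean and the median are 5.5.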

The use of downsampling has an interesting feature, as it reduces memory consumption during the execution of the algorithm. Consider, for example, an image with 4096 pixels submitted to the 8-neighborhood orthonormal filter bank (referring to the first intermediate layer of the DWNN). Without downsampling, n = 5 images would be obtained, each containing the same number of pixels as the input. This results in an increase in the amount of data by a factor of five. When considering more layers of the DWNN, the amount of data would grow exponentially, by a factor of $n^m$.

Such inconvenience could make the process unfeasible. However, when downsampling is applied, while the number of images increases by a factor of $n^m$, the size of each resulting image is reduced relative to the input image by a factor of $4^m$. An especially interesting situation occurs with an 8-neighborhood, for which the filter bank has a total of 5 filters: it is possible to combine the diagonal filters ($g_3$ and $g_4$ in Fig. 6b) in order to work with a bank containing 4 filters. In this special case, after m layers of DWNN neurons, $4^m$ images are obtained, each reduced by a factor of $4^m$ with respect to the input image, so the amount of data remains constant throughout the execution of the algorithm. Applying this approach to the 4096-pixel image of the previous example yields 4 images of 1024 pixels after the first layer of the DWNN.
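The arithmetic behind this argument can be checked with a short script. The helper name `data_volume` is an assumption made for this sketch, and the pixel count is assumed to be divisible by $4^m$.

```python
def data_volume(pixels, n_filters, layers):
    """Return (number of images, pixels per image, total pixels) after `layers` layers."""
    n_images = n_filters ** layers        # each layer multiplies the image count by n
    per_image = pixels // 4 ** layers     # each layer divides the image size by 4
    return n_images, per_image, n_images * per_image

for n in (5, 4):
    for m in (1, 2, 3):
        print(f"n={n}, m={m}:", data_volume(4096, n, m))
```

With n = 4 the total stays at 4096 pixels for every m, while with n = 5 it grows by a factor of 5/4 per layer (5120, 6400, 8000 pixels for m = 1, 2, 3).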

Considering the schematic of a DWNN neuron shown in Fig. 4 and the input X and output Y images for a given neuron, Y is related to X as follows:

$$Y = \phi_{\downarrow 2}(g_i * X)$$

where the ∗ symbol represents the convolution operation.
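A single neuron, that is, filtering followed by 2×2 downsampling, could be sketched as follows. The function names `convolve_same` and `neuron` are hypothetical, and the zero padding at the image border is an assumption of this sketch, since the text does not specify border handling. Masks are assumed to have odd side lengths and images even side lengths.

```python
import numpy as np

def convolve_same(image, kernel):
    """2-D convolution with zero padding; the output keeps the input's shape."""
    x = np.asarray(image, dtype=float)
    k = np.asarray(kernel, dtype=float)[::-1, ::-1]  # flip: convolution, not correlation
    kh, kw = k.shape
    padded = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros_like(x)
    for di in range(kh):
        for dj in range(kw):
            out += k[di, dj] * padded[di:di + x.shape[0], dj:dj + x.shape[1]]
    return out

def neuron(image, kernel, reducer=np.max):
    """Filter the image, then reduce every 2x2 window (stride 2) to one value."""
    filtered = convolve_same(image, kernel)
    h, w = filtered.shape
    windows = filtered.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    return reducer(windows.reshape(h // 2, w // 2, 4), axis=-1)

X = np.ones((8, 8))
low_pass = np.full((3, 3), 1.0 / 9.0)  # averaging mask for the 8-neighborhood
Y = neuron(X, low_pass)
print(X.shape, "->", Y.shape)  # (8, 8) -> (4, 4)
```

Swapping `reducer` for `np.min`, `np.mean`, or `np.median` gives the other downsampling choices mentioned in the text.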

The output layer of the DWNN is formed by the synthesis blocks. Each block extracts, from each image resulting from the intermediate layers, a single value that represents it, as shown in Fig. 9.

In the synthesis blocks, each of the $n^m$ reduced images is submitted to a function ϕ : S → ℝ. Among other possibilities, ϕ(·) can be the maximum, minimum, mean, or median. Its goal is, therefore, to replace the entire image with a single value.

Thus, considering $f(\vec{u}) \in \mathbb{R}$ the value of pixel $\vec{u}$, the term $x_i \in \mathbb{R}$ is obtained by applying ϕ to the pixel values of the i-th reduced image.

Figure 9 shows a schematic of the synthesis block. At the end of the DWNN, when the synthesis block is applied to all images resulting from the m intermediate layers, we have a set of terms $x_i$ ($1 \le i \le n^m$). This set can be understood as the attributes of the input image. Applying the DWNN to a set of images yields a database that can be used as input to a classifier.
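The whole pipeline, intermediate layers followed by synthesis blocks, can be sketched as below. This is an illustration under stated assumptions and not the authors' implementation: the four 3×3 kernels in `BANK` are placeholders (one low-pass and three high-pass), the borders are zero padded, pooling uses the maximum, and the names `neuron` and `dwnn_features` are hypothetical.

```python
import numpy as np

# Placeholder 3x3 bank (NOT the paper's filters): one low-pass, three high-pass.
BANK = [
    np.full((3, 3), 1 / 9),
    np.array([[-1, -1, -1], [2, 2, 2], [-1, -1, -1]]) / 6,
    np.array([[-1, 2, -1], [-1, 2, -1], [-1, 2, -1]]) / 6,
    np.array([[2, -1, -1], [-1, 2, -1], [-1, -1, 2]]) / 6,
]

def neuron(x, kernel):
    """Filter x with a 3x3 kernel (zero padding), then 2x2 max pooling, stride 2."""
    h, w = x.shape
    padded = np.pad(x, 1)
    flipped = kernel[::-1, ::-1]
    filtered = sum(flipped[i, j] * padded[i:i + h, j:j + w]
                   for i in range(3) for j in range(3))
    h2, w2 = h - h % 2, w - w % 2
    return filtered[:h2, :w2].reshape(h2 // 2, 2, w2 // 2, 2).max(axis=(1, 3))

def dwnn_features(image, bank=BANK, layers=3, synthesis=np.min):
    """Return the n**layers attributes of `image` (n = number of filters)."""
    images = [np.asarray(image, dtype=float)]
    for _ in range(layers):
        # Every image of the previous layer goes through every filter.
        images = [neuron(x, g) for x in images for g in bank]
    # Synthesis block: replace each reduced image with a single value.
    return np.array([synthesis(x) for x in images])

rng = np.random.default_rng(0)
features = dwnn_features(rng.random((64, 64)))
print(features.shape)  # (64,)
```

With 4 filters and 3 layers the sketch returns $4^3 = 64$ attributes; stacking one such vector per image gives the table that is passed to a classifier.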

Feature extraction

For the extraction of attributes, we used the deep-wavelet neural network (DWNN) method (Barbosa et al. 2020). DWNN is a deep, untrained network for attribute extraction, inspired by Mallat’s algorithm for multilevel wavelet decomposition (Mallat 1989). This algorithm, which emerged as a strategy for implementing the discrete wavelet transform, consists of obtaining approximations and details of an image. Approximations are the low-frequency representation of the image, preserving its general trend while smoothing out the abrupt transitions present in it. The details show the image’s high-frequency components, highlighting regions of discontinuity such as edges and blemishes.

In Wavelet decomposition, low-pass and high-pass filters are applied to an image to form a set of other images that are smaller in size than the original. From low-pass filtering, approximations are obtained and details are acquired through high-pass filters. This approach allows the analysis of images in the spatial and frequency domains and, therefore, it has been widely used in pattern recognition. DWNN uses a process similar to this one, in which a neuron is formed by combining a given filter with a process of image size reduction called downsampling.

Thus, considering a bank with n filters, an input image will be submitted to n neurons that form the first layer of the neural network. In the second layer, each of the images resulting from the first will be individually submitted to the same bank of n filters and to downsampling, in the same way as was done for the input image. The process is repeated for all layers of the network, according to the quantity established by the user and which determines the depth of the network.

Finally, in the DWNN output layer, we have the synthesis blocks (SB), which are responsible for extracting information from the images resulting from the entire process. In these blocks, each of the $n^m$ reduced images is submitted to a maximum, average, minimum, median, or mode function. Thus, each image is replaced by a single value.

In this work, we extracted features using a 5-layer DWNN with a filter bank of four 8-neighborhood filters, which results in $4^5 = 1024$ attributes for each input image. In the synthesis block we used the minimum function to calculate the output of the network, as it gave better results than the maximum, mean, and median. Results with maximum, mean, and median synthesis blocks did not reach the minimum accuracy of 70%, which is the empirically acceptable threshold for the diagnostic support system to outperform a human mastologist analyzing a mammographic image. For this reason, these results are not presented in this work.

Classification

After the attributes were extracted, the resulting set was submitted to a classification step. Tests were carried out with a Bayesian network (Bayes Net), naive Bayes classifier (Naive Bayes), multilayer perceptron (MLP), support vector machine (SVM), and extreme learning machine (ELM), in addition to tree-based classifiers: J48, random tree, and random forest. The settings for each classifier are presented in Table 2.

All tests were performed using the 10-fold cross-validation method (Jung and Hu 2015). Each configuration was tested 30 times, in order to obtain statistical information for comparing the methods.
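The evaluation protocol can be sketched as an index generator. The sketch reads "tested 30 times" as 30 repetitions of 10-fold cross-validation, each with a fresh shuffle, which is an assumption; the function name `repeated_kfold` and the seed are hypothetical, and no stratification by class is applied.

```python
import random

def repeated_kfold(n_samples, k=10, repeats=30, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for repeated k-fold cross-validation."""
    rng = random.Random(seed)
    for r in range(repeats):
        order = list(range(n_samples))
        rng.shuffle(order)
        # Fold f takes every k-th sample of the shuffled order, starting at f.
        folds = [order[f::k] for f in range(k)]
        for f, test_idx in enumerate(folds):
            train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
            yield r, f, train_idx, test_idx

splits = list(repeated_kfold(n_samples=100))
print(len(splits))  # 300
```

Each of the 300 splits would be used to train a classifier on `train_idx` and score it on `test_idx`; the spread of the resulting scores is what allows the methods to be compared statistically.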

To assess the performance of the classifiers, the accuracy and the kappa index were used. The accuracy (Ac) corresponds to the percentage of correctly classified instances and can vary from 0 to 100% (Krummenauer and Doll 2000). This metric is calculated from Equation 5, where $n_{correct}$ is the number of correctly classified instances and $n_{total}$ is the total number of instances.

$$Ac = \frac{n_{correct}}{n_{total}} \times 100\% \qquad (5)$$

The kappa index (κ) is a statistical metric to assess the agreement between the obtained and expected results (Landis and Koch 1977; McHugh 2012). The kappa index can vary in the range [−1, 1], where values less than or equal to 0 (zero) indicate no agreement between the results, values above 0.8 demonstrate high agreement, and intermediate values represent low or moderate agreement. Kappa provides information about the degree of reproducibility of the method and is calculated as indicated in Equation 6, where $P_{calculated}$ represents the observed value and $P_{expected}$ is the expected value.

$$\kappa = \frac{P_{calculated} - P_{expected}}{1 - P_{expected}} \qquad (6)$$
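Both metrics can be computed directly from a confusion matrix. The sketch below uses the standard definition of Cohen's kappa, with the expected agreement taken from the row and column totals; the function name and the 2×2 matrix are made up for illustration and are not results from this work.

```python
def accuracy_and_kappa(cm):
    """Return (accuracy in %, kappa) for a square confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    # Observed agreement: fraction of instances on the diagonal.
    observed = sum(cm[i][i] for i in range(n)) / total
    # Expected agreement by chance, from row and column totals.
    expected = sum(
        sum(cm[i]) * sum(row[i] for row in cm) for i in range(n)
    ) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return 100 * observed, kappa

toy = [[45, 5], [10, 40]]  # illustrative values only
acc, kappa = accuracy_and_kappa(toy)
print(round(acc, 2), round(kappa, 2))  # 85.0 0.7
```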

Results

In the IRMA database, the images are separated by the type of tissue predominant in the breast, which can be adipose, fibrous, heterogeneously dense, or extremely dense. Furthermore, for each of these tissues, the images are classified according to the BI-RADS (Breast Imaging Reporting and Data System) assessment. Thus, there are images of normal breasts, of breasts with a benign lesion, and of breasts with a malignant lesion. Initially, only images of breasts classified as adipose were analyzed, as this is the predominant breast constitution in women who undergo mammography. Then, the classification performance was also evaluated using images of all possible types of tissues, with the aim of increasing the variability of breast constitutions.

Figure 10 shows the performance of the different methods for classifying the highlighted regions of interest in adipose-type breasts into the normal, benign or malignant lesion classes. Figure 11 presents the results of accuracy and kappa index for the dataset of mammography images of adipose, dense and fibrous breasts analyzed together. For the extraction of attributes, the DWNN with 5 levels and a minimum function for the synthesis block was used.

From these results, we observed that the best classifications were obtained with SVM. Thus, Table 3 shows the confusion matrix obtained with this method during image classification. The matrix shows that most of the confusion occurs between the benign and malignant classes. There is no confusion between these classes and the normal/healthy class. Furthermore, Table 4 shows the results of sensitivity, specificity, and AUC of this model.

Discussion

The use of DWNN in the images with adipose breasts made the performance of the classification methods very different from each other: the SVM clearly stood out, reaching accuracies above 96%, with a kappa of 0.95. This method was followed by random forest, with accuracy close to 85% and kappa around 0.70. Bayesian networks achieved intermediate performances. However, we obtained less satisfactory results using decision trees (random tree and J48), ELM and MLP. The latter stood out negatively due to the large dispersion of data, greater than that of all other methods. Basically, the same pattern was observed between the accuracy results, in Fig. 10a, and the kappa index, in Fig. 10b.

The performance of the classifiers was also verified for the classification of regions of interest in breasts with all possible tissue constitutions (adipose, fibrous, heterogeneously dense or extremely dense). As in the previous case, the aim is to automatically identify whether the image belongs to the normal class, benign lesion or malignant lesion. The DWNN configuration for attribute extraction was similar to the one used previously, with 5 levels and a minimum function for the synthesis block.

Similarly to what was observed in Fig. 10, the use of DWNN in breasts with different tissue constitutions (see Fig. 11) made the performance of the classification methods very different from each other: SVM clearly stood out, this time reaching 94% accuracy, with a kappa of 0.91. The Bayesian methods, J48, ELM, and random forest showed similar performances, with accuracies between 75 and 85%. The random tree algorithm and the MLP presented the worst results, and the MLP had the largest dispersion among the evaluated methods. The results expressed in Fig. 11 demonstrate that the method is robust, even after combining the different types of tissues, which makes the classification problem more complex.

Tables 3 and 4 show the confusion matrix and the values of sensitivity, specificity, and area under the ROC curve, respectively, for a single run of the best model, i.e. DWNN with 5 layers and SVM with second-degree polynomial kernel. These results correspond to a test with 50% of the database. The confusion matrix (see Table 3) shows that mammogram patches from healthy patients were not confused with either of the other two classes. However, 8 of the 466 malignant images were classified as benign, while 6 of the 466 benign images were classified as malignant. According to Table 4, the sensitivity, specificity, and AUC results are, in this order: 100%, 100%, and 100% for normal; 98.71%, 99.14%, and 98.93% for benign; and 98.28%, 99.36%, and 98.82% for malignant. The weighted values of sensitivity, specificity, and AUC are then 99%, 88.61%, and 93.80%, respectively. These results are comparable or superior to those of the state of the art, such as Becker et al. (2017), Magna et al. (2016), Rodriguez-Ruiz et al. (2019), and Wang et al. (2017), also obtained with the IRMA database.
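The per-class figures quoted above can be reproduced from the misclassification counts with a one-vs-rest calculation. In the sketch below, the benign and malignant rows follow the counts given in the text; the number of normal patches is not stated in this section, so the value 466 is an assumption, chosen because it reproduces the reported specificities. The function name is hypothetical.

```python
LABELS = ["normal", "benign", "malignant"]
# Rows: true class; columns: predicted class.
CM = [
    [466, 0, 0],   # normal: count of 466 is an assumption (not given in the text)
    [0, 460, 6],   # benign: 6 of 466 classified as malignant
    [0, 8, 458],   # malignant: 8 of 466 classified as benign
]

def per_class_metrics(cm, labels):
    """Return {label: (sensitivity %, specificity %, mean of the two)}."""
    total = sum(map(sum, cm))
    metrics = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp
        fp = sum(row[k] for row in cm) - tp
        tn = total - tp - fn - fp
        sens = 100 * tp / (tp + fn)
        spec = 100 * tn / (tn + fp)
        metrics[label] = (sens, spec, (sens + spec) / 2)
    return metrics

for label, values in per_class_metrics(CM, LABELS).items():
    print(label, [round(v, 2) for v in values])
```

Under this assumption the sketch gives 98.71% and 99.14% for benign and 98.28% and 99.36% for malignant. The third value, the mean of sensitivity and specificity, coincides to two decimals with the AUC figures quoted above (98.93% and 98.82%).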

Conclusion

This study presented a new architecture of deep artificial neural network: the deep-wavelet neural network, DWNN. We presented a hybrid classifier architecture aimed at recognizing patches from mammograms of different tissues to support the diagnosis of malignant and benign breast lesions. DWNN has the advantage of not needing any training or pre-adjustment of parameters other than the number of layers. The proposed hybrid architecture combines a DWNN for extracting features from mammography patches with classic machine learning methods, seeking to solve the challenges associated with the interpretation of mammography images, especially those related to denser tissues, which are commonly found in younger patients, under the age of 45. The proposed method obtained accuracies above 90%, with a kappa index around 0.90, in the classification of mammographic images with lesions in different breast constitutions. We also observed, in most cases, low variability of results, which implies greater reliability. A clear exception to this finding occurred with the multilayer perceptron, which showed high data dispersion in all test scenarios.

We also verified that the support vector machine outperformed the other classification algorithms. Good results were also obtained with random forest and extreme learning machine, indicating that the problem can be generalized, but often in a non-linear way. The good performance of these methods helps explain the low results obtained with decision trees (J48 and random tree): individual decision trees commonly achieve high results only when the database is very specific. The intermediate performances of Bayesian methods point to a certain independence between the attributes extracted by the DWNN.

Therefore, future works may use techniques to select the most relevant attributes to represent the data sets, eliminating redundant or non-relevant information and, therefore, optimizing the processing. In addition, other classification techniques using deep learning and unsupervised learning can be tested and combined with the technique proposed in this work, in the form of ensembles, seeking to achieve higher values of sensitivity and specificity and, therefore, even greater clinical applicability.

Finally, the results obtained reinforce the power of computational intelligence to solve complex and non-linear problems, recurrent characteristics in issues associated with biomedical applications. Deep learning techniques, such as DWNN, explored here, have been shown to be effective for such applications, as they increase the complexity of the decision frontier for solving the classification problem. As demonstrated by the results obtained, the method proved to be very promising for the classification of breast lesions in mammography images. Our approach is especially relevant because it presents interesting results even when analyzing images of breasts with different densities: from adipose breasts, in the case of patients over 45 years of age, to extremely dense breasts, in the case of adolescents and young adults. Since breast composition is directly associated with the patient’s age and clinical variability, the approach emerges as a possible alternative to support the process of diagnosing breast lesions.

References

Albawi S, Mohammed TA, Al-Zawi S. Understanding of a convolutional neural network. In: 2017 International conference on engineering and technology (ICET); 2017. p. 1–6.

Azevedo WW, Lima SM, Fernandes IM, Rocha AD, Cordeiro FR, da Silva-Filho AG, dos Santos WP. Fuzzy morphological extreme learning machines to detect and classify masses in mammograms. In: Fuzzy systems (FUZZ-IEEE), 2015 IEEE international conference; 2015a. p. 1–8.

Azevedo W, Lima S, Fernandes I, Rocha A, Cordeiro F, Silva-Filho A, Santos W. Morphological extreme learning machines applied to detect and classify masses in mammograms, 2015 International joint conference on neural networks (IJCNN). Killarney; 2015b. p. 1–8.

Bandyopadhyay SK. Survey on segmentation methods for locating masses in a mammogram image. Int J Com Appl. 2010;9(11):25–8.

Barbosa VAF, de Santana MA, Andrade MKS, de Lima RCF, dos Santos WP. Deep-wavelet neural networks for breast cancer early diagnosis using mammary termographies. In: Das H, Pradhan C, Dey N, editors. Deep learning for data analytics. Academic Press; 2020. p. 99–124. https://doi.org/10.1016/B978-0-12-819764-6.00007-7.

Becker AS, Marcon M, Ghafoor S, Wurnig MC, Frauenfelder T, Boss A. Deep learning in mammography: diagnostic accuracy of a multipurpose image analysis software in the detection of breast cancer. Invest Radiol. 2017;52(7):434–40.

Bidard FC, Michiels S, Riethdorf S, Mueller V, Esserman LJ, Lucci A, Pantel K. Circulating tumor cells in breast cancer patients treated by neoadjuvant chemotherapy: a meta-analysis. JNCI: J Nati Cancer Ins. 2018;110(6):560–7.

Boquete L, Ortega S, Miguel-Jiménez JM, Rodríguez-Ascariz JM, Blanco R. Automated detection of breast cancer in thermal infrared images, based on independent component analysis. J Med Syst. 2012;36(1):103–11.

Boujelben A, Chaabani AC, Tmar H, Abid M. Feature extraction from contours shape for tumor analyzing in mammographic images. In: Digital image computing: Techniques and applications, 2009. Dicta’09; 2009. p. 395–9.

Cordeiro F, Santos W, Silva-Filho A. Segmentation of mammography by applying growcut for mass detection. Stud Health Technol Informatics. 2013;192:87.

Cordeiro FR, Santos WP, Silva-Filho AG. An adaptive semisupervised fuzzy growcut algorithm to segment masses of regions of interest of mammographic images. Applied Soft Computing. 2016a;46:613–28.

Cordeiro FR, Santos WP, Silva-Filho AG. A semi-supervised fuzzy growcut algorithm to segment and classify regions of interest of mammographic images. Expert Syst Appl. 2016b;65:116–26.

Cordeiro FR, Bezerra KF, dos Santos WP. Random walker with fuzzy initialization applied to segment masses in mammography images. In: 2017 IEEE 30th international symposium on computer-based medical systems (CBMS). Thessaloniki; 2017a. p. 156–61.

Cordeiro FR, Santos W, Silva-Filho AG. Analysis of supervised and semi-supervised growcut applied to segmentation of masses in mammography images. Com Methods Biomech Biomed Eng Imaging Vis. 2017b;5(4):297–315.

Cruz T, Cruz T, Santos W. Detection and classification of lesions in mammographies using neural networks and morphological wavelets. IEEE Latin America Transactions. 2018;16(3):926–32.

D’Orsi C, Sickles E, Mendelson E, Morris E. ACR BI-RADS atlas, breast imaging reporting and data system. 3rd ed. Reston, VA: Am Coll Radiol; 1998.

de Lima SM, da Silva-Filho AG, dos Santos WP. Detection and classification of masses in mammographic images in a multi-kernel approach. Com Methods Programs Biomed. 2016;134:11–29.

de Vasconcelos J, dos Santos W, de Lima R. Analysis of methods of classification of breast thermographic images to determine their viability in the early breast cancer detection. IEEE Latin Am Trans. 2018;16(6):1631–7.

Deserno TM, Soiron M, de Oliveira JE, Araújo AA. Computer-aided diagnostics of screening mammography using content-based image retrieval. Proc soc photo-optical instrum eng (SPIE). 2012;8315:831527–7.

Fang J, Fu H, Yang G, Hsieh CJ. RedSync: reducing synchronization bandwidth for distributed deep learning training system. J Parallel Distrib Comput. 2019;133:30–9.

Gonçalves JG, Siqueira ADSE, de Almeira Rocha IG, de Lima EFF, da Silva AL, da Silva BO, Land MGP. Evolução histórica das políticas para o controle do câncer de mama no Brasil. DIVERSITATES International Journal. 2016;8(1).

Hamidinekoo A, Denton E, Rampun A, Honnor K, Zwiggelaar R. Deep learning in mammography and breast histology, an overview and future trends. Med Image Anal. 2018;47:45–67.

Heath M, Bowyer K, Kopans D, Moore R, Kegelmeyer WP. The digital database for screening mammography. In: Proceedings of the 5th international workshop on digital mammography; 2000. p. 212–8.

Hua J, Zeng L, Li G, Ju Z. Learning for a robot: deep reinforcement learning, imitation learning, transfer learning. Sensors. 2021;21(4):1278.

Jung Y, Hu J. A K-fold averaging cross-validation procedure. J Nonparametric Stat. 2015;27(2):167–79.

Krummenauer F, Doll G. Statistical methods for the comparison of measurements derived from orthodontic imaging. Eur J Orthod. 2000;22(3):257–69.

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74.

Lew MS, Sebe N, Djeraba C, Jain R. Content-based multimedia information retrieval: state of the art and challenges. ACM Trans Multimedia Comput. Commun Appl. 2006;2(1):1–19.

Lima S, Azevedo W, Cordeiro F, Silva-Filho A, Santos W. Feature extraction employing fuzzy-morphological decomposition for detection and classification of mass on mammograms. Annu int conf IEEE eng med biol soc IEEE eng med biol soc Annu conf. 2015;2015:801–4.

Magna G, Casti P, Jayaraman SV, Salmeri M, Mencattini A, Martinelli E, Di Natale C. Identification of mammography anomalies for breast cancer detection by an ensemble of classification models based on artificial immune system. Knowl-Based Syst. 2016;101:60–70.

Maitra IK, Bandyopadhyay SK. Identification of abnormal masses in digital mammogram using statistical decision making. Hybrid Intelligence for Image Analysis and Understanding. 2017:339–68.

Mallat SG. Multifrequency channel decompositions of images and wavelet models. IEEE Trans Acoust Speech Signal Process. 1989;37(12):2091–110.

Mascaro AA, Mello CA, Santos WP, Cavalcanti GD. Mammographic images segmentation using texture descriptors. In: 2009 Annual international conference of the IEEE engineering in medicine and biology society; 2009. p. 3653–3.

McHugh ML. Interrater reliability: the kappa statistic. Biochemia Medica. 2012;22(3):276–82.

Mittal S, Vaishay S. A survey of techniques for optimizing deep learning on GPUs. J Syst Archit. 2019;99:101635.

Nordin ZM, Isa NAM, Zamli KZ, Ngah UK, Aziz ME. Semiautomated region of interest selection tool for mammographic image. Int Symp Inf Technol. 2008;1:1–6.

Pilevarzadeh M, Amirshahi M, Afsargharehbagh R, Rafiemanesh H, Hashemi SM, Balouchi A. Global prevalence of depression among breast cancer patients: a systematic review and meta-analysis. Breast Cancer Res Treat. 2019;176(3):519–33.

Rodrigues AL, de Santana MA, Azevedo WW, Bezerra RS, Barbosa VA, de Lima RC, dos Santos WP. Identification of mammary lesions in thermographic images: feature selection study using genetic algorithms and particle swarm optimization. Res Biomed Eng. 2019;35(3):213–22.

Rodriguez-Ruiz A, Lång K, Gubern-Merida A, Broeders M, Gennaro G, Clauser P, Sechopoulos I. Stand-alone artificial intelligence for breast cancer detection in mammography: comparison with 101 radiologists. JNCI: J National Cancer Ins. 2019;111(9):916–22.

Salmeri M, Mencattini A, Rabottino G, Accattatis A, Lojacono R. Assisted breast cancer diagnosis environment: a tool for DICOM mammographic images analysis. In: 2009 IEEE international workshop on medical measurements and applications; 2009. p. 160–5.

Santos WP, Souza RE, Silva AFD, Portela NM, Santos-Filho PB. Análise multiespectral de imagens cerebrais de ressonância magnética ponderadas em difusão usando lógica nebulosa e redes neurais para avaliação de danos causados pela doença de Alzheimer. In: Xi congresso brasileiro de física médica. Brasil: Ribeirão Preto; 2006a. p. 1.

Santos WP, Souza RE, Silva AFD, Portela NM, Santos-Filho PB. Avaliação da doença de Alzheimer por análise de imagens de RMN utilizando redes MLP e máquinas de comitê. In: XX congresso brasileiro de engenharia biomédica. São Pedro, Brasil; 2006b. p. 1–4.

Santos WP, Souza RE, Santos-Filho PB. Evaluation of Alzheimer’s disease by analysis of MR images using multilayer perceptrons and Kohonen SOM classifiers as an alternative to the ADC maps. In: 29th annual international conference of the IEEE engineering in medicine and biology society. France: Lyon; 2007. p. 2118–2121.

Santos WP, Assis FM, Souza RE, Albuquerque ACTC, Simas MLB. A monospectral approach for fMRI analysis using Kohonen self-organized networks and objective dialectical classifiers. Int J Innov Com Appl. 2008a;1(4):260–73.

Santos WP, Assis FM, Souza RE, Santos-Filho PB. Evaluation of Alzheimer’s disease by analysis of MR images using objective dialectical classifiers as an alternative to ADC maps. In: 30th Annual international conference of the IEEE engineering in medicine and biology society. Vancouver, Canada; 2008b. p. 5506–9.

Santos WP, Souza RE, Santos-Filho PB, Lima-Neto FB, Assis FM. A dialectical approach for classification of DW-MR Alzheimer’s images. In: IEEE world congress on computational intelligence (WCCI 2008). Hong Kong, China; 2008c. p. 1728–35.

Santos WP, Souza RE, Silva AFD, Santos-Filho PB. Evaluation of Alzheimer’s disease by analysis of MR images using multilayer perceptrons and committee machines. Comput Med Imaging Graph. 2008d;32(1):17–21.

Santos WP, Assis FM, Santos-Filho RESPB, Lima-Neto FB. Dialectical multispectral classification of diffusion-weighted magnetic resonance images as an alternative to apparent diffusion coefficients maps to perform anatomical analysis. Comput Med Imaging Graphi. 2009a;33(6):442–60.

Santos WP, Assis FM, Souza RE. MRI Segmentation using dialectical optimization. In: 31st Annual international conference of the IEEE engineering in medicine and biology society. Minneapolis, USA; 2009b. p. 5752–5.

Santos WP, Assis FM, Souza RE, Mendes PB, Monteiro HSS, Alves HD. A dialectical method to classify Alzheimer’s magnetic resonance images. In: Santos WP, editor. Evolutionary computation. Vukovar: InTech; 2009c. p. 473–86.

Santos WP, Assis FM, Souza RE, Mendes PB, Monteiro HSS, Alves HD. Dialectical non-supervised image classification. In: IEEE congress on evolutionary computation (CEC 2009). Trondheim; 2009d. p. 2480–7.

Santos WP, Assis FM, Souza RE, Santos-Filho PB. Dialectical classification of MR images for the evaluation of Alzheimer’s disease. In: Naik GR, editor. Recent advances in biomedical engineering. Vukovar: InTech; 2009e. p. 241–50.

Schattner E. Correcting a decade of negative news about mammography. Clin Imaging. 2020;60(2):265–70.

Shen L, Margolies LR, Rothstein JH, Fluder E, McBride R, Sieh W. Deep learning to improve breast cancer detection on screening mammography. Sci Rep. 2019;9(1):1–12.

Shrivastava SR, Shrivastava PS, Jegadeesh R. Ensuring early detection of cancer in low-and middle-income nations: World health organization. Arch Med Health Sci. 2017;5(1):141.

Suckling J, Parker J, Dance D, Astley S, Hutt I, Boggis C, Kok S. The mammographic image analysis society digital mammogram database. Digital Mammo. 1994:375–86.

Talo M, Baloglu UB, Yıldırım Ö, Acharya UR. Application of deep transfer learning for automated brain abnormality classification using MR images. Cogn Syst Res. 2019;54:176–88.

Urbain JL. Breast cancer screening, diagnostic accuracy and health care policies. Can Med Assoc J. 2005;172(2):210–1.

Wang J, Yang X, Cai H, Tan W, Jin C, Li L. Discrimination of breast cancer with microcalcifications on mammography by deep learning. Sci Rep. 2016;6(1):1–9.

Wang S, Rao RV, Chen P, Zhang Y, Liu A, Wei L. Abnormal breast detection in mammogram images by feed-forward neural network trained by Jaya algorithm. Fundam Informatica. 2017;151(1–4):191–211.

Wang M, Ji S, Shao G, Zhang J, Zhao K, Wang Z, Wu A. Effect of exosome biomarkers for diagnosis and prognosis of breast cancer patients. Clin Transl Oncol. 2018;20(7):906–11.

Yala A, Lehman C, Schuster T, Portnoi T, Barzilay R. A deep learning mammography-based model for improved breast cancer risk prediction. Radiol. 2019;292(1):60–6.

Yoon JH, Kim EK. Deep learning-based artificial intelligence for mammography. Korean J Radiol. 2021;22(8):1225.

Acknowledgements

We thank Prof. Thomas Deserno for kindly providing the IRMA image dataset.

Funding

This study was funded by the Fundação de Amparo à Ciência e Tecnologia do Estado de Pernambuco (FACEPE) and Conselho Nacional de Desenvolvimento Científico e Tecnológico, CNPq, Brazil.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

de Santana, M.A., dos Santos, W.P. A deep-wavelet neural network to detect and classify lesions in mammographic images. Res. Biomed. Eng. 38, 1051–1066 (2022). https://doi.org/10.1007/s42600-022-00238-8

DOI: https://doi.org/10.1007/s42600-022-00238-8