Abstract

Mixture models occur in numerous settings including random and fixed effects models, clustering, deconvolution, empirical Bayes problems and many others. They are often used to model data originating from a heterogeneous population, consisting of several homogeneous subpopulations, and the problem of finding a good estimator for the number of components in the mixture arises naturally. Estimation of the order of a finite mixture model is a hard statistical task, and multiple techniques have been suggested for solving it. We will concentrate on several methods that have not gained much popularity yet deserve the attention of practitioners. These can be categorized into three groups: tools built upon the determinant of the Hankel matrix of moments of the mixing distribution, minimum distance estimators, likelihood ratio tests. We will address theoretical pillars underlying each of the methods, provide some useful modifications for enhancing their performance and present the results of the comparative numerical study that has been conducted under various scenarios. According to the results, none of the methods proves to be a “magic pill”. The results uncover limitations of the techniques and provide practical hints for choosing the best-suited tool under specific conditions.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Aim and Scope

In multiple applications the collected data may be best described by a multimodal probability mass or density function meaning the empirical distribution contains several regions with high probability mass. Mixture models are a powerful mathematical tool that allows for characterizing such heterogeneous populations, which are believed to consist of multiple homogeneous subpopulations. A great multitude of statistical problems can be cast into the mixture model framework: linear inverse and deconvolution problems, random effects models, repeated measures and measurements error models, empirical and hierarchical Bayes, latent class and latent trait models, clustering, robustness and contamination models, hidden mixture structures, random coefficient regression models and many others [43]. A very important class of mixture models is the class of finite mixtures. These models, which assume a finite number of components, have proved to be very useful and flexible enough to model a vast range of random phenomena thus receiving much attention from both theoretical and practical viewpoints.

In some mixture models applications there is no uncertainty about the number of components in the mixture. This is the case where the components correspond to a well-known existing partition of the population. However, on many occasions this situation is far from realistic and practitioners encounter either the lack or complete absence of a priori information about the actual number of mixture components. In such cases, this number has to be inferred from the data along with the parameters of the component densities. Correct identification of mixture complexity may be of primary interest in itself or may be followed by efficient estimation of all parameters. Due to its practical importance the problem of selecting the optimal mixture complexity has been addressed in numerous statistical publications, and we will point out many seminal works as we proceed.

The objectives of the present survey are to

-

provide the theoretical background of the reviewed methods for estimating the complexity of a finite mixture;

-

assess the performance of these methods under various scenarios;

-

suggest modifications that enhance the performance of some of the methods in particular settings;

-

identify universal methods that provide stable and accurate results throughout most of the scenarios for different distribution families or single out scenarios under which certain approaches may be preferred to others.

The number of methods devoted to estimating the true number of components in a mixture is undoubtedly too large to be thoroughly described in a single survey. Thus, we restrict attention to a selected subset of approaches that have the merit of being applicable in very general settings; i.e., for wide classes of finite mixtures of distributions. One of the main goals of this survey is to uncover the extent to which each of the methods is successful in consistently estimating the true complexity for various sample sizes. The estimation techniques reviewed below can be split into three main categories:

-

1.

methods built upon the determinants of the Hankel matrix of moments of the mixing distribution;

-

2.

methods based on penalized minimum distance between the unknown probability density and a consistent estimator thereof. The distances considered in this survey are the Hellinger as well as the \(L_2\)-distance;

-

3.

likelihood ratio test (LRT) - based techniques.

Some of the key criteria we based our choice of the techniques upon were:

-

a)

a cohesive mathematical theory behind the method, including the asymptotically consistency;

-

b)

infrequent reference in the literature as well as relatively rare usage in practice despite of the coherent theoretical base;

-

c)

feasibility of implementation using any programming language, e.g. Python, R, Julia, Matlab, etc.

Pseudocodes for the algorithms discussed in this work are given in Appendix D in the supplementary materials. For completeness, other interesting methods for estimating the complexity of a finite mixture are mentioned in Sect. 8.

Although not strictly a part of a survey, the performance enhancement such as the one we bring to some of the methods through specific modifications is almost inevitable. In fact, the original version of some of the approaches reviewed here cannot be of real practical value without any further adjustment. These modifications, which will be described in separate subsections, include resorting to some judicious scaling in the case of the Hankel-based-methods or using bootstrap instead of penalization for the approaches based on minimum distance estimation. In Sect. 6, we report the results of an extensive numerical study which we carried out for different mixture distributions and various number of components with the goal of comparing the performances of the techniques reviewed in this survey.

Several examples involving the estimation of mixture complexity for real data sets using the discussed methods are presented. The data sets were taken from various fields such as geology, insurance and lexicography.

1.2 Organization of the Paper

The paper is organized as follows:

-

Section 2 provides some basic background on mixture models, mentions major works on mixture model estimation techniques and gives a brief overview of these approaches.

-

Section 3 outlines the theoretical foundation of the original method based on the determinants of the Hankel matrix of moments of the mixing distribution as proposed in [21]. In the same section, two modifications of this approach allowing for obtaining improved results, are presented. The section also gives a concise description of a neural network extension of the Hankel matrix approach, proposing possible working configurations of a multilayer perceptron for the mixtures of Gaussian, Poisson and geometric densities.

-

Section 4 describes methods based on minimum distance estimation. The section relies to a very large extent on the works [69, 74] and [20]. We re-examine the estimation techniques that use the Hellinger and the \(L_2\) distances when combined with two different penalties. In the same section, motivated by the idea of enhancing the original method, we propose a modification based on a bootstrap procedure instead of penalization.

-

Section 5 presents the estimation approach based on the LRT combined with a bootstrap procedure as described in [38].

-

Section 6 comprises the results of a comparative numerical study where all of the above mentioned techniques are tested on simulated data under various scenarios. Furthermore, the same section contains a discussion of the settings in which certain methods can be favored as they seem to outperform their counterparts.

-

Section 7 encompasses several real data sets that were analysed using the studied approaches and compares the obtained results.

-

Section 8 mentions a number of papers where other techniques for mixture complexity estimation, not addressed in the present survey, are considered.

-

Section 9 summarizes the findings and outlines the limitations of all methods reviewed in this survey.

-

Appendices A, B, C, D presented as supplementary materials to this paper include additional examples, tables with detailed simulation results, proofs of the theoretical results that are relevant for the methods described in the manuscript and pseudocodes clarifying and simplifying the implementation of the discussed techniques.

2 Finite Mixture Models: General Scope

2.1 Notation and Basic Definitions

We start with defining the terminology that will be used throughout the survey. In the sequel, a real vector of dimension r will be denoted \(\varvec{v}_r\) and its components by \(v_1, \ldots , v_r\). A class of real vectors of dimension r will also bear the subscript r in its notation. When manipulating several vectors of dimension r we will index them as \(\varvec{v}_{r,1}, \varvec{v}_{r, 2}, \ldots \). A random sample of i.i.d. random variables are going to be denoted \((X_1, \ldots , X_n)\). Also, a sequence of random variables (for example converging weakly) will be denoted for example by \(Y^{(n)}\). A class of densities which depend on some vector of parameters of dimension r will not necessarily bear the subscript r.

Suppose that some population of interest, represented abstractly by a random variable X, consists of a finite number \(m \in {\mathbb {N}} \) of subpopulations. Each subpopulation is generated by some random process that can be modeled by an individual or component distribution, e.g. normal, exponential, Poisson, geometric, etc. We will assume that each of the component distributions admits a density with respect to some common dominating measure \(\mu \). Furthermore, the component density is assumed to be parametrized through some unknown vector \(\varvec{\phi }_d \in \varvec{\Phi } \subseteq {\mathbb {R}}^d, \; d\ge 1\). To keep the manuscript to a reasonable length, we confine our attention in this survey to the one-dimensional case; i.e. the random variable \(X \in {\mathbb {R}}\). In the sequel, the dominating measure \(\mu \) is either the Lebesgue measure in case the distribution of the components is absolutely continuous, or the counting measure in case this distribution is discrete. In the latter case, all the examples considered here treat distributions that are supported on the set of non-negative integers. Let \({\mathcal {F}} = \big \{f_{\varvec{\phi }_d}: \varvec{\phi }_d \in \varvec{\Phi } \big \}\) be the family of densities which the components belong to. If \({\mathcal {X}}\) is the support of X, then the distribution of X is said to have a m-component mixture distribution with density

for all \(x \in {\mathcal {X}}\), where

\({\mathcal {S}}_{m-1}\) is the \((m-1)\)-dimensional simplex and \(\varvec{\Phi }^m\) is the Cartesian product \(\{(\varvec{\phi }_{d,1}, \ldots , \varvec{\phi }_{d,m})^T: \varvec{\phi }_{d,i} \in \varvec{\Phi }, i =1, \ldots , m \}\) with \(p_m = md + m-1\).

Above, G is a discrete distribution defined on \(\Phi \) with at most m jump points at \(\varvec{\phi }_{d,1}, \ldots , \varvec{\phi }_{d,m}\), and the integral representation in (2.1) is given here only to draw attention that finite mixtures are part of a much bigger family of mixtures where G can be any distribution function, known often under the name of “mixing distribution”. In the sequel, we will refer to either the probability density or probability mass function defined in (2.1) as the mixed density and to \(\pi _j, j =1, \ldots , m \) as the mixing probabilities.

We define the family of m-component mixture densities as the set

where \(f_{\varvec{\theta }_{p_m}}\) is given by (2.1).

Suppose we observe n random variables \(X_1, \dots , X_n \in {\mathcal {X}}\) which are i.i.d. according to an unknown density \(\sim f_0 \in \bigcup _{m\ge 1}{\mathcal {F}}_m\). What is the value of m that can be assigned to this density based on the observed data? It is clear that such a value needs to target the most parsimonious representation of the mixture. Estimation of the true complexity of \(f_0\) cannot be presented without touching upon this point, which is discussed in the next section.

2.2 Identifiability and Complexity of Mixture Models

We will now touch upon the identifiability issues arising within the mixture distributions framework, which is a crucial point when the aim is to estimate the true complexity. Identifiability of general mixtures of some additively closed family of distributions was proved in the pioneer work of Teicher [65], who recognized the importance of settling the issue of identifiability before launching into estimation of the mixing distribution. Several articles have been devoted to proving identifiability of finite mixtures of some particular classes of distributions such as finite mixtures of normal or gamma distributions; see e.g. [66]. For identifiability results in other classes or review papers on the subject we can refer to [19, 35, 36, 43, 49, 68].

The identifiability of a mixture is defined as follows: a finite mixture with respect to the family \({\mathcal {F}}\) is said to be identifiable if for any \(m \ge 1\) and any two elements \(f_{\varvec{\theta }_m}\) and \(f_{\varvec{\theta }'_m}\) in \({\mathcal {F}}_m\) satisfying the equality

then there exists a permutation \( \sigma : \{1, \ldots , p_m \} \mapsto \{1, \ldots , p_m \}\) such that the components of \(\varvec{\theta }_{p_m}\) and \(\varvec{\theta }'_{p_m}\) are equal up to the permutation \(\sigma \); i.e., \(\pi _{\sigma (i)} = \pi '_{i}, \ \varvec{\phi }_{d, \sigma (i)} = \varvec{\phi }'_{d, i}\) for \(i=1, \ldots , p_m\).

Different techniques have been developed to show identifiability. One of the most important results is the one shown in [76], which says that the characterizing condition of identifiability is linear independence of the family \({\mathcal {F}}\). Other characterizations or sufficient conditions could be built upon this result by resorting for example to using some additional properties of the elements of \({\mathcal {F}}\) or computing Fourier/ Laplace transforms (see [5, 36, 39]).

When identifiability holds, it is natural to think of the most economic representation of the finite mixture under study. Indeed, we have the inclusions

for all \(m \ge 1\), and hence we can introduce the following definition: the index of economical representation for some finite mixture density \(f \in \bigcup _{m \ge 1} {\mathcal {F}}_m\) is defined as

This index is exactly what is called the complexity (or order). Note that this number has to be unique, an immediate consequence of identifiability. Also, from a practical point of view, m(f) corresponds to the number of all the components that are actually part of the total population: all the mixing probabilities \(\pi _j, j \in \{1, \ldots , m(f)\}\) should satisfy \(\pi _j > 0\) by the very definition of m(f). The term identifiability is used here with some abuse as the components of \(\varvec{\theta }_{p_{m(f)}}\) are unique up to some permutation (whereas the mixed density is invariant under the m! permutations of the component labels). One can of course require for example that the mixing probabilities are labeled so that \(\pi _1< \dots < \pi _m\) in case they are all different. We will be following this convention when reporting the simulation results in Sect. 6.

The discussion above lays the ground for this survey. In the sequel, we shall assume that identifiability assumption holds. Also, the notation \(m(f_0) = m_0\) will be used, where \(f_0\) is the unknown density in \({\mathcal {F}}_{m_0}\) from which we observe a random sample. The true complexity or order, \(m_0\), as well as the true parameter vector

will be assumed to be unknown. The main goal of the methods reviewed further is to consistently estimate \(m_0\). An estimation procedure can be (but does not necessarily have to be) accompanied by the estimation of \(\varvec{\theta }_0\).

2.3 Popular Approaches to Mixture Model Estimation

Mixture model estimation has a long history. The early mixture model estimation techniques date back to the end of the 19-th century, when S. Newcomb [52] suggested an iterative reweighting scheme to compute the Maximum Likelihood (ML) estimator of the common mean of a mixture of a known proportions of a finite number of univariate normal populations with known variances. This scheme is regarded by many as a precursor of the well-known Expectation-Maximization (EM) algorithm.

A few years later K. Pearson [56] described an analytical and a graphical solutions to estimating the first five moments of an asymmetrical empirical distribution, which he was aiming to break up into two univariate normal curves. The graphical solutions for mixture model estimation stayed in the focus of attention until the second half of the 20-th century ([14, 34, 58]).

Between 1912 and 1922 R. Fisher [29] attempted to popularize the ML approach to fitting the mixtures. The evolution of the ML approach is considered in detail in [3]. In particular, Fisher made an analysis of the extensions of the method of moments to the likelihood equations as a way of increasing the quality of the estimates, which later caused a dispute with Pearson ([28, 57]). Around the 1950s C. R. Rao [59] used Fisher’s scoring method to estimate the parameters of a mixture of two Gaussian distributions with common variance, and soon after the ML estimation for identifying the number of components as well as for parameter estimation in finite mixture models was addressed in numerous publications, such as [22, 72, 73].

These days the most well-studied and widely-used approach to computing ML estimates for finite mixture models as defined in (2.1), is the EM algorithm, elaborately described in [23], the seminal work that greatly exhilarated the efficient usage of mixture models. The EM algorithm is implemented by assuming that there are latent variables that link every observation to one of the components, which, together with the observed data, yield complete data.

We will summarize the main idea behind this algorithm. To that end consider two sample spaces within the mixture model framework:

-

1.

the sample space of the incomplete observations, where only the realizations of the random variable X are observed, but no information on the mixing distribution \(G(\varvec{\phi }_d)\) is available;

-

2.

the sample space of the complete observations, the estimation of which can be performed explicitly.

For the sake of simplicity consider the one-dimensional case, \(\Phi \subseteq {\mathbb {R}}\). In this case we denote \(\varvec{\phi }_d\) simply by \(\phi \). The extension to the multidimensional case is possible but complicates the derivations.

Let \(x = (x_1,\dots ,x_n)\) be the observed realizations of the random variable X, and let \(z = ({\mathbf {z}}_1,\dots ,{\mathbf {z}}_n)\) denote the realizations of the corresponding unobserved (or latent) random vector \({\mathbf {Z}}\) indicating that the observation \(x_i, i=1,\dots ,n\) comes from the j-th component, \(j=1,\dots ,m\). In other words, \({\mathbf {z}}_i, i =1, \ldots , n \) are realizations of a multinomial distribution with probabilities \(\pi _1, \ldots , \pi _m\), and we have that

The pairs \(\varvec{y}_i = (x_i, \varvec{z}_i)\), for \(i=1,\dots , n\) are i.i.d. and they are usually referred to as the complete or augmented data. Let \(\varvec{y} = (\varvec{y}_1, \ldots , \varvec{y}_n)\). For a stipulated mixture complexity \(m \in {\mathbb {N}}\), let us denote by \(l^c_{\varvec{\theta }_{p_m}}\) the log-likelihood of the complete data; i.e.,

On the other hand, the log-likelihood of the observed data x is given by

It can be shown that the MLE

can be obtained by alternating between an expectation and maximization steps involving both the complete log-likelihood \(l^c_{\varvec{\theta }_{p_m}}\). This is precisely what the well-known EM-algorithm does. In the first step, the conditional expectation of \(l^c_{\varvec{\theta }_{p_m}}(\varvec{y})\) given the observed data x is computed under the current parameter. Then, the obtained expression is maximized over the parameter space and the maximizer becomes the new parameter. These two steps are repeated until convergence. If s is the number of the iteration of the current E-step, then it is easy to show that this step is completed by computing the conditional expectation of the multinomial vectors \(\varvec{z}_i\) given the observed data x. This yields for \(i=1, \ldots , n\) and \(j=1, \ldots , m\)

where \(({\hat{\pi }}^{(s-1)}_1, \ldots , \pi ^{(s-1)}_m, {\hat{\phi }}^{(s-1)}_1, \ldots , {\hat{\phi }}^{(s)}_m)\) is the MLE obtained at the \((s-1)\)-th step. Note that the maximizing mixing probabilities are easily obtained and are explicitly given in the s-th M-step by the expression

for \(j=1, \dots , m\). To obtain \({\hat{\phi }}^{(s)}_j, j=1, \ldots , m\), a numerical method might be required in case a a closed form is not possible. The optimization procedure then seeks to find at least the local maximum as finding the global maximum is not always possible. As noted in [50], the latter often occurs in the case of Gaussian mixtures with non-homogeneous dispersions (unequal covariance matrices). Components that have either one observation, or several identical observations or several nearly-identical observations, result in the estimated covariance matrices that are singular, which causes the likelihood function to be unbounded. Gaussian mixtures with homogeneous components result in covariance matrices that are restricted in the parameter space and thus do not have this problem. For references on the EM-algorithm, see e.g. [23] and [48].

The description given above treats one given m, a candidate for the true mixture complexity. To obtain an estimator for \(m_0\), the true complexity, one can resort to maximizing a penalized version of the observed log-likelihood. This means that the log-likelihood will be augmented by a penalty term depending on the model complexity. Several widely used examples of this technique include Akaike Information Criterion (AIC) [2], Bayes Information Criterion (BIC) [62], Integrated Completed Likelihood (ICL) [10], Laplace-Empirical Criterion (LEC) [49], Normalized Entropy Criterion (NEC) [9] and many others [50]. These only differ in the form of the penalty function, and we will concentrate on the two criteria that have gained most popularity in practice: The Bayesian Information Criterion (BIC) and Integrated Completed Likelihood (ICL). While BIC is most widely used for performing model selection tasks, ICL is most frequently applied for solving clustering problems.

The general idea is to treat the task of choosing the number of components in the mixture as a model selection problem by considering a sequence of models \({\mathcal {M}}_1, \dots , {\mathcal {M}}_M\) for \(m=1,\dots ,M\) with associated prior probabilities \(p({\mathcal {M}}_m)\), which are often taken to be equal. By the Bayes’ Theorem, the posterior probability of model \({\mathcal {M}}_m\), given the observed data \(\varvec{x}\) is proportional to the probability of the data given the model multiplied by the model’s prior. Under regularity assumptions, it can be show than twice the posterior probability of the mixture model with m components can be well approximated by the

where \(\nu _{{\mathcal {M}}_m} = p_m\) is the number of independent parameters in the model and \( \hat{\varvec{\theta }}_{p_m}\) is the MLE of \(\varvec{\theta }_{p_m}\). The true complexity is then estimated by finding the integer m which maximizes \(\text {BIC}_{m}\).

Given the discussion above, finding the number of components in the mixture that maximizes \(m \mapsto \text {BIC}_{p_m}\) is equivalent to choosing the mixture model with the greatest a posteriori probability. Some of the advantages of the BIC approach are that it is easy to implement, can be used for comparing non-nested models and was shown to be consistent for choosing the correct number of components in [40].

The ICL approach uses the log-likelihood of the complete data and replaces the unobserved labels \(z_{ij}, 1 \le i \le n, 1 \le j \le m\) by their maximum a posteriori (MAP) estimator, that is

Thus, for the mixture model with m components

The very useful relationship between \(\text {BIC}_{m}\) and \(\text {ICL}_{m}\) can be shown:

It has been shown in [31] that in some cases (e.g. for the mixtures of Gaussians) evaluating the likelihood at the a maximum a posteriori (MAP) estimator instead of the MLE helps the EM algorithm to avoid singularities or degeneracies.

Regularization and variable selection techniques have also found their application in this setting. For example, [55] proposed an estimation technique for Gaussian mixtures in the context of a clustering problem, where the likelihood function is augmented by an \(L_1\)-norm penalty term \(-\lambda \sum _{j=1}^{m} \sum _{k=1}^p |\mu _{jk}|\), where \(\mu _{jk}\) is the k-th coordinate of the j-th mean vector, and derived a modification of an EM algorithm fitted for the purpose. The \(L_1\) penalty can shrink some of the fitted means toward 0, thus leading to the most parsimonious model.

Example 1: EM solution for the mixture of Gaussian distributions. For a finite mixture of univariate Gaussian distributions with the parameter vector \(\varvec{\theta } = \big ( \pi _1, \dots , \pi _m, (\mu _1, \sigma _1), \dots , (\mu _m, \sigma _m)\big )\) and the mixture density given by

the E-step at the s-th iteration will update the probabilities given the current parameter vector \(\varvec{\theta }^{(s-1)}\)

The M-step provides the following solutions:

Further examples (for the mixtures of geometric and Poisson distributions) can be found in Appendix A in the supplementary materials.

Concluding this section it is necessary to point out that a great amount research has been carried out in this area, and multiple software applications have been developed for working with mixture models, in particular with the Gaussian mixture models that are most frequently used in practice. Most of the software is suited for model-based classification and in particular offering the opportunity to find the ML estimates via the EM algorithm. We refer interested practitioners to R packages Mclust [63] and mixtools [7] or the Matlab package MIXMOD [11].

3 Methods Based on the Hankel Matrices

The method of moments is generally considered to be less efficient when compared to maximum likelihood. Nonetheless, as justly argued in [46], there are situations where the method of moments reveals a nice mathematical structure. This is the case for the problem of estimating the true complexity of some finite mixture. As we will see below, the number of support points of a discrete mixing distribution with a finite number of jumps can be elegantly linked to whether the determinant of a special matrix of moments is equal to zero. Such a matrix is known under the name of a Hankel matrix.

We devote this section entirely to the estimation approaches based on the determinants of Hankel matrices of moments of the mixing distribution. The original method, with which we will start, was proposed in [21]. Additionally to the original approach we will describe a couple of its possible extensions. [21] motivated the method with a number of appealing features:

-

1.

it gives consistent estimators under some mild conditions;

-

2.

it requires no a priori upper bound on the unknown order of the mixture;

-

3.

it comes with low computational time as it does not involve estimation of the mixture parameters.

Another attracting property, not mentioned by the authors, is that the method bears a universal character and can be applied to continuous distributions as well as discrete distributions with no modifications, provided that the moment generating function of the distribution exists.

For the reader to be able to appreciate the elegant argument standing behind the method, we shall recall next the key theoretical results furnishing its basis.

3.1 The Main Theoretical Results and Basic Approach

Recall that we have confined the present study to a one-dimensional case, where \({\Phi } \subseteq {\mathbb {R}}\). For a given integer \(m \ge 1\) define the set

In other words, the component \(c_j\) is equal to the j-th moment of some distribution function G. For convenience, we will write \(\varvec{c}_{2m} = (c_1,\dots ,c_{2 m})^T\) for any given real numbers \(c_j, j =1, \ldots , 2m\). In [21] this set is defined more generally with non-negative measure G.

For \(\varvec{c}_{2m} \in {\mathbb {R}}^{2m}\), the Hankel matrix associated with this vector is the \((m +1) \times (m+1)\) real symmetric matrix , denoted \(H(\varvec{c}_{2m})\) and given by

with \([H(\varvec{c}_{2m})]_{1,1} = c_0 =1\). More explicitly, we have that

Next we state the key result which links the true complexity of a finite mixture to the Hankel matrix of moments. See also Proposition 1 in [21].

Theorem 3.1

For a given \(\varvec{c}_{2m} \in {\mathbb {R}}^{2m}\), the Hankel matrix \(H(\varvec{c}_{2m})\) is positive semidefinite if and only if \(\varvec{c}_{2m} \in {\mathcal {C}}_{2m}\). Furthermore, \(D_{m}:=\det \big ( H(\varvec{c}_{2m}) \big )=0 \) if and only if every distribution function G such that \(c_j = \int _{\Phi } \phi ^j dG(\phi )\), G is discrete with at most m support points.

Now we explain how the result above can be applied in the context of estimating the complexity of a finite mixture. Consider \(f_0\), a finite mixture of densities which belong to some family \({\mathcal {F}}\) and let \(G_0\) be the associated discrete distribution function with true complexity \(m_0\). Then, Theorem 3.1 says that

where \( D_m\), as above in Theorem 3.1, is the determinant of \(H(\varvec{c}_{2m})\) with

In other words, the correct order of the mixture is the first integer which sets the determinant to zero. But the theorem implies also that \(D_m =0\) for all \(m \ge m_0\). This characterizing feature of the true complexity is exploited to construct a sensible estimator. Indeed, assuming that it is possible based on the random sample \((X_1, \ldots , X_n) \) to obtain a strongly consistent estimator of any j-th moment of \(G_0\), \({{\hat{c}}}_j\) say, then the Hankel estimator of \(m_0\) proposed in [21] is given by

where

\(\{a_m\}_{m \ge 1}\) is a positive and strictly increasing sequence, and \(\{l_n\}_{n \ge 1}\) a positive sequence satisfying \(\lim _{n \rightarrow \infty } l_n=0\) (we have omitted writing the subscript n in the notation of the estimators of the moments and determinants).

Clearly, the term \( a_m l_n \) is acting as a penalty. Adding a penalty term to \(\vert {{\widehat{D}}}_m \vert \) is necessary because otherwise minimizing of \(m \mapsto \vert {{\widehat{D}}}_m\vert \) alone might yield an inconsistent estimator. In fact, strong consistency of \({{\hat{c}}}_j\) implies that \(\vert {{\widehat{D}}}_{m} \vert \) is a strongly consistent estimator of the true value \(\vert D_m \vert = D_m\) (see our remark below). Since the latter is equal to 0 for all \(m \ge m_0\), \(\vert {{\widehat{D}}}_{m} \vert \) will be close to 0 for all \(m \ge m_0\), which might result in choosing a value which is strictly larger than \(m_0\). Under some additional assumptions, consistency of \({\hat{m}}_n\) as defined above in (3.2) can be established as shown in Theorem 1 of [21]. We recall this result below.

Theorem 3.2

If for all integers \(j, m \ge 1\) we have that

almost surely as \(n \rightarrow \infty \), then \({\hat{m}}_n \rightarrow m_0 \quad \text {a.s.}\) as \(n \rightarrow \infty \).

Remark 3.1

Recall that \(D_m = \det \big (H(\varvec{c}_{2m}) \big )\). Thus, \(D_m\) seen as a multivariate real function of \(c_1, \ldots , c_{2m}\) (the components of \(\varvec{c}_{2m}\)), is infinitely differentiable. Thus, if \({{\hat{c}}}_j\) is a strongly consistent estimator of \(c_j\) for any integer \(j \ge 1\), then \({{\widehat{D}}}_m = \det \big (H(\hat{\varvec{c}}_{2m}) \big )\) is also a strongly consistent estimator of \(D_m\). Furthermore, a multivariate weak convergence of \(\hat{\varvec{c}}_{2m}\) toward \(\varvec{c}_{2m}\) as in the case where a multivariate Central Limit Theorem applies, the estimator \({{\widehat{D}}}_m\) will converge weakly to \(D_m\) at a rate that is as fast as that of \(\hat{\varvec{c}}_{2m}\). Typically, the estimators \({{\hat{c}}}_j\) will result from considering some empirical estimators which we know to be asymptotically normal. Below, we will touch upon this point in some more detail.

Remark 3.2

In the light of Remark 3.1, the condition \(({{\widehat{D}}}_m - D_m)/l_n \rightarrow _{a.s.} 0, \ \ \forall \ m \in {\mathbb {N}}\) made in Theorem 3.2 is satisfied in case \(({{\hat{c}}}_j - c_j)/l_n \rightarrow _{a.s.} 0\) for all \(j \in {\mathbb {N}}\). A typical situation is when \(\sqrt{n} ({{\hat{c}}}_j - c_j ) \rightarrow _d {\mathcal {N}}(0, \sigma ^2_j)\) (for some \(\sigma _j > 0\)) and \(l_n\) is such that \(\sqrt{n} l_n \rightarrow \infty \) in addition to \(l_n \rightarrow _d 0\).

Without going into the full proof of Theorem 3.2, let us give some intuition for the condition \(({{\widehat{D}}}_m - D_m)/l_n \rightarrow _{a.s.} 0, \ \ \forall \ m \in {\mathbb {N}}\). We have that

From the characterization if \(m_0\) in (3.1), it follows that \(D_m \ne 0\) for \(m < m_0\) implying that

almost surely as \(n \rightarrow \infty \), whereas

for all \(m \ge m_0\), with \(a_m> a_{m_0}, \forall \ m> m_0\) since the sequence \(\{a_m\}_{m \ge 1}\) is assumed to be strictly increasing. Thus, we expect that as \(n \rightarrow \infty \) the minimum of the penalized criterion to be achieved at \(m_0\).

The statement about consistency of \({{\hat{m}}}_n\) can be made more refined under additional regularity conditions. More precisely, suppose that for any integer \(m \ge 1\) there exist integrable functions \(\psi _j\) and \(f_j\) for \(j = 1, \ldots , 2m \) such that the j-th moment of \(G_0\) is given by

where \( {\mathbb {E}}[\varvec{\psi }_{2m}(X)]= \big ( {\mathbb {E}}[\psi _1(X)], \ldots , {\mathbb {E}}[\psi _{2m}(X)]\big )^T\). Define the estimator \({{\hat{m}}}_n\) the same way as above with

We have the following theorem. See also Theorem 2 in [21].

Theorem 3.3

Denote by \(\varvec{f}_{2m} \) the multivariate function defined as \(\varvec{f}_{2m} (\varvec{t}_{2m}) = (f_1(\varvec{t}_{2m}), \ldots , f_{2m}(\varvec{t}_{2m}))\) for \(\varvec{t}_{2m} = (t_1, \ldots , t_{2m})^T \in {\mathbb {R}}^{2m}\). Suppose that for any \(m \le m_0\),

-

\( \varvec{t}^{2m} \mapsto \det \big (H (\varvec{f}_{2m}(\varvec{t}_{2m})) \big ) \) is Lipschitz with respect to some norm on \({\mathbb {R}}^{2m}\),

-

for any \(m \le m_0 \) the generating functions \(u \mapsto \int \exp ( u \psi _j(x)) f_0(x) d\mu (x) \) exist in a neighborhood of 0 for all \(j =1, \ldots , 2m\).

Furthermore, assume that \( n^{1/2} l_n \rightarrow \infty \). Then, there exists a constant \(d > 0\) and integer \(n_0 > 0\) such that for all \(n \ge n_0\)

The main argument in the proof uses judicious upper bounds on the probabilities \({\mathbb {P}}({{\hat{m}}}_n \le m_0) \) and \( {\mathbb {P}}({{\hat{m}}}_n > m_0)\) based on concentration inequalities that involve the Cramer transform of the logarithm of the generating function of the centered random variables \(\psi _j - {\mathbb {E}}[\psi _j(X)]\) for \(j \in \{1, \ldots , 2m\}\) and \(m \le m_0\). Before commenting on the result itself, we would like to give some examples, which are relevant for the simulations section coming ahead.

Example 2: Mixture of Gaussian distributions. Consider a finite mixture of Gaussian distributions with density

with \(\varphi (x) = 1/\sqrt{2\pi } \exp (-x^2/2)\), and \(\theta _1, \ldots , \theta _{m_0} \in {\mathbb {R}}\). If \(X \sim f_0\), then X has the same distribution as \(Z + Y\) where \(Z \sim {\mathcal {N}}(0,1)\) and \(Y \sim G_0\) with \(G_0\) the mixing distribution with support points \(\theta _1, \ldots , \theta _{m_0}\) and mixing probabilities \(\pi _1, \ldots , \pi _{m_0}\) such that Y and Z are independent. Thus, for any \(j \ge 1\)

where \(\mu _0 = 1\) and for an integer \(r \ge 1\)

where x!! denotes the semifactorial of a number x.

Thus, the vector of moments \(\varvec{c}_{2m}\) satisfies the triangular linear system \(\varvec{c}_{2m} = B V\) where \(B = A^{-1}\) and A is the lower triangular \((2m) \times (2m) \) matrix

and

In this case, we have \(c_j = \sum _{k=1}^{2m} B_{j k} \left( {\mathbb {E}}[X^k] - (k-1)!! \ {\mathbb {I}}_{k \in 2 {\mathbb {N}}} \right) \). Thus, for location mixtures of Gaussian distributions we have shown that

for \(j \in \{1, \ldots , 2m\}\).

More examples are available in Appendix A in the supplementary materials.

Now we turn to commenting on Theorem 3.3. Although the result of that theorem seems to give an actual guarantee on the consistency of \({{\hat{m}}}_n\), the exponential bound on the probability of being wrong about \(m_0\) depends on \(n_0\) and a constant d which are unknown. In case d is small and \(n_0\) quite big, then consistency will not be observed for moderate and even big sample sizes: one would need an unrealistically huge number of observations to find the true complexity. Another problem is the estimation of the moments \(c_j, j =1, \ldots , 2m\) for large values of m. Although the method does not require to put an upper bound on m while finding the minimum of \(\vert {{\widehat{D}}}_m \vert + a_m l_n\) one has to choose some maximum admissible value for the mixture complexity. For large values of m the j-th moment \(c_j\) can become very large. When this is combined with a low quality estimator \({{\hat{c}}}_j\), \({{\hat{D}}}_m\) may be far away from 0, which is known to be the theoretical value for \(m \ge m_0\). Such a phenomenon is illustrated using mixture of Gaussian distributions

In Table 1 we give the first 8 theoretical moments \(c_j\) of the mixing distribution and the mean value of their estimates \({{\hat{c}}}_j\) based on 100 replications for each of the sample sizes shown in the table. Table 2 gives the corresponding mean value of \({{\hat{D}}}_m\) as well as its penalized versions with \(a_m = m\) and \(l_n = \log n/\sqrt{n}\) or \(l_n = \sqrt{\log n}/\sqrt{n}\) for \(m \in \{1,2,3, 4\}\) computed on the basis of the same replications. It is clear from the values of Table 2 that \({{\hat{m}}}_n =1\) even for this very well-separated mixture and for the large sample size \(n =10^4\).

Next, we examine what happens in a 2-component mixture of geometric distributions. To this aim, we consider the pmf

The parametrization we chose implies that \(f_0\) is a mixture of geometric distributions with success probability 0.7 and 0.2 respectively. In Table 3 one can see that the moments are accurately estimated for all sample sizes. However, the results of Table 4 indicate that the estimator \({{\hat{m}}}_n\) fails often to pick the correct mixture complexity, here \(m_0=2\) for the penalties \(a_m l_n = m \log n/\sqrt{n}\) and \(a_m l_n = m \sqrt{\log n}/\sqrt{n}\).

Our decision to take the penalty \(a_m l_n = m \log n/\sqrt{n}\) is based on the recommendation made in [21]. The second penalty \(a_m l_n = m \sqrt{\log n}/\sqrt{n}\) was added in these simulations in order to compare the results obtained with the basic approach of [21] with the first modification we propose below and which is based on scaling the estimates of the determinants.

The inconsistency noted in these examples, despite the nice theoretical guarantees of convergence of \({{\hat{m}}}_n\), are due to different reasons. In the Gaussian mixture, the penalty plays almost no role as \(\vert {{\widehat{D}}}_m \vert \) dominates with values that are blowing up as we let m take larger values. For this reason, the estimator picks \(m=1\) in all cases. In the geometric mixture, the picture is completely reversed since the moments \(c_j \in (0,1)\) and hence get smaller for larger orders j. This causes \(\vert {{\widehat{D}}}_m \vert \) to decrease with m. In this case, the penalty dominates and again \(m=1\) is often found as the minimizer of the penalized criterion.

The basic approach of [21] can suffer from serious underestimation (or overestimation) of the true mixture complexity even when the sample size is very large. Moreover, the question of how to choose the penalty term \(a_m l_n\) is not really settled in [21]. In fact, a penalty which would work for a certain family of distributions could perform miserably for another. A traditional approach here would be to resort to cross-validation to decide on an optimal choice for the penalty. Although this is a very important aspect of the problem, we choose not to pursue it here.

3.2 Modification of the Basic Approach Using Scaling

The discussion above reveals that while the estimator defined by [21] is quite appealing, it is very difficult to achieve consistency in practice. The main problem resides in that the method does not exploit any knowledge about the variability of \(\vert {{\widehat{D}}}_{m} \vert \). Without integrating the information about how these random variables behave stochastically (for n large enough), it would be almost impossible to say for example whether a small value obtained for \(\vert {{\widehat{D}}}_m \vert \) can be seen as an indication that the true determinant is really equal 0. One way of circumventing the above issue is to use a rescaled version of this estimator. The starting point here is to use the already noted fact that the true determinant of the Hankel matrix of moments \(\varvec{c}_{2m} \mapsto D_m \) is an infinitely differentiable function on \({\mathbb {R}}^{2m}\). Thus, assuming that we can use the Central Limit Theorem to the vector of estimators \(\hat{\varvec{c}}_{2m}\), then for any fixed \(m> m_0\) we get by applying the \(\delta \)-method that

where \(\varvec{W}_m= (W_1, \ldots , W_m)^T \sim {\mathcal {N}}(\varvec{0}, \Sigma )\), with \(\Sigma \) some nonnegative definite matrix of dimension \(m \times m\).

Although the covariance matrix \(\Sigma \) is unknown it can be estimated using re-sampling techniques. Here, we focus only on estimating the diagonal elements of \(\Sigma \), \(\sigma ^2_1, \ldots , \sigma ^2_m\). By sampling B times with replacement from the original sample \((X_1, \ldots , X_n)\) we obtain a new sample \((X^*_1, \ldots , X^*_n)\) which can be used to compute the bootstrap determinants \(\{{\widehat{D}}^*_{1},\dots ,{\widehat{D}}^*_{m}\} \). Repeating this procedure B times allows us to estimate \(\sigma _j/\sqrt{n} \) by computing the standard deviation \({\hat{\sigma }}^*_j \) of the bootstrap sample \(({\widehat{D}}^{*b}_{j}, b =1, \ldots , B, j = 1, \ldots , m)\). As the setting here is very standard, it follows that as \(n, B \rightarrow \infty \) \( {\hat{\sigma }}^*_j \approx \sigma _j, \ j =1, \ldots , m\) in probability.

As mentioned in the previous section, the true order of the mixture can be assumed to be smaller than some given value \(m = m_{max}\); i.e., the search of the minimizer of the penalized criterion will be performed in the set \(\{1, \ldots , m_{max}\}\). Assuming that \(m_{max} > m_0\), define the rescaled vector

Thus, we redefine the estimator \({{\hat{m}}}_n\) as

We will not give a formal proof of consistency of \({{\hat{m}}}_n\). The latter, however, can be intuitively seen to hold since it follows from the weak convergence in (3.6) that

and

where \((Y_1, \ldots , Y_{m_{max} - m_0 +1})^T \) is a multivariate Gaussian vector with a covariance matrix having all its diagonal terms equal to 1. One the one hand, this implies that for any integer \(m \in \{1, \ldots , m_0-1\}\) the probability that m is the location of the minimum should decrease as \(n \rightarrow \infty \). On the other hand, for \(m \ge m_0\) the penalty \(a_m \sqrt{n} l_n\) becomes the dominating term. Since \(a_m\) increases with m, the minimum is achieved at \(m_0\) with increasing probability.

In the following, the examples considered above will be revisited using this modified approach to see to what extent the estimation accuracy is ameliorated. More specifically, we use the scaling approach described above to compute the proportion of times the alternative estimator \({{\hat{m}}}_n\) defined (3.7) is equal to the true complexity in 100 independent replications. In both examples, we have taken \(m_{max} = 8\). The number of bootstrap replications was taken to be \(B=500\). From Table 5 and 6 , we see how the results drastically improve with the scaling method for the sample sizes \(n=1000, 10000\) with 100% or close for the recovery of the true complexity. The improvement seems to be more pronounced with the choice of penalty \(m \sqrt{\log n}/\sqrt{n}\). Thus, one conclusion that can be drawn here is that the method would greatly benefit from comparing the performance of different penalties. As mentioned above, such a comparison can be done using some cross-validation approach.

3.3 Modification of the Basic Approach Using Bootstrap

A specific feature of the Hankel matrix of moments methods discussed previously is the possibility to estimate the order of the mixture without estimating the parameters. However, it seems that there might be a high price to pay for avoiding this part: some of the essential features of the mixture may not be captured by the determinant alone, which can lead to the wrong answer with a “bad” penalty, even for very large sample sizes. Furthermore, in many applications it may be desirable to obtain the estimator of the order of the mixture as well as the estimators of all the parameters. We describe here another modification that is suited for this purpose. It is in essence a sequential testing procedure in which some statistic computed from the data is compared with a critical value obtained e.g. by re-sampling from the assumed theoretical model.

The said statistic can be taken to be either the determinant of the Hankel matrix \({\widehat{D}}_{m}\) as in the basic approach proposed by [21] or its rescaled version as described in the previous section. The idea is to replace minimizing the objective function in (3.2) or (3.7) by taking a reject/accept decision regarding whether the current value of m is equal to the true complexity. We describe this procedure only when the statistic is taken to be equal to \({{\widehat{D}}}_m\) since the modifications are rather obvious for the scaled version thereof:

-

for \(m \in \{1, \ldots , m_{max}\}\), compute \({{\widehat{D}}}_m\) and the maximum likelihood estimator (MLE) \(\hat{\varvec{\theta }}_m \) of \(\varvec{\theta }_{m_0}\) based on the given sample \(X_1, \ldots , X_n\);

-

from \(f_{\hat{\varvec{\theta }}_m} \), the corresponding estimate of the mixture density, generate a large number, B, of samples of size n to obtain a sequence of statistics \(\{ {\widehat{D}}^{*b}_{m} \}_{1 \le b \le B}\). Let \({\hat{q}}_{m,B, \alpha /2}\) and \({\hat{q}}_{m,B, 1-\alpha /2}\) be the the empirical \((\alpha /2)\)- and \((1-\alpha /2)-\)quantiles based on this bootstrap sample;

-

if \(m = m_{max}\) or \( {\hat{q}}_{m,B, \alpha /2} \le \widehat{D}_m \le {\hat{q}}_{m,B, 1-\alpha /2}\), then take \({{\hat{m}}}_n = m\), otherwise repeat the previous steps with \(m+1.\)

The procedure described above is not new in the context of estimating a mixture complexity. In fact, a similar approach will be encountered below with the only difference that it is based either on some minimum distance estimation or likelihood ratio statistic (see Sects. 4 and 5 ). In a nutshell, one sequentially tests

and declares as an estimate for \(m_0\) the first value of m for which \(H^m_0\) is not rejected.

In Table 7 and 8 we report the proportion of the time the sequential procedure described above gives the correct mixture complexity for the same finite Gaussian and geometric mixtures given in (3.4) and (3.5) respectively.

From the simulations results obtained in Table 7 and 8 we can see that this other modification of the original Hankel matrix method is less successful for the Gaussian mixture but still works well for the geometric one. This might be explained again by the large values of the higher-degree moments of the Gaussian distribution which impact heavily the quality of estimating the determinants. This is not at all an issue with the geometric distribution whose moments are much easier to estimate.

3.4 Extension of the Hankel Matrix Approach using Neural Networks

The conclusions achieved on the basis of the simulation study, summarized in Sect. 6, stipulate that there is a need of search for a more reliable and universal mixture order estimation technique that would yield more precise estimates irrespectively of the underlying scenario. Obviously, the approaches we have already examined yield estimators which depend on the features involved in a non-linear fashion. To this extent we decided to turn our attention the popular statistical tool designed specifically for modelling nonlinearities between the sets of input and output variables - Neural Networks (NNs), in the hope that they might identify patterns and relationships that the other approaches cannot capture.

For the past decade the amount of research carried out in the field of NNs has experienced exponential growth, and a great multitude of NN types and classes have been designed to successfully solve a wide range of problems. The simplest of these tasks like image labelling or pattern recognition are usually solved by feed-forward network architectures such as the multi-layer perceptron (MLP), convolutional neural network (CNN) or radial basis function network (RBFN). More sophisticated tasks such as speech recognition or text translation require more complex interactions between the layers of the network, which are implemented in such architectures as long-short-term memory (LSTM).

At this stage of our research we are not aiming at estimating the whole mixture model (finding the optimal complexity as well as all its parameters) but only pursue the goal of identifying the number of subgroups in the population based on the observed data. Thus, when cast into the NN framework, the problem of estimating the number of components in a mixture can be viewed as a supervised multiclass classification problem and the relevant questions that need to be addressed are

-

discovering the most informative features to be fed into the NN and

-

devising an adequate design for the training set;

-

proposing the optimal NN architecture;

-

choosing the learning algorithm;

-

determine whether a universal architecture for multiple families of distributions can be found;

-

understanding whether using NNs is beneficial compared to the other methods.

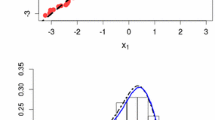

It seems natural to start the search for a well-suited network by browsing first thorough the simplest class of the NNs: the multilayer perceptron (MLP). Despite of their relative simplicity, networks with just two layers can approximate any continuous functional mapping [12]. One of the simplest possible architectures of the considered model with only two hidden layers is depicted in Fig. 1.

The input features \(x_1, \dots , x_d, d \ge 1\), the first and the second hidden layers consist of u and r neurons respectively, the weights vector \(\varvec{\omega }\) is learned by optimizing the loss function \(J(\varvec{\omega } = (\omega ^{(1)}_{1,1}, \dots , \omega ^{(3)}_{6,r} )^T)\), which is taken to be the cross entropy function, which is most often used in multiclass classification tasks such as ours:

where

are known labels for each of the generated vector of features in the training sample.

To this end, the choice over the optimal configurations is restricted to deciding on the number of hidden layers in the network, the number of neurons in each layer and the corresponding activation functions, the loss function and the learning algorithm.

For estimating the order of a mixture using a NN, one needs an appropriate assumption on the possible maximal number of components. We take the maximum number of components to be 6 for our task.

Recall from Sect. 3 that Hankel’s criterion is backed by elegant, orderly statistical theory, however the method’s performance turns out to be rather poor in practice. We use Hankel matrix determinants as inputs to the MLP to try to improve the estimation results by exploiting the information concentrated in the determinants without resorting to the use of any penalty function. Our experience reveals that using sequences of the first 6 Hankel matrix determinants of the mixing distribution as inputs leads to improved results when compared to other tested options. For this reason we regard this approach as an extension to the original Hankel technique.

Using the sequence of the Hankel determinants as inputs produced resulted in high performance for the Geometric mixtures, but showed poorer performance for the Poisson mixture due to the fact that the determinants for the Poisson mixtures tend to blow up while those for the Geometric mixtures stay bounded within a [0, 1] interval. For that reason the inputs for the Poisson mixture had to be modified. One of the modifications led to good performance was the relative changes in the absolute values of the Hankel determinants:

To ensure the variety of the training examples, the characteristics and structure of the data that is used for prediction should be scrutinized and taken into account. The training set should be designed to be as representative as possible of the data of interest. The following procedure can serve as a useful example. For the considered mixtures distributions the parameters for each mixture component is chosen without replacement from a grid with a pre-specified step to insure that these are distinct. A grid on [0.05, 1] with step 0.05 for the geometric distribution should do well in most of the applications. A grid on [1, 20] with step 1 for the Poisson can be taken if the expected rate of occurrences is believed not to exceed 20 by much and the parameters of subpopulations are separated by at least 1 unit. The step value can be reduced if more precision is desired. The mixing proportions can be taken on a gird with an appropriate step size in a similar way, in this case replacement is allowed and the generated results should be normalized. The mixtures with the parameters obtained in this way are then used for generating samples. It seems to be useful to enrich the training data set by simulating several times from each of the resulting mixtures to account for possible variation in the sample populations during the training.

The 6 output neurons of the output layer is further processed by softmax activation function \(\sigma : {\mathbb {R}}^6 \longrightarrow [0,1]^6\), which ensures that the estimated class probabilities live between 0 and 1 and sum up to 1:

where \({\hat{q}}_k\) us the resulting value of the k-th output neuron.

Whenever optimizing the loss function, the value of the learning rate becomes of importance: when too small, the weights of the NN are hardly updated, and much time is needed for the network to find a solution; when too large, the weights are updated in large increments, and overshooting the optimum becomes highly probable. In the case of mixture order estimation the value of the learning rate is influenced by the values of the parameters of the mixtures in the training set: an efficient learning rate for the geometric mixtures, where the parameters lie within (0, 1] and thus higher precision is required to separate the components, will be smaller than for Poisson or Gaussian mixtures where the parameters can range from 0 to 20.

The output of the MLP is a vector of estimates of the class probabilities, that is, the probabilities of an observation (represented by either a sample from a mixture distribution or a vector of alternative relevant features) belonging to each of possible 6 classes: \({\hat{p}}_k , k \in \{1, 2, 3, 4, 5, 6\}\). The predicted number of components in the mixture is taken to be the class with the highest estimated probability:

The search for a successful model (done using KerasTuner library [54]) requires examination of a large parameter space even for a simple network such as a MLP. Combinations of several hyperparameters of the NN were kept track of in order to identify the optimal NN architecture:

-

activation function: relu, tanh, sigmoid

-

number of layers: \(1, 2, \dots , 9, 10\)

-

neurons in each hidden layer: \(10, 25, 40, \dots , 280, 295, 310\)

-

dropout layer after the last hidden layer: dropout rate between 0 and 0.1

-

learning rate for the optimization algorithm: \(10^{-2}, 10^{-3} , 10^{-4}\)

A set of 10000 samples was used for training the NN, the motivation being that taking the mo- ments and determinants as the features requires quality estimation thereof. Therefore, while a sample of this size is rarely available in real-world datasets, the emphasis was placed on finding a neural network that would perform well if the estimates are good. In practice, a neural net- work trained with 10000 samples still predicts well when the test sample size is much smaller.

Unfortunately, we were not able to find a single MLP specification that would work equally well for all considered families of distributions - Gaussian, geometric and Poisson. Table 9 presents three different MLP configurations for Gaussian, geometric and Poisson mixtures that achieved satisfactory performance in our simulations. The predicted class probabilities as well as prediction accuracy on a number of test cases for networks with the denoted specifications can be found in Sect. 6.

4 Methods Based on Minimum Distance Estimators

4.1 General Setting

The estimation techniques discussed in this section are mainly based on the works [69, 74, 75]. Additional relevant references will be mentioned below. In a nutshell, these techniques use the minimal distance between a consistent estimator of \(f_0\), \({{\hat{f}}}_n\) say, and an estimator of the latter in the class of finite mixtures with m components \(m \ge 1\). Using the notation of Sect. 2, this means that the estimator of the true \(m_0\) will be based on the projection of \({{\hat{f}}}_n\) on the class \({\mathcal {F}}_m, m \ge 1\) in a sense that will be determined. As noted above, these classes are nested; i.e., \({\mathcal {F}}_m \subset {\mathcal {F}}_{m+1}\). Thus, the basic approach stops at the first m where the projection on \({\mathcal {F}}_{m+1} \) does not bring a substantial improvement over the projection on \({\mathcal {F}}_m\). One main feature of the methods investigated in this section is that one performs estimation of the parameters of the mixture as well as the mixture complexity.

In the above mentioned papers, the projection of \({\mathcal {F}}_m\) makes use of the Hellinger or the \(L_2\) distances. The estimation method suggested in [74] is built upon a model selection procedure by sequentially fitting the nested mixture models. Thus, the method is reminiscent of the sequential testing approach already encountered above for the second modified version of the determinant of the Hankel matrix of moments. The procedure allows at each iteration to search over a higher class by adding one component to the mixture and find the best model within each class until adding another component brings no more benefit in the sense that it decreases the objective loss function by an amount smaller than a specified tolerance level. In the sequel, we consider only the situation where the dominating measure, \(\mu \), is either the counting or Lebesgue measure. In the first case, the nonparametric estimator of \(f_0\) is the empirical probability mass function given by

In the second one where X is absolutely continuous, we consider a kernel density estimator with fixed or random bandwidth \(c_n\):

where K is some standard kernel function.

4.2 The Minimum Distance Estimator: The Basic Approach

In the following, let \({\mathcal {F}}\) denote the class of densities that are mixed. Also, let \({\mathcal {D}}\) be either the Hellinger or \(L_2\) distance, that is for two densities f and g with respect to \(\mu \) we have either

or

Recall the following notation from Sect. 2: For a given

where \(p_m = m(d +1) -1\), we denote by \(f_{\varvec{t}_{p_m}}\) the m-component mixture density given by \(f_{\varvec{t}_{p_m}}(x) = \pi _1 f_{\varvec{\phi }_{d,1}}(x) + \ldots + \pi _m f_{\varvec{\phi }_{d,m}}(x)\). For a given density f, we now consider the functional

provided that the minimum exists. Here, \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\) denotes the set of all minimizers as uniqueness of the solution is not guaranteed. Note that our notation is different from the one used in [8, 74, 75] and [69]. In case \(f = {{\hat{f}}}_n\), we have the following definition: for a given m and the non-parametric estimator \({\hat{f}}_n\) defined above in (4.1) or (4.2), the minimum distance estimator with respect to \({\mathcal {D}}\) of \(\varvec{\theta }_{p_m}\) is defined as

provided that a minimizer exists.

Proving existence of a minimizer \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\) for some given density f requires careful argumentation under some regularity conditions. When \({\mathcal {D}}\) is the Hellinger distance, Theorem 1 in [8] gives a proof of this existence under the condition that \(\varvec{t}_{p_m} \mapsto f_{\varvec{t}_{p_m}}(x)\) is continuous for almost every \(x \in {\mathcal {X}}\), that the mixture is identifiable and the parameter space \(\Theta _{p_m} = {\mathcal {S}}_{m-1} \times \Phi ^m\) is compact. Also, the same theorem proves uniqueness of \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\) in case f is itself a finite mixture. In order words, if \( f = f_{\varvec{\theta }_{p_{m}}}\), then \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f) = \varvec{\theta }_{p_m} \). This can be easily seen as an immediate consequence of identifiability. When \({\mathcal {D}}\) is the \(L_2\) distance and \(\mu \) is the counting measure on the set of non-negative integers, then [69] show a similar theorem while relaxing the condition of compactness on the parameter space. The main building block in the proof is to show that the mapping

is continuous. In the discrete setting considered in [69], a proof of the continuity property can be based on a slightly different argument. Indeed, if \(\varvec{t}^{(k)}_{p_m}\) is a sequence converging to \(\varvec{t}_{p_m}\) as \(k \rightarrow \infty \), then by Minkowski’s inequality (also used in page 4252 of [69])

The latter sum converges to 0 by continuity of \(\varvec{t}_{p_m} \mapsto f_{\varvec{t}_{p_m}}(x)\) and application of the Sheffé’s Theorem. For existence of a minimizer when \({\mathcal {D}}\) is the \(L_2\)-distance, [69] makes the assumption that for the pmf f to be projected, for any m there exist some compact C (which depends on m but we omit writing this dependence explicitly), and \(\varvec{\theta }^*_{p_m}\) such that

Such an assumption is not needed in case \(\Theta _{p_m}\) is itself compact. Also, the compact C is rather abstract and one only needs to exhibit its existence in some way. It is clear that even when \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\) is not a singleton, we have \(f_{\varvec{\theta }_{p_m}} = f_{\varvec{\theta }'_{p_m}}\) a.e. for two minimizers \(\varvec{\theta }_{p_m}, \varvec{\theta }'_{p_m} \in \varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\). When \(f = {{\hat{f}}}_n\), we will denote the corresponding density \(f_{\varvec{\theta }_{p_m}}\) (or \(f_{\varvec{\theta }'_{p_m}}\)) by \({\widehat{f}}^{{\mathcal {D}}}_m\). Note that we have omitted the subscript n, and replaced \(p_m\) by m for the sake of a lighter notation. By definition of \(\varvec{\theta }_{p_m}^{{\mathcal {D}}}(f)\) we have

For \(f= f_0 \in {\mathcal {F}}_{m_0}\) we write

Note that \(f^{{\mathcal {D}}}_{m} = f_0\) for all \(m \ge m_0\). The roles of \({\widehat{f}}^{{\mathcal {D}}}_m\) and \(f^{{\mathcal {D}}}_m\) will become clear below. Although our notation for those projections is different from the one used in the aforementioned papers, our choice is driven by our desire to maintain some notational coherence throughout this survey.

Now, we describe how the basic approach works with the minimum distance estimators. The estimation procedure as outlined in [74] is much inspired by the work of [37]. The latter paper is however mainly focused on estimating the true complexity of a finite mixture of Gaussian distributions using the Kullback-Leibler divergence instead of the Hellinger (or \(L_2\)) distance. There are two starting points of the basic approach. The first one is to note that the true mixture complexity \(m_0\) satisfies

While the identity in (4.6) is a direct consequence of identifiability, the one in (4.7) is less obvious. Note that this identity is proved if we show that for \(m \le m_0-1\), \({\mathcal {D}}(f_0, f^{{\mathcal {D}}}_m) > {\mathcal {D}}(f_0, f^{{\mathcal {D}}}_{m+1})\). A proof of this fact when \({\mathcal {D}}\) is the Hellinger distance can be found in p. 1485 of [74], where an auxiliary lemma (Lemma A.4) was used. For the case where \({\mathcal {D}}\) is the \(L_2\) distance, and for the sake of completeness, we give a proof in Appendix C in the supplementary materials.

Based on the discussion above, it seems natural to search for the first m which minimizes some empirical version of the distance \({\mathcal {D}}^2(f_0, f_m^{{\mathcal {D}}})\), namely \({\mathcal {D}}({\hat{f}}_n,{\hat{f}}^{{\mathcal {D}}}_{m})\). The second one is to recall once more the inclusion \({\mathcal {F}}_m \subset {\mathcal {F}}_{m+1}\). This implies that

The inequality above means that without penalization it is in principle impossible to find a finite order which can be taken as an estimator of \(m_0\). This overfitting is accounted for by adding a penalty term which is proportional to the number of parameters in the mixture model. This yields the following criterion

for some chosen sequences \(\{v_m\}_m\) and \(\{b_n\}_n\) such that the former is increasing and the latter satisfies \(\lim _{n \rightarrow \infty } b_n =0\). Note that in the works [74, 75] and [69], \(b_n v_m /n\) is taken instead. Now, mimicking the property in (4.7) gives rise to the following definition of the minimum distance estimator

If this minimum does not exist, then \({\hat{m}}_n=\infty \). The term \(\alpha _{n,m}\) can be seen as a threshold so that an integer m is declared to be the estimator when the projection of \({{\hat{f}}}_n\) on the class \({\mathcal {F}}_{m+1}\) yields an insignificant change in comparison with its projection on the previous class \({\mathcal {F}}_m\).

Strong consistency of the minimum distance estimator defined above result was stated in Theorem 1 in [75] for the Hellinger distance and in the Consistency Theorem Section in [69]. In the former, the nonparametric estimator \({{\hat{f}}}_n\) is taken to be a kernel estimator, as defined above in (4.2), with bandwidth \(c_n\) such that \(\lim _{n \rightarrow \infty } \left( c_n + (nc_n)^{-1}\right) = 0\). In the latter, \({{\hat{f}}}_n\) is the empirical probability mass function as given in (4.1). Now, the only condition assumed on \(\alpha _{n,m}\) in these convergence theorems is that \(\alpha _{n,m} \rightarrow 0\) as \(n \rightarrow \infty \). This is of course guaranteed by the fact that \(\lim _{n \rightarrow \infty } b_n = 0\). However, we believe that this statement is not accurate as is, since the penalty needs to depend on the rate of convergence of \({{\hat{f}}}_n\) to the true density \(f_0\). This rate of convergence is known to depend on the smoothness of \(f_0\) and the kernel K. In Appendix C in the supplementary materials, we explain why the condition \(\lim _{n \rightarrow \infty } \alpha _{n,m} =0\) is not enough.

4.3 Modification of the Basic Approach Via Bootstrap

In this section we propose a modification of the basic approach based on minimal distance between the projection of a non-parametric estimator on the class of m-component mixtures and this estimator augmented with some given threshold. We resort in this modification to a parametric bootstrap procedure in order to avoid the bad choice of a threshold. For a given integer \(m \ge 1\), consider the hypothesis testing as in (3.8)

and recall the estimator \({{\widehat{f}}}^{{\mathcal {D}}}_m\) as defined in (4.3). Let us now define

To obtain the distribution of \(\Delta _m\) under the null hypothesis \(H^m_0\), we draw B independent samples of size n from the fitted density \({{\widehat{f}}}^{{\mathcal {D}}}_m\). For \(b =1, \ldots , B\), we compute the non-parametric estimators \({{\hat{f}}}^{(b)}_n\), \({\widehat{f}}^{{\mathcal {D}}, (b)}_{m}\) and \({\widehat{f}}^{{\mathcal {D}}, (b)}_{m+1}\) and the corresponding difference

If \({\hat{q}}_{B, \alpha /2}\) and \({\hat{q}}_{B, 1-\alpha /2}\) denote the empirical \(\alpha /2\) and \((1-\alpha /2)\)-quantiles based on the bootstrap sample \((\Delta ^{(1)}_m, \ldots , \Delta ^{(B)}_m)\), then \(H^m_0\) is rejected if

and the current candidate m is replaced by \(m+1\). We take as our estimator for \(m_0\) the first m for which the null hypothesis is not rejected. Here, we report simulations results for the two examples for finite mixtures considered above; see (3.4) and (3.5). In these simulations, \(B=500\) and the sample sizes considered are \(n =100, 1000, 10000\). The performance of the method proposed in this section is assessed through the proportion of times the estimator is equal to the true complexity (3 and 2 respectively). We used Hellinger distance for the Gaussian mixture and \(L_2\) distance for the geometric mixture.

From Tables 10 and 11 one can see that coupling the bootstrap with distance minimization gives very promising results. The downsize of this modification remains essentially the computational burden which comes with the resampling step.

5 Sequential Likelihood Ratio Tests with Bootstrap

Likelihood-based methods play a central role in statistical inference. Among these methods the likelihood ratio test (LRT) is one of the most widely used in practice. The LRT has a simple interpretation and enjoys the property of being invariant under re-parametrization; see for example [41]. Furthermore, whenever certain regularity conditions are satisfied Wilks’ theorem [71] says that, under the null hypothesis, it converges weakly to a chi-square distribution as the sample size grows to \(\infty \). The degrees of freedom of the limiting chi-square distribution are determined by the difference of the dimension of the whole parameter space and that of the null space. However, and as already mentioned above, the regularity conditions required for the asymptotic theory to hold are not satisfied in the mixture problem. The issue is that it is always possible to write a mixture model with m components as a model with \(m+1\) components (or more) by setting some of the mixing probabilities to 0. Hence, the true parameter under the null hypothesis lies on the boundary of the alternative space. To better explain the problem, let us consider the example of testing whether a random sample was generated from a (single) Gaussian distribution \({\mathcal {N}}(\theta ,1)\) versus a 2-component Gaussian mixture, where each of the components has variance equal to 1. If \(f_0\) denotes the true density, then the goal is to test

for some \(\theta \in {\mathbb {R}}\) and \( \theta _1 \ne \theta _2 \in {\mathbb {R}}\) and \(\pi _1 \in (0,1)\). If we define here the likelihood ratio as the ratio of the maximum likelihoods over the null and the whole space, then the maximization needs to be done on \({\mathbb {R}}\) (the null space) and

(equal to the whole parameter space; i.e., the union of the null and alternative spaces). Thus, a density under \(H_0\) lies on the boundary in the sense that \(f_0\) results from either setting the mixing probability \(\pi _1\) to 1 or 0 and taking \(\theta _1 = \theta \) (or \(\theta _2=\theta \)), or letting \(\theta _1 =\theta _2\) while \(\pi _1 \) is arbitrary. In the classical setting, one of the main arguments which leads to the chi-square distribution as the weak limit is the use of Taylor expansion up to the second order of the log-likelihood at the global MLE around the true parameter. We can refer here to the proof of Theorem 22 in [26] for well-explained and rigorous arguments. Things then work because the true parameter is an interior point and has a unique representation as an element in the whole parameter space. In the mixture model setting, this argument does not work because, as it can be seen from the example above, the true parameter has different representations under the null hypothesis where it is on the boundary. This non-standard situation has triggered a strong interest for either computational or theoretical investigation of the limit distribution of the LR statistic under the null hypothesis. In this context, we can refer to [1, 13, 64], and [47]. For a nice review of the papers on this subject, we can refer to Section 6.5 in [49] among others. The main message that one can take from these works is that when the limit distribution of the LRT can be simply described, it is a mixture of chi-square distributions. In more complicated cases, this limit distribution is given more abstractly by \(\sup _{s \in {\mathcal {S}}} \max (0, Y_s)^2\) where Y is some well-defined centered Gaussian process and \({\mathcal {S}}\) is some suitable set. See for example [47] where it is shown that \({\mathcal {S}}\) is the ensemble of cluster points of some generalized score.

In this section we review the sequential testing procedure based on LRT and use of resampling for approximating the distribution of the test statistic under the null hypothesis. This method was proposed in [38] for estimating the true complexity of a finite mixture of Poisson. Although the focus there was put on that family, the approach can certainly be extended to other distributions. Consider again the hypothesis testing problem

Using the same notation as above, we define the maximum likelihood under \(H^m_0\) and \(H^m_1\) as

where \(L_{\varvec{X}} \) denotes the likelihood function based on the sample \(\varvec{X} = (X_1, \ldots , X_n)\) from the unknown mixture, and \(p_m = m (d+1) -1\). As in [38], consider the log-likelihood ratio statistic

A mixture with smaller number of components will be rejected in favor of the larger model whenever the log-likelihood ratio statistic is large. Otherwise, the mixture will be declared to have m component in the absence of a strong evidence against it. Exactly as in Sects. 3 and 4 , the decision against \(H^m_0\) is taken sequentially starting with \(m=1\) until \(H^m_0\) cannot be rejected, in which case m will be declared as the estimator of \(m_0\). In view of the issues related with deriving the asymptotic distribution of the log-likelihood ratio statistic, one can resort to a parametric bootstrap. As this is already done above, the description of the procedure is omitted.

We give the results of this procedure for the two finite mixtures with \(m_0=3\) and 2 already considered above.

6 Simulation Results

In this section we describe the simulation results obtained for sample sizes \(n \in \{50, 100, 500, 1000, 5000, 10000\}\) using the procedures described in the previous sections for finite mixtures of Gaussian, geometric and Poisson distributions with \(m_0 \in \{2, 3, 4 \}\). Throughout this study, unless explicitly stated otherwise, the standard deviations for Gaussian mixture components are assumed to be 1. For the minimum distance-based methods, we follow the recommendations made in the relevant papers [75] and [69] and use the following two penalty functions \(\alpha _{n, m}\), with m denoting the stipulated mixture order and n the sample size:

-

the penalty based on the Akaike Information Criterion (AIC)

\(\alpha _{n, m} = \frac{0.6}{n} \log \Big (\frac{m+1}{m}\Big )\) for the \(L_2\) distance and \( \alpha _{n, m} = \frac{2}{n}\) for the Hellinger distance

-

the penalty based on the Schwarz Bayesian Criterion (SBC)

\(\alpha _{n, m} = 0.6 \frac{\log n}{n} \log \Big (\frac{m+1}{m}\Big )\) for the \(L_2\) distance and \(\alpha _{n, m} = \frac{\log n}{n}\) for the Hellinger distance.

For all simulations, we used 500 replications to compute the frequencies of an exact recovery of the true complexity. These are displayed in Tables 28, 29, 30, 31, 32, 33 and 34 to be found in Appendix B in the supplementary materials.

Clearly, the performance of the NN’s cannot be directly compared to the performance of the other methods we have considered. One of the reasons being that the NN framework is very much different from the other approaches in terms of training and testing procedures applied. The neural network requires to encounter as many different mixtures as possible to be able to learn the latent structure, while the other techniques (except for the Hankel approach) are seeking the best fit for a given sample from the target mixture. We still report the NN results along with the performances of the other methods with a few reservations so that the reader could put together the full picture. Several points need to be borne in mind when reading and interpreting the NN performance:

-

the values reported are the predicted class probabilities that reflect how sure the network is that an observation (one of the tested mixtures) belongs to each of the represented classes;

-