Abstract

Nonlinear mixed effects models have received a great deal of attention in the statistical literature in recent years because of their flexibility in handling longitudinal studies, including human immunodeficiency virus viral dynamics, pharmacokinetic analyses, and studies of growth and decay. A standard assumption in nonlinear mixed effects models for continuous responses is that the random effects and the within-subject errors are normally distributed, making the model sensitive to outliers. We present a novel class of asymmetric nonlinear mixed effects models that provides efficient parameters estimation in the analysis of longitudinal data. We assume that, marginally, the random effects follow a multivariate scale mixtures of skew-normal distribution and that the random errors follow a symmetric scale mixtures of normal distribution, providing an appealing robust alternative to the usual normal distribution. We propose an approximate method for maximum likelihood estimation based on an EM-type algorithm that produces approximate maximum likelihood estimates and significantly reduces the numerical difficulties associated with the exact maximum likelihood estimation. Techniques for prediction of future responses under this class of distributions are also briefly discussed. The methodology is illustrated through an application to Theophylline kinetics data and through some simulating studies.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

This is the birth centenary year of the living legend and giant in the world of statistics, Prof. C.R. Rao. This article is a partial reflection of Dr. Rao’s contributions to statistical theory and methodology, including sufficiency, efficiency of estimation, as well as the application of matrix theory in linear statistical inference and beyond. In this paper, we extend many results from linear models to nonlinear mixed effects (NLME) models which have been receiving notable attention in recent statistical literature, mainly due to their flexibility for dealing with longitudinal data and repeated measures data. In a NLME framework it is routinely assumed that the random effects and the within-subject measurement errors follow a normal distribution. While this assumption makes the model easy to apply in widely used software (such as R and SAS), its accuracy is difficult to check and the routine use of normality has been questioned by many authors. For example, Hartford and Davidian [8] showed through simulations that inference based on the normal distribution can be sensitive to underlying distributional and model misspecification. Litière et al. [16] showed the impact of misspecifying the random effects distribution on the estimation and hypothesis testing in generalized linear mixed models. Specifically, they showed that the maximum likelihood estimators are inconsistent in the presence of misspecification and that the estimates of the variance components are severely biased. More recently, Hui et al. [10] showed through theory and simulation that under misspecification, standard likelihood ratio tests of truly nonzero variance components can suffer from severely inflated type I errors, and confidence intervals for the variance components can exhibit considerable under coverage. Thus it is of practical interest to explore frameworks with considerable flexibility in the distributional assumptions of the random effects as well as the error terms, which can produce more reliable inferences.

There has been considerable work in mixed effects models in this direction. Verbeke and Lesaffre [32] introduced a heterogeneous linear mixed model (LMM) where the random effects distribution is relaxed using normal mixtures. Pinheiro et al. [24] and Lin and Wang [14] proposed a multivariate Student-t linear and nonlinear (T-LMM/NLMM) mixed model, respectively, and showed that it performs well in the presence of outliers. Zhang and Davidian [35] proposed a LMM in which the random effects follow a so-called semi-nonparametric distribution. Rosa et al. [27] adopted a Bayesian framework to carry out posterior analysis in LMM with the thick-tailed class of normal/independent distributions. Moreover, Lachos et al. [13] proposed a skew-normal independent (SNI) linear mixed model based on the scale mixtures of skew-normal (SMSN) family introduced by Branco and Dey [4], developing a general EM-type algorithm for maximum likelihood estimation (MLE). To avoid confusion, in this paper, we use the acronym SMSN instead of SNI to refer to the scale mixtures of skew-normal family of distributions.

In the nonlinear context, Lachos et al. [12] considered the Bayesian estimation of NLME models with scale mixtures of normal (SMN) distributions for the error term and random effects, Lachos et al. [11] developed a Bayesian framework for censored linear and nonlinear mixed effects models replacing the Gaussian assumptions for the random terms with SMN distributions, and De la Cruz [5] also considered a Bayesian framework to estimate NLME models under heavy-tailed distributions, allowing the mixture variables associated with errors and random effects to be different. From a frequentist perspective, Meza et al. [20] proposed an estimation procedure to obtain the maximum likelihood estimates for NLME models with NI distributions, and Galarza et al. [7] developed a likelihood-based approach for estimating quantile regression models with correlated continuous longitudinal data using the asymmetric Laplace distribution, both using a stochastic approximation of the EM algorithm. Furthermore, Russo et al. [28] and Pereira and Russo [21] considered a NLME model with skewed and heavy-tailed distributions, with the limitation that the nonlinearity is incorporated only in the fixed effects.

Extending the work of Lachos et al. [13], in this paper we propose a parametric robust modeling of NLME models based on SMSN distributions. In particular, we assume a mean-zero SMSN distribution for the random effects, and a SMN distribution for the within-subject errors. Together, the observed responses follow conditionally an approximate SMSN distribution and define what we call a scale mixtures of skew-normal nonlinear mixed effects (SMSN-NLME) model. In particular, the SMSN distributions provide a class of skew-thick-tailed distributions that are useful for robust inference and that contains as proper elements the skew-normal (SN), skew-t (ST), skew-slash (SSL), and the skew-contaminated normal (SCN) distributions. The marginal density of the response variable can be obtained by approximations, leading to a computationally efficient approximate (marginal) likelihood function that can be implemented directly by using existing statistical software. The hierarchical representation of the proposed model makes the implementation of an efficient EM-type algorithm possible, which results in “closed form” expressions for the E and M-steps.

The rest of the article is organized as follows. The SMSN-NLME model is presented in Sect. 2, including a brief introduction to the class of SMSN distributions and the approximate likelihood-based methodology for inference in our proposed model. In Sect. 3 we propose an EM-type algorithm for approximate likelihood inferences in SMSN-NLME models, which maintains the simplicity and stability of the EM-type algorithm proposed by Lachos et al. [13]. In Sect. 4, simulation studies are conducted to evaluate the empirical performance of the proposed model. The advantage of the proposed methodology is illustrated through the Theophylline kinetics data in Sect. 5. Finally, some concluding remarks are presented in Sect. 6.

2 The Model and Approximate Likelihood

2.1 SMSN Distributions and Main Notation

The idea of the SMSN distributions originated from an early work by Branco and Dey [4], which included the skew-normal (SN) distribution as a special case. We say that a \(p\times 1\) random vector \(\mathbf{Y }\) follows a SN distribution with \(p\times 1\) location vector \(\varvec{\mu }\), \(p\times p\) positive definite dispersion matrix \(\varvec{\varSigma }\) and \(p\times 1\) skewness parameter vector \(\varvec{\lambda },\) and write \(\mathbf{Y }\sim {{\rm SN}}_p(\varvec{\mu },\varvec{\varSigma },\varvec{\lambda }),\) if its probability density function (pdf) is given by

where \({\mathbf {y}}_0=\varvec{\varSigma }^{-1/2}({\mathbf {y}}-\varvec{\mu })\), \(\phi _p(\cdot ;\varvec{\mu },\varvec{\varSigma })\) stands for the pdf of the p-variate normal distribution with mean vector \(\varvec{\mu }\) and dispersion matrix \(\varvec{\varSigma }\), \(N_p(\varvec{\mu },\varvec{\varSigma })\) say, and \(\varPhi (\cdot )\) is the cumulative distribution function (cdf) of the standard univariate normal. Letting \({\mathbf {Z}}={\mathbf {Y}}-\varvec{\mu }\) and noting that \(a{\mathbf {Z}}\sim {{\rm SN}}_p(\mathbf{0 },a^2\varvec{\varSigma },\varvec{\lambda })\) for all scalar \(a>0\), we can define a SMSN distribution as that of a p-dimensional random vector

where U is a positive random variable with the cdf \(H(u;\varvec{\nu })\) and pdf \(h(u;\varvec{\nu })\), and independent of the \(SN_p(\mathbf{0 },\varvec{\varSigma },\varvec{\lambda })\) random vector \({\mathbf {Z}}\), with \(\varvec{\nu }\) being a scalar or vector parameter indexing the distribution of the mixing scale factor U. Given \(U=u\), \({\mathbf {Y}}\) follows a multivariate skew-normal distribution with location vector \(\varvec{\mu }\), scale matrix \(u^{-1}\varvec{\varSigma }\) and skewness parameter vector \(\varvec{\lambda }\). Thus, by (1), the marginal pdf of \({\mathbf {Y}}\) is

The notation \({\mathbf {Y}}\sim \text {SMSN}_p(\varvec{\mu },\varvec{\varSigma },\varvec{\lambda };H)\) will be used when \({\mathbf {Y}}\) has pdf (3).

The class of SMSN distributions includes the skew-t, skew-slash, and skew-contaminated normal, which will be briefly introduced subsequently. All these distributions have heavier tails than the skew-normal and can be used for robust inferences. When \(\varvec{\lambda }={\mathbf {0}}\), the SMSN distributions reduces to the SMN class, i.e., the class of scale-mixtures of the normal distribution, which is represented by the pdf \(f_0({\mathbf {y}})=\int ^{\infty }_0{\phi _p({\mathbf {y}};\varvec{\mu },u^{-1}\varvec{\varSigma }) }\mathrm{d}H(u;\varvec{\nu })\) and will be denoted by \({\text {SMN}}_p(\varvec{\mu },\varvec{\varSigma },H)\). We refer to Lachos et al. [13] for details and additional properties related to this class of distributions.

-

Multivariate skew-t distribution

The multivariate skew-t distribution with \(\nu\) degrees of freedom, denoted by \(\text {ST}_{{p}}(\varvec{\mu },\varvec{\varSigma },{\varvec{\lambda }};\nu )\), can be derived from the mixture model (3), by taking \(U\sim\) \(Gamma(\nu /2,\nu /2),\) \(\nu >0.\) The pdf of \({\mathbf {Y}}\) is

$$\begin{aligned} f({\mathbf {y}})=2t_p({\mathbf {y}};\varvec{\mu },\varvec{\varSigma },\nu )T\left( \sqrt{\frac{\nu +p}{\nu +d}}{\mathbf {A}};\nu +p\right) ,\,\,\,\,\,{\mathbf {y}}\in {\mathbb {R}}^p, \end{aligned}$$(4)where \(t_p(\cdot ;\varvec{\mu },\varvec{\varSigma },\nu )\) and \(T(\cdot ;\nu )\) denote, respectively, the pdf of the p-variate Student-t distribution, namely \(t_p(\varvec{\mu },\varvec{\varSigma },\nu )\), and the cdf of the standard univariate t-distribution, \({\mathbf {A}}=\varvec{\lambda }^{\top } \varvec{\varSigma }^{-1/2} ({\mathbf {y}}-\varvec{\mu })\) and \(d=({\mathbf {y}}-\varvec{\mu })^{\top }\varvec{\varSigma }^{-1}({\mathbf {y}}-\varvec{\mu })\) is the Mahalanobis distance.

-

Multivariate skew-slash distribution

Another SMSN distribution, termed as the multivariate skew-slash distribution and denoted by \(\text {SSL}_p(\varvec{\mu },\varvec{\varSigma },\varvec{\lambda };\nu )\), arises when the distribution of U is \(\mathrm{Beta}(\nu ,1)\), \(\nu >0\). Its pdf is given by

$$\begin{aligned} f({\mathbf {y}})=2\nu \int ^1_0u^{\nu -1}\phi _p({\mathbf {y}};\varvec{\mu },u^{-1}\varvec{\varSigma })\varPhi (u^{1/2} {\mathbf {A}})\mathrm{d}u,\,\,\,\,\,{\mathbf {y}}\in {\mathbb {R}}^p. \end{aligned}$$(5)The skew-slash distribution reduces to the skew-normal distribution as \(\nu \uparrow \infty\).

-

Multivariate skew-contaminated normal distribution

The multivariate skew-contaminated normal distribution, denoted by \(\mathrm{SCN}_p(\varvec{\mu },\varvec{\varSigma },{\varvec{\lambda }};\nu _1,\nu _2),\) arises when the mixing scale factor U is a discrete random variable taking one of two values. The pdf of U, given a parameter vector \(\varvec{\nu }=(\nu _1,\nu _2)^{\top }\), is

$$\begin{aligned} h(u;\varvec{\nu })=\nu _1 {\mathbb {I}}_{(u=\nu _2)}+(1-\nu _1) {\mathbb {I}}_{(u=1)},\,\,\,0<\nu _1<1,\,0<\nu _2< 1. \end{aligned}$$(6)It follows that

$$\begin{aligned} f({\mathbf {y}})& {}= 2\left\{ \nu _1\phi _p({\mathbf {y}};\varvec{\mu },\nu _2^{-1}{\varvec{\varSigma }}) \varPhi (\nu _2^{1/2}{\mathbf {A}})\right. \\&\left. \quad +\,(1-\nu _1) \phi _p({\mathbf {y}};\varvec{\mu },\varvec{\varSigma })\varPhi ({\mathbf {A}})\right\} . \end{aligned}$$

2.2 The SMSN-NLME Model

In this section, we present the general NLME model proposed in this work, in which the random terms are assumed to follow a SMSN distribution within the class defined in (2). The model, denoted by SMSN-NLME, can be defined as follows:

with the assumption that

where the subscript i is the subject index, \(\mathbf{Y }_i=(y_{i1},\ldots ,y_{in_i})^{\top }\) is an \(n_i\times 1\) vector of observed continuous responses for subject i, \(\eta\) represents a nonlinear vector-valued differentiable function of the individual mixed effects parameters \(\varvec{\phi }_i\), \({\mathbf {X}}_i\) is an \(n_i\times q\) matrix of covariates, \(\varvec{\beta }\) is a \(p\times 1\) vector of fixed effects, \({\mathbf {b}}_i\) is a q-dimensional random effects vector associated with the ith subject, \(\mathbf{A }_i\) is a \(q\times p\) design matrix that possibly depends on elements of \({\mathbf {X}}_i\), \(\varvec{\epsilon }_i\) is the \(n_i\times 1\) vector of random errors, \(c=c(\varvec{\nu })=-\sqrt{\frac{2}{\pi }} k_1\), with \(k_{1} = E\{U^{-1/2}\}\), and \(\varvec{\varDelta }= \mathbf{D }^{1/2} \varvec{\delta }\), with \(\varvec{\delta }={\varvec{\lambda }}/{\sqrt{1+\varvec{\lambda }^{\top }\varvec{\lambda }}}\). The dispersion matrix \(\mathbf{D }=\mathbf{D }(\varvec{\alpha })\) depends on unknown and reduced parameter vector \(\varvec{\alpha }\). Finally, as was indicated in the previous section, \(H=H(\cdot |\varvec{\nu })\) is the cdf-generator that determines the specific \(\text {SMSN}\) model that is considered.

Remark

-

1.

The model defined in (7) can be viewed as a slight modification of the general NLME model proposed by Pinheiro and Bates [22, 23], with the restriction that our new model does not allow to incorporate, for instance, “time-varing” covariates in the random effects. This assumption is made for simplicity of theoretical derivations. However, the methodology proposed here can be extended without any difficulty.

-

2.

An attractive and convenient way to specify (8) is the following:

$$\begin{aligned} \mathbf{b }_i|U_i=u_i\buildrel \mathrm{ind.}\over \sim \text {SN}_q(c\varvec{\varDelta },u_i^{-1}{\mathbf {D}},\varvec{\lambda }),\;\varvec{\epsilon }_i|U_i=u_i \buildrel \mathrm{ind.}\over \sim \text {N}_{n_i}({\mathbf {0}},\sigma ^2_e u_i^{-1}{\mathbf {I}}_{n_i}), \end{aligned}$$(9)which are independent, where \(U_i {\mathop {\sim }\limits ^{\mathrm{iid.}}}H\). Since for each \(i=1,\ldots ,n,\) \(\mathbf{b }_i\) and \(\varvec{\epsilon }_i\) are indexed by the same scale mixing factor \(U_i,\) they are not independent in general. Independence corresponds to the case when \(U_i=1\) \((i=1,\ldots ,n),\) so that the SMSN-NLME model reduces to the SMN-NLME model as defined in Lachos et al. [12]. However, conditional on \(U_i,\) \(\mathbf{b }_i\) and \(\varvec{\epsilon }_i\) are independent for each \(i=1,\ldots ,n,\) which implies that \({\mathbf {b}}_i\) and \(\varvec{\epsilon }_i\) are uncorrelated, since \(Cov({\mathbf {b}}_i,\varvec{\epsilon }_i)=\text {E}\{{\mathbf {b}}_i\varvec{\epsilon }^{\top }_i\} =\text {E}\{\text {E}\{{\mathbf {b}}_i\varvec{\epsilon }^{\top }_i|U_i\}\}={\mathbf {0}}\). Thus, it follows from (8)–(9) that marginally

$$\begin{aligned} {\mathbf {b}}_i {\mathop {\sim }\limits ^{\mathrm{iid.}}}\text {SMSN}_q(c\varvec{\varDelta },{\mathbf {D}},\varvec{\lambda }; H)\quad \text {and}\quad \varvec{\epsilon }_i\buildrel \mathrm{ind.}\over \sim \text {SMN}_{n_i}({\mathbf {0}},\sigma ^2_e {\mathbf {I}}_{n_i}; H),\quad i=1,\ldots ,n. \end{aligned}$$(10)Moreover, as long as \(k_{1}<\infty\) the chosen location parameter ensures that \(E\{{\mathbf {b}}_i\}=E\{\varvec{\epsilon }_i\}={\mathbf {0}}\). Thus, this model considers that the within-subject random errors are symmetrically distributed, while the distribution of random effects is assumed to be asymmetric and to have mean zero.

-

3.

Our model can be seen as an extension of the elliptical NLME model proposed by Russo et al. [28], where the nonlinearity is incorporated only in the fixed effects. If \(\eta (\cdot )\) is a linear function of the individual mixed effects parameters \(\varvec{\phi }_i\), then the SMSN-NLME model reduces to a slight modification of the SNI-LME model proposed by [13]. However, since in this work we consider a mean-zero SMSN distribution for the random effects, the result given in Lachos et al. [13] cannot be directly applied. One the other hand, this choice of location parameter is important, since \(E\{{\mathbf {b}}_i\}\ne 0\) might lead to biased estimates of the fixed effects [30, 31].

-

4.

The SMSN-NLME model defined in (7)–(8) can be formulated with a hierarchical representation, as follows:

$$\begin{aligned} \mathbf{Y }_i|{\mathbf {b}}_i,U_i=u_i{\mathop {\sim }\limits ^{\mathrm{ind.}}}N_{n_i}(\eta ({\mathbf {A}}_i\varvec{\beta }+{\mathbf {b}}_i,{\mathbf {X}}_i),u_i^{-1}\sigma _e^2{\mathbf {I}}_{n_i}), \end{aligned}$$(11)$$\begin{aligned} {\mathbf {b}}_i|U_i=u_i{\mathop {\sim }\limits ^{\mathrm{ind.}}}{{\rm SN}}_{q}(c\varvec{\varDelta }, u_i^{-1}{\mathbf {D}},\varvec{\lambda }), \end{aligned}$$(12)$$\begin{aligned} U_i{\mathop {\sim }\limits ^{\mathrm{iid.}}}H(\cdot ;\varvec{\nu }). \end{aligned}$$(13)

Let \(\varvec{\theta }=(\varvec{\beta }^{\top },\sigma _e^2,\varvec{\alpha }^{\top },\varvec{\lambda }^{\top },\varvec{\nu }^\top )^{\top }\), then classical inference on the parameter vector \(\varvec{\theta }\) is based on the marginal distribution of \({\mathbf {Y}}=({\mathbf {Y}}^{\top }_1,\ldots ,{\mathbf {Y}}^{\top }_n)^\top\) [22]. Thus, from the hierarchical representation in (11)–(13), the integrated likelihood for \(\varvec{\theta }\) based on the observed sample \({\mathbf {y}}= ({\mathbf {y}}^{\top }_1,\ldots ,{\mathbf {y}}^{\top }_n)^\top\) in this case is given by

which generally does not have a closed form expression because the model function is nonlinear in the random effect. In the normal case, in order to make the numerical optimization of the likelihood function a tractable problem, different approximations to (14) have been proposed, usually based on first-order Taylor series expansion of the model function around the conditional mode of the random effects [15]. Following this idea, we describe next two important results based on Taylor series approximation method for approximating the likelihood function of a SMSN-NLME model. The first uses a point in a neighborhood of \({\mathbf {b}}_i\) as the expansion point. The second uses simultaneously a neighborhood of \({\mathbf {b}}\) and \(\varvec{\beta }\) as expansions points, with the advantage that this approximation is completely linear (in \(\varvec{\beta }\) and \({\mathbf {b}}\)). These approximations can be considered as extensions of the result given in [14, 15, 18, 22].

Theorem 1

Let \(\widetilde{{\mathbf {b}}}_i\) be an expansion point in a neighborhood of \({\mathbf {b}}_i\), for \(i=1,\ldots ,n\). Then, under the SMSN-NLME model as given in (7)–(8), the marginal distribution of \({\mathbf {Y}}_i\) can be approximated as follows:

where \(\widetilde{\varvec{\varPsi }}_i=\widetilde{{\mathbf {H}}}_i {\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{\top }+\sigma ^2_e {\mathbf {I}}_{n_i}\), \(\widetilde{{\mathbf {H}}}_i=\displaystyle \frac{\partial \eta ({\mathbf {A}}_i{\varvec{\beta }}+{{\mathbf {b}}}_i,{\mathbf {X}}_i)}{\partial {{\mathbf {b}}}^{\top }_i}|_{{\mathbf {b}}_i=\widetilde{{\mathbf {b}}}_i},\) \(\widetilde{\bar{\varvec{\lambda }}}_{i}= \displaystyle \frac{\widetilde{\varvec{\varPsi }}_i^{-1/2}\widetilde{\mathbf {H}}_i{\mathbf {D}}{\varvec{\zeta }}}{\sqrt{1+\varvec{\zeta }^{\top }\widetilde{\varvec{\varLambda }}_i\varvec{\zeta }}},\) with \(\varvec{\zeta }={\mathbf {D}}^{-1/2}\varvec{\lambda }, \widetilde{\varvec{\varLambda }}_i=\left( {\mathbf {D}}^{-1}+\sigma _e^{-2}\widetilde{\mathbf {H}}_i^{\top } \widetilde{\mathbf {H}}_i\right) ^{-1}\), and “\({\mathop {\sim }\limits ^{\mathrm{.}}}\)” denotes approximated in distribution.

Proof

For simplicity we omit the sub-index i. Thus, for \(\varvec{\beta }\) fixed and based on first-order Taylor expansion of the function \(\eta\) around \(\widetilde{{\mathbf {b}}}\), we have from (7) that

and the approximate conditional distribution of \({\mathbf {Y}}\) is

or equivalently

The rest of the proof follows by noting that

which can be easily solved by using successively Lemmas 1 and 2 given in [1]. \(\square\)

Theorem 2

Let \(\widetilde{{\mathbf {b}}}_i\) and \(\widetilde{\varvec{\beta }}\) be expansion points in a neighborhood of \({\mathbf {b}}_i\) and \(\varvec{\beta }\), respectively, for \(i=1,\ldots ,n,\). Then, under the SMSN-NLME model as given in (7)–(8), the marginal distribution of \({\mathbf {Y}}_i,\) can be approximated as

where \(\widetilde{\eta }(\widetilde{\varvec{\beta }},\widetilde{{\mathbf {b}}}_i)=\eta ({\mathbf {A}}_i\widetilde{\varvec{\beta }}+\widetilde{{\mathbf {b}}}_i,{\mathbf {X}}_i)-\widetilde{{\mathbf {H}}}_i\widetilde{{\mathbf {b}}}_i-\widetilde{{\mathbf {W}}}_i\widetilde{\varvec{\beta }}\), \(\widetilde{\varvec{\varPsi }}_i=\widetilde{{\mathbf {H}}}_i {\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{\top }+\sigma ^2_e {\mathbf {I}}_{n_i},\) \(\widetilde{\bar{\varvec{\lambda }}}_{i}=\displaystyle \frac{\widetilde{\varvec{\varPsi }}_i^{-1/2}\widetilde{\mathbf {H}}_i{\mathbf {D}}\varvec{\zeta }}{\sqrt{1+\varvec{\zeta }^{\top }\widetilde{\varvec{\varLambda }}_i\varvec{\zeta }}},\) \(\widetilde{{\mathbf {H}}}_i=\displaystyle \frac{\partial \eta ({\mathbf {A}}_i{\widetilde{\varvec{\beta }}}+{{\mathbf {b}}}_i,{\mathbf {X}}_i)}{\partial {{\mathbf {b}}}^{\top }_i}|_{{\mathbf {b}}_i=\widetilde{{\mathbf {b}}}_i},\) \(\widetilde{{\mathbf {W}}}_i=\displaystyle \frac{\partial \eta ({\mathbf {A}}_i{{\varvec{\beta }}}+\widetilde{{\mathbf {b}}}_i,{\mathbf {X}}_i)}{\partial {\varvec{\beta }}^{\top }}|_{\varvec{\beta }=\widetilde{\varvec{\beta }}},\) with \(\varvec{\zeta }={\mathbf {D}}^{-1/2}\varvec{\lambda }, \widetilde{\varvec{\varLambda }}_i=\left( {\mathbf {D}}^{-1}+\sigma _e^{-2}\widetilde{\mathbf {H}}_i^{\top } \widetilde{\mathbf {H}}_i\right) ^{-1}.\)

Proof

As in Theorem 1, and based on first-order Taylor expansion of the function \(\eta\) around \(\widetilde{{\mathbf {b}}}\) and \(\widetilde{\varvec{\beta }}\), we have that

Hence,

and the proof follows by integrating out \(({\mathbf {b}},u).\) \(\square\)

The estimates obtained by maximizing the approximate log-likelihood function \(\ell (\varvec{\theta },\widetilde{{\mathbf {b}}})=\sum ^n_{i=1}\log {f({\mathbf {y}}_i;\varvec{\theta },\widetilde{{\mathbf {b}}}_i)}\) (or \(\ell (\varvec{\theta },\widetilde{{\mathbf {b}}},\widetilde{\varvec{\beta }})=\sum ^n_{i=1}\log {f({\mathbf {y}}_i;\varvec{\theta },\widetilde{{\mathbf {b}}}_i,\widetilde{\varvec{\beta }})}\)) are thus approximate maximum likelihood estimates (MLEs), which can be computed directly through optimization procedures, such as fmincon() and optim() in Matlab and R, respectively. However, since numerical procedures for direct maximization of the approximate log-likelihood function often present numerical instability and may not converge unless good starting values are used, in this paper we use the EM algorithm [6] for obtaining approximate ML estimates via two modifications: the ECM algorithm [19] and the ECME algorithm [17].

Before discussing the EM implementation to obtain ML estimates of a SMSN-NLME model, we present the empirical Bayesian estimate of the random effects \(\widetilde{{\mathbf {b}}}^{(k)}\), which will be used in the estimation procedure and is given in the following result. The notation used is that of Theorem 2 and the conditional expectations \(\widetilde{\tau }_{-1i}\) can be easily derived from the result of Section 2 in Lachos et al. [13].

Theorem 3

Let \(\widetilde{{\mathbf {Y}}}_i={\mathbf {Y}}_i-\widetilde{\eta }(\widetilde{\varvec{\beta }},\widetilde{{\mathbf {b}}}_i)\), for \(i=1,\ldots ,n\). Then the approximated minimum mean-squared error (MSE) estimator (or empirical Bayes estimator) of \({\mathbf {b}}_i\) obtained by the conditional mean of \({\mathbf {b}}_i\) given \(\widetilde{{\mathbf {Y}}}_i=\widetilde{{\mathbf {y}}}_i\) is

where \(\widetilde{\varvec{\mu }}_{bi}=c\varvec{\varDelta }+{\mathbf {D}}\widetilde{{\mathbf {H}}}^{\top }_i\widetilde{\varvec{\varPsi }}^{-1/2}_i\widetilde{{\mathbf {y}}}_{0i}\) and \(\widetilde{\tau }_{-1i}=\text {E}\left\{ U^{-1/2}W_{\varPhi }(U^{1/2}\widetilde{{\mathbf {A}}}_i)|\widetilde{{\mathbf {y}}}\right\}\), with \(W_{\varPhi }(x)=\phi _1(x)/\varPhi (x),\,x\in {\mathbb {R}}\), \(\widetilde{{\mathbf {y}}}_{0i}=\widetilde{\varvec{\varPsi }}^{-1/2}_i(\widetilde{{\mathbf {y}}}_i-\widetilde{{\mathbf {W}}}_i\varvec{\beta }-c\widetilde{{\mathbf {H}}}_i\varvec{\varDelta })\) and \(\widetilde{{\mathbf {A}}}_i=\widetilde{\bar{\varvec{\lambda }}}^{\top }_{i}\widetilde{{\mathbf {y}}}_{0i}.\)

Proof

From (17), it can be shown that the conditional distribution of the \({\mathbf {b}}_i\) given \((\widetilde{{\mathbf {Y}}}_i,U_i)=(\widetilde{{\mathbf {y}}}_i, u_i)\) belongs to the extended skew-normal (EST) family of distributions [2], and its pdf is

Thus, from Lemma 2 in Lachos et al. [13], we have that

and the MSE estimator of \({\mathbf {b}}_i\), given by \(\text {E}\{{\mathbf {b}}_i|\widetilde{{\mathbf {y}}}_i,\varvec{\theta }\}\), follows by the law of iterative expectations. \(\square\)

3 Approximates ML Estimates Via the EM Algorithm

Let the current estimate of \((\varvec{\beta },{\mathbf {b}}_i)\) be denote by \((\widetilde{\varvec{\beta }},\widetilde{{\mathbf {b}}}_i)\) and for simplicity hereafter we omit the symbol “\(\sim\)” in \({\mathbf {H}}_i\) and \({\mathbf {W}}_i\). As in Theorem 2, the linearization procedure adopted in this section consists of taking the first-order Taylor expansion of the nonlinear function around the current parameter estimate \(\widetilde{\varvec{\beta }}\) and random effect estimate \(\widetilde{{\mathbf {b}}}_i\) at each iteration [33, 34], which is equivalent to iteratively solving the LME model

where \(\widetilde{{\mathbf {Y}}}_i={\mathbf {Y}}_i-\widetilde{\eta }(\widetilde{\varvec{\beta }},\widetilde{{\mathbf {b}}}_i),\) \({\mathbf {b}}_i\buildrel {{\rm ind}}\over \sim \text {SMSN}_q(c\varvec{\varDelta },{\mathbf {D}},\varvec{\lambda }, H)\) and \(\varvec{\epsilon }_i\buildrel {\mathrm{ind.}}\over \sim\) \(\text {SMN}_{n_i}({\mathbf {0}},\sigma ^2_e {\mathbf {I}}_{n_i} , H)\). A key feature of this model is that it can be formulated in a flexible hierarchical representation that is useful for analytical derivations. The model described in (19) can be written as follows:

for \(i=1,\ldots ,n,\) where \(\varvec{\varDelta }=\mathbf{D }^{1/2}\varvec{\delta }\), \(\varvec{\varGamma }=\mathbf{D }-\varvec{\varDelta }\varvec{\varDelta }^{\top }\) with \(\varvec{\delta }=\varvec{\lambda }/(1+\varvec{\lambda }^{\top }\varvec{\lambda })^{1/2}\) and \({\mathbf {D}}^{1/2}\) being the square root of \({\mathbf {D}}\) containing \(q(q+1)/2\) distinct elements. \(TN(\mu ,\tau ; (a,b))\) denotes the univariate normal distribution \((N(\mu ,\tau ))\) truncated on the interval (a, b).

Let \(\widetilde{\mathbf {y}}_c=(\widetilde{{\mathbf {y}}}^{\top },{\mathbf {b}}^{\top },{\mathbf {u}}^{\top },{\mathbf {t}}^{\top })^{\top }\), with \(\widetilde{{\mathbf {y}}}=(\widetilde{{\mathbf {y}}}^{\top }_1,\ldots ,\widetilde{{\mathbf {y}}}^{\top }_n)^{\top }\), \({\mathbf {b}}=({\mathbf {b}}^{\top }_1,\ldots ,{\mathbf {b}}^{\top }_n)^{\top }\), \({\mathbf {u}}=(u_1,\ldots ,u_n)^{\top }\), \({\mathbf {t}}=(t_1,\ldots ,t_n)^{\top }\). It follows from (20) that the complete-data log-likelihood function is of the form

where C is a constant that is independent of the parameter vector \(\varvec{\theta }\) and \(K(\varvec{\nu })\) is a function that depends on \(\varvec{\theta }\) only through \(\varvec{\nu }\). Now, from (20) and by using successively Lemma 2 in [1] (see also Lachos et al. [13]), it is straightforward to show that

where \({M}_{i}=[1+{\varvec{\varDelta }}^{\top }{\mathbf {H}}_i^{\top }{\varvec{\varOmega }}_i^{-1}{\mathbf {H}}_i{\varvec{\varDelta }}]^{-1/2}\), \({\mu }_{i}={M}_{i}^2{\varvec{\varDelta }}^{\top }{\mathbf {H}}_i^{\top }{\varvec{\varOmega }}_i^{-1}(\widetilde{{\mathbf {y}}}_i-{\mathbf {W}}_i{\varvec{\beta }}-c\,{\mathbf {H}}_i{\varvec{\varDelta }})\), \({{\mathbf {B}}}_{i}=[{\varvec{\varGamma }}^{-1}+\sigma _e^{-2}\displaystyle {\mathbf {H}}_i^{\top }{\mathbf {H}}_i]^{-1}\), \({{\mathbf {s}}}_i=({\mathbf {I}}_q-\sigma _e^{-2}{{\mathbf {B}}}_{i}{\mathbf {H}}_i^{\top }{\mathbf {H}}_i){\varvec{\varDelta }}\), \({\varvec{\varOmega }}_i=\sigma _e^2{\mathbf {I}}_{n_i}+{\mathbf {H}}_i{\varvec{\varGamma }}{\mathbf {H}}^{\top }_i\), and \({\mathbf {r}}_i=\displaystyle \sigma ^{-2}_e{\mathbf {B}}_{i}{\mathbf {H}}_i^\top (\widetilde{{\mathbf {y}}}_i-{\mathbf {W}}_i\varvec{\beta })\), for \(i=1,\ldots ,n\).

For the current value \(\varvec{\theta }= \widehat{\varvec{\theta }}^{(k)}\), after some algebra the E-step of the EM algorithm can be written as

where \(\varvec{\theta }_1=(\varvec{\beta }^\top ,\sigma ^2_e)^\top\), \(\varvec{\theta }_2=(\varvec{\alpha }^{\top },\varvec{\lambda }^{\top })^{\top }\),

with \(\text {tr}\{{\mathbf {A}}\}\) and \(|{\mathbf {A}}|\) indicating the trace and determinant of matrix \({\mathbf {A}}\), respectively. The calculation of these functions require expressions for \(\widehat{u}^{(k)}_{i}=\text {E}\{U_i|\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}\), \(\widehat{(u{\mathbf {b}})}^{(k)}_i=\text {E}\{U_i{\mathbf {b}}_i|\widehat{\varvec{\theta }}^{(k)},\widehat{{\mathbf {y}}}_i\}\), \(\widehat{(u{\mathbf {b}}{\mathbf {b}}^\top )}^{(k)}_i =\text {E}\{U_i{\mathbf {b}}_i{\mathbf {b}}^{\top }_i|\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}\), \(\widehat{(ut)}^{(k)}_i=\text {E}\{U_iT_i|\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}\), \(\widehat{(ut_2)}^{(k)}_i=\text {E}\{UT^2_i|\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}\) and \(\widehat{(ut{\mathbf {b}})}^{(k)}_i=\text {E}\{U_iT_i {\mathbf {b}}_i|\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}.\) From (21), these can be readily evaluated as

where \(\widehat{c} = c(\widehat{\varvec{\nu }})\), and the expressions for \(\widehat{ u}^{(k)}_i\) and \(\widehat{\tau }_{1i}=\text {E}\{U_i^{1/2}W_{\varPhi }({U_i^{1/2}\widehat{\mu }^{(k)}_{i}}/{\widehat{M}^{(k)}_{i}})| \widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {y}}}_i\}\) can be found in Section 2 from Lachos et al. [13], which can be easily implemented for the skew-t and skew-contaminated normal distributions, but involve numerical integration for the skew-slash case.

The CM-step then conditionally maximize \(Q\left( \varvec{\theta }\mid \widehat{\varvec{\theta }}^{(k)}\right)\) with respect to \(\varvec{\theta }\), obtaining a new estimate \(\widehat{\varvec{\theta }}^{(k+1)}\), as follows:

CM-step 1 Fix \(\widehat{\sigma }_e^{2(k)}\) and update \(\widehat{\varvec{\beta }}^{(k)}\) as

CM-step 2 Fix \(\widehat{\varvec{\beta }}^{(k+1)}\) and update \(\widehat{\sigma }_e^{2(k)}\) as

where \(N = \sum ^n_{i=1}n_i\).

CM-step 3 Update \(\widehat{\varvec{\varDelta }}^{(k)}\) as \(\widehat{\varvec{\varDelta }}^{(k+1)}=\displaystyle \frac{\sum ^n_{i=1}\widehat{(ut{\mathbf {b}})}^{(k)}_i}{\sum ^n_{i=1}\widehat{(ut_2)}^{(k)}_i}.\)

CM-step 4 Fix \(\widehat{\varvec{\varDelta }}^{(k+1)}\) and update \(\widehat{\varvec{\varGamma }}^{(k)}\) as

CM-step 5 (for ECME) Update \(\widehat{\varvec{\nu }}^{(k)}\) by optimizing the constrained approximate log-likelihood function (obtained from Theorem 2):

where \(\varvec{\theta }^{*} = \varvec{\theta }{\setminus } \varvec{\nu }\).

It is worth noting that the proposed algorithm is computationally simple to implement and it guarantees definite positive scale matrix estimate, once at the kth iteration \(\widehat{{\mathbf {D}}}^{(k)}=\widehat{\varvec{\varGamma }}^{(k)}+\widehat{\varvec{\varDelta }}^{(k)}\widehat{\varvec{\varDelta }}^{\top {(k)}}\) and \(\widehat{\varvec{\lambda }}^{(k)}=\displaystyle \widehat{{\mathbf {D}}}^{-1/2(k)}\widehat{\varvec{\varDelta }}^{(k)}/ (1-\widehat{\varvec{\varDelta }}^{\top {(k)}}\widehat{{\mathbf {D}}}^{-1(k)}\widehat{\varvec{\varDelta }}^{(k)})^{1/2}\). The iterations are repeated until a suitable convergence rule is satisfied, e.g., if \(||\widehat{\varvec{\theta }}^{(k+1)}/\widehat{\varvec{\theta }}^{(k)}-1||\) is sufficiently small, or until some distance involving two successive evaluations of the approximate log-likelihood (derived from Theorem 1), like \(|\ell (\widehat{\varvec{\theta }}^{(k+1)},\widetilde{{\mathbf {b}}}^{(k+1)})/\ell (\widehat{\varvec{\theta }}^{(k)},\widetilde{{\mathbf {b}}}^{(k)})-1|\), is small enough. Furthermore, \(\widetilde{{\mathbf {y}}}_i\), \({\mathbf {W}}_i\) and \({\mathbf {H}}_i\), for \(i=1,\ldots ,n\), are updated in each step of the EM-type algorithm, with \(\widetilde{{\mathbf {b}}}_i\) being computed at each iteration using (18).

In addition, standard errors for \(\widehat{\varvec{\theta }}^{*}\) are estimated using the inverse of the observed information matrix obtained from the score vector following the results in [31] (see also [30]) and considering the linear approximation from Theorem 2.

3.1 Starting Values

It is well known that maximum likelihood estimation in nonlinear mixed models may face some computational hurdles, in the sense that the method may not give maximum global solutions if the starting values are far from the real parameter values. Thus, the choice of starting values for an EM-type algorithm in the nonlinear context plays a big role in parameter estimation. In this work we consider the following procedure for obtaining initial values for a SN-NLME model:

-

Compute \(\widehat{\varvec{\beta }}^{(0)}\) and \(\widehat{\sigma }^{2(0)}_e\) and \(\widetilde{{\mathbf {b}}}^{(0)}\) using the classical N-NLME model through the library nlme() in R software, for instance.

-

The initial value for the skewness parameter \(\varvec{\lambda }\) is obtained in the following way: Let \(\hat{\rho }_{l}\) be the sample skewness coefficient of the lth column of \(\widehat{{\mathbf {b}}}^{(0)}\), obtained under normality. Then, we let \(\widehat{\lambda }^{(0)}_{l}=3 \times \text {sign}(\hat{\rho }_{l})\), \(l=1,\ldots ,q\).

Moreover, for ST-NLME, SCN-NLME or the SSL-NLME model we adopt the following strategy:

-

Obtain initial values via method described above for the SN-NLME model;

-

Perform MLEs of the parameters of the SN-NLME via EM algorithm;

-

Use the EM estimates from the SN-NLME model as initial values for the corresponding ST-NLME, SSL-NLME and SCN-NLME models.

-

The initial values for \({\varvec{\nu }}\) are considered as follows: 10 for the ST distribution, 5 for the SSL distribution, and (0.05, 0.8) for the SCN distribution.

Even though these procedures look reasonable for computing the starting values, the tradition in practice is to try several initial values for the EM algorithm, in order to get the highest likelihood value. It is important to note that the highest maximized likelihood is an essential information for some model selection criteria, such as Akaike information criterion \((\mathrm{AIC}, -2\ell (\widehat{\varvec{\theta }},\widetilde{{\mathbf {b}}}^{L})+2\aleph )\), where \(\aleph\) is the number of free parameters, which can be used in practice to select between various SMSN-NLME models. In this work we use the result from Theorem 1 to calculate AIC values.

3.2 Futures Observations

Suppose now that we are interested in the prediction of \({\mathbf {Y}}_i^+\), a \(\upsilon \times 1\) vector of future measurements of \({\mathbf {Y}}_i\), given the observed measurement \({\mathbf {Y}}=({\mathbf {Y}}^{\top }_{(i)},{\mathbf {Y}}^{\top }_i)^{\top }\), where \({\mathbf {Y}}_{(i)}=({\mathbf {Y}}^{\top }_1,\ldots ,{\mathbf {Y}}^{\top }_{i-1}, {\mathbf {Y}}^{\top }_{i+1},\ldots ,{\mathbf {Y}}^{\top }_{n})^\top\). The minimum MSE predictor of \({\mathbf {Y}}^+_i\), which is the conditional expectation \({\mathbf {Y}}_i^{+}\) given \({\mathbf {Y}}_i\) and \(\varvec{\theta }\), is given in the following Theorem. The notation used is the one from Theorem 1.

Theorem 4

Let \(\widetilde{{\mathbf {b}}}_i\) be an expansion point in a neighborhood of \({\mathbf {b}}_i\), \({\mathbf {Y}}_i^+\) be an \(\upsilon \times 1\) vector of future measurement of \({\mathbf {Y}}_i\) (or possibly missing) and \({\mathbf {X}}^+_i\) be an \(\upsilon \times r\) matrix of known prediction regression variables. Then, under the SMSN-NLME model as (7)–(8), the predictor (or minimum MSE predictor) of \({\mathbf {Y}}_i^+\) can be approximated as

where

\(\widetilde{\varvec{\varPsi }}_{i22.1}=\widetilde{\varvec{\varPsi }}^*_{i22}-\widetilde{\varvec{\varPsi }}^*_{i21}\widetilde{\varvec{\varPsi }}^{*-1}_{i11}\widetilde{\varvec{\varPsi }}^*_{i12},\) \(\widetilde{\varvec{\varPsi }}^{*}_{i11}=\widetilde{\varvec{\varPsi }}_i=\widetilde{{\mathbf {H}}}_i {\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{\top }+\sigma ^2_e {\mathbf {I}}_{n_i}\), \(\widetilde{\varvec{\varPsi }}^*_{i12}=\widetilde{\varvec{\varPsi }}^{*\top }_{i21}=\widetilde{{\mathbf {H}}}_i {\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{+\top }\), \(\widetilde{\varvec{\varPsi }}^{*}_{i22}=\widetilde{{\mathbf {H}}}^+_i {\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{+\top }+\sigma ^2_e {\mathbf {I}}_{\upsilon }\), \(\widetilde{\varvec{\varPsi }}^{*-1/2}_i\widetilde{\bar{\varvec{\lambda }}}_{i}^*=(\varvec{\upsilon }^{(1)\top }_i,\varvec{\upsilon }^{(2)\top }_i)^{\top }\), and

with \(\widetilde{\varvec{\varPsi }}^{*}_i = \left( \begin{array}{cc} \widetilde{\varvec{\varPsi }}^{*}_{i11} &{} \widetilde{\varvec{\varPsi }}^{*}_{i12} \\ \widetilde{\varvec{\varPsi }}^{*}_{i21}&{}\widetilde{\varvec{\varPsi }}^{*}_{i22} \end{array}\right) = \sigma _e^2{\mathbf {I}}_{n_i+\upsilon }+\widetilde{{\mathbf {H}}}^*_i{\mathbf {D}}\widetilde{{\mathbf {H}}}_i^{*\top }\), \(\widetilde{\bar{\varvec{\lambda }}}^{*}_{i}= \displaystyle \frac{\widetilde{\varvec{\varPsi }}_i^{*-1/2}\widetilde{{\mathbf {H}}}^*_i{\mathbf {D}}\varvec{\zeta }}{\sqrt{1+\varvec{\zeta }^{\top }\widetilde{\varvec{\varLambda }}^*_i\varvec{\zeta }}}\), \(\widetilde{\varvec{\varLambda }}^*_i=({\mathbf {D}}^{-1}+\sigma _e^{-2}\widetilde{{\mathbf {H}}}^{*\top }_i \widetilde{{\mathbf {H}}}^*_i)^{-1}\), \(\widetilde{{\mathbf {H}}}^*_i=(\widetilde{{\mathbf {H}}}^{\top }_i,\widetilde{{\mathbf {H}}}^{+\top }_i)^{\top }\), \(\widetilde{{\mathbf {H}}}^+_i=\displaystyle \frac{\partial \eta ({\mathbf {A}}_i{{\varvec{\beta }}}+{{\mathbf {b}}}_i,{\mathbf {X}}^+_i)}{\partial {{\mathbf {b}}}^{\top }_i}|_{{\mathbf {b}}_i=\widetilde{{\mathbf {b}}}_i},\) and \(\widetilde{\varvec{\upsilon }}_i=\displaystyle \frac{{\varvec{\upsilon }}^{(1)}_i+\widetilde{\varvec{\varPsi }}^{*-1}_{i11}\widetilde{\varvec{\varPsi }}^*_{i12}{\varvec{\upsilon }}^{(2)}_i}{\sqrt{1+{\varvec{\upsilon }}^{(2)\top }_i\widetilde{\varvec{\varPsi }}^{*}_{i22.1}{\varvec{\upsilon }}^{(2)}_i}}.\)

Proof

Under the notation and result given in Theorem 1, we have that

where \({\eta }(\widetilde{{\mathbf {b}}}_i,{\mathbf {X}}^*_i)=\left( {\eta }^{\top }({\mathbf {A}}_i{\varvec{\beta }}+\widetilde{{\mathbf {b}}}_i,{{\mathbf {X}}}_i),{\eta }^{\top }({\mathbf {A}}_i{\varvec{\beta }}+\widetilde{{\mathbf {b}}}_i,{{\mathbf {X}}}^+_i)\right) ^{\top }\), \({{\mathbf {X}}}^*_i=({{\mathbf {X}}}^{\top }_i,{{\mathbf {X}}}^{+\top }_i)^{\top }\). The rest of the proof follows by noting that \({\mathbf {Y}}_i^*|u_i\sim S\mathrm{N}_{n_i+\upsilon }({\eta }\left( \widetilde{{\mathbf {b}}}_i,{\mathbf {X}}^*_i)-\widetilde{{\mathbf {H}}}^*_i({\widetilde{{\mathbf {b}}}}_i-c\varvec{\varDelta }),u_i^{-1}\widetilde{\varvec{\varPsi }}^*_i,\widetilde{\bar{\varvec{\lambda }}}^*\right)\) and applying the law of iterative expectations. \(\square\)

It can be shown that marginally \({\mathbf {Y}}_i{\mathop {\sim }\limits ^{\mathrm{.}}}\mathrm{SMSN}\left( {\eta }({\mathbf {A}}_i{\varvec{\beta }}+\widetilde{{\mathbf {b}}}_i,{{\mathbf {X}}}_i)-\widetilde{{\mathbf {H}}}_i({\widetilde{{\mathbf {b}}}}_i-c\varvec{\varDelta }),\widetilde{\varvec{\varPsi }}_i,\widetilde{\varvec{\varPsi }}^{-1/2}_i\widetilde{\varvec{\upsilon }}_i;H\right)\), and thence the conditional expectations \(\tau _{-1i}\) can be easily derived from the result of Section 2 from Lachos et al. [13]. In practice, the prediction of \({\mathbf {Y}}^+_i\) can be obtained by substituting the ML estimate \(\widehat{\varvec{\theta }}\) and \(\widetilde{{\mathbf {b}}}^{L}_i\) into (24), that is \(\widehat{{\mathbf {Y}}}^+_i=\widehat{{\mathbf {Y}}}^+_i(\widehat{\varvec{\theta }}, \widetilde{{\mathbf {b}}}_i^{L})\), where \(\widetilde{{\mathbf {b}}}_i^{L}\) is the random effect estimate in the last iteration of the EM algorithm.

4 Simulation Studies

In order to examine the performance of the proposed method, in this section we present the results of some simulation studies. For simplicity, in the simulation studies we fix \(\varvec{\nu }\) at its true value. The first simulation study shows that the proposed approximate ML estimates based on the EM algorithm provide good asymptotic properties. The second study investigates the consequences in population inferences of an inappropriate normality assumption, and additionally it evaluates the efficacy of the measurement used for model selection (AIC) when the result given in Theorem 1 is used.

4.1 First Study

To evaluate the asymptotic behaviour of the proposed estimation method, we performed a simulation study considering the following nonlinear growth-curve logistic model [22]:

where \(t_{j}=100, 267, 433, 600, 767, 933, 1100,1267,1433,1600\). The random effects \(b_{i}\) and the error \(\varvec{\epsilon }_i=(\epsilon _{i1}\ldots ,\epsilon _{i10})^{\top }\) are non-correlated with

We set \(\varvec{\beta }=(\beta _1,\beta _2,\beta _3)^{\top }=(200,700,350)^{\top }\), \(\sigma _e^2=25\), \(\sigma _b^2=100\), \(\lambda =4\), implying in \(\varvec{\varDelta }= 40/\sqrt{17} = 9.7014\), and \(c=-\sqrt{{2}/{\pi }}\, k_1\), where \(k_1\) depends on the specific SMSN distribution considered. Additionally, the samples sizes are fixed at \(n=25,\,50,\,100,\,200,\, 300\) and 500. For each sample size, 500 Monte Carlo samples from the SMSN-NLME model in (26) are generated under four scenarios: under the skew-normal model (SN-NLME), under the skew-t with \(\nu =4\) (ST-NLME), under the skew-slash with \(\nu =2\) (SSL-NLME), and under the skew-contaminated normal model with \(\varvec{\nu }=(0.3,\,0.3)\) (SCN-NLME). The values of \(\varvec{\nu }\) were chosen in order to yield a highly skewed and heavy-tailed distribution for the random effects.

For each Monte Carlo sample, model (26) was fit under the same distributional assumption that the data set was generated. Then we computed the empirical bias and empirical mean square error (MSE) over all samples. For \(\beta _1\), for instance, they are defined as

respectively, where \(\widehat{\beta }^{(k)}_1\) is the approximate ML estimate of \(\beta _1\) obtained through ECM algorithm using the kth Monte Carlo sample. Definitions for the other parameters are obtained by analogy.

Figures 1 and 2 show a graphical representation of the obtained results for bias and MSE, respectively. Regarding to the bias, we can see in general patterns of convergence to zero as n increases. The worst case scenario seems to happen while estimating the scale and skewness parameters of the random effect, which could be caused by the well known inferential problems related to the skewness parameter in skew-normal models, or maybe it would require a sample size greater than 500 to obtain a reasonably pattern of convergence. On the other hand, satisfactory values of MSE seem to occur when n is greater than 400. As a general rule, we can say that both the bias and the MSE tend to approach to zero when the sample size is increasing, indicating that the approximate ML estimates based on the proposed EM-type algorithm provide good asymptotic properties.

4.2 Second Study

The goal of this simulation study is to asses the robustness or bias incurred when one assumes a normal distribution for random effects and the actual distribution is ST. The design of this simulation study is similar to the one in Sect. 4.1, but now 500 Monte Carlo samples were generate considering only a ST model (26) with \(\nu =4\) and \(n=25\). Additional simulations were created by using the same values of \((\varvec{\beta },\sigma _e^2,\lambda )\) in (26) and multiplying the scale parameter \(\sigma ^2_b\) by 0.25 and 6.25, obtaining \(\sigma ^2_b=25\) (small) and \(\sigma _b^2=625\) (large). This aims to verify if the proposed approximate methods are reliable in different settings of the scale parameter \(\sigma ^2_b\). Therefore, three different scenarios are considered and for each scenario we fit model (25) assuming the distributions normal and skew-t with 4 degree of freedom, to each Monte Carlo data set.

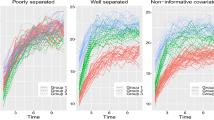

For evaluating the capability of the proposed selection criteria in selecting the appropriate distribution, the model preferred by the AIC criterion was also recorded for each sample. Figure 3 shows example profiles for each of the three sizes of scale components considered. The adjectives “small,” “medium” and “large” are referring to the values assumed for \(\sigma ^2_b\). Note that for this particular model the variability increases with the mean as well as with the scale parameter.

Table 1 presents summary measures for the fixed effects parameter estimates assuming normal and ST distributions for different values of the scale parameter \(\sigma ^2_b\), where the true parameters are indicated in parenthesis, Mean denotes the arithmetic average of the 500 estimates, Bias is the empirical mean bias, MSE is the empirical mean squared error, and finally, \(95\%\) Cov denotes the observed coverage of the \(95\%\) confidence interval computed using the model-based standard error and the critical value = 1.96.

The results in Table 1 suggest that irrespective of the fitted NLME model, the bias and MSE of the fixed effects increase as the scale component becomes larger. Moreover, we notice from this table that the bias and MSE from the ST fit are generally smaller than the ones from the normal fit, indicating that models with skewness and longer-than-normal tails may produce more accurate approximate MLEs. In Fig. 4 we present the empirical MSE for different values of \(n=25, 50, 100, 200\), and 500 and for medium-\(\sigma ^2_b\), illustrating clearly the slower convergence to zero when the normal distribution is inappropriately used.

Therefore, the results indicate that the efficiency in estimating fixed effects in NLME models can be severely degraded when normality is assumed, in comparison to considering a more flexible approach via the ST distribution, corroborating with results from other authors, such as Hartford and Davidian [8] and Litière et al. [16]. Since the main focus of such analysis is usually the evaluation of the fixed effects, this suggests that adopting normality assumptions routinely may lead to inefficient inferences on fixed effects when the true distribution is not normal. The inferences for the variance components are not comparable for the two fitted models since they are in different scales.

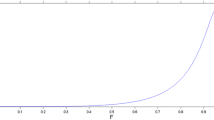

Additionally, from Table 1 we can see that the AIC measure was able to classify the correct model well, indicating that the ST-NLME model presents a better fit than the N-NLME model, and the criteria for both models is illustrated in Fig. 5, where we show the AIC values for each sample and fitted model. Thence we conclude that the result given in Theorem 1 provides a good approximation for the marginal likelihood function. In fact, this approximation is needed in order to make the calculation of the AIC computationally feasible (and easy).

5 Theophylline Kinetics Data-Theoph

The Theophylline kinetics data set was first reported by [3], and it was previously analysed in [22, 23] by fitting a N-NLME model. In this section, we revisit the Theoph data with the aim of providing additional inferences by considering SMSN distributions. In the experiment, the anti-asthmatic drug Theophylline was administered orally to 12 subjects whose serum concentration were measured 11 times over the following 25 h. This is an example of a laboratory pharmacokinetic study characterized by many observations on a moderate number of subjects. Figure 6a displays the profiles of the Theophylline concentrations for the twelve patients.

We fit a NLME model to the data considering the same nonlinear function as in [23], which can be written as

for \(i=1,\ldots ,12\), \(j=1,\ldots ,11\), where \(C_{ij}\) represents the jth observed concentration (mg/L) on the ith patient. \(D_{i}\) represents the dose (mg/kg) administered orally to the ith patient, and \(t_{ij}\) is the time in hours. To verify the existence of skewness in the random effects, we start by fitting a traditional N-NLME model as in [23]. Figure 6b, c depicts the Q–Q plots of the empirical Bayes estimates of \({\mathbf {b}}_i\) and shows that there are some non-normal patterns on the random effects, including outliers and possibly skewness, and therefore supporting the use of thick-tailed distributions.

Hence, we now consider a SMSN distribution for \({\mathbf {b}}_{i}\) and SMN distribution for \({\varvec{\epsilon }}_{i}\), as in (8). Specifically, we consider the Normal, SN, ST, SCN and SSL distributions from the SMSN class for comparative purposes, and the results are presented next.

Table 2 contains the ML estimates of the parameters from the five models, together with their corresponding standard errors calculated via the observed information matrix. The AIC measure indicates that heavy-tailed distributions present better fit that the Normal and SN-NLME models. Particularly, the model with ST distribution has the smaller AIC, being therefore the selected model. The standard errors of \(\varvec{\lambda }\) are not reported since they are often not reliable (see [31], for example), and it is important to notice that the estimates for the variance components are not comparable since they are on different scales.

To asses the predictive performance of the N-NLME and SMSN-NLME models, we remove sequentially the last few points of each response vector, then we compute the ML estimates using the remaining data. The deleted observations are considered as the true values to be predicted. As a measure of precision we use the mean of absolute relative deviation \(|(y_{ip}-\widehat{y}^+_{ip})/y_{ip}|\) (MARD), where p is the time point under forecast. For instance, if we drop out the last five measurements, then the prediction of \({\mathbf {y}}_i=(y_{i7}, y_{i8}, y_{i9}, y_{i10},y_{i11})^{\top },\) denoted by \(\widehat{{\mathbf {y}}}^+_i=(\widehat{y}^+_{i7}, \widehat{y}^+_{i8}, \widehat{y}^+_{i9}, \widehat{y}^+_{i10}, \widehat{y}^+_{i11})^{\top }\), is made using (24), for \(i=1,\ldots ,12\). Figure 7 presents the average of MARD in percentage \((\%)\) when the last 1, 2, 3, 4 and 5 observations are deleted sequentially in each response vector and shows that the heavy-tailed SMSN models provide in general more accurate predictors than the normal model. Particularly, when the last 5 observations are deleted for each subject, the difference between MARD from the ST and normal model is of almost \(6\%\). Thus, the SMSN-NLME model with heavy-tailed distributions not only provides better model fitting, it also yield smaller prediction errors for the Theophylline kinetics data.

Furthermore, to assess the goodness of fit of the selected model, we construct a Healy-type plot [9], by plotting the nominal probability values \(1/n, 2/n, \ldots , n/n\) against the theoretical cumulative probabilities of the ordered observed Mahalanobis distances, which is calculated using the result Theorem 1. The Mahalanobis distances is a convenient measure for evaluating the distributional assumption of the response variable, once if the fitted model is appropriate the distribution of the Mahalanobis distance is known and given, for example, in [31]. If the fitted model is appropriate, the plot should resemble a straight line through the origin with unit slope. We also construct a Healy’s plot for the Normal model for comparison, and the results are presented in Fig. 8. It is clear that the observed Mahalanobis distances are closer to the expected ones in ST-NLME model than in the N-NMLE model, corroborating with the previous results.

6 Discussion and Future Works

Nonlinear mixed effects models are a research area with several challenging aspects. In this paper, we proposed the application of a new class of asymmetric distributions, called the SMSN class of distributions, to NLME models. This enables the fit of a NLME model even when the data distribution deviates from the traditional normal distribution. Approximate closed-form expressions were obtained for the likelihood function of the observed data that can be maximized by using existing statistical software. An EM-type algorithm to obtain approximate MLEs was presented, by exploring some important statistical properties of the SMSN class. According to Wu [33], in complicated models, approximate methods are computationally more efficient and may be preferable to the exact method, specially when it exhibits convergence problems, such as slow convergence or non-convergence.

Furthermore, two simulation studies are presented, showing the potential efficiency gain in fitting a more flexible model when the normality assumption is violated. Moreover, in the analysis of the Theophylline data set the use of ST-NLME models offered better fitting as well as better prediction performance than the usual normal counterpart. Finally, we note that it may be worthwhile comparing our results with other methods such as the classical Monte Carlo EM algorithm or the stochastic version of the EM algorithm (SAEM), which is beyond the scope of this paper. These issues will be considered in a separate future work. Another useful extension would be to consider a more general structure for the within-subject covariance matrix, such as an AR(p) dependency structure as considered in [29].

The Royal Statistical Society (RSS) in a recent tribute to CR Rao on his 100th birthday wrote “Calyampudi Radhakrishna Rao is a formidable name in modern statistics. He has made pioneering contributions to statistical inference, multivariate analysis, design of experiments and combinatorics, robust inference and differential geometric methods and many other areas. Several of his results have become parts of standard textbooks on statistical methodology”. The connection of this paper with Dr. Rao’s research appears on his interest on the theoretical aspects of non-Gaussianity. For a interesting and stimulating reference, we refer to [26].

Finally, the method proposed in this paper is implemented in the software R [25], and the codes are available for download from GitHub (https://github.com/fernandalschumacher/skewnlmm). We conjecture that the methodology presented in this paper should yield satisfactory results in other areas where multivariate data appears frequently, for instance: dynamic linear models, nonlinear dynamic models, stochastic volatility models, etc., at the expense of moderate complexity of implementation.

References

Arellano-Valle RB, Bolfarine H, Lachos VH (2005) Skew-normal linear mixed models. J Data Sci 3:415–438

Azzalini A, Capitanio A (1999) Statistical applications of the multivariate skew-normal distribution. J R Stat Soc 61:579–602

Boeckmann AJ, Sheiner LB, Beal SL (1994) Nonmem users guide-part V: introductory guide. NONMEM Project Group. University of California at San Francisco

Branco MD, Dey DK (2001) A general class of multivariate skew-elliptical distributions. J Multivar Anal 79:99–113

De la Cruz R (2014) Bayesian analysis for nonlinear mixed-effects models under heavy-tailed distributions. Pharm Stat 13(1):81–93

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Galarza CE, Castro LM, Louzada F, Lachos VH (2020) Quantile regression for nonlinear mixed effects models: a likelihood based perspective. Stat Pap 61(3):1281–1307

Hartford A, Davidian M (2000) Consequences of misspecifying assumptions in nonlinear mixed effects models. Comput Stat Data Anal 34:139–164

Healy MJR (1968) Multivariate normal plotting. J R Stat Soc Ser C (Appl Stat) 17(2):157–161

Hui FKC, Müller S, Welsh AH (2020) Random effects misspecification can have severe consequences for random effects inference in linear mixed models. Int Stat Rev. https://doi.org/10.1111/insr.12378

Lachos VH, Bandyopadhyay D, Dey DK (2011) Linear and nonlinear mixed-effects models for censored HIV viral loads using normal/independent distributions. Biometrics 67(4):1594–1604

Lachos VH, Castro LM, Dey DK (2013) Bayesian inference in nonlinear mixed-effects models using normal independent distributions. Comput Stat Data Anal 64:237–252

Lachos VH, Ghosh P, Arellano-Valle RB (2010) Likelihood based inference for skew-normal independent linear mixed models. Stat Sin 20:303–322

Lin TI, Wang WL (2017) Multivariate-nonlinear mixed models with application to censored multi-outcome aids studies. Biostatistics 18(4):666–681

Lindstrom MJ, Bates DM (1990) Nonlinear mixed-effects models for repeated-measures data. Biometrics 46:673–687

Litière S, Alonso A, Molenberghs G (2007) The impact of a misspecified random-effects distribution on the estimation and the performance of inferential procedures in generalized linear mixed models. Stat Med 27:3125–31447

Liu C, Rubin DB (1994) The ECME algorithm: a simple extension of EM and ECM with faster monotone convergence. Biometrika 80:267–278

Matos LA, Prates MO, Chen MH, Lachos VH (2013) Likelihood-based inference for mixed-effects models with censored response using the multivariate-t distribution. Stat Sin 23:1323–1345

Meng X, Rubin DB (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 81:633–648

Meza C, Osorio F, De la Cruz R (2012) Estimation in nonlinear mixed-effects models using heavy-tailed distributions. Stat Comput 22(1):121–139

Pereira MAA, Russo CM (2019) Nonlinear mixed-effects models with scale mixture of skew-normal distributions. J Appl Stat 46(9):1602–1620

Pinheiro JC, Bates DM (1995) Approximations to the log-likelihood function in the nonlinear mixed effects model. J Comput Graph Stat 4:12–35

Pinheiro JC, Bates DM (2000) Mixed-effects models in S and S-PLUS. Springer, New York

Pinheiro JC, Liu CH, Wu YN (2001) Efficient algorithms for robust estimation in linear mixed-effects models using a multivariate t-distribution. J Comput Graph Stat 10:249–276

R Core Team (2020) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. https://www.R-project.org/. Accessed 26 July 2020

Rao CR, Ali H (1998) An overall test for multivariate normality. Student 2:317–324

Rosa GJM, Padovani CR, Gianola D (2003) Robust linear mixed models with normal/independent distributions and Bayesian MCMC implementation. Biom J 45:573–590

Russo CM, Paula GA, Aoki R (2009) Influence diagnostics in nonlinear mixed-effects elliptical models. Comput Stat Data Anal 53:4143–4156

Schumacher FL, Lachos VH, Dey DK (2017) Censored regression models with autoregressive errors: a likelihood-based perspective. Can J Stat 45(4):375–392

Schumacher FL, Matos LA, Lachos VH (2020) skewlmm: scale mixtures of skew-normal linear mixed models. https://CRAN.R-project.org/package=skewlmm. R package version 0.2.0

Schumacher FL, Matos LA, Lachos VH (2021) Scale mixture of skew-normal linear mixed models with within-subject serial dependence. Stat Med (in press). ArXiv preprint arXiv:2002.01040

Verbeke G, Lesaffre E (1996) A linear mixed-effects model with heterogeneity in the random-effects population. J Am Stat Assoc 91:217–221

Wu L (2004) Exact and approximate inferences for nonlinear mixed-effects models with missing covariates. J Am Stat Assoc 99:700–709

Wu L (2010) Mixed effects models for complex data. Chapman and Hall/CRC, Boca Raton

Zhang D, Davidian M (2001) Linear mixed models with flexible distributions of random effects for longitudinal data. Biometrics 57:795–802

Acknowledgements

We are grateful to the anonymous referee, the associate editor and the editor for very useful comments and suggestions, which greatly improved this paper. Fernanda L. Schumacher acknowledges the partial support of Coordenação de Aperfeiçoamento de Pessoal de Nível Superior—Brasil (CAPES)—Finance Code 001, and by Conselho Nacional de Desenvolvimento Científico e Tecnológico—Brasil (CNPq).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Celebrating the Centenary of Professor C. R. Rao” guest edited by, Ravi Khattree, Sreenivasa Rao Jammalamadaka, and M. B. Rao.

Rights and permissions

About this article

Cite this article

Schumacher, F.L., Dey, D.K. & Lachos, V.H. Approximate Inferences for Nonlinear Mixed Effects Models with Scale Mixtures of Skew-Normal Distributions. J Stat Theory Pract 15, 60 (2021). https://doi.org/10.1007/s42519-021-00172-5

Accepted:

Published:

DOI: https://doi.org/10.1007/s42519-021-00172-5