Abstract

The dynamic and interactive nature of Distributed Exascale Computing System leads to a situation where the load balancer lacks the proper pattern for the solution. In addition to analyzing and reviewing the dynamic and interactive nature and its effect on load balancing, this article introduces a framework for managing load balancing that does not need to study the dynamic and interactive nature. This framework proposes a mathematical scheme for the functionality of load-balancing elements and redefines its functions and components. The redefinition makes it possible to determine the constituent parts of the framework and their functionality without the need to analyze the dynamic and interactive nature of the system. The proposed framework can manage and control dynamic and interactive events by reviewing changes in the functionality of resources, the pattern of data collection to execute processes related to the load balancer, and a Scalable tool. In addition to performing the load balancer’s functionality, our framework can continue to function under dynamic and interactive events in distributed exascale systems. On average, this framework has a 43% improvement, unable to respond to dynamic and interactive requests.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In high performance computing systems, the load balancer is responsible for adjusting computing processes, demands, and computing capabilities of the existing resources (defined in the computing environment) (Wang et al. 2014; Ghomi et al. 2017; Jyoti and Shrimali 2020; Amelina et al. 2015). Therefore, the load balancer should be able to manage computing processors in the existing system so that (A) the response time of any processor should exceed what is expected and (B) no computing resource should be idle while executing a scientific or practical application (Domanal et al. 2014; Thakur and Goraya 2017). The load balancer needs precise information about the requirements of computing processors and features of the existing resources in the computing system to achieve this (Khaneghah et al. 2018). If the element has accurate information about computing processors and resources, it can adjust between processor requirements and computing resources and features (Mondal et al. 2016; Mondal et al. 2017; Mondal et al. 2016). Proper adjustment between the two mentioned characteristics leads to a better response time for computation processes, which increases the system's performance (Kołodziej et al. 2014; Qureshi et al. 2014).

Load balancer conventionally has to collect data, decide about the resources and computing processes, and also process transmission (Khan et al. 2017; Pate Ahmadian et al. 2018; Jain and Saxena 2016). The element must describe the status of the processes, process requirements to perform these tasks, and system resources, using specific metrics (Bok et al. 2018; Rao et al. 2003). The element could perform the mentioned tasks in the case of proper and exact definitions for descriptions of processes and resources.

Based on these metrics, the load balancer decides whether it can continue executing the process in a processing element. In its view, the continuation of performing a process depends on the process's requirements, conditions, and time limitations and whether the processing elements can meet them (Mousavi Khaneghah et al. 2018). If these conditions are not met, the component uses process migration to transfer the process from one processing element to another (Khan et al. 2017; Rathore et al. 2020; Rathore and Chana 2016). Suppose the system's processing elements cannot respond to the process demands. In that case, the aspect calls on resource discovery to find the processing capable of meeting the requirements of the process (Pourqasem 2018).

The load-balancer establishes the structure of responding patterns created during design at the runtime (Khaneghah et al. 2018). In traditional computing systems, the selected mechanisms for load balancing, such as Cluster or Grid, are designed to make them compatible with the structure of response control and management related to process demands (Ramezani et al. 2014; Heidsieck et al. 2019; Teylo et al. 2017; Khaneghah and Sharifi 2014). System designers know the computing process’s needs in such traditional computing systems. Based on this information, proceed to define mechanisms for load balancing or resource discovery (Pate Ahmadian et al. 2018; Milani and Navimipour 2016).

The primary assumption in designing a traditional load balancer such as a Cluster or Grid is based on the fact that nothing violates the structure of the designed response during the execution of a scientific application (Khaneghah and Sharifi 2014). Based on this, if a process or processes require computing resources, the load balancer tries to use process migration or resource discovery to choose the resource which can respond to the process (Pate Ahmadian et al. 2018). Since resource allocation is based on the load balancer’s mechanism, it has been considered part of the policy related to the structure of responding patterns in scientific applications (Khaneghah and Sharifi 2014).

The occurrence of dynamic and interactive events in executing computing processes in DECS leads to processes performing operations or requests that were not considered in the primary responding pattern (Khaneghah and Sharifi 2014; Milani and Navimipour 2016; Fiore et al. 2018; Gharb et al. 2019; Dongarra et al. 2011). This event causes the system not to be capable of responding based on the preliminary responding design during the execution of scientific applications. In case of such events, the load balancer must be able to analyze the event and create a proper responding structure based on its nature to continue executing the scientific application (Wang et al. 2016a). These events might need different response patterns (Shahrabi et al. 2018; Alowayyed et al. 2017; Innocenti et al. 2017). The load balancers should be able to create the response structure for such events in an appropriate period. Due to changes in the characteristics of resources and the response structure of processes, traditional systems either cannot be used in DECS or provide the minimum performance possible (Alowayyed et al. 2017; Innocenti et al. 2017; Khaneghah et al. 2018).

In distributed Exascale computing systems, the occurrence of dynamic and interactive events from the point of view of the system manager means the formation of a particular type of request related to the process (or processes) that has not been analyzed in the primary response structure. The occurrence of dynamic and interactive events causes the state of requests in the computing system to change in a way unknown to the load balancer. The load balancer should either stop the system, and the system designer explains the mentioned situation for the load balancer to execute the request, or it must manage the said request based on a mechanism at the time of execution.

When a dynamic and interactive event occurs in a large-scale distributed system, in addition to changing the status of requests in the system, as described in the previous paragraph, it can cause a change in the working process of the distributed load balancer as a central unit of the activities in a large-scale distributed system. The functional nature of the distributed load balancer in computing systems, both traditional and Distributed Exascale systems, is based on collecting information and using a decision-making mechanism for redistributing the load, in addition to creating a mechanism for implementing the program in the shortest time and achieve the system's goal. The load balancer collects the information related to the system (or a part of the system), and based on the analysis obtained by a mechanism in the load balancer, it performs redistribution activities. The way the processes are executed by the operating system, keeping in mind that this is a distributed system, causes the set of activities related to the distributed management unit not to be executed automatically and continuously, instead these activities are performed at different times considering the corrections of the information in the previous step. The consideration of the corrections to the information in the previous stage is conventionally established in traditional computing systems, and the existence of benchmarking tools and data structure information related to the process and, most importantly, the definition of specific situations to carry out the activities of the load balancer, makes it possible to decide on the continuation or discontinuation of it.

In Distributed Exascale systems, at any moment of the execution of distributed load balancer's activities, dynamic and interactive events can affect the load balancer's functioning and sub-activities. A dynamic and interactive event may occur in the large-scale distributed system, which causes (a) the distributed load balancer not to be able to decide on the assumption about the correctness of the information of the previous stage and the status of the large-scale distributed system regarding the correctness of the information to be unknown to the load balancer. (b) The status of the beneficiary elements in the activities of the distributed load balancer should be changed in such a way that the set of activities of the load balancer becomes invalid in terms of redistribution and the mechanism used. (c) The mechanism used by the load balancer cannot manage and redistribute the load for the large-scale distributed computing system. (d) The descriptive and benchmarking indicators used by the distributed management unit become invalid after the dynamic and interactive event occurs.

In each or all of the mentioned situations, the distributed management unit in Distributed Exascale systems must be able to manage the impacts of dynamic and interactive events on its functionality. This article, introducing the ExaLB, presents a mathematical model to manage caused by dynamic and interactive events on the load balancer in distributed Exascale computing systems without the need to stop the execution of system activities.

This paper introduces a mathematical framework to describe the load balancer's performance in DECS. The descriptive function of the load distribution management element in distributed computing systems is investigated to achieve this framework. Based on the review and analysis of the mentioned descriptive function, the framework describing the load balancer operation in the distributed Exascale systems obtained in this article makes it possible to analyze the concept of the event that leads to the call of the load balancer in the distributed computing system. Did Consider this issue in this article, the events leading to the call of the load balancer are considered in two categories, formal events, and dynamic and interactive events. The mentioned two classifications and the analysis of the management of the mentioned events can be used as a criterion for the analysis of dynamic events and how to manage them in the middle of the proposed mathematical framework for the load balancer, taking into account the conditions and limitations imposed by dynamic and interactive events.

2 Related works

Currently, many scientific applications are developed for DECS. In this system, computing resources are connected in an autonomous, transparent, and integrated manner and usually support scalability (Jiang 2016). In high performance computing and distributed systems, a load balancer is used to distribute the tasks fairly to execute the application in the shortest time possible (Chatterjee and Setua 2015).

There are multiple definitions proposed for the scalability of distributed systems. In Domanal et al. (2014), scalability is considered a function of changes in the system performance when a new processing element is added. In Mirtaheri et al. (2013), the system's scalability is introduced as a function with parameters such as the cost of adding a new computing element and system performance when it is being expanded. Determining the relation between load balance and the system's scalability is highly important if this relation is not selected correctly. Scalability would not be capable of improving the performance and also adds to the load balancer's execution time, leading to decreased system efficiency.

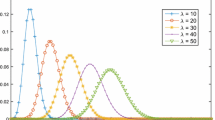

In Distributed Exascale systems, scheduling existing tasks to improve performance is vital (Chatterjee and Setua 2015; Mukherjee et al. 2016). In Mukherjee et al. (2016), a method for efficient load balancing in large-scale systems is introduced in which equivalent Markov models describe parallel servers. This computing system uses a threshold-based load-balancing scheme.

The challenges of DECS consist of managing processes in parallel, the procedure of executing scientific applications, usable processing power, flexibility, and scalability (Wang et al. 2016b). Many computing system management schemes, such as load balancing mechanisms, are designed based on centralized paradigms and have to define a centralized server. This state creates challenges for DECS, such as scalability and single-point-of-failure problems (Wang et al. 2016b). In Wang et al. (2016b), a classification for system management in DECS is proposed that considers the proportion between the server's response time and the client's tolerance. In this categorization, there is a discussion about what pattern of reliability is created by system scalability in the procedure of execution. Based on Wang et al. (2016b), using an architecture based on distributed systems to support extreme parallelism, covering delay time, and creating mechanisms for a reliable and scalable load balancer, are necessary.

The nature of scientific applications requires DECS to use computing systems to reduce the response time and discover the laws governing natural phenomena (http://www.deep-project.EU; Reylé et al. 2016). Fields such as human brain simulation, weather simulation, fluid engineering computations, simulating superconduction in high temperatures, and earthquake imaging are some of the applications that require DECS (http://www.deep-project.EU; Reylé et al. 2016). The nature of these applications (in contradiction to traditional computing applications) is a way in which, during the execution of processes, dynamic and interactive requests are made by them that their structures are not considered during the design (Lieber et al. 2016; Mirtaheri et al. 2014; Straatsma et al. 2017; Dongarra et al. 2014). This event causes DECS to use dynamic mechanisms for load balancers (Milani and Navimipour 2016; Khaneghah 2017). Therefore, computing systems should have the flexibility and compatibility with such variant conditions in these applications to achieve optimal system performance.

In Alowayyed et al. (2017), there has been a discussion on how to improve the capabilities of multiscale computing systems to execute scientific applications that require Petascale and DECS. In Jeannot et al. (2016), a dynamic mechanism for the load balancer in the Charm++ application is proposed.

The authors of Mirtaheri and Grandinetti (2017) have also proposed a distributed dynamic mechanism for the load balancer in DECS. This mechanism uses multiple parameters, such as load transmission and connection delay, to estimate nodes' excessive load. The proposed approach supports dynamic and interactive events. Even though this mechanism for load balancing has a good scalability feature compared to other methods, its performance in Petascale systems has multiple challenges. In Alowayyed et al. (2017), multi-dimensional computing algorithms are proposed for load-balancing functionality. Besides, these algorithms provide fault tolerance and energy management in distributed computing systems to redistribute the load. This paper presents three multi-dimensional models: Extreme Scaling, Heterogeneous Multiscale Computing, and Replica Computing. In this paper, the manner of multi-axis Constance, Falkon is a centralized task scheduler that uses simple sequential scheduling for Multi-Task Computing (MTC) (Wang et al. 2014).

In Bakhishoff et al. (2020), the authors present a mathematical model for managing dynamic and interactive events affecting the operation of the distributed load balancer based on the Discrete-Time Hidden Markov Model. The mathematical model presented in this article provides such a capability for the load balancer that, after analyzing each dynamic and interactive event, manages the activity based on the changes that cause the functionality of the distributed load balancer to be violated. In this article, the concept of the system state is considered to describe the function of the distributed load balancer and the return of the system’s state to a stable condition after the dynamic and interactive event occurs as a method to manage the effects of the dynamic and interactive event on the functionality of the distributed load balancer. The most critical challenge governing the aforementioned mathematical model is in creating the state of the system corresponding to the functionality of the distributed load balancer. If the distributed load balancer uses more variables than the traditional one to describe its state, creating the system's state becomes complicated. Another challenge of this solution is the inability to change the system's state to a stable condition concerning the functionality of the distributed load balancer.

In Wylie (2020), the authors investigate the issue that if the processes are not correctly allocated to the resources, especially the computing resources defined in the Distributed Exascle systems, there is a possibility of the failure of the activities related to the distributed load balancer. In this article, in addition to this challenge of not correctly assigning processes to computing resources, other influential factors for breaking down the function of the distributed load balancer have been investigated. This solution, using the dynamic distributed load balancer (HemeLB) based on process analysis and feature extraction, has managed the effects of dynamic and interactive events on the functionality of the distributed load balancer.

In Lehman et al. (2019), based on the XQueue concept, a dynamic and interactive load-balancing mechanism is considered. This load-balancing mechanism uses a developed model based on the XQueue concept because it must consider dynamic and interactive events and their effects on the operation of the distributed load balancer. The effort of the article is that the proposed solution should be in such a way that the functionality of the distributed load balancer, considering the development of XQueue, is equivalent to the functionality of the distributed load balancer based on XQueue without considering dynamic and interactive events.

The centrality of the work is based on the fact that the load balancer analyzes the state of the computing elements based on an index which is conventionally the state of the CPU usage. This indicator can take more complex forms. The load balancer redistributes the load based on skill processing mechanisms if the state of the calculation element description index changes beyond a specific limit. In these mechanisms, a dynamic and interactive concept is not usually considered.

In the mechanisms that consider dynamic and interactive events, the design of the load balancer is based on considering the unique situation. In these mechanisms, an attempt is made to analyze the impacts of the dynamic and interactive events on the functionality of the distributed load balancer in one or more specific and determined situations. The mentioned conditions describe the constraints and limitations governing the load balancer function in a specific and confident way. A specific and detailed description of the function of the load balancer makes the situations that can be described for the function of the load balancer after the dynamic and interactive event occurs. If, at the time of the dynamic and interactive event, the load balancer is in a state other than the one described in the proposed solution, then the proposed solution cannot respond and manage the impacts of the dynamic and interactive event on the functionality of the distributed load balancer. This event is because the functional nature of the load balancer is based on the implementation of logical sets of activities to implement activities related to the load balancer. In this article, we want to focus on the management of a load balancer in its specific and defined functional state, the activities and functions of the load balancer to implement the load balancer activities, and how to manage the effects of dynamic and interactive events on each of the activities constituting the function. This event makes it possible to provide a general solution for managing the effects of dynamic and interactive events on the functionality of the distributed load balancer.

3 Basic concepts

3.1 Load balancing definition

In traditional computing systems, from a load-balancing point of view, what describes the status of the process is time. The load-balancing element could consider the process three times <execution time, total time, idle time>. Execution time signifies the time allocated for a procedure. Full-time shows the time the process needs to complete its tasks. Idle time indicates when the process is not controlling the central processor. Defining the related elements to load balancing was also created based on the time-based management mentioned. Therefore, determining the processing element from a load-balancing point of view is based on the time it can provide processing power for computing processes. Thus, in computing systems, the adaptability of process requests over resource characteristics is defined by load balancing as a mapping between resource status descriptor and process variables, as shown in Eq. 1.

As shown in Eq. 1, the functionality of the load balancer is a mapping of the process required for accessing the central process to compute resource space in the system (Khaneghah et al. 2018). This mapping should allow the computing process to gain resources quickly and complete its task. Secondly, resource allocation between processes by load balancing should be done in a way that takes advantage of the maximum computing resource capabilities of the system. The definition of load distribution in Eq. 1 shows that it has based on two spaces process computing requests and computing resources capabilities. If only time is considered as the descriptor of resource and process elements in the simplest form, then the mapping in Eq. 1 forms Eq. 2.

The second part of Eq. 1 is also the actual for this equation. In Eq. 2, the definition of load distribution is based on the concept of time. Suppose, at any point, load distribution is activated, and there exists a process that requires a time limit for accessing the central processor. In that case, the load distribution element allocates the resources to the computing process based on the idle time of computing resources. As shown in Eq. 2, in this condition, the load distribution element should be able to allocate resources to processes so that the time requirements of the process are met by the idle time of computing resources. Both constituent variables in Eq. 2 are scalar.

Load distribution elements can include other characteristics for describing processes and computing resources. If the load distribution considers \(n\) dimensions for the description, then Eq. 1 turns into a mapping of \(n\times n\). Determining the number of dimensions in Eq. 1 depends on how the space of the computing process and its requests is defined. For each computing system, a need exists to represent the computing process from the load distribution point of view.

The relation of load distribution in computing systems to other constituent elements of system management is defined based on the framework, as shown in Fig. 1.

As shown in Fig. 1, load balancing is the main element in the management of computing systems. The nature of the system management element is such that load balancing is responsible for the responding structure of the system. The load distribution element should do its responsibilities perfectly in any computing system based on the system’s condition so that the system holds its maximum performance. Since load balancing connects to processes and computing resources of the system, it has precise information about the current condition. This event holds for both centralized and also decentralized distributed computing systems. In distributed systems, since load balancing is connected to the scheduling element, it has complete information about the local resources of the computing system. Also, because of load balancing’s connection to Inter Processing Communication (IPC), the possibility of interaction with other computing resources in the system is defined. Load balancing uses migration and resource discovery elements as management tools. It uses the migration element for process migration and resource discovery to find system resources. The monitoring element, as the tool for monitoring the status of processes and resources of the system, and the allocation element, as the resource allocator for processes, have direct connections to the load balancer. Job management tools are also defined at the highest level in the framework presented in Fig. 1. The architecture of the load balancer shown in Fig. 1 is based on the architecture illustrated in Fig. 2.

In Fig. 2, load balancing has three tasks: collecting data about the resources and processes, analyzing that information, and deciding on process transmission in computing systems (Khaneghah et al. 2018; Mirtaheri and Grandinetti 2017). This element uses existing databases in scheduling, IPC, and allocation units for data collection. On the other hand, load balancing uses databases for data gathering, resource discovery, and monitoring units to analyze the information. The migration management unit does the task of process migration.

In traditional computing systems, load balancing does not gain information about the request nature during the execution of the scientific application. It only collects data about the metrics of resource and process request status. This event is due to the nature of the programs executed in traditional computing systems. The computing system designer views the requests' character and the computing processes' requirements. Therefore, this makes it possible to select the appropriate mechanism for the load balancer by looking at the nature of the requests and the conditions that may occur during the execution of the scientific application. This mechanism should be able to describe the status of the process based on indicators to make decisions about the requirements of the process. The mechanism should also have indicators to tell the status of the resource, based on which it can decide whether the resource is capable of responding to a process request. In traditional computing systems, the patterns of request process events are a definite set. The computing system designer can describe a specific collection at the time of system design, called the Computing Process Request Pattern Set (CPRPS). The mechanism of the load balancer is determined on the basis that it can respond to this set during the execution of the scientific program. At the time of determining the mechanism of the load balancer, the following should be specified: (a) pattern of process and resource status, (b) mechanism of how to collect information, (c) determining which event patterns in the process status description indicate one of the events in existing patterns of CPRPS, (d) what pattern in the source status description state can respond to the pattern formed in CPRPS.

In traditional computing systems, the CPRPS sets and the response pattern to process requests are specified for the system designer based on the Resource State for Process Request Set (RSPRS). Therefore, the tasks of the load balancer consist of (a) collecting process status description information, (b) adapting the process status description to the CPRPS set, (c) determining pattern occurrence, (d) collecting source status information, (e) matching source status information with RSPRS and finally (f) transmission of the process using the process migration management unit of the source processing element to the processing element that can respond to the process request.

3.2 Request B is a traditional request

The request is first sent to the local operating system in this case. The local operating system cannot respond to request B, so B is sent to the computing system’s management unit. The management unit sends the process request to the load balancer. The load balancing creates a CPRPS set for request Β. After the formation of the CPRPS set, the load balancing according to its implementation pattern (centralized, semi-centralized, or distributed), as well as the mechanisms used to collect data, decision making, and process migration, tries to create its own RSPRS set. The data collection mechanism of the load balancer can make its RSPRS set either after the CPRPS collection, at specific intervals, or before the formation of the CPRPS set. The decision-making mechanism for the load balancing seeks to find a processing element at the system level that can respond to request B by adapting the CPRPS set to the RSPRS. Based on its process migration mechanism, the load balancer transmits the process responsible for request B to the destination processing element. Traditional Cluster and Grade computing systems differ in the RSPRS set's pattern. The RSPRS set is formed as shown in Formula 3.

As shown in Formula 3, the set, RSPRS, is a set of \(m\) elements in which each element is in the form \(<Resource, Resource Attribute vector>\). In this set, \({R}_{m}\) represents the \({m}^{\mathrm{th}}\) source, which the load balancer collects its data. For each element \({R}_{m}\) A vector containing \(n\) attributes is considered (the number \(n\) can be a different value for each source). In this vector, \({A}_{m,j}\) represents the status, or value of the descriptor of the \({j}^{th}\) attribute of the \({R}_{m}\) source. In Formula 3, \({A}_{m,j}\) can be a numerical or descriptive value. In traditional cluster computing systems, the number of elements that make up the RSPRS set is a numerical constant. In such systems, for each source \({R}_{m}\), the number of attributes describing the state of the source \({R}_{m}\) is a fixed number, and only during the program's execution do the values of the vector describing the state of the source change. The number of elements that make up the RSPRS suite varies in peer-to-peer computing systems. In these types of systems, the number of vector properties for description associated with each source \({R}_{m}\) does not change. The lack of change in the number of properties related to the descriptor vector of each source \({R}_{m}\) is due to the precise control and management structure related to responding to request B.

Suppose the constituent elements of the RSPRS set are specific. The computing system is a traditional cluster system; when designing the computing system, all the elements of the RSPRS suite are known. Another condition is that the system design provides all RSPRS members' suites to the system management unit. If the designer cannot decide what elements are part of the RSPRS suite during the global activity process, then the computing system is DECS. Still, the designer at the time could not provide all members of the RSPRS set to the system management unit, even though the mechanism and pattern of finding these resources by the computing system management were precise. In that case, this computing system is a peer-to-peer computing system.

Traditional computing systems used a single pattern for the CPRPS set. In Eq. 4, the general form of the CPRPS set is introduced:

in which we have:

-

1.

The \(Reques{t}_{type}\) variable indicates the type of request. The computing system management unit can define acceptable values for the \(Reques{t}_{type}\) variable based on the resource definition pattern, the process requirement pattern, and the nature of the program (or programs) running on the computing system. In traditional computing systems, the acceptable values for the \(Reques{t}_{type}\) variables are specified at design time and do not change during the execution of the scientific program in the system; for example, processes always request access to the CPU source. This state causes the variable \(Reques{t}_{type}\) to accept only the CPU value. The \(Reques{t}_{type}\) a variable can not be limited to the resource type, and the system management unit can define any other value for it (Khaneghah 2017; Bakhishoff et al. 2020). The value defined for the \(Reques{t}_{type}\) a variable must have a structure that responds to requests of the type specified by the \(Reques{t}_{type}\) variable. One of the most critical features of the \(Reques{t}_{type}\) a variable is an ability to define it at design time or the ability to define it at execution time. Using either of these methods constitutes the use of pre-embedded response structures or runtime response structures.

-

2.

The \(Reques{t}_{domain}\) variable indicates in which domain the system management unit should answer the process request. Acceptable values for this variable, such as the \(Reques{t}_{type}\) the system management unit specifies a variable. Typically, this variable can include local, Region, System, Global, or Representation (Bakhishoff et al. 2020). Based on the value of this variable, the load balancer in traditional computing systems decides what processing elements the RSPRS should contain. Acceptable values for the \(Reques{t}_{domain}\) variable, in traditional computing systems, are specified by the designer at the design time. This variable's value affects the system's structure, whether Centralized, Decentralized or Distributed. The variable's value always equals the system in traditional Cluster computing systems. In this computing system, the load balancer uses the information of all processing elements to calculate the RSPRS set. The load balancer in peer-to-peer Grid computing systems uses \(Reques{t}_{domain}\) variable to decide on the maximum acceptable amount for the scalability of the computing system. Scalability of computing systems means changing the processing elements that can be members of the RSPRS set. Creating the RSPRS set means collecting information and specifying the time to manage the load distribution. Conversely, data collection using load balancing is time-consuming, and the computing system must execute more tasks for the load balancer rather than running the target application. On the other hand, choosing a value for \(Reques{t}_{domain}\) variable leads to fewer members in the RSPRS set. The load balancer has to create an appropriate proportion between the two concepts of time that is acceptable for the execution of the load balancer and acceptable values for \(Reques{t}_{domain}\) variable. Without going into the details of resource discovery in peer-to-peer computing systems, the concept of scalability in these systems is to add processing elements (or elements) to the RSPRS suite that leads to a response to request B.

-

3.

\(Reques{t}_{influence}\) and \(Reques{t}_{dependency}\) variables: When in a computing system, request B is generated by the computing process, it may cause changes in the units, or concepts of the computing system, in global activities, and especially in the local computing system. In the process of creating request B, elements and concepts may influence the formation of the request. In traditional cluster computing systems, the susceptibility of the request from the system is at the lowest level, and even it can be said that in this type of computing system, the effect of request B from the system is close to zero. In this type of computing system, when request B is generated in a process, the request is necessary for the CPU type, so the CPU queue of the local processing element is affected by this request. The nature of request B may be such that the processes connected to the process with request B need to be synchronized, which affects the connected processes. The load balancer must change the RSPRS and CPRPS sets under the influence of this process if the time requirements of the requested B are not met in the local processing element and consequently has to use the process migration unit. In peer-to-peer computing systems, the effect occurs in traditional cluster computing systems when request B is generated in a process. The most important effect of request B from the computing system is the ability to perform more than one global activity in the computing system. In this case, request B may be affected by requests from other global activity processes running concurrently on the local processing element. This influence can be due to requesting access to a shared resource in the local processing element. What makes the concept of the influence and susceptibility of request B on the elements and concepts of the computing system to be wholly controlled in traditional systems is the existence of management and control structures created at the time of system design for responding to request B.

-

4.

The matrix \(\left[{R}_{1},\dots .,{R}_{z}\right]\) is a matrix \(Z\times 1\), each of which is a part of the request Β. Dividing request B into Z sub-requests, using a load balancer at the computing or operating system level, allows each processing element of the system to execute part (or parts) of the request separately. There are different patterns for dividing each request B into Z sub-requests. In Bakhishoff et al. (2020), as a peer-to-peer computing system, it uses the operating system model to separate request B into four sub-requests. Each request can generally contain one (or more than one) of the four I / O, Memory, File, or Process requests at the operating system level. In traditional computing systems, typically, each request B only includes a request to access the CPU or process. Therefore, request B can be described by a 1 × 1 matrix in traditional computing systems.

3.3 Dynamic and interactive events

Due to its direct relationship with the process and the resource, the load balancer is entirely dependent on the concept of system complexity and the complexity of scientific applications in need of high performance computing systems. The distributed system manager needs to use more complex patterns if the scientific program becomes complex or the state of the system elements (source or process) changes. The load balancer must use information (both in its simple form and complex form) to create an optimal match between the processes' requests and the resources' characteristics, to maintain the system's structure to continue operating.

In complex computing systems, events may change the status of processes and computing resources in the system. In the management and control of the mentioned events, the load balancing should be in such a way that at any moment when the load balancing is activated, it must be able to maintain the structure of the system to continue operating. Load balancing matches the processing requests and resources in the system. Changing the status of processes and computing resources in complex computing systems affects the concepts, functionality, and relationship of the load balancer with the other system management components. DECS is one of the implementations of complex computing systems.

DECS are computational systems designed to execute applications that are dynamic and interactive. In this type of computing system, both processing elements and computing resources can be changed during program execution. The nature of computational processes is such that during the execution of the computational process, an event may occur in the system that leads to the definition and creation of a new requirement in the system. Such a requirement is not taken into account while designing the system.

In this computing system, the purpose of executing scientific applications is to reduce response time and use processing power to discover the laws governing natural events. In DECS, the program is a set of basic rules governing a natural event and uses these rules to discover other (or primary) laws governing a natural event.

This state allows processes running on a DECS to execute events that create dynamic and interactive events. In Innocenti et al. (2017), it is stated that dynamic and interactive events can be due to new request formation of new interactions (and communications) within or outside the system. These events cause the system to face a request it cannot respond it. This requirement was not considered in the initial responding structure for executing the application on this system.

To investigate the effect of dynamic and interactive events on the function of the load balancer, assume that the peer-to-peer distributed computing system in Bakhishoff et al. (2020) is running a scientific application of a dynamic and interactive nature (http://www.deep-project.EU). At the moment \(t=\alpha\), in the \(\omega\) processing element, request Β is generated. Depending on the nature of the request Β, one of the following two scenarios occurs. Request Β can be either a request with a temporal nature or a request for access to the resource (either a processing resource or any other resource defined in system management).

4 Request B of dynamic and interactive nature

In DECS, in addition to changing the number of constituent elements of the RSPRS set, each source \({R}_{m}\), the number of constituent properties of the description vector of the source \({R}_{m}\) It also varies during system runtime. Since this request is of dynamic and interactive nature, the load balancer encounters a request that nature is unknown to the unit. The unclear nature of the request may cause the load balancer to need to receive information other than the set of information collected about a particular source to respond to it. Therefore, in Exascale distributed computing systems, both the number of constituent elements of the RSPRS set and the number of constituent properties of the vector describe the state of each \({R}_{m}\) source change over time.

In distributed Exascale systems, not all of the \(Reques{t}_{type}\) variable’s values can be specified at design time, and acceptable values for it change during the execution of the scientific application. Also, in this type of computing system, three elements of the system designer, management unit, as well as processes with dynamic and interactive nature can define the value for the \(Reques{t}_{type}\) variable.

To have explicit knowledge of the acceptable values for the \(Reques{t}_{type}\) the variable allows the load balancer to decide on the concept of computing system zoning and also on which element (or elements) has a higher ability to respond to requests with a specific value in \(Reques{t}_{type}\) variable. If the acceptable values for the \(Reques{t}_{type}\) a variable is known, and if an acceptable amount of time of the process’s life has elapsed, the load balancer can decide on the continuation of execution in the process as soon as a request occurs by observing the value for the \(Reques{t}_{type}\) variable. In DECS, the possibility of defining an acceptable new value for the \(Reques{t}_{type}\) variable by the system management unit, as well as processes of interactive and dynamic nature, leads to the creation of new control and management structures for continuing the execution of applications. Creating new management and control structures based on the \(Reques{t}_{type}\) variable causes the load balancer to change the RSPRS set.

In Exascale computing systems, the \(Reques{t}_{domain}\) a variable can take on new values due to the possibility of defining a new global activity. This state expands the concept of the computing system. In peer-to-peer computing systems, the primary purpose of scalability is to obtain new computing resources to continue implementing activities related to scientific applications. While in DECS, the purpose of scalability, in addition to the mentioned concept, is the need for the load balancer to create new control and management structures to respond to dynamic and interactive requests. In DECS, the \(Reques{t}_{domain}\) a variable must be able to consider these conditions as acceptable values. This event makes it possible for the load balancer to define the concept of Scalability + if the nature of the request is distributed Exascale systems are of dynamic and interactive nature. Scalability + is an expansion over the concept of scalability in which the goal of scalability. Scalability + primarily accesses new resources and creates a responsive structure to execute request B.

The two variables \(Reques{t}_{influence}\) and \(Reques{t}_{dependency}\) DECS assigns different values during runtime, depending on request B's dynamic and interactive nature. A request B with dynamic and interactive nature inevitably leads to the creation of a new process, either an administrative process or a process of managing new interaction and communication within or outside the system. This process is fully correlated with the process with request B, which causes the CPRPS set, \(Reques{t}_{domain}\), \(Reques{t}_{dependency}\), \(Reques{t}_{influence}\) As well as \({R}_{1},\dots .,{R}_{z}\) vector to change. In distributed Exascale systems, creating global activity responsive to request B is dynamic and interactive, creating a new set of influences and susceptibilities on other global activities. To analyze the effect and susceptibility of request B and the processes created to respond to it on other elements and concepts in the computing system (and especially other global activities,) each global activity can be described based on the Affine page so that the intersection of the pages with each other shows the influence and susceptibility of any global activity on other global activities (Mirtaheri et al. 2013).

In DECS, the members of the vector \({R}_{1},\dots .,{R}_{z}\) may change during the procedure of responding to request B. Also, the number of constituent elements of the descriptive vector may change during the response to request B. This change is due to the interactions of the process that owns request B with the newly created process or other processes in the system and environment. Changing the components of the vector \({R}_{1},\dots .,{R}_{z}\) means changing the requirements, which is the answer to request Β. The load balancer should be able to take into account changes in the vector \({R}_{1},\dots .,{R}_{z}\) during the response procedure.

5 ExaLB framwork

According to the discussion above, a framework similar to the framework shown in Fig. 3 can be considered a framework for the definition and function of the load balancer in distributed Exascale systems.

As seen in Fig. 3, the load balancer requires the consideration of units that can address the decisions over the nature of the request. The load balancer needs units such as creating appropriate response structures to continue the global execution, considering the effects of executing more than one global activity, and the concept of the system environment.

6 RSPRS/CPRPS rewriting

As stated in Sect. 3.2, the request B type effectively forms the RSPRS set. The analyzer section of the load balancer makes decisions about the condition of the RSPRS set based on the following model, given the nature of request B.

-

1.

If B is of the traditional type and responding to it does not change the number of resources in the system. In this situation, the number of resources that make up the system is constant. The characteristics that describe the status of resources are also fixed. The fact that the above two values are constant causes the two variables \(m\) and \(n\) to have fixed and definite values in Formula 3. This state allows the existing RSPRS set to be used to respond to request B without the need for any modifications.

-

2.

Request B is of the traditional type, but it is impossible to respond to it using the resources available in the system. In this situation, by calling the resource discovery, the load balancer first expands the system intending to find a suitable resource to respond to the process request. Then, by examining the new set of RSPRS, which has been obtained from the addition of the mentioned resource to the previous set, it maps the two sets of the request pattern and the response pattern to each other and tries to establish the balance of load in the system. In this case, in formula 3, the variable \(m\) has a non-fixed value, and the variable \(n\) has a fixed value.

-

3.

Request B is dynamic and interactive. In Formula 3, neither of the variables \(m\) and \(n\) have a constant value due to the system's need for scalability and the lack of information about the cause and nature of request B, respectively. In this condition, the load balancer must first identify the nature of the request and then explore the characteristics of the resources with which it is possible to respond. In this case, the RSPRS set can respond to request B after making fundamental changes.

The task of the Nature Request unit is to determine the nature of the request. This event causes the need to define the request Nature unit in the load balancer in DECS. This unit must determine whether the request formed is of type (a) or (b). This element can decide whether the nature of the request is traditional or due to the dynamic and interactive occurrences based on the processor's behavior, the history of the activities, and the concept of annihilating polynomials (Wylie 2020). Due to the multi-dimensional nature of the process requests and the type of resources required to respond to requests in DECS, Formula 1 can be rewritten into Formula 5 based on the vector pattern.

Formula 5 is the vector form of the load balancer’s functionality. According to Formula 5:

-

Each process can be described as a \(1\times N\) vector in which N is the number of process requests executed in each processing element \(\omega\).

-

Each source in the processing element \(\omega\) can is described based on a \(1\times M\) vector in which M is the number of responses provided by the computing resource to the global computing processes.

-

Each process request is executed in the processing element \(\omega\) based on the \(2\times 2\) matrix in the form \(\left[Req_{type} Req_{Behavior} Req_{history} Req_{NULL}\right]\).

In the \(2\times 2\) matrix mentioned, \(Req_{type}\) Indicates the type of request, which tells what source the process request is available. The load balancer must be able to classify the resources in the computing system to extract the \(Req_{type}\) data. In the case of traditional computing systems, such as cluster, Grid, and peer-to-peer computing systems, \(Req_{type}\) In Exascale peer-to-peer computing system (Khaneghah 2017), each process request can be for one of four resource types of file, input/output, memory, or processing element is always of CPU type. In DECS, the request type can be sources other than the process resource.

Listed in the \(2\times 2\) matrix mentioned above, \(Req_{history}\) indicates the history of the request. From the point of view of the load balancer, each request for a computational process is either an independent request created for the first time or a follow-up request that has resulted from responding to another request. Maintaining a request history allows the load balancer to extract information about the resources required by the process and processing elements connected to the processing element \(\omega\) and respond to the request. From the point of view of the load balancer, the rule stated in formula six is held for each element of the data structure of the link list in \(Req_{history}\).

According to Formula 6, for each element defined in the \({Req}_{history}\) a data structure, there must be a corresponding member in the \(Resource_{histor{y}_{omega}}\) a data structure that represents the source by which the request was answered. This resource can be one of the local resources of the omega computing system or a source outside the omega computing element. Considering Formula 6, the \({Req}_{history}\) data becomes a linked list structure in which two pointers are defined. The first pointer refers to the subsequent subordinate request resulting from the response to the request, and the second pointer to a corresponding element in the data structure, \(Resource_{histor{y}_{omega}}\).

In the \(2\times 2\) matrix mentioned, \(Req_{behavior}\) represents the request behavior. The load balancer can consider different states for \(Req_{behavior}\). The primary purpose of this element is to investigate whether the behavior of global activity’s processes follows a particular pattern. This knowledge is implemented as a link list in which each node represents the unique properties of a process. From the point of view of the load balancer, a process can have a unique attribute (or attributes) regarding the three concepts of time, location, and dependency. These unique attributes of the request process define the request’s behavior. The behavior of each process request that constitutes the global activity follows formula 7.

In Formula 7, the request behavior of a global activity process consists of three concepts: the time limitation (or limitations) governing the request, the location limitation (or limitations) governing the request, and the dependence of the request on any other concept. The two limitations of location and dependency are discrete. Time limitations are of continuous interval type. In the case of a global activity constituent process, some of these attributes may have NULL values. The value of NULL means no limitation on that particular feature. The relationship and impact of these attributes on each other are determined for each process request. In the case of a particular request, certain limitations may be more critical and affect the value of other limitations. Ideally, the result of these three features on each other indicates the behavior of the process request.

Conventionally, the T, L, and D limitations must be independent. \(Req_{behavior}\) is maintained as a linked list in which each element is of the form \(<T,L,D>\). In the procedure of global computing process execution, if during each execution of the process, one of the three elements of the \(Req_{behavior}\) changes, one element is added to the linked list. The load balancer has access to the data set related to the three variables \(<T,L,D>\) at each process execution time. It can use the expected value of this data to decide on the independence or interdependence of one another. If Formula 8 is valid for each execution of the process, then two of the variables in \(<T,L,D>\) are of the independent type, and otherwise, they are interdependent.

In Formula 8, two variables' dependence and independence, are a time function. This event causes a) the load balancer to use regression if not independent for calculating the operator between two variables, and B) two variables can be independent of each other for a particular time and then interdependent, or vice versa.

In the \(2\times 2\) matrix mentioned in formula 5, \(Req_{NULL}\) is the main request and the reason for the formation of global activity. Each global activity is formed in a computational element, such as \(\omega\), to respond to a specific request. This specific request can be described in the form shown in Formula 9.

Formula 9 states that each element of \(Resource_{NULL}\) Is a linear polynomial in which \({x}_{i}\) represents the resources required to meet the main request of the global activity, \({A}_{i}\) represents the weight and importance of the resources requested, \({y}_{j}\) represents the limitations, Application limitations, and \({B}_{j}\) indicates the weight and importance of the limitations. Formula 9 can be rewritten for each B request.

In Formula 10, T is a linear conversion on the request space. This linear conversion represents responding to the request, which is answered under the linear T conversion. If Formula 10 is true, request B is a formal request; otherwise, request B is of dynamic and interactive nature.

-

4.

Each response provided by the source in the processing element \(\omega\) is defined based on a \(2\times 2\) matrix in the form of \(\left[Resource_{type} Resource_{fuctionality} Resource_{history} Resource_{NULL}\right]\).

In the \(2\times 2\) matrix mentioned, the \(Resource_{type}\) element refers to the responding source to the request. The computing system manager can use any pattern to classify the resources in the computing system. This classification must match the \(Req_{type}\) classification defined in the request matrix but does not have to be done as one by one adaption. The manager of the computing system, based on any function (or even, in some cases, based on any relations), can establish a match between the two mentioned concepts. In the computing system manager of Khaneghah (2017), a one-to-one match between the process request for resources and the manageable resources by the system manager is used based on the operating system’s pattern for the classification of resources. The load balancer uses \(Resource_{type}\) Information to define the concept of resource attributes. In this situation, the load balancer defines a set of indicators for each type of resource defined in the system manager unit. Any data structure at the operating system’s kernel can maintain information about these indicators.

One of the most important differences between various load balancer implementations is determining indicators of resource descriptors. The higher the number of indicators the load balancer uses, the more accurate it is in resource status. A clear view enables the load balancer to create an optimal match between the process request and the responding resource. On the other hand, using more indicators to describe the resource increase the time required to gather information about them. In traditional computing systems, the \(Resource_{type}\) In DECS, there may be a process request that (A) does not relate to the type of CPU resource and (B) can not be analyzed by the conventional attributes of resources that the load balancer uses to describe the resource. Typically includes the CPU computing resource. Indicators describing the CPU resource are idle and busy times, or the resource is either available or unavailable.

In distributed Exascale systems, the load balancer typically uses the concept of response history to examine the number of indicators and whether the indicators can respond to process requests. For this purpose, the load balancer utilizes a function as one in Eq. 11.

As can be seen in Formula 11, the load balancer in distributed Exascale systems is a binomial function to check whether the defined indicators are capable of responding to process requests. This function is a two-variable function of time and request, independent variables. The mechanism of function 11 is based on the fact that in the case of a specific request \(Req_{A}\) Examines how successfully the request has been answered locally. The local response level means that the process is initially created locally or is part of a global activity intended to run locally in the processing element. If this amount is more than a certain number, the defined indicators are appropriate, and the load balancer does not need to change the defined indicators. If less, it means there is a need for defining new indicators for the resources in the computing system.

In Formula 11, according to the model presented in Khaneghah (2017), since only the number of global processes is known, to calculate the number of locally responded processes, the difference between the number of global processes responses and the number of all processes responses is used.

The \(Resource_{history}\) indicates the history of resource usage. The load balancer for each resource defined in the computing system maintains two types of source information related to the resource (with its descriptive indicators) and historical information related to the responses of the resource to requests. The second type leads to the creation of a shared data structure between the \(Resource_{history}\) and \(Req_{history}\). Historical information about descriptive indicators represents the model the load balancer uses to describe the resource for the processes in the system. The more dynamic and interactive the processes in the system, the more varied the patterns used by the load balancer to describe the resource.

\(Resource_{functionality}\) indicates the functionality of the resource. This knowledge can be described based on variables such as time, cost, and type of response to existing requests. In traditional computing systems, the resource's function is considered time-independent. In such systems, the load balancer assumes that (A) the function of the resource \(\alpha\) is fixed during the execution of the scientific application, and (B) the independent variable describing the resource \(\alpha\) is constant and equal to the measurable indicator of the resource’s operating time. In DECS, (A) the function of the resource \(\alpha\) changes during the execution of the scientific application, and (B) more than one independent variable can be defined to describe the resource \(\alpha\), and, consequently, the measurable indicator of the resource. In this type of computing system, the finite vector of V_Alpha can be defined for the resource \(\alpha\), whose indices represent evaluation indicators or independent variables that describe the function of the resource. The load balancer uses a formula similar to Eq. 12 to determine what the function of the resource \(\alpha\) is at the moment \(t\), for the process \(Fi\) (which is itself part of a global activity).

In Eq. 12, T is a linear operation on the finite vector space of V_Alpha. If \(f\) is equal to the polynomials defining the state of the resource functionality, \(f\) is necessarily a type of indicator describing the functionality of the resource Alpha if \(f(T)=0\). In this case, the function \(f\) represents the functionality of the resource Alpha at the moment \(t\) from the point of view of the resource management unit for the process \(Fi\). In distributed Exascale systems, each resource Alpha can have different functions in terms of the elements that make up the system manager unit. In Eq. 12, the linear function T is the operator representing the function of the load balancer on the resource Alpha. This operator indicates what activities the load balancer considers to be definable and applicable to the resource Alpha. Based on Eq. 12, we can define an ordered base such as \(\{{V}_{Alph{a}_{1}}, \dots ,{V}_{Alph{a}_{m}}\}\) for the V_Alpha vector, matrix \(U\) can be defined to represent \(T\) based on that. By considering the existing assumption, Equation No. 13 can be defined.

Equation 12 is calculated based on formula 14 in the case where \(m\) is a finite number greater than 2.

Equation 13 solves Eq. 12 in the condition that the resource Alpha is described based on two indicators in which their definition is not a function of a time-independent variable. Equation 14 solves Eq. 12 in the condition that the resource Alpha is based on the \(m\) indicators and is a function of the time-independent variable.

The matrix \(U\) is a description of the activity in time and is defined based on K, the displacement loop with the identical element consisting of all polynomials of T. In general, the definition of the U matrix is \({U}_{ij}={\sigma }_{ij}T-{A}_{ij}I\). Equations 13 and 14 are solutions to find \(f(T)\) at the definite moment \(t\). In the most general case, to determine f (T), it is necessary to calculate the matrix \(U\). The general concept defines the matrix U that any resource can be defined based on two sets of Z, which represents the resource type, and X, which represents the activities that can be performed on the resource. These two sets create K displacement rings with an identical element consisting of all definable polynomials of T. The identical element consists of all definable polynomials of T, which embodies the concept of resource execution (serving) time. This concept can be defined as a familiar concept for all resources. Therefore, in Eqs. 13 and 14, an element I am equal to Busy Time. Two operations of and can are defined for two sets of Z and X. Action \(\otimes\) is the possibility of acting on a specific resource type, and action \(\oplus\) represents the element that performs the task. For example, in the case of the CPU resource type, the possible operations of allocation, reallocation, and the executing element for the scheduler.

In the \(2\times 2\) matrix mentioned, \(Resource_{NULL}\) is the coefficient of resource importance in the execution of global activity. For each activity \(Fi\), each resource Alpha has a coefficient that can be expressed according to Formula 15.

In Formula 15, each element of \(Resource_{NULL}\) Is a linear polynomial in which \({x}_{i}\) denotes global activities that use the resource Alpha, \({A}_{i}\) denotes the time each global activity uses the resource Alpha, \({y}_{j}\) denotes limitations over resources, and \({B}_{j}\) indicates the weight and importance of the limitations.

Formula 15 can be rewritten to Formula 16 for each resource β.

In Formula 16, if the value of \({Resource}_{\beta } \left(T\right) and Resource_{NULL}*{Resource}_{\beta }\left(T\right)\) is zero, then the resource \(\beta\) is a resource with a traditional pattern, and otherwise, the resource \(\beta\) is a resource capable of performing activities of a dynamic and interactive nature. Formula 16, \(T\) is a linear transformation of the resource \(\beta\)’s space. This linear conversion indicates the use of the resource. The request is answered under the linear transformation of \(T\).

6.1 Data gathering

The main task of the load balancer is to establish a proper allocation between the two sets of RSPRS and CPRPS. Ideally, this unit should have a clear view of both sets to carry out its tasks. In DECS, both the CPRPS and RSPRS sets can change at any point in the execution of the scientific application. If process Z in DECS has the request \(\beta\) at \(t=V\), it causes the load balancer to be activated, in which case the load balancer creates an image of the system status at the moment \(t=V\). Creating an image by the load balancer means creating the CPRPS and RSPRS sets associated with the request β and the process Z. Dynamic and interactive events may occur at any point in the system’s execution. Consequently, CPRPS and RSPRS may change. However, the load balancer, from the moment \(t=V\) to the moment of responding to the β request, considers the system status of the request β based on the dual pair RI:: <RSPRS, CPRPS>. Dynamic and interactive events can cause a significant difference between the real RI and the RI at the starting time of the distribution task. Sometimes, it violates the cause of the load balancer’s activation (Khaneghah et al. 2018). For this purpose, RI must be converted from its traditional state to RI (t), which means that the two sets of CPRPS and RSPRS change from time-independent to time-dependent variables.

Rewriting RI based on the variable time means rewriting Eq. 5 with the independent time variable in Eq. 17.

Equation 17 shows that taking a partial derivative of the \(\left[Resource_{type} Resource_{fuctionality} Resource_{history} Resource_{NULL}\right]\) and \(\left[Req_{type} Req_{Behavior} Req_{history} Req_{NULL}\right]\) matrices in terms of the independent variable of time allow each of the two sets of CPRPS and RSPR to be rewritten using the time variable. The independent axial variable of matrices \(\left[Resource_{type} Resource_{fuctionality} Resource_{history} Resource_{NULL}\right]\), and \(\left[Req_{type} Req_{Behavior} Req_{history} Req_{NULL}\right]\) are the resource and the request variables, respectively. According to the definition of axial variables and the need to rewrite based on the independent variable of time, Eq. 17 can be rewritten to Forms 18 and 19.

Equations 18 and 19 are the rewrites of the matrices that make up Eq. 5, Regarding axial variables of resource and request and the independent variable of time. The most critical challenge in calculating Eqs. 18 and 19 is the time and number of times these Equations are to be calculated. The higher the calculation frequency of these Equations, the closer the calculated RI is to the actual RI. Ideally, for each unit of time, the load balancer performs Eqs. 18 and 19, where the calculated RI equals the actual RI. This event increases the cost of executing the load balancer, and this task would need a separate processing element so that calculations could be performed continuously. This event contradicts the nature of the DECS. Therefore, the load balancer uses the concept of a similar matrix in each processing element to calculate the RI close to the actual RI.

From the point of view of the load balancer, if two RI matrices related to the two permissible states are similar, it means that the dynamic and interactive nature of the computing system has not occurred. For this case, the load balancer uses the concept of the axial element. The axial element of the matrix described in Eq. 18 is the \(\left[\frac{\partial Resource_{NULL}}{\partial resource} \frac{\partial Resource_{NULL}}{\partial time}\right]\) element, and in Eq. 19, is the \(\left[\frac{\partial Req_{NULL}}{\partial request} \frac{\partial Req_{NULL}}{\partial time}\right]\) element. The load balancer decides on the similarity of the matrices by comparing the axial element of matrices at the moment \(t=Alpha\) with that of the moment \(t=Alpha+E\). The load balancer also determines the value of \(E\) during system execution. During this time (and calculating the RI), the load balancer can decide on the value of E for each activity, with which the matrices of Eqs. 18 and 19 differ from these matrices at the moment before E.

6.2 Scalability

One of the main features of distributed computing systems is the introduction to scalability. Resource discovery is the central concept of scalability in traditional computing systems (Lehman et al. 2019). The general mechanism governing the scalability in this type of computing system is based on the fact that a request occurs in the system that neither the local operating system of the process nor the load balancer can respond to. Therefore, the load balancer calls the resource discovery. Based on the type of resource requested by the process and resource discovery mechanism, the resource discovery tries to find a processing element outside the system and can respond to the process request. The mechanism used by the resource discovery indicates the structure of the response to the request and is specified when designing the computing system. Defining the resource discovery mechanism at the time of system design is possible due to having information about the response structure required for the implementation of the scientific application by the system designer.

The scalability mechanism in traditional computing systems increases the system's ability to respond to process requests with the arrival of computing resources and new computing processes. This event causes the load balancer to redistribute when scalability occurs. The load balancer must be able to extract the features and capabilities of each element added to the system (or delete information of each element removed from the system) to decide about the possibility of using that element (or the impossibility of using the removed element) in the procedure of responding to the process request(s).

In DECS, scalability has a different definition and functionality due to the system's possibility of dynamic and interactive events. The most crucial difference between scalability in traditional computing systems and DECS is that they have information about the nature of requests, the response structure, and the lack of events that could change the system's state or the environment during scalability.

In traditional distributed computing systems, the nature of the request does not affect the mechanism used to discover the resource or the scalability. The load balancer sends the triad of <Request type, Process, Time, and Location Limitation> to the resource discovery. The resource discovery creates a response structure based on its mechanism by considering the type of request and time and location limitations. In DECS, the nature of conventional requests differs from that of dynamic and interactive requests.

The nature of the request in this computing system refers to why the process has created a request that has led to the need for scalability. In conventional requests, the scalability response structure is created to respond to the process request based on the centrality of the resource discovery mechanism. On the other hand, when a request of dynamic and interactive nature occurs, the reason for the request is not apparent for the load balancing and, consequently, for the resource discovery. If the load balancer fails to determine the nature of the request, the resource discovery may create a response structure that cannot respond to the process request. Any situation, such as creating new processes or the need for interactions and communications within and outside the system, causes requests for a particular resource to be different from others (Khaneghah and Sharifi 2014).

The focus of resource discovery in this computing system is to find the processing element that, in addition to providing a resource that can meet the needs of the process, must also be in line with the nature of the request. In DECS, the load balancer sends a quadruple of < Request Nature, Request Type, Process, Time, and Location Limitation > to the resource discovery. Based on the pattern of arguments received from the load balancer, the resource discovery decides whether to use the traditional concept of resource discovery or the ExaScalability pattern for scalability. In DECS, the nature of the request determines which structure to be created for responding to the process request and which element with what features can respond to that request. In traditional computing systems, this is defined during system design while defining the mechanism for resource discovery.

The resource discovery in the computing system is affected by a set of practical factors. These factors also affect the response to the process request. In traditional computing systems, nothing happens in the computing system that affects the practical factors of the resource discovery during the resource discovery procedure.

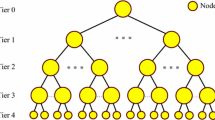

It is assumed that in the distributed system of Khaneghah (2017), to understand the resource discovery scenario in a DECS, an event of dynamic and interactive nature occurs at the moment t = Alpha in one of the members of the computing system. Figure 4 shows the event status leading to scalability in the system.

As seen in Fig. 4, in machine O, a member of the computing system (marked with a lightning symbol), an event of a dynamic and interactive nature occurs. This event can be any of the situations described, the creation of a new process T, or the new interactions and connections within and outside a system. The computing system cannot respond to this request. Up to this point, the system status follows the pattern of a traditionally distributed computing system.

The difference between a traditional computing system and a DECS begins at the moment of request analysis by the system manager. In a traditional computing system, the manager recognizes the nature of the request, so it calls the resource discovery. The resource discovery discovers the resource that can respond to requests based on the predefined mechanism and policy. In DECS, when a request is generated in the system, the system manager does not know the nature of the request, so as shown in Fig. 4, it creates a new global activity for managing the request.

In Fig. 4, the occurrence of a dynamic and interactive event has caused the global activity in machine y to continue, and the manager of machine O has created a response structure for managing the request with dynamic and interactive nature. As with any global activity, the request can be answered on Machine X or transferred to other machines on the DECS.

Scalability in DECS, in addition to its traditional role, must have the load balancer to make decisions while calling for resource discovery or creating a new response structure. The new response structure should not violate system performance.

At the moment of load imbalance in DECS due to a dynamic and interactive event, the load balancer can take one of the two redistribution policies, either going to the following equilibrium or returning to the previous stable distribution status (Khaneghah and Sharifi 2014). In this case, we can define the Effort variable, which represents the coefficient of system call by the load balancer relative to the total system calls executed in a single unit of time. The \(Effor{t}_{Si}\) is calculated by Eq. 20.

As stated in Eq. 20, the \(Effor{t}_{Si}\) is equal to the number of system calls associated with the load balancer relative to the total system calls during the interval when the load balancer is busy redistributing the load and creating a new state of balance in the system. In Eq. 20, the \(MACHINE Z\) is defined because there may be more than one beneficiary machine involved. The \(MACHINE Z\) variable is equal to the total number of beneficiary machines related to the load balancer’s activities. In Eq. 20, the load balancer redistributes the load in the time interval [LB, LB + ε]. If the value of the \(MACHINE Z\) variable is more than one, the variable \({Statistic}_{Sij}\) As shown in Eq. 21, it should be used. The \({Statistic}_{Sij}\) can be calculated by Eq. 21. Note that the status of the load balance is denoted by \(i\) for the time before the load balancer is activated, and the status of the load balance after it is redistributed is shown by \(j\).