Abstract

Focusing on the inefficiency and labor-intensiveness of contact and sensor-based crop height measurement methods, a crop height measurement method based on machine vision is proposed. Firstly, a crop height measurement model is established based on the pinhole imaging principle, and the dividing line between the crops and the background is extracted through a series of morphological operations. Secondly, the foreground is segmented according to the H channel of the HSV color space. To help extract the complete crop region, a global scan is performed over multiple images to obtain a segmentation threshold with a certain fault tolerance, and the inter-frame sum method and a large-scale filter template are adopted for image enhancement. Considering the uneven height lines and the holes in the crop region, the boundary line between the crop region and the background is strengthened through morphological operations such as dilation and erosion. Finally, the Sobel operator is applied to detect the horizontal boundary line, from whose parameters the crop height is calculated. Experimental results show that, after the inter-frame enhanced image is filtered with large-window median and morphological operations and the holes are filled, the complete upper boundary line of rice and wheat is obtained for height measurement. The average error of crop height is less than 1.6%, and the processing time per frame is within 50 ms.

Article Highlights

(1) A crop height measurement model is established based on the pinhole imaging principle, and the dividing line between the crops and the background is extracted through a series of morphological operations.

(2) The foreground is segmented in the HSV color space, and the crop region is extracted with the inter-frame sum method and a large-scale filter template. Morphological operations such as dilation and erosion are used to refine the boundary line. Finally, the boundary line is detected with the Sobel operator and the crop height is calculated.

(3) Experimental results show that, after the inter-frame enhanced image is filtered with large-window median and morphological operations and the holes are filled, the complete upper boundary line of rice and wheat is obtained for height measurement. The average error of crop height is less than 1.6%, and the processing time per frame is within 50 ms.


1 Introduction

Crop height is the most intuitive morphological indicator of overall crop growth and also comprehensively reflects soil, hydrological, meteorological and other factors. Plant height measurement can provide a reliable basis for crop species identification, pest prevention and control, yield estimation and reasonable control of plant height in breeding [1, 2]. To ensure the working stability of a harvesting robot, the height of the puller needs to be adjusted according to the crop stalk height. The real-time feeding amount also needs to be calculated, since it reflects the load level of the harvester and helps prevent overloading. Thus, the height of the crop stalk is a key parameter in the operation of an intelligent harvester.

Traditionally, crop height is measured with a scale, which is time-consuming, inefficient and strongly affected by subjective factors, making it difficult to observe plant height simultaneously over a large area [3]. Another traditional method calculates vegetation indices from spectral data and builds a regression model to estimate plant height indirectly [4, 5]. However, spectral indices saturate during the late growth period and become insensitive to changes in crop height. Hence, many researchers have studied automatic crop height measurement with high precision, high frequency and high efficiency. Tao Huilin et al. [6, 7] used drones to obtain hyperspectral images and used the generated digital elevation model (DEM), which contains terrain elevation information, and digital surface model (DSM), which contains the elevation of crops, trees and other objects, to predict crop height. The extracted plant height fitted the measured plant height closely, with a root mean square error of only 6.45 cm. Yang Song et al. [8] used drones to obtain point cloud data of winter wheat and determined plant height with statistical analysis and a moving cuboid filter, achieving a low root mean square error (RMSE) of 6.37 cm and a mean absolute error (MAE) of 5.07 cm. Jelle ten Harkel et al. [9] used UAV-LiDAR to estimate the height of different crops, with a root mean square error of about 10.1 cm. These methods are costly and complicated to operate, and improper manual operation may cause great losses. Thi Phan et al. [10] measured height from point cloud data, but the system is inconvenient to operate: tracks must be laid for the laser scanner to move along while scanning, which is costly and unsuitable for agricultural planting conditions. Wan-Soo Kim et al. [11] proposed measuring the height of various field crops with binocular stereo vision. The stereo images are matched to obtain a disparity map, and the disparity of each pixel yields a depth map. The edge image of the crop is obtained from the depth map, and the highest and lowest points of the crop in the edge image determine the crop height. Determining crop height from point cloud information is computationally intensive, which makes real-time performance difficult to guarantee, whereas monocular vision is simple to install and convenient to use. This paper therefore uses monocular vision to measure crop height.

This paper proposes a crop height measurement method based on machine vision. Firstly, a height measurement model for rice and wheat is established based on the characteristics of the measurement scene, and the foreground is segmented in the HSV color space by exploiting the stability of the H channel. The inter-frame sum method and a large-scale convolutional mask are then used to enhance the image, the horizontal boundary line is detected with the Sobel operator, and the crop height is calculated from the position of this line. The experimental results show that the method measures the height of rice and wheat accurately in real time, with an average error of less than 1.6% and a processing time of less than 50 ms per frame. Section 2 details the machine-vision-based crop height detection algorithm, Sect. 3 presents the experiments, and Sect. 4 summarizes the paper.

2 Methods

2.1 Principle of crop height measurement

In the crop height measurement system, the camera is mounted on the right side of the harvester with respect to its forward direction, as shown in Fig. 1. The optical axis of the camera is parallel to the ground and perpendicular to the forward direction of the harvester, and the harvester always keeps full cutting width while harvesting.

Given the camera position and the operating characteristics of the harvester, the pinhole imaging principle can be used for measurement. As shown in Fig. 2, \(P^{\prime}\) is the optical center, plane \(A^{\prime}B^{\prime}C^{\prime}D^{\prime}\) is the imaging plane, and plane ABCD is the object plane.

The distance from the camera to the unharvested area, which depends on the width of the harvester’s header, is constant.

where d is the distance from the camera to the object plane, and \({|}P^{\prime}O^{\prime}{|}\) is the distance from the lens to the image plane.

Similarly, the following can be obtained.

From Eqs. (2) and (3), the real height of a crop anywhere in the image depends only on its pixel height, the distance \({|}P^{\prime}O^{\prime}{|}\) from the lens to the image plane, and the distance d from the camera to the object plane. Since d and \({|}P^{\prime}O^{\prime}{|}\) are both fixed during measurement, their ratio can be obtained by experimental calibration as the height factor s, and the true crop height can be calculated from its pixel height in the image.
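Under the standard pinhole model, the relation described above can be sketched as follows. This is an illustration rather than a reproduction of Eqs. (1)–(3): the pixel pitch \(\delta\) (mm per pixel), the sensor-plane image height h and the crop-root row \(y_{root}\) are symbols introduced here, and image rows are assumed to be counted downward from the top of the image.

\[
\frac{h}{|P^{\prime}O^{\prime}|} = \frac{H}{d}
\;\Longrightarrow\;
H = \frac{d}{|P^{\prime}O^{\prime}|}\,h
  = \frac{d\,\delta}{|P^{\prime}O^{\prime}|}\,\bigl(y_{root} - H_{top}\bigr)
  = s\,\bigl(y_{root} - H_{top}\bigr)
\]

Here H is the real crop height and \(h = \delta\,(y_{root} - H_{top})\) is its image on the sensor, so the calibrated factor s absorbs the pixel pitch and has units of mm per pixel. Because d is fixed by the header width and \({|}P^{\prime}O^{\prime}{|}\) by the lens, s stays constant during harvesting.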

Calibration should be performed multiple times before the experiment to obtain the height corresponding to one pixel and the position of the crop roots in the image, and the results are averaged. The crop height can then be obtained from the height factor s and the position of the height line \(H_{top}\) in the image.
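A minimal sketch of the calibration and height computation, assuming the pinhole relation H = s·(y_root − H_top) with image rows counted downward. The function names and the sample calibration tuples are hypothetical; in practice the true heights would come from tape-measure readings taken at several sites.

```python
from statistics import mean

def calibrate(samples):
    """Estimate the height factor s (mm per pixel) and the mean crop-root row.

    samples: (true_height_mm, root_row, top_row) tuples, one per calibration
    shot, with image rows counted downward from the top of the image.
    """
    factors = [height / (root - top) for height, root, top in samples]
    roots = [root for _, root, _ in samples]
    return mean(factors), mean(roots)

def crop_height(s, y_root, h_top):
    """Real crop height in mm from the height-line row H_top."""
    return s * (y_root - h_top)

# Hypothetical calibration shots: (tape-measured height, root row, top row).
s, y_root = calibrate([(1000.0, 900, 400), (800.0, 900, 500)])
height_mm = crop_height(s, y_root, 450)  # 2.0 mm/pixel * 450 pixels = 900.0 mm
```

Averaging the per-shot factors, rather than fitting a single regression, mirrors the "calibrate several times and take the average" procedure described in the text.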

2.2 Height line extraction of crop based on color features

In crop height measurement, background objects in the image such as trees and sky can interfere with the extraction of crop height lines, which is the key point and main difficulty of the measurement.

2.2.1 Image acquisition and preprocessing

To reduce the computational load, the large captured image is first downscaled. The crop height line is extracted on the scaled image and then mapped back to the coordinates of the original image.

Image scaling methods are either image-pyramid-based or interpolation-based, and interpolation-based methods include nearest-neighbor, bilinear and bicubic interpolation [12]. Since the upper edge of the crop in the acquired image fluctuates within a certain range, pixel-level distortion does not affect the measurement of crop height. Therefore, this paper focuses on obtaining the edge line, ignores image details where appropriate, and tolerates a certain degree of image distortion. A Gaussian pyramid is used to downsample the image.

Gaussian pyramid downsampling first convolves the image with a Gaussian kernel to smooth it and remove noise, ensuring sampling quality. The even-numbered rows and columns are then removed, so the output is half the size in each dimension and its aspect ratio remains unchanged.

2.2.2 Crop region extraction based on HSV color space

To extract the crop height line, the crop region must be separated from background such as sky and trees. Since mature crops have distinct color characteristics, color-based image segmentation can be used to obtain the upper boundary line of the crop.

A suitable color space must be selected before extracting the crop region. The RGB color model is closely related to the human visual system and is widely used in electronic displays, but its three color components are highly correlated: a change in one component may be accompanied by changes in the others, and when the brightness of a color changes, all three components change accordingly. Therefore, the RGB model is not well suited to color-based image segmentation. The HSV color model is represented as a cone, in which hue H is an angle ranging from 0° to 360° that gives the position of the color. Whereas in the RGB model color information is determined jointly by all three components, in the HSV model color can be represented by H alone. Because the HSV model expresses color intuitively, it is often used for color-based image segmentation [13,14,15].
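Hue can be computed per pixel with Python's standard `colorsys` module, as sketched below. Hue scales differ between tools: `colorsys` returns hue in [0, 1), converted here to degrees, whereas OpenCV stores 8-bit hue as degrees halved (0–179). The text does not state which scale its crop threshold uses, so both conversions are shown; the function names are illustrative.

```python
import colorsys

def hue_degrees(r, g, b):
    """Hue of an 8-bit RGB pixel as an angle in [0, 360)."""
    h, _, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360

def hue_opencv(r, g, b):
    """Hue on OpenCV's 8-bit scale: degrees halved, wrapped into 0-179."""
    return round(hue_degrees(r, g, b) / 2) % 180

yellow_deg = hue_degrees(255, 255, 0)  # about 60 degrees
yellow_cv = hue_opencv(255, 255, 0)    # 30 on the 0-179 scale
```

Only the hue is needed for segmentation, which is why saturation and value are discarded in `hue_degrees`.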

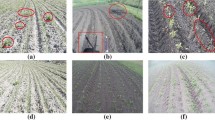

As shown in Fig. 3, the image converted to the HSV color space is analyzed: the left image shows a wheat harvest and the right a rice harvest. The three channel values of a column of pixels in the middle of each image are examined. The H values of the crop regions in both images are below 50, clearly different from those of the background.

The H values of the rice and wheat regions in multiple images are averaged, and a certain error tolerance is added to obtain the threshold T. The image is then scanned globally and segmented with threshold T.
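The threshold estimate can be sketched as follows, assuming hue samples have already been collected from pixels known to be crop in each image. The tolerance value and the sample numbers are hypothetical; the text does not give them.

```python
from statistics import mean

def estimate_threshold(crop_hue_samples, tolerance):
    """Threshold T: mean crop-region hue over several images plus a tolerance.

    crop_hue_samples: one list of hue values per image, sampled from pixels
    known to belong to the crop. tolerance: hypothetical safety margin in
    the same hue units as the samples.
    """
    per_image_means = [mean(values) for values in crop_hue_samples]
    return mean(per_image_means) + tolerance

# Hypothetical hue samples from two images (OpenCV 0-179 scale).
T = estimate_threshold([[20, 24, 22], [26, 30, 28]], tolerance=10)  # -> 35
```

Averaging per-image means weights every image equally regardless of how many crop pixels were sampled from it; pooling all samples into a single mean would be an equally plausible reading of the description.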

The thresholding segmentation is given by formula (5), which separates the background area from the crop area.

where f(x,y) is the three-channel HSV pixel value of the original image at (x,y), and \(f(x,y)_H\) is its H-channel value. g(x,y) is the HSV pixel value at (x,y) after conversion, and \(g(x,y)_H\), \(g(x,y)_S\) and \(g(x,y)_V\) are its three channel values. T is the threshold. When the H value of a pixel in the original image does not exceed the threshold, its three channel values remain unchanged; otherwise all three become zero.
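Formula (5) can be sketched as a per-pixel rule, assuming, as Fig. 3 indicates, that crop pixels are those whose hue does not exceed T. The image is represented as rows of (h, s, v) tuples for illustration; the function name is hypothetical.

```python
def segment_by_hue(hsv_image, T):
    """Keep crop pixels (hue <= T) unchanged and set every other pixel to (0, 0, 0).

    hsv_image: rows of (h, s, v) tuples; T: hue threshold in the same units.
    """
    return [[px if px[0] <= T else (0, 0, 0) for px in row] for row in hsv_image]

# A crop-coloured pixel (hue 20) survives, a sky-coloured pixel (hue 100) is zeroed.
segmented = segment_by_hue([[(20, 100, 100), (100, 50, 50)]], 35)
```

Because hue is undefined for gray pixels (`colorsys` reports 0 for them), a practical version might also require a minimum saturation; that extra check is not part of the described method.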

As shown in Fig. 4, after conversion, pixels whose H, S and V values are all 0 are background and the remaining pixels are foreground. Figures 4a1 and 4b1 are the ROI1 regions obtained from the original wheat and rice images, and Figs. 4a2 and 4b2 are the corresponding images after color feature processing, in which the crop regions are completely preserved. Figure 4c1 is a crop image taken with a handheld camera; the crop region is still accurately extracted despite interference from distant woods, vehicles and other background objects. Owing to the camera's installation position, there is usually little background interference, so color-based crop region extraction is effective.

2.2.3 Multi-frame summation for image enhancement

Crops in the images collected by the intelligent harvester may be locally taller than the surrounding crop. To improve the extraction accuracy of the upper boundary of the crop region and avoid errors caused by such locally tall crops, the crop height line obtained in the ROI1 area of the previous frame is used to assist height estimation in the ROI1 area of the current frame, yielding ROI2 for image segmentation. This improves processing accuracy, reduces processing time and enables accurate crop height measurement.
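One way to derive ROI2 from the previous frame's height line is a horizontal band of rows around it, sketched below. The band half-height `margin` and the fallback to the full ROI1 when no previous line exists are assumptions for illustration; the text does not specify how ROI2 is sized.

```python
def roi2_rows(prev_line_row, roi1_height, margin):
    """Return the row range [top, bottom) of ROI2 inside ROI1.

    prev_line_row: height-line row found in the previous frame, or None.
    margin: hypothetical half-height of the search band, in pixels.
    """
    if prev_line_row is None:  # first frame: search the whole ROI1
        return 0, roi1_height
    top = max(0, prev_line_row - margin)
    bottom = min(roi1_height, prev_line_row + margin + 1)
    return top, bottom

band = roi2_rows(100, 480, 40)  # rows 60..140 inclusive, i.e. (60, 141)
```

Restricting the search to a band also limits how far an isolated, locally tall plant can pull the detected line away from the previous estimate.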

As shown in Fig. 4, the image obtained by color-feature segmentation contains a large number of black, irregular holes, which must be removed to simplify boundary line extraction in the later stage. Since the crop height lines in two adjacent frames differ little, the inter-frame sum method can be used to enhance the image and eliminate the holes.

Figures 5a–c show frame n−2, frame n−1 and the current frame n. The inter-frame sum method fills the black holes at the bottom of the frame, improves image quality, facilitates the subsequent extraction of the upper boundary line and improves its accuracy.
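The inter-frame sum can be sketched as a saturating pixel-wise addition of the last three segmented frames, shown below for single-channel 8-bit images. Clipping at 255 mirrors the saturating addition of 8-bit image libraries; treating frames as single-channel lists and buffering them in a `deque` of length 3 are simplifications assumed for this sketch.

```python
from collections import deque

def inter_frame_sum(frames):
    """Pixel-wise sum of equally sized 8-bit single-channel frames, clipped to 255."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[min(255, sum(frame[r][c] for frame in frames)) for c in range(cols)]
            for r in range(rows)]

# Keep frames n-2, n-1 and n; older frames drop out automatically.
history = deque(maxlen=3)
for frame in ([[0, 255], [0, 0]], [[0, 0], [255, 0]], [[100, 0], [0, 0]]):
    history.append(frame)
enhanced = inter_frame_sum(list(history))
```

A hole that is black in one frame is filled whenever either neighboring frame has crop at that pixel, which is why the method works only while the height line moves little between frames.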

2.2.4 Filling and eliminating holes

The image obtained by the inter-frame sum method still contains many holes, so a large-size mean filter is used to blur the image and eliminate small holes. Mean filtering slides a template over the entire image and takes the mean of the pixels inside the template as the new value of its center pixel. With a large template, a wide neighborhood is taken into account, so holes up to a certain size can be filled.
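A large-window mean filter can be sketched with an integral image (summed-area table), which makes the cost per pixel independent of the window size. Border handling here averages only the pixels inside the image, which differs from OpenCV's default `blur` borders; the function name and that choice are assumptions for this sketch.

```python
def mean_filter(img, k):
    """k x k mean filter (k odd) computed from an integral image.

    At the borders only the part of the window inside the image is averaged.
    """
    h, w = len(img), len(img[0])
    # integral[r][c] holds the sum of img[0:r][0:c]
    integral = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            integral[r + 1][c + 1] = integral[r][c + 1] + row_sum
    half = k // 2
    out = []
    for r in range(h):
        r0, r1 = max(0, r - half), min(h, r + half + 1)
        row = []
        for c in range(w):
            c0, c1 = max(0, c - half), min(w, c + half + 1)
            total = (integral[r1][c1] - integral[r0][c1]
                     - integral[r1][c0] + integral[r0][c0])
            row.append(total / ((r1 - r0) * (c1 - c0)))
        out.append(row)
    return out

# A one-pixel hole inside crop: after filtering its value rises to 2040 / 9.
blurred = mean_filter([[255, 255, 255], [255, 0, 255], [255, 255, 255]], 3)
```

The former hole now has a value of about 227, so any subsequent binarization threshold below that turns it back into foreground.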

To reduce the amount of image data, the color image is converted to a single-channel grayscale image using formula (6) before mean filtering. The converted image still clearly distinguishes the crop region from the black background.

The image is then binarized to obtain a clear crop height line, with the gray value of the crop region set to 255 and that of the background set to 0.
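The grayscale conversion and binarization can be sketched as below. The BT.601 weights (0.299, 0.587, 0.114) and the threshold value are assumptions; formula (6) may use different weights. Because background pixels are exactly zero after segmentation, any positive weighting keeps the background at 0.

```python
def to_gray(image):
    """Weighted sum of three channels (BT.601 weights assumed here)."""
    return [[0.299 * a + 0.587 * b + 0.114 * c for a, b, c in row] for row in image]

def binarize(gray_image, threshold):
    """Crop pixels (gray value above threshold) -> 255, background -> 0."""
    return [[255 if v > threshold else 0 for v in row] for row in gray_image]

# Hypothetical threshold of 10: the zeroed background stays 0, crop becomes 255.
mask = binarize(to_gray([[(0, 0, 0), (200, 180, 60)]]), 10)
```

In the pipeline described above, `binarize` would be applied to the mean-filtered image, so the threshold also controls how large a blurred hole must be before it stays background.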

To eliminate as many holes as possible and smooth the upper boundary of the crop region, morphological dilation is applied. Dilation traverses the image with an s×s structuring element E and merges E with the corresponding image region by vector addition, as shown in formula (8). Conversely, erosion performs vector subtraction between the structuring element and the corresponding image region.

Dilation merges all background points in contact with the object into it, expanding the boundary outward; it can fill holes in the object and enlarge its extent. Erosion removes boundary points and shrinks the boundary inward, eliminating small, meaningless objects. Applied in sequence, the two operations can remove isolated noise points, burrs and thin bridges while leaving the position and shape of objects largely unchanged [16,17,18]. In this paper, dilation fills small cavities and narrow gaps in the crop region and smooths the crop height line, but it also enlarges the object region and shifts the height line. Erosion is therefore applied afterwards to restore the position of the height line and the original size of the object.
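A minimal sketch of dilation followed by erosion (a morphological closing) on a 0/255 mask with a square s×s structuring element. For a symmetric element, dilation by vector (Minkowski) addition reduces to the maximum over the window and erosion to the minimum, which is what the code computes; ignoring out-of-image pixels at the borders and the function names are choices for this sketch.

```python
def _morph(mask, s, op):
    """Apply op (max = dilation, min = erosion) over an s x s window (s odd).

    Only pixels inside the image are considered at the borders.
    """
    h, w = len(mask), len(mask[0])
    half = s // 2
    return [[op(mask[rr][cc]
                for rr in range(max(0, r - half), min(h, r + half + 1))
                for cc in range(max(0, c - half), min(w, c + half + 1)))
             for c in range(w)] for r in range(h)]

def dilate(mask, s):
    return _morph(mask, s, max)

def erode(mask, s):
    return _morph(mask, s, min)

def close(mask, s):
    """Dilation then erosion: fills holes smaller than the element and
    pulls the boundary back to about its original position."""
    return erode(dilate(mask, s), s)

# Flat boundary (rows 0-1 background, rows 2-4 crop) with a one-pixel hole in row 3.
mask = [[0] * 5, [0] * 5, [255] * 5, [255, 255, 0, 255, 255], [255] * 5]
closed = close(mask, 3)
```

After the closing, the hole in row 3 is filled while the boundary stays between rows 1 and 2, matching the observation that the boundary lines before and after morphological processing agree.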

Figure 6 shows that dilation greatly reduces the internal holes in the binarized image (Fig. 6b) and smooths the crop boundary line. Figure 6f compares the boundary lines before and after morphological processing: the red line is the upper boundary of Fig. 6e and the green line that of Fig. 6c. The two boundary lines are basically consistent.

2.2.5 Dividing line extraction

After the holes are eliminated with the inter-frame sum method and large-scale mask filtering, and the dividing line is smoothed by dilation and erosion, the resulting image has a clear, smooth boundary between the crop region and the background. The Sobel operator is therefore used to extract this boundary.

The Sobel operator is a commonly used template for edge detection. It weights neighboring pixel values according to their distance from the center pixel to measure how sharply the gray level changes, and sharp gray-level changes are regarded as edges. Specifically, the image pixels are weighted and summed in a given direction with the Sobel convolution kernel as weights, and the resulting gradient values are thresholded to determine the edges. A smoothing step is usually added beforehand to reduce the influence of noise. After the morphological processing above, the crop image contains an approximately horizontal dividing line. Since pixel-level errors do not affect the calculation of crop height, only the Sobel kernel that responds to horizontal edges is used to compute the pixel gradient, followed by threshold segmentation, as shown in Eq. (9).
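The boundary detection can be sketched with the Sobel kernel for horizontal edges (the vertical gradient), thresholded and scanned top-down per column. Aggregating the per-column edge rows with a median to obtain a single height line \(H_{top}\) is a hypothetical choice for illustration; the text does not state how the line parameters are computed.

```python
from statistics import median

# Responds to horizontal edges: intensity change along the vertical (row) axis.
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]

def sobel_y(img):
    """Vertical-gradient Sobel response; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = sum(SOBEL_Y[i][j] * img[r + i - 1][c + j - 1]
                            for i in range(3) for j in range(3))
    return out

def height_line(mask, grad_threshold):
    """For each column take the first row (from the top) whose |gradient|
    exceeds grad_threshold, then return the median of those rows."""
    grad = sobel_y(mask)
    rows = []
    for c in range(len(mask[0])):
        for r in range(len(mask)):
            if abs(grad[r][c]) > grad_threshold:
                rows.append(r)
                break
    return median(rows) if rows else None

# Binary mask: background in rows 0-2, crop in rows 3-5.
step = [[0] * 5 for _ in range(3)] + [[255] * 5 for _ in range(3)]
h_top = height_line(step, 500)  # first edge row in each interior column is 2
```

On a 0/255 mask the largest response is 4 × 255 = 1020, so a threshold around half of that separates the boundary from flat regions; the detected row sits at most one pixel from the true transition, consistent with the tolerance for pixel-level error stated above.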

3 Experiments and discussion

To ensure valid experimental data for verifying crop height measurement, relatively flat ground with a neat crop border was selected for harvesting. Video was captured while the harvester moved in a straight line and kept full cutting width.

To verify the extraction accuracy of crop height lines, an original image was saved every fixed number of frames from the captured video. The saved images were manually annotated to obtain the crop height line, and the height line position was also extracted with the color-feature method for comparison.

To verify the measurement accuracy of crop height, the height of the crop about to be harvested was measured with a tape measure, and the average value was taken as the true height. The crop height was detected in real time while the harvester operated and compared with the true height.

where H is the set of crop heights measured by the proposed algorithm, and h is the set of actual heights measured manually.
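The accuracy metrics reported in Table 2 can be computed as sketched below, assuming their standard definitions. How the 1.6% average error is defined is not spelled out, so the mean relative error here is one plausible reading; the sample heights are hypothetical.

```python
from math import sqrt

def height_errors(H, h):
    """Error statistics between algorithm heights H and manual heights h (mm).

    Returns (RMSE, MAE, maximum absolute error, mean relative error in %).
    """
    diffs = [a - b for a, b in zip(H, h)]
    n = len(diffs)
    rmse = sqrt(sum(d * d for d in diffs) / n)
    mae = sum(abs(d) for d in diffs) / n
    max_err = max(abs(d) for d in diffs)
    rel_pct = 100 * sum(abs(d) / b for d, b in zip(diffs, h)) / n
    return rmse, mae, max_err, rel_pct

# Hypothetical readings against a true height of 992 mm.
rmse, mae, max_err, rel_pct = height_errors([1000, 980], [992, 992])
```

Here the errors are +8 mm and −12 mm, giving an MAE of 10 mm and a maximum error of 12 mm; RMSE weights the larger error more heavily, which is why it is never smaller than the MAE.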

To obtain the position of the crop root and the calibration factor s, experimental calibration must be performed before measurement. To improve the adaptability of the algorithm, calibration was repeated at several sites, and the average value was taken as the final calibration parameter.

During harvester operation, one video of wheat, one of rice and one taken with a handheld camera were collected. For each video, the crop height line was obtained with the proposed algorithm, with the K-means image segmentation algorithm and by manual annotation, and the accuracy of height line extraction was analyzed.

In Figs. 7, 8 and 9, the ordinate is the position of the crop height line and the abscissa is the frame index. The height line extracted by the color-feature algorithm is basically the same as the manually labeled height line. In the wheat and rice height line trend charts, the K-means algorithm roughly locates the height line, but its data points deviate considerably from the true height line. In the video captured by the handheld camera, the performance of K-means in locating the crop boundary decreases due to background interference. Table 1 gives a quantitative analysis of these three data sets.

One hundred frames were saved from each of the three videos and processed separately with the proposed color-feature-based region extraction algorithm and a K-means-based region extraction algorithm; the processing time of each frame is shown in Fig. 10. The K-means-based method takes longer than its average in the first few frames, and its per-frame time fluctuates. In contrast, the processing time of the color-feature-based method is much more stable, staying under 100 ms for all three videos, about one-third of that of the K-means-based method. The proposed method therefore has a clear advantage in time complexity over the K-means-based method.

The harvester was driven for 30 m at full cutting width, and the data obtained over these 30 m were compared with manual measurements. Three sets of measurement data from a paddy field are listed in Table 2 as No. 1, No. 2 and No. 3, and two sets from a wheat field as No. 4 and No. 5.

Table 2 shows that, for a true crop height of 992 mm, the root mean square error is below 20 mm, the mean absolute error is below 16 mm and the maximum error is below 38 mm. For a true crop height of 669 mm, the root mean square error is below 13 mm, the mean absolute error is below 12 mm and the maximum error is below 227 mm. These results show that the proposed algorithm can measure crop height accurately.

4 Conclusions

Aiming at the inefficiency and labor-intensiveness of traditional tool-based measurement, a machine-vision method based on color features is proposed to measure crop height. A crop height measurement model based on the pinhole imaging principle is established to describe the spatial relationship between the captured image and the real crop. A series of morphological operations is performed on the image to segment the crop region, and the H channel is used to segment the image and obtain the crop region. The inter-frame sum method is adopted to enhance the image, and large-scale filtering, dilation, erosion and other operations are applied for further enhancement and to smooth the dividing line, so that a complete upper boundary line of the crop is obtained. A suitable Sobel operator is designed to extract the upper boundary line, from which the true crop height is calculated. The proposed method extracts the crop height line efficiently and measures crop height accurately: the average error of crop height is less than 1.6%, and the processing time per frame is within 50 ms. The algorithm combines a variety of traditional image processing techniques and achieves good accuracy and computational speed. However, its large number of parameter settings limits its generalizability, and it cannot be applied directly to other kinds of crops. Adaptive crop height extraction algorithms could therefore be considered in future work to avoid repeated parameter tuning and improve efficiency.

References

Youchun D, Shumao W (2010) Vision navigation control system for combine harvester. Trans Chin Soc Agric Mach 41(5):137–142. https://doi.org/10.3969/j.issn.1000-1298.2010.05.028

Yuxian G, Kun L, Yun S et al (2020) Inversion of rice plant height based on multi-temporal TanDEM-X polarization interferometric SAR data. Spectrosc Spectral Anal 40(03):878–884. https://doi.org/10.3964/j.issn.1000-0593(2020)03-0878-07

Hu P, Chapman SC, Wang X et al (2018) Estimation of plant height using a high throughput phenotyping platform based on unmanned aerial vehicle and self-calibration: example for sorghum breeding. Eur J Agron 95:24–32. https://doi.org/10.1016/j.eja.2018.02.004

Matese A, Gennaro SFD, Berton A (2017) Assessment of a canopy height model (CHM) in a vineyard using UAV-based multispectral imaging. Int J Remote Sens 38:8–10. https://doi.org/10.1080/01431161.2016.1226002

Bendig J, Yu K, Aasen H et al (2015) Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int J Appl Earth Observ Geoinform 39:79–87. https://doi.org/10.1016/j.jag.2015.02.012

Huilin T, Liangji X, Haikuan F et al (2019) Estimation of plant height and biomass of winter wheat based on UAV digital image. Trans Chin Soc Agric Eng (Trans CSAE) 35(19):107–116. https://doi.org/10.11975/j.issn.1002-6819.2019.19.013

Huilin T, Haikuan F, Liangji X et al (2020) Estimation of the yield and plant height of winter wheat using UAV-based hyperspectral images. Sensors. https://doi.org/10.3390/s20041231

Yang S, Jinfei W (2019) Winter wheat canopy height extraction from UAV-based point cloud data with a moving cuboid filter. Remote Sens. https://doi.org/10.3390/rs11101239

Ten Harkel J, Bartholomeus H, Kooistra L (2020) Biomass and crop height estimation of different crops using UAV-based lidar. Remote Sens. https://doi.org/10.3390/rs12010017

Anh TTP, Kazuyoshi T, Atsushi R et al (2016) Method for estimating rice plant height without ground surface detection using laser scanner measurement. J Appl Remote Sens. https://doi.org/10.1117/1.JRS.10.046018

Kim W, Lee D, Kim Y et al (2021) Stereo-vision-based crop height estimation for agricultural robots. Comput Electron Agric. https://doi.org/10.1016/j.compag.2020.105937

Moses CJ, Selvathi D (2021) Adaptive edge-based bi-cubic image interpolation. Int J Reason Based Intell Syst 13(2):101–105. https://doi.org/10.1504/IJRIS.2021.10036810

Trigano T, Bechor Y (2020) Fast background removal of JPEG images based on HSV polygonal cuts for a foot scanner device. J Real-Time Image Proc 17:981–992. https://doi.org/10.1007/s11554-019-00850-5

Zhang X, Li J, Yao Q et al (2019) Navigation path detection of cotton film-spreading and seeding machine based on hsv color model. Int Agric Eng J 28(2):121–129

Wang H, Yin W, Wang L et al (2019) Fast edge extraction algorithm based on HSV color space. J Shanghai Jiaotong Univ (Chin Ed) 53(7):765–772. https://doi.org/10.16183/j.cnki.jsjtu.2019.07.001

Shah V, Chourasia V, Kshirsagar R (2021) Efficient T2 brain region extraction algorithm using morphological operation and overlapping test from 2D and 3D MRI images. Int J Biomed Eng Technol 35(4):382–399. https://doi.org/10.1504/IJBET.2021.114815

Hassanin AAIM, Abd El-Samie FE, El Banby GM (2019) A real-time approach for automatic defect detection from PCBs based on SURF features and morphological operations. Multimedia Tools Appl 78:34437–34457. https://doi.org/10.1007/s11042-019-08097-9

Gomaa A, Abdelwahab MM, Abo-Zahhad M (2020) Efficient vehicle detection and tracking strategy in aerial videos by employing morphological operations and feature points motion analysis. Multimedia Tools Appl 79:26023–26043. https://doi.org/10.1007/s11042-020-09242-5

Acknowledgements

The work was supported by National Key Research and Development Program (2022YFD200150301), Domestic study visiting project funded by the cultivation of outstanding top-notch talents training in colleges and universities of Anhui Province (gxgnfx2020159), Key project of natural science research in colleges and universities of Anhui Province (KJ2021A1408), Jiangsu Province Agricultural Science and Technology Independent Innovation Fund Project [CX(22)3091], Primary Research & Development Plan of Jiangsu Province (BE2022389).

Funding

The authors have not disclosed any funding.


Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, S., Wang, L., Xiao, H. et al. Visual measurement method of crop height based on color feature in harvesting robot. SN Appl. Sci. 5, 59 (2023). https://doi.org/10.1007/s42452-023-05287-x


DOI: https://doi.org/10.1007/s42452-023-05287-x