Abstract

This paper is devoted to the reliability analysis of rotor-bearing systems based on the combination of Kriging metamodels and the First-Order Reliability Method (FORM). The main motivation is that high-fidelity structural models generally entail high computational costs, which can be strongly alleviated by using surrogate models. Since applications to rotating machines have not been sufficiently explored so far, the contribution of the present paper is to evaluate the performance, in terms of both accuracy and computational effort, of a numerical strategy that combines Kriging metamodels and FORM for this type of machine, accounting for their typical frequency-domain responses and applicable limit-states. This evaluation is made by comparing four different strategies, combining: (i) full finite element models and Monte Carlo simulations; (ii) full finite element models and FORM; (iii) Kriging metamodels and Monte Carlo simulations; (iv) Kriging metamodels and FORM. Results show that the Kriging/FORM strategy provides a substantial decrease in computational effort while keeping a satisfactory accuracy of the reliability estimates. In addition, a procedure is proposed for improving the accuracy of Kriging/FORM reliability estimates by enriching the Kriging design of experiments in the vicinity of the Most Probable Failure Point.



Introduction

Assessing the influence of uncertainties in physical systems by using numerical models has become a topic of great interest in science and engineering lately. In this context, the identification of intrinsic uncertainties in model inputs, and quantitative assessment of their impact on the model response, is imperative to support the use of model predictions in a decision-making process [1]. As a result, a great deal of effort has been devoted to the development of theoretical foundations and computational algorithms in the field of knowledge known as Uncertainty Quantification (UQ) [2,3,4].

Within the general scope of UQ, the objective of reliability analysis in a probabilistic framework is to determine the probability that a given structural system will perform acceptably, given that its behavior, predicted by means of a structural model, depends on a set of uncertain parameters modeled as random variables [5]. The choice of the random variables (RVs), along with the parameters that can be treated as deterministic, must be made at an early stage. The performance of the system is usually represented by a performance function, generally nonlinear and implicit, known as the limit-state function (LSF) and denoted \(g({\mathbf {X}})\), where \({\mathbf {X}}\) represents the vector of random variables \(X_{1},X_{2},...,X_{k}\).

From the geometrical point of view, the limit state is a hypersurface, described by the equation \(g({\mathbf {X}})=0\) in the k-dimensional space of the random variables, that separates the region \(g({\mathbf {X}})>0\), in which the system performs satisfactorily (the safe region), from the region \(g({\mathbf {X}})<0\), in which the system performance is unsatisfactory (the unsafe region). Hence, the reliability is the probability that the stochastic system response lies in the safe region, i.e., \(R=P[g({\mathbf {X}})>0]\). Complementarily, the probability of failure (PoF) is defined as \(P_{f}=P[g({\mathbf {X}})<0]=1-R\).

Estimating the reliability directly from the definition above requires computing a multiple integral of the continuous joint probability density function (PDF) of the random variables, \(f_{X_{1},X_{2},...,X_{k}}(x_{1},x_{2},...,x_{k})\), over the safe region. However, in the large majority of cases, this integral cannot be evaluated analytically, and its numerical evaluation becomes impractical as the number of variables increases (the so-called curse of dimensionality [6]). An alternative approach is to estimate the reliability using Monte Carlo sampling (MCS) [7] on the space of the random variables, using the structural model to compute the value of the performance function for each sample. However, the large number of samples required for statistical convergence, each demanding a computation of the system response, makes this strategy unaffordable in many cases of practical interest where high-fidelity finite element (FE) models have to be used.
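To illustrate why sample size drives the cost, the Python sketch below (assuming NumPy; the explicit limit-state function and the Gaussian resistance/load variables are hypothetical stand-ins for an FE-based LSF) estimates \(P_f\) by crude Monte Carlo and reports the coefficient of variation of the estimator, \(\sqrt{(1-P_f)/(N P_f)}\) for \(N\) samples, which grows as \(P_f\) decreases.

```python
import numpy as np


def mc_failure_probability(g, sampler, n_samples, rng=None):
    """Crude Monte Carlo estimate of P_f = P[g(X) < 0].

    g       : vectorized limit-state function (g > 0 is the safe region)
    sampler : function (rng, n) -> array of shape (n, k) with samples of X
    Returns the estimate and its coefficient of variation (CoV).
    """
    rng = np.random.default_rng(rng)
    x = sampler(rng, n_samples)
    failed = g(x) < 0.0                       # indicator of the unsafe region
    pf = failed.mean()
    # CoV of the estimator, sqrt((1 - pf) / (n * pf)); infinite if no failures
    cov = np.sqrt((1.0 - pf) / (n_samples * pf)) if pf > 0 else np.inf
    return pf, cov


# Hypothetical explicit LSF: resistance R minus load effect S (not from the paper)
def g_rs(x):
    return x[:, 0] - x[:, 1]


def sample_rs(rng, n):
    r = rng.normal(10.0, 1.0, n)              # R ~ N(10, 1)
    s = rng.normal(6.0, 1.0, n)               # S ~ N(6, 1)
    return np.column_stack([r, s])


pf, cov = mc_failure_probability(g_rs, sample_rs, 200_000, rng=1)
# Exact reference: P_f = Phi(-4 / sqrt(2)) = 0.5 * erfc(2), about 2.34e-3
```

For \(P_f \approx 2.3 \times 10^{-3}\), reaching a 10% coefficient of variation already takes roughly \(4 \times 10^{4}\) samples, each of which would be a full FE analysis in an FE-based Monte Carlo study.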

To circumvent these difficulties, approximate numerical procedures such as the First- and Second-Order Reliability Methods (FORM/SORM) have been developed and successfully used, although they also present some limitations [8]. FORM performs a local approximation of the LSF by a hyperplane in the space of independent standardized Gaussian variables. Owing to the properties of those variables, especially the rotational symmetry of the multivariate standard Gaussian PDF, the reliability is determined, through the reliability index, by finding the minimal distance from the origin of the standardized space to the hypersurface representing the LSF. Hence, reliability estimation is formulated as a constrained optimization problem. The performance of FORM has been shown to be satisfactory when the LSF is linear or weakly nonlinear. In turn, SORM uses a second-order representation of the LSF, thus improving on the accuracy of FORM when the performance function is strongly nonlinear [9]. However, the computational cost of SORM tends to be higher, and convergence difficulties can be encountered.

For most problems of structural mechanics, the LSF is not known explicitly as a function of the random variables. Instead, it is available implicitly, only at discrete points resulting from deterministic finite element simulations [8]. In this case, besides the high computational cost associated with repeated numerical analyses needed to map the limit-state surface, the use of approximate methods such as FORM/SORM can raise additional difficulties as the optimization algorithms typically use gradient-based solvers that require sufficient differentiability of the LSF and additional evaluations of the structural model [6].

The use of surrogate models, also known as metamodels, is being progressively consolidated as an effective means of achieving reduction of the computational cost involved in UQ, and particularly in reliability analysis [10,11,12]. A metamodel is understood as a simplified, computationally efficient substitute of the original model, which is able to represent, with satisfactory accuracy, the input-output relationship established for the quantities of interest in a given range of the random variables. In general, a metamodel is constructed by approximating a limited number of points obtained from simulations performed by using the original high-fidelity model. This procedure is known as training and the set of points used for training is known as design of experiments (DoE). With an explicit, continuous and smooth metamodel, FORM/SORM methods can be promptly used for reliability estimation [8].

One of the first applications of surrogate models in structural reliability problems is reported in [13]. After applying the Response Surface Method (RSM) in combination with an adaptive interpolation scheme to different structural systems, the authors have shown that surrogates can be used efficiently to replace highly nonlinear LSFs.

Besides the well-established RSM, many other types of surrogate modeling techniques are presently available in the literature, as summarized in [14]. These surrogates can be classified into three major groups: Geometric, Heuristic and Stochastic [15]. Among them, Kriging metamodels (also known as Gaussian process regression) have stood out [16,17,18].

The basis of the Kriging formalism is the regression of a Gaussian process, where the function being interpolated is treated as a realization of a Gaussian stochastic process with a covariance function chosen to characterize the correlations between the values of the function.

Both the interpolation capability and the local adaptability of Kriging surrogate models have proved to be handy features for reliability analyses with implicit nonlinear performance functions. In the early 2000s, Kaymaz [19] investigated the use of Kriging for solving structural reliability problems, comparing it with the classical RSM. The author modified the DACE Matlab Kriging Toolbox [20] to be used in combination with FORM and MCS. The results revealed that the parameters underlying the Kriging metamodel have a significant effect on the reliability results. Also, a Kriging correlation function of Gaussian form was found to be more suitable for problems with nonlinear LSFs.

Gaspar et al. [21] and Shi et al. [22] assessed the efficiency of Kriging interpolation models as surrogates for nonlinear finite element models in structural reliability problems. The authors compared the accuracy of a Kriging/FORM-based reliability method applied to marine structures, considering different numbers of training points and orders of the polynomials adopted in the regression models. The results showed that Kriging metamodels could provide accurate failure probability estimates as the number of training points increased. In addition, a small effect of the polynomial regression order on the Kriging predictions was noticed.

Various authors have proposed enhanced Kriging-based techniques for structural reliability assessment, most of them focused on adaptive DoEs that improve the Kriging accuracy in the vicinity of the limit-state surface. Bichon et al. [23] developed the Efficient Global Reliability Analysis (EGRA), an active learning technique that uses the expected feasibility function (EFF) to iteratively refine the surrogate training data. Echard et al. [24] combined an active ordinary Kriging surrogate with Monte Carlo sampling (AK-MCS), proposing a learning function based on the Kriging local prediction and local variance, the so-called U-function, to drive the enrichment process. Following this work, reference [25] presented a strategy combining ordinary Kriging metamodels with the Importance Sampling method (AK-IS) to assess small PoFs. This method allows the FORM approximation to be corrected or validated with a small number of evaluations of the structural model. More recently, Schöbi and Sudret [26] proposed an adaptive algorithm that combines Polynomial Chaos Expansion (PCE) with Kriging and Monte Carlo sampling (APCK-MCS) to improve the PoF estimation.

When it comes to rotating machines, which are found in aerospace vehicles, electric power plants, oil and gas production facilities and many other industrial applications, uncertainty quantification is essential in the design process to ensure good performance. In addition, since these machines are very expensive and their operation usually involves high energy (large masses at high rotation speeds), operating conditions must be strictly controlled. However, given the inherent uncertainties affecting structural properties, environmental conditions and external loads, satisfactory performance (including, as a particular case, the necessary levels of structural safety) cannot always be guaranteed in absolute terms [27]. Instead, a certain level of probabilistic assurance can be secured through reliability analysis.

Stochastic analyses of rotating machines have been addressed by some authors. In reference [28], the authors investigated the effect of uncertain parameters on the dynamic behavior of a flexible rotor supported by two fluid-film bearings. The uncertainties of the bearing parameters (oil viscosity and radial clearance) were modeled using fuzzy dynamic analysis. The results obtained from this approach were compared to counterparts from Monte Carlo simulations. The authors concluded that both approaches led to similar outcomes, and that “fuzzy analysis seems to be more adequate when the stochastic process that models the uncertain parameters of the bearings is not well defined”.

Visnadi and Castro [29] considered uncertainties affecting the stability threshold of rotating systems supported by cylindrical hydrodynamic bearings prone to fluid-induced instability. The parameters considered as random were the bearing clearance and oil temperature. Monte Carlo simulation was used for uncertainty quantification.

Reference [30] addressed rotating machines having journal bearings exhibiting nonlinear behavior, in the presence of uncertainties. Polynomial Chaos Expansion was employed to model the stochastic responses, and the stochastic collocation method was applied to evaluate the expansion coefficients. Sensitivity analysis was performed to identify the most influential random parameters. A convergence comparison between PCE and MCS was also performed.

The nonlinear response of a rotor subjected to faults (unbalance, asymmetric shaft, bow, parallel and angular misalignment) was investigated in reference [31]. The authors proposed to use the Harmonic Balance Method in combination with a polynomial chaos expansion. The efficiency and robustness of the proposed methodology were demonstrated by comparison with Monte Carlo simulations for different kinds and levels of uncertainties. The authors concluded that variations of unbalance, bow and parallel misalignment faults can affect all the harmonic components of the rotor system, while variations of faults involving asymmetric section or angular misalignment can affect all the harmonic components.

Based on a nonlinear Jeffcott rotor/stator contact model, the reliability of the system subjected to aleatory and epistemic uncertainty was evaluated in [32]. The authors used a likelihood-based approach for reliability modeling and analysis. For uncertainty quantification, Bayesian techniques were adopted to reduce the computational cost typical of traditional Monte Carlo simulation.

In [33] a Kriging surrogate was used to model the behavior of a tilting-pad fluid-film bearing of a Francis hydropower unit. The equilibrium position of the shaft and the inlet oil temperature were used as input variables, while the bearing supporting forces and the maximum oil film pressure and temperature were considered as output variables.

Also, Sinou et al. [34] demonstrated the effectiveness of using Kriging to predict the critical speeds and vibration amplitudes of a complex flexible rotor in the presence of uncertainties affecting the physical and geometrical parameters of the disks, shaft and bearings. Moreover, the authors investigated the influence of different regression models, spatial correlation functions and training samples on the accuracy of Kriging predictions.

With the motivation of broadening the applications of modern uncertainty quantification techniques to rotating machines, the present paper proposes a strategy for the reliability analysis of this category of mechanical systems, consisting of the combination of Kriging surrogate models with the First-Order Reliability Method. This approach provides an adequate balance between computational cost, which is one of the main constraints faced in practical engineering applications, and the accuracy of the reliability estimates, which is indispensable for decision making. It is worth pointing out that rotating machines are characterized by distinctive dynamic behavior, especially due to gyroscopic effects and bearings featuring nonlinear behavior, besides specific failure modes and serviceability requirements. To the best of the authors' knowledge, the numerical approach to reliability analysis suggested here has not been applied to rotating machinery so far. Hence, its use in the design cycle of actual machines of industrial interest can contribute to their increased safety and serviceability.

In the remainder, the theoretical foundations underlying reliability analysis and Kriging metamodeling are summarized. Then, the FE model of rotor-bearing systems is briefly addressed, followed by the description of the numerical strategy used to assess the reliability of these systems exhibiting distinct dynamic performance, failure modes and operational constraints. Simulations are then carried out for a particular system of interest and the results are evaluated in terms of accuracy and computational efficiency.

Theory

First-Order Reliability Method (FORM)

FORM consists of locating the point on the limit-state surface with the highest probability density, named design point or most probable point (MPP). This concept exploits the rotational symmetry of the continuous joint PDF of independent standardized Gaussian random variables. When the original RVs do not have these properties, the k original RVs \({\mathbf {X}} = \left\{ X_1, X_2, \ldots , X_{ k } \right\} ^T\) must be transformed into variables with such properties, \({\mathbf {U}} = \left\{ U_1, U_2, \ldots , U_{ k } \right\} ^T\). For independent Gaussian RVs, this transformation reduces to the standardization:

\[ U_i = \frac{X_i - \mu _{X_i}}{\sigma _{X_i}}, \qquad i = 1, \ldots , k \tag{1} \]

where \(\mu _{X_i}\) is the mean of \(X_i\) and \(\sigma _{X_i}\) is the standard deviation of \(X_i\).

For non-Gaussian or dependent RVs, the most widely used procedure for performing this isoprobabilistic transformation is the Rosenblatt transformation [35].
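For independent but non-Gaussian RVs, the Rosenblatt transformation reduces to the marginal mapping \(u_i = \Phi^{-1}(F_{X_i}(x_i))\), where \(F_{X_i}\) is the CDF of \(X_i\). The Python sketch below (assuming NumPy; the lognormal case is only an illustrative assumption, not one of the paper's RVs) implements this mapping for a lognormal variable, for which it has a closed form, together with its inverse, which a FORM solver needs in order to evaluate \(g\) at points chosen in the U-space.

```python
import numpy as np


def lognormal_params(mean, std):
    """Parameters (lam, zeta) of ln X for a lognormal X with the given mean and std."""
    zeta2 = np.log(1.0 + (std / mean) ** 2)
    lam = np.log(mean) - 0.5 * zeta2
    return lam, np.sqrt(zeta2)


def x_to_u_lognormal(x, mean, std):
    """Map lognormal X to standard normal U, u = Phi^{-1}(F_X(x)).

    For a lognormal variable this composition is exact and reduces to
    u = (ln x - lam) / zeta, so no numerical inversion is needed.
    """
    lam, zeta = lognormal_params(mean, std)
    return (np.log(x) - lam) / zeta


def u_to_x_lognormal(u, mean, std):
    """Inverse map, used to evaluate g(X) at points chosen in U-space."""
    lam, zeta = lognormal_params(mean, std)
    return np.exp(lam + zeta * u)
```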

In the U-space, the distance from the origin of the reduced coordinate system to the MPP defines the so-called Hasofer-Lind reliability index \(\beta\) [36]. The geometric interpretation of the reliability index in the centered standardized space can be found in reference [5].

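Finding the coordinates \(\mathbf {u^*} = \left\{ u_{1}^{*}, u_{2}^{*}, \ldots , u_{ k }^{*} \right\} ^T\) of the MPP is achieved by solving the following nonlinear constrained optimization problem:

\[ \mathbf {u^*} = \arg \min _{\mathbf {u}} \sqrt{\mathbf {u}^{T} \mathbf {u}} \quad \text {subject to} \quad G(\mathbf {u}) = 0 \tag{2} \]

where \(G(\mathbf {u}) = g({\mathbf {X}}(\mathbf {u}))\) is the LSF expressed in the U-space. Hence, the reliability index is computed as:

\[ \beta = \sqrt{\mathbf {u^*}^{T} \mathbf {u^*}} = \Vert \mathbf {u^*} \Vert \tag{3} \]

and the first-order approximation of the PoF is then given by:

\[ P_{f} \approx \Phi (-\beta ) \tag{4} \]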

where \(\Phi (\cdot )\) is the standard normal cumulative distribution function (CDF).

In most cases, the optimization problem given in Eq. 2 must be solved iteratively. Reference [37] suggests the Newton-Raphson method to obtain the reliability index and the PoF. The iterations proceed until the specified tolerances on \(\Delta \beta\) and \(\Delta g({\mathbf {X}})\) are met. It is worth noting that the iterative FORM algorithm requires the first-order derivatives of the LSF, which can lead to difficulties when the LSF is not smooth. In addition, when these derivatives are computed by finite-difference schemes, further evaluations of the LSF are required.
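The iterative search can be sketched as follows in Python (assuming NumPy). This sketch uses the Hasofer-Lind-Rackwitz-Fiessler (HL-RF) update, a widely used Newton-type scheme for FORM, which may differ in detail from the procedure of [37]. The limit-state function is assumed to be already expressed in the U-space, and its gradient is obtained by central finite differences, so each iteration costs \(2k + 1\) LSF evaluations.

```python
import numpy as np
from math import erfc, sqrt


def std_normal_cdf(x):
    """Standard normal CDF Phi(x), written with the complementary error function."""
    return 0.5 * erfc(-x / sqrt(2.0))


def fd_gradient(G, u, h=1e-6):
    """Central finite-difference gradient of G at u (costs 2k calls to G)."""
    grad = np.zeros_like(u)
    for i in range(u.size):
        step = np.zeros_like(u)
        step[i] = h
        grad[i] = (G(u + step) - G(u - step)) / (2.0 * h)
    return grad


def form_hlrf(G, k, tol_beta=1e-6, tol_g=1e-6, max_iter=100):
    """FORM design point by the HL-RF iteration in standard normal space.

    G : limit-state function of the standard normal vector u (G > 0 is safe).
    Returns (beta, pf, u_star, n_calls), with pf = Phi(-beta).
    """
    u = np.zeros(k)                        # start at the origin of U-space
    g_origin = G(u)
    g_scale = max(abs(g_origin), 1.0)      # scale for the relative G tolerance
    gu, n_calls, beta_old = g_origin, 1, np.inf
    for _ in range(max_iter):
        grad = fd_gradient(G, u)
        n_calls += 2 * k
        # HL-RF step: closest point to the origin on the linearized limit state
        u = (grad @ u - gu) / (grad @ grad) * grad
        gu = G(u)
        n_calls += 1
        beta = np.linalg.norm(u)
        if abs(beta - beta_old) < tol_beta and abs(gu) < tol_g * g_scale:
            break
        beta_old = beta
    beta = beta if g_origin > 0 else -beta    # negative if the origin fails
    return beta, std_normal_cdf(-beta), u, n_calls


# Hypothetical nonlinear LSF in U-space, for illustration only
beta_nl, pf_nl, u_nl, calls_nl = form_hlrf(lambda u: 3.0 - u[0] - 0.1 * u[1] ** 2, k=2)
```

For a linear LSF such as \(G(\mathbf{u}) = 3 - u_1 - u_2\), the iteration reaches \(\beta = 3/\sqrt{2}\) after the first step and stops at the second, once both the change in \(\beta\) and the residual of \(G\) fall below the tolerances.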

Kriging

As mentioned before, the basis of Kriging is the regression of a Gaussian process with a given form of covariance function. The construction of a Kriging model enforces the interpolation of a limited set of input/output data resulting from runs of a high-fidelity numerical model (such as a FE model), assuming that the observed responses are correlated, according to the correlation function adopted, and maximizing the likelihood of their occurrence. The mathematics of Kriging interpolation is summarized here based on [17].

First, a set of RVs \({\mathbf {X}}=\left\{ X_1, X_2, \ldots , X_ k \right\}\) is chosen, encompassing the parameters considered uncertain in the original FE model, along with the range of each variable. Then, a space-filling technique, such as Latin Hypercube sampling (LHS) [38], is used to define \(n\) samples of these \(k\)-dimensional vectors, \({\mathbf {x}}^{(1)}, {\mathbf {x}}^{(2)}, \ldots , {\mathbf {x}}^{( n )}\) (i.e., the DoE), that sufficiently represent the design space. After these samples are evaluated with the FE model, the corresponding values of the LSF are gathered in \({\mathbf {g}}=\left[ g({\mathbf {x}}^{(1)}), g({\mathbf {x}}^{(2)}), \ldots , g({\mathbf {x}}^{( n )}) \right] ^T\).
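A basic Latin Hypercube sampler can be sketched in a few lines of Python (assuming NumPy, and uniform strata over given variable ranges): each range is split into \(n\) equal-width strata, one point is drawn inside each stratum, and the strata are paired across variables by independent random permutations. Space-filling refinements such as maximin optimization are not included, and the example ranges are hypothetical.

```python
import numpy as np


def latin_hypercube(n, lower, upper, rng=None):
    """Basic Latin Hypercube sample of n points in the box [lower, upper].

    Each variable range is divided into n equal-width strata; exactly one
    point falls in each stratum of each variable, and the strata are paired
    across variables by independent random permutations.
    """
    rng = np.random.default_rng(rng)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    k = lower.size
    # Stratum index i plus a uniform offset inside the stratum, scaled to [0, 1)
    unit = (np.arange(n)[:, None] + rng.random((n, k))) / n
    for j in range(k):
        unit[:, j] = rng.permutation(unit[:, j])   # decouple the variables
    return lower + unit * (upper - lower)


# Hypothetical example: 10 samples of two RVs with assumed ranges
doe = latin_hypercube(10, lower=[0.8, 40.0], upper=[1.2, 60.0], rng=0)
```

SciPy's `scipy.stats.qmc.LatinHypercube` class offers a maintained alternative with optional optimization of the space-filling properties.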

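The computed values of the LSF are assumed to be a realization of a correlated Gaussian random process (even though they come from deterministic computer simulations), as follows:

\[ g({\mathbf {x}}) = F({\mathbf {x}}, \varvec{\kappa }) + z({\mathbf {x}}) \tag{5} \]

where \(F({\mathbf {x}},\varvec{\kappa })\) is the deterministic part, which approximates the mean of the LSF. It represents the trend of the Kriging surrogate according to the following regression model:

\[ F({\mathbf {x}}, \varvec{\kappa }) = \mathbf {f}({\mathbf {x}})^{T} \varvec{\kappa } = \sum _{i=1}^{m} \kappa _{i} f_{i}({\mathbf {x}}) \tag{6} \]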

with \(\mathbf {f({\mathbf {x}})} = \left[ f_{1}({\mathbf {x}}), \dots , f_{m}({\mathbf {x}}) \right] ^{T}\) representing the vector of basis functions and \(\varvec{\kappa } = \left[ \kappa _{1}, \dots , \kappa _{m} \right] ^T\) the vector of regression coefficients. In ordinary Kriging, which is adopted here, the regression model is reduced to a scalar, \(\mu\), denoting the unknown constant mean of the process.

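In addition, \(z({\mathbf {x}})\) is a stationary Gaussian process with zero mean and covariance between two points \({\mathbf {x}}^{(i)}\) and \({\mathbf {x}}^{(l)}\) defined by:

\[ \mathrm {Cov}\left[ z({\mathbf {x}}^{(i)}), z({\mathbf {x}}^{(l)}) \right] = \sigma ^{2} \, \mathbf {\Psi }_{\theta }({\mathbf {x}}^{(i)}, {\mathbf {x}}^{(l)}) \tag{7} \]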

where \(\sigma ^{2}\) is the process variance and \(\mathbf {\Psi }_{\theta }\) the correlation function, defined by a set of parameters \(\varvec{\theta }\).

Various types of correlation functions can be used [18]. In the present study, a squared exponential correlation function has been chosen, as given by:

\[ \mathbf {\Psi }_{\theta }({\mathbf {x}}^{(i)}, {\mathbf {x}}^{(l)}) = \exp \left( -\sum _{j=1}^{k} \theta _{j} \left| x_{j}^{(i)} - x_{j}^{(l)} \right| ^{2} \right) \tag{8} \]

This correlation model has been widely used since the work of Sacks et al. [16]. It has the property that, as \({\mathbf {x}}^{(i)}\rightarrow {\mathbf {x}}^{(l)}\), the correlation tends to one, so that the random variables \(g({\mathbf {x}}^{(i)})\) and \(g({\mathbf {x}}^{(l)})\) become highly correlated; conversely, as the distance between the two points grows, the correlation tends to zero. The parameters \(\theta _{j}\) (\(j=1, \dots , k\)) control how quickly the correlation decays along each coordinate, thus setting the contribution of each RV to the correlation value.
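The behavior described above can be checked numerically with the short Python sketch below (assuming NumPy), which evaluates the squared exponential correlation of Eq. 8 between two sets of points; the points and \(\theta\) values are arbitrary illustrations.

```python
import numpy as np


def sq_exp_correlation(xa, xb, theta):
    """Squared exponential correlation (Eq. 8) between two point sets.

    xa : (na, k) array, xb : (nb, k) array, theta : (k,) non-negative weights.
    Returns the (na, nb) matrix exp(-sum_j theta_j * (xa_j - xb_j)**2).
    """
    xa = np.atleast_2d(np.asarray(xa, dtype=float))
    xb = np.atleast_2d(np.asarray(xb, dtype=float))
    theta = np.asarray(theta, dtype=float)
    diff2 = (xa[:, None, :] - xb[None, :, :]) ** 2        # (na, nb, k)
    return np.exp(-np.einsum("abk,k->ab", diff2, theta))


pts = np.array([[0.0, 0.0], [0.01, 0.0], [1.0, 0.0], [0.0, 1.0]])
R = sq_exp_correlation(pts, pts, theta=[10.0, 0.0])
# R[0, 1] ~ 0.999 (nearby points), R[0, 2] ~ 4.5e-5 (distant along x_1),
# R[0, 3] = 1.0 because theta_2 = 0 switches off the influence of x_2
```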

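Note that the Gaussian random field depends on the parameters \(\mu\), \(\sigma ^{2}\) and \(\theta _{j}\) (\(j=1, ..., k\)). To minimize the error of the surrogate model, these parameters are estimated by maximizing the likelihood of the observed data. According to reference [17], the likelihood function is written as:

\[ L(\mu , \sigma ^{2}, \varvec{\theta }) = \frac{1}{(2\pi \sigma ^{2})^{n/2} \, |\mathbf {\Psi }_{\theta }|^{1/2}} \exp \left[ -\frac{({\mathbf {g}} - \mathbf {1}\mu )^{T} \mathbf {\Psi }_{\theta }^{-1} ({\mathbf {g}} - \mathbf {1}\mu )}{2\sigma ^{2}} \right] \tag{9} \]

where \(\mathbf {1}\) is an \(n \times 1\) vector of ones and \(\mathbf {\Psi }_{\theta }\) is the \(n \times n\) correlation matrix with entries \(\mathbf {\Psi }_{\theta }({\mathbf {x}}^{(i)}, {\mathbf {x}}^{(l)})\), \(i, l = 1, \ldots , n\).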

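The maximum likelihood estimates (MLEs) of \(\mu\) and \(\sigma ^{2}\) are obtained by setting the derivatives of the natural logarithm of the likelihood function with respect to these parameters to zero, which leads to:

\[ {\hat{\mu }} = \frac{\mathbf {1}^{T} \mathbf {\Psi }_{\theta }^{-1} {\mathbf {g}}}{\mathbf {1}^{T} \mathbf {\Psi }_{\theta }^{-1} \mathbf {1}} \tag{10} \]

\[ {\hat{\sigma }}^{2} = \frac{({\mathbf {g}} - \mathbf {1}{\hat{\mu }})^{T} \mathbf {\Psi }_{\theta }^{-1} ({\mathbf {g}} - \mathbf {1}{\hat{\mu }})}{n} \tag{11} \]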

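Inserting these expressions back into the natural logarithm of the likelihood function gives the so-called concentrated ln-likelihood function, which, ignoring the constant terms, reads:

\[ \ln L \approx -\frac{n}{2} \ln {\hat{\sigma }}^{2} - \frac{1}{2} \ln |\mathbf {\Psi }_{\theta }| \tag{12} \]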

It is worth noting that this function depends only on the correlation matrix \(\mathbf {\Psi }_{\theta }\) and, hence, on the unknown parameters \(\theta _{j}\) (\(j=1, ..., k\)). Forrester et al. [12] suggest the use of a metaheuristic global search method (e.g., Genetic Algorithms, Simulated Annealing) to find the values that maximize Eq. 12. The MLEs of these parameters provide the optimal adjustment of the correlation function for prediction accuracy, reflecting the relative importance attributed to each variable [17].

Once this numerical optimization problem is solved, the estimated \(\theta _{j}\) define the correlation matrix \(\mathbf {\Psi }_{\theta }\), which is then used in Eqs. 10 and 11 to compute the estimates of \(\mu\) and \(\sigma ^{2}\).
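The estimation steps of Eqs. 10 to 12 can be sketched in Python (assuming NumPy) as below. The concentrated ln-likelihood is evaluated through a Cholesky factorization of the correlation matrix, and \(\theta\) is chosen by a plain random search over \(\log_{10}\theta\). This random search is only a simple stand-in for the Genetic Algorithm or Simulated Annealing suggested in [12]; the search bounds, the small diagonal nugget and the 1-D test function are assumptions of this sketch.

```python
import numpy as np


def corr_matrix(x, theta):
    """Squared exponential correlation matrix (Eq. 8) of the DoE x, shape (n, k)."""
    d2 = (x[:, None, :] - x[None, :, :]) ** 2
    return np.exp(-np.einsum("abk,k->ab", d2, theta))


def concentrated_lnlike(theta, x, g, nugget=1e-10):
    """Concentrated ln-likelihood (Eq. 12) of ordinary Kriging for a given theta.

    Also returns the MLEs mu_hat (Eq. 10) and sigma2_hat (Eq. 11).
    The small nugget on the diagonal is a numerical safeguard (an assumption).
    """
    n = g.size
    psi = corr_matrix(x, theta) + nugget * np.eye(n)
    c = np.linalg.cholesky(psi)                         # psi = c @ c.T

    def solve(b):                                       # psi^{-1} b via Cholesky
        return np.linalg.solve(c.T, np.linalg.solve(c, b))

    ones = np.ones(n)
    mu = ones @ solve(g) / (ones @ solve(ones))         # Eq. 10
    r = g - mu
    sigma2 = r @ solve(r) / n                           # Eq. 11
    ln_det = 2.0 * np.sum(np.log(np.diag(c)))           # ln|psi|
    return -0.5 * n * np.log(sigma2) - 0.5 * ln_det, mu, sigma2


def fit_theta(x, g, n_trials=500, log10_bounds=(-2.0, 2.0), rng=None):
    """Maximize Eq. 12 by random search over log10(theta) (stand-in for GA/SA)."""
    rng = np.random.default_rng(rng)
    best_lnl, best_theta = -np.inf, None
    for _ in range(n_trials):
        theta = 10.0 ** rng.uniform(*log10_bounds, size=x.shape[1])
        try:
            lnl, _, _ = concentrated_lnlike(theta, x, g)
        except np.linalg.LinAlgError:                   # psi not positive definite
            continue
        if np.isfinite(lnl) and lnl > best_lnl:
            best_lnl, best_theta = lnl, theta
    return best_theta, best_lnl


# Hypothetical 1-D example: 8 samples of a smooth function
x_doe = np.linspace(0.0, 1.0, 8)[:, None]
g_doe = np.sin(2.0 * np.pi * x_doe[:, 0])
theta_hat, lnl_hat = fit_theta(x_doe, g_doe, rng=0)
```

With a very large \(\theta\) the correlation matrix approaches the identity, and Eqs. 10 and 11 reduce to the sample mean and the (biased) sample variance of \(\mathbf{g}\), which is a convenient sanity check.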

To predict the value of the LSF at a new point \(\mathbf {x'}\), denoted \(g'\), the observed data \({\mathbf {g}}\) are augmented with this \(( n +1)\)-th value to give the vector \(\tilde{{\mathbf {g}}}=\left\{ {\mathbf {g}}^T, g' \right\} ^T\). Also, let \(\varvec{\psi }\) denote the vector of correlations between the observed data and the new prediction \({g}({\mathbf {x}}')\):

\[ \varvec{\psi } = \left[ \mathbf {\Psi }_{\theta }({\mathbf {x}}^{(1)}, \mathbf {x'}), \ldots , \mathbf {\Psi }_{\theta }({\mathbf {x}}^{(n)}, \mathbf {x'}) \right] ^{T} \tag{13} \]

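The augmented correlation matrix for the new data set takes the form:

\[ \tilde{\mathbf {\Psi }}_{\theta } = \begin{bmatrix} \mathbf {\Psi }_{\theta } & \varvec{\psi } \\ \varvec{\psi }^{T} & 1 \end{bmatrix} \tag{14} \]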

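Neglecting the constant terms, the augmented ln-likelihood function is found as:

\[ \ln L = -\frac{(\tilde{{\mathbf {g}}} - \mathbf {1}{\hat{\mu }})^{T} \tilde{\mathbf {\Psi }}_{\theta }^{-1} (\tilde{{\mathbf {g}}} - \mathbf {1}{\hat{\mu }})}{2{\hat{\sigma }}^{2}} \tag{15} \]

where \(\mathbf {1}\) now denotes an \((n+1) \times 1\) vector of ones.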

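Substituting \(\tilde{{\mathbf {g}}}\) and \(\tilde{\mathbf {\Psi }}_{\theta }\) into this expression, using the partitioned inverse of \(\tilde{\mathbf {\Psi }}_{\theta }\), yields:

\[ \ln L \approx \left[ \frac{-1}{2{\hat{\sigma }}^{2} (1 - \varvec{\psi }^{T} \mathbf {\Psi }_{\theta }^{-1} \varvec{\psi })} \right] (g' - {\hat{\mu }})^{2} + \left[ \frac{\varvec{\psi }^{T} \mathbf {\Psi }_{\theta }^{-1} ({\mathbf {g}} - \mathbf {1}{\hat{\mu }})}{{\hat{\sigma }}^{2} (1 - \varvec{\psi }^{T} \mathbf {\Psi }_{\theta }^{-1} \varvec{\psi })} \right] (g' - {\hat{\mu }}) \tag{16} \]

where the terms not involving \(g'\) have been dropped, so that the expression depends only on the new prediction \(g'\).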

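Setting the derivative of Eq. 16 with respect to \(g'\) to zero gives the MLE of \(g'\), which is the standard formula for the Kriging predictor:

\[ {\hat{g}}(\mathbf {x'}) = {\hat{\mu }} + \varvec{\psi }^{T} \mathbf {\Psi }_{\theta }^{-1} ({\mathbf {g}} - \mathbf {1}{\hat{\mu }}) \tag{17} \]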

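Kriging also provides an estimate of the local variance of the predictions, which is commonly used to build adaptive DoEs [24,25,26]. This Kriging variance is given by:

\[ {\hat{s}}^{2}(\mathbf {x'}) = {\hat{\sigma }}^{2} \left[ 1 - \varvec{\psi }^{T} \mathbf {\Psi }_{\theta }^{-1} \varvec{\psi } + \frac{(1 - \mathbf {1}^{T} \mathbf {\Psi }_{\theta }^{-1} \varvec{\psi })^{2}}{\mathbf {1}^{T} \mathbf {\Psi }_{\theta }^{-1} \mathbf {1}} \right] \tag{18} \]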
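Given fitted parameters, the predictor (Eq. 17) and the variance (Eq. 18) take only a few matrix operations. The Python class below (assuming NumPy; \(\theta\) is supplied by the user, e.g., from a likelihood maximization, and the nugget is a numerical safeguard) is a minimal ordinary Kriging sketch, not the Matlab code used in the paper. It uses an explicit inverse for brevity, where a Cholesky-based solve would be preferable for larger DoEs.

```python
import numpy as np


def corr(xa, xb, theta):
    """Squared exponential correlation matrix between point sets xa and xb."""
    d2 = (xa[:, None, :] - xb[None, :, :]) ** 2
    return np.exp(-np.einsum("abk,k->ab", d2, theta))


class OrdinaryKriging:
    """Minimal ordinary Kriging interpolator with a user-supplied theta."""

    def __init__(self, x, g, theta, nugget=1e-10):
        self.x = np.asarray(x, dtype=float)
        self.theta = np.asarray(theta, dtype=float)
        g = np.asarray(g, dtype=float)
        n = g.size
        psi = corr(self.x, self.x, self.theta) + nugget * np.eye(n)
        self.psi_inv = np.linalg.inv(psi)               # explicit inverse for brevity
        ones = np.ones(n)
        self.one_psi_one = ones @ self.psi_inv @ ones
        self.mu = ones @ self.psi_inv @ g / self.one_psi_one        # Eq. 10
        resid = g - self.mu
        self.sigma2 = resid @ self.psi_inv @ resid / n               # Eq. 11
        self.weights = self.psi_inv @ resid             # Psi^{-1} (g - 1 mu)

    def predict(self, x_new):
        """Kriging mean (Eq. 17) and variance (Eq. 18) at points x_new, shape (m, k)."""
        psi = corr(self.x, np.atleast_2d(x_new), self.theta)        # (n, m), Eq. 13
        mean = self.mu + psi.T @ self.weights
        psi_inv_psi = self.psi_inv @ psi
        quad = np.einsum("nm,nm->m", psi, psi_inv_psi)  # psi^T Psi^{-1} psi
        lin = 1.0 - psi_inv_psi.sum(axis=0)             # 1 - 1^T Psi^{-1} psi
        var = self.sigma2 * (1.0 - quad + lin ** 2 / self.one_psi_one)
        return mean, np.maximum(var, 0.0)               # clip round-off negatives


# Hypothetical 1-D example with an assumed theta
x_doe = np.linspace(0.0, 1.0, 6)[:, None]
model = OrdinaryKriging(x_doe, np.sin(2.0 * np.pi * x_doe[:, 0]), theta=[20.0])
mean, var = model.predict(np.array([[0.1], [0.5]]))
```

At the training points the predictor reproduces the data and the variance is essentially zero, reflecting the interpolation property; between points the variance grows, which is what adaptive DoE strategies exploit.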

As can be noticed, the Kriging surrogate is built so that its predictions pass through all the data points, interpolating them. However, to ensure a globally accurate Kriging model, the prediction accuracy must be assessed over the whole design space by computing the differences between the Kriging predictions (\({\hat{g}}\)) and the values obtained from the model being approximated. In this procedure, a set of \(n_t\) test points is distributed over the design space and predictions are made at these points. The normalized root mean squared error (NRMSE) is a frequently used metric, defined as:

Generally, an NRMSE lower than 10% indicates a surrogate with satisfactory prediction capability [12].
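Because the normalization of the NRMSE varies between authors, the Python sketch below (assuming NumPy) normalizes by the range of the reference (FE) values over the \(n_t\) test points; this choice is an assumption, and dividing by the mean or standard deviation of the reference values is also common. The test values are hypothetical.

```python
import numpy as np


def nrmse(g_ref, g_pred):
    """NRMSE over n_t test points, normalized by the range of the reference
    values (assumed normalization; the mean or std are also used in practice)."""
    g_ref = np.asarray(g_ref, dtype=float)
    g_pred = np.asarray(g_pred, dtype=float)
    rmse = np.sqrt(np.mean((g_ref - g_pred) ** 2))
    return rmse / (g_ref.max() - g_ref.min())


# Hypothetical FE (reference) values and Kriging predictions at 4 test points
err = nrmse([0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 2.0, 4.0])   # 0.5 / 3, about 0.167
acceptable = err < 0.10                                     # 10% criterion of [12]
```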

Finite Element Modeling of Rotor-Bearing Systems

Figure 1 depicts a rotor-bearing system in a generic deformed state, rotating with angular velocity \({\dot{\phi }}\). It comprises three basic components: ideally rigid disks, a flexible shaft with distributed mass and stiffness, and bearings that connect the whole system to a base, which is also assumed to be rigid.

Fig. 1 Typical rotor-bearing system configuration (adapted from [39])

In the study of the dynamic characteristics of rotor-bearing systems, the finite element method has proved to be a highly valuable modeling tool, providing a systematic approach for discretizing the original continuous system into a set of finite elements, thus leading to an approximate discrete model with a finite number of degrees of freedom (DOFs). The number of DOFs of a FE model depends on the number of elements chosen (i.e., the discretization mesh) and on the characteristics of the element(s) adopted. The latter include the specific theory upon which the element formulation is based and the degree of the interpolation polynomials. More precisely, the total number of DOFs of a FE model is the number of distinct nodes in the mesh (nodes shared by adjacent elements being counted once) multiplied by the number of DOFs per node. The total number of DOFs defines the number of differential equations of motion and, as a result, is directly related to the computational effort involved in response predictions based on the FE model.

In the work reported here, beam elements based on Timoshenko’s theory have been used, each element having two nodes and four DOFs per node (two displacements, u and v, and two rotations, \(\theta _x\) and \(\theta _y\), as indicated in Fig. 1).
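As a worked example with a hypothetical mesh (not the model analyzed later), consider a shaft discretized into \(n_e = 10\) two-node Timoshenko elements with four DOFs per node. Since adjacent elements share a node, the mesh has \(n_e + 1 = 11\) distinct nodes and, before any boundary conditions are applied,

\[ N = (n_e + 1) \times 4 = 11 \times 4 = 44 \]

whereas multiplying the number of elements by the nodes per element and by the DOFs per node (\(10 \times 2 \times 4 = 80\)) would count every interior node twice.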

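Details about the FE modeling of rotor-bearing systems have been extensively reported in the literature [39,40,41]. For the purposes of the present study, it suffices to state that, under the usual hypotheses of linear elastodynamics and for constant rotation speed \(({\dot{\phi }}={\Omega} )\), an \(N\)-DOF model is represented by a set of N coupled second-order ordinary differential equations, which can be written in matrix form as:

\[ {\mathbf {M}} \ddot{{\mathbf {q}}}(t) + \left( \mathbf {C_s} + \mathbf {C_b} + {\Omega} \mathbf {C_g} \right) \dot{{\mathbf {q}}}(t) + \left( \mathbf {K_s} + \mathbf {K_b} \right) {\mathbf {q}}(t) = {\mathbf {f}}(t) \tag{20} \]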

where \({\mathbf {q}}(t) \in {\mathbb {R}}^N\) is the vector formed by the ensemble of displacements and rotations (see Fig. 1) at all the nodes of the FE model, \({\mathbf {M}}\in {\mathbb {R}}^{N \times N}\) is the inertia matrix, \(\mathbf {C_ s }\in {\mathbb {R}}^{N \times N}\) and \(\mathbf {K_ s }\in {\mathbb {R}}^{N \times N}\) are, respectively, the structural damping and stiffness matrices, \(\mathbf {C_ g }\in {\mathbb {R}}^{N \times N}\) is the gyroscopic matrix, \(\mathbf {C_ b }\in {\mathbb {R}}^{N \times N}\) is the bearing damping matrix and \(\mathbf {K_ b }\in {\mathbb {R}}^{N \times N}\) is the bearing stiffness matrix. In addition, the forcing vector is represented by \({\mathbf {f}}(t) \in {\mathbb {R}}^N\) and includes, as a particular case of interest here, gravitational forces and synchronous excitation induced by the rotor residual unbalance.

Of particular importance are the cases in which the rotor is supported by fluid-film bearings. In these cases, which are considered here, hydrodynamic forces must be calculated from the numerical solution of the Reynolds equation, which determines the pressure distribution in the thin fluid film. The hydrodynamic pressure generated within the film and the load-carrying capacity of the bearing depend on the journal eccentricity, shaft speed, absolute viscosity of the fluid, bearing dimensions and radial clearance [42]. Adopting the short-bearing assumption [43], linearized speed-dependent bearing stiffness and damping matrices can be written in closed form as functions of the journal eccentricity and the modified Sommerfeld number [41]. The complete formulation is presented in Appendix A. This simple linear bearing model has been shown to deliver accurate results for plain bearings of small slenderness ratios, provided the journal motion has sufficiently small amplitude. However, in situations where the short-bearing approximation fails to describe the dynamic behavior of the rotor-bearing system, more involved models, such as nonlinear hydrodynamic bearing models, should be used [44].

Under the short-bearing assumption, Eq. 20 can be solved for critical speeds and mode shapes using eigenvalue analysis [45]. In addition, for harmonic excitation forces \(\mathbf{f} (t)={{\mathbf {F}}}e^{j \omega t}\) (including synchronous unbalance forces), the steady-state harmonic solution is \(\mathbf{q} (t)={{\mathbf {Q}}}e^{j \omega t}\), with:

\[ \left[ \mathbf {K_s} + \mathbf {K_b} - \omega ^{2} {\mathbf {M}} + j\omega \left( \mathbf {C_s} + \mathbf {C_b} + {\Omega} \mathbf {C_g} \right) \right] {\mathbf {Q}}(\omega ) = {\mathbf {F}}(\omega ) \tag{21} \]

where \({\mathbf {Q}}(\omega )\) is the complex response amplitude vector and \({\mathbf {F}}(\omega )\) is the complex forcing vector in the frequency domain.

It is worth pointing out that the resolution of the system of linear equations given in Eq. 21 provides the harmonic response corresponding to each DOF of the model in terms of amplitude and phase. Whenever some parameters of the FE model are represented by random variables, such responses can then be used to evaluate limit-state functions in the context of reliability problems, which is the objective here.

It is also important to mention that computing the responses according to Eq. 21 involves solving a system of N complex equations for many values of \(\omega\) within the frequency band of interest, which can be very costly when N is large. Condensation techniques, such as modal projections [40], have been used to reduce the number of equations, but they may introduce inaccuracies in the model. Such techniques are not used here but could be straightforwardly included as an additional step of the numerical procedures.
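The frequency sweep described above can be sketched in Python (assuming NumPy) as one complex linear solve per frequency line. The matrices are generic placeholders rather than the rotor model of this paper, and they are held fixed during the sweep; for a synchronous unbalance response (\(\omega = \Omega\)), the speed-dependent bearing coefficients and the gyroscopic term would have to be updated at each frequency, and the unbalance force amplitude grows with \(\omega^2\).

```python
import numpy as np


def harmonic_response(M, C, K, F, omegas):
    """Steady-state amplitudes Q(omega) solving (K - w^2 M + j w C) Q = F.

    M, C, K : (N, N) real arrays; C collects all velocity-proportional terms
              (structural and bearing damping plus the speed-scaled gyroscopic
              matrix), evaluated once at a fixed rotor speed.
    F       : (N,) complex forcing amplitude, held constant over the sweep.
    omegas  : sequence of excitation frequencies [rad/s].
    Returns a complex array of shape (len(omegas), N).
    """
    Q = np.empty((len(omegas), len(F)), dtype=complex)
    for i, w in enumerate(omegas):
        dyn_stiffness = K - w ** 2 * M + 1j * w * C   # dynamic stiffness matrix
        Q[i] = np.linalg.solve(dyn_stiffness, F)      # one N x N complex solve
    return Q


# Hypothetical single-DOF check: m = 2, c = 0.5, k = 800 (resonance at 20 rad/s)
w_grid = np.linspace(0.0, 40.0, 81)
Q_sdof = harmonic_response(np.array([[2.0]]), np.array([[0.5]]),
                           np.array([[800.0]]), np.array([1.0 + 0j]), w_grid)
```

For a model with N DOFs and many frequency lines, the cost is dominated by these repeated factorizations, which is why condensation techniques are attractive when N is large.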

Numerical Strategy

The flowchart in Fig. 2 outlines the investigation route, in which three main groups of procedures are identified, namely: (a) Rotordynamic Analysis, in which a deterministic finite element model is used to simulate the dynamic responses of the rotor-bearing system of interest; (b) Surrogate Modeling, in which the Kriging metamodel is constructed through a training procedure that uses the responses of the FE model for a DoE generated by the LHS technique; (c) Reliability Analysis, in which, for benchmarking purposes, reliability is estimated using four different strategies: (i) using the full FE model in combination with MCS (identified as FEM/MCS); (ii) using the full FE model combined with FORM (denoted as FEM/FORM); (iii) using Kriging metamodeling as a surrogate for the FE model, in combination with MCS (Kriging/MCS); and (iv) using Kriging metamodeling combined with FORM (Kriging/FORM).

The very first step involves setting up the basic features of the engineering problem, which are:

- The rotor-bearing system characteristics (geometry, material properties, boundary conditions, etc.) and its operating conditions;
- The basic design variables capable of affecting the system dynamic behavior due to their random nature, and the probabilistic models representing these RVs; and
- The limit-states that will be used to separate the design space into safe and unsafe regions for the purpose of reliability assessment.

Once this information is gathered, the next step consists of constructing a deterministic FE model of the rotor-bearing system. This model serves as a high-fidelity tool for rotordynamic analysis and is used to generate the training data for the Kriging surrogates. At this stage, the Latin Hypercube technique is applied to sample a set of design points throughout the domain of the RVs, and each of these points is evaluated with the FE model. The observed responses are used to maximize the likelihood function (Eq. 9), which yields the Kriging predictor (Eq. 17). The resulting surrogate is then evaluated at new points to verify its accuracy, using validation metrics such as the NRMSE. If the Kriging model performs satisfactorily, it is deemed suitable as a proxy for the original FE model.
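To make the predictor-construction step concrete, the sketch below implements a generic ordinary Kriging predictor (constant trend, Gaussian correlation) with NumPy. It is a minimal sketch under stated assumptions, not the authors' Matlab code, and its details may differ from Eq. 17: the correlation parameter `theta` is supplied by hand instead of being obtained by maximizing the likelihood (Eq. 9), a tiny `nugget` is added for numerical stability, and the names `gaussian_corr` and `OrdinaryKriging` are hypothetical.

```python
import numpy as np


def gaussian_corr(A, B, theta):
    """Gaussian correlation matrix R_ij = exp(-sum_k theta_k (A_ik - B_jk)^2)."""
    diff2 = (A[:, None, :] - B[None, :, :]) ** 2
    return np.exp(-(diff2 * theta).sum(axis=2))


class OrdinaryKriging:
    """Constant-trend Kriging predictor with a user-supplied theta (hypothetical helper).

    In the paper theta results from maximizing the likelihood (Eq. 9); here it is
    fixed by the caller to keep the sketch short.
    """

    def __init__(self, X, y, theta, nugget=1e-10):
        self.X = np.asarray(X, dtype=float)
        self.theta = np.asarray(theta, dtype=float)
        y = np.asarray(y, dtype=float)
        n = y.size
        R = gaussian_corr(self.X, self.X, self.theta) + nugget * np.eye(n)
        self.L = np.linalg.cholesky(R)            # R = L L^T
        ones = np.ones(n)
        # Generalized least-squares estimate of the constant trend
        self.beta = (ones @ self._solve(y)) / (ones @ self._solve(ones))
        # Weights R^{-1} (y - beta), computed once and reused for every prediction
        self.weights = self._solve(y - self.beta)

    def _solve(self, b):
        """Solve R x = b using the Cholesky factor (L z = b, then L^T x = z)."""
        return np.linalg.solve(self.L.T, np.linalg.solve(self.L, b))

    def predict(self, X_new):
        """Kriging mean: beta + r(x)^T R^{-1} (y - beta)."""
        r = gaussian_corr(np.atleast_2d(np.asarray(X_new, dtype=float)), self.X, self.theta)
        return self.beta + r @ self.weights


# Usage on a one-dimensional toy function (not the rotor model)
X_train = np.linspace(0.0, 1.0, 6).reshape(-1, 1)
y_train = np.sin(2.0 * np.pi * X_train[:, 0])
model = OrdinaryKriging(X_train, y_train, theta=[10.0])
y_mid = model.predict([[0.5], [0.55]])
```

Once the weights are stored, each new prediction needs only one correlation vector and one dot product, which is why evaluating the surrogate is far cheaper than re-solving the FE model.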

The final step is to use the Kriging predictor in the reliability analysis itself. First, reference values of the PoF are obtained with the FE model, using both the first-order approximation (FEM/FORM) and Monte Carlo simulation (FEM/MCS). Afterwards, the Kriging-based reliability is evaluated by means of the Kriging/MCS and Kriging/FORM strategies. The Kriging/FORM results are then systematically compared with the reference values to assess the effectiveness of the strategy in terms of computational cost and prediction accuracy.

A computer code was implemented in the Matlab® environment to carry out the Kriging metamodeling and the reliability analysis described in the flowchart of Fig. 2. This tool can perform Monte Carlo simulations and FORM-based analyses using the implicit limit-state functions associated with the rotor-bearing system problem.

Application Problem

To demonstrate the application of the Kriging/FORM method to the reliability analysis of rotor-bearing systems, a simplified engineering problem is proposed, consisting of a reliability assessment based on the linear forced responses of the system discretized by FE, as shown in Fig. 3. This system was used in reference [41] to analyze the effect of hydrodynamic bearings on flexible rotors.

The horizontal rotor has two disks and is supported at both ends by cylindrical oil-film bearings. Each bearing supports a different static load associated with the weight of the rotor. No structural damping is considered. The main physical and geometrical parameters of the rotor are presented in Table 1. Some of these parameters will later be considered as random, with the corresponding values in Table 1 taken as their mean values.

The shaft-line FE model is built from 6 Timoshenko beam elements, which leads to a model with 7 equally spaced nodes and 28 DOFs (four per node). The hydrodynamic bearings are placed at nodes 1 and 7, whereas the disks are positioned at nodes 3 and 5. This coarse discretization was chosen after a mesh convergence study, to keep the model as simple as possible while retaining a reasonable level of accuracy. A linear journal-bearing model is used for the sake of simplicity.

The FE model was implemented in Matlab®, by adapting the main code provided in [46] to the purposes of this work.

Deterministic Simulations

First, to evaluate the dynamic features of the rotor-bearing system defined above, Eq. 21 was solved over a range of rotation speeds and the Campbell diagram shown in Fig. 4 was constructed. As observed, there is a critical speed at 1065 revolutions per minute (RPM), where the curve representing the variation of the natural frequency associated with a lightly damped forward-whirling mode (Fig. 5) intersects the line representing the rotation speed.

In the vicinity of the critical speed, a large amplification is observed at both the left and right bearings, as illustrated in Fig. 6. The amplitudes of the steady-state forced response of the system are given by the semi-major axis of the elliptical whirl orbits of the bearing journals shown in Fig. 5, considering a mass unbalance of 2000 g\(\cdot\)mm arbitrarily located at 0\(^\circ\) in each disk (which leads to in-phase unbalance forces). This value of residual unbalance is compatible with the limits specified by ISO 21940-12 [47] for balance quality grade G6.3.
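The semi-major axis used as the response amplitude can be obtained directly from the complex harmonic amplitudes of the two lateral displacements at a node, such as those returned by Eq. 21. A minimal sketch, assuming the convention \(x(t)=\mathrm{Re}(X e^{i\omega t})\), \(y(t)=\mathrm{Re}(Y e^{i\omega t})\) (the original code may use a different sign convention), splits the orbit into forward and backward circular components whose radii add up to the semi-major axis. The function name `orbit_axes` and the amplitudes in the usage line are hypothetical.

```python
import cmath


def orbit_axes(X, Y):
    """Semi-major and semi-minor axes of the orbit x = Re(X e^{iwt}), y = Re(Y e^{iwt}).

    x + i y = (X + iY)/2 e^{iwt} + conj((X - iY)/2) e^{-iwt}: a forward circle of
    radius |X + iY|/2 plus a backward circle of radius |X - iY|/2.
    """
    r_fwd = abs(X + 1j * Y) / 2.0
    r_bwd = abs(X - 1j * Y) / 2.0
    return r_fwd + r_bwd, abs(r_fwd - r_bwd)


# Hypothetical horizontal/vertical journal amplitudes (micrometres, with phase)
a, b = orbit_axes(40.0 * cmath.exp(0.2j), 25.0 * cmath.exp(-1.2j))
```

A purely forward circular orbit gives a zero backward radius, and an in-phase (straight-line) orbit gives equal radii and a zero semi-minor axis.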

Characterization of the Random Variables

Uncertainties in fluid-film temperature due to unpredictable operating conditions, and in bearing clearances due to machining tolerances and potential wear caused by surface interaction, are commonly addressed when assessing the stochastic response of rotor-bearing systems [27,28,29,30]. Oil-film temperature usually has a nonlinear effect on the dynamic viscosity of the fluid. Higher temperatures, associated with lower viscosity, lead to larger operating eccentricities at the bearings [48]. Larger radial clearances also lead to higher eccentricities. In addition, shaft deflections at the bearings can grow significantly depending on the magnitude of the excitation forces, which are usually caused by an uneven distribution of mass around the axis of rotation and are inherently random.
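The trend described above (lower viscosity or larger clearance, hence larger eccentricity) can be illustrated with the short-bearing approximation of Ocvirk [43]. The sketch below assumes that approximation, which may not be the bearing model used in this work, and solves the static load balance for the eccentricity ratio by bisection. All numerical values in the usage lines are hypothetical and are not taken from Table 1; the function names are likewise illustrative.

```python
import math


def short_bearing_load_factor(eps):
    """Ocvirk short-bearing factor eps/(1-eps^2)^2 * sqrt(pi^2 (1-eps^2) + 16 eps^2)."""
    s = 1.0 - eps * eps
    return eps / s**2 * math.sqrt(math.pi**2 * s + 16.0 * eps * eps)


def eccentricity_ratio(W, mu, omega, R, L, C, tol=1e-12):
    """Solve W = mu*omega*R*L^3/(4*C^2) * f(eps) for eps by bisection (SI units).

    f increases monotonically from 0 (eps = 0) to infinity (eps -> 1),
    so the bracket [0, 1) always contains exactly one root.
    """
    target = 4.0 * C**2 * W / (mu * omega * R * L**3)
    lo, hi = 0.0, 1.0 - 1e-12
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if short_bearing_load_factor(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical data (not Table 1): 250 N static load, 50 mm journal, 20 mm long
omega = 1065.0 * 2.0 * math.pi / 60.0              # rad/s
base = dict(W=250.0, omega=omega, R=0.025, L=0.02)
eps_ref = eccentricity_ratio(mu=0.020, C=60e-6, **base)
eps_hot = eccentricity_ratio(mu=0.012, C=60e-6, **base)     # hotter, thinner oil
eps_loose = eccentricity_ratio(mu=0.020, C=66e-6, **base)   # larger clearance
```

Both perturbations raise the required load factor, and because that factor grows monotonically with the eccentricity ratio, the journal settles at a larger eccentricity in each case.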

Following this reasoning, Table 2 presents the random variables considered in this problem, namely fluid-film temperatures (\(T_{oil}^{L}\), \(T_{oil}^{R}\)), bearing radial clearances (\(C_{r}^{L}\), \(C_{r}^{R}\)) and residual disk unbalances (\(U_{res}^{L}\), \(U_{res}^{R}\)), along with the corresponding means, standard deviations (SD), coefficients of variation (CV) and PDFs. In this table, superscripts \((.)^{L}\) and \((.)^{R}\) refer to the left and right bearings, respectively.

Gaussian distributions are used to model the two temperature-related variables, whereas Gamma distributions, whose support is \({\mathbb {R}}^{+}\), are assigned to the other variables. All the random variables are also assumed to be mutually independent.
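For the Gamma-distributed variables, the shape and scale parameters follow from the mean and standard deviation by moment matching. The NumPy sketch below assumes means and standard deviations inferred from the \(\mu \pm 4\sigma\) plotting intervals quoted for the contour plots of the Kriging predictions, identical for the left and right bearings (Table 2 remains the authoritative source). The dictionary `RVS` and the helpers `gamma_params` and `sample` are hypothetical names.

```python
import numpy as np

# (distribution, mean, standard deviation); values inferred from the
# mu +/- 4 sigma plotting intervals, assumed identical for left and right.
RVS = {
    "T_oil": ("normal", 35.0, 7.0),      # oil-film temperature, degC
    "C_r": ("gamma", 60.0, 3.0),         # radial clearance, micrometres
    "U_res": ("gamma", 2000.0, 200.0),   # residual unbalance, g*mm
}


def gamma_params(mean, sd):
    """Moment matching: mean = k * theta and variance = k * theta**2."""
    k = (mean / sd) ** 2
    theta = sd**2 / mean
    return k, theta


def sample(name, n, rng):
    """Draw n independent samples of one random variable."""
    kind, mean, sd = RVS[name]
    if kind == "normal":
        return rng.normal(mean, sd, n)
    k, theta = gamma_params(mean, sd)
    return rng.gamma(k, theta, n)        # strictly positive support


rng = np.random.default_rng(0)
clearance = sample("C_r", 100_000, rng)
```

For the clearance this gives a shape parameter k = 400 and a scale of 0.15 µm; such a large shape parameter makes the Gamma law nearly symmetric while still excluding non-physical negative clearances.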

The individual influences of the six parameters defined in Table 2 on the vibration amplitudes at both left and right bearings can be assessed in Fig. 7, in which unbalance response amplitudes are shown for different deterministic values of these parameters.

As observed, variations of \(T_{oil}^{L}\) and \(C_{r}^{L}\) have a stronger influence on the response at the left bearing, since these parameters directly affect the oil-film stiffness and damping of this bearing. Likewise, \(T_{oil}^{R}\) and \(C_{r}^{R}\) mostly affect the response at the right bearing. In other words, the direct influence of each bearing's parameters on its own response is more pronounced than their cross influence on the opposite bearing. In contrast, the effect of increasing the residual mass unbalance (either \(U_{res}^{L}\) or \(U_{res}^{R}\)) is felt equally at both bearings, with a proportional increase of shaft deflection near the critical speed.

Definition of the Limit-State Functions

Although a variety of performance criteria could be used to formulate LSFs associated with the dynamic behavior of a rotor-bearing system, the interest here is focused on the amplitudes of the unbalance responses at the bearings. The main reason for this choice is that, from the engineering standpoint, it is broadly recognized that safe and reliable operation requires limiting the maximum journal vibration amplitude relative to the bearing housing. A commonly accepted limit is 80% of the design diametric bearing clearance [49]. Once the amplitude exceeds this threshold, short-term damage often occurs and bearing life can be severely shortened by rubbing, which can ultimately lead to catastrophic failure if corrective actions are not taken.

In the case of the fluid-film bearings adopted in this study, the design diametric clearance is twice the radial clearance given in Table 1, i.e., 120 \(\upmu\)m. To satisfy the criterion above, the maximum allowable vibration amplitude at the bearings is therefore set to \(0.8 \times 120 = 96\) \(\upmu\)m, and the associated serviceability limit-state functions for the two bearings are defined as:

where \(Q^{L}({\mathbf {X}})\) and \(Q^{R}({\mathbf {X}})\) indicate the unbalance responses at the left and right bearings, respectively, obtained from the FE model at the critical speed. In addition, \({\mathbf {X}}\) contains the six RVs defined in Table 2.

Kriging Surrogate Models

The implicit performance functions in Eqs. 22 and 23 are approximated by Kriging surrogates as follows:

where \({\hat{Q}}^{L}({\mathbf {X}})\) and \({\hat{Q}}^{R}({\mathbf {X}})\) are the Kriging predictors emulating the underlying FE model responses. To construct these predictors, the LHS technique was used to generate a set of design points on the domains of the RVs, and the rotor response at each of these points was evaluated with the FE model. The observed responses were then used to build the correlation matrices of the Kriging predictors, which were finally used to estimate the responses at new points in order to evaluate their accuracy.
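The LHS design step can be sketched in a few lines of NumPy. This is a generic Latin Hypercube construction (each axis split into equal strata, one point per stratum), not necessarily the implementation used by the authors. It assumes that the training points are spread over the same \(\mu \pm 4\sigma\) box quoted for the contour plots of the Kriging predictions; the variable ordering and the name `latin_hypercube` are hypothetical.

```python
import numpy as np


def latin_hypercube(n, lower, upper, rng):
    """n-point Latin Hypercube sample on the box [lower, upper].

    Each axis is split into n equal strata and every stratum receives exactly
    one point; strata are paired across axes by independent random permutations.
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    d = lower.size
    strata = np.column_stack([rng.permutation(n) for _ in range(d)])
    unit = (strata + rng.random((n, d))) / n      # one point per stratum, in [0, 1)
    return lower + unit * (upper - lower)


# Assumed order: T_oil^L, T_oil^R, C_r^L, C_r^R, U_res^L, U_res^R (mu +/- 4 sigma bounds)
lower = [7.0, 7.0, 48.0, 48.0, 1200.0, 1200.0]
upper = [63.0, 63.0, 72.0, 72.0, 2800.0, 2800.0]
doe = latin_hypercube(200, lower, upper, np.random.default_rng(1))
```

Each row of `doe` would then be passed to the FE model to obtain one training response; the stratification guarantees that every variable's range is covered evenly even with few points.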

Figure 8 shows the prediction of the maximum vibration amplitude at the left bearing, obtained with a Kriging surrogate trained with 200 points. Each tile presents a contour plot of the response (in \(\upmu\)m, with values indicated by the color scale on the right) versus two of the six RVs. The latter were varied over intervals of length equal to eight standard deviations, centered at the mean values, i.e., \(\left[ \mu _{x_i}-4\sigma _{x_i} \le x_{i} \le \mu _{x_i}+4\sigma _{x_i}\right]\).

Fig. 8 Contour plots of the Kriging prediction of the maximum response amplitude at the left bearing, using 200 training points. The RVs are varied according to the intervals \(\left[ 7^{\circ }C \le T_{oil}^{L,R} \le 63^{\circ }C \right]\), \(\left[ 48~\upmu m \le C_{r}^{L,R} \le 72~\upmu m \right]\), and \(\left[ 1200~g \cdot mm \le U_{res}^{L,R} \le 2800~g \cdot mm \right]\)

As expected, for the left bearing, the parameters \(T_{oil}^{L}\) and \(C_{r}^{L}\) have a strong influence (reflected in large variations of the response amplitude), owing to their direct effect on the oil-film stiffness and damping. Also, the tile \(U_{res}^{L} \times U_{res}^{R}\) successfully captures the linear relationship between the mass unbalance and the synchronous vibration amplitude.

The effect of the size of the DoE used for Kriging training on the prediction accuracy is illustrated by the scatter plots in Fig. 9, which compare the NRMSE of models generated with 25, 50, 100 and 200 training points with respect to the full FE model results. A set of 1000 verification points sampled on the domain of the six RVs (i.e., \(\left[ \mu _{x_i}-4\sigma _{x_i} \le x_{i} \le \mu _{x_i}+4\sigma _{x_i}\right]\), \(i=1,2, \cdots , 6\)) is used to assess the global accuracy of the four surrogates.
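The NRMSE comparison over the verification points reduces to a one-line computation once FE and Kriging responses are available. The sketch below assumes normalization by the range of the reference (FE) responses; the paper defines its own NRMSE in an earlier section, and dividing by the mean or the standard deviation of the reference values are other common conventions. The function name `nrmse` is hypothetical.

```python
import numpy as np


def nrmse(y_ref, y_pred):
    """RMSE between predictions and reference values, divided by the range of y_ref.

    Range normalization is an assumption of this sketch; dividing by the mean or
    the standard deviation of y_ref are other common conventions.
    """
    y_ref = np.asarray(y_ref, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_pred - y_ref) ** 2))
    return rmse / (y_ref.max() - y_ref.min())
```

In this setting it would be called as `nrmse(q_fem, q_kriging)`, where the two hypothetical arrays hold the 1000 FE and Kriging amplitudes at the verification points; a value closer to zero indicates a better global fit.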

The results show a consistent reduction of NRMSE values as the number of training points increases, indicating a better correlation with FE model results and, consequently, a better predictive capability. The Kriging model generated from 200 training points presents the smallest global error.

It is worth noting that some points lie beyond the nominal diametric clearance limit (120 \(\upmu\)m). This is because the bearing clearances are also random variables, so the allowed response amplitude at the bearing location varies with the radial clearance sampled for each verification point.

Similar results are presented for the right bearing. In the tile plot depicted in Fig. 10, the parameters \(T_{oil}^{R}\) and \(C_{r}^{R}\) appear as the most influential on the vibration amplitude, and the tile \(U_{res}^{L} \times U_{res}^{R}\) replicates the behavior previously observed in Fig. 8 for the left bearing. In this case, \(T_{oil}^{L}\) and \(C_{r}^{L}\) do not exert significant effect on the response.

The influence of the training set size on the prediction accuracy is depicted in Fig. 11. Again, the global accuracy of the Kriging interpolation is improved as the number of training points increases.

Fig. 10 Contour plots of the Kriging prediction of the maximum response amplitude at the right bearing, using 200 training points. The RVs are varied according to the intervals \(\left[ 7^{\circ }C \le T_{oil}^{L,R} \le 63^{\circ }C \right]\), \(\left[ 48~\upmu m \le C_{r}^{L,R} \le 72~\upmu m \right]\), and \(\left[ 1200~g \cdot mm \le U_{res}^{L,R} \le 2800~g \cdot mm \right]\)

In terms of computational efficiency, Table 3 presents the CPU time required to construct the Kriging surrogates on an Intel® Core™ i5-2450M 2.5 GHz CPU with 6 GB RAM. Although results are presented for the metamodels of the responses at both bearings, no significant difference is expected between them since, for a given set of metamodeling settings, the computations are essentially the same.

As observed in Table 3, the cumulative time grows quadratically as the number of training points increases, mostly due to the sampling process and the search for the parameters that maximize the likelihood of the observed data. In contrast, the time spent on FE model simulations grows linearly with the number of training points.

By performing a linear regression of the observed data, the time required to train a Kriging surrogate, \(t_{\text {train}}\), as a function of the number of training points (\(n_p\)) and the time required for a single FE evaluation (\(t_{\text {FEM}}\)), is found to be:

Depending on the size of the training sample, constructing a Kriging surrogate model might therefore become computationally costly, especially when dealing with extremely time-demanding FE models. However, one of the greatest benefits of Kriging metamodels is that the CPU time required to make a single prediction with the surrogate is orders of magnitude shorter than that required to run an FE model simulation (see Table 3). This is an advantageous feature in the scope of reliability analysis.

Reliability Analyses

Before applying the strategy consisting in combining Kriging with FORM (identified as Kriging/FORM) to reliability assessment of the rotor considered here, it was found useful, mainly for benchmarking purposes, to perform similar assessments using three alternative routes, namely: (a) using the FE model combined with Monte Carlo simulation (FEM/MCS); (b) using the FE model in combination with FORM (FEM/FORM); (c) using the Kriging predictors combined with MCS (Kriging/MCS).

Figure 12 shows the convergence curves of the FEM/MCS and Kriging/MCS reliability analyses for the left and right bearings, with the Kriging model trained using 200 points. To ensure adequate convergence, \(10^6\) simulations were carried out. Reference [50] has shown that the percent error of the Monte Carlo PoF estimate can be related to the total number of simulations by Shooman's formula:

\[ \epsilon ^{\%} = 200\sqrt{\frac{1-P_{f}^{MCS}}{N_t\,P_{f}^{MCS}}} \]

where \(P_{f}^{MCS}\) is the estimate of the true \(P_f\), and \(N_t\) is the total number of simulations. There is a 95% probability that the exact value of \(P_f\) lies within the interval \(P_{f}^{MCS}(1\pm \epsilon ^{\%})\). Therefore, with \(10^6\) simulations and given the anticipated reliability level of this application problem, the errors in the estimated probabilities of failure are within 10% with a probability of 95%.

It can be seen that convergence is achieved after \(10^5\) simulations for both approaches, and that the reliability of the left bearing is slightly higher than that of the right bearing, namely \(R^L=99.96\%\) and \(R^R=99.88\%\) according to the FEM/MCS results. This can be explained by the fact that, since the rotor is not symmetric, the bearings are subjected to different loads. The PoF values estimated by the Kriging/MCS approach after \(10^6\) simulations are very close to the FEM/MCS reference values, which indicates that the Kriging surrogate is sufficiently accurate for the reliability assessment. For both bearings, the convergence curves of the PoF are less smooth than those corresponding to the statistics of the limit-state functions.
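Shooman's estimate, \(\epsilon^{\%} = 200\sqrt{(1-P_f)/(N_t P_f)}\), can be checked against the numbers reported above. The sketch below uses the failure probabilities implied by the rounded FEM/MCS reliabilities (\(P_f \approx 4\times10^{-4}\) for the left bearing and \(1.2\times10^{-3}\) for the right bearing); the helper names are hypothetical. With \(10^6\) simulations it gives about 10% for the left bearing and about 5.8% for the right bearing, and inverting the formula shows that roughly \(10^6\) samples are indeed needed to reach a 10% error for the left bearing.

```python
import math


def shooman_error_pct(pf, n_sim):
    """Percent error of an MCS estimate of pf (Shooman's formula, ~95 % confidence)."""
    return 200.0 * math.sqrt((1.0 - pf) / (n_sim * pf))


def sims_for_error(pf, target_pct):
    """Approximate number of simulations for a target percent error (inverted formula)."""
    return math.ceil((200.0 / target_pct) ** 2 * (1.0 - pf) / pf)


# Failure probabilities implied by R^L = 99.96 % and R^R = 99.88 %
for side, pf in (("left", 4e-4), ("right", 1.2e-3)):
    print(side, f"{shooman_error_pct(pf, 10**6):.1f} %", sims_for_error(pf, 10.0))
```

Because the error scales with \(1/\sqrt{N_t P_f}\), halving the failure probability requires roughly twice as many samples for the same relative accuracy, which is what makes MCS with the full FE model so expensive for highly reliable bearings.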

The results of the FEM/FORM and Kriging/FORM reliability assessments are depicted in Fig. 13, again with the Kriging model trained using 200 points. For the FEM/FORM approach, convergence tolerances \(\frac{\Delta \beta }{\beta }\) and \(\frac{\Delta g({\mathbf {X}})}{1-g({\mathbf {X}})}\) of the order of \(10^{-3}\) were reached after a few iterations, in terms of both LSF and PoF values. For the Kriging/FORM analyses, convergence in terms of PoF and LSF values is obtained after only five iterations, and the FORM algorithm rapidly reaches tolerances \(\frac{\Delta \beta }{\beta }\) and \(\frac{\Delta g({\mathbf {X}})}{1-g({\mathbf {X}})}\) of the order of \(10^{-4}\).

Table 4 summarizes the results of the reliability calculation, indicating the PoF values estimated from the four methods. Values in parentheses indicate the relative errors of the estimates, calculated with respect to the FEM/MCS approach. As observed, simply increasing the number of training points does not consistently improve the capability of the surrogate to estimate the true PoF value.

The data in Table 4 are plotted in Fig. 14 for easier visualization. As a rule, the Kriging/FORM reliability estimates converge to their FEM/FORM counterparts as the number of training points increases. For the left bearing, the PoF values predicted by the Kriging models trained with 50 points or more lie between the FEM/MCS and FEM/FORM estimates, and the Kriging/FORM results closely follow those of the Kriging/MCS approach. For the right bearing, the Kriging/FORM results converge to the FEM/FORM and FEM/MCS estimates as the number of training points increases.

Table 5 presents the MPPs estimated by the Kriging/FORM and FEM/FORM reliability analyses. Overall, the two sets of results are very close to each other, regardless of the number of training points. Also, it is worth noticing that the MPPs for the left bearing are characterized by higher oil temperature values and larger radial clearances at this bearing, and lower oil temperatures and smaller radial clearances at the other bearing. The same pattern is observed for the MPPs corresponding to the right bearing.

As an additional probabilistic characterization of the reliability analyses, Fig. 15 compares the PDFs of the LSFs for the two bearings, obtained from \(10^6\) samples generated with the FEM/MCS and Kriging/MCS approaches, the latter for different DoE sizes. The Kriging/MCS PDFs approximate their FEM/MCS counterparts increasingly well as the DoE size increases. Nevertheless, the cumulative probabilities in the unsafe region (Fig. 16) show that a larger number of training points does not necessarily yield a better match between the FEM/MCS and Kriging/MCS probability distributions. This is mainly because, in problems with high reliability, the limit-state boundary lies in a low-probability zone, where the local rather than the global accuracy of the surrogate determines whether samples are correctly classified into the safe and unsafe regions.

Assessment of the Accuracy and Computational Efficiency of the Kriging/FORM Method

With the intention of assessing the accuracy and computational efficiency of the Kriging/FORM method in comparison with the three alternative approaches, the results presented in the previous subsection are systematically compared.

Table 6 presents the CPU times required for the reliability computations. Values in parentheses give the number of FE model calls in each FEM-based analysis. For the FORM algorithm, the number of performance-function calls is \(1 + n_{i}(2n_{v}+1)\), where \(n_{v}\) is the number of RVs and \(n_{i}\) is the number of iterations.
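One FORM scheme consistent with this call count is the HL-RF iteration [36,37] with central-difference gradients: one initial evaluation, then \(2n_v\) calls for the gradient and one call at the new iterate per iteration. The sketch below is written under that assumption and is not the authors' code. It works directly in standard normal space (any isoprobabilistic transformation, e.g. the Rosenblatt transformation [35], is assumed to happen inside `g`), uses only the \(\Delta\beta/\beta\) criterion for brevity, and `form_hlrf` is a hypothetical name.

```python
import math

import numpy as np


def form_hlrf(g, n_v, tol=1e-3, h=1e-4, max_iter=50):
    """HL-RF search for the MPP in standard normal space.

    g(u) is the limit-state function expressed in standard normal variables
    (any isoprobabilistic transformation is assumed to happen inside g).
    Returns (beta, u_star, pf, n_iter, n_calls).
    """
    n_calls = 0

    def G(u):
        nonlocal n_calls
        n_calls += 1
        return g(u)

    u = np.zeros(n_v)
    g_u = G(u)                          # initial call: the leading "1" in the count
    beta = 0.0
    for it in range(1, max_iter + 1):
        # Central-difference gradient: 2 * n_v calls per iteration
        grad = np.array([(G(u + h * e) - G(u - h * e)) / (2.0 * h) for e in np.eye(n_v)])
        # HL-RF step onto the linearized limit-state surface
        u = ((grad @ u - g_u) / (grad @ grad)) * grad
        g_u = G(u)                      # one call at the new iterate
        beta_new = float(np.linalg.norm(u))
        converged = abs(beta_new - beta) <= tol * beta_new
        beta = beta_new
        if converged:
            break
    pf = 0.5 * math.erfc(beta / math.sqrt(2.0))     # Phi(-beta)
    return beta, u, pf, it, n_calls


# Linear test function with known beta = 3 (hypothetical, not a rotor LSF)
beta, u_star, pf, n_iter, n_calls = form_hlrf(lambda u: 3.0 - (u[0] + u[1]) / math.sqrt(2.0), n_v=2)
```

With the six RVs of this problem and the five iterations reported for Kriging/FORM, the count is \(1 + 5(2\cdot 6 + 1) = 66\) calls; in FEM/FORM each of them is a full FE solution of Eq. 21, whereas with the surrogate each is a cheap Kriging prediction.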

As expected, the conventional FEM/MCS approach demands the highest computational effort. The CPU time required by the Kriging/MCS method grows quadratically with the number of training points, but remains moderate compared with FEM/MCS. The Kriging/FORM approach gives the best performance in terms of computation time.

The relationship between CPU times and relative errors in the estimates of PoF, calculated with respect to the FEM/MCS, is depicted in Fig. 17 for the left and right bearings. The number of Kriging training points is indicated on top of the markers.

It can be observed that Kriging surrogates trained with 50 training points or more provide good results when combined with FORM. On average, there is a decrease of five orders of magnitude in the computational effort, while the relative errors are kept lower than 10% (thus within the theoretical error expected for PoFs estimated from MCS, with a 95% confidence level).

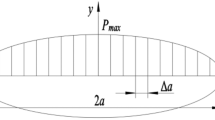

To investigate whether the accuracy of the Kriging metamodels can be improved while keeping the computational cost low, an additional step was included in the surrogate training process: the enrichment of the DoE in the vicinity of the MPP identified with Kriging/FORM. The procedure consists of retraining the original surrogate after adding 10 points sampled by LHS within a hypercube centered on the design point (MPP) in the standard normal space of the random variables. The side length of the hypercube is set to 10% of the reliability index obtained with Kriging/FORM. In this way, the new training points are closely distributed around the limit-state surface in the region of greatest interest, as illustrated in Fig. 18 for two random variables. The enrichment process requires running the FE model only for the 10 additional points incorporated into the DoE, which represents a small increase in computational effort.
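The enrichment step described above can be sketched as an LHS draw inside a small hypercube centered on the MPP in standard normal space, with side equal to 10% of the reliability index. This is a minimal NumPy sketch assuming that geometry; the MPP coordinates in the usage lines are hypothetical and `enrichment_points` is an illustrative name.

```python
import numpy as np


def enrichment_points(u_mpp, beta, n_add=10, frac=0.10, rng=None):
    """LHS points inside a hypercube centred on the MPP (standard normal space).

    The hypercube side equals frac * beta, i.e. 10 % of the Kriging/FORM
    reliability index by default, and n_add points are drawn (10 by default).
    """
    rng = np.random.default_rng() if rng is None else rng
    u_mpp = np.asarray(u_mpp, dtype=float)
    d = u_mpp.size
    side = frac * beta
    strata = np.column_stack([rng.permutation(n_add) for _ in range(d)])
    unit = (strata + rng.random((n_add, d))) / n_add      # LHS in [0, 1)^d
    return u_mpp + (unit - 0.5) * side                    # centred on the MPP


# Hypothetical MPP for six RVs; beta is its distance to the origin
u_mpp = np.array([2.0, 1.0, -0.5, 0.0, 1.5, 0.3])
beta = float(np.linalg.norm(u_mpp))
new_u = enrichment_points(u_mpp, beta, rng=np.random.default_rng(3))
```

The new points would then be mapped back to physical space (e.g., through the inverse of the Rosenblatt transformation [35]), evaluated with the FE model, and appended to the original DoE before retraining the surrogate.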

It should be noted that this enrichment strategy does not fit into the concept of active learning methods (e.g., EGRA, AK-MCS, AK-IS), which rely on the stochastic nature of the Kriging prediction to iteratively enrich the surrogate based on a learning function [23,24,25]. Although well proven, these techniques can demand high computational effort, especially when the PoFs are small. Instead, the procedure proposed here attempts to locally refine the Kriging interpolation and improve its estimates by adding, in a single step, a few points to the DoE in the vicinity of the preliminary MPP. The additional cost of this second stage is considerably smaller than that of successive updates of the surrogate through an iterative process.

Figure 19 shows the effectiveness of the enriched Kriging surrogates combined with FORM, including, for each surrogate, the total time spent on the training/enrichment process and on the reliability analysis itself. As shown, the local enrichment of the surrogates brought the PoF estimates closer to the values predicted by FEM/FORM. With this strategy, even surrogates originally trained with only 25 points were able to predict the probability of failure effectively.

Concluding Remarks

A numerical strategy consisting in the use of Kriging surrogates in combination with the First-Order Reliability Method was evaluated, with focus on the reliability assessment of rotor-bearing systems. Numerical simulations, considering a simple rotor modeled with the finite element method, were performed to appraise the accuracy and computational efficiency of this strategy, in comparison with three alternative procedures.

In general, the Kriging/FORM method showed good convergence, with acceptable errors in the failure probability estimates. Because the Kriging interpolation creates a continuous and smooth response surface, the FORM algorithm converged to the MPP after a few iterations. Also, the time required for the reliability assessment using the Kriging metamodels was remarkably shorter than that demanded by the FEM-based approaches. These results corroborate the effectiveness of the presented numerical approach for the reliability analysis of rotating machinery and reinforce its importance, especially when dealing with implicit, nonlinear and computationally costly performance functions associated with the distinctive dynamic characteristics of these machines.

The results also highlighted some relevant features, in particular the influence of the size of the design of experiments used to train the Kriging surrogates. Increasing the number of training points, and therefore the global accuracy of the surrogate, did not necessarily improve the accuracy of the Kriging-based reliability estimates. This is especially true for problems in which the limit-state boundary lies in a low-probability zone, where the correct classification of samples into the safe and unsafe regions depends more on the local than on the global accuracy of the surrogate. A procedure was devised to improve the accuracy of the reliability estimates by locally enriching the design of experiments used for Kriging training in the vicinity of a preliminary FORM estimate of the most probable failure point. The numerical results also showed the effectiveness of this procedure.

Although an FE model with a small number of degrees of freedom and a particular type of limit-state function were adopted in the present study, the observed trends are expected to hold when complex FE models with much larger numbers of degrees of freedom and other types of LSF applicable to rotating systems are used. Hence, the combined use of FE modeling, Kriging metamodeling and FORM is believed to be applicable to more involved problems involving rotor-bearing systems.

References

Allaire D, Willcox K (2010) Surrogate modeling for uncertainty assessment with application to aviation environmental system models. AIAA J 48(8):1791–1803

Ghanem R, Higdon D, Owhadi H (2017) Handbook of uncertainty quantification, vol 6. Springer

Smith RC (2013) Uncertainty quantification: theory, implementation, and applications, vol 12. SIAM

Marelli S, Sudret B (2014) UQLab: an advanced modular software framework for uncertainty quantification. In: SIAM Conference on Uncertainty Quantification

Lemaire M (2009) Structural reliability. Wiley, Hoboken

Melchers RE, Beck AT (2018) Structural reliability analysis and prediction. Wiley, Hoboken

Metropolis N, Ulam S (1949) The Monte Carlo method. J Am Stat Assoc 44(247):335–341

Haldar A, Mahadevan S (2000) Reliability assessment using stochastic finite element analysis. Wiley, New York

Zhao YG, Ono T (1999) A general procedure for first/second-order reliability method (FORM/SORM). Struct Saf 21:95–112

Sudret B (2012) Meta-models for structural reliability and uncertainty quantification, in: Proceedings of the 5th Asian-Pacific Symposium on Structural Reliability and its Applications, Research Publishing Services, Singapore, pp. 53–76

Moustapha M, Sudret B (2019) Surrogate-assisted reliability-based design optimization: a survey and a unified modular framework. Struct Multidisciplinary Opt 60(5):2157–2176

Forrester AIJ, Sobester A, Keane AJ (2008) Engineering design via surrogate modelling. John Wiley & Sons, West Sussex

Bucher CG, Bourgund U (1990) A fast and efficient response surface approach for structural reliability problems. Struct Saf 7:57–66

Viana FAC, Simpson TW, Balabanov V, Toropov V (2014) Metamodeling in multidisciplinary design optimization: how far have we really come? AIAA J 52(4):670–690

Turner CJ (2005) Hypermodels: hyperdimensional performance models for engineering design. PhD thesis, The University of Texas at Austin, Austin

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4:409–423

Jones DR (2001) A taxonomy of global optimization methods based on response surfaces. J Global Opt 21:345–383

Kleijnen JPC (2009) Kriging metamodeling in simulation: a review. Euro J Oper Res 192:707–716

Kaymaz I (2005) Application of Kriging method to structural reliability problems. Struct Saf 27:133–151

Lophaven SN, Nielsen HB, Sondergaard J (2002) DACE: a MATLAB Kriging toolbox. Tech. rep., Technical University of Denmark, Lyngby

Gaspar B, Teixeira AP, Soares CG (2014) Assessment of the efficiency of Kriging surrogate models for structural reliability analysis. Prob Eng Mech 37:24–34

Shi X, Teixeira AP, Zhang J, Soares CG (2015) Kriging response surface reliability analysis of a ship-stiffened plate with initial imperfections. Struct Infrastruct Eng 11(11):1450–1465

Bichon BJ, Eldred MS, Swiler LP, Mahadevan S, McFarland J (2008) Efficient global reliability analysis for nonlinear implicit performance functions. AIAA J 46(10):2459–2468

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining Kriging and Monte Carlo simulation. Struct Saf 33(2):145–154

Echard B, Gayton N, Lemaire M, Relun N (2013) A combined importance sampling and Kriging reliability method for small failure probabilities with time-demanding numerical models. Reliability Eng Syst Saf 111:232–240

Schöbi R, Sudret B (2014) Combining polynomial chaos expansions and Kriging for solving stochastic mechanics problems. In: Proceedings of the 7th International Conference on Computational Stochastic Mechanics, Greece, pp. 53–76

Castro HF, Santos JMC, Sampaio R (2017) Uncertainty analysis of rotating systems. In: Proceedings of the XVII International Symposium on Dynamic Problems of Mechanics, ABCM

Cavalini AA Jr, Lara-Molina FA, Sales TP, Koroishi EH, Steffen V Jr (2015) Uncertainty analysis of a flexible rotor supported by fluid film bearings. Lat Am J Solids Struct 12(8):1487–1504

Visnadi LB, Castro HF (2019) Influence of bearing clearance and oil temperature uncertainties on the stability threshold of cylindrical journal bearings. Mech Mach Theory 134:57–73

Garoli GY, Castro HF (2019) Analysis of a rotor-bearing nonlinear system model considering fluid-induced instability and uncertainties in bearings. J Sound Vib 448:108–129

Didier J, Sinou J-J, Faverjon B (2012) Study of the non-linear dynamic response of a rotor system with faults and uncertainties. J Sound Vib 331(3):671–703

Yang L, He K, Guo Y (2018) Reliability analysis of a nonlinear rotor/stator contact system in the presence of aleatory and epistemic uncertainty. J Mech Sci Technol 32(9):4089–4101

Dourado AP, Barbosa JS, Sicchieri L, Cavalini AA Jr, Steffen V Jr (2018) Kriging surrogate model dedicated to a tilting-pad journal bearing. In: International Conference on Rotor Dynamics, Springer, pp. 347–358

Sinou J-J, Nechak L, Besset S (2018) Kriging metamodeling in rotordynamics: application for predicting critical speeds and vibrations of a flexible rotor. Complexity 2018:1264619

Rosenblatt M (1952) Remarks on a multivariate transformation. Ann Math Stat 23:470–472

Hasofer AM, Lind NC (1974) Exact and invariant second-moment code format. J Eng Mech 100:111–121

Rackwitz R, Fiessler B (1978) Structural reliability under combined random load sequences. Comput Struct 9(5):484–494

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21(2):239–245

Nelson HD, McVaugh JM (1976) The dynamics of rotor-bearing systems using finite elements. J Eng Ind 98(2):593–600

Lalanne M, Ferraris G (1998) Rotordynamics prediction in engineering. John Wiley & Sons, New York

Friswell MI, Penny JET, Garvey SD, Lees AW (2010) Dynamics of rotating machines. Cambridge University Press, New York

Hamrock BJ (1991) Fundamentals of fluid film lubrication. NASA Reference Publication 1255, NASA, Washington, DC

Ocvirk FW (1952) Short bearing approximation for full journal bearings. NACA Technical Note 2808, Cornell University; NACA, Washington, DC

de Castro HF, Cavalca KL, Nordmann R (2008) Whirl and whip instabilities in rotor-bearing system considering a nonlinear force model. J Sound Vib 317:273–293

Vance JM, Murphy B, Zeidan F (2010) Machinery vibration and rotordynamics. Wiley, Hoboken

Friswell MI, Penny JET, Garvey SD, Lees AW (2010) Dynamics of rotating machines (software for lateral vibrations). http://www.rotordynamics.info/

ISO 21940-12 (2016) Mechanical vibration – Rotor balancing – Procedures and tolerances for rotors with flexible behavior. International Organization for Standardization, Geneva

San Andrés L (2006) Hydrodynamic fluid film bearings and their effect on the stability of rotating machinery. Educational Notes RTO-EN-AVT-143, Paper 10

API 541 (2014) Form-wound Squirrel Cage Induction Motors – 375 kW (500 Horsepower) and Larger. American Petroleum Institute, Washington, DC

Shooman ML (1968) Probabilistic reliability. McGraw-Hill Book Co., New York

Ferguson JH, Yuan JH, Medley JB (1998) Spring-supported thrust bearings for hydroelectric generators: influence of oil viscosity on power loss. Tribol Ser 34:187–194

ASTM D341-17 (2017) Standard Practice for Viscosity-Temperature Charts for Liquid Petroleum Products. American Society for Testing and Materials, West Conshohocken

Acknowledgements

D.A. Rade acknowledges the Brazilian research agencies Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP) (grant #2015/20363-6) and Conselho Nacional de Desenvolvimento Científico e Tecnológico (grant #310633/2013-3) for financially supporting his research.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A. Short Journal-Bearing Model

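The stiffness and damping matrices of the hydrodynamic bearing are given as:

\[\mathbf{K} = \frac{F}{C_{r}}\begin{bmatrix} a_{uu} & a_{uv} \\ a_{vu} & a_{vv} \end{bmatrix}, \qquad \mathbf{C} = \frac{F}{C_{r}\,\Omega}\begin{bmatrix} b_{uu} & b_{uv} \\ b_{vu} & b_{vv} \end{bmatrix}\]

where \(F\) is the bearing static load, \(C_{r}\) is the radial clearance, \(\Omega\) is the rotation speed, and:

\[\begin{aligned}
a_{uu} &= 4h_0\left[\pi^2(2-\varepsilon^2) + 16\varepsilon^2\right], \\
a_{uv} &= h_0\,\frac{\pi\left[\pi^2(1-\varepsilon^2)^2 - 16\varepsilon^4\right]}{\varepsilon\sqrt{1-\varepsilon^2}}, \\
a_{vu} &= -h_0\,\frac{\pi\left[\pi^2(1-\varepsilon^2)(1+2\varepsilon^2) + 32\varepsilon^2(1+\varepsilon^2)\right]}{\varepsilon\sqrt{1-\varepsilon^2}}, \\
a_{vv} &= 4h_0\left[\pi^2(1+2\varepsilon^2) + \frac{32\varepsilon^2(1+\varepsilon^2)}{1-\varepsilon^2}\right], \\
b_{uu} &= h_0\,\frac{2\pi\sqrt{1-\varepsilon^2}\left[\pi^2(1+2\varepsilon^2) - 16\varepsilon^2\right]}{\varepsilon}, \\
b_{uv} &= b_{vu} = -8h_0\left[\pi^2(1+2\varepsilon^2) - 16\varepsilon^2\right], \\
b_{vv} &= h_0\,\frac{2\pi\left[\pi^2(1-\varepsilon^2)^2 + 48\varepsilon^2\right]}{\varepsilon\sqrt{1-\varepsilon^2}}
\end{aligned}\]

with

\[h_0 = \frac{1}{\left[\pi^2(1-\varepsilon^2) + 16\varepsilon^2\right]^{3/2}}\]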
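The short-bearing coefficients can be evaluated in a few lines. The Python sketch below assumes the Ocvirk short-bearing expressions in the form given by Friswell et al. (2010), and it keeps that reference's labels \(a_{uu}, \dots, b_{vv}\). The function and argument names are hypothetical. The coefficients are singular at \(\varepsilon = 0\) and \(\varepsilon = 1\), so the sketch accepts only \(0 < \varepsilon < 1\).

```python
import numpy as np


def short_bearing_matrices(eps, F, Cr, Omega):
    """Stiffness K [N/m] and damping C [N*s/m] of a short (Ocvirk) journal bearing.

    Hypothetical helper. eps: eccentricity ratio (0 < eps < 1),
    F: static load [N], Cr: radial clearance [m], Omega: speed [rad/s].
    """
    if not 0.0 < eps < 1.0:
        raise ValueError("eccentricity ratio must lie strictly between 0 and 1")
    e2 = eps**2
    pi2 = np.pi**2
    s = np.sqrt(1.0 - e2)
    h0 = 1.0 / (pi2 * (1.0 - e2) + 16.0 * e2) ** 1.5

    # Dimensionless stiffness coefficients
    a_uu = 4.0 * h0 * (pi2 * (2.0 - e2) + 16.0 * e2)
    a_uv = h0 * np.pi * (pi2 * (1.0 - e2) ** 2 - 16.0 * e2**2) / (eps * s)
    a_vu = -h0 * np.pi * (pi2 * (1.0 - e2) * (1.0 + 2.0 * e2)
                          + 32.0 * e2 * (1.0 + e2)) / (eps * s)
    a_vv = 4.0 * h0 * (pi2 * (1.0 + 2.0 * e2) + 32.0 * e2 * (1.0 + e2) / (1.0 - e2))

    # Dimensionless damping coefficients (cross terms are equal)
    b_uu = h0 * 2.0 * np.pi * s * (pi2 * (1.0 + 2.0 * e2) - 16.0 * e2) / eps
    b_uv = -8.0 * h0 * (pi2 * (1.0 + 2.0 * e2) - 16.0 * e2)
    b_vv = h0 * 2.0 * np.pi * (pi2 * (1.0 - e2) ** 2 + 48.0 * e2) / (eps * s)

    # Scale to dimensional matrices
    K = (F / Cr) * np.array([[a_uu, a_uv], [a_vu, a_vv]])
    C = (F / (Cr * Omega)) * np.array([[b_uu, b_uv], [b_uv, b_vv]])
    return K, C
```

As a quick check, for small \(\varepsilon\) the cross-coupled stiffness terms approach \(\pm F/(C_{r}\varepsilon)\), and the direct damping terms approach \(2F/(C_{r}\Omega\varepsilon)\).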

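The dimensionless bearing eccentricity ratio \(\varepsilon\), with \(0 \le \varepsilon \le 1\), is obtained from the smallest positive root \(\varepsilon^2\) of the quartic equation:

\[\varepsilon^8 - 4\varepsilon^6 + \left[6 - S_s^2\left(16 - \pi^2\right)\right]\varepsilon^4 - \left(4 + \pi^2 S_s^2\right)\varepsilon^2 + 1 = 0\]

where

\[S_s = \frac{D\,\Omega\,\eta\,L^3}{8\,F\,C_{r}^2}\]

is the modified Sommerfeld number for a bearing with length \(L\), diameter \(D\) and radial clearance \(C_{r}\), operating at a particular speed \(\Omega\) with static load \(F\) and fluid-film dynamic viscosity \(\eta\).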
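Solving for \(\varepsilon\) is a polynomial root-finding step. The minimal Python sketch below assumes SI units with \(\Omega\) in rad/s. It applies `numpy.roots` to the quartic in \(x = \varepsilon^2\) and keeps the smallest positive real root. The function names are hypothetical.

```python
import numpy as np


def modified_sommerfeld(D, L, Cr, Omega, F, eta):
    """S_s = D*Omega*eta*L**3 / (8*F*Cr**2), all inputs in SI units (hypothetical helper)."""
    return D * Omega * eta * L**3 / (8.0 * F * Cr**2)


def eccentricity_ratio(Ss):
    """Eccentricity ratio from the smallest positive root x = eps**2 of the quartic."""
    coeffs = [
        1.0,
        -4.0,
        6.0 - Ss**2 * (16.0 - np.pi**2),
        -(4.0 + np.pi**2 * Ss**2),
        1.0,
    ]
    roots = np.roots(coeffs)
    # Keep the real roots; the smallest positive one lies in (0, 1)
    real = roots[np.abs(roots.imag) < 1e-9].real
    x = np.min(real[real > 0.0])
    return float(np.sqrt(x))
```

The returned \(\varepsilon\) can be checked against the short-bearing load relation \(S_s\,\varepsilon\sqrt{\pi^2(1-\varepsilon^2)+16\varepsilon^2} = (1-\varepsilon^2)^2\). Squaring this relation gives the quartic above.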

The viscosity of the lubricants typically used in hydrodynamic bearings is, in turn, a function of the oil temperature. This temperature effect is modeled with the equations presented in [51], which are also found in ASTM D341-17 [52]. The dynamic viscosity, in Pa\(\cdot\)s, is given by:

where the temperature \(T\) is in \(^\circ\)C and the density \(\rho\) is in kg/m\(^3\). The density, in turn, is given by:

Also, the coefficients \(A\) and \(B\) are expressed as:

where \(\nu\) is the kinematic viscosity in cSt.
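The viscosity-temperature dependence can be sketched with the classical Walther relation that underlies the ASTM D341 charts, \(\log_{10}\log_{10}(\nu + 0.7) = A - B\log_{10}(T + 273.15)\). Here \(A\) and \(B\) are fitted to two reference kinematic viscosities. This Python sketch is an assumption-based illustration, not the exact expressions of [51]. The following are all assumptions:

- the choice of reference points;
- the constant 0.7 without the standard's low-viscosity correction terms;
- a density supplied as a constant input.

The function names are also hypothetical.

```python
import math


def walther_coefficients(nu1, T1, nu2, T2):
    """Fit A, B in log10(log10(nu + 0.7)) = A - B*log10(T + 273.15) to two points.

    nu1, nu2: kinematic viscosities [cSt] at temperatures T1, T2 [degC] (assumed inputs).
    """
    y1 = math.log10(math.log10(nu1 + 0.7))
    y2 = math.log10(math.log10(nu2 + 0.7))
    x1 = math.log10(T1 + 273.15)
    x2 = math.log10(T2 + 273.15)
    B = (y1 - y2) / (x2 - x1)
    A = y1 + B * x1
    return A, B


def kinematic_viscosity(T, A, B):
    """Kinematic viscosity [cSt] at temperature T [degC] from the Walther fit."""
    return 10.0 ** (10.0 ** (A - B * math.log10(T + 273.15))) - 0.7


def dynamic_viscosity(T, A, B, rho):
    """Dynamic viscosity [Pa*s]; 1 cSt = 1e-6 m^2/s, rho in kg/m^3 (assumed constant here)."""
    return kinematic_viscosity(T, A, B) * 1e-6 * rho
```

In the model described above, the density also varies with temperature. A full implementation would therefore replace the constant `rho` with \(\rho(T)\).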

About this article

Cite this article

Barbosa, M.P.F., Rade, D.A. Kriging/FORM Reliability Analysis of Rotor-Bearing Systems. J. Vib. Eng. Technol. 10, 2179–2201 (2022). https://doi.org/10.1007/s42417-022-00511-1

DOI: https://doi.org/10.1007/s42417-022-00511-1