Abstract

Within the complex domain of construction project management, the accurate anticipation of time overruns is a significant obstacle, particularly within the specific context of the construction sector in Jordan. This study aimed to utilize deep learning, specifically the Multi-Layer Perceptron (MLP), and enhance its overrun predictive ability by incorporating the Coral Reefs Optimization Algorithm (CROA). The approach employed in our study involved the utilization of a comprehensive dataset encompassing diverse aspects of building projects, ranging from financial indicators to project durations. The Multilayer Perceptron (MLP) was utilized as the underlying framework, and the model's parameters were refined utilizing the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) with Rank-One Adaptation (CROA) to enhance the predictive capabilities. The results of our study indicate that the MLP-CROA continuously showed superior performance compared to the standalone MLP across many metrics. Notably, the MLP-CROA had particularly excellent values in metrics such as R-squared, which suggests its solid predictive capabilities. In conclusion, this study highlights the significant potential for transformation in the Jordanian construction industry by integrating deep learning and bio-inspired optimization methodologies. This process improves forecast accuracy and facilitates proactive project management, potentially directing projects toward timely and cost-effective completion.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Time overruns in construction projects may have substantial consequences for the project's overall success. These consequences may include escalated costs, prolonged completion timelines, and diminished customer satisfaction (Kamaruddeen et al., 2020). Hence, the precise assessment and anticipation of schedule overruns are crucial for efficient project management. In recent times, scholars have investigated different methodologies and strategies to tackle this predicament, including utilizing optimization algorithms and deep learning methodologies (Gómez-Cabrera et al., 2023).

Arabiat et al. (2023), the issue of time overruns is a persistent and prevalent difficulty within the construction sector worldwide. The integration of Machine Learning (ML) into the construction industry has emerged as a new development, despite ML's widespread uses in numerous fields. The present work aimed to construct a prognostic model to anticipate the occurrence of these cost overruns. The literature analysis identified measurable risk indicators that are particular to building projects. Based on the dataset from completed projects, the K-nearest neighbor (KNN) model demonstrated an accuracy rate of 83.76%. In comparison, the Artificial Neural Network (ANN) model produced a much higher accuracy rate of 99.28%. This performance was supported by three essential data mining techniques, including clustering, feature selection, and prediction.

Sambasivan and Soon (2007) examined the factors contributing to delays in the building sector of Malaysia and their subsequent impacts. The study focused on the specific setting of Malaysia and offered significant insights into the determinants of schedule overruns in building projects. Ghazali and Wong (2014), an examination of risk rating for tunneling construction projects in Malaysia, revealed that a notable proportion of public projects in Saudi Arabia had delays in meeting their scheduled timelines. These studies underscore the need to understand the origins and hazards linked to schedule overruns in building endeavors.

In order to tackle the issue of assessing and forecasting time overruns, scholars have examined the use of sophisticated methodologies, such as deep learning and optimization algorithms. Eltoukhy and Nassar (2021) developed a support vector machine (SVM) model to analyze time and expense overruns in building projects within the context of Egypt. The model exhibited notable precision in forecasting time overruns, highlighting the promising prospects of using machine learning methodologies in this field.

In conjunction with machine learning, optimization methods have been employed to tackle the problem of time overruns effectively. Bin Seddeeq et al. (2019) conducted research that specifically examined the oil and gas construction business in Saudi Arabia. The findings of this study indicated that a notable proportion of construction projects within this industry had delays in their completion. This research offers significant contributions by shedding light on the frequency of time overruns within the sector. In addition, using optimization techniques, such as the Coral Reefs Optimization (CRO) algorithm, has shown potential in mitigating time delays encountered in building projects.

In summary, the assessment and anticipation of time overruns in construction projects is a multifaceted undertaking that requires a thorough comprehension of the underlying factors and accompanying hazards linked to these delays. Scholars have investigated many approaches, such as deep learning and optimization algorithms, to tackle this issue (Sharma & Gupta, 2021; Gómez-Cabrera et al., 2020). According to Gharaibeh et al. (2020), it is feasible to devise efficient solutions for reducing time overruns and enhancing project results using these methodologies and considering the unique circumstances of building projects.

Within the dynamic and ever-changing realm of the construction sector, the issue of time overruns has become a prevailing obstacle, often disrupting project schedules and influencing various parties involved. With an understanding of the predictive capabilities of Machine Learning (ML), this research aims to develop a robust model that can effectively anticipate time overruns. Based on a thorough examination of relevant literature, this study used measurable risk indicators to develop a model. To verify the model, real-world project datasets were employed, resulting in notable accuracies when using both K-nearest neighbor (KNN) and Artificial Neural Network (ANN) approaches. This undertaking not only addresses the current lack of knowledge regarding utilizing machine learning in construction timelines but also introduces a novel approach that combines clustering, feature selection, and prediction techniques. This sets a precedent for future research and implementing proactive project management in practical settings.

Methodology

Data collection

The analyzed dataset contains a comprehensive record of 190 projects carried out from 2008 to 2022. The primary source of this data is the Ministry of Public Works and Housing, with additional information obtained from Daabes Contract EST. Every record in the collection provides information on the projected and realized expenses, marked as PC and AC, respectively, and the expected and actual durations, represented as PD and AD. In addition, the dataset provides measurements of time and cost disparities through the 'Time Overrun (Days)' and 'Cost Overrun (JOD)' measures. The first metric measures the percentage deviation from the predicted timetable, where negative values indicate delays. The second metric quantifies the percentage difference between estimated and realized costs. The dataset provided is extensive and offers valuable insights into the dynamics of project management. It plays a crucial role in facilitating empirical investigations within the discipline.

Within the complex realm of building projects, many factors intertwine to exert an effect on time overruns. Our objective is to carefully choose characteristics that effectively capture the fundamental aspects of these undertakings to comprehend the intricacies of this phenomenon.

As depicted in Fig. 1, the Planned Cost (PC) and Actual Cost (AC) function as financial indicators, providing valuable information regarding the financial aspects of a project. Divergences between these two elements can serve as indicative of unanticipated obstacles, alterations in scope, or inaccuracies in financial projections, all of which have the potential to impact project schedules. The plots reveal considerable fluctuations in these expenses, which frequently align with notable delays, emphasizing their interdependence. Planned Duration (PD) and Actual Duration (AD) serve as temporal markers, delineating the projected and achieved durations of undertakings. The comparison of different periods provides insight into the precision of initial project estimations and the effectiveness of implementation. The significance of these elements is heightened by the narrative structures, in which deviations in timeframes frequently parallel instances of time exceeding expectations, illustrating the dynamic relationship between strategic planning and practical implementation (Shoar et al., 2022).

Finally, the Cost Overrun (JOD) is a metric that measures the percentage difference between projected and actual costs, and it functions as a financial indicator. This characteristic aligns with the proverbial saying that time holds economic value; delays in financial matters, whether caused by obstacles in procurement or payment delays, have the potential to result in the prolongation of project timelines. The graphs effectively illustrate this association, as shown by the prominent occurrence of expense overruns closely aligning with substantial time overruns.

The selected characteristics, as evidenced by their theoretical foundations and visual associations, highlight the complex and diverse nature of time overruns. The authors collectively develop a narrative that connects the financial, temporal, and execution aspects of construction projects, providing a comprehensive perspective to unravel the mystery surrounding delays in project timelines.

Data preprocessing

Within the domain of data-driven research, the maxim "garbage in, garbage out" underscores the pivotal significance of data pretreatment. The dependability and robustness of the subsequent model heavily depend on the vital importance of ensuring the quality and consistency of the input data. The preprocessing phase was initiated by evaluating the dataset's integrity, explicitly focusing on detecting any missing or abnormal values. The dataset utilized in this investigation demonstrated notable completeness, hence obviating the necessity for data imputation or exclusion (Sohrabi & Noorzai, 2022).

After performing an integrity check, the normalization process was undertaken to mitigate the heterogeneity observed in the scales of various variables (Yin et al., 2022). This stage holds significant importance, particularly when utilizing algorithms that are very responsive to the size of features, such as deep learning models. According to Ashtari et al. (2022), the process of scaling each feature to have a mean of zero and a standard deviation of one serves the purpose of preventing any individual feature from exerting disproportionate effect on the model as a result of its scale. Normalization plays a dual role in improving model convergence and feature importance interpretability. After the process of normalization, the dataset was divided into separate sets for training and testing purposes. The division of data into training and evaluation sets is a fundamental aspect of machine learning, since it allows for the assessment of a model's ability to generalize to new, unknown data after being trained. In our study, a proportion of 80% of the data was designated for training purposes, while the remaining 20% was set aside only for testing. The standard partitioning method guarantees a training set of sufficient size for model learning while also maintaining a test set that is suitable for assessment purposes.

In summary, the pretreatment stages were carefully selected to produce a dataset that is both representative and suitable for deep learning, establishing a solid basis for the subsequent modeling phase.

Neural network architecture

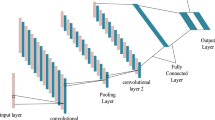

In our endeavor to simulate the complex dynamics that govern time overruns in building projects, we utilized the capabilities and adaptability of neural networks. The architecture selected for our study was a multi-layer perceptron (MLP), a feed-forward artificial neural network. The Multi-Layer Perceptron (MLP) was designed with an input layer that aligns with the number of features in our dataset, many hidden layers, and an output layer that provides the projected time overrun (Natarajan, 2022).

The concealed layers, the central component of our network, consist of a sequence of densely coupled neurons. Every individual neuron undertakes the processing of the input it receives by means of a weighted sum, which is subsequently followed by the application of a non-linear activation function. In our study, the Rectified Linear Unit (ReLU) activation function was selected for the hidden layers of our model. This choice was based on its shown efficacy in introducing non-linearity while maintaining computational efficiency, as highlighted by Gondia et al. (2020). The linear activation function in the output layer was deemed appropriate for our regression-oriented objective. The architectural decisions were justified based on empirical facts and computational factors. Deep architectures, distinguished by several hidden layers, have exhibited exceptional proficiency in capturing intricate correlations among datasets. Nevertheless, the model's depth was adjusted to prevent overfitting and maintain computational feasibility. The adoption of the Rectified Linear Unit (ReLU) activation function was driven by its efficacy in addressing the vanishing gradient problem, a prevalent obstacle encountered in deep neural networks. Consequently, this facilitates more seamless training procedures (Sanni-Anibire et al., 2021).

The neural network architecture employed in our study was carefully crafted to strike a delicate balance between model complexity, interpretability, and computational efficiency. This design choice renders our approach highly suitable for comprehending the intricate aspects of time overruns in building projects in Jordan.

Coral reefs optimization

The Coral Reefs Optimization Algorithm (CROA) is an innovative optimization technique that draws inspiration from biological processes, namely the reproduction and colonization behaviors observed in coral reefs. The basis of this approach is to replicate the processes of coral development, competition, and regeneration, enabling it to navigate intricate optimization terrains (Kaveh, 2014) effectively.

In the present implementation, the corals, which serve as the principal entities in the Coral Reefs Optimization Algorithm (CROA), symbolize prospective resolutions to the optimization problem at hand. The coral reef ecosystem, which serves as the habitat for these corals where they flourish and engage in competitive interactions, has resemblance to the solution space. Corals engage in a range of reproductive mechanisms, including sexual reproduction through methods, such as broadcast spawning and brooding, as well as asexual reproduction. The process of broadcast spawning facilitates the generation of novel possible solutions by amalgamating characteristics from numerous parental corals. The process of brooding is characterized by a very limited scope of exploration, in which progeny are generated with minor alterations from their progenitors (Kaveh & Khavaninzadeh, 2023; Kaveh et al., 2023). In contrast, asexual reproduction emulates the biological phenomenon of budding, resulting in the generation of almost identical corals with minimal variations. The primary focus of the interaction between CROA and our neural network pertained to the optimization of hyper-parameters and weights. Each every coral inside the reef possesses an own arrangement of neural network parameters. The evaluation of the fitness of each coral, which defined its probability of survival and reproductive success, was assessed based on the performance of the relevant neural network configuration on the validation dataset. The objective of the Coral Reproduction and Optimization Algorithm (CROA) was to identify the most effective arrangement that would minimize prediction error. This was achieved by repeated cycles of coral reproduction and competition, as discussed by Kaveh et al. (2008).

The appeal of employing CROA in conjunction with deep learning stems from its capacity to effectively navigate the extensive and complex parameter space of neural networks (Kaveh et al., 2021, 2023; Rofooei et al., 2011). In contrast to conventional optimization methods, which may become trapped in local optima, the bio-inspired mechanisms employed by CROA (Cuckoo Search Algorithm) offer a well-balanced strategy that encompasses both exploration and exploitation. This approach guarantees a more comprehensive search process. The combination of the Construction Risk Ontology Analysis (CROA) and our neural network architecture provided a strong and flexible approach to effectively identify the optimal model for forecasting time overruns in construction projects.

Evaluation metrics

In the field of predictive modeling, the careful selection of suitable assessment metrics is of utmost importance in order to thoroughly evaluate the performance of a model. In order to address the issue of forecasting delays in construction projects, a set of metrics was utilized, with each statistic providing a distinct viewpoint on the accuracy of predictions and the distribution of errors.

The Mean Absolute Error (MAE) is a metric that quantifies the average magnitude of prediction mistakes, irrespective of their direction (Moon et al., 2020). The term is delineated as:

where \(y_i\) represents the actual value, \(\hat{y}_i\) is the predicted value, and \(n\) is the total number of observations.

In contrast, the Mean Squared Error (MSE) places greater emphasis on larger errors by taking their squared values (Akinosho et al., 2020). The concept is articulated as:

The Root Mean Squared Error (RMSE) is a metric that enhances the interpretability of the Mean Squared Error (MSE) by calculating its square root. This transformation allows for the representation of error magnitude in the original unit of measurement (Hu et al., 2021):

The coefficient of determination, commonly referred to as R-squared (R2), quantifies the fraction of variability in the dependent variable that can be accounted for by the independent variables (Uddin et al., 2022). The value of the variable varies inclusively from 0 to 1, and is determined by the following equation:

where \({\text{SS}}_{{\text{res}}}\) is the sum of squares of residuals and \({\text{SS}}_{{\text{tot}}}\) is the total sum of squares.

The Mean Absolute Percentage Error (MAPE) is a metric that offers a relative assessment of error expressed in percentage terms (Moghayedi & Windapo, 2020).

The Mean Bias Deviation (MBD) metric, as described by Maltbie et al. (2021), quantifies the average bias present in the forecasts.

The Relative Absolute Error (RAE) is defined as the quotient of the Mean Absolute Error (MAE) for the specific model under consideration and the MAE of a baseline naive model, as described by Gondia et al. (2020):

The Relative Absolute Error (RAE) is defined as the quotient of the Mean Absolute Error (MAE) for the specific model under consideration and the MAE of a baseline naive model, as described by Gondia et al. (2020):

Each of these indicators provides a nuanced perspective for evaluating the predictions made by the model, so assuring a comprehensive and diverse assessment of its performance.

Results and discussion

Visual diagnostics and algorithm behavior analysis

As depicted in Fig. 2, the Convergence Plot showcases the convergence of the data. The Convergence Plot offers a visual depiction of the iterative advancement of an optimization method, highlighting the evolution of the best fitness or objective value. The evaluation of an algorithm's effectiveness and convergence speed is a crucial diagnostic technique. A steeper slope in this evaluation often signifies a more rapid convergence toward an ideal or sub-optimal solution.

The Diversity Plot is a graphical representation that illustrates the level of variety or heterogeneity observed in the population of solutions during multiple iterations. A high level of diversity within a system suggests an extensive investigation of the search space, whereas a decrease in diversity may imply premature convergence. As seen in Fig. 3. The practice of monitoring variety can contribute to the comprehension of how the algorithm achieves a trade-off between exploration, which involves exploring new regions, and exploitation, which involves refining known good solutions.

The Fitness Distribution Plot, commonly depicted as a histogram, provides a visual representation of the distribution of fitness values within a population at a specific iteration. The presented representation facilitates the identification of the spectrum and concentration of solution attributes, whereby a more even distribution implies a wider exploration, while a skewed distribution indicates a concentration on particular solution attributes. As depicted in Fig. 4.

As depicted in Fig. 5, the exploration of solution space is demonstrated. The plot of Solution Space Exploration is a scatter diagram that visually represents individual solutions within the goal space. This plot is particularly advantageous in the context of multi-objective optimization problems as it offers valuable insights regarding the dispersion and allocation of solutions. It effectively highlights regions of concentrated exploration as well as prospective areas of interest or disregard.

The shown plot illustrates the comparison between the average and worst fitness values throughout a series of iterations. Through the examination of these metrics, scholars are able to acquire valuable knowledge regarding the general well-being of the population as well as the prevalence of inadequate or below-average solutions. Consequently, this allows an evaluation of the algorithm's resilience and reliability—as seen in Fig. 6.

The Parameter Sensitivity Plot plays a crucial role in the process of algorithm tuning and parameter analysis. As seen in Fig. 7. By graphically representing the relationship between the performance outcome and different parameter values, this visualization provides a clear understanding of how changes in parameters affect the effectiveness of the algorithm. It assists in determining the most suitable parameter settings that yield ideal or nearly optimal results, and also reveals the algorithm's ability to withstand fluctuations in parameters.

Model performance

The practicality and deployment potential of predictive models heavily rely on their performance. In our pursuit of forecasting delays in building projects in Jordan, the Multi-Layer Perceptron (MLP) has emerged as a prominent candidate, showcasing impressive predictive capabilities across various evaluation metrics.

As depicted in Fig. 8, the Mean Absolute Error (MAE) for the Multilayer Perceptron (MLP) was recorded as 0.1215, indicating an average magnitude of prediction error of approximately 12.15%. The Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) are often used metrics in the field of statistics to assess the accuracy of a model. In this particular case, the MSE and RMSE were calculated and yielded values of 0.0395 and 0.1986, respectively. These metrics are particularly useful as they give more weight to higher errors, providing a comprehensive evaluation of the model's performance. The combination of these measures indicates that although the model's predictions are often precise, there may be sporadic instances where the predictions stray significantly from the actual values. The R-Squared (R2) metric, which is widely used in regression research, yielded a noteworthy value of 0.9540 for the Multilayer Perceptron (MLP) model, providing valuable insights. This finding indicates that a significant proportion, specifically 95.40%, of the variability observed in the dependent variable (time overruns) can be accounted for by the independent factors included in our dataset, thereby highlighting the robust predictive capability of the model. Upon further examination of relative metrics, it was observed that the Relative Absolute Error (RAE) and Relative Squared Error (RSE) yielded values of 0.6104 and 0.6857, respectively. By comparing the performance of the MLP with that of a naive model, these metrics provide a comparative perspective, indicating that the average errors of our model are approximately 61.04% and 68.57% of the mistakes observed in a simplistic model.

The provided bar chart effectively represents the performance measures of the MLP, offering a visual overview that emphasizes the effectiveness of the model. Each statistic, which is visually depicted as separate bars, provides a concise overview of the model's strengths and areas that could benefit from further improvement.

Within the domain of predictive analytics, the effectiveness, resilience, and applicability of a model are reflected in its performance. This work employed the Coral Reefs Optimization Algorithm (CROA) in conjunction with the Multi-Layer Perceptron (MLP) to offer a novel methodology for forecasting time overruns in building projects. The findings, as explicated by the performance indicators, are both encouraging and enlightening.

The Mean Absolute Error (MAE) of the MLP-CROA exhibited a value of 0.0707, suggesting that the model's average deviation from the true values is around 7.07%. In addition, the Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) yielded values of 0.0115 and 0.1072 correspondingly. The metrics, specifically the root mean square error (RMSE), offer valuable information on the size and dispersion of the prediction inaccuracies, indicating that the model's forecasts are generally close to the actual values. The R-Squared (R2) metric, which is widely regarded as highly informative in regression tasks, exhibited a remarkable value of 0.9914 for the MLP-CROA. This figure indicates that a remarkable 99.14% of the variability in time overruns can be explained by the predictors included in our model, which serves as evidence of the model's strong predictive ability.

Upon delving into the relative metrics, it was observed that the Relative Absolute Error (RAE) and Relative Squared Error (RSE) exhibited values of 0.3549 and 0.1997, correspondingly. The utilization of these metrics, which provide a comparative perspective in contrast to a simplistic benchmark, highlights the superior performance of the MLP-CROA model in terms of its predictive accuracy and precision. Figure 9 depicts the bar chart that accompanies this analysis, serving as visual evidence to support the aforementioned findings. This figure succinctly presents a comprehensive summary of the model's performance. Each statistic, represented by separate bars, highlights a specific aspect of the model's capacity to make predictions, collectively providing an overview of a model that is both strong and skilled.

In summary, based on the evaluation of metrics and visual representations, the results indicate that the combination of deep learning and bio-inspired optimization, as demonstrated by the MLP-CROA model, exhibits considerable potential in the field of construction project forecasting. The findings derived from this analysis provide a foundation for additional enhancements and future implementation in practical situations.

Comparison between the two models

The field of predictive modeling relies heavily on the practice of comparing different models, as this allows for valuable insights about their respective strengths, areas for potential improvement, and suitability for deployment. Within this particular setting, a notable comparison arises between the independent Multi-Layer Perceptron (MLP) and its enhanced variant, the MLP augmented with the Coral Reefs Optimization Algorithm (MLP-CROA).

The provided bar chart depicts a comparative assessment of the two models, showcasing their performance across many parameters in a side-by-side manner. Upon initial examination, it is evident that the MLP-CROA routinely demonstrates superior performance compared to the standalone MLP. This finding is further supported by a comprehensive analysis of specific measures. The Mean Absolute Error (MAE) of the MLP-CROA model demonstrates a significant decrease, indicating that the improved model provides predictions that are, on average, in closer proximity to the true values. In a similar vein, the metrics, such as Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and the relative metrics Relative Absolute Error (RAE) and Relative Squared Error (RSE) serve to demonstrate the MLP-CROA's exceptional predictive capabilities. As seen in Fig. 10. The R-Squared (R2) metric emerges as a particularly informative measure in this comparative analysis. Although both models exhibit high R2 values, showing a robust predictive capacity, the MLP-CROA model's R2 value of 0.9914 implies that it can account for an impressive 99.14% of the variability in time overruns, surpassing the standalone MLP model. The present comparative research serves to emphasize the strengths of the MLP-CROA approach, while also shedding light on the potential for significant transformation that arises from the integration of bio-inspired optimization techniques with deep learning models. By optimizing the model parameters, the Coral Reefs Optimization Algorithm significantly improves the performance of the Multilayer Perceptron (MLP), resulting in more precise and resilient predictions.

Nevertheless, it is imperative to recognize that although the MLP-CROA exhibits enhanced performance, it also involves a more intricate training procedure owing to the inclusion of an additional optimization phase. The intricate nature of this intricacy could potentially result in extended training durations and heightened computational requirements. It is crucial for both academics and practitioners to be aware of these factors when implementing these models.

The potential for predictive modeling in construction projects is attractive due to the synergistic relationship between deep learning and bio-inspired optimization, exemplified by the MLP-CROA. The undeniable improvement in model performance necessitates careful consideration of the trade-off between accuracy enhancements and computational expenses, hence ensuring the model's practicality for real-world implementations.

Discussion

The evaluation of our models, specifically the MLP-CROA, offers significant insight into forecasting time delays in building endeavors. By thoroughly examining the obtained outcomes and comparing them with the findings from the literature review, a comprehensive comprehension of the research field and the potential ramifications of our study may be achieved.

The results of our investigation indicate that the MLP-CROA model demonstrated a higher level of performance in accurately forecasting instances of time overruns. The improved performance can be ascribed to the collaborative effect of the deep learning model's intrinsic capabilities and the optimization proficiency of the Coral Reefs Optimization Algorithm. The impressive performance of our approach necessitates a comprehensive study of the underlying factors contributing to its efficacy. The optimization phase is a crucial step in refining the model parameters. It plays a significant role in navigating the solution space to locate either optimal or close to ideal solutions. This process ultimately leads to an improvement in the accuracy of predictions. The results obtained from our study align with the existing body of literature about time overruns in building projects. Sambasivan and Soon (2007) highlighted the noteworthy role of contractor-related and labor-related factors in contributing to schedule overruns. The predictive capabilities of our model make it a valuable tool for proactively addressing the reasons for time overruns, potentially reducing the negative consequences identified in their research. Mishra et al. (2018) highlighted the significance of time overruns in project performance. This viewpoint is supported by Siyabi and Khaleel (2021), who established a direct correlation between time overruns and project costs and quality. Utilizing our model, which offers precise forecasts, holds significant importance in facilitating proactive project management, thereby ensuring the adherence of projects to predetermined schedules and budgets.

In their study, Ghazali and Wong (2014) emphasized the widespread occurrence of time overruns in building projects, specifically focusing on the setting of Saudi Arabia. The significance of prediction tools, as exemplified by the ones produced in our work, is underscored by the widespread nature of these difficulties. The research conducted in our study utilizes sophisticated procedures that are in line with the prevailing academic trajectory. This is exemplified by Eltoukhy and Nassar's (2021) investigation, where they applied Support Vector Machines to forecast time overruns. Their findings reinforce the considerable promise of machine learning techniques within this field. Our study adds to the expanding corpus of literature that underscores the importance of resolving schedule overruns in building projects. Numerous factors, including contractor performance and labor shortages, have been recognized as influential in this widespread issue. However, incorporating machine learning and optimization techniques, exemplified by our MLP-CROA model, presents a promising pathway for applying predictive analytics in this domain. Our study emphasizes the transformative potential of advanced predictive models in achieving timely and cost-effective project completion, drawing upon the foundational works of researchers, such as Sambasivan and Soon (2007), Mishra et al. (2018), Siyabi and Khaleel (2021), Ghazali and Wong (2014), and Eltoukhy and Nassar (2021).

Conclusion

The construction sector, within the project management domain, finds itself at a pivotal juncture where innovation intersects with tradition. The main aim of this study was to utilize deep learning, in conjunction with the Coral Reefs Optimization Algorithm (CROA), to forecast delays in time completion for construction projects in Jordan. This study used the Multi-Layer Perceptron (MLP) as the foundational deep learning model to analyze a dataset including diverse construction projects, their respective timeframes, and financial parameters. The results of our study were both enlightening and encouraging. The independent Multilayer Perceptron (MLP) had notable prediction capabilities, further improved by incorporating the Cross-Residual Optimization Algorithm (CROA). The MLP-CROA model, after optimization, consistently demonstrated superior performance compared to its non-optimized counterpart across various evaluation measures, ranging from Mean Absolute Error to R-squared. The performance above not only highlights the precision of the model but also its viability for implementation in practical situations.

These findings have significant consequences for the construction industry in Jordan. The issue of time overruns, which frequently lead to cost overextensions, has consistently posed a significant difficulty. The research provides a comprehensive set of tools, including a predictive model, enabling the proactive identification of probable time overruns. This allows project managers to implement corrective measures promptly. Furthermore, the incorporation of bio-inspired optimization methods, shown by the demonstrated effectiveness of the CROA, implies the existence of a broader scope for further investigation. Methods such as CROA can play a crucial role in enhancing forecasting accuracy and optimizing multiple aspects of project management. Upon reflection, it is crucial to recognize the intricacies that arise from the optimization process, notwithstanding the hopeful outcomes. The intricate nature of these phenomena might result in significant computational requirements, a factor that stakeholders need to be aware of. Nevertheless, based on our research findings, the trade-off favors improved accuracy and predictive capacity. In Jordan's construction industry, integrating conventional methodologies with emerging technologies, such as deep learning and optimization algorithms, can serve as a guiding beacon, facilitating the timely and cost-efficient execution of projects.

References

Akinosho, T. D., Oyedele, L. O., Bilal, M., Ajayi, A. O., Delgado, M. D., Akinade, O. O., & Ahmed, A. A. (2020). Deep learning in the construction industry: A review of present status and future innovations. Journal of Building Engineering, 32, 101827.

Ali, K., Amir, E., & Mahdi, M. (2023). Buckling resistance prediction of high-strength steel columns using metaheuristic-trained artificial neural networks. Structures, 56(C), 104853.

Arabiat, A., Al-Bdour, H., & Bisharah, M. (2023). Predicting the construction projects time and cost overruns using K-nearest neighbor and Artificial Neural Network: A case study from Jordan. Asian Journal of Civil Engineering, 24(7), 2405–2414. https://doi.org/10.1007/s42107-023-00649-7

Ashtari, M. A., Ansari, R., Hassannayebi, E., & Jeong, J. (2022). Cost Overrun Risk assessment and prediction in construction projects: A Bayesian network classifier approach. Buildings, 12(10), 1660.

Bin Seddeeq, A., Assaf, S., Abdallah, A., & Hassanain, M. A. (2019). Time and cost overrun in the Saudi Arabian oil and gas construction industry. Buildings, 9(2), 41.

Eltoukhy, M., & Nassar, A. (2021). Using support vector machine (SVM) for time and cost overrun analysis in construction projects in Egypt. International Journal of Civil Engineering and Technology (IJCIET). https://doi.org/10.34218/ijciet.12.3.2021.002

Gharaibeh, L. G., Matarneh, S. T., Arafeh, M., & Sweis, G. (2020). Factors leading to design changes in Jordanian Construction Projects. International Journal of Productivity and Performance Management, 70(4), 893–915. https://doi.org/10.1108/ijppm-08-2019-0412

Ghazali, F., & Wong, H. (2014). Risk ranking for tunnelling construction projects in malaysia. Journal of Construction Engineering and Project Management, 4(1), 29–36. https://doi.org/10.6106/jcepm.2014.4.1.029

Gómez-Cabrera, A., Gutierrez-Bucheli, L., & Muñoz, S. (2023). Causes of time and cost overruns in construction projects: A scoping review. International Journal of Construction Management. https://doi.org/10.1080/15623599.2023.2252288

Gómez-Cabrera, A., Salazar, L. A., Ponz-Tienda, J. L., & Alarcón, L. F. (2020). Lean tools proposal to mitigate delays and cost overruns in construction projects. In Proceedings of the 28th annual conference of the international group for lean construction (IGLC). https://doi.org/10.24928/2020/0049.

Gondia, A., Siam, A., El-Dakhakhni, W., & Nassar, A. H. (2020). Machine learning algorithms for construction projects delay risk prediction. Journal of Construction Engineering and Management, 146(1), 04019085.

Hu, Z., Karami, H., Rezaei, A., Dadras Ajirlou, Y., Piran, M. J., Band, S. S., & Mosavi, A. (2021). Using soft computing and machine learning algorithms to predict the discharge coefficient of curved labyrinth overflows. Engineering Applications of Computational Fluid Mechanics, 15(1), 1002–1015.

Kamaruddeen, A. M., Sung, C. F., & Wahi, W. (2020). A study on factors causing cost overrun of construction projects in Sarawak, Malaysia. Civil Engineering and Architecture, 8(3), 191–199. https://doi.org/10.13189/cea.2020.080301

Kaveh, A. (2014). Advances in metaheuristic algorithms for optimal design of structures (pp. 9–40). Springer.

Kaveh, A., Dadras, E. A., Javadi, S. M., & Geran, M. N. (2021). Machine learning regression approaches for predicting the ultimate buckling load of variable-stiffness composite cylinders. Acta Mechanica, 232, 921–931.

Kaveh, A., Eskandari, A., & Movasat, M. (2023). Buckling resistance prediction of high-strength steel columns using metaheuristic-trained artificial neural networks. Structures, 56(C), 104853.

Kaveh, A., Gholipour, Y., & Rahami, H. (2008). Optimal design of transmission towers using genetic algorithm and neural networks. International Journal of Space Structures, 23(1), 1–19.

Kaveh, A., & Khavaninzadeh, N. (2023, June). Efficient training of two ANNs using four meta-heuristic algorithms for predicting the FRP strength. In Structures (Vol. 52, pp. 256–272). Elsevier.

Maltbie, N., Niu, N., Van Doren, M., & Johnson, R. (2021, August). XAI tools in the public sector: A case study on predicting combined sewer overflows. In Proceedings of the 29th ACM joint meeting on European software engineering conference and symposium on the foundations of software engineering (pp. 1032–1044).

Mishra, A., Bhandari, S., & Jha, T. (2018). Factors affecting performance and time extension of ongoing construction projects under town development fund, Nepal. Journal of Advanced Research in Construction & Urban Architecture, 03(04), 7–25. https://doi.org/10.24321/2456.9925.201805

Moghayedi, A., & Windapo, A. (2020, December). Predicting the impact size of uncertainty events on construction cost and time of highway projects using ANFIS technique. In Collaboration and integration in construction, engineering, management and technology: Proceedings of the 11th international conference on construction in the 21st century, London 2019 (pp. 203–209). Springer.

Moon, H., Williams, T. P., Lee, H. S., & Park, M. (2020). Predicting project cost overrun levels in bidding stage using ensemble learning. Journal of Asian Architecture and Building Engineering, 19(6), 586–599.

Natarajan, A. (2022). Reference class forecasting and machine learning for improved offshore oil and gas megaproject planning: Methods and application. Project Management Journal, 53(5), 456–484.

Rofooei, F. R., Kaveh, A., & Masteri, F. F. (2011). Estimating the vulnerability of concrete moment resisting frame structures using artificial neural networks. International Journal of Operational Research, 1(3), 433–448.

Sambasivan, M., & Soon, Y. (2007). Causes and effects of delays in Malaysian construction industry. International Journal of Project Management, 25(5), 517–526. https://doi.org/10.1016/j.ijproman.2006.11.007

Sanni-Anibire, M. O., Zin, R. M., & Olatunji, S. O. (2021). Developing a machine learning model to predict the construction duration of tall building projects. Journal of Construction Engineering, 4(1), 022–036.

Sharma, S., & Gupta, A. K. (2021). Analysis of factors affecting cost and time overruns in construction projects. Lecture Notes in Civil Engineering. https://doi.org/10.1007/978-981-33-6969-6_6

Shoar, S., Chileshe, N., & Edwards, J. D. (2022). Machine learning-aided engineering services’ cost overruns prediction in high-rise residential building projects: Application of random forest regression. Journal of Building Engineering, 50, 104102.

Siyabi, H., & Khaleel, O. (2021). Cost overrun in construction projects in Oman: Case study. Journal of Student Research. https://doi.org/10.47611/jsr.v10i3.1382

Sohrabi, H., & Noorzai, E. (2022). Risk-supported case-based reasoning approach for cost overrun estimation of water-related projects using machine learning. Engineering, Construction and Architectural Management., 2022, 1.

Uddin, S., Ong, S., & Lu, H. (2022). Machine learning in project analytics: A data-driven framework and case study. Scientific Reports, 12(1), 15252.

Yin, Z., Zahedi, L., Leon, A. S., Amini, M. H., & Bian, L. (2022). A machine learning framework for overflow prediction in combined sewer systems. In World environmental and water resources congress 2022 (pp. 194–205).

Funding

The authors received no funding from any entity for the work they submitted.

Author information

Authors and Affiliations

Contributions

all authors wrote, analyze , prepared figures and reviewed the manuscript .

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shihadeh, J., Al-Shaibie, G., Bisharah, M. et al. Evaluation and prediction of time overruns in Jordanian construction projects using coral reefs optimization and deep learning methods. Asian J Civ Eng 25, 2665–2677 (2024). https://doi.org/10.1007/s42107-023-00936-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42107-023-00936-3