Abstract

The nested error regression (NER) model is a standard tool to analyze unit-level data in the field of small area estimation. Both random effects and error terms are assumed to be normally distributed in the standard NER model. However, in the case that asymmetry of distribution is observed in a given data, it is not appropriate to assume the normality. In this paper, we suggest the NER model with the error terms having skew-normal distributions. The Bayes estimator and the posterior variance are derived as simple forms. We also construct the estimators of the model-parameters based on the moment method. The resulting empirical Bayes (EB) estimator is assessed in terms of the conditional mean squared error, which can be estimated with second-order unbiasedness by parametric bootstrap methods. Through simulation and empirical studies, we compare the skew-normal model with the usual NER model and illustrate that the proposed model gives much more stable EB estimator when skewness is present.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Linear mixed models and their model-based estimators have been recognized as a useful method in small area estimation (SAE). Direct design-based estimates of small area means have large standard errors because small sizes of samples from small areas, and the empirical best linear unbiased predictors (EBLUP) in the linear mixed models provide reliable estimates by “borrowing strength” from neighboring areas and using data of auxiliary variables. Such a model-based method for small area estimation has been studied extensively and actively from both theoretical and applied aspects. For comprehensive reviews of small area estimation, see Ghosh and Rao (1994), Pfeffermann (2013) and Rao and Molina (2015).

Among linear mixed models, Battese et al. (1988) used the nested error regression (NER) model for analyzing unit-level data in the context of SAE. This model consists of the two random variables, namely the random area effects depending on areas and the sampling error terms. Both the random variables are assumed to be normally distributed in standard NER models. While the normality assumption enables us to handle the NER models analytically, there is a growing demand for generalization of distributional assumptions to model a wider variety of characteristics of data. Asymmetry, or skewness, of distributions is one such example. When we analyze positive-valued data like income and price, we transform the data using the logarithm or the Box–Cox transformation to fit to the normality. However, the transformations may not always remove adequately the asymmetry of the data. In such situations, it is preferable to introduce an asymmetric distribution.

As a tool for relaxing the symmetry assumption, the skew-normal distribution suggested by Azzalini (1985) has been studied in the literature. In the linear mixed models, Arellano-Valle et al. (2005) considered the maximum likelihood (ML) estimation in the case where the random effect and/or the error term follow skew-normal distributions. The same model was treated by Arellano-Valle et al. (2007) from a Bayesian perspective. In the context of SAE, Ferraz and Moura (2012) dealt with the Fay–Herriot type area-level model with random effects and sampling errors having normal and skew-normal distributions, respectively, and constructed the hierarchical Bayes estimators. Diallo and Rao (2018) considered the NER model with both random effects and sampling errors having skew-normal distributions, and treated the estimation of complex parameters, such as poverty indicators, based on some optimization techniques. However, the asymptotic properties of the estimators were not investigated analytically.

In this paper, we consider the NER model with only the sampling errors having skew-normal distributions. This is different from the model treated by Diallo and Rao (2018), because the random effects in our model are normally distributed. A reason of this setup is that in our investigation, the estimation of the variance and skewness of the random effects may become unstable unless the number of areas is very large. These distributional assumptions also do not seem inappropriate in our empirical study given in Sect. 6.

In our setup of the NER model with the skew-normal errors, we derive the estimator of the model-parameters based on the moment method. The empirical Bayes (EB) estimators of parameters related to area means are analytically derived, and second-order unbiased estimators of their conditional mean squared errors (CMSE) are provided based on the parametric bootstrap. The performances of the suggested methods are examined through simulation and empirical studies.

The article is organized as follows. In Sect. 2, we provide a brief review on a skew-normal distribution and the setup of our model. Bayesian calculations of the posterior mean and variance are analytically described in Sect. 3. In Sect. 4, the estimation methods for the model-parameters estimation are suggested, and the second-order unbiased estimator of CMSE of the empirical Bayes estimator is provided. The performances of the proposed methods are investigated by simulation and empirical studies in Sects. 5 and 6 respectively. Concluding remarks are given in Sect. 7. Finally, all the technical proofs are provided in the Appendix.

2 Nested error regression models with skew-normal errors

2.1 Skew-normal distributions

We begin by explaining the skew-normal distribution which relaxes symmetry of the normal distribution. It has been widely studied since Azzalini (1985), mainly owing to the mathematical tractability. A random variable Z is said to follow a standard skew-normal distribution, denoted by \({{\mathcal {S}}}{{\mathcal {N}}}({\lambda })\), if the density function is given by

where \(\phi (\cdot )\) and \(\Phi (\cdot )\) are the probability density function (pdf) and cumulative distribution function (cdf) of the standard normal distribution \(\mathcal{N}(0,1)\), respectively. Here \({\lambda }\in {{\mathbb {R}}}\) is a parameter which regulates the skewness of the distribution. When \({\lambda }=0\), \(f_Z(z)\) reduces to \(\phi (z)\), so that \({{\mathcal {S}}}{{\mathcal {N}}}({\lambda })\) includes the standard normal distribution as a special case. Figure 1 shows the density function of \({{\mathcal {S}}}{{\mathcal {N}}}({\lambda })\) for several \({\lambda }\).

For practical use, consider the location-scale transformation of Z, \(Y=\mu +{\sigma }Z\), for \(\mu \in {{\mathbb {R}}}\) and \({\sigma }>0\). Then, Y has a skew-normal distribution \({{\mathcal {S}}}{{\mathcal {N}}}(\mu ,{\sigma }^2,{\lambda })\), whose density function is

Following Azzalini (2013), the mean, variance and the third central moment of Y are, respectively, written as

which gives the skewness

where \({\delta }={\lambda }/\sqrt{1+{\lambda }^2}\) or \({\lambda }={\delta }/\sqrt{1-{\delta }^2}\). The feasible range of \({\gamma }_1\) is \((-{\gamma }_1^{\max },{\gamma }_1^{\max })\) for \({\gamma }_1^{\max } = \lim _{{\lambda }\rightarrow {\infty }}{\gamma }_1 = \lim _{{\delta }\rightarrow 1}{\gamma }_1=\sqrt{2}(4-\pi )/(\pi -2)^{3/2}\approx 0.9953\).

To handle the skew-normal distribution analytically, the following additive representation of \(Z\sim {{\mathcal {S}}}{{\mathcal {N}}}({\lambda })\) is useful:

where \(U_0\) and \(U_1\) are mutually independent random variables with \(U_0\sim \mathcal{N}(0,1)\) and \(U_1\sim \mathcal {TRN}(0,1,0)\). Here \(\mathcal {TRN}_p({\varvec{\mu }},{\varvec{{\Sigma }}},{{\varvec{d}}})\) denotes a p-variate truncated normal distribution with untruncated mean vector \({\varvec{\mu }}\), untruncated covariance matrix \({\varvec{{\Sigma }}}\) and the ith variable truncated below the ith element of \({{\varvec{d}}}\). Omitting p implies a univariate case. For the derivation of the additive representation, see Henze (1986) and Azzalini (1986). This representation is used in the subsequent sections.

It should be remarked that there are some issues in estimation and inference of the skew-normal distribution. First, as described above, the skewness is limited in the admissible range \((-{\gamma }_1^{\max },{\gamma }_1^{\max })\), so that skew-normal distributions cannot treat highly skewed situations. Pewsey (2000) investigated the performances of the estimators of \({\gamma }_1\) based on the moment method (MM) and the ML methods through a simulation study, and shows that as \({\lambda }\) gets larger, more often the MM estimates of \({\gamma }_1\) fall out of the admissible range of \({\gamma }_1\) and the ML estimates reach the boundary values. As the sample size increases, however, the MM and ML estimates take values inside the admissible range more frequently. Another issue, as pointed out by Azzalini (1985), is that the Fisher information matrix for \(Y\sim {{\mathcal {S}}}{{\mathcal {N}}}(\mu , {\sigma }^2, {\lambda })\) becomes singular as \({\lambda }\rightarrow 0\). This means that the standard asymptotic theory cannot be applicable around \({\lambda }=0\). For the details, see Azzalini (1985, 2013), Azzalini and Capitanio (1999) and Pewsey (2000). Thus, in this paper, we treat the case of \({\lambda }\not = 0\).

2.2 Model setup and notations

In this paper, we consider m small areas, and for the ith area we have \(n_i\) observations of \((y_{ij}, {{\varvec{x}}}_{ij}^\top )^\top ,\,j=1,\ldots ,n_i\), where \({{\varvec{x}}}_{ij} = (z_{00},{{\varvec{z}}}_{i0}^\top ,{{\varvec{z}}}_{ij}^\top )^\top \) is a vector of covariates which consists of the following three parts: \(z_{00}=1\) is a constant term which is common in any i and j, \({{\varvec{z}}}_{i0}\) is a \(p_1\)-dimensional vector of covariates which do not depend on j, and \({{\varvec{z}}}_{ij}\) is a \(p_2\)-dimensional vector of covariates which depend on i and j. Let \(N = \sum _{i=1}^mn_i\) denote the total sample size. Then, we consider the following NER model with skew-normal errors:

for \(j = 1,\ldots , n_i\) and \(i = 1,\ldots , m\), where \({\varvec{\beta }}= \big ({\beta }_0, {\varvec{\beta }}_1^\top , {\varvec{\beta }}_2^\top \big )^\top \) is a \((1 + p_1 + p_2)\)-dimensional unknown vector of regression coefficients. Assume that \(v_i\)’s and \({\varepsilon }_{ij}\)’s are mutually independent random variables with \(v_i \sim \mathcal{N}(0, \tau ^2),\, {\varepsilon }_{ij} \sim {{\mathcal {S}}}{{\mathcal {N}}}(0, {\sigma }^2, {\lambda })\). From (4), \({\varepsilon }_{ij}\) is expressed as:

where \(u_{0ij}\)’s and \(u_{1ij}\)’s are mutually independent, \(u_{0ij} \sim {\mathcal {N}}(0,1)\), and \(u_{1ij} \sim \mathcal {TRN}(0,1,0)\). Note that we do not adjust the location of the error term to zero, so that for \({\lambda }\ne 0\), the error has the non-zero mean

This implies that the constant term \({\beta }_0\) differs from that in the normal case.

Let \({{\varvec{y}}}_i = (y_{i1},\ldots ,y_{in_i})^\top \), \({{\varvec{X}}}_i = ({{\varvec{x}}}_{i1},\ldots ,{{\varvec{x}}}_{in_i})^\top \) and \({\varvec{\epsilon }}_i = ({\varepsilon }_{i1},\ldots ,{\varepsilon }_{in_i})^\top \). The model (5) is expressed in a matricial form as

where \(\mathbf{1 }_n\) denotes a vector of size n with all elements equal to one. Also, \({\varvec{\epsilon }}_i\) is written as

where \({{\varvec{u}}}_{0i} = (u_{0i1},\ldots , u_{0in_i})^\top \) and \({{\varvec{u}}}_{1i} = (u_{1i1},\ldots , u_{1in_i})^\top \). Throughout the paper, we use the notations \({{\overline{y}}}_i = n_i^{-1}\sum _{j=1}^{n_i}y_{ij}\) and \({{\overline{{{\varvec{x}}}}}}_i = n_i^{-1}\sum _{j=1}^{n_i}{{\varvec{x}}}_{ij}\). The vector of unknown parameters is denoted by \({\varvec{\omega }}= \big ({\varvec{\beta }}^\top , {\sigma }^2, \tau ^2, {\lambda }\big )^\top \).

3 Bayesian calculation on the predictor

3.1 Bayes estimator

We now consider the problem of estimating (predicting) \({\theta }_i={{\varvec{c}}}^\top {\varvec{\beta }}+v_i\) for known \({{\varvec{c}}}\). The Bayes estimator of \({\theta }_i\), denoted by \({{\hat{{\theta }}}}_i^B({\varvec{\omega }})\), is given by the posterior mean of \({\theta }_i\):

To evaluate \(E[v_i{\,|\,}{{\varvec{y}}}_i]\), it is noted that the conditional density function of \(y_{ij}\) given \(v_i\) and \(u_{1ij}\) is given by

where \(\phi _p(\cdot ;{\varvec{\mu }},{\varvec{{\Sigma }}})\) denotes the density function of \(\mathcal{N}_p({\varvec{\mu }},{\varvec{{\Sigma }}})\), a p-variate normal distribution with mean \({\varvec{\mu }}\) and covariance matrix \({\varvec{{\Sigma }}}\). Also, the density function of \(v_i\) and \(u_{1ij}\) are respectively \(f(v_i)=\phi (v_i;0,\tau ^2)\) and \(f(u_{1ij})=2\phi (u_{1ij})I(u_{1ij}>0)\)

where \(I(\cdot )\) is an indicator function. The conditional density function of \((v_i,{{\varvec{u}}}_{1i})\) given \({{\varvec{y}}}_i\) is written as

To rewrite the conditional density, let

Also, let \({{\varvec{R}}}_i=(1-\rho _i){{\varvec{I}}}_{n_i} + \rho _i \mathbf{1 }_{n_i}\mathbf{1 }_{n_i}^\top \) for the \(n \times n\) identity matrix \({{\varvec{I}}}_n\) and

Denote the (j, k) element of \({{\varvec{R}}}_i\) by \(\rho _{i,jk}\), namely,

Then, the conditional density can be rewritten as

where \({\varvec{\mu }}_i = (\mu _{i1},\ldots ,\mu _{in_i})^\top \) for

and

The derivation of (6) is given in the Appendix. Let

Then, it can be seen that \({{\varvec{w}}}_i{\,|\,}{{\varvec{y}}}_i\sim \mathcal {TRN}_{n_i}(\mathbf{0 },{{\varvec{R}}}_i,-{{\varvec{a}}}_i)\) and

In the last expression, we need to calculate the conditional mean of \(w_{ij}\) given \({{\varvec{y}}}_i\). As shown in Tallis (1961), the moment of a multivariate truncated normal distribution involves multiple integrals. However, Diallo and Rao (2018) pointed out that a simple structure of \({{\varvec{R}}}_i\) allows us to reduce these multiple integrals to one-dimensional integrals on a product of univariate normal distribution’s cdf’s. This simplification leads to a significant reduction of computational cost and gives a clear expression of \(E[w_{ij}{\,|\,}{{\varvec{y}}}_i]\) described in the following lemma. All the proofs in this section are provided in the Appendix.

Lemma 3.1

For \(j=1,\ldots ,n_i\),

where

Using this result, we derive the Bayes estimator \({{\hat{{\theta }}}}_i^B({\varvec{\omega }})\) as presented in the following theorem.

Theorem 3.1

The Bayes estimator of \({\theta }_i\) is given by

It is noted that \({{\varvec{c}}}^\top {\varvec{\beta }}+ n_i\tau ^2({\sigma }^2+n_i\tau ^2)^{-1}({{\overline{y}}}_i-{{\overline{{{\varvec{x}}}}}}_i^\top {\varvec{\beta }})\) corresponds to the Bayes estimator in the NER model under normality. Thus the last term in the parenthesis is interpreted as a correction term for the mean \(\mu _{\varepsilon }\) of the skew-normal error.

3.2 Posterior variance

We next calculate the posterior variance of \({\theta }_i\). Since \({\theta }_i-{{\hat{{\theta }}}}_i^B({\varvec{\omega }})=v_i-E[v_i{\,|\,}{{\varvec{y}}}_i]\), we have

so that we need to calculate the second moments of \({{\varvec{w}}}_i\) given \({{\varvec{y}}}_i\). Using the special structure of \({{\varvec{R}}}_i\), we obtain an easy-to-calculate expression of \(E[w_{ij}w_{ik} {\,|\,} {{\varvec{y}}}_i]\).

Lemma 3.2

For any \(j,k=1,\ldots ,n_i\),

where \(\phi (\cdot ,\cdot ;\rho )\) is the density function of \(\mathcal{N}_2(\mathbf{0 },{{\varvec{R}}})\) for the correlation matrix \({{\varvec{R}}}\) with off-diagonal elements \(\rho \), and

Using Lemma 3.2, we obtain a tractable form of the posterior variance.

Theorem 3.2

The posterior variance of \({\theta }_i\) given \({{\varvec{y}}}_i\) is

where

It is noted that the first term of RHS in (13) is identical to the posterior variance of \({\theta }_i\) in the normal case, and the second term comes from the skewness of the error term.

4 Empirical Bayes (EB) estimator

4.1 Estimation of parameters

Since the Bayes estimator (10) depends on the unknown parameters \({\varvec{\omega }}= \big ({\beta }_0, {\varvec{\beta }}_1^\top , {\varvec{\beta }}_2^\top , {\sigma }^2, \tau ^2, {\lambda }\big )^\top \), we need to estimate them from the given observations. The procedure of estimation is quite similar to the method proposed by Fuller and Battese (1973) with some adjustments made for estimating \({\beta }_0\), \({\sigma }^2\) and \({\lambda }\).

We first consider the estimation of \({\varvec{\beta }}\) in the case that \({\sigma }^2\), \(\tau ^2\) and \({\lambda }\) are known. As described in Sect. 2.2, since the location of the error term is not centered at zero in our setup, it is seen that

where \({\beta }_{0{\varepsilon }} = {\beta }_0 + \mu _{\varepsilon }\). The covariance matrix of \({{\varvec{y}}}_i\) is given by

for \(m_2\) defined in (1). Assuming that the matrix \(\big ({{\varvec{X}}}_1^\top ,\ldots ,{{\varvec{X}}}_m^\top \big )^\top \) is of full rank, one can obtain the best linear unbiased estimator (BLUE) of \({\varvec{\beta }}_{\varepsilon }\) as

Then, the estimator \({{\widetilde{{\varvec{\beta }}}}}\) of \({\varvec{\beta }}\) is obtained as \({{\widetilde{{\varvec{\beta }}}}}= {{\widetilde{{\varvec{\beta }}}}}({\sigma }^2, \tau ^2, {\lambda }) = \big ({{{\tilde{{\beta }}}}}_0, {{\widetilde{{\varvec{\beta }}}}}_1^\top , {{\widetilde{{\varvec{\beta }}}}}_2^\top \big )^\top \) where

In practice, the parameters \(({\sigma }^2, \tau ^2, {\lambda })\) are unknown, so that we need to estimate them beforehand. For those areas with \(n_i \ge 2\), we take the deviations from the group mean \({{\overline{y}}}_i = n_i^{-1} \sum _{j=1}^{n_i} y_{ij}\) for the model (5), which gives

where \({{\tilde{y}}}_{ij} = y_{ij} - {{\overline{y}}}_i,\,{\tilde{{{\varvec{z}}}}}_{ij} = {{\varvec{z}}}_{ij} - {{\overline{{{\varvec{z}}}}}}_i,\,{\tilde{{\varepsilon }}}_{ij} = {\varepsilon }_{ij} - {{\overline{{\varepsilon }}}}_i\), and \({{\overline{{{\varvec{z}}}}}}_i\) and \({{\overline{{\varepsilon }}}}_i\) are defined analogously to \({{\overline{y}}}_i\). In the method of Fuller and Battese (1973), it is sufficient to consider the second moment of \({\tilde{{\varepsilon }}}_{ij}\) for estimating the variance parameter \({\sigma }^2\). However, we use the second and third moment to jointly estimate \({\sigma }^2\) and the skewness parameter \({\lambda }\). Here we see that for \(j = 1,\ldots ,n_i\),

for \(m_3\) defined in (1). It is noted that the third moment can be used for those areas with \(n_i \ge 3\). Assume that \(({\tilde{{{\varvec{z}}}}}_{11},\ldots ,{\tilde{{{\varvec{z}}}}}_{1n_1},\ldots ,{\tilde{{{\varvec{z}}}}}_{m1},\ldots ,{\tilde{{{\varvec{z}}}}}_{mn_m})^\top \) has full column rank and let \(\hat{{\varepsilon }}_{ij}\) be the residual obtained by regressing \({{\tilde{y}}}_{ij}\) on \(\tilde{z}_{ij}\). Since \({\delta }={\lambda }/\sqrt{1+{\lambda }^2}\), based on (15) and (16) we get estimators of \({\sigma }^2\) and \({\delta }\) as the solution of the following equations:

where \(\eta _1 = \sum _{i=1}^m n_i^{-1}(n_i-1)(n_i-2)\), \(\mathrm{RSS}(1) = \sum _{i=1}^m \sum _{j=1}^{n_i} \hat{{\varepsilon }}_{ij}^2\) is the residual sum of squares and \(\mathrm{RSC}(1) = \sum _{i=1}^m \sum _{j=1}^{n_i} \hat{{\varepsilon }}_{ij}^3\) is the residual sum of cubes. Note that the degrees of freedom associated with the residual is taken into account only in the first formula of (17), so that \({{\widehat{m}}}_2\) is an unbiased estimator of \(m_2\) but \({{\widehat{m}}}_3\) is not unbiased. Solving the equations (17) gives the estimators as

We remark that \(\big |{{\widetilde{{\delta }}}}\big | < 1\) is assumed here. As mentioned in Sect. 2.1, however, smaller sample size or larger \(|{\lambda }|\) value may increase the possibility of violating this condition. In this case, we suggest the truncated estimators

This problem is examined in the simulation study presented in Sect. 5.

Next, using \({{\widehat{m}}}_2\), an unbiased estimator \(\widetilde{\tau }^2\) of \(\tau ^2\) is given by

where \(\mathrm{RSS}(2)\) is the residual sum of squares obtained by regressing \(y_{ij}\) on \({{\varvec{x}}}_{ij}\), and

Since \(\widetilde{\tau }^2\) can take a negative value, we truncate \(\widetilde{\tau }^2\) at zero and get the truncated estimator as \({{\hat{\tau }}}^2 = \max (\widetilde{\tau }^2,0)\). Now \({\varvec{\beta }}\) can be estimated as \({{\widehat{{\varvec{\beta }}}}}= {{\widetilde{{\varvec{\beta }}}}}({{\hat{{\sigma }}}}^2, {{\hat{\tau }}}^2, {{\widehat{{\lambda }}}})\). Substituting the estimator \({{\widehat{{\varvec{\omega }}}}}= \big ({{\widehat{{\beta }}}}_0,{{\widehat{{\varvec{\beta }}}}}_1^\top ,{{\widehat{{\varvec{\beta }}}}}_2^\top ,{{\hat{{\sigma }}}}^2,{{\hat{\tau }}}^2,{{\widehat{{\lambda }}}}\big )^\top \) into the Bayes estimator \({{\hat{{\theta }}}}_i^B({\varvec{\omega }})\), one gets the empirical Bayes (EB) estimator \({{\hat{{\theta }}}}_i^{EB}={{\hat{{\theta }}}}_i^B({{\widehat{{\varvec{\omega }}}}})\) of \({\theta }_i\).

Finally, we show the consistency and other asymptotic properties of the estimator \({{\widehat{{\varvec{\omega }}}}}\), which implies that the EB estimator \({{\hat{{\theta }}}}_i^{B}({{\widehat{{\varvec{\omega }}}}})\) converges to the Bayes estimator \({{\hat{{\theta }}}}_i^B({\varvec{\omega }})\) as \(m\rightarrow \infty \). The asymptotic properties are used in the next section for deriving a second-order unbiased estimator of the conditional mean squared error of \({{\hat{{\theta }}}}_i^{EB}\). To this end, we assume the following regularity condition:

(RC) \(n_i\)’s are bounded below and above, that is, there exist positive constants \({\underline{n}}\) and \(\overline{n}\) satisfying \({\underline{n}} \le n_i \le \overline{n}\). Elements of \({{\varvec{X}}}_i\) are uniformly bounded, \(\sum _{i=1}^m {{\varvec{X}}}_i^\top {{\varvec{V}}}_i^{-1}{{\varvec{X}}}_i\) is a positive definite matrix, and \(m^{-1}\sum _{i=1}^m{{\varvec{X}}}_i^\top {{\varvec{V}}}_i^{-1}{{\varvec{X}}}_i\) converges to a positive definite matrix.

As remarked before, the standard asymptotic theory does not hold when \({\lambda }= 0\). Thus, in addition to (RC), the condition \({\lambda }\ne 0\) is required to obtain the usual rate of convergence in the skew-normal case.

Theorem 4.1

If (RC) and \({\lambda }\ne 0\) hold, then

Thus it follows from (18) that \({{\widehat{{\varvec{\omega }}}}}- {\varvec{\omega }}{\,|\,} {{\varvec{y}}}_i = O_p(m^{-1/2})\).

4.2 Measuring uncertainty of the EB estimator

In this section, we evaluate the conditional mean squared error of the EB estimator for measuring the uncertainty. Although the unconditional mean squared error (MSE) is often used as a measure of uncertainty of the predictors, we employ the conditional mean squared error (CMSE) because the area-specific prediction of \({\theta }_i\) given \({{\varvec{y}}}_i\) is our main interest. The CMSE is initially proposed by Booth and Hobert (1998), suggesting that the MSE is inappropriate when small domains are supposed in mixed model settings and researchers focus on area-specific prediction. The CMSE of the EB estimator is defined as

which is decomposed as

because the cross product term is \(E[E[\{{{\hat{{\theta }}}}_i^B({\omega })-{\theta }_i\}\{{{\hat{{\theta }}}}_i^B({{\hat{{\omega }}}})-{{\hat{{\theta }}}}_i^B({\omega })\}\mid {{\varvec{y}}}_1, \ldots , {{\varvec{y}}}_m]\mid {{\varvec{y}}}_i] = E[\{{{\hat{{\theta }}}}_i^B({\omega })-E[{\theta }_i\mid {{\varvec{y}}}_1, \ldots , {{\varvec{y}}}_m]\}\{{{\hat{{\theta }}}}_i^B({{\hat{{\omega }}}})-{{\hat{{\theta }}}}_i^B({\omega })\}\mid {{\varvec{y}}}_i] =0\). Let \(g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i)=E\big [\big \{{{\hat{{\theta }}}}_i^B({\varvec{\omega }})-{\theta }_i\big \}^2{\,|\,}{{\varvec{y}}}_i\big ] \) and \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)=E\big [\big \{{{\hat{{\theta }}}}_i^B({{\widehat{{\varvec{\omega }}}}})-{{\hat{{\theta }}}}_i^B({\varvec{\omega }})\big \}^2{\,|\,}{{\varvec{y}}}_i\big ]\). It is seen that \(g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i)\) is the CMSE of the Bayes estimator and \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)\) involves the uncertainty on the estimation of the model parameters. Since the CMSE of the Bayes estimator corresponds to the posterior variance, from (13) in Theorem 3.2, we have

Also, \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)\) can be approximated as

which implies that \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)=O_p(m^{-1})\).

We derive the second-order unbiased estimator of the CMSE for \({\lambda }\ne 0\) case. Since it is difficult to calculate analytically the second-order approximations of \(g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i)\) and \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)\), we use parametric bootstrap methods. Consider conditioning on the area i, thus \({{\varvec{y}}}_i\) is fixed. A parametric bootstrap sample \({{\varvec{y}}}_k^* = (y_{k1}^*,\ldots ,y_{kn_k}^*)^\top \) is generated from the model

where \(v_i^*\)’s and \({\varepsilon }_{ij}^*\)’s are mutually independent. We construct the estimator \({{\widehat{{\varvec{\omega }}}}}^*\) using the same method as used to obtain \({{\widehat{{\varvec{\omega }}}}}\) except that the bootstrap sample

is used to obtain \({{\widehat{{\varvec{\omega }}}}}^*\). It is noted that the given data \({{\varvec{y}}}_i\) is used in the bootstrap sample, because we consider the conditional expectation given \({{\varvec{y}}}_i\). Since we have the analytical form of \(g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i)\) as (13), a second-order unbiased estimator of \(g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i)\) is given by

where \(E^*[\cdot {\,|\,}{{\varvec{y}}}_i]\) is the expectation with respect to the bootstrap sample. Also, \(g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i)\) is estimated by

It can be shown that \(E[{{\hat{g}}}_{1i} {\,|\,} {{\varvec{y}}}_i] = g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i) + o_p(m^{-1})\) and \(E[{{\hat{g}}}_{2i} {\,|\,} {{\varvec{y}}}_i] = g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i) + o_p(m^{-1})\), thus a second-order unbiased estimator of the CMSE is given by

Proposition 4.1

Assume (RC) and \({\lambda }\ne 0\). Then, \(\widehat{\mathrm{CMSE}}_i\) is a second-order unbiased estimator of the CMSE:

5 Simulation study

In this section we examine the performance of the proposed methods numerically through Monte Carlo simulation. Through this section, we consider the simple nested error regression model \(y_{ij} = \beta _0 + z_{i0}\beta _1 + z_{ij}\beta _2 + v_i + {\varepsilon }_{ij}\) for \(j=1,\ldots ,n,\,i=1,\ldots ,m\), where values of \(z_{i0}\) and \(z_{ij}\) are generated from \(\mathcal{N}(0,1)\) and fixed throughout the simulation runs. For simplicity, we set \(\beta _0 = \beta _1 = \beta _2 = {\sigma }^2 = \tau ^2 = 1\) and conduct the simulation experiment for different values of m, n and \({\lambda }\).

We first investigate the performance of the suggested estimators of the model-parameters. For the parameter \({\lambda }\) we treat the two cases of \({\lambda }= 1\) and \({\lambda }= 6\) which correspond to \({\gamma }_1=0.3873\) and \({\gamma }_1=0.9285\) for \({\gamma }_1\) defined in (3). Concerning (m, n), we treat four combinations \((m, n) = (20, 5),\,(20, 10),\,(70, 5),\,(70, 10)\). Based on \(R = 5,000\) simulation runs, we compute the bias and MSE for the estimator of each parameter, and the value of \(\Pr \big \{\big |{{\widetilde{{\delta }}}}\big |<1\big \}\), the probability that the estimated skewness is in the feasible range introduced in Sect. 2.1. These quantities are respectively calculated as

and those values are reported in Table 1. Note that values for \({{\widehat{{\beta }}}}_0,\,{{\widehat{{\beta }}}}_1\) and \({{\widehat{{\beta }}}}_2\) are multiplied by 100. It is observed that MSEs decrease as m increases, which coincides with the consistency of the estimators. As remarked in Sects. 2.1 and 4.1, it is seen that PFE increases as m and n increase, or \(|{\lambda }|\) decreases. From Table 1, it is seen that the estimator \({{\widehat{{\lambda }}}}\) has large values of the bias and the MSE for \(m=20\), but it gets more stable for \(m=70\). This shows that we need data with large m to estimate \({\lambda }\) precisely.

In the next study, we examine the performance of the estimator of the CMSE given in Sect. 4.2. Without loss of generality, we treat the prediction of \({\theta }_1 = {{\overline{{{\varvec{x}}}}}}_1^\top {\varvec{\beta }}+ v_1\) which is related to the area mean in the area \(i=1\). The model setup is the same as in the previous simulation except that we set \(n_1=\cdots =n_m =n= 5\) throughout the simulation runs. For values of \({\lambda }\) and m, consider the four cases \(({\lambda },m) = (0,20),\,(0,50),\,(3,20),\,(3,50)\). As conditioning values of \(y_{1j}\)’s, we use the qth quantile of the marginal distribution of \(y_{1j}\), denoted by \(y_{1j(q)}\), for \(q = 0.05,\,0.25,\,0.50,\,0.75,\) and 0.95. \(y_{1j(q)}\) is given by \(y_{1j(q)} = {\beta }_0 + z_{10}{\beta }_1 + z_{1j}{\beta }_2 + r_{1j(q)}\) where \(r_{1j(q)}\) is the qth quantile of \(r_{1j} = v_1 + {\varepsilon }_{1j}\). Here \(r_{1j}\) is distributed as \({{\mathcal {S}}}{{\mathcal {N}}}(0, {\sigma }^2+\tau ^2, {{\widetilde{{\lambda }}}})\) where \({{\widetilde{{\lambda }}}}= {\sigma }{\lambda }/\sqrt{{\sigma }^2 + (1+{\lambda }^2)\tau ^2}\). In advance, we calculate the true value of the CMSE for each q value based on \(R = 100{,}000\) simulation runs as follows:

where \({{\varvec{y}}}_{1(q)} = (y_{11(q)},\ldots ,y_{1n(q)})^\top \) and \({{\hat{{\theta }}}}_1^{EB(r)}\) is the EB estimate of \({\theta }_1\) in the rth iteration. After preparing the true values of the CMSE, we calculate the mean of the estimates for the CMSE and the percentage relative bias (RB) based on \(R = 1000\) simulation runs with each 100 bootstrap samples. These values are respectively calculated as

where \(\widehat{\text {CMSE}}_1^{(r)}\) is the estimate of the CMSE in the rth replication. Those values are reported in Table 2. For comparison, we calculate the corresponding quantities of the estimates for the CMSE and the percentage relative bias (RB) under the standard NER model where both the random effect and the error term are assumed to be normally distributed. The results of the proposed skew-normal model and the usual NER model are respectively reported in the columns ‘SN’ and ‘Normal’ in Table 2.

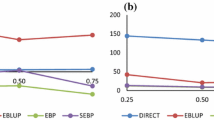

As for the true value of the CMSE in the SN column of Table 2, it can be seen that the CMSE in the case of \({\lambda }= 0\) takes larger values than that in the case of \({\lambda }= 3\). Also, the CMSE decreases insignificantly as m increases when \({\lambda }= 0\). These behaviors may be caused by considerable variation of the estimators in the case of \({{\varvec{a}}}=0\). The result of the RB implies that the CMSE tends to be overestimated in the case of \({\lambda }= 3\), though getting more samples suppress this inflation. Also, the effect of the conditioning value is not negligible when \({\lambda }= 3\): the RB decreases as the conditioning values are located to the right. Comparing the results of the two methods for \({\lambda }= 0\) case, the proposed model gives larger true CMSE and the performance of the CMSE estimate is worse, which is a reasonable consequence caused by the redundancy of the skewness parameter \({\lambda }\). For \({\lambda }= 3\) case, however, the true CMSE is smaller and the effect of the sample size on reduction of both true CMSE and RB is more significant in the skew-normal model. When we use the normal model in the case of \({\lambda }=3\), in contrast, the true CMSE cannot be reduced by increasing the sample size, which may result from inconsistency of the estimators for the parameters. Moreover, the CMSE is seriously underestimated regardless of the sample size. These results motivate us to use the proposed model in the situations where the skewness is present.

6 An illustrative example

We investigate the performance of the suggested model and estimation methods through an illustrative example. We use the posted land price data along the Keikyu train line in 2001. This train line connects the suburbs in the Kanagawa prefecture and the metropolitan area in Tokyo, so that the commuters and students who live in the suburb area in the Kanagawa prefecture take this line to go to Tokyo. Thus the land price is expected to depend on the distance from Tokyo. The posted land price data are available for 52 stations on the Keikyu line. We consider each station as a small area, namely \(m = 52\), and for the ith station, the land price data of \(n_i\) spots are available. Of the 52 stations, 37 stations have at least 3 observations.

For \(j = 1,\ldots ,n_i\), we have a set of observations \((y_{ij}, T_i^*, D_{ij}^*, FAR_{ij}^*)\), where \(y_{ij}\) denotes the log-transformed value of the posted land price (Yen/10,000) per square meter of the jth spot, \(T_i^*\) is the time (minute) it takes from the nearby station i to Tokyo station around 8:30 by train, \(D_{ij}^*\) is the geographical distance (meter) between the spot j and the station i and \(FAR_{ij}^*\) denotes the floor-area-ratio (%) of the spot j. Let \(T_i\), \(D_{ij}\) and \(FAR_{ij}\) be the log-transformed values of \(T_i^*\), \(D_{ij}^*\) and \(FAR_{ij}^*\). Then we consider the NER model with skew-normal errors, described as

where \(v_i\)’s and \({\varepsilon }_{ij}\)’s are mutually independent and distributed as \(\mathcal{N}(0,\tau ^2)\) and \({{\mathcal {S}}}{{\mathcal {N}}}(0,{\sigma }^2,{\lambda })\), respectively.

The left panel of Fig. 2 gives the histogram of \(y_{ij}\) for those areas satisfying \(n_i \ge 3\). It is revealed that the histogram of \(y_{ij}\) has a right-skewed shape, which means that after taking logarithm of the land price there still exists positive skewness. Hence, in this case, it is not appropriate to model the data based on symmetric distributions. However, the sample skewness of \(y_{ij}\)’s for the areas with \(n_i \ge 3\) is 1.317, which is out of the feasible range of the population skewness described in Section 2.1. The plot of the standardized values of \(y_{ij}\) for \(n_i \ge 3\) areas is depicted in Fig. 2, and it is observed that a couple of observations take extremely large values, which yields the strong positive-skewness. In our analysis given below, we remove the first and second largest observations from our analysis, and the resulting sample skewness drops to \({{\widetilde{{\delta }}}}=0.637\).

The estimated values of the model parameters and their standard errors under the proposed model (SN) and the usual NER (Normal) are provided in Table 3, where the standard errors are provided by square roots of the jackknife variance estimators. The values for the two models are identical except for \({\beta }_0, {\sigma }^2\), and \({\lambda }\). The effect of \(T_i\) and \(D_{ij}\) are negative, which implies that the land price decreases as the time for going to Tokyo and/or the distance to the nearby station increase. The estimate of \({\lambda }\) means that the land price data is still skewed to the right after log-transformation, though its standard error is so large. Since in the estimation of \({\lambda }\), we use the data of 37 areas with \(n_i\ge 3\), we need more data to lower the standard error of \({{\widehat{{\lambda }}}}\). The histogram of the predicted random effect and the residual of the proposed model , denoted as \({{\hat{v}}}_i = E[v_i{\,|\,}{{\varvec{y}}}_i]|_{{\varvec{\omega }}= {{\widehat{{\varvec{\omega }}}}}}\) and \(e_{ij} = y_{ij} - {{\varvec{x}}}_{ij}^\top {{\widehat{{\varvec{\beta }}}}}- {{\hat{v}}}_i\) respectively, are presented in Fig. 3. Using the estimates of parameters, the density of \(\mathcal{N}(0, {{\hat{\tau }}}^2)\) for \({{\hat{v}}}_i\) and \({{\mathcal {S}}}{{\mathcal {N}}}(0, {{\hat{{\sigma }}}}^2, {{\widehat{{\lambda }}}})\) for \(e_{ij}\) are depicted in each panel in Fig. 3. The sample coefficient of skewness is \(-0.33\) for \({{\hat{v}}}_i\) and 1.02 for \(e_{ij}\). Though \({{\hat{v}}}_i\) has a week negative skewness, departure of its histogram from the normal density does not seem to be so great. Thus it is reasonable to assume the normal distribution on the random effect. For \(e_{ij}\), positive skewness is observed both in its skewness value and histogram in Fig. 3.

Next we predict (estimate) the land price of a spot with specific values of covariates. As covariates, we set a distance from the nearby station as 1000 m and a floor-area-ratio as 100%. Thus for each i, we want to predict

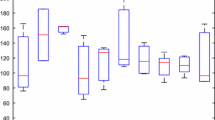

for \(D_0 =\log (1000)\) and \(FAR_0 = \log (100)\). The predicted values of \({\theta }_i\) and their CMSE estimates for 12 selected areas based on 2000 bootstrap samples are provided as the column ‘SN’ in Table 4. These quantities are also calculated under the standard NER model and reported in Table 4 as the column ‘Normal’. It is easily observed that the EB estimates under the proposed model are smaller than those obtained by the usual NER model. As described in Sect.s 2.2 and 4.1, the major source of this gap is the difference between the estimates of \({{\widehat{{\beta }}}}_0\) for the two models, which equals to 0.134. Comparing the estimates of the CMSE, the skew-normal model gives larger values and, especially, in some areas the estimated CMSE in our model are several times larger than those in the normal model. Since the given data has strong positive skewness, it is considered that the values in the ‘SN’ and ‘Normal’ columns are respectively overestimated and underestimated. This point is illustrated in the simulation study presented in the previous section. Concerning the effect of area sample size, from the decreasing value of the CMSE estimate in the ‘Normal’ column it is suggested that the CMSE obtained under the normality assumption is a decreasing function of \(n_i\). In general, decreasing tendency like this is not observed for the conditional MSE but for the unconditional MSE because the former usually also depends on \({{\varvec{y}}}_i\). As shown by Booth and Hobert (1998), however, the leading term of the CMSE does not depend on \({{\varvec{y}}}_i\) only in the model with normality assumption, so that increasing area sample size leads to reduction of the CMSE estimates in this case. In contrast, the estimated values of the CMSE for the proposed method do not appear to depend solely on \(n_i\).

7 Concluding remarks

In this paper, we have considered the NER model with skew-normally distributed errors. Under this model, the Bayesian calculation has been conducted to provide simple forms of the posterior mean and variance. We have constructed the estimators of the model parameters similar to the method by Fuller and Battese (1973) and obtained the EB estimator. The uncertainty of the EB estimator has been studied in terms of the CMSE and the second-order unbiased estimator of the CMSE has been derived with the parametric bootstrap method. In the simulation study, it has been revealed that the proposed method yields the EB estimates with greater accuracy than the standard NER model does when the underlying distribution of the error term has skewness.

These results suggest that the proposed skew-normal model is useful if skewness is observed in the given data. However, it is a major problem that a skew-normal distribution cannot apply to highly skewed situations, which actually happened in our example in Section 6. This issue may be overcome by adopting more heavy-tailed distributions like a skew-t distribution and remains to be solved in the future works.

References

Arellano-Valle, R. B., Bolfarine, H., & Lachos, V. H. (2005). Skew-normal linear mixed models. Journal of Data Science, 3, 415–438.

Arellano-Valle, R. B., Bolfarine, H., & Lachos, V. (2007). Bayesian inference for skew-normal linear mixed models. Journal of Applied Statistics, 34, 663–682.

Azzalini, A. (1985). A class of distributions which includes the normal ones. Scandinavian Journal of Statistics, 12, 171–178.

Azzalini, A. (1986). Further results on a class of distributions which includes the normal ones. Statistica, XLVI, 199–208.

Azzalini, A. (2013). The skew-normal and related families. Cambridge: Cambridge University Press.

Azzalini, A., & Capitanio, A. (1999). Statistical applications of the multivariate skew normal distribution. Journal of the Royal Statistical Society, B 61, 579–602.

Battese, G. E., Harter, R. M., & Fuller, W. A. (1988). An error-components model for prediction of county crop areas using survey and satellite data. Journal of the American Statistical Association, 83, 28–36.

Booth, J. G., & Hobert, J. P. (1998). Standard errors of prediction in generalized linear mixed models. Journal of the American Statistical Association, 93, 262–272.

Butar, F. B., & Lahiri, P. (2003). On measures of uncertainty of empirical Bayes small-area estimators. Journal of Statistical Planning and Inference, 112, 63–76.

Diallo, M., & Rao, J. N. K. (2018). Small area estimation of complex parameters under unit-level models with skew-normal errors. Scandinavian Journal of Statistics, 45, 1092–1116.

Dunnett, C. W., & Sobel, M. (1955). Approximations to the probability integral and certain percentage points of a multivariate analogue of Student’s t-distribution. Biometrika, 42, 258–260.

Ferraz, V. R. S., & Moura, F. A. S. (2012). Small area estimation using skew normal models. Computational Statistics and Data Analysis, 56, 2864–2874.

Fuller, W. A., & Battese, G. E. (1973). Transformations for estimation of linear models with nested-error structure. Journal of the American Statistical Association, 68, 626–632.

Ghosh, M., & Rao, J. N. K. (1994). Small area estimation: an appraisal. Statistical Science, 9, 55–76.

Henze, N. (1986). A probabilistic representation of the “skew-normal” distribution. Scandinavian Journal of Statistics, 13, 271–275.

Pewsey, A. (2000). Problems of inference for Azzalini’s skew-normal distribution. Journal of Applied Statistics, 27, 859–870.

Pfeffermann, D. (2013). New important developments in small area estimation. Statistical Science, 28, 40–68.

Prasad, N. G. N., & Rao, J. N. K. (1990). The estimation of the mean squared error of small-area estimators. Journal of the American Statistical Association, 85, 163–171.

Rao, J. N. K., & Molina, I. (2015). Small area estimation (2nd ed.). Hoboken: Wiley.

Tallis, G. M. (1961). The moment generating function of the truncated multi-normal distribution. Journal of the Royal Statistical Society: Series B, 23, 223–229.

Acknowledgements

We would like to thank the Associate Editor and the two reviewers for many valuable comments and helpful suggestions which led to an improved version of this paper. The research of the second author was supported in part by Grant-in-Aid for Scientific Research from Japan Society for the Promotion of Science (\(\#\) 18K11188, \(\#\) 15H01943 and \(\#\) 26330036).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Proofs

Appendix: Proofs

All the proofs of lemmas and theorems given in the paper are provided here.

Proof of expression (6)

We explain briefly the derivation of the expression (6). The exponent in \(f(v_i,{{\varvec{u}}}_{1i}\mid {{\varvec{y}}}_i)\) is proportional to

which is rewritten as

The first part corresponds to the density \(\phi (v_i;\mu _{v_i}, {\sigma }_{v_i}^2)\). For simplicity, let \({{\varvec{J}}}_{n_i}=\mathbf{1 }_{n_i}\mathbf{1 }_{n_i}^\top \),

Then the second term can be expressed as \({\sigma }^2{{\varvec{u}}}_{1i}^\top {{\varvec{u}}}_{1i} - 2{{\varvec{c}}}_i^\top {{\varvec{u}}}_{1i}-d{{\varvec{u}}}_{1i}^\top {{\varvec{J}}}_{n_i}{{\varvec{u}}}_{1i}\). After completing square, one gets \(\{{{\varvec{u}}}_{1i}-({\sigma }^2{{\varvec{I}}}_{n_i}-d{{\varvec{J}}}_{n_i})^{-1}{{\varvec{c}}}_i\}^\top ({\sigma }^2{{\varvec{I}}}_{n_i}-d{{\varvec{J}}}_{n_i})\{{{\varvec{u}}}_{1i}-({\sigma }^2{{\varvec{I}}}_{n_i}-d{{\varvec{J}}}_{n_i})^{-1}{{\varvec{c}}}_i\}\), which corresponds to the density \(\phi _{n_i}({{\varvec{u}}}_{1i};{\varvec{\mu }}_i,{\sigma }_{u_i}^2{{\varvec{R}}}_i)\). Thus, we have the expression given in (6). \(\square \)

Proof of Lemma 3.1

Following Tallis (1961), we have

where \({{\varvec{a}}}_{i(-k)}\) and \({{\varvec{w}}}_{i(-k)}\) are respectively \((n_i-1)\)-dimensional vector obtained by dropping the kth element of \({{\varvec{a}}}_i\) and \({{\varvec{w}}}_i\), \({{\varvec{w}}}_i^k=({{\varvec{w}}}_{i(-k)}+\rho _ia_{ik}\mathbf{1 }_{n_i-1})(1 - \rho _i^2)^{-1/2}\), and \({{\varvec{R}}}_i^k\) is the matrix of the partial correlation coefficients for \({{\varvec{w}}}_i\). Using results from Dunnett and Sobel (1955), we reduce the two multiple integrals in (19) to one-dimensional integrals. Since the denominator of the fraction in (19) is written as

where \({{\varvec{W}}}=(W_1,\ldots ,W_{n_i})^\top \sim \mathcal{N}_{n_i}(\mathbf{0 },{{\varvec{R}}}_i)\) with \(({{\varvec{R}}}_i)_{qr}=\rho _i\in [0,1)\) for \(q\ne r\). Thus \(W_j\) can be represented as

where for \(j=0,1,\ldots ,n_i\), \(\xi _j\)’s are mutually independently distributed as \(\mathcal{N}(0,1)\). This transformation gives

which corresponds to (8).

Similarly, we see that the numerator in (19) is

where \({{\varvec{W}}}^k=(W_1^k,\ldots ,W_{k-1}^k,W_{k+1}^k,\ldots ,W_{n_i}^k)^\top \sim \mathcal{N}_{n_i-1}(\mathbf{0 },{{\varvec{R}}}_i^k)\) with \(({{\varvec{R}}}_i^k)_{qr}=\rho _i/(1+\rho _i)\) for \(q\ne r\). Thus, analogously to (20), \(W_l^k\) can be expressed as

where \(\xi _l\)’s are mutually independent standard normal variables again. Then it follows that

which gives (9). \(\square \)

Proof of Theorem 3.1

It suffices to transform the second term of (7) into the desired form. Using Lemma 3.1 and the fact that \(({{\varvec{R}}}_i)_{jk}=\rho _i\) for \(j\ne k\), we have

Since

the formula (21) reduces to

which gives the desired expression. \(\square \)

Proof of Lemma 3.2

The outline is the same as in the proof of Lemma 3.1. Using the results of Tallis (1961) and Theorem 3.1, we have

where \({{\varvec{a}}}_{i(-q,r)}\) and \({{\varvec{w}}}_{i(-q,r)}\) are respectively \((n_i-2)\)-dimensional vectors obtained by dropping the qth and rth elements of \({{\varvec{a}}}\) and \({{\varvec{w}}}\),

and \({{\varvec{R}}}_i^{qr}\) is the matrix of the second-order partial correlation coefficients for \({{\varvec{w}}}_i\). The \((n_i-2)\)-fold integral in (23) is written as

where \({{\varvec{W}}}^{qr}\sim \mathcal{N}_{n_i-2}(\mathbf{0 },{{\varvec{R}}}_i^{qr})\) with \(({{\varvec{R}}}_i^{qr})_{st}=\rho _i/(1+2\rho _i)\) for \(s\ne t\). Here the definition of \({{\varvec{W}}}^{qr}\) is analogous to \({{\varvec{W}}}^q\) in the proof of Lemma 3.1. Then using the similar method to (20) with \(\rho _i/(1+2\rho _i)\) instead of \(\rho _i\), we have

where \(\xi _s\)’s are mutually independent standard normal random variables. Then we have

which corresponds to (12). \(\square \)

Proof of Theorem 3.2

It follows from Lemmas 3.1 and 3.2 that

The conditional variance of \(\sum _{j=1}^{n_i}w_{ij}\) given \({{\varvec{y}}}_i\) is

where \(\nu _i({\varvec{\omega }},{{\varvec{y}}}_i)\) is defined as (14). Then it follows from (11), (22) and (24) that

which proves Theorem 3.2. \(\square \)

Proof of Theorem 4.1

First, we derive the desired properties for the parameters \(\big ({\varvec{\beta }}_{{\varepsilon }}^\top ,{\sigma }^2,\tau ^2,{\lambda }\big )\). In general, consider the case that two estimators \({{\hat{{\theta }}}}_1\) of \({\theta }_1\) and \({{\hat{{\theta }}}}_2\) of \({\theta }_2\) have the forms

where \(h_{1i}({{\varvec{y}}}_i)\) and \(h_{2i}({{\varvec{y}}}_i)\) (written as \(h_{1i}\) and \(h_{2i}\) for simplicity) are functions of \({{\varvec{y}}}_i\) such that \(h_{ki} = O_p(1),\,E[h_{ki}] = O(1)\) for \(k = 1, 2\). Since \({{\varvec{y}}}_i\)’s are mutually independent, it is shown that

which means that it is enough to obtain the required results for the unconditional expectations. Note that all the moments of a skew-normal distribution exist. Since the methods of estimating \({\varvec{\beta }}_{\varepsilon }\) and \(m_2\) are the same as those of the usual NER model, it follows from the results of Fuller and Battese (1973) that

The last formula comes from the unbiasedness of \({{\widehat{m}}}_2\).

Next we treat \({{\widehat{m}}}_3\). Let \({{\widehat{{\varvec{\beta }}}}}_2^{FE}\) be the OLS estimator obtained by regressing \(y_{ij}\) on \({\tilde{{{\varvec{z}}}}}_{ij}\). Then, \({{\widehat{{\varvec{\beta }}}}}_1^{FE}\) is written as \({{\widehat{{\varvec{\beta }}}}}_2^{FE} = {\varvec{\beta }}_2 + (\sum _{i=1}^m\sum _{j=1}^{n_i}{\tilde{{{\varvec{z}}}}}_{ij}{\tilde{{{\varvec{z}}}}}_{ij}^\top )^{-1} \sum _{i=1}^m \sum _{j=1}^{n_i} {\tilde{{{\varvec{z}}}}}_{ij}{\tilde{{\varepsilon }}}_{ij}\). It follows from (RC) and \({{\widehat{{\varvec{\beta }}}}}_2^{FE} - {\varvec{\beta }}_2 = O_p(m^{-1/2})\) that

Since \({\tilde{{\varepsilon }}}_{ij}\)’s are independent for different i and \(E[{\tilde{{\varepsilon }}}_{ij}] = 0\), the bias of \({{\widehat{m}}}_3\) is

which is of order \(O(m^{-1})\). From the fact that \({{\widehat{m}}}_3 = \eta _1^{-1}\sum _{i=1}^m\sum _{j=1}^{n_i}{\tilde{{\varepsilon }}}_{ij}^3 + O_p(m^{-1})\) and \(\eta _1^{-1}\sum _{i=1}^m\sum _{j=1}^{n_i}{\tilde{{\varepsilon }}}_{ij}^3 = O_p(m^{-1/2})\), it follows that

The expectation in (25) is bounded under the condition (RC) and the existence of up to the sixth moment of \({\varepsilon }_{ij}\), which leads to \(E[({{\widehat{m}}}_3 - m_3)^2] = O(m^{-1})\). Then we have \({{\widehat{m}}}_2 - m_2 = O_p(m^{-1/2})\) and \({{\widehat{m}}}_3 - m_3 = O_p(m^{-1/2})\). Also, \(E[({{\widehat{m}}}_2 - m_2)({{\widehat{m}}}_3 - m_3)]\) can be treated by Schwarz’s inequality as

The inverse transformation of \((m_2({\sigma }^2,{\lambda }), m_3({\sigma }^2,{\lambda }))\) is derived from (1) and (2) as

Since \({\lambda }\ne 0\), \({\delta }\ne 0\), or \(m_3 \ne 0\), it is easy to check these functions are three times continuously differentiable. Thus, using the Taylor series expansion we have

which, together with the results obtained up to this point, gives

Concerning the truncated estimator \({{\widehat{{\delta }}}}=\max (-1+1/m, \min ({{\widetilde{{\delta }}}}, 1-1/m))\), we consider the case of \(0<{\delta }<1\). For large m, we have \(1-1/m-{\delta }>0\). Then, \(\Pr ({{\widetilde{{\delta }}}}>1-1/m)=\Pr ({{\widetilde{{\delta }}}}-{\delta }> 1-1/m-{\delta })\le E[({{\widetilde{{\delta }}}}-{\delta })^2]/(1-1/m-{\delta })^2\), so that \(\Pr ({{\widetilde{{\delta }}}}>1-1/m)=O(m^{-1})\). This shows the consistency of \({{\widehat{{\delta }}}}\). Using the same arguments as below, we can show that \(E[{{\widehat{{\delta }}}}-{\delta }]=O(m^{-1})\) and \(E[({{\widehat{{\delta }}}}-{\delta })^2]=O(m^{-1})\). These results lead to the asymptotic properties of \({{\widehat{{\lambda }}}}\).

Concerning \({{\hat{\tau }}}^2\), we have \(E[({\tilde{\tau }}^2 - \tau ^2)^2] = O(m^{-1})\), because \((v_i,\, {\varepsilon }_{ij})\)’s are independent for different i and all the moments of \(v_i\) and \({\varepsilon }_{ij}\) exist. Following Prasad and Rao (1990),

which is of order \(O(m^{-1})\). Then we have

which is of order \(O(m^{-1})\). Also, it follows from \(E[{\tilde{\tau }}^2 - \tau ^2] = 0\) that

As for the first term,

which is of order \( O(m^{-1})\). Thus, together with (27), we obtain \(E[{{\hat{\tau }}}^2 - \tau ^2] = O(m^{-1})\).

We have derived the desired properties for the unconditional case, so that from the argument at the beginning of the proof, the statement of the theorem has been checked for \(\big ({\varvec{\beta }}_{\varepsilon }^\top ,{\sigma }^2,\tau ^2,{\lambda }\big )\). Lastly, we need to consider \({\beta }_0\) instead of \({\beta }_{0{\varepsilon }}\). Since \(\mu _{\varepsilon }= {\sigma }\sqrt{2/\pi }{\lambda }/\sqrt{1 + {\lambda }^2}\) is a three times continuously differentiable function of \(({\sigma }^2,{\lambda })\), it follows that

Using this expansion, the desired results can be easily obtained.

It remains to show the expectations of cross terms are \(O(m^{-1})\). Analogously to (26), this can be achieved by Schwarz’s inequality, and the proof is complete. \(\square \)

Proof of Proposition 4.1

From Theorem 4.1, \(g_{1i}({{\widehat{{\varvec{\omega }}}}}, {{\varvec{y}}}_i)\) can be expanded as \(g_{1i}({{\widehat{{\varvec{\omega }}}}},{{\varvec{y}}}_i) = g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i) + G_{1i}({{\widehat{{\varvec{\omega }}}}},{\varvec{\omega }},{{\varvec{y}}}_i) + O_p(m^{-3/2})\) where

Thus we have \(E[g_{1i}({{\widehat{{\varvec{\omega }}}}},{{\varvec{y}}}_i) {\,|\,} {{\varvec{y}}}_i] = g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i) + E[G_{1i}({{\widehat{{\varvec{\omega }}}}},{\varvec{\omega }},{{\varvec{y}}}_i) {\,|\,} {{\varvec{y}}}_i] + o_p(m^{-1})\). It follows from Theorem 4.1 that \(E[G_{1i}({{\widehat{{\varvec{\omega }}}}},{\varvec{\omega }},{{\varvec{y}}}_i){\,|\,}{{\varvec{y}}}_i] = O_p(m^{-1})\), so that applying the same arguments as in Butar and Lahiri (2003) shows \(E[{{\hat{g}}}_{1i} {\,|\,} {{\varvec{y}}}_i] = g_{1i}({\varvec{\omega }},{{\varvec{y}}}_i) + o_p(m^{-1})\). Also, using Theorem 4.1 again, it can be seen that \(E[{{\hat{g}}}_{i2} {\,|\,} {{\varvec{y}}}_i] = g_{2i}({\varvec{\omega }},{{\varvec{y}}}_i) + o_p(m^{-1})\). Then the proposition can be immediately obtained. \(\square \)

Rights and permissions

About this article

Cite this article

Tsujino, T., Kubokawa, T. Empirical Bayes methods in nested error regression models with skew-normal errors. Jpn J Stat Data Sci 2, 375–403 (2019). https://doi.org/10.1007/s42081-019-00038-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42081-019-00038-y