Abstract

Gasification stands as a transformative thermochemical process, ingeniously converting carbon-rich substances like methane (CH4) and a spectrum of hydrocarbons, including ethylene (C2Hn), into a versatile synthesis gas (syngas). This dynamic blend predominantly comprises hydrogen (H2) and carbon monoxide (CO), presenting a potent feedstock for diverse industrial applications. In recent years, the focus on sustainable energy has intensified due to concerns about climate change, energy security, and dwindling fossil fuel reserves. Biomass energy has emerged as a promising alternative, offering the potential for a global circular economy and carbon neutrality, thanks to its abundant resources and reliable energy production. This article introduces two hybrid models that combine Least Square Support Vector Regression (LSSVR) with Dwarf Mongoose Optimization (DMO) and the Improved Grey Wolf Optimization Algorithm (IGWO). These models utilize nearby biomass data to predict the elemental compositions of CH4 and C2Hn. The assessment of both individual and hybrid models has demonstrated that integrating LSSVR with these optimizers significantly improves the accuracy of CH4 and C2Hn predictions. According to the findings, the LSDM model emerges as the top performer for predicting both CH4 and C2Hn, achieving impressive R2 values of 0.988 and 0.985, respectively. Moreover, the minimal RMSE values of 0.367 and 0.184 for CH4 and C2Hn predictions respectively affirm the precision of the LSDM model, rendering it a suitable option for practical real-world applications. Accurate predictions enable the design of systems that efficiently convert a wide range of feedstocks into valuable syngas, which can be employed to generate heat, electricity, fuels, and chemicals. By understanding and optimizing gasification processes, it becomes possible to minimize emissions of pollutants, reduce waste, and mitigate greenhouse gas emissions through carbon capture and utilization technologies.

Graphical abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Background

Rising global energy demand is a persistent concern across social, political, and business domains, expected to continue for decades (Vo Thanh et al. 2024; Vo Thanh et al. 2024). The current energy supply falls short due to climate change and technological shifts, with a heavy reliance on non-renewable fossil fuels like coal, natural gas, and oil. These fossil fuels constitute a large part of global energy consumption, contributing to rising atmospheric carbon dioxide and global warming (Velvizhi et al. 2022; Raud et al. 2019). The adoption of renewable energy (RE) has garnered widespread support as a feasible substitute for fossil fuels and as a strategy to alleviate the harmful consequences of emissions resulting from the combustion of fossil fuels. Renewable energy sources, including solar, wind, hydropower, biomass-biofuels, and other options, have generated significant interest in the pursuit of creating more environmentally friendly and sustainable energy systems with lower carbon emissions (Narnaware and Panwar 2022).

Biomass-derived from agricultural leftovers or forests has the potential to be utilized for the production of various materials and bioenergy that are essential in contemporary societies. When compared to other available resources, biomass stands out as one of the most abundant and widely distributed resources globally. Consequently, biomass holds the promise of serving as a locally and globally accessible renewable energy source (Hakeem et al. 2016). Bioenergy, classified as a renewable energy source, stands as a prospective alternative to conventional fossil fuels. Furthermore, the conversion of bioenergy from waste materials supports the progression of sustainable waste management practices, aligning with the principles of a circular economy and the achievement of carbon neutrality (Ewees et al. 2024; Chen et al. 2021). While renewable energy sources suffer from issues of irregular availability, fluctuation, and unpredictability, the progress in energy storage technologies has notably offered successful resolutions to these problems, ultimately enhancing energy security (Hassan et al. 2022; Malka et al. 2022).

Gasification plays a pivotal role in biomass utilization, providing substantial flexibility in utilizing a variety of feedstock materials and producing diverse output products (Ahrenfeldt et al. 2013). The biomass gasification process involves transforming solid or liquid organic compounds into both a gas or vapor phase and a solid phase. The resulting gas phase, commonly referred to as syngas, possesses significant heating potential and can serve for power generation or the production of Zhang et al. 2024; Qian et al. 2013). Biomass gasification is regarded as a means to expand the utilization of biomass for energy generation, enabling the broader adoption of biomass as an energy source (Wu et al. 2014). Numerous valuable products can be obtained through the gasification of biomass, such as syngas, heat, power, biofuels, fertilizer, and biochar. Syngas can undergo further processing, mainly through the Fischer–Tropsch method, to produce substances like dimethyl ether, methanol, and various chemical feedstocks. Typically, biomass feedstocks are categorized into four primary groups: herbaceous biomass, marine biomass, woody biomass, and manure. The design of the gasifier is typically tailored to yield a specific product, with the choice and optimization of the feedstock material being a critical parameter (McKendry 2002). Gasifiers can be categorized into four main types: moving beds (including downdraft and updraft designs), circulating beds, and fluidized bed gasifiers. These types are distinguished based on how the biomass and gas interact within the gasifier (Vo Thanh et al. 2020; Basu 2010; Ciferno and Marano 2002).

1.2 Related works

The adoption of ML models (Xie et al. 2013; Sadaghat et al. 2024), including ANNs (Jain et al. 1996; Yao 1999; Goh 1995; Eberhart and Shi 1998) and SVM (Awad and Khanna 2015; Rodriguez-Galiano et al. 2015; Zhang and Chapter 2020; Wang 2005), in gasification research is experiencing notable traction (Ascher et al. 2022a; Wang et al. 2021). ML methodologies serve as indispensable instruments for fine-tuning diverse thermochemical processes within bioenergy, notably gasification (Wang et al. 2022; Tang et al. 2021). By leveraging ML models, the efficiency and sustainability of gasification processes can be substantially heightened, simultaneously addressing a spectrum of environmental, economic, and social considerations. Numerous studies have underscored the efficacy of ML models in accurately predicting and optimizing various facets of gasification processes. The innovative application of ML techniques in gasification research has yielded significant advancements across various domains. George et al. (George et al. 2018) utilized an ANN model to achieve remarkable accuracy in predicting syngas production from a lab-scale bubbling fluidized bed gasifier. Ascher et al. (Ascher et al. 2022b) further advanced this frontier by developing a sophisticated ANN model that attained an impressive R2 value of 0.9310, notably predicting gasification technology performance. This breakthrough bears immense potential for revolutionizing fields like energy production and waste management. Shenbagaraj et al. (Shenbagaraj et al. 2021) introduced the ANN models’ capabilities, demonstrating their proficiency in forecasting a broader spectrum of gasification outputs, including syngas composition and yield, with remarkable precision (Han et al. 2008). Additionally, the application of SVM algorithms has shown promise, particularly in estimating optimal oxygen-steam ratios in biomass and coal gasification processes, as demonstrated by the work of Bahadar et al. (Bahadar et al. 2022). Their SVM model exhibited strong alignment with actual data, showcasing its potential to optimize gasification processes effectively. Moreover, Bahadar et al.'s (Tang et al. 2021) investigation into generating hydrogen-rich syngas in co-gasification processes involving coal and biomass highlights the pivotal role of ML in enhancing hydrogen-rich syngas production across diverse gasification scenarios. Further contributions to the field include the work of Baruah et al. (2017), who employed an ANN model to predict gas yields with exceptional precision from a downdraft gasifier. Similarly, Serrano and Castello (2020) leveraged ANN modeling to accurately predict tar production during the gasification of woody biomass. Meanwhile, Ceylan et al. (2021) delved into the application of several ML algorithms to forecast critical factors in gasification processes. Their findings revealed that the Random Forest model outperformed others in predicting product gas generation and gas heating value, underscoring the significant potential of ML in advancing gasification procedure.

As demonstrated in the literature review, numerous researchers have undertaken the task of predicting gasification processes, employing various models for this purpose. However, it is noteworthy that, to date, the LSSVR model has not been utilized for gasification process prediction.

1.3 Objective

The objective of this article is to fill a notable gap in the existing literature by introducing the implementation of the LSSVR model for predicting gasification processes, specifically focusing on CH4 and C2Hn. This research endeavors to contribute novel insights by employing a machine learning approach, specifically LSSVR-based models, to forecast syngas generation in biomass gasification. The study innovatively integrates two optimization techniques, namely the DMO and the IGWO, aimed at augmenting model performance. The selection of these algorithms stems from their demonstrated efficacy in optimizing complex processes and their potential to enhance predictive accuracy in gasification modeling. To assess the efficacy of these optimization techniques and conduct a comparative analysis, the study employs five statistical metrics, prioritizing precision in prediction outcomes. Accurate forecasting of CH4 and C2Hn enables optimization of gasification conditions to maximize syngas production while minimizing unwanted byproducts, thus enhancing overall process efficiency. Additionally, understanding the concentrations of these components allows for the estimation of the energy content of syngas, aiding in assessing the economic viability of gasification operations. Moreover, monitoring CH4 and C2Hn levels is crucial for mitigating environmental concerns, as methane is a potent greenhouse gas, and higher hydrocarbons can contribute to air pollution.

1.4 Research organization

In the initial segment of this study, the introduction is subdivided into four key subsections: background, related work, objectives, and research organization. Moving forward, the second section delves into explanations pertaining to the dataset, the explanation of the algorithms for optimization as well as the model, and the definition of the performance evaluation metrics. In the third section, the results of the study, comprising hyperparameters' outcomes, tables, and figures based on the metrics, are presented. Additionally, section four, titled "Discussion," is divided into three subsections: one for explanations of the advantages and disadvantages of algorithms, the second for comparison between the results of this study and previous studies, and the third for addressing the limitations of the study. In section five, titled "Summary and Conclusion," a succinct summary of the study alongside its significant conclusions are provided. Finally, section six serves as the conclusive segment, containing the references cited throughout the article.

2 Methodological details

2.1 Dataset overview

This research focuses primarily on predicting the elemental compositions of CH4 and C2Hn. To accomplish this, the researchers utilize the LSSVR machine learning regression method, along with the integration of the Dwarf Mongoose Optimization Algorithm and the Improved Grey Wolf Optimizer in the training process. The outcomes of the gasification process are contingent upon the gasifier's design, biomass characteristics, key operational factors such as gasifying agent, air-to-fuel ratio, temperature, pressure, and the nature of bed materials, including the presence or absence of catalytically active substances (Molino et al. 2016).

The process of collecting data involved several stages, which encompassed aligning parameters with the dataset's structure, defining particle size as the smallest dimension, and excluding data related to feedstock type and shape. Notably, volatile matter content (VM) and fixed carbon (Fc) were considered dependent variables that have associations with other compositional parameters. The decision to exclude feedstock with lower heating value (LHV), oxygen content (O), and nitrogen content (N) was based on their strong correlations with other input parameters. The steam-to-biomass ratio (SB) was identified as a factor affecting gasification efficiency, while the Equivalence Ratio (ER) impacted the trade-off between syngas production and combustion (Antonopoulos et al. 2012). Residence time (Rt) emerged as a crucial factor determining gasification efficiency, with values ranging from 16.3 min to 403 min in the study.

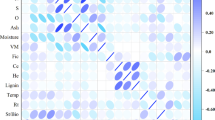

Table 1 and Fig. 1 offer statistical insights and correlation into the input variables as well as the two target variables (CH4 and C2Hn) collected and documented within the database.

2.2 Introduction to machine learning models and optimizers

2.2.1 Least square support vector regression (LS-SVR)

Vapnik (Vapnik 1995) developed the Support Vector Machine (SVM), a powerful supervised learning method used for function approximation, nonlinear classification, and density estimation in nonlinear classification. When it comes to Support Vector Machine for Regression (SVR), its objective is to discover a linear regression function within a higher-dimensional space in a manner that minimizes the function's slope and ensures minimal deviation from the training data (Ayubi Rad and Ayubirad 2017). In this scenario, the provided training data consists of \(\left\{{x}_{i},{y}_{i}\right\}i=\text{1,2},\dots ,l\). The definition of the regression function is as follows:

Typically, in order to take into account the nonlinear relationship between the input and output variables, the SVR algorithm projects the input data into a higher-dimensional space. The mapping function that converts the input \(x\) from the input space to the feature space is represented by the \(\psi \). Consequently, the following formulation may be used to create the linear regression function inside the feature space:

To determine \(f(x)\) such that the difference between the function's output and the true target value for all training dataset points is reduced to a value less than \(\varepsilon \), the method of Epsilon SVR (ε-SVR) is applied. In this case, the challenge of identifying the regression function is represented by the convex optimization problem outlined by the following constraints:

Recognizing that achieving convex optimization and finding an \(f\) function with ε precision may not always be attainable, the introduction of δ and \({\delta }_{i}^{*}\) as slack variables are employed. Thus, the following is a new structure that may be used for the convex optimization problem:

here, the \(c\), with \(c\ge 0\), serves as the trade-off parameter that balances the smoothness of the function \(f\) against the tolerance level for deviations exceeding ε.

The Least Squares Support Vector Machine (LS-SVM) is an improved variation of the SVM algorithm designed for tasks involving classification and regression analysis. LS-SVM deals with the computational challenges of SVM by transforming quadratic optimization problems into a set of linear equations, simplifying the solving process (Okkan and Serbes 2012; Pham et al. 2019). More precisely, By tackling the following optimization problems, LS-SVM simplifies the problem:

The parameter \(\Omega \) serves as a regulatory factor, and it symbolizes how LSSVR achieves a trade-off between minimizing training errors and enhancing the function's smoothness. This balance is achieved by using a Lagrangian function that has the following structure:

where, \({\alpha }_{i}\) represents the Lagrangian multiplier. To solve the optimization problem, both the primal and dual variables need to be equated to zero.

where, \(Q\) and \(e\) are replaced within the Lagrangian function to create a linear system in the following manner:

where:

\(1={\left[1+1,\dots , 1\right]}^{S}\).

The matrix \(\lambda \) can be constructed using the kernel approach, as demonstrated by Eq. (12), leading to the reformulation of the solution for Eq. (11) as expressed in Eq. (13).

The RBF (\({\nu }^{2}\)) can serve as a kernel function representing an infinite-dimensional function space. Additionally, with the values of d and \(\alpha \) that have been derived, the optimal regression function can be formulated in the following:

\({\nu }^{2}\) represents the square of the kernel bandwidth.

In previous studies, several ideal values for \(\Omega \) and \({\nu }^{2}\) have been suggested. For instance, 1/K (where \(K\) is the number of input patterns) and 1 for \(\Omega \) and \({\nu }^{2}\) are proposed by Hsu et al. (Hsu et al. 2003). Aiyer et al. (Aiyer et al. 2014) introduced a trial-and-error method, which can be quite time-intensive, especially when estimating \(\Omega \) and \({\nu }^{2}\), as these parameters can take on any positive real values. Therefore, optimization techniques can be employed to fine-tune these parameters for LSSVR.

2.2.2 Dwarf mongoose optimization (DMO)

The population-based, stochastic metaheuristic Dwarf Mongoose Optimization (DMO) method draws its inspiration from the dwarf mongoose's reported social and foraging behaviors, as noted by Helogale (Agushaka et al. 2022).

DMO starts the problem-solving process by selecting the first set of possible solutions from the mongoose colony. It commences with the generation of an initial candidate solution population. These initial solutions are created randomly, adhering to the specified upper and lower limits as per the requirements of the specific problem under consideration.

d represents the number of dimensions in the underlying problem.

\(n\) indicates the size of the population.

Equation (18) computes the positional attribute of individual elements in a population, which is represented by \({x}_{i,j}\):

\(Va{r}_{Size}\) pertains to the problem's dimensions and the associated value ranges.

where, \(Va{r}_{Min}\) and \(Va{r}_{Max}\) represent the minimum and maximum limits, respectively.

The function \(unifrnd\) functions as a random number generator, creating numbers distributed uniformly.

The DMO algorithm adheres to the conventional metaheuristic strategy, which comprises two clear-cut phases: exploitation and exploration. The initial phase involves an exhaustive and meticulous examination of the specified region by each mongoose, known as the intensification process. On the other hand, the second method entails a less systematic exploration of fresh resources like food supplies or resting spots, referred to as diversification. The operation of the DMO algorithm is characterized by two discernible phases, both guided by three essential social components: the alpha group, scout group, and babysitters. These components have a pivotal role in orchestrating the actions of the solution population, ensuring the algorithm effectively explores and exploits the search space.

-

Alpha Group

The selection of the alpha female (α) to lead the unit of family is determined using Eq. (19).

\(n\) depicts the existing count of mongooses forming the alpha group.

\(peep\) denotes the term for an auditory cue produced by an alpha or dominant female mongoose.

\(bs\) indicates the count of individuals with the mongoose group responsible for nurturing and overseeing young offspring.

Equation (20) calculates a positive correlation observed between the sleeping mound and the availability of abundant nutritional elements:

\(\varphi \) determines a value that follows a uniform distribution within the interval of \([-\text{1,1}]\).

Each time the algorithm runs, the size and quality of the sleeping mound are evaluated, as shown by mathematical Eq. (21):

When an inactive accumulation that was previously not in use is identified, a statistical metric is computed using Eq. (22):

-

Scout Group

Following the fulfillment of the requirements to be a member of a babysitter exchange program, the next step of the procedure is scouting. This stage involves evaluating the location of a potential resting place in relation to the availability of a certain food supply. Taking into account that mongooses do not typically reuse their sleeping places, the scouting team's task is to find a new mound to aid in the further progress of their explorations. The behavior of the mongoose exhibits a unique pattern that involves scouting and foraging within the parameters of the DMO algorithm. This behavior is grounded on the idea that expanding the distance covered while foraging increases the probability of coming across a new resting place. This mechanism is mathematically illustrated through the utilization of Eqs. (23–25):

\(\overrightarrow{M}\) depicts the driving force that propels the mongoose's motion toward a recently established resting mound. \(rand\) stands for a random value uniformly distributed in the interval \([-\text{1,1}]\).

-

Babysitters Group

The group responsible for watching for the small offspring stays watchful over them while the scouting and foraging team searches for a suitable location for food and rest. As some group members choose to defer their foraging or scouting tasks until they meet the requirements for the exchange program, the number of qualified applicants for the babysitter exchange decreases. The DMO algorithm's pseudo-code is provided by Algorithm 2.

2.2.3 Improved grey wolf optimization algorithm (IGWO)

-

Overview of Grey Wolf Optimization Algorithm

The social structure and hunting behaviors of grey wolves inspire the Grey Wolf Optimizer (GWO) algorithm (Wang et al. 2022). It involves three prominent leader wolves, labeled as \(\alpha , \beta , and \delta \), which act as top solutions, guiding a group of ω wolves in search of the global solution. The wolf hunting process consists of three main stages: encircling, hunting, and engaging with the prey.

-

Encircling:

The mathematical representation of how grey wolves encircle their prey is depicted in Eqs. (26) and (27):

\({X}_{P}\) depicts the location of the target.

t indicates the present iteration.

\(C\) and \(A\) determine the vectors of coefficients determined using \(\text{Eqs}\). (28) and (29):

The vector’s components "\(a\)" demonstrate a linear reduction from 2 to 0 as the iterations progress, in accordance with the instructions provided in Eq. (30):

-

Hunting:

The mathematical depiction of wolves' hunting behavior is based on the assumption that \(\alpha , \beta , and \delta \) have superior knowledge about the prey's location. As a result, the ω wolves are required to follow their movements. Equation (31) describes and clarifies this hunting behavior.

\({C}_{1},{C}_{2},and{C}_{3}\) are computed using the calculations outlined in Eq. (32):

\({X}_{\alpha }\), \({X}_{\alpha }\), and \({X}_{\delta }\) denote the positions of the three leading solutions at iteration \(t\).

The values for \({D}_{\alpha }\), \({D}_{\beta }\), and \({D}_{\delta }\) are precisely determined by the equation mentioned earlier.

The hunting process in this algorithm ends when the prey stops moving, a transition controlled by "a" changing from 2 to 0 throughout the course of the iterations, progressively decreasing over time to balance exploration and exploitation. As per guidance (Emary et al. 2017), half of the iterations focus on exploration, while the remaining half shift into the exploitation phase. During exploitation, wolves adjust their positions to random locations between their current positions and the prey's location.

-

Improved Grey Wolf Optimization Algorithm (IGWO)

In GWO, \(\alpha , \beta , and \delta \) guide ω wolves to promising areas in the search space, but this can lead to being trapped in local optima and reduced population diversity, causing premature convergence. To address these issues, an improved variant, the IGWO, has been introduced. IGWO introduces new search strategies and updates steps with a dashed line border. It operates in three phases: initialization, movement, selection, and updating.

-

Initializing phase:

This step involves utilizing Eq. (34) to randomly place all \(N\) wolves in the search space within the preset range \([{l}_{i},{u}_{j}]\):

In each algorithm iteration, the position of the \({i}_{th}\) wolf is represented by a vector \({X}_{i}\left(t\right)=\{{x}_{i1},{x}_{i2},\dots ,{x}_{iD}\)} in the search space. These wolves are organized into a matrix called \(Pop\), with \(D\) columns and \(N\) rows, where \(N\) is the population size, and \(D\) is the problem's dimension. The function \(f({X}_{i} (t))\) is used to calculate the fitness value of \({X}_{i} (t)\).

-

Movement phase:

Grey wolves not only engage in group hunting but also exhibit individual hunting behavior (MacNulty et al. 2007). This inspired the enhancement of GWO with the Dimension Learning-Based Hunting (DLH) strategy. In DLH, wolves learn from their neighbors and become potential candidates for new positions \({X}_{i}\left(t\right)\). This introduces two distinct candidates, with the canonical GWO and DLH strategies contributing to their selection.

-

The canonical GWO search strategy:

\(\alpha \), \(\beta \), and \(\delta \) are the top three wolves in the population in GWO. Using Eqs. (30–32) the coefficients \(a\), \(A\), and \(C\) are computed linearly. Using Eqs. (33–34), prey encircling is calculated from the locations of \({X}_{\alpha }\), \({X}_{\beta }\), and \({X}_{\delta }\). Equation (35) is used to determine the initial candidate for a wolf's new position, which is represented as \({X}_{i-GWO}(t+1)\). On the other hand, to tackle the convergence problems of GWO, a new search approach called Dimension Learning-Based Hunting (DLH) is presented. The new position of a wolf in DLH, \({X}_{i-GWO}(t+1)\), is calculated for every dimension utilizing Eq. (38), in which the wolf gains knowledge from its neighbors and a randomly chosen wolf from \(Pop\). Furthermore, DLH produces an additional candidate for the new position, designated as \({X}_{i-DLH}\left(t+1\right)\). This is achieved by computing a radius, \({R}_{i}(t)\), which depends on the Euclidean distance between the current position of \({X}_{i}\left(t\right)\) and the candidate's position \({X}_{i-GWO} (t+1)\), as described in Eq. (36).

The neighbors of \({X}_{i}\left(t\right)\), represented as \({N}_{i}(t)\), are determined using Eq. (21) with respect to the radius \({R}_{i}(t)\). \({D}_{i}\) is the Euclidean distance between \({X}_{i}\left(t\right)\) and \({X}_{j}\left(t\right)\).

Once the neighborhood around \({X}_{i}\left(t\right)\) is established, a learning process from multiple neighbors is performed utilizing Eq. (37). The \({d}_{th}\) dimension of \({X}_{i-DLH,d}(t+1)\) is determined in this equation by adding the \({d}_{th}\) dimensions of a randomly selected neighbor, \({X}_{n,d}(t)\), from \({N}_{i}(t)\), and a randomly selected wolf, \({X}_{r,d}(t)\), from the population, Pop.

In the selection and updating phase, the initial choice of the superior candidate is made by evaluating the fitness values of two candidates, \({X}_{i-GWO} (t+1)\) and \({X}_{i-DLH} \left(t+1\right),\) through Eq. (38):

In conclusion, the search is continued until the predefined number of iterations \((Maxiter)\) is reached after this procedure is completed for each individual. The iteration counter \((iter)\) is then increased by one.

2.3 Assessment indices

Commonly, a comprehensive evaluation of prediction model performance involves employing a set of model selection criteria that includes multiple goodness-of-fit and error indices. This approach helps mitigate the limitations of individual criteria, which on their own may lead to incorrect decisions (Dodo et al. 2022). It is ordinary to employ the following metrics in assessing the performance of a model.

-

Coefficient of Determination (R2):

The Coefficient of Determination, commonly represented as \({\text{R}}^{2}\), is a statistical measure employed in regression analysis to quantify the degree to which a model elucidates the variance or variability observed in the dependent variable. This metric operates within a range spanning from 0 to 1, where higher values signify an improved alignment of the model with the underlying data. Specifically, an \({\text{R}}^{2}\) value of 0 indicates that the model does not account for any variance, while an \({\text{R}}^{2}\) value of 1 signifies a perfect congruence between the model and the data. It is indicated by the following formula:

-

Error evaluation metrics (MAE, RMSE):

MAE (Mean Absolute Error) and RMSE (Root Mean Square Error) are statistical metrics that provide a means to quantify the error between actual and predicted values, yet they differ in their sensitivity to the magnitude of errors. RMSE places more emphasis on larger errors, rendering it suitable when one seeks to penalize significant deviations substantially. Conversely, MAE treats all errors with equal weight and is particularly robust in the presence of outliers in the dataset. The selection between RMSE and MAE should be contingent upon the specific objectives and characteristics of the predictive modeling problem at hand. These measures are formally expressed in Eqs. (40) and (41) as elaborated below:

-

Mean Relative Absolute Error (MRAE):

MRAE, which stands for Mean Relative Absolute Error, is a statistical measure employed to evaluate the precision of predictive models or the degree of error in forecasting. The average relative absolute error between the expected and actual numbers is quantified. MRAE is typically expressed as a percentage and serves as a reflection of the average relative discrepancy in the model's predictions. It offers valuable insights into how closely the model's forecasts align with the actual data while taking into account the relative scale of the errors. A lower MRAE signifies superior accuracy, whereas a higher MRAE indicates a less accurate model. The formula for computing MRAE is as stated below:

-

RMSE-observations standard deviation ratio (RSR):

The RSR (RMSE-observations standard deviation ratio) is a statistical metric for evaluating predictive models, especially in regression analysis. It combines RMSE and the standard deviation of observed data to gauge a model's accuracy while considering data variability. RSR normalizes RMSE by dividing it by the standard deviation, offering a context-aware assessment. Lower RSR values indicate a better model fit to data, while higher values indicate a poorer fit. Equation (43) indicates RSR:

Within each equation: \(n\): number of samples, \({v}_{i}:\) represents the specific predicted value. \(\overline{v }:\) denotes the average of the predicted values, \({w}_{i}:\) signifies the experimentally measured value, \(\overline{w }:\) stands for the mean of the experimentally measured values.

3 Results

3.1 Hyperparameters’ results

In contrast to parameters, hyperparameters are predefined specifications not directly derived from the dataset. Maximizing model performance heavily depends on fine-tuning hyperparameters, a process demanding extensive experimentation and the application of advanced optimization approaches. Presented in Table 2 are the detailed hyperparameter configurations corresponding to the LSDM and LSIG models, highlighting the critical parameters C and Gamma across both output variables, CH4 and C2Hn. For instance, in the LSDM model, the C hyperparameter maintains a constant value of 1 for both CH4 and C2Hn, underscoring a uniform setting for model optimization. In contrast, the LSIG model exhibits variations in C, with values of 179.926 for CH4 and 240.393 for C2Hn, indicating nuanced adjustments tailored to each output variable.

3.2 Results of evaluation metrics

This article demonstrates the use of enhanced Machine Learning models, specifically Least Square Support Vector Regression (LSSVR), to predict the composition of \({CH}_{4}\) (methane) and \({C}_{2}{H}_{n}\) (hydrocarbons) in the context of biomass gasification. The research incorporates two optimization algorithms, DMO (Dwarf Mongoose Optimization) and the Improved Grey Wolf Optimization Algorithm (IGWO), to create hybrid models known as LSDM and LSIG. The main objectives of this research are to identify the most robust optimization algorithm and to evaluate the predictive performance of both individual and combined hybrid models. This comparative evaluation employs five assessment metrics: \({\text{R}}^{2}\), RMSE, MAE, MRAE, and RSR. The following sections provide a comprehensive analysis of model performance, with dedicated segments for the prediction of \({CH}_{4}\) and \({C}_{2}{H}_{n}\).

-

\({\mathbf{C}\mathbf{H}}_{4}\) syngas forecasting

According to Table 3, it is evident that for the LSSVR, LSIG, and LSDM models in the three phases of training, validation, and testing, the R2 value is highest for the LSDM model, approaching 1. In comparison, the LSIG and LSSVR models take the second and third positions, respectively. For example, in the LSDM model, the R2 values for the mentioned phases are 0.988, 0.974, and 0.978.

RMSE and MSE represent error values based on their definitions. When these values are lower, it indicates better model performance. A thorough examination of Table 3 reveals that the LSDM model demonstrates the best performance, while the LSIG and LSSVR models rank second and third, respectively. In the Train phase of the LSDM model, the lowest RMSE is 0.367, and the lowest MSE is 0.171.

A lower MRAE indicates a greater precision, whereas a higher MRAE shows a less precise model. Therefore, it is concluded that the LSDM, with a value of 0.149 in the Training sector, has the best accuracy.

RSR is a metric used to assess the precision of a predictive model in comparison to real values within a scale from zero to one. When a parameter gradually approaches zero, it signifies a strong level of agreement. In this situation, it is evident that LSDM demonstrates the best performance with a value of 0.111in the Training phase, the LSIG model follows in second place with a value of 0.975, and the LSSVR model ranks last with a value of 0.207.

A scattered representation of the average variety between measured and predicted values is shown in Fig. 2. Detailed dispersion points are derived from RMSE and \({\text{R}}^{2}\), which primarily controls data distribution so that the lower amount reaches a higher density in RMSE. A greater \({\text{R}}^{2}\) value suggests that the line fits the data more closely. General comparisons show that the value of \({R}^{2} \)is relatively high for all three models. According to the scatter data, the LSDM model with lower RMSE and higher \({R}^{2}\) values in all three phases (train, validation, test) of the prediction model appear most appropriate.

Figure 3. presents a comparison between the measured and predicted values for CH4, confirming that a smaller difference between these two values indicates superior model performance. As shown in the diagram, the maximum difference values for the LSSVR, LSIG, and LSDM models occurred during the Test phase.

Figure 4 shows two graphs comparing the number of samples versus the error percentage of CH4 and the error percentage versus its frequency. The error rate differs with the number of samples, as shown in the LSSVR charts. The error of this model ranges from -40 to 100 percent, with a high frequency between -20 and 0. For the LSIG and LSDM hybrid models, the error percentage compared to the number of samples is superior compared to the basic model. In LSIG, the error range is -80 to 100 percent, and the highest frequency is -10 to 0, while for the LSDM model, the error range is -20 to 60, and the highest frequency is -5 and 0.

-

\({\mathbf{C}}_{2}{\mathbf{H}}_{\mathbf{n}} \)syngas forecasting

After reviewing the data presented in Table 4, it is clear that the LSDM model demonstrates superior performance compared to the LSSVR and LSIG models in terms of R2 values for all three phases: training, validation, and testing. Specifically, the LSDM model achieved R2 values of 0.985, 0.979, and 0.973, respectively.

RMSE and MSE serve as metrics for measuring errors, and lower values signify improved model performance. A detailed analysis of Table 5 underscores that the LSDM model exhibits superior performance, with the LSSVR and LSIG models occupying the second and third positions, respectively. In the training phase of the LSDM model, the most favorable RMSE is 0.184, and the most favorable MSE is 0.046.

A lower MRAE signifies greater precision, while a higher MRAE reflects a less precise model. Consequently, it can be deduced that the LSDM, with a value of 0.274 in the validation sector, exhibits the highest level of accuracy.

RSR, a metric employed for evaluating the precision of a predictive model concerning real values on a scale ranging from zero to one, indicates a strong level of agreement as a parameter approaches zero. In this context, it is clear that the LSDM exhibits the most favorable performance, boasting a value of 0.129 in the training phase.

In Fig. 5, the comparisons indicate that the LSDM model, which boasts lower RMSE and higher R2 values in all three phases of the prediction model (training, validation, testing) with values of 0.985, 0.977, and 0.973 (for R2), and 0.184, 0.284, and 0.337 (for RMSE), respectively, is the most appropriate option for forecasting \({\text{C}}_{2}{\text{H}}_{\text{n}}\).

Figure 6. provides a visual comparison of the measured and predicted values for \({\text{C}}_{2}{\text{H}}_{\text{n}}\), affirming that a reduced disparity between these values reflects a higher level of model performance. As depicted in the graph, the largest differences for the LSSVR, LSIG, and LSDM models were observed in the training phase.

Figure 7. displays two graphs that illustrate the relationship between the number of samples and the error percentage for \({\text{C}}_{2}{\text{H}}_{\text{n}}\), as well as the error percentage versus its frequency. The error rate varies with the number of samples, particularly evident in the LSSVR charts, where the model's error ranges from -80 to 100 percent, with a notable frequency between -20 and 10. Conversely, for the LSIG and LSDM hybrid models, the error percentage in relation to the number of samples outperforms the basic model. In LSIG, the error range is -70 to 100 percent, with the highest frequency occurring around -20 to 15, while for the LSDM model, the error range is -30 to 70, and the highest frequency is approximately -10 to 5.

4 Discussion

4.1 Advantages and disadvantages of model and algorithms

4.1.1 Advantages

The LSSVR is a versatile method that excels in both linear and nonlinear regression tasks. It harnesses the kernel trick, enabling it to operate effectively in high-dimensional spaces while handling small datasets efficiently. One of its key strengths lies in its robustness against overfitting, thanks to its regularization parameter. Inspired by the social behavior of dwarf mongooses, the DMO algorithm offers a novel approach to optimization. It is adept at solving single-objective and multi-objective optimization problems with simplicity in implementation and understanding.

DMO effectively explores search spaces and avoids getting trapped in local optima, making it a valuable tool across various domains. The IGWO algorithm builds upon the foundation of the original Grey Wolf Optimization (GWO) by enhancing its performance. It exhibits faster convergence rates and employs a more efficient search mechanism by incorporating multiple wolf packs. IGWO proves effective for tackling both single-objective and multi-objective optimization problems, providing robust solutions to complex optimization challenges.

4.1.2 Disadvantages

The LSSVR excels in handling both linear and nonlinear regression tasks but can be memory-intensive for large datasets due to the storage requirements for support vectors. Additionally, it is computationally expensive during the training phase and demands careful selection of hyperparameters such as the regularization parameter and kernel function for optimal performance.

The DMO algorithm offers a unique perspective inspired by social behavior but may experience slower convergence rates compared to more established optimization algorithms. It is sensitive to parameter tuning, including factors like population size and mutation rate, which can affect its efficiency. DMO also lacks extensive theoretical groundwork and empirical validation compared to more widely studied algorithms in the optimization field.

The IGWO algorithm shares common traits with many metaheuristic algorithms. It requires meticulous parameter fine-tuning for achieving optimal performance across different problem domains. Efficiency may vary based on problem characteristics and parameter settings, highlighting the need for careful implementation. Despite its effectiveness, IGWO's theoretical analysis remains relatively limited compared to traditional optimization algorithms with more established theoretical frameworks.

4.1.3 Specifications

The LSSVR technique is rooted in support vector machines (SVM) principles, aiming to minimize the empirical risk while incorporating a regularization term to prevent overfitting. Its performance is commonly assessed utilizing metrics like coefficient of determination (R-squared)or mean squared error (MSE), highlighting its efficacy in regression tasks across various domains.

The DMO algorithm draws inspiration from the cooperative foraging behavior observed in dwarf mongooses. It leverages social interactions and leader–follower dynamics to guide the optimization process, striking a balance between exploration (seeking new solutions) and exploitation (refining existing solutions). This approach ensures robust performance in both single-objective and multi-objective optimization scenarios, emphasizing simplicity and effectiveness in implementation. The IGWO algorithm enhances the original GWO by simulating the hierarchical and hunting behaviors of grey wolf packs more effectively. It represents solutions using vectors and utilizes a dynamic search mechanism to explore the solution space comprehensively. IGWO's convergence criteria typically involve a maximum number of iterations or a threshold for improvement, ensuring efficient convergence and optimal solutions for complex optimization problems.

4.2 Comparison between the present study and existing publications

A few ranges of studies focused on the prediction of the gasification process such as CH4 and C2Hn. For example, Ascher et al. in two different studies investigate predicting these values via implementing machine learning models. The first study was conducted by Ascher et al. (Ascher et al. 2022b) based on the ANN model, achieved R2 values of 0.52 and 0.799 for C2Hn and CH4, along with RMSE values of 0.118 and 0.18 for C2Hn and CH4. The second study by Ascher et al. (Ascher et al. 2024) based on the GBR model, Achieved R2 values of 0.9 and 0.87 for CH4 and C2Hn, along with RMSE values of 0.4 and 0.85 for C2Hn and CH4. In this study, the LSSVR model was employed as the foundational framework, undergoing enhancement through the integration of DMO and IGWO algorithms. Upon evaluating the results obtained, it was found that the integration of DMO into the LSSVR model demonstrated exceptional applicability, yielding an R2 value of 0.988 and 0.985, as well as an RMSE of 0.184 and 0.367 for C2Hn and CH4, surpassing the performance of the other two models in this study.

4.3 Limitations of the study

While the study demonstrates promising results in predicting syngas production from biomass gasification, there are several limitations that should be acknowledged. Firstly, the models were evaluated based on specific input variables and performance criteria, which may not capture the full complexity of biomass gasification processes. Additionally, the study focuses primarily on CH₄ and C₂Hₙ compositions, overlooking other important components of syngas. Future research could explore the prediction of a broader range of syngas components and consider the impact of varying process conditions and feedstock characteristics. Future works could investigate alternative optimization methods or hybrid approaches to further improve predictive performance. Additionally, experimental validation of the models using real-world biomass gasification data would strengthen their applicability and reliability. Overall, addressing these limitations and conducting further research could provide a more comprehensive understanding of biomass gasification processes and enhance the development of predictive models for sustainable energy production.

5 Summary and conclusion

Mounting global energy demand and environmental concerns related to fossil fuels, including natural gas, coal, and oil, are driving the widespread adoption of renewable energy sources like solar, wind, hydropower, and biomass-biofuels to address carbon emissions and establish sustainable energy systems. Biomass, derived from agricultural leftovers and forests, offers a promising and widely available resource for the production of materials and bioenergy, supporting waste management and renewable energy sustainability, with the aid of advanced energy storage technologies to enhance energy security. Gasification is a crucial process in biomass utilization, offering flexibility in converting various feedstock materials into syngas with significant heating potential for power generation and biofuel production, thereby expanding biomass usage as a source of energy. This article presents the LSSVR single model and two hybrid models, LSIG (LSSVR optimized with IGWO) and LSDM (LSSVR optimized with DMO), which employ input variables to predict elemental compositions (\({\text{CH}}_{4}\) and \({\text{C}}_{2}{\text{H}}_{\text{n}}\)). These models were assessed using established performance criteria. The results demonstrate that both introduced optimizers, particularly LSDM, significantly enhance the accuracy of \({\text{CH}}_{4}\) and \({\text{C}}_{2}{\text{H}}_{\text{n}}\) predictions when combined with LSSVR. Notably, LSDM achieved outstanding performance with the highest R2 values of 0.988 for CH_4 and 0.985 for \({\text{C}}_{2}{\text{H}}_{\text{n}}\), indicating its exceptional accuracy. Furthermore, LSDM's minimum RMSE values of 0.367 for \({\text{CH}}_{4}\) and 0.184 for \({\text{C}}_{2}{\text{H}}_{\text{n}}\) underscore its precision. Additionally, a comparative analysis revealed that LSDM was more accurate in predicting \({\text{CH}}_{4}\) and \({\text{C}}_{2}{\text{H}}_{\text{n}}\). In summary, this research highlights the potential of optimization methods for forecasting syngas production and provides valuable insights into biomass gasification.

Data availability

No datasets were generated or analysed during the current study.

Abbreviations

- CH4 :

-

Methane

- C2Hn :

-

Ethylene

- syngas:

-

Synthesis gas

- H2 :

-

Hydrogen

- CO:

-

Carbon monoxide

- LSSVR:

-

Least Square Support Vector Regression

- SVM:

-

Support Vector Machine

- DMO:

-

Dwarf Mongoose Optimization

- IGWO:

-

Improved Grey Wolf Optimization Algorithm

- RE:

-

Renewable energy

- ML:

-

Machine learning

- AI:

-

Artificial intelligence

- VM:

-

Volatile matter content

- Fc:

-

Fixed carbon

- LHV:

-

Lower heating value

- O:

-

Oxygen content

- NO:

-

Nitrogen content

- SB:

-

Steam-to-biomass ratio

- ER:

-

Equivalence Ratio

- Rt:

-

Residence time

- R2 :

-

Coefficient of Determination

- RMSE:

-

Root Mean Square Error

- MAE:

-

Mean Absolute Error

- MRAE:

-

Mean Relative Absolute Error

- RSR:

-

RMSE-observations standard deviation ratio

- ψ:

-

Mapping function

- ε:

-

Precision

- δ and \({\delta }_{i}^{*}\) :

-

Slack variables

- c:

-

Trade-off parameter

- \(\Omega \) :

-

Regulatory factor

- \({\alpha }_{i}\) :

-

Lagrangian multiplier

- Q and e:

-

Lagrangian function

- λ:

-

Kernel trick

- \({\nu }^{2}\) :

-

Square of the kernel bandwidth

- \(K\) :

-

Number of input patterns

- d:

-

Number of dimensions in the underlying problem

- n:

-

Size of the population.

- \(Va{r}_{Size}\) :

-

Problem's dimensions

- \(Va{r}_{Min}\) :

-

Minimum limit of limits

- \(Va{r}_{Max}\) :

-

Maximum limit of limits

- \(peep\) :

-

Acoustic signa

- \(bs\) :

-

Count of individuals

- \(\varphi \) :

-

Uniform distribution

- \(\overrightarrow{M}\) :

-

Driving force

- \(rand\) :

-

Random value

References

Agushaka JO, Ezugwu AE, Abualigah L (2022) Dwarf mongoose optimization algorithm. Comput Methods Appl Mech Eng 391:114570

Ahrenfeldt J, Thomsen TP, Henriksen U, Clausen LR (2013) Biomass gasification cogeneration–A review of state of the art technology and near future perspectives. Appl Therm Eng 50:1407–1417

Aiyer BG, Kim D, Karingattikkal N, Samui P, Rao PR (2014) Prediction of compressive strength of self-compacting concrete using least square support vector machine and relevance vector machine. KSCE J Civ Eng 18:1753–1758

Antonopoulos I-S, Karagiannidis A, Gkouletsos A, Perkoulidis G (2012) Modelling of a downdraft gasifier fed by agricultural residues. Waste Manag 32:710–718

Ascher S, Sloan W, Watson I, You S (2022b) A comprehensive artificial neural network model for gasification process prediction. Appl Energy 320:119289

Ascher S, Wang X, Watson I, Sloan W, You S (2022) Interpretable machine learning to model biomass and waste gasification. Bioresour Technol 364:128062. https://doi.org/10.1016/j.biortech.2022.128062

Ascher S, Watson I, You S (2022a) Machine learning methods for modelling the gasification and pyrolysis of biomass and waste. Renew Sustain Energy Rev 155:111902

Awad M, Khanna R. Support Vector Regression BT - Efficient Learning Machines: Theories, Concepts, and Applications for Engineers and System Designers. In: Awad M, Khanna R, editors., Berkeley, CA: Apress; 2015, p. 67–80. https://doi.org/10.1007/978-1-4302-5990-9_4.

Ayubi Rad M, Ayubirad MS (2017) Comparison of artificial neural network and coupled simulated annealing based least square support vector regression models for prediction of compressive strength of high-performance concrete. Sci Iran 24:487–496

Bahadar A, Kanthasamy R, Sait HH, Zwawi M, Algarni M, Ayodele BV et al (2022) Elucidating the effect of process parameters on the production of hydrogen-rich syngas by biomass and coal Co-gasification techniques: a multi-criteria modeling approach. Chemosphere 287:132052

Baruah D, Baruah DC, Hazarika MK (2017) Artificial neural network based modeling of biomass gasification in fixed bed downdraft gasifiers. Biomass Bioenerg 98:264–271

Basu P. Biomass gasification and pyrolysis: practical design and theory. Academic press; 2010.

Ceylan Z, Ceylan S (2021) Application of machine learning algorithms to predict the performance of coal gasification process. Elsevier, Appl. Artif. Intell. Process Syst. Eng., pp 165–186

Chen W-H, Farooq W, Shahbaz M, Naqvi SR, Ali I, Al-Ansari T et al (2021) Current status of biohydrogen production from lignocellulosic biomass, technical challenges and commercial potential through pyrolysis process. Energy 226:120433

Ciferno JP, Marano JJ. Benchmarking biomass gasification technologies for fuels, chemicals and hydrogen production. US Dep Energy Natl Energy Technol Lab 2002.

Dodo UA, Ashigwuike EC, Emechebe JN, Abba SI (2022) Prediction of energy content of biomass based on hybrid machine learning ensemble algorithm. Energy Nexus 8:100157

Eberhart RC, Shi Y. Evolving artificial neural networks. Proc. Int. Conf. neural networks brain, vol. 1, PRC; 1998, p. PL5--PLI3.

Emary E, Zawbaa HM, Grosan C (2017) Experienced gray wolf optimization through reinforcement learning and neural networks. IEEE Trans Neural Networks Learn Syst 29:681–694

Ewees AA, Vo Thanh H, Al-qaness MAA, Abd Elaziz M, Samak AH. Smart predictive viscosity mixing of CO2–N2 using optimized dendritic neural networks to implicate for carbon capture utilization and storage. J Environ Chem Eng 2024;12:112210. https://doi.org/10.1016/j.jece.2024.112210.

George J, Arun P, Muraleedharan C (2018) Assessment of producer gas composition in air gasification of biomass using artificial neural network model. Int J Hydrogen Energy 43:9558–9568

Goh ATC (1995) Back-propagation neural networks for modeling complex systems. Artif Intell Eng 9:143–151

Hakeem KR, Jawaid M, Rashid U. Biomass and bioenergy. Springer; 2016.

Han P, Li DZ, Wang Z (2008) A study on the biomass gasification process model based on least squares SVM. Energy Conserv Technol 1:3–7

Hassan MA, Bailek N, Bouchouicha K, Ibrahim A, Jamil B, Kuriqi A et al (2022) Evaluation of energy extraction of PV systems affected by environmental factors under real outdoor conditions. Theor Appl Climatol 150:715–729

Hsu C-W, Chang C-C, Lin C-J. A practical guide to support vector classification 2003.

Jain AK, Mao J, Mohiuddin KM (1996) Artificial neural networks: a tutorial. Computer (long Beach Calif) 29:31–44

MacNulty DR, Mech LD, Smith DW (2007) A proposed ethogram of large-carnivore predatory behavior, exemplified by the wolf. J Mammal 88:595–605

Malka L, Daci A, Kuriqi A, Bartocci P, Rrapaj E (2022) Energy storage benefits assessment using multiple-choice criteria: the case of Drini River Cascade. Albania Energies 15:4032

McKendry P (2002) Energy production from biomass (part 1): overview of biomass. Bioresour Technol 83:37–46

Molino A, Chianese S, Musmarra D (2016) Biomass gasification technology: The state of the art overview. J Energy Chem 25:10–25

Narnaware SL, Panwar NL (2022) Biomass gasification for climate change mitigation and policy framework in India: a review. Bioresour Technol Reports 17:100892

Okkan U, Serbes ZA (2012) Rainfall–runoff modeling using least squares support vector machines. Environmetrics 23:549–564

Pham QB, Yang T-C, Kuo C-M, Tseng H-W, Yu P-S (2019) Combing random forest and least square support vector regression for improving extreme rainfall downscaling. Water 11:451

Qian K, Kumar A, Patil K, Bellmer D, Wang D, Yuan W et al (2013) Effects of biomass feedstocks and gasification conditions on the physiochemical properties of char. Energies 6:3972–3986

Raud M, Kikas T, Sippula O, Shurpali NJ (2019) Potentials and challenges in lignocellulosic biofuel production technology. Renew Sustain Energy Rev 111:44–56

Rodriguez-Galiano V, Sanchez-Castillo M, Chica-Olmo M, Chica-Rivas M (2015) Machine learning predictive models for mineral prospectivity: an evaluation of neural networks, random forest, regression trees and support vector machines. Ore Geol Rev 71:804–818

Sadaghat B, Ebrahimi SA, Souri O, Yahyavi Niar M, Akbarzadeh MR (2024) Evaluating strength properties of Eco-friendly Seashell-Containing Concrete: Comparative analysis of hybrid and ensemble boosting methods based on environmental effects of seashell usage. Eng Appl Artif Intell 133:108388. https://doi.org/10.1016/j.engappai.2024.108388

Serrano García D, Castelló D. Tar prediction in bubbling fluidized bed gasification through artificial neural networks 2020.

Shenbagaraj S, Sharma PK, Sharma AK, Raghav G, Kota KB, Ashokkumar V (2021) Gasification of food waste in supercritical water: an innovative synthesis gas composition prediction model based on Artificial Neural Networks. Int J Hydrogen Energy 46:12739–12757

Tang Q, Chen Y, Yang H, Liu M, Xiao H, Wang S et al (2021) Machine learning prediction of pyrolytic gas yield and compositions with feature reduction methods: Effects of pyrolysis conditions and biomass characteristics. Bioresour Technol 339:125581

Vapnik VN. The nature of statistical learning theory. Theory 1995.

Velvizhi G, Balakumar K, Shetti NP, Ahmad E, Pant KK, Aminabhavi TM (2022) Integrated biorefinery processes for conversion of lignocellulosic biomass to value added materials: Paving a path towards circular economy. Bioresour Technol 343:126151

Vo Thanh H, Dai Z, Du Z, Yin H, Yan B, Soltanian MR et al (2024) Artificial intelligence-based prediction of hydrogen adsorption in various kerogen types: Implications for underground hydrogen storage and cleaner production. Int J Hydrogen Energy 57:1000–1009. https://doi.org/10.1016/j.ijhydene.2024.01.115

Vo Thanh H, Sugai Y, Sasaki K (2020) Application of artificial neural network for predicting the performance of CO2 enhanced oil recovery and storage in residual oil zones. Sci Rep 10:18204. https://doi.org/10.1038/s41598-020-73931-2

Vo Thanh H, Zhang H, Dai Z, Zhang T, Tangparitkul S, Min B (2024) Data-driven machine learning models for the prediction of hydrogen solubility in aqueous systems of varying salinity: Implications for underground hydrogen storage. Int J Hydrogen Energy 55:1422–1433. https://doi.org/10.1016/j.ijhydene.2023.12.131

Wang S, Wen Y, Shi Z, Zaini IN, Jönsson PG, Yang W (2022) Novel carbon-negative methane production via integrating anaerobic digestion and pyrolysis of organic fraction of municipal solid waste. Energy Convers Manag 252:115042

Wang K, Zhang J, Shang C, Huang D (2021) Operation optimization of Shell coal gasification process based on convolutional neural network models. Appl Energy 292:116847

Wang L. Support vector machines: theory and applications. vol. 177. Springer Science & Business Media; 2005.

Wu Y, Yang W, Blasiak W (2014) Energy and exergy analysis of high temperature agent gasification of biomass. Energies 7:2107–2122

Xie G, Wang S, Zhao Y, Lai KK (2013) Hybrid approaches based on LSSVR model for container throughput forecasting: a comparative study. Appl Soft Comput 13:2232–2241

Yao X (1999) Evolving Artificial Neural Networks. Proc IEEE 87:1423–1447

Zhang F, O’Donnell LJ. Chapter 7 - Support vector regression. In: Mechelli A, Vieira SBT-ML, editors., Academic Press; 2020, p. 123–40. https://doi.org/10.1016/B978-0-12-815739-8.00007-9.

Zhang H, Wang P, Rahimi M, Vo Thanh H, Wang Y, Dai Z et al (2024) Catalyzing net-zero carbon strategies: Enhancing CO2 flux Prediction from underground coal fires using optimized machine learning models. J Clean Prod 441:141043. https://doi.org/10.1016/j.jclepro.2024.141043

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Contributions

The author contributed to the study's conception and design. Data collection. simulation and analysis were performed by " Wei Cong ".

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix A

Appendix A

The code of LSSVR-DMO and LSSVR-IGWO:

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cong, W. Machine learning and LSSVR model optimization for gasification process prediction. Multiscale and Multidiscip. Model. Exp. and Des. (2024). https://doi.org/10.1007/s41939-024-00552-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s41939-024-00552-x