Abstract

In structural engineering applications, such as the construction of foundations, earth dams, rock fill, roads and railroads, and slope stability assessment, the undrained shear strength (USS) is an essential parameter. Several empirical and theoretical methods have recently emerged for estimating the USS from soil characteristics and field tests. Most of these methods rest on correlation-based assumptions and therefore yield imprecise results. Traditional testing is also inefficient in terms of time and cost, so alternative approaches for predicting test outcomes are needed. Advances in artificial intelligence (AI) have produced new models and algorithms that allow researchers to use prediction as an alternative to experimental methods. The present study applied AI to assess the USS of highly sensitive soils. A multi-layer perceptron (MLP), a machine learning model that learns from empirical samples, was trained using four predictor variables: overburden weight (OBW), liquid limit (LL), sleeve friction (SF), and plastic limit (PL). To improve the output, three optimizers were employed: the dynamic control cuckoo search (DCCS), smell agent optimization (SAO), and the bonobo optimizer (BO). By combining the MLP with these three optimizers, this research advances USS evaluation for sensitive soils and offers an AI approach that promises improved accuracy and efficiency compared with traditional methods in geotechnical engineering.

1 Introduction

The undrained shear strength (USS) is commonly used as a measure of a soil's ability to resist shear stresses. In calculations for many geotechnical problems, such as settlement induced by external loads, the USS is widely accepted as a crucial parameter (Behnam Sedaghat et al. 2023; Akbarzadeh et al. 2023; Sharma and Singh 2018). Accurate evaluation of the USS is essential for a reliable assessment of substructure stability. The USS of a soil can be determined through either in situ or laboratory tests.

Geosciences, integral to geotechnical engineering, involve the study of Earth materials and their interactions. Essential components include soil mechanics, the cone penetration test (CPT) for in-situ analysis, USS as a key parameter for sub-structure stability, and laboratory examinations for precise property determination. Recent advancements include the application of machine learning (ML), such as support vector machines (SVM) (Wang 2005; Kordjazi et al. 2014) and artificial neural networks (ANN) (Mojumder 2020; Sulewska 2017; Shahin et al. 2001; Majdi and Rezaei 2013), to predict geotechnical properties, reflecting an increasingly interdisciplinary approach in the field. The CPT is a geotechnical method in which a cone-tipped probe is pushed into the ground while the resistance to penetration is measured. CPTs conducted on site are efficient for identifying soil types, evaluating properties such as shear strength and USS, and various other geotechnical applications. Compared with traditional methods such as boring and experimental testing, the in-situ CPT is a reliable, efficient, and cost-effective technique for evaluating soil characteristics. It provides detailed information about soil stiffness and strength that can aid in determining the soil’s ultimate bearing capacity (UBC), making it a useful tool for obtaining consistent soil profiles. In recent years, several empirical methods have been developed to estimate the USS from parameters obtained from in-situ cone penetration testing (Lunne 1982; Senneset 1982; Khajeh et al. 2021). However, many of these methods involve assumptions and judgment when selecting the correlation factors that relate CPT profiles to USS, such as the cone tip factor \({N}_{{\text{kt}}}\), which can affect the calculated shear strength.
Because of this, the estimated USS may be imprecise for different site conditions. A reliable stability assessment of sub-structures requires an accurate USS. The USS of the soil can be determined by laboratory or field testing. The accuracy of laboratory tests, such as the undrained triaxial compression test and the direct shear test, depends on the quality of the collected data (Prasad et al. 2007; Buò et al. 2019).

Additionally, this precision is significantly influenced by the friction coefficient of penetrometers at diverse depths (Tran et al. 2017; Tran and Sołowski 2019). The USS tests conducted in laboratories are found to be both laborious and time-consuming. The quality of laboratory conditions and imprecisions encountered in USS values obtained from soil samples also contribute to the challenges mentioned above (Zumrawi 2012). Experimental correlation techniques are frequently employed when measurements related to soil’s USS are absent or unreliable. The utilization of these methodologies is predicated upon the distinct attributes intrinsic to the soil (Hansbo 1957; Chandler 1988; Larsson 1980; Mbarak et al. 2020).

In recent years, the field of geotechnical engineering has experienced a notable proliferation in the application of ML techniques. The extensive utilization of diverse analytical models for the prediction of geotechnical properties of soils has been comprehensively documented in the existing literature (Onyelowe et al. 2021, 2022, 2023a, 2023b, 2023c; Gnananandarao et al. 2020). Specifically, the friction capacity of driven piles (Samui 2008), the soil’s shear strength (Ly and Pham 2020), the bearing resistance of shallow foundations (Kuo et al. 2009), and the peak friction angle of soils (Padmini et al. 2008) have been estimated using advanced methodologies such as SVM, ANN, and regression tree analysis (Kanungo et al. 2014). In contrast to single ML models like the least square support vector machine (LSSVM), hybrid ML models have been used in geotechnical research to improve the prediction power of models. Using cuckoo search optimisation (CSO) to forecast soil shear strength is one example of this (Tien Bui et al. 2019). The shuffling frog leaping algorithm (SFLA), salp swarm algorithm (SSA), wind-driven optimisation (WDO), and elephant herding optimisation (EHO) are some of the hybrid models that have been used to estimate soil shear strength (Moayedi et al. 2020).

A number of civil engineering applications, such as the investigation of frozen soil liquefaction and foundation settling using piles, have seen positive outcomes from the application of ANN (Tavana Amlashi et al. 2023; Das and Basudhar 2006; Ikizler et al. 2010; Nejad and Jaksa 2017; Yuan et al. 2022). The utilization of ANNs for the estimation of USS from CPT is proposed as a means of mitigating the limitations of traditional methods (Samui and Kurup 2012).

ANN methodologies imitate the learning process of the human brain: they learn from prior examples by means of various mathematical algorithms. In one study, an ANN was employed to develop a model with improved accuracy in estimating USS from CPT data. The data consisted of empirical investigations and soil tests conducted at different sites within Louisiana. Numerous ANN models were trained on diverse soil properties, such as sleeve friction (SF) and cone tip resistance. The results demonstrated that the ANN approach outperformed the conventional method in estimating the USS of soil, which underlines the promise of these methodologies in soil analysis (Abu-Farsakh and Mojumder 2020). In another study, Bayesian optimization was used to tune Random Forest (RF) and extreme gradient boosting (XGB) algorithms in order to uncover the relationships between soil properties and the USS (Zhang et al. 2021). In previous studies, notably Jamhiri et al. (2021, 2022), TA-based models demonstrated commendable results; however, there remains a need to explore and implement innovative models grounded in ML and metaheuristic algorithms.

This paper uniquely contributes by unveiling the potential of employing the MLP model, integrating novel optimization algorithms, with a focus on predicting USS from CPT records. The study introduces a ML model designed to predict USS, leveraging an experimental dataset from reputable sources. Employing an MLP, the study constructs robust composite models integrating bonobo optimizer (BO), dynamic control cuckoo search (DCCS), and smell agent optimization (SAO) techniques for USS prediction in soils. This amalgamation enhances predictive precision by optimizing model parameters, ensuring robust and efficient predictions for USS. The resulting composite models exhibit a heightened ability to handle complex relationships within the dataset, improving adaptability to diverse data patterns.

Moreover, the streamlined optimization process of metaheuristics contributes to efficient convergence, ultimately bolstering model efficiency and generalization. In essence, this integration offers a synergistic approach that significantly augments the overall performance and versatility of the MLP model in soil applications. Various performance metrics, including R2, RMSE, NRMSE, and n10_index, are thoughtfully examined to evaluate the suggested models’ correctness.

2 Materials and methodology

2.1 Data gathering

The present study uses four input variables obtained through experimental techniques, namely plastic limit (PL), liquid limit (LL), overburden weight (OBW), and SF, with the USS as the output parameter. The dataset was divided into a training set containing \(70\%\) of the data and a testing set containing \(30\%\). To predict the USS of soil from the CPT, CPT data with the corresponding bore log data were gathered from different sites in Louisiana (Mojumder 2020). Table 1 summarizes the statistics of the parameters used in model development, namely the maximum (Max), minimum (Min), mean (M), and standard deviation (St. Dev) of each variable. The maximum and minimum values of SF were 1.4 and 0.02, respectively. The corresponding (maximum, minimum) pairs for the other inputs were PL \(\left( {50,12} \right)\), LL \(\left( {133,24} \right)\), and OBW \(\left( {3.64,0.03} \right)\). The USS target ranged from a minimum of 100 to a maximum of 5670.
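The 70/30 partition described above can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not say whether the split was random or ordered, so the shuffling, the seed, the function name `split_train_test`, and the synthetic placeholder data (drawn only to match the reported variable ranges) are all hypothetical.

```python
import numpy as np

def split_train_test(X, y, train_fraction=0.7, seed=0):
    """Shuffle the samples, then split them into training and testing subsets."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))            # random sample order (assumed)
    n_train = int(round(train_fraction * len(X)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Synthetic placeholder data, NOT the study's dataset.
# Columns: PL, LL, OBW, SF, drawn uniformly within the reported min/max ranges.
rng = np.random.default_rng(1)
X_demo = rng.uniform([12, 24, 0.03, 0.02], [50, 133, 3.64, 1.4], size=(100, 4))
y_demo = rng.uniform(100, 5670, size=100)      # placeholder USS targets

X_train, X_test, y_train, y_test = split_train_test(X_demo, y_demo)
print(X_train.shape, X_test.shape)
```

Fixing the seed keeps the partition reproducible, which matters when several hybrid models are compared on the same training and testing samples.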

2.2 Multi-layer perceptron (MLP)

The MLP is a frequently employed neural network, typically trained using the backpropagation algorithm. It is designed to model processes and to learn from data through training and estimation. MLP neural networks are recognized as a valuable tool for modeling the complex, nonlinear processes that frequently occur in real-world scenarios, largely because of their adaptability and strong approximation capabilities (Moayedi and Hayati 2018). The architecture of an MLP consists of three kinds of interconnected layers: input, hidden, and output. The number of nodes in the input layer equals the number of predictor variables. A single hidden layer can represent a wide variety of functions through its hidden neurons. Too few neurons result in a network that performs poorly, whereas too many make training more complex and increase the tendency to overfit. The number of nodes in the output layer corresponds to the number of modeled variables.

In a function modeling problem with a single output, an MLP neural network is used to create a generalization of the nonlinear function \((h)\) in which \(X\in {R}^{D}\to Y\in {R}^{1}\). Here \(X\) and \(Y\) are the input and output parameters, respectively. The function \(h\) is expressed mathematically in Eq. (1):

M1 and M2 represent the weight matrices of the output and hidden layers, respectively. s1 and s2 are the bias vectors of the hidden and output layers, respectively. kb denotes the activation function.

The tan–sigmoid and log–sigmoid activation functions are frequently used in both the literature and practical applications. They are given in Eqs. (2, 3):

\(T\) denotes the input to the activation function.
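As a rough illustration of the single-hidden-layer mapping and the two sigmoid activations described above, the sketch below uses the conventional textbook forms. The forward-pass form `b_out + W_out @ activation(b_hidden + W_hidden @ X)` is assumed, not taken from Eq. (1), and the names `W_hidden`, `b_hidden`, `W_out`, and `b_out` are hypothetical stand-ins for the weight matrices and bias vectors defined in the text. The layer sizes and random weights are arbitrary.

```python
import numpy as np

def logsig(T):
    """Log-sigmoid: maps any real input to the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-T))

def tansig(T):
    """Tan-sigmoid: maps any real input to (-1, 1); numerically equal to tanh(T)."""
    return 2.0 / (1.0 + np.exp(-2.0 * T)) - 1.0

def mlp_forward(X, W_hidden, b_hidden, W_out, b_out, activation=tansig):
    """Assumed form: h(X) = b_out + W_out @ activation(b_hidden + W_hidden @ X)."""
    hidden = activation(b_hidden + W_hidden @ X)
    return b_out + W_out @ hidden

# Arbitrary illustrative sizes: 4 inputs (PL, LL, OBW, SF), 6 hidden neurons, 1 output.
rng = np.random.default_rng(0)
n_inputs, n_hidden = 4, 6
W_hidden = rng.normal(size=(n_hidden, n_inputs))
b_hidden = rng.normal(size=n_hidden)
W_out = rng.normal(size=(1, n_hidden))
b_out = rng.normal(size=1)

x = np.array([0.3, 0.5, 0.1, 0.7])             # one scaled input sample (made up)
y_hat = mlp_forward(x, W_hidden, b_hidden, W_out, b_out)
print(y_hat)
```

In a hybrid scheme, the entries of the weight matrices and bias vectors are the quantities a metaheuristic would adjust; here they are simply drawn at random.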

2.3 Dynamic control cuckoo search (DCCS)

Cuckoos are fascinating birds, not only for their melodious calls but also for their aggressive reproductive strategy (Yang and Deb 2009; Mareli and Twala 2018; Nguyen et al. 2016). Certain species lay their eggs in communal nests and may remove the eggs of other birds to increase the likelihood that their own eggs hatch. Some species are obligate brood parasites: they lay their eggs in the nests of other bird species, which increases their reproductive success. To simplify the description of the standard cuckoo search algorithm on which DCCS is based, three idealized rules are used:

1. The nests that hold excellent eggs are preserved to ensure their availability for future generations.

2. Every individual bird lays a singular egg that is subsequently placed into any nest at random.

3. The quantity of host nests that currently prevail remains steady, and the probability of a host bird detecting a cuckoo’s egg is denoted as qb, which is a value that falls within the range of 0 and 1.

The last rule can be approximated by replacing a fraction pa of the n host nests with new nests containing new and distinct solutions.

One way to express the local random walk is as Eq. (4):

\({x}_{j}^{r}\) and \({x}_{{\text{l}}}^{r}\) are two different solutions selected randomly by permutation. \(F(u)\) is the Heaviside function. \(\delta\) is a random number drawn from a uniform distribution. \(as\) is the step size. ⊗ denotes the entry-wise product of vectors.

To execute the global random walk, Lévy flights are used:

\(\alpha > 0\) is the scaling factor for step size, and

The initial solutions are generated as follows:

Here rand is a random number drawn from a uniform distribution between \(0\) and \(1\), and bU and bL are the upper and lower bounds of the jth nest, respectively.
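The initialization, local random walk, and Lévy-flight global walk described in this section can be sketched as below. This follows the generic cuckoo search scheme, not the specific dynamic-control variant, and several choices are assumptions: the function names, the use of Mantegna's algorithm to draw Lévy-distributed steps, the exponent `beta = 1.5`, and the demo values of `alpha`, `step`, and `q_b`.

```python
import math
import numpy as np

def init_nests(n_nests, b_lower, b_upper, rng):
    """Initial solutions: x = bL + rand * (bU - bL), with rand ~ U(0, 1)."""
    b_lower = np.asarray(b_lower, dtype=float)
    b_upper = np.asarray(b_upper, dtype=float)
    rand = rng.random((n_nests, b_lower.size))
    return b_lower + rand * (b_upper - b_lower)

def levy_step(shape, rng, beta=1.5):
    """Heavy-tailed step lengths via Mantegna's algorithm (an assumed choice)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, size=shape)
    v = rng.normal(0.0, 1.0, size=shape)
    return u / np.abs(v) ** (1 / beta)

def global_walk(nests, alpha, rng):
    """Global random walk: x_new = x + alpha * Levy step, with alpha > 0."""
    return nests + alpha * levy_step(nests.shape, rng)

def local_walk(nests, step, q_b, rng):
    """Local random walk: x_new = x + step * H(q_b - delta) * (x_j - x_l)."""
    n = nests.shape[0]
    x_j = nests[rng.permutation(n)]            # randomly permuted solutions
    x_l = nests[rng.permutation(n)]
    delta = rng.random(nests.shape)            # uniform random numbers in [0, 1)
    heaviside = (q_b - delta > 0).astype(float)
    return nests + step * heaviside * (x_j - x_l)

rng = np.random.default_rng(0)
start = init_nests(10, [-1.0, -1.0], [1.0, 1.0], rng)
moved = local_walk(global_walk(start, 0.01, rng), 0.1, 0.25, rng)
moved = np.clip(moved, -1.0, 1.0)              # keep candidates inside the bounds
print(moved.shape)
```

Setting `q_b` to zero switches the local walk off entirely, which is a convenient sanity check that the Heaviside term is wired correctly.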

Figure 1 shows the flowchart of DCCS combined with MLP.

2.4 Bonobo optimizer (BO)

The BO is a meta-heuristic algorithm inspired by the reproductive strategies and social behavior of bonobos. Das et al. (2019) proposed the BO as a population-based algorithm. Bonobos divide into smaller groups, a behavior termed fission, to search for food, and rejoin to rest during the night. This behavior is incorporated into the BO algorithm to improve the efficiency of the search process. The algorithm mimics the natural strategies of bonobos until it reaches the optimal solution. Bonobos use four distinct mating strategies to produce offspring: promiscuous, restrictive, consortship, and extra-group mating (Das et al. 2019). The strategy applied depends on the prevailing phase, either the positive phase (PP) or the negative phase (NP). The PP corresponds to favorable conditions for the bonobo community: sufficient food availability, genetic variety among the bonobos, successful mating, and protection against threats from neighboring communities. Conversely, the NP denotes unfavorable conditions for the community.

2.4.1 Promiscuous and restricted mating methods

The phase probability parameter, denoted as mm, governs which mating strategy is applied. The value of mm is initially set to 0.5 and is updated over successive iterations. When a random number \(q\) is equal to or lower than mm, a new bonobo is generated according to Eq. (8):

Here bo denotes bonobo. \(n\_{{\text{bo}}}_{{\text{j}}}\) and \({a}_{j}^{{\text{bo}}}\) are the jth variables of the new offspring and of the alpha bonobo, respectively. The index j runs from 1 to e, where e is the number of variables. q1 is a random number between \(0\) and \(1\). \({{\text{bo}}}_{j}^{i}\) and \({{\text{bo}}}_{j}^{m}\) are the jth variables of the ith and mth bonobos, respectively. \({t}^{a}\) and \({t}^{t}\) are the sharing coefficients for \({a}^{{\text{bo}}}\) and the mth bonobo, respectively.

When the ith bonobo has a better fitness than the mth bonobo, promiscuous mating occurs and the flag is set to 1. Otherwise, restrictive mating occurs and the flag is set to −1.
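The offspring update for promiscuous and restrictive mating can be sketched as below. The update form is an assumption based on the commonly cited bonobo optimizer formulation and is not confirmed by this text: `new = bo_i + q1 * t_a * (alpha_bo - bo_i) + (1 - q1) * t_t * flag * (bo_i - bo_m)`. The function names, the per-variable draw of `q1`, the coefficient values, and the minimization convention are likewise illustrative.

```python
import numpy as np

def mating_flag(fitness_i, fitness_m, minimize=True):
    """Return +1 (promiscuous) if the ith bonobo is better than the mth, else -1 (restrictive)."""
    i_is_better = fitness_i < fitness_m if minimize else fitness_i > fitness_m
    return 1.0 if i_is_better else -1.0

def offspring(bo_i, bo_m, alpha_bo, flag, t_a, t_t, rng):
    """Assumed update: pull towards the alpha bonobo and move relative to the mth bonobo."""
    q1 = rng.random(bo_i.shape)                # random numbers in [0, 1), one per variable
    return (bo_i
            + q1 * t_a * (alpha_bo - bo_i)
            + (1.0 - q1) * t_t * flag * (bo_i - bo_m))

rng = np.random.default_rng(0)
bo_i = np.array([1.0, 2.0])                    # ith bonobo (made-up values)
bo_m = np.array([0.0, 0.0])                    # mth bonobo (made-up values)
alpha_bo = np.array([3.0, 3.0])                # best bonobo so far (made-up values)

flag = mating_flag(fitness_i=1.0, fitness_m=2.0)   # lower fitness is better here
child = offspring(bo_i, bo_m, alpha_bo, flag, t_a=1.3, t_t=1.3, rng=rng)
print(flag, child)
```

The sign of the flag decides whether the offspring moves away from or towards the mth bonobo, which is the only difference between the two mating types in this sketch.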

2.4.2 Extra-group and consortship mating methods

These mating types occur when the value of mm is lower than that of \(q\). In that case, if \({q}_{2}\) is equal to or lower than the probability of extra-group mating (\({m}_{{\text{xgm}}}\)), the solution is updated through extra-group mating.

The initial value of the me was set to 0.5, followed by gradual improvements aligned with the evolution’s inherent characteristics. This process enhances the search algorithm to produce the most promising results. The present study utilizes the notation \({\text{Var}}_{{{\text{ - min}}_{j} }}\) and \({\text{Var}}_{{{\text{ - max}}_{j} }}\) to represent the lower and upper limits of the jth variable correspondingly.

Otherwise, when \(q_{2}\) exceeds \(m_{{{\text{xgm}}}}\), the consortship mating strategy is applied and a new offspring is produced in accordance with Eq. (10):

In the present set of equations, the variables \(q_{1} ,q_{2} ,q_{3} ,q_{4} ,q_{5}\) are defined as random quantities that assume values within the range of zero and one. The schematic representation of the proposed algorithm coupled with MLP is depicted in Fig. 2.

2.5 Smell agent optimization (SAO)

The sense of smell has played a critical role in the survival of living organisms since the beginning of life. Most living organisms detect noxious substances in their surroundings through their olfactory receptors (Buck 2004; Sakalli et al. 2020; Axel 2005). The SAO is built on the human sense of smell (Axel 2005; Chapman and Cowling 1990; Abdechiri et al. 2013). Its overall structure consists of three modes derived from the steps of smell perception. First, the agent detects the smell molecules, evaluates their position, and decides whether to pursue the source of the scent or disregard it. Second, the agent follows the smell molecules to locate the source of the odor, relying on the decision made in the previous mode. Finally, the third mode prevents the agent from becoming trapped in local minima, thereby preserving its ability to keep following the trail.

2.5.1 Sniffing mode

The initial stage of the process involves randomly identifying a location for the commencement of diffusion of odor molecules towards the agent, given that olfactory molecules possess a tendency to propagate in the direction of their target. The smell molecules can be initialized through the utilization of the mathematical formula designated as Eq. (11):

\(N\) is the overall number of scent molecules. \(D\) stands for the total number of the decision variables.

The position vector in Eq. (11) can be produced by applying Eq. (12), which allows the agent to choose its own optimal location inside the search space.

The terms “\({\text{ub}}\)” and “\({\text{lb}}\)” denote the upper and lower bounds of the decision variables, respectively. r0 is a random number between zero and one.

Every individual scent molecule is allocated a fundamental velocity with which it diffuses from the smell source through the application of Eq. (13).

Each smell molecule represents a candidate solution. The candidate solutions are determined by the position vector \(x_{i}^{\left( t \right)} \in R^{N}\), as given in Eq. (11), and the molecular velocity \({\upupsilon }_{i}^{\left( t \right)} \in { }R^{N}\), as given in Eq. (13). The velocity of the molecules is increased according to Eq. (14):

The expression \(\Delta t = 1\) denotes that the agent advances along the path of the optimization process in a parallel manner. The spatial coordinates of smell molecules are determined using Eq. (15):

Each smell molecule possesses unique diffusion velocities, which facilitate its positional updates and evaporation during the process of scent analysis. The calculation of the revised velocity of scent molecules is accomplished through the utilization of Eq. (16):

The update variable for velocity, denoted by \({\upupsilon }\), is obtained by applying Eq. (17):

The term \(k\) denotes the smell fixation factor that serves to normalize the impact of both mass and temperature on the scent molecules’ kinetic energy.

The mass and temperature of the scent molecules are denoted by \(m\) and \(T\), respectively.

Equation (15) is used to assess the scent molecule’s fitness at the updated sites.

Consequently, the process of sniffing has been accomplished, thereby enabling the determination of the precise location of the agent denoted as \(x_{{{\text{agent}}}}^{t}\).
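The sniffing mode can be sketched as below, under stated assumptions. The update forms are the commonly cited ones and are not confirmed by this text: positions and velocities are initialized uniformly within bounds, the velocity increment is `r1 * sqrt(3 * k * T / m)`, and the position moves by the new velocity times `dt = 1`. The function names, the velocity bounds, the values of `k`, `T`, and `m`, and the sphere fitness function are all illustrative placeholders.

```python
import numpy as np

def uniform_init(N, lb, ub, rng):
    """Draw N vectors as lb + r0 * (ub - lb), with r0 ~ U(0, 1)."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    r0 = rng.random((N, lb.size))
    return lb + r0 * (ub - lb)

def sniff_step(x, v, k, T, m, rng, dt=1.0):
    """Assumed sniffing update: increase the velocity, then move the molecules."""
    r1 = rng.random(x.shape)
    v_new = v + r1 * np.sqrt(3.0 * k * T / m)  # velocity increment (assumed form)
    x_new = x + v_new * dt                     # position update with dt = 1
    return x_new, v_new

def pick_agent_and_worst(x, fitness):
    """Return the best (agent) and worst molecule positions for a minimization problem."""
    scores = np.array([fitness(row) for row in x])
    return x[np.argmin(scores)], x[np.argmax(scores)]

def sphere(z):
    """Placeholder fitness function, not the study's objective."""
    return float(np.sum(z ** 2))

rng = np.random.default_rng(0)
molecules = uniform_init(20, [-5.0] * 3, [5.0] * 3, rng)    # N = 20 molecules, D = 3
velocities = uniform_init(20, [-1.0] * 3, [1.0] * 3, rng)   # assumed velocity bounds
new_x, new_v = sniff_step(molecules, velocities, k=1.0, T=0.8, m=0.2, rng=rng)
x_agent, x_worst = pick_agent_and_worst(new_x, sphere)
print(x_agent, x_worst)
```

Recording both the agent and the worst molecule at this stage is what the trailing mode relies on afterwards.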

2.5.2 Trailing mode

In the second operational mode, the simulated behaviors of an agent are directed toward the determination of the source of a particular smell. During the process of searching for a smell source, the agent can detect a new location that has a higher concentration of smell molecules through smell perception. The agent seizes the opportunity to explore the novel location by utilizing Eq. (18):

r2 and r3 are random numbers between zero and one. r2 penalizes the influence of \({\text{olf}}\) on \(x_{{{\text{agent}}}}^{t}\) and r3 penalizes the influence of \({\text{olf}}\) on \(x_{{{\text{worst}}}}^{t}\).

The agent records \(x_{{{\text{agent}}}}^{t}\) and \(x_{{{\text{worst}}}}^{t}\) obtained in the sniffing mode. This information allows the algorithm to balance exploration and exploitation, as expressed in Eq. (18).

2.5.3 Random mode

The intensity of smell molecules may vary over time if the molecules are widely separated from one another. This variation can confuse the agent, causing it to lose the odor and making trailing extremely difficult. Because it cannot retain the trailing information, the agent is prone to becoming stuck in local minima. When this occurs, the agent enters the random mode, as shown by Eq. (19):

Step length is denoted by SL. r4 is a random number that stochastically penalizes the quantity of SL.
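The trailing and random modes can be sketched together as below. Both update forms are assumptions based on the commonly cited SAO formulation and are not confirmed by this text: trailing moves a molecule towards `x_agent` and away from `x_worst`, each term scaled by `olf` and a random number, and the random mode adds a random fraction of the step length `SL`. The function names and all numeric values are illustrative.

```python
import numpy as np

def trailing_step(x, x_agent, x_worst, olf, rng):
    """Assumed trailing update: attract towards the agent, repel from the worst position."""
    r2 = rng.random(x.shape)
    r3 = rng.random(x.shape)
    return x + r2 * olf * (x_agent - x) - r3 * olf * (x_worst - x)

def random_step(x, SL, rng):
    """Assumed random-mode update: move by a random fraction r4 of the step length SL."""
    r4 = rng.random(x.shape)
    return x + r4 * SL

rng = np.random.default_rng(0)
x = np.array([[1.0, 1.0], [2.0, -1.0]])        # two molecules (made-up values)
x_agent = np.array([0.0, 0.0])                 # best position from sniffing (made up)
x_worst = np.array([4.0, 4.0])                 # worst position from sniffing (made up)

trailed = trailing_step(x, x_agent, x_worst, olf=0.5, rng=rng)
jumped = random_step(x, SL=0.9, rng=rng)
print(trailed)
print(jumped)
```

A typical driver would keep the trailed position only if it improves the fitness and fall back to the random step otherwise; that selection logic is left out here because the text does not specify it.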

Figure 3 indicates the scheme of SAO coupled with MLP.

2.6 Methods for evaluating performance

A range of metrics was employed to appraise the hybrid models in predicting the USS. The coefficient of determination (R2), root mean square error (RMSE), normalized root mean square error (NRMSE), and n10_index are the performance measures examined in this study. By quantifying the difference between predicted and actual values, these metrics are frequently used in statistical analysis to assess the precision and accuracy of models. R2 indicates the extent of linear correlation between the actual and predicted values. The RMSE is the square root of the average squared difference between predicted and actual values. The NRMSE normalizes the RMSE by dividing it by the range of observed values, giving a dimensionless metric that is easier to compare across datasets with different scales. The n10_index is the fraction of predicted values that fall within ±10% of the corresponding actual values.

Equations (20–23) provide the values of these metrics as stated above.

The number of samples is denoted by \(n\), the experimental value by \(b_i\), the predicted value by \(d_i\), the mean of the experimental values by \(\overline{b}\), and the mean of the predicted values by \(\overline{d}\).
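The four metrics can be sketched as below, following the verbal definitions in this section. The exact forms are assumptions: R2 is taken as the squared linear correlation between measured values `b` and predicted values `d` (consistent with both means appearing in the notation), NRMSE divides RMSE by the range of the measured values, and the n10_index counts predictions whose ratio to the measured value lies between 0.9 and 1.1. The function names and the sample numbers are made up for illustration.

```python
import numpy as np

def r2_corr(b, d):
    """Squared linear correlation between measured (b) and predicted (d) values."""
    b, d = np.asarray(b, dtype=float), np.asarray(d, dtype=float)
    num = np.sum((b - b.mean()) * (d - d.mean())) ** 2
    den = np.sum((b - b.mean()) ** 2) * np.sum((d - d.mean()) ** 2)
    return float(num / den)

def rmse(b, d):
    """Square root of the mean squared difference between predictions and measurements."""
    b, d = np.asarray(b, dtype=float), np.asarray(d, dtype=float)
    return float(np.sqrt(np.mean((d - b) ** 2)))

def nrmse(b, d):
    """RMSE divided by the range of the measured values (assumed normalization)."""
    b = np.asarray(b, dtype=float)
    return rmse(b, d) / float(b.max() - b.min())

def n10_index(b, d):
    """Fraction of predictions within +/-10% of the measured value."""
    b, d = np.asarray(b, dtype=float), np.asarray(d, dtype=float)
    ratio = d / b
    return float(np.mean((ratio >= 0.9) & (ratio <= 1.1)))

measured = [100.0, 200.0, 300.0, 400.0]        # made-up USS values
predicted = [105.0, 190.0, 360.0, 400.0]       # made-up predictions

print(r2_corr(measured, predicted))
print(rmse(measured, predicted))
print(nrmse(measured, predicted))
print(n10_index(measured, predicted))          # 3 of 4 predictions fall inside the band
```

Higher R2 and n10_index values and lower RMSE and NRMSE values indicate a better model, so the four numbers should not be ranked in the same direction.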

3 Results and discussion

3.1 Hyperparameters and convergence curves

Hyperparameters are predefined settings in ML that significantly impact a model’s performance and optimization. This study focuses on only one crucial hyperparameter (Neuron), and its effects on the MLP model’s performance, detailed in Table 2. The research underscores the importance of carefully fine-tuning this hyperparameter for optimal results. This involves a deliberate and judicious selection and adjustment process to achieve precision and robust generalization while mitigating issues like overfitting and prolonged training.

This study examined how well nine models (three hybrid models in each of three layers) predicted the USS by tracking their convergence using RMSE as the selected metric. The results are shown in Fig. 4. Initial RMSE values ranged from just under 200 for MLSA3 to just above 650 for MLBO1. As convergence proceeded, the RMSE decreased markedly for all models. Over iterations 0 to 150, the MLDC3 model performed best, finishing its convergence with an RMSE of almost 50.

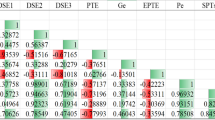

3.2 Comparing models’ performance

In the current work, nine different hybrid model types were created to forecast the USS utilizing MLP in conjunction with the DCCS, BO, and \(SAO\) algorithms. A training set and a test set, comprising \(70\%\) and \(30\%\) of the data, respectively, are separated out of the data in these hybrid models. Four statistical measures (R2, RMSE, NRMSE, and n10_index) were used to obtain a comprehensive assessment of the effectiveness of the deployed optimizers. The results are shown in Table 3. This section determines which model is better than the other by analyzing statistical metrics.

For the first-layer models, the R2 values show that MLDC1 achieves the best results, with \(0.9816\) and \(0.9610\) in the training and testing phases, respectively. MLDC1 also performs best on the other three metrics, RMSE, NRMSE, and n10_index, in both the training and testing phases.

In the second layer, MLDC2 attains the highest R2 values, 0.9906 and 0.9783 in the training and testing phases, respectively. With RMSE values of \(102.13\) and \(142.44\) in the training and testing phases, respectively, MLDC2 also has the best performance. In the third layer, MLDC3 shows the best results on all four criteria, indicating that it may be used to estimate the USS.

Comparing all nine models in the training phase, the maximum values of R2, RMSE, NRMSE, and n10_index are 0.9949, 257.79, 1.8414, and 0.8143, obtained by MLDC3, MLBO1, MLBO1, and MLDC3, respectively. In the testing phase, the maximum values of R2, RMSE, NRMSE, and n10_index are 0.9905, 244.99, 4.0833, and 0.8333, obtained by MLDC3, MLBO2, MLBO2, and MLDC3, respectively. Because the R2 values in the testing phase are lower than those in the training phase for every model, none of the nine models generalizes perfectly to unseen data. Overall, of the nine hybrid models, MLDC3 achieves the best scores on all four metrics and can therefore be applied in practical settings.

Figure 5 presents scatter plots of the measured against the predicted USS values. The plots are interpreted using two metrics, RMSE and R2. RMSE reflects dispersion: as its value decreases, the points cluster more densely. R2 reflects alignment: as its value increases, the training and testing points move closer to the centerline. The figure also includes the centerline \(Y = X\), a linear regression line, and two lines at \(Y = 0.9X\) and \(Y = 1.1X\), below and above the centerline, respectively. Points lying beyond the upper line are overestimates, and points lying beyond the lower line are underestimates.

In this investigation, the MLP model was combined with three different optimizers, applied throughout the training and testing stages, to create three hybrid models. The results are shown in Fig. 5. Given the consistent alignment and closeness of the data points to the centerline, the R2 of MLDC3 is more favorable than that of the other models. The accuracy in the training phase differed from that in the testing phase in every case, most noticeably for MLSA2. Overall, Fig. 5 shows that the MLDC3 hybrid model, which combines the MLP with the DCCS optimizer in the third layer, produced the best R2 and RMSE in both the training and testing stages, that is, the highest correlation and the smallest errors.

Figure 6 evaluates the agreement between the measured and predicted USS values for each of the three hybrid model categories. Each diagram is divided into two sections, one for training and one for testing. In the first layer, the hybrid model that combines the MLP with DCCS (MLDC1) shows the best agreement with the measured training and testing data. In the second and third layers, MLDC2 and MLDC3, respectively, show the best agreement in the training and testing sets. Conversely, the combination of MLP and BO in the third layer (MLBO3) displays the least favorable agreement.

Figure 7 shows the percentage errors between the measured and predicted USS values for the three hybrid model types across \(3\) layers, nine models in total. The largest errors in the training and testing sets occur for MLBO2, extending from beyond \(-60\%\) to \(150\%\). Conversely, in both the training and testing sets, the smallest errors are associated with MLDC3, where the majority of errors are concentrated in the range of \(-50\%\) to \(90\%\).
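The text does not state how the percentage error in Fig. 7 is defined. The sketch below assumes the common signed relative error, \(100 \times (\text{predicted} - \text{measured}) / \text{measured}\). The measured values are rows 7 to 10 of the Appendix test dataset; the predicted values and function names are hypothetical.

```python
def percent_errors(measured, predicted):
    """Signed relative error in percent (assumed definition, not taken from the paper)."""
    return [100.0 * (p - m) / m for m, p in zip(measured, predicted)]


def error_range(errors):
    """Smallest and largest percentage error, i.e. the span visible in an error plot."""
    return min(errors), max(errors)


measured = [1470, 2420, 1560, 1460]   # USS values from the Appendix
predicted = [1617, 2178, 1560, 1752]  # hypothetical model outputs

errors = percent_errors(measured, predicted)
low, high = error_range(errors)
print("Errors (%):", errors)
print("Range (%):", low, "to", high)
```

A positive value means the model overestimates the USS and a negative value means it underestimates it, so a range such as \(-50\%\) to \(90\%\) is asymmetric around zero.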

The distribution of errors in the predicted USS values is shown by the box plot in Fig. 8. For all \(3\) models in the first layer, the curves are sharply peaked during the training phase, which indicates lower dispersion; during the testing phase, however, the curves are flatter, indicating that the predictions have become less accurate and more dispersed. The models in this layer were therefore generally not trained adequately. In the second layer, MLDC2 had the best prediction performance in both the training and testing phases. In the third layer, nearly all errors of MLDC3 lie close to \(0\%\), making it the best performer among all \(9\) hybrid models.
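The contrast between a sharply peaked and a flat error distribution can be quantified with simple spread measures. The sketch below is an illustration only: it uses the interquartile range and the population standard deviation from Python's `statistics` module, and both error lists are hypothetical values, not results from the study.

```python
import statistics


def spread_summary(errors):
    """Median, interquartile range, and standard deviation of a list of errors."""
    q1, median, q3 = statistics.quantiles(errors, n=4, method="inclusive")
    return {
        "median": median,
        "iqr": q3 - q1,                      # height of the box in a box plot
        "stdev": statistics.pstdev(errors),  # overall dispersion
    }


train_errors = [-4.0, -2.0, 0.0, 1.0, 3.0]    # hypothetical percentage errors
test_errors = [-20.0, -8.0, 0.0, 10.0, 25.0]  # hypothetical percentage errors

print("Train:", spread_summary(train_errors))
print("Test:", spread_summary(test_errors))
```

A larger interquartile range or standard deviation in the testing phase corresponds to the flatter, more dispersed shape described above.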

4 Conclusion

To determine the soil’s USS, a multi-layer perceptron (MLP) model was employed in the current study. Although the traditional laboratory method yields effective results, it has several shortcomings: it is inefficient in terms of time and involves significant costs. Artificial intelligence (AI) was used as an alternative to overcome these constraints, and the resulting estimates of the USS showed a high degree of accuracy. The models used four input variables (OBW, PL, LL, and SF) with USS as the target parameter. Their accuracy was evaluated using five performance indicators, including \(R^2\), RMSE, NRMSE, and n10_index. Three meta-heuristic optimization techniques (BO, DCCS, and SAO) were used to improve the system’s performance. The following can be inferred from the results:

- Prediction models for USS estimation were constructed from the soil characteristics studied. Compared with the experimental data, the suggested models forecast the USS with a good degree of accuracy.

- For MLDC1, MLBO1, MLSA1, MLDC2, MLBO2, MLSA2, MLDC3, MLBO3, and MLSA3, the scatter of the predicted data in the testing phase, compared with the training phase, dropped by 2.1, 1.54, 2.26, 1.24, 0.94, 2.65, 0.42, 3, and 0.94%, respectively.

- According to the comparison of measured and predicted values, the suggested models may underestimate the USS by about 160 on average. In the training phase, MLBO1 had the largest RMSE (257.9), while in the testing phase, MLBO2 had the largest RMSE (249.99); MLDC3 had the lowest error (73.47).
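For reference, the performance indicators named above are commonly written as follows. This is a sketch under stated assumptions rather than a restatement of the study's formulas: \(m_i\) and \(p_i\) denote the measured and predicted USS of sample \(i\), \(\bar{m}\) is the mean measured value, \(n\) is the number of samples, and \(n_{10}\) is the number of samples whose ratio \(p_i / m_i\) lies between \(0.9\) and \(1.1\). NRMSE is sometimes normalized by the range of the measured values instead of the mean, so the normalization shown here is an assumption.

```latex
\begin{aligned}
\mathrm{RMSE} &= \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(m_i - p_i\right)^2}, &
R^2 &= 1 - \frac{\sum_{i=1}^{n}\left(m_i - p_i\right)^2}{\sum_{i=1}^{n}\left(m_i - \bar{m}\right)^2}, \\
\mathrm{NRMSE} &= \frac{\mathrm{RMSE}}{\bar{m}}, &
\mathrm{n10\_index} &= \frac{n_{10}}{n}.
\end{aligned}
```

Under these definitions, a better model has a lower RMSE and NRMSE and an \(R^2\) and n10_index closer to \(1\).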

References

Abdechiri M, Meybodi MR, Bahrami H (2013) Gases Brownian motion optimization: an algorithm for optimization (GBMO). Appl Soft Comput 13(5):2932–2946

Abu-Farsakh MY, Mojumder MAH (2020) Exploring artificial neural network to evaluate the undrained shear strength of soil from cone penetration test data. Transp Res Rec 2674(4):11–22

Akbarzadeh MR, Ghafourian H, Anvari A, Pourhanasa R, Nehdi ML (2023) Estimating compressive strength of concrete using neural electromagnetic field optimization. Materials 16(11):4200

Axel R (2005) Scents and sensibility: a molecular logic of olfactory perception (Nobel lecture). Angew Chem Int Ed 44(38):6110–6127

Behnam Sedaghat G, Tejani G, Kumar S (2023) Predict the maximum dry density of soil based on individual and hybrid methods of machine learning. Adv Eng Intell Syst 2(3). https://doi.org/10.22034/aeis.2023.414188.1129

Buck LB (2004) Unraveling the sense of smell. Les Prix Nobel the Nobel Prizes 2004:267–283

Chandler RJ (1988) The in-situ measurement of the undrained shear strength of clays using the field vane. ASTM International, West Conshohocken, PA, USA

Chapman S, Cowling TG (1990) The mathematical theory of non-uniform gases: an account of the kinetic theory of viscosity, thermal conduction and diffusion in gases. Cambridge University Press, New York

Das SK, Basudhar PK (2006) Undrained lateral load capacity of piles in clay using artificial neural network. Comput Geotech 33(8):454–459

Das AK, Pratihar DK (2019) A new bonobo optimizer (BO) for real-parameter optimization. In: 2019 IEEE region 10 symposium (TENSYMP), IEEE, pp 108–113

Di Buò B, Selänpää J, Länsivaara TT, D’Ignazio M (2019) Evaluation of sample quality from different sampling methods in Finnish soft sensitive clays. Can Geotech J 56(8):1154–1168

Gnananandarao T, Khatri VN, Dutta RK (2020) Bearing capacity and settlement prediction of multi-edge skirted footings resting on sand. Ing Inv 40(3):9–21

Gnananandarao T, Khatri VN, Onyelowe KC, Ebid AM (2023a) Implementing an ANN model and relative importance for predicting the under drained shear strength of fine-grained soil. In: Basetti V, Shiva CK, Ungarala MR, Rangarajan SS (eds) Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 267–277

Gnananandarao T, Onyelowe KC, Murthy KSR (2023b) Experience in using sensitivity analysis and ANN for predicting the reinforced stone columns’ bearing capacity sited in soft clays. In: Basetti V, Shiva CK, Ungarala MR, Rangarajan SS (eds) Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 231–241

Gnananandarao T, Onyelowe KC, Dutta RK, Ebid AM (2023c) Sensitivity analysis and estimation of improved unsaturated soil plasticity index using SVM, M5P, and random forest regression. In: Basetti V, Shiva CK, Ungarala MR, Rangarajan SS (eds) Artificial intelligence and machine learning in smart city planning. Elsevier, Amsterdam, pp 243–255

Hansbo S (1957) New approach to the determination of the shear strength of clay by the fall-cone test. Statens geotekniska institut, Stockholm

Ikizler SB, Aytekin M, Vekli M, Kocabaş F (2010) Prediction of swelling pressures of expansive soils using artificial neural networks. Adv Eng Softw 41(4):647–655

Jamhiri B, Xu Y, Jalal FE, Chen Y (2021) Hybridizing neural network with trend-adjusted exponential smoothing for time-dependent resistance forecast of stabilized fine sands under rapid shearing. Transp Infrastruct Geotechnol 10:62–81

Jamhiri B, Jalal FE, Chen Y (2022) Hybridizing multivariate robust regression analyses with growth forecast in evaluation of shear strength of zeolite–alkali activated sands. Multiscale Multidiscip Model Exp Des 5(4):317–335

Kanungo DP, Sharma S, Pain A (2014) Artificial neural network (ANN) and regression tree (CART) applications for the indirect estimation of unsaturated soil shear strength parameters. Front Earth Sci 8:439–456

Khajeh A, Ebrahimi SA, MolaAbasi H, Jamshidi Chenari R, Payan M (2021) Effect of EPS beads in lightening a typical zeolite and cement-treated sand. Bull Eng Geol the Environ 80(11):8615–8632. https://doi.org/10.1007/s10064-021-02458-1

Kordjazi A, Nejad FP, Jaksa MB (2014) Prediction of ultimate axial load-carrying capacity of piles using a support vector machine based on CPT data. Comput Geotech 55:91–102

Kuo YL, Jaksa MB, Lyamin AV, Kaggwa WS (2009) ANN-based model for predicting the bearing capacity of strip footing on multi-layered cohesive soil. Comput Geotech 36(3):503–516

Larsson R (1980) Undrained shear strength in stability calculation of embankments and foundations on soft clays. Can Geotech J 17(4):591–602

Lunne T (1982) Role of CPT in North Sea foundation engineering

Ly H-B, Pham BT (2020) Prediction of shear strength of soil using direct shear test and support vector machine model. Open Constr Build Technol J 14(1):41–50

Majdi A, Rezaei M (2013) Prediction of unconfined compressive strength of rock surrounding a roadway using artificial neural network. Neural Comput Appl 23:381–389

Mareli M, Twala B (2018) An adaptive Cuckoo search algorithm for optimisation. Appl Comput Inform 14(2):107–115. https://doi.org/10.1016/j.aci.2017.09.001

Mbarak WK, Cinicioglu EN, Cinicioglu O (2020) SPT based determination of undrained shear strength: regression models and machine learning. Front Struct Civ Eng 14:185–198

Moayedi H, Hayati S (2018) Applicability of a CPT-based neural network solution in predicting load-settlement responses of bored pile. Int J Geomech 18(6):6018009

Moayedi H, Gör M, Khari M, Foong LK, Bahiraei M, Bui DT (2020) Hybridizing four wise neural-metaheuristic paradigms in predicting soil shear strength. Measurement 156:107576

Mojumder MAH (2020) Evaluation of undrained shear strength of soil, ultimate pile capacity and pile set-up parameter from cone penetration test (CPT) using artificial neural network (ANN). Louisiana State University and Agricultural & Mechanical College

Nejad FP, Jaksa MB (2017) Load-settlement behavior modeling of single piles using artificial neural networks and CPT data. Comput Geotech 89:9–21

Nguyen TT, Truong AV, Phung TA (2016) A novel method based on adaptive cuckoo search for optimal network reconfiguration and distributed generation allocation in distribution network. Int J Electr Power Energy Syst 78:801–815. https://doi.org/10.1016/j.ijepes.2015.12.030

Onyelowe KC, Gnananandarao T, Nwa-David C (2021) Sensitivity analysis and prediction of erodibility of treated unsaturated soil modified with nanostructured fines of quarry dust using novel artificial neural network. Nanotechnol Environ Eng 6(2):37. https://doi.org/10.1007/s41204-021-00131-2

Onyelowe KC, Gnananandarao T, Ebid AM (2022) Estimation of the erodibility of treated unsaturated lateritic soil using support vector machine-polynomial and-radial basis function and random forest regression techniques. Cleaner Mater 3:100039

Padmini D, Ilamparuthi K, Sudheer KP (2008) Ultimate bearing capacity prediction of shallow foundations on cohesionless soils using neurofuzzy models. Comput Geotech 35(1):33–46

Prasad KN, Triveni S, Schanz T, Nagaraj LTS (2007) Sample disturbance in soft and sensitive clays: analysis and assessment. Mar Georesour Geotechnol 25(3–4):181–197

Sakalli E, Temirbekov D, Bayri E, Alis EE, Erdurak SC, Bayraktaroglu M (2020) Ear nose throat-related symptoms with a focus on loss of smell and/or taste in COVID-19 patients. Am J Otolaryngol 41(6):102622

Samui P (2008) Prediction of friction capacity of driven piles in clay using the support vector machine. Can Geotech J 45(2):288–295

Samui P, Kurup P (2012) Multivariate adaptive regression spline and least square support vector machine for prediction of undrained shear strength of clay. Int J Appl Metaheuristic Comput 3(2):33–42

Senneset K (1982) Strength and deformation parameters from cone penetration tests

Shahin MA, Jaksa MB, Maier HR (2001) Artificial neural network applications in geotechnical engineering. Aust Geomech 36(1):49–62

Sharma LK, Singh TN (2018) Regression-based models for the prediction of unconfined compressive strength of artificially structured soil. Eng Comput 34(1):175–186. https://doi.org/10.1007/s00366-017-0528-8

Sulewska MJ (2017) Applying artificial neural networks for analysis of geotechnical problems. Comput Assist Methods Eng Sci 18(4):231–241

Tavana Amlashi A, Mohammadi Golafshani E, Ebrahimi SA, Behnood A (2023) Estimation of the compressive strength of green concretes containing rice husk ash: a comparison of different machine learning approaches. Eur J Environ Civil Eng 27(2):961–983. https://doi.org/10.1080/19648189.2022.2068657

Tien Bui D, Hoang N-D, Nhu V-H (2019) A swarm intelligence-based machine learning approach for predicting soil shear strength for road construction: a case study at Trung Luong National Expressway Project (Vietnam). Eng Comput 35(3):955–965

Tran Q-A, Sołowski W (2019) Generalized interpolation material point method modelling of large deformation problems including strain-rate effects–application to penetration and progressive failure problems. Comput Geotech 106:249–265

Tran Q-A, Solowski W, Karstunen M, Korkiala-Tanttu L (2017) Modelling of fall-cone tests with strain-rate effects. Procedia Eng 175:293–301

Wang L (2005) Support vector machines: theory and applications, vol 177. Springer Science & Business Media, Berlin, Heidelberg

Yang X-S, Deb S (2009) Cuckoo search via Lévy flights. In: 2009 World congress on nature & biologically inspired computing (NaBIC), IEEE, pp 210–214

Yuan J, Zhao M, Esmaeili-Falak M (2022) A comparative study on predicting the rapid chloride permeability of self-compacting concrete using meta-heuristic algorithm and artificial intelligence techniques. Struct Concr. https://doi.org/10.1002/suco.202100682

Zhang W, Wu C, Zhong H, Li Y, Wang L (2021) Prediction of undrained shear strength using extreme gradient boosting and random forest based on Bayesian optimization. Geosci Front 12(1):469–477

Zumrawi MME (2012) Prediction of CBR value from index properties of cohesive soils. Univ Khartoum Eng J 2(ENGINEERING)

Author information

Contributions

Weiqing Wan: writing-original draft preparation, conceptualization, supervision, project administration. Minhao Xu: methodology, software, validation, formal analysis.

Ethics declarations

Conflict of interest

The authors declare that there are no competing interests.

Appendix

Test dataset

| SF | LL | PL | OBW | USS |
|---|---|---|---|---|
| 0.9 | 77 | 34 | 1.85 | 2390 |
| 0.47 | 81 | 39 | 1.98 | 1370 |
| 0.4 | 63 | 32 | 2.11 | 1090 |
| 0.92 | 68 | 35 | 2.53 | 3280 |
| 0.21 | 71 | 37 | 0.13 | 570 |
| 0.11 | 88 | 36 | 0.65 | 120 |
| 0.71 | 66 | 29 | 1.72 | 1470 |
| 0.52 | 73 | 25 | 1.85 | 2420 |
| 0.38 | 85 | 29 | 1.99 | 1560 |
| 0.42 | 57 | 32 | 2.25 | 1460 |
| 1.02 | 79 | 25 | 2.51 | 2280 |
| 0.67 | 42 | 15 | 2.66 | 2140 |
| 0.2 | 100 | 32 | 0.19 | 350 |
| 0.11 | 75 | 36 | 0.3 | 100 |
| 0.12 | 56 | 23 | 0.52 | 130 |
| 0.39 | 33 | 16 | 1.16 | 2890 |
| 0.57 | 61 | 23 | 1.92 | 1780 |
| 1 | 41 | 19 | 0.14 | 2250 |
| 0.75 | 61 | 23 | 0.69 | 2320 |
| 0.87 | 61 | 23 | 0.96 | 2320 |
| 0.87 | 61 | 23 | 1.16 | 2320 |
| 0.18 | 61 | 23 | 1.28 | 2320 |
| 0.3 | 61 | 23 | 1.39 | 2320 |
| 0.53 | 24 | 12 | 1.76 | 2210 |
| 0.56 | 51 | 28 | 0.27 | 1650 |
| 0.85 | 60 | 29 | 0.46 | 1680 |
| 0.69 | 70 | 36 | 0.59 | 1770 |
| 0.46 | 87 | 42 | 0.67 | 1390 |
| 0.26 | 85 | 39 | 0.74 | 660 |
| 0.27 | 58 | 33 | 0.87 | 620 |
| 0.39 | 86 | 41 | 0.94 | 750 |
| 0.9 | 100 | 45 | 1.01 | 1240 |
| 0.82 | 103 | 46 | 1.1 | 1360 |
| 0.53 | 76 | 18 | 0.77 | 760 |
| 1.1 | 32 | 20 | 1.24 | 1380 |
| 0.22 | 49 | 29 | 0.15 | 870 |
| 0.35 | 39 | 22 | 0.8 | 1250 |
| 0.27 | 32 | 19 | 1.03 | 950 |
| 0.28 | 70 | 35 | 1.19 | 1220 |
| 0.26 | 88 | 41 | 1.32 | 1600 |
| 0.64 | 43 | 23 | 1.68 | 1980 |
| 0.62 | 52 | 29 | 1.84 | 4460 |
| 0.76 | 47 | 29 | 0.25 | 1020 |
| 0.77 | 82 | 41 | 0.83 | 1270 |
| 0.45 | 84 | 39 | 1.03 | 1680 |
| 0.8 | 64 | 33 | 1.39 | 1510 |
| 0.83 | 42 | 24 | 1.77 | 1700 |
| 0.16 | 32 | 23 | 1.19 | 420 |
| 0.16 | 39 | 26 | 1.39 | 563 |
| 0.18 | 40 | 27 | 1.47 | 527 |
| 0.15 | 69 | 31 | 1.64 | 375 |
| 0.62 | 70 | 31 | 2.5 | 571 |
| 0.53 | 85 | 34 | 2.1 | 1366 |
| 0.14 | 55 | 25 | 0.3 | 260 |
| 0.07 | 63 | 24 | 1.27 | 570 |
| 0.14 | 76 | 32 | 1.44 | 740 |
| 0.15 | 65 | 27 | 1.55 | 650 |
| 0.13 | 51 | 21 | 1.66 | 410 |
| 0.16 | 73 | 26 | 1.91 | 1290 |
| 0.16 | 72 | 17 | 3.03 | 1610 |
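Readers who wish to reuse the test dataset can read the pipe-separated rows into typed records. The following is a minimal sketch, assuming the column order SF, LL, PL, OBW, USS given in the table header. The `Sample` type, the `parse_rows` function, and the `TABLE_TEXT` constant are hypothetical names, and only the first three data rows are embedded for brevity.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    """One row of the test dataset (column order assumed from the table header)."""
    sf: float   # sleeve friction
    ll: float   # liquid limit
    pl: float   # plastic limit
    obw: float  # overburden weight
    uss: float  # undrained shear strength


def parse_rows(text):
    """Parse pipe-separated numeric rows, skipping headers, separators, and blanks."""
    samples = []
    for line in text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        try:
            values = [float(c) for c in cells]
        except ValueError:
            continue  # non-numeric line such as a header or separator
        if len(values) == 5:
            samples.append(Sample(*values))
    return samples


TABLE_TEXT = """
| SF | LL | PL | OBW | USS |
|---|---|---|---|---|
| 0.9 | 77 | 34 | 1.85 | 2390 |
| 0.47 | 81 | 39 | 1.98 | 1370 |
| 0.4 | 63 | 32 | 2.11 | 1090 |
"""

samples = parse_rows(TABLE_TEXT)
print(len(samples), "rows parsed; first USS =", samples[0].uss)
```

The same function also accepts rows without leading and trailing pipes, since the outer pipes are stripped before splitting.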

About this article

Cite this article

Wan, W., Xu, M. The implementation of a multi-layer perceptron model using meta-heuristic algorithms for predicting undrained shear strength. Multiscale and Multidiscip. Model. Exp. and Des. 7, 3749–3765 (2024). https://doi.org/10.1007/s41939-024-00435-1

DOI: https://doi.org/10.1007/s41939-024-00435-1