Abstract

Crop classification plays a crucial role in ensuring food security, agricultural policy development, and effective land management. Remote sensing data, particularly from Sentinel-1 and Sentinel-2, have been widely used for crop mapping and classification in cloudy regions because of their high temporal and spatial resolution. This study aimed to enhance the classification accuracy of grain crops, specifically barley and wheat, by integrating Sentinel-1 synthetic aperture radar (SAR) and Sentinel-2 multispectral instrument (MSI) data. Two classification models, random forest (RF) and classification and regression trees (CART), were used to classify the grain crops based on the integrated data. The results showed an overall accuracy (OA) of 93% and a Kappa coefficient (K) of 0.896 for RF, and an OA of 89.15% and K of 0.84 for the CART classifier. The integration of radar and optical data can improve the accuracy of crop classification compared with single-sensor classification, and this study demonstrates its effectiveness. These findings can be used to support crop management, environmental monitoring, and policy development, particularly in areas with cloud cover or limited optical data. The study’s implications are particularly relevant in the context of global food security, where accurate crop classification is essential for monitoring crop health and estimating yield. In short, this study provides a useful approach for crop classification using Sentinel-1 and Sentinel-2 data integration, which can be employed to support sustainable agriculture and food security initiatives.


Introduction

Agriculture is widely acknowledged as the fundamental pillar of human existence, exerting significant control over the economy (Ennouri & Kallel, 2019). Wheat (Triticum ssp.) and barley (Hordeum vulgare L.), two of the approximately 30 types and 360 species that comprise the Triticeae tribe (subfamily Pooideae, family Poaceae), are among the most economically significant temperate cereal crops (Schnurbusch, 2019). Hence, it is crucial for both the public and private sectors to have reliable agricultural information at their disposal in order to make informed decisions on agricultural policies, provide crops at minimum cost, and ensure food security (Lira Melo de Oliveira Santos et al., 2019).

The monitoring of agricultural areas is crucial to mitigate the challenges facing the world such as population growth, increased food demand, climate change (Heupel et al., 2018), and changes in consumption habits (Lira Melo de Oliveira Santos et al., 2019). Addressing these challenges requires temporal and spatial information on crop distribution, as well as location-based crop classification maps to predict crop harvest forecasts during the growing season (Hütt & Waldhoff, 2018). Crop maps provide comprehensive temporal and spatial information on agricultural fields, which are developed and managed through diverse social or policy activities that significantly impact biochemical, hydrological, climatic, biological, economic, and human health cycles.

While some local governments still rely on manual labor to implement social policies, identify farm characteristics, and estimate the amount and type of crops harvested in a given area (Kobayashi et al., 2020), new techniques are essential for more efficient and accurate agricultural monitoring.

In the past 10 years, the remote sensing field has made significant progress in the development of spectral-spatial classification techniques (Du et al., 2020). Remote sensing is a crucial tool in agriculture for classifying crops and acquiring information about farmlands, both locally and globally. It plays a vital role in designing and implementing agricultural policies, managing crops, and ensuring food security (Arias et al., 2020). Precision agriculture has greatly benefited from the widespread use of remote sensing in the last few decades. Crop identification and categorization enable the estimation of the geographical extent covered by different crops, which is a significant factor in field mapping (Khaliq et al., 2018). Although field mapping provides exceptional precision, it requires a substantial amount of time and effort. Opportunities for field studies are also limited in high mountain areas, where vegetation has a shorter growing season and is less accessible than in lowlands. The development of technology has led to the frequent use of remote sensing data, which are characterized by increased objectivity and spatial coverage (Wakulińska & Marcinkowska-Ochtyra, 2020).

Remote sensing is a widely used and effective technique for creating crop distribution maps, providing fast and efficient mapping of agricultural land cover for various global applications (Remelgado et al., 2020). By utilizing remote sensing data spanning large areas and multiple time and spatial resolutions, crop types can be identified through changes in their reflectance properties throughout the year, with emphasis on the temporal aspect (Heupel et al., 2018). Multispectral and multi-temporal remote sensing data have been employed to generate crop maps, showcasing their ability to assess vegetation status across different periods (Khaliq et al., 2018). Remote sensing–based approaches have proven to be an efficient method for crop classification and assessment of crop areas, surpassing conventional ground-based surveys which are expensive and time-consuming (Baidar, 2020).

Satellite land monitoring offers information about biodiversity, surface characteristics, and spatial differences at a given time, which are very rich sources of information for identifying crop species and can be utilized to monitor them throughout the growth cycle (Arias et al., 2020). Aerial imagery is one of the most valuable sources of information for automated land classification and the development of various crops grown in different agricultural areas worldwide. It can estimate the area of certain crops, monitor their health, and predict their production (Crnojević et al., 2014). The classification is performed using a variety of spectral bands from multispectral time-series data. Along with time-series data, several vegetation indices produced from multispectral images have also been utilized to augment the information and more accurately distinguish areas of vegetation and non-vegetation (Khaliq et al., 2018). Visible and infrared sensors have been widely used for crop type classification and crop area assessment (McNairn et al., 2002).

Sentinel-2 data can be regarded as revolutionary in this situation because, in addition to having a short revisit period (5 days), it also offers the best possible spectral and spatial resolution. Due to the physiognomic changes occurring in vegetation, the larger amount of data gathering allows for the creation of multi-temporal compositions, i.e., images consisting of information collected at various times during the growing season. This may have a discernible effect on the classification outcomes (Wakulińska & Marcinkowska-Ochtyra, 2020). Recently, the availability of free Sentinel images with high spatial and temporal accuracy has provided ample opportunities for agricultural activities (Baidar, 2020).

Supervised classifiers that employ the maximum-likelihood method are the key components of conventional classification. However, these traditional classifiers are no longer able to comprehend the intricacy of such important data, since fine resolution data has recently become more accessible (Palchowdhuri et al., 2018). Moreover, the radiation reflectance in wheat and barley is very similar. Thus, it is necessary to use Sentinel-1 and Sentinel-2 to make the classification results more accurate. Therefore, the classification methods employed in this study are based on the classification and regression tree (CART) and random forest (RF) algorithms. The selection of these classification methods is based on their wide-ranging use in land use categorization.

Synthetic aperture radar (SAR) data offers the capability to meet the strict data requirements for effective crop monitoring. It is unaffected by clouds or haze and can collect data during both day and night. Additionally, microwaves in SAR data can penetrate plants more deeply compared to Sentinel-2 wavelengths. The SAR signal is significantly impacted by plant water content (Beriaux et al., 2021). The frequency and polarization of SAR data provide a complex depiction of topography, surface roughness, soil moisture, and canopy structure. On the other hand, optical data utilizes the visible, near-infrared, and shortwave infrared range of electromagnetic radiation, enabling researchers to gather vital information about water content, leaf pigments, and overall vegetation health (Orynbaikyzy et al., 2020).

This study aims to bridge the gap between optical and SAR data by demonstrating how their combination can enhance crop mapping accuracy. Specifically, the study seeks to achieve three main objectives: (1) showcase the effectiveness of combining Sentinel-1 and Sentinel-2 data in improving classification accuracy, (2) identify crop type classes with high accuracy resulting from the integration of different sensors, and (3) evaluate different methods for crop mapping. The significance of this research lies in its contribution to the ongoing efforts to improve classification approaches in crop mapping. The findings of this study will provide valuable insights to efficiently classify crop fields, thereby enhancing our understanding of the agricultural landscape.

Data and Methodology

Study Area

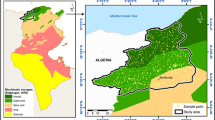

The Harir plain in the northeastern part of Iraqi Kurdistan is the focus of this study, covering an area of 4742.6 ha located between latitudes 36° 23′ 17″ N and 36° 40′ 05″N and longitudes 44° 8′ 1″ E and 44° 29′ 15″E, approximately 47.3 km away from Erbil city. The study area shares a boundary with Soran district from the north and northeast, Ranyah from the south, and Akre in the northwest (Fig. 1). The climate in the region is semi-arid, similar to that of the Mediterranean region, with hot and dry summers and cold and rainy winters, according to Köppen classification (Koeppe & Ridgley, 1958). The annual average rainfall is 543 mm, with inadequate precipitation during October and November (Hussein et al., 2017). The study area consists of four types of soil, which are a mixture of lithology with limestone, brown soils with medium thickness, and deeply covered with lucky dust stone, according to the soil classification (Buringh, 1960).

Data Source and Preprocessing

The Sentinel-1 and Sentinel-2 satellite programs place a strong emphasis on land use and land cover monitoring, particularly in regions experiencing rapid changes (Steinhausen et al., 2018). In this study, optical and SAR imagery from the two sensors, Sentinel-2 and Sentinel-1, was utilized. The selection of these two sensors was primarily based on their availability, as well as the high spatial, spectral, and temporal resolutions they provide. Both Sentinel-1 and Sentinel-2 data were obtained from the Google Earth Engine (GEE) platform. The Sentinel-2 product was selected with a cloud cover of less than 10%, and the difference between both images is only 2 days.

Sentinel-1 carries a C-band synthetic aperture radar (SAR) instrument that operates in four imaging modes (EW, IW, SM, and WV) with various spatial resolutions (10, 20, 60 m). Its antenna transmits microwave signals to a specific region of the Earth’s surface, and the microwave energy reflected back to the spacecraft is measured. SAR images are produced using the radar principle, in which the time delay of the backscattered signals is used to form an image. Because the SAR system operates at all times and in all weather conditions, SAR images are appropriate and valuable for evaluating changes on the Earth’s surface (Moumni & Lahrouni, 2021a).

The present study relies on SAR data acquired in Interferometric Wide (IW) swath mode with dual polarization (VV, VH) on 19 April 2022 (Table 1). The SAR data had to be preprocessed after acquisition to extract useful information for the classification procedure. Unlike optical images, SAR data are created by the coherent interaction of the emitted microwave with the targets. As a result, they are affected by speckle noise, which results from the coherent addition of signals scattered by ground scatterers distributed at random within each pixel. Speckle refers to the fluctuation of pixel values around a mean that corresponds to the target’s true backscattering coefficient (Mahdavi et al., 2018). It is caused by the interference of waves reflected from numerous elementary scatterers and appears as grainy noise, so a SAR image looks visually noisier than an optical one. A considerable body of research has addressed modeling and removing speckle (Ashok & Patil, 2014; Touzi, 2002; Yuan et al., 2018), and a speckle filter is necessary before display and further analysis. To reduce radar speckle in this study, a smoothing filter using the focal mean function with a smoothing radius of 50 m was applied. Speckle filtering improves image quality by suppressing speckle (Filipponi, 2019).
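As a rough illustration of the focal mean smoothing described above, the following sketch averages every pixel over a circular window. It is not the GEE implementation: the function name `focal_mean`, the assumed 10 m pixel spacing (which turns the 50 m radius into 5 pixels), the toy backscatter values, and the handling of image edges (averaging only the neighbors that fall inside the image) are all assumptions made for this example.

```python
def focal_mean(image, radius_px):
    """Replace each pixel by the mean of all pixels within a circular window.

    `image` is a list of rows of floats; `radius_px` is the window radius in pixels.
    """
    rows, cols = len(image), len(image[0])
    # Offsets (d_row, d_col) that fall inside the circular kernel.
    offsets = [
        (dr, dc)
        for dr in range(-radius_px, radius_px + 1)
        for dc in range(-radius_px, radius_px + 1)
        if dr * dr + dc * dc <= radius_px * radius_px
    ]
    smoothed = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for dr, dc in offsets:
                rr, cc = r + dr, c + dc
                # Assumption: neighbors outside the image are simply ignored.
                if 0 <= rr < rows and 0 <= cc < cols:
                    total += image[rr][cc]
                    count += 1
            smoothed[r][c] = total / count
    return smoothed


PIXEL_SIZE_M = 10  # assumed pixel spacing of the SAR image
RADIUS_M = 50      # smoothing radius reported in the text
radius_px = RADIUS_M // PIXEL_SIZE_M

# Toy backscatter patch (illustrative values) with one isolated bright pixel.
backscatter = [[-12.0] * 11 for _ in range(11)]
backscatter[5][5] = -2.0
filtered = focal_mean(backscatter, radius_px)
```

In this toy patch the isolated bright pixel is pulled back toward the value of its surroundings, which is the intended effect on grainy speckle. The price is some loss of spatial detail.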

Sentinel-2 is the second dataset used in this study; the image was acquired on 21 May 2022. Sentinel-2A is equipped with a high-resolution optical instrument with spatial resolutions of 10 m, 20 m, and 60 m, and 13 bands with a wavelength range of 443 to 2190 nm. To identify crop types, ten spectral bands were employed in addition to the normalized difference vegetation index (NDVI): blue, green, red, red edge 1, red edge 2, red edge 3, NIR, red edge 4, SWIR1, and SWIR2. Bands 1 (coastal aerosol), 9 (water vapor), and 10 (SWIR-cirrus) were removed from the analysis since they are not relevant to crop type mapping. The vegetation red edge bands (5, 6, 7, 8A) and the SWIR bands (11, 12) were resampled from 20 to 10 m spatial resolution. The NDVI was generated from the Sentinel-2 NIR (8) and red (4) bands and was combined with the Sentinel-2 bands to improve the classification.
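The NDVI mentioned above follows the standard definition NDVI = (NIR − Red) / (NIR + Red), here using band 8 (NIR) and band 4 (red). A minimal sketch of the per-pixel computation is given below. The function name, the reflectance values, and the choice to return 0.0 when both bands are zero are assumptions made for illustration only.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel.

    `nir` and `red` are surface reflectances of Sentinel-2 bands 8 and 4.
    """
    denominator = nir + red
    if denominator == 0:
        # Assumption: treat pixels with no signal in both bands as NDVI 0.
        return 0.0
    return (nir - red) / denominator


# Illustrative reflectances, not values from the study.
dense_crop = ndvi(nir=0.45, red=0.05)  # vigorous vegetation gives a high NDVI
bare_soil = ndvi(nir=0.25, red=0.20)   # sparse or no vegetation gives a low NDVI
```

Because a green canopy reflects strongly in the NIR and absorbs in the red, the index helps distinguish vegetated classes from bare or built-up surfaces.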

Methodology

The methodology used in this study consisted of four key steps: data collection, preprocessing, classification, and accuracy assessment. Figure 2 illustrates the sequence of these steps. Since the first two steps have already been discussed, the third and fourth stages will be discussed in detail in the following section.

Sampling Strategy

The reference polygons were divided into training polygons (70%) and testing polygons (30%) based on crop type and field size, so that model performance could be evaluated across different crop types and field sizes. The training polygons were selected using stratified random sampling, resulting in 182 polygons (57,883 pixels) for all crop types. The number of samples was set according to class size: wheat (70 polygons, 26,499 pixels), barley (18 polygons, 7281 pixels), uncultivated land (63 polygons, 17,708 pixels), airport and residential areas (33 polygons, 1546 pixels), bare land (16 polygons, 1311 pixels), and grassland (14 polygons, 3538 pixels). The remaining 30% of the samples, 92 polygons (24,786 pixels) for all crop types, were used as testing data for validation (Table 2). The study employed the random forest and classification and regression trees (CART) classifiers. Classified images were exported from GEE to Google Drive and then downloaded.
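The 70/30 stratified split described above can be sketched as follows. This is only an illustration under stated assumptions: polygons are stratified by class label alone (the field-size criterion is not reproduced), and the function name, polygon identifiers, and seed are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(polygons, train_fraction=0.7, seed=42):
    """Split (polygon_id, label) pairs into training and testing sets per class."""
    by_class = defaultdict(list)
    for polygon_id, label in polygons:
        by_class[label].append(polygon_id)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label, ids in sorted(by_class.items()):
        rng.shuffle(ids)
        n_train = round(len(ids) * train_fraction)
        train += [(polygon_id, label) for polygon_id in ids[:n_train]]
        test += [(polygon_id, label) for polygon_id in ids[n_train:]]
    return train, test


# Toy reference set with hypothetical polygon identifiers.
reference = [(f"wheat_{i}", "wheat") for i in range(10)]
reference += [(f"barley_{i}", "barley") for i in range(10)]
train_polygons, test_polygons = stratified_split(reference)
```

Splitting whole polygons rather than individual pixels keeps pixels from the same field out of both sets, which would otherwise tend to inflate the accuracy estimates.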

Image Classification

Google Earth Engine (GEE), a cutting-edge cloud-based platform for the processing of remote sensing data, was used for image classification. Remote sensing images (Sentinel-1 and Sentinel-2) are available in GEE. The choice of an appropriate classification technique is a crucial step in the effective synergetic classification of crop classes, together with the accurate selection and preprocessing of satellite input data. In this study, random forest and CART classification were used.

Random forest is an ensemble approach built on decision trees. Each tree is constructed from a bootstrap sample, that is, a set of training patterns drawn with replacement from the existing patterns, and the size of each sample is determined by the total number of available patterns (Felegari et al., 2021). The nonparametric RF technique proposed by Breiman (2001) was used to classify digital images into crop types using Google Earth Engine (GEE). This algorithm was chosen for its reliability and accuracy in classification (Denize et al., 2019), as well as its consistently excellent performance, ease of parameterization, and robustness (Belgiu & Drăguţ, 2016). Numerous studies that focused on crop type mapping have shown that RF results are often reliable (Balzter et al., 2015; Inglada et al., 2015; Onojeghuo et al., 2018).

RF is a classification algorithm that consists of several decision trees. Each tree is created from randomly selected training pixels (Bouslihim et al., 2022; Nguyen et al., 2021). The RF method is a flexible ensemble learning approach that combines k binary classification trees. Each tree is grown on a random subset of the input variables, and the splits are selected using the Gini index as the attribute value test (Akbari et al., 2020; Pelletier et al., 2016). To build a prediction model, the RF classifier only requires two parameters: the desired number of classification trees (k) and the number of prediction variables (m) used at each node to grow the tree (Rodriguez-Galiano et al., 2012). Furthermore, the RF classifier operates effectively with large datasets. Recent research has demonstrated that RF can integrate several remote sensing characteristics with categorical land use data to improve classification performance and distinguish between forests and other ground covers (Tatsumi et al., 2015).
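The Gini index used as the split criterion can be illustrated with a small sketch. For a node with class proportions p_i, the Gini impurity is 1 − Σ p_i², and a split is scored by the size-weighted impurity of its two child nodes. The code below searches for the best threshold on a single feature. The function names, the NDVI values, and the labels are hypothetical and serve only to show the mechanics of one split in one tree.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(values, labels, threshold):
    """Size-weighted Gini impurity of the two children of a binary split."""
    left = [lab for val, lab in zip(values, labels) if val <= threshold]
    right = [lab for val, lab in zip(values, labels) if val > threshold]
    n = len(labels)
    return (len(left) / n) * gini_impurity(left) + (len(right) / n) * gini_impurity(right)


def best_threshold(values, labels):
    """Threshold (midpoint between sorted distinct values) with the lowest impurity."""
    distinct = sorted(set(values))
    midpoints = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    if not midpoints:
        return None  # a feature with a single value cannot be split
    return min(midpoints, key=lambda t: split_impurity(values, labels, t))


# Hypothetical NDVI values for six training pixels.
ndvi_values = [0.15, 0.20, 0.25, 0.70, 0.75, 0.80]
pixel_labels = ["bare land"] * 3 + ["wheat"] * 3

parent_impurity = gini_impurity(pixel_labels)
threshold = best_threshold(ndvi_values, pixel_labels)
child_impurity = split_impurity(ndvi_values, pixel_labels, threshold)
```

In a random forest, a search of this kind is repeated at every node over m randomly chosen variables, and the k resulting trees vote on the class of each pixel.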

CART is a decision tree (DT) classifier that is built from a collection of training data. It is a nonparametric modeling technique that can be used to explain the response of a dependent variable using a collection of independent continuous or categorical variables (Zheng et al., 2009). CART is a binary multivariate statistical method that can process continuous and categorical variables as targets and predictors. No binning is necessary or advised, and data are handled in their raw state (Steinberg and Colla, 2009). Hartfield et al. (2013) showed that the CART classifier was not only precise but also processed a large quantity of data in a very short amount of time. CART has the further benefits that it is easy to comprehend, visualize, and interpret, and that it can handle both categorical and numerical data (Ray, 2019). Another advantage is that CART analysis is a mostly automated “machine learning” technique. In other words, the analyst needs to provide little input relative to the complexity of the study (Lewis, 2000).

Accuracy Assessment

A confusion matrix was used to calculate the overall accuracy (OA), Kappa coefficient, producer’s accuracy (PA), and user’s accuracy (UA) of the classification results (Steinhausen et al., 2018). The confusion matrix is a tabulation of categorized map and reference data for sample computation. Qualitative assessment of the classification results can be done by comparing the images, while statistical tools such as the confusion matrix and the Kappa index allow for quantitative assessment of the land use and land cover classification accuracy (Moumni & Lahrouni, 2021a). The overall accuracy (OA) is defined as the percentage of correctly classified sampled pixels (Eq. 1), while the Kappa coefficient is a measure of classification performance that takes into account the chance agreement between the prediction and reference data (Eq. 2). UA denotes the likelihood of accurately categorizing a certain labeled sample, while PA denotes the percentage of a given reference class that is correctly classified. In this study, 30% of the 182 samples were used, and the error matrix of each categorized image was used to calculate Kappa and overall accuracy for accuracy assessment (Table 2).

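$$\mathrm{OA} = \frac{\sum_{i} X_{ii}}{N} \times 100 \tag{1}$$

where $X_{ii}$ is the number of diagonal pixels (correctly classified) and $N$ is the total number of pixels.

$$K = \frac{A_0 - A_c}{1 - A_c} \tag{2}$$

where $A_0$ is the obtained OA, i.e., the actual proportion of correctly classified pixels, and $A_c$ is the probability of obtaining a correct classification by chance.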
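The accuracy measures described in this section can be computed from a confusion matrix as in the sketch below. It assumes that rows hold the reference classes and columns the mapped classes. The function name and the two-class toy matrix are illustrative only and do not reproduce any table of this study.

```python
def accuracy_metrics(matrix):
    """Return (OA, Kappa, PA per class, UA per class) from a confusion matrix.

    Assumption: matrix[i][j] is the number of pixels of reference class i
    that were mapped to class j.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(n_classes))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n_classes)) for j in range(n_classes)]

    overall = diagonal / total  # A_0, as a proportion
    # A_c: agreement expected by chance from the row and column totals.
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    kappa = (overall - chance) / (1 - chance)

    producers = [matrix[i][i] / row_totals[i] for i in range(n_classes)]
    users = [matrix[j][j] / col_totals[j] for j in range(n_classes)]
    return overall, kappa, producers, users


# Toy two-class matrix (rows: reference, columns: map).
toy_matrix = [
    [40, 10],
    [5, 45],
]
oa, kappa, pa, ua = accuracy_metrics(toy_matrix)
```

Here PA is the complement of the omission error of a class and UA is the complement of its commission error, which is why the two are reported side by side.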

Results

Image Classification

The random forest and CART classifiers were used to produce the crop type map of the study area. The total area of concern is about 4742.6 ha, and the exact areas of the classes are listed in Table 3 and shown in Fig. 3. According to the random forest classification, wheat covers the largest area with a total of about 1812.5 ha (38.2%), while the area of the same class in the CART classification is 1741 ha (36.7%). Barley, uncultivated land, and grassland occupy 9.4%, 37.6%, and 8.5% of the area, respectively, according to the random forest classification. According to the CART classification, uncultivated land occupies 34% and grassland 9.4%. The smallest areas are bare land and the residential and airport class, with 3.9% and 2.3%, respectively, according to the random forest classification, and 4.1% and 2.7%, respectively, according to the CART classification.

Accuracy Assessment

Using Sentinel-1 (SAR) data alone, the RF and CART classifications achieved overall accuracies (OA) of 72.79% and 65.04%, respectively, with Kappa coefficients of 0.58 and 0.49 (Table 4). The highest producer’s accuracies (PA) for both algorithms were obtained for uncultivated land (83% and 81.7%), followed by airport and road (79.3%) in the RF classification, then wheat (74.8% and 74.6%), bare land (59.5% and 50.8%), barley (38.4% and 27.5%), and grassland (18.4% and 12.6%), respectively. The PAs for uncultivated, airport and road, wheat, and bare land are relatively high. The PA rate for uncultivated land is 84.48% because out of a total of 6683 wheat pixels, 294 pixels were classified as wheat, 460 pixels as barley, 52 pixels as airport and roads, 273 pixels as bare land, and 149 pixels as grassland in the RF classifier. In the CART classifier, the PA rate for uncultivated land is 81.38% due to 528 barley pixels, 50 airport and road pixels, 231 bare land pixels, and 156 grassland pixels being misclassified out of a total of 6661 wheat pixels (Table 5). The PAs for grassland and barley are less than 35%, indicating a significant omission error.

In addition, the user’s accuracies (UA) are highest for uncultivated land (88.86% and 81.5%), followed by wheat (86.75% and 72.8%), airport and road (52.39% and 53.2%), bare land (34.7% and 31.79%), barley (27.13% and 28.6%), and grassland (6.89% and 14.1%) for the RF and CART classifiers, respectively. The UA for uncultivated land is 88.86% in RF because 376 pixels are classified as wheat, 272 pixels as barley, 19 pixels as airport and roads, 106 pixels as bare land, and 65 pixels as grassland. In the CART classifier, the UA for uncultivated land is 81.5% because 484 pixels are classified as wheat, 480 pixels as barley, 54 pixels as airport and road, 213 pixels as bare land, and 160 pixels as grassland (Table 5). The UAs for barley, airport and road, and bare land are less than 60%, which indicates a significant commission error.

The Sentinel-2 classification image showed lower omission and commission errors as well as higher overall accuracy and Kappa values compared to Sentinel-1. The overall accuracy and Kappa values were 89.68% and 0.845 for RF and 85.09% and 0.781 for CART, respectively (Table 6). According to these findings, Sentinel-1 SAR data determined land cover types less accurately than Sentinel-2 data at the same spatial resolution. The producer’s accuracy was high for the airport and roads (99.34% and 96.63%), uncultivated land (92.03% and 95.60%), wheat (90.66% and 91.93%), and bare land (81.25% and 68.94%), but it decreased for the classes of barley and grassland (less than 70%). The user’s accuracy for all classes was quite high except for barley in the RF algorithm. The highest user’s accuracy was observed for airport and road (99.34% and 96.63%), followed by uncultivated land (92.03% and 95.60%), wheat (90.66% and 91.93%), bare land (81.25% and 68.94%), and grassland (78.99% and 72.17%) for the RF and CART classifiers, respectively (Table 7). The lowest user’s accuracy was observed for barley (61.46% and 71.91%, respectively). Compared with Sentinel-1, Sentinel-2 yielded much higher overall accuracy and Kappa values, and its class-level accuracies were also much better.

Improving Classification Accuracy

This study aimed to improve the pixel-level classification results by combining Sentinel-1 SAR data with Sentinel-2 (optical) data. The two Sentinel-1 bands were merged with the Sentinel-2 multispectral image bands, and the RF and CART classifiers were applied to the integrated SAR and optical data. The results showed that combining Sentinel-1 and Sentinel-2 images produced a more accurate product. For the combined Sentinel-1 and Sentinel-2 data, the OA was 93.04% for RF and 89.15% for CART, and the Kappa coefficient was 0.896 and 0.840, respectively (Tables 8 and 9). In contrast, the Sentinel-1 image alone produced the lowest accuracy of all the analyses (72.79% and 65.04% of OA and 0.57 and 0.48 of Kappa).
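The merging of the two Sentinel-1 bands with the Sentinel-2 bands amounts to building one feature vector per pixel. The sketch below shows this under stated assumptions: the band identifiers follow the usual Sentinel-2 naming (B2 to B12) for the ten bands listed in the data section, the NDVI layer is included as an extra feature, and the function name and pixel values are hypothetical. Both images are assumed to be co-registered on the same 10 m grid.

```python
# Ten Sentinel-2 bands retained in the study, using the usual band identifiers.
S2_BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]
S1_BANDS = ["VV", "VH"]
FEATURE_NAMES = S2_BANDS + ["NDVI"] + S1_BANDS


def stack_features(optical, sar):
    """Concatenate optical reflectances, NDVI, and SAR backscatter for one pixel.

    `optical` maps Sentinel-2 band names to reflectance; `sar` maps
    polarization names to backscatter. Assumes NIR + red is not zero.
    """
    features = [optical[band] for band in S2_BANDS]
    nir, red = optical["B8"], optical["B4"]
    features.append((nir - red) / (nir + red))  # NDVI from bands 8 and 4
    features.extend(sar[band] for band in S1_BANDS)
    return features


# Illustrative pixel values, not taken from the study.
optical_pixel = {band: 0.10 for band in S2_BANDS}
optical_pixel["B8"] = 0.45
optical_pixel["B4"] = 0.05
sar_pixel = {"VV": -9.5, "VH": -16.0}

feature_vector = stack_features(optical_pixel, sar_pixel)
```

A classifier trained on such vectors can draw on the radar response to canopy structure and moisture together with the optical response to pigments and water content, which is the rationale for the integration.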

When comparing the classification maps obtained from Sentinel-1 and Sentinel-2 with those obtained from the combined optical and SAR Sentinel data, it was observed that the crop type maps had greater overall map accuracy. All classes demonstrated improved accuracy when compared to the Sentinel-1 or Sentinel-2 classification alone. The improved class accuracies resulted in fewer errors of both PA and UA, especially in the wheat, barley, bare land, and grassland classes. The highest PA was obtained for airport and road (98.77% and 96.63%), followed by uncultivated land (96.53% and 95.60%), wheat (93.92% and 91.93%), bare land (97.02% and 90.13%), and grassland (89.30% and 72.17%) for both RF and CART classifiers, respectively. These increased class accuracies resulted in lower errors of UA and PA, especially in the airport and roads (Tables 8 and 9).

Discussion

Sentinel-1 Images

The classification applied to the Sentinel-1 image demonstrates that Sentinel-1 SAR data can discriminate between some of the classes. Several conditions affect the backscattering of different surface covers, and the sensitivity of microwaves to surface roughness aids in classifying different types of crops. Figure 4 presents the analysis of the individual classes by polarization (VV, VH): airport and roads have the highest mean values, while grassland has the lowest. VH backscatter is weaker than VV backscatter for all six classes. The box plot shows that the VV and VH bands are well suited to separating wheat from uncultivated land, grassland, and bare land, but have only a very slight ability to separate wheat from barley. The accuracy for wheat and barley is 86.6% and 27%, respectively (Table 4). Sentinel-1 alone therefore cannot separate wheat and barley properly, because the two crops have nearly identical backscatter.

Spectral Reflectance Values of Sentinel-2 Bands

The spectral response (surface reflectance) of each class was examined for the Sentinel-2 bands used in the study (Fig. 5). Some classes tend to overlap where their spectral responses are relatively similar at different wavelengths. The green band separates the classes best, distinguishing wheat, bare land, and almost all other classes. The red wavelength is also suitable for separating wheat, uncultivated land, airport, grassland, and barley, while the near-infrared and red edge bands perform largely similarly in separating wheat, barley, and grassland.

Crop Classification

To ensure the protection and management of cereal crops, agricultural land must be regularly monitored. Additionally, information on crop types can be used to assess crop acreage and yield, which is crucial for ensuring food security. This study aimed to investigate the capability of the widely accessible Sentinel-1 and Sentinel-2 satellites to detect six important classes (wheat, barley, uncultivated land, airport and road, bare land, and grassland) across the study area. The RF and CART methods, which have been shown to provide high classification accuracy in previous studies (Akar & Gungor, 2012; Bayas et al., 2022; Delalay et al., 2019; Firas Mohammed Ali et al., 2015; Kaszta et al., 2016), were used in this study for both Sentinel-1 and Sentinel-2 data. The study confirmed that the application of optical and radar data is commonly recognized as a means to improve RF (De Luca et al., 2022; Manakos et al., 2020; Tavares et al., 2019) and CART classification (Thamilselvan & Sathiaseelan, 2015; Zheng et al., 2009). The results of the study showed that RF is more precise than the CART method.

Improving Classification Accuracy

These results indicate that the SAR-derived data alone did not have a significant impact on the classification, while the optical data improved the classification compared to Sentinel-1 (Tables 4 and 5). The OA of Sentinel-1 was 72.79% for RF and 65.04% for CART, while the OA of Sentinel-2 was 89.68% and 85.09%, which is consistent with the results of previous studies (De Luca et al., 2022; Manakos et al., 2020; Rao et al., 2021). The study accurately mapped crop types, particularly when employing both sensors. The highest accuracy was recorded for uncultivated land, airport and residential areas, and wheat, while the lowest accuracy was recorded for barley, which was consistent across sensor combinations. The combination of Sentinel-1 and Sentinel-2 images improved the overall accuracy of cereal crop classification for both methods (RF, CART) compared to the results obtained using a single optical or radar sensor (Fig. 6).

When utilizing the RF method for optical and radar data, 173 pixels of wheat were categorized as barley, 80 pixels as uncultivated land, 3 pixels as airport and road, 1 pixel as bare land, and 78 pixels as grassland. As a result, when the RF algorithm was applied, the PA for wheat was 93.92% and the UA was 97.07%, while the PA for wheat was 90.18% and the UA was 88.78% when the CART algorithm was applied.

Additionally, combining Sentinel-1 and Sentinel-2 images and adding the Sentinel-2 NDVI layer increased the overall accuracy and Kappa coefficient of the classification (Fig. 6). The overall accuracy for RF was 94.03% and the Kappa coefficient was 0.91, while the overall accuracy for the CART classifier was 89.00% and the Kappa coefficient was 0.83, which is consistent with findings from other geographical areas that rely on combining optical and radar data. With the increased revisit frequency and enhanced spatial resolution of the Sentinel systems, combining Sentinel-2 MSI bands, Sentinel-1 bands, and NDVI provides a workable option for routine crop classification and monitoring. This combination of sensors therefore offers a versatile and attractive approach.

Despite the high accuracy achieved in this study, some misclassifications were observed in certain classes. For example, some wheat pixels were classified as barley or uncultivated land, and some grassland pixels were classified as airport area. This could be due to factors such as similar spectral characteristics and land use changes in the study area. Additionally, the accuracy of classification may be affected by the quality of the input data, especially in areas with cloud cover or other atmospheric conditions. The integration of Sentinel-1 SAR data and Sentinel-2 optical data has been shown to significantly improve the accuracy of land cover classification in the study area. The results of this study could be used to support decision-making and policy development related to land use planning and natural resource management. In addition, the methodology and techniques used in this study could be applied to other regions and areas facing similar challenges related to land use change and crop monitoring.

In summary, the research on improving crop classification accuracy with integrated Sentinel-1 and Sentinel-2 data offers the potential for enhanced accuracy, improved feature extraction, and reduced sensitivity to environmental conditions. However, it also faces challenges related to data availability, complexity of data integration, computational requirements, and generalizability to different crops and regions. These factors should be carefully considered when interpreting and applying the findings of this research in practical applications.

Conclusions

In conclusion, this study highlights the importance of population diversity and effective planning to meet the demands of farmed crops. Remote sensing technology, particularly the use of satellite images, has become an essential tool for crop monitoring and management. The integration of Sentinel-1 SAR and Sentinel-2 optical data through the RF and CART classifiers improved the accuracy of crop type classification. RF produced both the highest OA and the highest Kappa value, and combining SAR and optical data yielded more accurate classification than using either one alone. However, some misclassifications were observed, which could be due to several factors, such as the spectral similarity of some classes in some pixels.

The findings of this study have significant implications for crop monitoring and management, providing a more accurate and efficient approach for identifying crop types and their respective characteristics. This method can be useful for policymakers, agricultural researchers, and farmers in making informed decisions regarding crop management and improving the productivity of farmed crops. Furthermore, the method developed in this study can be extended to other areas to improve crop monitoring and management in various regions of the world. Future research should focus on refining the classification method to further improve accuracy and reduce misclassifications. Overall, the results of this study demonstrate the potential of remote sensing technology for crop monitoring and management, providing a valuable tool for sustainable agriculture practices.

References

Akar Ö, Gungor O (2012) Rastgele orman algoritması kullanılarak çok bantlı görüntülerin sınıflandırılması. J Geodesy Geoinformation 1:139–146. https://doi.org/10.9733/jgg.241212.1t

Akbari E, Darvishi Boloorani A, Neysani Samany N, Hamzeh S, Soufizadeh S, Pignatti S (2020) Crop mapping using random forest and particle swarm optimization based on multi-temporal Sentinel-2. Remote Sens 12(9):1449. https://doi.org/10.3390/rs12091449

Ali FM, Fgee EB, Zubi ZS (2015) Predicting performance of classification algorithms. Int J Comput Eng Technol 6(2):19–28

Arias M, Campo-Bescós MÁ, Álvarez-Mozos J (2020) Crop classification based on temporal signatures of Sentinel-1 observations over Navarre province, Spain. Remote Sens 12(2):278. https://doi.org/10.3390/rs12020278

Ashok HG, Patil DR (2014) Survey on change detection in SAR images. In: IJCA Proceedings on National Conference on Emerging Trends in Computer Technology, pp 4–7

Baidar T (2020) Rice crop classification and yield estimation using multi-temporal Sentinel-2 data: a case study of terai districts of Nepal. http://repositori.uji.es/xmlui/handle/10234/187006

Balzter H, Cole B, Thiel C, Schmullius C (2015) Mapping CORINE land cover from Sentinel-1A SAR and SRTM digital elevation model data using random forests. Remote Sens 7(11):14876. https://doi.org/10.3390/rs71114876

Bayas S et al (2022) Land use land cover classification using different ML algorithms on Sentinel-2 imagery. In: HG Ashok, Patil DR (eds) Advanced Machine Intelligence and Signal Processing. Springer Nature Singapore, Singapore. Int J of Comput Appl 2014:761–777

Belgiu M, Drăguţ L (2016) Random forest in remote sensing: a review of applications and future directions. ISPRS J Photogramm Remote Sens 114:24–31. https://doi.org/10.1016/j.isprsjprs.2016.01.011

Beriaux E, Jago A, Lucau-Danila C, Planchon V, Defourny P (2021) Sentinel-1 time series for crop identification in the framework of the future CAP monitoring. Remote Sens 13(14):2785. https://doi.org/10.3390/rs13142785

Bouslihim Y, Kharrou H, Abdelhalim M, Attou T, Bouchaou L, Chehbouni A (2022) Comparing pan-sharpened Landsat-9 and Sentinel-2 for land-use classification using machine learning classifiers. J Geovisualization Spat Anal 6:35. https://doi.org/10.1007/s41651-022-00130-0

Breiman L (2001) Random forests. Mach Learn 45:5–32

Buringh P (1960) Soils and soil conditions in Iraq. The Ministry of Agriculture, Baghdad, Iraq. Available at: https://edepot.wur.nl/480098

Crnojević V, Lugonja P, Brkljač BN, Brunet B (2014) Classification of small agricultural fields using combined Landsat-8 and RapidEye imagery: case study of northern Serbia. J Appl Remote Sens 8(1):083512. https://doi.org/10.1117/1.JRS.8.083512

De Luca G, Silva MN, Di Fazio S, Modica G (2022) Integrated use of Sentinel-1 and Sentinel-2 data and open-source machine learning algorithms for land cover mapping in a Mediterranean region. Eur J Remote Sens 55(1):52–70. https://doi.org/10.1080/22797254.2021.2018667

Delalay M, Tiwari V, Ziegler A, Gopal V, Passy P (2019) Land-use and land-cover classification using Sentinel-2 data and machine-learning algorithms: operational method and its implementation for a mountainous area of Nepal. J Appl Remote Sens 13:014530. https://doi.org/10.1117/1.JRS.13.014530

Denize J, Hubert-Moy L, Betbeder J, Corgne S, Baudry J, Pottier E (2019) Evaluation of using Sentinel-1 and -2 time-series to identify winter land use in agricultural landscapes. Remote Sens 11(1):37. https://doi.org/10.3390/rs11010037

Du P, Bai X, Tan K, Xue Z, Samat A, Xia J, Li E, Su H, Liu W (2020) Advances of four machine learning methods for spatial data handling: a review. J Geovisualization Spat Anal 4(1):1–25. https://doi.org/10.1007/s41651-020-00048-5

Ennouri K, Kallel A (2019) Remote sensing: an advanced technique for crop condition assessment. Math Probl Eng 2019:e9404565. https://doi.org/10.1155/2019/9404565

Felegari S, Sharifi A, Moravej K, Amin M, Golchin A, Muzirafuti A, Tariq A, Zhao N (2021) Integration of Sentinel 1 and Sentinel 2 satellite images for crop mapping. Appl Sci 11(21):10104. https://doi.org/10.3390/app112110104

Filipponi F (2019) Sentinel-1 GRD preprocessing workflow. International Electronic Conference on Remote Sensing. MDPI

Hartfield KA, Marsh SE, Kirk CD, Carrière Y (2013) Contemporary and historical classification of crop types in Arizona. Int J Remote Sens 34(17):6024–6036. https://doi.org/10.1080/01431161.2013.793861

Heupel K, Spengler D, Itzerott S (2018) A progressive crop-type classification using multitemporal remote sensing data and phenological information. PFG-J Photogramm Remote Sens Geoinf Sci 86(2):53–69. https://doi.org/10.1007/s41064-018-0050-7

Hussein SO, Kovács F, Tobak Z (2017) Spatiotemporal assessment of vegetation indices and land cover for Erbil city and its surrounding using MODIS imageries. J Environ Geogr 10(1–2):31–39. https://doi.org/10.1515/jengeo-2017-0004

Hütt C, Waldhoff G (2018) Multi-data approach for crop classification using multitemporal, dual-polarimetric TerraSAR-X data, and official geodata. Eur J Remote Sens 51(1):62–74. https://doi.org/10.1080/22797254.2017.1401909

Inglada J, Arias M, Tardy B, Hagolle O, Valero S, Morin D, Dedieu G, Sepulcre G, Bontemps S, Defourny P, Koetz B (2015) Assessment of an operational system for crop type map production using high temporal and spatial resolution satellite optical imagery. Remote Sens 7(9):12356–12379. https://doi.org/10.3390/rs70912356

Kaszta Ż, Van De Kerchove R, Ramoelo A, Cho MA, Madonsela S, Mathieu R, Wolff E (2016) Seasonal separation of African savanna components using Worldview-2 imagery: a comparison of pixel- and object-based approaches and selected classification algorithms. Remote Sens 8(9):763. https://doi.org/10.3390/rs8090763

Khaliq A, Peroni L, Chiaberge M (2018) Land cover and crop classification using multitemporal Sentinel-2 images based on crops phenological cycle. In: 2018 IEEE Workshop on Environmental, Energy, and Structural Monitoring Systems (EESMS). IEEE

Kobayashi N, Tani H, Wang X, Sonobe R (2020) Crop classification using spectral indices derived from Sentinel-2A imagery. J Inf Telecommun 4(1):67–90. https://doi.org/10.1080/24751839.2019.1694765

Koeppe CE, Ridgley DC (1958) Weather and climate.

Lewis RJ (2000) An introduction to classification and regression tree (CART) analysis. Annual meeting of the society for academic emergency medicine in San Francisco, California, vol 14. Department of Emergency Medicine Harbor-UCLA Medical Center Torrance, San Francisco, CA, USA

Mahdavi S, Salehi B, Moloney C, Huang W, Brisco B (2018) Speckle filtering of synthetic aperture radar images using filters with object-size-adapted windows. Int J Digit Earth 11(7):703–729. https://doi.org/10.1080/17538947.2017.1351582

Manakos I, Kordelas GA, Marini K (2020) Fusion of Sentinel-1 data with Sentinel-2 products to overcome non-favourable atmospheric conditions for the delineation of inundation maps. Eur J Remote Sens 53(sup2):53–66. https://doi.org/10.1080/22797254.2019.1596757

McNairn H, Ellis J, Van Der Sanden JJ, Hirose T, Brown RJ (2002) Providing crop information using RADARSAT-1 and satellite optical imagery. Int J Remote Sens 23(5):851–870. https://doi.org/10.1080/01431160110070753

Melo L, de Oliveira Santos C, Augusto Camargo Lamparelli R, Figueiredo GKDA, Dupuy S, Boury J, Luciano AC, Torres RD, Le Maire G (2019) Classification of crops, pastures, and tree plantations along the season with multi-sensor image time series in a subtropical agricultural region. Remote Sens 11(3):334. https://doi.org/10.3390/rs11030334

Moumni A, Lahrouni A (2021a) Machine learning-based classification for crop-type mapping using the fusion of high-resolution satellite imagery in a semiarid area. Scientifica 2021:8810279. https://doi.org/10.1155/2021/8810279

Nguyen TTH, Chau TNQ, Pham TA, Tran TXP, Phan TH, Pham TMT (2021) Mapping land use/land cover using a combination of radar Sentinel-1A and Sentinel-2A optical images. IOP Conf Ser: Earth Environ Sci 652(1):012021. https://doi.org/10.1088/1755-1315/652/1/012021

Onojeghuo AO, Blackburn GA, Wang Q, Atkinson PM, Kindred D, Miao Y (2018) Mapping paddy rice fields by applying machine learning algorithms to multi-temporal Sentinel-1A and Landsat data. Int J Remote Sens 39(4):1042–1067. https://doi.org/10.1080/01431161.2017.1395969

Orynbaikyzy A, Gessner U, Mack B, Conrad C (2020) Crop type classification using fusion of Sentinel-1 and Sentinel-2 data: assessing the impact of feature selection, optical data availability, and parcel sizes on the accuracies. Remote Sens 12(17):2779. https://doi.org/10.3390/rs12172779

Palchowdhuri Y, Valcarce-Diñeiro R, King P, Sanabria-Soto M (2018) Classification of multi-temporal spectral indices for crop type mapping: a case study in Coalville, UK. J Agric Sci 156(1):24–36. https://doi.org/10.1017/S0021859617000879

Pelletier C, Valero S, Inglada J, Champion N, Dedieu G (2016) Assessing the robustness of random forests to map land cover with high resolution satellite image time series over large areas. Remote Sens Environ 187:156–168. https://doi.org/10.1016/j.rse.2016.10.010

Rao P, Zhou W, Bhattarai N, Srivastava AK, Singh B, Poonia S, Lobell DB, Jain M (2021) Using Sentinel-1, Sentinel-2, and Planet imagery to map crop type of smallholder farms. Remote Sens 13(10):1870. https://doi.org/10.3390/rs13101870

Ray S (2019) Exploring machine learning classification algorithms for crop classification using Sentinel 2 data. Int Arch Photogramm Remote Sens Spat Inf Sci 42:573–578. https://doi.org/10.5194/isprs-archives-XLII-3-W6-573-2019

Remelgado R, Zaitov S, Kenjabaev S, Stulina G, Sultanov M, Ibrakhimov M, Akhmedov M, Dukhovny V, Conrad C (2020) A crop type dataset for consistent land cover classification in Central Asia. Sci Data 7(1):250. https://doi.org/10.1038/s41597-020-00591-2

Rodriguez-Galiano VF, Ghimire B, Rogan J, Chica-Olmo M, Rigol-Sanchez JP (2012) An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J Photogramm Remote Sens 67:93–104. https://doi.org/10.1016/j.isprsjprs.2011.11.002

Schnurbusch T (2019) Wheat and barley biology: towards new frontiers. J Integr Plant Biol 61:198–203. https://doi.org/10.1111/jipb.12782

Steinberg D, Colla P (2009) CART: classification and regression trees. The Top Ten Algorithms in Data Mining 9:179

Steinhausen MJ, Wagner PD, Narasimhan B, Waske B (2018) Combining Sentinel-1 and Sentinel-2 data for improved land use and land cover mapping of monsoon regions. Int J Appl Earth Obs Geoinf 73:595–604. https://doi.org/10.1016/j.jag.2018.08.011

Tatsumi K, Yamashiki Y, Canales Torres MA, Taipe CLR (2015) Crop classification of upland fields using random forest of time-series Landsat 7 ETM+ data. Comput Electron Agric 115:171–179. https://doi.org/10.1016/j.compag.2015.05.001

Tavares PA, Beltrão NES, Guimarães US, Teodoro AC (2019) Integration of Sentinel-1 and Sentinel-2 for classification and LULC mapping in the urban area of Belém, eastern Brazilian Amazon. Sensors 19(5):1140. https://doi.org/10.3390/s19051140

Thamilselvan P, Sathiaseelan JGR (2015) A comparative study of SVM, RF and CART algorithms for image classification. Proceedings of the National Conference on Emerging Trends in Advanced Computing (ETAC), India.

Touzi R (2002) A review of speckle filtering in the context of estimation theory. IEEE Trans Geosci Remote Sens 40(11):2392–2404. https://doi.org/10.1109/TGRS.2002.803727

Wakulińska M, Marcinkowska-Ochtyra A (2020) Multi-temporal Sentinel-2 data in classification of mountain vegetation. Remote Sens 12(17):2696. https://doi.org/10.3390/rs12172696

Yuan J, Lv X, Li R (2018) A speckle filtering method based on hypothesis testing for time-series SAR Images. Remote Sens 10(9):1383. https://doi.org/10.3390/rs10091383

Zheng H, Chen L, Han X, Zhao X, Ma Y (2009) Classification and regression tree (CART) for analysis of soybean yield variability among fields in Northeast China: the importance of phosphorus application rates under drought conditions. Agric Ecosyst Environ 132(1):98–105. https://doi.org/10.1016/j.agee.2009.03.004

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent to Participate

Not applicable.

Conflict of Interest

The authors declare no competing interests.

About this article

Cite this article

Faqe Ibrahim, G.R., Rasul, A. & Abdullah, H. Improving Crop Classification Accuracy with Integrated Sentinel-1 and Sentinel-2 Data: a Case Study of Barley and Wheat. J geovis spat anal 7, 22 (2023). https://doi.org/10.1007/s41651-023-00152-2

DOI: https://doi.org/10.1007/s41651-023-00152-2