Abstract

Several successful cache-based attacks have provided strong impetus for developing side channel resistant software implementations of AES. One of the best-known countermeasures—use of a “minimalist” 256-byte look-up table—has been employed in the latest (assembly language) versions. Software and hardware pre-fetching and out-of-order execution in modern processors have served to further shrink the attack surface. Despite these odds, we devise and implement two strategies to retrieve the complete AES key. The first uses adaptively chosen plaintext and random plaintext in a 2-round attack. The second strategy employs only about 50 blocks of random plaintext in a novel single round attack. The attack can be extended to spying on table accesses during decryption in a ciphertext-only attack. We also present an analytical model to explain the effect of false positives and false negatives and capture various practical tradeoffs involving number of blocks of plaintext, offline computation time for key retrieval and success probability.

1 Introduction

AES is the most widely used secret key cipher and is known to be hard to crack even with highly advanced cryptanalytic techniques such as those described in [1,2,3,4]. However, its software implementation, while extremely efficient, has been shown to be susceptible to various side channel attacks. Not surprisingly, “hardened” implementations have been developed. One of these, included in cryptographic libraries such as OpenSSL [5], is now the default software version. The primary goal of this work is the design and implementation of a cache-based side channel attack that makes even the latest OpenSSL version vulnerable.

Each round of AES uses field operations in \(GF(2^{8})\). Because field operations are computationally expensive, look-up tables are employed to greatly improve performance. The most efficient implementation of AES uses four 1 KB tables. During encryption, the tables typically reside in cache and occupy 64 lines or blocks (assuming a 64 byte block size as in most x86 machines). Based on even partial knowledge of the sequence of blocks accessed during encryption, it has been shown that the entire AES key may be retrieved [6]. In the sequel, we confine usage of the terms block and line to “block of plaintext” and “line of cache” respectively.

Various measures have been put in place to thwart cache-based attacks. Beginning with OpenSSL-1.0.0a, for example, a single 256-byte S-Box table has been employed. Such a table occupies only 4 lines of cache and so accesses to the table cause 2 bits of each byte of the AES key to be leaked (rather than 4 bits as in the four table implementation). This “minimalist” look-up table architecture has been acknowledged to be very hard to compromise in [7,8,9,10,11]. Indeed, almost all cache attacks on AES have targeted the 4 table [8, 12,13,14,15] or a “compressed” 2KB table implementation [10] rather than the single 256-byte table. The latter was intended to provide resistance to side channel attacks at the expense of reduced performance. To partly offset the performance hit, it was coded in x86 assembly and is commonly referred to as the “assembly” version.

To further defend against cache attacks, the default software implementation (OpenSSL Version 1.0.0a and beyond) pre-fetches the S-Box table at the start of each round of encryption. Thus, the attacker or spy is unable to distinguish between a line pre-fetched and one actually accessed as part of encryption resulting in false positives. Another source of false positives is the out-of-order execution in all modern processors. In the event of a stall caused by, for example, a cache miss, the processor attempts to execute instructions further upstream from the current instruction. This results in an even larger number of false positives as explained in Section 3. Finally, aggressive hardware pre-fetching further increases the rate of false positives.

The principal contribution of this work is the design of two attacks on the side channel resistant version of the OpenSSL implementation of AES. Both of these attacks leak out the complete 128-bit AES key. The first (called the Two Round Attack) uses information obtained by the spy about cache-resident table accesses made by the victim during the first two rounds of encryption. It uses adaptively chosen plaintexts for the first round attack and random plaintexts for the second round attack. The second attack (called the Single Round Attack) uses a less restrictive attack scenario based only on table accesses in the second round with random plaintexts. We demonstrate experimentally that we require fewer than 50 blocks of plaintext to recover the entire AES key. We also develop an analytical model to predict the number of plaintexts required and compare these estimates with experimentally obtained values. While the attacks described here use known plaintext, the second attack could also be adapted to work by snooping on the decryption of known ciphertext.

The paper is organized as follows. Section 2 introduces background material and describes the operation of the spy software. Section 3 explains the details of the First and Second Round attacks. It also contains an analytical model and a comparison between experimental and model results. Section 4 outlines our strategy to obtain the AES key in a less restrictive attack scenario. Section 5 summarizes related work and Section 6 concludes the paper. For ready reference, the notations used in this paper are summarized in Table 1.

2 Background

We first review the basics of AES and cache. Various cache-based attacks and scenarios are summarized. Finally, we outline the experimental setup used to test our key retrieval approaches.

2.1 AES Basics

AES is a substitution-permutation network. It supports a key size of 128, 192, or 256 bits and block size = 128 bits. A round function is repeated a fixed number of times (10 for key size of 128 bits) to convert 128 bits of plaintext to 128 bits of ciphertext. The 16-byte input or plaintext P = (p0,p1,...,p15) may be arranged column wise in a 4×4 array of bytes. This “state array” gets transformed after each step in a round. At the end of the last round, the state array contains the ciphertext.

All rounds except the last involve four steps: Byte Substitution, Row Shift, Column Mixing, and a Round Key operation (the last round skips the Column Mixing step). The round operations are defined using algebraic operations over the field \( GF\left (2^{8} \right )\). For example, in the Column Mixing step, the state array is pre-multiplied by the matrix B given below (entries in hexadecimal).

\[ B = \begin{pmatrix} 02 & 03 & 01 & 01 \\ 01 & 02 & 03 & 01 \\ 01 & 01 & 02 & 03 \\ 03 & 01 & 01 & 02 \end{pmatrix} \]

The original 16-byte secret key K = (k0,k1,...,k15) (arranged column wise in a 4×4 array of bytes) is used to derive 10 different round keys to be used in the round key operation of each round. The round keys are denoted \(K^{(r)}\), \(r = 1, 2, \ldots, 10\). Each element in P, K, C, and B belongs to the field \( GF\left (2^{8} \right ) \) and is represented as two hexadecimal characters. Let \(x^{\left (r\right )} =(x_{0}^{\left (r\right )} ,. . .,x_{15}^{\left (r\right )} )\) denote the input to round r (i.e. the state array at the start of round r). The initial state \(x^{\left (1\right )} =(x_{0}^{\left (1\right )} ,. . .,x_{15}^{\left (1\right )} )\) is computed by \(x_{i}^{\left (1\right )} =p_{i} \oplus k_{i}, 0 \leq i \leq 15\).

In a software implementation, field operations are replaced by relatively inexpensive table lookups thereby speeding encryption and decryption. In the version of OpenSSL targeted in this paper, a single 256-byte S-Box table is used. The ith entry (byte) of the table contains S(i) where S is the AES substitution function.
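As a minimal sketch of such a table, the following Python builds the 256-entry S-Box from its standard definition (multiplicative inverse in \(GF(2^{8})\) followed by the AES affine transform) and splits it into four 64-byte cache lines. The names `gf_mul`, `sbox_byte`, `SBOX`, and `TABLE_LINES` are illustrative and are not taken from OpenSSL, whose assembly code stores a precomputed table instead.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B  # reduce by the AES polynomial
        b >>= 1
    return result


def sbox_byte(a):
    """S(a): field inverse (0 maps to 0) followed by the affine transform."""
    inv = 0
    if a:
        inv = 1
        for _ in range(254):  # a^254 is the inverse of a in GF(2^8)
            inv = gf_mul(inv, a)
    out = inv
    for shift in range(1, 5):  # XOR with the four left rotations of inv
        out ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
    return out ^ 0x63


SBOX = [sbox_byte(i) for i in range(256)]

# With 64-byte cache lines, the 256-byte table spans exactly four lines.
TABLE_LINES = [SBOX[i:i + 64] for i in range(0, 256, 64)]
```

Computing the table at run time is done here only to keep the example self-contained.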

2.2 Cache Basics

All modern processors have multiple levels of cache intended to bridge the latency gap between main memory and the CPU. The machines targeted in this paper are Intel Core i3-2100 and Intel Core i7-3770. These have three levels of cache (private L1 32KB I-cache and 32KB D-cache, 256KB L2 cache and 3MB L3 cache shared between all cores).

The granularity of data transfer between different levels of cache is a block or line. On our targeted machines, the line size = 64 bytes. The lines of a cache are grouped into sets—a line from main memory is mapped to exactly one set though it may occupy any position in that set. The number of lines in a set is the associativity of the cache. In the machines we worked with, L1 and L2 caches are 8-way set associative while L3 is 12-way set associative.

To speed up AES encryption, the S-Box table is typically cache resident. It contains 256 entries and each entry occupies 1 byte. So the table fits into only 4 lines of cache. The first two bits of the table index specify the cache line number and the remaining 6 bits specify the element within the line.
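The index split can be illustrated with a short sketch. It assumes the table is aligned to a 64-byte boundary; `split_index` is a hypothetical helper name.

```python
def split_index(index):
    """Split an 8-bit S-Box index into (cache line number, offset in line)."""
    if not 0 <= index <= 0xFF:
        raise ValueError("index must fit in one byte")
    line = index >> 6      # two most significant bits select one of 4 lines
    offset = index & 0x3F  # six least significant bits select the byte
    return line, offset


# Example: 0xB7 = 0b10_110111 lies in line 2 at offset 55.
example = split_index(0xB7)
```

An access-driven spy observes only the first component, which is why each table access can leak at most two bits of the index.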

2.3 Types of Attacks and Attack Scenarios

Cache-based side channel attacks belong to several categories. Timing-driven [13] attacks measure the time to complete an encryption. Trace-driven [16] attacks create profiles of a cache hit or miss for every access to memory during an encryption. Access-driven [12] attacks need information only about which lines of cache have been accessed, not their precise order. The attacks presented in this paper belong to the last category.

Various techniques are used to determine which cache lines have been accessed by a victim process. In the Prime+Probe approach [12], the attacker fills the cache with its own data. It waits for the victim to perform an encryption whereby some of the attacker data is evicted. The attacker then probes each cache line—a higher reload time for a cache line indicates that the cache line was evicted by the victim. In the Evict and Time method [12], the attacker first measures the time (T1) to complete an encryption, evicts a specific cache set, and then again measures the time (T2) taken to complete an encryption on the same plaintext. If T2 is greater than T1, it concludes that the evicted line is used in encryption.

In the Flush+Reload technique [10, 17], the attacker first flushes a line from all levels of cache, then waits for the victim to perform the encryption, and finally calculates the reload time of the previously flushed line. A lower reload time indicates that the line is in cache and was brought by the victim.

We consider two possible attack scenarios. In the first, a victim process runs on behalf of a data storage service provider who securely stores documents from multiple clients and furnishes them on request after due authentication. The same key or set of keys is used to encrypt documents from different clients prior to storage. In the second scenario, two entities, A and B, exchange encrypted messages. The victim, on B’s machine, decrypts blocks of ciphertext received from A. Thus, in the first scenario, the spy attempts to obtain the cache line numbers during encryption of plaintext while in the second scenario, it obtains the line numbers during decryption of ciphertext.

2.4 Experiment Setup

To test our key retrieval algorithms, we used the following experiment setup. The victim, V, performs AES encryptions using the assembly version of OpenSSL (v-1.0.2p). A multithreaded spy, co-located on the same core as the victim, attempts to infer the line numbers of the AES table accessed. The experiments were performed on Intel(R) Core(TM) i3-2100 CPU @ 3.10GHz and Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz running Debian 8.0 with kernel version 3.18.26.

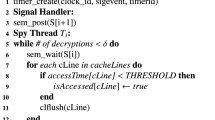

The spy program creates a high-resolution POSIX timer (used by all the spy threads) and an array of binary semaphores, where sem[i] is the semaphore associated with Thread i. All but one of the semaphores are initialized to 0. So all threads are blocked on their respective semaphores except for the one that is initialized to 1.

The following is the sequence of events involved in probing the cache lines accessed by V.

- (1) The unblocked thread, say Thread i,

  (a) probes each of the four cache lines of the table to determine which has/have been accessed by V,

  (b) flushes all four lines of the cache-resident table, and

  (c) sets a timer to \(\sim \)850 ns on the Core i7 (\(\sim \)1100 ns on the Core i3) and then blocks on its semaphore.

- (2) At this point, all spy threads are blocked on their semaphores and V is scheduled next (it resumes performing encryptions).

- (3) On expiration of the timer, the kernel sends a signal to a signal handler which unblocks Thread i+1. V is preempted and Thread i+1 begins execution.

The spy code spawns about 200 threads which execute in round robin fashion. Between two successive threads, V runs for about 850–1100 ns. Due to the large number of cache misses encountered by V, it is able to complete only about 1/160th of an encryption during this time. Thus, V should be scheduled roughly 160 times for it to complete a single encryption.
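The round-robin hand-off between spy threads can be sketched with Python's `threading.Semaphore`. This is only a structural illustration under simplifying assumptions: each thread releases its successor directly, whereas in the setup described above a timer signal handler does so after the victim has run, and the probe and flush steps are replaced by a log entry. The function name `run_round_robin` is hypothetical.

```python
import threading


def run_round_robin(num_threads=4, rounds=3):
    """Run threads strictly in order 0, 1, ..., n-1, 0, 1, ... via semaphores."""
    # Only sem[0] starts at 1, so exactly one thread is runnable at a time.
    sems = [threading.Semaphore(1 if i == 0 else 0) for i in range(num_threads)]
    log = []

    def spy(i):
        for _ in range(rounds):
            sems[i].acquire()   # block until it is this thread's turn
            log.append(i)       # stand-in for: probe lines, flush, arm timer
            sems[(i + 1) % num_threads].release()  # hand over to the next thread

    threads = [threading.Thread(target=spy, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


order = run_round_robin(4, 3)
```

Because only one semaphore is ever available, the log records the threads in strict rotation.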

A cache miss results in the next (or previous) line [18] being pre-fetched causing the spy to wrongly infer that the latter was accessed in the previous run of the victim. To defeat the effect of lines pre-fetched during the execution of the victim process, the number of accesses made by the victim during each of its runs was minimized. This was accomplished by limiting the POSIX timer interval to \(\sim \) 850/1100 ns.

The Intel Core i3/i7 incorporates aggressive prefetchers which track and remember the forward and backward strides of the 16 most recently accessed 4KB pages [19]. So, we programmed the spy to access 32 randomly selected pages between two consecutive accesses to the AES tables. Each spy thread receives about 20000 ns of CPU time.

3 Strategy I - Two Round Attack

The first attack strategy involves two steps. The first step determines which lines of the AES table were accessed by the victim in the first round to obtain the first two bits of each of the 16 bytes of the AES key. The second round attack snoops on the accesses made in the second round to obtain the remaining six bits of each byte of the AES key.

3.1 First Round Attack

Table 2 shows the bytes of a block of plaintext, P, in the order that they are processed by the OpenSSL software. The next row lists the bytes of the key, K, in the same order. This is followed by two rows containing the two most significant bits of each byte of the plaintext and the key. For brevity, we refer to the two most significant bits of a byte, x, as a twit and denote it \(x^{\prime }\). The remaining six bits of the byte are denoted \(x^{\prime \prime }\).

The sequence of cache line numbers accessed during the first round (R1) of encryption is shown in the sixth row and is the XOR of the corresponding twits of P and K. The sequence of cache line numbers accessed in round 2 (R2) is shown in the last row.

A spy thread attempts to infer the line or lines of the AES table accessed by V during its previous run. Table 3 indicates that the first 20 threads observe all four lines accessed. Lines 0, 1, and 2 are reported as accessed by the next two scheduled threads while only lines 1 and 2 are reported as accessed by the next thread and so on. (L1 = {0,1,2,3}, L2 = {0,1,2}, and L3 = {1,2}). In virtually all of our measurements, |L1| = 4, |L2| = 3, |L3| = 2, and |L4| = 1. Also, \(L_{1} \supset L_{2} \supset L_{3} \supset L_{4}\). Another observation is that the cardinalities of the Li’s exhibit a periodicity which helps identify the end of a round and the beginning of the next round.

The OpenSSL software pre-fetches all four lines of the S-Box table at the start of each round. Hence, the first thread scheduled in a round sees all four lines accessed. A spy thread flushes all four lines of the table before it is blocked. Further, the design of the spy code ensures that a run of the victim is sufficiently small so that only one or two lines are accessed. Yet, the next scheduled thread and indeed many more also see all four lines accessed. This is attributed to the out of order execution in modern processors.

When a cache miss occurs, the execution of the instruction causing the miss is suspended. To maximize CPU utilization, the processor looks for an opportunity to execute instructions further upstream from the stalled instruction. Some of those instructions also cause a cache miss due to the need to access cache lines flushed out by the previously executing spy thread. So, at a given point in time, there could be several outstanding requests for data transfer from main memory to cache. While these requests are being serviced, the victim is suddenly pre-empted. This causes the next spy thread to see more table accesses made during the previous run than were actually consumed during that run.

During the execution of a round, 16 byte-sized table elements are first accessed and loaded into the CPU registers. This is followed by a computation phase wherein field multiplications and additions involved in the MixColumn step are performed. Consider the last access to an element in a round. As before, while this element is being fetched, the processor attempts to execute instructions upstream from the current instruction. These instructions involve only computation, not memory access. So the spy thread scheduled next sees a solitary access to the table \((\lvert L_{4} \rvert = 1)\). So the last (or last few) accesses in a round will be unambiguously reported by spy threads but there exists considerable ambiguity in identifying the lines accessed early on in each round.

The goal of the First Round Attack is to deduce the twit of each byte of the AES key. For this purpose, we need the complete set of cache line accesses. However, as shown in Table 3, successive spy threads report that all four lines have been accessed—in effect revealing no useful information about the first eight or so accesses. We next devise a strategy whereby we can still obtain the twits of all 16 bytes of the AES key.

Starting with a random block of plaintext, we construct a set of 16 blocks such that the encryption of the ith block results in all of the last i cache accesses to the same line of the lookup table. From the line number of the (i − 1)th access, the twit of the (i − 1)th byte of the AES key is obtained and the next block of plaintext to be encrypted is crafted. We exemplify our approach with the same initial random plaintext and random key from Table 2.

Table 4 shows the cache access patterns as reported by the spy during an encryption of successive blocks of plaintext. The first row shows that the last access is to line 2 (l1 = 2). The twit of the eleventh byte of the key is computed as \(k_{11}^{\prime } = p_{11}^{\prime } \oplus l_{1} = 11 \oplus 10 = 01\). We wish all accesses to be eventually to line 3. For this purpose, we modify the twit of the eleventh byte of the plaintext to \(\pi _{11}^{\prime } = k_{11}^{\prime } \oplus 11 = 01 \oplus 11 = 10\).

Row 2 shows the access pattern during encryption of the modified plaintext. The last access is to line 3 (as desired) but the 2nd last access is to line 1 (l2 = 1). Row 2 shows the derivation of \(k_{6}^{\prime }\) and the value of \(\pi _{6}^{\prime }\) (the new twit value of p6).

As shown in Table 4, each successive plaintext is chosen so that the number of accesses to the last cache line within a row increases with row number. In the ideal case, the twits of all 16 bytes of the key are obtained with fewer than 16 blocks of plaintext and encryptions. In practice, however, this may not be the case. Experimental error could cause an unexpected pattern to occur. In that event, we roll back and proceed from an earlier row. On average, roughly 6 errors occur per sample. Overall, we require approximately 15 distinct plaintexts to obtain the twits of each of the 16 key bytes. Due to the rollbacks, the number of encryptions performed is about 21.5 on average per sample.
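The adaptive procedure above can be simulated in a few lines. The sketch below is noise-free and rests on stated assumptions: the oracle `observe` stands in for the spy and returns the line of the j-th last first-round access, which the real spy can read reliably only once all later accesses go to one line; `ORDER` is an assumed processing order whose last four entries (12, 1, 6, 11) agree with the text, while the remaining entries are a guess. Rollbacks after measurement errors are not modeled.

```python
# Assumed order in which key/plaintext bytes index the table in round 1.
ORDER = [0, 5, 10, 15, 4, 9, 14, 3, 8, 13, 2, 7, 12, 1, 6, 11]


def twit(byte):
    """Two most significant bits of a byte."""
    return byte >> 6


def make_oracle(key):
    """Idealized spy: line number of the j-th last round-1 table access."""
    def observe(plaintext, j):
        idx = ORDER[-j]
        return twit(plaintext[idx] ^ key[idx])
    return observe


def recover_key_twits(observe, plaintext, target_line=3):
    """Recover the twit of every key byte with adaptively chosen plaintexts."""
    pt = bytearray(plaintext)
    key_twits = {}
    for j in range(1, 17):
        idx = ORDER[-j]
        line = observe(bytes(pt), j)       # line of the j-th last access
        key_twits[idx] = twit(pt[idx]) ^ line
        # Craft the next block: steer this access to target_line as well.
        new_twit = key_twits[idx] ^ target_line
        pt[idx] = (new_twit << 6) | (pt[idx] & 0x3F)
    return key_twits, bytes(pt)


demo_key = bytes(range(0, 256, 16))
demo_pt = bytes((37 * i + 11) % 256 for i in range(16))
demo_twits, final_pt = recover_key_twits(make_oracle(demo_key), demo_pt)
```

In this idealized setting one query per key byte suffices; the rollbacks described above are what push the measured number of encryptions higher.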

3.2 Second Round Attack

3.2.1 Algorithm and Description

Inputs to a round expressed as a 4 × 4 byte matrix are indices to the AES table (S-Box). This matrix is also the output of the previous round. Based on the transformations that the state array undergoes in the first round, we can relate each element of the input of the second round to bytes of the plaintext and key.

Given that it is easiest to identify the line accesses made at the end of a round, we focus on expressions for the indices of the last 4 table accesses, i.e., \(x_{11}^{(2)}\), \(x_{6}^{(2)}\), \(x_{1}^{(2)}\), and \(x_{12}^{(2)}\) (Eqs. 1–4). Collectively, these equations involve all 16 bytes of the AES key. The known variables on the RHS of these equations are bytes of the plaintext and twits of the bytes of the AES key. The first two bits of the table index (LHS) comprise the cache line number obtained (with some ambiguity) from the spy input.

Our strategy is to compute the two most significant bits of the byte value of the RHS as a function of known quantities and the minimum possible number of unknown bits of the key. Algorithm 1 outlines the procedure to obtain a set of candidate keys containing the correct key with very high probability.

Algorithm 1 creates four relational schemas, each with five attributes. The first four attributes are the least significant six bits of different bytes of the AES key. The fifth attribute comprises the two most significant bits of the output of the S-Box applied to a byte of the key (for example s(k12)′ in Eq. 1). Four relations are created which are instances of the four schemas. Each is populated with all possible combinations of bit values of the attributes; thus, there are a total of \(2^{26}\) tuples in each relation (four 6-bit attributes and one 2-bit attribute give 4 × 6 + 2 = 26 bits). Each tuple is associated with a score (initially zero).

For each tuple in relation ri and for a given plaintext, the RHS of Eqn. i is evaluated by substituting values from the tuple, the plaintext, and bits of the key obtained from the Round 1 attack. The score of the tuple is incremented if the most significant two bits of the computed byte (RHS) match one of the line numbers reported by the spy for the specific cache line access during encryption of that plaintext. This is repeated for each tuple and for all the blocks of plaintext.
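The scoring loop can be sketched generically. In the sketch below, `toy_predict` is a purely illustrative stand-in for evaluating the right-hand side of Eqn. i and taking its two most significant bits; it is not the paper's equation, and the candidate space is shrunk to 64 values so that the example runs instantly.

```python
def score_candidates(candidates, observations, predict_line):
    """Add 1 to a candidate's score whenever its predicted line was reported."""
    scores = {c: 0 for c in candidates}
    for plaintext, reported_lines in observations:
        for c in candidates:
            if predict_line(c, plaintext) in reported_lines:
                scores[c] += 1
    return scores


def toy_predict(candidate, plaintext):
    """Hypothetical nonlinear predictor returning a line number in 0..3."""
    return (((candidate ^ plaintext) * 197 + 13) % 251) >> 6


secret = 37
# Each observation: (plaintext, set of lines reported by the spy).
# The true line is always present; p % 4 plays the role of a false positive.
observations = [(p, {toy_predict(secret, p), p % 4}) for p in range(40)]
scores = score_candidates(range(64), observations, toy_predict)
```

With no false negatives the correct candidate scores on every plaintext, while false positives raise the scores of incorrect candidates and blur the separation.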

After scores are computed for all tuples in the four relations, the top ni tuples in relation ri are selected. A relational join is performed on the surviving tuples between relations r1 and r4 and also between the reduced r2 and r3. Each join output includes eight 6-bit attributes. Together, the two join outputs contain possible values of the least significant six bits of all 16 bytes of the AES key.

Each tuple in r1 ⋈ r4 is paired with a tuple in r2 ⋈ r3 which, together with the bits obtained in the Round 1 attack, form a possible AES key. These potential keys are each tested for correctness by a verification program which produces a sequence of cache line numbers accessed during encryption of successive blocks of plaintext. In particular, the last two accesses in rounds 3–10 are matched against those provided by the spy. The correct key will be inferred because only in that case will the inputs provided by the spy match those provided by the verification program in all/most cases.

The join predicate is actually the conjunction of two sub-predicates. Consider, for example, r1 ⋈ r4. To join a tuple t1 from r1 with a tuple t2 from r4, we assemble k12 from \(k_{12}^{\prime \prime }\) (in t2) and \(k_{12}^{\prime }\) (from the First Round Attack). We then compute s(k12)′ and compare it with the value of the fifth attribute in t1. To check for a match of the second sub-predicate, compute s(k13)′ and compare it with the value of the fifth attribute in t2. Only if both sub-predicates are satisfied is the tuple formed by concatenating t1 and t2 included in r1 ⋈ r4. A similar computation is performed to compute r2 ⋈ r3.
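A minimal sketch of this two-part join predicate follows. Tuples are reduced to the fields the predicate needs: t1 = (low six bits of k13, guessed s(k12)′) and t2 = (low six bits of k12, guessed s(k13)′). Placing \(k_{13}^{\prime \prime }\) in t1 is an assumption, since the text only states where \(k_{12}^{\prime \prime }\) comes from, and all function names are illustrative.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def sbox_byte(a):
    """AES S-Box entry: field inverse followed by the affine transform."""
    inv = 0
    if a:
        inv = 1
        for _ in range(254):
            inv = gf_mul(inv, a)
    out = inv
    for shift in range(1, 5):
        out ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
    return out ^ 0x63


SBOX = [sbox_byte(i) for i in range(256)]


def twit(byte):
    return byte >> 6


def join_r1_r4(r1, r4, k12_twit, k13_twit):
    """Keep (t1, t2) pairs whose guessed S-Box twits are mutually consistent."""
    joined = []
    for t1 in r1:      # t1 = (k13 low six bits, guess for s(k12)')
        for t2 in r4:  # t2 = (k12 low six bits, guess for s(k13)')
            k12 = (k12_twit << 6) | t2[0]
            k13 = (k13_twit << 6) | t1[0]
            if twit(SBOX[k12]) == t1[1] and twit(SBOX[k13]) == t2[1]:
                joined.append((t1, t2))
    return joined


# Toy instance with true bytes k12 = 0x53 (twit 1) and k13 = 0x00 (twit 0).
r1 = [(0x00, 3), (0x00, 2)]
r4 = [(0x13, 1), (0x13, 0)]
result = join_r1_r4(r1, r4, k12_twit=1, k13_twit=0)
```

Only the pair whose fifth attributes equal the true values s(0x53)′ = 3 and s(0x00)′ = 1 survives the join in this toy instance.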

3.2.2 Experiments and Results

A basic strategy is to select only the top scoring tuples from each relation and perform the joins and Cartesian product on these reduced relations. Table 5 shows the overall success probability of this strategy. It also shows the number of subkeys with the top score in each relation ri, 1 ≤ i ≤ 4, for different numbers of plaintexts, b, encrypted with the same key. The reported results are averaged over 1000 samples. The numbers in parentheses represent the probability that the correct subkey appears in the set of top scorers.

The number of top scorers is highest in r4 across all values of b. In each relation, the number of top scorers decreases with increasing b. Also, the probability of getting the correct key in the set of top scorers increases with b. Thus, it is mostly possible to find the correct subkeys in r1 and r2 with only about 30 blocks of plaintext but it requires about 50 and 70 plaintexts respectively to harvest the correct subkeys from r3 and r4.

After picking the top scorers in r1 and r4, we found that r1 ⋈ r4 returned zero tuples in some samples (Table 5 shows that the cardinality of r1 ⋈ r4 is less than 1 for b = 30,40,...). Another interesting observation is that the probability of the correct subkey appearing in the set of top scorers is close to the overall success probability and hence is the key factor in the overall success of obtaining the complete AES key.

The probability of successfully retrieving the AES key is directly related to the quality of inputs provided by the spy threads. False positives (spurious cache line accesses) and false negatives (failing to report the correct line accesses) both have a detrimental effect. Further, the above strategy of selecting subkeys with the top scores yields very limited success. To improve the success probability of finding the correct key, we should include many more subkeys especially from r4.

The false positive and false negative rates are dependent on noise-induced experimental error and a host of design parameters such as the number of spy threads and the timer setting (Section 2). The model presented next provides insight into the dependence of success probability on false positive/false negative rate and also on ni—the number of top-ranking subkeys collected from each relation ri (prior to the join).

Let Xc and \(X_{in}^{(k)}\), 1 ≤ k ≤ s − 1, \(s = 2^{26}\), respectively denote the random variables associated with the scores of the correct and incorrect subkey values and let pc and pin denote their respective success probabilities. These variables are binomially distributed, i.e., \(X_{c} \sim B(p_{c}, b)\) and \(X_{in}^{(k)} \sim B(p_{in}, b)\), 1 ≤ k ≤ s − 1 where b is the number of blocks of plaintext used.

Let \(f_{n}^{(i)}\), \(f_{p}^{(i)}\) respectively denote the experimentally determined average rates of false negatives and false positives corresponding to Eqn. i. Where understood, we drop the superscript for brevity. The rate of false negatives may be interpreted as the probability of occurrence of a false negative. The occurrence of a false negative and the correct subkey receiving a boost are events that are complements of each other. Hence,

\[ p_{c} = 1 - f_{n}. \]

Each line number reported by the spy contributes to an increase by 1 of the scores of roughly 25% of the incorrect subkeys (since the number of cache line numbers is 4). In the event of a false negative, the number of reported accesses on average is fp while it is fp + 1 in the absence of a false negative. So,

\[ p_{in} = f_{n} \cdot \frac{f_{p}}{4} + (1 - f_{n}) \cdot \frac{f_{p} + 1}{4} = \frac{f_{p} + 1 - f_{n}}{4}. \]

In Algorithm 1, we pick the top ni scorers. We are interested in the probability of the correct subkey being one among the top ni scorers. This is equivalent to having at least s − ni incorrect subkeys scoring less than the correct subkey. Let \(Y_{in}^{(k)}\) denote the random variable associated with the score of the kth lowest scorer among incorrect subkey values. So, the required probability is

\[ P\left (Y_{in}^{(s-n_{i})} < X_{c}\right ). \]

\(Y_{in}^{(1)}\), \(Y_{in}^{(2)}\), … , \(Y_{in}^{(k)}\), … , \(Y_{in}^{(s-1)}\) are called order statistics of \(X_{in}^{(1)}\), \(X_{in}^{(2)}\), … , \(X_{in}^{(k)}\), … , \(X_{in}^{(s-1)}\). The following result from order statistics is used in our model.

Lemma 1

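Let \(X^{(1)}, \ldots , X^{(s-1)}\) be s − 1 random samples from a discrete distribution with cumulative distribution function \(F_{X}(n) = P(X \leq n) = P_{n}\). Let \(Y^{(1)}, \ldots , Y^{(s-1)}\) denote the order statistics of the sample. Then, for 1 ≤ j ≤ s − 1,

\[ P\left (Y^{(j)} \leq n\right ) = \sum _{k=j}^{s-1} \binom {s-1}{k} P_{n}^{k} (1 - P_{n})^{s-1-k}. \]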

To have the correct subkey value among the top ni scorers when Xc = n + 1, we should have \(Y_{in}^{(s-n_{i})} \leq n\). So, the probability of the correct subkey value being among the ni top scorers is

\[ \sum _{n=0}^{b-1} P(X_{c} = n+1)\, P\left (Y_{in}^{(s-n_{i})} \leq n\right ). \]

Substituting from Lemma 1 into the above expression yields the following.

Theorem 1

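After scoring \(s = 2^{26}\) candidate subkey values using b blocks of plaintext, the probability of the correct subkey ending up as one of the top ni scorers is

\[ \sum _{n=0}^{b-1} \binom {b}{n+1} p_{c}^{n+1} (1 - p_{c})^{b-n-1} \sum _{k=s-n_{i}}^{s-1} \binom {s-1}{k} P_{n}^{k} (1 - P_{n})^{s-1-k} \]

where \(P_{n} = P(X_{in} \leq n)\).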
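The model can be evaluated numerically along the following lines. This is a sketch under explicit assumptions rather than the authors' code: it takes \(p_{c} = 1 - f_{n}\) and \(p_{in} = (f_{p} + 1 - f_{n})/4\) as described in words above, treats the incorrect scores as independent, and rewrites the order-statistics tail as "at most ni − 1 incorrect subkeys score above n" so that only ni terms are summed even when s is large. The function names are illustrative.

```python
import math


def binom_pmf(n, k, p):
    """P(Binomial(n, p) = k), computed in log space for large n."""
    if p <= 0.0:
        return 1.0 if k == 0 else 0.0
    if p >= 1.0:
        return 1.0 if k == n else 0.0
    log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                + k * math.log(p) + (n - k) * math.log1p(-p))
    return math.exp(log_term)


def binom_cdf(n, k, p):
    """P(Binomial(n, p) <= k), clamped to 1 against rounding error."""
    return min(1.0, sum(binom_pmf(n, j, p) for j in range(k + 1)))


def success_probability(b, s, n_top, f_n, f_p):
    """Probability that the correct subkey is among the top n_top of s."""
    p_c = 1.0 - f_n                          # assumed boost prob., correct subkey
    p_in = min(1.0, (f_p + 1.0 - f_n) / 4.0)  # assumed boost prob., incorrect
    total = 0.0
    for n in range(b):                        # condition on X_c = n + 1
        p_n = binom_cdf(b, n, p_in)           # P(X_in <= n)
        # At least s - n_top of the s - 1 incorrect scores are <= n
        # <=> at most n_top - 1 of them exceed n.
        tail = binom_cdf(s - 1, n_top - 1, 1.0 - p_n)
        total += binom_pmf(b, n + 1, p_c) * tail
    return total


# Small illustrative run (s is far below 2**26 to keep it fast).
curve = [success_probability(b=10, s=64, n_top=n, f_n=0.08, f_p=0.81)
         for n in (1, 8, 64)]
```

Keeping every candidate (ni = s) makes the tail equal to 1, so the result reduces to the probability that the correct subkey scores at least once; this gives a simple sanity check on the implementation.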

Success in finding the correct key is limited by the difficulty in finding the correct subkey in r4. So we studied the effect of \(f_{n}^{(4)}\), \(f_{p}^{(4)}\), and n4 on the success probability of the latter. We generated 1000 samples—each sample comprises a random key and 100 randomly generated blocks of plaintext. We created two subsets of samples using only the first 25 blocks for each sample. Each sample in the first had resulted in success when n4 = 100 and each sample in the second resulted in success when n4 = 10000 but failed when n4 = 100. The average values of \(f_{n}^{(4)}\) for the subsets were 0.08 and 0.18 respectively while the average values of \(f_{p}^{(4)}\) were 0.81 and 0.94 respectively. Based on the analytical model, success probability versus n4 for each set of false positive and false negative rates was plotted (Fig. 1).

To obtain a reasonable subset of samples with low \(f_{n}^{(4)}\), we selected samples with \(f_{n}^{(4)}\) between 0.04 and 0.12 (average value = 0.08). Our preliminary findings indicated that the success probability is not highly correlated with \(f_{p}^{(4)}\), so it was not used as a filter in selecting the subset. We plotted the number of samples resulting in success with n4 equal to each value on the x-axis of Fig. 1. A similar plot was obtained for samples with \(f_{n}^{(4)}\) in the higher range between 0.16 and 0.24. As shown in Fig. 1, the graphs for the two subsets are well separated with a close match between model and experimental results. The model estimates tend to be generally conservative and especially so in the case of higher false negatives at higher values of n4.

We conducted a limited investigation on the effect of individual success probabilities (in r1, r2, r3, r4) on the overall success probability. To achieve individual success probability = 0.9 for each ri, at b = 30, we required n1 = n2 = 10, \(n_{3} = 10^{3}\), and \(n_{4} = 10^{5}\) (Fig. 2a). For these values, we succeeded in retrieving the correct key in about 80% of the 1000 samples under consideration. This overall success probability is considerably higher than it would be if the successes in the four relations were independently distributed. In the latter case, the success probability would have been \((0.9)^{4}\), or about 66%. This is explained by the observation that the false negatives (and false positives) in the four relations are correlated across a large number of samples. For b = 40 and with the same choice of ni values (Fig. 2b), the overall success probability is about 90%.

4 Strategy II - Single Round Attack

The next attack is both simpler and more realistic than the attack of the previous section. It is simpler because it relies on the cache line accesses occurring in only the second round of encryption, and only on the last four of those. It is more realistic since it obviates the need to craft plaintext based on cache accesses made during encryption of the previous block of plaintext.

As in strategy I, we create four relations, each with five attributes. However, unlike the Round 2 Attack in strategy I, we need to determine all eight bits (not just six) of each byte of the AES key. So the relational schema employed are

The initial number of tuples in each relation is now \(2^{34}\) (four 8-bit attributes and one 2-bit attribute), unlike \(2^{26}\) in round 2 of Strategy I. Otherwise, the steps in this approach are very similar to those of Algorithm 1.

We generated a total of 145 samples. An experiment involved scoring the \(2^{34}\) tuples in each relation for a varying number of plaintexts. Figure 3a and b respectively show plots of the rank of the correct subkey in r3 and r4 as a function of the number of blocks of plaintext used (for 6 samples).

With 40 blocks, an individual success probability of 0.9 is achieved in each relation, with n1 = n2 = 1, n3 = \(10^{3}\), n4 = \(10^{6}\). The overall success probability at these values is around 0.85. With the same choice of ni values but with 50 blocks, the overall success probability increases to 0.9.

In this strategy, the “Joins and Cartesian Product” step is slightly different from that of Algorithm 1. Due to the nature of the fifth attribute in the relations considered, the join predicate is the conjunction of four sub-predicates. Each sub-predicate checks for the equality of two twits, where the first twit is the fifth attribute of a tuple. To illustrate the computation of, say, the second sub-predicate, consider joining four tuples t1, t2, t3, and t4 from r1, r2, r3, and r4 respectively. The expression s(k15) ⊕ k2 ⊕ k6 is computed using the value of k15 in t3, k2 in t1, and k6 in t4. The two most significant bits of the result are then compared with the value of attribute a2 in t2. If they match, the three other sub-predicates, involving a1, a3, and a4, are computed. Only if all four sub-predicates are satisfied is the tuple obtained by concatenating t1, t2, t3, and t4 included in r1 ⋈ r2 ⋈ r3 ⋈ r4.
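The second sub-predicate described above can be sketched in Python as follows. This is a minimal illustration rather than the implementation used in the experiments. The dictionary layout of the tuples and the names `build_sbox`, `top_twit`, and `second_subpredicate` are hypothetical, s(·) is assumed to be the standard AES S-box, and the other three sub-predicates are omitted because their expressions are not spelled out here.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def build_sbox():
    """Build the standard AES S-box: field inverse, then the affine map."""
    sbox = []
    for x in range(256):
        inv = 0
        if x:
            inv = 1
            for _ in range(254):  # x^254 is the multiplicative inverse of x
                inv = gf_mul(inv, x)
        y = 0x63
        for shift in range(5):  # inv ^ rotl(inv,1) ^ ... ^ rotl(inv,4) ^ 0x63
            y ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
        sbox.append(y)
    return sbox


SBOX = build_sbox()


def top_twit(byte):
    """Return the two most significant bits of a byte as a value in 0..3."""
    return byte >> 6


def second_subpredicate(t1, t2, t3, t4):
    """Check whether the top twit of s(k15) XOR k2 XOR k6 equals a2.

    Hypothetical layout: each tuple is a dict; only the fields named in
    the text are read (k2 from t1, a2 from t2, k15 from t3, k6 from t4).
    """
    value = SBOX[t3["k15"]] ^ t1["k2"] ^ t4["k6"]
    return top_twit(value) == t2["a2"]


# Example: s(0x00) = 0x63, whose two most significant bits are 01.
match = second_subpredicate({"k2": 0x00}, {"a2": 1}, {"k15": 0x00}, {"k6": 0x00})
```

Evaluating the cheapest sub-predicate first and abandoning the combination on a mismatch, as the text describes, avoids computing the remaining three for roughly three quarters of the candidate combinations.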

After the Scoring and Elimination step but before the join (for the same choice of ni values as above), we have \(1 \times 1 \times 10^{3} \times 10^{6} = 10^{9} \approx 2^{30}\) candidate keys. The probability of a candidate satisfying a sub-predicate is 0.25, since we have four roughly equi-probable outcomes. So, on average, the number of candidate keys reduces by a factor of \(2^{8}\) after the join involving a match on four sub-predicates. So, roughly \(2^{22}\) candidate keys survive for Verification.
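Written out, the estimate above runs as follows. This sketch assumes, as the text does, that the four sub-predicates are satisfied independently, each with probability 1/4.

\[
n_1 n_2 n_3 n_4 = 1 \cdot 1 \cdot 10^{3} \cdot 10^{6} = 10^{9} \approx 2^{29.9},
\qquad
\left(\tfrac{1}{4}\right)^{4} = 2^{-8},
\qquad
10^{9} \cdot 2^{-8} \approx 3.9 \times 10^{6} \approx 2^{21.9} .
\]

So about four million candidate keys are expected to reach the Verification step.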

In the Verification step, we compare the last 2 accesses of rounds 3–10 (8 rounds). Using this information, we can score candidate keys as before. Each candidate key’s score starts at zero and is incremented whenever a predicted access matches an access reported by the spy. For each block of plaintext, a candidate can score at most 8 × 2 = 16 times. Even if we consider accesses corresponding to only 10 blocks (which were already used in the initial scoring), each candidate key can score up to 160 times. This maximum achievable score is considerably higher than the maximum score of, say, 50 with r4 using strategy I (relation size = \(2^{26}\) and b = 50).
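The scoring rule of the Verification step can be sketched as below. The data layout is an assumption made for illustration: `predicted` holds, per block, the 16 cache lines a candidate key would touch in the last two accesses of rounds 3–10, and `observed` holds, per block, 16 sets of lines reported by the spy for the same slots. Computing `predicted` requires running AES with the candidate key and is not shown.

```python
def max_score(num_blocks, rounds=8, accesses_per_round=2):
    """Upper bound on a candidate's score: one point per compared access."""
    return num_blocks * rounds * accesses_per_round


def verification_score(predicted, observed):
    """Count the access slots where the candidate's line was reported by the spy.

    predicted: list (per block) of 16 line indices (hypothetical layout).
    observed:  list (per block) of 16 sets of reported line indices.
    """
    score = 0
    for pred_block, obs_block in zip(predicted, observed):
        for line, reported in zip(pred_block, obs_block):
            if line in reported:
                score += 1
    return score


# Toy data: 10 blocks in which every predicted access is confirmed.
predicted = [[0] * 16 for _ in range(10)]
observed = [[{0, 2}] * 16 for _ in range(10)]
perfect = verification_score(predicted, observed)
```

With the toy data, `perfect` equals `max_score(10)`, the 160-point ceiling mentioned in the text. A wrong candidate would be expected to fall well short of it.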

In practice, a scoreboard for each relation ri with five attributes (each stored in 1 byte) requires \(5 \times 2^{34}\) bytes, or about 85.9 GB of storage. It is not feasible to maintain such a massive scoreboard in main memory, and moving part of it to secondary memory would increase the overall time. As the number of plaintext blocks increases, the number of top-scoring subkeys shrinks greatly, so only the subkeys with higher scores need to be saved. Another possibility is to record only the count of subkeys at each score on a first pass through the tuples and then store only the top scorers on a second pass.
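The two-pass idea can be sketched as follows. This is an illustrative Python outline under stated assumptions, not the implementation used here: `candidates` is a hypothetical callable that returns a fresh iterator over tuples, `score` is a hypothetical scoring function, and `n_keep` plays the role of ni.

```python
from collections import Counter

# Size of a full scoreboard as computed in the text: 5 bytes x 2^34 tuples.
FULL_SCOREBOARD_BYTES = 5 * 2**34


def top_scorers_two_pass(candidates, score, n_keep):
    """Keep roughly the n_keep best tuples without storing every score.

    Pass 1 builds only a histogram of score values (small, since scores
    are bounded by the number of blocks). Pass 2 re-scores the tuples and
    stores those at or above the threshold; ties at the threshold are kept.
    """
    histogram = Counter(score(c) for c in candidates())

    threshold = None
    kept = 0
    for value in sorted(histogram, reverse=True):
        threshold = value
        kept += histogram[value]
        if kept >= n_keep:
            break

    survivors = []
    for c in candidates():
        s = score(c)
        if s >= threshold:
            survivors.append((c, s))
    return survivors


# Toy usage: ten "tuples" whose score is their value modulo 4.
result = top_scorers_two_pass(lambda: range(10), lambda c: c % 4, 3)
```

The trade-off is that every tuple is scored twice, so memory is saved at the cost of roughly doubling the scoring time.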

Compared with Algorithm 1, the major drawback of this strategy is the time required to retrieve the correct key. Algorithm 1 was implemented in Python. It took 40 to 50 min on an Intel(R) Core(TM) i5-4440 CPU @ 3.10 GHz system to retrieve a key using 50 blocks of plaintext. We rewrote in C the compute-intensive portion of Algorithm 1 (lines 9 to 18), which scores \(2^{26}\) possible subkeys per equation. This was integrated into the Python code to bring the time taken to retrieve the subkeys down to under a minute. The same approach was used for the strategy II implementation, where \(2^{34}\) subkeys were scored. It took around 3–4 hrs to get the key using 50 blocks of plaintext on a 4-core machine. Since the application is highly parallelizable, the speedup is expected to be close to the number of cores, even with around 50 cores.

5 Related Work

Hu [20] was the first to point out that cache memory is a potential vulnerability in the context of covert channels used to extract sensitive information. Later, Kocher [21] demonstrated that the data-dependent timing behavior of cryptographic algorithms could be exploited against various public key systems. Building on this work, Kelsey et al. [22] mentioned the prospect of using cache memory to perform attacks based on cache hits in S-box ciphers like Blowfish. One formal study of such attacks using cache misses was conducted by Page [16].

Tromer et al. [9] proposed and analyzed access-driven cache attacks on the first two rounds of AES. They introduced the Prime+Probe technique for cache attacks and targeted the four-table (OpenSSL-0.9.8) implementation, which spans 64 cache lines. As a countermeasure, they suggested a 256-byte S-Box table (spanning 4 cache lines) and pre-fetching all table entries before every round. Neve et al. [23] designed an improved access-driven cache attack on the last round of AES on a single-threaded processor.

Gullasch et al. [10] proposed an efficient access-driven cache-based attack for the case where attacker and victim use a shared crypto library. They targeted the OpenSSL-0.9.8n four-table (4 KB lookup table) implementation and the compressed 2 KB lookup table-based implementation of AES to retrieve the key using 100 blocks of plaintext. However, their key retrieval algorithm failed to recover the key with the S-box implementation. Yarom and Falkner [17] introduced the Flush+Reload technique, which is effective across multiple cores and virtual machine boundaries. They conducted a cross-core attack on the Last Level Cache (LLC) with the spy and the victim executing concurrently on two different cores. Later, Irazoqui et al. [8] used the Flush+Reload technique for a cross-virtual-machine attack and recovered the AES secret key with \(2^{19}\) encryptions.

Irazoqui et al. [24] introduced a new shared Last Level Cache attack that exploits huge pages to work across virtual machines using the Prime+Probe technique. A similar attack was proposed by Liu et al. [25] on various versions of GnuPG. Kayaalp et al. [26] introduced an attack on the LLC that uses neither huge pages, nor shared data, nor knowledge of virtual-to-physical page mappings.

Götzfried et al. [27] demonstrated that Intel SGX enclaves are vulnerable to cache-based side channel attacks by attacking OpenSSL-0.9.7a AES using the Prime+Probe technique while the victim is running inside the enclave. They assumed that the victim and attacker run as a single process in two different threads on two logical CPUs sharing the same physical core. The above attacks targeted OpenSSL’s 4-table or 5-table (T-Table)-based C implementation of AES. Moghimi et al. [28] attacked both the T-Table-based and S-Box implementations of AES using the Prime+Probe method in Intel SGX. They modified the kernel to interrupt the victim running inside the SGX enclave and obtain information about cache accesses during encryption. With noise-free simulated data, they recovered 64 bits of the key with 500 blocks of plaintext, 80 bits of the key with 1500 blocks of plaintext, and 96 bits of the key with thousands of blocks of plaintext. Our attack does not use a compromised OS and recovers the full key with 40–50 plaintext blocks.

6 Conclusions

We successfully launched two cache-based side channel attacks on the assembly implementation of AES which uses a single 256-byte lookup table. The first of these attacks involves two rounds. In the first round, we crafted about 15 blocks of plaintext to recover the first two bits of each of the 16 bytes of the AES key. In the second round, we obtained the remaining 6 bits of each byte of the key with 40 blocks.

The novelty in the second attack (Single Round Attack) is that it involved spying on the cache accesses in the second round alone. We obtained all 128 bits of the AES key with about 50 blocks of random plaintext and a 90% success rate. The main advantage of this attack is that it does not require specially crafted plaintext, so the attack scenario is more realistic. On the other hand, the main drawback of the Single Round Attack is that the off-line key retrieval takes about 5 hrs on a 4-core machine. However, since it is highly parallelizable, we expect it to take under 30 min on 50 cores.

Notes

When we targeted lines 0, 1, or 2, we observed that lines 1, 2, or 3 respectively would be pre-fetched by the hardware causing ambiguity. But, when we targeted line 3, we noticed that line 4 was pre-fetched. That, however, did not affect our results since line 4 is not part of the lookup table.

References

1. Biryukov A, Dunkelman O, Keller N, Khovratovich D, Shamir A (2009) Key recovery attacks of practical complexity on AES variants with up to 10 rounds. https://eprint.iacr.org/2009/374

2. Bogdanov A, Khovratovich D, Rechberger C (2011) Biclique cryptanalysis of the full AES. In: International conference on the theory and application of cryptology and information security. Springer, pp 344–371

3. Gilbert H, Peyrin T (2009) Super-Sbox cryptanalysis: improved attacks for AES-like permutations. https://eprint.iacr.org/2009/531

4. Tao B, Wu H (2015) Improving the biclique cryptanalysis of AES. In: Australasian conference on information security and privacy. Springer, pp 39–56

5. OpenSSL Software Foundation. OpenSSL Project. https://www.openssl.org/

6. Ashokkumar C, Giri RP, Menezes B (2016) Highly efficient algorithms for AES key retrieval in cache access attacks. In: 2016 IEEE European symposium on security and privacy (EuroS&P), pp 261–275

7. RedHat (2014) It’s all a question of time - AES timing attacks on OpenSSL. https://access.redhat.com/blogs/766093/posts/1976303

8. Irazoqui G, Inci MS, Eisenbarth T, Sunar B (2014) Wait a minute! A fast, Cross-VM attack on AES. In: Research in attacks, intrusions and defenses. Springer, pp 299–319

9. Tromer E, Osvik D, Shamir A (2010) Efficient cache attacks on AES and countermeasures. J Cryptol 23(1):37–71

10. Gullasch D, Bangerter E, Krenn S (2011) Cache games – bringing access-based cache attacks on AES to practice. In: Proceedings of the 2011 IEEE symposium on security and privacy, Washington, DC, USA: IEEE Computer Society SP ’11, pp 490–505

11. Apecechea GI, Inci MS, Eisenbarth T, Sunar B (2014) Fine grain cross-VM attacks on Xen and VMware are possible! IACR Cryptology ePrint Archive, 248

12. Osvik D, Shamir A, Tromer E (2006) Cache attacks and countermeasures: the case of AES. In: Pointcheval D (ed) Topics in cryptology CT-RSA 2006. Vol. 3860 of Lecture notes in computer science, Springer, pp 1–20

13. Bernstein DJ (2005) Cache-timing attacks on AES

14. Acıiçmez O, Brumley BB, Grabher P (2010) New results on instruction cache attacks. In: Cryptographic hardware and embedded systems, CHES 2010. Springer, pp 110–124

15. Neve M, Seifert JP, Wang Z (2006) A refined look at Bernstein’s AES side-channel analysis. In: Proceedings of the ACM symposium on information, computer and communications security. ACM, pp 369–369

16. Page D (2002) Theoretical use of cache memory as a cryptanalytic side-channel. IACR Cryptology ePrint Archive, 169

17. Yarom Y, Falkner KE (2013) Flush+Reload: a high resolution, low noise, L3 cache side-channel attack. IACR Cryptology ePrint Archive, 448

18. Bhattacharya S, Rebeiro C, Mukhopadhyay D (2016) A formal security analysis of even-odd sequential prefetching in profiled cache-timing attacks. In: Proceedings of the hardware and architectural support for security and privacy 2016, ACM, p 6

19. Intel Corporation (2016) Intel® 64 and IA-32 architectures optimization reference manual. Number 248966-033

20. Hu WM (1992) Lattice scheduling and covert channels. In: Proceedings of the IEEE symposium on security and privacy, Washington, DC, USA: IEEE Computer Society SP ’92, pp 52–61

21. Kocher PC (1996) Timing attacks on implementations of Diffie-Hellman, RSA, DSS, and other systems. In: Proceedings of the 16th annual international cryptology conference on advances in cryptology, London, UK: Springer CRYPTO ’96, pp 104–113

22. Kelsey J, Schneier B, Wagner D, Hall C (2000) Side channel cryptanalysis of product ciphers. J Comput Secur 8:141–158

23. Neve M, Seifert JP (2006) Advances on access-driven cache attacks on AES. In: International workshop on selected areas in cryptography. Springer, pp 147–162

24. Irazoqui G, Eisenbarth T, Sunar B (2015) S$A: a shared cache attack that works across cores and defies VM Sandboxing–and its application to AES. In: IEEE symposium on security and privacy. IEEE, pp 591–604

25. Liu F, Yarom Y, Ge Q, Heiser G, Lee RB (2015) Last-level cache side-channel attacks are practical. In: IEEE symposium on security and privacy, pp 605–622

26. Kayaalp M, Abu-Ghazaleh N, Ponomarev D, Jaleel A (2016) A high-resolution side-channel attack on last-level cache. In: Proceedings of the 53rd annual design automation conference. ACM, p 72

27. Götzfried J, Eckert M, Schinzel S, Müller T (2017) Cache attacks on Intel SGX. In: Proceedings of the 10th European workshop on systems security. ACM, p 2

28. Moghimi A, Irazoqui G, Eisenbarth T (2017) CacheZoom: How SGX amplifies the power of cache attacks. In: International conference on cryptographic hardware and embedded systems. Springer, pp 69–90

Cite this article

C., A., Roy, B., Venkatesh, M.B.S. et al. “S-Box” Implementation of AES Is Not Side Channel Resistant. J Hardw Syst Secur 4, 86–97 (2020). https://doi.org/10.1007/s41635-019-00082-w

DOI: https://doi.org/10.1007/s41635-019-00082-w