Abstract

We deduce a structurally inductive description of the determinantal probability measure associated with Kalai’s celebrated enumeration result for higher-dimensional spanning trees of the \((n-1)\)-simplex. As a consequence, we derive the marginal distributions of the simplex links in such random trees. Along the way, we also characterize the higher-dimensional spanning trees of every other simplicial cone in terms of the higher-dimensional rooted forests of the underlying simplicial complex. We also apply these new results to random topology, the spectral analysis of random graphs, and the theory of high dimensional expanders. One particularly interesting corollary of these results is that the fundamental group of a union of \(o(\log n)\) determinantal 2-trees has Kazhdan’s property (T) with high probability.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Forty years ago, Kalai (1983) introduced, to spectacular effect, a generalization of the graph-theoretic notion of a tree to higher-dimensional simplicial complexes, called \({\mathbb {Q}}\)-acyclic simplicial complexes for the triviality of their rational reduced homology groups in every dimension. However, recent authors (Linial and Peled 2019; Kahle and Newman 2022; Mészáros 2022) appear to have settled on simply calling these hypertrees. For \(0\le k<n\), let \({\mathscr {T}}_{n,k}\) denote the set of k-dimensional hypertrees on the vertex set \([n]:=\{1,2,\dots n\}\). Kalai noticed, among other things, that \({\tilde{H}}_{k-1}(T)\) (assume integer coefficients throughout) is a finite group for all \(T\in {\mathscr {T}}_{n,k}\) and moreover that

which is seen to be a generalization of Caley’s formula by recalling that \(\vert {{\tilde{H}}}_0(T)\vert =1\) for all \(T\in {\mathscr {T}}_{n,1}\), due to trees being connected. This suggests a natural probability measure (Lyons 2003, 2009) \(\nu =\nu _{n,k}\), on \({\mathscr {T}}_{n,k}\) defined on atoms by \(\nu _{n,k}(T)=n^{-{{n-2}\atopwithdelims ()k}}\vert {{\tilde{H}}}_{k-1}(T)\vert ^2\).

Seemingly unrelated to this measure, consider the k-dimensional Linial-Meshulam complex, denoted \({\mathcal {Y}}_k(n,p)\) and defined (Linial and Meshulam 2006; Meshulam and Wallach 2009) to be the random k-dimensional simplicial complex on [n] with full \((k-1)\)-skeleton wherein each k-face is included independently and with probability p. Let \(\mu _{n,k}\) denote the probability density for \({\mathcal {Y}}_k(n,(n+1)^{-1})\).

Let \({\mathcal {T}}_{n,k}\) denote a random complex distributed according to \(\nu _{n,k}\), and let \({\mathcal {Y}}_{n,k}\) denote a random complex distributed according to \(\mu _{n,k}\). Our main result is the following structure theorem for random hypertrees distributed according to \(\nu _{n,k}\) (see Sect. 2 for definitions):

Theorem 1

Assume \(n>k+1\). Then

where \({\mathcal {T}}_{n-1,k-1},{\mathcal {Y}}_{n-1,k-1}\) are statistically independent. Also

where \({\mathcal {T}}_{n-1,k},{\mathcal {Y}}_{n-1,k}\) are statistically independent.

The first claim of this theorem states that a vertex link in \({\mathcal {T}}_{n,k}\) can be simulated by first sampling \({\mathcal {T}}_{n-1,k-1}\) and then adding each missing \((k-1)\)-face independently with probability 1/n. This effectively gives us the law of the set of faces in \({\mathcal {T}}_{n,k}\) which contain the vertex n. The second claim tells us that the remaining faces of \({\mathcal {T}}_{n,k}\) which do not contain the vertex n can be simulated by sampling \({\mathcal {T}}_{n-1,k}\) and then deleting each of its k-faces independently with probability 1/n.

This simple idea of decomposing a hypertree into two collections of faces—those which contain a designated vertex and those which do not—turns out to be quite powerful. However, the idea is nothing new. For example, we can see this decomposition in use by Linial and Peled (2019) at Kalai’s suggestion to inductively construct collapsible hypertrees.

By iterating our formula for the law of a vertex link, we can also determine a similar expression for the law of the link of a simplex of arbitrary dimension.

Theorem 2

The law of the link of any \((j-1)\)-dimensional simplex in \({\mathcal {T}}_{n,k}\) is equivalent to that of \({\mathcal {T}}_{n-j,k-j}\cup {\mathcal {Y}}_{k-j}(n-j,j/n)\) where these two \((k-j)\)-dimensional complexes are independent.

Proof

We prove this by induction on the dimension of the simplex. By relabeling vertices, we may assume that the \((j-1)\)-simplex we are interested in is \(\{n-j+1,\dots ,n\}\). The base case \(j=1\) follows from Theorem 1. Inducting, we have

It is well known and easily verified from the definitions that the law of a vertex link of a Linial–Meshulam complex is a codimension-1 Linial–Meshulam complex on one fewer vertices with the same face probability. So, since the link operation is distributive over unions of k-complexes (see Sect. 2), this is equivalent to

where the last equality in law follows from the independence of the two Linial–Meshulam complexes and the inclusion–exclusion principle. \(\square \)

1.1 Applications to random topology

Theorem 2 has several applications to random topology. Indeed, Garland’s method (Garland 1973) and its refinements (see Żuk 1996, 2003; Oppenheim 2018, 2020), Żuk’s criterion among them, have proven to be highly effective tools for extracting global information about a pure k-dimensional simplicial complex using only information found in the \((k-2)\)-dimensional links of the complex.

Theorem 3

(Garland’s method) Let X be a pure k-dimensional simplicial complex. Suppose that, for all \((k-2)\)-faces \(\tau \in X\), we have \(\lambda ^{(0)}({\text {Link}}(\tau ,X))\ge 1-\varepsilon >0\). Then \(\lambda ^{(k-1)}(X)\ge 1-k\varepsilon .\)

Theorem 4

(Żuk’s criterion) Let X be a pure 2-dimensional simplicial complex. Suppose that, for all 0-faces \(\tau \in X\), we have that \(\lambda ^{(0)}({\text {Link}}(\tau ,X))>1/2\) and \({\text {Link}}(\tau ,X)\) is connected. Then the fundamental group \(\pi _1(X)\) has Kazhdan’s property (T).

The \(\lambda ^{(k-1)}(X)\) mentioned in the above statement of Garland’s method refers to the smallest nonzero eigenvalue of the top-dimensional up-down Laplacian of X under a particular weighted inner product (see Lubotzky 2018; Gundert and Wagner 2014 for greater detail). This eigenvalue will be referred to as the spectral gap of X. In the special case where \(k=1\) and X is a connected graph, \(\lambda ^{(0)}(X)\) corresponds to the second smallest eigenvalue of the reduced Laplacian of X. Fortunately, the spectral gap of a random graph, in one form or another, is already quite well studied (Feige and Ofek 2005; Coja-Oghlan and Lanka 2009; Oliveira 2009; Tikhomirov and Youssef 2019; Hoffman et al. 2019). Combining our characterization of the links in \({\mathcal {T}}_{n,k}\) with the best known techniques for the kind of spectral gap estimation we would like to do, we find the following:

Proposition 5

Let \(\delta >0\) be an arbitrary fixed constant, and let \({\mathcal {X}}\) be the union of \(\lceil \delta \log n\rceil \) jointly independent copies of \({\mathcal {T}}_{n,k}\) with k fixed. Then, for any fixed \(s>0\), there exists a constant \(C>0\) so that \(\vert \lambda ^{(k-1)}({\mathcal {X}})-1\vert \le \frac{C}{\sqrt{\log n}}\). with probability \(1-o(n^{-s})\).

Proof

This follows immediately from Garland’s method in combination with Lemma 36 and a union bound on the probability that any one-dimensional link of \({\mathcal {X}}\) fails to meet the criteria of Garland’s method. \(\square \)

Applying Żuk’s criterion in the case \(k=2\) gives the following corollary:

Corollary 6

Let \(\delta >0\) be an arbitrary fixed constant, and let \({\mathcal {X}}\) be the union of \(\lceil \delta \log n\rceil \) jointly independent copies of \({\mathcal {T}}_{n,2}\). Then, for any fixed \(s>0\), we have with probability \(1-o(n^{-s})\) that \(\pi _1({\mathcal {X}})\) has Kazhdan’s property (T).

Particularly in computer science, there is a growing interest in generating families of graphs with large spectral gap, which are usually called expander graphs, and probabilistic constructions have been offered as a way to do this quickly and successfully with exceedingly high probability. For example, the authors of Hoffman et al. (2019) prove that the Erdős–Rényi random graph \({\mathcal {G}}(n,p)\) (in particular the random infinite family \(\{{\mathcal {G}}(n,p(n))\}_{n\ge 1}\)) achieves the same spectral gap as found in Lemma 36 and with equally high probability when \(np\ge (1/2+\delta )\log n\) for any fixed \(\delta >0\). Moreover, they show that the assumption \(\delta >0\) is necessary for this to hold. The best known expander graph families have been constructed explicitly and have asymptotically (with respect to vertex count) constant average degree. So, while the Erdős–Rényi random graph can succeed at being a reliable expander, it is only able to do so if it is allowed an expected average degree far exceeding \(\frac{1}{2}\log n\).

Our characterization of the one-dimensional links of a determinantal (n, k)-tree as the union of a determinantal \((n-k+1,1)\)-tree with an independent \({\mathcal {G}}(n-k+1,(k-1)/n)\) makes it, or perhaps the union of a small number of independent copies of it, a potential naturally occurring candidate for a random expander graph with constant expected average degree. Lemma 36 shows that the number of superimposed independent copies of this graph required to match the result for \({\mathcal {G}}(n,p)\) is no more than \(\delta \log n\) for every \(\delta >0\). In particular, the resulting graph has an expected average degree of around \((k+1)\delta \log n\), improving upon \({\mathcal {G}}(n,p)\)’s required expected average degree by a factor of arbitrary finite size.

1.2 Outline

Section 2 is primarily devoted to establishing notation and basic homological definitions as well as providing background for the deterministic study of simplicial spanning trees. The only truly novel result of this section is Theorem 18. In Sect. 3 the results of Sect. 2 are applied to the spanning trees of the \((n-1)\)-simplex to give Theorem 1. Section 4 establishes the tools required to prove Proposition 5 and Corollary 6.

2 Homological trees: simplicial and relative

A chain complex is a sequence of abelian groups, \(\{{\mathcal {C}}_j\}_{j\in {\mathbb {Z}}}\), called chain groups, linked by group homomorphisms \(\partial _j:{\mathcal {C}}_j\rightarrow {\mathcal {C}}_{j-1}\) called boundary maps which satisfy \(\partial _j\partial _{j+1}=0\) for all \(j\in {\mathbb {Z}}\), or equivalently \({\text {Ker}}\partial _j\supseteq {\text {Im}}\partial _{j+1}\). The jth homology group of a chain complex such as this is defined to be the quotient group \({\text {Ker}}\partial _j/{\text {Im}}\partial _{j+1}\). Since we will only be considering finitely-generated free \({\mathbb {Z}}\)-modules for our chain groups, we can represent these boundary maps by integer matrices. By the structure theorem for finitely-generated abelian groups, the jth homology group can be expressed as the direct sum of a free abelian group, which is isomorphic to \({\mathbb {Z}}^{\beta _j}\) and called its free part, and a finite abelian group called its torsion subgroup which is a direct sum of finite cyclic groups. The rank \(\beta _j\) of the free part is called the jth Betti number. We will require the following standard fact from homological algebra which gives two equivalent formulas for what is commonly called the Euler characteristic of a chain complex.

Lemma 7

Suppose \(({\mathcal {C}}_{\#},\partial _{\#})\) is a chain complex such that each chain group is freely and finitely generated and only finitely many of the chain groups are nontrivial. Let \(f_j\) denote the rank of \({\mathcal {C}}_j\) for each \(j\in {\mathbb {Z}}\). Then

2.1 Simplicial

Fix an integer \(n\ge 1\). The set \([n]:=\{1,2,\dots ,n\}\) will be our vertex set. For \(-1\le j\le n-1\), a j-dimensional abstract simplex, or j-face, is a subset of [n] with cardinality \(j+1\). We denote the set of all j-faces on [n] by \({[n]\atopwithdelims (){j+1}}\). All faces will be oriented according to the usual ordering on [n]. As such, we will be denoting elements of \({[n]\atopwithdelims (){j+1}}\) by \(\{\tau _0,\tau _1,\dots ,\tau _j\}\), where it is to be implicitly understood that \(1\le \tau _0<\tau _1<\dots <\tau _j\le n\). Let \(\partial =\partial _k^{[n]}\) be the matrix thats rows and columns are indexed respectively by \({[n]\atopwithdelims ()k}\) and \({[n]\atopwithdelims (){k+1}}\), and for which, given \(\sigma \in {[n]\atopwithdelims ()k}\) and \(\tau \in {[n]\atopwithdelims (){k+1}}\), we set

to account for our choice of orientation for each face. In particular, \(\partial _0^{[n]}\) is the \({[n]\atopwithdelims ()0}\times {[n]\atopwithdelims ()1}\) all-ones matrix.

An abstract simplicial complex with vertices in [n] is a nonempty subset \(X\subseteq \bigcup _{j\ge -1}{[n]\atopwithdelims (){j+1}}\) which, for every pair of subsets \(\sigma \subseteq \tau \), satisfies \(\tau \in X\implies \sigma \in X\). Let \({\mathscr {A}}_n\) denote the set of all abstract simplicial complexes on [n]. It is easily verified with this definition that \({\mathscr {A}}_n\) is closed under intersection and union. We write \(X_j:={[n]\atopwithdelims (){j+1}}\cap X\) and define the dimension of X to be \(\dim X:=\sup \{k\ge -1:X_k\ne \emptyset \}\). If X has dimension k, X is said to be pure if, for every \(-1\le j<k\) and every j-face \(\sigma \in X\), there exists a \(\tau \in X_k\) such that \(\sigma \subset \tau \). An important example is \(K^k_n:=\bigcup _{j=0}^{k+1}{[n]\atopwithdelims ()j}\), the complete k-dimensional complex on [n].

Given a matrix M with entries indexed over the set \(S\times T\), for \(A\subseteq S\) and \(B\subseteq T\), we write \(M_{A,B}\) to denote the submatrix of M with rows indexed by A and columns indexed by B. We will also occasionally write \(M_{\bullet ,B}\) for the case \(A=S\). As a point of clarification for this notation, transposes are handled by the convention of writing \(M_{A,B}^t\) to mean \((M_{A,B})^t=(M^t)_{B,A}\).

Given \(X\in {\mathscr {A}}_n\), we define its chain complex \(({\mathcal {C}}_{\#},\partial _{\#})\) by \({\mathcal {C}}_j(X):={\mathbb {Z}}^{X_j}\), and \(\partial _j^X:=\partial _{X_{j-1},X_j}\). Note then that we have \({\mathcal {C}}_j(X)=0\) for all \(j\ge n\) and all \(j<-1\)—because \(\vert X_{-1}\vert =\vert \{\emptyset \}\vert =1\). We denote the jth homology group of this chain complex by \({{\tilde{H}}}_j(X)\). It is equivalent to the jth reduced simplicial homology group, hence the notation. Note that, with this chain sequence, it makes sense to consider \({\tilde{H}}_{-1}={\text {Ker}}\partial ^{[n]}_{-1}/{\text {Im}}\partial ^{[n]}_0\) as well, although we can see that this group is always trivial since \(\partial ^{[n]}_0\) is surjective.

Lemma 8

\(\partial ^{[n]}_k\partial ^{[n]t}_k+\partial ^{[n]t}_{k-1}\partial ^{[n]}_{k-1}=n{\text {Id}}\).

Proof

This follows by explicit computation of matrix entries via (1) combined with the chain sequence identity \(\partial ^{[n]}_{k-1}\partial ^{[n]}_k=0\) which can also be seen to hold by explicit computation of matrix entries via (1). A more detailed explanation along these lines for the cases \(k\ge 2\) can be found in the proof of Lemma 3 in Kalai (1983), and the case \(k=0\) is straightforward. For the case \(k=1\), \(\partial ^{[n]}_1\partial ^{[n]t}_1\) is the combinatorial Laplacian of the complete graph on [n]. Hence \(\partial ^{[n]}_1\partial ^{[n]t}_1=n{\text {Id}}-{\text {J}}\) where \({\text {J}}\) is the \(n\times n\) all-ones matrix. Noticing that \(\partial ^{[n]t}_0\partial ^{[n]}_0={\text {J}}\) then completes the proof. \(\square \)

Fix a \(\Delta \in {\mathscr {A}}_n\) and set \({\mathscr {C}}_{n,k}(\Delta ):=\left\{ X\in {\mathscr {A}}_n:K_n^{k-1}\cap \Delta \subseteq X\subseteq K_n^k\cap \Delta \right\} \). We will call elements of this set the (n, k)-complexes of \(\Delta \), or just k-complexes when the context is clear. Note this definition makes sense even if \(\dim \Delta >k\), but in this case \({\mathscr {C}}_{n,k}(\Delta )={\mathscr {C}}_{n,k}(K_n^k\cap \Delta )\). Building upon work done by Duval et al. (2008, 2011, 2015), Bernardi and Klivans (2015) define a higher-dimensional forest of \(\Delta \) to be a subset \(F\subseteq \Delta _k\) such that \(\partial _{\Delta _{k-1},F}\) is injective. Our definition of a forest will be equivalent to this one except that we will consider F to be an entire element of \({\mathscr {C}}_{n,k}(\Delta )\) by adding to it the \((k-1)\)-skeleton of \(\Delta \), more similar to the situation in Duval et al. (2008). That is to say, an \(F\in {\mathscr {C}}_{n,k}(\Delta )\) is called a k-forest of \(\Delta \) if \(\beta _k(F)=0\), in which case we write \(F\in {\mathscr {F}}_{n,k}(\Delta )\). It is easily verified that this is equivalent to Definition 3 in Bernardi and Klivans (2015) by noting that \({\tilde{H}}_k(F)={\text {Ker}}\partial _k^F\) is a free group and thus is 0 if and only if its rank is 0. A k-forest \(T\in {\mathscr {F}}_{n,k}(\Delta )\) with \(\vert T_k\vert ={\text {rank}}\partial _k^{\Delta }\) (the maximal possible value for a forest) is said to be a k-tree of \(\Delta \). Let \({\mathscr {T}}_{n,k}(\Delta )\) denote the set of k-trees of \(\Delta \)—(Bernardi and Klivans 2015) calls these the spanning forests of \(\Delta \). In the case \(\Delta =K_n^{n-1}\), or just \(\Delta \supseteq K_n^k\), these are Kalai’s k-dimensional \({\mathbb {Q}}\)-acyclic simplicial complexes mentioned in the introduction, and in this case we will suppress the \((\Delta )\) in all the above notation. The following result from Duval et al. (2015) generalizes Proposition 2 from Kalai (1983) to general k-trees of \(\Delta \in {\mathscr {A}}_n\):

Lemma 9

For \(X\in {\mathscr {C}}_{n,k}(\Delta )\), if any two of the following conditions hold, then so does the other condition and moreover \(X\in {\mathscr {T}}_{n,k}(\Delta )\):

-

\(\vert X_k\vert ={\text {rank}}\partial _k^{\Delta }\),

-

\(\beta _{k-1}(X)=\beta _{k-1}(\Delta )\),

-

\(\beta _k(X)=0\).

2.2 Relative

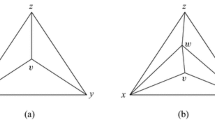

Given a pair \((X,Y)\in {\mathscr {A}}_n^2\) with \(X\supseteq Y\), we can define another chain complex by \({\mathcal {C}}_j(X,Y):={\mathbb {Z}}^{X_j\setminus Y_j}\) with boundary maps \(\partial _j^{X/Y}:=\partial _{X_{j-1}{\setminus } Y_{j-1},X_j{\setminus } Y_j}\). The homology groups of this chain complex are called relative homology groups and denoted \(H_j(X,Y)\). We can recover ordinary reduced homology from this by taking Y to be \(\{\emptyset \}\) or any complex with a single 0-face and no larger faces. Moreover, since homology is homotopy invariant, we also have that \(H_j(X,Y)\cong {{\tilde{H}}}_j(X)\) as long as Y is contractible. An important example of a contractible complex, the simplicial cone of a complex \(\Delta \in {\mathscr {A}}_{n-1}\) is defined by

We also define for any \(X\in {\mathscr {C}}_{n,k}({\text {Cone}}(n,\Delta ))\) the complexes

More generally, for any simplex \(\tau \) of dimension at most k,

The next lemma defines what we will call the binomial correspondence \({\mathscr {C}}_{n,k}({\text {Cone}}(n,\Delta ))\xrightarrow {\sim }{\mathscr {C}}_{n-1,k}(\Delta )\times {\mathscr {C}}_{n-1,k-1}(\Delta )\) for its relationship to the binomial recurrence formula \({n\atopwithdelims (){k+1}}={{n-1}\atopwithdelims (){k+1}}+{{n-1}\atopwithdelims ()k}\) in the special case that \({\text {Cone}}(n,\Delta )\supseteq K_n^k\).

Lemma 10

For \(\Delta \in {\mathscr {A}}_{n-1}\), the map \(\varphi (X):=({\text {Proj}}(n,X),{\text {Link}}(n,X))\) is an injection from \({\mathscr {C}}_{n,k}({\text {Cone}}(n,\Delta ))\) into \({\mathscr {C}}_{n-1,k}(\Delta )\times {\mathscr {C}}_{n-1,k-1}(\Delta )\), and its (left) inverse is given by \(\varphi ^{-1}(F,R)=F\cup {\text {Cone}}(n,R)\) which extends to an injection of \({\mathscr {C}}_{n-1,k}(\Delta )\times {\mathscr {C}}_{n-1,k-1}(\Delta )\) into \({\mathscr {C}}_{n,k}({\text {Cone}}(n,\Delta ))\).

Proof

For brevity, set \(\Delta _{(j)}:=K_n^j\cap \Delta \). Since \(\varphi ^{-1}\) is clearly also a right inverse of \(\varphi \), the only thing to show is that the images of these restrictions lie where we claim they do. Starting with \(\varphi \), we first recall that

So for \(X\in {\mathscr {C}}_{n,k}({\text {Cone}}(n,\Delta ))\) we have

Thus \(\Delta _{(k-1)}\subseteq {\text {Proj}}(n,X)\subseteq \Delta _{(k)}\) and \(\Delta _{(k-2)}\subseteq {\text {Link}}(n,X)\subseteq \Delta _{(k-1)}\) as desired. As for \(\varphi ^{-1}\), we have

due to the assumption that \((F,R)\in {\mathscr {C}}_{n-1,k}(\Delta )\times {\mathscr {C}}_{n-1,k-1}(\Delta )\). \(\square \)

Theorem 11

(Excision Theorem) For any \(A,B\in {\mathscr {A}}_n\), we have \(H_*(A,A\cap B)\cong H_*(A\cup B,B)\).

Theorem 12

(Long exact sequence of a triple) For \(A\subseteq B\subseteq C\in {\mathscr {A}}_n\), arrows exist for which the following sequence is exact:

That is, the kernel of each arrow in this diagram is equal to the image of the preceding arrow.

Lemma 13

For any \(\Delta \in {\mathscr {A}}_n\), \(X\in {\mathscr {C}}_{n,k}(\Delta )\), and \(Y\in {\mathscr {C}}_{n,j}(X)\) where \(j\le k\), any two of the following conditions imply the other:

-

\(\vert X_k\vert -\vert Y_k\vert ={\text {rank}}\partial _k^{\Delta /Y}\),

-

\(\beta _{k-1}(X,Y)=\beta _{k-1}(\Delta ,Y)\),

-

\(\beta _k(X,Y)=0\).

Proof

The long exact sequence of the triple \((\Delta ,X,Y)\) contains the sequence

The exactness of this sequence implies that

It suffices to show that the first bulleted condition is equivalent to the vanishing of the middle term in (2). Indeed, by the definition of relative homology, the rank-nullity theorem, dimensional considerations, and the fact that \(\Delta ,X\) have the same \((k-1)\)-skeleton, we have

By most of the same considerations, \(\beta _k(\Delta ,Y)-\beta _{k+1}(\Delta ,Y)=\dim {\text {Ker}}\partial _k^{\Delta /Y}-\vert \Delta _{k+1}\vert +{\text {rank}}\partial _{k+2}^{\Delta }\). So

is equal to 0 if and only if the first bulleted condition holds. The result therefore follows from (2). \(\square \)

Whenever two, and therefore all three, of the conditions of this lemma hold, we will call (X, Y) a relative (n, k)-forest of \(\Delta \). We will denote the set of such pairs by \({\mathscr {F}}_{n,k}^{{\text {rel}}}(\Delta )\). In the special case that Y is contractible (or just that \(\dim Y<k-2\)) we get that \(X\in {\mathscr {T}}_{n,k}(\Delta )\) if and only if \((X,Y)\in {\mathscr {F}}_{n,k}^{{\text {rel}}}(\Delta )\). We therefore have the following corollary which gives the original (Kalai 1983) three equivalent necessary and sufficient pairs of conditions for a k-complex of a homologically connected \(\Delta \) (i.e. \(\beta _{k-1}(\Delta )=0\)) to be a k-tree of \(\Delta \):

Corollary 14

For any \(\Delta \in {\mathscr {A}}_n\) with \(\beta _{k-1}(\Delta )=0\) and \(X\in {\mathscr {C}}_{n,k}(\Delta )\), any two of the following conditions imply the other, and in particular are equivalent to the statement that \(X\in {\mathscr {T}}_{n,k}(\Delta )\):

-

\(\vert X_k\vert ={{n-1}\atopwithdelims ()k}\),

-

\(\beta _k(X)=0\),

-

\(\beta _{k-1}(X)=0\).

An \(R\in {\mathscr {C}}_{n,k-1}(\Delta )\) is called a \((k-1)\)-root of \(\Delta \) if \(\partial ^{\Delta /R}_k\) is surjective and has full rank (Bernardi and Klivans 2015, Definition 9). Whenever any two of the conditions in the following lemma hold for a pair \((X,Y)\in {\mathscr {C}}_{n,k}(\Delta )\times {\mathscr {C}}_{n,k-1}(\Delta )\), we will call (X, Y) a rooted (n, k)-forest of \(\Delta \) and write \((X,Y)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\). Equivalently, a rooted (n, k)-forest of \(\Delta \) is a pair \((X,Y)\in {\mathscr {F}}_{n,k}(\Delta )\times {\mathscr {C}}_{n,k-1}(\Delta )\) such that Y is a \((k-1)\)-root of X (Bernardi and Klivans 2015, Definition 12).

Lemma 15

For \(X\in {\mathscr {C}}_{n,k}(\Delta )\) and \(Y\in {\mathscr {C}}_{n,k-1}(\Delta )\), any two of the following conditions imply the other:

-

\(\vert X_k\vert =\vert X_{k-1}\setminus Y_{k-1}\vert \),

-

\(\beta _{k-1}(X,Y)=0\),

-

\(\beta _k(X,Y)=0\).

Proof

By Lemma 7, we have \(\sum _{j=-1}^k(-1)^j(\vert X_j{\setminus } Y_j\vert )=\sum _{j=-1}^k(-1)^j\beta _j(X,Y)\), which simplifies to

Indeed, since \(Y_j=X_j=\Delta _j\) for all \(j<k-1\), we have \({\mathcal {C}}_j(X,Y)=0\) for all \(j<k-1\). As \(H_j(X,Y)\) is a quotient of a subgroup of \({\mathcal {C}}_j(X,Y)\), the former vanishes with the latter. \(\square \)

Corollary 16

For any \(\Delta \in {\mathscr {A}}_n\), we have

Proof

Clearly \({\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\supseteq \{(X,Y)\in {\mathscr {F}}_{n,k}^{{\text {rel}}}(\Delta )\cap \big ({\mathscr {C}}_{n,k}(\Delta )\times {\mathscr {C}}_{n,k-1}(\Delta )\big ):\beta _{k-1}(\Delta ,Y)=0\}\). For the other inclusion, suppose that \((X,Y)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\). Then the long exact sequence in the proof of Lemma 13 implies that \(0=\beta _{k-1}(X,Y)\ge \beta _{k-1}(\Delta ,Y)\ge 0\). Thus \((X,Y)\in {\mathscr {F}}_{n,k}^{{\text {rel}}}(\Delta )\) since \(\beta _{k-1}(X,Y)=0=\beta _{k-1}(\Delta ,Y)\) and \(\beta _k(X,Y)=0\). \(\square \)

Lemma 17

Suppose \(\Delta \in {\mathscr {A}}_n\) and that \(F\in {\mathscr {C}}_{n,k}(\Delta )\), \(R\in {\mathscr {C}}_{n,k-1}(\Delta )\) satisfy \(\vert F_k\vert =\vert \Delta _{k-1}\setminus R_{k-1}\vert \), and write \({\overline{R}}:=\Delta _{k-1}\setminus R_{k-1}\). Then \(\det \partial _{{\overline{R}},F_k}\ne 0\iff \vert \det \partial _{{\overline{R}},F_k}\vert =\vert H_{k-1}(F,R)\vert \iff (F,R)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\).

Proof

The relative chain complex for the pair (F, R) is  since \(R_k=\emptyset \), \(F_{k-1}{\setminus } R_{k-1}=\Delta _{k-1}{\setminus } R_{k-1}={\overline{R}}\), and \(F_{k-2}=R_{k-2}\). We therefore have \(H_{k-1}(F,R)={\mathbb {Z}}^{{\overline{R}}}/\partial _{{\overline{R}},F_k}{\mathbb {Z}}^{F_k}\) and so, provided \(\det \partial _{{\overline{R}},F_k}\ne 0\), we have \(\vert H_{k-1}(F,R)\vert =\vert \det \partial _{{\overline{R}},F_k}\vert \)—this is seen most easily by putting \(\partial _{{\overline{R}},F_k}\) in Smith normal form. Having \(\vert H_{k-1}(F,R)\vert =\vert \det \partial _{{\overline{R}},F_k}\vert \) clearly implies \(\vert H_{k-1}(F,R)\vert <\infty \) which, by the previous lemma, implies that \((F,R)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\). Finally, \((F,R)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\implies \beta _k(F,R)=0\implies {\text {Ker}}\partial _{{\overline{R}},F_k}=H_k(F,R)=0\implies \det \partial _{{\overline{R}},F_k}\ne 0\). \(\square \)

since \(R_k=\emptyset \), \(F_{k-1}{\setminus } R_{k-1}=\Delta _{k-1}{\setminus } R_{k-1}={\overline{R}}\), and \(F_{k-2}=R_{k-2}\). We therefore have \(H_{k-1}(F,R)={\mathbb {Z}}^{{\overline{R}}}/\partial _{{\overline{R}},F_k}{\mathbb {Z}}^{F_k}\) and so, provided \(\det \partial _{{\overline{R}},F_k}\ne 0\), we have \(\vert H_{k-1}(F,R)\vert =\vert \det \partial _{{\overline{R}},F_k}\vert \)—this is seen most easily by putting \(\partial _{{\overline{R}},F_k}\) in Smith normal form. Having \(\vert H_{k-1}(F,R)\vert =\vert \det \partial _{{\overline{R}},F_k}\vert \) clearly implies \(\vert H_{k-1}(F,R)\vert <\infty \) which, by the previous lemma, implies that \((F,R)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\). Finally, \((F,R)\in {\mathscr {F}}_{n,k}^{{\text {root}}}(\Delta )\implies \beta _k(F,R)=0\implies {\text {Ker}}\partial _{{\overline{R}},F_k}=H_k(F,R)=0\implies \det \partial _{{\overline{R}},F_k}\ne 0\). \(\square \)

Essentially all of the content of this last lemma is proven in greater detail in Lemmas 14 and 17 of Bernardi and Klivans (2015).

Theorem 18

Set \(T=F\cup {\text {Cone}}(n,R)\) where \(F\in {\mathscr {C}}_{n-1,k}(\Delta )\) and \(R\in {\mathscr {C}}_{n-1,k-1}(\Delta )\) for any \(\Delta \in {\mathscr {A}}_{n-1}\). Then \({{\tilde{H}}}_*(T)\cong H_*(F,R)\), and \(T\in {\mathscr {T}}_{n,k}({\text {Cone}}(n,\Delta ))\) if and only if \((F,R)\in {\mathscr {F}}_{n-1,k}^{{\text {root}}}(\Delta )\). Moreover the binomial correspondence restricts to a bijection \(\varphi :{\mathscr {T}}_{n,k}({\text {Cone}}(n,\Delta ))\xrightarrow {\sim }{\mathscr {F}}_{n-1,k}^{{\text {root}}}(\Delta )\).

Proof

Since F has no k-faces containing n and \(R\subseteq F\), we have \(F\cap {\text {Cone}}(n,R)=R\). Thus, by excision, the pairs \((T,{\text {Cone}}(n,R))\) and (F, R) have the same relative homology in every dimension. But, since \({\text {Cone}}(n,R)\) is contractible, \({{\tilde{H}}}_*(T)\cong H_*(F,R)\). Therefore \(T\in {\mathscr {T}}_{n,k}({\text {Cone}}(n,\Delta ))\) if and only if \((F,R)\in {\mathscr {F}}_{n-1,k}^{{\text {root}}}(\Delta )\) by Lemmas 9 and 15. The bijection then follows from Lemma 10. \(\square \)

Corollary 19

Set \(T=F\cup {\text {Cone}}(n,R)\), where \(F\in {\mathscr {C}}_{n-1,k}\) and \(R\in {\mathscr {C}}_{n-1,k-1}\). Then \({{\tilde{H}}}_*(T)\cong H_*(F,R)\), and \(T\in {\mathscr {T}}_{n,k}\) if and only if \((F,R)\in {\mathscr {F}}_{n-1,k}^{{\text {root}}}\). Moreover the binomial correspondence restricts to a bijection \(\varphi :{\mathscr {T}}_{n,k}\xrightarrow {\sim }{\mathscr {F}}_{n-1,k}^{{\text {root}}}\).

Proof

This follows by recalling that \({\mathscr {T}}_{n,k}({\text {Cone}}(n,\Delta ))={\mathscr {T}}_{n,k}((\Delta \cap K_{n-1}^k)\cup \{\sigma \cup \{n\}:\sigma \in \Delta \cap K_{n-1}^{k-1}\})\). Thus if \(\Delta \supseteq K_{n-1}^k\), then \((\Delta \cap K_{n-1}^k)\cup \{\sigma \cup \{n\}:\sigma \in \Delta \cap K_{n-1}^{k-1}\}=K_n^k\). \(\square \)

3 Determinantal measure

In this section we will narrow our focus to the case \(\Delta \supseteq K_n^k\) and begin considering the probabilistic aspects of k-trees and rooted k-forest of such a \(\Delta \). Specifically, after defining the measure \(\nu _{n,k}\) mentioned in the introduction, we will show (Corollary 21) that it has an alternative expression in terms of \({\text {Proj}}\) and \({\text {Link}}\). Following a brief exploration of determinatal probability measures during which we derive the containment probabilities for \(\nu \) (Lemma 22), we leverage our alternative expression for \(\nu _{n,k}\) to determine the probability densities for \({\text {Proj}}{\mathcal {T}}_{n,k}\) and \({\text {Link}}{\mathcal {T}}_{n,k}\) in terms of of the containment probabilities we just found for \(\nu \) (Lemma 23). We then use this to prove Theorem 1.

For ease of reading, we will for this section abuse notation by identifying each j-complex of \(K_n^{n-1}\) with its set of j-dimensional faces. For any \(X\in {\mathscr {C}}_{n,j}\), we also will let \({\overline{X}}\) denote the j-complex thats set of j-faces is the complement (with respect to \({[n]\atopwithdelims (){j+1}}\)) of \(X_j\). Define the submatrix \({{\widehat{\partial }}}\) of \(\partial \) by deleting all rows of \(\partial \) that correspond to elements of \({[n]\atopwithdelims ()k}\) which contain the vertex n (thus \({{\widehat{\partial }}}_0^{[n]}=\partial _0^{[n]}\)). We have the following corollary of Lemma 17:

Corollary 20

Suppose \(T\in {\mathscr {C}}_{n,k}\) satisfies \(\vert T_k\vert ={{n-1}\atopwithdelims ()k}\). Then \(\det {{\widehat{\partial }}}_{\bullet ,T}\ne 0\iff \vert \det {{\widehat{\partial }}}_{\bullet ,T}\vert =\vert {\tilde{H}}_{k-1}(T)\vert \iff T\in {\mathscr {T}}_{n,k}\).

Proof

Let \(R={\text {Cone}}\left( n,K_{n-1}^{k-2}\right) \) so that \(\vert T_k\vert +\vert R_{k-1}\vert ={n\atopwithdelims ()k}\), and \(\partial _{{\overline{R}},T}={{\widehat{\partial }}}_{\bullet ,T}\). This now follows from Corollary 14 and Lemma 17. \(\square \)

This last corollary was originally proven for the cases \(k\ge 2\) (Kalai 1983, Lemma 2) by Kalai, who combined this with the Cauchy–Binet formula and some deft linear algebra to show (Kalai 1983, Theorem 1) that

Recalling Caley’s formula and understanding that \({{\widehat{\partial }}}_0^{[n]}=\partial _0^{[n]}\) and \(\tilde{H}_{-1}(T)=0\), the cases \(k=0,1\) are also seen to hold. This gives us a natural probability measure \(\nu =\nu _{n,k}\) on \({\mathscr {T}}_{n,k}\). Namely,

This measure was originally formulated in greater generality by Lyons ( Lyons (2003), Section 12) and was further expanded upon in Lyons (2009). This version of the measure has also been considered again recently by authors such as Kahle and Newman (2022) and Mészáros (2022).

Corollary 21

Suppose \(T\in {\mathscr {T}}_{n,k}\), and let \(F={\text {Proj}}(n,T)\) and \(E={\text {Link}}(n,T)\). Then

Proof

The first equality follows from Lemma 10. The rest follows from Corollary 19, the original definition \(\nu _{n,k}(T)=\frac{\vert {{\tilde{H}}}_{k-1}(T)\vert ^2}{n^{{n-2}\atopwithdelims ()k}}\), and Lemma 17. \(\square \)

Probability measures defined in the above manner are said to be determinantal (Lyons 2003), as are the random variables associated to them. The following lemma is a special case of Theorem 5.1 from Lyons (2003):

Lemma 22

Let R, S be finite sets and let M be an \(R\times S\) matrix of rank \(\vert R\vert \). Let \(\mu \) be the determinantal measure on S which is defined by \(\mu (T)=\frac{\det M_{\bullet ,T}^2}{\det (MM^t)}\) for all \(T\subseteq S\) of size \(\vert R\vert \). Let \(P:=M^t(MM^t)^{-1}M\) (this is the matrix of the projection onto the rowspace of M). Then, for any \(B\subseteq S\),

Determinantal random variables enjoy the negative associations property (Lyons 2003, Theorem 6.5), which can be stated for random variables on \({\mathscr {C}}_{n,k}\) as follows: A function \(f:{\mathscr {C}}_{n,k}\rightarrow {\mathbb {R}}\) is called increasing if \(f(X)\le f(X\cup Y)\) for every \(X,Y\in {\mathscr {C}}_{n,k}\). The k-faces of a random variable \({\mathcal {X}}\in {\mathscr {C}}_{n,k}\) are said to be negatively associated if, for every pair of increasing functions \(f_1,f_2\) and every \(Y\in {\mathscr {C}}_{n,k}\), we have

We will make use of negative associations when we go to prove our applications to random topology.

As we see from Lemma 22, for any \(B\in {\mathscr {C}}_{n,k}\) and \(A\in {\mathscr {C}}_{n,k-1}\), we have

where \(P_{n,k}:={{\widehat{\partial }}}^t({{\widehat{\partial }}}{{\widehat{\partial }}}^{t})^{-1}{{\widehat{\partial }}}\). Our use of \({{\widehat{\partial }}}\) is actually a choice of basis for the rowspace of \(\partial \), and an arbitrary one at that. Let A be any \((k-1)\)-root of \(K_n^{n-1}\)—this implies that \(\vert A_{k-1}\vert ={{n-1}\atopwithdelims ()k}\) (Kalai 1983, Lemma 1). Then, by applying a change of basis, we also have the equivalent definition(s)

all of which correspond to the same \(P_{n,k}\). Mészáros (2022, Lemma 14) determined that

By Lemma 8, we also have \({\text {Id}}-P_{n,k}=\frac{1}{n}\partial ^{[n]}_{k+1}\partial ^{[n]t}_{k+1}=:Q_{n,k}\). By Lemma 17 and Cauchy–Binet, we therefore have the following lemma:

Lemma 23

Suppose \(B\in {\mathscr {C}}_{n,k}\) and \(A\in {\mathscr {C}}_{n,k-1}\). Then

Lemma 24

For all \(F\in {\mathscr {C}}_{n-1,k}\) and \(E\in {\mathscr {C}}_{n-1,k-1}\), we have

and

Proof

By Lemma 23, Corollary 21, and the Cauchy–Binet formula, we have

and

as desired. \(\square \)

Theorem 1 makes the claim that there exist certain relationships between the laws of certain random variables. An equivalent way of saying this is that there are couplings of these random variables for which these relationships hold with probability 1. In this sense, the following corollary is just a more detailed restatement of Theorem 1:

Corollary 25

Using the notation from Theorem 1, there are couplings \(\pi _{n,k}\) and \(\lambda _{n,k}\) of \({\mathcal {T}}_{n,k}\), \({\mathcal {T}}_{n-1,k}\), \({\mathcal {Y}}_{n-1,k}\) and \({\mathcal {T}}_{n,k}\), \({\mathcal {T}}_{n-1,k-1}\), \({\mathcal {Y}}_{n-1,k-1}\) respectively such that, marginally, \({\mathcal {T}}_{n-1,k},{\mathcal {T}}_{n-1,k-1}\) are independent of \({\mathcal {Y}}_{n-1,k},{\mathcal {Y}}_{n-1,k-1}\) respectively, and

Namely,

and

Proof

It suffices to show that \(\pi _{n,k}\) has the correct marginal densities, as the proof for \(\lambda _{n,k}\) is practically identical. Summing \(\pi _{n,k}\) over all T clearly produces the independent coupling of \({\mathcal {T}}_{n-1,k}\), \({\mathcal {Y}}_{n-1,k}\). For the remaining marginal, Lemma 24 gives us that

where in the last line we used that \(\mu _{n-1,k}(S:T'{\setminus } S={\text {Proj}}(n,T))\) is the probability that \(\vert {\text {Proj}}(n,T)\vert \) faces from \(T'\) were not chosen to be included in \({\mathcal {Y}}_k(n-1,1/n)\), which occurs with probability \((1-1/n)^{\vert {\text {Proj}}(n,T)\vert }\), and \(\vert T'\setminus {\text {Proj}}(n,T)\vert \) faces were chosen to be included in \({\mathcal {Y}}_k(n-1,1/n)\), which occurs with probability \(n^{-\vert T'\setminus {\text {Proj}}(n,T)\vert }\). Thus

which, summed over \(Y'\) and then \(T'\), gives the desired marginal. \(\square \)

4 Spectral estimates

All of this section is in service of proving our applications to random topology, Proposition 5 and Corollary 6. Lemma 36 at the end of this section is sufficient to do just that. Corollary 27 allows us to prove Lemma 36 by connecting it to a different problem which is solved by the Kahn–Szemerérdi argument. In Sects. 4.1 and 4.2 below, we go into detail about this argument and generalize it to a slightly larger class of random graph that includes the kinds described in Sect. 4.3 which are used to prove Lemma 36.

Before we can do this any of this, we need to introduce notation for this slightly larger class of random graphs and find suitable analogs for the inequalities of Bernstein and Chernoff which are necessary for the Kahn–Szemerérdi argument.

Let A be the adjacency matrix of a random graph \({\mathcal {G}}={\mathcal {G}}(n,p,E,M)\) on [n] satisfying the following:

-

1.

\({\mathbb {P}}[e\in {\mathcal {G}}]=p\in (0,1)\) for all \(e\in {[n]\atopwithdelims ()2}\).

-

2.

There is a fixed constant \(E\ge 1\) and an \(M=M(n)>0\) such that, for every \(t\in [0,M]^{[n]\atopwithdelims ()2}\), we have

$$\begin{aligned}{\mathbb {E}}\exp \left( \sum _{1\le i<j\le n}t_{ij}A_{ij}\right) \le E\prod _{1\le i<j\le n}\left( 1-p+pe^{t_{ij}}\right) . \end{aligned}$$

The choice to make E a fixed constant is just for convenience. All of the results of this section can be easily adapted to work for \(E=E(n)\) an arbitrary fixed polynomial in the variable n.

For this section, we use asymptotic notation, o() and O(), to describe the behavior of a function of n as \(n\rightarrow \infty \).

Proposition 26

Let \({\mathcal {G}}\) be defined as above with \(p=\frac{\delta \log n}{n}\) (\(\delta \) an arbitrary constant), and \(M\ge \frac{3}{5}(n/p)^{\frac{1}{2}}\). Then, for any fixed \(s>0\), we have with probability at least \(1-o(n^{-s})\) that

Proof

This will follow from Lemmas 30, 31, 32, and 33. \(\square \)

Corollary 27

Let \(L:={\text {Id}}-D^{-\frac{1}{2}}AD^{-\frac{1}{2}}\) where D is the degree matrix of \({\mathcal {G}}={\mathcal {G}}\left( n,\frac{\delta \log n}{n},O(1),\frac{3n}{5\sqrt{\delta \log n}}\right) \). Suppose there are positive integers \(s=O(1)\) and \(m=m(n)\) such that \({\mathbb {P}}[\min _{i\in [n]}\deg _{{\mathcal {G}}}(i)\ge m]=1-o(n^{-s})\). Let \(\lambda _1(L)\le \lambda _2(L)\le \cdots \le \lambda _n(L)\) denote the eigenvalues of L. Then, with probability \(1-o(n^{-s})\), we have \(\lambda _1(L)=0\) and

Proof

Since \({\mathcal {G}}\) is connected with sufficiently high probability, we will treat \({\mathcal {G}}\) as though it were connected almost surely. As such, we know that L has minimal eigenvalue 0 with multiplicity one, and the corresponding eigenvector is \(D^{\frac{1}{2}}{\textbf{1}}\). Thus we are interested in bounding the quantity

from above by some \(\lambda :=O(\sqrt{\log n}/m)\). Equivalently, we would like to show that

Without loss of generality, we can assume that x is a unit vector. Let

Noting that \(u^tAx=u^tDx=0\) and \(\cos \theta \frac{{\text {tr}}D}{\sqrt{n}}=-\sin \theta v^tD{\textbf{1}}\) (both of these follow from the assumption that \(x\perp D{\textbf{1}}\)), we have

So we have

which we would like to show is positive. Solving for \(v^tAv\), it suffices to show that

By our minimum degree assumption, it would even suffice to show that \(v^tAv\le \lambda m\). The result now follows from Proposition 26. \(\square \)

In order to prove Lemma 31, we are going to need a version of Bernstein’s inequality that works on weighted sums of centered edge indicators of \({\mathcal {G}}\).

Theorem 28

(Bernstein’s inequality) Let \({\mathcal {G}}\) be as above. Suppose \(\vert c_{ij}\vert \le c\) for some fixed c and all \(i<j\), and that \(\varepsilon \ge 0\) is such that \(M\ge \frac{\varepsilon }{p\sum _{i<j}c_{ij}^2+2c\varepsilon /3}\). Then, for any \(H\subseteq {[n]\atopwithdelims ()2}\), we have

Proof

By a Chernoff bound, the numerical bounds \(1+x\le e^x\le 1+x+\frac{3x^2}{6-2x}\) for \(x\le 3\), and our assumptions about \({\mathcal {G}}\), we have for \(t\le \frac{3}{c}\) that

where \(\sigma ^2:=p\sum _{(i,j)\in H}c_{ij}^2\). Taking \(t=\frac{\varepsilon }{\sigma ^2+2c\varepsilon /3}\), we have \(2-2ct/3=\frac{2\sigma ^2+2c\varepsilon /3}{\sigma ^2+2c\varepsilon /3}\), and thus

Recalling that we were required to assume that \(t\le \frac{3}{c}\), what we have shown holds for our choice of t as long as \(\varepsilon \ge -3\sigma ^2c^{-1}\). \(\square \)

We will also want to be able to apply a near-optimal Chernoff bound to uniformly weighted sums of edge indicators.

Lemma 29

For \({\mathcal {G}}\) as above with \(M\ge \log \frac{(1-p)\varepsilon }{1-p\varepsilon }\) where \(\varepsilon \ge 3\), we have

Proof

As with the previous proof,

For \(\varepsilon \ge 3\), this is bounded above by \(E\exp \left( -\frac{\varepsilon \log \varepsilon }{3} p\vert H\vert \right) \). \(\square \)

To prove Proposition 26, we will adapt the Kahn–Szemerérdi argument. Let

The following lemma is a special case of Claim 2.4 in Feige and Ofek (2005).

Lemma 30

Suppose \(\vert x^tAy\vert \le c\) for all \(x,y\in T\). Then \(\vert v^tAv\vert \le 4c\) for all \(v\in S\).

We now write

where \({\mathcal {L}}:=\left\{ (i,j)\in [n]^2:(x_iy_j)^2\le \frac{p}{n}\right\} \) and \({\mathcal {H}}:=[n]^2\setminus {\mathcal {L}}\).

4.1 Light couples

Lemma 31

Suppose \(M\ge \frac{3}{5}(n/p)^{\frac{1}{2}}\) in the definition of \({\mathcal {G}}\). For any constant \(s>0\), we have

Proof

We can bound the contribution from the light couples as follows: It is known (Feige and Ofek 2005, Claim 2.9) that \(\vert T\vert \le 18^{n}\). So, by applying a union bound, we have

Towards applying Bernstein’s inequality, define centered random variables

so that

Note that each \(B_{ij}^2\le \frac{4p}{n}\) a.s., and \(\sum _{i<j}{\mathbb {E}}B_{ij}^2\le p\sum _{i<j}2\big ((x_iy_j)^2+(x_jy_i)^2\big )\le 2p\) by taking advantage of the fact that \(\vert x\vert ,\vert y\vert \le 1\). Thus, by Bernstein’s inequality,

Taking \(\varepsilon =1\), this shows that \(\sum _{(i,j)\in {\mathcal {L}}}\vert x_iA_{ij}y_j\vert <6\sqrt{np}+{\mathbb {E}}\sum _{(i,j)\in {\mathcal {L}}}\vert x_iA_{ij}y_j\vert \) with probability at least \(1-Ee^{-3n}\). By Lemma 2.6 of Feige and Ofek (2005), we also have \({\mathbb {E}}\sum _{(i,j)\in {\mathcal {L}}}\vert x_iA_{ij}y_j\vert \le \sqrt{np}\), thus giving us the desired bound. Our use of Bernstein’s inequality requires us to have \(M\ge \frac{6\sqrt{np}}{2p+\frac{8\sqrt{p}}{\sqrt{n}}\sqrt{np}}=\frac{3}{5}(n/p)^{\frac{1}{2}}\). \(\square \)

4.2 Heavy couples

For \(B,C\subseteq [n]\), let \(e(B,C):=\vert \{(i,j)\in {\mathcal {G}}:i\in B,\,j\in C\}\vert \) and \(\mu (B,C):=p\vert B\vert \vert C\vert \). The following is a weakened form of Lemma 9.1 in Hoffman et al. (2019).

Lemma 32

Suppose we have constants \(c_0,c_1,c_2>1\) and a graph G on [n] with \(\max _{i\in [n]}\deg _G(i)\le c_0np\), and, for all \(B,C\subseteq [n]\), one or more of the following hold:

-

\(e(B,C)\le c_1\mu (B,C)\)

-

\(e(B,C)\log \frac{e(B,C)}{\mu (B,C)}\le c_2(\vert B\vert \vee \vert C\vert )\log \frac{n}{\vert B\vert \vee \vert C\vert }\)

Then \(\sum _{(i,j)\in {\mathcal {H}}}\vert x_iA_{ij}y_j\vert = O(\sqrt{np})\).

Lemma 33

Suppose that \(p=\frac{\delta \log n}{n}\) for some fixed but arbitrary \(\delta >0\), and \(M\ge \frac{3}{5}(n/p)^{\frac{1}{2}}\). For any \(s>0\), there are fixed constants \(c_0,c_1,c_2>1\) so that the conditions of Lemma 32 hold for \({\mathcal {G}}\) with probability at least \(1-o(n^{-s})\).

In terms of probabilistic bounds, the proof of this will only rely on Lemma 29. We note then that the condition \(M\ge \frac{3}{5}(n/p)^{\frac{1}{2}}\) is overkill since Lemma 29 only ever requires that we have M be greater than a fixed constant.

Proof

Assume without loss of generality that \(c_0\ge 3\). First note that, due to the monotonicity of \(x\log x\) for \(x\ge 1\), the second bulleted condition is equivalent to the statement \(e(B,C)\le r_1\mu (B,C)\) where \(r_1\ge 1\) solves \(r_1\log r_1=\frac{c_2(\vert B\vert \vee \vert C\vert )\log \frac{n}{\vert B\vert \vee \vert C\vert }}{\mu (B,C)}\). So we can rewrite the two bulleted conditions as the single condition \(e(B,C)\le r\mu (B,C)\) where \(r:=r_1\vee c_1\). By Lemma 29 and a union bound, we have

and

Thus we can choose a constant \(c_0\) large enough to make \({\mathbb {P}}[\max _{i\in [n]}\deg _{{\mathcal {G}}}(i)>c_0np]=o(n^{-s})\). Using this fact and the resulting (high probability) inequality

we have, in the case \(\vert B\vert \vee \vert C\vert \ge n/e\), that

So suppose that \(\vert B\vert \vee \vert C\vert <n/e\). By a union bound over all possible pairs \(\{i,j\}\subset \left[ \lfloor n/e\rfloor \right] \) (\(i\le j\), without loss of generality) of sizes for the sets B, C, it suffices to show that

Recalling that \({n\atopwithdelims ()j}\le \left( \frac{ne}{j}\right) ^j\) for all \(j\in [n]\), this can be done by showing that

for all \(1\le i\le j<n/e\). Indeed, since \(j\log \frac{n}{j}\) is monotone increasing for \(1\le j<n/e\) (Feige and Ofek 2005, Lemma 2.12), we have

Taking \(c_2\ge 3s+21\) therefore gives the desired bound. \(\square \)

4.3 Link unions

Let \(T_1,T_2,\dots ,T_m\) be jointly independent copies of \({\mathcal {T}}_{n,k-j}\) with k fixed. Then, if \(T_1',T_2',\dots ,T_m'\) are jointly independent copies of \({\mathcal {T}}_{n+j,k}\), we can use Theorem 2 to couple these random trees so that

where \(p:=1-\left( 1-\frac{j}{n+j}\right) ^m\sim \frac{jm}{n}\). Since each \(T_i\) is determinantal, the k-faces of each \(T_i\) are negatively associated (to be abbreviated NA)—see the discussion following Lemma 22 for the definition of NA. Moreover, the k-faces in \(T:=\bigcup _{i\in [m]}T_i\) are also NA, as

is increasing in T as a function \({\mathscr {C}}_{n,k}\rightarrow {\mathbb {R}}\), and any set of increasing functions evaluated over disjoint subsets of a set of NA objects is itself a set of NA objects (Joag-Dev and Proschan 1983). By the same reasoning and the fact that sets of jointly independent random variables are NA sets, we also have that the set of \((k-j)\)-faces of \(L_m^{k,j}(n)\) is NA. We now narrow our focus to the case \(j=k-1\), in which

is a graph with negatively associated edges each appearing with probability \(q:=1-(1-p)(1-\frac{2}{n})^m\sim \frac{(k+1)m}{n}\). This implies that G is of type \({\mathcal {G}}\left( n,q,1,\infty \right) \). Toward applying Corollary 27 to this G, we will assume that \(m=\lceil \delta \log n\rceil \) for some arbitrary constant \(\delta >0\). In order to get a sufficiently strong lower bound on the minimum degree of G, we need to have a very strong bound on the moment generating function of \(\deg _G(n)\) for negative inputs.

Lemma 34

Let \(p_0:=1-\left( 1-\frac{k-1}{n+k-1}\right) ^m\left( 1-\frac{1}{n}\right) ^m\sim \frac{km}{n}\). Then for all \(t<0\) we have

Proof

Let \(\ell :={\text {Link}}(n,\cup _{i\in [m]}T_i)\), and let \(B_i:={\textbf{1}}\{(i,n)\in {\mathcal {G}}(n,p)\}\), where \({\mathcal {G}}(n,p)\) is as above and, in particular, independent of \(\ell \). Then

Note that \(\ell \overset{{\mathscr {L}}}{=}{\text {Binom}}\left( [n-1],p'\right) \cup R\) where \(p':=1-(1-\frac{1}{n})^m\), and R is a random subset of \([n-1]\) formed by independently and uniformly choosing (with replacement) m vertices from \([n-1]\)—this follows from the \(k=1\) case of Theorem 1. Thus

Now note that

Thus

We therefore have

Since \(t<0\), we have \(1-p_0+p_0e^t\le 1\), and thus

Toward controlling the size of R, let \(R=R_m\), and let \(R_j\) be defined as R after only the first j independent samplings of \([n-1]\). Then, letting \(v_1,v_2,\ldots ,v_m\) be the random vertex selections, we have

Thus, for \(s>0\),

Taking expectations and iterating then gives

Thus, by Chernoff’s inequality, we have

for any \(s>0\). Taking \(s=\log \frac{2j(n-1)}{m(m-1)}\), gives

Thus \(\vert R\vert \ge m-j+1\) with probability at least \(1-\left( \frac{em^2}{2jn}\right) ^j\). Recall the summation by parts formula:

Setting \(f_j=e^{-t(m-j)}\) and \(g_j={\mathbb {P}}[\vert R\vert =j]\), we have

where the last inequality uses the bound \(j^j\ge j!\). Thus, for \(t<0\) we have

\(\square \)

Lemma 35

For \(0\le j\le m\), we have \({\mathbb {P}}[\min _{i\in [n]}\deg _G(i)\le m-j]\le (1+o(1))n^{1-j}e^{-km}\).

Proof

For any \(t>0\), we have by a union bound that

Letting \(t=r\log n\) for any \(r\in (0,1)\) gives

Taking \(r\rightarrow 1\) from the left then gives \((1+o(1))n^{1-j}e^{-km}\). \(\square \)

Lemma 36

Let L be the reduced Laplacian of G as defined above with \(m=\lceil \delta \log n\rceil \) and \(\delta >0\) an arbitrary constant (so that \({\mathbb {P}}[\min _{i\in [n]}\deg _G(i)\ge m-s+2]=1-o(n^{-s})\) for any fixed s). Then for any fixed s, we have \(\lambda _1(L)=0\) and \(\lambda _2(L)=1-O\left( 1/\sqrt{\log n}\right) \) with probability \(1-o(n^{-s})\).

Proof

This follows from the previous lemma and Corollary 27. \(\square \)

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

Bernardi, O., Klivans, C.: Directed rooted forests in higher dimension. Electron. J. Combin. (2015). https://doi.org/10.37236/5819

Coja-Oghlan, A., Lanka, A.: The spectral gap of random graphs with given expected degrees. Electron. J. Combin. 16, 138–138 (2009)

Duval, A., Klivans, C., Martin, J.: Simplicial matrix-tree theorems. Trans. Am. Math. Soc. (2008). https://doi.org/10.1090/S0002-9947-09-04898-3

Duval, A.M., Klivans, C.J., Martin, J.L.: Cellular spanning trees and Laplacians of cubical complexes. Adv. Appl. Math. 46(1), 247–274 (2011). https://doi.org/10.1016/j.aam.2010.05.005. (Special issue in honor of Dennis Stanton)

Duval, A.M., Klivans, C.J., Martin, J.L.: Cuts and flows of cell complexes. J. Algebraic Comb. 41(4), 969–999 (2015). https://doi.org/10.1007/s10801-014-0561-2

Feige, U., Ofek, E.: Spectral techniques applied to sparse random graphs. Random Struct. Algorithms 27(2), 251–275 (2005). https://doi.org/10.1002/rsa.20089

Garland, H.: p-Adic curvature and the cohomology of discrete subgroups of p-adic groups. Ann. Math. 97, 375 (1973)

Gundert, A., Wagner, U.: On eigenvalues of random complexes. Israel J. Math. (2014). https://doi.org/10.1007/s11856-016-1419-1

Hoffman, C., Kahle, M., Paquette, E.: Spectral gaps of random graphs and applications. Int. Math. Res. Not. 2021(11), 8353–8404 (2019). https://doi.org/10.1093/imrn/rnz077

Joag-Dev, K., Proschan, F.: Negative association of random variables with applications. Ann. Stat. 11(1), 286–295 (1983). https://doi.org/10.1214/aos/1176346079

Kahle, M., Newman, A.: Topology and geometry of random 2-dimensional hypertrees. Discrete Comput. Geom. 4, 1229–1244 (2022)

Kalai, G.: Enumeration of q-acyclic simplicial complexes. Israel J. Math. 45, 337–351 (1983)

Linial, N., Meshulam, R.: Homological connectivity of random 2-complexes. Combinatorica 26, 475–487 (2006)

Linial, N., Peled, Y.: Enumeration and randomized constructions of hypertrees. Random Struct. Algorithms 55(3), 677–695 (2019). https://doi.org/10.1002/rsa.20841

Lubotzky, A.: High dimensional expanders. In: Proceedings of the International Congress of Mathematicians: Rio de Janeiro 2018, pp. 705–730. World Scientific (2018)

Lyons, R.: Determinantal probability measures. Publ. Math. l’IHÉS 98, 167–212 (2003)

Lyons, R.: Random complexes and \(\ell ^2\)-betti numbers. J. Topol. Anal. 01(02), 153–175 (2009). https://doi.org/10.1142/S1793525309000072

Meshulam, R., Wallach, N.: Homological connectivity of random k-dimensional complexes. Random Struct. Algorithms 34, 408–417 (2009)

Mészáros, A.: The local weak limit of \(k\)-dimensional hypertrees. https://doi.org/10.1090/tran/8711

Oliveira, R.I.: Concentration of the adjacency matrix and of the laplacian in random graphs with independent edges. arXiv preprint arXiv:0911.0600 (2009)

Oppenheim, I.: Local spectral expansion approach to high dimensional expanders part I: descent of spectral gaps. Discrete Comput. Geom. 59(2), 293–330 (2018)

Oppenheim, I.: Local spectral expansion approach to high dimensional expanders part II: mixing and geometrical overlapping. Discrete Comput. Geom. 64(3), 1023–1066 (2020)

Tikhomirov, K., Youssef, P.: The spectral gap of dense random regular graphs. Ann. Probab. 47(1), 362–419 (2019). https://doi.org/10.1214/18-AOP1263

Żuk, A.: La propriété (t) de kazhdan pour les groupes agissant sur les polyedres. C. R. Acad. Sci. Sér. 1, Math. 323(5), 453–458 (1996)

Żuk, A.: Property (t) and Kazhdan constants for discrete groups. Geom. Funct. Anal. 13, 643–670 (2003). https://doi.org/10.1007/s00039-003-0425-8

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Vander Werf, A. Simplex links in determinantal hypertrees. J Appl. and Comput. Topology 8, 401–426 (2024). https://doi.org/10.1007/s41468-023-00158-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41468-023-00158-1