Abstract

A family of the estimators adjusting the maximum likelihood estimator by a higher-order term maximizing the asymptotic predictive expected log-likelihood is introduced under possible model misspecification. The negative predictive expected log-likelihood is seen as the Kullback–Leibler distance plus a constant between the adjusted estimator and the population counterpart. The vector of coefficients in the correction term for the adjusted estimator is given explicitly by maximizing a quadratic form. Examples using typical distributions in statistics are shown.

1 Introduction

It is known that the maximum likelihood estimator (MLE) does not necessarily give the smallest values of typical indexes of errors, where an error is defined as the deviation of a parameter estimate from its true value, which is typically squared and averaged over a range of the associated observable variables. For instance, consider \( X_{i} \mathop \sim \limits^{{{\text{i}} . {\text{i}} . {\text{d}} .}} {\text{N}}(\mu ,\sigma^{2} )\,\,(i = 1, \ldots ,n) \) and \( \bar{X} = n^{ - 1} \sum\nolimits_{j = 1}^{n} {X_{j} } \) with n being the sample size. Then, it is known that among the family of the estimators of \( \sigma^{2} \) given by \( c_{n} \sum\nolimits_{j = 1}^{n} {(X_{j} - \bar{X}} )^{2} \), where \( c_{n} \) is a constant depending on n, \( c_{n} = 1/(n + 1) \) gives the smallest mean square error (see, e.g., DeGroot and Schervish 2002, p. 431; for the associated problems about variance estimation, see Ogasawara 2015). Note that the above estimator is \( n/(n + 1) \) times the normal-theory MLE.
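
The optimality of \( c_{n} = 1/(n + 1) \) can be checked from the exact moments of the sum of squares. The following Python sketch is an illustration added here, not part of the original derivation. It assumes normality, so that \( \sum\nolimits_{j = 1}^{n} {(X_{j} - \bar{X}} )^{2} \) is \( \sigma^{2} \) times a chi-square variable with \( n - 1 \) degrees of freedom; the function name is hypothetical.

```python
def mse_scaled_sum_of_squares(c, n, sigma2=1.0):
    """Exact MSE of c * sum((X_j - Xbar)^2) as an estimator of sigma^2 under normality."""
    df = n - 1
    # The sum of squares S satisfies E(S) = df * sigma2 and Var(S) = 2 * df * sigma2^2.
    variance = c ** 2 * 2.0 * df * sigma2 ** 2
    bias = c * df * sigma2 - sigma2
    return variance + bias ** 2


n = 10
candidates = {"1/(n-1)": 1.0 / (n - 1), "1/n": 1.0 / n, "1/(n+1)": 1.0 / (n + 1)}
mse_values = {name: mse_scaled_sum_of_squares(c, n) for name, c in candidates.items()}
best = min(mse_values, key=mse_values.get)

for name, value in mse_values.items():
    print(name, value)
print("smallest MSE:", best)
```

Under these assumptions the unbiased choice \( 1/(n - 1) \) has the largest MSE of the three, the MLE choice \( 1/n \) is intermediate, and \( 1/(n + 1) \) is the smallest.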

Discrepancy functions are defined in many ways using different definitions of distances. In the above case, the squared Euclidean distance averaged over the distribution is used. This example is fortunate in that the constant \( c_{n} \) is obtained exactly. In many other cases, it is difficult to obtain exact solutions. By adding a term of order \( O_{p} (n^{ - 1} ) \) to the MLE, Ogasawara (2015) obtained a solution minimizing the asymptotic mean square error up to order \( O(n^{ - 2} ) \), which is denoted by \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) in this paper.

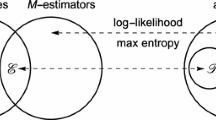

The Euclidean distance is an intuitively appealing and natural one, especially for a single parameter. On the other hand, in the multi-parameter case, the overall error index for the MLEs of the parameters can be defined in many ways since the MSEs for several estimators are given by the matrix MSE, which is the sum of the corresponding covariance matrix and the outer product of the vector of biases with itself (see, e.g., Giles and Rayner 1979). One of the natural definitions of the overall index is the sum of the MSEs of the estimators, which is called the total MSE (TMSE) by Gruber (1998, p. 117). Ogasawara (2014b) obtained an optimal value of the added term of order \( O_{p} (n^{ - 1} ) \) similar to the single parameter case, which minimizes the \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \), and a similar solution for the linear predictor given by the multiple MLEs with known weights for the predictor. Another overall index, appropriate especially in the multi-parameter case, is the log-likelihood, which will be used in this paper. Note that with this index, the problem of choosing among different ways of summarizing the matrix MSE is avoided. Further, since the likelihood is used, the log-likelihood index is unchanged by reparametrization.
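
For reference, the matrix MSE and the TMSE described in this paragraph can be written out as follows. This is a standard decomposition stated here for convenience; the symbol \( {\mathbf{b}} \) for the bias vector is introduced only for this illustration, and \( {\hat{\varvec{\uptheta }}} \) denotes a generic estimator of the \( q \times 1 \) population vector \( {\varvec{\uptheta}}_{0} \).

$$ {\text{MSE}}({\hat{\varvec{\uptheta }}}) = {\text{E}}\{ ({\hat{\varvec{\uptheta }}} - {\varvec{\uptheta}}_{0} )({\hat{\varvec{\uptheta }}} - {\varvec{\uptheta}}_{0} )^{\prime} \} = {\text{cov}}({\hat{\varvec{\uptheta }}}) + {\mathbf{bb}}^{\prime} ,\quad {\mathbf{b}} = {\text{E}}({\hat{\varvec{\uptheta }}}) - {\varvec{\uptheta}}_{0} , $$

$$ {\text{TMSE}}({\hat{\varvec{\uptheta }}}) = {\text{tr}}\{ {\text{MSE}}({\hat{\varvec{\uptheta }}})\} = \sum\nolimits_{i = 1}^{q} {\{ \text{var} (\hat{\theta }_{i} ) + b_{i}^{2} \} } . $$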

In the following section, the predictive expected log-likelihood will be defined and used. Then, a family of the estimators adjusting the MLE by adding a higher-order term is defined, whose asymptotically optimal value will be derived. The family has two typical sub-families giving shrinkage (or expanded) estimators and bias-adjusted estimators. Examples will be given using frequently used distributions (gamma, exponential, Poisson, Bernoulli and normal), where the corresponding optimal adjustment using the \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) will also be shown for comparison. The conditions for the relative sizes of the optimal coefficients by maximizing the asymptotic predictive expected log-likelihood and by minimizing the \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) will be given for a simple case.

2 The asymptotic predictive expected log-likelihood and its maximization

Let \( {\varvec{\uptheta}} \) be a \( q \times 1 \) vector of parameters with \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) and \( {\varvec{\uptheta}}_{0} \) being its MLE and the population counterpart, respectively. Let \( {\mathbf{X}} \) be an \( n \times p \) matrix of n realized values of the random vector \( {\mathbf{x}}^{*} = (x_{1}^{*} , \ldots ,x_{p}^{*} )^{\prime} \). Then, the log-likelihood of \( {\varvec{\uptheta}} \) given \( {\mathbf{X}} \) averaged over n observations is denoted by \( \bar{l}({\varvec{\uptheta}},{\mathbf{X}}) \). When \( {\mathbf{X}} \) is replaced by the corresponding random matrix \( {\mathbf{X}}^{*} \) with its rows being independent copies of \( {\mathbf{x}}^{*} \), we have \( \bar{l}({\varvec{\uptheta}},{\mathbf{X}}^{*} ) \) whose expectation over its range is

$$ \bar{l}^{*} ({\varvec{\uptheta}}) \equiv \int {\bar{l}({\varvec{\uptheta}},{\mathbf{Z}})f({\mathbf{Z}}|{\varvec{\uptheta}}_{0} )\,{\text{d}}{\mathbf{Z}}} , \tag{1} $$

where \( f({\mathbf{Z}}|{\varvec{\uptheta}}) \) is the density of \( {\mathbf{X}}^{*} = {\mathbf{Z}} \). In the case of discrete distributions, (1) should be replaced by the corresponding summation with \( f({\mathbf{Z}}|{\varvec{\uptheta}}_{0} ) \) seen as a probability mass. The expectation (1) is maximized when \( {\varvec{\uptheta}} = {\varvec{\uptheta}}_{0} \) by Jensen’s inequality. That is, \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) does not maximize \( \bar{l}^{*} ({\varvec{\uptheta}}) \), which is the expected log-likelihood of \( {\varvec{\uptheta}} \).

It is known that under regularity conditions

(see, e.g., Sakamoto et al. 1986, Eq. (4.21)).

The asymptotic difference \( n^{ - 1} (q/2) \), when multiplied by 2, is a half of \( n^{ - 1} \) times the correction term in the Akaike information criterion (AIC; Akaike 1973). The amount of the difference is seen as an undesirable bias of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \). In this paper by a modification of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \), say \( {\tilde{\varvec{\uptheta }}} \), we will maximize \( \bar{l}^{*} ({\tilde{\varvec{\uptheta }}}) \) among a family of the estimators using optimal coefficients yielding \( {\tilde{\varvec{\uptheta }}} \) from \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \). So, we will maximize the following quantity:

which is called the mean expected log-likelihood (Sakamoto et al. 1986, p. 60) when \( {\tilde{\varvec{\uptheta }}} \) is \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \). Since Z in (3) can be seen as a set of quantities associated with prediction to be given in the future, (3) is called the predictive expected log-likelihood in this paper.
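
As a hedged numerical illustration of the asymptotic difference \( n^{ - 1} (q/2) \) mentioned above, consider i.i.d. exponential data with rate \( \theta_{0} \), so that q = 1. This example is added here and is not taken from the original. It uses the facts that \( \bar{l}^{*} (\theta ) = \log \theta - \theta /\theta_{0} \), that \( \hat{\theta }_{\text{ML}} = n/S \) with S the sum of the observations, and that S follows a gamma distribution with shape n. The digamma function at an integer is computed from harmonic numbers; all function names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def digamma_int(n):
    """Digamma function at a positive integer n: psi(n) = -gamma + sum_{k=1}^{n-1} 1/k."""
    return -EULER_GAMMA + sum(1.0 / k for k in range(1, n))


def predictive_gap_exponential(n):
    """Exact E[lbar*(theta_hat_ML)] - lbar*(theta_0) for exponential data (n >= 2).

    Uses E[log S] = psi(n) - log(theta_0) and E[1/S] = theta_0 / (n - 1),
    so the result does not depend on theta_0.
    """
    return math.log(n) - digamma_int(n) - 1.0 / (n - 1)


for n in (10, 100, 1000):
    gap = predictive_gap_exponential(n)
    # n * gap should approach -q/2 = -0.5 as n grows.
    print(n, gap, n * gap)
```

In this special case the scaled gap tends to \( - 1/2 \), in line with the \( - n^{ - 1} q/2 \) term for q = 1.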

When Z is a set of quantities in the future, (1) to (3) are associated with predictive likelihood or the predictive posterior distribution though the direction of prediction is reversed, since Z in the future is integrated out (see Fisher 1956, Chapter 5, Section 7; Hinkley 1979; Lejeune and Faulkenberry 1982; Leonard 1982; Bjornstad 1990; Konishi and Kitagawa 1996, 2008, Section 9.3).

Recall that when \( {\varvec{\uptheta}} = {\varvec{\uptheta}}_{0} \), \( \bar{l}^{*} ({\varvec{\uptheta}}) \) in (1) is maximized. Consequently, (3) is also maximized when \( {\tilde{\varvec{\uptheta }}} = {\varvec{\uptheta}}_{0} \). Since \( {\varvec{\uptheta}}_{0} \) does not depend on X, the maximum is \( {\text{E}}_{{{\mathbf{X}}^{*}}} \{ \bar{l}^{*} ({\varvec{\uptheta}}_{0} )\} = \bar{l}^{*} ({\varvec{\uptheta}}_{0} ) \). Take the difference

where \( L^{*} ({\varvec{\uptheta}}_{0} ) \) and \( L^{*} ({\tilde{\varvec{\uptheta }}}) \) are \( \exp \{ \bar{l}^{*} ({\varvec{\uptheta}}_{0} )\} \) and \( \exp \{ \bar{l}^{*} ({\tilde{\varvec{\uptheta }}})\} \), respectively. The quantity \( \exp \{ \bar{l}^{*} ({\varvec{\uptheta}})\} \) is interpreted as a pseudo-likelihood of \( {\varvec{\uptheta}} \) corresponding to the predictive expected log-likelihood. The value of (4) is non-negative, and is seen as a discrepancy between \( {\varvec{\uptheta}}_{0} \) and \({\tilde{\varvec{\uptheta}}}\) based on the Kullback and Leibler (1951) distance. So, the maximization of the predictive expected log-likelihood in terms of \( {\tilde{\varvec{\uptheta }}} \) is given by finding \( {\tilde{\varvec{\uptheta }}} \) such that the distance of (4) is minimized. Incidentally, Lawless and Fredette (2005) used the Kullback–Leibler distance for evaluation of the goodness of an estimator.
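
To make the link between the expected log-likelihood and the Kullback–Leibler distance concrete, the following Python sketch evaluates both for a univariate normal model with a fixed (non-random) candidate value of the parameters. It is an illustration added here under the assumption of correct model specification; the function names and the numerical values are hypothetical, and the outer expectation over the sample in (4) is not taken.

```python
import math


def lbar_star_normal(mu0, var0, mu, var):
    """Expected per-observation log-likelihood of N(mu, var) when the data follow N(mu0, var0)."""
    return -0.5 * math.log(2.0 * math.pi * var) - (var0 + (mu0 - mu) ** 2) / (2.0 * var)


def kl_normal(mu0, var0, mu, var):
    """Kullback-Leibler distance from N(mu0, var0) to N(mu, var), per observation."""
    return 0.5 * (math.log(var / var0) + (var0 + (mu0 - mu) ** 2) / var - 1.0)


mu0, var0 = 0.0, 1.0          # population values (assumed for illustration)
mu_t, var_t = 0.3, 1.2        # a candidate parameter value

difference = lbar_star_normal(mu0, var0, mu0, var0) - lbar_star_normal(mu0, var0, mu_t, var_t)
print(difference, kl_normal(mu0, var0, mu_t, var_t))   # the two values agree
print(kl_normal(mu0, var0, mu0, var0))                 # zero at the population value
```

The difference of expected log-likelihoods coincides with the Kullback–Leibler distance and vanishes only when the candidate equals the population value, which is the non-negativity property used in the text.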

In practice, \( f({\mathbf{X}}|{\varvec{\uptheta}}_{0} ) \) or \( f({\mathbf{Z}}|{\varvec{\uptheta}}_{0} ) \) may not be true, where another alternative true density of \( {\mathbf{X}}^{*} \) is denoted by \( f_{\text{T}} ({\mathbf{X}}|{\varvec{\upzeta}}_{0} ) \) with \( {\varvec{\upzeta}}_{0} \) being a \( q^{*} \times 1 \) vector of parameters for the alternative model. The vector \( {\varvec{\uptheta}}_{0} \) under model misspecification is defined as \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) when infinitely many observations are available. Under model misspecification, we deal with the cases satisfying

where the subscript “T” indicates that the subscripted operator is defined under the alternative true distribution. That is, in this case the expectation is taken using the density \( f_{\text{T}} ({\mathbf{X}}|{\varvec{\upzeta}}_{0} ) \) rather than \( f({\mathbf{X}}|{\varvec{\uptheta}}_{0} ) \). Examples satisfying (5) will be illustrated in Sect. 4.5. In the following, the possible model misspecification is considered unless otherwise stated.

3 A family of the estimators maximizing the asymptotic predictive expected log-likelihood

3.1 The asymptotic predictive expected log-likelihood for the family of estimators

Define a family of estimators

$$ {\hat{\varvec{\uptheta }}}_{{{\text{A}}k}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} - n^{ - 1} {\text{diag}}({\mathbf{k}}){\hat{\varvec{\upalpha}}}, \tag{6} $$

where \( {\text{diag}}({\mathbf{k}}) = \left[ {\begin{array}{*{20}l} {k_{1} } \hfill & {} \hfill & {} \hfill & 0 \hfill \\ {} \hfill & {k_{2} } \hfill & {} \hfill & {} \hfill \\ {} \hfill & {} \hfill & \ddots \hfill & {} \hfill \\ 0 \hfill & {} \hfill & {} \hfill & {k_{q} } \hfill \\ \end{array} } \right], \) \({\mathbf{k}} = (k{\kern 1pt}_{1} , \ldots ,k{\kern 1pt}_{q} )^{{\prime}} = O(1) \) is the vector of unknown coefficients to be derived, and \( {\hat{\varvec{\upalpha}}} = {\varvec{\upalpha}}({\hat{\varvec{\uptheta }}}_{\text{ML}}) \) is a differentiable arbitrary function of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \). Two typical cases are \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \) and \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\upbeta}}}_{1} \), where \( n^{ - 1} {\varvec{\upbeta}}_{1} \) is the vector of the asymptotic biases of the elements of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) with the assumption that \( {\text{E}}_{\text{T}} ({\hat{\varvec{\uptheta }}}_{\text{ML}}) = {\varvec{\uptheta}}_{0} + n^{ - 1} {\varvec{\upbeta}}_{1} + O(n^{ - 2} ) \), and \( {\hat{\varvec{\upbeta}}}_{1} \) is a sample version of \( {\varvec{\upbeta}}_{1} \). As mentioned earlier, these cases give two sub-families of estimators. When \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \) and \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\upbeta}}}_{1} \), the following notations are used:

$$ {\hat{\varvec{\uptheta }}}_{{{\text{S}}k}} = \{ {\mathbf{I}}_{(q)} - n^{ - 1} {\text{diag}}({\mathbf{k}})\} {\hat{\varvec{\uptheta }}}_{\text{ML}} \quad {\text{and}}\quad {\hat{\varvec{\uptheta }}}_{{{\text{B}}k}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} - n^{ - 1} {\text{diag}}({\mathbf{k}}){\hat{\varvec{\upbeta}}}_{1} , \tag{7} $$

where S in \( {\hat{\varvec{\uptheta }}}_{{{\text{S}}k}} \) indicates the shrinkage when \( k_{i} \)’s are hopefully positive, B in \( {\hat{\varvec{\uptheta }}}_{{{\text{B}}k}} \) indicates bias adjustment which does not necessarily mean bias reduction, and \( {\mathbf{I}}_{(q)} \) is the \( q \times q \) identity matrix.

Recalling the definition of \( \bar{l}^{*} ({\varvec{\uptheta}}) \) in (1), let

for simplicity of notation, where \( {\mathbf{x}}^{ \langle j \rangle } = {\mathbf{x}} \otimes \cdots \otimes {\mathbf{x}} \) (j times of x) is the j-fold Kronecker product of x. Then,

where \( \bar{l}^{*} ({\varvec{\uptheta}}) \) does not include stochastic quantities \( {\mathbf{X}}^{*} \) or \( {\mathbf{Z}}^{*} \) until \( {\varvec{\uptheta}} \) is evaluated at, e.g., stochastic \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \).

The predictive expected log-likelihood of \( {\hat{\varvec{\uptheta }}}_{{{\text{A}}k}} \) under possible model misspecification is written as:

Note that

where \( {\varvec{\upalpha}}_{0} = {\varvec{\upalpha}}({\varvec{\uptheta}}_{0} ) \). Then, using the Taylor series expansion about \( {\varvec{\uptheta}}_{0} \) under regularity conditions with the assumptions \( {\hat{\varvec{\uptheta }}}_{\text{ML}} - {\varvec{\uptheta}}_{0} = O_{p} (n^{ - 1/2} ) \) and \( {\hat{\varvec{\upalpha}}} - {\varvec{\upalpha}}_{0} = O_{p} (n^{ - 1/2} ) \), we have

where \( \{ \cdot \}_{{O_{p} ( \cdot )}} \) and \( \{ \cdot \}_{O( \cdot )} \) indicate the orders of the quantities in braces for clarity.

Define

where \( \partial \bar{l}/\partial {\varvec{\uptheta}}_{0} = \partial \bar{l}({\varvec{\uptheta}},{\mathbf{X}}^{*} )/\partial {\varvec{\uptheta}}|_{{{\varvec{\uptheta}} = {\varvec{\uptheta}}_{0} }} = O_{p} (n^{ - 1/2} ) \) (compare with \( \partial \bar{l}^{*} /\partial {\varvec{\uptheta}}_{0} = {\mathbf{0}} \)). Let \( \hat{\bar{l}}_{\text{ML}}^{*} \equiv \bar{l}^{*} ({\hat{\varvec{\uptheta }}}_{\text{ML}}) \). Then, the expectation of (12) is

with

where \( {\text{vec}}( \cdot ) \) is the vectorizing operator stacking the columns of a matrix sequentially with \( {\text{vec}}^{\prime }( \cdot ) = \{ {\text{vec}}( \cdot )\} ^{\prime } \), \( {\text{acov}}(\, \cdot \,\,,\, \cdot \,) \) is the asymptotic covariance of order \( O(n^{ - 1} ) \) for two variables, \( {\text{diag}}({\mathbf{x}}) = {\text{diag}}({\mathbf{x}}^{{\prime}} ) \), \( ( \cdot )_{j} \) indicates the j-th element of a vector, and \( ( \cdot )_{j \cdot } \) is the j-th row of a matrix. Since the a-th element of

is

where \( ( \cdot )_{ab} \) indicates the (a, b)th element of a matrix, we have

where \( {\text{Diag}}( \cdot ) \) is the diagonal matrix whose diagonal elements are those of a matrix in parentheses and \( {\mathbf{1}}_{(q)} \) is the \( q \times 1 \) vector of 1’s.

It is known that \( {\varvec{\upbeta}}_{1} \) under possible model misspecification is given as:

where \( {{\partial^{2} \bar{l}}}/{{\partial {\varvec{\uptheta}}_{0} \partial {\varvec{\uptheta}}_{0}^{\prime} }} - {\text{E}}_{\text{T}} \left( {{{\partial^{2} \bar{l}}}/{{\partial {\varvec{\uptheta}}_{0} \partial {\varvec{\uptheta}}_{0}^{\prime} }}} \right) \) \( \equiv ({\mathbf{M}})_{{O_{p} (n^{ - 1/2} )}} \). The result of (18) is typically derived by the inverse expansion of \( \partial \bar{l}/\partial {\varvec{\uptheta}}|_{{{\varvec{\uptheta}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} }} = {\mathbf{0}} \) at \( {\varvec{\uptheta}} = {\varvec{\uptheta}}_{0} \) in terms of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} - {\varvec{\uptheta}}_{0} \) (see, e.g., Ogasawara, 2010, Eq. (2.4)). Using (18), the first term in brackets on the right-hand side of (14) becomes

where \( {\text{E}}_{\text{T}} ( \cdot )_{{ \to O(n^{ - 2} )}} \) indicates that the expectation is taken up to order \( O(n^{ - 2} ) \), and

3.2 The vector of maximizing coefficients

The vector \( {\mathbf{k}} \) maximizing (20) without the remainder term is given by

where \( {\text{diag}}^{ - 1} ({\varvec{\upalpha}}_{0} ) = \{ {\text{diag}}({\varvec{\upalpha}}_{0} )\}^{ - 1} \) with the assumption of its existence.

The maximized value without the remainder term is

As will be addressed later, (22) and (23) become considerably simplified in the case of the canonical parameters of the exponential family since \( {{\partial^{j} \bar{l}}}/{{(\partial {\varvec{\uptheta}}_{0} )^{ \langle j \rangle } }} = {{\partial^{j} \bar{l}^{*} }}/{{(\partial {\varvec{\uptheta}}_{0} )^{ \langle j \rangle } }}(j = 2,3, \ldots ) \) and \( {\mathbf{M}} = {\mathbf{O}} \). The result is summarized.

Result 1

The vector k of coefficients maximizing the asymptotic predictive expected log-likelihood averaged over observations up to order \( O(n^{ - 2} ) \) among the family of the estimators \( {\hat{\varvec{\uptheta }}}_{{{\text{A}}k}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} - n^{ - 1} {\text{diag}}({\mathbf{k}}){\hat{\varvec{\upalpha}}} \) using a differentiable vector function \( {\hat{\varvec{\upalpha}}} = {\varvec{\upalpha}}({\hat{\varvec{\uptheta }}}_{\text{ML}} ) \) under possible model misspecification is given by \( {\mathbf{k}}_{{{\text{A}}\hbox{max} }} \) in (22) with the maximized value being (23).

When a common coefficient k is used for all elements of \( {\mathbf{k}} \), the vector becomes \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \). The result in this case is summarized as:

Result 2

When \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \) in Result 1, \( {\mathbf{b}}_{\text{A}} \) and \( {\mathbf{A}}_{\text{A}} \) become scalars as:

respectively. Then, the maximizing k in \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \) is

with the maximized value without the remainder term being

In the case of the canonical parameters in the exponential family, M vanishes in Result 2 as in Result 1. When \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \), \( \partial {\varvec{\upalpha}}_{0}^{\prime} /\partial {\varvec{\uptheta}}_{0} \) becomes \( {\mathbf{I}}_{(q)} \) and can be omitted in Results 1 and 2. In this case, the contribution of the trace term in (26) to \( k_{{{\text{A}}\hbox{max} }} \) is

where \( {\text{acov}}_{{\theta_{0} }} ( {\hat{\varvec{\uptheta }}}_{\text{ML}} ) \) is the asymptotic covariance matrix of order \( O(n^{ - 1} ) \) for \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) under correct model specification, \( {\text{acov}}_{\text{T}} ({\hat{\varvec{\uptheta }}}_{\text{ML}}) \) is the corresponding matrix under possible model misspecification, and \( {\text{acov}}_{{\theta_{0} }}^{ - 1} ({\hat{\varvec{\uptheta }}}_{\text{ML}} ) = \{ {\text{acov}}_{{\theta_{0} }} ({\hat{\varvec{\uptheta }}}_{\text{ML}} )\}^{ - 1} \). Since the trace in (28) becomes q under correct model specification, the scaled trace value \( {\text{tr\{ }}n^{ - 1} \,{\text{acov}}_{{\theta_{0} }}^{ - 1} ({\hat{\varvec{\uptheta }}}_{\text{ML}} )n\,{\text{acov}}_{\text{T}} ({\hat{\varvec{\uptheta }}}_{\text{ML}} )\} /q \) is called the dispersion ratio in this paper. When the dispersion ratio is relatively large, \( k_{{{\text{A}}\hbox{max} }} \) in (26) tends to be large.

An advantage of the restricted vector \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \) over the unrestricted one is that the solution \( k_{{{\text{A}}\hbox{max} }} \) is available even when some but not all of the elements of \( {\varvec{\upalpha}}_{0} \) are zero. In the unrestricted case, \( {\text{diag}}^{ - 1} ({\varvec{\upalpha}}_{0} ) \) is unavailable unless \( {\mathbf{k}} \) is shortened by omitting its elements corresponding to the zero elements of \( {\varvec{\upalpha}}_{0} \).

When the model is correctly specified, we have \( - {\varvec{\Lambda}} = {\varvec{\Gamma}} = {\mathbf{I}}_{0} \), where \( {\mathbf{I}}_{0} \) is the Fisher information matrix per observation and the dispersion ratio becomes 1, which gives

Result 3

The vector k of coefficients maximizing the asymptotic predictive expected log-likelihood under the conditions of Result 1 and, in addition, under correct model specification is given by

yielding

where \( {\text{E}}_{{\theta_{0} }} ( \cdot ) \) indicates that the expectation is taken under correct model specification. The maximized value without the remainder term is

As in Result 2, we have

Result 4

When \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \) in Result 3 under correct model specification,

and

The maximized value without the remainder term is

As before, canonical parametrization in the exponential family gives simplification. Under non-canonical parametrization, the normal distribution is an example in which the term involving \( {\mathbf{M}} \) vanishes after taking the expectation, as will be illustrated later.

When \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \) with the vanishing M, \( k_{{{\text{A}}\hbox{max} }} \) of (34) becomes as simple as:

with the maximized value

Note that the term \( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) or \( {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) appears throughout the associated results for clarity and completeness though the term is irrelevant for deriving \( {\mathbf{k}}_{{{\text{A}}\hbox{max} }} \). So, the term is seen as a constant. When the term needs to be evaluated, the result given in the appendix can be used. The term of order \( O(n^{ - 1} ) \) in \( {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) was given in (2) as \( - n^{ - 1} q/2 \), where q is q times the unit dispersion ratio under correct model specification. Under possible model misspecification, the term is \( n^{ - 1} {\text{tr}}({\varvec{\Gamma \Lambda }}^{ - 1} )/2 \), where \( - {\text{tr}}({\varvec{\Gamma \Lambda }}^{ - 1} ) \) is also q times the dispersion ratio.
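
The relation between \( - {\text{tr}}({\varvec{\Gamma \Lambda }}^{ - 1} ) \) and the dispersion ratio can be sketched numerically. The Python code below is an illustration added here: it takes \( {\varvec{\Gamma}} \) and \( {\varvec{\Lambda}} \) as given numerical matrices (the values are hypothetical, not from the paper) and computes \( - {\text{tr}}({\varvec{\Gamma \Lambda }}^{ - 1} )/q \) with a small pure-Python matrix inverse. The function names are assumptions made for this example.

```python
def mat_inverse(a):
    """Invert a small square matrix (list of lists) by Gauss-Jordan elimination with pivoting."""
    q = len(a)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(q)]
           for i, row in enumerate(a)]
    for col in range(q):
        pivot = max(range(col, q), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(q):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[q:] for row in aug]


def dispersion_ratio(gamma, lam):
    """Return -tr(Gamma Lambda^{-1}) / q for q x q matrices given as lists of lists."""
    q = len(gamma)
    lam_inv = mat_inverse(lam)
    trace = sum(gamma[i][k] * lam_inv[k][i] for i in range(q) for k in range(q))
    return -trace / q


info = [[2.0, 0.5], [0.5, 1.0]]                      # hypothetical information matrix I_0
lam = [[-v for v in row] for row in info]            # Lambda = -I_0
print(dispersion_ratio(info, lam))                   # correct specification: Gamma = I_0
inflated = [[1.5 * v for v in row] for row in info]  # hypothetical over-dispersed Gamma
print(dispersion_ratio(inflated, lam))
```

With \( - {\varvec{\Lambda}} = {\varvec{\Gamma}} \) the ratio is 1, as stated for correct model specification; scaling \( {\varvec{\Gamma}} \) by a common factor scales the ratio by the same factor.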

The terms \( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) and \( {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) are associated with asymptotic bias corrections of higher order than those in the AIC and the Takeuchi information criterion (TIC; Takeuchi 1976; Stone 1977; Konishi and Kitagawa 2008), which were derived by Konishi and Kitagawa (2003) and Ogasawara (2017) in different expressions. In this bias correction, many of the terms in \( {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) and \( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) cancel since the amount of bias correction is \( {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}} )_{{ \to O(n^{ - 2} )}} - {\text{E}}_{{\theta_{0} }} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) or \( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}} )_{{ \to O(n^{ - 2} )}} - {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \).

The maximizing coefficients in Results 1–4 depend on unknown population values, e.g., \( {\varvec{\upalpha}}_{0} \). Although their sample counterparts are available, it can be shown that when the population values are replaced by their estimates, the associated asymptotic results do not hold. This difficulty can be solved in several ways, which will be addressed after (46) in the next section.

4 Examples

In this section, examples are given using typical distributions in statistics. The results in Sects. 4.1 to 4.4 are obtained under correct model specification while those in Sect. 4.5 are shown under model misspecification. In Sect. 4.4, the multi-parameter cases are illustrated using the normal distribution. In these examples, the corresponding results derived by minimizing \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) are also given for comparison. Ogasawara (2015) obtained the coefficient of the bias adjustment minimizing the \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) for a single parameter and a parameter in the parameter vector. Ogasawara (2014b) extended the result to the multi-parameter case, where all the parameters in a vector parameter are simultaneously considered. Ogasawara (2014b) used \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \) (see Sect. 1) and the linear predictor, where only a single coefficient k was used. For comparison to the results in Sect. 4.4, Ogasawara’s (2014b) result is extended using unconstrained vector k of coefficients and an arbitrary vector \( {\hat{\varvec{\upalpha}}} \) as follows.

Let \( {\hat{\varvec{\uptheta }}}_{{{\text{A}}k}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} - n^{ - 1} {\text{diag}}({\mathbf{k}}){\hat{\varvec{\upalpha}}} \) as before. Then, under possible model misspecification

where \( n^{ - 1} {\varvec{\upbeta}}_{2} \) is the \( q \times 1 \) vector of the asymptotic variances of order \( O(n^{ - 1} ) \) for the elements of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \), and \( n^{ - 2} {\varvec{\upbeta}}_{\Delta 2} \) is the corresponding vector of the added higher-order asymptotic variances of order \( O(n^{ - 2} ) \). Then,

Result 5

The vector of the coefficients minimizing \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \) defined in (38) under possible model misspecification is

with the minimized \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \) being

Result 6

When \( {\mathbf{k}} = k{\mathbf{1}}_{(q)} \) in Result 5,

with the minimized \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \) being

Result 5 is new. Result 6 is slightly more general than that in Ogasawara (2014b) in that an arbitrary vector \( {\hat{\varvec{\upalpha}}} \) is used. When \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \) and \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\upbeta}}}_{1} \), \( {\mathbf{k}}_{{{\text{A}}\hbox{min} }} \) is written as \( {\mathbf{k}}_{{{\text{S}}\hbox{min} }} \) and \( {\mathbf{k}}_{{{\text{B}}\hbox{min} }} \), respectively.

As addressed earlier, canonical parametrization in the exponential family gives simplified results. Two additional results by this parametrization under correct model specification are given. For this case, it is known that

(see, e.g., Ogasawara, 2013), where \( {\mathbf{I}}_{0}^{{({\text{D}}j)}} = - {{\partial^{j + 2} \bar{l}}}/{{\partial {\varvec{\uptheta}}_{0} (\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle j + 1 \rangle } }}\,\,(j = 1,2, \ldots ) \) with j = 2 for later use, which is the j-th derivative of the information matrix evaluated at the population value. From (43), after some algebra, we have

Recalling Result 4 and defining \( k_{{{\text{B}}\hbox{max} }} ,\,\,b_{\text{B}} \) and \( a_{\text{B}} \) as \( k_{{{\text{A}}\hbox{max} }} ,\,\,b_{\text{A}} \) and \( a_{\text{A}} \), respectively, when \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\upbeta}}}_{1} \) (\( k_{{{\text{S}}\hbox{max} }} ,\,\,b_{\text{S}} \) and \( a_{\text{S}} \) are similarly defined for later use when \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \)), (44) gives

In the case of a single parameter, it is known that for \( \hat{\theta }_{{{\text{B}}k}} = \hat{\theta }_{\text{ML}} - n^{ - 1} k\hat{\beta }_{1} \),

(Ogasawara, 2013, Result 2; Ogasawara, 2014a, Erratum), where [k/ss] stands for the ratio of the excess kurtosis to the squared skewness of the single sufficient statistic, and \( i_{0} ,\,\,\,i_{0}^{{({\text{D}}1)}} \) and \( i_{0}^{{({\text{D}}2)}} \) are scalar counterparts of \( {\mathbf{I}}_{0} ,\,\,\,{\mathbf{I}}_{0}^{{({\text{D}}1)}} \) and \( {\mathbf{I}}_{0}^{{({\text{D2}})}} \), respectively.

Note that the minimizing coefficients in Results 5 and 6 given above generally depend on unknown population values, which are not available in practice and cannot automatically be replaced by their sample counterparts. The situation is similar to that of the maximizing coefficients in Sect. 3. This difficulty can be solved in several ways (see Ogasawara 2014b, pp. 203–204; Ogasawara 2016, Section 7). That is, (i) in some fortunate cases (e.g., Examples 1.1 to 2.3, 4.2, 4.4 and 4.5 shown later), the minimizing coefficients do not depend on unknown values. (ii) Some cases have lower/upper bounds for the minimizing coefficients (e.g., Example 3.1 shown later). (iii) In many cases, we have prior information on the range of unknown values. When the minimizing coefficients are monotonic functions of the population values, the range gives bounds similar to those of (ii). (iv) When another independent sample of size \( O(n) \) is available as in cross validation, the estimated minimizing coefficients using the second sample can be used without changing the asymptotic results given in Results 5 and 6.

4.1 Gamma distribution

Example 1.1

The canonical parameter \( ( - 1/\beta ) \), when the shape parameter \( (\alpha ) \) is given, with its biased MLE in the gamma distribution.

The density of the gamma distribution using the scale parameter \( \beta \), given the shape parameter \( \alpha \), is \( f(x^* = x|\alpha ,\beta ) = {{{x^{\alpha - 1}} \exp ( - x/{\beta} )}}/{{{\text{\{}\beta^{\alpha } \varGamma (\alpha ){\text{\}}}}}} \,\,\,(x > 0)\), where the notations similar to \( {\hat{\varvec{\upalpha}}} \) and \( {\varvec{\upbeta}}_{1} \) used earlier are used for their familiarity as long as confusion does not occur. The case of \( \alpha = 1 \) gives the exponential distribution. When \( \alpha \) is a positive integer, we have the Erlangian distribution.

The canonical parameter is \( \theta = - 1/\beta \) (negative rate). Then, \( f(x^* = x|\alpha ,\theta ) = x^{\alpha - 1} ( - \theta )^{\alpha } \exp (\theta x)/\varGamma (\alpha )\,\,\,(x > 0) \). From, e.g., Ogasawara (2014a, Example 3), \( \hat{\theta }_{\text{ML}} = - \alpha /\bar{x},\,\,\,\,\beta_{1} = \theta_{0} /\alpha ,\,\,\,i_{0} = \text{var} (x^{*} ) = \alpha /\theta_{0}^{2} , \) \( \beta_{2} = i_{0}^{ - 1} = \theta_{0}^{2} /\alpha \) and [k/ss] = 3/2, where \( \bar{x} \) is the sample mean. Then,

1. \( \hat{\theta }_{\text{Smax}} \) (see (36)): The notation \( \hat{\theta }_{\text{Smax}} \) synonymous with \( \hat{\theta }_{{{\text{S}}k_{S\hbox{max} } }} \) is used for simplicity with other simple ones defined similarly:

   $$ k_{\hbox{Smax} } = 1/(\theta_{0}^{2} i_{0} ) = 1/\alpha . \tag{47} $$

2. \( \hat{\theta }_{\text{Bmax}} \) (see (34)): Use \( \partial \beta_{1} /\partial \theta_{0} = 1/\alpha \), then \( k_{\hbox{Bmax} } = {{(\partial \beta_{1} /\partial \theta_{0} )}}/({{\beta_{1}^{2} i_{0} }}) = ({1/\alpha })/({1/\alpha }) = 1 \). Since \( \hat{\beta }_{1} = \hat{\theta }_{\text{ML}} /\alpha \), we find that

   $$ \hat{\theta }_{\text{Smax}} = \hat{\theta }_{\text{Bmax}} = \left( {1 - \frac{{n^{ - 1} }}{\alpha }} \right)\hat{\theta }_{\text{ML}} . \tag{48} $$

3. \( \hat{\theta }_{\text{Smin}} \) (see (41)): \( k_{\text{Smin}} = \theta_{0}^{ - 2} (\beta_{2} + \theta_{0} \beta_{1} ) = \theta_{0}^{ - 2} (\theta_{0}^{2} \alpha^{ - 1} + \theta_{0} \theta_{0} \alpha^{ - 1} ) = 2/\alpha \).

4. \( \hat{\theta }_{\text{Bmin}} \) (see (41)): Since \( \hat{\beta }_{1} = \hat{\theta }_{\text{ML}} /\alpha \), we have \( k_{\text{Bmin}} = \alpha k_{\text{Smin}} = 2 \), which is also given by \( k_{\text{Bmin}} = 5 - 2[{\text{k/ss}}] = 2 \).

From 3 and 4,

$$ \hat{\theta }_{\text{Smin}} = \hat{\theta }_{\text{Bmin}} = \left( {1 - \frac{{2n^{ - 1} }}{\alpha }} \right)\hat{\theta }_{\text{ML}} . \tag{49} $$

It is found that the amount of the correction in (49) is two times that of (48). The relatively larger correction in \( \hat{\theta }_{\text{Smin}} \) and \( \hat{\theta }_{\text{Bmin}} \) than in \( \hat{\theta }_{\text{Smax}} \) and \( \hat{\theta }_{\text{Bmax}} \) is a typical tendency. It is to be noted that the formulas of (48) and (49) do not depend on the unknown canonical parameter \( \theta_{0} \).
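
The two multipliers in this example can be checked against exact finite-sample calculations. The Python sketch below is an illustration added here, not the paper's derivation. It assumes correct model specification and uses the facts that the sum of n gamma observations with known shape \( \alpha \) is gamma distributed with shape \( n\alpha \), and that \( \hat{\theta }_{\text{ML}} \) is proportional to the reciprocal of that sum. Only the parts of the criteria that depend on the multiplier c in \( c\hat{\theta }_{\text{ML}} \) are evaluated, and the function names are hypothetical.

```python
import math


def pred_loglik_part(c, n, alpha):
    """c-dependent part of the exact E[lbar*(c * theta_hat_ML)] for the canonical gamma parameter."""
    m = n * alpha                        # shape of the sum of the n observations
    return alpha * math.log(c) - c * alpha * m / (m - 1.0)


def relative_mse(c, n, alpha):
    """Exact E[(c * theta_hat_ML - theta_0)^2] / theta_0^2; requires n * alpha > 2."""
    m = n * alpha
    return c ** 2 * m ** 2 / ((m - 1.0) * (m - 2.0)) - 2.0 * c * m / (m - 1.0) + 1.0


n, alpha = 20, 2.5
grid = [0.90 + 0.0001 * i for i in range(2001)]      # candidate multipliers c in [0.90, 1.10]
c_max = max(grid, key=lambda c: pred_loglik_part(c, n, alpha))
c_min = min(grid, key=lambda c: relative_mse(c, n, alpha))

print(c_max, 1.0 - 1.0 / (n * alpha))   # grid maximizer vs. multiplier 1 - 1/(n * alpha)
print(c_min, 1.0 - 2.0 / (n * alpha))   # grid minimizer vs. multiplier 1 - 2/(n * alpha)
```

In this setting the grid optima agree with \( 1 - n^{ - 1} /\alpha \) and \( 1 - 2n^{ - 1} /\alpha \) up to the grid resolution, which illustrates the twofold difference in the amount of correction.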

Example 1.2

The scale parameter \( (\beta ) \), when the shape parameter \( (\alpha ) \) is given in the gamma distribution (a non-canonical parameter with its unbiased MLE).

Since \( {\text{E}}_{{\theta_{0} }} (x^{*} ) = \alpha \beta_{0} \) using the population scale parameter \( \beta_{0} \), the scale parameter is a scaled expectation parameter. So, only \( k_{\hbox{Smax} } \) and \( k_{\hbox{Smin} } \) are obtained. Preliminary results are

1. \( \hat{\theta }_{\text{Smax}} \) (see (34)):

   $$ k_{\text{Smax} } = \frac{1}{{\beta_{0}^{2} (\alpha \beta_{0}^{ - 2} )}}\left\{ {\beta_{0} \left( { - \frac{2\alpha }{{\beta_{0}^{3} }}} \right)\frac{{\beta_{0}^{2} }}{\alpha } + 1} \right\} = - \frac{1}{\alpha } \tag{51} $$

   and

   $$ \hat{\theta }_{\text{Smax}} = (1 - n^{ - 1} k_{{{\text{S}}\hbox{max} }} )\hat{\theta }_{\text{ML}} = \left( {1 + \frac{{n^{ - 1} }}{\alpha }} \right)\hat{\theta }_{\text{ML}} = \left( {1 + \frac{{n^{ - 1} }}{\alpha }} \right)\frac{{\bar{x}}}{\alpha } . \tag{52} $$

2. \( \hat{\theta }_{\text{Smin}} \) (see (41)):

   $$ k_{\text{Smin}} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = \beta_{2} \theta_{0}^{ - 2} = 1/\alpha \tag{53} $$

   and

   $$ \hat{\theta }_{\text{Smin}} = \left( {1 - \frac{{n^{ - 1} }}{\alpha }} \right)\hat{\theta }_{\text{ML}} = \left( {1 - \frac{{n^{ - 1} }}{\alpha }} \right)\frac{{\bar{x}}}{\alpha }. \tag{54} $$

Note that (52) is an expected result since (52) should be asymptotically equal to the negative of the reciprocal of (48). The results are puzzling in that \( \hat{\theta }_{\text{Smax}} \) in (52) is an inflated estimator while \( \hat{\theta }_{\text{Smin}} \) in (54) is a shrinkage estimator. Since the biases added in (52) and (54) are equal in magnitude and opposite in sign, (54) looks better than (52) because the variance of (54) is smaller than that of (52). Note, however, that this is based on the viewpoint of the Euclidean distance. When the viewpoint of the Kullback–Leibler distance (see (4)) is employed, (52) is better than (54).
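The contrast between the two viewpoints can be checked numerically. The following is a minimal sketch, not taken from the paper: it assumes that the gamma model with known shape is correct, so that \( \bar{x} \) follows the gamma distribution with shape \( n\alpha \) and scale \( \beta_{0} /n \), and it uses closed-form expressions derived here under that assumption. The function names `mse_ratio` and `pred_loglik` and the values of `n` and `alpha` are illustrative only.

```python
import math

def mse_ratio(c, n, alpha):
    """Exact MSE of c * xbar / alpha divided by beta0**2 (gamma model, known shape)."""
    # squared bias (c - 1)^2 plus variance c^2 / (n * alpha), both in units of beta0^2
    return (c - 1.0) ** 2 + c ** 2 / (n * alpha)

def pred_loglik(c, n, alpha):
    """Exact predictive expected log-likelihood of c * xbar / alpha,
    up to an additive constant not depending on c (requires n * alpha > 1)."""
    # uses E(1 / xbar) = n / {beta0 * (n * alpha - 1)}
    return -alpha * math.log(c) - alpha ** 2 * n / (c * (n * alpha - 1.0))

n, alpha = 10, 2.0                      # illustrative values
c_inflate = 1.0 + 1.0 / (n * alpha)     # factor in (52)
c_shrink = 1.0 - 1.0 / (n * alpha)      # factor in (54)

print("MSE / beta0^2:", mse_ratio(c_inflate, n, alpha), mse_ratio(c_shrink, n, alpha))
print("pred. log-lik:", pred_loglik(c_inflate, n, alpha), pred_loglik(c_shrink, n, alpha))
```

Under these assumptions the shrinkage factor gives the smaller mean square error, while the inflation factor gives the larger predictive expected log-likelihood.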

Example 1.3

The exponential distribution under arbitrary parametrization.

This case partially reduces to those in Examples 1.1 and 1.2 when \( \alpha = 1 \). That is, under canonical parametrization \( (\theta = 1/\beta ) \) using the rate parameter \( \theta \),

1. $$ \hat{\theta }_{\text{Smax}} = \hat{\theta }_{\text{Bmax}} = (1 - n^{ - 1} )\hat{\theta }_{\text{ML}} = (1 - n^{ - 1} )/\bar{x} . $$(55)

2. $$ \hat{\theta }_{\text{Smin}} = \hat{\theta }_{\text{Bmin}} = (1 - 2n^{ - 1} )\hat{\theta }_{\text{ML}} = (1 - 2n^{ - 1} )/\bar{x} . $$(56)

The pleasantly simple result of (55) was obtained by Takezawa (2014; see also 2015) as an exact one in that (55) maximizes the exact predictive expected log-likelihood among the family of \( k_{n} \hat{\theta }_{\text{ML}} \) rather than the asymptotic expectation. Recall that the parametrization \( \theta = - 1/\beta \) was used in Example 1.1. When \( \theta = 1/\beta \) is used, the reflected observable variable \( - x^{*} \) is to be used, if necessary, without changing the essential results.

When the scale or expectation parameter \( \beta \) is used, from (52) and (54) with \( \alpha = 1 \), we have

3. $$ \hat{\theta }_{\text{Smax}} = (1 + n^{ - 1} )\hat{\theta }_{\text{ML}} = (1 + n^{ - 1} )\bar{x} , $$(57)

4. $$ \hat{\theta }_{\text{Smin}} = (1 - n^{ - 1} )\hat{\theta }_{\text{ML}} = (1 - n^{ - 1} )\bar{x} . $$(58)

Again simple results are obtained. Note that Takezawa (2014, 2015) gave only (55) among (55) to (58). However, (57) is not an exact one. For illustration, we derive the exact solution \( k_{n\,\hbox{max} } \) maximizing the exact predictive expected log-likelihood among the family of \( k_{n} \hat{\theta }_{\text{ML}} = k_{n} \hat{\beta }_{\text{ML}} \).

First, we have

where \( x_{ \cdot } \) is the variable corresponding to the sum of n observed variables, which follows the Erlangian distribution with the parameters n and \( \beta \).

Differentiating (59) with respect to \( k_{n} \) and setting the result to zero, we obtain

giving

The above result, obtained directly for illustration, can also be derived from Takezawa’s (2014) exact solution as:

where \( \hat{\theta }_{\text{ML}}^{ - 1} \) is the MLE of the canonical parameter. Equation (62) is based on the invariant property of the likelihood irrespective of parametrization.

When \( \beta^{*} \equiv \log \beta \) (the log scale or the log inter-event time parameter) is used,

From (63), \( k_{n\hbox{max} } = 1 - \hat{\theta }_{\text{ML}}^{ - 1} \log (1 - n^{ - 1} ) \) is obtained, though the additive exact solution \( \hat{\theta }_{\text{ML}} - \log (1 - n^{ - 1} ) \) and its asymptotic approximation \( \hat{\theta }_{\text{ML}} + n^{ - 1} \) are simpler.
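The exact and asymptotic adjustments under the three parametrizations can be put side by side. The sketch below is an illustration under the assumption that the exponential model is correct and \( n > 1 \); it relies only on the exact rate factor in (55), the invariance of the likelihood under reparametrization, and the additive log-scale solution quoted above. The function names are hypothetical.

```python
import math

def exact_factors(n):
    """Exact optimal adjustments for the exponential model (n > 1).

    rate:      multiply 1 / xbar by (1 - 1/n), cf. (55)
    scale:     multiply xbar by the reciprocal of the rate factor (invariance)
    log_scale: add -log(1 - 1/n) to log(xbar)
    """
    rate = 1.0 - 1.0 / n
    return {"rate": rate, "scale": 1.0 / rate, "log_scale": -math.log(rate)}

def asymptotic_factors(n):
    """First-order counterparts: (55), (57) and the additive term 1/n."""
    return {"rate": 1.0 - 1.0 / n, "scale": 1.0 + 1.0 / n, "log_scale": 1.0 / n}

for n in (5, 20, 100):
    ex, asy = exact_factors(n), asymptotic_factors(n)
    # gaps between exact and asymptotic adjustments shrink as n grows
    print(n, ex["scale"] - asy["scale"], ex["log_scale"] - asy["log_scale"])
```

All three exact adjustments describe the same fitted distribution, which is why only the scale and log-scale versions differ from their first-order approximations.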

When we again compare the results in (55) and (57), (55) gives the unbiased estimator up to order \( O(n^{ - 1} ) \) and simultaneously gives variance reduction. On the other hand, bias and variance are both inflated in (57). It is of interest to see that the correction factor \( (1 - n^{ - 1} ) \) in (55) and (58) is the same though the parametrization is different.

4.2 Poisson distribution

Example 2.1

The canonical parameter \( (\log \lambda ) \) in the Poisson distribution with its biased MLE.

Let \( \lambda \) be the source or the expectation parameter in the Poisson distribution. Then, \( \theta = \log \lambda \) is the canonical parameter with its probability function \( \Pr (x^{*} = x|\theta ) = \exp( - e^{\theta } )e^{\theta x} /x! = \exp(\theta x - e^{\theta } )/x!\,\,\,(x = 0,1,2, \ldots ) \). Preliminary results are

(Ogasawara 2014a, Example 2).

1. \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (36)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\theta_{0}^{2} i_{0} }} = \frac{1}{{\theta_{0}^{2} e^{{\theta_{0} }} }}. $$(65)

2. \( \hat{\theta }_{{{\text{B}}\hbox{max} }} \) (see (34)): Using \( \partial \beta_{1} /\partial \theta_{0} = e^{{ - \theta_{0} }} /2 \), we have

$$ k_{{{\text{B}}\hbox{max} }} = \frac{{\partial \beta_{1} /\partial \theta_{0} }}{{\beta_{1}^{2} i_{0} }} = \frac{{e^{{ - \theta_{0} }} /2}}{{(e^{{ - \theta_{0} }} /2)^{2} e^{{\theta_{0} }} }} = 2 . $$(66)

3. \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ \begin{aligned} k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = e^{{ - \theta_{0} }} \theta_{0}^{ - 2} - (e^{{ - \theta_{0} }} /2)\theta_{0}^{ - 1} \hfill \\ \,\,\,\,\,\,\,\,\,\,\, = e^{{ - \theta_{0} }} \theta_{0}^{ - 1} \{ \theta_{0}^{ - 1} - (1/2)\} = k_{{{\text{S}}\hbox{max} }} - (e^{{ - \theta_{0} }} /2)\theta_{0}^{ - 1} \,\,\,(\theta_{0} \ne 0). \hfill \\ \end{aligned} $$(67)

4. \( \hat{\theta }_{{{\text{B}}\hbox{min} }} \) (see (46)):

$$ k_{{{\text{B}}\hbox{min} }} = 5 - 2[{\text{k/ss}}] = 3. $$(68)

Note that as in the gamma distribution, the amount of correction in (68) is larger than that of (66). However, (67) is smaller (larger) than (65) when \( \theta_{0} > 0\,\,\,(\theta_{0} < 0) \). The value of (67) can be negative, zero and positive.
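The sign behaviour of (67) is easy to see by evaluating (65) to (68) at a few values of \( \theta_{0} \). The sketch below simply codes those four formulas; the function name and the chosen values of \( \theta_{0} \) are illustrative assumptions.

```python
import math

def poisson_canonical_coeffs(theta0):
    """Coefficients (65)-(68) for theta = log(lambda); theta0 must be nonzero."""
    k_smax = 1.0 / (theta0 ** 2 * math.exp(theta0))        # (65)
    k_bmax = 2.0                                           # (66)
    k_smin = k_smax - math.exp(-theta0) / (2.0 * theta0)   # (67)
    k_bmin = 3.0                                           # (68)
    return k_smax, k_bmax, k_smin, k_bmin

for theta0 in (-1.0, 1.0, 2.0, 3.0):
    print(theta0, poisson_canonical_coeffs(theta0))
```

From the factored form in (67), \( k_{{{\text{S}}\hbox{min} }} \) vanishes at \( \theta_{0} = 2 \), is negative for \( \theta_{0} > 2 \), and is positive otherwise.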

Example 2.2

The source or the expectation parameter \( (\lambda ) \) in the Poisson distribution (a non-canonical parameter).

Basic results are

1. \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (34)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\lambda_{0}^{2} \lambda_{0}^{ - 1} }}\left\{ {\lambda_{0} \left( { - \frac{1}{{\lambda_{0}^{2} }}} \right)\lambda_{0} + 1} \right\} = 0. $$(70)

2. \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = \beta_{2} \theta_{0}^{ - 2} = \lambda_{0} \lambda_{0}^{ - 2} = \lambda_{0}^{ - 1} . $$(71)

Equation (70) indicates that the MLE is optimal. The amount of correction in \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) is larger than that of \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \).

Example 2.3

The parameter of the mean inter-event time \( (\beta = 1/\lambda ) \) (a non-canonical parameter with its biased MLE).

Preliminary results are

(see Ogasawara 2015).

1. \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (34)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\beta_{0}^{2} \beta_{0}^{ - 3} }}\left\{ {\beta_{0} \left( { - \frac{1}{{\beta_{0}^{4} }}} \right)\beta_{0}^{3} + 1} \right\} = 0. $$(73)

2. \( \hat{\theta }_{{{\text{B}}\hbox{max} }} \) (see (34)): Since \( \partial \beta_{1} /\partial \theta_{0} = 2\beta_{0} \), we have

$$ k_{{{\text{B}}\hbox{max} }} = \frac{1}{{\beta_{0}^{4} \beta_{0}^{ - 3} }}\left\{ {\beta_{0}^{2} \left( { - \frac{1}{{\beta_{0}^{4} }}} \right)\beta_{0}^{3} + 2\beta_{0} } \right\} = 1. $$(74)

3. \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = \beta_{0}^{3} \beta_{0}^{ - 2} + \beta_{0}^{2} \beta_{0}^{ - 1} = 2\beta_{0} . $$(75)

4. \( \hat{\theta }_{{{\text{B}}\hbox{min} }} \) (see (41)): Using \( \alpha_{0} = \beta_{1} = \beta_{0}^{2} \) under correct model specification,

$$ k_{{{\text{B}}\hbox{min} }} = \frac{1}{{\beta_{1}^{2} }}\{ n\,{\text{acov}}_{{\theta_{0} }} (\hat{\theta }_{\text{ML}} ,\hat{\beta }_{1} ) + \beta_{1}^{2} \} = 2 + 1 = 3 . $$(76)

Note that again \( k_{{{\text{S}}\hbox{max} }} = 0 \) as is expected.

4.3 Bernoulli distribution

Example 3.1

The canonical parameter (logit) with its biased MLE.

Basic results are

(Ogasawara 2014a, Example 1), where \( \kappa_{j} ( \cdot ) \) indicates the j-th cumulant of a variable; \( \hbox{sk}( \cdot ) \) is the skewness of a variable; and \( \hbox{kt}( \cdot ) \) is the excess kurtosis of a variable.

1. \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (36)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\eta_{0}^{2} \pi_{0} (1 - \pi_{0} )}}. $$(78)

2. \( \hat{\theta }_{{{\text{B}}\hbox{max} }} \) (see (34)): Since

$$ \begin{aligned} \frac{{\partial \beta_{1} }}{{\partial \theta_{0} }} = - \frac{1}{2}\frac{\partial }{{\partial \eta_{0} }}\frac{{1 - 2\pi_{0} }}{{\pi_{0} (1 - \pi_{0} )}} = \frac{{\pi_{0} (1 - \pi_{0} )}}{{\pi_{0} (1 - \pi_{0} )}} + \frac{{(1 - 2\pi_{0} )^{2} \pi_{0} (1 - \pi_{0} )}}{{2\{ \pi_{0} (1 - \pi_{0} )\}^{2} }} \hfill \\ = 1 + \frac{{(1 - 2\pi_{0} )^{2} }}{{2\pi_{0} (1 - \pi_{0} )}} > 1, \hfill \\ \end{aligned} $$

$$ \begin{aligned} k_{{{\text{B}}\hbox{max} }} = \frac{{\partial \beta_{1} /\partial \theta_{0} }}{{\beta_{1}^{2} i_{0} }} = \left\{ {1 + \frac{{(1 - 2\pi_{0} )^{2} }}{{2\pi_{0} (1 - \pi_{0} )}}} \right\}/\left[ {\left\{ {\frac{{1 - 2\pi_{0} }}{{2\pi_{0} (1 - \pi_{0} )}}} \right\}^{2} \pi_{0} (1 - \pi_{0} )} \right] \hfill \\ = \frac{{2\{ 2\pi_{0} (1 - \pi_{0} ) + (1 - 2\pi_{0} )^{2} \} }}{{(1 - 2\pi_{0} )^{2} }} = 2 + \frac{{4\pi_{0} (1 - \pi_{0} )}}{{(1 - 2\pi_{0} )^{2} }} > 2\,\,\,(\pi_{0} \ne 0,\,\,0.5,\,\,\,1). \hfill \\ \end{aligned} $$(79)

3. \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ \begin{aligned} k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = \{ \pi_{0} (1 - \pi_{0} )\}^{ - 1} \eta_{0}^{ - 2} - \frac{{1 - 2\pi_{0} }}{{2\pi_{0} (1 - \pi_{0} )}}\eta_{0}^{ - 1} \hfill \\ = \frac{1}{{\pi_{0} (1 - \pi_{0} )\eta_{0}^{2} }}\left\{ {1 - \frac{1}{2}(1 - 2\pi_{0} )\eta_{0} } \right\} > \frac{1}{{\pi_{0} (1 - \pi_{0} )\eta_{0}^{2} }} = k_{{{\text{S}}\hbox{max} }} \hfill \\ \,(\pi_{0} \ne 0,\,\,0.5,\,\,\,1;\,\,\,(1 - 2\pi_{0} )\eta_{0} < 0). \hfill \\ \end{aligned} $$(80)

4. \( \hat{\theta }_{{{\text{B}}\hbox{min} }} \) (see (46)):

$$ k_{{{\text{B}}\hbox{min} }} = 5 - 2[{\text{k/ss}}] = 5 - 2\left\{ {1 - \frac{{2\pi_{0} (1 - \pi_{0} )}}{{(1 - 2\pi_{0} )^{2} }}} \right\} = 3 + \frac{{4\pi_{0} (1 - \pi_{0} )}}{{(1 - 2\pi_{0} )^{2} }} > 3 $$(81)

In the above results, the relative sizes of corrections by optimal coefficients are found to be similar to those in the Poisson distribution. All the optimal coefficients depend on the unknown parameter. The lower bound 3 for \( k_{{{\text{B}}\hbox{min} }} \) is a known one while the lower bound 2 for \( k_{{{\text{B}}\hbox{max} }} \) is a new one.
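The bounds and orderings stated for the Bernoulli case can be verified numerically from the final expressions in (78) to (81). The sketch below is only an illustration; the function name and the chosen values of \( \pi_{0} \) are assumptions made here.

```python
import math

def bernoulli_canonical_coeffs(pi0):
    """Coefficients (78)-(81) for the logit; requires 0 < pi0 < 1 and pi0 != 0.5."""
    eta0 = math.log(pi0 / (1.0 - pi0))           # canonical parameter (logit)
    v = pi0 * (1.0 - pi0)                        # Bernoulli variance
    r = 4.0 * v / (1.0 - 2.0 * pi0) ** 2         # common term in (79) and (81)
    k_smax = 1.0 / (eta0 ** 2 * v)                               # (78)
    k_bmax = 2.0 + r                                             # (79)
    k_smin = k_smax * (1.0 - 0.5 * (1.0 - 2.0 * pi0) * eta0)     # (80)
    k_bmin = 3.0 + r                                             # (81)
    return k_smax, k_bmax, k_smin, k_bmin

for pi0 in (0.1, 0.3, 0.8):
    print(pi0, bernoulli_canonical_coeffs(pi0))
```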

Example 3.2

The source or the expectation parameter \( (\pi ) \)(a non-canonical parameter).

Basic results are

1. \( \hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (34)):

$$ \begin{aligned} k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\pi_{0}^{2} \{ \pi_{0} (1 - \pi_{0} )\}^{ - 1} }}\left[ {\pi_{0} \left\{ { - \frac{{1 - 2\pi_{0} }}{{\pi_{0}^{2} (1 - \pi_{0} )^{2} }}} \right\}\pi_{0} (1 - \pi_{0} ) + 1} \right] \hfill \\ = \frac{{1 - \pi_{0} }}{{\pi_{0} }}\left( { - \frac{{1 - 2\pi_{0} }}{{1 - \pi_{0} }} + 1} \right) = 1. \hfill \\ \end{aligned} $$(83)

2. \( \hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = \beta_{2} \theta_{0}^{ - 2} = \pi_{0} (1 - \pi_{0} )\pi_{0}^{ - 2} = \frac{{1 - \pi_{0} }}{{\pi_{0} }} > 0\,\,\,\,(\pi_{0} \ne 0,\,\,1). $$(84)

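We find that \( k_{{{\text{S}}\hbox{max} }} \) does not depend on the unknown parameter. The relative size of \( k_{{{\text{S}}\hbox{max} }} \) and \( k_{{{\text{S}}\hbox{min} }} \) depends on the unknown parameter; comparing (83) with (84), it is given as follows:

$$ k_{{{\text{S}}\hbox{min} }} > k_{{{\text{S}}\hbox{max} }} \,\,(\pi_{0} < 0.5),\,\,\,\,k_{{{\text{S}}\hbox{min} }} = k_{{{\text{S}}\hbox{max} }} \,\,(\pi_{0} = 0.5),\,\,\,\,k_{{{\text{S}}\hbox{min} }} < k_{{{\text{S}}\hbox{max} }} \,\,(\pi_{0} > 0.5). $$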

4.4 The univariate normal distribution

For convenience, the variance parameter \( \sigma^{2} \) is also denoted by \( \phi \). Preliminary results are

where

is used.

Example 4.1

The parameters \( \mu \) and \( \phi \,\,( = \sigma^{2} ) \) with unconstrained k (a non-canonical parameter vector with its partially biased MLE).

Since \( \,{\varvec{\upbeta}}_{1} = (0, - \phi_{0} )^{{\prime}} ,\,\,\,{\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{max} }} \) and \( \,{\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{min} }} \) are not available.

1. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{S}}\hbox{max} }} \) (see (31)):

where \( \,c_{\text{v}} \) is the coefficient of variation assuming \( \mu_{0} \ne 0 \). It is found that the second element −4 of \( {\mathbf{k}}_{{{\text{S}}\hbox{max} }} \) gives an estimator asymptotically equal to the estimator called “the third variance” by Takezawa (2012, Eq. (21)) using a different method though with a common predictive viewpoint. Note that the adjusted estimator for a variance in (88) and Takezawa’s third variance are inflated estimators over the usual unbiased one and the normal-theory MLE.

2. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{S}}\hbox{min} }} \) (see (39)):

It is of interest to see that both the elements of \( \,{\mathbf{k}}_{{{\text{S}}\hbox{max} }} \) and \( \,{\mathbf{k}}_{\hbox{Smin} } \) for \( \mu_{0} \) are the same and depend on only \( \,c_{\text{v}} \). Since \( c_{\text{V}}^{2} \) in (88) and (89) is positive under the conditions \( \mu_{0} \ne 0 \) and \( \phi_{0} = \sigma_{0}^{2} > 0 \), the adjusted estimator \( (1 - n^{ - 1} c_{\text{V}}^{2} )\bar{x} \) is a shrinkage estimator when \( 1 - n^{ - 1} c_{\text{V}}^{2} \ge 0 \). If \( \mu_{0} \) is close to zero, \( c_{\text{V}}^{2} \) becomes very large. While this may seem odd, the result is reasonable, which can be explained as follows. When \( \mu_{0} \) is close to zero, the large \( c_{\text{V}}^{2} \) gives small \( 1 - n^{ - 1} c_{\text{V}}^{2} \) as long as \( 1 - n^{ - 1} c_{\text{V}}^{2} \ge 0 \). Consequently, this gives a smaller adjusted estimator \( (1 - n^{ - 1} c_{\text{V}}^{2} )\bar{x} \), which is closer to the small population value than the usual estimator \( \bar{x} \). Since \( 1 - n^{ - 1} c_{\text{V}}^{2} \) is an asymptotic result, \( 1 - n^{ - 1} c_{\text{V}}^{2} \) can become negative with finite n. In this case, \( 1 - n^{ - 1} c_{\text{V}}^{2} \) may be replaced by zero.
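The truncation rule described above can be written in a few lines. This is a minimal sketch, assuming that the squared coefficient of variation \( c_{\text{V}}^{2} \) is supplied by the user; in practice it is an unknown population quantity, and how it would be estimated is not specified here. The function name `adjusted_mean` is hypothetical.

```python
def adjusted_mean(xbar, cv2, n):
    """Shrinkage estimator (1 - cv2 / n) * xbar with the factor truncated at zero.

    xbar: sample mean
    cv2:  squared coefficient of variation phi0 / mu0**2 (assumed given)
    n:    sample size
    """
    factor = max(0.0, 1.0 - cv2 / n)   # replace a negative factor by zero
    return factor * xbar

print(adjusted_mean(2.0, 1.0, 10))     # mild shrinkage when cv2 is small relative to n
print(adjusted_mean(0.1, 50.0, 10))    # factor would be negative, so it is set to zero
```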

When k for \( {\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{max} }} \) and \( \,{\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{min} }} \) is shortened as a scalar only for \( \phi_{0} \), the solutions become similar to those in Example 4.2 shown next.

Example 4.2

The parameters \( \mu \) and \( \phi \,\,( = \sigma^{2} ) \) with \( {\mathbf{k}} = k{\mathbf{1}}_{(2)} \) (a non-canonical parameter vector with its partially biased MLE).

This is given for illustration using \( {\mathbf{k}} = k{\mathbf{1}}_{(2)} \). Note that generally the equal value k for \( \mu \) and \( \phi = \sigma^{2} \) is meaningless. However, when \( c_{\text{V}} \) is known to be proportional to \( 1/\sigma \) with \( \mu > 0 \), the equal k can be employed.

1. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{S}}\hbox{max} }} \) (see (34)):

$$ \begin{aligned} k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\begin{array}{*{20}c} {(\mu_{0} ,\phi_{0} )} \\ {} \\ \end{array} {\left( {\begin{array}{*{20}c} {1/\phi _{0} } & 0 \\ 0 & {1/(2\phi _{0}^{2} )} \\ \end{array} } \right)} \left( \begin{aligned} \mu_{0} \hfill \\ \phi_{0} \hfill \\ \end{aligned} \right)}} \hfill \\ \times \left\{ {\begin{array}{*{20}c} {(\mu_{0} ,\phi_{0} )} \\ {} \\ \end{array} {\left( {\begin{array}{*{20}l} 0 \hfill & { - 1/\phi _{0}^{2} } \hfill & 0 \hfill & 0 \hfill \\ { - 1/\phi _{0}^{2} } \hfill & 0 \hfill & 0 \hfill & { - 1/\phi _{0}^{3} } \hfill \\ \end{array} } \right)} (\phi_{0} ,0,0,2\phi_{0}^{2} )^{{\prime}} + 2} \right\} \hfill \\ = \left( {\frac{{\mu_{0}^{2} }}{{\phi_{0} }} + \frac{1}{2}} \right)^{ - 1} \left\{ {\phi_{0} \left( { - \frac{1}{{\phi_{0} }} - \frac{2}{{\phi_{0} }}} \right) + 2} \right\} = - \frac{{2\phi_{0} }}{{2\mu_{0}^{2} + \phi_{0} }} = - \frac{{2c_{\text{v}}^{2} }}{{2 + c_{\text{v}}^{2} }} < 0. \hfill \\ \end{aligned} $$(90)

2. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{max} }} \) (see (34)): Using \( \frac{{\partial {\varvec{\upbeta}}_{1}^{\prime} }}{{\partial {\varvec{\uptheta}}_{0} }} = \left( {\begin{array}{*{20}c} 0 & 0 \\ 0 & { - 1} \\ \end{array} } \right) \),

$$ \begin{aligned} k_{{{\text{B}}\hbox{max} }} = \frac{1}{{\begin{array}{*{20}c} {(0, - \phi_{0} )} \\ {} \\ \end{array} {\left( {\begin{array}{*{20}c} {1/\phi _{0} } & 0 \\ 0 & {1/(2\phi _{0}^{2} )} \\ \end{array} } \right)} \left( \begin{aligned} \,\,\,0 \hfill \\ - \phi_{0} \hfill \\ \end{aligned} \right)}} \hfill \\ \times \left\{ {\begin{array}{*{20}c} {(0, - \phi_{0} )} \\ {} \\ \end{array} {\left( {\begin{array}{*{20}l} 0 \hfill & { - 1/\phi _{0}^{2} } \hfill & 0 \hfill & 0 \hfill \\ { - 1/\phi _{0}^{2} } \hfill & 0 \hfill & 0 \hfill & { - 1/\phi _{0}^{3} } \hfill \\ \end{array} } \right)} (\phi_{0} ,0,0,2\phi_{0}^{2} )^{{\prime}} - 1} \right\} \hfill \\ = 2\left\{ {\phi_{0} \left( {\frac{1}{{\phi_{0} }} + \frac{2}{{\phi_{0} }}} \right) - 1} \right\} = 4. \hfill \\ \end{aligned} $$(91)

3. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ \begin{aligned} k_{{{\text{S}}\hbox{min} }} = \frac{1}{{{\varvec{\uptheta}}_{0}^{\prime} {\varvec{\uptheta}}_{0} }}({\mathbf{1}}_{{_{(2)} }}^{\prime} {\varvec{\upbeta}}_{2} + {\varvec{\uptheta}}_{0}^{\prime} {\varvec{\upbeta}}_{1} ) \hfill \\ = \frac{1}{{(\mu_{0} ,\phi_{0} ) (\mu_{0} ,\phi_{0} )^{{\prime}}}}\{ {\mathbf{1}}_{{_{(2)} }}^{\prime} (\phi_{0} ,2\phi_{0}^{2} )^{{\prime}} + (\mu_{0} ,\phi_{0} )(0, - \phi_{0} )^{{\prime}} \} = \frac{{\phi_{0} (1 + \phi_{0} )}}{{\mu_{0}^{2} + \phi_{0}^{2} }} > 0. \hfill \\ \end{aligned} $$(92)

4. \( \,{\hat{\varvec{\uptheta }}}_{{{\text{B}}\hbox{min} }} \) (see (41)): Using \( {\varvec{\upbeta}}_{1} = (0, - \phi_{0} )^{\prime} \) and

$$ \begin{aligned} n\,{\text{acov}}_{{\theta_{0} }} ({\hat{\varvec{\uptheta }}}_{\text{ML}} ,{\hat{\varvec{\upbeta}}}_{1}^{\prime} ) = \left( {\begin{array}{*{20}c} 0 & 0 \\ 0 & { - n\,{\text{avar}}(\hat{\phi }_{\text{ML}} )} \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} 0 & 0 \\ 0 & { - 2\phi _{0}^{2} } \\ \end{array} } \right) , \hfill \\ k_{{{\text{B}}\hbox{min} }} = \frac{1}{{{\varvec{\upbeta}}_{1}^{\prime} {\varvec{\upbeta}}_{1} }}( - 2\phi_{0}^{2} + {\varvec{\upbeta}}_{1}^{\prime} {\varvec{\upbeta}}_{1} ) = \frac{1}{{\phi_{0}^{2} }}( - 2\phi_{0}^{2} ) + 1 = - 1. \hfill \\ \end{aligned} $$(93)

Note that \( - \{ n/(n + 1)\} k_{{{\text{B}}\hbox{min} }} = n/(n + 1) \) is the exact solution as explained in Sect. 1.

Example 4.3

The mean parameter \( \mu \) when the variance \( \phi \,\,( = \sigma^{2} ) \) is known (the canonical and expectation parameter).

We have

Then,

1. \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (36)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\mu_{0}^{2} \phi_{0}^{ - 1} }} = \frac{{\phi_{0} }}{{\mu_{0}^{2} }} = c_{\text{v}}^{2} . $$(95)

2. \( \,\hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \frac{1}{{\mu_{0}^{2} }}\{ n\,\hbox{var}(\hat{\theta }_{\text{ML}} ) + \mu_{0} \times 0\} = \frac{{\phi_{0} }}{{\mu_{0}^{2} }} = c_{\text{v}}^{2} . $$(96)

We see that the optimal coefficients are the same. Note that the results hold even under model misspecification, since \( n\,\hbox{var}(\bar{x}) = \phi_{0} \) holds under an arbitrary distribution whose variance exists, with \( {\varvec{\Gamma}} = - {\varvec{\Lambda}} = \phi_{0}^{ - 1} \).

Example 4.4

The variance parameter \( \phi \,\,( = \sigma^{2} ) \) when the mean \( \mu \) is known (a non-canonical parameter with its unbiased MLE).

Basic results are

where the last equation is the same as (87). Then,

1. \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (34)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\phi_{0}^{2} (2\phi_{0}^{2} )^{ - 1} }}\{ \phi_{0} ( - \phi_{0}^{3} )^{ - 1} 2\phi_{0}^{2} + 1\} = - 2. $$(98)

2. \( \,\hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \frac{1}{{\phi_{0}^{2} }}\{ n\,{\text{avar}}(\hat{\theta }_{\text{ML}} ) + \phi_{0} \times 0\} = 2. $$(99)

Example 4.5

The precision parameter \( \theta = 1/\sigma^{2} = 1/\phi \), when the mean \( \mu \) is known (the canonical parameter with its biased MLE; Ogasawara 2013, Example 5).

The vector of the canonical parameters, when \( \mu \) and \( \phi \) are unknown, is \( \{ \mu /\phi ,\,\, - 1/(2\phi )\}^{\prime} \) with the vector of sufficient statistics \( (x^{*} ,x^{*2} )^{\prime} \). Note, however, that the use of the parameter \( \mu /\phi \) may be limited. Let \( \theta = 1/\phi \). Then, \( \theta \) is the precision parameter (the reciprocal of variance). When \( \mu_{0} \) is known, define \( \theta (\mu_{0} ,\,\, - 0.5)^{\prime} \equiv \theta {\mathbf{d}} \), where \( \theta \) is a single parameter with the sufficient statistic

whose mean over observations is \( - \frac{1}{2}n^{ - 1} \sum\nolimits_{j = 1}^{n} {(x_{j}^{*} - \mu_{0} )^{2} } + \frac{{\mu_{0}^{2} }}{2} \).

Basic results are

(Ogasawara 2014a, Example 5). Then,

1. \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (36)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\theta_{0}^{2} (\phi_{0}^{2} /2)}} = 2. $$(102)

2. \( \,\hat{\theta }_{{{\text{B}}\hbox{max} }} \) (see (34)): Using \( \beta_{1} = 2\theta_{0} \),

$$ k_{{{\text{B}}\hbox{max} }} = \frac{2}{{(2\theta_{0} )^{2} (\phi_{0}^{2} /2)}} = 1. $$(103)

3. \( \,\hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \beta_{2} \theta_{0}^{ - 2} + \beta_{1} \theta_{0}^{ - 1} = 2\theta_{0}^{2} \theta_{0}^{ - 2} + 2\theta_{0} \theta_{0}^{ - 1} = 4. $$(104)

4. \( \,\hat{\theta }_{{{\text{B}}\hbox{min} }} \) (see (46)):

$$ k_{{{\text{B}}\hbox{min} }} = 5 - 2[{\text{k/ss}}] = 2. $$(105)

The result of (102) is an expected one from (98) in Example 4.4. Note that the distribution of \( x^{*} \) is the same in Examples 4.4 and 4.5 with different parametrization, i.e., \( \theta = \phi \) or \( \theta = 1/\phi \). Then, from (98), \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) in Example 4.5 is asymptotically equal to the reciprocal of \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) in Example 4.4, which gives, for Example 4.5, \( \,\{ (1 + 2n^{ - 1} )\hat{\phi }_{\text{ML}} \}^{ - 1} = (1 - 2n^{ - 1} )\hat{\theta }_{\text{ML}} + O_{p} (n^{ - 2} ) \) yielding (102).
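The size of the remainder in the last expansion can be checked directly. The sketch below compares the two multiplicative factors, \( (1 + 2n^{ - 1} )^{ - 1} \) and \( 1 - 2n^{ - 1} \), which multiply \( \hat{\theta }_{\text{ML}} = 1/\hat{\phi }_{\text{ML}} \); the function name is an illustrative assumption.

```python
def reciprocal_gap(n):
    """Difference between (1 + 2/n)^(-1) and its first-order expansion 1 - 2/n."""
    return 1.0 / (1.0 + 2.0 / n) - (1.0 - 2.0 / n)

for n in (10, 100, 1000):
    # n^2 times the gap equals 4 / (1 + 2/n), so the gap is of order n^(-2)
    print(n, n ** 2 * reciprocal_gap(n))
```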

4.5 Misspecified models

Example 5.1

The misspecified exponential distribution when the gamma distribution with \( \,\alpha \ne 1 \) is true.

This example is the case when one of the parameters in a distribution is fixed at an incorrect value. In this example, the density

is used irrespective of the situation where the true density is

Then,

The dispersion ratio defined earlier is

Since \( \beta_{1} = \lambda_{1} /\alpha^{2} \) depends on \( \alpha \) and \( \lambda_{1} \), only \( k_{{{\text{S}}\hbox{max} }} \) and \( k_{{{\text{S}}\hbox{min} }} \) are considered.

1. \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (26)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{{{\text{tr}}({\varvec{\Gamma \Lambda }}^{ - 1} )}}{{{\varvec{\uptheta}}_{0}^{\prime} {\varvec{\Lambda \uptheta }}_{0} }} = \frac{{(\alpha /\lambda_{1}^{2} )( - i_{0} )^{ - 1} }}{{\theta_{0}^{2} ( - i_{0} )}} = \frac{1}{\alpha } \ne 1. $$(110)

2. \( \,\hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \frac{1}{{\theta_{0}^{2} }}\{ n\,{\text{avar}}_{\text{T}} (\hat{\theta }_{\text{ML}} ) + \theta_{0}^{2} \alpha^{ - 1} \} = \frac{1}{{\lambda_{0}^{2} }} \times \frac{{\alpha \lambda_{0}^{4} }}{{\lambda_{1}^{2} }} + \alpha^{ - 1} = \frac{2}{\alpha } \ne 2 . $$(111)

It is found that \( k_{{{\text{S}}\hbox{max} }} \) is equal to the dispersion ratio, which is not equal to the unit value given by the true model. Similarly, \( k_{{{\text{S}}\hbox{min} }} \) in (111) is two times the dispersion ratio, which is not equal to 2 given by the true model (see (56)).

Example 5.2

The misspecified Poisson distribution when the Bernoulli distribution is true.

This example is the case when a distribution is used under the situation where the true distribution is a different type of distribution. In this example, the source or expectation parameter in the Poisson distribution is used:

when the true distribution is

Basic results are

Note that the dispersion ratio \( \pi_{0} (1 - \pi_{0} )/\pi_{0} = 1 - \pi_{0} \,\,(\pi_{0} \ne 0) \) is smaller than 1. Then,

1. \( \,\hat{\theta }_{{{\text{S}}\hbox{max} }} \) (see (26)):

$$ k_{{{\text{S}}\hbox{max} }} = \frac{1}{{\pi_{0}^{2} ( - \pi_{0}^{ - 1} )}}\left\{ {\pi_{0} \left( { - \frac{{1 - \pi_{0} }}{{\pi_{0}^{2} }}} \right)( - \pi_{0} ) - (1 - \pi_{0} )} \right\} = 0. $$(115)

2. \( \,\hat{\theta }_{{{\text{S}}\hbox{min} }} \) (see (41)):

$$ k_{{{\text{S}}\hbox{min} }} = \frac{1}{{\theta_{0}^{2} }}\{ n\,\hbox{var}_{\text{T}} (\hat{\theta }_{\text{ML}} ) + \theta_{0} \times 0\} = \frac{1}{{\pi_{0}^{2} }}\pi_{0} (1 - \pi_{0} ) = \frac{{1 - \pi_{0} }}{{\pi_{0} }}. $$(116)

Recall that the zero value of \( k_{{{\text{S}}\hbox{max} }} \) holds when the Poisson distribution is true (see (70)).

5 Different criteria

5.1 Comparison of optimal coefficients using different criteria

In Sect. 4, the tendency of the relatively large amount of correction by \( k_{{{\text{A}}\hbox{min} }} \) over that by \( k_{{{\text{A}}\hbox{max} }} \) was observed. The difference can be partially explained using the case of a single parameter.

Theorem 1

For a single parameter under correct model specification

Proof

From (34) in Result 4 and (41) in Result 6, we have

where m = M in the case of a single parameter. Since \( \beta_{1} = i_{0}^{ - 2} n{\text{E}}_{{\theta_{0} }} \left( {{{\partial \bar{l}}}/{{\partial \theta_{0} }} \otimes m} \right) - \{{1}/{{(2i_{0}^{2}) }}\}\left( { - {{\partial^{3} \bar{l}^{*} }}/{{\partial \theta_{0}^{3} }}} \right) \) (see (18)) and \( n\,{\text{acov}}_{{\theta_{0} }} (\hat{\theta }_{\text{ML}} ,\hat{\alpha }) = i_{0}^{ - 1} {{\partial \alpha_{0} }}/{{\partial \theta_{0} }} \), (118) becomes

Let \( {\text{sign}}( \cdot ) = - 1,\,\,\,0,\,\,\,1 \) depending on the sign of the real quantity in parentheses, then the following result is obtained.

Corollary 1

For a single canonical parameter in the exponential family under correct model specification

Proof

From (34) with the vanishing m and (41) as in Theorem 1, we have

Corollary 1 is a special case of Theorem 1 and is alternatively obtained by \( \beta_{1} = - i_{0}^{{({\text{D1)}}}} /(2i_{0}^{2} ) \) with the vanishing m in (18). Using Corollary 1, the following results are obtained.

Corollary 2

Under the same conditions as in Corollary 1 ,

The second equation of (123) shows that \( k_{{{\text{B}}\hbox{min} }} \), when available, is always greater than \( k_{{{\text{B}}\hbox{max} }} \) by 1. The first equation shows the following result.

Corollary 3

Under the same conditions as in Corollary 1 , the relative size of \( k_{{{\text{S}}\hbox{min} }} \) and \( k_{{{\text{S}}\hbox{max} }} \) is given as follows:

Proof

It is known that

(Ogasawara 2013, Eq. 3.1). Using (125) and Corollary 2, (124) follows. Q.E.D.

In Example 1.1 for the gamma distribution, \( \beta_{1} = \theta_{0} /\alpha \) gives \( \beta_{1} /\theta_{0} = 1/\alpha > 0 \) yielding \( k_{{{\text{S}}\hbox{min} }} > k_{{{\text{S}}\hbox{max} }} \). In Example 2.1 for the Poisson distribution, \( \beta_{1} = - e^{{ - \theta_{0} }} /2 < 0 \) (see (64)). However, \( \theta_{0} ( = \log \lambda_{0} ) \) can be negative, 0 or positive (see the comment after (68)), giving an undetermined property of (120) or relative size. In Example 3.1 for the Bernoulli distribution, \( \beta_{1} /\theta_{0} = - {{(1 - 2\pi_{0}) }}/\{{{2\pi_{0} (1 - \pi_{0} )}}\}\eta_{0}^{ - 1} > 0\,\,\,(\pi_{0} \ne 0,\,\,\,0.5,\,\,\,1) \), which gives \( k_{{{\text{S}}\hbox{min} }} > k_{{{\text{S}}\hbox{max} }} \). In Example 4.3 for the univariate normal distribution, \( \beta_{1} = 0 \) and \( {\text{sk}}(x^{*} ) = 0 \), giving \( k_{{{\text{S}}\hbox{min} }} = k_{{{\text{S}}\hbox{max} }} \). On the other hand, in Example 4.5 for the same normal distribution under a condition different from that in Example 4.3, \( {\text{sk}}(s_{d} ) = - \sqrt 8 < 0 \), where \( s_{d} \) corresponds to \( x^{*} \) in Example 4.3. Since in Example 4.5 \( \theta_{0} = \phi_{0}^{ - 1} > 0 \), (124) gives \( k_{{{\text{S}}\hbox{min} }} > k_{{{\text{S}}\hbox{max} }} \) (see (102) and (104)).
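The relations discussed above can be checked against the coefficients already obtained for two canonical-parameter examples. The sketch below assumes, based on (67) and on the remark following Corollary 2, that the two identities in question are \( k_{{{\text{S}}\hbox{min} }} - k_{{{\text{S}}\hbox{max} }} = \beta_{1} /\theta_{0} \) and \( k_{{{\text{B}}\hbox{min} }} - k_{{{\text{B}}\hbox{max} }} = 1 \); the helper name `identities_hold` and the choice \( \theta_{0} = 1 \) for the Poisson case are illustrative.

```python
import math

def identities_hold(k_smax, k_smin, k_bmax, k_bmin, beta1_over_theta0, tol=1e-12):
    """Check k_Smin - k_Smax = beta1/theta0 and k_Bmin - k_Bmax = 1 (assumed identities)."""
    first = abs((k_smin - k_smax) - beta1_over_theta0) < tol
    second = abs((k_bmin - k_bmax) - 1.0) < tol
    return first and second

theta0 = 1.0  # illustrative value of log(lambda0) for Example 2.1
poisson = dict(
    k_smax=1.0 / (theta0 ** 2 * math.exp(theta0)),                 # (65)
    k_smin=math.exp(-theta0) / theta0 * (1.0 / theta0 - 0.5),      # (67)
    k_bmax=2.0,                                                    # (66)
    k_bmin=3.0,                                                    # (68)
    beta1_over_theta0=-math.exp(-theta0) / (2.0 * theta0),
)
# Example 4.5: (102)-(105) with beta1 = 2 * theta0
precision = dict(k_smax=2.0, k_smin=4.0, k_bmax=1.0, k_bmin=2.0, beta1_over_theta0=2.0)

print(identities_hold(**poisson), identities_hold(**precision))
```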

Recall that the term with \( i_{0}^{{({\text{D}}1)}} \) or \( - \partial^{3} \bar{l}^{*} /\partial \theta_{0}^{3} \) comes from the last term except the remainder term on the right-hand side of the last equation of (12). Since the other terms including k have the common factor \( - \partial^{2} \bar{l}^{*} /\partial {\varvec{\uptheta}}_{0} \partial {\varvec{\uptheta}}_{0}^{\prime} = O(1) \), we find that in the case of a single parameter, the minimization of \( {\text{MSE}}_{{ \to O(n^{ - 2} )}} \) is equal to the maximization of the asymptotic predictive expected log-likelihood up to order \( O(n^{ - 2} ) \) when we neglect the last term with the third derivative.

In the case of multiple parameters, these two criteria do not give the same optimal sets of coefficients k. This suggests the following new distances to be minimized.

Definition 1

The generalized mean square error and the scale-free mean square error up to order \( O(n^{ - 2} ) \) are defined as:

and

respectively.

It is obvious that (126) is a Mahalanobis distance under correct model specification, which, like (127), is also scale free. In (126) and (127), \( {\varvec{\Lambda}} \) is an unknown non-stochastic quantity like the target vector \( {\varvec{\uptheta}}_{0} \). Minimization of (126) and (127) can be done in a similar manner as before, with explicit solutions minimizing the corresponding quadratic forms. Note that minimizing \( {\text{GMSE}}_{{ \to O(n^{ - 2} )}} \) is equivalent to maximizing the asymptotic predictive expected log-likelihood up to order \( O(n^{ - 2} ) \) neglecting the term of the third derivative but considering the off-diagonal elements of the second-derivative matrix with the different diagonal elements in the case of multiple parameters. So, the optimal coefficients obtained by this criterion may be situated between those obtained by minimizing \( {\text{TMSE}}_{{ \to O(n^{ - 2} )}} \) and those obtained by maximizing the asymptotic predictive expected log-likelihood.

5.2 Composite correction vector

So far, the functional form of \( {\hat{\varvec{\upalpha}}} = {\varvec{\upalpha}}({\hat{\varvec{\uptheta }}}_{\text{ML}}) \) is assumed to be given, whose typical cases are \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) and \( {\hat{\varvec{\upbeta}}}_{1} ( = {\varvec{\upbeta}}_{1} ({\hat{\varvec{\uptheta }}}_{\text{ML}})) \). Let \( {\hat{\varvec{\upalpha}}}^{(1)} \) and \( {\hat{\varvec{\upalpha}}}^{(2)} \) be two arbitrary vectors similar to \( {\hat{\varvec{\upalpha}}} \). Then, define the composite vector using a fixed weight w as follows:

The vector \( {\hat{\varvec{\upalpha}}}_{w} \) can be used as a special case of \( {\hat{\varvec{\upalpha}}} \). An example of \( {\hat{\varvec{\upalpha}}}_{w} \) is \( {\hat{\varvec{\upalpha}}}_{w} = w{\hat{\varvec{\uptheta }}}_{\text{ML}} + (1 - w){\hat{\varvec{\upbeta}}}_{1} \), which is expected to yield an intermediate effect between those when \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\uptheta }}}_{\text{ML}} \) for shrinkage and when \( {\hat{\varvec{\upalpha}}} = {\hat{\varvec{\upbeta}}}_{1} \) for bias adjustment.
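The weighted combination in the example above can be sketched as follows. This is an illustration only: the function name `composite_alpha` and the numerical values are hypothetical, and the single-parameter Poisson case of Example 2.1, with \( \hat{\theta }_{\text{ML}} = \log \bar{x} \) and \( \hat{\beta }_{1} = - e^{{ - \hat{\theta }_{\text{ML}} }} /2 \), is used merely to supply concrete inputs.

```python
import math

def composite_alpha(alpha1, alpha2, w):
    """Element-wise composite w * alpha1 + (1 - w) * alpha2 for a fixed weight w."""
    if len(alpha1) != len(alpha2):
        raise ValueError("alpha1 and alpha2 must have the same length")
    return [w * a + (1.0 - w) * b for a, b in zip(alpha1, alpha2)]

xbar = 4.0                                     # hypothetical sample mean
theta_hat = [math.log(xbar)]                   # MLE of the canonical parameter
beta1_hat = [-math.exp(-theta_hat[0]) / 2.0]   # plug-in estimate of beta_1

for w in (0.0, 0.5, 1.0):
    print(w, composite_alpha(theta_hat, beta1_hat, w))
```

With w = 1 the composite vector reduces to the shrinkage case and with w = 0 to the bias-adjustment case, so intermediate weights interpolate between the two.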

References

Akaike H (1973) Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csáki F (eds) Proceedings of the 2nd international symposium on information theory. Akadémiai Kiadó, Budapest, pp 267–281

Bjornstad JF (1990) Predictive likelihood: a review. Stat Sci 5:242–265

DeGroot MH, Schervish MJ (2002) Probability and statistics, 3rd edn. Addison-Wesley, Boston

Fisher RA (1956) Statistical methods and scientific inference. Oliver and Boyd, Edinburgh

Giles DEA, Rayner AC (1979) The mean squared errors of the maximum likelihood and natural-conjugate Bayes regression estimators. J Econometr 11:319–334

Gruber MHJ (1998) Improving efficiency by shrinkage: The James-Stein and ridge regression estimators. Marcel Dekker, New York

Hinkley D (1979) Predictive likelihood. Ann Stat 7:718–728

Konishi S, Kitagawa G (1996) Generalized information criteria in model selection. Biometrika 83:875–890

Konishi S, Kitagawa G (2003) Asymptotic theory for information criteria in model selection—functional approach. J Stat Plan Inference 114:45–61

Konishi S, Kitagawa G (2008) Information criteria and statistical modeling. Springer, New York

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22:79–86

Lawless JF, Fredette M (2005) Frequentist prediction intervals and predictive distributions. Biometrika 92:529–542

Lejeune M, Faulkenberry GD (1982) A simple predictive density function. J Am Stat Assoc 77:654–657

Leonard T (1982) Comment (on Lejeune & Faulkenberry, 1982). J Am Stat Assoc 77:657–658

Ogasawara H (2010) Asymptotic expansions for the pivots using log-likelihood derivatives with an application in item response theory. J Multivar Anal 101:2149–2167

Ogasawara H (2013) Asymptotic cumulants of the estimator of the canonical parameter in the exponential family. J Stat Plan Inference 143:2142–2150

Ogasawara H (2014a) Supplement to the paper “Asymptotic cumulants of the estimator of the canonical parameter in the exponential family”. Econ Rev (Otaru University of Commerce), 65 (2 & 3), 3–16. Permalink: http://hdl.handle.net/10252/5399. Accessed 20 Nov 2016

Ogasawara H (2014b) Optimization of the Gaussian and Jeffreys power priors with emphasis on the canonical parameters in the exponential family. Behaviormetrika 41:195–223

Ogasawara H (2015) Bias adjustment minimizing the asymptotic mean square error. Commun Stat Theory Methods 44:3503–3522

Ogasawara H (2016) Optimal information criteria minimizing their asymptotic mean square errors. Sankhyā B 78:152–182

Ogasawara H (2017) Asymptotic cumulants of some information criteria. J Jpn Soc Comput Stat (to appear)

Sakamoto Y, Ishiguro M, Kitagawa G (1986) Akaike information criterion statistics. Reidel, Dordrecht

Stone M (1977) An asymptotic equivalence of choice of model by cross-validation and Akaike’s criterion. J R Stat Soc B 39:44–47

Takeuchi K (1976) Distributions of information statistics and criteria of the goodness of models. Math Sci 153:12–18 (in Japanese)

Takezawa K (2012) A revision of AIC for normal error models. Open J Stat 2:309–312

Takezawa K (2014) Estimation of the exponential distribution from a viewpoint of prediction. In: Proceedings of 2014 Japanese Joint Statistical Meeting, 305. University of Tokyo, Tokyo, Japan (in Japanese)

Takezawa K (2015) Estimation of the exponential distribution in the light of future data. Br J Math Comput Sci 5:128–132

Acknowledgements

This work was partially supported by a Grant-in-Aid for Scientific Research from the Japanese Ministry of Education, Culture, Sports, Science and Technology (JSPS KAKENHI, Grant No. 26330031).

Additional information

Communicated by Shuichi Kawano.

Appendix

1.1 The asymptotic predictive expected log-likelihood for the maximum likelihood estimator

In this appendix, \( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} )_{{ \to O(n^{ - 2} )}} \) in (14) is obtained, where

\( {\text{E}}_{\text{T}} (\hat{\bar{l}}_{\text{ML}}^{*} ) = {\text{E}}_{\text{T}} \{ \bar{l}^{*} ({\hat{\varvec{\uptheta }}}_{\text{ML}} )\} = \int_{{R({\mathbf{X}})}} {\bar{l}^{*} \{ {\varvec{\uptheta}}_{\text{ML}} ({\mathbf{X}})\} f_{\text{T}} ({\mathbf{X}}|{\varvec{\upzeta}}_{0} ){\text{d}}{\mathbf{X}}} \) (see (10)). For this expectation, we use the expansion of \( {\hat{\varvec{\uptheta }}}_{\text{ML}} \) by Ogasawara (2010, p. 2151) as follows:

where \( {\text{v}}( \cdot ) \) is the vectorizing operator taking the non-duplicated elements of a symmetric matrix and \( {\text{v}}^{{\prime}} ( \cdot ) = \{ {\text{v}}( \cdot )\}^{{\prime}} \).

Using (129), the matrices \( {\varvec{\Lambda}}^{(2 - j)} \,\,(j = 1,2) \) and \( {\varvec{\Lambda}}^{(3 - j)} \,\,(j = 1, \ldots ,4) \) are implicitly defined by

The expectation to be derived is

In (131), the asymptotic expectation is derived term by term. In the following, the notation, e.g., \( ({\varvec{\Lambda}}^{(2 - 1)} )_{(e:ab,c,d)} \) indicates an element of \( {\varvec{\Lambda}}^{(2 - 1)} \) corresponding to the e-th row and the column denoted by “ab, c, d” which corresponds to \( ({\mathbf{M}})_{ab} \,\,(a \ge b),\,\,\,\partial \bar{l}/\partial ({\varvec{\uptheta}}_{0} )_{c} \) and \( \partial \bar{l}/\partial ({\varvec{\uptheta}}_{0} )_{d} \) in \( {\mathbf{l}}_{0}^{(2 - 1)} \). The notation, e.g., \( \sum\nolimits_{(g,\,h)}^{(2)} {} \) indicates the summation of two terms exchanging g and h; \( \sum\limits_{a \ge b}^{{}} {( \cdot )} \equiv \sum\limits_{b = 1}^{q} {\sum\limits_{a = b}^{q} {( \cdot )} } \); and \( \lambda^{ab} = ({\varvec{\Lambda}}^{ - 1} )_{ab} \).

1.

$$ \begin{aligned} {\text{E}}_{\text{T}} \left\{ {\frac{1}{2}\frac{{\partial^{2} \bar{l}^{*} }}{{(\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle 2 \rangle } }}({\hat{\varvec{\uptheta }}}_{\text{ML}} - {\varvec{\uptheta}}_{0} )^{ \langle 2 \rangle } } \right\} \hfill \\ = \frac{1}{2}n^{ - 1} {\text{vec}}^{{\prime}} ({\varvec{\Lambda}})n{\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(1)} {\mathbf{l}}_{0}^{(1)} )^{ \langle 2 \rangle } \} \hfill \\ \,\,\, + \frac{1}{2}n^{ - 2} {\text{vec}}^{{\prime}} ({\varvec{\Lambda}})\bigg[ {2n^{2} } {\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(2)} {\mathbf{l}}_{0}^{(2)} ) \otimes ({\varvec{\Lambda}}^{(1)} {\mathbf{l}}_{0}^{(1)} )\} \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, + 2n^{2} {\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(3)} {\mathbf{l}}_{0}^{(3)} ) \otimes ({\varvec{\Lambda}}^{(1)} {\mathbf{l}}_{0}^{(1)} )\} + n^{2} {{\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(2)} {\mathbf{l}}_{0}^{(2)} )^{ \langle 2 \rangle } \} } \bigg] \hfill \\ \,\,\, + O(n^{ - 3} ) \hfill \\ \end{aligned} $$(132)

where, e.g., \( \mathop [\limits_{{({\text{A}})}} \cdot \mathop ]\limits_{{({\text{A}})}} \) is for ease of finding correspondence.

2.

$$ \begin{aligned} {\text{E}}_{\text{T}} \left\{ {\frac{1}{6}\frac{{\partial^{3} \bar{l}^{*} }}{{(\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle 3 \rangle } }}({\hat{\varvec{\uptheta }}}_{\text{ML}} - {\varvec{\uptheta}}_{0} )^{ \langle 3 \rangle } } \right\} \hfill \\ = n^{ - 2} \frac{{\partial^{3} \bar{l}^{*} }}{{(\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle 3 \rangle } }}\left[ {\frac{{n^{2} }}{6}{\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(1)} {\mathbf{l}}_{0}^{(1)} )^{ \langle 3 \rangle } \} + \frac{{n^{2} }}{2}{\text{E}}_{\text{T}} \{ ({\varvec{\Lambda}}^{(2)} {\mathbf{l}}_{0}^{(2)} ) \otimes ({\varvec{\Lambda}}^{(1)} {\mathbf{l}}_{0}^{(1)} )^{ \langle 2 \rangle } \} } \right] \hfill \\ \,\,\, + O(n^{ - 3} ) \hfill \\ \end{aligned} $$(133)

3.

$$ \begin{aligned} {\text{E}}_{\text{T}} \left\{ {\frac{1}{24}\frac{{\partial^{4} \bar{l}^{*} }}{{(\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle 4 \rangle } }}({\hat{\varvec{\uptheta }}}_{\text{ML}} - {\varvec{\uptheta}}_{0} )^{ \langle 4 \rangle } } \right\} \hfill \\ \,\,\,\,\,\,\,\, = \frac{{n^{ - 2} }}{8}{\text{vec}}^{{\prime}} ({\varvec{\Lambda}}^{ - 1} {\varvec{\Gamma \Lambda }}^{ - 1} )\frac{{\partial^{4} \bar{l}^{*} }}{{(\partial {\varvec{\uptheta}}_{0} )^{ \langle 2 \rangle } (\partial {\varvec{\uptheta}}_{0}^{\prime} )^{ \langle 2 \rangle } }}\,{\text{vec}}({\varvec{\Lambda}}^{ - 1} {\varvec{\Gamma \Lambda }}^{ - 1} )\,\, +\, O(n^{ - 3} ). \hfill \\ \end{aligned} $$(134)

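In items 1, 2 and 3, when the model is true, \( {\text{E}}_{\text{T}} ( \cdot ) = {\text{E}}_{{\theta_{0} }} ( \cdot ) \) and \( - {\varvec{\Lambda}} = {\varvec{\Gamma}} = {\mathbf{I}}_{0} \). In particular, the term of order \( O(n^{ - 1} ) \) becomes

$$ \frac{1}{2}n^{ - 1} {\text{vec}}^{{\prime}} ({\varvec{\Lambda}}){\text{vec}}({\varvec{\Lambda}}^{ - 1} {\varvec{\Gamma \Lambda }}^{ - 1} ) = \frac{1}{2}n^{ - 1} {\text{tr}}({\varvec{\Lambda}}^{ - 1} {\varvec{\Gamma}}) = - \frac{1}{2}n^{ - 1} q. $$(135)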

That is, the expectation is asymptotically smaller than \( \bar{l}^{*} ({\varvec{\uptheta}}_{0} ) = \bar{l}_{0}^{*} \) by \( n^{ - 1} q/2 \) up to this order.
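The size of this first-order gap can be checked numerically. The following is a minimal Monte Carlo sketch, not part of the original derivation, assuming a correctly specified normal model \( {\text{N}}(\mu ,\sigma^{2} ) \) with \( q = 2 \), true values \( \mu_{0} = 0 \) and \( \sigma_{0}^{2} = 1 \), and hypothetical function names. It averages \( \bar{l}^{*} ({\hat{\varvec{\uptheta }}}_{\text{ML}} ) - \bar{l}_{0}^{*} \) over replications and compares the result with \( - n^{ - 1} q/2 \); agreement is expected only up to terms of order \( O(n^{ - 2} ) \) and simulation error.

```python
import math
import random


def expected_loglik(mu, sigma2, mu0=0.0, sigma2_0=1.0):
    """E_T[log f(X* | mu, sigma2)] for a future X* ~ N(mu0, sigma2_0)."""
    return (-0.5 * math.log(2.0 * math.pi * sigma2)
            - (sigma2_0 + (mu0 - mu) ** 2) / (2.0 * sigma2))


def simulate_gap(n=100, reps=5000, seed=0):
    """Monte Carlo mean of l*(theta_ML) - l*(theta_0) under the true N(0, 1)."""
    rng = random.Random(seed)
    l0 = expected_loglik(0.0, 1.0)
    total = 0.0
    for _ in range(reps):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        mean = sum(x) / n
        var_ml = sum((xi - mean) ** 2 for xi in x) / n  # ML variance (divisor n)
        total += expected_loglik(mean, var_ml) - l0
    return total / reps


n, q = 100, 2
gap = simulate_gap(n=n)
print(f"simulated gap: {gap:.5f}; first-order value -q/(2n): {-q / (2 * n):.5f}")
```

The simulated gap is typically slightly more negative than \( - n^{ - 1} q/2 \), which is consistent with the neglected higher-order terms in (132) to (134).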

About this article

Ogasawara, H. A family of the adjusted estimators maximizing the asymptotic predictive expected log-likelihood. Behaviormetrika 44, 57–95 (2017). https://doi.org/10.1007/s41237-016-0004-6
