Abstract

As evident from classical results on random polynomials, it is difficult to derive the probability distribution of the number of real roots \(N_{n}(\mathbb {R})\) of a random polynomial of degree n, and even when it can be derived, the distribution is not of any standard form. In this article, we construct a class of random polynomials of degree \(2(n+1)\) such that the distribution of \(N_{2(n+1)}(\mathbb {R})\) belongs to the scale family of binomial distributions. For the constructed class, we further observe that as \(n \rightarrow \infty\), the expected proportion of real roots \(E\left( \frac{N_{2(n+1)}(\mathbb {R})}{2(n+1)}\right)\) need not converge to 0. This is in contrast to most of the existing literature on random polynomials, which shows \(E(N_{n}(\mathbb {R})) = o(n)\) as \(n \rightarrow \infty\) and hence that asymptotically the majority of the roots of the random polynomial are non-real. The second result of this article shows that, in fact, for any given \(p \in [0,1]\), the construction can be engineered in such a way that the random polynomial has light-tailed coefficients and \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). Hence, for the class of random polynomials constructed in this article, the expected proportion of real roots can asymptotically take any prescribed value in \([0,1]\). Compared to Kac polynomials, which have light-tailed random coefficients, random polynomials whose coefficients are non-identically distributed, dependent, or heavy-tailed have received relatively little attention. In the final part of the present article, we give the third and final result, which concerns random polynomials with heavy-tailed coefficients. We extend the second result to show that for any given \(p \in (0,1]\), we can construct non-Kac random polynomials with heavy-tailed, stochastically dependent coefficients for which \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\).
All these results are based on the assumption that all the coefficients of the constructed class of random polynomials are continuous random variables. We conclude the article with a discussion of how they would change if, instead, we assume that the coefficients are general random variables, and how far the results derived in this article can be extended to some higher degree random polynomials of the same structure.

1 Introduction

Polynomials are among the simplest mathematical functions. For a polynomial \(p_{n}(x)=\sum _{i=0}^{n}a_{i}x^{i}\) of degree n defined over \(\mathbb {R}\), \(\alpha\) is called a root of \(p_{n}(x)\) if \(p_{n}(\alpha )=0\). Extensive research has been done on the characteristics of polynomials, such as the roots themselves, the number of real roots, and irreducibility. When the coefficients are random variables \(A_{i}\), all the characteristics of a polynomial (e.g. the number of real roots) become random. Such polynomials, denoted by \(F_{n}(x) = A_{0}+A_{1}x+\cdots +A_{n}x^{n}\), are called random polynomials. They have applications in the theory of polynomials: properties of random polynomials lead to the formulation of new hypotheses about polynomials that are otherwise difficult to discover. This approach of using statistical properties to understand a deterministic picture is a typical example of the probabilistic method. Apart from that, random polynomials have applications in mathematical physics. Wigner (1955, 1958) modeled heavy atom energies with eigenvalues of random matrices, and a vast literature studying the behaviour of those eigenvalues emerged subsequently. Eigenvalues of random matrices are roots of random characteristic polynomials and hence, the theory of random polynomials finds applications in their study too. Random polynomials are also used in the study of quantum systems. Multidimensional quantum systems are approximated by mathematical equations and in these approximations, one often needs to locate the roots of polynomials of high degree whose coefficients are rapidly varying, erratic functions of the energy (Bogomolny et al. 1996). As a result, these coefficients may be considered as random variables, even in a small energy interval, which makes the underlying polynomial a random one.
Sometimes, random polynomials arise as solutions of stochastic differential equations. Bharucha-Reid and Sambandham (2014) have discussed the random Legendre polynomial, which arises as a solution to a stochastic version of the Legendre equation. Finally, random polynomials are also useful in complexity theory, where they are utilized to calculate the average case complexity of numerical algorithms. Emiris et al. (2010) used properties of random polynomials to calculate the average case complexity of the bisection method.

To contrast with trigonometric random polynomials, orthogonal random polynomials, and other classes of random functions, random polynomials are often also referred to as algebraic random polynomials. The simplest form an algebraic random polynomial can take is referred to as the Kac polynomial.

Definition 1

(Kac Polynomial) Let n be a positive integer, \(c_{0},\ldots , c_{n}\) be deterministic numbers, and A be a random variable (which we call the atom distribution) of mean zero and finite nonzero variance. Consider the random polynomial \(F_{n}(x) = c_{0} A_{0}+ c_{1} A_{1}x+\cdots + c_{n} A_{n}x^{n}\), where \(A_{0}, \ldots , A_{n}\) are jointly independent copies of A. It is referred to as Kac Polynomial if \(c_{0}=c_{1}=\cdots = c_{n} = 1\).

In practice, one usually normalizes the atom distribution A to have unit variance. However, the normalization does not affect the zeroes of \(F_{n}\). A detailed exposition of Kac polynomials is available in the books (Bharucha-Reid and Sambandham 2014) and (Farahmand 1998). Other choices of values for \(c_{0},\ldots , c_{n}\) lead to non-Kac polynomials. Among them, Weyl polynomials and elliptic polynomials deserve separate attention. These random polynomials are investigated along several different lines. The distribution of the roots on the complex plane is of significant interest to mathematicians. Another line of work studies the number of real roots of a random polynomial of degree n, which is denoted by \(N_{n}(\mathbb {R})\). As the polynomial is random, the number of real roots \(N_{n}(\mathbb {R})\) is also random. A significant amount of literature exists on the asymptotic behaviour of the expected number of real roots \(E(N_{n}(\mathbb {R}))\) (Bloch and Pólya 1932; Littlewood and Offord 1938, 1939, 1943). Subsequently, Kac (1943) was able to derive an exact expression of \(E(N_{n}(\mathbb {R}))\) for finite n, albeit only when all the coefficients of the random polynomial are Gaussian with mean zero. In a different line of work, Wang (1983) and Yamrom (1972) gave a more accurate asymptotic representation of \(E(N_{n}(\mathbb {R}))\), which ultimately culminated in the work (Wilkins 1988), where an asymptotic series for \(E(N_{n}(\mathbb {R}))\) was obtained.

In stark contrast, very few works have studied the distribution of the random variable \(N_{n}(\mathbb {R})\). Maslova (1975) proved that if the coefficients satisfy the conditions \(P(A_{i} = 0)=0, E(A_{i}) = 0\), and \(E(|A_{i}|^{2+\epsilon }) < \infty\) for some \(\epsilon > 0\), then \(N_{n}(\mathbb {R})\), suitably centered and scaled, is asymptotically Gaussian. To the best of our knowledge, there does not exist any article studying the exact distribution of \(N_{n}(\mathbb {R})\) for finite n. In this article, we construct a class of random polynomials such that the distribution of \(N_{n}(\mathbb {R})\) belongs to the scale family of binomial distributions. We observe that for the constructed class of random polynomials, as \(n \rightarrow \infty\), the expected proportion of real roots \(E(\frac{N_{n}(\mathbb {R})}{n})\) need not converge to 0. This is in contrast to most of the existing literature on random polynomials, which shows \(E(N_{n}(\mathbb {R})) = o(n)\) as \(n \rightarrow \infty\) and hence that asymptotically the majority of the roots of the random polynomial are non-real. Motivated by this observation, we investigate whether for any given \(p \in [0,1]\) we can construct random polynomials for which \(E(N_{n}(\mathbb {R})) \sim np\) as \(n \rightarrow \infty\). The second result of this article shows that such a construction is indeed possible using light-tailed random coefficients.

Compared to Kac polynomials, the amount of research done for random polynomials whose coefficients are non-identical/dependent/heavy-tailed is relatively scarce. Recently, Matayoshi (2012) considered the case where the coefficients of a random polynomial are dependent but form a stationary sequence of N(0, 1) distributions and obtained that \(E(N_{n}(\mathbb {R})) \sim \frac{2}{\pi } \log n\) as \(n \rightarrow \infty\). Nezakati and Farahmand (2010) considered the case where the sequence of coefficients is distributed according to a Gaussian process with stationary covariance function \({\textit{Cov}}(A_{i},A_{j}) = 1-\frac{|i-j|}{n}\) and obtained that \(E(N_{n}(\mathbb {R})) = O(\sqrt{\log n})\) for \(n \rightarrow \infty\). Rezakhah and Shemehsavar (2005, 2008) studied random polynomials and \(N_{n}(\mathbb {R})\) when the coefficients are generated by Brownian motion process and hence non-stationary. Their work was further extended by Mukeru (2019), who considered coefficients generated by successive increments of the fractional Brownian motion process.

A different line of work explored random polynomials with heavy-tailed coefficients. While most of the existing works considered coefficients that are either Gaussians or follow some distributions (may not be the same though for every coefficient) that have all order moments finite, Ibragimov and Maslova (1971a, 1971b) relaxed this condition considerably to establish asymptotic behaviour of \(E(N_{n}(\mathbb {R}))\) for iid coefficients which only have finite variance. Recently, Do et al. (2018) derived the asymptotic behaviour of \(E(N_{n}(\mathbb {R}))\) for \(A_{i}\) such that \(E|A_{i}|^{2+\epsilon }\) are uniformly bounded but possibly with non-identical distributions. The scope of the result (Do et al. 2018) is much wider since it is derived for generalized random polynomials which are more general functions than random polynomials and can accommodate fractional degrees, unlike random polynomials whose degree can only be a positive integer. In the final part of the present article, we give the third and final result that concerns random polynomials with heavy-tailed coefficients. We extend the second result to show that for any given \(p \in (0,1]\), we can construct non-Kac, random polynomials with heavy-tailed, stochastically dependent coefficients for which \(E(N_{n}(\mathbb {R})) \sim np\) as \(n \rightarrow \infty\).

All the results derived in this article are based on the assumption that all coefficients of the constructed class of random polynomials are continuous random variables. We conclude the article with a discussion of how they would change if instead, we assume that the coefficients are general random variables and how far the results derived in this article can be extended to some higher degree random polynomials of the same structure.

2 Expected Number of Real Roots, Kac–Rice Formula and Beyond

Any discussion on random polynomials is incomplete without the Kac–Rice formula. It is used, in particular, to compute the expected number of real roots of a random polynomial all of whose coefficients are Gaussian. It is built on the following result from real analysis, which counts the number of real roots of a continuously differentiable function F(x). Let F(x) be continuous for \(a\leqslant x\leqslant b\), continuously differentiable for \(a<x<b\), and have a finite number of turning points (that is, only a finite number of points at which \(F^{\prime }(x)\) vanishes in (a, b)). Then the number of real roots of F(x) in the interval (a, b) is denoted by N(a, b), and is given by the formula

\[N(a, b)=\frac{1}{2 \pi } \int _{-\infty }^{\infty } d \zeta \int _{a}^{b} \cos \left( \zeta F(x)\right) \left| F^{\prime }(x)\right| d x.\]

In this formula, multiple roots are counted once, and if either a or b is a zero it is counted as \(\frac{1}{2}\). This formula is then applied to calculate the number of real roots \(N_{n}(a,b)\) in the interval (a, b) and \(E(N_{n}(a,b))\) of a random polynomial of degree n whose all coefficients are Gaussians.

Definition 2

(Kac–Rice Formula) Kac–Rice formula gives an integral representation of \(E(N_{n}(a,b))\) as follows

where \(\textbf{g}=\left( a_{0}, a_{1}, \ldots , a_{n}\right)\) is a point in \(\mathbb {R}^{n+1}, f(s, t; x)\) denotes the joint probability density of \(h(x, \omega )\) and \(h^{\prime }(x, \omega )\) for \(x \in \mathbb {R}\) at \(h(x, \omega )=s\), \(h^{\prime }(x, \omega )=t\) where \(h(x, \omega ), x \in \mathbb {R}\) is a real-valued function. Further simplification of Kac–Rice formula when all the coefficients are iid Gaussian leads to the following results (see Bharucha-Reid and Sambandham 2014).

Case I Suppose that all the coefficients of the random polynomial \(F_{n}(x)\) are identically distributed, but not necessarily independent, Gaussians with mean \(m(\ne 0)\) and variance 1, and let the joint density function of the coefficients at the point \(\left( a_{0}, a_{1}, \ldots , a_{n}\right)\) be

where \(M^{-1}\) is the moment matrix with \(\rho _{i j}=\rho , 0<\rho <1, i \ne j\). Then using the Kac–Rice formula one gets

where

Then one obtains the following:

Case II When all the coefficients of \(F_{n}(x)\) are identically distributed, but not necessarily independent, Gaussians with mean \(m = 0\) and variance 1, one gets

where \(M^{-1}\) is the moment matrix with \(\rho _{i j}=\rho , 0<\rho <1, i \ne j\). Then using the Kac–Rice formula, Bharucha-Reid and Sambandham (2014) proved that

where \(A_{n}, B_{n}, C_{n}\) are similar in values as stated in Case I. For large n

Subcase I If all the random coefficients are iid normal random variables with mean \(m(\ne 0)\) and variance one; that is the density function of each \(A_{k}\) is

then

Then one can obtain

Subcase II If all the random coefficients are iid standard normal random variables.

Then one gets

While all these results concern Gaussian random polynomials, some classical papers studied \(F_{n}(x)\) with coefficients that are iid uniformly distributed on \((-1,1)\) or iid discrete random variables that take values \(+1\) or \(-1\) with probability \(\frac{1}{2}\) (except the leading coefficient \(A_{n}\) which is 1, a.s.). Under those conditions one can show that for each \(n\geqslant 0\), for some \(n_{0}>0\),

and

where \(\alpha\) and K are absolute constants.

3 Main Results

In this section, we present the main results of this article. We construct a random polynomial as follows

\[F_{2(n+1)}(x)=\prod _{k=0}^{n}\left( A_{3k+1}+A_{3k+2} x+A_{3k+3} x^{2}\right) =\left( A_{1}+A_{2} x+A_{3} x^{2}\right) \cdots \left( A_{3n+1}+A_{3n+2} x+A_{3n+3} x^{2}\right) ,\]

where \(\left\{ A_{3 n-2}\right\} _{n \geqslant 1}=\left\{ A_{1}, A_{4}, A_{7}, \ldots \right\}\) is a sequence of iid continuous random variables following common CDF \(F_{1}\), \(\left\{ A_{3 n-1}\right\} _{n \geqslant 1}=\left\{ A_{2}, A_{5}, A_{8}, \ldots \right\}\) is a sequence of iid continuous random variables following common CDF \(F_{2}\), and \(\left\{ A_{3 n}\right\} _{n \geqslant 1}=\left\{ A_{3}, A_{6}, A_{9}, \ldots \right\}\) is a sequence of iid continuous random variables following common CDF \(F_{3}\). The CDFs \(F_{1}, F_{2}\), and \(F_{3}\) need not be the same. We further assume that the three sequences of random coefficients are jointly independent. This particular form is considered mainly out of mathematical curiosity. Note, however, that such polynomials are the characteristic polynomials associated with block diagonal and block triangular random matrices with \(2 \times 2\) blocks, and the associated real roots are the real eigenvalues of these block random matrices. Hence, studying these roots sheds light on the behaviour of eigenvalues of certain random matrices. Owing to this special form of the coefficients, we can bypass the Kac–Rice formula, and a direct calculation yields a closed-form expression for \(E(N_{2(n+1)}(\mathbb {R}))\). In fact, direct calculation leads to a simple expression for the distribution of the random variable \(N_{2(n+1)}(\mathbb {R})\).

3.1 Exact Distribution of \(N_{2(n+1)}(\mathbb {R})\)

Theorem 1

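Let \(N_{2(n+1)}(\mathbb {R})\) be the number of real roots of \(F_{2(n+1)}(x)\). Then

\[\frac{N_{2(n+1)}(\mathbb {R})}{2} \sim {\text {Bin}}(n+1, p).\]

A consequence of the above result is that the expected number of real roots of \(F_{2(n+1)}(x)\) is

\[E\left( N_{2(n+1)}(\mathbb {R})\right) =2(n+1) p,\]

and if \(p \in (0,1)\) then as \(n \rightarrow \infty\) we get

\[\frac{N_{2(n+1)}(\mathbb {R})-2(n+1) p}{2 \sqrt{(n+1) p q}} \xrightarrow {d} N(0,1),\]

where

\[p=P\left( A_{2}^{2}-4 A_{1} A_{3}>0\right) , \quad q=1-p.\]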

Note that one may also count \(N_{2(n+1)}(\mathbb {R})\) excluding multiplicity of zeroes. In either case, the above-mentioned results remain unchanged.

Proof of Theorem 1

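Let us consider the random quadratic function \(f(x)=A_{1}+A_{2} x+A_{3} x^{2}\) where \(A_{1}, A_{2}\) and \(A_{3}\) are continuous random variables with CDFs \(F_{1},F_{2}\) and \(F_{3}\), respectively. Suppose \(N(\mathbb {R})\) counts the number of real roots excluding multiplicity. Then

\[N(\mathbb {R})= {\left\{ \begin{array}{ll} 0 &{} \text {if } A_{2}^{2}-4 A_{1} A_{3}<0, \\ 1 &{} \text {if } A_{2}^{2}-4 A_{1} A_{3}=0, \\ 2 &{} \text {if } A_{2}^{2}-4 A_{1} A_{3}>0, \end{array}\right. }\]

or,

\[P(N(\mathbb {R})=0)=P\left( A_{2}^{2}-4 A_{1} A_{3}<0\right) , \quad P(N(\mathbb {R})=1)=P\left( A_{2}^{2}-4 A_{1} A_{3}=0\right) , \quad P(N(\mathbb {R})=2)=P\left( A_{2}^{2}-4 A_{1} A_{3}>0\right) .\]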

Now, since \(A_{1}, A_{2}, A_{3}\) are jointly independent continuous random variables, \(A_{2}^{2}-4 A_{1} A_{3}\) is also a continuous random variable and hence, \(P\left( A_{2}^{2}-4 A_{1} A_{3}=0\right) =0\). If we denote \(P\left( A_{2}^{2}-4 A_{1} A_{3}>0\right)\) by p and set \(q=1-p\), then

\[P(N(\mathbb {R})=2)=p, \quad P(N(\mathbb {R})=1)=0, \quad P(N(\mathbb {R})=0)=q,\]

or,

\[\frac{N(\mathbb {R})}{2} \sim {\text {Bernoulli}}(p).\]

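Now consider

\[f_{k}(x)=A_{3k+1}+A_{3k+2} x+A_{3k+3} x^{2}, \quad k=0,1, \ldots , n,\]

and note that

\[F_{2(n+1)}(x)=\prod _{k=0}^{n} f_{k}(x).\]

Let \(m_{k}(\mathbb {R})\) be the number of real roots of \(f_{k}(x)\). Since \(N_{2(n+1)}(\mathbb {R})\) denotes the number of real roots of \(F_{2(n+1)}(x)\), and two distinct factors share a real root with probability zero (the coefficients being independent and continuous),

\[N_{2(n+1)}(\mathbb {R})=\sum _{k=0}^{n} m_{k}(\mathbb {R}),\]

where,

\[\frac{m_{k}(\mathbb {R})}{2} \overset{iid}{\sim } {\text {Bernoulli}}(p), \quad k=0,1, \ldots , n.\]

With that we end up showing that

\[\frac{N_{2(n+1)}(\mathbb {R})}{2} \sim {\text {Bin}}(n+1, p).\]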

\(\square\)

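Then, by a property of the binomial distribution, it follows that

\[E\left( \frac{N_{2(n+1)}(\mathbb {R})}{2}\right) =(n+1) p,\]

or, equivalently

\[E\left( N_{2(n+1)}(\mathbb {R})\right) =2(n+1) p.\]

A simple application of the De Moivre–Laplace CLT implies that if \(p \in (0,1)\) then, as \(n \rightarrow \infty\),

\[\frac{\frac{N_{2(n+1)}(\mathbb {R})}{2}-(n+1) p}{\sqrt{(n+1) p q}} \xrightarrow {d} N(0,1).\]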

In simplified notation

or, equivalently

Note that we have counted \(N(\mathbb {R})\) excluding multiplicity. However, even if we count \(N(\mathbb {R})\) including multiplicity, we get

\[N(\mathbb {R})= {\left\{ \begin{array}{ll} 0 &{} \text {if } A_{2}^{2}-4 A_{1} A_{3}<0, \\ 2 &{} \text {if } A_{2}^{2}-4 A_{1} A_{3} \ge 0. \end{array}\right. }\]

Now, since \(A_{1}, A_{2}\) and \(A_{3}\) are jointly independent continuous random variables, \(A_{2}^{2}-4 A_{1} A_{3}\) is also a continuous random variable. Hence, \(P\left( A_{2}^{2}-4 A_{1} A_{3}=0\right) =0\) and so \(P\left( A_{2}^{2}-4 A_{1} A_{3} \ge 0\right) = P\left( A_{2}^{2}-4 A_{1} A_{3} > 0\right) = p\) and \(q=1-p\).

Consequently, all the results of Theorem 1 remain unchanged.
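The counting argument in the proof can be checked numerically. The following is a minimal sketch, not part of the original article: it assumes N(0, 1) coefficients and a fixed seed, and the helper names (`count_real_roots`, `sample_counts`, `estimate_p`) are hypothetical. It counts real roots factor by factor through the sign of the discriminant and compares the empirical mean of \(N_{2(n+1)}(\mathbb {R})\) with \(2(n+1)p\), where p is itself estimated by Monte Carlo.

```python
import random


def count_real_roots(triples):
    """Real roots of prod_k (a1 + a2*x + a3*x^2), counted with multiplicity.

    A quadratic factor with a3 != 0 has 2 real roots when its discriminant
    a2^2 - 4*a1*a3 is nonnegative and none otherwise.
    """
    return sum(2 for a1, a2, a3 in triples if a2 * a2 - 4.0 * a1 * a3 >= 0.0)


def sample_counts(n, draw, reps, seed=0):
    """Simulate `reps` polynomials of degree 2(n+1); return their root counts."""
    rng = random.Random(seed)
    counts = []
    for _ in range(reps):
        triples = [(draw(rng), draw(rng), draw(rng)) for _ in range(n + 1)]
        counts.append(count_real_roots(triples))
    return counts


def estimate_p(draw, reps, seed=1):
    """Monte Carlo estimate of p = P(A2^2 - 4*A1*A3 > 0)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        a1, a2, a3 = draw(rng), draw(rng), draw(rng)
        if a2 * a2 - 4.0 * a1 * a3 > 0.0:
            hits += 1
    return hits / reps


def standard_normal(rng):
    # Assumption for this sketch: F1 = F2 = F3 = N(0, 1).
    return rng.gauss(0.0, 1.0)


n = 4  # degree 2(n+1) = 10
counts = sample_counts(n, standard_normal, reps=20000)
p_hat = estimate_p(standard_normal, reps=200000)
empirical_mean = sum(counts) / len(counts)
theoretical_mean = 2 * (n + 1) * p_hat
print(f"empirical mean {empirical_mean:.3f} vs 2(n+1)p {theoretical_mean:.3f}")
```

Every simulated count is even and lies between 0 and \(2(n+1)\), as expected when \(\frac{N_{2(n+1)}(\mathbb {R})}{2}\) is a sum of \(n+1\) Bernoulli indicators.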

3.2 Proportion of Real Roots

For the constructed class of random polynomials as \(n \rightarrow \infty\), the expected proportion of real roots \(E(\frac{N_{2(n+1)}(\mathbb {R})}{2(n+1)})\) need not converge to 0, in contrast to most of the existing literature on random polynomials which show \(E(N_{n}(\mathbb {R})) = o(n)\) as \(n \rightarrow \infty\) that, in turn, implies that asymptotically the majority of the roots of the random polynomial are non-real. Curious by the observation then we investigate whether for any given \(p \in [0,1]\) we can get CDFs \(F_{1},F_{2}\) and \(F_{3}\) such that for the constructed \(F_{2(n+1)}(x)\), \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). The second result of this article searches for an answer to this question.

Theorem 2

Given any \(p \in [0,1]\) it is possible to construct CDFs \(F_{1},F_{2}\), and \(F_{3}\) of continuous light-tailed random variables such that for the constructed \(F_{2(n+1)}(x)\), \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\).

Proof of Theorem 2

Let us fix \(p \in [0,1]\).

Case I \(p=0\). \(F_{1}, F_{2}\) and \(F_{3}\) are CDFs associated with U[2, 3], U[0, 1] and U[2, 3], respectively. Then see that \(P\left( A_{2}^{2}> 4 A_{1} A_{3} \right) = 0\) since \(4A_{1} A_{3} \ge 16\) w.p. 1 but \(A_{2}^{2} \le 1\) w.p. 1.

Case II \(p=1\). \(F_{1}, F_{2}\) and \(F_{3}\) are CDFs associated with U[0, 1], U[3, 4] and U[0, 1], respectively. Then see that \(P\left( A_{2}^{2}> 4 A_{1} A_{3} \right) = 1\) since \(4A_{1} A_{3} \le 4\) w.p. 1 but \(A_{2}^{2} \ge 9\) w.p. 1.

Case III \(p \in (0,1)\). This is the most interesting case and we choose \(F_{1}\) and \(F_{3}\) to be CDFs associated with U[0, 1] and U[0, 1], respectively. Then, we choose \(F_{2}\) judiciously. Let us define \(F_{2}\) as follows

Firstly, it is easy to show that \(F_{2}(x)\) is a CDF associated with a continuous random variable \(A_{2}\) with density as follows

Then,

Therefore, for any given \(p \in [0,1]\), we can choose CDFs \(F_{1},F_{2}\) and \(F_{3}\) associated with continuous light-tailed random variables such that for the constructed \(F_{2(n+1)}(x)\), \(E(N_{2(n+1)}(\mathbb {R})) = 2(n+1)p\) and hence, \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). \(\square\)
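The two boundary cases of the proof are easy to confirm by simulation. The sketch below is an illustration rather than the authors' code; the function name `fraction_with_real_roots` and the seed are assumptions. It draws the coefficients from the uniform distributions of Cases I and II and reports the fraction of quadratic factors with positive discriminant, which should be exactly 0 and exactly 1.

```python
import random


def fraction_with_real_roots(draw_a1, draw_a2, draw_a3, reps, seed=0):
    """Fraction of simulated factors A1 + A2*x + A3*x^2 with A2^2 > 4*A1*A3."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        a1, a2, a3 = draw_a1(rng), draw_a2(rng), draw_a3(rng)
        if a2 * a2 > 4.0 * a1 * a3:
            hits += 1
    return hits / reps


# Case I (p = 0): A1, A3 ~ U[2, 3] and A2 ~ U[0, 1], so 4*A1*A3 >= 16 > 1 >= A2^2.
case_one = fraction_with_real_roots(
    lambda r: r.uniform(2.0, 3.0),
    lambda r: r.uniform(0.0, 1.0),
    lambda r: r.uniform(2.0, 3.0),
    reps=10000,
)

# Case II (p = 1): A1, A3 ~ U[0, 1] and A2 ~ U[3, 4], so 4*A1*A3 <= 4 < 9 <= A2^2.
case_two = fraction_with_real_roots(
    lambda r: r.uniform(0.0, 1.0),
    lambda r: r.uniform(3.0, 4.0),
    lambda r: r.uniform(0.0, 1.0),
    reps=10000,
)

print(case_one, case_two)
```

Because the supports of \(A_{2}^{2}\) and \(4A_{1}A_{3}\) do not overlap in either case, the result does not depend on the seed or on the number of repetitions.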

3.3 Heavy-Tailed Random Polynomial

In this final part of the article, we investigate the distribution of \(N_{n}(\mathbb {R})\) and the asymptotic behaviour of \(E(N_{n}(\mathbb {R}))\) when the \(A_{i}\)s are heavy-tailed random variables. First, we show that when the \(A_{i}\)s are heavy-tailed random variables, the coefficients of \(F_{n}(x)\) are also heavy-tailed. Note that by heavy-tailed random variables we refer here to those random variables for which the MGF does not exist in any neighbourhood of zero; there are alternative, stronger definitions of heavy-tailed random variables.

Proposition 3

If \(x_{1}, x_{2} \ge 2\) then \(x_{1} x_{2} \ge x_{1}+x_{2}\).

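Proof of Proposition 3. Since \(x_{1}, x_{2} \ge 2\), we have \(\left( x_{1}-1\right) \left( x_{2}-1\right) \ge 1\), that is, \(x_{1} x_{2}-x_{1}-x_{2}+1 \ge 1\), which gives \(x_{1} x_{2} \ge x_{1}+x_{2}\).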

Proposition 4

If \(\int _{0}^{\infty } e^{t x} f(x) d x\) diverges to infinity, where \(f(\cdot )\) is a p.d.f., then \(\int _{k}^{\infty } e^{t x} f(x) d x\) also diverges to infinity, where \(k \in \mathbb {R}^{+}\).

Proof of Proposition 4.

Now, for \(t>0\),

\[\int _{0}^{k} e^{t x} f(x) d x \le e^{t k} \int _{0}^{k} f(x) d x \le e^{t k}<\infty .\]

\(\therefore \int _{0}^{k} e^{t x} f(x) d x\) is bounded. Since \(\int _{0}^{k} e^{t x} f(x) d x+\int _{k}^{\infty } e^{t x} f(x) d x\) diverges to infinity, we conclude that \(\int _{k}^{\infty } e^{t x} f(x) d x\) also diverges to infinity.

Proposition 5

If at least one of \(Y_{1}, Y_{2}, \ldots , Y_{n}\) is a heavy-tailed random variable, then \(\prod _{i=1}^{n} Y_{i}\) and \(\sum _{i=1}^{n} Y_{i}\) are also heavy-tailed.

Proof of Proposition 5. First, we show that \(Y_{1}Y_{2}\) is heavy-tailed random variable. Note that, \(E\left( e^{t Y_{1} Y_{2}}\right) =\int _{0}^{\infty } \int _{0}^{\infty } e^{t y_{1} y_{2}} f_{Y_{1}}\left( y_{1}\right) f_{Y_{2}}\left( y_{2}\right) d y_{1} d y_{2}\) \(\geqslant \int _{2}^{\infty } \int _{2}^{\infty } e^{t y_{1} y_{2}} f_{Y_{1}}\left( y_{1}\right) f_{Y_{2}} \left( y_{2}\right) d y_{1} d y_{2}\)

\(\geqslant \int _{2}^{\infty } \int _{2}^{\infty } e^{t\left( y_{1}+y_{2}\right) } f_{Y_{1}}\left( y_{1}\right) f_{Y_{2}}\left( y_{2}\right) d y_{1}d y_{2}\) \(=\left\{ \int _{2}^{\infty } e^{t y_{1}} f_{Y_{1}}\left( y_{1}\right) d y_{1}\right\} \left\{ \int _{2}^{\infty } e^{t y_{2}} f_{Y_{2}} \left( y_{2}\right) d y_{2}\right\}\)

By putting \(k=2\) in Proposition 4, we have: \(\int _{2}^{\infty } e^{t y_{i}} f_{Y_{i}}\left( y_{i}\right) d y_{i}\) diverges to infinity for at least one of \(i=1,2\).

\(\Longrightarrow \left\{ \int _{2}^{\infty } e^{t y_{1}} f_{Y_{1}}\left( y_{1}\right) d y_{1}\right\} \left\{ \int _{2}^{\infty } e^{t y_{2}} f_{Y_{2}}\left( y_{2}\right) d y_{2}\right\}\) diverges to infinity \(\Rightarrow E\left( e^{t Y_{1} Y_{2}}\right)\) is not finite, where \(t>0\).

\(\Longrightarrow Y_{1} Y_{2}\) is also a heavy-tailed random variable. By repeated application of the previous result then we can show that if at least one of \(Y_{1}, Y_{2}, \ldots , Y_{n}\) is a heavy-tailed random variable, then \(\prod _{i=1}^{n} Y_{i}\) is also heavy-tailed.

Now, we show that if at least one of \(Y_{1}, Y_{2}, \ldots , Y_{n}\) is a heavy-tailed random variable then \(\sum _{i=1}^{n} Y_{i}\) is also heavy-tailed. Note that \(E\left( e^{t Y_{i}}\right)\) diverges to infinity, \(\forall t>0\), for at least one \(i \in \{1, \ldots , n\}\). Now, for nonnegative \(Y_{j}\) (the setting of the integrals above), \(P\left( Y_{j} \geqslant 0\right) =1\) for every j \(\Longrightarrow P\left( e^{t\left( Y_{1}+Y_{2}+\cdots +Y_{n}\right) } \geqslant e^{t Y_{i}}\right) =1, \forall i=1, \ldots , n\) \(\Longrightarrow E\left( e^{t\left( Y_{1}+Y_{2}+\cdots +Y_{n}\right) }\right) \geqslant E\left( e^{t Y_{i}}\right) , \forall i=1, \ldots , n\). Hence, \(E\left( e^{t\left( Y_{1}+\cdots +Y_{n}\right) }\right)\) also diverges to infinity, which, in turn, implies that \(Y_{1}+Y_{2}+\cdots +Y_{n}\) is also a heavy-tailed random variable.

Now, since each coefficient in the expanded polynomial is formed by adding products of the \(A_{i}\)s, we conclude that the coefficients are heavy-tailed if all the \(A_{i}\)s are heavy-tailed. Note that Theorem 1 does not specify whether \(F_{1},F_{2}\), and \(F_{3}\) are CDFs associated with continuous light-tailed or heavy-tailed random variables, and hence the result is directly applicable to heavy-tailed coefficients, too. So, if we take the \(A_{i}\)s to be heavy-tailed continuous random variables, then \(\frac{N_{2(n+1)}(\mathbb {R})}{2}\) will again follow a binomial distribution. However, for a given \(p\in [0,1]\), finding \(F_{1},F_{2}\), and \(F_{3}\) which are CDFs associated with continuous heavy-tailed random variables such that \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\) may be tricky. In what follows, we show that for any given \(p\in (0,1]\) we can choose continuous heavy-tailed distributions \(F_{1},F_{2}\), and \(F_{3}\) such that \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). We further show that no choice of \(F_{1},F_{2}\), and \(F_{3}\) extends this result to the case \(p=0\).

Theorem 6

For any given \(p \in (0,1]\), it is possible to design continuous heavy-tailed distributions \(F_{1},F_{2}\), and \(F_{3}\) such that \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). Furthermore, it is not possible to choose continuous heavy-tailed distributions \(F_{1},F_{2}\) and \(F_{3}\) such that \(E(N_{2(n+1)}(\mathbb {R})) = o(n)\) as \(n \rightarrow \infty\).

Proof of Theorem 6

Let us fix \(p \in (0,1]\).

Case I \(p=1\). \(F_{1}, F_{2}\), and \(F_{3}\) are CDFs associated with \({\textit{Pareto}}(1,1), {\textit{Pareto}}(1,1)\), and \(-{\textit{Pareto}}(1,1)\), respectively. Then see that \(P\left( A_{2}^{2}> 4 A_{1} A_{3} \right) = 1\) since \(4A_{1} A_{3} < 0\) w.p. 1 but \(A_{2}^{2} \ge 0\) w.p. 1.

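Case II \(p \in (0,1)\). In this more interesting case we choose both \(F_{1}\) and \(F_{3}\) to be the CDF of \({\textit{Pareto}}(\alpha _{1},\beta )\) and \(F_{2}\) to be the CDF of \({\textit{Pareto}}(2\alpha ,\beta )\), where \(\alpha , \alpha _{1} > 0\) and \(\beta > 0\) are to be selected later. Here \({\textit{Pareto}}(a,b)\) has shape a and scale b, that is, survival function \(\left( b/x\right) ^{a}\) for \(x \ge b\), which is the convention consistent with the computation below. Let us denote the pdfs associated with \(F_{1}\) and \(F_{3}\) by \(f_{1}\) and \(f_{3}\). Then, since \(2\sqrt{a_{1} a_{3}} \ge 2\beta \ge \beta\) for \(a_{1}, a_{3} \ge \beta\),

\[P\left( A_{2}^{2}-4 A_{1} A_{3}>0\right) =\int _{\beta }^{\infty } \int _{\beta }^{\infty } P\left( A_{2}>2 \sqrt{a_{1} a_{3}}\right) f_{1}\left( a_{1}\right) f_{3}\left( a_{3}\right) d a_{1} d a_{3} =\int _{\beta }^{\infty } \int _{\beta }^{\infty } \frac{\beta ^{2 \alpha }}{4^{\alpha } a_{1}^{\alpha } a_{3}^{\alpha }} f_{1}\left( a_{1}\right) f_{3}\left( a_{3}\right) d a_{1} d a_{3} =\frac{\beta ^{2 \alpha }}{4^{\alpha }} E\left( \frac{1}{A_{1}^{\alpha }}\right) E\left( \frac{1}{A_{3}^{\alpha }}\right) .\]

Now, we calculate \(E(\frac{1}{A^{\alpha }_{1}})\). See that

\[E\left( \frac{1}{A_{1}^{\alpha }}\right) =\int _{\beta }^{\infty } \frac{1}{x^{\alpha }} \cdot \frac{\alpha _{1} \beta ^{\alpha _{1}}}{x^{\alpha _{1}+1}} d x=\alpha _{1} \beta ^{\alpha _{1}} \int _{\beta }^{\infty } x^{-\left( \alpha +\alpha _{1}+1\right) } d x=\frac{\alpha _{1}}{\left( \alpha +\alpha _{1}\right) \beta ^{\alpha }},\]

and the same value is obtained for \(E(\frac{1}{A^{\alpha }_{3}})\).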

Hence, \(P\left( A_{2}^{2}-4A_{1}A_{3}>0\right) = \frac{\beta ^{2 \alpha }}{4^{\alpha }} E(\frac{1}{A^{\alpha }_{1}}) E(\frac{1}{A^{\alpha }_{3}}) = \frac{\alpha ^{2}_{1}}{(\alpha + \alpha _{1})^{2} 4^{\alpha }}\). We set \(\alpha _{1} = 1\) to get \(P\left( A_{2}^{2}-4A_{1}A_{3}>0\right) = \frac{1}{(\alpha + 1)^{2} 4^{\alpha }}\). Since the function \(g(x) = \frac{1}{(x + 1)^{2} 4^{x}}\) is continuous and strictly monotonically decreasing on \(\mathbb {R}^{+}\) and as \(\lim _{x \rightarrow 0^{+} } g(x) =1\) and \(\lim _{x \rightarrow \infty } g(x) =0\) so for any \(p \in (0,1)\) there exists a unique \(x_{0}\) such that \(g(x_{0}) = p\), and then we set \(\alpha = x_{0}\). That leads to \(P\left( A_{2}^{2}-4A_{1}A_{3}>0\right) = p\).

Therefore, for any given \(p \in (0,1]\), we can choose CDFs \(F_{1},F_{2}\), and \(F_{3}\) associated with continuous heavy-tailed random variables such that for the constructed \(F_{2(n+1)}(x)\), \(E(N_{2(n+1)}(\mathbb {R})) = 2(n+1)p\) and hence \(E(N_{2(n+1)}(\mathbb {R})) \sim 2(n+1)p\) as \(n \rightarrow \infty\). Now we show that it is not possible to choose continuous heavy-tailed distributions \(F_{1},F_{2}\), and \(F_{3}\) such that \(E(N_{2(n+1)}(\mathbb {R})) = o(n)\) as \(n \rightarrow \infty\). We prove it by contradiction. Suppose there are such continuous heavy-tailed distributions \(F_{1},F_{2}\), and \(F_{3}\). Since \(E(N_{2(n+1)}(\mathbb {R})) = 2(n+1)P\left( A_{2}^{2}-4A_{1}A_{3}>0\right)\), this forces \(P\left( A_{2}^{2}-4A_{1}A_{3}>0\right) = 0\). Recall that \(A_{1} \sim F_{1}\), \(A_{2} \sim F_{2}\), and \(A_{3} \sim F_{3}\) are independent random variables. One can get \(a<b\) with \(b>0\) such that \(P(a \le 4A_{1}A_{3} \le b) > 0\). So,

\[0=P\left( A_{2}^{2}>4 A_{1} A_{3}\right) \ge P\left( A_{2}^{2}>b, \; a \le 4 A_{1} A_{3} \le b\right) =P\left( A_{2}^{2}>b\right) P\left( a \le 4 A_{1} A_{3} \le b\right) \Longrightarrow P\left( A_{2}^{2}>b\right) =0,\]

which implies that \(F_{2}\) has bounded support thereby contradicting the assumption \(F_{2}\) is a heavy-tailed distribution. \(\square\)
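The choice of \(\alpha\) in Case II can be made concrete. The sketch below is illustrative only and not taken from the article: it assumes \(\alpha _{1} = 1\) and \(\beta = 1\), solves \(g(\alpha ) = p\) by bisection (any root finder would do, since g is continuous and strictly decreasing), and then checks the resulting probability by simulation. The names `solve_alpha` and `empirical_p` are hypothetical; `random.paretovariate(a)` samples a Pareto variable with shape a and scale 1.

```python
import random


def g(x):
    """g(x) = 1 / ((x + 1)^2 * 4^x), the success probability when alpha_1 = 1."""
    return 1.0 / ((x + 1.0) ** 2 * 4.0 ** x)


def solve_alpha(p, iterations=200):
    """Find alpha > 0 with g(alpha) = p for p in (0, 1) by bisection."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    lo, hi = 0.0, 1.0
    while g(hi) > p:  # g decreases to 0, so this bracket search terminates
        hi *= 2.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if g(mid) > p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def empirical_p(alpha, reps, seed=0):
    """Monte Carlo estimate of P(A2^2 > 4*A1*A3) with beta = 1 (assumption)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        a1 = rng.paretovariate(1.0)          # A1 ~ Pareto(1, 1)
        a2 = rng.paretovariate(2.0 * alpha)  # A2 ~ Pareto(2*alpha, 1)
        a3 = rng.paretovariate(1.0)          # A3 ~ Pareto(1, 1)
        if a2 * a2 > 4.0 * a1 * a3:
            hits += 1
    return hits / reps


target_p = 0.3
alpha = solve_alpha(target_p)
estimate = empirical_p(alpha, reps=200000)
print(f"alpha = {alpha:.6f}, g(alpha) = {g(alpha):.6f}, simulated p = {estimate:.4f}")
```

The simulated probability should agree with the target up to Monte Carlo error, which is of order \(10^{-3}\) for the number of repetitions used here.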

3.4 Simulation Studies

The theoretical findings obtained in the previous subsections are supplemented via simulation studies. We study the distribution of \(N_{2(n+1)}(\mathbb {R})\) for three different degrees. We plot the relative frequency histograms of \(N_{10}(\mathbb {R}), N_{50}(\mathbb {R})\) and \(N_{200}(\mathbb {R})\). These quantities denote the number of real roots of random polynomials of degree 10, 50 and 200, respectively. We consider different probability distributions for the \(A_{i}\)s to carry out the simulation studies. First, we consider the case when \(F_{1}, F_{2}\), and \(F_{3}\) are N(0, 1) (Fig. 1).

Then we consider the two cases when \(F_{1}, F_{2}\), and \(F_{3}\) are LN(0, 1), and \(F_{1}, F_{2}\), and \(F_{3}\) are C(0, 1). Note that, LN(0, 1) does not admit finite MGF, and hence, as per our definition of a heavy-tailed random variable, LN(0, 1) is a heavy-tailed distribution. On the other hand, standard Cauchy distribution, denoted by C(0, 1), does not admit finite mean as well as finite MGF and hence, qualifies as a heavy-tailed distribution in a much stronger sense (Figs. 2 and 3).

Although the theorems of the previous subsections are based on the assumption that \(F_{1}, F_{2}\), and \(F_{3}\) are continuous distributions, it is of interest to see how the distribution of \(N_{2(n+1)}(\mathbb {R})\) is modified if, instead, we assume that the coefficients are discrete random variables (Figs. 4 and 5).

As n becomes larger, the distribution of \(N_{2(n+1)}(\mathbb {R})\) can be approximated by a normal distribution more accurately. Hence, unlike the distributions of \(N_{10}(\mathbb {R})\) and \(N_{50}(\mathbb {R})\), which are skewed, the distribution of \(N_{200}(\mathbb {R})\) is symmetric and bell-shaped. For each simulation study, 10,000 samples are generated to compute the respective histogram.
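A simulation of this kind can be sketched in a few lines. The code below is not the authors' simulation code; it is a minimal illustration for the LN(0, 1) case at degree 10 with 10,000 samples, and the function names and the seed are assumptions. For LN(0, 1) coefficients the success probability has a closed form: writing \(A_{i} = e^{Z_{i}}\) with iid standard normal \(Z_{i}\), the event \(A_{2}^{2} > 4A_{1}A_{3}\) is \(2Z_{2} - Z_{1} - Z_{3} > \log 4\), and \(2Z_{2} - Z_{1} - Z_{3} \sim N(0, 6)\), so \(p = 1 - \Phi (\log 4/\sqrt{6})\).

```python
import math
import random


def lognormal_success_probability():
    """p = P(A2^2 > 4*A1*A3) for iid LN(0, 1) coefficients, 1 - Phi(ln 4 / sqrt 6)."""
    z = math.log(4.0) / math.sqrt(6.0)
    return 0.5 * (1.0 - math.erf(z / math.sqrt(2.0)))


def binomial_pmf(m, p):
    """Probabilities of Bin(m, p) at 0, 1, ..., m."""
    return [math.comb(m, k) * p ** k * (1.0 - p) ** (m - k) for k in range(m + 1)]


def relative_frequencies(degree, reps, seed=0):
    """Relative frequencies of N/2 for the product of degree/2 quadratic factors."""
    rng = random.Random(seed)
    factors = degree // 2
    freq = [0] * (factors + 1)
    for _ in range(reps):
        positive = 0
        for _ in range(factors):
            a1 = rng.lognormvariate(0.0, 1.0)
            a2 = rng.lognormvariate(0.0, 1.0)
            a3 = rng.lognormvariate(0.0, 1.0)
            if a2 * a2 > 4.0 * a1 * a3:
                positive += 1
        freq[positive] += 1
    return [count / reps for count in freq]


p = lognormal_success_probability()
observed = relative_frequencies(degree=10, reps=10000)
expected = binomial_pmf(5, p)
for k, (obs, exp) in enumerate(zip(observed, expected)):
    print(f"N = {2 * k:2d}: simulated {obs:.4f}, binomial {exp:.4f}")
```

Index k of each list corresponds to \(N_{10}(\mathbb {R}) = 2k\), so the printed table is the numerical counterpart of a relative frequency histogram overlaid with the scaled binomial probabilities.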

3.5 Discussion and Concluding Remarks

The results derived in this article are based on the assumption that the coefficients are continuous random variables. It is of interest to see how they are modified if, instead, we assume that the coefficients are general random variables. The exact distribution of \(\frac{N_{2(n+1)}(\mathbb {R})}{2}\) remains unchanged if roots are counted including multiplicity. However, if roots are counted excluding multiplicity, then \(N_{2(n+1)}(\mathbb {R})\) follows a distribution that is the \((n+1)\)-fold convolution of a 3-point discrete distribution supported on \(\{0,1,2\}\), of which Bernoulli is a special case.

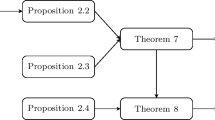

Another question that arises naturally is whether the results derived in this article can be extended to some higher degree random polynomials of the same structure. A theorem similar to Theorem 1 can be stated for \(F_{3(n+1)}(x) =\left( A_{1}+A_{2} x+A_{3} x^{2}+A_{4} x^{3}\right) \cdots \left( A_{4n+1}+A_{4n+2} x+A_{4n+3} x^{2} + A_{4n+4} x^{3}\right)\) and one can show that \(\frac{N_{3(n+1)}(\mathbb {R}) - (n+1)}{2} \sim {\text {Bin}}\left( n+1, P\left( 4(A_{3}^{2} - 3A_{2}A_{4})^{3} - (2A_{3}^{3} - 9 A_{2}A_{3}A_{4} +27A_{1}A_{4}^{2})^{2}>0\right) \right)\). However, in that case, the binomial success probability is of nontrivial form, rendering an extension of Theorems 2 and 6 difficult. Beyond the third-degree polynomial, the scaled and centered value of the number of real roots is no longer binomially distributed. When k is even, the distribution of \(\frac{N_{k(n+1)}(\mathbb {R})}{2}\) is the \((n+1)\)-fold convolution of a \(\left( \frac{k}{2}+1\right)\)-point discrete distribution supported on \(\{0,1,2,\ldots ,\frac{k}{2}\}\), and when k is odd, the distribution of \(\frac{N_{k(n+1)}(\mathbb {R}) -(n+1)}{2}\) is the \((n+1)\)-fold convolution of a \(\frac{k+1}{2}\)-point discrete distribution supported on \(\{0,1,2,\ldots ,\frac{k-1}{2}\}\). For the above-mentioned cases, an extension of Theorems 2 and 6 seems infeasible.
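For the cubic case, the count of real roots per factor can be read off from the sign of the quantity inside the probability above, which equals \(27A_{4}^{2}\) times the discriminant of the cubic. The following sketch illustrates this under the assumption \(A_{4} \ne 0\) and ignoring the probability-zero tie where the quantity vanishes; the function names are hypothetical and not from the article.

```python
def cubic_sign_quantity(a1, a2, a3, a4):
    """4*(a3^2 - 3*a2*a4)^3 - (2*a3^3 - 9*a2*a3*a4 + 27*a1*a4^2)^2.

    For the cubic a1 + a2*x + a3*x^2 + a4*x^3 this equals 27*a4^2 times the
    discriminant, so its sign decides between 3 and 1 distinct real roots.
    """
    d0 = a3 * a3 - 3 * a2 * a4
    d1 = 2 * a3 ** 3 - 9 * a2 * a3 * a4 + 27 * a1 * a4 * a4
    return 4 * d0 ** 3 - d1 * d1


def real_roots_of_cubic(a1, a2, a3, a4):
    """Number of distinct real roots of a genuine cubic (a4 != 0)."""
    if a4 == 0:
        raise ValueError("leading coefficient a4 must be nonzero")
    return 3 if cubic_sign_quantity(a1, a2, a3, a4) > 0 else 1


def real_roots_of_product(factors):
    """Total over the cubic factors (a1, a2, a3, a4) of a product polynomial."""
    return sum(real_roots_of_cubic(*f) for f in factors)


# (x - 1)(x - 2)(x - 3) = -6 + 11x - 6x^2 + x^3 has three real roots;
# x + x^3 = x(1 + x^2) has exactly one.
factors = [(-6, 11, -6, 1), (0, 1, 0, 1)]
total = real_roots_of_product(factors)
successes = (total - len(factors)) // 2  # number of factors with 3 real roots
print(total, successes)
```

Each factor contributes one guaranteed real root plus two more when the quantity is positive, which is why \(\frac{N_{3(n+1)}(\mathbb {R}) - (n+1)}{2}\) counts the factors with three real roots.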

References

Bharucha-Reid AT, Sambandham M (2014) Random polynomials: probability and mathematical statistics: a series of monographs and textbooks. Academic Press, London

Bloch A, Pólya G (1932) On the roots of certain algebraic equations. Proc Lond Math Soc 2(1):102–114

Bogomolny E, Bohigas O, Leboeuf P (1996) Quantum chaotic dynamics and random polynomials. J Stat Phys 85:639–679

Do Y, Nguyen O, Vu V (2018) Roots of random polynomials with coefficients of polynomial growth. Ann Probab 46(5):2407–2494

Emiris IZ, Galligo A, Tsigaridas EP (2010) Random polynomials and expected complexity of bisection methods for real solving. In: Proceedings of the 2010 international symposium on symbolic and algebraic computation, pp 235–242

Farahmand K (1998) Topics in random polynomials, vol 393. CRC Press, Boca Raton

Ibragimov IA, Maslova NB (1971a) On the expected number of real zeros of random polynomials I. Coefficients with zero means. Theory Probab Appl 16(2):228–248

Ibragimov IA, Maslova NB (1971b) On the expected number of real zeros of random polynomials. II. Coefficients with non-zero means. Theory Probab Appl 16(3):485–493

Kac M (1943) On the average number of real roots of a random algebraic equation. Bull Am Math Soc 49(4):314–320

Littlewood J, Offord A (1938) On the number of real roots of a random algebraic equation. J Lond Math Soc 1(4):288–295

Littlewood JE, Offord AC (1939) On the number of real roots of a random algebraic equation. II. In: Mathematical proceedings of the Cambridge philosophical society, vol 35. Cambridge University Press, Cambridge, pp 133–148

Littlewood JE, Offord AC (1943) On the number of real roots of a random algebraic equation (III). Rec Math [Mat Sbornik] NS 12(3):277–286

Maslova NB (1975) On the distribution of the number of real roots of random polynomials. Theory Probab Appl 19(3):461–473

Matayoshi J (2012) The real zeros of a random algebraic polynomial with dependent coefficients. Rocky Mt J Math 1015–1034

Mukeru S (2019) Average number of real zeros of random algebraic polynomials defined by the increments of fractional Brownian motion. J Theor Probab 32(3):1502–1524

Nezakati A, Farahmand K (2010) Real zeros of algebraic polynomials with dependent random coefficients. Stoch Anal Appl 28(3):558–564

R Core Team (2022) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Rezakhah S, Shemehsavar S (2005) On the average number of level crossings of certain gaussian random polynomials. Nonlinear Anal Theory Methods Appl 63(5–7):555–567

Rezakhah S, Shemehsavar S (2008) Expected number of slope crossings of certain gaussian random polynomials. Stoch Anal Appl 26(2):232–242

Wang YJ (1983) Bounds on the average number of real roots of a random algebraic equation. Chin Ann Math Ser A 4(5):601–605

Wigner E (1955) Characteristic vectors of bordered matrices with infinite dimensions. Ann Math 62:548–564

Wigner EP (1958) On the distribution of the roots of certain symmetric matrices. Ann Math 67(2):325–327

Wilkins JE (1988) An asymptotic expansion for the expected number of real zeros of a random polynomial. Proc Am Math Soc 103(4):1249–1258

Yamrom B (1972) On the average number of real zeros of random polynomials. In: Doklady Akademii Nauk, vol 206. Russian Academy of Sciences, pp 1059–1060

Acknowledgements

We thank the anonymous reviewer for their valuable suggestions, which led to a much improved version of the previously submitted draft. All simulation studies were carried out, and all associated graphs were plotted, using the statistical software R (version 4.2.3) (R Core Team 2022).

Funding

No funding was used for carrying out this research.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Praharaj, S., Guha, S. An Interesting Class of Non-Kac Random Polynomials. J Indian Soc Probab Stat 24, 545–564 (2023). https://doi.org/10.1007/s41096-023-00166-5

DOI: https://doi.org/10.1007/s41096-023-00166-5