Abstract

Sensor placement is the primary factor governing the accuracy of a vibration test, so sensors for a modal analysis test must be positioned with utmost care. Conducting a trial test on massive structures such as dams, bridges, and high-rise buildings is generally challenging and expensive. With advanced finite element software packages, it is possible to simulate such structures and obtain satisfactory and reliable results, so sensor positions can be planned before the test on the basis of finite element analysis. Only a limited number of earlier studies address the optimization of sensors for Structural Health Monitoring of civil engineering structures. In vibration-based Structural Health Monitoring, the common practice for detecting both bending and twisting modes is to fix the sensors on both sides of the structure at equal spacing. When sensors are placed in this fashion, adjacent sensors may share information, which unnecessarily escalates the instrumentation cost. Sensor optimization reduces the cost of instrumentation and maximizes the individuality of the information obtained from each sensor. The current study uses multiple-criteria sensor optimization for Structural Health Monitoring of a bridge. The optimization strategy uses two criteria, (i) Triaxial Effective Independence and (ii) Threshold of Redundancy, to ensure the individuality of the information gathered from the sensors. Based on these criteria, it was found that for a redundancy value of 0.60, 27 accelerometers were required for observing 12 modes. 
As the optimization strategy involves a least-squares estimation, it was observed that when the number of sensors was increased beyond 25, the condition number of the Fisher Information Matrix (FIM) stabilized at 1.5 and the mean of the trace of the estimation error variance reached a minimum value of 0.17. The modal information extracted from the modal test conducted with the optimized sensor layout was in good agreement with the analytically obtained results: the maximum variation observed was 8.55% for modal frequency and 10.13% for damping ratio. In addition to these two criteria, the study also uses an information entropy-based criterion to fine-tune the number and position of sensors in the final sensor set. The proposed method is also compared with two other state-of-the-art optimization methods, Genetic Algorithm (GA) and Particle Swarm Optimization (PSO). The Information Entropy Index (IEI) was used to compare the layout obtained from the proposed method with those from the state-of-the-art methods. The IEI values for the layout based on the proposed method were 22–40% lower than those obtained using GA and PSO.


Introduction

The position of sensors in a vibration test must be decided with utmost care, as it has a profound effect on the quality of the modal test. For structures such as aircraft, spacecraft, and vehicles, the test engineer is usually knowledgeable about the structural behaviour, so the sensor positions are decided based on engineering judgement and insights obtained from earlier research. In the case of massive structures such as dams and bridges, however, conducting a trial test can be tedious and rather expensive. With present-day robust finite element packages, it is possible to conduct simulations under conditions nearly identical to those of the original structure, making it easy to obtain a complete picture of the probable structural behaviour. As a dense network of sensors is expensive, especially with a limited budget, the optimization of sensors is an ongoing research topic. There have been very few research investigations in the area of sensor optimization for modal testing in the field of civil engineering [1]. Kammer (2005) modified the key findings from the initial attempt at sensor optimization by Shah and Udwadia (1978) and introduced the idea of Effective Independence (EI). EI is a measure of the information carried by each sensor regarding the observability of modes [2,3,4,5,6]. Kammer’s approach expanded the initial sensor set iteratively, which reduced the computational effort considerably. An attractive feature of this method was that it allowed analysts to include in the sensor set the positions where they absolutely want to measure. Thus, the method could be started with as few as one sensor in the initial set and later be expanded to the desired number of sensors. 
The target of sensor optimization is to maximize mode observability by increasing the quantity of information obtained from each sensor and to avoid choosing areas of low vibration energy. One previous attempt to exclude areas with low vibration energy used the mass matrix to weight the candidate degrees of freedom (D.O.F) [7]. This method helped include points with large vibration energy in the final sensor set, thereby also increasing the signal-to-noise ratio. One variant of the weighted EI method is the Effective Independence Driving-Point Residue method (EFI-DPR), where the EI values are weighted by the residues of the driving point. The residues were found to be proportional to the peak in the frequency response function at the driving point [8, 9]. The conventional method of maximizing the linear independence of mode shape vectors is to minimize the off-diagonal elements of the Modal Assurance Criterion matrix (Min MAC). Li et al. (2008) adopted a computational algorithm based on QR decomposition that proved simpler and faster than the usual approach of maximizing the Fisher Information Matrix (FIM). Fisher Information (FI) quantifies how informative a vector of observations is about a parameter. The FIM is the covariance matrix of the score vector, where the score vector is the vector of first partial derivatives of the log-likelihood function with respect to its parameters. The algorithm relied on the relation between modal kinetic energy and effective independence, because the conventional EI method requires either the Eigen Value Decomposition (EVD) or the inverse of the FIM, which is computationally demanding. The QR decomposition method is based on determining the norms of the orthonormal modes of the modal matrix. The QR downdating steps used the Householder transformation and the Gram-Schmidt process. 
The QR method was applied to a numerical example and compared with conventional methods in terms of the floating point operations (flops) required to solve the problem. The flops required for the QR method, the Projection Matrix Approach, and the EVD of the FIM were 222,600, 702,000, and 439,200, respectively. Hence, the QR method outperformed the other two approaches [10]. Vincenzi and Simonini (2017) studied the influence of modelling errors and parameter uncertainties on sensor placement strategies, especially for modal testing and Structural Health Monitoring (SHM). The sensor placement used an exponential correlation function and a covariance matrix for determining the Information Entropy (I.E). The determinant of the FIM was computed using Cholesky factorization and Singular Value Decomposition (SVD). It was found that the exponential correlation function depends on the modal vector and the distance between the sensors. A case study was conducted for a steel footbridge in Correggio (Italy). The correlation function and the stiffness of the connecting elements of the truss members influenced the accuracy of the modal identification [11]. Jaya et al. (2020) determined the optimal location of sensors for SHM applications by minimizing the expected value of the distance between the expanded mode shape and the real mode shape. The criterion was applied to two case studies: (i) a simple cantilever beam and (ii) an industrial milling tower. It was observed that maximizing the independence of the columns of the FIM does not guarantee that the expanded mode shapes agree with the real mode shapes, especially in the presence of modelling errors and measurement noise. In this method, an exhaustive search was carried out among all possible layouts for the one that minimizes the distance between the two mode shapes. 
The sensor set based on the distance approach reduced the square of the normal distance by up to 24% for the cantilever and 40% for the milling tower compared with the conventional algorithms (Genetic Algorithm and Sequential Sensor Placement). The efficacy of the proposed method was also demonstrated with Monte Carlo simulation [12]. Zhang and Liu (2016) applied a Kalman filter-based approach for optimal sensor placement (OSP) of multiple types of sensors such as strain gauges, accelerometers, and displacement transducers. The OSP aimed to reconstruct the response of structural elements that are not equipped with sensors. The objective function minimized the variance of the unbiased estimate of the state of the structure. The Kalman filter-based approach was applied to a case study of a simply supported overhanging steel beam under different types of excitation. The reconstructed response was found to be superior to that of the conventional methods [13]. Kulla (2019) applied Bayesian estimation to generate sensor data with less noise based on output-only modal identification. Virtual sensing was then used to expand the algorithm to estimate the full-field response. Because sensor noise influences the accuracy of physical measurement, the upper limit of the variance of sensor noise was determined, and its effect on the accuracy of virtual sensing was studied [14]. Instead of adopting an iterative approach, earlier researchers have also focused on applying evolutionary methods to sensor placement optimization. The frequently used methods were the multi-objective Genetic Algorithm and the firefly algorithm. However, the EI-based methods were found to be more efficient than the conventional methods. The EI-based method nevertheless had an inherent tendency towards spatial clustering of sensors. This drawback was resolved by the key work of Stephan (2012), whose algorithm used an additional criterion of redundancy of information to avoid sensor clustering. 
However, the algorithm was applied only to uniaxial sensors [15]. The EI-based method was modified by Kim et al. (2018) [16] with an additional part accounting for uncertainty in the model, called stochastic effective independence (SEFI). The information entropy-based sensor optimization proposed by Papadimitriou (2004) accounts for the uncertainty involved in the estimation of modal parameters. Sharing of mutual information between neighbouring sensors was avoided by considering the effect of modelling error and measurement noise in the sensor placement strategy. The study used Bayesian statistical inference to account for the uncertainty, and an asymptotic estimate was used to quantify the uncertainty in models with large data [17]. Tran-Ngoc et al. (2018) used Genetic Algorithm and Particle Swarm Optimization for finite element model updating of the NamO bridge in Vietnam. The uncertainty related to the connection of truss members was addressed by considering three support conditions: (i) pinned, (ii) rigid, and (iii) semirigid. For each condition, the natural frequencies from the numerical and experimental modal analyses were compared. It was inferred that the semirigid support condition gave the best agreement between the numerically and experimentally obtained natural frequencies. An ambient vibration test was conducted to determine the modal frequencies experimentally. The objective function for model updating was based on mode shapes and natural frequencies, and PSO and GA were used to minimize it. It was also inferred that PSO outperforms GA in terms of the number of iterations required to converge to the best solution [18]. Lenticchia et al. (2018) studied the feasibility of applying OSP to the seismic vulnerability study of concrete vault structures. The structure chosen for the study was Turin Exhibition Centre, Nervi, Italy. 
The study compared the effect of degradation of non-structural elements on the placement of sensors. OSP was applied for two conditions of the structure: (i) undamaged and (ii) damaged, with deterioration of the infill walls. It was inferred that degradation of non-structural elements influenced the seismic behaviour of the vault structure and, eventually, the optimal sensor locations [19]. In recent applications of Structural Health Monitoring to civil engineering structures, uniaxial sensors have been replaced by triaxial sensors. Triaxial sensors were found to reduce the instrumentation effort, as they are more compact and, unlike uniaxial sensors, provide information along all three axes. Past research indicates that the usual approach to placing sensors for health monitoring of bridges is to fix them on both sides of the bridge at equal spacing. To ensure the identification of both horizontal and vertical bending modes, both the horizontal and vertical components of acceleration are measured. In this approach, however, two neighbouring sensors may share the same information, which wastes sensors given their high cost. For a developing country like India, the primary agenda is to extend the serviceable life of structures of strategic importance in order to ensure judicious use of the funds allocated for their operation and maintenance. Optimization of the sensors plays a key role in reducing the cost of implementing an SHM system. Moreover, earlier studies have adopted sensor placement optimization strategies for structures such as spacecraft, aeroplanes, trusses, and lab-scale structures, whereas there has been very little application of sensor optimization to health monitoring of structures such as dams and bridges. 
In this study, the feasibility of optimal sensor placement for the successful implementation of a vibration-based health monitoring system on a full-scale structure is explored. Establishing a vibration-based Structural Health Monitoring system under limited funding is challenging; hence, it was decided to ensure judicious use of sensors through optimization. An effective sensor placement strategy is proposed for the health monitoring of a bridge in Tiruchirappalli city, Tamil Nadu, India. The study uses a combination of Triaxial Effective Independence and Redundancy Information criteria to form the initial sensor set and fine-tunes the final sensor set based on a measure of mutual information. The approach is also compared with conventional evolutionary algorithms. The triaxial accelerometers were fixed at the positions determined by the optimization and were found to contribute to the successful completion of the vibration test. It was also inferred that conventional algorithms lead to clustering of sensors, which indicates that the majority of sensors share mutual information, contrary to the judicious use of sensors. The suboptimal methods based on Triaxial Effective Independence and redundancy of information ensure that the sensors share as little mutual information as possible. Thus, the individuality of the information from each sensor is ensured.

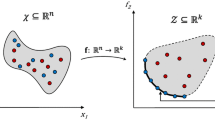

Theoretical background of Triaxial Effective Independence method

In order to extract modal information accurately, sensor placement must be planned with utmost care, because the success of modal testing relies on the positioning of sensors. As mentioned in the introduction, the sensors must be well distributed over the structure; that is, there must be no clustering of sensors, as clustering indicates considerable sharing of mutual information among them. The vibration analysis must ensure judicious use of sensors and that maximum information is obtained from the modal test. The sensor placement strategy adopted in this study is iterative in nature. Such iterative strategies are called Sequential Sensor Placement (SSP) methods. SSP methods can be classified into two types: (i) Forward Sequential Sensor Placement (FSSP) and (ii) Backward Sequential Sensor Placement (BSSP). In FSSP, once the candidate sensor positions have been decided, each candidate is added in turn to the current sensor set and the objective function is evaluated. The sensor position that causes the largest decrease in the objective function is removed from the candidate set and placed in the optimal set. In BSSP, by contrast, the procedure starts from the full candidate set, which may consist of thousands of candidate locations. In each iteration, a single location is removed and the cost function is evaluated; the location whose removal results in the smallest increase in the cost function is discarded from the set. For finite element models with a fine mesh, BSSP has been found to be computationally expensive compared with FSSP. FSSP is also preferable when a constraint in the form of Redundancy Information is used.
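The forward variant can be pictured as a greedy loop. The Python sketch below is illustrative only: the function name `forward_sequential_placement`, the generic `objective` callable, and the toy scores are assumptions introduced here and do not reproduce the study's MATLAB implementation.

```python
from typing import Callable, Hashable, List, Sequence


def forward_sequential_placement(
    candidates: Sequence[Hashable],
    n_select: int,
    objective: Callable[[List[Hashable]], float],
) -> List[Hashable]:
    """Greedy FSSP: repeatedly add the candidate that minimizes the objective."""
    selected: List[Hashable] = []
    remaining = list(candidates)
    while remaining and len(selected) < n_select:
        # Evaluate the objective with each remaining candidate added in turn.
        best = min(remaining, key=lambda c: objective(selected + [c]))
        selected.append(best)   # move the best candidate to the optimal set
        remaining.remove(best)  # and drop it from the candidate set
    return selected


# Toy usage with hypothetical scores: a lower objective means a better layout.
scores = {"a": 3.0, "b": 1.0, "c": 4.0, "d": 5.0}
layout = forward_sequential_placement(
    list(scores), 2, lambda chosen: -sum(scores[c] for c in chosen)
)
print(layout)
```

In the study's setting the objective would be the mean of the trace of the estimation error variance, and a redundancy check would reject candidates before they are added; both are left out here to keep the loop structure visible.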

The sensor placement strategy consists of two stages.

Stage I: Forming the Initial Sensor Set:

In this study, FSSP was adopted. Based on the Triaxial Effective Independence (TEI), an initial sensor set whose modal matrix \({\Phi }_{m}\) has linearly independent columns was formed. TEI is defined as a measure of the decrease in the amount of information in the initial set due to the removal of a sensor position. The first stage started with the computation of the norm (spectral radius) of the elementary information matrix \(Q_{3i}\) for each position i, and the positions were sorted in decreasing order of norm. The position with the highest norm was removed from the candidate set and placed in the initial set. In addition to this position, the vibration analyst may add to the initial set any positions where they absolutely want to measure the vibration. Let the initial information matrix be \({\varvec{Q}}_{0}\), which is singular with rank u < n, and let its eigenvectors be represented by \({\varvec{\psi}}_{0}\). These vectors span an (n − u)-dimensional space. Thus, the orthogonal projector P is represented as

Let \(\overline{{{\varvec{Q}}_{{\varvec{c}}} }}\) be the information in the candidate set that is orthogonal to \({\varvec{Q}}_{0}\), i.e. the part of the information contained in the updated candidate sensor set \({\varvec{Q}}_{{\varvec{c}}}\) that can be obtained using the orthogonal projector P.
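One form of the projector and of the projected information matrix that is consistent with this description is given below. It is a reconstruction under a stated assumption, not the authors' typeset equations: \(\psi_0\) is taken to be the n × (n − u) matrix of orthonormal eigenvectors of \(Q_0\) associated with its zero eigenvalues.

\[
P = \psi_0\, \psi_0^{T}, \qquad \overline{Q_c} = P\, Q_c\, P
\]

Under this assumption P is symmetric and idempotent and \(P\,Q_0 = 0\), so \(\overline{Q_c}\) retains only the information that is not already present in \(Q_0\).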

From Eq. (2), the position with the highest contribution to \(\overline{{{\varvec{Q}}_{{\varvec{c}}} }}\) can be determined. Reference [2] explains the procedure for deriving TEI in terms of \(\overline{\varvec{Q}}_{{\varvec{c}}}\). Let \(\overline{EfI}_{3i}\) represent the Triaxial Effective Independence value of the ith position.

where \(I_{3}\) is the 3 × 3 identity matrix and \({\Phi }_{3i}\) is the modal matrix consisting of three rows, each row representing the translation along one axis at the ith sensor position. The \(\overline{EfI}_{3i}\) values range between 0 and 1. The location with the highest TEI value is removed from the candidate set and placed in the initial sensor set. This step is repeated until the initial sensor set has full rank.
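A determinant-based form that matches these symbol definitions, and that is widely used for triaxial Effective Independence, is sketched below. The use of the pseudo-inverse \(\overline{Q_c}^{+}\) is an assumption made here because the projected matrix can be singular; the authors' exact expression may differ.

\[
\overline{EfI}_{3i} = 1 - \det\left( I_{3} - \Phi_{3i}\, \overline{Q_c}^{+}\, \Phi_{3i}^{T} \right)
\]

With this form, a value near 1 marks a position that is indispensable for the independence of the target modes, while a value near 0 marks a position that adds almost nothing.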

Stage II: Formation of the final sensor set.

To obtain the remaining positions, the mean of the trace of the estimation error variance is adopted as the objective function, with Redundancy Information as the constraint. Redundancy Information measures the amount of information mutually shared between the sensors at node i and node j and is computed using the following equation:

\({\varvec{Q}}_{3i}\) = elementary FIM at the ith node

\({\varvec{Q}}_{3j}\) = elementary FIM at the jth node.

An \(R_{ij}\) value of 1 indicates that the sensors share no mutual information, and a value of 0 indicates that the sensors bring the same information. One position at a time from the current candidate set is added to the final sensor set, and the mean of the trace of the estimation error variance is computed based on Eq. (10). The location that gives the lowest value of the objective function is selected. The computation of the estimation error variance is based on estimation theory as follows:

In structural dynamics, the displacement of a structure under dynamic loading is expressed as:

where \({\mathbf{x}}\left( t \right)\) = displacement response with dimension \(N_{s} \times 1\), \(N_{s}\) = number of sensors, \({\mathbf{q}}\left( t \right)\) = modal co-ordinates with dimension n × 1, \(n\) = number of mode shape vectors selected, \({\Phi }\) = modal matrix containing the n selected mode shapes \({\Phi }_{k}\), with dimension \(N_{s} \times n\), \(q_{k}\) = modal co-ordinate corresponding to \({\Phi }_{k}\), and \(N\) = total degrees of freedom in the FE model.
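From these definitions, the modal expansion referred to here is presumably the standard one (a reconstruction; the authors' notation may differ):

\[
\mathbf{x}(t) = \Phi\, \mathbf{q}(t) = \sum_{k=1}^{n} \Phi_{k}\, q_{k}(t)
\]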

The vector \({\mathbf{x}}\left( t \right)\) can be divided into \(x_{m}(t)\) and \(x_{d}(t)\), the measured and unmeasured parts of the response vector, respectively.

Let y(t) denote the measured response, given by:

where \({\Phi }_{m}\) is the modal matrix at the measured degrees of freedom and \(\varepsilon \left( t \right)\) denotes the measurement error, which is inevitable and is assumed to be Gaussian White Noise (GWN). Thus, it is important to minimize the residual \(\varepsilon_{{\text{y}}}\)(t),

where \(\hat{q}\left( t \right)\) = estimate of modal co-ordinates.
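Written out with the symbols defined above, the measurement model and the residual to be minimized would take the following standard form (a reconstruction from the surrounding definitions rather than the authors' typeset equations):

\[
\mathbf{y}(t) = \Phi_{m}\, \mathbf{q}(t) + \varepsilon(t), \qquad
\varepsilon_{y}(t) = \mathbf{y}(t) - \Phi_{m}\, \hat{q}(t)
\]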

Modal co-ordinate estimation is treated as a least-squares estimation problem. For the inverse to exist, the number of parameters (mode shapes) must be smaller than the number of observations (number of sensors), which leads to the first assumption.

Assumption 1: (i) \(\varepsilon \left( t \right)\) = Gaussian, uncorrelated with zero mean.

(ii) Number of sensors is greater than the number of mode shapes.

Thus, the homoscedastic (ordinary least-squares) solution for the modal co-ordinates is expressed as

Equation 8 corresponds to the condition of homoscedasticity, as it assumes equal confidence in all the measurement points, i.e. equal variance of the measurement noise. However, if there is more confidence in some points, then the condition of heteroscedasticity may be considered. Thus follows the second assumption.
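Under Assumption 1, the ordinary least-squares estimate described here has the standard form below (a reconstruction; the authors' notation may differ). The inverse exists only when \(\Phi_{m}\) has full column rank, which is why the number of sensors must not be smaller than the number of modes.

\[
\hat{q}(t) = \left( \Phi_{m}^{T} \Phi_{m} \right)^{-1} \Phi_{m}^{T}\, \mathbf{y}(t)
\]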

Assumption 2: If there is more confidence in some measurement points than in others, then the heteroscedastic (weighted least-squares) estimate of \(\hat{q}\left( t \right)\) must be considered.

W = weighting matrix (diagonal matrix)

\(\sigma_{i}^{2}\) = Measurement variance for each sensor.

where \(\Sigma_{q} = \left( {{\Phi }_{m}^{T} W{\Phi }_{m} } \right)^{ - 1}\) is the error covariance matrix.
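To make the weighted estimate concrete, the sketch below solves \(\hat{q} = (\Phi_{m}^{T} W \Phi_{m})^{-1} \Phi_{m}^{T} W y\) for the special case of two modes, so that the 2 × 2 inverse can be written out by hand. It assumes \(W = \mathrm{diag}(1/\sigma_{i}^{2})\), a common choice that the text implies but does not state; the function name and the numbers are hypothetical. Setting all weights to 1 recovers the ordinary least-squares case.

```python
from typing import List, Sequence


def weighted_modal_estimate(
    phi_m: Sequence[Sequence[float]],  # N_s x 2 modal matrix at measured DOFs
    y: Sequence[float],                # N_s measured responses at one instant
    weights: Sequence[float],          # diagonal of W, e.g. 1 / sigma_i**2
) -> List[float]:
    """Weighted least-squares estimate of two modal co-ordinates."""
    # Accumulate A = Phi^T W Phi (2 x 2, symmetric) and b = Phi^T W y.
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (p1, p2), yi, w in zip(phi_m, y, weights):
        a11 += w * p1 * p1
        a12 += w * p1 * p2
        a22 += w * p2 * p2
        b1 += w * p1 * yi
        b2 += w * p2 * yi
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("Phi^T W Phi is singular; add sensors or drop modes")
    # Closed-form inverse of the symmetric 2 x 2 matrix applied to b.
    return [(a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det]


# Noise-free toy data generated from q = [2, 3] with three sensors.
phi = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
q_hat = weighted_modal_estimate(phi, [2.0, 3.0, 5.0], [1.0, 1.0, 1.0])
print(q_hat)
```

With noise-free data the estimate does not depend on the weights; the weights only matter once the measurements carry noise of unequal variance.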

For the unmeasured degrees of freedom, the variance of the estimation error of the displacement estimate is expressed as:

where \({\Phi }_{d}\) is the modal matrix at the unmeasured degrees of freedom. The measurement noise was considered uncorrelated and equal at all sensor locations. Thus, the error covariance matrix takes the following expression:

where Q represents the Fisher Information Matrix. In Effective Independence, the objective is to maximize the FIM (trace or determinant). Thus, Q can also be represented in terms of contribution from each sensor as:

where m = number of sensor degrees of freedom and i = index of the ith row of \({\Phi }\).

In the case of triaxial sensors, Eq. 12 becomes

\(m_{n}\) = number of candidate sensor locations, \(\sigma_{0}^{2}\) = 1 (assumed).
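With uncorrelated noise of equal variance \(\sigma_{0}^{2}\), the relations described in this passage would read as follows. This is a reconstruction from the surrounding definitions, with \(\Phi_{i}\) denoting the ith row of the modal matrix and \(Q_{3i}\) the elementary FIM of the ith triaxial position; the authors' typeset equations may differ in detail.

\[
\Sigma_{q} = \sigma_{0}^{2} \left( \Phi_{m}^{T} \Phi_{m} \right)^{-1} = \sigma_{0}^{2}\, Q^{-1}, \qquad
Q = \sum_{i=1}^{m} \Phi_{i}^{T} \Phi_{i}, \qquad
Q = \sum_{i=1}^{m_{n}} \Phi_{3i}^{T} \Phi_{3i} = \sum_{i=1}^{m_{n}} Q_{3i}
\]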

The objective is to minimize the mean of the trace of the estimation error variance for the unmeasured DOFs.
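As an illustration of this objective, the sketch below evaluates the mean of the diagonal of \(\Phi_{d} Q^{-1} \Phi_{d}^{T}\) for a two-mode toy problem with \(\sigma_{0}^{2} = 1\). Reading "mean of trace" as the trace divided by the number of unmeasured DOFs is an assumption, as are the function name and the data.

```python
from typing import Sequence


def mean_trace_error_variance(
    phi_m: Sequence[Sequence[float]],  # rows of the modal matrix at measured DOFs
    phi_d: Sequence[Sequence[float]],  # rows at unmeasured DOFs
) -> float:
    """Mean over unmeasured DOFs of diag(Phi_d Q^-1 Phi_d^T) for two modes."""
    # Fisher Information Matrix Q = Phi_m^T Phi_m (symmetric 2 x 2).
    q11 = sum(r[0] * r[0] for r in phi_m)
    q12 = sum(r[0] * r[1] for r in phi_m)
    q22 = sum(r[1] * r[1] for r in phi_m)
    det = q11 * q22 - q12 * q12
    if abs(det) < 1e-12:
        raise ValueError("FIM is singular: the sensor set cannot observe both modes")
    # Entries of Q^-1 for a symmetric 2 x 2 matrix.
    i11, i12, i22 = q22 / det, -q12 / det, q11 / det
    # Sum the quadratic form d Q^-1 d^T over the unmeasured rows (the trace).
    trace = sum(
        i11 * d[0] ** 2 + 2 * i12 * d[0] * d[1] + i22 * d[1] ** 2 for d in phi_d
    )
    return trace / len(phi_d)


unmeasured = [[2.0, 1.0]]
two_sensors = mean_trace_error_variance([[1.0, 0.0], [0.0, 1.0]], unmeasured)
three_sensors = mean_trace_error_variance(
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], unmeasured
)
print(two_sensors, three_sensors)  # the value drops when a sensor is added
```

In a forward sequential search, this function would be evaluated once per remaining candidate in each iteration, and the candidate giving the lowest value would be kept.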

Implementation of Optimization

The methodology explained above was implemented as follows:

1. The locations where the analysts absolutely want a sensor to be fixed were chosen.

2. First Stage: Formation of the Initial Sensor Set based on Sequential Sensor Placement and the Triaxial Effective Independence criterion.

(i) For all the chosen sensor locations (candidate set), \({\varvec{Q}}_{3i}\) is calculated and the locations are sorted by its norm. The sensor location with the largest norm is selected as the first node.

(ii) Sensors in the candidate set are ranked based on the \(\overline{EfI}_{3i}\) value, and the sensors with the highest contribution are placed in the initial sensor set until it reaches full rank.

3. Second Stage: For the initial sensor set formed, \(R_{ij}\) is calculated, and all the sensor nodes having \(R_{ij}\) less than the threshold value \(R_{0}\) are removed from the initial set.

Figure 1 shows the flowchart of the optimization strategy adopted for programming in MATLAB.

Metaheuristic algorithms (Genetic Algorithm and Particle Swarm Optimization)

Genetic Algorithm

The Genetic Algorithm (G.A) was invented in the 1970s by John Holland. Natural selection, the mechanism at the heart of the theory of evolution, was the motivation behind G.A [20]. The implementation of G.A consists of four stages: Selection, Crossover, Mutation, and Evaluation.

(i) Selection operator: This refers to the process of selecting two or more parents from the population for crossing. The purpose of selection is to identify the fittest individuals in the population in the hope that their offspring have higher fitness.

(ii) Selection methods: Roulette Wheel Selection, Boltzmann Selection, Tournament Selection, Rank Selection, and Stochastic Universal Sampling.

(iii) Mutation: This step adds diversity to the population by altering individuals randomly. Examples: Inverse Mutation, Displacement Mutation, Reversing Mutation, Scramble Mutation, and Bit Flipping Mutation.

(iv) Crossover: This operation is carried out during the mating process. A crossover point is selected randomly between parent pairs. The commonly used crossover methods are single-point crossover, uniform crossover, k-point crossover, partially mapped crossover, cycle crossover, shuffle crossover, and order crossover.

In this study, the optimization employs a simple G.A. The number of variables to be optimized is the number of sensors NO, and each variable can take a value from 1 to ND, where ND is the number of degrees of freedom chosen for forming the Fisher Information Matrix. From a population of possible solutions, the initial parents are chosen by roulette wheel selection. The probabilities of crossover and mutation were set to 0.9 and 0.01, respectively. The fitness function adopted for deciding the best sensor configuration is the Information Entropy (I.E) given by Eq. (14). The information entropy is a direct measure of the uncertainty in the adopted sensor configuration and is related to the determinant of the FIM.
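Roulette wheel selection, the parent selection scheme named above, can be sketched as follows. The sketch assumes non-negative fitness values where larger is better; because the information entropy is minimized, it would first have to be transformed into such a score, and that transformation is not specified in the text. The function name and the toy values are hypothetical.

```python
import random
from typing import Optional, Sequence


def roulette_wheel_select(
    fitness: Sequence[float], rng: Optional[random.Random] = None
) -> int:
    """Return an index drawn with probability proportional to its fitness."""
    rng = rng or random.Random()
    total = sum(fitness)
    if total <= 0:
        raise ValueError("fitness values must be non-negative and not all zero")
    pick = rng.random() * total  # a point on the wheel in [0, total)
    cumulative = 0.0
    for index, value in enumerate(fitness):
        cumulative += value
        if pick < cumulative:
            return index
    return len(fitness) - 1  # guard against floating-point round-off


# Toy usage: the second individual owns three quarters of the wheel.
rng = random.Random(0)
draws = [roulette_wheel_select([1.0, 3.0], rng) for _ in range(1000)]
print(draws.count(0), draws.count(1))
```

Two parents drawn this way would then undergo crossover with probability 0.9, and each gene of the offspring would mutate with probability 0.01, following the settings reported above.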

The I.E estimated for a sensor configuration δ using Eq. 14 is used to determine the Information Entropy Index (I.E.I) as given in Eq. 15. I.E.I is a measure of uncertainty in the estimate of parameters relative to the uncertainty obtained for a reference configuration.

where I.E.I = Information Entropy Index, \({\varvec{h}}\left( {{\varvec{\delta}},{\varvec{\theta}},{\varvec{\sigma}}} \right)\) = information entropy for sensor configuration \({\varvec{\delta}}\), \({\varvec{h}}\left( {\varvec{\delta}}_{{{\mathbf{ref}}}} ,{\varvec{\theta}}_{0} ,{\varvec{\sigma}}_{0} \right)\) = information entropy for the reference configuration (\({\varvec{\delta}}_{{{\mathbf{ref}}}}\)), \({\varvec{Q}}\left( {{\varvec{\delta}},{\varvec{\theta}}} \right)\) = Fisher Information for sensor configuration (\({\varvec{\delta}}\)), and \({\varvec{N}}_{{\varvec{\theta}}}\) = number of modal parameters. In the reference configuration, the sensors are considered to be placed at all the nodes. Thus, I.E.I acts as a measure of the effectiveness of each adopted sensor configuration (δ).
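In the information entropy literature, the asymptotic entropy is governed, up to terms that do not depend on the sensor configuration, by the log-determinant of the FIM. Assuming that form, and assuming the index is defined as the ratio of the corresponding uncertainty volumes, the two relations would look like this (a hedged reconstruction; \(c(N_{\theta}, \sigma)\) is a placeholder for the configuration-independent terms, whose exact form should be taken from the cited references):

\[
h(\delta, \theta, \sigma) \approx c(N_{\theta}, \sigma) - \tfrac{1}{2} \ln \det Q(\delta, \theta), \qquad
\mathrm{I.E.I}(\delta) = \sqrt{ \frac{ \det Q(\delta_{ref}, \theta_{0}) }{ \det Q(\delta, \theta_{0}) } }
\]

With this form, I.E.I equals 1 for the reference configuration and grows as a configuration becomes less informative, which is consistent with lower values indicating a better layout.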

The detailed derivation of Eqs. 14 and 15 can be found in reference [6]. The flowchart for the implementation of G.A in MATLAB is shown in Fig. 2.

Particle Swarm Optimization

Particle Swarm Optimization (P.S.O) is a nature-inspired algorithm developed by Eberhart and Kennedy in 1995 [21]. The method mimics the schooling of fish or the flocking of birds, in which no supervisor or central control gives orders on how to behave in the group. It is based on two concepts: self-organization and division of labour. It uses a number of agents (particles) forming a swarm that moves around the search space looking for the best solution. Each particle is treated as a point in N-dimensional space that adjusts its “flying condition” according to its own experience (p Best) as well as the experience of other particles (g Best), where p Best represents the personal best position and g Best represents the global best position. P.S.O finds the solution iteratively. The method randomly generates a swarm of particles, and every particle represents a solution in the N-dimensional search space, where N is the number of variables to be optimized.

The status of the ith particle is described by the following two vectors, as represented in Fig. 3:

(i) Current position \({\varvec{X}}_{{\varvec{i}}} \left( {\varvec{t}} \right) = {\varvec{x}}_{{{\varvec{i}}1}} ,{\varvec{x}}_{{{\varvec{i}}2}} , \ldots ,{\varvec{x}}_{{{\varvec{in}}}}\)

(ii) Flight velocity \({\varvec{V}}_{{\varvec{i}}} \left( {\varvec{t}} \right) = {\varvec{v}}_{{{\varvec{i}}1}} ,{\varvec{v}}_{{{\varvec{i}}2}} , \ldots ,{\varvec{v}}_{{{\varvec{in}}}}\)

Figure 3 shows the P.S.O terminologies.

In every iteration, the particle moves to a new position and finds its p Best. The updated velocity and position of each particle are obtained from Eqs. (16) and (17).

where \({\varvec{v}}_{{\varvec{i}}} \left( {\varvec{t}} \right)\) = current velocity of particle i, \({\varvec{x}}_{{\varvec{i}}} \left( {\varvec{t}} \right)\) = current position of particle i, \({\varvec{w}}\) = inertia weight factor at the tth iteration, \(r_{1}, r_{2}\) = two independent random numbers between 0 and 1, and \(c_{1}, c_{2}\) = learning rates treated as constants.
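This symbol list matches the standard inertia-weight P.S.O update, \(v_{i}(t+1) = w\,v_{i}(t) + c_{1} r_{1} \left( pBest_{i} - x_{i}(t) \right) + c_{2} r_{2} \left( gBest - x_{i}(t) \right)\) and \(x_{i}(t+1) = x_{i}(t) + v_{i}(t+1)\), which is the form assumed in the sketch below. The function name and the default parameter values are hypothetical. For sensor placement, the continuous positions would still have to be mapped to discrete degree-of-freedom indices, a step that is not shown.

```python
import random
from typing import List, Optional, Sequence, Tuple


def pso_step(
    x: Sequence[float],       # current position of one particle
    v: Sequence[float],       # current velocity of the particle
    p_best: Sequence[float],  # personal best position of the particle
    g_best: Sequence[float],  # global best position of the swarm
    w: float = 0.7,           # inertia weight (assumed value)
    c1: float = 1.5,          # cognitive learning rate (assumed value)
    c2: float = 1.5,          # social learning rate (assumed value)
    rng: Optional[random.Random] = None,
) -> Tuple[List[float], List[float]]:
    """Return the updated (position, velocity) of a single particle."""
    rng = rng or random.Random()
    new_x: List[float] = []
    new_v: List[float] = []
    for xi, vi, pi, gi in zip(x, v, p_best, g_best):
        # Fresh random factors are drawn for every dimension in this variant.
        r1, r2 = rng.random(), rng.random()
        vel = w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi)
        new_v.append(vel)
        new_x.append(xi + vel)  # the position moves by the updated velocity
    return new_x, new_v


# Toy usage: one particle in a two-dimensional search space.
position, velocity = pso_step(
    [1.0, 2.0], [0.0, 0.0], [1.5, 2.5], [3.0, 0.0], rng=random.Random(0)
)
print(position, velocity)
```

After each step, the objective (here the information entropy) would be evaluated at the new position, and p Best and g Best would be updated whenever a lower value is found.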

The objective function chosen for the implementation of P.S.O is Information Entropy (I.E) which is obtained from Eq. 14 explained in section "Genetic Algorithm". Figure 4 represents the flowchart of P.S.O implementation in MATLAB.

Performance evaluation of the optimization method

Three methods of sensor optimization, namely Triaxial Effective Independence, Genetic Algorithm, and Particle Swarm Optimization, were applied to a case study structure, the Cauvery Bridge in Tiruchirappalli city. The Cauvery Bridge was built in 1976 across the River Cauvery and plays an important role in linking the city of Tiruchirappalli with the island of Srirangam. The bridge is deemed a strategically important structure, as it facilitates the movement of raw materials to, and finished products from, major industries that fall under the purview of the public sector undertakings overseen by the Government of India. Figure 5 shows the location of Tiruchirappalli City on the map of India. The motivation for selecting this bridge was the excessive vibration experienced during a routine maintenance inspection by the bridge authority (Trichy Padalur Toll Plaza). It was inferred that the bearing of the girder was leaning; the bearings were later replaced by POT bearings and the bridge was restored to its original condition. The inspection team implements a vibration-based monitoring system for the bridge in order to keep a close watch on its behaviour. For the implementation of the real-time monitoring system, the Science Engineering Research Board (SERB) provided funding for this project under the category of Impacting Research Innovation and Technology (IMPRINT-IIC). As part of pretest planning for the vibration study of the bridge, it was decided to use the finite element analysis (F.E.A) results of the bridge modelled in SAP 2000. The modal information obtained for the bridge was used for the pretest planning of the optimal sensor placement strategy.

Location of Tiruchirappalli City (https://www.google.com)

Description of the structure

The finite element model of the bridge under study was developed in SAP 2000. Table 1 describes the specifications of the structure. The Cauvery bridge is a reinforced concrete T-beam bridge with a total length of 630 m over 15 spans, each acting individually as a simply supported span. Each span is 42 m long and 10.5 m wide and carries two one-way lanes. The bridge is modelled as a reinforced concrete bridge with four T-girders of 1.8 m depth and 130 mm width at 2.5 m spacing, and eight cross-diaphragms of 1.5 m depth and 100 mm width. The bridge deck has a total thickness of 250 mm and provides a 7.5 m wide carriageway. The deck and girders were modelled with shell and beam elements. Figure 6 shows the finite element model of the bridge in SAP 2000.

The results of the modal analysis in SAP 2000 were used to form the Fisher Information Matrix. As sensors cannot be placed inside the structure, only the nodes on the surface of the structure were included in the candidate sensor set. Three cases, with 6, 9, and 12 modes, were considered in forming the Fisher Information Matrix.

Optimization results

Threshold of redundancy for redundancy of information-based optimization

The first step in the methodology is to decide the number of modes to be adopted and the redundancy value that controls the amount of information shared between the sensors. The redundancy value ranges from 0 to 1 (0% to 100%). A value of 1 indicates that two sensors are orthogonal, meaning they do not share any information about the mode shapes. If the redundancy value is set as high as 1, the algorithm stops before reaching the desired number of sensors and many degrees of freedom are deleted from the final sensor set. If it is set low, the algorithm includes many closely spaced positions in the final sensor set, which is likely to lead to sensor clustering. Hence, rather than assuming a redundancy value, the variation in the number of sensors was examined with respect to both the redundancy value and the number of mode shapes considered for identification. From Fig. 7, it can be inferred that the number of sensors required drops drastically at a redundancy value of 0.60 for all the mode sets considered. Hence, the redundancy threshold was adopted as 0.60; for 12 modes, this corresponds to 28 accelerometers being required to conduct the modal test.

In the Triaxial Effective Independence method, the maximum value of the determinant of the FIM was obtained as \(7.55 \times 10^{27}\). The determinant was found to reduce considerably with a reduction in the number of modes and the number of sensors, as shown in Fig. 8. Equations 8 and 9 treat the estimation of modal coordinates as a least squares approximation problem, which involves inversion of the modal matrix. Also, according to Eq. 3, the value of Triaxial Effective Independence depends on the pseudo-inverse of the information contained in the candidate sensor set (\(\overline{{{\varvec{Q}}_{{\varvec{c}}} }}\)), and the reliability of the pseudo-inverse depends on the condition number of the matrix \(\overline{{{\varvec{Q}}_{{\varvec{c}}} }}\). Hence, the numerical stability of this matrix plays a crucial role, and the condition number becomes an important metric.

The condition number is defined as the ratio of the largest singular value to the smallest singular value. It measures the sensitivity of matrix operations to errors in the input. Hence, the lower the condition number, the better the sensor configuration. A condition number close to 1 is a good indicator of invertibility and of linear independence among the columns of the matrix. Figure 9 shows the variation in condition number. Irrespective of the number of modes, the condition number for small numbers of sensors ranges from \(10^{18}\) to \(10^{3}\), indicating that too few sensors produce an ill-conditioned matrix and unreliable results. The number of sensors should therefore be chosen such that the condition number stabilizes close to 1, which helps the analyst to obtain reliable system identification results. In this study, the condition number settled in the range of 3 to 1.5 when the number of sensors exceeded 25, for all three mode sets (6, 9, and 12 modes).

The mean of the trace of the estimation error variance is a measure of the success of modal expansion. The idea of modal expansion is to reconstruct, as exactly as possible, the response of the part of the structure not instrumented with sensors. Thus, Eq. 10, which measures the mean of the trace of the estimation error variance of the unmeasured degrees of freedom, was chosen as the objective function. It can be inferred from Fig. 10 that when the number of sensors increases beyond 25, the mean of the trace stabilizes at 0.17 for 12 observable modes, 0.19 for 9 modes, and 0.20 for 6 modes.
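The determinant and condition-number checks described above can be sketched numerically. The following Python fragment is a minimal illustration, not the implementation used in this study. It assumes NumPy, uses a random placeholder matrix in place of the SAP 2000 mode shapes, and assumes unit, uncorrelated measurement noise so that the FIM of a sensor subset reduces to the product of the transposed and untransposed mode-shape partition. All function names are hypothetical.

```python
import numpy as np

def fim(phi_s):
    """Fisher Information Matrix of a sensor subset (unit noise variance assumed)."""
    return phi_s.T @ phi_s

def condition_number(mat):
    """Ratio of the largest to the smallest singular value."""
    s = np.linalg.svd(mat, compute_uv=False)
    return s[0] / s[-1]

def log10_det_fim(phi_s):
    """log10 of det(FIM); slogdet avoids overflow for values such as 1e27."""
    sign, logdet = np.linalg.slogdet(fim(phi_s))
    return logdet / np.log(10.0) if sign > 0 else -np.inf

# Placeholder data: 200 candidate degrees of freedom, 12 target modes.
rng = np.random.default_rng(0)
n_candidates, n_modes = 200, 12
phi = rng.standard_normal((n_candidates, n_modes))

# Compare a few sensor counts; rows are picked at random for illustration only.
for n_sensors in (12, 16, 28):
    rows = rng.choice(n_candidates, size=n_sensors, replace=False)
    phi_s = phi[rows]
    print(n_sensors, log10_det_fim(phi_s), condition_number(phi_s))
```

Note that the condition number of the FIM itself is the square of the condition number of the mode-shape partition, so the two should not be compared directly. In a real study the rows would come from the optimization procedure rather than from a random draw.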

The Information Entropy Index (IEI), as specified in Eq. 15, was adopted as the parameter for comparing the efficiency of the sensor layouts obtained from the three algorithms (GA, PSO, and Triaxial Effective Independence). Figures 11, 12 and 13 show the sensor layout obtained from each of these methods. In the layouts obtained from GA and PSO, the sensors are clustered in the areas of highest contribution to modal information. When such clustering occurs, the sensors carry a higher proportion of mutual information, so each sensor contributes little individual information. Hence, the layout obtained from Triaxial Effective Independence with the redundancy of information criterion was adopted for conducting the modal test of the bridge.

Information Entropy Index is a measure of difference in the uncertainty in estimation of parameters for a sensor configuration with respect to a reference sensor configuration. Here the reference configuration is the one in which all the nodes are considered to be instrumented.

From Fig. 14, it can be inferred that for every number of observable modes, the IEI values follow the same trend when the number of sensors ranges from 5 to 20. Beyond 20 sensors, however, the IEI values for Triaxial Effective Independence were found to be 22% to 40% lower than those of the conventional GA and PSO methods. The better performance of the proposed method is attributed to the adoption of Sequential Sensor Placement; in the metaheuristic methods, sensor clustering contributed to higher IEI values. For a smaller number of sensors (5 to 20), the IEI values are very high, of the order of \(10^{11}\) to \(10^{3}\), indicating unidentifiability of modes. This can also be related to the high condition number of the FIM, which indicates an ill-conditioned matrix and thus leads to unidentifiability of modes. Thus, a given number of sensors that are spatially well distributed yields more information than a clustered distribution.

Field application of optimized layout

In order to test the efficiency of the sensor layout obtained from the Triaxial Effective Independence method, a vibration study was conducted on the bridge. The vibrations of the Cauvery bridge under prevailing conditions were measured with the following equipment. Eight MEMS triaxial industrial accelerometers were used, each with a sensitivity of 330 mV/g and a frequency range of 0.025 Hz to 200 Hz. Data acquisition was done using the NI cRIO 9040 real-time controller and the NI 9234 I/O voltage module. A LabVIEW FPGA-based graphical programme was installed in the cRIO for executing data acquisition and processing. The cRIO can operate in real time as a standalone controller for data acquisition, processing, and release of alert protocols.

Cabled instrumentation was used for the field test. The triaxial accelerometers were attached to a portable dynamic signal conditioning module (which can function in stand-alone mode for 72 h) using coaxial cables with Bayonet Neill-Concelman (BNC) connectors. The latter was in turn connected to the real-time controller (NI cRIO 9040), as shown in Fig. 15. The manufacturer confirmed that the long cables used caused minimal loss of data. The signal was sampled at 500 samples per second during testing, and a Butterworth band-pass filter with a range of 0.2 to 30 Hz was applied.

The accelerometers were mounted on a mild steel plate using a magnetic mount. The plate was fastened to the concrete surface using a strong epoxy adhesive (3M DP 420) to obtain good bonding. The instrumentation included both roving and stationary accelerometers: the study required 28 accelerometer positions but only 8 accelerometers were available, so 4 were kept stationary and the remaining 4 were roved. Data were acquired for a time period of 45 min. After every 45 min, the vibration data were saved in TDMS file format, and the same file was accessed by the data analysis program to obtain the modal information. This method was adopted to facilitate real-time processing of the vibration data.
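The band-pass step can be reproduced offline for checking recorded data. The following is a minimal Python sketch assuming NumPy and SciPy; it is not the LabVIEW implementation used in the field test. Only the sampling rate (500 samples per second) and the pass band (0.2 to 30 Hz) come from the text. The filter order, the zero-phase forward-backward filtering, and the synthetic test signal are assumptions made for illustration.

```python
import numpy as np
from scipy import signal

FS = 500.0          # sampling rate in samples per second (from the text)
BAND = (0.2, 30.0)  # pass band in Hz (from the text)

def bandpass(x, fs=FS, band=BAND, order=4):
    """Zero-phase Butterworth band-pass; the order of 4 is an assumed value."""
    # Second-order sections stay numerically stable for the very low 0.2 Hz edge.
    sos = signal.butter(order, band, btype="bandpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos, x)

# Synthetic record: a 2.5 Hz in-band component plus 100 Hz out-of-band noise.
t = np.arange(0.0, 60.0, 1.0 / FS)
clean = np.sin(2.0 * np.pi * 2.5 * t)
raw = clean + 0.5 * np.sin(2.0 * np.pi * 100.0 * t)
filtered = bandpass(raw)

# Compare away from the record ends, where start-up transients decay slowly.
mid = slice(int(20 * FS), int(40 * FS))
rms_error = np.sqrt(np.mean((filtered[mid] - clean[mid]) ** 2))
print(rms_error)
```

Forward-backward filtering is convenient for offline checks because it introduces no phase shift, but it is not causal; a real-time controller would have to use a causal filter instead.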

Thus, whenever there is a shortage in the availability of sensors, the method of roving of sensors can be adopted. In this method, at least two accelerometers must be kept as stationary and the rest can be roved to the desired number of points.
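The bookkeeping for a roving test can be sketched as follows. This is a plain Python illustration under stated assumptions: the 28 positions are simply numbered 1 to 28, the four stationary (reference) accelerometers are assumed to occupy four of those positions, and the choice of positions 1 to 4 as references is hypothetical. The function name is also hypothetical.

```python
def plan_roving_setups(points, reference_points, n_rovers):
    """Split the non-reference points into setups of at most n_rovers points.

    Every setup keeps the reference sensors in place so that the mode-shape
    segments from different setups can later be scaled to a common reference.
    """
    refs = list(reference_points)
    to_rove = [p for p in points if p not in refs]
    setups = []
    for start in range(0, len(to_rove), n_rovers):
        setups.append(refs + to_rove[start:start + n_rovers])
    return setups

points = list(range(1, 29))  # 28 measurement positions
setups = plan_roving_setups(points, reference_points=[1, 2, 3, 4], n_rovers=4)

for i, setup in enumerate(setups, start=1):
    print(i, setup)
print(len(setups), "setups")  # 6 setups
```

Under these assumptions, 24 positions remain to be covered by 4 rovers, which gives 6 setups; at 45 min per record this amounts to 270 min of acquisition, excluding the time needed to move the sensors.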

Criteria for evaluation

In order to assess the quality of the sensor configuration, the results need to be evaluated against certain criteria. Three criteria were considered in this study. (i) Determinant of the Fisher Information Matrix (FIM): the determinant is inversely related to the variance of the estimated modal coordinates. Thus, the higher the determinant, the lower the variance. It is a quantitative representation of the modal information carried by the sensors. (ii) Condition number of the eigen matrix: the condition number is defined as the ratio of the largest singular value to the smallest singular value. It measures the sensitivity of matrix operations to errors in the input. Hence, the lower the condition number, the better the sensor configuration. (iii) Modal Assurance Criterion (MAC): MAC is a measure of the correlation, or degree of similarity, between two mode shapes or modal vectors. The MAC value ranges between 0 and 1, and a good configuration should have small off-diagonal elements. Therefore, the maximum off-diagonal term was used as the indicator for assessment.

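In its standard form, the MAC is computed as

\(\mathrm{MAC} = \frac{{\left| {\left\{ {\emptyset_{n}^{e} } \right\}^{T} \left\{ {\emptyset_{n}^{a} } \right\}} \right|^{2} }}{{\left( {\left\{ {\emptyset_{n}^{e} } \right\}^{T} \left\{ {\emptyset_{n}^{e} } \right\}} \right)\left( {\left\{ {\emptyset_{n}^{a} } \right\}^{T} \left\{ {\emptyset_{n}^{a} } \right\}} \right)}}\)

where \(\left\{ {\emptyset_{n}^{e} } \right\}\) indicates the mode shapes from experiments and \(\left\{ {\emptyset_{n}^{a} } \right\}\) stands for the mode shapes obtained by numerical prediction.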
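The MAC check can be sketched in a few lines of Python. The snippet assumes NumPy and the standard MAC definition (squared inner product of two mode-shape vectors, normalised by the product of their squared norms). The mode shapes are synthetic placeholders rather than the measured or SAP 2000 shapes, and the function names are hypothetical.

```python
import numpy as np

def mac_matrix(phi_e, phi_a):
    """MAC between every column of phi_e (experimental) and phi_a (analytical)."""
    num = np.abs(phi_e.conj().T @ phi_a) ** 2
    den = np.outer(np.sum(np.abs(phi_e) ** 2, axis=0),
                   np.sum(np.abs(phi_a) ** 2, axis=0))
    return num / den

def max_off_diagonal(mac):
    """Largest off-diagonal entry of a square MAC matrix."""
    off = mac - np.diag(np.diag(mac))
    return float(off.max())

# Placeholder analytical shapes: three sine modes sampled at 28 points.
x = np.linspace(0.0, 1.0, 30)[1:-1]
phi_a = np.column_stack([np.sin(k * np.pi * x) for k in (1, 2, 3)])

# Placeholder "experimental" shapes: rescaled analytical shapes plus small noise.
rng = np.random.default_rng(0)
phi_e = 2.0 * phi_a + 0.05 * rng.standard_normal(phi_a.shape)

mac = mac_matrix(phi_e, phi_a)
print(np.diag(mac))           # diagonal terms, close to 1 for matching modes
print(max_off_diagonal(mac))  # small when the modes are well separated
```

The MAC is insensitive to the scaling of either vector, which is why experimental shapes with arbitrary normalisation can be compared directly with the finite element shapes.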

Field test results

As discussed in section "Field application of optimized layout", a field test was conducted to validate the efficiency of the optimization strategy and of the LabVIEW data analysis program installed in the cRIO to facilitate real-time monitoring. The experimental natural frequencies were obtained using Operational Modal Analysis (OMA). Three OMA methods were used, namely Covariance-driven Stochastic Subspace Identification (Cov-SSI), Frequency Domain Decomposition (FDD), and Least Squares Complex Exponential (LSCE). There was good agreement between the analytical and experimental results, with a maximum variation of 10.13% for modal frequencies and 8.55% for the damping ratios, as shown in Table 2. As an additional evaluation criterion, the MAC matrix was obtained for the 6 modes observed; the highest off-diagonal value was 0.19. The graphical representation of the MAC matrix obtained using the SSI method is shown in Fig. 16. From the figure, it is evident that the diagonal values fall in the range of 0.97 to 0.99, indicating a good degree of consistency between the mode shapes.

The low values of the off-diagonal elements of the MAC matrix indicate linear independence among the mode shapes. Thus, it can be confirmed that the deflection shapes obtained from Operational Modal Analysis are mode shapes of the structure and not Operational Deflection Shapes (ODS). If the shapes were ODS, the majority of the off-diagonal elements would have considerable values. Hence, the adopted sensor layout has contributed to the detection of the modal properties of the structure under study. From the field test, six modal frequencies were identified. The inability to identify other modes may be attributed to insufficient ambient excitation, since service loads were used as the excitation force for the modal test. During data acquisition, the ambient loads were found to excite only these six modes.

Conclusions

In this study, the feasibility of sensor optimization for practical application to a bridge structure in India has been studied. Sensor optimization was given major emphasis because, in a developing country like India, Structural Health Monitoring (SHM) applications on structures are still at an embryonic stage and there is a paucity of funds for establishing SHM systems. Such situations call for optimization of sensors. The study also explains the method of implementing a vibration-based Structural Health Monitoring system for bridges in India. For the optimization strategy, the modal analysis results obtained from finite element analysis of the bridge structure in SAP 2000 were utilized. The mode shapes obtained were used to form the Fisher Information Matrix. Three methods of optimization were considered, namely Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Triaxial Effective Independence (TEI). The Triaxial Effective Independence method relies on maximizing the determinant of the FIM, thus ensuring linear independence among the columns of the matrix. This linear independence influences the accuracy of modal parameter estimation. In the Triaxial Effective Independence method, an additional feature, redundancy of information, was adopted as a constraint. The constraint is used to avoid sensor clustering and to ensure spatial distribution. From the optimization result based on the Triaxial Effective Independence and redundancy of information (RI) criteria, it was inferred that 28 accelerometers would be required to observe 12 modes for a redundancy value of 0.60. Additional parameters were measured to evaluate the efficacy of the number of accelerometers obtained. As the estimation of modal coordinates was modelled as a least squares estimation problem, the invertibility of the modal matrix had to be examined.
Thus, the variation in the condition number with increasing number of sensors was evaluated. It was found that when the number of sensors increased beyond 25, the condition number stabilized at a value of 1.5. For evaluating the efficacy of modal expansion, the mean of the trace of the estimation error variance of the unmeasured degrees of freedom was chosen, as modal expansion aims to reconstruct the responses of the structural elements not instrumented with sensors so that they match the actual response under excitation. Here the mean of the trace was found to converge to 0.17 for 12 observable modes, 0.19 for 9 modes, and 0.20 for 6 modes. The layouts obtained from GA, PSO, and TEI were then compared on the basis of the Information Entropy Index (IEI). The layout based on the TEI criterion was found to result in the lowest IEI value and was therefore adopted for the field test. The field test was conducted using the real-time data acquisition and analysis software developed in LabVIEW and installed in a real-time controller. The modal parameters were obtained by operational modal analysis, in which in-service loads are considered as the excitation forces. Six modes of vibration of the structure were identified. There was good agreement between the analytical and experimental results, with a maximum variation of 10.13% for modal frequencies and 8.55% for the damping ratios. For comparing the mode shapes, the Modal Assurance Criterion (MAC) was employed. The diagonal elements were found to be in the range of 0.97 to 0.99 and the off-diagonal elements between 0.00 and 0.19, thus indicating linear independence.

Availability of data and materials

Data and information will be provided upon reasonable request.

References

Rainieri C, Fabbrocino G (2014) Operational modal analysis of civil engineering structures, an introduction and guide for applications. Springer, New York. https://doi.org/10.1007/978-1-4939-0767-0

Kammer DC (2005) Sensor set expansion for modal vibration testing. Mech Syst Sig Proces 19:700–713. https://doi.org/10.1016/j.ymssp.2004.06.003

Nieminen V, Sopanen J (2023) Optimal Sensor Placement for triaxial accelerometers for modal expansion. Mech Syst Sig Process 184:1–21. https://doi.org/10.1016/j.ymssp.2022.109581

Shah P, Udwadia FE (1978) A methodology for optimal sensor location for identification of dynamic systems. J Appl Mech 45:188–196

Udwadia FE (1994) Methodology for optimal sensor locations for parametric identification in dynamic systems. J Eng Mech 120(2):368–390

Papadimitriou C (2004) Optimal sensor placement for parametric identification of structural systems. J Sound Vib 278:923–947. https://doi.org/10.1016/j.jsv.2003.10.063

Papadopolous M, Garcia E (1998) Sensor placement methodologies for dynamic testing. AIAA J 36:256–263

Meo M, Zumpano G (2005) On the optimal sensor placement technique for a bridge structure. Eng Struct 27:1488–1497. https://doi.org/10.1016/j.engstruct.2005.03.015

Ghung T, Moore D (1993) On-orbit sensor placement and system identification of space station with limited instrumentation. Proceedings of 11th International Modal Analysis Conference

Li DS, Li HN, Fritzen CP (2009) A note on fast computation of effective independence through QR downdating for sensor placement. Mech Syst Sig Proces 23:1160–1168. https://doi.org/10.1016/j.ymssp.2008.09.007

Vincenzil L, Simonini L (2017) Influence of model errors in optimal sensor placement. J Sound Vib 389:119–133. https://doi.org/10.1016/j.jsv.2016.10.033

Jaya MM, Ceravolo R, Fragonara LZ, Matta E (2020) An optimal sensor placement strategy for reliable expansion of mode shapes under measurement noise and modelling error. J Sound Vib 487:1–23. https://doi.org/10.1016/j.ymssp.2011.05.019

Zhang CD, Xu YL (2016) Optimal multi-type sensor placement for response and excitation reconstruction. J Sound Vib 360:112–128. https://doi.org/10.1016/j.jsv.2020.115511

Kulla J (2019) Bayesian virtual sensing in structural dynamics. Mech Syst Sig Proces 115:479–513. https://doi.org/10.1016/j.ymssp.2018.06.010

Stephan C (2012) Sensor placement for modal identification. Mech Syst Sig Proces 27:461–470. https://doi.org/10.1016/j.ymssp.2011.07.022

Kim T, Youn B, Oh H (2018) Development of a stochastic effective independence (SEFI) method for optimal sensor placement under uncertainty. Mech Syst Sig Proces 111:615–627. https://doi.org/10.1016/j.ymssp.2018.04.010

Papadimitriou C, Lombaert G (2012) The effect of prediction error correlation on optimal sensor placement in structural dynamics. Mech Syst Sig Proces 28:105–127. https://doi.org/10.1016/j.ymssp.2011.05.019

Ngoc TH, Khatir S, De Roeck G, Nguyen-Ngoc L, Wahab MA (2018) Model updating for Nam O bridge using particle swarm optimization algorithm and genetic algorithm. Sensors 18:1–20. https://doi.org/10.3390/s18124131

Lenticchia E, Ceravolo R, Antonaci P (2018) Sensor placement strategies for the seismic monitoring of complex vaulted structure of the modern architectural heritage. Shock Vib 2018:1–14. https://doi.org/10.1155/2018/3739690

McCall J (2005) Genetic algorithms for modelling and optimisation. J Comput Appl Math 184:205–222. https://doi.org/10.1016/j.cam.2004.07.034

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: Proceedings of the Sixth International Symposium, Nagoya, Japan, pp 39–43

Acknowledgements

This research work received financial support from the Science Engineering Research Board (SERB) under the category IMPRINT IIC, and from National Instruments, Bangalore; VI Solutions Pvt. Ltd, Bangalore; and Optithought Pvt. Ltd, Chennai.

Author information

Contributions

AS took part in methodology development, LabVIEW and MATLAB programming, field testing, data collection and analysis, and writing. NR was involved in writing, review, and editing. GG took part in writing, technical reviewing, methodology development, and editing.

Ethics declarations

Conflict of interest

The authors would like to declare that there is no competing interest in the results and data of this investigative research study.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

For this type of study, no informed consent is required.

About this article

Cite this article

S., A., Radhakrishnan, N. & George, G. Multiple criteria-based sensor optimization for Structural Health Monitoring of civil engineering structures. Innov. Infrastruct. Solut. 9, 188 (2024). https://doi.org/10.1007/s41062-024-01456-y

DOI: https://doi.org/10.1007/s41062-024-01456-y