Abstract

In big data science, classic frequent pattern mining is fundamental to various pattern mining applications. Extensive research on it has been undertaken for nearly 30 years, yet no reliable mining approach has emerged. One of the main issues is the lack of study on the imperative pattern frequency distribution theory. With an emphasis on mining reliability and methodological change, this paper supplies the absent theory, which consists of a bundle of findings on the frequency distribution properties. The primary property is that the frequency distribution curves from different pattern generation modes are quasi-concave and ultimately become bell-shaped over large datasets. All the findings are derived with no exogenous input but through rigorous mathematical proofs, which every classic pattern mining approach should observe. This paper thus builds a solid block of the theoretical foundation for rational and ultimately reliable pattern mining. Moreover, the findings inspire rethinking and new conceptions not merely in pattern mining but also in set theory and combinatorics. With this inspiration and the purely mathematical nature of the explorations presented, the contributions of this study may not be restricted to pattern mining but may extend to data science in general or even more broadly.


1 Introduction

The classic frequent pattern mining starting from the itemset mining [1] is a fundamental technology to retrieve information from various classic datasets. Its applications include general-purpose association-rule mining [2, 3] or causality mining [4, 5], which in turn are foundations for diverse domain-specific mining, such as medical [6,7,8], biological [9, 10], chemical [11,12,13], and genomic mining [14,15,16]. Meanwhile, the basic concepts and methods of the classic mining are the starting points of the mining over nonclassical datasets, e.g., the stream data [17, 18], uncertain data [19, 20], or heterogeneous data [21, 22].

As such, thousands of research articles related to classic pattern mining have been published over a quarter of a century, most of them focusing on algorithm design and implementation in pursuit of mining efficiency. However, none of the previous mining approaches is reliable, as proved in the first stage of the author’s study [23]. One reason for the unreliability is that the field has produced mostly algorithms rather than well-established mining theories, owing to an apparent convention in the pattern mining research community that requires empirical results to prove one’s mining approach. In the present author’s experience, many journals explicitly or implicitly refuse theoretical articles on pattern mining that lack empirical results. Such a policy hinders deep theoretical study, so that no generally accepted mining reliability theory has been established in the literature to date.

Requiring empirical results to verify an approach is important, but only if the results are reliable, and that has not been the case in pattern mining so far. Notice first that, for an arbitrarily given dataset, we do not know beforehand how many patterns exist in it or what they are. Without a reliability theory, on what basis can we verify and trust a mining result as reliable? The convention thus loses its force: without reliability verification, the authors of an article merely use empirical results produced by their own algorithm to prove the advantages they claim for that algorithm. Such a proof is only a “circular proof,” yet few people have noticed the phenomenon or wanted to change it.

This situation implies that researchers have been eager to press ahead with practical mining without much attention to the soundness of the mining theory, and thus to reliability, or that they have seen critical issues only in mining efficiency and not in mining theory. In contrast, the author’s research has proved, and will further prove, that the unreliability of previous mining approaches stems from the theoretical fallacies they embody. It must be emphasized that an unreliable mining approach is valueless or even harmful, however efficient it is, because the unreliable information it retrieves may lead to a misunderstanding of the world or even to costly wrong decisions.

Given the above, the issue becomes how to undertake the reliability study. The author finds that some more fundamental issues must be fixed first. Without a proper reliability theory, we cannot determine whether a mining approach is reliable; conversely, we can tell that it is unreliable if its mining results are irrational. That is, a mining approach must first be rational before it can be reliable. The author’s previous study proved the irrationality of the mining results of all previous approaches through two critical issues, the probability anomaly and the violation of the equilibrium condition [23], which are briefly reviewed in the next section.

Another major theoretical omission is the pattern frequency distribution theory. Without it, there is no rule to guide and check whether the central work, namely finding the result patterns and their respective frequencies over a dataset, is well done. Accordingly, the main contribution of this paper is the establishment of the pattern frequency distribution theory to meet this need. This theory, the last study summarized in Sect. 2.2, and the theory underlying the selective mining approach (left as future work) are the imperative blocks of the theoretical foundation for the expected rational and ultimately reliable pattern mining.

The second contribution is to hasten the realization of reliable pattern mining through a change of objectives and methodology. The first change is to prioritize mining rationality and reliability over efficiency: an efficient approach is significant only if it achieves reliability. This requires a second change, which is to put theoretical study ahead of empirical mining techniques and algorithms. The third is to pay more attention to intrinsic data properties than to exogenous input in the theoretical pursuit. Although previous works have developed some mining theories, those theories are mostly approach-dependent and often rely on exogenous inputs. They are thus not generally applicable and are at least immature, since no reliable mining approach has grown from them yet. The studies presented in this paper and in the last study derive wholly from the intrinsic nature of the dataset, with no exogenous input but with rigorous mathematical proofs that every classic pattern mining approach should observe.

Another contribution is extensiveness and enlightenment. The findings presented in this paper are purely mathematical derivations. As in many cases, a purely mathematical formulation may not find immediate use, but sooner or later its usefulness may be found, even in different subjects or fields. This study thus means to open a new way to solve the pattern mining problem fully mathematically and, as will be seen, inspires some rethinking and new conceptions, not merely in pattern mining itself but also in set theory and combinatorics. This inspiration and the purely mathematical character of the work may attract more attention to and interest in pattern mining, big data science in general, or even broader fields. More explicitly, just as a pattern consists of singleton elements, the substances of our world comprise quantum particles in modern physics. If we could solve the pattern mining problem mathematically, why could we not find a similar way to understand the world at large, for instance in the search for new genes or the innovation of new materials?

The rest of this paper is structured as follows. After a summary of the drawbacks of previous works on frequent pattern mining in Sect. 2, Sect. 3 presents the properties of raw pattern frequency distributions under the full enumeration pattern generation regime. Section 4 covers the properties of the distributions in the reduced pattern generation mode, including their empirical verification, followed by a brief discussion in Sect. 5 and then the conclusion.

2 The mining problem and previous work

Classic pattern mining originated as itemset mining [1], one of the earliest problems studied in data mining. The dataset used for the mining, such as Table 1, is abstracted from market transactions and is typical of those presented in previous research articles. The details of the nature of the dataset and of the mining problem are presented below.

2.1 The terminology and the mining model

Table 1 is a running example of the classic dataset to be used in this paper and named as DBo. The table has u rows and two columns, where column VID represents an application domain \(\varOmega \) of n distinct elements, while TID means the key attribute in database notation. Each row is a tuple with a tuple ID, \(T_i \) \((i = 1, 2, ..., u)\). The \(V_i\) \( (i = 1, 2, ..., n)\) in each cell of column VID means a value from \(\varOmega \). For example, in a market itemset mining problem, a TID could represent a transaction ID, while a \(V_i\) indicates an “item” from the domain \(\varOmega \) of merchandise. A combination of k distinct \(V_i\)s is named as a pattern \(Z_k = (V_iV_j...V_s)\) of length k. The process to enumerate the patterns is called pattern generation.

In statistics terminology, the dataset DBo is a sample of the real-world application at hand. The cardinality u of DBo is the sample size; a record (tuple) is an original observation, or a realized event of the sampling [24], hence a subset of \(\varOmega \). A TID can be taken as a sample label or trial ID, and column VID refers to the set of events [25].

In addition to the above attributes, Table 1, called a “classic dataset,” is abstract and characterized by the following [23]:

(a) The classical data nature: each \(V_i\) is of the same nature of the element in set theory. That is, \(V_i\) is unique and atomic (indivisible) in a tuple.

(b) The dataset is de-semantic, where each \(V_i\) is expressed as a discrete (ID) number or a keyword to represent an object (element or item).

(c) The dataset is static.

(d) No random walk in the dataset is presumed in previous work since there is no pre-knowledge to assume which tuple or which element (item) to be so.

Based on the above introduction of the dataset, the fundamental pattern mining problem can be stated as below:

Problem 1

Output all patterns of the elements of any length k \((k > 0)\) from the universe \(\varOmega \) given in DBo, such that the frequentness \(s_z\) of a pattern Z satisfies \(s_z \ge s_{min}\).

Conventionally, \(s_z\) is called the “support” of pattern Z, and \(s_{min}\) is a user-predefined frequentness threshold. \(s_z\) is defined as [1, 2]:

\[ s_z = s(Z) = \frac{S(Z)}{u} \qquad (2.1) \]

where S(Z), alternatively noted as \(S_z\) or F(Z) in the literature, is the number of occurrences of a pattern Z, called the “absolute support” or “absolute frequency” of Z over the database; \(u = |DBo|\) is the total number of tuples, i.e., the cardinality of DBo. For instance, from Table 1, \(S(V_1V_2V_3) = 2\), \( S(V_1V_2) = 3\), and \(s(V_1V_2) = \nicefrac {3}{10} = 0.3\).

Problem 1 and the related dataset form the classic mining problem. It acts as the simplest and hence fundamental pattern mining model. A sound solution for the model is thus of particular importance, since only after the simplest mining problem has been well solved can we properly proceed to more complex mining problems. However, no reliable mining approach has been developed despite the many research works published. One may naturally ask why. The introduction above has answered the question in principle, while the following subsection presents further explanations.

2.2 The previous works and their drawbacks

As a continuation of the author’s previous work [23] (“the last study,” hereafter), which reviewed some known previous mining approaches, this paper does not repeat such a review, since it is not about specific mining approaches. A more important reason is that all previous approaches are unreliable, so a summary of the causes of the unreliability, given below, is adequate. Readers who want to know more about the previous approaches can refer to several surveys [1, 2, 26, 27].

The last study investigated two fundamental issues of previous works. The first is the ill-formed support measure (\(s_z\)), whose summation \(\sum (s_z)\) is much larger than 1 in an application, which constitutes a serious “probability anomaly.” The second is the full enumeration pattern generation mode, which produces an excessive number of patterns in any mining application. Together, the two lead to crucial overfitting issues in previous approaches, where overfitting means that a spurious pattern is falsely taken to be a real frequent one.

The last study starts with a comparison between the concerned pattern mining problem and the classic frequency-based probability problem, where each row (tuple) of the sample dataset used stores only one event (pattern), with an assumption that there is no correlation between any two observations. As such, the accumulative event frequency equals the cardinality of the dataset u, and the frequentness (probability) of each event Z, f(Z), equals the ratio of its total occurrences F with u, that is, \(f(Z) = F(Z)/u\), which indeed is the origin of the s(Z) stated in (2.1).

Now, in pattern mining, the only difference is that each tuple of the dataset used, such as Table 1, may hold multiple patterns. If we separate those patterns from each tuple and store each pattern in a single tuple of a virtual dataset (DBv), and suppose the cardinality of DBv thereby increases from DBo’s u to w, then the frequentness of a pattern expressed in (2.1) should be changed into:

\[ s'_z = \frac{S(Z)}{w} \qquad (2.2) \]

which not only exposes the cause of the probability anomaly and overfitting problems but also remedies them. From (2.1) and (2.2), \(r_s = s_z/ s'_z= w/u \gg 1\) in real applications, which causes \(\sum s_z \gg 1\), and the probability anomaly arises. Meanwhile, \(s_z\) greatly inflates the real frequentness of Z, and overfitting follows. \(r_s\) is thus named the primary overfitting ratio. In the running example of Table 1, \(\sum s_z > 11\), and the larger the dataset, the more severe the probability anomaly and the overfitting.
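The effect of the two denominators can be illustrated with a minimal sketch. It assumes that (2.1) divides the absolute frequency by u and that (2.2) divides it by w, as the ratio \(r_s = w/u\) implies. The three-tuple dataset and the names `raw_frequencies`, `support_sum`, and `adjusted_sum` are hypothetical and are not taken from Table 1.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations


def raw_frequencies(dbo):
    """Count every non-empty sub-pattern of every tuple (full enumeration)."""
    freq = Counter()
    for tup in dbo:
        items = sorted(tup)
        for k in range(1, len(items) + 1):
            for pattern in combinations(items, k):
                freq[pattern] += 1
    return freq


# Hypothetical toy dataset (NOT Table 1 of the paper).
dbo = [{"a", "b", "c"}, {"a", "b"}, {"c"}]
freq = raw_frequencies(dbo)

u = len(dbo)             # cardinality of the original dataset
w = sum(freq.values())   # cardinality of the virtual dataset under full enumeration

support_sum = sum(Fraction(f, u) for f in freq.values())   # conventional supports
adjusted_sum = sum(Fraction(f, w) for f in freq.values())  # frequentness with w

print(u, w, support_sum, adjusted_sum)
```

On this toy input the conventional supports sum to w/u, which exceeds 1, whereas the frequentness values computed with w sum to exactly 1.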

Another cause of the overfitting problem is the mode used to separate the patterns from each tuple of a dataset. Conventional approaches generally use, or are based on, the full enumeration mode, which uses each element of a tuple repeatedly to generate every possible pattern, but this mode is not feasible in classic pattern mining. The reason is that, as listed in Sect. 2.1, each element (item) in a tuple is a singleton, unique and indivisible, as reflected in the calculation of pattern length and tuple length. An element therefore cannot be used more than once to form different patterns, since no element can belong to more than one pattern within the same tuple; notice also that the dataset is already historical and static.

Meanwhile, the “downward closure property,” which says any super-pattern could not be more frequent than its sub-patterns, has almost been taken as a golden rule and widely used in previous approaches. However, this property is not intrinsic to any dataset but only a phenomenon of the full enumeration mode. The profound reason for this property is that shorter patterns recapture some frequencies of their super-patterns. It thus leads to the biased frequentness evaluation toward short against long patterns and biases toward generated against originally observed ones.

Previous researchers have indeed sensed the problem of too many resultant patterns and proposed different reduction approaches to solve it, for instance, using “interestingness” [28] or “weighted” [29] measures and the like to modify the conventional \(s_z\). These measures, especially the former, are rather complex with various exogenous inputs and hence not effective. Another example is the use of a “concise” or “condensed” result set, such as the “maximal” or “closed” [30] pattern set, to represent the whole mining result set, but these approaches are still based on the full enumeration mode, so that for any given pattern Z they produce the same S(Z) and s(Z). That is, although various solutions were attempted, they did not address the real problems described above and thus could not work well.

Instead, the solution proposed by the last study is the selective mining mode. This mode means a partition of the elements of each tuple. Each part of the partition then forms a pattern. The key is in how to select a proper partition for each tuple. That is how the mode is named so. This mode comes out from systematic analysis and the establishment of several theorems. The proposal first introduces the “equilibrium condition” to guide and quantify the mining.

The primary equilibrium condition states that, for each tuple, the count \(C(e_i)\) of any given element \(e_i\) in its result pattern set cannot be more than the count \(S(e_i)\) of the same element in that tuple. That is,

\[ C(e_i) \le S(e_i) \qquad (2.3) \]

In aggregate, the sum of the lengths of all the resulting patterns cannot be more than the sum of the lengths of all the tuples of the entire dataset. That is,

\[ \sum _{i} |Z_i| \le \sum _{j} b_j \qquad (2.4) \]

where \(Z_i\) is the ith pattern in the pattern set, and \(b_j\) is the length of tuple j; both i and j are cardinal numbers.

In the initial stage of mining, because every element stands equally (the 4th feature of the classic dataset, refer to the previous subsection), the above two formulas take strict equality. A notice here is that (2.3) implies (2.4), but not vice versa.
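As a minimal sketch of the per-tuple condition, the check below counts how often each element is used across the patterns generated from one tuple and compares that with its count in the tuple. The function name `equilibrium_ok` and its `strict` flag are hypothetical; the sketch assumes the condition is the element-wise inequality described above, with strict equality corresponding to a partition of the tuple.

```python
from collections import Counter


def equilibrium_ok(tup, patterns, strict=False):
    """Check the per-tuple equilibrium condition for a candidate pattern set.

    tup      -- the elements of one tuple (each element occurs once)
    patterns -- the patterns claimed to be generated from that tuple
    strict   -- if True, require equality (every element used exactly once)
    """
    available = Counter(tup)                          # S(e_i) in this tuple
    used = Counter(e for p in patterns for e in p)    # C(e_i) in the pattern set
    if strict:
        return used == available
    return all(used[e] <= available[e] for e in used)


tup = ("V1", "V2", "V3")
print(equilibrium_ok(tup, [("V1", "V2"), ("V3",)], strict=True))  # a partition
print(equilibrium_ok(tup, [("V1",), ("V1", "V2")]))               # V1 reused
```

A partition of the tuple passes the strict check, while any pattern set that reuses an element, as full enumeration does, fails even the non-strict one.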

Three of the five theorems presented in the last study underpin the selective mode. The first is that the number of possible patterns from a given data tuple can only be less than linear in the tuple length, whereas conventionally it is exponential in the length; the number of result patterns should therefore have been much smaller even without any of the proposed reduction approaches. At the end of Sect. 4, we will see an example of the difference in the mining result sets between the new and conventional approaches. The second is that the selective pattern generation mode is the only feasible mode in classic pattern mining. The third is that patterns produced by the selective mode are conjunction-issue-free. This further justifies the use of f(Z) in (2.2) above, since it confirms that the f(Z)s are directly additive and sum to 1, so the probability anomaly disappears automatically. This theorem also clarifies that super- and sub-patterns can only be produced from different tuples of a dataset, another major point not recognized in the previous literature.

Finally, the last study concluded that any mining approach should satisfy at least three rationality criteria: freedom from the probability anomaly, use of the selective pattern generation mode, and compliance with the equilibrium condition. However, no previous approach satisfied, or could claim to satisfy, these criteria. That means no mining approach to date is rational, let alone reliable. The present paper will introduce further criteria later.

For the detailed reasoning of the above issues and their solutions and other contents not presented above, interested readers may refer to the original work [23].

We now return to the pattern frequency distribution theory, which also affects the rationality and thus the reliability of a pattern mining approach but is virtually absent from previous works. What we can find are a few articles on estimating the number of patterns in applications [31, 32]. However, they are again based on the full enumeration pattern generation mode, so their declared estimation accuracy cannot hold. Moreover, such an estimation problem is not the focus of the present paper. We therefore could not find significant references in the literature for the main topic of this paper, and we end the review here.

We now turn to the main body of this paper and present the properties of pattern frequency distributions. We will use the notation F(Z) instead of S(Z) to represent the pattern frequency and use p(Z) or f(Z) rather than \(s'(Z)\) to represent the probability (frequentness) of the pattern. Since f(Z) is linear in F(Z) as defined in (2.2), the shapes of the frequency and frequentness distribution curves are the same. As such, for simplicity, our discussion will be mainly on the frequency distributions.

Since a pattern is naturally a combination of one or more different elements, combinatorics will be a basic theory for studying the mining. In this paper, we use the notation \(C_i^k\) to mean the number of combinations of k elements selected from a set of i different elements. Note also that the empty set \(\varnothing \) cannot be a pattern, and \(F(\varnothing )\) is undefined. The reasons will become clear before the end of Sect. 3.

Lastly, this paper does not consider the effect of a frequentness threshold such as the \(s_{min}\) mentioned before. One reason is that \(s_{min}\) and its setting are problematic, as addressed in the last study [23]; another is that \(s_{min}\) is used in previous works mainly to reduce the size of the resultant pattern set. An issue is that when a user wants to examine the result set with a smaller \(s_{min}\), the only way is to rerun the mining software, which can be very costly and take up to dozens of hours over a large dataset. With the new selective mining approach, delivering the entire result pattern set to the user will no longer be a big problem since the set size will greatly decrease. It is then trivial for a user to look at the results with whatever \(s_{min}\) he or she likes. Since this paper is mainly a theoretical work, keeping the discussion general and complete is not merely desirable but required, and we thus set the minimum frequency (or the absolute support in conventional notation) F(Z) to 1 to cover all the patterns of an application.

3 The properties of raw pattern frequency distributions

A pattern Z generated from the full enumeration mode is named a raw pattern and its frequency F(Z) the raw frequency. Although only the selective pattern generation mode is feasible in the classic mining, there are still reasons we need to study the raw pattern frequency distributions from the full enumeration mode. Firstly, the full enumeration mode is equal to the repeatable sampling covered in most probability textbooks. In combinatorics, the mode represents the typical question to get all possible combinations of any number of elements from a set of different elements. In accordance, as we will see later, the study of the raw pattern frequency distributions will not only fulfill theoretical completeness but also lay a foundation for the distribution theory with the reduced pattern generation mode. The reduced mode may not be fully the selective mode, but it reduces the number of patterns from the full enumeration mode. Secondly, in practice, it is a way to find out the real patterns from a full set of all possible patterns. Then the study of the raw pattern frequency distributions is again imperative. For simplicity, the word “raw” may be omitted hereafter.

In real applications, the number of possible patterns is usually huge. It will thus be overwhelming in this paper to look into each pattern and its frequency. Instead, this paper presents an overall pattern frequency distribution theory referring to every collection of patterns of the same length. We start the discussion from a vertical pattern generation approach.

3.1 The vertical pattern generation approach

In the full enumeration pattern generation mode, the common way is horizontally to generate patterns from each tuple of an original dataset DBo. For instance, from tuple \(T_1\) of Table 1, we can generate patterns \(V_1\), \(V_1V_4\), etc. However, the vertical approach [33, 34] is more helpful to derive the basic formula to use in this paper. In this approach, we first transform the original dataset DBo, e.g., Table 1, into its “dual” table, named DBd, as shown in Table 2, such that:

That is, the transformation exchanges the roles of TID and VID such that VID in DBd acts as the key attribute, with each \(V_i\) \( (i = 1, 2, ..., n)\) representing the set of \(T_j\)s that hold the same \(V_i\) in the original database DBo. For example, in DBo (Table 1), \(V_1\) is referred to by \(T_1, T_4, T_5, T_9\) and \(T_{10}\). So, in DBd, \(V_1\) refers to those \(T_j\)s in turn. With this vertical approach, a pattern \(Z_k = (VpVq{\dots }Vs)\) of length k is a combination of k elements taken vertically from the column VID of DBd. The frequency of a pattern of a single element \(V_i\) is the number of corresponding \(T_j\)s held in row \(V_i\) of DBd, while the frequency of a pattern of k \((k > 1)\) elements is the number of the “intersected contents” (\(I_c\)), that is, the number of \(T_j\)s commonly referred to by each of the elements. More formally, we define:

Notice that, here we are not interested in what the intersected contents are, but only in the number of such contents.

Another notice is the \(|VpVq{\dots }Vs|\) above is not the length of the pattern \(Z_k\) but the count of its intersected contents \(I_c\).

For instance, in DBd (Table 2), \(V_1\) refers to \(\{T_1, T_4, T_5, T_9, T_{10}\}\), \(V_4\) refers to \(\{T_1, T_2, T_5, T_7\}\). Then, \(I_c(V_1V_4\)) = \(\{T_1, T_5\}\), and \( F (V_1V_4) = |I_c(V_1V_4)|\) \(= 2\).
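The vertical approach can be sketched as follows. The helper names `to_dual` and `frequency` are hypothetical, tuple IDs are represented as plain integers, and only the two rows of DBd quoted in the example above (\(V_1\) and \(V_4\)) are used, since the rest of Table 2 is not reproduced here.

```python
def to_dual(dbo):
    """Transform a horizontal dataset {TID: set of values} into its dual
    {value: set of TIDs}."""
    dbd = {}
    for tid, values in dbo.items():
        for v in values:
            dbd.setdefault(v, set()).add(tid)
    return dbd


def frequency(dbd, pattern):
    """Frequency of a pattern = number of TIDs shared by all of its elements,
    i.e. the size of the intersected contents I_c."""
    tid_sets = [dbd[v] for v in pattern]
    return len(set.intersection(*tid_sets))


# The two rows of DBd quoted in the text.
dbd = {
    "V1": {1, 4, 5, 9, 10},
    "V4": {1, 2, 5, 7},
}
print(frequency(dbd, ["V1"]))        # single-element pattern
print(frequency(dbd, ["V1", "V4"]))  # size of the intersection {T1, T5}
```

Only set sizes are needed for the frequency, which matches the remark above that the contents of the intersection themselves are not of interest.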

Recall that \(\varOmega \) represents the universe of \(V_i\)s in DBo; we now use \(U_t\) to mean the universe of \(T_{j}\)s in DBd, i.e., \(T_j (j = 1, 2, ..., u)\) becomes the element of \(U_t\). Notice that the same \(V_i\) may not be present in every tuple of DBo; otherwise, \(V_i\) is removable from the dataset to simplify the problem. As such, \(V_i\) refers to a proper subset of \(U_t\), i.e., \(V_i \subset U_t\). Then, the correspondences of the DBo and DBd are:

where |X| means the number of elements (the cardinality) of X.

3.2 The inclusion–exclusion principle and the pattern frequencies

From the above subsection and DBd (Table 2), where the universe \(U_t = \{T_1, T_2 \dots T_u\}\) with \(|U_t| = u\), and each \(V_i \) \((i = 1, 2 \dots n)\) represents a (possibly overlapping) subset of \(U_t\), then by set theory, if n and u are finite, we have:

From a very basic set operation (\(n = 2\)):

where \(V_1V_2\) is a shorthand for \(V_1 \cap V_2\).

Extending the above into (3.6) and considering (3.7), we have:

Formula (3.8) is referred to as the “inclusion–exclusion principle” [35] since the alternating signs in the formula compensate for possible excessive inclusion or exclusion of the elements (\(I_c\)) involved in every (VpVq...Vs) during the calculation. In this paper, we use this principle as the starting point to explore more general laws governing pattern frequency distributions under the full enumeration regime.

In (3.8), each \(\sum \) term represents a sum of the raw frequencies of a “collection” of patterns of the same length. To avoid notational confusion and to simplify expression (3.8), we introduce the following definitions:

Firstly, we use \(\varPhi _k\) to mean a collection of patterns of length k, and \(H_k\) to be the “sub-cumulative raw frequency” of the \(\varPhi _k\), and \(C_k\) the number of patterns within \(\varPhi _k\). More formally:

Definition 1

The “collection of raw patterns of the same length k”:

\[ \varPhi _k = \{Z^j \mid |Z^j| = k\} \qquad (3.9) \]

where \(j= 1, 2, ..., C_k\), and \(Z^j\) is the jth pattern within \(\varPhi _k\).

Note that the number j above is for enumeration, i.e., j is a cardinal but not an ordinal number.

For instance, from Table 1, \(\varPhi _1 = \{V_i|i = 1, 2, ..., 8\}\), \(\varPhi _2 = \{V_1V_2, V_1V_3 ..., V_7V_8\}\), and so on.

Definition 2

The “sub-cumulative raw frequency” of \(\varPhi _k\):

\[ H_k = \sum _{j = 1}^{C_k} F(Z^j), \quad Z^j \in \varPhi _k \qquad (3.10) \]

We will see examples of \( H_k\)s in Table 3 later.

Then, (3.8) can be reformulated as:

The “inclusion–exclusion principle” then becomes easy to express by (3.11). The above concepts and formulas are fundamental, based on which we shall explore a set of interesting properties of the pattern frequency distributions in the rest of this paper.
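The principle can be checked numerically with a small sketch. It assumes the standard inclusion–exclusion identity, in which the size of the union of the \(V_i\)s equals the alternating sum \(H_1 - H_2 + H_3 - \dots \); the dual table used here is a hypothetical three-element example (not Table 2), and the names `h_k` and `union_size_by_inclusion_exclusion` are illustrative.

```python
from itertools import combinations


def h_k(dbd, k):
    """Sub-cumulative raw frequency: sum of |intersection| over all k-element
    combinations of the values in the dual table."""
    total = 0
    for pattern in combinations(sorted(dbd), k):
        total += len(set.intersection(*(dbd[v] for v in pattern)))
    return total


def union_size_by_inclusion_exclusion(dbd):
    """Alternating sum H_1 - H_2 + H_3 - ... over all pattern lengths."""
    n = len(dbd)
    return sum((-1) ** (k + 1) * h_k(dbd, k) for k in range(1, n + 1))


# Hypothetical dual table for tuples T1={a,b,c}, T2={a,b}, T3={c}.
dbd = {"a": {1, 2}, "b": {1, 2}, "c": {1, 3}}

print([h_k(dbd, k) for k in (1, 2, 3)])
print(union_size_by_inclusion_exclusion(dbd), len(set.union(*dbd.values())))
```

Because every tuple in this toy dataset holds at least one value, the union of the rows of the dual table covers all tuple IDs, so the alternating sum equals u.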

3.3 The calculation of \(H_k\)s and the accumulative raw frequency \(w_0\)

As mentioned before, w is generally used as the accumulative pattern frequency. In particular, we use \(w_0\) to mean the raw accumulative in the full enumeration mode.

3.3.1 The basic formulas

Based on combinatorics, the basic formula for \(w_0\) would be:

\[ w_0 = \sum _{i = 1}^{u} \sum _{j = 1}^{b_i} C_{b_i}^j = \sum _{i = 1}^{u} (2^{b_i} - 1) \qquad (3.12) \]

where \(b_i = |T_i|\) is the number of elements held by a tuple \(T_i\) in the original dataset DBo, \(u = |DBo|\), and note again, the empty set \(\varnothing \) is not taken as a pattern, thus \(\sum _{j = 1}^{j = i}C_{i}^j = 2^{i} - 1\).

The computation cost of \(w_0\) from (3.12) is more than linear in the data size u. As such, it may take hours or days to get \(w_0\) on a current desktop system when the dataset size u is in the trillions or even larger. For this, a cheaper formula has been developed in [23]:

\[ w_0 = \sum _{i = 1}^{\alpha } g_i (2^{i} - 1) \qquad (3.13) \]

where \(g_i\) is the number of tuples each holding i elements in the original dataset DBo, hence:

\[ \sum _{i = 1}^{\alpha } g_i = u \qquad (3.14) \]

and \(\alpha = Max(|T_i|)\), the longest tuple length.

The full series of \(g_i\)s of a dataset is named the “\(g_i\) distribution” . For instance, the \(g_i\) distribution of Table 1 is (2, 3, 2, 2, 0, 1).
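The two ways of obtaining \(w_0\) can be compared in a minimal sketch. It assumes that each tuple of length b contributes \(2^b - 1\) non-empty patterns and that the cheaper formula groups tuples by length using the \(g_i\) distribution. The function names are hypothetical, and the list of tuple lengths is merely one ordering consistent with the \(g_i\) distribution (2, 3, 2, 2, 0, 1) quoted above, not the actual row order of Table 1.

```python
def w0_from_tuple_lengths(lengths):
    """Per-tuple accumulation: each tuple of length b yields 2**b - 1 patterns."""
    return sum(2 ** b - 1 for b in lengths)


def w0_from_g(g):
    """Grouped accumulation: g[i-1] tuples of length i each yield 2**i - 1."""
    return sum(g_i * (2 ** i - 1) for i, g_i in enumerate(g, start=1))


g = [2, 3, 2, 2, 0, 1]                     # g_i distribution quoted in the text
lengths = [1, 1, 2, 2, 2, 3, 3, 4, 4, 6]   # one ordering consistent with g

print(sum(g), w0_from_tuple_lengths(lengths), w0_from_g(g))
```

The grouped form loops over at most \(\alpha \) distinct lengths rather than over all u tuples once the \(g_i\) distribution is known. For this distribution, \(w_0/u = 11.8\), in line with the earlier remark that \(\sum s_z > 11\) for the running example.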

Now, we define

as the sum of the frequencies of all patterns that can be generated from tuples of length i, then,

Hereunder we present an even more efficient way to calculate \(H_k\) and \(w_0\).

3.3.2 The vector-expression formulas

Since \(H_k\) represents the accumulated frequency of the collection of all patterns of the same length k, then:

\[ H_k = \sum _{i = k}^{\alpha } g_i C_i^k \qquad (3.17) \]

and,

\[ w_0 = \sum _{k = 1}^{\alpha } H_k \qquad (3.18) \]

(3.16) and (3.18) produce the same \(w_0\), but they represent different pattern generation strategies. Equation (3.16) refers to the case where patterns of different lengths \(\le i\) are generated in a loop i from tuples of the same length i, while (3.18) refers to the case where patterns of the same length k are generated in a loop k from all tuples of length \(\ge k\).
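A direct evaluation of the \(H_k\)s can be sketched as below, assuming that (3.17) sums \(g_i C_i^k\) over all tuple lengths \(i \ge k\), as the definitions of \(g_i\) and \(C_i^k\) imply. The function name `h_direct` is hypothetical, and `math.comb` (Python 3.8+) stands in for \(C_i^k\).

```python
from math import comb


def h_direct(g, k):
    """H_k: every tuple of length i >= k contributes C(i, k) patterns of
    length k; g[i-1] is the number of tuples of length i."""
    alpha = len(g)
    return sum(g[i - 1] * comb(i, k) for i in range(k, alpha + 1))


g = [2, 3, 2, 2, 0, 1]   # g_i distribution quoted in the text
hs = [h_direct(g, k) for k in range(1, len(g) + 1)]

print(hs, sum(hs))
```

Summing the \(H_k\)s over k gives the same \(w_0\) as summing \(g_i(2^i - 1)\) over i, which is the equivalence of the two generation strategies described above.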

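Equations (3.17) and (3.18) can be in either vector or matrix expressions, and we introduce the vector approach first. For this, we define

\[ \mathbf {G_k} = (g_k, g_{k+1}, \dots , g_{\alpha }) \qquad (3.19) \]

as a “gathering vector” of dimension (\(\alpha - k + 1)\). In particular, when \(k = 1\), \(\mathbf {G_1}\) is the entire series of \(g_i\) distribution. And,

\[ \varTheta _{\mathbf {k}} = (C_k^k, C_{k+1}^k, \dots , C_{\alpha }^k) \qquad (3.20) \]

as a “setup vector” of dimension (\(\alpha - k + 1)\). In particular, when \(k = 1\), \(\varTheta _{\mathbf {1}}\) is called an “initial setup vector”, and

\[ \varTheta _{\mathbf {1}} = (C_1^1, C_2^1, \dots , C_{\alpha }^1) = (1, 2, \dots , \alpha ) \qquad (3.21) \]

In addition, we define a vector \(\mathbf {E_k}\) as a “w-product” of \(\mathbf {G_k}\) and \(\varTheta _{\mathbf {k}}\), expressed as \(\mathbf {E_k} = \mathbf {G_k} \circ \varTheta _{\mathbf {k}}\), where \(\mathbf {E_k}\), \(\mathbf {G_k}\) and \(\varTheta _{\mathbf {k}}\) are of the same dimension, and each element \(e_i\) of \(\mathbf {E_k}\) is the product \(g_iC_i^k, (i = k, k + 1,\dots , \alpha )\). That is,

\[ \mathbf {E_k} = \mathbf {G_k} \circ \varTheta _{\mathbf {k}} = (g_kC_k^k, g_{k+1}C_{k+1}^k, \dots , g_{\alpha }C_{\alpha }^k) \qquad (3.22) \]

Notice that (3.17) can be expressed as a dot product of \(\mathbf {G_k}\) and \(\varTheta _{\mathbf {k}}\):

\[ H_k = \mathbf {G_k} \cdot \varTheta _{\mathbf {k}} \qquad (3.23) \]

On the other hand, we can have:

\[ H_k = \mathbf {E_k} \cdot \mathbf {B_k} \qquad (3.24) \]

where \(\mathbf {B_k}\) is termed as a “base vector” of dimension (\(\alpha - k + 1\)), with all elements being 1:

\[ \mathbf {B_k} = (1, 1, \dots , 1) \qquad (3.25) \]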
Among other significances, the use of the above vector formulae enables the calculation of \(H_k\)s recursively without involving any exponent operation through the following derivations.

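Extending (3.17), we have:

$$\begin{aligned} H_{k+1} = \sum _{i = k+1}^{i = \alpha }g_iC_i^{k+1} \end{aligned} \qquad (3.26)$$

Since, from combinatorics:

$$\begin{aligned} C_i^{k+1} = \frac{i - k}{k + 1}C_i^k \end{aligned} \qquad (3.27)$$

then (3.26) becomes:

$$\begin{aligned} H_{k+1} = \frac{1}{k + 1}\sum _{i = k+1}^{i = \alpha }(i - k)g_iC_i^k = \frac{1}{k + 1}\mathbf {A_k}\cdot \mathbf {E'_k} \end{aligned} \qquad (3.28)$$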

where \(\mathbf {A_k}\) is named an “adoptive vector”, each of its elements being (\(i - k\)). Notice that i starts from \(k + 1\), thus \(\mathbf {A_k} = (1, 2, \dots , \alpha - k)\), which is exactly the first section of the \(\varTheta _{\mathbf {1}}\) series up to (\(\alpha - k\)). From a programming point of view, \(\mathbf {A_k}\) is the result of a right shift of \(\varTheta _{\mathbf {1}}\) by k positions (one column when \(k = 1\)), noted as:

Meanwhile, vector \(\mathbf {E'_k}\) is a copy of \(\mathbf {E_k}\) with its first element cut off. For instance, if \(\mathbf {E_k} = (2, 3, 5)\), then \(\mathbf {E'_k} = (3, 5)\). That is, each element of \(\mathbf {E'_k}\) is \((e'_k)^i = e_k^i\), starting from \(i = k + 1\).

On the other hand, with the same formulation of (3.24), we have:

$$\begin{aligned} H_{k+1} = \mathbf {E_{k+1}}\cdot \mathbf {B_{k+1}} \end{aligned} \qquad (3.29)$$

Since \(\mathbf {B_{k}}\) (similar to \(\mathbf {B_{k+1}}\)) is a base vector with every element being 1, the main issue in computing \( H_k\) (and \(H_{k+1}\)) is now the computation of \(\mathbf {E_{k}}\) (and \(\mathbf {E_{k+1}}\)). Comparing (3.28) with (3.29), we can easily see that any element \(e_{k + 1}^i\) of \(\mathbf {E_{k+1}}\) is the computation result from (3.28):

$$\begin{aligned} \mathbf {E_{k+1}} = \frac{1}{k + 1}\mathbf {A_k}\circ \mathbf {E'_k} \end{aligned} \qquad (3.30)$$

and element-wise,

$$\begin{aligned} e_{k+1}^i = \frac{i - k}{k + 1}e_k^i, \quad (i = k + 1, k + 2, \dots , \alpha ) \end{aligned}$$

Note that the superscript i of the above \( e_{k + 1}^i \) is a global index, which makes it easier than a local index to express the relation between the two vectors \(\mathbf {E_{k}}\) and \(\mathbf {E_{k+1}}\) of different dimensions.

Now, (3.21), (3.22), (3.23), (3.29) and (3.30) form a recursive program to compute all the \( H_k\)s, starting from \(\varTheta _{\mathbf {1}}\) and \(\mathbf {G_1}\) only. That is, from (3.22), we have:

$$\begin{aligned} \mathbf {E_1} = \mathbf {G_1}\circ \varTheta _{\mathbf {1}} \end{aligned} \qquad (3.31)$$

where \(\mathbf {G_1}\) is the vector of the whole series of \(g_i\)s, that is, \(\mathbf {G_k}\) at \(k = 1\), and \({{\varTheta }}_{{\textbf {1}}} = (1, 2, \dots , \alpha \)).

Then, by (3.30), \(\mathbf {E_2}\), and similarly \(\mathbf {E_3}\), and so on, will be obtained recursively, and so will the \(H_k\)s, as described below.

3.3.3 The tabular recursive approach to compute \(H_k\)s

Table 3 is an example of the use of the above formulae to compute all \(H_k\)s recursively, as well as \(w_0\), over dataset DBo (Table 1). The first row of Table 3 lists the elements of \({{\varTheta }}_{{\textbf {1}}}\), which is just an enumeration from 1 to \(\alpha \) (here \(\alpha \) = 6), while the second row lists the elements of \(\mathbf {G_1}\) (the full \(g_i\) series). These two lists are the only inputs.

The bold numbers in each of the following 6 rows of the table are the element-wise results of the \(\mathbf {E_k}\)s, and together they form an upper triangular matrix, named the “enumeration triangle matrix” \(\varLambda \). Each row of \(\varLambda \) forms an \(\mathbf {E_k} (k = 1, 2, \dots ,\alpha )\). For instance, row 3 \((k = 1)\) represents \(\mathbf {E_1}\) (refer to 3.31), obtained by multiplying the corresponding elements of \(\mathbf {G_1}\) and \({{\varTheta }}_{{\textbf {1}}}\). Row 4 corresponds to \(k = 2\) globally; in the recursive step, it is the row \(k + 1 = 2\) computed from the preset \(k = 1\).

To compute \(\mathbf {E_2}\) and \(H_2\), right shift \({{\varTheta }}_{{\textbf {1}}}\) by one column to get \(\mathbf {A_{1}}\), or left shift \(\mathbf {E_1}\) by one column to get \(\mathbf {E'_1}\). Then, according to (3.30), the first element of \(\mathbf {E_2}\) is \(e_2^2 =\nicefrac {1}{2}(1 * 6) = 3\) (note that the first element of \(\mathbf {E_2}\) starts from the second column, which is reflected in the superscript of \(e_2^2 \)). The whole row 4 represents the element-wise results of the w-product of (6, 6, 8, 0, 6) and (1, 2, 3, 4, 5) divided by 2, that is, (3, 6, 12, 0, 15). Similarly, row 5 is the result of the w-product of (6, 12, 0, 15) and (1, 2, 3, 4) divided by 3, that is, (2, 8, 0, 20), and so on.

Finally, the sum of a row of the \(\varLambda \) triangle gives an \(H_k\), while the sum of a column gives an \(F_i\) (refer to 3.15). Additionally, as a checkpoint, the main diagonal elements of the triangle \(\varLambda \) are just a copy of \(\mathbf {G_1}\) (the second row), since \(C_k^k = 1\).
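The tabular recursion described above can be sketched in a few lines. This is a minimal illustration under the definitions in the text, not the authors' implementation; the function name `enumeration_triangle` and the dictionary layout (global column index i as key) are hypothetical choices.

```python
def enumeration_triangle(g):
    """Return the rows E_1..E_alpha; row k maps the global index i to e_k^i."""
    alpha = len(g)
    # E_1 = G_1 o Theta_1, i.e. e_1^i = g_i * i
    row = {i: g[i - 1] * i for i in range(1, alpha + 1)}
    rows = [row]
    for k in range(1, alpha):
        prev = rows[-1]
        # e_{k+1}^i = (i - k) * e_k^i / (k + 1); the division is exact
        # because the result equals g_i * C(i, k + 1).
        row = {i: (i - k) * prev[i] // (k + 1) for i in range(k + 1, alpha + 1)}
        rows.append(row)
    return rows


g = [2, 3, 2, 2, 0, 1]                      # g_i distribution of Table 1
rows = enumeration_triangle(g)
H = [sum(r.values()) for r in rows]         # row sums give H_k
F = [sum(r[i] for r in rows if i in r) for i in range(1, len(g) + 1)]  # column sums
diagonal = [rows[k - 1][k] for k in range(1, len(g) + 1)]

print(H)         # [28, 36, 30, 17, 6, 1]
print(F)         # [2, 9, 14, 30, 0, 63]
print(diagonal)  # [2, 3, 2, 2, 0, 1]
```

Only integer multiplications and exact divisions are used, and each row is derived from the previous one, which mirrors the reuse of intermediate results noted in the text.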

The above example demonstrates the elegance of formulae (3.29) through (3.31), and the tabular approach is both simple to program and efficient to compute. It involves no exponent or combinatorial operations, and all the intermediate results in Table 3 are reused. Efficiency is thus its most important feature. Notice that the maximum tuple length \(\alpha \) does not grow linearly with the data size u and usually would not exceed a hundred or a thousand in an application. Once the \(g_i\) distribution is available, the cost of this approach depends on \(\alpha \) only (the triangle \(\varLambda \) has \(\alpha (\alpha + 1)/2\) entries); it will thus be only minutes and nearly constant over any large dataset, while the cost of the preliminary formula (3.12) could be multiple hours, as stated before. In other words, the above approach realizes the full scalability of the calculation of \(H_k\) and \(w_0\). It would be even more significant if this approach could lead to a way to reach the scalability of pattern mining in general, which is recognized as a critical issue in pattern mining [36].

Additionally, this tabular approach is easily extensible when the dataset changes. For instance, if \(\alpha \) increases, we only need to add the required columns and rows on the right and at the bottom of the table. Secondly, if the \(g_i\) distribution changes, we only need to update the affected columns, \(F_i\)s and \(H_k\)s.

3.4 The parity property of odd and even length pattern frequencies

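Besides the efficient \(H_k\) computations, there is an interesting relation between the sums of the frequencies of odd-length and even-length patterns, which follows from (3.11):

$$\begin{aligned} \sum _{k = 1}^{\nicefrac {\alpha }{2}}H_{2k-1} = \sum _{k = 1}^{\nicefrac {\alpha }{2}}H_{2k} + u \end{aligned} \qquad (3.32)$$

where the upper bound “\(\nicefrac {\alpha }{2}\)” on the left side should be changed into \((\alpha + 1)/2\) and that on the right side into \((\alpha - 1)/2\) if \( \alpha \) is odd. We use \(H_{ odd}\) and \(H_{ even}\) to denote the accumulated raw frequencies of patterns of odd lengths and even lengths, respectively:

$$\begin{aligned} H_{ odd} = \sum _{k}H_{2k-1}, \quad H_{ even} = \sum _{k}H_{2k} \end{aligned} \qquad (3.33)$$

Then, (3.32) becomes:

$$\begin{aligned} H_{ odd} = H_{ even} + u \end{aligned} \qquad (3.34)$$

Adding \(H_{ odd}\) to both sides of (3.34), and noticing that \(H_{ odd} + H_{ even} = w_0\), we get:

$$\begin{aligned} 2H_{ odd} = w_0 + u \end{aligned}$$

That is,

$$\begin{aligned} H_{ odd} = \frac{w_0 + u}{2}, \quad H_{ even} = \frac{w_0 - u}{2} \end{aligned} \qquad (3.35)$$

As measures of frequencies, \(H_{ odd}\) and \(H_{ even}\) must each be an integer. The following proposition guarantees it: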

Proposition 1

\(w_0 + u\) and \(w_0 - u\) are always even, and \(w_{0}\) has the same parity as u.

Proof

From (3.12), \(w_0 = \sum _{j = 1}^{j = u}2^{b_j} - u\), and let \( y = \sum _{j = 1}^{j = u}2^{b_j}\). Since \(b_j = |T_j| > 0\), it follows that y is always even. Then, \( w_0 + u = y - u + u = y\), and \(w_0 - u = y - u - u = y - 2u\). In both cases, the results are even, and the first part of the proposition is proved. At the same time, it is easy to see that, if u is even (or odd), \(w_0\) is then even (or odd), and the second part of the proposition is proved. \(\square \)
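The parity property can be checked on the running example. The sketch below is an illustration only; it uses \(w_0 = \sum 2^{b_j} - u\) from the proof, grouped by tuple length, and the helper names are hypothetical.

```python
from math import comb


def parity_split(g):
    """Return (H_odd, H_even) as (w0 + u) / 2 and (w0 - u) / 2."""
    u = sum(g)                                            # number of tuples
    y = sum(g_i * 2**i for i, g_i in enumerate(g, start=1))
    w0 = y - u
    # Proposition 1: both sums are even, so the halves are integers.
    assert (w0 + u) % 2 == 0 and (w0 - u) % 2 == 0
    return (w0 + u) // 2, (w0 - u) // 2


def direct_split(g):
    """Return (H_odd, H_even) by summing the H_k of odd and even k directly."""
    alpha = len(g)
    H = [sum(g[i - 1] * comb(i, k) for i in range(k, alpha + 1))
         for k in range(1, alpha + 1)]
    return sum(H[0::2]), sum(H[1::2])     # H_1, H_3, ... and H_2, H_4, ...


g = [2, 3, 2, 2, 0, 1]                    # g_i distribution of Table 1
print(parity_split(g), direct_split(g))   # (64, 54) (64, 54)
```

For Table 1, \(u = 10\) and \(w_0 = 118\), so both are even, as the proposition states.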

The above formulas and results can be verified from Table 3. Next, we introduce significant laws governing all of the \(H_k\) distributions.

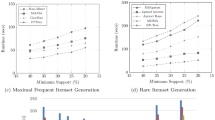

3.5 The \(H_k\) frequency distribution curve

If we plot the \(H_k\) distribution \((k, H_k)\) and link all of the \(H_k\) value points together as shown in Fig. 1, we get a “raw collective frequency distribution curve”, or simply “\(H_k\) curve”. Interestingly, the curve can be expressed as a relation between every adjacent \(H_k\) and \(H_{k+1}\) as follows:

Theorem 1

From the classic dataset and by full enumeration pattern generation mode, the \(H_k\) curve can be expressed as:

$$\begin{aligned} H_{k+1} = R_k\frac{\alpha - k}{k + 1}H_k, \quad (k = 1, 2, \dots , \alpha - 1;\ \alpha \le n) \end{aligned} \qquad (3.36)$$

where n is the number of distinct elements present in the dataset; \(\alpha \) is the maximum length of the tuples and thus of the patterns; \(R_k\) is a “collective frequency reducer”, abbreviated as “reducer”; and

$$\begin{aligned} 0 < R_k \le 1 \end{aligned} \qquad (3.37)$$

The above theorem can be proved either qualitatively or quantitatively. To save space, here we present the quantitative proof only.

Proof

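We start the proof with the simplest case of a dataset of one tuple only, its length being \(\alpha \) (scenario 1); then,

$$\begin{aligned} H_k = C_{\alpha }^k, \quad H_{k+1} = C_{\alpha }^{k+1} \end{aligned}$$

According to combinatorics,

$$\begin{aligned} C_{\alpha }^{k+1} = \frac{\alpha - k}{k + 1}C_{\alpha }^k \end{aligned}$$

we then have:

$$\begin{aligned} H_{k+1} = \frac{\alpha - k}{k + 1}H_k \end{aligned}$$

Now we extend the above to the case of a dataset of u \((u > 1)\) tuples of the same length \(\alpha \) (scenario 2); then,

$$\begin{aligned} H_k = uC_{\alpha }^k \end{aligned} \qquad (3.39)$$

Accordingly,

$$\begin{aligned} H_{k+1} = uC_{\alpha }^{k+1} \end{aligned}$$

Comparing the above two equations, we get:

$$\begin{aligned} H_{k+1} = \frac{\alpha - k}{k + 1}H_k \end{aligned}$$

The above two scenarios together represent the preliminary case, in which every tuple of a given dataset has the same length, and in this case \(R_k\) = 1. The top curve in Fig. 1 depicts the preliminary \(H_k\) curve.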

Now, in the general case where the tuple lengths may vary, our primary work is to prove \(R_k < 1\). The proof runs with matrix expressions.

From (3.23), \(H_k =\sum _{i = k}^{i = \alpha }g_iC_i^k = \mathbf {G}_{\textbf {k}}\cdot {{{\varTheta }}}_{\textbf {k}}\), we now transform it with the non-bold \(G_k\) and \({{{\varTheta }_{k}}}\) into the matrix expression for \(H_k\):

$$\begin{aligned} H_k = G_kI_k{\varTheta }_{k} \end{aligned} \qquad (3.40)$$

where \(G_k\) is a \(1 * (\alpha - k+1)\) “gathering matrix”, starting from \(g_k\): \(G_k = (g_k, g_{k+1}, \dots , g_\alpha )\); \({{{\varTheta }}}_{k}\) is an \((\alpha - k+1) * 1\) “setup matrix”, and \({{{\varTheta }}}_{k} = ( C_k^k, C_{k+1}^k, \dots , C_\alpha {}^k)^T\); particularly, when \(k = 1\), \({{{\varTheta }}}_{1}\) is an \(\alpha * 1\) “initial setup matrix”, and \({{{\varTheta }}}_{1} = (1, 2, \dots , \alpha )^T\); \(I_k\) is an \((\alpha - k+1) * (\alpha - k+1)\) identity (thus idempotent) matrix, with all elements of the main diagonal being 1 and the rest being 0.

Similar to \( H_k\), \(H_{k+1}\) can be expressed as:

$$\begin{aligned} H_{k+1} = G_{k+1}I_{k+1}{\varTheta }_{k+1} \end{aligned} \qquad (3.41)$$

On the other hand, by (3.28),

$$\begin{aligned} H_{k+1} = \frac{\alpha - k}{k + 1}G_{k+1}A_k{\varTheta }_{k}^{\prime } \end{aligned} \qquad (3.42)$$

where \({{{\varTheta }}}_{k}^{\prime }\) is a sub-matrix of \({{{\varTheta }}}_{k}\) without the first row; similarly, \(G_{k+1}\) is a copy of \(G_k\) without the first element; \(A_k\) is a diagonal matrix of dimension \((\alpha - k) * (\alpha - k)\), called an “adoptive matrix”, with its main diagonal elements \(a_{jj} = \frac{i - k}{\alpha - k}\), where \(j = i - k\) and i starts from \(k + 1\). As such, except for the last element \(a_{tt} = 1\), where \(t = \alpha - k\), all the other \(a_{jj} < 1\).

Now, we define a diagonal matrix \(A_k^+\) of dimension \((\alpha - k + 1) * (\alpha - k + 1)\), with its first element \(a_{11} = 0\) and the remaining submatrix of dimension \((\alpha - k) * (\alpha - k)\) being the same as \(A_k\). For a better understanding of the above, refer to the following examples of the matrices from the running example with \(k = 3\):

\(I_k = \begin{bmatrix} 1 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 1 \end{bmatrix}, A_k = \begin{bmatrix} \nicefrac {1}{3} &{} 0 &{} 0 \\ 0 &{} \nicefrac {2}{3} &{} 0 \\ 0 &{} 0 &{} 1 \end{bmatrix}, \)

\( A_k^+ = \begin{bmatrix} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} \nicefrac {1}{3} &{} 0 &{} 0 \\ 0 &{} 0 &{} \nicefrac {2}{3} &{} 0 \\ 0 &{} 0 &{} 0 &{} 1 \end{bmatrix}, \)

\(G_k = \begin{bmatrix} 2&2&0&1 \end{bmatrix}, \) \(G_{k+1} = \begin{bmatrix} 2&0&1 \end{bmatrix} \) (refer to Table 3),

\({\varTheta }_{k} = \begin{bmatrix} 1&4&10&20 \end{bmatrix}^T, \) \({\varTheta }_{k}^{\prime } = \begin{bmatrix} 4&10&20 \end{bmatrix}^T. \)

With the above definitions, (3.42) can be reformulated as:

$$\begin{aligned} H_{k+1} = \frac{\alpha - k}{k + 1}G_kA_k^+{\varTheta }_{k} \end{aligned} \qquad (3.43)$$

Now (3.43) and (3.40), \(H_k = \sum _{i = k}^{i = \alpha }g_iC_i^k = G_k I_k {{{\varTheta }}}_{k}\), become comparable. Notice that the last element \(a_{tt}\) of \(A_k\) (and likewise of \(A_k^+\)) is always 1 as stated before, while every other diagonal element of \(A_k^+\) is less than the corresponding element of \(I_k\). Notice also that some element(s) of \(G_k\) can be zero (but never negative), while the last element \(g_{\alpha } > 0\) in any case (otherwise the longest tuple length would not be \(\alpha \)). There are then two possible outcomes of the comparison between \(G_k A^+_k {{{\varTheta }}}_{k}\) and \(G_k I_k {{{\varTheta }}}_{k}\).

(1) In the general case where more than one element of the \(G_k\) series is positive, compared with (3.40), there must be:

$$\begin{aligned} G_kA_k^+{\varTheta }_{k} < G_kI_k{\varTheta }_{k} \end{aligned} \qquad (3.44)$$

It means there exists an \(R_k\), such that

$$\begin{aligned} G_kA_k^+{\varTheta }_{k} = R_kG_kI_k{\varTheta }_{k} \end{aligned} \qquad (3.45)$$

where \(0< R_k < 1\) must hold for (3.45) to agree with (3.44).

(2) In the special case where only \(g_{\alpha }\) is positive, compared with (3.40), both \(G_k A^+_k {{{\varTheta }}}_{k}\) and \(G_k I_k {{{\varTheta }}}_{k}\) reduce to the same scalar value \(g_{\alpha } C^k_{\alpha }\). That is, in this case,

$$\begin{aligned} G_kA_k^+{\varTheta }_{k} = G_kI_k{\varTheta }_{k} = g_{\alpha }C_{\alpha }^k \end{aligned} \qquad (3.46)$$

The above means \(R_k = 1\) compared with (3.45).

Considering the above two cases together, and referring to (3.45), (3.43) can be generally presented as:

$$\begin{aligned} H_{k+1} = R_k\frac{\alpha - k}{k + 1}G_kI_k{\varTheta }_{k} = R_k\frac{\alpha - k}{k + 1}H_k \end{aligned} \qquad (3.47)$$

In summary, \(0 < R_k \le 1\) is always true, and the above formula is exactly (3.36). Theorem 1 is then fully proved. \(\square \)
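The matrix argument can be replayed numerically on the running example. The sketch below is a minimal illustration that stores the diagonal matrices \(I_k\) and \(A_k^+\) as plain lists of their diagonal entries and uses exact fractions; the function name `matrix_form` is hypothetical.

```python
from fractions import Fraction
from math import comb


def matrix_form(g, k):
    """Return (R_k, H_k, H_{k+1}) from G_k, A_k^+ and Theta_k, for 1 <= k < alpha."""
    alpha = len(g)
    G_k = g[k - 1:]                                        # (g_k, ..., g_alpha)
    theta_k = [comb(i, k) for i in range(k, alpha + 1)]    # (C(k,k), ..., C(alpha,k))
    # Diagonal of A_k^+: 0 for i = k, then (i - k) / (alpha - k) for i > k.
    a_plus = [Fraction(i - k, alpha - k) for i in range(k, alpha + 1)]
    reduced = sum(gi * a * th for gi, a, th in zip(G_k, a_plus, theta_k))
    H_k = sum(gi * th for gi, th in zip(G_k, theta_k))     # G_k I_k Theta_k
    R_k = reduced / H_k
    H_next = Fraction(alpha - k, k + 1) * reduced
    return R_k, H_k, H_next


g = [2, 3, 2, 2, 0, 1]            # g_i distribution of Table 1
R_3, H_3, H_4 = matrix_form(g, 3)
print(R_3, H_3, H_4)              # 34/45 30 17
```

With \(k = 3\) the matrices are exactly those listed in the example above, and the result reproduces \(H_4 = 17\) with \(R_3 = 34/45 \approx 0.756\).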

From the above proof, we can get further corollaries as below.

Corollary 1

The distribution of original data tuples of lengths less than k does not have effect on \(R_s\) with \(s \ge k\).

The above is obvious since \(G_k\) starts from its kth element. Indeed, this corollary can alternatively be stated as follows: \(R_k\) is determined by all and only the \(g_i\)s with \(i \ge k\).

Corollary 2

The necessary and sufficient condition for \(R_k = 1\) is that \(g_k\) through \(g_{\alpha - 1}\) (inclusive), i.e., the kth through the \({(\alpha - 1)}\)th members of the \(g_i\) series, all equal zero.

Corollary 3

If \(R_k = 1\), then all \(R_s = 1\), where \(k \le s < \alpha \) (note \(R_k\) series is ended at \(R_{\alpha - 1}\)).

The two corollaries above are related. The proof of Corollary 2 is implied in the derivation of (3.46). The proof can also be seen from Corollary 1: only the \(g_i\)s with \(i \ge k\) can affect \(R_k\), so if all of these \(g_i\)s are 0 except \(g_\alpha \), then \(g_\alpha \) alone determines \(H_k\) and \(H_{k+1}\), which means no reduction occurs between \(H_k\) and \(H_{k+1}\), hence \(R_k = 1\).

Corollary 3 comes directly from Corollary 2: if there are m successive 0s in the \(g_i\) distribution counting backward from \(i = \alpha - 1\), then there are m 1s in the right section of the \(R_k\) series. Particularly, at k = 1, if \(R_1 = 1\), then all \(R_i\)s = 1. This is the preliminary case stated before, where all the original data tuples are of the same length \(\alpha \) (that is, only \(g_\alpha \) is nonzero).

We will see the verifications of the above in Example 1 soon, and in Tables 4 and 7 later.
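Corollaries 2 and 3 can also be checked with a short script. The sketch below is an illustration only: the helper names are hypothetical, and the second and third \(g_i\) distributions are made-up inputs chosen to show a run of zeros before \(g_\alpha \), not data from the paper.

```python
from fractions import Fraction
from math import comb


def reducers(g):
    """Return [R_1, ..., R_{alpha-1}] with R_k = (k+1) H_{k+1} / ((alpha-k) H_k)."""
    alpha = len(g)
    H = [sum(g[i - 1] * comb(i, k) for i in range(k, alpha + 1))
         for k in range(1, alpha + 1)]
    return [Fraction((k + 1) * H[k], (alpha - k) * H[k - 1]) for k in range(1, alpha)]


def zero_run_before_last(g):
    """Count the successive zeros in g counting backward from g_{alpha-1}."""
    m = 0
    for g_i in reversed(g[:-1]):
        if g_i != 0:
            break
        m += 1
    return m


def trailing_ones(R):
    """Count how many reducers at the right end of the series equal 1."""
    m = 0
    for r in reversed(R):
        if r != 1:
            break
        m += 1
    return m


for g in ([2, 3, 2, 2, 0, 1],      # Table 1
          [1, 2, 0, 0, 3],         # hypothetical: two zeros before g_alpha
          [0, 0, 0, 5]):           # hypothetical preliminary case
    R = reducers(g)
    print(zero_run_before_last(g), trailing_ones(R), [str(r) for r in R])
```

In each case the number of zeros immediately before \(g_\alpha \) equals the number of trailing 1s in the \(R_k\) series.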

The following subsections present other important properties of the \(H_k\) curve.

3.6 The quasi-concavity of the \(H_k\) curve

Theorem 2

(\(H_k\) quasi-concavity theorem) If \(R_k\) is non-decreasing, then the \(H_k\) curve expressed in (3.36) is strictly quasi-concave downward over \(0 < k \le \alpha \), and it reaches its apex value at \(k = q \le \nicefrac {\alpha }{2}\).

“Quasi-concavity” is used in real-valued function study [37]. If a function \(f(\mathbf {Z})\) is strictly quasi concave within a domain E, then there exists a \(\mathbf {Z}^* (\mathbf {Z}^* \in E)\) such that \(f(\mathbf {Z})\) is increasing for \(\mathbf {Z} < \mathbf {Z}^*\) and \(f(\mathbf {Z})\) is decreasing for \(\mathbf {Z} > \mathbf {Z}^*\) [37], where \(\mathbf {Z}\) can be a vector of multidimensional variables. We use this concept not only for better understanding but also for formal applications of the properties of the \(H_k\) distributions. The only difference here is that the “quasi-concavity” property applies to discrete \(H_k\) values (refer to Fig. 1).

Proof

If all \(R_k\)s = 1, we are in the preliminary case where all u tuples of a given dataset have the same length \(\alpha \), and \(H_k = u C_{\alpha }^k \) (3.39). The quasi-concavity property of the \(H_k\) curve is then identical to that of the curve formed by the full series \(C_{\alpha }^k\) \( (k = 1, 2, \dots , \alpha \)). Notice that in this case \(H_k\) is symmetric since \(C_{\alpha }^{\alpha - k} = C_{\alpha }^k\). When \(\alpha \) is an even number, \(H_k\) is strictly quasi-concave and reaches its maximum value \(H^*\) at \(k = \frac{\alpha }{2}\). When \(\alpha \) is an odd number, \(H_k\) attains its maximum value at two points, \(k = \frac{\alpha - 1}{2}\) and \(k + 1 = \frac{\alpha + 1}{2}\). However, since there is no other integer between k and \(k + 1\), and since the preliminary case is not a major concern in this study, we regard the preliminary \(H_k\) curve as strictly quasi-concave hereafter.

The strict quasi-concavity of the preliminary \(H_k\) curve (3.39) can be seen from the top curve in Fig. 1.

Now we prove the quasi-concavity property in the general case with \(R_k < 1\). Let us first look at the slope of the \(H_k\) curve, \(\nicefrac {\varDelta H_k}{\varDelta k}\), with \(\varDelta k = 1\), which is the smallest interval of k. From (3.36),

$$\begin{aligned} \frac{\varDelta H_k}{\varDelta k} = H_{k+1} - H_k = \left( R_k\frac{\alpha - k}{k + 1} - 1\right) H_k \end{aligned}$$

Since \(H_k > 0\), the sign of the slope \(\frac{\varDelta H_k}{\varDelta k}\) is determined by \((R_k\frac{\alpha - k}{k + 1} - 1)\). Notice that,

(i) \(\nicefrac {(\alpha - k)}{(k + 1)}\) is a strictly decreasing function of k, since:

$$\begin{aligned} \frac{\varDelta [{\nicefrac {(\alpha - k)}{(k + 1)}}]}{\varDelta k} = - \frac{\alpha + 1}{(k + 1)(k + 2)} < 0 \end{aligned}$$

(ii) Without the effect of \(R_k\) (thus in the preliminary case), \(\frac{\alpha - k}{k + 1} - 1\) is positive at first and leads \(H_k\) to increase until reaching the apex at \(k = \frac{\alpha }{2}\) if \(\alpha \) is even, or at \(k = \frac{\alpha - 1}{2}\) and \(k = \frac{\alpha + 1}{2}\) if \(\alpha \) is odd as stated before, where \(\frac{\alpha - k}{k + 1} - 1 = 0\). After that, \(\frac{\alpha - k}{k + 1} -1\) becomes negative and \(H_k\) decreases.

(iii) With the effect of \(0< R_k < 1\): at the early stage (\(k \ll \alpha \)), \(\frac{\alpha - k}{k + 1} \gg 1\), while \(R_k\) is non-decreasing as given in the theorem; together they lead \(H_k\) to increase with k, but at a reduced rate compared with that in case (ii) above since \(R_k < 1\). Ultimately \((R_k\frac{\alpha - k}{k + 1} - 1) \rightarrow 0 \) at a point q such that \(H_k\) reaches its apex value \(H_q\); q cannot exceed \(\frac{\alpha }{2}\) due to the reduction effect of \(R_k\), and the value \(H_q\) becomes much smaller than the \(H^*\) obtained without the effect of \(R_k\) (as seen in Fig. 1). Once \(H_q\) has been reached, the slope factor \((R_k\frac{\alpha - k}{k + 1} - 1)\) becomes negative and keeps decreasing as k increases, since \(\frac{\alpha - k}{k + 1}\) decreases with k. It means \(H_k\) will then be monotonically decreasing until the end, regardless of \(\alpha \) being odd or even.

In summary, under the given condition of the theorem, \(H_k\) has one and only one apex at q with \(q \le \nicefrac {\alpha }{2}\), and \(H_k\) is strictly increasing for \(k < q\) but strictly decreasing for \(k > q\). \(H_k\) is thus strictly quasi-concave, and the theorem is fully proved. \(\square \)
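For a discrete series, the property proved above amounts to a strict rise up to a single apex followed by a strict fall. The sketch below is one possible check under that reading; the function name is hypothetical, and the preliminary series are generated from \(H_k = uC_{\alpha }^k\).

```python
from math import comb


def apex_if_strictly_quasi_concave(H):
    """Return the 1-based apex q if H rises strictly to q and falls strictly after it.

    Returns None otherwise, including the plateau of two equal maxima.
    """
    q = max(range(len(H)), key=H.__getitem__)     # index of the first maximum
    rising = all(H[j] < H[j + 1] for j in range(q))
    falling = all(H[j] > H[j + 1] for j in range(q, len(H) - 1))
    return q + 1 if rising and falling else None


H_table1 = [28, 36, 30, 17, 6, 1]                      # H_k series of Table 1
H_even = [10 * comb(6, k) for k in range(1, 7)]        # preliminary case, alpha = 6
H_odd = [comb(5, k) for k in range(1, 6)]              # preliminary case, alpha = 5

print(apex_if_strictly_quasi_concave(H_table1))   # 2
print(apex_if_strictly_quasi_concave(H_even))     # 3
print(apex_if_strictly_quasi_concave(H_odd))      # None
```

The last call returns `None` because the preliminary curve with odd \(\alpha \) has two equal maxima, which the text treats as strictly quasi-concave by convention; a caller that follows that convention would need to allow a single plateau at the apex.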

Note that when \(\alpha \) is not very large, \(H_k\) might reach its maximum value at \(k = 1\) (refer to example case b in Table 4 later), but such a case does not affect the soundness of the theorem. Another point to note is that Theorem 2 states a sufficient condition on \(R_k\) for an \(H_k\) curve to be quasi-concave, while the following gives a more precise description of \(R_k\) under this condition:

Corollary 4

If the \(R_k\) series is non-decreasing, and q is the apex point of a quasi-concave \(H_k\) curve, then:

$$\begin{aligned} \frac{k + 1}{\alpha - k}< R_k \le 1, \quad (1 \le k < q) \end{aligned} \qquad (3.48)$$

and

$$\begin{aligned} \frac{q}{\alpha - q + 1}< R_k \le 1, \quad (q \le k < \alpha ) \end{aligned} \qquad (3.49)$$

Proof

Notice that \(0 < R_k \le 1\) as specified in (3.37), and from (3.36), we have \(\frac{k + 1}{\alpha - k} = R_k\frac{H_k }{H_{k+1}}\), where \(\frac{H_k }{H_{k+1}} < 1\) for \(k < q\) because of the \(H_k\) quasi-concavity as given. Then, \(\frac{k + 1}{\alpha - k} < R_k \le 1\) before q, and (3.48) is proved. Meanwhile, notice that \(\frac{k + 1}{\alpha - k}\) is an increasing function of k before \(k = \frac{\alpha }{2}\), while \(q \le \frac{\alpha }{2}\). We then take \(k = q - 1\), such that \(\frac{k + 1}{\alpha - k}\) reaches its maximum value before point q as \(\frac{q}{\alpha - q + 1}\), which, however, is less than \(R_{q-1}\) as specified in (3.48). Because of the monotonicity of \(R_k\), \(\frac{q}{\alpha - q + 1}< R_k\) will hold for the whole interval \([q, \alpha )\), and (3.49) is proved. \(\square \)

Example 1

From Table 1, the \(g_i\) distribution is {2, 3, 2, 2, 0, 1} with \(\alpha = 6\); the \(H_k\) series is \(\{28, 36, 30, 17, 6, 1\}\) (from Table 3), which is quasi-concave with its apex point at \(q = 2 < \alpha /2 = 3\). This is consistent with Theorem 2. The \(R_k\) series is {0.514, 0.625, 0.756, 0.882, 1}, which demonstrates the monotonicity of the \(R_k\)s. Notice also that \(g_5 = 0\) and \(R_5 = 1\), which verifies Corollary 2. Finally, \(\frac{k + 1}{\alpha - k} |_{k = 1}= 2/5 = 0.4 < R_1 = 0.514\) and \( \frac{q}{\alpha - q + 1} = 2/5 = 0.4 < R_2 = 0.625\), so Corollary 4 is verified. Empirical results and verifications of the above from real application datasets are given in Table 7 and the “Appendix” of this paper.
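The figures in Example 1 can be reproduced from the \(H_k\) series alone. The sketch below is a minimal illustration using exact fractions; the variable names are hypothetical, and \(R_k\) is obtained by solving (3.36) for the reducer.

```python
from fractions import Fraction

H = [28, 36, 30, 17, 6, 1]        # H_k series of Table 1 (from Table 3)
alpha = len(H)

# R_k = (k + 1) H_{k+1} / ((alpha - k) H_k), for k = 1 .. alpha - 1
R = [Fraction((k + 1) * H[k], (alpha - k) * H[k - 1]) for k in range(1, alpha)]
print([round(float(r), 3) for r in R])    # [0.514, 0.625, 0.756, 0.882, 1.0]

non_decreasing = all(R[j] <= R[j + 1] for j in range(len(R) - 1))
q = H.index(max(H)) + 1                   # apex point, here q = 2

# Corollary 4: lower bounds on R_k before q and from q onward.
bound_before_q = all(Fraction(k + 1, alpha - k) < R[k - 1] for k in range(1, q))
bound_from_q = all(Fraction(q, alpha - q + 1) < R[k - 1] for k in range(q, alpha))
print(non_decreasing, q, bound_before_q, bound_from_q)   # True 2 True True
```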

Quasi-concavity is a significant property of the \(H_k\) curve. At this point, a question may arise: would the condition of non-decreasing \(R_k\) hold in most of the classic pattern mining applications, such that the property could be typical? The following theorem answers it.

Theorem 3

For an ordinary \(g_i\) distribution, the \(R_k\) series is non-decreasing, and the smaller the k relative to \(\alpha \), the more strongly the condition \(R_{k+1} \ge R_k\) holds.

Note that an “ordinary” distribution here means one that, like many common distributions, is typically denser around the middle of \(\alpha \) while diminishing toward the two ends; it is not required to be a normal \(N(\mu , \sigma ^2\)) or \(\beta \) distribution, or any other quasi-concave distribution in general. It even allows multimodal and scattered distributions, as long as the extra mode does not appear in the right tail of the distribution. The above will become evident in the proof below.

The proof can be done through the basic \(H_k\) expression (3.17), the vector expression (3.24), or the matrix expression (3.40). However, with any of these expressions, the proof of the above theorem is intricate and lengthy rather than simple. Below we use the matrix expression to prove the theorem.

Proof

Notice that the preliminary case with only \(g_\alpha \) being nonzero is a special case of the ordinary \(g_i\) distribution, and we already know that in that case \(R_k = 1\) for all ks. The proof thus addresses the general situation and starts from (3.40):

$$\begin{aligned} H_k = G_kI_k{\varTheta }_{k} = G_k{\varTheta }_{k} \end{aligned}$$

where \(I_k\) is omitted since \(G_k\) is a matrix of a single row while \({{{\varTheta }}}_{k}\) is a single column.

Similarly, \(H_{k+1} = G_{k+1} I_{k+1} {{{\varTheta }}}_{k+1} = G_{k+1}{{{\varTheta }}}_{k+1}\). On the other hand, from (3.36),

$$\begin{aligned} R_k = \frac{(k + 1)H_{k+1}}{(\alpha - k)H_k} \end{aligned}$$

then,

$$\begin{aligned} R_k = \frac{(k + 1)G_{k+1}{\varTheta }_{k+1}}{(\alpha - k)G_k{\varTheta }_{k}} \end{aligned}$$

A non-decreasing \(R_k\) means \(\nicefrac {\varDelta {R_k}}{\varDelta {k}} \ge 0\). Taking the smallest \(\varDelta {k} = 1\), the condition becomes \(\varDelta {R_k} \ge 0\), and notice:

Since the denominator \([(\alpha - k)G_{k}{{{\varTheta }}}_{k}]^2 > 0\), we can examine the numerator only, and note it as \(\delta {R_k}\):

The above looks good, but it is still difficult to prove whether \(\delta {R_k} \ge 0\). For instance, it is not straightforward to see whether \(G_{k+2}{{{\varTheta }}}_{k+2} G_k{{{\varTheta }}}_{k} - (G_{k+1}{{{\varTheta }}}_{k+1})^2\) is positive or not, since the entries of each involved matrix are all variables and the dimensions of the matrices are variables too. A feasible strategy to get around the problem is to simplify (3.51) with reasonable approximations, since we only need to know the sign of \(\delta {R_k}\) rather than its exact value. Let

where \(X_1 = G_{k+2}{{{\varTheta }}}_{k+2} G_k{{{\varTheta }}}_{k}, X_2 = (G_{k+1}{{{\varTheta }}}_{k+1})^2\), and \(X_3 = G_{k+1}{{{\varTheta }}}_{k+1}G_{k}{{{\varTheta }}}_{k}\).

In block expression,

where \({{{\varTheta }}}_{k}^\prime \) as stated before is the same \({{{\varTheta }}}_{k}\) without the first element 1 (note the initial element of \({{{\varTheta }}}_{k}, {{{\theta }}}_{k} = C_k^k = 1)\). Meanwhile, let \({{{\varTheta }}}_{k+2}^+\) be an extended \({{{\varTheta }}}_{k+2}\) with an added element 1 in the beginning, that is, \({{{\varTheta }}}_{k+2}^+ = \begin{bmatrix} 1 \\ {{{\varTheta }}}_{k+2} \end{bmatrix}.\)

Then, we look at \(X_3\) first:

which can be safely approximated to:

For \(X_1\), we reformulate it with an augmentation of \(G_{k+2}\) into \(G_{k+1}\) and \({{{\varTheta }}}_{k+2}\) into \({{{\varTheta }}}_{k+2}^+\), but keep the value of \(X_1\) unchanged:

When k is not large relative to \(\alpha \), \(g_{k+1}\) would be much smaller than the product \(G_{k+1}{{{\varTheta }}}^{+}_{k+2}\), since the latter is a sum of products of a series of \(g_i\)s and a series of \({{{\theta }}}_{k}\)s, such that \(g_{k+1}\) can be ignored. Another reason is that the discrepancy caused by this omission could be (partially or fully) compensated by the omission of the positive \(g_k\) in \((g_k + G_{k+1}{{{\varTheta }}}_{k}^{\prime })\) in both \(X_1\) (3.55) and \(X_3\) (3.53). That is, we can take (3.55) to be:

With the above, (3.52) is approximated as:

Our second simplification strategy is to reduce the number of \({{{\varTheta }}}\) matrices by representing both \({{{\varTheta }}}_{k+2}^+\) and \({{{\varTheta }}}_{k}^{\prime }\) with \({{{\varTheta }}}_{k+1}\) only. To do so, we define:

where \(N_{k+1}\) is a diagonal matrix of dimension \((\alpha - k) * (\alpha - k)\), each of its main diagonal entries being the corresponding entry of the original single column matrix \({{{\varTheta }}}_{k+1}\), and \(B_{k+1}\) is a single column matrix of dimension \((\alpha - k) * 1,\) with every entry being 1.

Hereunder, for simplicity we use s and t to be the indices of all the matrix entries, where \(t = i - k\), and \(s = i - (k + 1) + 1 = i - k\), such that both s and t start from 1 in the following operations.

Now, let the single column matrix \({{{\varTheta }}}_{k+2}^+ = N_{k+1}D_{k+1}\), where \(D_{k+1}\) is a single column matrix of dimension \((\alpha - k) * 1\), with the first entry being \(d_1 = 1\) and the rest \(d_s = \nicefrac { (s - 1)}{(k + 2)} = \nicefrac {(i - k - 1)}{(k + 2)}, (i = k + 2, k + 3, \dots , \alpha )\), based on the relation \(C_i^{k + 2} = C_i^{k + 1} * \nicefrac {(i - k - 1)}{(k + 2)}\).

Similarly, let \({{{\varTheta }}}_{k}^{'} = N_{k+1}E_k\), where \(E_k\) is a single column matrix of dimension \((\alpha - k) * 1\) with each entry \(e_t = \nicefrac {(k + 1)}{t} = \nicefrac {(k + 1)}{(i - k)}, (i = k + 1, k + 2,\dots ,\alpha )\), based on the relation \(C_i^{k} = C_i^{k + 1} * \nicefrac {(k + 1)}{(i - k)})\).

Since \(C_i^{k + 1}\) appears as the same factor in both \(C_i^{k + 2}\) and \(C_i^{k}\) in the above two paragraphs, it can be factored out in later operations.
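The two factorizations above, \({{{\varTheta }}}_{k+2}^+ = N_{k+1}D_{k+1}\) and \({{{\varTheta }}}_{k}^{\prime } = N_{k+1}E_k\), can be confirmed numerically. The sketch below is an illustration only: it stores the diagonal matrix \(N_{k+1}\) as a list of its diagonal entries, and the function name is hypothetical.

```python
from fractions import Fraction
from math import comb


def check_factorizations(alpha, k):
    """Check both factorizations for 1 <= k <= alpha - 2 with exact arithmetic."""
    # Diagonal of N_{k+1}: the entries C(i, k+1) of Theta_{k+1}, i = k+1 .. alpha.
    n_diag = [comb(i, k + 1) for i in range(k + 1, alpha + 1)]
    # D_{k+1}: d_1 = 1, d_s = (s - 1) / (k + 2) for s = 2 .. alpha - k.
    d = [Fraction(1)] + [Fraction(s - 1, k + 2) for s in range(2, alpha - k + 1)]
    # E_k: e_t = (k + 1) / t for t = 1 .. alpha - k.
    e = [Fraction(k + 1, t) for t in range(1, alpha - k + 1)]

    theta_k2_plus = [1] + [comb(i, k + 2) for i in range(k + 2, alpha + 1)]
    theta_k_prime = [comb(i, k) for i in range(k + 1, alpha + 1)]

    ok_plus = [n * x for n, x in zip(n_diag, d)] == theta_k2_plus
    ok_prime = [n * x for n, x in zip(n_diag, e)] == theta_k_prime
    return ok_plus and ok_prime


print(all(check_factorizations(6, k) for k in range(1, 5)))   # True
```

For \(\alpha = 6\) and \(k = 3\), for example, \(N_{k+1}\) has diagonal (1, 5, 15), \(D_{k+1} = (1, 1/5, 2/5)\) and \(E_k = (4, 2, 4/3)\), giving \({{{\varTheta }}}_{k+2}^+ = (1, 1, 6)^T\) and \({{{\varTheta }}}_{k}^{\prime } = (4, 10, 20)^T\).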

Notice that the result of \(G_{k+1}{{{\varTheta }}}_{k}^{\prime }\) is a scalar value, and so is its transpose: \(G_{k+1}{{{\varTheta }}}_{k}^{'} = {{{{\varTheta }}}_{k}^{\prime \tau }}G_{k+1}^{\tau } = E_k^{\tau } N_{k+1}^{\tau }G_{k+1}^\tau \). Then, (3.56) becomes:

where

In the same way, from (3.54), take

where \(\tilde{E}_{k+1} = B_{k+1}E_k^{\tau }\) is a square matrix of \((\alpha - k) * (\alpha - k)\), each row of it being the replication of \(E_k^\tau \) , that is, \(\tilde{e}_{st} = e_t\). Similarly,

where \(\tilde{I}_{k+1}= B_{k+1}{B^{\tau }_{k+1}}\) is a square matrix of \((\alpha - k) * (\alpha - k)\), with every entry being 1 as a result of the matrix multiplication.

We now can further simplify the condition of \(\delta R_k\) in (3.51) into the following:

where

and,

That is, with the above manipulations we now reach a neat expression of the condition \(\delta R_k \) as:

Equation (3.66) represents a typical quadratic form \(q(\mathbf {x}) = XAX^{\tau } = \sum _s\sum _t{a_{st}x_sx_t}\) in matrix operation theory [38], where \(\mathbf {x}\) is an array of variables (\(x_i\)s), and A is a coefficient matrix. Here \(A = Q_{k+1}\), and \(\mathbf {x} = G_{k+1}\), such that \(x_1 = g_{k+1}, x_2 = g_{k+2}\), and \(x_s = g_{k+s}\) in general. Notice that every \(x_s\) is nonnegative in this case.

Proving \(\delta R_k \ge 0\) is now equivalent to proving \(q(\mathbf {x})\ge 0\). In matrix theory, for the above quadratic form, matrix A can always be converted into a symmetric matrix [39], and proving \(q(\mathbf {x}) \ge 0\) is equivalent to identifying whether A is positive semi-definite. For this, several methods, e.g., the eigenvalue and principal minors approaches [38, 40], have been developed, but none of them is applicable to our case, simply because those approaches apply to a constant matrix A only. In contrast, we are dealing with a problem that covers any possible k and \(\alpha \). As such, each entry of \(Q_{k+1}\) in (3.66) is a function of the variables k, s and t, and so are the dimensions of \(G_{k+1}\) and \( Q_{k+1}\). Nevertheless, we can still manage to prove \(\delta R_k \ge 0\) analytically as below.

From the above elaboration, the key issue to prove \(\delta R_k \ge 0\) is to prove the positivity of \( Q_{k+1}\), which in turn is to prove the positivity of \( M_{k+1}\). It is because \( N_{k+1}\) is a diagonal matrix with each diagonal entry \({{{\theta }}}_{ss}\) being positive; then, from (3.65), the positivity of \( Q_{k+1}\) is determined by the positivity of the matrix \( M_{k+1}\). We now trace back from (3.64):

Recall that \(D_{k+1}\) is a single column matrix of \((\alpha - k)\) rows, with \(d_1 = 1\) and the remaining entries \(d_s = \nicefrac {(s - 1)}{(k + 2)}\). \(E_k^\tau \) is a single row matrix of \((\alpha - k)\) columns, with its general entry \(e_t = \nicefrac {(k + 1)}{ t}\). Then, their product \( Y_{k+1}\) is an \((\alpha - k) * (\alpha - k) \) square matrix. The general entry of \(Y_{k+1}\) is:

A primary feature of (3.67) is that \(y_{rt} > y_{pt}\) if \(r > p\), except for \(y_{1t}\), the first row of \(Y_{k+1}\), since \(d_1 = 1\). That is, the row \(y_{1t}\) is just \(E_k^\tau \) itself:

Particularly, the first entry \(y_{11} = 1 * (k + 1) \ge 2\). In general, \(y_{1t} > y_{st}\) unless \(s > k + 2\), which means the first row \(y_{1t}\) is more favorable than what is generally expressed in (3.67) for leading to \(\delta {R_k} \ge 0\). As such, we can safely consider only the general entry \(y_{st}\) as expressed in (3.67) in the rest of the analysis.

Consequently, the matrix \(P_{k+1} = Y_{k+1} - \tilde{I}_{k+1}\) is also a square matrix of dimension \((\alpha - k)\), and its first entry being \(p_{11} \ge 1\), while the general entry being:

Similarly, \(M_{k+1} = (k + 1) (\alpha - k) P_{k+1} + (\alpha +1) \tilde{E}_{k+1}\) is again a square matrix of dimension \((\alpha - k)\), with its first entry being certainly positive. The general entry of \(M_{k+1}\) is:

where

Notice that, from (3.68), \(y_{st}\) can never be equal to 1, thus \(p_{st}\) and \(m_{st}\) can never be zero but only negative or positive. Since \((k + 1) / [(k + 2) t] > 0\), to see whether \(m_{st} \ge 0\) we only need to see the positivity of z.

Set \(z \ge 0\), we get:

which can be simplified as:

where \(a = (k + 1) / (k + 2)\), which is close to 1 when k is relatively large, and

\(b = [(\alpha +1) + (\alpha + 1)^2] / [(\alpha - k) (k + 2)] > 0\).

In analytic geometry, (3.71) represents a plane in the 3D space (s, t, z), while the equality case of (3.73), i.e., \(t = a * s + b\), represents the intersection line AB between that plane and the plane \(z = 0\), as depicted in Fig. 2, where the number a is the slope of the line, and the angle \(\varphi \) is in the range of \(33^\circ< \varphi < 45^\circ \) since \(2/3 \le a < 1\). The number b is the intercept of the line AB on the axis Ot, and notice that \(b > 0\), which means line AB can only lie above the diagonal line \(t = s\) within the matrix area.

In our case, line AB in Fig. 2 means a boundary line such that all the matrix entries on the left side of the line are positive, while only those entries above the line (the dashed area of Fig. 2) are negative, which is inferable from (3.72). The balance of the aggregative positivity and negativity of the \(M_{st}\)s then determines the positivity of the \(\delta R_k\). We are now approaching the point to prove the theorem with the following observations.

Observation 1:

That is, \(M_{st}\) is increasing along the direction s in Fig. 2. It means that the matrix entries around the left bottom corner are the most positive.

Observation 2:

For the above, notice that the second term

\({2 (k + 1) (k + 2) (\alpha - k)t}\)

is cubic in k and thus dominates the other terms. Meanwhile, notice that “\(t > s\)” is the plane equation of the upper triangle in Fig. 2. As such, \(\nicefrac {\varDelta M_{st}}{\varDelta t} < 0\) holds, which can also be numerically verified, although we omit the verification here to save space. It follows that \(M_{st}\) is decreasing with t increasing. It is the primary reason that the \(M_{st}\)s in the upper-right area above the line AB in Fig. 2 are negative as stated before, and the closer to the upper-right corner, the more negative the \(M_{st}\)s become.

Observation 3:

Now, look at the elements of the \(\delta R_k\):

where the \({{{\theta }}}_s\) (or \({{{\theta }}}_t\)) series is the lower section (from \( i = k + 1\)) of the s (or t) column of Pascal’s triangle (refer to Table 5 in subsection 3.9); \({{{\theta }}}_s\) (or \({{{\theta }}}_t\)) is thus increasing as s (or t) increases.

Observation 4:

The ordinary \(g_i\) distribution is dense in the middle section.

Now, if we use the \(M_{st}\) matrix as shown in Fig. 2 to represent the elemental positivity distribution of the \(\delta R_k\), from the above observations, we will see that the elements at the left bottom corner are the most positive, followed by those in the middle part, while those in the remaining area above line AB are negative.

Finally, notice that the boundary line AB lies above the diagonal line, as shown in Fig. 2.

With all of the above observations, we can conclude that not only the area but also the degree of the positivity of the elemental \(\delta R_k\) distribution is dominant over that of the negativity. That is, aggregately \(\delta R_k \ge 0\) would generally hold in the case of an ordinary \(g_i\) distribution.

However, in the case of an unordinary \(g_i\) distribution, we need to consider two possible exceptions to the above general conclusion.

One is that, when \( k \rightarrow \alpha \), if some \(g_i\)(s) is (are) outstanding in the right tail, then ultimately \(\delta R_k < 0\) may happen. This is because the \(X_1\) Eq. (3.56) is an approximation of (3.55) that applies when k is not close to \(\alpha \). When \( k \rightarrow \alpha \), we need to look at the original Eq. (3.55) \( X_1= [G_{k+1}{{{\varTheta }}}^{+}_{k+2} - g_{k+1}] (g_k + G_{k+1}){{{\varTheta }}}_{k}^{\prime }\) again. In this case, the dimension \((\alpha - k)\) of the matrix becomes small with a large k, and so does the product \(G_{k+1}{{{\varTheta }}}^{+}_{k+2}\). As such, a large \(g_{k+1}\) may lead \( X_1\) to become negative, and ultimately \(\delta R_k < 0\) may take place. We call this phenomenon an “island exception,” or “exception 1.”

The other case is that, when k becomes small relative to \(\alpha \), so does the intercept b of line AB in Fig. 2. That means the line will shift leftwards, and the ratio of the positive area to the negative one of the elemental \(\delta R_k\) distribution will decrease. If at this time one or more \(g_i\)s fall deeply in the left tail of the \(g_i\) distribution, then the positivity of the \(\delta R_k\) will be further undermined, and \(\delta R_k < 0 \) may happen. We call this a “cliff exception,” or “exception 2.”

However, the adverse effect of the cliff exception is much weaker than that of the island exception. Firstly, a smaller k means a larger dimension \((\alpha - k)\) of the matrix. Secondly, the angle \(\varphi \) in Fig. 2 will become smaller with a smaller k. Thirdly, the most positive \(M_{ st}\) area (the left bottom corner) remains unchanged. All of these aspects together mean it will be much harder to turn a positive \(\delta R_k\) into a negative one than in the island exception.

At this point, one may ask what the effect would be if the two exceptions happen together. A brief answer is that, since shorter tuples do not affect the \(R_k\)s of larger k as stated before, the cliff exception does not reinforce the effect of the island exception. Similarly, the island exception will not increase but reduce the adverse effect of the cliff exception since more short patterns will be generated from more long tuples. Note that \(R_k = 1\) holds in the preliminary case but \(R_k < 1\) in the other cases simply because of the reduced number of long tuples.

Finally, we have two important observations from empirical studies (refer to Table 7 in Sect. 4). Firstly, the situation of \(\delta R_k < 0 \) is rare even if either of the exceptions happens (provided it is not too severe). Secondly, even if some case(s) of \(\delta R_k < 0 \) took place in an application, the quasi-concavity of the concerned \(H_k\) curve may still hold.

A conclusion is then: in an ordinary \(g_i\) distribution, \(\delta R_k \ge 0 \) will generally hold, and the smaller the k, the easier to maintain \(\delta R_k \ge 0 \) . Theorem 3.3 is now fully proved. \(\square \)

Example 2

For a better understanding, the following is a small example based on the running example to demonstrate the related operations and the degree of the discrepancy made by the approximation in the above proof. As given, \(\alpha = 6\), and take \(k = 3\) with other information as below:

\(G_k = \begin{pmatrix} 2&2&0&1\end{pmatrix}, G_{k+1} = \begin{pmatrix} 2&0&1\end{pmatrix}, G_{k+2} = \begin{pmatrix} 0&1\end{pmatrix}\),

\({{{\varTheta }}}_{k} = {\begin{pmatrix}1&4&10&20 \end{pmatrix}}^\tau , {{{\varTheta }}}_ k'= {\begin{pmatrix} 4&10&20 \end{pmatrix}}^\tau , \)

\({{{\varTheta }}}_{k+1} = \begin{pmatrix} 1&5&15 \end{pmatrix}^\tau , {{{\varTheta }}}_{k+2} = {\begin{pmatrix} 1&6\end{pmatrix}}^\tau \),

\({{{\varTheta }}}_{k+2}^+ = {\begin{pmatrix} 1&1&6 \end{pmatrix}}^\tau , \)

\(D_{k+1} = {\begin{pmatrix} 1&1/5&2/5 \end{pmatrix}}^\tau , E_k^\tau = \begin{pmatrix} 4&2&4/3 \end{pmatrix}\).

Then, by (3.51), the original formula:

By the approximated (3.57),

The above demonstrates that the approximation keeps the same sign of \(\delta {R_k}\), and the discrepancy between the precise and the approximated numerical values of \(\delta {R_k}\) is around 12% in this small dimension matrix example. More appreciably, the approximation significantly simplified the proof of Theorem 3. Due to space limitations, we can only leave the exercise of the operations to reach (3.66) \(\delta R_k = G_{k+1}Q_{k+1}G^T_{k+1}\), through (3.67)–(3.70) to the interested readers.
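The vectors listed in Example 2 can be regenerated with a few lines of code. The sketch below assumes that each \({{{\varTheta }}}\) vector holds the binomial coefficients \(\binom{i}{k}\) for \(i = k, \ldots , \alpha \) (a column section of Pascal's triangle), and that \(D_{k+1}\) and \(E_k\) are the elementwise ratios \({{{\varTheta }}}^{+}_{k+2} / {{{\varTheta }}}_{k+1}\) and \({{{\varTheta }}}_k' / {{{\varTheta }}}_{k+1}\). These relations are inferred from the listed numbers rather than quoted from the formal definitions, and \({{{\varTheta }}}^{+}_{k+2}\) is simply copied from the example.

```python
from fractions import Fraction
from math import comb

alpha, k = 6, 3

def theta(order, alpha):
    """Column section of Pascal's triangle: C(i, order) for i = order..alpha."""
    return [comb(i, order) for i in range(order, alpha + 1)]

theta_k = theta(k, alpha)          # Theta_k
theta_k_prime = theta_k[1:]        # Theta_k' drops the first entry
theta_k1 = theta(k + 1, alpha)     # Theta_{k+1}
theta_k2 = theta(k + 2, alpha)     # Theta_{k+2}
theta_k2_plus = [1, 1, 6]          # Theta^+_{k+2}, copied from Example 2

# Assumed elementwise ratios (inferred from the listed values).
D_k1 = [Fraction(p, q) for p, q in zip(theta_k2_plus, theta_k1)]
E_k = [Fraction(p, q) for p, q in zip(theta_k_prime, theta_k1)]

print(theta_k, theta_k1, theta_k2)
print([str(x) for x in D_k1], [str(x) for x in E_k])
```

Using `Fraction` keeps the ratios exact, so they can be compared directly with the values \(1, 1/5, 2/5\) and \(4, 2, 4/3\) in the example.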

Example 3

The following are a few illustrative examples (cases) to see the main points of Theorem 3 and the relations between the \(g_i\) and the \(R_k\) distributions, as well as the quasi-concavity of the \(H_k\) curves, as shown in Table 4. Case a as the base case refers to the original data of Table 1, which results in an increasing \(R_k\) series and a quasi-concave \(H_k\) curve. Cases b to d demonstrate the minimum change of \(g_k\)(s) required to have a decreased \(R_k\) from the base case, where the bold numbers indicate the position k in column 2, the \(g_k\) in column 3, the \(R_k\) in column 4, and the apex of a concerned \(H_k\) curve in column 5. Columns 2 and 3 demonstrate that the smaller the k, the more significant the change of \(g_k\)s required to get a decreased \(R_k\). Case b gives a typical example of the “cliff exception”.

Note that, since the \(H_k\)s are integers, the \(R_k\)s should be fractions, but for an easier comparison of the magnitudes of the \(R_k\)s, the decimals are used in the table.

The above examples demonstrate the resilience of the quasi-concavity of the \(H_k\) curve: a minor decrease in the \(R_k\)(s) may not alter the concavity. The first four \(H_k\) series in Table 4 remain strictly quasi-concave, despite the (sharp) changes of their \(g_i\) distributions and the decreasing \(R_k\)s.

Case e of Table 4 is purposely constructed to give an example of the “island exception” and a non-quasi-concave \(H_k\) curve. However, this case shows how odd the underlying \(g_i\) distribution must be, and how persistently the decreasing \(R_k\)s must hold, for the non-concavity of \(H_k\) to take place.

In real applications, although decreasing \(R_k\)s are occasionally observed, a non-quasi-concave \(H_k\) curve is seldom seen. Table 7 and the “Appendix” of this paper present empirical evidence in this regard, where a few out of hundreds of \(R_k\)s are decreasing, while all the concerned \(H_k\) curves maintain quasi-concavity.

The above indicates that the condition of monotonic \(R_k\) specified in Theorem 2 to have a quasi-concave \(H_k\) curve is stronger than required. A conclusion is that quasi-concavity is a typical property of the \(H_k\) curves.
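For a discrete series, quasi-concavity amounts to the values rising to a single peak and then falling. The following sketch checks this for two hypothetical \(g_i\) distributions (neither is taken from Table 4). It assumes \(H_k = \sum _i g_i \binom{i}{k}\), i.e., every tuple of length i contributes \(\binom{i}{k}\) patterns of length k; the function names are illustrative.

```python
from math import comb

def h_series(g):
    """H_k for k = 1..alpha, assuming H_k = sum_i g_i * C(i, k).

    g maps a tuple length i to the number g_i of tuples of that length.
    """
    alpha = max(g)
    return [sum(n * comb(i, k) for i, n in g.items())
            for k in range(1, alpha + 1)]

def is_quasi_concave(series):
    """True if the series is non-decreasing up to its peak, then non-increasing."""
    peak = series.index(max(series))
    rising = all(x <= y for x, y in zip(series[:peak], series[1:peak + 1]))
    falling = all(x >= y for x, y in zip(series[peak:], series[peak + 1:]))
    return rising and falling

# Hypothetical distributions: a mild one, and a deliberately odd one with
# many short tuples plus a single very long tuple.
mild = h_series({3: 2, 4: 2, 6: 1})
odd = h_series({2: 50, 10: 1})

print(mild, is_quasi_concave(mild))
print(odd, is_quasi_concave(odd))
```

The odd distribution has to be quite extreme before the single-peak shape is lost, which is in line with the resilience discussed above.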

Having seen the quasi-concavity property, one may ask whether an \(H_k\) curve can be (fully) concave. The following subsection answers this question.

3.7 The full concavity interval of the \(H_k\) curve

Theorem 4

An \(H_k\) curve can be strictly concave downward within an interval \(E = [a, b]\), if the following condition holds:

where \(\alpha \) is the maximum length of all the data tuples, and the leftmost boundary of the interval can reach \(a = 1\), while the rightmost boundary

For the proof, notice first the definition of “concavity”: a function f\((\mathbf {z})\) is concave over an interval E if, for any three points \(\mathbf {z_1}, \mathbf {z_2}, \mathbf {z_3}\) within E such that \(\mathbf {z_2} = \lambda \mathbf {z_1} + (1- \lambda )\mathbf {z_3}\), where \(\lambda \in (0, 1)\) and \(\mathbf {z}\) can be a vector of multidimensional variables, the following relation holds [37]:

\[ f(\mathbf {z_2}) \ge \lambda f(\mathbf {z_1}) + (1 - \lambda ) f(\mathbf {z_3}) \qquad (3.77) \]

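Alternatively, setting \(\lambda = \frac{1}{2}\), the above becomes [37]:

\[ f(\mathbf {z_2}) \ge \frac{1}{2} \left[ f(\mathbf {z_1}) + f(\mathbf {z_3}) \right] \qquad (3.78) \]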

where \(\mathbf {z_2}\) is in the middle of \(\mathbf {z_1}\) and \(\mathbf {z_3}\): \(\mathbf {z_2} =\frac{1}{2}(\mathbf {z_1} + \mathbf {z_3})\).

Notice that the function f\((\mathbf {z})\) is “strictly concave” if the above weak inequalities become strict inequalities. This paper will mainly use the strictly concave function, but the word “strict” may be dropped. On the other hand, we may add the word “full” to the concavity to distinguish it from the quasi-concavity. Intuitively, a fully concave curve is rounder than a quasi-concave one within the same interval in their depictions. The proof of the above theorem follows.

Proof

We use (3.78) and take any three consecutive points \(k, k+1\) and \(k+2\) of the domain of the \(H_k\) curve to check whether they satisfy (3.78). In this case, \(\lambda = \frac{1}{2}\) and \(k+1 = \frac{1}{2} (k + (k+2))\). Then, the related \(H_k\) values must satisfy: \(\frac{1}{2}(H_k + H_{k+2}) < H_{k+1}\). By (3.36), it means:

Manipulating the above and removing \(H_k\) since \(H_k > 0\), we get the condition for \(H_k\) concavity:

which is the necessary and sufficient condition (3.75) specified in the theorem. In other words, (3.75) is a specification of (3.78) in the case of the \(H_k\) curve.
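The midpoint test \(\frac{1}{2}(H_k + H_{k+2}) < H_{k+1}\) used in the proof can be applied directly to any \(H_k\) series. The sketch below is a minimal illustration under the assumption that \(H_k = \sum _i g_i \binom{i}{k}\). The tuple counts for lengths 3 to 6 are borrowed from \(G_k\) in Example 2, while setting the counts of shorter tuples to zero is a hypothetical simplification, and the function names are illustrative.

```python
from math import comb

def h_series(g):
    """Map k -> H_k for k = 1..alpha, assuming H_k = sum_i g_i * C(i, k)."""
    alpha = max(g)
    return {k: sum(n * comb(i, k) for i, n in g.items())
            for k in range(1, alpha + 1)}

def concave_triples(H):
    """All k for which the points k, k+1, k+2 pass the strict midpoint test.

    The test (H_k + H_{k+2}) / 2 < H_{k+1} is written with integers only.
    """
    return [k for k in sorted(H)
            if k + 2 in H and H[k] + H[k + 2] < 2 * H[k + 1]]

# g_3..g_6 as in Example 2; no tuples shorter than 3 (hypothetical).
g = {3: 2, 4: 2, 5: 0, 6: 1}
H = h_series(g)
starts = concave_triples(H)

print(H)
print(starts)
```

Each k returned marks a triple \((k, k+1, k+2)\) on which the curve is strictly concave, so consecutive values of k chain into one concave interval.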

To get the solution of k in terms of \(\alpha \), we consider the preliminary case first, where \(R_k = R_{k+1} = 1\), and (3.75) becomes:

The solution (for k) of the above inequality is \(p< k < r\), where

Since p and r each must be an integer, the above should be precisely expressed as

where \(\lceil {(x)}\) means the ceiling of x, the minimum integer \(p \ge x\); \({(y)}\rfloor \) means the floor of y, the maximum integer \(r \le y\).

After we have specified the above, however, we will mainly use (3.80) and (3.81) in the following context for simplicity.

In the preliminary case, p is the left end of the concavity interval of the \(H_k\) curve, but r is not the ultimate right boundary yet, since, based on the above formulations, if \(k = r\) is a solution to (3.79), then \( k+1\) and \( k+2\) will be included in the concave interval as well. That is:

which is then the rightmost boundary of the full concave interval, as specified in (3.76) of Theorem 4.

It is easy to see from (3.80) and (3.81) that, in the preliminary case, the two boundaries, p and b, are symmetric about \(\alpha /2\) (the middle of the maximum tuple length), which is consistent with the symmetry of the preliminary \(H_k\) curve introduced before. For \(\alpha \le 4\), the above solution covers the full range of the \(H_k\) curve. However, for \(\alpha > 4\) in the preliminary case, only the middle section of the \(H_k\) curve is fully concave, while its right and left tails are quasi-concave only.
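The preliminary case can be checked numerically. The sketch below assumes that, with all tuples of length \(\alpha \), \(H_k\) is proportional to \(\binom{\alpha }{k}\), and compares a brute-force midpoint test with a quadratic in k. The quadratic \(4k^2 - (4\alpha - 8)k + (\alpha ^2 - 5\alpha + 2) < 0\) and its roots \(\frac{1}{2}(\alpha - 2 \mp \sqrt{\alpha + 2})\) are re-derived here from the binomial recurrence for this illustration and are not quoted from the paper's numbered equations.

```python
from math import comb, sqrt

def concave_starts_brute(alpha):
    """k in 1..alpha-2 with C(alpha,k) + C(alpha,k+2) < 2*C(alpha,k+1)."""
    return [k for k in range(1, alpha - 1)
            if comb(alpha, k) + comb(alpha, k + 2) < 2 * comb(alpha, k + 1)]

def concave_starts_quadratic(alpha):
    """Same k values from the re-derived quadratic condition (an assumption)."""
    return [k for k in range(1, alpha - 1)
            if 4 * k * k - (4 * alpha - 8) * k
            + (alpha * alpha - 5 * alpha + 2) < 0]

def real_boundaries(alpha):
    """Real-valued roots p < r of the quadratic and the right boundary r + 2."""
    half_root = sqrt(alpha + 2) / 2
    p = (alpha - 2) / 2 - half_root
    r = (alpha - 2) / 2 + half_root
    return p, r, r + 2

for alpha in (4, 6, 10):
    ks = concave_starts_brute(alpha)
    p, r, b = real_boundaries(alpha)
    # The concave interval runs from the first k to the last k plus 2.
    print(alpha, ks, (ks[0], ks[-1] + 2), round(p, 3), round(b, 3))
```

In this sketch the real-valued boundaries always satisfy \(p + b = \alpha \), which reflects the symmetry about \(\alpha /2\). For \(\alpha = 10\) the test passes at \(k = 3, 4, 5\), so only the middle section \([3, 7]\) is fully concave.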

Now consider the general case, where the uniform data tuple length no longer holds and \(R_k < 1\) comes into play. An interesting question is then whether the full concavity interval will increase or decrease in this case. Finding the precise answer directly from the problem (3.75) is impossible since there are three variables \(k, R_k, R_{k+1}\) within one function. Nevertheless, we can obtain a good approximate solution as below.

Notice that, despite the monotonic property of \(R_k\), in general, \(R_{k+1}\) may only be slightly larger than \(R_k\), considering there is a series of \(R_k\)s in an application. We then take an approximation of \(R_{k+1} = R_k\), such that (3.75) becomes:

After manipulation, the above becomes:

To solve the above, we first find the two roots of the corresponding equation:

Let the two roots of the above be \( r_1\) and \(r_2\) in terms of k. Since \(0 \le R_k \le 1\), there will be the relation \(0< r_1< R_k < r_2 \le 1\) to satisfy (3.83).

Equation (3.84) is a typical quadratic equation, and we can get its two roots as below:

where \(r_1\) and \(r_2\) represent the two concavity boundaries in terms of k. Our job is then to find out the satisfactory ks in terms of \(\alpha \).

The solution for (3.85) is every k with \( 0< k < \alpha - 1\) (recall again that the \(R_k\) series ends at \(\alpha - 1\)). That is, the left boundary theoretically can be anywhere before the right boundary, which further implies that, in the most favorable case, the left boundary can stretch leftmost such that \(a = k = 1\), as declared in the theorem.