Abstract

Search application has been one of the many domains wherein multi-robot systems (MRS) have been applied over the past few years. Such applications include, but are not limited to, environmental monitoring, battlefield surveillance, search and rescue, oil and gas exploration, etc. A particular case of MRS which benefits from a large number of agents, and hence a potentially higher performance under such applications, is denoted as swarm robotics. Nonetheless, the deployment of swarm robotics approaches to fulfil search missions has not yet received the proper attention. This paper tackles the problem of target searching by proposing a novel algorithm combining the Darwinian principle of survival-of-the-fittest with an ion-based repulsion mechanism. The proposed repulsion-based robotic Darwinian particle swarm optimization was benchmarked against the traditional RDPSO, the robotic PSO and the distributed PSO, under a multi-robot target searching task. The results depict the superiority of the proposed approach in terms of both speed and search result. Also, the proposed approach shows a superior performance when it is compared with other approaches as the number of robots decreases.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Despite being a field under constant growth, the use of multi-robot systems (MRS) to fulfil target search missions has not yet received the proper attention. Yet, MRS offer several advantages over single solutions, or even human rescuers, within search applications. Besides providing a natural fault tolerance mechanism, the use of multiple robots is especially preferable when the area is either hazardous or inaccessible to humans (Suarez and Murphy 2011). This is even more evident with swarm robotics. Swarm robotics offers several major benefits over the conventional search techniques, such as the robustness of the swarm to individual units failure or run-time addition of new units, the scalability of emergent behaviours to swarms of different sizes, the leveraging of self-organization principles of environmental noise and individual differences, and the synergetic effect whereby the work of the swarm is greater than the sum of the work by the individual units (known as superlinearity) (Floreano and Mattiussi 2008).

One of the most popular swarm optimization algorithms is the particle swarm optimization (PSO) (Eberhart and Kennedy 1995). PSO is a metaheuristic and population-based optimization approach inspired by the flocking behaviour of birds. PSO consists of a swarm of agents, called particles, represented by their position and velocity vectors. Also, each particle represents a solution for the optimization problem. Hence, to find a suitable solution (which is known as a higher fitness solution), particles must explore the search space considering the past experience of the entire swarm.

The PSO clearly reveals the effect of information sharing between particles by updating local and global information, which affects the velocity and the final position of particles. Also, there is a stochastic exploration effect due to the introduction of the random multipliers, which is balanced between exploration and exploitation. Given its simplicity in terms of implementation, and reduced computational and memory complexity, the PSO has been successfully adapted to MRS for searching task, wherein the behaviour of each robot is defined by similar rules and similar difference equations from its original version (Couceiro et al. 2011a, 2012a; Hereford et al. 2007).

Bearing these ideas in mind, multiple alternative approaches have been trying to mimic the behaviour of birds and insects, and even the Darwinian principle inherent to all living creatures, to develop cooperative MRS capable of dealing with real-world challenges, such as target searching. One of these approaches was robotic Darwinian particle swarm optimization (RDPSO) (Couceiro et al. 2011a); a particle swarm optimization (PSO) (Eberhart and Kennedy 1995)-based evolutionary approach adapts to real-world MRS.

Despite the positive results obtained by using the RDPSO algorithm (Couceiro et al. 2014), robots still take a considerable amount of time to converge towards the solution, especially with smaller swarms. Although one would expect to have a large number of robots in every swarm-based robotic algorithm (more than hundreds or even thousands of units), in real-world scenarios this assumption does not always hold, since it is limited by cost or even scenario dimensions. As an aggravating condition, the RDPSO dynamically divides the whole population of robots into multiple swarms, thus reducing even more the number of cooperative agents and, consequently, the emergent convergence.

Bearing this limitation in mind, this paper proposes a novel mechanism to improve robots’ convergence rate based on the repulsion between similar ions. This mechanism intends to provide a suitable diversity level among collaborative robots. Diversity between robots means the ability to maintain a minimal distance to each other, thus leading to a faster and more accurate search. This is a phenomenon observed between ions with similar charge, in which the closer they get, the more they repel each other. This mechanism is applied to the RDPSO algorithm under a small robotic population (below 10), increasing the diversity level of robots, which efficiently allows to avoid local optima and solve the problem of slow convergence rate. The proposed approach is evaluated as a multi-robot target searching task by comparing it with the traditional RDPSO (Couceiro et al. 2012a), the robotic PSO (RPSO) (Couceiro et al. 2011a) and the distributed PSO (DistPSO) (Hereford et al. 2007).

2 Related Work

2.1 Robotic Target Searching Algorithms

Over the past few years, some algorithms were designed to solve the tasks such as optimization problems. They have been adapted to embrace the principles associated with real robots. One of the first adapted versions of the PSO for handling real-world constraints, such as obstacles, was presented by Pugh and Martinoli (2006, 2007). The main difference between the algorithm presented by those authors, denoted hereafter as EPSO, and the classical PSO is that each robot (or particle) only takes the information of robots within a fixed radius \(r_{c}\) (omnidirectional communication) into consideration. The authors used the Braitenberg obstacle avoidance algorithm (Braitenberg 1986), so if a robot is executing a step of the algorithm and avoids an obstacle, it will continue moving in its new direction but will not modify its internal velocity representation. Although such methodology makes it possible to decouple the high-level behaviour of robots from collision avoidance routines, such strategy may be unfeasible if one needs to study the stability of the algorithm considering obstacles influence over robots (Couceiro et al. 2012c), or even define adaptive methodologies to systematically adjust all the algorithm parameters based on contextual information (Couceiro et al. 2012a).

Similarly, Hereford et al. presented a distributed PSO (DistPSO) in swarm platforms (Hereford et al. 2007; Hereford and Siebold 2008). As in the previous method and most of swarm robotic alternatives, there is no central agent to coordinate the robots movements or actions. The authors constrained the movement of particles within a limited cone to avoid the omnidirectionality inherent to the common PSO. Although this strategy seems practical, this could be achieved by considering and manipulating the dynamical characteristics of robots, as it will be proposed in this article. The algorithm presented by Hereford et al. (2007) and Hereford and Siebold (2008) assumed that the synchronization of robots the way that robots would only compute a novel position if all other robots had exchanged the necessary information (e.g. individual solutions). Also, a robot would only share its position if its solution is the best solution in the whole swarm. This makes the reduction in the amount of communication traffic possible, though it forces the robots to stop after each iteration in order to handle all relevant information. This is an interesting strategy when using broadcasting mechanisms since robots can share information among themselves without requiring lots of communication traffic. Such strategy would not significantly improve the algorithm’s performance if the team do not benefit from ad hoc communication with multi-hop properties.

A biologically distributed algorithm inspired by glow-worm behaviour was presented and applied in MRS by Krishnanand and Ghose (2009a, b). Similarly to the RDPSO, the glow-worm swarm optimization (GSO) algorithm features an adaptive decision domain which enables the formation of subgroups in the population, where the goal is to partition the population of robots to track multiple sources concurrently. In practice, the GSO acts like a PSO with the best neighbourhood solution information. In fact, to begin a search, a robot chooses a neighbour to be its leader and moves towards it. The most probable choice for the leader is the one with the highest luciferin value, i.e. the luminescence quantity that represents the individual solution, thus corresponding to the most probable direction of the source. As a result of this leader selection, subgroups formed within the population begin to search for nearby solutions. As no evolutionary techniques were used, it is shown that all of the members of a single cluster will converge to the leader at some finite time, and members of overlapping clusters will converge to one of the leaders asymptotically.

Another interesting approach was presented by Gazi and Passino (2002, 2004) in which the swarm is modelled based on attractant/repellent profiles as aggregations of foraging swarm (AFS). This kind of attractant/repellent profiles is consistent with biological observations (Warburton and Lazarus 1991), where the inter-individual attraction/repulsion is based on an interplay between attraction and repulsion forces with the attraction dominating on large distances and the repulsion dominating on short distances. The authors presented a stability and convergence analysis of their algorithm. To that end, they carried out a behavioural analysis followed by several simulation experiments so as to define the most adequate values of the system parameters. This is worth mentioning that most of the above-mentioned works defined the parameters by using a trial-and-error mechanism. Hence, some sort of mathematical formalism, such as stability analysis, is required to enable the obtaining of such comparable performance. Despite this, the authors did not present any mechanism for sub-optimal solutions avoidance. Therefore, the convergence of the swarm cannot be proved in the general case, thus demonstrating the difficulty of obtaining general guarantees for progress properties.

The RDPSO, initially proposed by Couceiro et al. (2011a, b), similar to PSO, basically consists of a population of robots that collectively move in the search space (e.g. catastrophic scenario, city) to search for the optimal solution (e.g. number of victims, number of passengers); each robot is characterized by its pose (i.e. position and heading) and performance. For instance, if we have a group of mobile olfactory robots that are trying to find a gas leak in an indoor environment (c.f. Jatmiko et al. 2006; Marques and de Almeida 2005), each robot’s state is comprised of its pose and the corresponding value of gas density. The RDPSO allows having multiple dynamic swarms, thus enabling a distributed approach because the network that might have been comprised of the whole swarm of robots is divided into multiple smaller networks (one for each group/swarm). This makes it possible to decrease the number of nodes (i.e. robots) and the information exchanged between robots of the same network. In other words, robots interaction with other robots through communication is confined to local interactions inside the same group (swarm), thus making RDPSO scalable to large populations of robots. More details about this work will be highlighted throughout this article for comparison purposes with the other strategies.

Many works have been extending the PSO to MRS over the past few years. Couceiro et al. presented an evolutionary extension of the PSO to MRS by showing that the RDPSO can overcome problems related to obstacle avoidance, robot dynamics, sub-optimal solutions and communication constraints (e.g. Couceiro et al. 2012b, c, 2013).

The behaviour of robot \(n\) under the RDPSO algorithm can then be described by the following discrete equations at each discrete time, or iteration, \(t \in {\mathbb{N}}_{0}\):

The parameters \(\alpha\), \(0 < \alpha \le 1\), and \(\rho_{i}\), \(\rho_{i} > 0\), with \(i = 1,2,3,4\), assign weights to the inertial influence, the local best (cognitive component), the global best (social component), the obstacle avoidance component and the enforcing communication component when determining the new velocity. Coefficients \(r_{i}\), \(i = 1,2,3,4\), are random matrices wherein each component is generally a uniform random number between 0 and 1. The variables \(v_{n} \left[ t \right]\) and \(x_{n} \left[ t \right]\) represent the velocity and position vector of robot \(n\), respectively, and \(\chi_{i} \left[ t \right]\) denotes the best position of the cognitive, social, obstacle and MANET matrix components. The fractional coefficient \(\alpha\) let us to describe the robot’s trajectory because of its inherent memory property. The cognitive \(\chi_{1} \left[ t \right]\) and social components \(\chi_{2} \left[ t \right]\) are similar in PSO algorithm, where \(\chi_{1} \left[ t \right]\) represents the local best position and \(\chi_{2} \left[ t \right]\) represents the global best position of robot \(n\). The obstacle avoidance component \(\chi_{3} \left[ t \right]\) is represented by the position of each robot. It optimizes a monotonically decreasing or increasing function \(g\left( {x_{n} \left[ t \right]} \right)\) that represents the distance to a sensed obstacle. In an obstacle-free environment, the obstacle susceptibility weight \(\rho_{3}\) is set to zero. However, in real-world scenarios, obstacles need to be taken into account and the value of \(\rho_{3}\) depends on several parameters that are related to the main goal (i.e. minimize a cost function or maximize a fitness function) and the sensing information (i.e. monotonicity of \(g\left( {x_{n} \left[ t \right]} \right)\)). The MANET component \(\chi_{4} \left[ t \right]\) is represented by the position of the nearest neighbour; it increases by the maximum communication range \(d_{\hbox{max} }\) towards robot’s current position. A higher \(\rho_{4}\) may enhance the ability to maintain the network connected and will guarantee a specific range or signal quality between robots.

A-RPSO (adaptive robotic PSO) is another robotic target searching algorithm which was proposed by Dadgar et al. (2015). A-RPSO is based on RPSO; however, A-RPSO was equipped with a mechanism to solve the local optima trapping and slow convergence rate. This mechanism functions are based on checking the status of each robot and entire swarm (all the robots) per each iteration. In other words, this mechanism tries to solve the above-mentioned defects by weakening the ability of robots to track the “present best” and “global best”. Even though this mechanism leads to a faster search, it needs very large amounts of communication between robots. Sombolestan et al. (2018) proposed a two-phase searching algorithm for target searching. In the first phase, a Braitenberg-based searching procedure was used if robot does not receive any signals from the hidden target. In the second phase, as soon as the robot receives the first identifying signal from the hidden target, a reinforcement learning-based searching was applied for finding optimal path-planning to reach the target. Tan et al. proposed a multi-swarm hybrid FOA-PSO (MFPSO) algorithm for robot target searching. MFPSO, using an improved FOA (fruit fly optimization algorithm), is used to find the next optimal robot position. Also, by using a multi-swarm strategy, the level of diversity and exploration increased (Tang et al. 2019). Feng et al. proposed an improved multi-robot olfactory search method based on PSO for locating two types of time-varying indoor particle sources. The first type is periodic sources and the second one is decaying sources. This method considers both particle concentrations and indoor air velocities by including an upwind term in the standard PSO algorithm (Feng et al. 2019).

2.2 Approaches to Generate Diversity Between Members of a Population

In general, population-based optimization algorithms like PSO have the potential to be trapped in local optima. Another important drawback of these algorithms is the deficiency of slow convergence rate. For example, in PSO, particles tend to converge rapidly; thus, the diversity between particles rapidly decreases. However, the performance of PSO is highly dependent on the diversity between particles and the diversity is essential for a healthy search (Pehlivanoglu 2013). Diversity among particles leads to premature convergence avoidance and help us to escape from local optima (Wang et al. 2013). In addition to that, more diversity results in higher exploration rate. Thus, increasing the diversity between robots when we decide to use PSO to control our robots is essential.

To keep the diversity level between members of population stable, some sort of mechanisms were applied to population-based algorithms. The authors in Turky and Abdullah (2014) presented the electromagnetic algorithm (EA) for maintaining the population diverse. For example, one of these mechanisms simply considers the replacement of the worse solutions with a set of random solutions. In addition to this, authors in Abedinpourshotorban et al. (2015) proposed a physics-inspired optimization algorithm which was inspired by the behaviour of electromagnets with different polarities. This algorithm uses a randomness mechanism to keep diversity and avoid local minima. MOA is another optimization algorithm which is inspired by the principles of magnetic field theory. MOAs benefits form a novel operator to keep the population diverse. This operator was called explosion. When the diversity between the members of population is less than a certain threshold, the explosion operator will be activated and scatter the population in the search space (Tayarani-N and Akbarzadeh-T 2014).

Alternatives to this have been presented in works such as Riget and Vesterstrøm (2002), wherein an Attractive and Repulsive PSO (A-RPSO) was introduced. In that method, the authors used a diversity measure to control the swarm, in which a repulsive phase is considered to repel particles over the best local and global solutions found so far. The authors from Zhang et al. (2005) and Tang et al. (2015) decided to control particles’ diversity by changing the impact of inertia weight. Also, still in Tang et al. (2015), the authors used an elitist learning strategy to enhance population diversity.

Despite the good performance of the above-mentioned approaches in terms of providing diversity among particles, these approaches do not work in MRS. In fact, those mechanisms that are based on replacing a member of the swarm with a new solution for the purpose of diversity generation do not work in MRS. When particles are being removed or relocated, robots need to stick to the physical laws and mobility constraints that they were designed with. Moreover, approaches that use a randomization step to provide diversity may not be acceptable by MRS, because they may lead to long travelling distance from the current position of the particle to the new position, which would inevitably result in a waste of energy consumption.

Authors in Dadgar et al. (2015) addressed the problems of “slow convergence rate” and “premature convergence” for MRS domains. Also, they solved the above-mentioned problems by checking the evolution speed of each robot and the amount of aggregation (adjacency) of swarm in all iterations. In detail, by decreasing the evolutionary speed for a robot or increasing the aggregation degree, the ability of robots to track the “present best” and “global best” decreases. However, this method needs larger amounts of communication between robots (especially for calculating the aggregation of swarm).

In Rastgoo et al. (2015), authors proposed an approach named ML-PSO for robotic target searching. ML-PSO is a combination of a local search mechanism with a modified PSO algorithm, whereas in the modified PSO, the movement of particles (robots) is the same as basic PSO, except that ML-PSO added a component to increase the global searching. These modifications on PSO allow ML-PSO to overcome the problem of premature convergence; it also reduces the search time.

3 Repulsion-Based RDPSO

In this article, we proposed a mechanism to improve the diversity of the search fostered by robots; then, we validated our approach using the RDPSO algorithm. We named the proposed approach as repulsion-based RDPSO (RbRDPSOFootnote 1). As we previously mentioned, diversity between robots (particles) leads to a faster convergence rate, which also improves the global search ability. However, as it was previously mentioned in Sect. 2.2, most mechanisms that are capable of generating diversity will not work in MRS. In this article, to provide the diversity between robots, we herein propose a novel approach that has never been applied in the context of robotic target searching, based on the “repulsion between similar ions”. This mechanism works well in MRS and has three advantages. The first one is of the easiness in adapting such mechanism in the robotic domain. The second one is the intelligibility of this approach as an extension of biologically inspired phenomena, following the same principles of swarm intelligence. Finally, the proposed approach is easily adapted to any PSO-based robotic approach, for example, the RDPSO. This improvement leads to an outstanding performance in comparison with other state-of-the-art approaches in robotic domain (especially in terms of successful search mission).

In this paper, we assumed that the target emits a signal that other robots can sense. Moreover, robots are equipped with sensors that let them sense the target and detect obstacles. In general, sensors have been used to sense targets (e.g. cameras), and robots can determine their position if they are in a specific range of distance, rs. These assumptions are popular in this domain.

Bearing this idea in mind, our method can keep a suitable diversity among the robots during the search mission using a repulsion mechanism. This causes a higher exploration rate, and it also avoids “slow convergence rate” problem.

In the proposed method, each robot updates its velocity using Eq. (3), which is an extension of Eq. (1):

\(\chi_{5} \left[ t \right]\) denotes an attractive position which leads to minimizing robots’ overlapping search area. In other words, position \(\chi_{5} \left[ t \right]\) is directly related to robots’ diversity. Similar to other parameters, \(\rho_{5}\) is the repulsion sensitivity coefficient and r5 is a random number uniformly distributed within the range [0, 1]. If the search area of a robot has no overlap with other robots, then \(\rho_{5} = 0\).

To find \(\chi_{5} \left[ t \right]\), for a kth robot, we create a (2rs + 1 × 2rs + 1) dimension matrix, \(\varPhi_{k}\), where the centre of this matrix corresponds to the current position of robot k. The width of each cell of \(\varPhi_{k}\) is one square, in which we fill \(\varPhi_{k}\) according to Euclidean distance of centre of each cell to the other robots:

where \(\varPsi_{ij}^{m}\) for the kth robot is a value in the range of [0,1]. The value of \(\varPsi_{ij}^{m}\) tends to zero when the mth robot is far from the kth robot and no overlap between their search areas is observed (in other words, the distance between the kth and mth robot is bigger than 2rs, where half of 2rs is related to the mth robot and the other half is related to the kth robot). On the other hand, the value of \(\varPsi_{ij}^{m}\) tends to 1 when the mth robot is so close to the \(k\)th robot (their search areas match). \(x\left[ {d_{ij} } \right]\) and \(y\left[ {d_{ij} } \right]\) denote the position centre of the ith row and the jth column of the matrix \(\varPhi_{k}\).

It is, however, noteworthy that the search area of several robots may present some overlap with the one of a given \(k\)th robot. Therefore, one should consider the impact caused by all robots as:

where \(V_{ij}^{k}\) denotes the final value for the ith row and jth column cell of \(\varPhi_{k}\). NR is the number of robots. After calculating \(\varPhi_{k}\) for all robots within the population, we initialize \(\chi_{5} \left[ t \right]\) with a cell of \(\varPhi_{k}\) which has a minimum value (if several cells have minimum value, then \(\chi_{5} \left[ t \right]\) is initialized by the nearest one). For example, Fig. 1 shows a portion of search space around the blue robot. Also, the corresponding matrix for this robot is shown in Fig. 1. The values of this matrix are filled according to the distance of red robot and the centre of each cell. If the distance between the red robot and the centre of each cell is superior to 2rs + 1, then the value of this cell is set to zero (in this example, rs = 3). The nearest cell to the current position of the robot is highlighted with the grey colour. Note that the blue robot is placed in the centre of this matrix.

In general, the velocity updates within the RDPSO help to attract the robot towards the best member of the swarm (which has the highest fitness value among all of the robots). Therefore, the diversity between robots decreases. If robots get too close to each other (very low diversity), then robots’ velocity drops to smaller values. This is due to robots’ current position being close to their local and global best positions. This leads to a small value for differences between the current position of the robot with local and global best (see the last term of Eqs. (1) and (3)) and, as a consequence, robots’ motion is almost inexistent. In the proposed method, the new component \(\rho_{5} r_{5} \left( {\chi_{5} \left[ t \right] - x_{n} \left[ t \right]} \right)\) prevents robots from getting too close to each other and it leads to an increment in the level of diversity between robots. Figure 2 shows the impact of this component.

In Fig. 2a, there are two robots at iteration t. Sensed area, wherein robots can detect the exact position of the target, has been shown by the grey colour. Suppose that the blue robot (right one) is the nearest to the target. This robot is known as the “best robot” or gBest (global best). The second red robot (behind the “best robot”) tracks the “best robot”, following the RDPSO original equation (Eq. (1)). By following the original RDPSP, in the next iteration (t + 1), the red robot may get too close to the blue robot (Fig. 2b). However, the new component \(\rho_{5} r_{5} \left( {\chi_{5} \left[ t \right] - x_{n} \left[ t \right]} \right)\) of Eq. (3) does not allow robots to get close to each other by minimizing the overlapped sensed area. As a result, the diversity between them increases and it leads to a higher exploration rate (Fig. 2c).

It is noteworthy that a high diversity in the final phase may lead to a slow convergence rate. Hence, in the final phase, one must prevent diversity from becoming too high (Sun et al. 2011). This problem can be solved by decreasing the impact of \(\rho_{5}\) after each iteration:

where \(U\) and \(L\) are the upper and lower bounds for \(\rho_{5}\), respectively. Also, t is the current number of iterations and \({\text{maxItr}}\) is the maximum number of iterations allowed (termination condition). Thus, our approach will not have a large diversity in the final phase.

The main steps of the proposed method are described in Algorithm 1, where Ps is the population size. The fitness function is represented by F(·), and “Fi” indicates the fitness value retrieved by the \(i\)th robot. To consider any constraints on the robot’s motion, the function “Satisfying Consistency()” is used. This function checks the robot’s constraints for movement (e.g. max admissible velocity). If some unsatisfied constraints exist, this function alters the velocity such that it can fulfil those requirements.

It is noteworthy that the fitness value for a robot in the proposed method depends on the distance between the robot and the target. The fitness function used in this article is the normalized inverse of the Euclidean distance within the range [0, 1]. If sensors cannot sense any signal, the fitness function is set to zero. In contrast, if the robot gets too close to the target, its fitness value converges towards one.

Note that robots do not know the position of the target. However, robots can sense the target using their sensors. In other words, emitted signal from the target allows the robot to sense it and determine a locally favourable direction. However, estimating the “local” direction towards the source (in a short period of time) does not mean that the source is actually there. In most real-world search problems, local measurements only give a clue about the source direction, then the robots should move towards that direction for a short period of time [and distance], and they have to re-evaluate their perception iteratively so as to find [probably] a new direction. This is the way that many biological creatures (e.g. moth) work. For instance, the signal quality of radio transmitters could be used to sense another robot, while an array of microphones could be considered to find victims through sound.

4 Experiments and Results

To demonstrate the superiority of the proposed method in robotic target searching problems, we selected three PSO-based approaches for benchmarking: RPSO (Couceiro et al. 2011a), RDPSO (Couceiro et al. 2014) and DistPSO (Hereford et al. 2007). We used identical values for the corresponding parameters shared by all searching algorithms: the inertial influence \(\rho_{1} = 1\), the cognitive component \(\rho_{2} = 2\) and the social component \(\rho_{2} = 2\). Also, we use identical value for the obstacle avoidance component (\(\rho_{4} = 1\)). The initial sub-swarm for the proposed approach and the RDPSO algorithm is determined by \(\hbox{min} ({\text{number}}\,{\text{of }}\,{\text{robots}}/4 , 3)\) (Couceiro et al. 2012a). Finally, rs = 15 (the radius which robot can detect the exact position of the target).

To determine the performance of the proposed method, several experiments regarding the number of robots and environment type were performed and the results have been presented here. All experiments were simulated in a self-developed simulator, denoted as “Robotic Target Searching Simulator”. This simulator has several features that make it ideal for the evaluation of the proposed approach (Dadgar et al. 2015):

One can define the number of robots, targets and obstacles.

Robot can move continuously (like a real-life robot).

One can define obstacles and targets visibility ranges.

One can define different shapes for obstacles.

One can create a new environment by simply changing the initial value for the number of robots, environment size, etc.

The initial point for each robot is represented by a blue star (Fig. 3). Also, obstacles and target are represented by black rectangles and the concentric circles, respectively. The obstacle visibility range is set up to be 15 squares. In all of our experiments, the position of obstacles and target is randomly selected.

To terminate the search mission, two termination conditions were considered (whichever comes first):

- 1.

Maximum iterations (it depends on the environment size and remains constant regardless of the method).

- 2.

If the fitness retrieved from at least one robot is better than 0. 99 (the maximum value is 1 and it is achieved when the robot is right next to the target).

4.1 Trajectory Comparison

By showing an example of the trajectory of the swarm for each algorithm, we are going to determine the performance of the proposed method. As shown in Fig. 3, all robots are going to start from the top left corner of the search space. The RPSO and DistPSO present a small level of diversity in each iteration, which leads to a slow progression of robots (Fig. 3a, b). In these algorithms, the position of the best robot (which is known by the highest fitness value), most of the time, corresponds to both “global best” and “personal best”. This leads to a near-zero movement for this robot (according to characteristics of PSO algorithm). So, the “best robot” has a considerably slow motion; as a consequence, it allows other robots to get too close to the “best robot” quickly. This leads to a slow progression towards the target as the gBest and pBest are too close to the current position of robots. By observing Fig. 3a, b, one will understand that all the robots lie in a small region and they search a region together. This situation leads to small progression speed and significantly decreases the exploration rate.

On the other hand, both RDPSO and RbRDPSO try to keep a high level of diversity between robots. In fact, in both methods, robots are divided into several sub-swarms, which leads to higher diversity, as each sub-swarm has its own gBest. In other words, robots are attracted to the gBest of their own sub-swarm, instead of a single gBest.

In comparison with the RDPSO algorithm, the proposed approach not only keeps sub-swarms diverse, but it also provides a suitable level of diversity among members within each sub-swarm. This leads to larger exploration rate, and larger exploration rate results in higher progression speed (in some cases); this speed is higher when it is compared with three other approaches. Also, as it was mentioned before, the larger exploration rate helps robot to avoid local optima.

4.2 The Impact of Different Environments and Different Population Sizes

This section presents several experiments; each of them was performed with different numbers of robots and in normal and complex environments. The normal environment is an environment with some obstacles when the complex environments have more obstacles in comparison with normal ones. Once again, the initial value for each parameter of each approach is identical. We also assumed that the positions of robots and obstacles are not similar. Therefore, the chance of a single robot’s initial position to be close to target in complex environment is more than the normal environment. In some cases, this may make the search look faster and be more successful in complex environments than normal ones.

Tables 1 and 2 compare the performances of searching algorithms in terms of mean and standard deviation (denoted as STD) of running time. Each row in Tables 1 and 2 shows an experiment based on the type of environment. Each one of these experiments has been repeated 30 times per each algorithm. The average and the standard deviation of iterations for successful search are reported in Tables 1 and 2. The size of the environment in all of the experiments of this section is 400 × 400 squares (cells). The size of the square depends on the robot’s size. In fact, the proposed method is independent from the robot size so it can work for a broad variety of robot configurations. In detail, RbRDPSO needs several simple operations to be applicable on small simplistic robots.

For example, as one may see in the first row of Table 1, RbRDPSO has a 90% success rate (27 out of 30 experiments) and the mean and standard deviation of the successful experiment are 193.66 and 35.93, respectively. RDPSO has better performance in comparison with RPSO and DistPSO, as it could find target in 20 experiments (67% success rate). However, RPSO can find target in 12 experiments (40% success rate) and DistPSO can find target in 11 experiments (37% success rate). Besides, the average number of iterations in RbRDPSO is better than any other approach. This means not only is RbRDPSO more successful in terms of target finding in most scenarios, but it is also faster than the others (in some cases).

Tables 1 and 2 show the performance of the proposed approached in comparison with other approaches, especially when the population size is small. In other words, by taking a close look at these tables, one can see that the difference of success rate between the proposed method and the other method is much more considerable for small size of the population. Please note that as the number of robots in PSO-based algorithms decreases, the performance of these approaches usually decreases too. Nevertheless, our approach can face small populations in a different manner and still be functional. To highlight the importance of this benefit, we considered a robotic searching mission in an unknown and harmful environment which robots might break down (e.g. robot stuck in a pit). It is then important to continue the search mission, even if robots get lost. It is worth mentioning that, in this section, we studied relatively small environments. In Sect. 4.3, we studied the impact of environment size in detail.

Note that each row of Tables 1 and 2 is a separate problem (in terms of the obstacles positioning, robots positioning and the number of robots). According to the nature of the problem, it is possible that a problem with more robots needs more time than another problem with fewer robots.

To exploit the performance of the proposed method further, a pairwise test was done. Table 3 shows the results of this test where each row of this table shows 50 distinct experiments according to given type of problem and the number of robots. Each experiment runs 30 times per each algorithm. The size of all environments in Table 3 is 500 × 500 squares. In Table 3, “+” means that our proposed method exhibited better performance. Also, “−” means that other methods exhibited better performance than the RbRDPSO. This higher performance for one experiment means that one of the approaches had higher success rate when it was compared with other ones. If both approaches have similar success rate, the faster one is considered to be the winning approach of that experiment. Since Table 3 summarizes 1000 different experiments, it clearly shows the effectiveness of each approach. As one can see in Table 3, RbRDPSO is the winner (due to its better performance) in comparison with RPSO, DistPSO and RDPSO. In 991, 990 and 829 experiments (out of 1000), our approach performed better than the former approaches; it is a significant improvement. Also, as one can see the superiority of performance in the early rows (smaller population size) of Table 3 is more compared to the late rows of Table 3.

4.3 Impact of Environment Size

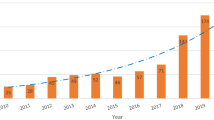

In this section, the impact of the environment size on robotic target searching is investigated. All approaches in this section utilize five robots (medium population size). To show the impact of environment size, in the beginning, 20 different environments were simulated for a given specific size. Afterwards, searching algorithms were tested in these different environments (each algorithm ran 30 times per each environment). The average time for successful search mission is reported in Fig. 4. Also, the successful searching rate is reported in Fig. 5. Figures 4 and 5 show the performance of the proposed method. Figure 5 shows higher success rates for the proposed approach when compared with other approaches.

Note that in small search spaces, the performance of every algorithm is not obvious and even a weak algorithm can find the target with a few iterations (time); thus, studying a large search space to investigate the performance of searching algorithm is important.

Finally, it is worth mentioning that we determined an upper bound for maximum iterations. We considered two cases to do that. In the first case, we supposed that there is a limitation based on the battery consumption, and in the second case we considered a due date for mission accomplishment. This means that finding target is acceptable, only if robots can find the target within a specific amount of time. If we did not consider this upper bound for maximum iterations, then maybe the success rate for all approaches tends to 1 (or near 1).

5 Conclusion and Future Work

In this paper, we used the repulsion mechanism between similar ions as an inspiration, so as to delineate a new robotic target searching strategy that was then evaluated on the RDPSO algorithm. The proposed method provides several key advantages for robotic target searching, such as its capability to provide high diversity between robots; as a result, our proposed method can overcome the “slow convergence rate” problem and, in addition, it can avoid local optima. The proposed approach tries to reduce the overlapping search area; it then leads to higher exploration rate. These features lead to an efficient robotic searching algorithm. To verify the performance of the proposed method, several experiments were conducted in simulated environments. Experimental results show the superiority of the proposed method in comparison with other search approaches, especially when we have a small population of robots.

Notes

A preliminary report of RbRDPSO was published in AISP2017 (Dadgar et al. 2017).

References

Abedinpourshotorban H, Shamsuddin SM, Beheshti Z, Jawawi DNA (2015) Electromagnetic field optimization: a physics-inspired metaheuristic optimization algorithm. Swarm Evolut Comput 26:8–22

Braitenberg V (1986) Vehicles: experiments in synthetic psychology. MIT Press, London

Couceiro MS, Rocha RP, Ferreira NMF (2011a) A novel multi-robot exploration approach based on particle swarm optimization algorithms. In: 2011 IEEE international symposium on safety, security, and rescue robotics (SSRR), pp 327–332. IEEE

Couceiro MS, Rocha RP, Ferreira NMF (2011b) Ensuring ad hoc connectivity in distributed search with robotic darwinian particle swarms. In: 2011 IEEE international symposium on safety, security, and rescue robotics (SSRR), pp 284–289. IEEE

Couceiro MS, Machado JAT, Rocha RP, Ferreira NMF (2012a) A fuzzified systematic adjustment of the robotic Darwinian PSO. Robot Auton Syst 60(12):1625–1639

Couceiro MS, Martins FML, Rocha RP, Ferreira NMF, Sivasundaram S (2012b) Introducing the fractional order robotic Darwinian PSO. In: AIP conference proceedings—American Institute of Physics, vol 1493, no 1, p 242

Couceiro MS, Martins FML, III-Solum RDJ, Rocha RP, Ferreira NMF (2012c) Analysis and parameter adjustment of the RDPSO towards an understanding of robotic network dynamic partitioning based on Darwin’s Theory. Int Math Forum 7(32):1587–1601

Couceiro MS, Martins FML, Clemente F, Rocha RP, Ferreira NMF (2013) Towards a further understanding of the robotic darwinian PSO. In: Madureira A, Reis C, Marques V (eds) Computational intelligence and decision making. Springer, Berlin, pp 13–22

Couceiro MS, Vargas PA, Rocha RP, Ferreira NMF (2014) Benchmark of swarm robotics distributed techniques in a search task. Robot Auton Syst 62(2):200–213

Dadgar M, Jafari S, Hamzeh A (2015) A PSO-based multi-robot cooperation method for target searching in unknown environments. Neurocomputing 177:62

Dadgar M, Couceiro MS, Hamzeh A (2017) RDPSO diversity enhancement based on repulsion between similar ions for robotic target searching. In: Artificial intelligence and signal processing conference (AISP), pp 275–280. IEEE

Eberhart RC, Kennedy J (1995) A new optimizer using particle swarm theory. In: Proceedings of the sixth international symposium on micro machine and human science, New York, vol 1, pp 39–43

Feng Q, Cai H, Li F, Liu X, Liu S, Xu J (2019) An improved particle swarm optimization method for locating time-varying indoor particle sources. Build Environ 147:146–157

Floreano D, Mattiussi C (2008) Bio-inspired artificial intelligence: theories, methods, and technologies. MIT Press, London

Gazi V, Passino KM (2002) Stability analysis of swarms in an environment with an attractant/repellent profile. In: Proceedings of the 2002, American Control Conference, vol 3, pp 1819–1824. IEEE

Gazi V, Passino KM (2004) Stability analysis of social foraging swarms. IEEE Trans Syst Man Cybernet B Cybernet 34(1):539–557

Hereford J, Siebold M (2008) Multi-robot search using a physically-embedded particle swarm optimization. Int J Comput Intell Res 4(2):197

Hereford JM, Siebold M, Nichols S (2007) Using the particle swarm optimization algorithm for robotic search applications. In: Swarm intelligence symposium. SIS 2007. IEEE, pp 53–59. IEEE

Jatmiko W, Sekiyama K, Fukuda T (2006) Modified particle swarm robotic for odor source localization in dynamic environment. Int J Intell Control Syst 11(3):176–184

Krishnanand KN, Ghose D (2009a) A glowworm swarm optimization based multi-robot system for signal source localization. In Design and control of intelligent robotic systems. Springer, Berlin, pp 49–68

Krishnanand KN, Ghose D (2009b) Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions. Swarm Intell 3(2):87–124

Marques L, de Almeida AT (2005) Finding odours across large search spaces: a particle swarm-based approach. In: Armada MA, Santos PG (eds) Climbing and walking robots. Springer, Berlin, pp 419–426

Pehlivanoglu YV (2013) A new particle swarm optimization method enhanced with a periodic mutation strategy and neural networks. IEEE Trans Evolut Comput 17(3):436–452

Pugh J, Martinoli A (2006) Multi-robot learning with particle swarm optimization. In: Proceedings of the fifth international joint conference on autonomous agents and multiagent systems, pp 441–448. ACM

Pugh J, Martinoli A (2007). Inspiring and modeling multi-robot search with particle swarm optimization. In: Swarm intelligence symposium, 2007. SIS 2007. IEEE, pp 332–339. IEEE

Rastgoo MN, Nakisa B, Zakree A, Nazri M (2015) A hybrid of modified PSO and local search on a multi-robot search system. Int J Adv Robot Syst 12(7):86

Riget J, Vesterstrøm JS (2002) A diversity-guided particle swarm optimizer-the ARPSO. Department of Computer Science, University of Aarhus, Aarhus, Denmark, Technical Report 2

Sombolestan SM, Rasooli A, Khodaygan S (2018) Optimal path-planning for mobile robots to find a hidden target in an unknown environment based on machine learning. J Ambient Intell Human Comput 10:1841

Suarez J, Murphy R (2011) A survey of animal foraging for directed, persistent search by rescue robotics. In: 2011 IEEE international symposium on safety, security, and rescue robotics (SSRR), pp 314–320. IEEE

Sun J, Fang W, Palade V, Wu X, Xu W (2011) Quantum-behaved particle swarm optimization with Gaussian distributed local attractor point. Appl Math Comput 218(7):3763–3775

Tang K, Li Z, Luo L, Liu B (2015) Multi-strategy adaptive particle swarm optimization for numerical optimization. Eng Appl Artif Intell 37:9–19

Tang H, Sun W, Yu H, Lin A, Xue M, Song Y (2019) A novel hybrid algorithm based on PSO and FOA for target searching in unknown environments. Appl Intell 49:2603

Tayarani-N M-H, Akbarzadeh-T M-R (2014) Magnetic-inspired optimization algorithms: operators and structures. Swarm Evolut Comput 19:82–101

Turky AM, Abdullah S (2014) A multi-population electromagnetic algorithm for dynamic optimisation problems. Appl Soft Comput 22:474–482

Wang H, Sun H, Li C, Rahnamayan S, Pan J-S (2013) Diversity enhanced particle swarm optimization with neighborhood search. Inf Sci 223:119–135

Warburton K, Lazarus J (1991) Tendency-distance models of social cohesion in animal groups. J Theor Biol 150(4):473–488

Zhang X, Du YP, Qin GQ, Qin Z (2005) Adaptive particle swarm algorithm with dynamically changing inertia weight. J Xi’an Jiaotong Univ 39:1039–1042

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Dadgar, M., Couceiro, M.S. & Hamzeh, A. RbRDPSO: Repulsion-Based RDPSO for Robotic Target Searching. Iran J Sci Technol Trans Electr Eng 44, 551–563 (2020). https://doi.org/10.1007/s40998-019-00245-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40998-019-00245-z