Abstract

In unknown environments, multiple-robot cooperation for target searching is a hot and difficult issue. Swarm intelligence algorithms, such as Particle Swarm Optimization (PSO) and Fruit Fly Optimization Algorithm (FOA), are widely used. To overcome local optima and enhance swarm diversity, this paper presents a novel multi-swarm hybrid FOA-PSO (MFPSO) algorithm for robot target searching. The main contributions of the proposed method are as follows. (1) The improved FOA (IFOA) provides a better value for the improved PSO (IPSO) to find the next optimal robot position value. (2) Multi-swarm strategy is introduced to enhance the diversity and achieve an effective exploration to avoid premature convergence and falling into local optima. (3) An escape mechanism named MSCM (Multi-Scale Cooperative Mutation) is used to address the limitation of local optima and enhance the escape ability for obstacle avoidance. All of the aspects mentioned above lead robots to the target without falling into local optima and allow the search mission to be performed more quickly. Several experiments in four parts are performed to verify the better performance of MFPSO. The experimental results show that the performance of MFPSO is much more significant than that of other current approaches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Mobile robots can be used in many applications, such as warehousing, industrial transportation, ground-cleaning equipment, target searching and so on. There are many different scenarios for searching applications. Wei et al. [1] studied the multi-robot task allocation problem in the search and retrieval domain. Robots were dispatched for searching and rescuing victims in a disastrous area [2]. Senthilkumar et al. [3] used ant-type robots to explore and cover the terrain, leaving marks on the terrain. In [4], the robot was thought to be a particle, and different niches were employed to localize different odour sources.

In recent decades, more and more studies have shown that intelligent algorithms are suitable for developing intelligent systems and providing solutions for robot cooperation. The collective intelligence behavior of colonies of ants, flocks of birds, swarms of bees and other insect or animal swarms has attracted the attention of researchers [5]. Some examples of swarm behavior inspired optimization algorithms are the Fruit Fly Optimization Algorithm (FOA) [6,7,8], Artificial Bee Colony (ABC) algorithm [9, 10], Ant Colony Optimization (ACO) [11, 12], Artificial Fish Swarm Algorithm (AFSA) [13] and Particle Swarm Optimization (PSO) algorithm [14,15,16]. The bat algorithm (BA) [17] is also a random search mechanism based on a colony like PSO, as one of the newest swarm intelligence optimization algorithms, it has few reports on application of robot cooperation. Brain Storm Optimization algorithm (BSO) was a novel swarm intelligence algorithm [18], which mimics the creative problem solving process in human beings. Some researchers have applied BSO algorithm in the fields of path planning and satellite formation reconfiguration [19]. PSO as a meta-heuristic optimization algorithm has been used to solve complex optimization problems. Masoud Dadgar et al. [20] used multiple robots for target searching in unknown environments based on an Adaptive Robotic PSO (A-RPSO) approach. A-RPSO takes into account obstacle avoidance and acts as the control mechanism for robots. In [21, 22], PSO was used for path planning and navigation. PSO was used for obstacle avoidance in [23,24,25,26]. Cai et al. [27, 28] used a modified PSO for cooperative multi-robot target searching in unknown and complex environment, and the cooperation rules of the potential field function acted as the fitness function.

An algorithm for target searching should have the following constraint conditions [29]. (1) The calculation of the search algorithm is simple. The search algorithm should be adapted to robot limitations regarding battery power, processor speed and memory capacity. (2) It is better to have distributed control than centralized one. (3) The search algorithm should have a minimum amount of communication among the robots. To avoid taking a long time to share information among robots, the search algorithm needs an appropriate mechanism. In robotic target searching, PSO is a common approach. Each robot is considered as one particle. Moving and communication between robots are similar to the particles in a swarm set by a PSO formulation, and the algorithm is simple to grasp and implement. PSO-based algorithm has been proved to be successful in optimizing search type problems [20].

In this paper, a novel multi-swarm hybrid FOA-PSO algorithm (MFPSO) is proposed for target searching in unknown environments. Cooperative exploration is an important subject for mobile robots, especially for target searching in complex and unknown environment. As for those applications, the robots know that the search area is bounded but robots know nothing about the locations of obstacles and target. It is necessary to make the following assumptions. (1) The target sends out a signal, such as light or heat, which allows the robots to sense it. (2) Different types of sensors have different types of detection signals, for instance, some passive sensors can be utilized to detect light or heat. Each robot must be equipped with a special sensor based on the type of the target. The robots must also be equipped with sensors to detect obstacles. (3) The robots have a mechanism for communication with each other.

The remainder of the paper is as follows. The related work, including algorithms based on PSO for target searching is reviewed in section 2. In section 3, the proposed approach of MFPSO is described in detail including the improved PSO, improved FOA and MSCM mechanism. The effectiveness of MFPSO will be verified by various experiments in section 4. Finally, the conclusion is drawn in section 5.

2 Related work

2.1 Swarm intelligence algorithms for target searching

The most famous swarm intelligence (SI) algorithms that have been used for robotic target searching are presented in this section.

Glowworm Swarm Optimization (GSO) is a distributed algorithm inspired by the behavior of glowworms. In [30, 31], GSO was studied via different benchmarks. Compared with other algorithms, the GSO fails in most cases. Krishnanand and Ghose used GSO in multi-robot systems [32, 33]. The performance of GSO depends on the range of communication.

The bee swarm optimization algorithm is inspired by the behavior of honeybees. In [34], a novel algorithm based on bee swarm optimization algorithm was proposed that can automatically find an object if the object is emitting the strongest intensity of a radio frequency. This algorithm exhibited better performance than classical PSO for the mentioned problem, but was not tested to find all targets or unique targets.

The aggregations of foraging swarm (AFS) approach was presented by Gazi and Passino [35, 36]. The swarm is modelled as aggregations of foraging swarms based on attractant/repellent profiles. The Robotic Darwinian PSO (RDPSO) algorithm is superior to AFS, the performance of GSO is slightly better than that of AFS for smaller populations [31].

2.2 PSO-based algorithms for target searching

PSO-based algorithms have been widely used in robotic target searching and proved to be successful in optimizing the search type problem. A distributed PSO algorithm was used to guide robots for locating targets in a hazardous environments by Derr et al. [37]. The approach was developed and analyzed on multi-robot-multi-target search, but there is no cooperation between sub-swarms. A mechanical PSO for robotic target searching was proposed by Tang et al. [38]. Mechanical-PSO is a new research window for swarm mobile robots, which takes into account the mechanical properties of the robot. At present, Mechanical-PSO has a very strong hypothesis and has been tested in the environment without obstacle. A distributed PSO (dPSO) algorithm has also been proposed, which uses a large number of small mobile robots for target searching [29]. Each particle in PSO represents a robot. One of the advantages of dPSO is that it is easily scalable to a large number of robots.

Two extensions of PSO (named “RPSO” and “RDPSO”) have also been proposed [39, 40]. RPSO (Robotic PSO) consists of a population of robots that collectively searches in the search space. RDPSO (Robotic Darwinian PSO) introduces the “punish-reward” mechanism to enhance the ability to jump out of local optima. In both algorithms, multi-robot systems based on PSO technology perform a distributed exploration task while taking into account obstacle avoidance.

The A-RPSO algorithm is a target-searching algorithm for environments with obstacles. A-RPSO has a property that causes robots to diverge immediately if they are about to converge. This property is important for escaping local optima. The amount of information transmitted among the robots is small and has the benefit of scalability, which implies that any number of robots can be used in A-RPSO [20].

PSO is an optimization technique based on swarm intelligence. PSO possesses the advantages of a simplified computing method, rather quick convergence speed, fewer control parameters, greater ease of implementation, etc. Although PSO-based algorithms are attractive methods for robotic target searching problems, there are several challenges. (1) They face the challenge of premature convergence, and they can easily fall into local optima. Each member of the swarm follows the “personal best” and “global best.” The velocities of particles (robots) rapidly approaches zero when robots are about to converge to a local optimum, which cause the robots to fall into the local optimum [41, 42]. As each particle’s velocity approaches zero, all the particles tend to reach equilibrium. They do not have sufficient exploration and exploitation abilities, and it is not easy for the particles (robots) to escape from local optima. Therefore, all approaches using PSO for target searching should have a mechanism to escape from local optima. (2) They are slow to converge to the target location in some situations. (3) It is difficult to set the empirical parameters.

Most of the above algorithms are compared in [20], and the conclusion is that A-RPSO is better than the other algorithms in almost all experiments. Compared with RPSO and A-RPSO for multi-swarm robots and large environments in particular, the proposed MFPSO approach exhibits better performance.

3 MFPSO approach

There are three main contributions in the proposed MFPSO approach. First, an improved PSO (IPSO) with adaptive inertial weight and obstacle avoidance is proposed, and an improved FOA (IFOA) algorithm is introduced to increase diversity and provide better values for IPSO to find the next optimal value of the robots’ position in each iteration. Second, Multi-swarm strategy is introduced. Instead of having one swarm of robots searching for the target, robots are divided into several sub-swarms, and each swarm uses its own members to search for a better area independently in the search space, which can improve the diversity and achieve effective exploration to avoid premature convergence and falling into local optima, expand the search area and improve the search speed. Finally, the Multi-Scale Cooperative Mutation (MSCM) mechanism is used to address the limitation of local optima, enhance the escape ability for obstacle avoidance and improve the search effectiveness.

A block diagram of the MFPSO approach is shown in Fig.1; the PSO-based module is mainly composed of improved PSO (IPSO), IFOA and Multi-Swarm strategy module; the MSCM module can be invoked when necessary. The relevant inputs include the environmental information and target information obtained by sensors, and the output is the position of the robot.

3.1 Improved PSO approach

3.1.1 PSO algorithm

PSO is an intelligent approach that simulates the behavior of a swarm of particles. Each particle is represented by its position vector \( {x}_i^t \) and velocity vector \( {v}_i^t \). The particles fly at a certain speed \( {v}_i^t \) in the search space, and the quality of a particle is determined by a predetermined fitness function. \( p{B}_i^t \)(“personal best”) and \( g{B}_i^t \)(“global best”) are the best previous position of particle i and the best previous position among all particles, respectively. The update equations of velocity and position in the process of particle iteration are as follows:

where i represents the particle number and t represents the current iteration. r1 and r2 are random numbers distributed uniformly within the range [0,1]. The constants c1 and c2 are the coefficients of the cognition and social components, respectively. ω is called the inertial weight.

3.1.2 Improved PSO algorithm

In MFPSO, the improved PSO algorithm (IPSO) also updates its position by Eq.(2), and each robot updates its velocity by the following equation:

where \( pbes{t}_i^t \) is the best previous position of robot i and \( gbes{t}_m^t \) is the best previous position in the mth sub-swarm. Robot’s velocity and position must satisfy the constraints.

Obstacles avoidance

In MFPSO, obstacle avoidance needs to be taken into account, IPSO tries to use the last term in Eq.(3) to avoid obstacles. \( o{p}_i^t \) is an attractive position that is suitably located away from any obstacles. The position \( o{p}_i^t \) can be located where the distance from the robot to the obstacles is maximized. r1 and r3 are random numbers distributed uniformly within the range [0,1]. It’s different from A-RPSO, c1 and c2 are initialized as c1 = c2 = 2, and c3 is the obstacle sensitivity coefficient; the value of c3 is determined using Eq.(4) for each robot. If no obstacle is sensed in a certain radius then c3 is 0. Oi is the sensing intensity of the sensor; the closer the robot is to the obstacle, the greater its value is. Oi is proportional to the sensor’s induction value within the range [0,1].

Adaptive inertial weight

In Eq.(3), the adaptive inertial weight \( {\omega}_i^t \) represents the variance of the ith robot in the tth iteration. The value of \( {\omega}_i^t \) depends on the “evolutionary speed” factor \( {h}_i^t \) by Eq.(6) and the “aggregation degree” factor s by Eq.(7). If a robot has slow progress in terms of fitness, then the “evolutionary speed” for that robot is decreased. If robots lie close to each other, the “aggregation degree” increases. By decreasing the “evolutionary speed” or increasing the “aggregation degree”, the impact of inertial weight is increased, which leads to a weakening of the ability of the robots to track the “present best” and “global best”. These factors help robots to maintain diversity and prevent premature convergence in the search mission [20].

where ωini is the initial value for the inertial weight. The values of a and β are constant in the range of [0,1]. \( {R}_i^t \) is determined by Eq.(8) where U and L are the upper and lower bounds, \( {R}_i^t \) helps MFPSO to expore more environment and increase the success rate. maxItr is the maximum number of iterations. ωini=1, U=1.5, L=0.5 and maxItr=1500 in this paper. \( f\left( pbes{t}_i^t\right) \) is the fitness value of \( pbes{t}_i^t \). FtBest is the best fitness in the current iteration, and \( \overline{F_t} \) is the mean fitness of all robots in the tth iteration. In A-RPSO, each robot updates its velocity in a manner similar to that of Eq.(3); the difference is \( gbes{t}_m^t \), which represents the global best, and the value of c1、c2 and c3 are also different from MFPSO.

3.1.3 Fitness function

Eq.(9) is the model of the fitness function. \( f\left({x}_i^t\right) \) is the fitness value of \( {x}_i^t \) (the position for robot i in the t th iteration). \( Dist\left({x}_i^t\right) \) is the distance from the robot i to the target, as shown in Fig. 3. Msen is the maximum range for detecting the target. pow is the energy of the target at the current time. Mpow is the maximum energy of the target. In this study, it is assumed that the energy emitted by the target does not change over time in the search process and that there is enough power for the robot to search, and pow=Mpow=1. Msen is the diagonal length of the environment map. The robot moves toward a position where the fitness function value is larger.

3.2 Improved FOA (IFOA) approach

3.2.1 Fruit Fly optimization algorithm (FOA)

In this paper, we will first introduce the original FOA technique [6,7,8]. Compared with other SI-based optimization algorithms, FOA is easy to understand and computationally inexpensive. Especially it has good effect in solving the problem of multi-swarm [7]. The FOA can be divided into several main steps, which are described as follows:

-

Set 1:

Initialize the location of the fruit fly swarm. Init x _ axis; Init y _ axis.

-

Set 2:

Give a random direction and distance for the search of food using osphresis by an individual fruit fly.

where x and y represent the location coordinates.

-

Set 3:

Because the location cannot be known, the distance(Dist) to the origin is thus first estimated, and then the smell concentration judgment value (S) is calculated, the value of which is the reciprocal of the distance.

-

Set 4:

Substitute the smell concentration judgment value into the smell concentration judgment function (also called the Fitness function) to find the smell concentration (Smelli) of the individual location of the fruit fly.

-

Set 5:

Identify the optimal smell concentration value in the swarm, denoted by the maximum value.

-

Set 6:

Reserve the identified optimal smell concentration value and the fruit fly swam will use their vision to fly towards the location.

-

Set 7:

Enter iterative optimization to repeat the implementation of Steps 2–5, and judge if the smell concentration is superior to the previous iterative smell concentration; otherwise, go back to Step 2.

3.2.2 IFOA approach

In the original FOA, when the global optimal was transferred to a too converged swarm, a loss of diversity occurred. This behavior is quite similar to being trapped in a local optimum or premature convergence [8]. In the proposed approach of the Improved FOA (IFOA) algorithm as shown in Fig. 2, instead of using only one swarm of robots for target finding, the swarm is divided into several sub-swarms that sub-swarms move independently in the search space, and one sub-swarm FOA particle is employed as each robot (the sub-swarm has the same population size). The sub-swarm of fruit flies searches ahead to obtain a better value for the robot to find the next best position. The strategy that one sub-swarm is employed for each robot can enhance the diversity and achieve an effective exploration to avoid local optima and premature convergence, further optimize the input value of the improved PSO (IPSO) in each iteration and improve the search speed of MFPSO.

In IFOA, set the sub-swarm number as M, the max iterations as tmax and the population size of fruit flies as Ps. i = 1, 2, ..., Ps, which represents each population of fruit flies, and m = 1, 2, ..., M, which represents each sub-swarm. In each iteration, IFOA uses the position of the current robot as the location of the corresponding sub-swarm of fruit flies. A random direction and distance is given for individual fruit flies in each swarm using osphresis to search for the target.

where the x _ axism is the current position of sub-swarm m, and Z(t) is the exploration radius; λ = 2 ∼ 5, α = 2 ∼ 10, and in the experiments λ = 2, α = 4. As the number of iterations increases, the diversity of solution vectors gradually decreases. A large Z(t) value may increase the diversity for global exploration.

The fitness function is established on the basis of the signal intensity of the target which is a function of the distance between the fruit fly and the target.

3.3 MSCM mechanism

If a robot encounters an obstacle, the robot begins obstacle avoidance. At this point, if the robot’s position is the local optimal position but not global optimal position, the robot is able to avoid the obstacles and move to the next position. However, the fitness value of the next position maybe worse than the previous position, the robot will move towards the direction of the previous best position. The robot will return to the best position repeatedly because that position has the better fitness value at present. This phenomenon is referred to as local oscillations for movement. In some situations, the robot is even blocked by obstacles and cannot search forward. To address this problem, the proposed approach has an escape mechanism to mutate the position of the robot and jump away from the local optimum. Based on the new location of the robot swarm, a new optimal value is iteratively searched for to find the target.

The original PSO does not have a high probability of mutation. Consequently, an improved algorithm of MFPSO based on a Multi-Scale Cooperative Mutation (MSCM) mechanism is presented. The escape capability of PSO depends on the uniform variation scale. The uniform variation has strong ability to escape from the original point. However, we cannot predict the distance between the local optima of the function. Therefore, it is impossible to give the appropriate variation scale. If the initial scale is too large, the fitness of the new position escape cannot be guaranteed to be better than the existing optimal solution, which is particularly true in the later stage of the algorithm’s evolution, as the optimal solution may exist in the vicinity of the existing optimal solution [43]. In this manner, the algorithm is unable to determine the better position by simple uniform mutation. Finally because of the limitation of the number of iterations, the algorithm cannot converge to the global optimal solution.

In view of the above problems, a multi-scale cooperative PSO algorithm is presented. A Gaussian mutation operator for jumping out from local optima is employed in the proposed approach.

3.3.1 Gaussian mutation operator

The assumed mutation scale is M, and the variance of the Gaussian mutation operator is initialized as follows [8]:

where \( {\delta}_i^{t_0} \) is the variance of the ith scale in the t0th iteration, and its initial value is generally set as the range of the optimal variable. With increasing iterations, the variance of the multi-scale Gauss mutation operator will be adjusted accordingly. The specific adjustment is as follows: (1) according to the fitness value to sort the robots in the swarm; and (2) the sorted robots are grouped to generate M sub-swarms, and the number of robots in each sub-swarm is P = N/M. The fitness value of each sub-swarm is calculated as follows:

where \( f\left({x}_i^m\right) \) represents the fitness value of the ith robot in the mth sub-swarm, and \( Fi{t}_m^t \) indicates the fitness value of the mth sub-swarm in the tth iteration.

The standard deviation of each sub-swarm is adjusted dynamically according to the fitness value. The adjustment process is described as follows:

-

(1)

The minimum and maximum values of all sub-swarms are obtained, denoted as Fitmin and Fitmax, respectively.

-

(2)

According to Eq.(19), the fitness value of each sub-swarm is set in the tth iteration.

With the iteration of the algorithm, the mutation operator may be very large. The standard deviation of the mutation operator is set via Eq. (20):

It will be repeated until \( {\delta}_m^t>W/4 \), where W is the width of the variable space to be optimized; W = 10 in the paper.

-

(3)

According to the standard deviation of each sub-swarm, the location of the swarm is defined as follows:

where conditional represents \( f\left({x}_i+\mathit{\operatorname{rand}}\left(0,1\right)\times {\delta}_i^t\right)> \). f(xi + rand (0, 1) × W) and \( f\left({x}_i+\mathit{\operatorname{rand}}\left(0,1\right)\times {\delta}_i^t\right)= \). \( \mathit{\max}\left(f\left({x}_i+\mathit{\operatorname{rand}}\left(0,1\right)\times {\delta}_j^t\right)\right) \), j = 1, 2, ..., M.

3.3.2 MSCM algorithm

According to the description of 3.2.1, the MSCM algorithm is described as follows.

-

Set 1:

Calculate the fitness valuef(xi),sort(f(xi)).

-

Set 2:

The sorted robots are grouped to generate M sub-swarm.SubSwarmj = Separation(f(xi)), j = 1, 2, ..., M.

-

Set 3:

Calculate the fitness of each sub-swarm according to Eq. (18).

-

Set 4:

Calculate the standard deviation of each sub-swarm according to Eq. (19).

-

Set 5:

According to Eq. (20), while \( {\delta}_m^t>W/4 \), m = 1, 2, ..., M, and then \( {\delta}_m^t=\mid W/4-{\delta}_m^t\mid \).

-

Set 6:

Determine the new position of robots according to Eq. (21).

If the new location is not suitable for the robot such as in an obstacle, then repeat step 6 until the new location is suitable for the robot to move to.

3.4 Implementation of MFPSO

In MFPSO, each particle represents a robot. Moving and communication between robots are similar to particles in the swarm set by the PSO formulation. The pseudo code of MFPSO is presented in Algorithm 1.

The MFPSO approach is obtained following the basic steps below, which is showed in Algorithm 1.

-

(1)

Initialization. Set the parameters of the MFPSO algorithm such as the maximum number of iterations maxItr and number of robots Pop. And set the maximum number of iterations for a robot’s best fitness value to not increase, Tmax=3 in this paper. The robots are divided into M sub-swarms, with N robots in each swarm, and each robot employs a group of fruit fly particles.

-

(2)

The position of the current robot is the position of the corresponding sub-swarm of fruit flies in each iteration. Use the IFOA algorithm to provide a better PSO input value, which means for the improved PSO to find a better robot position. Update the best fitness and best position of each fruit fly sub-swarm. The output value of each sub-swarm of the IFOA is the input value of the improved PSO (IPSO) when the robot is looking for the next location.

-

(3)

The fitness function f(xi) for each robot is computed and the personal best value f(pbesti) is obtained. The best value f(gbestm) and best position gbestm are found in each swarm.

-

(4)

Update the velocity and position of each robot in each swarm by Eq. (3) and Eq. (2) respectively.

-

(5)

If the best fitness value of the robot is not increased after Tmax iterations, and the escape condition (te > Tmax) is satisfied, the MSCM of the escape mechanism is used to make the robot escape from the location. Then, a suitable new position for the robot is found, updating the position and velocity of each robot. After the robot escapes to the new position, the search algorithm is re-executed, and we return to step 2.

-

(6)

If f(xi) > 0.95, and it means that the robot is near the target, the proposed approach becomes the global PSO version (multi-swarm strategy is no longer adopted), and then all robots form a single swarm to search for the target. If one of the robot’s fitness value is greater than a certain value (0.99 in this paper) and its corresponding position is the target position, or the number of iterations is greater than maxItr, the stop condition is satisfied; otherwise, go to step 2.

Each sub-swarm robots searches for its best fitness value and best position independently. Each sub-swarm has the same robot population size for easy implementation. Under the multi-swarm strategy, the multiple sub-swarms can effectively explore the search area, which can avoid premature convergence and falling into local optima, and the MFPSO algorithm is expected to achieve good performance.

4 Experiments and analysis

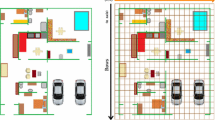

In this section, we conduct a series of simulation experiments to validate the effectiveness and efficiency of our proposed MFPSO approach. All of the simulation experiments are implemented in MATLAB R2010a. The simulator used for the experiments based on the “Robotic Target Searching Simulator” in [20], and the robots in the simulator can move continuously, like real robots. As shown in Fig. 3, the environment’s spatial size is 300 × 300 squares. The search space includes all potential solutions is the same as the search area described in the dataset. There are six robots and one target. The target is represented by the concentric circles, and obstacles are represented by black rectangles. The initial point of each robot is represented by a six-pointed star. In the simulation experiments, the positions of obstacles and the target are initialized randomly. In addition, the robots know nothing about the environment, such as the locations of obstacles and the target. But the robots know the search area and the number of targets. Some assumptions for the simulation experiments are as follows. (1) The target can emit a large sufficient signal, such as heat or light, and the robot receives the signal via sensors. (2) The maximum distance that the sensors can detect obstacles is set to 10 squares. (3) If at least one robot’s fitness is greater than 0.99, or the maximum number of iterations maxItr = tmax = 1500 is reached, or no improvement occurs during the last 10 iterations, terminate the search mission.

To verify the effectiveness and efficiency of the proposed algorithm, several experiments in four parts are presented, which are the trajectories of robots target searching, and influence of environment size, population size and different environments.

4.1 Trajectories of robots target searching

In this section, the influence of parameters in MFPSO is studied. By comparing the trajectories of several searching algorithms, the superiority of the MFPSO algorithm can be seen. As shown in Fig. 4, there are six robots whose positions are dispersed at the top space in the environment. RPSO and A-RPSO are compared with MFPSO. To more effectively compare the three algorithms’ trajectories, the robots pause in iterations 20 and 50 (e.g., Fig. 4a, b) during the target search simulation. From the experimental results, RPSO in iterations 20 to 50, has slow progress, and A-RPSO has better performance. The position of the best robot is often close to the “global best” and “personal best,” which leads the second and third parts of Eq.(3) to have nearly zero value, and the best robot has slower motion. In RPSO, the robots search at approximately iteration 40, some of the robots meet the obstacle in front of them, and the robots begin to avoid obstacles, but the position of the robot is the “local best.” The robot always has the tendency to move to the optimal point, so the robots move back and return to the best position, which occurs repeatedly until one robot reaches a new better position of the “local best.” In RPSO and A-RPSO, all robots search in the same region at approximately iteration 70, which leads to low diversity between robots, so the robots have slow motion, and the exploration rate is decreased. In A-RPSO, the problem somehow repeats but has a better solution because of the smart change for the inertial weight, the inertial weight leads to weakening the ability of robots to track the “present best” and “global best.” As a result, the robots search in a larger region, and the diversity and speed of robots are increased in A-RPSO. Robots in A-RPSO find the target in iteration 95, but in RPSO, they find it at iteration 113.

In MFPSO, instead of only one swarm of robots searching for the target, the six robots are split into several sub-swarms. In the process of experiment, the number of swarms has been set to 3 and the fruit fly population has been set to 10 for each robot when the algorithm is initialized. It would seem obvious that the six robots are divided into 3 sub-swarms, and the robots of each sub-swarm search for the target individually in the search space. Each sub-swarm of robots cooperates with the others. MFPSO also has an adaptive inertial weight by Eq. (6). If a robot has slow progress, “evolutionary speed by Eq. (7)” for that robot decreases. And if robots try to move closer to each other, then the factor “aggregation degree by Eq. (8)” increases. By increasing “aggregation degree” or decreasing “evolutionary speed,” the impact of inertial weight is increased, which helps robots to keep diversity. As shown in Fig. 4i, when the right sub-swarm has found the target in iteration 68, the other sub-swarm of robots still has a long way to go from the position of the target. And the elapsed time of RPSO, A-RPSO and MFPSO are 27.389242 s, 22.519030 s, 13.162226 s respectively. To summarize, MFPSO tries to maintain diversity between robots. The several sub-swarms can enhance the diversity and have a higher searching speed, expanding the search area, achieving an effective exploration, and avoiding local optima and premature convergence. The number of iterations and elapsed time are less than that of the contrasting algorithms. MFPSO is more effective and efficiency than the two other algorithms.

In Fig. 5, the initial positions of six robots are in the same region (top right corner of the search space). The robots lie very close to each other, and the diversity of robots is getting smaller. Because their velocity vector values are small, the robots have slow motion at all search times. Both RPSO and A-RPSO face the problem of slow convergence rate. Although the approach of A-RPSO has made some improvements, which help the robots to move better and prevent them from lying too close to each other, there is still only one global optimal solution, and the effect of solving the problem of diversity is not very obvious.

MFPSO has a better solution because of the multi-swarm strategy, instead of using only one swarm of robots for target searching, the swarm is divided into several sub-swarms; sub-swarms move independently in the search space, so several sub-swarms improve the diversity of robots and improve the search speed. And the smart change for the inertial weight helps to keep diversity between robots. Moreover, IFOA has better divergence when searching for the optimal solution, and each robot takes advantage of the fruit flies to obtain a better value for the robot to find the next best position. The strategy in which each robot employs one sub-swarm of fruit flies can enhance the diversity and achieve an effective exploration to avoid local optima and premature convergence. MFPSO can quickly find the target in iteration 79, while RPSO finds the target in iteration 113 and A-RPSO finds the target in iteration 92. And the elapsed time of RPSO, A-RPSO and MFPSO are 27.389242 s, 20.743172 s, 15.424626 s respectively. Compared to the RPSO and A-RPSO approaches, MFPSO has better performance.

As observed in Fig. 6, when the robots have dispersed position, the location of robots will be scattered rather than aggregated in the same place. There are six robots that have dispersed positions to find the target. There are two robots placed on the northern side of the search space, and the other four robots lie on the bottom region of the search space. In RPSO and A-RPSO, all robots try to move closer to the “global best” robot. Because the best robot has slow motion, the search speed of the robot is reduced. In A-RPSO, because of the smart change for the inertial weight, the robots do not lie in a small region which can increase diversity, and the search speed will be increased. A-RPSO has better performance than RPSO. The adaptive inertial weight is adopted in MFPSO, the impact of inertial weight is increased by increasing “aggregation degree” or decreasing “evolutionary speed,” which helps robots to keep diversity. The multi-swarm strategy and each robot employing 10 FOA particles can increase the speed of the algorithm. MFPSO can quickly find the target in iteration 35, while RPSO finds the target in iteration 55 and A-RPSO finds the target in iteration 47. The elapsed time of RPSO, A-RPSO and MFPSO are 10.093214 s, 8.291358 s, 5.374862 s respectively.

Compared with the other algorithms, the proposed algorithm has no superiority in time complexity. But the multi-swarm strategy and IFOA method are adopted in the proposed algorithm, which can reduce computation time and improve the efficiency of MFPSO. According to Figs. 4, 5 and 6, the three algorithms reach the target, which means that they are effective. And the number of iterations and elapsed time are less than that of the two other algorithms. MFPSO reaches the target before the two others, meaning that it is more efficiency.

All of the simple obstacle avoidance methods can be applied to swarm-based search algorithms. A-RPSO uses the PSO mechanism to avoid obstacles, which works better in the case of smaller obstacles. If the robots meet a larger obstacle and the position is a local optima point, robots may fall into the local optimum and lack the ability to find the global optimal position. To overcome this limitation, MFPSO employs a linear generation mechanism based on MSCM. When the robots meet a larger obstacle and reach a local optimal value, MSCM mutates the position of the robots to escape from the local optima position.

In Fig. 7, there is an environment with larger obstacles. When there is a large obstacle in front of the robots, the robots begin to avoid the obstacle but the position of the robot is the “local best,” and the robot has the tendency to reach the optimal point. The robot will move towards the direction of the previous best position. The robot will return to the best position repeatedly because that position has the better fitness value at present. The robots may fall into local optima and lack the ability to find the global optimal position, which occurs repeatedly until one of the robots attains a better “local best” new position. To overcome this limitation, MFPSO employs an escape mechanism (MSCM) that mutates the position of the robots to escape from the local optimum when the robots meet a larger obstacle and reach a local extreme value. From Fig. 7, to better verify the ability to escape from the local optimum, the step size is reduced appropriately and adjusted to the original 2/3. When the robots meet the large obstacle in front of them, although the performance of the A-RPSO algorithm is better than that of RPSO, RPSO and A-RPSO have no method for escaping from the local optimum. When the A-RPSO algorithm’ number of iterations reaches 200, the robots still cannot jump out of the local optimal position. In MFPSO, the robots can escape from the local optimum faster and successfully find the target in iteration 153. According to Fig. 7, RPSO and A-RPSO did not find the target whereas MFPSO reached it. When the robot meets the larger obstacle, the escape mechanism in MFPSO can effectively solve the problem of obstacle avoidance and thus not fall into the local optimum. MFPSO reduces the search time required and the search speed is further improved. MFPSO is more effective and efficient than the two other algorithms.

4.2 Influence of environment size

In this section, the influence of environment space size on robotic target searching is studied. All experiments carried out employ six robots. Environments with obstacles and complex environments are studied. The difference between an environment with obstacles and a complex environment is that, in the latter there are more obstacles. For example, there are more than 20 obstacles in a complex environment, in the size of 300 × 300 squares. Figure 8 is the impact of environment size for environments with obstacles and Fig. 9 is the impact of environment size for complex environments.

Thirty different environments with the same size are simulated with the search algorithms, and then the repeated experiments are carried out in environments with different sizes. The averages of iterations and success rates for each algorithm were reported. Comparing the number of iterations and success rates in successful experiments for RPSO, A-RPSO and MFPSO, the experimental results are shown in Figs. 8 and 9. It observed from the experimental results that compared with the other approaches, the proposed approach of MFPSO has a significantly better performance, and MFPSO has higher success rates and lower rates of increase in its iterations. If the environment size is small, the performance in terms of success rates and iterations is not very outstanding. With the increase in the size of the search space, the advantage in performance becomes more prominent, especially when the environment size is greater than 600 × 600 squares. For example, in the environment size of 600 × 600 squares, MFPSO has 100% and 95% success rates for environments with obstacles and complex environments, respectively, while A-RPSO has 95% success rates and A-RPSO only has 90% and 85% success rates.

As shown from Fig. 8 and 9, in the same environment size, the number of iterations in the complex environments is higher than that in the environments with obstacles, but in most cases, the success rate in the complex environments is lower than that in the environments with obstacles.

4.3 Influence of population size

In this section, the influence of population size on robotic target searching is studied. Thirty different environments with the same number of robots are simulated with the search algorithms, and then repeated experiments are carried out with different numbers of robots. The environments with obstacles and complex environments are studied; the environment size in all experiments is 300 × 300 squares. For example, twenty different environments are simulated with obstacles. Then, RPSO, A-RPSO and MFPSO are used to search for target in those environments with only one robot. The result is reported in the first row of Table 1, and the other rows show the result of different population sizes.

As shown from Tables 1 and 2, the average number of iterations (Avg), average elapsed time (Time) and success rates (Succ) for each algorithm are reported. The approach of MFPSO is compared with RPSO and A-RPSO. The average elapsed time is approximately proportional to the number of iterations; MFPSO has superiority in elapsed time. With the increase in the number of robots (the sub-swarms of robots increase), robots search faster and have higher success rates. For example, in Table 1, using one robot, MFPSO could find the target in an average number of iterations of 125.47 and has a 95% success rate, but when using six robots, MFPSO could find the target in average number of iterations of 73.34 and has a 100% success rate. Furthermore, compared with the other approaches, MFPSO has significantly better performance. For example, using eight robots, MFPSO could find the target in an average number of iterations of 67.79, but RPSO could find the target in an average number of iterations of 75.59, and A-RPSO could find the target in an average number of iterations of 70.24.

As shown in Fig. 10, with the increase in the sub-swarm number of robots, the search task is performed faster. Actually, the proposed approach is more obvious and faster when the number of robots is small, but this approach sometimes has poor performance in the number of iterations, as is observed from the data presented in Table 1 (row 1) and Table 2 (row 3). With the increase in the number of robots (especially more than ten), the sub-swarm number is increased, the efficiency of the proposed approach is much more significant, and the success rates of the three algorithms are all 100%. Robots cooperate with each other to find the target, but it is different in different problems. In the search mission of our study, the position of the target is unknown, and the proposed approach of using several sub-swarms to find the target is obviously more advantageous.

The proposed approach uses several sub-swarms, mainly to enhance the diversity and achieve an effective exploration to avoid local optimal and premature convergence. Dividing a swarm into sub-swarms has the effect of reducing the computational time and enhancing the efficiency. It observed from Tables 1-2 that compared with the other approaches, MFPSO have less number of iterations and elapsed time in most cases, MFPSO reaches the target before the two others, MFPSO has a better performance and a higher efficiency.

4.4 Influence of different environments

In this part, several experiments are carried out for different sub-swarm robots and different types of environments (environments with obstacles and complex environments). Each experiment is repeated 30 times, and the environment size in all experiments is 300 × 300 squares. Because the initial positions of robots, obstacles and the target affect the search time, several experiments have been carried out under the same sub-swarm number of robots. All experiments with the same number of robots have been tested two times. For example, two different experiments are tested in Table 3 (rows 3 and 4) for six robots divided into 3 sub-swarms. In Tables 3, 4, 5, 6, 7 and 8, each row shows an experiment that is repeated thirty times for each algorithm, and each sub-swarm has two robots. Through the analysis of Tables 1 and 2, we know that the elapsed time is approximately proportional to the number of iterations, so the elapsed time is no longer presented in Table 3, 4, 5, 6, 7 and 8, but only the number of iterations is presented. The average iterations, success rate and standard deviation of iteration consumption (Std) are compared for the RPSO, A-RPSO and MFPSO algorithms in different environments, and the experimental results are reported in Tables 3,4, 5, 6, 7 and 8. In Tables 3 and 6, the robots’ positions are initialized in the top of the search space (as in Fig. 4). The position of the robots is initialized from the same region (as in Fig. 5) in Tables 4 and 7. In Tables 5 and 8, robots are initialized with dispersed positions in the search space (as in Fig. 6).

As shown in Tables 3, 4, 5, 6, 7 and 8, compared with the RPSO and A-RPSO approaches, the approach of MFPSO has distinct capability advantages in regard to the search time and success rate, especially when the sub-swarm of robots is small, but sometimes has a poor performance as is observed in Table 4 (row 1) and Table 6 (row 1, column 7). Because the number of robots is small, the diversity of the proposed algorithm is difficult to adjust. With the increase in the sub-swarm number of robots (especially more than 3), the performance of the proposed approach is much more significant, and the success rates of the three algorithms are all 100%. Not only can MFPSO find target more successful but it is also faster than the other approaches.

Target searching is performed with different types of environments (environments with obstacles in Tables 3, 4 and 5 and complex environments in Tables 6, 7 and 8). It is observed from Tables 6, 7 and 8 that the MFPSO algorithm has obviously better performance in complex environments. The main reason is that MFPSO uses the MSCM mechanism to avoid large obstacles, and robots can jump out of the local optima position faster and find the target faster. The multi-swarm strategy and IFOA have been used in the proposed approach. When the initialization positions of the robots are different, the results are different. Especially when robots are initialized from the same region in Tables 4 and 7, because of the lack of diversity between robots, the search speed and the success rates decline, but MFPSO performs better in the complex environment. In most cases, the average number of iterations of MFPSO is less than other comparison algorithms, and MFPSO has better effectiveness and efficiency.

When the environment is larger, the advantages of the proposed algorithm are obvious. The impacts of environment size and the population size have been discussed in the previous sections.

5 Conclusions

In this paper, a novel Multi-swarm hybrid FOA-PSO (MFPSO) algorithm for robotic target searching in unknown environments is proposed. MFPSO has three advantages that yield a higher performance than that of other approaches. First, IFOA with multiple swarms and adaptive coefficient is proposed, which provides a better value for IPSO to find the next optimal robot position value. Second, multi-swarm robots search for the target independently in the search space at the same time, which improves the diversity and achieve an effective exploration to avoid premature convergence and falling into local optima. Third, an escape mechanism named MSCM can enhance the escape ability for obstacle avoidance when the robot meet larger obstacles or fall into local optima.

Several experiments are performed in four parts to verify the better performance of MFPSO. MFPSO significantly outperforms other approaches (including A-RPSO and RPSO), in particular, with multi-swarm robots and large environments. The proposed algorithm has less iteration, higher success rates and better efficiency in most cases. In the future, we plan to study multi-target search problems. It is expected that the proposed approach based on multiple swarms will perform well in multi-target searching, and that the proposed approach will be able to be applied to solve real-world engineering problems in the field of intelligent search robots.

References

Wei C, Hindriks KV, Jonker CM (2016) Dynamic task allocation for multi-robot search and retrieval tasks. Appl Intell 45(2):1–19

Kantor G, Singh S, Peterson R, Rus D, Das A, Kumar V, Pereira G, Spletzer J (2003) Distributed search and rescue with robot and sensor teams. Springer Tracts in Advanced Robotics 24:529–538

Senthilkumar KS, Bharadwaj KK (2012) Multi-robot exploration and terrain coverage in an unknown environment. Robot Auton Syst 60(1):123–132

Zhang J, Gong D, Zhang Y (2014) A niching PSO-based multi-robot cooperation method for localizing odor sources. Neurocomputing 123:308–317

Cuevas E, Cienfuegos M, Zaldívar D, Pérez-Cisneros M (2013) A swarm optimization algorithm inspired in the behavior of the social-spider. Expert Syst Appl 40(16):6374–6384

Pan WT (2012) A new fruit Fly optimization algorithm: taking the financial distress model as an example. Knowl-Based Syst 26(2):69–74

Yuan X, Dai X, Zhao J, He Q (2014) On a novel multi-swarm fruit fly optimization algorithm and its application. Appl Math Comput 233(3):260–271

Zhang Y, Cui G, Wu J, Pan WT, He Q (2016) A novel multi-scale cooperative mutation fruit Fly optimization algorithm. Knowl-Based Syst 114:24–35

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Glob Optim 39(3):459–471

Karaboga D, Basturk B (2008) On the performance of artificial bee colony (ABC) algorithm. Appl Soft Comput 8(1):687–697

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Transactions on Systems Man & Cybernetics Part B :Cybernetics 26(1):29–41

Ying KC, Liao CJ (2004) An ant colony system for permutation flow-shop sequencing. Comput Oper Res 31(5):791–801

Shen W, Guo X, Wu C, Wu D (2011) Forecasting stock indices using radial basis function neural networks optimized by artificial fish swarm algorithm. Knowl-Based Syst 24(3):378–385

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Icnn'95 - IEEE International Conference on Neural Networks 1995.4(8):1942–1948

Sun W, Lin A, Yu H, Liang Q, Wu G (2017) All-dimension neighborhood based particle swarm optimization with randomly selected neighbors. Inf Sci 405(C):141–156

Liang JJ, Suganthan PN (2005) Dynamic multi-swarm particle swarm optimizer with local search. In: 2005 IEEE congress on evolutionary computation (2005 CEC), vol 521, pp 522–528

Yang X-S (2010) A new metaheuristic bat-inspired algorithm. In: González JR et al. (eds) Nature inspired cooperative strategies for optimization (NICSO 2010). Springer Berlin Heidelberg, Berlin, Heidelberg, pp 65–7e4

Shi Y (2011) Brain storm optimization algorithm. IEEE Congress on Evolutionary Computation (2011 CEC) 6728:1–14

Sun C, Duan H, Shi Y (2013) Optimal satellite formation reconfiguration based on closed-loop brain storm optimization. Computational Intelligence Magazine IEEE 8(4):39–51

Dadgar M, Jafari S, Hamzeh A (2016) A PSO-based multi-robot cooperation method for target searching in unknown environments. Neurocomputing 177(C):62–74

Hsieh HT, Chu CH (2013) Improving optimization of tool path planning in 5-axis flank milling using advanced PSO algorithms. Robot Comput Integr Manuf 29(3):3–11

Chen YL, Cheng J, Lin C, Wu X, Ou Y, Xu Y (2013) Classification-based learning by particle swarm optimization for wall-following robot navigation. Neurocomputing 113(7):27–35

Zhang Y, Gong DW, Zhang JH (2013) Robot path planning in uncertain environment using multi-objective particle swarm optimization. Neurocomputing 103(2):172–185

Chyan GS, Ponnambalam SG (2012) Obstacle avoidance control of redundant robots using variants of particle swarm optimization. Robot Comput Integr Manuf 28(2):147–153

Mario ED, Talebpour Z, Martinoli A (2013) A comparison of PSO and reinforcement learning for multi-robot obstacle avoidance. In: 2013 IEEE congress on. Evol Comput:149–156

Liang JJ, Song H, Qu BY, Mao XB (2012) Path planning based on dynamic multi-swarm particle swarm optimizer with crossover. International Conference on Intelligent Computing:159–166

Cai Y (2016) A PSO-based approach with fuzzy obstacle avoidance for cooperative multi-robots in unknown environments. Int J Comput Intell Appl 15(01):1386–1391

Cai Y, Yang SX (2013) An improved PSO-based approach with dynamic parameter tuning for cooperative multi-robot target searching in complex unknown environments. Int J Control 86(10):1720–1732

Hereford JM (2006) A distributed particle swarm optimization algorithm for swarm robotic applications. In: Evolutionary computation, 2006 (CEC 2006), pp 1678–1685

Mcgill K, Taylor S (2009) Comparing swarm algorithms for multi-source localization. IEEE International Workshop on Safety, Security & Rescue Robotics:1–7

Couceiro MS, Vargas PA, Rui PR, Ferreira NMF (2014) Benchmark of swarm robotics distributed techniques in a search task. Robot Auton Syst 62(2):200–213

Krishnanand KN, Ghose D (2009) Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions. Swarm Intelligence 3(2):87–124

Krishnanand KN, Ghose D (2009) A glowworm swarm optimization based multi-robot system for signal source localization. Studies in Computational Intelligence 177(177):49–68

Ataei HN, Ziarati K, Eghtesad M (2013) A BSO-based algorithm for multi-robot and multi-target search. International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems:312–321

Gazi V, Passino KM (2004) Stability analysis of social foraging swarms. Systems Man & Cybernetics Part B Cybernetics IEEE Transactions on 34(1):539–557

Gazi V, Passino KM (2003) Stability analysis of swarms. IEEE Trans Autom Control 48(4):692–697

Manic M, Manic M (2009) Multi-robot, multi-target particle swarm optimization search in noisy wireless environments. Conference on Human System Interactions, In, pp 78–83

Tang Q, Eberhard P (2013) Mechanical PSO aided by extremum seeking for swarm robots cooperative search. International Conference in Swarm Intelligence:64–71

Couceiro MS, Rui PR, Ferreira NMF (2011) A novel multi-robot exploration approach based on particle swarm optimization algorithms. IEEE International Symposium on Safety, Security, and Rescue Robotics:327–332

Couceiro MS, Rui PR, Ferreira NMF (2011) Ensuring ad hoc connectivity in distributed search with robotic Darwinian particle swarms. IEEE International Symposium on Safety, Security, and Rescue Robotics:284–289

Zhang C, Sun J (2009) An alternate two phases particle swarm optimization algorithm for flow shop scheduling problem. Expert Syst Appl 36(3):5162–5167

Yang X, Yuan J, Yuan J, Mao H (2007) A modified particle swarm optimizer with dynamic adaptation. Appl Math Comput 189(2):1205–1213

Tao XM, Liu FR, Yu L, Tong ZJ (2012) Multi-scale cooperative mutation particle swarm optimization algorithm. Journal of Software 23(7):1–15

Acknowledgments

The authors thank the researcher Mr. Dadgar for providing the “Robotic Target Searching Simulator” for free. This work was supported by the National Natural Science Foundation of China (U1813205 and 61573135), the Independent Research Project of State Key Laboratory of Advanced Design and Manufacturing for Vehicle Body (71765003), the Hunan Key Laboratory of Intelligent Robot Technology in Electronic Manufacturing Open Foundation (2017TP1011), the Planned Science and Technology Project of Hunan Province (2016TP1023), Key Research and Development Project of Science and Technology Plan of Hunan Province (2018GK2021), the Science and Technology Plan Project of Shenzhen City (JCYJ20170306141557198), and the Key Project of Science and Technology Plan of Changsha City (kq1801003).

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Tang, H., Sun, W., Yu, H. et al. A novel hybrid algorithm based on PSO and FOA for target searching in unknown environments. Appl Intell 49, 2603–2622 (2019). https://doi.org/10.1007/s10489-018-1390-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1390-0