Abstract

In this paper, we introduce an efficient method for solving nonlinear stochastic differential equations (SDEs) driven by variable order fractional Brownian motion (vofBm) with an appropriate initial condition. SDEs have many applications throughout pure mathematics and are used to model stochastic phenomena such as stock prices, random growth models and physical systems subject to thermal fluctuations. The proposed approach, which is based on operational matrices of Bernoulli polynomials, first transforms the problem into a nonlinear stochastic integral equation (SIE) driven by vofBm by integrating both sides of the SDE over the interval [0, t], where \(t\in [0,{\textbf {T}}]\), and using the given initial condition. Then, operational matrices of integration (omi) based on Bernoulli polynomials are used to convert this SIE into a nonlinear system of algebraic equations, which considerably reduces the complexity of the problem. The error and convergence of the technique are analyzed under some mild conditions. It is shown that the approximate solution approaches the exact solution as \(\hat{n}\) increases, where \(\hat{n}\) denotes the number of elements in the Bernoulli vector. Finally, several test problems illustrate the accuracy, efficiency and applicability of the method. One of the main innovations of this paper is the numerical simulation of vofBm, which is carried out in two steps: first, standard Brownian motion (sBm) is constructed via spline interpolation, and then block-pulse and hat functions are used to simulate vofBm. Moreover, the numerical results are compared with those of the Chebyshev cardinal wavelets (Ccw) method to confirm the superiority of the presented method over previous methods.
The paper ends with a real application of this model, which is solved by the proposed method.


1 Introduction

A significant part of numerical analysis is the design and analysis of efficient algorithms that meet the demands arising in other sciences. Among the available approaches, operational matrix methods (omm) have attracted considerable research. These methods expand all functions in the problem under investigation in terms of a set of basis functions and use well-known matrices called the omi and the operational matrix of differentiation (omd). Many different functions and polynomials have been used as basis functions to approximate the solution of the underlying problem. For example, Heydari et al. employed Chebyshev polynomials to solve the variable order fractional biharmonic equation and the nonlinear Ginzburg–Landau equation in Heydari and Avazzadeh (2018) and Heydari et al. (2019), respectively. Abbasbandy et al. (2015) applied Legendre functions to approximate the solution of the time fractional convection-diffusion equation. Singh et al. (2018) introduced a technique based on Bernoulli polynomials together with its accuracy analysis. Euler polynomials were used as basis functions in Balcı and Sezer (2016) to find the numerical solution of generalized linear Fredholm integro-differential difference equations. Chen et al. (2014) developed a numerical technique based on Bernstein polynomials to approximate the solution of the variable order linear cable equation. Finally, block-pulse functions (Maleknejad et al. 2012), delta functions (Roodaki and Almasieh 2012), triangular functions (Asgari and Khodabin 2017) and hat functions (Tripathi et al. 2013) have been used to solve numerous problems in mathematics.

Fractional calculus has attracted the attention of scholars as a mathematical modeling tool for describing phenomena in many disciplines. Fractional order models are more appropriate than integer order models for describing processes with memory and hereditary properties. Because only a few such equations have analytical solutions, researchers must rely on numerical schemes, and their efforts have led to the development of various numerical techniques. Common methods include the wavelet method (ur Rehman and Khan 2011), operational matrix methods (Mirzaee and Samadyar 2019; Rahimkhani et al. 2017), the Galerkin method (Kamrani 2016), the collocation method (Rahimkhani et al. 2019), the finite difference method (Li et al. 2011), the finite element method (Li et al. 2018), the meshless method (Mirzaee and Samadyar 2019), the spectral element method (Dehghan and Abbaszadeh 2018) and the multistep Laplace optimized decomposition method (Maayah et al. 2022).

The presence of various stochastic perturbations, together with the availability of powerful computing tools, has led researchers to model real-life phenomena with different types of stochastic problems, which describe the behavior of such phenomena in more detail. Such equations often have no exact solution, and even their numerical solution is difficult to obtain. Therefore, a numerical method is especially valuable if it produces accurate results and can be extended to other problems. The finite difference method, which was used to solve linear stochastic integro-differential equations in Dareiotis and Leahy (2016), can cause many difficulties in real-life problems. For instance, discretizing the domain and generating meshes is time consuming and costly. Furthermore, some finite difference schemes are only conditionally stable. In the last decade, omm have been widely used to obtain high accuracy while reducing the accumulation of truncation error, the complexity, the number of operations and the CPU time. For example, they were used to solve stochastic Volterra–Fredholm integral equations in Khodabin et al. (2012), and stochastic Volterra integral equations were solved numerically by this approach based on block-pulse and triangular functions in Maleknejad et al. (2012) and Khodabin et al. (2013), respectively. Samadyar and Mirzaee (2020) introduced orthonormal Bernoulli polynomials and applied them to approximate the solution of stochastic Itô–Volterra integral equations of Abel type. Heydari et al. (2016) proposed omm based on second kind Chebyshev wavelets to obtain an accurate numerical solution of the stochastic heat equation.
A shifted Legendre spectral collocation algorithm was applied in Badawi et al. (2022) to investigate the existence and uniqueness of the solution of fractional stochastic integro-differential equations and to simulate it numerically. Badawi et al. (2023a, b) presented approximate solutions of fractional stochastic integro-differential equations using a shifted Legendre spectral approach and a Legendre–Gauss spectral collocation method, respectively.

Sheng et al. (2011) introduced vofBm in the Riemann–Liouville sense as follows:

\[
B^{H(t)}(t)=\frac{1}{\Gamma \bigl(H(t)+\frac{1}{2}\bigr)}\int _0^t (t-s)^{H(t)-\frac{1}{2}}\,\mathrm{d}B(s),\qquad t\ge 0, \tag{1}
\]

where B(s) denotes sBm and \(H(t)\in (0,1)\). Classical fBm and sBm are special cases of this process, obtained by setting \(H(t)=H\) and \(H(t)=\frac{1}{2}\), respectively. Although solving stochastic problems driven by sBm and classical fBm is difficult, there are more works on the numerical solution of such equations than on the numerical solution of stochastic problems driven by vofBm. Kamrani and Jamshidi (2017) proposed a flexible method, together with its convergence analysis, for stochastic evolution equations driven by fBm. Nonlinear stochastic Itô–Volterra integral equations driven by fBm were solved by omm based on hat functions and modified hat functions in Hashemi et al. (2017) and Mirzaee and Samadyar (2018), respectively. It should be noted that classical fBm is appropriate only for modeling monofractal phenomena, which have uniform global irregularity and fixed memory. However, global self-similarity rarely occurs, and fixed scaling holds only over certain finite intervals. Moreover, experimental data show that the scaling exponent and the order of self-similarity can take multiple values, and some real-life phenomena have multifractal properties with variable, space- and time-dependent memory. Thus, vofBm is a suitable way to overcome these limitations, and it has recently been used to model phenomena with variable irregularity or variable memory.

Let \(B^{H(t)}(t), t\ge 0\), be a vofBm process defined by Eq. (1). A differential equation of the form (Heydari et al. 2019)

\[
\mathrm{d}U(t)=\mu \bigl(t,U(t)\bigr)\,\mathrm{d}t+\sigma \bigl(t,U(t)\bigr)\,\mathrm{d}B^{H(t)}(t),\qquad U(0)=U_0,\quad t\in [0,{\textbf {T}}], \tag{2}
\]

where \(\mu (t,u)\) and \(\sigma (t,u)\) are known smooth functions and U(t) is an unknown stochastic process defined on a probability space \((\Omega , {\mathcal {F}},{\mathcal {P}})\), is called a nonlinear SDE driven by vofBm. The process B(t) denotes a sBm defined on the same probability space, and \(U_0\) is a given deterministic initial value. The functions \(\mu\) and \(\sigma\) are called the coefficients of this equation.

Equations of the form (2) arise in modeling problems in signal processing (Sheng et al. 2012), geophysics (Echelard et al. 2010) and financial time series (Corlay et al. 2014), but their exact solution is unavailable in many situations. At present, solving nonlinear SDEs driven by vofBm, either analytically or numerically, is very difficult, and few works on this subject have been published. In this paper, we introduce an efficient method to find the numerical solution of the nonlinear SDE (2). The method first transforms the SDE into a corresponding SIE driven by vofBm and expands all functions in the resulting SIE in terms of Bernoulli polynomials. Next, the stochastic omi driven by vofBm based on Bernoulli polynomials is derived and, together with the ordinary omi, is used to reduce the problem to a set of nonlinear algebraic equations. In this way, we aim to obtain the numerical solution with higher accuracy and lower computational cost. The most important advantages of the presented method are as follows:

- Using this method, the equation under consideration is converted into a system of algebraic equations that can be solved easily. Therefore, the difficulty caused by the fractional and stochastic terms is greatly reduced.
- The coefficients of the expansion of a function in this basis are easily calculated without any integration. Therefore, the computational cost of the proposed method is low.
- Because Bernoulli polynomials are simple basis functions, the proposed method is easy to implement and is a powerful tool for obtaining the numerical solution of various kinds of problems with little additional work.
- The proposed scheme is convergent. Moreover, when the exact solution of the problem is a polynomial whose degree does not exceed that of the Bernoulli basis, the method gives the exact solution.

The rest of this paper is organized as follows. Section 2 describes the simulation of vofBm using block-pulse and hat functions. The definition and some properties of Bernoulli polynomials are presented in Sect. 3. In Sect. 4, a numerical method for solving nonlinear SDEs driven by vofBm is proposed, and its error analysis is given in Sect. 5. Numerical results and a real-world application of the problem under consideration are presented in Sects. 6 and 7, respectively. Finally, conclusions are given in Sect. 8.

2 Simulation of vofBm

2.1 Variable Order Fractional Integral Operator

Definition 1

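Suppose that \(\alpha (t)\ge 0\) is a known continuous function. The Riemann–Liouville fractional integral of variable order \(\alpha (t)\) of a function f(t) is defined as follows (Chen et al. 2014):

\[
\bigl ({\mathcal {I}}^{\alpha (t)}f\bigr )(t)=\frac{1}{\Gamma (\alpha (t))}\int _0^t (t-s)^{\alpha (t)-1}f(s)\,\mathrm{d}s,\quad \alpha (t)>0,\qquad \bigl ({\mathcal {I}}^{0}f\bigr )(t)=f(t). \tag{3}
\]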
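Definition 1 can be evaluated numerically even though the kernel is weakly singular when \(\alpha (t)<1\). The Python sketch below (the function name is hypothetical, and this quadrature is not part of the proposed method) freezes the order at the outer time t, removes the singularity with the substitution \(w=(t-s)^{\alpha }/\alpha\), which turns the integral into \(\int _0^{t^{\alpha }/\alpha } f\bigl(t-(\alpha w)^{1/\alpha }\bigr)\,\mathrm{d}w\), and applies the composite midpoint rule. For \(f(s)=s\) the result can be checked against the closed form \(\Gamma (2)\,t^{1+\alpha (t)}/\Gamma (2+\alpha (t))\).

```python
import math


def rl_integral_variable_order(f, alpha, t, n=20000):
    """Approximate (I^{alpha(t)} f)(t) = 1/Gamma(alpha(t)) * int_0^t (t-s)^(alpha(t)-1) f(s) ds.

    The order is evaluated once at the outer time t, as in Definition 1.
    Substituting w = (t-s)^a / a removes the weak singularity; the smooth
    integral int_0^{t^a/a} f(t - (a w)^(1/a)) dw is then approximated by the
    composite midpoint rule with n cells.
    """
    a = alpha(t)
    if a == 0:
        return f(t)  # I^0 f = f
    upper = t ** a / a
    h = upper / n
    total = 0.0
    for k in range(n):
        w = (k + 0.5) * h
        total += f(t - (a * w) ** (1.0 / a))
    return total * h / math.gamma(a)


def alpha(t):
    """Hypothetical variable order used only for this example."""
    return 0.5 + 0.3 * t


print(rl_integral_variable_order(lambda s: s, alpha, 0.8))
```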

2.2 The Block-Pulse Functions

Definition 2

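(Maleknejad et al. 2012) Consider a vector of block-pulse functions with \(\hat{m}\) components over the interval \([0,{\textbf {T}})\):

\[
\overrightarrow{\Phi _{\hat{m}}}(t)=[\phi _1(t),\phi _2(t),\ldots ,\phi _{\hat{m}}(t)]^T, \tag{4}
\]

where the ith component of this vector is defined by

\[
\phi _i(t)=\begin{cases}1, & \frac{(i-1){\textbf {T}}}{\hat{m}}\le t<\frac{i{\textbf {T}}}{\hat{m}},\\ 0, & \text{otherwise},\end{cases}\qquad i=1,2,\ldots ,\hat{m}. \tag{5}
\]

Any absolutely integrable function f(t) defined over the interval \([0,{\textbf {T}})\) can be expanded in \(\hat{m}\) block-pulse functions as follows:

\[
f(t)\simeq \sum _{i=1}^{\hat{m}} f_i\phi _i(t)=\overrightarrow{F_{\hat{m}}}^T\overrightarrow{\Phi _{\hat{m}}}(t), \tag{6}
\]

where \(\overrightarrow{\Phi _{\hat{m}}}(t)\) is defined in Eq. (4) and \(\overrightarrow{F_{\hat{m}}}=[f_1,f_2,\ldots ,f_{\hat{m}}]^T\). Furthermore, the ith component of \(\overrightarrow{F_{\hat{m}}}\) is calculated by

\[
f_i=\frac{\hat{m}}{{\textbf {T}}}\int _{(i-1){\textbf {T}}/\hat{m}}^{i{\textbf {T}}/\hat{m}} f(t)\,\mathrm{d}t. \tag{7}
\]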

Remark 1

(Kilicman and Al Zhour 2007) The l-fold integration and differentiation of the block-pulse vector \(\overrightarrow{\Phi _{\hat{m}}}(t)\) are approximated as follows:

where \({\textbf {I}}_{\hat{m}}^{(l)}\) and \({\textbf {D}}_{\hat{m}}^{(l)}\) are called the l-fold block-pulse omi and omd, respectively, and are given by

and

where \(\xi _j=(j+1)^{l+1}-2j^{l+1}+(j-1)^{l+1}\) and \(\zeta _j=-\sum _{i=1}^j\xi _i \zeta _{j-i}\) and \(\zeta _0=1\).
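The matrices of Remark 1 can be assembled directly from \(\xi _j\) and \(\zeta _j\). The Python sketch below assumes the upper-triangular Toeplitz form of Kilicman and Al Zhour (2007), namely \({\textbf {I}}_{\hat{m}}^{(l)}=\frac{h^l}{(l+1)!}\,\mathrm{Toeplitz}(1,\xi _1,\ldots ,\xi _{\hat{m}-1})\) and \({\textbf {D}}_{\hat{m}}^{(l)}=\frac{(l+1)!}{h^l}\,\mathrm{Toeplitz}(1,\zeta _1,\ldots ,\zeta _{\hat{m}-1})\) with \(h={\textbf {T}}/\hat{m}\); treat that structure as an assumption. Under it, the recursion for \(\zeta _j\) makes \(\zeta\) the inverse power series of \(\xi\), so \({\textbf {I}}_{\hat{m}}^{(l)}{\textbf {D}}_{\hat{m}}^{(l)}\) should be the identity. The helper names are hypothetical.

```python
from math import factorial


def toeplitz_upper(first_row):
    """Upper-triangular Toeplitz matrix whose first row is `first_row`."""
    m = len(first_row)
    return [[first_row[j - i] if j >= i else 0.0 for j in range(m)] for i in range(m)]


def block_pulse_matrices(m, T, l=1):
    """Assumed block-pulse omi I^(l) and omd D^(l) for m functions on [0, T).

    I^(l) = h^l/(l+1)! * Toeplitz(1, xi_1, ..., xi_{m-1})
    D^(l) = (l+1)!/h^l * Toeplitz(1, zeta_1, ..., zeta_{m-1}),  h = T/m.
    """
    h = T / m
    xi = [1.0] + [float((j + 1) ** (l + 1) - 2 * j ** (l + 1) + (j - 1) ** (l + 1))
                  for j in range(1, m)]
    zeta = [1.0]  # zeta_0 = 1
    for j in range(1, m):
        # zeta_j = -sum_{i=1}^{j} xi_i * zeta_{j-i}
        zeta.append(-sum(xi[i] * zeta[j - i] for i in range(1, j + 1)))
    c = h ** l / factorial(l + 1)
    I_l = [[c * v for v in row] for row in toeplitz_upper(xi)]
    D_l = [[v / c for v in row] for row in toeplitz_upper(zeta)]
    return I_l, D_l


def matmul(A, B):
    """Plain nested-list matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


# m = 8 block-pulse functions on [0, 1) with l = 1
I1, D1 = block_pulse_matrices(8, 1.0, l=1)
print(I1[0])  # first row: h/2 * (1, 2, 2, ..., 2) with h = 1/8
```

For l = 1 every \(\xi _j\) equals 2, so \({\textbf {I}}_{\hat{m}}^{(1)}\) reduces to the familiar block-pulse integration matrix \(\frac{h}{2}\) times the Toeplitz matrix with first row \((1,2,\ldots ,2)\).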

2.3 The Hat Functions

Definition 3

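(Hashemi et al. 2017) In a hat functions vector \(\overrightarrow{\Psi _{\hat{m}}}(t)=[\psi _0(t), \psi _1(t),\ldots , \psi _{\hat{m}-1}(t)]^T\) with \(\hat{m}\) components over the interval \([0,{\textbf {T}})\), the first component is defined as follows:

\[
\psi _0(t)=\begin{cases}\frac{h-t}{h}, & 0\le t<h,\\ 0, & \text{otherwise}.\end{cases} \tag{11}
\]

The ith component, \(i=1,2,\ldots ,\hat{m}-2\), is defined as follows:

\[
\psi _i(t)=\begin{cases}\frac{t-(i-1)h}{h}, & (i-1)h\le t<ih,\\ \frac{(i+1)h-t}{h}, & ih\le t<(i+1)h,\\ 0, & \text{otherwise}.\end{cases} \tag{12}
\]

Finally, the last component is defined as follows:

\[
\psi _{\hat{m}-1}(t)=\begin{cases}\frac{t-({\textbf {T}}-h)}{h}, & {\textbf {T}}-h\le t\le {\textbf {T}},\\ 0, & \text{otherwise},\end{cases} \tag{13}
\]

where \(h=\frac{{\textbf {T}}}{\hat{m}-1}\).

Every continuous function g(t) can be approximated by \(\hat{m}\) hat functions as follows:

\[
g(t)\simeq \sum _{i=0}^{\hat{m}-1} g_i\psi _i(t)=\overrightarrow{G_{\hat{m}}}^T\overrightarrow{\Psi _{\hat{m}}}(t), \tag{14}
\]

where \(\overrightarrow{G_{\hat{m}}}=[g_0,g_1,\ldots , g_{\hat{m}-1}]^T\) and \(g_i=g(ih)\) for \(i=0,1,\ldots , \hat{m}-1\).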
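Because \(g_i=g(ih)\), the hat-function expansion is simply piecewise linear interpolation at the nodes ih. A minimal Python sketch (all function names are hypothetical) writes each \(\psi _i\) compactly as the tent \(\max (0,1-|t-ih|/h)\), which agrees with the piecewise definitions of \(\psi _0\), \(\psi _i\) and \(\psi _{\hat{m}-1}\) on \([0,{\textbf {T}}]\):

```python
def hat_functions(t, m, T):
    """[psi_0(t), ..., psi_{m-1}(t)] for m hat functions on [0, T], h = T/(m-1).

    Each psi_i is the tent max(0, 1 - |t - i h| / h).
    """
    h = T / (m - 1)
    return [max(0.0, 1.0 - abs(t - i * h) / h) for i in range(m)]


def hat_coefficients(g, m, T):
    """Hat-function coefficients g_i = g(i h), i = 0, ..., m-1."""
    h = T / (m - 1)
    return [g(i * h) for i in range(m)]


def hat_approx(coeffs, t, T):
    """Evaluate G^T Psi(t), i.e. the piecewise linear interpolant of g."""
    psi = hat_functions(t, len(coeffs), T)
    return sum(c * p for c, p in zip(coeffs, psi))


# Interpolate g(t) = t^2 with 101 hat functions on [0, 1].
G = hat_coefficients(lambda s: s * s, 101, 1.0)
print(hat_approx(G, 0.555, 1.0))
```

The hat functions form a partition of unity, and linear functions are reproduced exactly; for smooth g the error is at most of order \(h^2\).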

Theorem 1

(Heydari et al. 2019) Consider a positive continuous function \(\alpha (t): [0,{\textbf {T}})\rightarrow \mathbb {R}^+\) and the hat functions vector \(\overrightarrow{\Psi _{\hat{m}}}(t)\). The Riemann–Liouville fractional integral of \(\overrightarrow{\Psi _{\hat{m}}}(t)\) of variable order \(\alpha (t)\), denoted by \(\bigl ({\mathcal {I}}^{\alpha (t)}\overrightarrow{\Psi _{\hat{m}}}\bigr )(t)\), is approximated as follows:

\[
\bigl ({\mathcal {I}}^{\alpha (t)}\overrightarrow{\Psi _{\hat{m}}}\bigr )(t)\simeq {\textbf {L}}^{\alpha (t)}_{\hat{m}}\overrightarrow{\Psi _{\hat{m}}}(t), \tag{15}
\]

where \({\textbf {L}}^{\alpha (t)}_{\hat{m}}\) is called the variable order fractional omi for hat functions and is computed as follows:

where

and for \(i,j=1,\ldots ,\hat{m}-1\),

Theorem 2

(Heydari et al. 2019) Assume that \(\overrightarrow{\Phi _{\hat{m}}}(t)\) and \(\overrightarrow{\Psi _{\hat{m}}}(t)\) denote the block-pulse and hat functions vectors, respectively. The vector \(\overrightarrow{\Phi _{\hat{m}}}(t)\) can be approximated as follows:

\[
\overrightarrow{\Phi _{\hat{m}}}(t)\simeq {\textbf {R}}_{\hat{m}}\overrightarrow{\Psi _{\hat{m}}}(t), \tag{17}
\]

where \({\textbf {R}}_{\hat{m}}=({\textbf {r}}_{ij})\) is a matrix of order \(\hat{m}\times \hat{m}\) whose elements are computed from the following formula

2.4 The vofBm Process Simulation

In this section, the block-pulse and hat functions introduced in Subsects. 2.2 and 2.3 are used to simulate the vofBm process. The construction is divided into two steps. In the first step, the sBm process is constructed from its defining properties and spline interpolation; other interpolation methods, such as "linear", "nearest", "cubic" and "pchip", can be used instead of spline interpolation. In the second step, the simulated sBm process is approximated by block-pulse functions, and then the relationship between block-pulse and hat functions is used to obtain the vofBm process.

Step 1. Simulation of the sBm process. We begin by recalling the properties of sBm. This process, denoted by \(B(t), t\in [0,{\textbf {T}}]\), has the following properties (Mirzaee and Samadyar 2018):

- \(B(0)=0\).
- The increment \(B(t)-B(s)\), where \(0\le s<t\le {\textbf {T}}\), is normally distributed with mean 0 and variance \(t-s\), i.e., \(B(t)-B(s)\sim \sqrt{t-s}\,{\mathcal {N}}(0,1)\), where \({\mathcal {N}}(0,1)\) denotes the normal distribution with mean 0 and variance 1.
- The increments \(B(t)-B(s)\) and \(B(v)-B(u)\), where \(0\le u<v<s<t\le {\textbf {T}}\), are independent.

To construct sBm, we first choose a large positive integer N and let \(\delta t=\frac{{\textbf {T}}}{N}\). Then, we consider the nodal points \(t_j=j\delta t\) for \(j=0,1,\ldots ,N\). The first property of sBm tells us that \(B(0)=0\) with probability 1, and the second and third properties ensure that \(B(t_j)=B(t_{j-1})+\delta B(t_j)\), \(j=1,2,\ldots ,N\), where the \(\delta B(t_j) \sim \sqrt{\delta t}{\mathcal {N}}(0,1)\) are independent random variables. This procedure creates a discretized sBm, and spline interpolation is then used to obtain a continuous function for sBm. The Matlab code for simulating the sBm process over the interval [0, 1] with \(N=100\) is presented in Algorithm 1. The command "randn" generates a random number from the normal distribution with mean 0 and variance 1.

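Step 2. Simulation of vofBm. As mentioned in Sect. 2.2, every absolutely integrable function can be expanded in terms of block-pulse functions. Hence, sBm can be expanded as follows:

\[
B(t)\simeq \overrightarrow{B_{\hat{m}}}^T\overrightarrow{\Phi _{\hat{m}}}(t), \tag{19}
\]

where \(\overrightarrow{B_{\hat{m}}}=[b_1,b_2,\ldots ,b_{\hat{m}}]^T\), \(b_i\simeq B\Bigl (\frac{(2i-1){\textbf {T}}}{2\hat{m}}\Bigr )\) for \(i=1,2,\ldots , \hat{m}\), and the block-pulse vector \(\overrightarrow{\Phi _{\hat{m}}}(t)\) is defined in Eq. (4). By substituting Eq. (19) into Eq. (1), we obtain

\[
B^{H(t)}(t)\simeq \frac{1}{\Gamma \bigl(H(t)+\frac{1}{2}\bigr)}\int _0^t (t-s)^{H(t)-\frac{1}{2}}\,\overrightarrow{B_{\hat{m}}}^T\frac{\mathrm{d}}{\mathrm{d}s}\overrightarrow{\Phi _{\hat{m}}}(s)\,\mathrm{d}s=\overrightarrow{B_{\hat{m}}}^T\Bigl ({\mathcal {I}}^{\alpha (t)}\frac{\mathrm{d}}{\mathrm{d}t}\overrightarrow{\Phi _{\hat{m}}}\Bigr )(t),\qquad \alpha (t)=H(t)+\frac{1}{2}. \tag{20}
\]

From Eqs. (8) and (17), we conclude

\[
B^{H(t)}(t)\simeq \overrightarrow{B_{\hat{m}}}^T{\textbf {D}}_{\hat{m}}^{(1)}{\textbf {R}}_{\hat{m}}\bigl ({\mathcal {I}}^{\alpha (t)}\overrightarrow{\Psi _{\hat{m}}}\bigr )(t). \tag{21}
\]

Finally, Eq. (15) yields

\[
B^{H(t)}(t)\simeq \overrightarrow{B_{\hat{m}}}^T{\textbf {D}}_{\hat{m}}^{(1)}{\textbf {R}}_{\hat{m}}{\textbf {L}}_{\hat{m}}^{\alpha (t)}\overrightarrow{\Psi _{\hat{m}}}(t). \tag{22}
\]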

The vofBm process simulation steps are summarized in Algorithm 2.

Algorithm 1.

```matlab
N = 100;                 % number of subintervals
T = 1;                   % final time
dt = T/N;                % step size delta t
t = zeros(N+1,1);
for i = 0:N
    t(i+1,1) = i*dt;     % nodal points t_j = j*dt
end
B = zeros(N+1,1);        % B(0) = 0
for i = 2:N+1
    B(i,1) = B(i-1,1) + sqrt(dt)*randn;   % independent N(0, dt) increments
end
sBm = @(s) interp1(t, B, s, 'spline');    % continuous sBm by spline interpolation
plot(t, B);
```

| Algorithm 2. |
|---|
| Input: \(\hat{m}, H(t)\). |
| Output: \(B^{H(t)}(t)\). |
| Step 1: Compute \(h=\frac{{\textbf {T}}}{\hat{m}-1}\). |
| Step 2: Compute the vector \(\overrightarrow{B_{\hat{m}}}=[b_1,b_2,\ldots ,b_{\hat{m}}]^T\). |
| Step 3: Compute the matrix \({\textbf {D}}_{\hat{m}}^{(l)}\) for \(l=1\) from Eq. (10). |
| Step 4: Compute the matrix \({\textbf {R}}_{\hat{m}}\) from Theorem 2. |
| Step 5: Compute the matrix \({\textbf {L}}_{\hat{m}}^{\alpha (t)}\) for \(\alpha (t)=H(t)+\frac{1}{2}\). |
| Step 6: Compute \(B^{H(t)}(t)\) from Eq. (22). |
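As an independent cross-check of Algorithm 2, Eq. (1) can also be discretized directly on the grid \(t_k=k{\textbf {T}}/N\). Replacing the white noise on \([t_j,t_{j+1}]\) by \(\delta B(t_{j+1})/\delta t\) and integrating the kernel exactly gives \(B^{H(t_k)}(t_k)\approx \frac{1}{\Gamma (\alpha _k)}\sum _{j<k}\frac{(t_k-t_j)^{\alpha _k}-(t_k-t_{j+1})^{\alpha _k}}{\alpha _k}\,\frac{\delta B(t_{j+1})}{\delta t}\) with \(\alpha _k=H(t_k)+\frac{1}{2}\). This is a different and simpler discretization than the block-pulse/hat approach above, shown only as a Python sketch with hypothetical names; for \(H(t)=\frac{1}{2}\) it reduces exactly to the sBm path.

```python
import math
import random


def simulate_sbm_increments(N, T, seed=None):
    """Independent N(0, dt) increments of sBm on N equal steps of [0, T]."""
    rng = random.Random(seed)
    dt = T / N
    return [math.sqrt(dt) * rng.gauss(0.0, 1.0) for _ in range(N)]


def vofbm_riemann_liouville(dB, T, H):
    """Direct discretization of Eq. (1) on t_k = k*T/N, k = 0, ..., N.

    On each step [t_j, t_{j+1}] the white noise is replaced by dB[j]/dt and the
    kernel (t_k - s)^(H(t_k) - 1/2) is integrated exactly over that step.
    """
    N = len(dB)
    dt = T / N
    path = [0.0]
    for k in range(1, N + 1):
        tk = k * dt
        a = H(tk) + 0.5                               # alpha(t_k) = H(t_k) + 1/2
        acc = 0.0
        for j in range(k):
            upper = (tk - j * dt) ** a                # (t_k - t_j)^a
            lower = max(0.0, tk - (j + 1) * dt) ** a  # (t_k - t_{j+1})^a
            acc += (upper - lower) / a * dB[j] / dt
        path.append(acc / math.gamma(a))
    return path


dB = simulate_sbm_increments(200, 1.0, seed=1)
path = vofbm_riemann_liouville(dB, 1.0, lambda t: 0.5 + 0.3 * math.sin(math.pi * t))
```

The double loop costs \(O(N^2)\) operations, which is acceptable for the moderate N used in Sect. 6.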

3 Bernoulli Polynomials

Definition 4

(Bazm 2015) The Bernoulli polynomials satisfy the following formula:

Equation (23) can be written in the following matrix form:

\[
\overrightarrow{X_{\hat{n}}}(t)={\textbf {G}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t), \tag{24}
\]

where \(\overrightarrow{X_{\hat{n}}}(t)=[1,t,t^2,\ldots , t^{\hat{n}-1}]^T\), \(\overrightarrow{\Upsilon _{\hat{n}}}(t)=[{\mathfrak {B}}_0(t),{\mathfrak {B}}_1(t),\ldots , {\mathfrak {B}}_{\hat{n}-1}(t)]^T\) denotes the Bernoulli basis vector, and \({\textbf {G}}_{\hat{n}}=({\textbf {g}}_{ij})\) is a lower triangular matrix of order \(\hat{n}\times \hat{n}\) and

Since all diagonal elements of \({\textbf {G}}_{\hat{n}}\) are nonzero, \({\textbf {G}}_{\hat{n}}\) is nonsingular, and the Bernoulli basis vector can be calculated directly from

\[
\overrightarrow{\Upsilon _{\hat{n}}}(t)={\textbf {G}}_{\hat{n}}^{-1}\overrightarrow{X_{\hat{n}}}(t). \tag{26}
\]
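The matrix \({\textbf {G}}_{\hat{n}}\) can be formed and inverted in exact rational arithmetic. This Python sketch assumes that Eq. (23) is the identity \(\sum _{k=0}^{n}\binom{n+1}{k}{\mathfrak {B}}_k(t)=(n+1)t^n\), which gives \({\textbf {g}}_{ij}=\binom{i}{j-1}/i\) for \(j\le i\) (1-based) and a unit diagonal. Forward substitution then yields the rows of \({\textbf {G}}_{\hat{n}}^{-1}\), that is, the Bernoulli polynomials in monomial form, as in Eq. (26). Function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def bernoulli_G(n_hat):
    """Lower-triangular G with X(t) = G Upsilon(t), assuming Eq. (23) reads
    sum_{k=0}^{n} C(n+1, k) B_k(t) = (n+1) t^n  (0-based: G[n][k] = C(n+1,k)/(n+1))."""
    return [[Fraction(comb(n + 1, k), n + 1) if k <= n else Fraction(0)
             for k in range(n_hat)] for n in range(n_hat)]


def bernoulli_from_G(n_hat):
    """Rows of G^{-1}: monomial coefficients of B_0, ..., B_{n_hat-1}.

    G has a unit diagonal, so forward substitution gives
    B_n(t) = t^n - sum_{k<n} G[n][k] B_k(t).
    """
    G = bernoulli_G(n_hat)
    polys = []
    for n in range(n_hat):
        coeffs = [Fraction(0)] * n_hat
        coeffs[n] = Fraction(1)
        for k in range(n):
            for q in range(n_hat):
                coeffs[q] -= G[n][k] * polys[k][q]
        polys.append(coeffs)
    return polys


B = bernoulli_from_G(5)
print(B[2])  # coefficients of B_2(t) = t^2 - t + 1/6, lowest degree first
```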

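Every integrable function u(t) can be expanded in terms of \(\hat{n}\) Bernoulli polynomials as follows:

\[
u(t)\simeq \sum _{i=0}^{\hat{n}-1}u_i{\mathfrak {B}}_i(t)=\overrightarrow{U_{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t), \tag{27}
\]

where \(\overrightarrow{U_{\hat{n}}}=[u_0,u_1,\ldots ,u_{\hat{n}-1}]^T\), and the component \(u_i\) is computed from the following formula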
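For a polynomial input the expansion can be computed exactly. The Python sketch below assumes the standard Bernoulli expansion coefficients \(u_0=\int _0^1u(t)\,\mathrm{d}t\) and \(u_i=\frac{u^{(i-1)}(1)-u^{(i-1)}(0)}{i!}\) for \(i\ge 1\) (equivalently \(\frac{1}{i!}\int _0^1u^{(i)}(t)\,\mathrm{d}t\)); that this matches the formula above term for term is an assumption. It illustrates the claim in Sect. 1 that a polynomial of degree at most \(\hat{n}-1\) is reproduced exactly. Function names are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial


def bernoulli_polys(count):
    """Monomial coefficients of B_0..B_{count-1}, from sum_k C(n+1,k) B_k = (n+1) t^n."""
    polys = []
    for n in range(count):
        c = [Fraction(0)] * count
        c[n] = Fraction(1)
        for k in range(n):
            w = Fraction(comb(n + 1, k), n + 1)
            c = [a - w * b for a, b in zip(c, polys[k])]
        polys.append(c)
    return polys


def poly_eval(p, t):
    """Evaluate a polynomial given by monomial coefficients (lowest degree first)."""
    return sum(c * t ** i for i, c in enumerate(p))


def poly_derivative(p):
    return [i * p[i] for i in range(1, len(p))] or [0]


def bernoulli_coefficients(p, n_hat):
    """Assumed coefficients: u_0 = int_0^1 p, u_i = (p^(i-1)(1) - p^(i-1)(0)) / i!."""
    u = [sum(Fraction(c) / (i + 1) for i, c in enumerate(p))]  # exact int_0^1 p
    d = list(p)                                               # p^(i-1), starting with p
    for i in range(1, n_hat):
        u.append(Fraction(poly_eval(d, 1) - poly_eval(d, 0)) / factorial(i))
        d = poly_derivative(d)
    return u


# u(t) = 2 - t + 5 t^3 expanded with 6 Bernoulli polynomials
p = [Fraction(2), Fraction(-1), Fraction(0), Fraction(5)]
u = bernoulli_coefficients(p, 6)
B = bernoulli_polys(6)
recon = [sum(u[i] * B[i][q] for i in range(6)) for q in range(6)]
print(recon)  # reproduces p, padded with zeros
```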

Theorem 3

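(Bazm 2015) The integral of the Bernoulli vector \(\overrightarrow{\Upsilon _{\hat{n}}}(s)\) with respect to s over the interval [0, t] can be approximated as follows:

\[
\int _0^t \overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}s\simeq \overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t), \tag{29}
\]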

where the matrix \(\overline{{\textbf {P}}}_{\hat{n}}\) is called the omi based on Bernoulli polynomials and is computed as follows:
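The integration matrix rests on the identity \(\int _0^t{\mathfrak {B}}_n(s)\,\mathrm{d}s=\frac{{\mathfrak {B}}_{n+1}(t)-{\mathfrak {B}}_{n+1}(0)}{n+1}\). Hence, for \(n\le \hat{n}-2\), row n (0-based) has \(-{\mathfrak {B}}_{n+1}(0)/(n+1)\) in column 0 and \(1/(n+1)\) in column \(n+1\). The last row involves \({\mathfrak {B}}_{\hat{n}}\), which lies outside the basis; how Eq. (30) handles it is not reproduced in this Python sketch, which builds only the other rows and checks them in exact arithmetic. Function names are hypothetical, and the Bernoulli construction assumes the identity used for Eq. (23) above.

```python
from fractions import Fraction
from math import comb


def bernoulli_polys(count):
    """Monomial coefficients of B_0..B_{count-1}, from sum_k C(n+1,k) B_k = (n+1) t^n."""
    polys = []
    for n in range(count):
        c = [Fraction(0)] * count
        c[n] = Fraction(1)
        for k in range(n):
            w = Fraction(comb(n + 1, k), n + 1)
            c = [a - w * b for a, b in zip(c, polys[k])]
        polys.append(c)
    return polys


def integrate_from_zero(p):
    """Monomial coefficients of int_0^t p(s) ds (one entry longer than p)."""
    return [Fraction(0)] + [Fraction(c) / (i + 1) for i, c in enumerate(p)]


def omi_rows(n_hat):
    """Rows 0..n_hat-2 of the Bernoulli integration matrix, using
    int_0^t B_n(s) ds = (B_{n+1}(t) - B_{n+1}(0)) / (n+1)."""
    B = bernoulli_polys(n_hat)
    rows = []
    for n in range(n_hat - 1):
        row = [Fraction(0)] * n_hat
        row[0] = -B[n + 1][0] / (n + 1)   # -B_{n+1}(0)/(n+1), multiplies B_0 = 1
        row[n + 1] = Fraction(1, n + 1)   # coefficient of B_{n+1}(t)
        rows.append(row)
    return rows, B


rows, B = omi_rows(7)
print(rows[0])  # int_0^t B_0 = t = 1/2 * B_0 + B_1
```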

In the following, we state and prove a new theorem on the stochastic omi driven by vofBm.

Theorem 4

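The stochastic integral of the Bernoulli polynomials vector \(\overrightarrow{\Upsilon _{\hat{n}}}(s)\) with respect to vofBm \(B^{H(s)}(s)\) over the interval [0, t] can be approximated as follows:

\[
\int _0^t \overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}B^{H(s)}(s)\simeq \overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t), \tag{31}
\]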

where \(\overline{{\textbf {P}}}_{\hat{n}}^s\) is a matrix of order \(\hat{n}\times \hat{n}\), called the stochastic omi driven by vofBm. Moreover, \(\overline{{\textbf {P}}}_{\hat{n}}^s={\textbf {G}}^{-1}_{\hat{n}} {\textbf {A}}_{\hat{n}}{\textbf {G}}_{\hat{n}}\), where \({\textbf {A}}_{\hat{n}}=({\textbf {a}}_{ij})\) is a diagonal matrix with the following diagonal entries

Proof

From Eq. (26), we have

Using integration by parts, we obtain

Let \(\overrightarrow{\Xi _{\hat{n}}}(t)=\int _0^t \overrightarrow{X_{\hat{n}}}(s)\rm{d}B^{H(s)}(s)\), where \(\overrightarrow{\Xi _{\hat{n}}}(t)=[\varpi _0(t), \varpi _1(t),\ldots , \varpi _{\hat{n}-1}(t)]^T\) and

The integral in Eq. (34) is approximated by the composite trapezoidal rule. Thus, we obtain

The values of \(B^{H(t)}(t)\) and \(B^{H(\frac{t}{2})}\bigl (\frac{t}{2}\bigl )\) in Eq. (35) for \(0\le t\le 1\) can be approximated by \(\varpi :=B^{H(0.5)}(0.5)\) and \(\varrho :=B^{H(0.25)}(0.25)\), respectively. So, we can write

Using Eqs. (24), (33) and (36), we have

where \(\overline{{\textbf {P}}}_{\hat{n}}^s ={\textbf {G}}_{\hat{n}} ^{-1}{} {\textbf {A}}_{\hat{n}}{} {\textbf {G}}_{\hat{n}}\). \(\square\)
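The following worked derivation illustrates the quadrature step of the proof under an explicit assumption: that the composite trapezoidal rule in Eq. (35) uses the two subintervals \([0,t/2]\) and \([t/2,t]\), as the appearance of \(B^{H(t/2)}(t/2)\) suggests. It is a reconstruction for illustration, not a restatement of Eqs. (32)–(37). For the monomial \(s^i\), \(i=0,1,\ldots ,\hat{n}-1\), integration by parts and \(B^{H(0)}(0)=0\) give (the node s = 0 contributes nothing):

```latex
\begin{aligned}
\varpi_i(t) &= \int_0^t s^i\,\mathrm{d}B^{H(s)}(s)
  = t^i B^{H(t)}(t) - i\int_0^t s^{i-1}B^{H(s)}(s)\,\mathrm{d}s \\
&\approx t^i B^{H(t)}(t) - \frac{i\,t}{4}\Bigl[2\Bigl(\frac{t}{2}\Bigr)^{i-1}
  B^{H(t/2)}\Bigl(\frac{t}{2}\Bigr) + t^{i-1}B^{H(t)}(t)\Bigr] \\
&= t^i\Bigl[\Bigl(1-\frac{i}{4}\Bigr)B^{H(t)}(t)
  - \frac{i}{2^{i}}\,B^{H(t/2)}\Bigl(\frac{t}{2}\Bigr)\Bigr].
\end{aligned}
```

For i = 0 the bracket reduces to \(B^{H(t)}(t)\), as it should. Replacing \(B^{H(t)}(t)\) and \(B^{H(t/2)}(t/2)\) by the constants \(\varpi\) and \(\varrho\) of Eq. (36) then gives \(\overrightarrow{\Xi _{\hat{n}}}(t)\approx {\textbf {A}}_{\hat{n}}\overrightarrow{X_{\hat{n}}}(t)\) with diagonal entries \(\bigl(1-\frac{i}{4}\bigr)\varpi -\frac{i}{2^{i}}\varrho\) under this assumption.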

4 Numerical Scheme

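The nonlinear SDE (2) can be written in the following SIE form:

\[
U(t)=U_0+\int _0^t \mu \bigl(s,U(s)\bigr)\,\mathrm{d}s+\int _0^t \sigma \bigl(s,U(s)\bigr)\,\mathrm{d}B^{H(s)}(s),\qquad t\in [0,{\textbf {T}}]. \tag{38}
\]

Let

\[
\omega (t)=\mu \bigl(t,U(t)\bigr),\qquad \theta (t)=\sigma \bigl(t,U(t)\bigr). \tag{39}
\]

Thus, we should solve the following SIE driven by vofBm:

\[
U(t)=U_0+\int _0^t \omega (s)\,\mathrm{d}s+\int _0^t \theta (s)\,\mathrm{d}B^{H(s)}(s). \tag{40}
\]

From Eqs. (39) and (40), we have

\[
\begin{cases}
\omega (t)=\mu \Bigl(t,\,U_0+\int _0^t \omega (s)\,\mathrm{d}s+\int _0^t \theta (s)\,\mathrm{d}B^{H(s)}(s)\Bigr),\\[4pt]
\theta (t)=\sigma \Bigl(t,\,U_0+\int _0^t \omega (s)\,\mathrm{d}s+\int _0^t \theta (s)\,\mathrm{d}B^{H(s)}(s)\Bigr).
\end{cases} \tag{41}
\]

The unknown functions \(\omega (t)\) and \(\theta (t)\) can be expanded in terms of Bernoulli polynomials as follows:

\[
\omega (t)\simeq \overrightarrow{\Omega _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t),\qquad \theta (t)\simeq \overrightarrow{\Theta _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t), \tag{42}
\]

where \(\overrightarrow{\Omega _{\hat{n}}}\) and \(\overrightarrow{\Theta _{\hat{n}}}\) are the Bernoulli coefficient vectors of \(\omega (t)\) and \(\theta (t)\), respectively. By inserting the approximations (42) into Eq. (41), we have

\[
\begin{cases}
\overrightarrow{\Omega _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t)\simeq \mu \Bigl(t,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\int _0^t\overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}s+\overrightarrow{\Theta _{\hat{n}}}^T\int _0^t\overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}B^{H(s)}(s)\Bigr),\\[4pt]
\overrightarrow{\Theta _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t)\simeq \sigma \Bigl(t,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\int _0^t\overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}s+\overrightarrow{\Theta _{\hat{n}}}^T\int _0^t\overrightarrow{\Upsilon _{\hat{n}}}(s)\,\mathrm{d}B^{H(s)}(s)\Bigr).
\end{cases} \tag{43}
\]

Using the operational matrices of integration defined in Eqs. (29) and (31), we have

\[
\begin{cases}
\overrightarrow{\Omega _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t)\simeq \mu \bigl(t,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t)+\overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t)\bigr),\\[4pt]
\overrightarrow{\Theta _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t)\simeq \sigma \bigl(t,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t)+\overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t)\bigr).
\end{cases} \tag{44}
\]

We consider \(\hat{n}\) Newton–Cotes collocation nodes, which are calculated as follows:

By inserting the collocation points \(t_l\) into Eq. (44), we obtain the following nonlinear system of \(2\hat{n}\) algebraic equations in \(2\hat{n}\) unknowns:

\[
\begin{cases}
\overrightarrow{\Omega _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t_l)=\mu \bigl(t_l,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t_l)+\overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t_l)\bigr),\\[4pt]
\overrightarrow{\Theta _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t_l)=\sigma \bigl(t_l,\,U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t_l)+\overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t_l)\bigr),
\end{cases}\qquad l=0,1,\ldots ,\hat{n}-1. \tag{46}
\]

After solving system (46) and computing the unknown vectors, the approximate solution of Eq. (2) is obtained as follows:

\[
U(t)\simeq U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t)+\overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s\overrightarrow{\Upsilon _{\hat{n}}}(t). \tag{47}
\]

The proposed method is summarized step by step in Algorithm 3.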

| Algorithm 3. |
|---|
| Input: The number \(\hat{n}\), the vofBm \(B^{H(t)}(t)\), the initial value \(U_0\), and the functions \(\mu , \sigma : [0,1]\times \mathbb {R} \rightarrow \mathbb {R}\). |
| Output: The numerical solution \(U(t)\simeq U_0+\overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t) + \overrightarrow{\Theta _{\hat{n}}}^T \overline{{\textbf {P}}}_{\hat{n}}^s \overrightarrow{\Upsilon _{\hat{n}}}(t)\). |
| Step 1: Construct the vector \(\overrightarrow{\Upsilon _{\hat{n}}}(t)=[{\mathfrak {B}}_0(t),{\mathfrak {B}}_1(t),\ldots , {\mathfrak {B}}_{\hat{n}-1}(t)]^T\), where \({\mathfrak {B}}_i(t)\), \(i=0,1,\ldots , \hat{n}-1\), satisfy Eq. (23). |
| Step 2: Let \(\omega (t)=\mu \bigl (t,U(t)\bigr )\) and \(\theta (t)=\sigma \bigl (t,U(t)\bigr )\). |
| Step 3: Define the Bernoulli coefficient vectors \(\overrightarrow{\Omega _{\hat{n}}}\) and \(\overrightarrow{\Theta _{\hat{n}}}\) of \(\omega (t)\) and \(\theta (t)\), respectively. |
| Step 4: Compute the matrix \(\overline{{\textbf {P}}}_{\hat{n}}\) using Eq. (30). |
| Step 5: Compute the matrix \({\textbf {G}}_{\hat{n}}=({\textbf {g}}_{ij})\), whose entries \({\textbf {g}}_{ij}\), \(i,j=1,2,\ldots , \hat{n}\), are given by Eq. (25). |
| Step 6: Compute the diagonal matrix \({\textbf {A}}_{\hat{n}}=({\textbf {a}}_{ij})\), whose diagonal entries are given by Eq. (32). |
| Step 7: Calculate the matrix \(\overline{{\textbf {P}}}_{\hat{n}}^s ={\textbf {G}}_{\hat{n}} ^{-1}{\textbf {A}}_{\hat{n}}{\textbf {G}}_{\hat{n}}\). |
| Step 8: Compute the \(\hat{n}\) Newton–Cotes collocation nodes \(t_l\), \(l=0,1,\ldots , \hat{n}-1\), using Eq. (45). |
| Step 9: Form the system \({\left\{ \begin{array}{ll} \overrightarrow{\Omega _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t_l)=\mu \bigl ( t_l,U_0+ \overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t_l) + \overrightarrow{\Theta _{\hat{n}}}^T \overline{{\textbf {P}}}_{\hat{n}}^s \overrightarrow{\Upsilon _{\hat{n}}}(t_l)\bigr )\\ \overrightarrow{\Theta _{\hat{n}}}^T\overrightarrow{\Upsilon _{\hat{n}}}(t_l)=\sigma \bigl ( t_l,U_0+ \overrightarrow{\Omega _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}\overrightarrow{\Upsilon _{\hat{n}}}(t_l) + \overrightarrow{\Theta _{\hat{n}}}^T\overline{{\textbf {P}}}_{\hat{n}}^s \overrightarrow{\Upsilon _{\hat{n}}}(t_l)\bigr ) \end{array}\right. }\). |
| Step 10: Solve the nonlinear system of Step 9 by Newton's method to obtain the unknown vectors \(\overrightarrow{\Omega _{\hat{n}}}\) and \(\overrightarrow{\Theta _{\hat{n}}}\). |
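To show how the steps of Algorithm 3 fit together, the Python sketch below applies the same collocation idea to a drift-only test problem (\(\sigma =0\), so the stochastic omi drops out) with the hypothetical linear drift \(\mu (t,u)=\lambda u\), whose exact solution is \(U_0e^{\lambda t}\). Two simplifications are assumptions: \(\int _0^t{\mathfrak {B}}_k(s)\,\mathrm{d}s\) is integrated exactly as a polynomial instead of through the matrix \(\overline{{\textbf {P}}}_{\hat{n}}\), and the nodes \(t_l=\frac{2l+1}{2\hat{n}}\) stand in for the Newton–Cotes nodes of Eq. (45). Because this \(\mu\) is linear, the collocation system is linear and is solved by Gaussian elimination; a nonlinear \(\mu\) would require Newton's method, as in Step 10. All names are hypothetical.

```python
import math
from fractions import Fraction


def bernoulli_polys(count):
    """Monomial coefficients of B_0..B_{count-1}, from sum_k C(n+1,k) B_k = (n+1) t^n."""
    polys = []
    for n in range(count):
        c = [Fraction(0)] * count
        c[n] = Fraction(1)
        for k in range(n):
            w = Fraction(math.comb(n + 1, k), n + 1)
            c = [a - w * b for a, b in zip(c, polys[k])]
        polys.append(c)
    return polys


def poly_eval(p, t):
    return sum(float(c) * t ** i for i, c in enumerate(p))


def poly_integral(p):
    """Coefficients of int_0^t p(s) ds."""
    return [Fraction(0)] + [c / (i + 1) for i, c in enumerate(p)]


def solve(A, b):
    """Dense Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def collocate_linear_drift(lam, U0, n_hat):
    """Bernoulli collocation for U' = lam*U, U(0) = U0 (sigma = 0).

    omega(t) = lam*U(t) is expanded as sum_k Omega_k B_k(t), and
    U(t) = U0 + sum_k Omega_k J_k(t) with J_k(t) = int_0^t B_k(s) ds.
    """
    B = bernoulli_polys(n_hat)
    J = [poly_integral(p) for p in B]
    nodes = [(2 * l + 1) / (2 * n_hat) for l in range(n_hat)]  # assumed nodes
    # Collocation: sum_k Omega_k (B_k(t_l) - lam J_k(t_l)) = lam U0
    A = [[poly_eval(B[k], t) - lam * poly_eval(J[k], t) for k in range(n_hat)]
         for t in nodes]
    Omega = solve(A, [lam * U0] * n_hat)
    return lambda t: U0 + sum(om * poly_eval(Jk, t) for om, Jk in zip(Omega, J))


if __name__ == "__main__":
    U = collocate_linear_drift(-1.0, 1.0, 8)
    print(abs(U(1.0) - math.exp(-1.0)))  # error at t = 1
```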

5 Error Analysis

Theorem 5

(Tohidi et al. 2013) Suppose that f(t) is an infinitely differentiable function on the interval [0, 1] and \(f_{\hat{n}}(t)\) is its approximation by Bernoulli polynomials. Then the following upper error bound holds

Theorem 6

Assume that \(\omega (t)=\mu (t,U(t))\) and \(\theta (t)=\sigma (t,U(t))\) are the exact solutions of Eq. (41) and satisfy the Lipschitz condition, i.e.,

Let

and consider

as the approximate solutions of this equation, where \(\omega _{\hat{n}}^{\hat{n}}(t)\) and \(\theta _{\hat{n}}^{\hat{n}}(t)\) are the Bernoulli approximations of \(\omega _{\hat{n}}(t)\) and \(\theta _{\hat{n}}(t)\), respectively. Also, suppose that the following assumption is satisfied

Then, the following upper error bound is obtained

where

Proof

According to Eq. (49) and Theorem 5, we have

where \({\mathcal {W}}_{\hat{n}}\) and \({\mathcal {T}}_{\hat{n}}\) have been defined in Eq. (54). On the other hand, the approximate solution of Eq. (40) is as follows:

where \({\mathcal {H}}_{\hat{n}}=\max _{t\in [0,1]} B^{H(t)}(t)\). From Eqs. (55) and (57), we get

From Eqs. (58) and (52), we conclude

\(\square\)

Remark 2

Equation (53) tells us that if \(\hat{n}\rightarrow \infty\) then \({\mathcal {W}}_{\hat{n}},{\mathcal {T}}_{\hat{n}},{\mathcal {H}}_{\hat{n}}\rightarrow 0\), and therefore \(\frac{{\mathcal {W}}_{\hat{n}}+{\mathcal {H}}_{\hat{n}}{\mathcal {T}}_{\hat{n}}}{1-{\mathcal {L}}-{\mathcal {H}}_{\hat{n}}{\mathcal {L}}} \rightarrow 0\). It means that by increasing \(\hat{n}\), the approximate solution \(U_{\hat{n}}(t)\) tends to the exact solution U(t).
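Remark 2 relies on the decay of the Bernoulli truncation error in Theorem 5. For \(u(t)=e^t\) the expansion coefficients are available in closed form, \(u_n=(e-1)/n!\) (assuming the standard coefficients \(u_0=\int _0^1u\) and \(u_n=\frac{u^{(n-1)}(1)-u^{(n-1)}(0)}{n!}\)), so the decay can be observed directly. The Python sketch below (hypothetical names) measures the maximum error on a uniform grid for several values of \(\hat{n}\); it illustrates the function-approximation part of the argument only, not the full bound (53).

```python
import math
from fractions import Fraction


def bernoulli_polys(count):
    """Monomial coefficients of B_0..B_{count-1}, from sum_k C(n+1,k) B_k = (n+1) t^n."""
    polys = []
    for n in range(count):
        c = [Fraction(0)] * count
        c[n] = Fraction(1)
        for k in range(n):
            w = Fraction(math.comb(n + 1, k), n + 1)
            c = [a - w * b for a, b in zip(c, polys[k])]
        polys.append(c)
    return polys


def max_truncation_error_exp(n_hat, grid=201):
    """Max |e^t - sum_{n<n_hat} u_n B_n(t)| over a uniform grid on [0, 1],
    with u_n = (e - 1)/n! because every derivative of e^t is e^t."""
    B = [[float(c) for c in p] for p in bernoulli_polys(n_hat)]
    u = [(math.e - 1.0) / math.factorial(n) for n in range(n_hat)]
    err = 0.0
    for j in range(grid):
        t = j / (grid - 1)
        approx = sum(un * sum(c * t ** i for i, c in enumerate(p)) for un, p in zip(u, B))
        err = max(err, abs(math.exp(t) - approx))
    return err


for n_hat in (4, 6, 8, 10):
    print(n_hat, max_truncation_error_exp(n_hat))
```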

6 Numerical Results

In this section, two numerical examples are solved by the proposed method to examine its applicability and computational efficiency. By reporting the absolute errors (AEs) for several values of \(\hat{n}\), the number of Bernoulli polynomials used, we pursue two goals: first, to investigate the theoretical results of Sect. 5 numerically, and second, to compare the presented method with the Ccw method (Heydari et al. 2019).

Example 1

(Heydari et al. 2019) Consider the following SDE driven by vofBm

such that its exact solution is given by

The method presented in Sect. 4 has been used to solve this example for \(\hat{n}=6,8,10\) and the two functions \(H(t)=0.5+0.3\sin (\pi t)\) and \(H(t)=0.6-0.2\exp (-2t)\); the AEs obtained at some nodal points are reported in Tables 1 and 2, respectively. The remaining parameters are \({\textbf {T}}=1, N=50, \hat{m}=100\), and \(U_0=\nu =\frac{1}{20}\). According to Heydari et al. (2019), their AEs were obtained with \(M=3\) and \(k=3,4,5\); that is, they used Ccw vectors with \(2^kM=24, 48, 96\) elements, which leads to larger matrices and more computation. The results in these tables show that our method is more accurate and efficient than the Ccw method and illustrate the effect of \(\hat{n}\) on the approximate solution: the AEs decrease as \(\hat{n}\) increases.

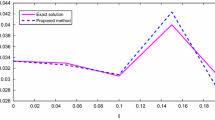

The behavior of the AEs for these parameters is illustrated in Fig. 1, which shows more clearly that the AEs decrease as the size of the Bernoulli vector increases.

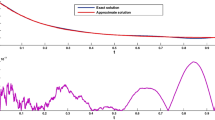

To investigate the effect of the initial value and \(\nu\) on the AEs, we set \(N=100, \hat{m}=100, \hat{n}=6, {\textbf {T}}=1, H(t)=0.3+0.2\cos (-3t)\), and run the Matlab code for \(U_0=\frac{1}{10}, \frac{1}{20}, \frac{1}{30}\) with \(\nu =\frac{1}{20}\). We then fix \(U_0=\frac{1}{20}\) and run the code for \(\nu =\frac{1}{10}, \frac{1}{20}, \frac{1}{30}\). The results, reported in Table 3 and plotted in Fig. 2, show that the AEs increase with the initial value and also increase with \(\nu\).

The graph of AEs for Example 1 with two selected H(t)

Example 2

(Heydari et al. 2019) Consider the following SDE driven by vofBm

such that its exact solution is given by

The method presented in Sect. 4 has been used to solve this example for \(\hat{n}=8,10,12\) and the two functions \(H(t)=0.7+0.2\sin (\pi t)\) and \(H(t)=0.7-0.25\exp (-t)\); the AEs obtained at some nodal points are reported in Tables 4 and 5, respectively. The remaining parameters are \({\textbf {T}}=1, N=50, \hat{m}=100\), \(U_0=0.01\), and \(\nu =\frac{1}{30}\). According to Heydari et al. (2019), their AEs were obtained with \(M=2\) and \(k=4,5,6\); that is, they used Ccw vectors with \(2^kM=32, 64, 128\) elements, which leads to larger matrices and more computation. The results in these tables show that our method is more accurate and efficient than the Ccw method and illustrate the effect of \(\hat{n}\) on the approximate solution: the AEs decrease as \(\hat{n}\) increases.

The behavior of the AEs for these parameters is illustrated in Fig. 3, which shows more clearly that the AEs decrease as the size of the Bernoulli vector increases.

To investigate the effect of the initial value and \(\nu\) on the AEs, we set \(N=100, \hat{m}=100, \hat{n}=8, {\textbf {T}}=1, H(t)=0.4+0.3t^2\), and run the Matlab code for \(U_0=0.01, 0.005, 0.001\) with \(\nu =\frac{1}{30}\). We then fix \(U_0=0.01\) and run the code for \(\nu =\frac{1}{20}, \frac{1}{30}, \frac{1}{40}\). The results, reported in Table 6 and plotted in Fig. 4, show that the AEs increase with the initial value and also increase with \(\nu\).

The graph of AEs for Example 2 with two selected H(t)

7 Application in the Real World

One of the best-known equations in ecology is the logistic equation, which has the following form:

\[
\frac{{\rm d}U(t)}{{\rm d}t}=rU(t)\left(1-\frac{U(t)}{\tau }\right). \tag{64}
\]

In Eq. (64), \(U(t)\), \(\tau >0\), and \(r\) denote the population size at time t, the carrying capacity of the environment, and the population growth rate, respectively. In the real world, the population growth rate is uncertain and can be perturbed by a white noise process \(\xi (t)\) as \(r\rightarrow r+\nu \xi (t)\), where \(\xi (t)=\frac{\rm{d}B(t)}{\rm{d}t}\) and \(\nu\) is a constant. The classical logistic equation (64) is thus transformed into the following stochastic logistic equation:

\[
{\rm d}U(t)=rU(t)\left(1-\frac{U(t)}{\tau }\right){\rm d}t+\nu U(t)\left(1-\frac{U(t)}{\tau }\right){\rm d}B(t). \tag{65}
\]

Since the population growth rate depends on time t, it is more appropriate to study the non-autonomous form of the stochastic logistic equation, which is obtained from \(r\rightarrow r(t)+\nu (t)\xi (t)\). Furthermore, the stochastic logistic equation driven by vofBm is introduced as follows:

The method introduced in Sect. 4 is applied to solve Eq. (66) for \(H(t)=0.5+0.3\cos (1000t)\) and \(H(t)=0.3+0.3\exp (-t)\), and the obtained results are plotted in Fig. 5. The other parameters are \(N=100, \hat{m}=100, \hat{n}=8, U_0=0.3, r(t)=0.2,\tau =1\), and three choices of the noise coefficient are used: \(\nu (t)=0\), \(\nu (t)=0.8+0.2\cos (t)\), and \(\nu (t)= 0.7+0.2\sin (t)\).
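The paper simulates vofBm in two steps (sBm by spline interpolation, then block-pulse and hat functions). As a simpler, independent way to visualize the two Hurst functions above, the sketch below assumes a Riemann-Liouville-type definition \(B_{H(t)}(t)=\frac{1}{\Gamma (H(t)+\frac{1}{2})}\int _0^t (t-s)^{H(t)-\frac{1}{2}}{\rm d}B(s)\) and discretizes it with a left-point rule on standard Brownian increments. Both this definition and the helper name `simulate_vofbm` are assumptions; this is not the authors' simulation scheme.

```python
from math import gamma
import numpy as np

def simulate_vofbm(H, T=1.0, n_steps=1000, rng=None):
    """Hypothetical helper: one path of a Riemann-Liouville-type vofBm
        B_H(t) = 1/Gamma(H(t) + 1/2) * int_0^t (t - s)^(H(t) - 1/2) dB(s)
    (assumed definition), using a left-point rule on Brownian increments.
    Cost is O(n_steps^2) because the kernel changes with t through H(t)."""
    rng = np.random.default_rng() if rng is None else rng
    dt = T / n_steps
    t = np.linspace(0.0, T, n_steps + 1)
    dB = rng.normal(0.0, np.sqrt(dt), n_steps)   # standard Brownian increments
    X = np.zeros(n_steps + 1)
    for j in range(1, n_steps + 1):
        h = H(t[j])
        lags = t[j] - t[:j]                       # t_j - s_i >= dt > 0 (left points)
        X[j] = np.dot(lags ** (h - 0.5), dB[:j]) / gamma(h + 0.5)
    return t, X

# The two Hurst functions used for Eq. (66) in this section
H1 = lambda t: 0.5 + 0.3 * np.cos(1000 * t)
H2 = lambda t: 0.3 + 0.3 * np.exp(-t)
rng = np.random.default_rng(0)
t, X1 = simulate_vofbm(H1, rng=rng)
_, X2 = simulate_vofbm(H2, rng=rng)
```

A quick sanity check of the discretization: for the constant choice \(H(t)=\frac{1}{2}\) the kernel is identically 1 and \(\Gamma (1)=1\), so the sketch reduces exactly to the cumulative sum of the Brownian increments, i.e., to sBm.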

8 Conclusion and Future Work

The model studied in this paper is a nonlinear SDE driven by vofBm, which has no analytical solution in many situations. Moreover, the model is so complex that, until now, only one numerical method has been proposed to solve it. To solve this model, we first derived the stochastic omi driven by vofBm; this operator, together with the ordinary omi based on Bernoulli polynomials, was then used to convert the model into a nonlinear system of algebraic equations. The resulting system was solved by Newton's method, which yields the approximate solution. In Sect. 5, we proved theoretically that the approximate solution tends to the exact solution as the number \(\hat{n}\) of Bernoulli polynomials increases. The effects of \(\hat{n}\), the initial value \(U_0\), and the constant coefficient \(\nu\) on the AEs were investigated in Sect. 6, where the presented method was also compared with the Ccw method to confirm its superiority over previous methods. The numerical results reported there for different values of \(\hat{n}\) confirm that the approximate solution converges to the exact solution as \(\hat{n}\) increases, and that an accurate approximate solution can be obtained even with a small number of basis functions and little computational effort. They also show that the AEs are directly related to \(U_0\) and \(\nu\), i.e., reducing \(U_0\) and \(\nu\) reduces the AEs. The values of N and \(\hat{m}\) also appear to affect the errors; investigating this effect is recommended for future research.
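The deterministic part of this pipeline (operational matrix of integration, conversion to a nonlinear algebraic system, Newton's method) can be sketched on the classical logistic equation (64) in integral form, \(U(t)=U_0+\int _0^t rU(s)\left(1-\frac{U(s)}{\tau }\right){\rm d}s\), with \(r=0.2\), \(\tau =1\), \(U_0=0.3\) as in Sect. 7. This is a minimal sketch under several assumptions that differ from the paper: the matrix P with \(\int _0^t \Psi (s){\rm d}s\approx P\Psi (t)\) is built by a least-squares fit of the exact antiderivatives \(\int _0^t B_k(s){\rm d}s=\frac{B_{k+1}(t)-B_{k+1}(0)}{k+1}\) on a grid, the nonlinear term is handled by interpolation at midpoint collocation nodes, and the stochastic omi for the vofBm term is omitted entirely. The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb
import numpy as np

def bernoulli_vector(t, n_hat):
    """Return the matrix [B_0(t_i), ..., B_{n_hat-1}(t_i)] for the points t_i."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    b = [Fraction(1)]  # exact Bernoulli numbers b_0 = 1, b_1 = -1/2, b_2 = 1/6, ...
    for m in range(1, n_hat):
        b.append(-sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1))
    # B_n(t) = sum_k C(n, k) b_k t^(n-k)
    return np.stack([sum(comb(n, k) * float(b[k]) * t ** (n - k) for k in range(n + 1))
                     for n in range(n_hat)], axis=1)

def integration_matrix(n_hat, n_grid=2001):
    """Operational matrix P with int_0^t Psi(s) ds ~= P Psi(t), via least squares.

    int_0^t B_k = (B_{k+1}(t) - B_{k+1}(0)) / (k + 1) is exact for k < n_hat - 1;
    only the last row, which involves B_{n_hat}, is genuinely approximated."""
    grid = np.linspace(0.0, 1.0, n_grid)
    ext = bernoulli_vector(grid, n_hat + 1)
    exact = (ext[:, 1:] - ext[0, 1:]) / np.arange(1, n_hat + 1)
    P_T = np.linalg.lstsq(ext[:, :n_hat], exact, rcond=None)[0]
    return P_T.T

def solve_logistic(n_hat=8, U0=0.3, r=0.2, tau=1.0, tol=1e-14, max_iter=50):
    """Collocation + Newton for u(t) = U0 + int_0^t r u (1 - u/tau) ds on [0, 1]."""
    nodes = (2 * np.arange(n_hat) + 1) / (2 * n_hat)   # midpoint collocation nodes
    Phi = bernoulli_vector(nodes, n_hat)                # Phi[j, k] = B_k(t_j)
    # Nodal integration operator: values -> Bernoulli coefficients -> P -> values
    K = Phi @ integration_matrix(n_hat).T @ np.linalg.inv(Phi)
    f = lambda u: r * u * (1 - u / tau)
    df = lambda u: r * (1 - 2 * u / tau)
    u = np.full(n_hat, U0)
    for _ in range(max_iter):
        F = u - U0 - K @ f(u)                           # residual of the algebraic system
        J = np.eye(n_hat) - K @ np.diag(df(u))          # Jacobian of the residual
        du = np.linalg.solve(J, -F)
        u += du
        if np.max(np.abs(du)) < tol:
            break
    return nodes, u

nodes, u = solve_logistic()
# Closed-form logistic solution with tau = 1, used only to check the sketch
exact = 0.3 * np.exp(0.2 * nodes) / (1 + 0.3 * (np.exp(0.2 * nodes) - 1))
print(np.max(np.abs(u - exact)))
```

With only eight Bernoulli polynomials the nodal error is far below the AEs reported for the stochastic examples, which is expected here because the deterministic logistic solution is very smooth; the stochastic case adds the vofBm term and its own discretization error.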

References

Abbasbandy S, Kazem S, Alhuthali MS, Alsulami HH (2015) Application of the operational matrix of fractional-order Legendre functions for solving the time-fractional convection-diffusion equation. Appl Math Comput 266:31–40

Asgari M, Khodabin M (2017) Computational method based on triangular operational matrices for solving nonlinear stochastic differential equations. Int J Nonlinear Anal Appl 8(2):169–179

Badawi H, Arqub OA, Shawagfeh N (2023) Well-posedness and numerical simulations employing Legendre-shifted spectral approach for Caputo-Fabrizio fractional stochastic integro differential equations. Int J Mod Phys C 34(06):2350070

Badawi H, Arqub OA, Shawagfeh N (2023) Stochastic integrodifferential models of fractional orders and Leffler nonsingular kernels: well-posedness theoretical results and Legendre Gauss spectral collocation approximations. Chaos Soliton Fract X 10:100091

Badawi H, Shawagfeh N, Arqub OA (2022) Fractional conformable stochastic integro differential equations: existence, uniqueness, and numerical simulations utilizing the shifted Legendre spectral collocation algorithm. Math Probl Eng

Balcı MA, Sezer M (2016) Hybrid Euler-Taylor matrix method for solving of generalized linear Fredholm Integro-differential difference equations. Appl Math Comput 273:33–41

Bazm S (2015) Bernoulli polynomials for the numerical solution of some classes of linear and nonlinear integral equations. J Comput Appl Math 275:44–60

Chen Y, Liu L, Li B, Sun Y (2014) Numerical solution for the variable order linear cable equation with Bernstein polynomials. Appl Math Comput 238:329–341

Corlay S, Lebovits J, Véhel JL (2014) Multifractional stochastic volatility models. Math Finance 24(2):364–402

Dareiotis K, Leahy JM (2016) Finite difference schemes for linear stochastic integro-differential equations. Stoch Proc Appl 126(10):3202–3234

Dehghan M, Abbaszadeh M (2018) A Legendre spectral element method (SEM) based on the modified bases for solving neutral delay distributed-order fractional damped diffusion-wave equation. Math Meth Appl Sci 41(9):3476–3494

Echelard A, Véhel JL, Barrière O (2010) Terrain modeling with multifractional Brownian motion and self-regulating processes. In: International conference on computer vision and graphics. Springer, Berlin, Heidelberg

Hashemi B, Khodabin M, Maleknejad K (2017) Numerical solution based on hat functions for solving nonlinear stochastic Itô-Volterra integral equations driven by fractional Brownian motion. Mediterr J Math 14(1):1–15

Heydari MH, Avazzadeh Z (2018) An operational matrix method for solving variable-order fractional biharmonic equation. Comput Appl Math 37(4):4397–4411

Heydari MH, Hooshmandasl MR, Badri Loghmani G, Cattani C (2016) Wavelet Galerkin method for solving stochastic heat equation. Int J Comput Math 93(9):1579–1596

Heydari MH, Atangana A, Avazzadeh Z (2019) Chebyshev polynomials for the numerical solution of fractal-fractional model of nonlinear Ginzburg-Landau equation. Eng Comput (in press)

Heydari MH, Avazzadeh Z, Mahmoudi MR (2019) Chebyshev cardinal wavelets for nonlinear stochastic differential equations driven with variable-order fractional Brownian motion. Chaos Soliton Fract 124:105–124

Kamrani M (2016) Convergence of Galerkin method for the solution of stochastic fractional integro differential equations. Optik 127:10049–10057

Kamrani M, Jamshidi N (2017) Implicit Euler approximation of stochastic evolution equations with fractional Brownian motion. Commun Nonlinear Sci Numer Simulat 44:1–10

Khodabin M, Maleknejad K, Rostami M, Nouri M (2012) Numerical approach for solving stochastic Volterra-Fredholm integral equations by stochastic operational matrix. Comput Math Appl 64(6):1903–1913

Khodabin M, Maleknejad K, Hosseini Shekarabi F (2013) Application of triangular functions to numerical solution of stochastic Volterra integral equations. IAENG Int J Appl Math 43(1):1–9

Kilicman A, Al Zhour ZA (2007) Kronecker operational matrices for fractional calculus and some applications. Appl Math Comput 187(1):250–265

Li C, Zhao Z, Chen Y (2011) Numerical approximation of nonlinear fractional differential equations with subdiffusion and superdiffusion. Comput Math Appl 62(3):855–875

Li M, Gu XM, Huang C, Fei M, Zhang G (2018) A fast linearized conservative finite element method for the strongly coupled nonlinear fractional Schrödinger equations. J Comput Phys 358:256–282

Maayah B, Moussaoui A, Bushnaq S, Arqub OA (2022) The multistep Laplace optimized decomposition method for solving fractional-order coronavirus disease model (COVID-19) via the Caputo fractional approach. Demonstr Math 55(1):963–977

Maleknejad K, Khodabin M, Rostami M (2012) Numerical solution of stochastic Volterra integral equations by a stochastic operational matrix based on block pulse functions. Math Comput Model 55(3):791–800

Maleknejad K, Khodabin M, Rostami M (2012) A numerical method for solving m-dimensional stochastic Itô-Volterra integral equations by stochastic operational matrix. Comput Math Appl 63(1):133–143

Mirzaee F, Samadyar N (2018) Numerical solution of nonlinear stochastic Itô-Volterra integral equations driven by fractional Brownian motion. Math Meth Appl Sci 41(4):1410–1423

Mirzaee F, Samadyar N (2018) Using radial basis functions to solve two dimensional linear stochastic integral equations on non-rectangular domains. Eng Anal Bound Elem 92:180–195

Mirzaee F, Samadyar N (2019) Numerical solution based on two-dimensional orthonormal Bernstein polynomials for solving some classes of two-dimensional nonlinear integral equations of fractional order. Appl Math Comput 344–345:191–203

Mirzaee F, Samadyar N (2019) On the numerical solution of fractional stochastic Integro-differential equations via meshless discrete collocation method based on radial basis functions. Eng Anal Bound Elem 100:246–255

Rahimkhani P, Ordokhani Y, Babolian E (2017) Application of fractional-order Bernoulli functions for solving fractional Riccati differential equation. Int J Nonlinear Anal Appl 8(2):277–292

Rahimkhani P, Ordokhani Y, Lima PM (2019) An improved composite collocation method for distributed-order fractional differential equations based on fractional Chelyshkov wavelets. Appl Numer Math 145:1–27

Roodaki M, Almasieh H (2012) Delta basis functions and their applications to systems of integral equations. Comput Math Appl 63:100–109

Samadyar N, Mirzaee F (2020) Orthonormal Bernoulli polynomials collocation approach for solving stochastic Itô-Volterra integral equations of Abel type. Int J Numer Model 33(1):1–14

Sheng H, Sun H, Chen Y, Qiu T (2011) Synthesis of multifractional Gaussian noises based on variable-order fractional operator. Signal Process 91(7):1645–1650

Sheng H, Chen YQ, Qiu TS (2012) Fractional processes and fractional-order signal processing: techniques and applications. Springer, New York

Singh S, Patel VK, Singh VK, Tohidi E (2018) Application of Bernoulli matrix method for solving two-dimensional hyperbolic telegraph equations with Dirichlet boundary conditions. Comput Math Appl 75(7):2280–2294

Tohidi E, Bhrawy AH, Erfani K (2013) A collocation method based on Bernoulli operational matrix for numerical solution of generalized pantograph equation. Appl Math Model 37:4283–4294

Tripathi MP, Baranwal VK, Pandey RK, Singh OP (2013) A new numerical algorithm to solve fractional differential equations based on operational matrix of generalized hat functions. Commun Nonlinear Sci Numer Simulat 18(6):1327–1340

ur Rehman M, Khan RA (2011) The Legendre wavelet method for solving fractional differential equations. Commun Nonlinear Sci Numer Simul 16(11):4163–4173

Funding

The authors have not disclosed any funding.


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ordokhani, Y., Samadyar, N. Approximating a New Class of Stochastic Differential Equations via Operational Matrices of Bernoulli Polynomials. Iran J Sci 47, 1633–1647 (2023). https://doi.org/10.1007/s40995-023-01518-3


DOI: https://doi.org/10.1007/s40995-023-01518-3