Abstract

It is well known that the free vibration of a taut string with mass per unit length m(x) and frequency \(\omega\) is governed by the ordinary differential equation \(y^{\prime \prime }+\omega ^2m(x)y=0.\) In this paper, we first discretize the differential equation using Numerov’s method to obtain a matrix eigenvalue problem of the form \(-Au=\Lambda B M u\), where A and B are constant tridiagonal matrices and M is a diagonal matrix determined by the mass function m(x). For the direct problem, given m(x), we approximate the first N eigenvalues of the string equation by applying a new correction to the eigenvalues of the matrix pair \((-A,BM)\), and we derive the error order of the corrected eigenvalues. For the inverse problem, we propose an efficient algorithm that constructs the unknown mass function m(x) from given spectra by solving a nonlinear system. We solve this system using a modified Newton’s method together with a regularization technique, and we prove the convergence of the Newton iteration. Finally, we give numerical examples that illustrate the efficiency of the proposed algorithm.


1 Introduction

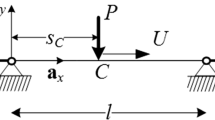

The free vibration of a taut string with mass per unit length m(x) and frequency \(\omega\) is described by an ordinary differential equation of the form

\[ y^{\prime \prime }(x)+\lambda m(x)y(x)=0, \qquad (1) \]

where \(\lambda =\omega ^2\) (Gladwell 2004). Equation (1) is called the string equation and is a special form of the Sturm-Liouville equation. Boundary conditions are usually imposed at the end points. The most important ones are fixed-fixed (\(y(0)=y(1)=0\)), fixed-free (\(y(0)=0, y^\prime (1)=0\)) and free-free (\(y^\prime (0)=0, y^\prime (1)=0\)). It is well known that differential equation (1) with any one of these sets of boundary conditions has an infinite number of eigenvalues \(\{\lambda _n\}_1^\infty\) such that

For more details see (Freiling and Yurko 2001; Gladwell 2004; Kirsch 1996). In this paper, we investigate two types of problems related to differential equation (1). First, we consider the direct problem, i.e. approximating the first N eigenvalues of the string equation with given boundary conditions. Second, we solve the corresponding inverse problem, i.e. we construct the unknown mass function m(x) from spectral data. By solving the inverse problem for a string, we may design a string with prescribed frequencies. It is proved that if m(x) is symmetric with respect to the midpoint \(x=\frac{1}{2}\), i.e. \(m(1-x)=m(x)\), then one spectrum corresponding to the fixed-fixed boundary condition suffices to construct m(x) uniquely. In the non symmetric case, however, two spectra corresponding to two sets of boundary conditions, e.g. fixed-fixed and fixed-free, are required to construct m(x) uniquely (Gladwell 2004; Jiang and Xu 2019). Since m(x) is a positive function, for computational purposes we define \(m(x)=\rho ^2(x)\). If \(\int _0^1\rho (x) dx=1\) then by the change of variables

the string equation (1) can be transformed to the following Sturm-Liouville problem (Gladwell 2004; Jiang and Xu 2019)

where

Direct and inverse Sturm-Liouville problems are well studied in the literature. For more details see (Andrew 2005; Ezhak and Telnova 2020; Freiling and Yurko 2001; Gladwell 2004; Jiang et al. 2021; Kirsch 1996; Mirzaei 2017; Perera and Böckmann 2020, 2019; Mosazadeh and Akbarfam 2020; Neamaty and Akbarpoor 2016). Note that constructing m(x) from q(x) using (3) and (4) requires additional information and conditions on m(x). That is why the direct and inverse problems for the string equation have been studied independently. In (Andrew 2003; Andrew and Paine 1986; Andrew 2000; Gao et al. 2015, 2017, 2018; Paine et al. 1981), the direct and inverse problems of the Sturm-Liouville equation (3) are studied using finite difference, finite element and Numerov’s methods. These works approximate the first N eigenvalues as follows

\[ {\tilde{\Lambda }}_k=\Lambda _k+\epsilon _{r,k}, \qquad (5) \]

where \(\Lambda _k\) is the kth eigenvalue of the matrix obtained by discretization of the Sturm-Liouville equation (3) and \(\epsilon _{r,k}\) is the difference between the kth eigenvalue of (3) and the kth eigenvalue of the matrix form of (3) for \(q(x)=0\). It is proved that

where r is a parameter that depends on the discretization method: \(r=1\) for the finite difference method, \(r=2\) for Numerov’s method and \(r=3\) for the finite element method. In general, the direct and inverse problems of the string equation have been studied less than those of the classical Sturm-Liouville equation. In Jiang and Xu (2019, 2021) the mass function m(x) is constructed using a trace formula. In Rundell and Sacks (1992) the mass function is constructed by an iterative procedure based on a Goursat problem. In general, the eigenvalues and eigenfunctions of the string equation with a non-constant mass function cannot be computed explicitly. In practice, finite dimensional numerical methods are therefore used to estimate the spectral data. With such methods Eq. (1) is transformed to a matrix eigenvalue problem whose eigenvalues approximate the first N eigenvalues of the string equation. The matrix eigenvalues approximate the eigenvalues of lower index well, but for eigenvalues of higher index they generally give poor results. In this paper, we discretize the string equation using Numerov’s method to obtain the corresponding matrix eigenvalue problem. To obtain good approximations for the eigenvalues of the string equation, we add a new correction term to the eigenvalues of the matrix obtained from Numerov’s method. Then we propose an algorithm to solve the direct and inverse problems for the string equation for both symmetric and non symmetric mass functions m(x). Our results show that Numerov’s method together with the correction technique can be applied successfully to both problems. To our knowledge, the correction idea has not previously been applied to direct and inverse problems of the string equation.

The rest of the paper is organized as follows. In Sect. 2, we discretize the string equation to obtain a matrix eigenvalue problem. By applying a new correction to the eigenvalues of the resulting matrix eigenvalue problem, we approximate the first N eigenvalues of the string equation. The error analysis of the corrected eigenvalues and some numerical results are also presented in that section. In Sect. 3, a method based on the correction technique of Sect. 2 is proposed to solve the inverse problem of the string equation in the symmetric and non symmetric cases. Finally, several numerical examples are given in Sect. 4 to show the efficiency of this technique for the inverse problem.

2 Direct Problem

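In this section, we study the direct problem for Eq. (1). First we discretize Eq. (1) using Numerov’s method. To this end, we divide the interval [0, 1] into N subintervals of length \(h=\frac{1}{N}\) and evaluate Eq. (1) at \(x_i=ih\) as follows

\[ y_i^{\prime \prime }+\lambda m_i y_i=0, \qquad (7) \]

where \(m_i=m(x_i)\) and \(y_i=y(x_i)\). Using the central difference formula we have

\[ y_i^{\prime \prime }=\frac{y_{i+1}-2y_i+y_{i-1}}{h^2}-\frac{h^2}{12}y_i^{(4)}+O(h^4). \qquad (8) \]

Using Eq. (1) we have \(y_i^{(4)}=-\lambda (m(x)y)_i^{\prime \prime }\). Approximating the second derivative by the central difference formula gives the following approximation for \(y_i^{(4)}\)

\[ y_i^{(4)}=-\lambda \frac{m_{i+1}y_{i+1}-2m_iy_i+m_{i-1}y_{i-1}}{h^2}+O(h^2). \qquad (9) \]

Substituting (9) in (8) and replacing \(y_i^{\prime \prime }\) by \(-\lambda m_iy_i\) according to (7), we find

\[ -\frac{y_{i+1}-2y_i+y_{i-1}}{h^2}=\frac{\lambda }{12}\left( m_{i+1}y_{i+1}+10m_iy_i+m_{i-1}y_{i-1}\right) +O(h^4). \qquad (10) \]

Neglecting the \(O(h^4)\) term, Equation (10) can be written in the following matrix form

\[ -A{\textbf{u}}=\Lambda BM{\textbf{u}}, \qquad (11) \]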

where \(\Lambda\) and \({\textbf{u}}=[u_1,u_2,\ldots ,u_{N-1}]^T\) are approximations for \(\lambda\) and \({\textbf{y}}=[y_1,y_2,\ldots ,y_{N-1}]^T\), respectively. For fixed-fixed boundary conditions the matrices A and B are as follows:

\[ A=\frac{1}{h^2}\begin{bmatrix} -2 & 1 & & \\ 1 & -2 & \ddots & \\ & \ddots & \ddots & 1 \\ & & 1 & -2 \end{bmatrix}, \qquad B=\frac{1}{12}\begin{bmatrix} 10 & 1 & & \\ 1 & 10 & \ddots & \\ & \ddots & \ddots & 1 \\ & & 1 & 10 \end{bmatrix}, \]

and \(M=diag(m_1,m_2,\cdots ,m_{N-1})\). We have \(B=I+\frac{h^2}{12}A\). For fixed-free boundary conditions the condition \(y^\prime (1)=0\) can be discretized using a first or second order approximation. In this paper, however, we use Remark 2 to transform the non symmetric string equation on [0, 1] to a symmetric string equation on [0, 2] with fixed-fixed boundary conditions. Throughout the paper the eigenvalues of the string equation with fixed-fixed boundary conditions are denoted by \(\{\lambda _i\}\) and those with fixed-free boundary conditions by \(\{\mu _i\}\). Similarly, the eigenvalues of the matrix equation (11) corresponding to fixed-fixed and fixed-free boundary conditions are denoted by \(\{\Lambda _i^1\}\) and \(\{\Lambda _i^2\}\), respectively. Note that \(\Lambda _i^1\) and \(\Lambda _i^2\) are approximations for \(\lambda _i\) and \(\mu _i\), respectively. They are good approximations for the lower eigenvalues but poor for higher indices; see Tables 1 and 2. To improve the results, we extend the correction technique of Paine et al. (1981) to the string equation.
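As a rough illustration of the discretization above, the following Python sketch assembles A, B and M for fixed-fixed ends and computes the eigenvalues of the pair \((-A,BM)\). It assumes NumPy is available and that A is the usual second-difference matrix \(\frac{1}{h^2}\,\mathrm{tridiag}(1,-2,1)\), which is consistent with the stated relation \(B=I+\frac{h^2}{12}A\). The function names `numerov_matrices` and `numerov_eigenvalues` are illustrative and are not taken from the paper.

```python
import numpy as np

def numerov_matrices(N):
    """Return (A, B) for fixed-fixed ends on [0, 1] with N subintervals."""
    h = 1.0 / N
    n = N - 1  # number of interior grid points
    off = np.ones(n - 1)
    # Assumed form of A: (1/h^2) * tridiag(1, -2, 1).
    A = (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / h**2
    # B = I + (h^2/12) A, i.e. (1/12) * tridiag(1, 10, 1).
    B = np.eye(n) + (h**2 / 12.0) * A
    return A, B

def numerov_eigenvalues(mass, N):
    """Eigenvalues Lambda of -A u = Lambda B M u, in ascending order."""
    h = 1.0 / N
    x = h * np.arange(1, N)
    m = mass(x)                      # mass must accept and return an array
    A, B = numerov_matrices(N)
    # K = -B^{-1} A is symmetric because A and B commute, so the problem
    # K u = Lambda M u reduces to the symmetric matrix M^{-1/2} K M^{-1/2}.
    K = np.linalg.solve(B, -A)
    d = 1.0 / np.sqrt(m)
    S = d[:, None] * K * d[None, :]
    S = 0.5 * (S + S.T)              # remove rounding asymmetry
    return np.linalg.eigvalsh(S)

# One of the mass functions used in the paper's tables.
lam = numerov_eigenvalues(lambda x: 1.0 - 0.3 * np.exp(-20.0 * (x - 0.5) ** 2), 40)
```

The symmetric reduction is a design choice of this sketch; a generalized eigenvalue routine applied directly to \((-A,BM)\) should give the same values.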

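If \(\int _0^1 \rho (x)dx=1\), then Eqs. (1) and (3) are isospectral, i.e. they have the same eigenvalues. It is therefore natural to expect that the correction (5) can be used to approximate the eigenvalues of Eq. (1). If \(\int _0^1 \rho (x)dx=c\ne 1\) then we may rewrite Eq. (1) as follows

\[ y^{\prime \prime }+\lambda ^*m^*(x)y=0, \qquad (12) \]

where \(\lambda ^*=c^2\lambda\) and \(m^*=\frac{1}{c^2}m(x)\), so that \(\int _0^1 \sqrt{m^*(x)}dx=1\). The matrix eigenvalues of Eq. (12) are \(c^2\Lambda _k\), so applying the correction (5) to Eq. (12) gives \(c^2{\tilde{\Lambda }}_k=c^2\Lambda _k+\epsilon _{2,k}\), that is

\[ {\tilde{\Lambda }}_k=\Lambda _k+\frac{1}{c^2}\epsilon _{2,k}. \qquad (13) \]

Thus, if \(c\ne 1\), choosing the correction term as \(\epsilon _{2,k}\) does not give a good approximation. In this case we must choose the correction term as \(\frac{1}{c^2}\epsilon _{2,k}\).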
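The effect of the factor \(\frac{1}{c^2}\) can be checked by hand for a constant mass. The sketch below is a minimal example under two assumptions: that \(\epsilon _{2,k}=k^2\pi ^2-\Lambda _k^\circ\), with \(\Lambda _k^\circ\) the closed-form Numerov eigenvalue for \(m\equiv 1\) quoted in Lemma 1, and that c is computed by a composite Simpson rule. For \(m(x)=4\) we have \(\rho =2\), \(c=2\), exact eigenvalues \(k^2\pi ^2/4\) and matrix eigenvalues \(\Lambda _k^\circ /4\). All function names are hypothetical.

```python
import math

def simpson(f, a, b, n=200):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def lambda_circ(k, N):
    """k-th eigenvalue of the pair (-A, B), i.e. Numerov applied to m = 1."""
    h = 1.0 / N
    s2 = math.sin(k * math.pi / (2 * N)) ** 2
    return 12.0 / h**2 * s2 / (3.0 - s2)

def epsilon2(k, N):
    """Assumed correction term: exact minus Numerov eigenvalue for m = 1."""
    return (k * math.pi) ** 2 - lambda_circ(k, N)

def corrected(Lambda_k, k, N, c):
    """Corrected estimate Lambda_k + epsilon_{2,k} / c^2."""
    return Lambda_k + epsilon2(k, N) / c**2

# Constant mass m(x) = 4: rho = 2, so c = 2 and lambda_k = k^2 pi^2 / 4.
N, k = 20, 5
c = simpson(lambda x: math.sqrt(4.0), 0.0, 1.0)
Lambda_k = lambda_circ(k, N) / 4.0           # matrix eigenvalue when M = 4 I
exact = (k * math.pi) ** 2 / 4.0
err_scaled = abs(corrected(Lambda_k, k, N, c) - exact)      # uses eps / c^2
err_unscaled = abs(Lambda_k + epsilon2(k, N) - exact)       # uses eps alone
```

In this special case the scaled correction reproduces the exact eigenvalue up to rounding, while the unscaled term overshoots by \(\frac{3}{4}\epsilon _{2,k}\). For non-constant m(x) the correction is only approximate.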

2.1 Error Analysis of Corrected Eigenvalues

In this part, using some lemmas and theorems, we find the error order of the corrected eigenvalues \({\tilde{\Lambda }}_k\). We prove the results for the case \(c=1\) and the fixed-fixed boundary condition. The results can be extended similarly to the case \(c\ne 1\) and to other boundary conditions. It is obvious that the eigenvalues of problem (1) with \(m(x)=1\) and the fixed-fixed boundary condition are \(\lambda _k^\circ =k^2\pi ^2\) and the corresponding eigenfunctions are \(y_k^\circ (x)=\sin k \pi x\). We have the following lemma for the matrix pair \((-A,B)\).

Lemma 1

(Andrew 2000; Chawla and Katti 1980) For matrix pair \((-A,B)\) we have

(i) \(-A{\textbf{s}}_k=\Lambda _{k}^\circ B{\textbf{s}}_{k},\quad \Lambda _{k}^\circ =\frac{12}{h^2}\frac{\sin ^2(\frac{k \pi }{2N})}{3-\sin ^2(\frac{k\pi }{2N})},\) \({\textbf{s}}_{k}=(\sin k \pi x_{1}, \dots ,\sin k \pi x_{N-1})\),

(ii) \(\Lambda _{k}^\circ =k^2\pi ^2+O(k^6h^4)\).
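Both parts of Lemma 1 can be checked numerically. The sketch below assumes that A and B act on interior grid values as \(\frac{1}{h^2}\,\mathrm{tridiag}(1,-2,1)\) and \(\frac{1}{12}\,\mathrm{tridiag}(1,10,1)\), which matches \(B=I+\frac{h^2}{12}A\). It returns the largest residual of \(-A{\textbf{s}}_k=\Lambda _k^\circ B{\textbf{s}}_k\) and the scaled difference \((\Lambda _k^\circ -k^2\pi ^2)/(k^6h^4)\). A Taylor expansion of the closed form suggests that this ratio tends to \(-\pi ^6/240\) as \(kh\rightarrow 0\). The name `check_lemma1` is hypothetical.

```python
import math

def check_lemma1(k, N):
    """Return (max residual of -A s = Lam B s, scaled error of Lam)."""
    h = 1.0 / N
    s2 = math.sin(k * math.pi / (2 * N)) ** 2
    lam = 12.0 / h**2 * s2 / (3.0 - s2)
    # s[0] and s[N] are the zero boundary values; s[1..N-1] are the
    # entries of the vector s_k.
    s = [math.sin(k * math.pi * j * h) for j in range(N + 1)]
    residual = 0.0
    for j in range(1, N):
        minus_As = -(s[j - 1] - 2.0 * s[j] + s[j + 1]) / h**2
        Bs = (s[j - 1] + 10.0 * s[j] + s[j + 1]) / 12.0
        residual = max(residual, abs(minus_As - lam * Bs))
    scaled = (lam - (k * math.pi) ** 2) / (k**6 * h**4)
    return residual, scaled

residual, scaled = check_lemma1(3, 50)
```

The negative sign of the scaled error indicates that, for \(m\equiv 1\), the Numerov eigenvalues lie below the exact ones.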

Lemma 2

(Andrew 2000; Chawla and Katti 1980) If \(\int _0^1\rho (x) \textrm{d}x=1,\,\) then

(i) \(\lambda _k=k^2\pi ^2+O(\frac{1}{k^2})\),

(ii) \(y_{k}(x)=\sin k\pi x +O(\frac{1}{k})\),

(iii) \(\vert \lambda _k-\Lambda _k\vert =O(k^6h^4)\).

Note that for an arbitrary function f(x), we use the boldface vector \({\textbf{f}}\) for \((f(x_1),f(x_2),\dots ,f(x_{N-1}))\).

Lemma 3

Suppose that \(e(x)=y(x)-\sin k \pi x\), \({\textbf{e}}={\textbf{y}}-{\textbf{s}}\) and \(\mathbf {\epsilon }={\textbf{u}}-{\textbf{s}}\), then we have

Proof

By transposing Eq. (11) then multiplying by \({\textbf{y}}\) we obtain

Writing (7) in matrix form and multiplying by \({\textbf{u}}^T\) we get

Subtracting (16) from (15) we find

substituting \({\textbf{y}}={\textbf{e}}+{\textbf{s}}\), \({\textbf{s}}^{\prime \prime }=-k^2\pi ^2 {\textbf{s}}\), \({\textbf{u}}= \mathbf {\epsilon }+{\textbf{s}}\) in the right hand side of (17) we find

\(\square\)

Remark 1

We can rewrite the string equation as \(y^{\prime \prime }(x)+\lambda y(x)=\lambda (1-m(x))y(x)\). Also, the discrete equation (11) can be written as \(-A {\textbf{u}}+\Lambda B (I-M){\textbf{u}}=\Lambda B{\textbf{u}}\). From Andrew and Paine (1985) by comparing these equations with corresponding equations in canonical Sturm-Liouville problem we conclude that for higher index k, \(\vert {\textbf{y}}_k-{\textbf{s}}_k\vert\) and \(\vert {\textbf{u}}_k-{\textbf{s}}_k\vert\) are of order \(O\left( \frac{1}{k}\right)\).

Lemma 4

For the function e(x) we have

Proof

Differentiating \(e(x)=y(x)-\sin (k \pi x)\) twice we obtain

thus \(e^{\prime \prime }+k^2 \pi ^2 e=[k^2 \pi ^2-\lambda m(x)]y(x)\). This equation with boundary conditions \(e(0)=0\) and \(e(1)=0\) has a solution of the form (18). Using Lemma 2, part (ii) we find \(e(x)=O\left( \frac{1}{k}\right)\). Differentiating (18) implies (19). A simple calculation yields (20). \(\square\)

Theorem 1

Suppose that

then

Proof

We have \({\textbf{e}}^{\prime \prime }+k^2\pi ^2 {\textbf{e}}={\textbf{f}}\), therefore

On the other hand by using (18) and part (i) of Lemma 1, for the jth entry of the vector \(A{\textbf{e}}\) we have

dividing both sides by \(k \pi h^2\) we obtain

Subtracting (24) from (25) we obtain the required result. \(\square\)

Theorem 2

Suppose that \(m(x) \in C^{4}[0,1]\), then there exists a constant \(c_1\) such that

Proof

By definition of \(E_j\) we have

Applying Taylor’s expansion of the function f(x) around \(x_j\), then using integration by parts we find

On the other hand we have

Thus

We have \(\Lambda _{k}^\circ -k^2 \pi ^2=O(k^6 h^4)\), \(1-\frac{h^2 k^2 \pi ^2}{12}=O(1)\), and \(\Vert f^{(j)}\Vert _{\infty }=O(k^{j+2})\). Thus all terms in (26) are of order \(O(k^6 h^6)\). Therefore we find

Using Remark 1 we have \(\Vert \mathbf {\epsilon } \Vert _{\infty }=O(\frac{1}{k})\). Also \(\Vert B^{-1}\Vert =O(1)\) and \(n=O(\frac{1}{h})\). Thus, using (23) and (27) we obtain

\(\square\)

Theorem 3

If \(m(x) \in C^4 [0,1]\) then there exists a constant \(c_2\) such that

Proof

Define \(F(x)=f(x) \sin k \pi x\). The function F(x) has the following properties

Substituting \(x=1\) in (18) we find \(\int _0^1 F(x) \textrm{d}x=0\). Let \(T_h F\) denote the trapezoidal approximation of the integral of F(x) with step size h on [0, 1]. Using the Euler-Maclaurin formula (Davis and Rabinowitz 1975), we have

where \(B_4\) is Bernoulli number and \(\{ p_i\}\) are piecewise polynomials of period one satisfying

Simple calculations show that

Since \(\lambda =O(k^2)\), \(y'=O(k)\), \(m'=O(1)\), we find \(F'''(1)-F'''(0)=O(k^4)\). Substituting this in (30), we get

By simple calculations, we obtain

where \(g(x)= k^2 \pi ^2- \lambda m(x)\). By a procedure similar to the proof of Lemma 6 in Andrew and Paine (1985), we obtain

Combining (31) and (32) we obtain the result (28). \(\square\)

Theorem 4

Suppose that \(m(x) \in C^4[0,1]\), then we have \(\vert {\tilde{\Lambda }}_k-\lambda _k \vert \le c k^6\,h^4\).

Proof

Using part (i) of Lemma 1 then adding and subtracting \(k^2\pi ^2{\textbf{s}}^T{\textbf{e}}\) we obtain

According to the proof of Lemma 4 we have \({\mathbf{e}}^{\prime \prime }+k^2\pi ^2 {\textbf{e}}={\textbf{f}}\), thus we obtain

On the other hand we have \({\mathbf{u}}^T{\mathbf{y}}={\mathbf{u}}^T{\mathbf{s}}+{\mathbf{s}}^T{\mathbf{e}}+\mathbf {\epsilon }^T{\mathbf{e}}\). Using these relations and Theorems 1, 2 and 3 we find

Thus we get

Using Remark 1 we have \(\Vert {\mathbf{y}}-{\mathbf{s}} \Vert _\infty =O(\frac{1}{k}), \Vert {\mathbf{u}}-{\mathbf{s}} \Vert _\infty =O(\frac{1}{k})\). Also we have \(\frac{1}{{\mathbf{s}}^TM{\mathbf{s}}}=O(h)\) (Andrew and Paine 1986, 1985). Thus we obtain \(\frac{1}{{\mathbf{u}}^TM{\mathbf{y}}}=O(h)\). On the other hand we have \(\Lambda _k^\circ -k^2 \pi ^2 =O(k^6h^4)\), thus we obtain \(\vert {\tilde{\Lambda }}-\lambda \vert \le ck^6\,h^4. \square\)

In Tables 1 and 2, the eigenvalues of the string equation corresponding to the mass functions \(m(x)=1-0.3e^{-20(x-0.5)^2}\) and \(m(x)=2+\sin (\pi (x-1)^2)\) are approximated using the new correction term given by (13). The exact eigenvalues are computed with the Matslise package (Ledoux et al. 2005). The results for \(\vert \lambda _k-\Lambda _k\vert\) and \(\vert \lambda _k-{\tilde{\Lambda }}_k\vert\) show the efficiency of the correction term \(\frac{1}{c^2}\epsilon _{2,k}\) in approximating the eigenvalues of the string equation. We state the following remark to obtain the eigenvalues for non symmetric mass functions.

Remark 2

Let \(\{\lambda _i\}_1^n\) and \(\{\mu _i\}_1^{n+1}\) be the eigenvalues of the string equation (1) with fixed-fixed and fixed-free boundary conditions, respectively. If m(x) is extended to interval [0, 2] as a symmetric function, then it is well known that \(\{\lambda _i^*\}_{1}^{2n+1}\) defined by

are the eigenvalues of the string equation on interval [0, 2] with symmetric mass function and fixed-fixed boundary condition (Gladwell 2004). Thus we conclude that the nonsymmetric case on [0, 1] is equivalent to symmetric case on [0, 2]. Note that by change of variable (2) the coefficient c in correction term (13) for nonsymmetric function m(x) is computed as \(c=\frac{1}{2}\int _0^2\rho (x) dx\).
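The bookkeeping in Remark 2 can be sketched as follows. The sketch assumes the usual interlacing rule for the symmetric extension: modes on [0, 2] that are symmetric about \(x=1\) satisfy \(y^\prime (1)=0\) and carry the fixed-free eigenvalues, while antisymmetric modes vanish at \(x=1\) and carry the fixed-fixed eigenvalues, so that \(\lambda ^*_{2i-1}=\mu _i\) and \(\lambda ^*_{2i}=\lambda _i\). This indexing is an assumption of the example, chosen to agree with the ordering \(\mu _i<\lambda _i<\mu _{i+1}\). The function name `merge_spectra` is hypothetical.

```python
import math

def merge_spectra(lam, mu):
    """Interlace fixed-fixed (lam) and fixed-free (mu) eigenvalues on [0, 1]
    into the fixed-fixed spectrum of the symmetric extension on [0, 2]."""
    if len(mu) != len(lam) + 1:
        raise ValueError("expected n fixed-fixed and n + 1 fixed-free eigenvalues")
    merged = []
    for i, mu_i in enumerate(mu):
        merged.append(mu_i)           # odd index: mode symmetric about x = 1
        if i < len(lam):
            merged.append(lam[i])     # even index: mode vanishing at x = 1
    if any(a >= b for a, b in zip(merged, merged[1:])):
        raise ValueError("spectra do not interlace: need mu_i < lam_i < mu_{i+1}")
    return merged

# Check with m = 1, where both spectra are known in closed form.
n = 3
lam = [(i * math.pi) ** 2 for i in range(1, n + 1)]
mu = [((i - 0.5) * math.pi) ** 2 for i in range(1, n + 2)]
merged = merge_spectra(lam, mu)
```

For \(m\equiv 1\) the merged list equals \((j\pi /2)^2\), \(j=1,\ldots ,2n+1\), which are the fixed-fixed eigenvalues of \(y^{\prime \prime }+\lambda y=0\) on [0, 2].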

To confirm the results of Theorem 4, we compute the scaled errors \(\vert \lambda _i -{\tilde{\Lambda }}_i \vert /h^4i^6\) in Tables 3 and 4. As expected, the scaled errors are bounded and much smaller than the corresponding values for \(\Lambda _i\). The results show that for the string equation both the uncorrected and the corrected Numerov’s method are of order \(O(h^4i^6)\), but the correction term significantly reduces the asymptotic error constant.

3 Inverse Problem

In this section, we construct the unknown mass function m(x) in the differential equation (1) from spectral data. If m(x) is symmetric, then one spectrum corresponding to the fixed-fixed boundary condition is sufficient to construct it uniquely. In the non symmetric case we need two spectra for the unique construction of m(x). Here we use the spectra corresponding to fixed-fixed and fixed-free boundary conditions. We state the main inverse problems as follows:

Inverse Problem 1

Let \(n \in {\mathbb {N}}\) and \(\{\lambda _i\}_1^{n+1}\) be a given set of positive and distinct real numbers. Construct a symmetric mass function m(x) such that \(\{\lambda _i\}_1^{n+1}\) are the first \((n+1)\) eigenvalues of the equation (1) with fixed-fixed boundary condition.

Inverse Problem 2

Let \(n \in {\mathbb {N}}\) and \(\{\lambda _i\}_1^{n}\), \(\{\mu _i\}_1^{n+1}\) be two given sets of distinct and positive real numbers such that \(\mu _i<\lambda _i<\mu _{i+1}\). Construct a mass function m(x) such that \(\{\lambda _i\}_1^{n}\) and \(\{\mu _i\}_1^{n+1}\) are the first eigenvalues of the equation (1) with fixed-fixed and fixed-free boundary conditions, respectively.

First we consider the solution of Inverse Problem 1. Let \(N=2n\) (we can also take \(N=2n+1\)). Using Numerov’s method the string equation can be written as the matrix eigenvalue problem \(-A{\mathbf{u}}=\Lambda BM{\mathbf{u}}\). By the symmetry of m(x) we have

\[ m_{N-i}=m_i, \quad i=1,2,\ldots ,n. \]

Therefore Inverse Problem 1 is equivalent to constructing \(\{m_i\}_1^n\) from the prescribed eigenvalues \(\{\lambda _i\}_1^{n+1}\). The construction procedure is as follows. The eigenvalues \(\Lambda ^1_i\) of the matrix pair \((-A,BM)\) are computed by the procedure given in Sect. 2. Using Eq. (13) we may write

\[ \Lambda ^1_i=\lambda _i-\beta \epsilon _{2,i}, \quad i=1,2,\ldots ,n+1, \qquad (34) \]

where \(\beta =\frac{1}{c^2}=\frac{1}{(\int _0^1\rho (x)dx)^2}\). Note that \(\beta\) is an unknown parameter, since \(m(x)=\rho ^2(x)\) is unknown. Thus we have to find \(\beta\) and \(\{m_i\}_1^n\) such that \(\{\Lambda ^1_i\}_1^{n+1}\) defined by (34) are eigenvalues of the matrix pair \((-A,BM)\). Indeed \(\beta\) and \(\{m_i\}_1^n\) must be solutions of the following nonlinear system of algebraic equations

\[ P_i({\mathbf{m}}){:}{=}\Lambda ^1_i({\mathbf{m}})-\lambda _i+\beta \epsilon _{2,i}=0, \quad i=1,2,\ldots ,n+1, \qquad (35) \]

where \({\mathbf{m}}=[m_1,m_2,\cdots ,m_n,\beta ]\). Note that the parameter \(\beta\) can be computed as follows:

See (Chawla and Katti 1980). Here we can approximate \(\beta \simeq \frac{\lambda _n}{n^2 \pi ^2}\). However, computing the parameter \(\beta\) as part of the solution of the nonlinear system (35) leads to efficient results for the inverse problem; see Tables 6, 7, 8, and 9. We can solve the system (35) using the modified Newton’s method (Stoer and Bulirsch 2002). The modified Newton iteration is given by:

where G is the Jacobian matrix of system (35) at the point \({\mathbf{m}}={\mathbf{m}}_0\). To this end, we need the eigenpairs of (11) corresponding to \(m(x)\equiv 1\) and the Jacobian matrix G. The eigenpairs are as follows:

Lemma 5

(Yueh 2005) The orthonormal eigenvector \({\mathbf{y}}_i^\circ\) of the matrix pair \((-A,B)\) is given by

We find the Jacobian matrix in the following lemma.

Lemma 6

Jacobian matrix corresponding to nonlinear system (35) at \({\mathbf{m}}={\mathbf{m}}_0\) is given by:

Proof

Using Eq. (35) we find

Taking the partial derivative of both sides of \(-B^{-1}A{\mathbf{u}}_i=\Lambda ^1_iM{\mathbf{u}}_i\) with respect to \(m_j\) we have

Multiplying both sides of the last equation by \({\mathbf{u}}_i^T\) we obtain

Normalizing the eigenvectors with respect to M gives \({\mathbf{u}}_i^TM{\mathbf{u}}_i=1\). On the other hand \(-B^{-1}A\) is a symmetric matrix (Chawla and Katti 1980). Therefore \(-{\mathbf{u}}_i^TB^{-1}A\frac{\partial {\mathbf{u}}_i}{\partial m_j}=\Lambda ^1_i {\mathbf{u}}_i^T M \frac{\partial {\mathbf{u}}_i}{\partial m_j}.\) Using these properties in (40) we obtain

Computing \(\frac{\partial M}{\partial m_j}\) and substituting \({\mathbf{u}}_i, \Lambda ^1_i\) corresponding to \({\mathbf{m}}={\mathbf{m}}_0\) we find

Combining Eqs. (39) and (41) we find the entries of the required Jacobian matrix. \(\square\)
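The differentiation argument in this proof can be checked numerically. With \({\mathbf{u}}_i^TM{\mathbf{u}}_i=1\) and \(-B^{-1}A\) symmetric, the argument gives \(\frac{\partial \Lambda ^1_i}{\partial m_j}=-\Lambda ^1_i\,{\mathbf{u}}_i^T\frac{\partial M}{\partial m_j}{\mathbf{u}}_i\). The sketch below is a hedged illustration that assumes NumPy and treats every grid value \(m_j\) as an independent unknown, so the derivative reduces to \(-\Lambda ^1_i u_{i,j}^2\). It is not claimed to reproduce the exact normalization of Lemma 6. The names `eigenpairs` and `eigenvalue_gradient` are hypothetical.

```python
import numpy as np

def eigenpairs(m, N):
    """Eigenvalues and M-orthonormal eigenvectors of -B^{-1} A u = Lam M u."""
    h = 1.0 / N
    n = N - 1
    off = np.ones(n - 1)
    # Assumed Numerov matrices: A = (1/h^2) tridiag(1, -2, 1), B = I + (h^2/12) A.
    A = (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / h**2
    B = np.eye(n) + (h**2 / 12.0) * A
    K = np.linalg.solve(B, -A)
    d = 1.0 / np.sqrt(m)
    S = d[:, None] * K * d[None, :]          # M^{-1/2} K M^{-1/2}
    lam, W = np.linalg.eigh(0.5 * (S + S.T))
    U = d[:, None] * W                       # columns satisfy u_i^T M u_i = 1
    return lam, U

def eigenvalue_gradient(m, N, i):
    """d Lam_i / d m_j for all grid values m_j (no symmetry imposed)."""
    lam, U = eigenpairs(m, N)
    return -lam[i] * U[:, i] ** 2

# Compare with a central finite difference for one entry.
N = 10
x = np.arange(1, N) / N
m = 1.0 + 0.2 * np.sin(np.pi * x)
grad = eigenvalue_gradient(m, N, 1)
j, step = 3, 1e-6
m_plus, m_minus = m.copy(), m.copy()
m_plus[j] += step
m_minus[j] -= step
fd = (eigenpairs(m_plus, N)[0][1] - eigenpairs(m_minus, N)[0][1]) / (2 * step)
```

When m(x) is symmetric, each unknown (except a midpoint value) appears at two grid points, so its derivative is the sum of the two corresponding entries of `grad`. The column for \(\beta\) follows from (35) as \(\partial P_i/\partial \beta =\epsilon _{2,i}\).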

Remark 3

The matrix G is a nonsingular constant matrix and is independent of the mass function m(x). The condition number of G is given in Table 5 for different values of n. For some large values of n, we may need to apply a regularization method to solve the nonlinear system (35). Here we apply a quasi-Newton method as follows

where \(\alpha _k\) satisfies the Wolfe conditions (Gilbert 1997) and \(\sigma >0\) is a regularization parameter.

Remark 4

The equation (1) is a special case of the Sturm-Liouville equation \((py^\prime )^\prime +(\lambda w+q)y=0\) thus, the parameter \(\lambda\) is a differentiable function with respect to m(x) (Zhang and Li 2020).

The following theorem shows the convergence of modified Newton’s sequence (37).

Theorem 5

Let \(n \in {\mathbb {N}}\), \(p\ge 1\) and let \(\Vert .\Vert _p\) denote the \(L_p\) norm on [0, 1]. There exists a positive number \(c_p(n)\) such that if \(\Vert m(x)-1\Vert _p<c_p(n)\) and the iterates \({\mathbf{m}}_k\) obtained from (37) are positive, then the sequence (37) with initial value \({\mathbf{m}}_0\) converges to a solution of the system (35).

Proof

Let \(\Vert . \Vert\) denote a norm on \({\mathbb {R}}^{n+1}\). Suppose that \(S_r({\mathbf{m}}_0){:}{=}\{{\mathbf{m}} \vert \Vert {\mathbf{m}}-{\mathbf{m}}_0 \Vert <r\}\). First we prove that \({\mathbf{P}}({\mathbf{m}})\) is an analytic function with respect to \({\mathbf{m}}\) and a differentiable function with respect to m(x). The matrix \(-B^{-1}A\) is positive definite (Chawla and Katti 1980), thus positivity of \({\mathbf{m}}_k\) implies that the eigenvalues \(\Lambda ^1_i({\mathbf{m}})\) of matrix pair \((-B^{-1}A,M)\) are positive and simple (see (Gladwell 2004), chapter 3). Thus \({\mathbf{P}}({\mathbf{m}})\) is an analytic function of \({\mathbf{m}}\) (Sun 1990). Also by Remark 4, the eigenvalues \(\lambda _i(m(x))\) are differentiable function of m(x). Since G is nonsingular and \({\mathbf{P}}\) is analytic with respect to \({\mathbf{m}}\), there exists a constant \(K>0\) such that \(\Vert G^{-1} ({\mathbf{P}}^\prime ({\mathbf{m}})-{\mathbf{P}}^\prime ({\mathbf{m}}_0))\Vert \le K \Vert {\mathbf{m}}-{\mathbf{m}}_0 \Vert\). Suppose that \(\eta =\Vert G^{-1}{\mathbf{P}}({\mathbf{m}}_0)\Vert\), \(\rho =K \eta\) and \(r_-=\frac{1-\sqrt{1-2 \rho }}{K}\). If \(\Vert m(x) -1\Vert _p=0\), then for \(i=1,2,\ldots ,n+1\) we have

Thus for all \(p\ge 1\), there exists \(c_p(n)\), such that for all \(m \in L_p[0,1]\), if \(\Vert m(x)-1 \Vert _p \le c_p(n)\), then \(0<\rho <\frac{1}{2}\) and \(r_-<r\). By theory of modified Newton’s method (Stoer and Bulirsch 2002), we conclude that all \({\mathbf{m}}_k\) lie in \(S_{r_-}({\mathbf{m}}_0)\) and the sequence \(\{ {\mathbf{m}}_k\}\) converges to a solution of \({\mathbf{P}}({\mathbf{m}})=0\). \(\square\)
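The convergence behaviour described in Theorem 5 can be illustrated on a toy problem. The sketch below assumes that iteration (37) has the usual frozen-Jacobian form \({\mathbf{m}}_{k+1}={\mathbf{m}}_k-G^{-1}{\mathbf{P}}({\mathbf{m}}_k)\), with the Jacobian evaluated once at the starting point. The two-equation system `F` is a stand-in chosen only because its root is known; it is not the system (35), and the function name `modified_newton` is hypothetical.

```python
def modified_newton(F, J0_inv, z0, tol=1e-12, max_iter=100):
    """Iterate z_{k+1} = z_k - J0^{-1} F(z_k) with a Jacobian frozen at z0."""
    z = list(z0)
    for it in range(max_iter):
        f = F(z)
        step = [sum(J0_inv[r][c] * f[c] for c in range(len(f)))
                for r in range(len(f))]
        z = [zi - si for zi, si in zip(z, step)]
        if max(abs(s) for s in step) < tol:
            return z, it + 1
    return z, max_iter

def F(z):
    """Toy system with a root at (1, 2)."""
    x, y = z
    return [x * x + y - 3.0, x + y * y - 5.0]

z0 = [1.2, 1.8]
# Jacobian [[2x, 1], [1, 2y]] evaluated once at z0 and inverted by hand.
a, b, c, d = 2 * z0[0], 1.0, 1.0, 2 * z0[1]
det = a * d - b * c
J0_inv = [[d / det, -b / det], [-c / det, a / det]]
root, iterations = modified_newton(F, J0_inv, z0)
```

Because the Jacobian is not updated, the convergence is linear rather than quadratic, and it relies on the starting point being close enough to the solution. This mirrors the smallness condition \(\Vert m(x)-1\Vert _p<c_p(n)\) in the theorem.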

Corollary 1

By Remark 2, we can solve the Inverse problem 2 using the method of Inverse problem 1 on the interval [0, 2].

Using the following algorithm we can solve the inverse problem to construct m(x).

4 Numerical Results

In this section, we present some numerical examples to show the efficiency of the proposed algorithm for constructing the mass function by solving the nonlinear system \(P_i({\mathbf{m}}){:}{=}\Lambda ^1 _i({\mathbf{m}})-\lambda _i(m(x))+\beta \epsilon _{2,i}=0\). The computed solution of the nonlinear system is denoted by \(\tilde{{\mathbf{m}}}\). We denote the \(L_2\) error of the constructed m(x) by \(\Vert \tilde{{\mathbf{m}}}-{\mathbf{m}}\Vert _2\) when the parameter \(\beta\) is obtained by solving the nonlinear system \(P_i({\mathbf{m}})=0\) and by \(\Vert \tilde{{\mathbf{m}}}- {\mathbf{m}}\Vert _{2,n}\) when the parameter \(\beta\) is approximated by (36). Moreover, in the numerical examples we also construct m(x) by solving the uncorrected system \(T_i({\mathbf{m}}){:}{=}\Lambda ^1 _i({\mathbf{m}})-\lambda _i(m(x))=0\) and the system \(H_i({\mathbf{m}}){:}{=}\Lambda ^1 _i({\mathbf{m}})-\lambda _i(m(x))+ \epsilon _{2,i}=0\) with correction term \(\epsilon _{2,i}\). The results of these systems are compared in the numerical examples. All computations were performed with MATLAB R2015a on an Intel(R) Core(TM) i5 desktop computer.

Example 1

We consider the mass functions \(m_1(x)=3+(x-0.5)^2\) and \(m_2(x)=1-0.3e^{-20(x-0.5)^2}\) on the interval [0, 1]. These functions are symmetric with respect to \(x=0.5\), thus we can construct them using one spectrum \(\{\lambda _i\}_{1}^{n+1}\). Tables 6 and 7 show the \(L_2\) errors and the absolute errors at some points. The computed parameter \(\beta\) is also reported in these tables. The results for \(m_2(x)\) with \(n=15\) and \(n=20\) are obtained using the regularized quasi-Newton method (42). Figures 1 and 2 show the numerical results corresponding to the uncorrected scheme (\(T_i({\mathbf{m}})=0\)) and the corrected schemes with correction terms \(\epsilon\) (\(H_i({\mathbf{m}})=0\)) and \(\beta \epsilon\) (\(P_i({\textbf{m}})=0\)). The numerical results show the efficiency of the correction term \(\beta \epsilon _{2,i}\). The mass function \(m_2(x)\) is also constructed in Jiang and Xu (2019). Our results agree with those of Jiang and Xu (2019).

Example 2

We consider the non symmetric mass functions \(m_3(x)=2+\sin (\pi (x-1)^2)\) and

on the interval [0, 1]. We need two spectra \(\{\lambda _i\}_1^n\) and \(\{\mu _i\}_1^{n+1}\) to construct these functions. The \(L_2\) errors and the absolute errors at some selected points are listed in Tables 8 and 9. The numerical results for the uncorrected and corrected schemes are compared in Figs. 3 and 4. The results for \(m_4(x)\) with \(n=15,20\) are obtained using the regularized quasi-Newton method (42). The mass function \(m_4(x)\) is also constructed in Jiang and Xu (2021). Our results agree with those of Jiang and Xu (2021).

5 Conclusion

In this paper, we used Numerov’s method along with a new correction term to approximate the eigenvalues and to construct the mass function of the string equation. It was shown that, unlike the canonical Sturm-Liouville and vibrating rod equations, for the string equation the correction term \(\epsilon _{2,i}\) does not work and must be replaced by \(\beta \epsilon _{2,i}\). Therefore, we introduced the parameter \(\beta\), which plays an important role in approximating the eigenvalues and constructing the mass function m(x). Numerical results show that the correction technique significantly reduces the asymptotic error constant of Numerov’s method. This leads to efficient results for the direct and inverse problems of the string equation.

References

Andrew AL (2000) Asymptotic correction of Numerov’s eigenvalue estimates with natural boundary conditions. J Comput Appl Math 125:359–366

Andrew AL (2003) Asymptotic correction of more Sturm–Liouville eigenvalue estimates. BIT Numer Math 43:485–503

Andrew AL (2005) Numerov’s method for inverse Sturm–Liouville problems. Inverse Probl 21:223–238

Andrew AL, Paine JW (1985) Correction of Numerov’s eigenvalue estimates. Numer Math 47:289–300

Andrew AL, Paine JW (1986) Correction of finite element estimates for Sturm–Liouville eigenvalues. Numer Math 50:205–215

Chawla MM, Katti CP (1980) On Noumerov’s method for computing eigenvalues. BIT Numer Math 20:107–109

Davis PJ, Rabinowitz P (1975) Methods of numerical integration. Academic Press, New York

Ezhak SS, Telnova MY (2020) Estimates for the first eigenvalue of the Sturm–Liouville problem with potentials in weighted spaces. J Math Sci 244:216–234

Freiling G, Yurko VA (2001) Inverse Sturm–Liouville problems and their applications. Nova Science Publications, New York

Gao Q, Huang Z, Cheng X (2015) A finite difference method for an inverse Sturm–Liouville problem in impedance form. Numer Algor 70:669–690

Gao Q, Zhao Q, Zheng X, Ling Y (2017) Convergence of Numerov’s method for inverse Sturm–Liouville problems. Appl Math Comput 293:1–17

Gao Q, Zhao Q, Chen M (2018) On a modified Numerov’s method for inverse Sturm–Liouville problems. Int J Comput Math 95(2):412–426

Gilbert JC (1997) On the realization of the Wolfe conditions in reduced quasi-Newton methods for equality constrained optimization. SIAM J Optim 7(3):780–813

Gladwell GML (2004) Inverse problem in vibration. Kluwer Academic Publishers, New York

Jiang X, Li X, Xu X (2021) Numerical algorithms for inverse Sturm–Liouville problems. Numer Algorithms 89:1287–1309

Jiang X, Xu X (2019) Inversion of trace formulas for Sturm–Liouville operator. arXiv:1906.12108v1

Jiang X, Xu X (2021) An inversion algorithm for recovering a coefficient of Sturm–Liouville operator. http://hdl.handle.net/2433/263961

Kirsch A (1996) An introduction to the mathematical theory of inverse problems. Springer-Verlag, New York

Ledoux V, Daele MV, Berghe GV (2005) Matslise: a Matlab package for the numerical solution of Sturm–Liouville and Schrödinger equations. ACM Trans Math Softw 31:532–554

Mirzaei H (2017) Computing the eigenvalues of fourth order Sturm–Liouville problems with Lie Group method. J Numer Anal Optim 7(1):1–12

Mosazadeh S, Akbarfam AJ (2020) Inverse and expansion problems with boundary conditions rationally dependent on the eigenparameter. Bull Iran Math Soc 46:67–78

Neamaty A, Akbarpoor SH (2016) Numerical solution of inverse nodal problem with an eigenvalue in the boundary condition. Inverse Probl Sci Eng 25(7):978–994

Paine JW, de Hoog FR, Anderssen RS (1981) On the correction of finite difference eigenvalue approximations for Sturm–Liouville problems. Computing 26:123–139

Perera U, Böckmann C (2019) Solutions of direct and inverse even-order Sturm–Liouville problems using Magnus expansion. Mathematics 7:544

Perera U, Böckmann C (2020) Solutions of Sturm–Liouville problems. Mathematics 8:2074

Rundell W, Sacks PE (1992) The reconstruction of Sturm–Liouville operators. Inverse Probl 8:451–482

Stoer J, Bulirsch R (2002) Introduction to numerical analysis, 3rd edn. Springer-Verlag, Wurzburg

Sun JG (1990) Multiple eigenvalue sensitivity analysis. Linear Algebra Appl 137–138:183–211

Yueh WC (2005) Eigenvalues of several tridiagonal matrices. Appl Math E-Notes 5:66–74

Zhang M, Li K (2020) Dependence of eigenvalues of Sturm–Liouville problems with eigenparameter dependent boundary conditions. Appl Math Comput 378:125214

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions which have helped to improve the quality of the article.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The authors have not disclosed any conflict of interest.

About this article

Cite this article

Mirzaei, H., Ghanbari, K. & Emami, M. Direct and Inverse Problems of String Equation by Numerov’s Method. Iran J Sci 47, 871–884 (2023). https://doi.org/10.1007/s40995-023-01463-1

DOI: https://doi.org/10.1007/s40995-023-01463-1