Abstract

We consider the backward problem of reconstructing the initial condition of a nonhomogeneous time-fractional diffusion equation from final measurements. The proposed method relies on the eigenfunction expansion of the forward solution and the Tikhonov regularization to control the instability of the underlying inverse problem. We establish stability results and we provide convergence rates under a priori and a posteriori parameter choice rules. The resulting algorithm is robust and computationally inexpensive. Two examples are included to illustrate the effectiveness and accuracy of the proposed method.


1 Introduction

Over the past decade, time-fractional differential equations have received considerable attention due to their potential applications in modeling physical phenomena that cannot be described by classical diffusion models (Uchaikin 2013). One such phenomenon is anomalous diffusion, also known as subdiffusion (Metzler and Klafter 2000). This type of diffusion has been observed in various transport processes, including those in porous media (Fomin et al. 2011), protein diffusion within cells, movement of material along fractals (Hatano and Hatano 1998), and turbulent fluids and plasmas (Kilbas et al. 2006). For more information, refer to Podlubny (1991) and the references therein.

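Recently, several inverse problems related to fractional diffusion equations have been studied, many of them in the form of backward problems (Abdel Aal et al. 2022; Djennadi et al. 2021a, 2021). In these problems, the goal is to recover the source term or the initial condition that leads to a known solution of the diffusion equation at a fixed time \(T > 0\). In this paper, we study a backward problem associated with the nonhomogeneous time-fractional diffusion problem:

$$\begin{aligned} {\left\{ \begin{array}{ll} \partial ^{\alpha }_{t}u(\textbf{x},t)=\Delta u(\textbf{x},t)+f(\textbf{x},t), &{} \textbf{x}\in \Omega ,\ 0<t<T,\\ u(\textbf{x},t)=0, &{} \textbf{x}\in \partial \Omega ,\ 0<t<T,\\ u(\textbf{x},0)=g(\textbf{x}), &{} \textbf{x}\in \Omega , \end{array}\right. } \end{aligned}$$(1)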

where \(\Omega\) is a bounded domain in \(\mathbb {R}^{d}\) with sufficiently smooth boundary \(\partial \Omega\), \(T>0\) is a given final time, \(\alpha \in (0,1)\), and f is the source term which is assumed to be known exactly. In Eq. (1), \(\Delta u\) denotes the spatial Laplacian of u, and \(\partial ^{\alpha }_{t}u\) stands for the \(\alpha\)th Caputo time-fractional derivative of u.

Finding the density u from a given source term f and initial distribution g is usually termed the forward problem. However, in many practical situations, the initial density of the diffusing substance is unknown, while the density can be measured (observed) at a later time \(T>0\). Therefore, it is desirable to investigate the following backward problem:

Given a noisy measurement \(q^{\delta }(\textbf{x})\) of the final data \(q(\textbf{x})=u(\textbf{x},T)\) satisfying

$$\begin{aligned} \Vert q^\delta -q\Vert \le \delta , \end{aligned}$$(2)

estimate the initial state \(g(\textbf{x})\).

Here \(\delta >0\) represents the noise level measured in the \(L^{2}\)-norm. Such an inverse problem can be used to recover the initial concentration of a contaminant (or the initial temperature profile in the case of a heat conduction problem) in a subdiffusive medium occupying the domain \(\Omega\), which is important, for example, in environmental engineering, hydrology, and physics. In such scenarios, knowledge of the initial distribution of a substance is essential for predicting its spread in porous media such as soil. The problem also arises in other disciplines, such as image deblurring. In image processing and computer vision, the final data \(q^{\delta }\) represents a blurred image, while g represents the original (sharp) image. Therefore, solving the backward diffusion problem can aid in reconstructing the original image from the observed, blurred image.

As previously mentioned, time-fractional differential equations have become increasingly popular due to their promising applications in several fields. As a result, they have been extensively studied, and their analytical aspects and numerical treatments are well-developed. Readers interested in a comprehensive analysis of fractional differential equations can refer to Diethelm (2010), Meerschaert et al. (2009), and Agrawal (2002), while Jiang and Ma (2013) and Alqhtani et al. (2022, 2023) provide recent numerical methods. For a recent account of the applications of fractional differential equations, Al-Jamel et al. (2018), Hengamian et al. (2022), and Srivastava et al. (2022) are recommended.

Recently, inverse problems related to time-fractional differential equations have been considered. Trong and Hai (2021) used the modified quasi-method to construct a stable approximation to the backward problem and gave optimal convergence rates in Hilbert scales. Al-Jamal (2017a) proved uniqueness and stability results concerning the reconstruction of the initial condition from interior measurements. Li and Guo (2013) considered the identification of the diffusion coefficient and the order of the fractional derivative from boundary data. Wang et al. (2013) considered time-fractional diffusion equations with variable coefficients and used Tikhonov regularization to solve the corresponding Fredholm integral equation. Wang and Liu (2013) used total variation regularization to solve the backward problem from given internal measurements. Deng and Yang (2014) used the idea of reproducing kernel approximation to reconstruct the unknown initial heat distribution from scattered measurements. Kokila and Nair (2020) utilized the Fourier truncation method to solve the nonhomogeneous time-fractional backward heat conduction problem. Yang et al. (2019) used the truncation regularization technique to solve the backward problem for the nonhomogeneous time-fractional diffusion-wave equation. Tuan et al. (2017) used the filter regularization method to determine the initial data from the final value with deterministic and random noise. See also Al-Jamal et al. (2017) and Djennadi et al. (2021b) for source identification problems, Al-Jamal (2017b) and Wang and Liu (2012) for backward problems, and Jin and Rundell (2015) and Djennadi et al. (2020) for other inverse problems in fractional differential equations.

Contrary to the forward problem, the backward problem is ill-posed in the sense that small perturbations in the final data result in large errors in the computed initial data. This instability behavior will be demonstrated in the sequel. Therefore, some regularization is required to obtain stable solutions. In this paper, we utilize Tikhonov regularization to tackle such instability of the backward problem. With the aid of the singular value expansion of the forward map, an explicit formula for the regularized solution will be provided. We will prove convergence results and derive convergence rates under both a priori and a posteriori parameter choice rules of the regularization parameter. The suggested method can be easily implemented, particularly for cubical domains where the fast Fourier transform can be used. The results of the numerical experiments are in good agreement with our theoretical analysis.

The current work makes a significant contribution by addressing an important inverse problem that has a wide range of scientific applications. Unlike previous works, the proposed method is not limited to one-dimensional domains and does not require a homogeneous source term. In addition, the method is characterized by its ease of applicability and implementation, as well as its robustness and computational speed.

The organization of this paper is as follows. In the next section, we set up notations and terminologies and lay out the necessary background material. In Sect. 3, we introduce the regularization technique and develop the main results. Section 4 is devoted to the practical implementation and numerical experiments.

2 Preliminaries

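We use the notation \(L^{2}(\Omega )\) to denote the Hilbert space of square integrable functions on \(\Omega\) with inner product and norm given respectively by

$$\begin{aligned} (u,v)=\int _{\Omega }u(\textbf{x})\,v(\textbf{x})\,d\textbf{x},\qquad \Vert u\Vert =\sqrt{(u,u)}. \end{aligned}$$(3)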

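The Caputo time-fractional derivative of order \(\alpha \in (0,1)\) of u is defined by

$$\begin{aligned} \partial ^{\alpha }_{t}u(\textbf{x},t)=\frac{1}{\Gamma (1-\alpha )}\int _{0}^{t}(t-s)^{-\alpha }\,\frac{\partial u}{\partial s}(\textbf{x},s)\,ds, \end{aligned}$$(4)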

where \(\Gamma (\cdot )\) is the gamma function. The books (Kilbas et al. 2006; Podlubny 1991) provide excellent accounts regarding the history, theory, and applications of fractional calculus.

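The Mittag-Leffler function of index \((\alpha ,\beta )\) is defined by

$$\begin{aligned} E_{\alpha ,\beta }(z)=\sum _{k=0}^{\infty }\frac{z^{k}}{\Gamma (\alpha k+\beta )},\quad z\in \mathbb {C}, \end{aligned}$$(5)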

where \(\alpha >0\) and \(\beta >0\). The notation \(E_{\alpha }(z)\) will be used to denote \(E_{\alpha ,1}(z)\). The following relevant results can be found in Podlubny (1991) and Liu and Yamamoto (2010), respectively.

Lemma 2.1

Let \(\lambda >0\). We have

1. \(\frac{d}{dt}\left[ E_{\alpha }(-\lambda t^\alpha )\right] =-\lambda t^{\alpha -1} E_{\alpha ,\alpha }(-\lambda t^\alpha )\), for \(\alpha >0\), \(t>0\).

2. \(0<E_{\alpha }(-\lambda t)\le 1\), for \(0<\alpha <1\), \(t\ge 0\).

3. \(\partial ^{\alpha }_{t}E_{\alpha }(-\lambda t^\alpha )=-\lambda E_{\alpha }(-\lambda t^\alpha )\), for \(0<\alpha <1\), \(t>0\).

4. \(E_{1/2}(z)=e^{z^2}\text {Erfc}(-z)\).
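The closed form for \(\alpha =1/2\) is easy to check numerically. The following is a minimal sketch, not part of the original experiments: it compares a truncated power series for \(E_{1/2}(z)\) with \(e^{z^2}\,\text {erfc}(-z)\), which for \(z=-x\le 0\) reads \(E_{1/2}(-x)=e^{x^2}\,\text {erfc}(x)\). The function names and the truncation length are illustrative assumptions, and the series is only suitable for moderate \(|z|\).

```python
import math

def mittag_leffler_series(alpha, z, terms=120):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1) for E_alpha(z); moderate |z| only."""
    return sum(z**k / math.gamma(alpha * k + 1.0) for k in range(terms))

def ml_half_closed_form(z):
    """Closed form E_{1/2}(z) = exp(z^2) * erfc(-z)."""
    return math.exp(z * z) * math.erfc(-z)

# Compare both evaluations at a few moderate arguments.
for z in (-2.0, -1.0, -0.5, 0.0, 0.5):
    series = mittag_leffler_series(0.5, z)
    closed = ml_half_closed_form(z)
    print(f"z={z:+.1f}  series={series:.12f}  closed={closed:.12f}")
```

For negative arguments the values stay in \((0,1]\), in line with the second part of the lemma.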

Lemma 2.2

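Assume that \(0<\alpha <1\). Then there exist constants \(C_{-},C_{+}>0\) depending only on \(\alpha\) such that

$$\begin{aligned} \frac{C_{-}}{\Gamma (1-\alpha )}\,\frac{1}{1-x}\le E_{\alpha }(x)\le \frac{C_{+}}{\Gamma (1-\alpha )}\,\frac{1}{1-x},\quad \text {for all } x\le 0. \end{aligned}$$(6)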

We shall also need the following lemma.

Lemma 2.3

Let \(\beta >0\). Then

Proof

For \(x>0\) and \(p<2\), the function \(\mu (x)=\beta x^{p}/(x^2+\beta )\) attains its maximum value at \(x_0=\sqrt{\frac{p\beta }{2-p}}\). Thus, for \(p<2\), we have

For \(p\ge 2\), the second part of Lemma 2.1 yields

which concludes the proof. \(\square\)
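As a check on the two cases of the proof, the maximum can be evaluated explicitly. This is a direct computation, assuming \(0<p<2\) so that \(x_0\) is real and positive; the constant obtained need not coincide with the one stated in the lemma:

$$\begin{aligned} x_0^{2}+\beta =\frac{2\beta }{2-p},\qquad \mu (x_0)=\frac{\beta x_0^{p}}{x_0^{2}+\beta }=\frac{2-p}{2}\left( \frac{p\beta }{2-p}\right) ^{p/2}=\frac{1}{2}\,p^{p/2}(2-p)^{1-p/2}\,\beta ^{p/2}. \end{aligned}$$

In particular, \(p=1\) gives \(x/(x^2+\beta )\le 1/(2\sqrt{\beta })\) for all \(x>0\). For \(p\ge 2\) and \(0<x\le 1\), which is the range of \(E_{\alpha }(-\lambda t)\) by the second part of Lemma 2.1, one has

$$\begin{aligned} \frac{\beta x^{p}}{x^{2}+\beta }=x^{p-2}\,\frac{\beta x^{2}}{x^{2}+\beta }\le \beta . \end{aligned}$$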

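Consider the following Sturm–Liouville eigenvalue problem:

$$\begin{aligned} {\left\{ \begin{array}{ll} -\Delta X(\textbf{x})=\lambda X(\textbf{x}), &{} \textbf{x}\in \Omega ,\\ X(\textbf{x})=0, &{} \textbf{x}\in \partial \Omega . \end{array}\right. } \end{aligned}$$(10)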

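From McOwen (1996), the eigenvalues can be enumerated to form a nondecreasing sequence of positive real numbers \(\{\lambda _n\}\) with \(\lambda _n\rightarrow \infty\), and the corresponding eigenfunctions \(\{X_n\}\) form an orthonormal basis for \(L^{2}(\Omega )\). We define the Hilbert space \(\textbf{H}^{p}(\Omega )\) by

$$\begin{aligned} \textbf{H}^{p}(\Omega )=\left\{ v\in L^{2}(\Omega ):\ \Vert v\Vert _{p}<\infty \right\} , \end{aligned}$$(11)

where

$$\begin{aligned} \Vert v\Vert _{p}=\left( \sum _{n=1}^{\infty }\lambda _n^{2p}\left| (v,X_n)\right| ^{2}\right) ^{1/2}. \end{aligned}$$(12)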

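Using the separation of variables method, the formal solution to (1) can be expressed as

$$\begin{aligned} u(\textbf{x},t)=\sum _{n=1}^{\infty }T_n(t)X_n(\textbf{x}), \end{aligned}$$(13)

where \(T_n(t)\) solves the fractional order initial-value problem

$$\begin{aligned} \partial ^{\alpha }_{t}T_n(t)+\lambda _n T_n(t)=f_n(t),\quad t>0,\qquad T_n(0)=g_n, \end{aligned}$$(14)

with \(f_n(t)=(f(\cdot ,t),X_n)\) and \(g_n=(g,X_n)\). From Diethelm (2010), the solution to the above problem is

$$\begin{aligned} T_n(t)=g_n E_{\alpha }(-\lambda _n t^{\alpha })+F_n(t), \end{aligned}$$(15)

with

$$\begin{aligned} F_n(t)=\int _{0}^{t}f_n(s)\,(t-s)^{\alpha -1}E_{\alpha ,\alpha }\left( -\lambda _n (t-s)^{\alpha }\right) ds, \end{aligned}$$(16)

and therefore, the formal solution to (1) is given by

$$\begin{aligned} u(\textbf{x},t)=\sum _{n=1}^{\infty }\left[ g_n E_{\alpha }(-\lambda _n t^{\alpha })+F_n(t)\right] X_n(\textbf{x}). \end{aligned}$$(17)

From the final data \(u(\textbf{x},T)=q(\textbf{x})\), we observe that

$$\begin{aligned} q_n=g_n E_{\alpha }(-\lambda _n T^{\alpha })+F_n(T), \end{aligned}$$(18)

where \(q_n=\left( q,X_n\right)\). Thus, the initial condition can be expressed as

$$\begin{aligned} g(\textbf{x})=\sum _{n=1}^{\infty }\frac{\overline{q}_n}{E_{\alpha }(-\lambda _n T^{\alpha })}X_n(\textbf{x}), \end{aligned}$$(19)

where \(\overline{q}_n=q_n-F_{n}(T)\).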

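Define the linear operator \(K:L^{2}(\Omega )\rightarrow L^{2}(\Omega )\) by

$$\begin{aligned} Kg=\sum _{n=1}^{\infty }E_{\alpha }(-\lambda _n T^{\alpha })\,(g,X_n)\,X_n. \end{aligned}$$(20)

Then, in view of (17), the backward problem can be phrased more concisely as

$$\begin{aligned} Kg=\overline{q},\qquad \overline{q}=\sum _{n=1}^{\infty }\overline{q}_n X_n. \end{aligned}$$(21)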

We demonstrate the instability of the backward problem by the following example.

Example 1

Assume that \(Kg=\overline{q}\), and consider the sequence of observations of \(\overline{q}\) given by

If we set

then \(Kg^n=\overline{q}^{n}\) with

while

as \(n\rightarrow \infty\).
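The mechanism behind this example can be illustrated numerically. The sketch below is not the authors' construction: it assumes the 1-D Dirichlet eigenvalues \(\lambda _n=\pi ^2n^2\) on \((0,1)\), the order \(\alpha =1/2\), a hypothetical final time \(T=0.1\), and a hypothetical data perturbation \(n^{-1/2}X_n\). The helper `ml_half` evaluates \(E_{1/2}(-x)=e^{x^2}\text {erfc}(x)\) and switches to its standard asymptotic expansion for large \(x\) to avoid overflow.

```python
import math

def ml_half(x):
    """E_{1/2}(-x) = exp(x^2) * erfc(x) for x >= 0; asymptotic expansion for large x."""
    if x < 25.0:
        return math.exp(x * x) * math.erfc(x)
    inv = 1.0 / (x * x)
    return (1.0 - 0.5 * inv + 0.75 * inv * inv) / (x * math.sqrt(math.pi))

def amplification(n, T=0.1):
    """Return (data error, initial-state error) for the perturbation n^{-1/2} X_n."""
    lam = (n * math.pi) ** 2               # Dirichlet eigenvalue on (0, 1)
    sigma = ml_half(lam * math.sqrt(T))    # singular value E_{1/2}(-lambda_n T^{1/2})
    data_err = 1.0 / math.sqrt(n)          # norm of the perturbation of the data
    return data_err, data_err / sigma      # naive inversion divides by sigma

for n in (1, 10, 100, 1000):
    d, g = amplification(n)
    print(f"n={n:5d}  data error={d:.3e}  initial-state error={g:.3e}")
```

The data error decays like \(n^{-1/2}\), while the error in the reconstructed initial state grows roughly like \(n^{3/2}\), since the singular values decay like \(1/\lambda _n\).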

The previous example highlights the instability of the backward problem: even small errors in the final data q can lead to large errors in the computed initial condition g. To overcome this instability, we use regularization, which involves solving a sequence of nearby problems that are parameterized by a regularization parameter. One widely used regularization method is Tikhonov regularization, which we discuss in the next section. Specifically, we present the method’s application to our backward problem \(K g = \overline{q}\), as given by equations (20)–(21).

3 Tikhonov Regularization of the Backward Problem

As indicated by Example 1, the backward problem lacks continuous dependence on data \(\overline{q}^{\delta }\), and thus regularization is required. In this paper, we consider Tikhonov regularization (Engl et al. 2000).

Following the Tikhonov regularization method, the regularized solution, denoted by \(g^{\beta ,\delta }\), is defined to be the solution of the optimization problem

$$\begin{aligned} \min _{g\in L^{2}(\Omega )}\ \Vert Kg-\overline{q}^{\delta }\Vert ^{2}+\beta \Vert g\Vert ^{2}, \end{aligned}$$(26)

where \(\beta >0\) is the regularization parameter, and \(\overline{q}^{\delta }=q^{\delta }-\sum _{n=1}^{\infty }F_n(T) X_{n}\). The first term in (26) represents the data fitting term, while the second term represents the regularization term. The role of the parameter \(\beta\) is to control the trade-off between fitting the data and satisfying the regularization constraint (i.e., the smoothness of the solution).

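To obtain an explicit formula for the solution to (26), we observe that the triplet

$$\begin{aligned} \left\{ E_{\alpha }(-\lambda _n T^{\alpha });\ X_n,\ X_n\right\} _{n=1}^{\infty } \end{aligned}$$(27)

forms a singular system for K, and consequently, from Engl et al. (2000), the minimizer is given explicitly by

$$\begin{aligned} g^{\beta ,\delta }=\sum _{n=1}^{\infty }\frac{E_{\alpha }(-\lambda _n T^{\alpha })}{E_{\alpha }(-\lambda _n T^{\alpha })^{2}+\beta }\,\overline{q}^{\delta }_{n}\,X_n, \end{aligned}$$(28)

where \(\overline{q}^{\delta }_{n}=(\overline{q}^\delta ,X_n)\).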
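The explicit minimizer can be checked mode by mode. The following is a short sketch, writing \(\sigma _n=E_{\alpha }(-\lambda _n T^{\alpha })\) (a shorthand introduced here) and assuming the penalty term in the Tikhonov functional is \(\beta \Vert g\Vert ^2\). Since \(\{X_n\}\) is orthonormal and \(KX_n=\sigma _nX_n\), the functional decouples:

$$\begin{aligned} \Vert Kg-\overline{q}^{\delta }\Vert ^{2}+\beta \Vert g\Vert ^{2}=\sum _{n=1}^{\infty }\left[ \left( \sigma _n g_n-\overline{q}^{\delta }_{n}\right) ^{2}+\beta g_n^{2}\right] . \end{aligned}$$

Setting the derivative with respect to each \(g_n\) to zero gives

$$\begin{aligned} 2\sigma _n\left( \sigma _n g_n-\overline{q}^{\delta }_{n}\right) +2\beta g_n=0\quad \Longrightarrow \quad g_n=\frac{\sigma _n}{\sigma _n^{2}+\beta }\,\overline{q}^{\delta }_{n}, \end{aligned}$$

which agrees with the coefficient update M/(M\(^2\)+\(\beta\))(q\(^{\delta }_{n}\)-F\(_{n}\)) used in the algorithm of Sect. 4.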

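Next, we analyze the convergence behavior of the proposed method using both a priori and a posteriori parameter choice rules of the regularization parameter \(\beta\). We shall use the notation \(g^{\beta ,0}\) to denote the solution of (26) corresponding to the noise-free data, that is,

$$\begin{aligned} g^{\beta ,0}=\sum _{n=1}^{\infty }\frac{E_{\alpha }(-\lambda _n T^{\alpha })}{E_{\alpha }(-\lambda _n T^{\alpha })^{2}+\beta }\,\overline{q}_{n}\,X_n, \end{aligned}$$(29)

where \(\overline{q}_{n}=(\overline{q},X_n)\).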

3.1 A Priori Analysis

In this subsection, we investigate the convergence of the regularization method under an a priori choice rule, that is, the regularization parameter \(\beta\) is chosen as a function of the noise level \(\delta\) alone, without reference to the measured data. We begin with the following stability result.

Lemma 3.1

It holds that

Proof

From Lemma 2.3 and its proof, we have

This ends the proof. \(\square\)
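One way to obtain a stability bound of this type is sketched below. It assumes that the regularized solutions have the coefficients \(\sigma _n/(\sigma _n^2+\beta )\) times the data coefficients, with \(\sigma _n=E_{\alpha }(-\lambda _n T^{\alpha })\) as in the algorithm of Sect. 4; the constant obtained here need not be the one in the lemma. Since \(\overline{q}^{\delta }-\overline{q}=q^{\delta }-q\),

$$\begin{aligned} \Vert g^{\beta ,\delta }-g^{\beta ,0}\Vert ^{2}=\sum _{n=1}^{\infty }\left( \frac{\sigma _n}{\sigma _n^{2}+\beta }\right) ^{2}\left( q^{\delta }_{n}-q_{n}\right) ^{2}\le \left( \sup _{x>0}\frac{x}{x^{2}+\beta }\right) ^{2}\Vert q^{\delta }-q\Vert ^{2}\le \frac{\delta ^{2}}{4\beta }, \end{aligned}$$

where the supremum equals \(1/(2\sqrt{\beta })\) and is attained at \(x=\sqrt{\beta }\).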

Next, we consider the consistency result:

Lemma 3.2

Assume that \(g\in \textbf{H}^{p}(\Omega )\) for some \(p>0\). Then, the following bound holds

for some constant \(M_1\) independent of \(\beta\).

Proof

From the expansions (19) and (29) it follows that

Thus, in view of the equation (33) and Lemma 2.3, we have

From Lemma 2.2, we conclude that

where the constant \(C_1\) is given by

The result now follows from the inequalities (34) and (35) with \(M_1=C_1 \Vert g\Vert _p\). \(\square\)

Using the triangle inequality together with the last two lemmas, we conclude the convergence result:

Theorem 1

Assume that \(g\in \textbf{H}^{p}(\Omega )\) for some \(p>0\). Then, the following error bound holds

for some constant \(M_1\) independent of \(\beta\) and \(\delta\).

Regarding the convergence rate under the a priori parameter choice rule, we make the following remark.

Remark 1

Under the hypotheses of Theorem 1, if we choose \(\beta =C_0\delta ^\gamma\) for some \(\gamma \in (0,2)\) and constant \(C_0>0\), then

as \(\delta \rightarrow 0\). For a given value of \(p>0\), the convergence rate is optimal when

in which case we have

Thus, we obtain the fastest convergence when \(p\ge 2\). In this case, we have

provided \(\beta =C_0\delta ^{\frac{2}{3}}\).
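The exponents in this remark can be recovered by a balancing argument. The sketch below assumes that the error bound has the form \(c_1\delta /\sqrt{\beta }+c_2\beta ^{s/2}\) with \(s=\min \{p,2\}\); the constants \(c_1,c_2\) are placeholders and are not taken from the theorem. With \(\beta =C_0\delta ^{\gamma }\),

$$\begin{aligned} \frac{\delta }{\sqrt{\beta }}\sim \delta ^{1-\gamma /2},\qquad \beta ^{s/2}\sim \delta ^{\gamma s/2}, \end{aligned}$$

and the two contributions decay at the same rate when

$$\begin{aligned} 1-\frac{\gamma }{2}=\frac{\gamma s}{2}\quad \Longleftrightarrow \quad \gamma =\frac{2}{s+1},\qquad \text {giving the rate } O\left( \delta ^{\frac{s}{s+1}}\right) . \end{aligned}$$

For \(p\ge 2\) we have \(s=2\), so \(\gamma =2/3\) and the rate is \(O(\delta ^{2/3})\), consistent with the choice \(\beta =C_0\delta ^{2/3}\).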

3.2 Convergence Analysis Under a Posteriori Rules

The optimal rate of convergence mentioned in Remark 1 above depends on knowing the value of p. In practice, however, the exact value of p may not be known. Even when it is known, any positive \(C_0\) gives the optimal asymptotic rate of convergence in (40), yet the choice of \(C_0\) can have a significant impact on the error for a given value of \(\delta >0\). Hence, it is reasonable to take the actual data \(q^{\delta }\) into account when choosing the regularization parameter \(\beta\). A parameter choice method that incorporates both \(\delta\) and \(q^{\delta }\) is known as an a posteriori parameter choice rule. In this subsection, we describe one such rule, namely, Morozov’s Discrepancy Principle (MDP) (Engl et al. 2000).

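According to this principle, the regularization parameter \(\beta\) is to be chosen so that

$$\begin{aligned} \Vert Kg^{\beta ,\delta }-\overline{q}^{\delta }\Vert =\tau \delta , \end{aligned}$$(42)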

for some given constant \(\tau >1\). The main result is stated in the following theorem.
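That such a parameter exists, and can be found by a simple root search, follows from the monotonicity of the discrepancy. A brief sketch, assuming the regularized solution has coefficients \(\sigma _n/(\sigma _n^2+\beta )\,\overline{q}^{\delta }_{n}\) with \(\sigma _n=E_{\alpha }(-\lambda _n T^{\alpha })>0\):

$$\begin{aligned} \Vert Kg^{\beta ,\delta }-\overline{q}^{\delta }\Vert ^{2}=\sum _{n=1}^{\infty }\left( \frac{\beta }{\sigma _n^{2}+\beta }\right) ^{2}\left( \overline{q}^{\delta }_{n}\right) ^{2}. \end{aligned}$$

Each factor \(\beta /(\sigma _n^2+\beta )\) increases from 0 to 1 as \(\beta\) increases from 0 to \(\infty\), so the discrepancy is continuous and increasing in \(\beta\), tends to 0 as \(\beta \rightarrow 0\), and tends to \(\Vert \overline{q}^{\delta }\Vert\) as \(\beta \rightarrow \infty\). Hence the discrepancy takes the value \(\tau \delta\) at exactly one \(\beta\) whenever \(0<\tau \delta <\Vert \overline{q}^{\delta }\Vert\), and bisection (for instance in \(\log \beta\)) locates it.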

Theorem 2

Assume that \(g\in \textbf{H}^{p}(\Omega )\) for some \(p>0\), and that \(\beta\) is chosen according to (42). Then

for some constant \(M_2\) independent of \(\delta\).

Proof

From equation (33) and Hölder’s inequality, we get

By the triangle inequality and equation (42), we get

and by the bound in (35), we have

from which it follows that

In virtue of the definition of K given by equation (20), together with expansion (28), equation (42), and the triangle inequality, we get

Then, the first term on the right-hand side of (48) can be bounded as

and using Lemma 2.3 and inequality (35), the second term on the right-hand side of (48) can be bounded as

where the constant \(C_1\) is given by (36). By combining the inequalities (48), (49), and (50), it follows that

Given the assumption that \(\tau >1\), a straightforward manipulation of inequality (51) yields that

Consequently, by Lemma 3.1, inequality (30), and the bound in (52), we get

Finally, from the bounds (47)-(53) and the triangle inequality, we have

which concludes the proof of the theorem. \(\square\)

We conclude with the following remark which discusses the convergence rate of the proposed method under the a posteriori parameter choice rule given by the MDP.

Remark 2

In view of Theorem 2, we see that under Morozov’s discrepancy principle (42), the proposed method is of order \(O(\delta ^\frac{p}{p+1})\) if \(p<1\), with optimal convergence rate \(O(\delta ^\frac{1}{2})\) when \(p\ge 1\).

4 Numerical Illustrations

Next, we will show how to implement the proposed scheme for a practical problem. We treat the case when the domain \(\Omega\) is a cubical domain in \(\mathbb {R}^{d}\).

Since it is often the case that the final data is just a noisy discrete reading of the exact final data \(q(\textbf{x})=u(\textbf{x},T)\), we will assume that the noisy data \(q^\delta\) is generated using the formula

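where \(\textbf{x}_i\) are regular grid points of \(\Omega\), and \(\eta _i\) are uniform random real numbers in \([-1,1]\). In practical applications, since we typically work with discrete data, it is often preferable to use the root-mean-square (RMS) norm for vectors. The RMS norm of a vector \(\textbf{v}\in \mathbb {R}^m\) is defined as

$$\begin{aligned} \Vert \textbf{v}\Vert _{\text {rms}}=\sqrt{\frac{1}{m}\sum _{i=1}^{m}v_i^{2}}. \end{aligned}$$(56)

In this paper, we use the symbol \(\delta\) to denote the noise level in the data measured in the root-mean-square norm, that is,

$$\begin{aligned} \delta =\Vert q^{\delta }-q\Vert _{\text {rms}}. \end{aligned}$$(57)

Similarly, we assess the quality of the recovered initial condition via the root-mean-square norm of the error,

$$\begin{aligned} \Vert g^{\beta ,\delta }-g\Vert _{\text {rms}}, \end{aligned}$$(58)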

which is the discrete version of the \(L^{2}\)-error.

In the computations below, the sampling mesh size is fixed to \(m=500^d\). We utilize the fast Fourier transform (FFT) for the computations of \(q^{\delta }_{n}\) and \(f_n\), and we use the midpoint quadrature rule to estimate \(F_n(T)\). The regularization parameter \(\beta\) is chosen using the discrepancy principle with \(\tau =1.1\). In the experiments below, we use the built-in Mathematica routines to compute both the Mittag-Leffler function and the FFT. The algorithm of the proposed method proceeds as follows:

- f\(_{{\texttt {n}}}\) = 1/Sqrt[m] FourierDST[f,1];
  (\(*\) the Fourier coefficients \(f_n\) of the vector f \(*\))

- q\(^{\delta }_{{\texttt {n}}}\) = 1/Sqrt[m] FourierDST[q\(^{\delta }\),1];
  (\(*\) the Fourier coefficients of the noisy data vector \(q^{\delta }\) \(*\))

- M = MittagLefflerE[\(\alpha\), -\(\lambda\) T\(^{\alpha }\)];
  (\(*\) the Mittag-Leffler function at the first m eigenvalues \(*\))

- F\(_{{\texttt {n}}}\) = (1/\(\lambda\))(1-M) f\(_{{\texttt {n}}}\);
  (\(*\) estimate all values of \(F_n\) at once \(*\))

- g\(^{\delta }_{{\texttt {n}}}\) = M/(M\(^2\)+\(\beta\))(q\(^{\delta }_{{\texttt {n}}}\)-F\(_{{\texttt {n}}}\));
  (\(*\) the Fourier coefficients \(g^{\delta }_n\) of the approximate solution \(*\))

- g\(^{\beta ,\delta }\) = FourierDST[Sqrt[m] g\(^{\delta }_{{\texttt {n}}}\),1];
  (\(*\) the approximate solution \(*\))
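The steps above can be sketched end to end in a few lines. The following Python sketch is an illustration under stated assumptions, not the authors' Mathematica implementation: it takes \(\Omega =(0,1)\), \(\alpha =1/2\) (so that \(E_{1/2}(-x)=e^{x^2}\text {erfc}(x)\) can be evaluated with the standard library), a hypothetical initial state \(g(x)=x(1-x)\), a hypothetical time-independent source \(f\equiv 1\), a small grid (\(m=63\)), and plain \(O(m^2)\) sine sums in place of the FFT. All function and variable names are illustrative.

```python
import math
import random

def ml_half(x):
    """E_{1/2}(-x) = exp(x^2) * erfc(x) for x >= 0; asymptotic expansion for large x."""
    if x < 25.0:
        return math.exp(x * x) * math.erfc(x)
    inv = 1.0 / (x * x)
    return (1.0 - 0.5 * inv + 0.75 * inv * inv) / (x * math.sqrt(math.pi))

def sine_coeffs(v):
    """Coefficients c_n such that v_i = sum_n c_n sin(n*pi*x_i) on the grid x_i = i/(m+1)."""
    m = len(v)
    return [2.0 / (m + 1) * sum(v[i] * math.sin((n + 1) * math.pi * (i + 1) / (m + 1))
                                for i in range(m)) for n in range(m)]

def sine_synthesis(c):
    """Inverse of sine_coeffs: grid values from sine coefficients."""
    m = len(c)
    return [sum(c[n] * math.sin((n + 1) * math.pi * (i + 1) / (m + 1)) for n in range(m))
            for i in range(m)]

def rms(v):
    """Root-mean-square norm of a vector."""
    return math.sqrt(sum(t * t for t in v) / len(v))

m, T, tau, eps = 63, 0.1, 1.1, 1e-2               # hypothetical parameters
x = [(i + 1) / (m + 1) for i in range(m)]
lam = [((n + 1) * math.pi) ** 2 for n in range(m)]
M = [ml_half(l * math.sqrt(T)) for l in lam]      # E_{1/2}(-lambda_n T^{1/2})

g_exact = [t * (1.0 - t) for t in x]              # hypothetical initial state
f_n = sine_coeffs([1.0] * m)                      # hypothetical source f = 1
F_n = [(1.0 - Mn) / l * fn for Mn, l, fn in zip(M, lam, f_n)]

# Forward map in coefficient space, then noisy grid data.
q = sine_synthesis([Mn * gn + Fn for Mn, gn, Fn in zip(M, sine_coeffs(g_exact), F_n)])
rng = random.Random(0)
q_delta = [qi + eps * rng.uniform(-1.0, 1.0) for qi in q]
delta = rms([a - b for a, b in zip(q_delta, q)])

d_n = [c - Fn for c, Fn in zip(sine_coeffs(q_delta), F_n)]  # coefficients of q-bar^delta

def residual(beta):
    """RMS discrepancy ||K g_beta - q-bar^delta|| for the Tikhonov solution."""
    return rms(sine_synthesis([beta / (Mn * Mn + beta) * dn for Mn, dn in zip(M, d_n)]))

# Discrepancy principle: bisection in log(beta) for residual(beta) = tau * delta.
lo, hi = 1e-16, 1e4
for _ in range(100):
    mid = math.sqrt(lo * hi)
    lo, hi = (mid, hi) if residual(mid) < tau * delta else (lo, mid)
beta = math.sqrt(lo * hi)

g_reg = sine_synthesis([Mn / (Mn * Mn + beta) * dn for Mn, dn in zip(M, d_n)])
g_naive = sine_synthesis([dn / Mn for Mn, dn in zip(M, d_n)])   # no regularization
err_reg = rms([a - b for a, b in zip(g_reg, g_exact)])
err_naive = rms([a - b for a, b in zip(g_naive, g_exact)])
print(f"delta={delta:.3e}  beta={beta:.3e}  err_reg={err_reg:.3e}  err_naive={err_naive:.3e}")
```

The closed form \(F_n=(1-M)f_n/\lambda _n\) used in the fourth step is exact only when the source does not depend on t, since \(\int _0^T s^{\alpha -1}E_{\alpha ,\alpha }(-\lambda _n s^{\alpha })\,ds=(1-E_{\alpha }(-\lambda _n T^{\alpha }))/\lambda _n\) by the first part of Lemma 2.1; for a time-dependent source, a quadrature rule for \(F_n(T)\) would be needed instead.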

Finally, we note that the verification of the exact solutions for the presented equations below can be directly carried out by applying Lemma 2.1.

Example 2

We consider the 1-D fractional order diffusion equation

supplied with the initial condition

The source term f is chosen so that the solution is

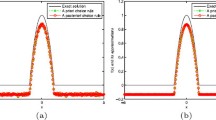

Error results for several noise levels \(\delta\) along with estimated order of convergence are summarized in Table 1. The exact final data q and the noisy final data \(q^{\delta }\) when \(\delta =1\%\) are depicted in Fig. 1, while the corresponding exact and recovered initial conditions are shown in Fig. 2.

Figure 2a shows that the naive reconstruction, without regularization, bears no resemblance to the exact solution. However, as illustrated in Fig. 2b, the regularized solution is remarkably close to the exact initial condition. This highlights the importance of regularization and underscores the efficacy of the proposed approach.

For this particular example, the eigenpairs are given by \(\lambda _n=\pi ^2 n^2\) and \(X_n=\sqrt{2}\sin (n \pi x)\). Thus, we obtain that

This shows that \(g\in \textbf{H}^{p}(\Omega )\) for \(p<3/4\). Consequently, according to Remark 2, the theoretical rate of convergence should be approximately \(O(\delta ^{3/7})\), since \(p/(p+1)\rightarrow 3/7\) as \(p\rightarrow 3/4\). The numerical results reported in Table 1 indicate that the regularized solution converges to the exact solution at a rate very close to the anticipated theoretical order.

Fig. 1 Noisy final data \(q^{\delta }\) for Example 2 corresponding to noise level \(\delta =10^{-2}\)

Fig. 2 Recovered initial condition for Example 2 corresponding to noise level \(\delta =10^{-2}\)

Example 3

In this 2-D example, we consider the time-fractional diffusion problem:

where \(\Omega\) is the unit square \([0,1]^2\), and \(0<t<0.1\). The forward solution is

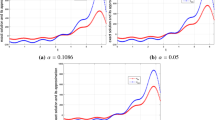

where \(\text {Erfc}(\cdot )\) is the complementary error function. Error results for several noise levels \(\delta\) along with estimated order of convergence are summarized in Table 2. The noisy final data when \(\delta =1\%\) along with the corresponding exact and recovered initial conditions are shown in Fig. 3.

The ill-posedness of the backward problem is starkly manifested in Fig. 3, where the naive reconstruction (i.e., without regularization) bears no resemblance to the exact solution. But through the use of regularization, the regularized solution achieves remarkable fidelity with the exact initial condition, underscoring the efficacy and power of our approach.

The eigenvalues and eigenfunctions in this example are given by

Upon carrying out lengthy yet straightforward computations, it can be concluded that

which establishes that \(g\in \textbf{H}^{1}(\Omega )\). Therefore, we can anticipate a theoretical rate of convergence of \(O(\delta ^{1/2})\), as suggested by Remark 2. However, the results in Table 2 demonstrate that the regularized solution exhibits a slower rate of convergence than the anticipated theoretical order. We attribute this to the effects of numerical discretization. Nevertheless, our approach still yields an accurate reconstruction of the exact solution, highlighting the practical effectiveness of regularization.

Fig. 3 The noisy final data \(q^{\delta }\) with \(\delta =10^{-2}\) and the recovered initial condition without and with regularization for Example 3

The numerical results of the above experiments show that the regularization method is stable and robust with respect to the noise level \(\delta\). Notice that the naive reconstruction (i.e., without regularization) is very poor, which reflects the instability of the backward problem. Moreover, the observed convergence rate in Example 2 is very close to the rate predicted by our theoretical analysis, while the rate observed in Example 3 is somewhat slower than predicted. The algorithm has a reasonably fast running time on a personal computer. Overall, the proposed method offers several advantages over existing techniques. It is not restricted to one-dimensional domains and can handle equations with nonhomogeneous source terms. Furthermore, it is capable of handling initial data that may not be smooth, which is a desirable feature for practical applications. Additionally, the method is easy to implement and apply, and it is characterized by its robustness and computational efficiency.

5 Conclusion

This paper presents a rigorous investigation of a Tikhonov regularization-based method for reconstructing unknown initial conditions in nonhomogeneous time-fractional diffusion equations from noisy measurements of final data. The proposed method is applicable to higher dimensional domains and is not restricted to homogeneous problems, making it a valuable tool in diverse scientific fields. The method is supported by theoretical analyses, which establish its consistency, stability, and convergence rates for both a priori and a posteriori parameter choice rules. The numerical experiments indicate that the method is robust with respect to the noise level, and the observed convergence rates are in good agreement with the theoretical results, with the two-dimensional example converging somewhat more slowly than predicted. Overall, this study contributes to the field of inverse problems, with potential applications in a wide range of scientific disciplines.

References

Abdel Aal M, Djennadi S, Abu Arqub O et al (2022) On the recovery of a conformable time-dependent inverse coefficient problem for diffusion equation of periodic constraints type and integral over-posed data. Math Probl Eng. https://doi.org/10.1155/2022/5104725

Agrawal OP (2002) Solution for a fractional diffusion-wave equation defined in a bounded domain. Nonlinear Dyn 29(1):145–155

Al-Jamal MF (2017) A backward problem for the time-fractional diffusion equation. Math Methods Appl Sci 40(7):2466–2474. https://doi.org/10.1002/mma.4151

Al-Jamal MF (2017) Recovering the initial distribution for a time-fractional diffusion equation. Acta Appl Math 149(1):87–99. https://doi.org/10.1007/s10440-016-0088-8

Al-Jamal MF, Baniabedalruhman A, Alomari AK (2017) Identification of source term in time-fractional diffusion equations. J Adv Math Stud 10(2):280–287

Al-Jamel A, Al-Jamal MF, El-Karamany A (2018) A memory-dependent derivative model for damping in oscillatory systems. J Vib Control 24(11):2221–2229. https://doi.org/10.1177/1077546316681907

Alqhtani M, Owolabi KM, Saad KM et al (2022) Efficient numerical techniques for computing the Riesz fractional-order reaction-diffusion models arising in biology. Chaos, Solitons Fractals 161:112394. https://doi.org/10.1016/j.chaos.2022.112394

Alqhtani M, Khader MM, Saad KM (2023) Numerical simulation for a high-dimensional chaotic Lorenz system based on Gegenbauer wavelet polynomials. Mathematics. https://doi.org/10.3390/math11020472

Deng ZL, Yang XM (2014) A discretized Tikhonov regularization method for a fractional backward heat conduction problem. Abstr Appl Anal 2014(SI64):1–12. https://doi.org/10.1155/2014/964373

Diethelm K (2010) The Analysis of Fractional Differential Equations. Springer, Berlin

Djennadi S, Shawagfeh N, Arqub OA (2020) Well-posedness of the inverse problem of time fractional heat equation in the sense of the Atangana-Baleanu fractional approach. Alex Eng J 59(4):2261–2268. https://doi.org/10.1016/j.aej.2020.02.010

Djennadi S, Shawagfeh N, Abu Arqub O (2021) A fractional Tikhonov regularization method for an inverse backward and source problems in the time-space fractional diffusion equations. Chaos, Solitons Fractals 150:111127. https://doi.org/10.1016/j.chaos.2021.111127

Djennadi S, Shawagfeh N, Abu Arqub O (2021) A numerical algorithm in reproducing kernel-based approach for solving the inverse source problem of the time-space fractional diffusion equation. Partial Diff Equ Appl Math 4:100164. https://doi.org/10.1016/j.padiff.2021.100164

Djennadi S, Shawagfeh N, Inc M et al (2021) The Tikhonov regularization method for the inverse source problem of time fractional heat equation in the view of ABC-fractional technique. Phys Scr 96(9):094006. https://doi.org/10.1088/1402-4896/ac0867

Engl H, Hanke M, Neubauer A (2000) Regularization of inverse problems. Mathematics and its applications. Kluwer Academic, Netherlands

Fomin E, Chugunov V, Hashida T (2011) Mathematical modeling of anomalous diffusion in porous media. Fract Differ Calc 1(1):1–28. https://doi.org/10.7153/fdc-01-01

Hatano Y, Hatano N (1998) Dispersive transport of ions in column experiments: an explanation of long-tailed profiles. Water Resour Res 34(5):1027–1033. https://doi.org/10.1029/98WR00214

Hengamian E, Saberi-Nadjafi J, Gachpazan M (2022) Numerical solution of fractional-order population growth model using fractional-order Muntz-Legendre collocation method and pade-approximants. Jordan J Math Stat 14(1):157–175

Jiang Y, Ma J (2013) Moving finite element methods for time fractional partial differential equations. Sci China Math 56(6):1287–1300. https://doi.org/10.1007/s11425-013-4584-2

Jin B, Rundell W (2015) A tutorial on inverse problems for anomalous diffusion processes. Inverse Probl 31(3):035003. https://doi.org/10.1088/0266-5611/31/3/035003

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier Science Inc, New York

Kokila J, Nair MT (2020) Fourier truncation method for the non-homogeneous time fractional backward heat conduction problem. Inverse Probl Sci Eng 28(3):402–426. https://doi.org/10.1080/17415977.2019.1580707

Li J, Guo B (2013) Parameter identification in fractional differential equations. Acta Math Sci 33(3):855–864. https://doi.org/10.1016/S0252-9602(13)60045-4

Liu J, Yamamoto M (2010) A backward problem for the time-fractional diffusion equation. Appl Anal 89(11):1769–1788. https://doi.org/10.1080/00036810903479731

McOwen R (1996) Partial differential equations: methods and applications. Prentice Hall, Upper Saddle River, NJ

Meerschaert MM, Nane E, Vellaisamy P (2009) Fractional Cauchy problems on bounded domains. Ann Probab 37(3):979–1007. https://doi.org/10.1214/08-AOP426

Metzler R, Klafter J (2000) The random walks guide to anomalous diffusion: a fractional dynamics approach. Phys Rep 339(1):1–77. https://doi.org/10.1016/S0370-1573(00)00070-3

Podlubny I (1991) Fractional differential equations. Academic Press, San Diego, CA

Srivastava HM, Saad KM, Hamanah WM (2022) Certain new models of the multi-space fractal-fractional Kuramoto-Sivashinsky and Korteweg-de Vries equations. Mathematics. https://doi.org/10.3390/math10071089

Trong DD, Hai DND (2021) Backward problem for time-space fractional diffusion equations in Hilbert scales. Comput Math Appl 93(1):253–264. https://doi.org/10.1016/j.camwa.2021.04.018

Tuan NH, Kirane M, Bin-Mohsin B et al (2017) Filter regularization for final value fractional diffusion problem with deterministic and random noise. Comput Math Appl 74(6):1340–1361. https://doi.org/10.1016/j.camwa.2017.06.014

Uchaikin VV (2013) Fractional derivatives for physicists and engineers: background and theory. Springer, Berlin

Wang JG, Wei T, Zhou YB (2013) Tikhonov regularization method for a backward problem for the time-fractional diffusion equation. Appl Math Model 37(18):8518–8532. https://doi.org/10.1016/j.apm.2013.03.071

Wang L, Liu J (2012) Data regularization for a backward time-fractional diffusion problem. Comput Math Appl 64(11):3613–3626. https://doi.org/10.1016/j.camwa.2012.10.001

Wang L, Liu J (2013) Total variation regularization for a backward time-fractional diffusion problem. Inverse Probl 29(11):115013. https://doi.org/10.1088/0266-5611/29/11/115013

Yang F, Pu Q, Li XX et al (2019) The truncation regularization method for identifying the initial value on non-homogeneous time-fractional diffusion-wave equations. Mathematics 7(11):1007. https://doi.org/10.3390/math7111007

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.


Ethics declarations

Conflicts of interest

The authors state no conflict of interest to disclose.


About this article

Cite this article

Al-Jamal, M.F., Barghout, K. & Abu-Libdeh, N. Regularization of the Final Value Problem for the Time-Fractional Diffusion Equation. Iran J Sci 47, 931–941 (2023). https://doi.org/10.1007/s40995-023-01448-0


DOI: https://doi.org/10.1007/s40995-023-01448-0

Keywords

- Inverse problems

- Tikhonov regularization

- Nonhomogeneous

- Fractional diffusion

- Backward problem

- Noisy final data