Abstract

In this paper, a new ant algorithmic approach is presented for solving \(n\)-job, \(m\)-machine permutation flow shop scheduling problem. The main objective is to find a permutation of \(n\) given jobs, i.e., \(\sigma {:}\,\left\{ {1,2, \ldots ,n} \right\} \to \left\{ {1,2, \ldots ,n} \right\}\). This permutation minimizes the maximum completion time of the schedule arising from \(\sigma\). An illustration of using the presented heuristic algorithm for finding a good initial sequence of jobs is given. The proposed method is an ant-based approach to permutation flow shop scheduling problem by the behavior of real ants, but it is different with the pheromone trail concept. The presented model is compared against the one by NEH which has been considered the best constructive algorithm so far. Regarding the quality of results, the superiority of the proposed method over NEH is demonstrated by computational evaluation. The comparison is produced on generated random test problems. This comparison is drawn in domain of feasible instances. It is easy to implement the produced method as a metaheuristic.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A permutation flow shop scheduling problem (PFSP) is known to determine a sequence of \(n\) jobs that are processed on \(m\) independent machines such that the makespan or \(C_{\max }\) is minimized. Makespan is the distance in time that elapses from the start of processing to the end.

Although the result of \(n \times m\) PFSP for \(m = 2\) was obtained in polynomial time, it was proved that problem is NP-complete in the strong sense for \(m \ge 3\) (Garey et al. 1976). Therefore, when \(m\) and \(n\) are increased, the methods giving the optimum solutions are impractical. That is why there is a preference for heuristic algorithm.

Initial sequences of jobs are derived in many heuristic algorithms; thereafter, the heuristic approach consists of its performance evaluation. Framinan et al. (2003) proposed 177 different initial orders in NEH-insertion approach. The execution of Kurz and Askin (2004), Leung et al. (2005), Allahverdi and Al-Anzi (2006), Alaykýran et al. (2007), Kalczynski and Kamburowski (2008), Rad et al. (2009), Ancău (2012), Malik and Dhingra (2013), Xu et al. (2014), Liu et al. (2016), Brum and Ritt (2018), Nurdiansyah et al. (2019) and Sauvey and Sauer (2020) heuristics was proposed after obtaining suitable initial sequences.

A PFSP is supposed in conditions below:

-

1.

All jobs are processed on the same machines.

-

2.

Each job is processed at most once on machines number 1 to \(m\) with the same order.

-

3.

All jobs are independent and ready for processing in zero time.

-

4.

There is one-to-one correspondence between the processing of jobs and machines.

-

5.

Buffering storage between every two machines is infinite.

-

6.

There are not parallel machines.

-

7.

Machines are available continuously.

-

8.

The processing of jobs cannot pass each other.

Since all jobs are processed on the same machines, the number of results for a \(n \times m\) problem is reduced from \(\left( {n!} \right)^{m}\) to \(n!\). Let \(a_{ij}\) be the processing time of \(i\)th job on \(j\)th machine.

Suppose the matrix of processing times is \(M = \left[ {a_{ij} } \right]_{n \times m}\). Consider the following weighted directed graph (Fig. 1).

In a PFSP for minimizing the \(C_{\max }\), a suitable ordering of jobs is searched. According to the mentioned conditions, the columns of matrix \(M\) or the graph cannot be replaced, because columns are corresponding machines. The rows must be permuted such that \(C_{\max }\) becomes minimized. The weight of the vertex situated in \(i\)th row and \(j\)th column is equal to \(a_{ij}\), (\(i = 1,2, \ldots ,n\)) and (\(j = 1,2, \ldots ,m\)). The weight of every path in the graph is the total of weights of its vertices.

For every permutation denoted by \(\pi\), \(\pi \left( j \right)\) is the job in \(j\)th row. Then

\(C_{{{\text{max}}}} = C_{m,\pi \left( n \right)} .\)

In these relations, \(C_{ji}\) is the completion time of \(i\)th job on \(j\)th machine. It can also be written for every \(\pi\) and \(j = 1,2, \ldots ,m\)

2 Bellman, Esogbue, Nabeshima Theorem

In every PFSP with the time matrix \(M = \left[ {a_{ij} } \right]\), makespan is equal to the weight of the heavy path that is traced between \(a_{11}\) and \(a_{nm}\) in corresponding directed graph (Bellman et al. 2014).

According to this theorem, let every vertex \(a_{ij}\) be the food with \(a_{ij}\) weight in the mentioned graph. An ant wants to go from \(a_{11}\) to \(a_{nm}\) after collecting the heaviest foods with knowing all the weight of \(a_{ij}\)s.

The objective is giving an order of rows in \(M\) or graph such that the collected food be minimized.

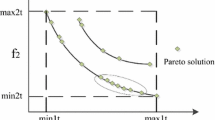

Based on this intuition, the heavy entries must be out of access. Indeed, whenever ant obtains food in a vertex, other heavy foods get out of reach. Hence, if ant reaches \(a_{ij}\), it does not attain the vertex with less column and row numbers. In fact, there will be no loop. Therefore, as the following figure is shown, if the line that connects two vertices has positive slope, ant does not receive both of them (Fig. 2).

With due attention to this idea, the heavy entries are considered and rows are permuted such that the line that connects these vertices has positive slope. This is certainly impossible for all vertices. In this situation, the best permutation of rows is resulted from the amount of makespans. These are the motivation of the presented algorithm.

3 The Proposed Algorithm

All of the prior concepts lead to the following steps. These steps will result in a good initial order of jobs.

-

1.

The greatest entry in \(M\) is chosen, and the corresponding row and column of it are deleted.

-

2.

The above step is repeated on the rest of entries, and the greatest chosen entries in total make a finite sequence of m members.

-

3.

All of \(m\) elected members in the last step are deleted in \(M\).

-

4.

The loop is continued n times.

For each sequence of entries, m corresponding rows are arranged. Suppose the sequence \(a_{{i_{1} j_{1} }} , \ldots ,a_{{i_{m} j_{m} }}\) is the resulted one in the above process. Since \(m \le n\) and \(j_{1} , \ldots ,j_{m}\) are distinct, \(j_{1} ,j_{2} , \ldots ,j_{m}\) is a permutation of \(1,2, \ldots ,m\). The indices \(j_{1} ,j_{2} , \ldots ,j_{m}\) are distinct plus they are not all of the \(1,2, \ldots ,n\).

The rows \(r_{{i_{1} }} , \ldots ,r_{{i_{m} }}\) are arranged such that the slope of lines connecting each elected two entries is positive.

The furthest row is \(r_{{i_{h} }}\) with \(j_{{i_{h} }} = m\). The next row is \(r_{{i_{h - 1} }}\) with \(j_{{i_{h - 1} }} = m - 1\), and it will be repeated in this manner for the next rows until the last one being \(r_{{i_{1} }}\) with \(j_{{i_{1} }} = 1\). Since the column numbers of elected entries in a group are all of the numbers \(1,2, \ldots ,m\), these entries can be numerated in the from \(a_{{i_{1} 1}} , \ldots ,a_{{i_{m} m}}\). Hence, smaller row means the row that is under the greater one.

A distinguished ordering among the rows of elected entries has been constructed. But for every such ordering, a value can be allocated. For determining the value of ordering \(r_{{i_{l} }} < r_{{i_{h} }}\), the profit of this ordering must be checked. Based on Bellman’s theorem (2014), only the bigger one in the \(a_{{i_{l} l}}\), \(a_{{i_{h} h}}\) is chosen. In the inverse ordering, both of entries can be chosen. Thus, the profit of this ordering can be defined \(\min \left\{ {a_{{i_{h} h}} ,a_{{i_{l} l}} } \right\}\). This means that the inverse ordering, i.e., \(r_{{i_{h} }} < r_{{i_{l} }}\) has a disadvantage that is equal to \(\min \left\{ {a_{{i_{l} l}} ,a_{{i_{h} h}} } \right\}\). The negative of this number is proposed as the profit of \(r_{{i_{l} }} < r_{{i_{h} }}\) ordering. Now for each group that has been obtained, there are \(m\) rows with distinguishing ordering. Then, for every two rows in it, the obtained profit is constructed from the order of them. In the inverse ordering of these two rows, the profit becomes negative. Therefore, the square matrix \(P = \left[ {p_{ij} } \right]\) is defined. Every \(p_{ij}\) denotes the sum of \(r_{i} < r_{j}\) ordering profits \(\left( {\hat{P}_{ij} } \right)\) and \(r_{j} < r_{i}\) ordering disadvantages \(\left( {\hat{\hat{P}}_{ij} } \right)\). Indeed, for every two indices i and j, it is possible that \(r_{i}\) and \(r_{j}\) arise in the some of the mentioned corresponding sequences. Clearly \(p_{ij} = - p_{ji}\), \(P_{ij} = \hat{P}_{ij} + \hat{\hat{P}}_{ij}\).

For every arrangement of rows in \(M\) that is obtained from a permutation \(\sigma {:}\,\left\{ {1,2, \ldots ,n} \right\} \to \left\{ {1,2, \ldots ,n} \right\}\), matrix \(P\) denotes the amount of correctness for place and order of each row. For example, suppose there is \(r_{\sigma \left( i \right)}\) of \(M\) in \(i\)th row. Then, the amount of correctness for place of \(r_{\sigma \left( i \right)}\) in \(i\)th row is

Obviously \(p_{ij} = 0\) when \(i = j\).

4 Example for Constructing Matrix P

Let the processing times of four jobs on three machines be as the following:

Consider the lines connecting two entries of (59, 80, 44) and other resulted sequences. The slopes of these must be positive, therefore;

It is possible that \(p_{ij} > 0\) or \(p_{ij} < 0\). The best place for a row is where the corresponding \(p_{ij}\) has the greatest amount, and thus, the row with the least amount of \(p_{ij}\) is in the worst place.

After constructing the matrix \(P = \left[ {p_{ij} } \right]\), the row with the worst place is determined, and the best place for it is obtained with a local search. Then, the penultimate row can be chosen, and the mentioned operations are repeated.

These operations are continued till the last row. At the end, a well ordering is found in which every replacement of its rows does not provide a better solution. A situation improves when a better makespan is found.

A suitable initial sequence for processing \(n\) jobs on \(m\) machines can be obtained from \(P = \left[ {p_{ij} } \right]\). The Bellman et al. theorem (Bellman et al. 2014) is used to determine makespans.

5 Computational Evaluation

The heuristic algorithm was implemented in Visual Basic and carried on a Pentium IV PC/AT computer running at 3.2 GHz with 2 GB RAM memory. The proposed algorithm was tested on 100 generated random problems with the sizes \(n = 10,20,30,40,50,60,70,80,90,100\) and \(m = 10,20,30,40,50\) in the feasible domain. The results of these tests are shown in Table 1 and were compared with the results of NEH [the best heuristic algorithm in classic methods (Nawaz et al. 1983)] on the same random problems. There are two numbers in each table cell. These numbers represent how many times corresponding algorithm has better results than the other.

The main conclusion to be drawn from this table is that in 82% of times, the heuristic algorithm has better results than NEH. Also in large-scale problems, this improvement is increased.

The results are compared in the following figures (Figs. 3, 4).

In Table 2, the running times of the problems are in second.

The approximate relation \(T_{{{\text{Heu}}}} \cong 0/15 \times m\,T_{{{\text{NEH}}}}\) is resulted on the basis of the data in Table 2. Notations \(T_{{{\text{Heu}}}}\) and \(T_{{{\text{NEH}}}}\) are, respectively, referred to the running times of heuristic algorithm and NEH in each allocation of the table.

6 Time Complexity

The complexity of sorting \(mn\) entries of the matrix \(M\) is \(O\left( {m^{2} n^{2} } \right)\). There is less running time for dividing these entries into \(m\) groups and counting the number of operations. The calculation of \(p_{ij}\) s has less computational used time in the construction of matrix \(P = \left[ {p_{ij} } \right]\). Therefore, the complexity of the presented heuristic algorithm is \(O\left( {m^{2} n^{2} } \right)\).

7 Conclusions

In this paper, a reasonably good initial sequence was obtained for solving PFSP with minimizing makespan criterion. After running the heuristic algorithm on 100 random problems, the results in 82% of times were better than NEH. For future researching lines, the presented algorithm with due attention to its mathematical properties could be used as a metaheuristic.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Alaykýran K, Engin O, Döyen A (2007) Using ant colony optimization to solve hybrid flow shop scheduling problems. Int J Adv Manuf Technol 35(5):541–550

Allahverdi A, Al-Anzi FS (2006) A PSO and a Tabu search heuristics for the assembly scheduling problem of the two-stage distributed database application. Comput Oper Res 33(4):1056–1080

Ancău M (2012) On solving flowshop scheduling problems. Proc Roman Acad Ser A 13(1):71–79

Bellman R, Esogbue AO, Nabeshima I (2014) Mathematical aspects of scheduling and applications: modern applied mathematics and computer science, vol 4. Elsevier, Amsterdam

Brum A, Ritt M (2018) Automatic design of heuristics for minimizing the makespan in permutation flow shops. In: 2018 IEEE congress on evolutionary computation (CEC). IEEE, pp 1–8

Framinan JM, Leisten R, Rajendran C (2003) Different initial sequences for the heuristic of Nawaz, Enscore and Ham to minimize makespan, idletime or flowtime in the static permutation flowshop sequencing problem. Int J Prod Res 41(1):121–148

Garey MR, Johnson DS, Sethi R (1976) The complexity of flowshop and jobshop scheduling. Math Oper Res 1(2):117–129

Kalczynski PJ, Kamburowski J (2008) An improved NEH heuristic to minimize makespan in permutation flow shops. Comput Oper Res 35(9):3001–3008

Kurz ME, Askin RG (2004) Scheduling flexible flow lines with sequence-dependent setup times. Eur J Oper Res 159(1):66–82

Leung JYT, Li H, Pinedo M (2005) Order scheduling in an environment with dedicated resources in parallel. J Sched 8(5):355–386

Liu W, Jin Y, Price M (2016) A new Nawaz–Enscore–Ham-based heuristic for permutation flow-shop problems with bicriteria of makespan and machine idle time. Eng Optim 48(10):1808–1822

Malik A, Dhingra AK (2013) Comparative analysis of heuristics for makespan minimising in flow shop scheduling. Int J Innov Eng Technol 2(4):263–269

Nawaz M, Enscore EE Jr, Ham I (1983) A heuristic algorithm for the m-machine, n-job flow-shop sequencing problem. Omega 11(1):91–95

Nurdiansyah R, Rijanto OAW, Santosa B, Wiratno SE (2019) An improved differential evolution algorithm for permutation flow shop scheduling problem. Int J Oper Res 16(2):37–44

Rad SF, Ruiz R, Boroojerdian N (2009) New high performing heuristics for minimizing makespan in permutation flowshops. Omega 37(2):331–345

Sauvey C, Sauer N (2020) Two NEH heuristic improvements for flowshop scheduling problem with makespan criterion. Algorithms 13(5):112

Xu J, Yin Y, Cheng TCE, Wu CC, Gu S (2014) An improved memetic algorithm based on a dynamic neighbourhood for the permutation flowshop scheduling problem. Int J Prod Res 52(4):1188–1199

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Shahriar Farahmand Rad. The first draft of the manuscript was written by Shahriar Farahmand Rad and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Rights and permissions

About this article

Cite this article

Farahmand Rad, S. A New Ant Algorithmic Approach for Solving PFSP. Iran J Sci Technol Trans Sci 46, 181–188 (2022). https://doi.org/10.1007/s40995-021-01202-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40995-021-01202-4