Abstract

Neural correlates of emotions have been widely investigated using noninvasive sensor modalities. These approaches are often characterized by a low level of usability and are not practical for real-life situations. The aim of this study is to show that a single electroencephalography (EEG) electrode placed in the central region of the scalp is able to discriminate emotionally characterized events with respect to a baseline period. Emotional changes were induced using an imagery approach based on the recall of autobiographical events characterized by four basic emotions: “Happiness”, “Fear”, “Anger”, and “Sadness”. Data from 17 normal subjects were recorded at the Cz position according to the International 10–20 system. After preprocessing and artifact detection phases, raw signals were analyzed through a time-variant adaptive autoregressive model to extract EEG characteristic spectral components. Five frequency bands, i.e., the classical EEG rhythms, were considered, namely the delta band (δ) (1–4 Hz), the theta band (θ) (4–6 Hz), the alpha band (α) (6–12 Hz), the beta band (β) (12–30 Hz), and the gamma band (γ) (30–50 Hz). The relative powers of the EEG rhythms were used as features to compare the experimental conditions. Our results show statistically significant differences when comparing the power content in the gamma band of baseline events versus emotionally characterized events. Particularly, a significant increase in gamma band relative power was found in 3 out of 4 emotionally characterized events (“Happiness”, “Sadness”, and “Anger”). In agreement with previous studies, our findings confirm the presence of a possible correlation between broader high-frequency cortical activation and affective processing of the brain. The present study shows that a single EEG electrode could potentially be used for the assessment of the emotional state with a minimally invasive setup.

1 Introduction

There is increasing interest in developing systems that can automatically detect and distinguish emotions in a quantitative way. Emotions play an important role in the human experience, influencing cognition, perception, and everyday tasks such as learning, communication, and decision making [1]. Besides qualitative methods, such as self-reports, startle responses, and behavioral responses, quantitative approaches based on autonomic correlates and/or neurophysiological measurements have been recently proposed. As the brain is the centre of every human action, emotions can be detected through the analysis of physiological signals generated by the central nervous system [2, 3]. Neurophysiological measurements might provide a direct means for emotion recognition [4]. The neural correlates of emotions have been extensively investigated using noninvasive sensor modalities, each one with unique spatial and temporal resolutions and spanning different levels of usability. Functional magnetic resonance imaging (fMRI) has been used to uncover cortical and subcortical nuclei involved in affective responses [5]. Magnetoencephalography (MEG) has been used to explore emotion-related neural signals in specific brain loci [6]. The cost and the laboratory environment of the experimental setup do not allow these modalities to be used for out-of-lab emotion recognition systems [7, 8]. Electroencephalography (EEG) has been widely used to investigate the brain dynamics related to emotions since its high temporal resolution allows the early detection of response to emotionally characterized stimuli.

1.1 EEG Emotion Identification

Emotion identification from EEG has been performed using different features in the time and/or frequency domain. Event-related potential (ERP) components and spectral power at various frequency bands are related to underlying emotional states [4]. In the frequency domain, the spectral power in various frequency bands has been implicated in the emotional state. The alpha band power has been associated with discrete emotions such as happiness, sadness, and fear [9]. Other studies analyzed the connection between the gamma band and emotions. Li and Lu [10] analyzed event-related desynchronization (ERD) in the gamma band during emotional stimuli presentation. Müller et al. [11] and Keil et al. [12] reported the connection between gamma band activity and emotions when differential hemispheric activations were induced. Emotion identification from EEG has also been performed using mathematical transforms [13] or nonlinear quantities [14, 15]. A complete review of recent contributions to the field can be found elsewhere [4].

1.2 Toward the Reduction of EEG Channels Number

The above-mentioned studies employed up to 128 EEG channels. Such a large number makes these approaches impractical in real-life situations, resulting in long experimental setup, significant discomfort for the subject, and a huge computational burden to handle the large amount of data. Therefore, attention has been focused on developing a feasible emotion classification system that uses a reduced number of EEG channels. Min et al. [16] found an emotional response in physiological signals, including EEG signals recorded from two channels (Cz and Fz International 10-20 System positions). Alpha and beta relative powers were used as features to distinguish conditions such as pleasantness, arousal, relaxation, and unpleasantness. Mikhail and El-Ayat [1] performed a classification of four emotions through brain responses elicited by facial expressions using 4 to 25 EEG channels. They showed a decrease in accuracy with a reduction in the number of channels. A trade-off between the complexity of the setup and the accuracy of the classification was reached with 4–6 channels. The aim of the present work is to verify whether a single EEG electrode placed in the central region, i.e., the Cz position, can discriminate emotionally characterized events from a baseline condition. An imagery approach was used as the experimental protocol. Emotional responses were elicited by the recall of autobiographical events of four basic emotions, namely “Happiness”, “Fear”, “Anger”, and “Sadness”.

2 Materials and Methods

2.1 Experimental Protocol

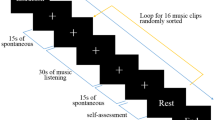

Twenty-one healthy volunteers from the student body of IULM University of Milan took part in the experiments. No participant had a history of neurological or psychiatric problems. The experimental protocol, previously employed in other works [17, 18], uses a memory recall paradigm of emotionally characterized autobiographical episodes to trigger physiological responses. The considered emotions are “Happiness”, “Fear”, “Anger”, and “Sadness”. The experiments took place at the Behavior & Brain Lab at IULM University of Milan and consisted of two phases.

In the first phase, the subject described to a psychologist two recent emotionally characterized autobiographical episodes for each target emotion. During the recall, the psychologist took notes about the episodes. For each target emotion, the psychologist asked the subject to indicate the more intense of the two episodes. These episodes were selected as emotional stimuli for the second phase of the protocol. Subjects unable to recall any episode related to a target emotion were excluded from the protocol.

The second phase took place 1 day after the first. After arriving at the lab, the subject was asked to sit in front of an eye tracker equipped with a grey-screen monitor, at a fixed distance of 70 cm, in a room with constant illumination conditions. First, 3 min of “Baseline”, i.e., a resting period, were recorded. During this phase, the subject was asked to sit still and relax. Subsequently, the psychologist helped the subject to recall the previously selected episode related to the first target emotion. When the subject nodded to indicate that they were experiencing the emotion, their physiological signals were recorded for 3 min. This phase is referred to as “Autobiographical Recall”. A “washout” period of at least 3 min then allowed the subject to relax and clear their mind before the recall of the next emotional episode started. The order of the target emotions was randomly chosen for every subject. Figure 1 shows a graphical representation of the experimental protocol.

Fig. 1 A graphical representation of the protocol. Data were recorded during the periods shown as gray boxes. After recording the “Baseline” event, during “Autobiographical Recall” the subject was asked to recall the most vivid episode of the target emotion that they had reported in the first phase of the study. When the subject nodded to indicate that they were again experiencing the target emotion, physiological data were recorded for 3 min. A “washout” period of at least 3 min was provided before starting the next “Autobiographical Recall”.

2.2 Physiological Signal Recording

To track brain activity, single-channel EEG was recorded. The electrode was placed at the Cz position, according to the 10–20 International System, while the reference electrode was placed on the left earlobe. The electromyography (EMG) signal was acquired on the corrugator supercilii muscle. EEG and EMG were recorded through a Flexcomp Infinity™ encoder (Thought Technology Ltd., Montreal, Canada) at a sampling rate of 2048 Hz. Pupil dilation (PD) signals were recorded using an eye tracker (RED250™ Eye-Tracker, SensoMotoric Instruments, Teltow, Germany). Prior to the start of “Baseline” and each “Autobiographical Recall”, calibration of the eye tracker was performed. Other physiological signals were acquired during the second phase, but their analysis is not included in the present study. Further details on the acquired signals can be found elsewhere [17].

2.3 Signal Preprocessing and Artifact Detection

For computational purposes, the EEG signals were low-pass filtered and resampled at 100 Hz. Before the analysis of the EEG signals, artifact detection was performed. Muscle contraction related to eye movements is the main source of artifacts in EEG signals because it produces a sudden change in the electric field around the eyes, which affects the scalp electric field [19]. In particular, the electric potentials due to eye blinking can be larger than the EEG signal and can propagate across most of the scalp, masking and contaminating brain signals [20]. To detect eye-blinking artifacts, we took advantage of the simultaneously recorded PD signals, which allow eye-blinking occurrences to be detected precisely. EMG signals were used to detect other, undifferentiated muscle activity; they tracked the activity of the corrugator supercilii muscle, which is mostly involved in the frowning response. When the EMG and PD signals were aligned, a close correspondence between eye-blinking events and EMG activity peaks was observed, as shown in Fig. 2. Based on this observation, the eye-blinking detection performed by the eye tracker was used to automatically identify and exclude contaminated EEG segments. A visual check of the signals was performed to correct possible misdetections.
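The preprocessing steps above can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the use of `scipy.signal.resample_poly`, the function names, and the safety margin `margin_s` around each blink are assumptions made for illustration. Only the two sampling rates (2048 Hz and 100 Hz) come from the text.

```python
import numpy as np
from scipy.signal import resample_poly

FS_RAW = 2048  # acquisition rate reported in the text (Hz)
FS_NEW = 100   # target rate reported in the text (Hz)


def downsample_eeg(eeg_raw):
    """Low-pass filter and resample a 1-D signal from 2048 Hz to 100 Hz.

    100 / 2048 reduces to 25 / 512. resample_poly applies an
    anti-aliasing FIR filter as part of the rate change.
    """
    return resample_poly(np.asarray(eeg_raw, dtype=float), up=25, down=512)


def clean_mask(n_samples, blink_intervals, fs=FS_NEW, margin_s=0.2):
    """Return a boolean mask that is False inside padded blink intervals.

    blink_intervals: iterable of (start_s, end_s) pairs in seconds, for
    example as exported by an eye tracker (hypothetical input format).
    margin_s: hypothetical safety margin added on both sides of a blink.
    """
    mask = np.ones(n_samples, dtype=bool)
    for start_s, end_s in blink_intervals:
        lo = max(0, int(np.floor((start_s - margin_s) * fs)))
        hi = min(n_samples, int(np.ceil((end_s + margin_s) * fs)))
        mask[lo:hi] = False
    return mask
```

A typical use would be `eeg = downsample_eeg(raw)` followed by `mask = clean_mask(eeg.size, blinks)`, after which samples where `mask` is False are excluded from later steps.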

2.4 EEG Analysis

EEG power spectral density (PSD) was computed using an adaptive autoregressive (AAR) method. Florian and Pfurtscheller [21] claimed that autoregressive (AR) approaches are more suitable than traditional techniques based on the Fourier transform, as the frequency resolution does not depend on the length of the time series. AR models are suited to stationary signals only, an assumption that is in general not valid for EEG signals [21]. To circumvent this limitation, AAR methods have been proposed [22]. At each new available observation, the set of AR parameters is updated to track the statistical changes in the observed data. For each AR update, a window of the signal is used by assuming that the segment within the window is stationary.

2.4.1 AAR Model

The vector representation of a generic linear AR model is:

\[ y(t) = \Phi (t)^{T} a + w(t) \]

where \(w(t)\) is zero-mean Gaussian noise, \(a\) is the vector of the parameters \(a_{1}, a_{2}, \ldots, a_{p}\), \(p\) is the model order, and \(\Phi (t)^{T} = [y(t - 1), y(t - 2), \ldots, y(t - p)]\) is the observation vector. The corresponding predictor is given by:

\[ \hat{y}(t) = \Phi (t)^{T} \hat{a}(t - 1) \]

where \(\hat{y}(t) = \hat{y}(t|t - 1)\) is the estimated value and \(y(t)\) is the discrete time series. The difference between the measured value \(y(t)\) and its prediction \(\hat{y}(t)\) is the prediction error, also called the a priori error, because it is based on the parameter vector defined at the previous estimation step.

The analytical formulation of the prediction error is:

\[ \varepsilon (t) = y(t) - \hat{y}(t) = y(t) - \Phi (t)^{T} \hat{a}(t - 1) \]

Once \(\varepsilon (t)\) has been computed, the model coefficients are updated using recursive least squares (RLS) identification. A forgetting factor \(\lambda \in (0,1)\) is introduced in the RLS formulation. The coefficients \(\hat{a}(t - 1)\) obtained at the previous sample are updated by adding an innovation term that depends on the estimation error \(\varepsilon (t)\), weighted by a gain vector \(\mathbf{K}(t, \lambda )\), i.e., a vector of weights depending on the forgetting factor \(\lambda\) and the inverse of the autocorrelation matrix of the time series [23]. \(\lambda\) defines a decay factor \(T = 1/(1 - \lambda )\) of the weights, which can be interpreted as the memory of the update step: more recent values of the prediction error contribute more than older ones. A compact formulation of the update is given by:

\[ \hat{a}(t) = \hat{a}(t - 1) + \mathbf{K}(t, \lambda )\, \varepsilon (t) \]
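The recursive update described above can be sketched in Python as follows. This is a textbook RLS recursion with exponential forgetting, not the authors' code. The model order, the value of \(\lambda\), and the initial covariance scale `delta` are hypothetical choices, since the text does not report them.

```python
import numpy as np


def aar_rls(y, order=8, lam=0.98, delta=100.0):
    """Track time-varying AR coefficients with forgetting-factor RLS.

    Returns (coeffs, errors): coeffs[t] holds the estimate a_hat(t) and
    errors[t] the a priori prediction error eps(t). The first `order`
    rows are left at zero because no full observation vector exists yet.
    order, lam and delta are illustrative defaults, not source values.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    a = np.zeros(order)          # a_hat(t-1), initialised at zero
    P = delta * np.eye(order)    # inverse autocorrelation matrix estimate
    coeffs = np.zeros((n, order))
    errors = np.zeros(n)
    for t in range(order, n):
        phi = y[t - order:t][::-1]        # [y(t-1), ..., y(t-p)]
        eps = y[t] - phi @ a              # a priori prediction error
        P_phi = P @ phi
        K = P_phi / (lam + phi @ P_phi)   # gain vector K(t, lambda)
        a = a + K * eps                   # innovation update
        P = (P - np.outer(K, P_phi)) / lam
        coeffs[t] = a
        errors[t] = eps
    return coeffs, errors
```

With `lam` close to 1 the memory \(1/(1 - \lambda )\) is long and the estimates are smooth; smaller values track fast changes at the cost of noisier coefficients.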

The updating formulation of the autoregressive model, which tracks the dynamic variations of the signal, is thus obtained. The factor \(\lambda\) is selected as a trade-off between preserving the fast dynamics of the signal and preventing the influence of artifacts on the model. From each \(\hat{a}(t)\), the power spectral density (PSD) and the single spectral components are computed. The general form of the parametric estimation of PSD through an AR model is:

\[ P(f) = \sigma^{2} \left| H(z) \right|^{2} \]

where \(z = \exp (j2\pi f)\), \(\sigma^{2}\) is the variance of the prediction error, and \(H(z)\) is defined as:

\[ H(z) = \frac{1}{1 - \sum\nolimits_{k = 1}^{p} a_{k} z^{-k}} \]
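As a rough sketch, the parametric PSD can be evaluated on a frequency grid directly from one set of AR coefficients. The code assumes the sign convention \(H(z) = 1/(1 - \sum_{k} a_{k} z^{-k})\), which matches a model written as \(y(t) = \Phi (t)^{T} a + w(t)\). The division by the sampling rate `fs`, which expresses the result as a two-sided density per Hz, and the function name `ar_psd` are assumptions of this sketch.

```python
import numpy as np


def ar_psd(a, sigma2, fs=100.0, n_freqs=256):
    """Evaluate the AR spectrum sigma2 * |H(z)|^2 from 0 Hz to fs / 2.

    a: AR coefficients [a_1, ..., a_p] of y(t) = sum_k a_k y(t-k) + w(t).
    sigma2: variance of the prediction error.
    Returns (freqs, psd), with psd as a two-sided density (units^2 / Hz),
    so integrating over 0..fs/2 and doubling gives the signal variance.
    """
    a = np.asarray(a, dtype=float)
    freqs = np.linspace(0.0, fs / 2.0, n_freqs)
    k = np.arange(1, a.size + 1)
    # z^{-k} on the unit circle, with z = exp(j * 2 * pi * f / fs)
    z_neg_k = np.exp(-2j * np.pi * np.outer(freqs / fs, k))
    A = 1.0 - z_neg_k @ a          # denominator of H(z)
    psd = sigma2 / (fs * np.abs(A) ** 2)
    return freqs, psd
```

Applying `ar_psd` to every row of a time-varying coefficient matrix yields a time-frequency map of the kind described in the text.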

The spectral components are computed using the residual method [24, 25]. Each component is attributed to a given frequency band when the central frequency of its peak lies within that band. Five frequency bands, i.e., the classical EEG characteristic rhythms, are considered: the delta band (δ) (1–4 Hz), the theta band (θ) (4–6 Hz), the alpha band (α) (6–12 Hz), the beta band (β) (12–30 Hz), and the gamma band (γ) (30–50 Hz). All the components lying in a given frequency band are summed to compute the total power of the rhythm. AAR identification and the corresponding spectral decomposition were computed for each sample of the entire signal. Figure 3 shows an example of the time–frequency representation of the EEG PSD.

For each rhythm the relative power, i.e., the ratio between the average power in a certain band and the total variance of the signal, was computed. The main steps of the method are summarized in Fig. 4.
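The decomposition and band assignment can be sketched as below for a single set of AR coefficients. This is one common pole-residue formulation and may differ in detail from the residual method of [24, 25]. The function names, the half-open band edges, and the sign convention \(y(t) = \sum_{k} a_{k} y(t - k) + w(t)\) are assumptions. Averaging the band powers over time, as the text describes, is left to the caller.

```python
import numpy as np

# Band limits in Hz as listed in the text; edges treated as [low, high).
BANDS = {"delta": (1, 4), "theta": (4, 6), "alpha": (6, 12),
         "beta": (12, 30), "gamma": (30, 50)}


def spectral_components(a, sigma2, fs=100.0):
    """Return (central frequency in Hz, power) for each pole of H(z).

    The power of a pole z_i is the real part of the residue of
    sigma2 * H(z) * H(1/z) / z at z = z_i. Summed over all poles this
    equals the variance of the modelled signal. Individual components
    can be negative, which is a known property of this decomposition.
    """
    a = np.asarray(a, dtype=float)
    p = a.size
    # Poles are the roots of z^p - a_1 z^(p-1) - ... - a_p
    poles = np.roots(np.concatenate(([1.0], -a)))
    powers = np.empty(p)
    for i, zi in enumerate(poles):
        others = np.delete(poles, i)
        res = sigma2 * zi ** (p - 1) / (
            np.prod(zi - others) * np.prod(1.0 - poles * zi))
        powers[i] = res.real
    freqs = np.abs(np.angle(poles)) * fs / (2.0 * np.pi)
    return freqs, powers


def relative_band_powers(a, sigma2, fs=100.0, bands=BANDS):
    """Sum the component powers per band and divide by the total variance."""
    freqs, powers = spectral_components(a, sigma2, fs)
    total = powers.sum()
    return {name: powers[(freqs >= lo) & (freqs < hi)].sum() / total
            for name, (lo, hi) in bands.items()}
```

Each complex-conjugate pole pair contributes two entries with the same central frequency, so both fall in the same band and their powers add up to the power of one oscillatory component.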

2.5 Statistical Analysis

The relative powers of the EEG rhythms were used to compare the experimental conditions. A Lilliefors test [26] and a Levene test [27] were performed to verify the hypotheses of normality and homogeneity of variance, respectively. As the hypothesis of normality was rejected and the hypothesis of homogeneity of variance was not rejected, a nonparametric test, i.e., the Kruskal–Wallis test, was used for the analysis of variance. For post hoc analysis, the Wilcoxon signed-rank test was performed to test the differences between the “Baseline” and each emotionally characterized event.
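A minimal sketch of this testing sequence with SciPy is given below. The function name, the input layout (one array per condition, with subjects in the same order), and the returned dictionary are hypothetical. The Lilliefors normality test is left out because SciPy does not provide it, so the sketch covers only the Levene, Kruskal–Wallis, and Wilcoxon signed-rank steps.

```python
import numpy as np
from scipy import stats


def compare_conditions(rel_power, baseline="Baseline"):
    """Run the variance, omnibus and post hoc tests on one frequency band.

    rel_power: dict mapping condition name -> 1-D array with one relative
    power value per subject, subjects in the same order for all conditions
    (hypothetical layout). Returns a dict of p-values.
    """
    names = list(rel_power)
    groups = [np.asarray(rel_power[name], dtype=float) for name in names]
    results = {
        "levene_p": stats.levene(*groups).pvalue,    # homogeneity of variance
        "kruskal_p": stats.kruskal(*groups).pvalue,  # nonparametric omnibus test
        "posthoc_p": {},
    }
    base = np.asarray(rel_power[baseline], dtype=float)
    for name, values in zip(names, groups):
        if name == baseline:
            continue
        # Paired comparison of each emotion against the baseline
        results["posthoc_p"][name] = stats.wilcoxon(base, values).pvalue
    return results
```

The paired Wilcoxon test relies on each subject contributing one value to every condition, which is why the subject order must match across arrays.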

3 Results

Seventeen out of 21 subjects were analyzed, as 4 subjects were affected by too many artifacts. Table 1 lists the median values and the first and third quartiles of the relative powers of EEG signals in each frequency band and for each emotion.

There are significant differences in the gamma band between “Baseline” and each of “Happiness”, “Anger”, and “Sadness”. These differences are also shown in Fig. 5. The median gamma relative power during “Happiness” is higher than that in the “Baseline” condition. The same trend is found when “Baseline” is compared with “Anger” and with “Sadness”.

Figure 6 shows the PSD for the subject “sbj57”. Each line represents the mean spectrum computed in each experimental condition. It can be seen that a higher power value is observed in the gamma band during “Happiness”, “Anger”, and “Sadness” compared to that for “Baseline”.

Fig. 6 An example of PSD for subject “sbj57”. Each plot represents a comparison between the PSD during the “Baseline” condition (in black) and one of the emotional conditions. “Happiness”, “Fear”, “Anger”, and “Sadness” are respectively depicted in green at top left, red at top right, blue at bottom left, and purple at bottom right.

Figure 7 shows the inter-subject variations of the relative power of the gamma band during “Baseline” versus the other experimental conditions except “Fear”. In particular, the relative power during “Happiness” is higher than that during “Baseline” for 16 out of 17 subjects. Similarly, the comparison between “Anger” and “Baseline” shows the same trend in 13 out of 17 participants. During “Sadness”, 12 out of 17 subjects experienced an increase in the gamma power compared to that for “Baseline”.

4 Discussion

This work explored the prospect of using single-channel EEG (Cz) to evaluate the effect of emotionally characterized stimuli when compared with a neutral stimulus. Several studies have tried to recognize emotions from EEG signals with a number of electrodes that varies from 3 [28] to 128 [12, 29]. Our results show that it is possible to distinguish the elicitation of basic emotions, i.e., “Happiness”, “Anger”, and “Sadness”, from the “Baseline” condition using single-channel EEG. The differences between the baseline and emotions were located in the gamma band. This result is in agreement with previous studies in which a connection between high-frequency components in EEG signals, i.e., the gamma rhythm (30–50 Hz), and emotions was documented. Müller et al. [11] and Keil et al. [12] suggested that emotions are represented in a wide cortico-limbic network rather than in particular regions of the brain and that, during processing of emotional stimuli, such a network produces widespread rather than focal cortical activity at high frequencies. Li and Lu [10] used EEG signals to classify emotional reactions evoked by pictures of facial expressions representing different emotions, and the gamma band was found to be related to pictures of “Happiness” and “Sadness”. These approaches required a large number of electrodes for emotion detection, 62 in the latter study [10] and 128 in the former [11, 12]. These findings are in agreement with our results, which indicate an increase in the gamma band that is more evident for “Happiness” than for “Sadness” and “Anger”.

Müller et al. [11] and Keil et al. [12] also supported the hypothesis of localized EEG activity due to positive and negative emotions. They observed the involvement of the left hemisphere in positive valence and of the right hemisphere in negative valence in gamma band activity. For this reason, it would be difficult to discriminate among emotions using a single EEG electrode; at least two electrodes, one for each side of the brain, would be necessary. Our findings suggest that the activity collected from an electrode in the central region can distinguish neural activation due to emotional stimuli from neural activation in a neutral condition. A future development of this work will be the use of two electrodes placed on the left and right sides of the central region, respectively. This would further confirm the results of the present study and allow us to investigate whether the results can be improved by taking into account the asynchronous activity of the brain.

5 Conclusion

The results obtained in this study suggest the possibility of using single-channel EEG (Cz) to evaluate the effect of emotionally characterized stimuli when compared with a neutral one. Although further development is required, this work can be a useful guide for the assessment of emotional states with a minimally invasive system, particularly in applications such as human–computer interaction, communication, marketing research, and user experience, which can take advantage of a quantitative representation of emotions obtained with a minimal sensor setup.

References

Mikhail, M., & El-Ayat, K. (2013). Using minimal number of electrodes for emotion detection using brain signals produced from a new elicitation technique. International Journal of Autonomous and Adaptive Communications Systems, 6, 80–97.

Mauss, I. B., & Robinson, M. D. (2009). Measures of emotion: A review. Cognition and Emotion, 23, 209–237.

Panksepp, J. (2007). Neuro-psychoanalysis may enliven the mindbrain sciences. Cortex, 43, 1106–1107.

Kim, M.-K., Kim, M., Oh, E., & Kim, S.-P. (2013). A review on the computational methods for emotional state estimation from the human EEG. Computational and Mathematical Methods in Medicine, 2013, 579–734.

Vytal, K., & Hamann, S. (2010). Neuroimaging support for discrete neural correlates of basic emotions: A voxel-based metaanalysis. Journal of Cognitive Neuroscience, 22, 2864–2885.

Peyk, P., Schupp, H. T., Elbert, T., & Junghöfer, M. (2008). Emotion processing in the visual brain: A MEG analysis. Brain Topography, 20, 205–215.

Hämäläinen, M., Hari, R., Ilmoniemi, R. J., Knuutila, J., & Lounasmaa, O. V. (1993). Magnetoencephalography-theory, instrumentation, and applications to noninvasive studies of the working human brain. Reviews of Modern Physics, 65, 413–497.

Ray, A., & Bowyer, S. M. (2010). Clinical applications of magnetoencephalography in epilepsy. Annals of Indian Academy of Neurology, 13, 14–22.

Balconi, M., & Mazza, G. (2009). Brain oscillations and BIS/BAS (behavioral inhibition/activation system) effects on processing masked emotional cues. ERS/ERD and coherence measures of alpha band. International Journal of Psychophysiology, 74, 158–165.

Li, M., & Lu, B. L. (2009). Emotion classification based on gamma band EEG. In: Engineering in Medicine and Biology Society, 2009. EMBC 2009. Annual International Conference of the IEEE, (pp. 1223–1226).

Müller, M. M., Keil, A., Gruber, T., & Elbert, T. (1999). Processing of affective pictures modulates right-hemispheric gamma band EEG activity. Clinical Neurophysiology, 110, 1913–1920.

Keil, A., Müller, M. M., Gruber, T., Wienbruch, C., Stolarova, M., & Elbert, T. (2001). Effects of emotional arousal in the cerebral hemispheres: a study of oscillatory brain activity and event related potentials. Clinical Neurophysiology, 112, 2057–2068.

Murugappan, M., Nagarajan, R., & Yaacob, S. (2011). Combining spatial filtering and wavelet transform for classifying human emotions using EEG signals. Journal of Medical and Biological Engineering, 31, 45–51.

Hosseini, S. A., & Naghibi-Sistani, M. B. (2011). Emotion recognition method using entropy analysis of EEG signals. International Journal of Image, Graphics and Signal Processing (IJIGSP), 3, 30–36.

Jiea, X., Rui, C., & Li, L. (2014). Emotion recognition based on the sample entropy of EEG. Bio-Medical Materials and Engineering, 24, 1185–1192.

Min, Y. K., Chung, S. C., & Min, B. C. (2005). Physiological evaluation on emotional change induced by imagination. Applied Psychophysiology and Biofeedback, 30, 137–150.

Onorati, F., Barbieri, R., Mauri, M., Russo, V., & Mainardi, L. (2013). Characterization of affective states by pupillary dynamics and autonomic correlates. Frontiers in Neuroengineering, 6, 9.

Mauri, M., Onorati, F., Russo, V., Mainardi, L., & Barbieri, R. (2012). Psycho-physiological assessment of emotions. International Journal of Bioelectromagnetism, 14, 133–140.

Croft, R. J., & Barry, R. J. (2000). Removal of ocular artifact from the EEG: A review. Clinical Neurophysiology, 30, 5–19.

Joyce, C. A., Gorodnitsky, I. F., & Kutas, M. (2004). Automatic removal of eye movement and blink artifacts from EEG data using blind component separation. Psychophysiology, 41, 313–325.

Florian, G., & Pfurtscheller, G. (1995). Dynamic spectral analysis of event-related EEG data. Electroencephalography and Clinical Neurophysiology, 95, 393–396.

Bianchi, A. M. (2011). Time-variant spectral estimation. In S. Cerutti & C. Marchesi (Eds.), Advanced Methods of Biomedical Signal Processing (pp. 259–288). Hoboken, NJ: Wiley.

Bittanti, S., & Campi, M. (1994). Bounded error identification of time-varying parameters by RLS techniques. IEEE Transactions on Automatic Control, 39, 1106–1110.

Baselli, G., Cerutti, S., Civardi, S., Lombardi, F., Malliani, M., Merri, M., et al. (1987). Heart rate variability signal processing: a quantitative approach as an aid to diagnosis in cardiovascular pathologies. International Journal of Bio-Medical Computing, 20, 51–70.

Zetterberg, L. H. (1969). Estimation of parameters for a linear difference equation with application to EEG analysis. Mathematical Biosciences, 5, 227–275.

Lilliefors, H. (1967). On the Kolmogorov–Smirnov test for normality with mean and variance unknown. Journal of the American Statistical Association, 62, 399–402.

Levene, H. (1960). Robust tests for equality of variances. In I. Olkin (Ed.), Contributions to probability and statistics (pp. 278–292). Palo Alto, CA: Stanford University Press.

Petrantonakis, P. C., & Hadjileontiadis, L. J. (2010). Emotion recognition from EEG using higher order crossings. IEEE Transactions on Information Technology in Biomedicine, 14, 186–197.

Aftanas, L. I., Varlamov, A. A., Pavlov, S. V., Makhnev, V. P., & Reva, N. V. (2001). Affective picture processing: event-related synchronization within individually defined human theta band is modulated by valence dimension. Neuroscience Letters, 303, 115–118.

Cite this article

Sirca, F., Onorati, F., Mainardi, L. et al. Time-Varying Spectral Analysis of Single-Channel EEG: Application in Affective Protocol. J. Med. Biol. Eng. 35, 367–374 (2015). https://doi.org/10.1007/s40846-015-0044-5

DOI: https://doi.org/10.1007/s40846-015-0044-5