Abstract

In this work, we present a novel approach for effectively reducing impulse noise from images using Conjugate Gradient Method. Our Proposed method used the iterative property of the conjugate gradient method to accurately identify and restore corrupted pixel values while preserving the underlying image details. In the first part, we propose a new conjugate gradient parameter, and proved the global convergence using some assumptions. Then numerical experiment is conducted on some benchmark images contaminated with impulse noise to validate the efficacy of the method. The numerical results demonstrate a significant noise reduction in comparison with an existing method showing that our proposed method is more effective in denoising degraded images.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Digital image processing is a crucial component within a multitude of domains in the fields of science and engineering, playing an indispensable role in the advancement and innovation of such industries. It is used in medical imaging, remote sensing, multimedia applications and computer vision [1]. However, acquisition and transmission of images in real-world scenarios are often ruined by different forms of noise which affects the image quality and subsequent analysis [1]. Among these types of noise, impulse noise poses a particular challenging problem due to its irregular and abrupt occurrence which results in a corrupt pixel value disrupting the underlying image structure.

In recent years, researchers have devoted extensive efforts in developing denoising techniques to solve impulse noise problems. Some of the classical methods include median filtering and adaptive filtering which achieved some level of success in reducing noise artifact.

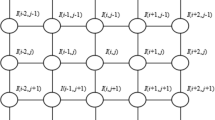

An adaptive median filter (AMF) [2] can detect and filter impulse noise by utilizing two equations to predict pixels that are likely to be polluted. The real picture is indicated by \(X\), and \({\rm K} = \left\{ {1,2,3, \ldots M} \right\} \times \left\{ {1,2,3, \ldots N} \right\}\) is the index set of \(X\), \({\rm N} \subset {\rm K}\) is the set of indices of noise pixels detected in the first phase, \(P_{w,v}\) is the set of four closest neighbors of a pixel at position \(\left( {w,v} \right) \in {\rm K}\), \(y_{w,v}\) is the observed pixel value of the image at position \(\left( {w,v} \right),\) and \(u_{w,v} = \left[ {\mu_{w,v} } \right]_{{\left( {w,v} \right) \in {\rm N}}}\) is a column vector of length \(c\) ordered lexicographically, see [3]. The letter \(c\) represents the number of elements in\(N\). The following function is minimized in the second equation to recover noise pixels:

where \(\beta \) denotes the regularization parameter, and: \({S}_{w,v}^{1}=2{\sum }_{(m,n)\in {\rm P}_{w,v}\cap {\rm N}^{c}}{\phi }_{\alpha }({\mu }_{w,v}-{y}_{m,n}),\) \({S}_{i,j}^{2}={\sum }_{(m,n)\in {\rm P}_{w,v}\cap {\rm N}}{\phi }_{\alpha }({\mu }_{w,v}-{y}_{m,n})\) where \({\phi }_{\alpha }=\sqrt{\alpha +{x}^{2}},\alpha >0\) is a potential function used to reduce noise in an optimization process by minimizing a smooth function without the need for a non-smooth data-fitting term:

However, the traditional methods often have difficulty in striking balance between noise suppression and preservation of image details. Hence, a need for more advanced and adaptive methods that can solve the problems posed by impulse noise in digital images efficiently.

Gradient methods are known to be vital in solving these kinds of problems. One of the popular gradient methods is conjugate gradient method which is a widely used optimization algorithm with remarkable performance and nice convergence properties. Given the below problem,

\({f}_{\alpha }(\mu ):{R}^{n}\to R\). Conjugate gradient technique creates a sequence of iterations in the following format:

where \(d_{k}\) is the search direction and \(\alpha_{k}\) is the step length obtained through line search, as in:

For more detailed information, interested reader can refer to [4]. The step length determined by Wolfe conditions is represented by a parameter \({\alpha }_{k}\) as follows:

where \(0<\delta <\sigma <1\), see [5,6,7] for more information. The computation of the search direction in the conjugate gradient algorithm is achieved through the following equation:

where \({\beta }_{k}\) is a scalar called conjugate gradient parameter. There are many conjugate gradient parameters developed by different researchers such as the Dai-Yuan (DY) [8] and the Fletcher-Reeves (FR) [9] which are defined as follows:

Many research have been conducted on the qualities of convergence that are shown by conjugate gradient techniques [8,9,10,11,12,13,14,15]. Among them is the Zoutendijk [16], who demonstrated the global convergence of the FR method when accurate line searching is performed. Several researchers have developed different formulae for the conjugate gradient parameter that have some numerical performance and convergence properties. Some works on the nonlinear conjugate gradient method can be found in Hideaki and Yasushi’s [17] and Basim [18], in which the parameters are defined as follows:

These algorithms demonstrated some nice properties and good performance [19]. Also, it is quite effective in terms of computing. In the context of unconstrained problems, the proposed update aims to optimize the benefits of the original conjugate gradient techniques by utilizing a quadratic model to enhance performance.

In this work, we propose a novel approach for impulse noise reduction in digital images using conjugate gradient method. Our idea is to utilize the conjugate gradient method’s iterative nature and adaptability and tailor it for noise reduction in images aiming to have an optimal trade-off between noise removal and image fidelity. We propose a new conjugate gradient parameter and perform convergence analysis. This is followed by numerical experiment and the experimental results are compared with an existing method.

Deriving the New Conjugate Gradient Parameter

Let us begin with the below Taylor series:

where \(Q\) is Hessian matrix. Putting \(\mu ={\mu }_{k}\) and we find the derivative as:

we can write (11) as:

applying \({y}_{k}=Q{s}_{k}\) \(\mathrm{Q}({\mathrm{u}}_{\mathrm{k}}){\mathrm{s}}_{\mathrm{k}}\) \(\mathrm{Q}({\mathrm{u}}_{\mathrm{k}}){\mathrm{s}}_{\mathrm{k}}\) in (12), we have:

Applying conjugacy condition \({d}_{k+1}^{T}{y}_{k}=0\) on search direction \({d}_{k+1}\) will lead to:

where \(Q\) is Hessian matrix. Putting (13) in (14), it holds that:

Using exact line search in (15), then it reduces to:

which is the new proposed conjugate parameter.

Global Convergence

In order to ascertain the convergence, we use the following assumptions:

-

1.

\(f(\mu )\) is bounded below on the level set \(\mathcal{H}=\left\{\mu :\mu \in {R}^{n},f(\mu )\le f({\mu }_{1})\right\}\).

-

2.

The first-order derivative \(\nabla f(\mu )\) is Lipschitz continuous with \(L>0\), i.e., for all \(\tau ,\upsilon \in {R}^{n}\), the following inequality holds:

$$ \|g\left( \tau \right) - g\left( \upsilon \right)\| \le L \|\tau - \upsilon\| ,\forall \tau ,\upsilon \in R^{n} $$(17)

In order to comprehensively assess the global convergence characteristics of the Conjugate Gradient method, it is of utmost importance to have a thorough understanding of the Zoutendijk criterion [16], which is the following lemma.

Lemma 1

Assuming that (1) and (2) are true, \({\alpha }_{k}\) satisfies the Wolfe conditions, and that \(d_{k}\) is the descent direction, then:

Theorem 1

If the assumptions and lemma 1 are true and \(\left\{{\mu }_{k}\right\}\) is a new sequence. Then:

Theorem 2

If the step length \({\alpha }_{k}\) is obtained via relation Wolfe conditions, then:

Proof

Suppose by contradiction Eq. (20) is not true. Then for all k, we can find an r > 0 for which:

But from search direction as \({d}_{k+1}+{g}_{k+1}={\beta }_{k}{d}_{k}\) and squaring both sides, we obtain:

Utilizing (17) to (22) we get:

When (23) is divided by \(({d}_{k+1}^{T}{g}_{k+1}{)}^{2}\) we obtain:

as a result, we came to:

Assume there is \({c}_{1}>0\) such that \(\Vert {g}_{k}\Vert \ge {c}_{1}\) for all \(k\in n\) exists. Then:

Then finally, we get:

Likewise, by Lemma 1, we get \(\underset{k\to \infty }{mathrm{lim}} \, inf\left(\Vert {g}_{k}\Vert \right)=0\) holds.

Numerical Experiments

In this section, we have executed some numerical experiments to show the performance of the proposed method. Our primary objective is to demonstrate the efficacy of this approach in significantly reducing the amount of salt-and-pepper noise from images. The entire experiment is performed using MATLAB r2017a. A comprehensive comparison has been made between the newly presented method and the FR method. In order to achieve this objective, we have utilized a robust and diverse set of parameters for the experiment. Our findings revealed that the proposed method is indeed highly effective in reducing the amount of salt-and-pepper noise from images, thereby demonstrating its potential to be utilized in real-world applications. We use:

as a stopping criterion.

Popular benchmark images of Lena, Home, the Cameraman, and Elaine are all included in the test pictures. To evaluate the level of quality achieved by the methods, we used the peak signal-to-noise ratio, often known as PSNR as an indicator for quality restoration given by

in this instance, the abbreviations \({\mu }_{i,j}^{r}\) and \({\mu }_{i,j}^{*}\) stand for the pixel values of the original image and the restored image, respectively, see [20,21,22,23]. The result of the peak signal to noise ratio (PSNR), the number of function evaluations (NF), and the iterations (NI) required for the entire denoising process are provided for the restored image. As per Table 1, it is evident that the novel method outperformed the FR method in most of the test images. Furthermore, Table 1 illustrates the PSNR values generated by comparing the proposed method to the FR approach. It can be seen that the proposed method performs better than the FR method based on the number of iterations, function evaluations, and peak signal to noise ratio. Ultimately, this indicates that the proposed approach is more efficient and effective than the FR method. Thus, we can conclude that as a denoising strategy, the proposed method is superior in comparison to the FR method (Figs. 1, 2, 3 and 4).

Conclusions

In this work, we presented a unique conjugate gradient method for impulse noise reduction in digital images. The proposed method demonstrated a remarkable denoising capabilities by effectively restoring corrupted pixel values while preserving essential image features. The result of our experiments showed efficiency of our proposed method by outperforming the existing FR method. This work contributes to the increasing interest in optimization-based methods for image restoration and motivates further exploration of the conjugate gradient method in solving different image related problems.

Data availability

This manuscript has no associated data.

References

Gonzales, R.C., Wintz, P.: Digital Image Processing. Addison-Wesley Longman Publishing Co., Inc., London (1987)

Cai, J.-F., Chan, R., Morini, B.: Minimization of an edge-preserving regularization functional by conjugate gradient type methods. In: Tai, X.-C., Lie, K.-A., Chan, T.F., Osher, S. (eds.) Age Processing Based on Partial Differential Equations, pp. 109–122. Springer, Berlin, Heidelberg (2007)

Xue, W., Ren, J., Zheng, X., Liu, Z., Liang, Y.: A new DY conjugate gradient method and applications to image denoising. IEICE Trans. Inf. Syst. E101.D(12), 2984–2990 (2018). https://doi.org/10.1587/transinf.2018EDP7210

Nocedal, J., Wright, S.J.: Numerical optimization. In: Springer Series in Operations Research and Financial Engineering, pp. 1–664. Springer, New York (2006)

Jiang, X.Z., Jian, J.B.: A sufficient descent Dai-Yuan type nonlinear conjugate gradient method for unconstrained optimization problems. Nonlinear Dyn. (2013). https://doi.org/10.1007/s11071-012-0694-6

Wolfe, P.: Convergence conditions for ascent methods. SIAM Rev. 11(2), 226–235 (1969). https://doi.org/10.1137/1011036

Wolfe, P.: Convergence conditions for ascent methods. II: some corrections. SIAM Rev. 13(2), 185–188 (1971). https://doi.org/10.1137/1013035

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999). https://doi.org/10.1137/S1052623497318992

Fletcher, R.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964). https://doi.org/10.1093/comjnl/7.2.149

Dai, Y., Han, J., Liu, G., Sun, D., Yin, H., Yuan, Y.: Convergence properties of nonlinear conjugate gradient methods. SIAM J. Optim. 10(2), 345–358 (2000). https://doi.org/10.1137/S1052623494268443

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2006). https://doi.org/10.1137/030601880

Aji, S., Kumam, P., Siricharoen, P., Abubakar, A.B., Yahaya, M.M.: A modified conjugate descent projection method for monotone nonlinear equations and image restoration. IEEE Access 8, 158656–158665 (2020). https://doi.org/10.1109/ACCESS.2020.3020334

Aji, S., Abubakar, A.B., Kiri, A.I., Ishaku, A.: A spectral conjugate gradient-like method for convex constrained nonlinear monotone equations and signal recovery. Nonlinear Convex Anal. Optim. 1(1), 1–23 (2022)

Abed, M.M., Öztürk, U., Khudhur, H.M.: Spectral CG algorithm for solving fuzzy nonlinear equations. Iraqi J. Comput. Sci. Math. 3(1), 1–10 (2022). https://doi.org/10.52866/ijcsm.2022.01.01.001

Laylani, Y.A., Hassan, B.A., Khudhur, H.M.: Enhanced spectral conjugate gradient methods for unconstrained optimization. Comput. Sci. 18(2), 163–172 (2023)

Zoutendijk, G.: Nonlinear programming, computational methods. Integer Nonlinear Program 37–86 (1970)

Iiduka, H., Narushima, Y.: Conjugate gradient methods using value of objective function for unconstrained optimization. Optim. Lett. 6(5), 941–955 (2012). https://doi.org/10.1007/s11590-011-0324-0

Abbas Hassan, B.: A new formula for conjugate parameter computation based on the quadratic model. Indones. J. Electr. Eng. Comput. Sci. 13(3), 954 (2019). https://doi.org/10.11591/ijeecs.v13.i3.pp954-961

Polak, E., Ribiere, G.: Note sur la convergence de méthodes de directions conjuguées. Rev. Française d’informatique Rech. Opérationnelle. Série Rouge 3(16), 35–43 (1969). https://doi.org/10.1051/m2an/196903r100351

Yu, G., Huang, J., Zhou, Y.: A descent spectral conjugate gradient method for impulse noise removal. Appl. Math. Lett. 23(5), 555–560 (2010). https://doi.org/10.1016/j.aml.2010.01.010

Khudhur, H.M., Fawze, A.A.M.: An improved conjugate gradient method for solving unconstrained optimisation and image restoration problems. Int. J. Math. Model. Numer. Optim. 13(3), 313–325 (2023). https://doi.org/10.1504/IJMMNO.2023.132286

Ismail Ibrahim, Y., Mohammed Khudhur, H.: Modified three-term conjugate gradient algorithm and its applications in image restoration. Indones. J. Electr. Eng. Comput. Sci. 28(3), 1510–1517 (2022). https://doi.org/10.11591/ijeecs.v28.i3.pp1510-1517

Jiang, X., Liao, W., Yin, J., Jian, J.: A new family of hybrid three-term conjugate gradient methods with applications in image restoration. Numer. Algorithms 91(1), 161–191 (2022). https://doi.org/10.1007/s11075-022-01258-2

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Contributions

Hisham M. Khudhur, and Sani Aji wrote the main manuscript text and Hisham M. Khudhur, and Basim A Hassan prepared figures 1-4. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Ethical approval

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Khudhur, H.M., Hassan, B.A. & Aji, S. Superior Formula for Gradient Impulse Noise Reduction from Images. Int. J. Appl. Comput. Math 10, 4 (2024). https://doi.org/10.1007/s40819-023-01637-w

Accepted:

Published:

DOI: https://doi.org/10.1007/s40819-023-01637-w