Abstract

This work investigates approximate solutions of a general form of higher-order boundary value problem of both linear and nonlinear types. A novel Genocchi polynomial-based matrix collocation method is adapted to the model: the technique converts the equation into a system of algebraic equations, which is then solved using a new algorithm. The method is tested on several examples, and the results indicate that it is accurate compared with other techniques from the literature. The method is straightforward, fast in terms of computational effort, and a promising technique for solving similar problems.

Introduction

Higher-order boundary value problems are among the most important models for describing both linear and nonlinear real-life phenomena. They have been used to simulate natural phenomena in science and engineering, including thermodynamics, fluid mechanics, astronomy, and astrophysics. For instance, they have been used to simulate the behavior of a heated fluid under the action of rotation [1, 2].

The torsional vibration of uniform beams is also modelled by higher-order boundary value problems (BVPs) in [3]. In addition, such problems have been used in modelling viscoelastic and inelastic flows, and the deformation of beams can be simulated using a fourth-order BVP [4]. Many other models are described by higher-order BVPs; the reader can refer to [5,6,7,8] and the references therein.

The study of the higher-order boundary value problem (HBVP) has attracted considerable attention from the research community over the last few decades, and many researchers have investigated solutions to these problems using different techniques, each with its own advantages and disadvantages. Examples include the reproducing kernel space method [9], sinc-Galerkin method [10], differential transform [11], homotopy perturbation method [12,13,14], quintic spline [15], Adomian decomposition method [16, 17], B-spline method [18], non-polynomial spline functions [19], Chebychev polynomial solutions [20], Euler matrix method [21], Galerkin residual technique with Bernstein and Legendre polynomials [22], variational iteration method [23], homotopy perturbation [24], Legendre-homotopy method [25], Haar wavelets [26], Legendre-Galerkin method [27], wavelet-based hybrid method [28], Laplace series decomposition method [29], quintic B-spline collocation method [30], and spectral monic Chebyshev approximation [31]. In addition, fractional-order models of this class of equations are gaining increasing interest with the rise of fractional calculus, owing to their applications and their ability to simulate complex phenomena. Ganji et al. used a fractional-order model to simulate brain tumor behavior [32]. Jafari et al. [33] investigated a fractional population model including the prey-predator and logistic models. A novel numerical approach was presented in [34] to solve a fractional optimal control problem with the Mittag-Leffler kernel. A collocation method based on shifted Chebyshev polynomials of the third kind was introduced in [35] by Tural-Polat and Turan Dincel to solve multi-term variable-order fractional differential equations. Further models and methods have been proposed for real-life phenomena such as the wave equation [36], the Bagley–Torvik equation [37, 38], ODEs with the Gomez–Atangana–Caputo derivative [39], fractional KdV and KdV-Burgers equations [40], the time-fractional Fisher’s model [41], time-fractional Klein-Gordon equations [42], the fractional-order diffusion equation [43], and a mathematical model of the atmospheric dynamics of CO2 gas [44].

In this paper, we are concerned with the study of the HBVP in the form

with boundary conditions

where \(\xi (x,u(x))\) and u(x) are both continuous functions defined on the interval \(0\le x\le 1\) and \(\sigma _{m}\) is a constant.

This work applies a novel collocation method based on Genocchi polynomials to Eq. (1.1). Genocchi collocation (GC) techniques have played an increasing role in solving different types of applied problems, and researchers have been extending their use to different areas of science and engineering. For example, Genocchi polynomials have been used to solve fractional partial differential equations with the Atangana-Baleanu derivative [45]. A fractional model of the SEIR epidemic has also been investigated in [46] with the aid of the same polynomials. Other models treated in this way include nonlinear fractional differential equations [47], integral and integro-differential equations [48], and delay differential equations [49]. To the best of our knowledge, this is the first attempt to solve the HBVP using the Genocchi collocation technique.

The novelty of the proposed technique can be summarized in the following few points:

- A novel numerical technique based on the Genocchi polynomials is presented.
- The technique is applied to both the linear and nonlinear cases; the nonlinear system in particular is solved using a new iterative technique.
- The method is tested on five different examples to confirm that it is effective and accurate.

The paper is organized as follows: Sect. 2 provides some preliminaries regarding the proposed technique. Section 3 presents the function approximation, fundamental relations, Genocchi operational matrix, GC scheme, and a few related theorems. The upper bound of the error for the proposed method is derived in detail in Sect. 4. Section 5 describes the results and discussion. Conclusions and directions for future research are given in the last section.

Basic Definitions

In this section, some basics regarding the Genocchi numbers and polynomials are presented. Genocchi polynomials have been widely used in multiple areas of mathematics, including complex analytic number theory and other related branches. The Genocchi polynomials and Genocchi numbers can be defined by the generating functions [49,50,51]:

where \(G_n(x)\) are the Genocchi polynomials of degree n, defined on the interval [0, 1] as

where \(G_k\) are the Genocchi numbers.
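As a hedged illustration of these definitions, the following Python sketch generates the Genocchi numbers and the coefficients of \(G_n(x)\). It assumes the standard generating function \(2t/(e^t+1)=\sum_{n\ge 0} G_n t^n/n!\) and the standard expansion \(G_n(x)=\sum_{k=0}^{n}\binom{n}{k}G_k x^{n-k}\); the function names are illustrative and do not come from the paper.

```python
from fractions import Fraction
from math import comb


def genocchi_numbers(n_max):
    """Return [G_0, ..., G_{n_max}] as exact fractions.

    Assumes the standard generating function 2t/(e^t + 1). Multiplying it
    by (e^t + 1) and comparing coefficients gives
    G_n = [n == 1] - (1/2) * sum_{k<n} C(n, k) * G_k.
    """
    g = [Fraction(0)] * (n_max + 1)
    for n in range(1, n_max + 1):
        s = sum(comb(n, k) * g[k] for k in range(n))
        g[n] = Fraction(1 if n == 1 else 0) - s / 2
    return g


def genocchi_poly_coeffs(n):
    """Coefficients of G_n(x) in ascending powers of x (index j holds x**j)."""
    g = genocchi_numbers(n)
    # The coefficient of x**(n - k) is C(n, k) * G_k.
    return [comb(n, n - j) * g[n - j] for j in range(n + 1)]


def genocchi_eval(n, x):
    """Evaluate G_n(x) by Horner's rule."""
    value = Fraction(0)
    for c in reversed(genocchi_poly_coeffs(n)):
        value = value * x + c
    return value
```

Because \(G_0=0\), the coefficient of \(x^n\) vanishes; for instance, `genocchi_poly_coeffs(4)` corresponds to \(4x^3-6x^2+1\).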

These polynomials have many interesting properties. The most important among them is the differential property, which is obtained by differentiating both sides of Eq. (2.3) with respect to x, giving

Differentiating Eq. (2.3) k times gives

Next, we will use the differential property to generate the Genocchi operational matrix of differentiation which will be used later for solving Eq. (1.1).

Genocchi Differentiation Matrices

First, we express the approximate solution of Eq. (1.1) in the following form

where \(\mathbf {C}\) is the vector of unknown Genocchi coefficients and \(\mathbf{G}(x) \) is the vector of Genocchi polynomials of the first kind, given by

The \(k^{th}\) derivative of \(u_N(x)\) can be expressed by

where \(\mathbf{M} \) is the \(N \times N\) operational matrix of derivative, defined as

Using collocation points defined by

to approximate the Genocchi polynomials, we reach the following

In the next section, we shall demonstrate the main steps for applying the collocation technique based on Genocchi polynomials.
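The differential property and the operational matrix described in this section can be checked numerically. The sketch below assumes the standard relation \(G_n'(x)=nG_{n-1}(x)\) and, as one possible convention that may differ from the paper's layout, stores \(\mathbf{G}(x)\) as a column vector so that \(\mathbf{G}'(x)=\mathbf{M}\,\mathbf{G}(x)\), with the entries \(2,3,\ldots,N\) on the first subdiagonal of \(\mathbf{M}\). All function names are illustrative.

```python
import numpy as np
from math import comb
from numpy.polynomial import Polynomial


def genocchi_basis(N):
    """Return [G_1, ..., G_N] as numpy Polynomial objects (standard definition assumed)."""
    g = [0.0] * (N + 1)
    for n in range(1, N + 1):
        s = sum(comb(n, k) * g[k] for k in range(n))
        g[n] = (1.0 if n == 1 else 0.0) - s / 2.0
    # Coefficient of x**j in G_n(x) is C(n, n - j) * G_{n-j}.
    return [Polynomial([comb(n, n - j) * g[n - j] for j in range(n + 1)])
            for n in range(1, N + 1)]


def operational_matrix(N):
    """N x N matrix M with G'(x) = M G(x), using G_n' = n G_{n-1} and G_0 = 0."""
    M = np.zeros((N, N))
    for n in range(2, N + 1):
        M[n - 1, n - 2] = n  # the row for G_n picks up n * G_{n-1}
    return M


def basis_derivative(N, k, x):
    """k-th derivative of the basis vector at the point x, computed as M^k G(x)."""
    G = np.array([p(x) for p in genocchi_basis(N)])
    return np.linalg.matrix_power(operational_matrix(N), k) @ G
```

With this convention the k-th derivative of \(u_N\) follows from multiplying the coefficient vector by \(\mathbf{M}^k\,\mathbf{G}(x)\). Since \(\mathbf{M}\) is strictly lower triangular, \(\mathbf{M}^N\) is the zero matrix, which reflects the fact that every basis polynomial has degree below N.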

Genocchi Collocation Technique

In this section, we adapt the proposed technique to the linear and nonlinear forms of the HBVP. We begin with the linear form in the next subsection.

Case I: Linear Case

We begin by setting \(\xi (x,u)=f(x)\) in the main equation, which leads to

The approximate solution for u(x) can be represented by

where the Genocchi coefficients vector \(\mathbf {C}\) and the Genocchi polynomials vector \( \mathbf {G}(x)\) are given by

and the \(k^{th}\) derivative of \(u_N(x)\) can be expressed in the form

After substituting the approximate solution represented in Eq. (3.2) and its derivatives from Eq. (3.3), we reach the following theorem.

Theorem 3.1

If the approximate solution of problem (3.1) is assumed in the form of Eq. (3.2), then the discrete Genocchi system for determining the unknown coefficients can be represented in the form

Proof

Substituting the approximate solution defined in Eqs. (3.2), (3.3) into the main equation (3.1) and evaluating at the collocation points \(x = x_i\) defined as

we reach the following matrix form for the Genocchi system as

where

and

Also, the boundary conditions can be described in the form

Finally, replacing the first and last r rows of the augmented matrix \([\varvec{\Theta }, \varvec{\Xi }]\) with the boundary conditions, the new augmented matrix becomes

which gives an \(N \times N\) system of linear algebraic equations. Solving this system yields the unknown coefficients \(\mathbf {C}\). \(\square \)
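The steps of the proof can be sketched in Python on a small test problem. The problem used here, \(u^{(4)}(x)+u(x)=2e^{x}\) with \(u(0)=u'(0)=1\), \(u(1)=u'(1)=e\) and exact solution \(u=e^{x}\), is a hypothetical illustration chosen for this sketch and is not one of the paper's examples. The uniform points \(x_i=(i-1)/(N-1)\), the standard Genocchi definitions, and all function names are likewise assumptions.

```python
import numpy as np
from math import comb
from numpy.polynomial import Polynomial


def genocchi_basis(N):
    """Return [G_1, ..., G_N] as numpy Polynomial objects (standard definition assumed)."""
    g = [0.0] * (N + 1)
    for n in range(1, N + 1):
        s = sum(comb(n, k) * g[k] for k in range(n))
        g[n] = (1.0 if n == 1 else 0.0) - s / 2.0
    return [Polynomial([comb(n, n - j) * g[n - j] for j in range(n + 1)])
            for n in range(1, N + 1)]


def solve_linear_bvp(N=12):
    """Collocation for the hypothetical problem u'''' + u = 2 exp(x), r = 2."""
    basis = genocchi_basis(N)
    x = np.linspace(0.0, 1.0, N)  # assumed uniform collocation points

    # Collocation rows: column n holds G_n''''(x_i) + G_n(x_i).
    theta = np.column_stack([p.deriv(4)(x) + p(x) for p in basis])
    xi = 2.0 * np.exp(x)

    def bc_row(point, order):
        # Row expressing the derivative of given order of u_N at a boundary point.
        return np.array([p.deriv(order)(point) for p in basis])

    # Replace the first r and last r rows with the boundary conditions.
    theta[0], xi[0] = bc_row(0.0, 0), 1.0
    theta[1], xi[1] = bc_row(0.0, 1), 1.0
    theta[-2], xi[-2] = bc_row(1.0, 0), np.e
    theta[-1], xi[-1] = bc_row(1.0, 1), np.e

    c = np.linalg.solve(theta, xi)

    def u_N(t):
        return sum(float(ck) * p(t) for ck, p in zip(c, basis))

    return c, u_N


if __name__ == "__main__":
    _, u_N = solve_linear_bvp()
    t = np.linspace(0.0, 1.0, 101)
    print("max abs error:", np.max(np.abs(u_N(t) - np.exp(t))))
```

A dense solver is used because the system is small; for larger N the conditioning of the collocation matrix would need to be monitored.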

Next, we investigate the application of the Genocchi collocation method to the nonlinear form of Eq. (1.1).

Case II: Nonlinear Case

In this subsection, we set \(\xi (x,u)=f(x)-q(x)(u(x))^v\) in Eq. (1.1), which gives the following equation

After substituting the collocation points \( x=x_i\), the nonlinear term in Eq. (3.6) must be evaluated; for this we need the following theorem.

Theorem 3.2

[52] The nonlinear term \(u^v(x_i),i=1,2,\ldots ,N\), can be expressed in the following form

where

Substituting this into Eq. (3.6), we arrive at the next theorem.

Theorem 3.3

If the nonlinear term is expanded using Theorem 3.2, then the discrete Genocchi system of Eq. (3.6) can be expressed in the form

In the same way as in Sect. 3.1, and with the aid of the previous theorem, the Genocchi system can be written in matrix form

where

and

It is worth mentioning that the nonlinear part in Eq. (3.6) may instead take the form \(\xi (x,u)=f(x)-q(x)u^v(x) u^{(r)}(x)\) or \(\xi (x,u)=f(x)-q(x)u^{(v)}(x) u^{(r)}(x)\); the approximation for this part then takes the form

and

where

Replacing the first r and last r rows of the augmented matrix with the boundary-condition matrices, the augmented matrix becomes

This \( (N \times N)\) system is then solved for the N unknown coefficients using the algorithm in [52]. In the next section, we derive an upper bound of the error for the proposed method.
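The algorithm of [52] is not reproduced in this section, so the sketch below substitutes a plain Newton iteration, which is a different and standard technique, to show how a collocated nonlinear system of this type can be solved. The test problem \(u^{(4)}(x)+u^{2}(x)=e^{x}+e^{2x}\) with \(u(0)=u'(0)=1\), \(u(1)=u'(1)=e\) and exact solution \(u=e^{x}\) is hypothetical, and the uniform collocation points and all names are assumptions made for illustration.

```python
import numpy as np
from math import comb
from numpy.polynomial import Polynomial


def genocchi_basis(N):
    """Return [G_1, ..., G_N] as numpy Polynomial objects (standard definition assumed)."""
    g = [0.0] * (N + 1)
    for n in range(1, N + 1):
        s = sum(comb(n, k) * g[k] for k in range(n))
        g[n] = (1.0 if n == 1 else 0.0) - s / 2.0
    return [Polynomial([comb(n, n - j) * g[n - j] for j in range(n + 1)])
            for n in range(1, N + 1)]


def newton_collocation(N=12, tol=1e-8, max_iter=25):
    """Newton iteration (not the algorithm of [52]) for u'''' + u**2 = f."""
    basis = genocchi_basis(N)
    x = np.linspace(0.0, 1.0, N)
    A0 = np.column_stack([p(x) for p in basis])           # G_n(x_i)
    A4 = np.column_stack([p.deriv(4)(x) for p in basis])  # G_n''''(x_i)
    f = np.exp(x) + np.exp(2.0 * x)

    # Boundary rows for u(0), u'(0), u(1), u'(1) replace the first/last two rows.
    bc_rows = [0, 1, N - 2, N - 1]
    B = np.array([[p.deriv(order)(pt) for p in basis]
                  for pt, order in [(0.0, 0), (0.0, 1), (1.0, 0), (1.0, 1)]])
    b = np.array([1.0, 1.0, np.e, np.e])

    c = np.zeros(N)
    for _ in range(max_iter):
        u = A0 @ c
        F = A4 @ c + u**2 - f            # collocation residual
        J = A4 + 2.0 * u[:, None] * A0   # Jacobian of the residual w.r.t. c
        F[bc_rows] = B @ c - b
        J[bc_rows] = B
        if np.max(np.abs(F)) < tol:      # stop once the residual is below tol
            break
        c = c + np.linalg.solve(J, -F)
    return c, basis


if __name__ == "__main__":
    c, basis = newton_collocation()
    t = np.linspace(0.0, 1.0, 101)
    u_N = sum(float(ck) * p(t) for ck, p in zip(c, basis))
    print("max abs error:", np.max(np.abs(u_N - np.exp(t))))
```

Starting from the zero vector, the first Newton step solves the linear problem obtained by dropping the nonlinear term, which gives a reasonable initial guess for this particular test problem; other problems may need a better starting point.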

Error Analysis

In this section, we provide the error bound for the approximated function f(x). First, suppose that f(x) is an arbitrary element of \(H=L^2 [0,1]\) and \(U=span\{{G_1 (x),G_2 (x), \ldots ,G_N (x)}\} \subset H \), where \(\{{G_n (x)}\}_{n=1}^N \) is the set of Genocchi polynomials. Then f(x) has a unique best approximation \( f^* (x)\) in the space U, that is, \(\left\| f(x)-f^* (x)\right\| _2 \le \left\| f(x)-u(x)\right\| _2 \) for all \(u(x) \in U \). Since \( f^* (x)\in U \), there exist unique coefficients \({\{c_n \}}_{n=1}^N \) such that

where \(\mathbf {C}=\left[ c_1,c_2,c_3,\ldots ,c_N \right] \), \(\mathbf {G(x)}=\left[ G_1 (x),G_2 (x),\ldots ,G_N (x)\right] ^{T}\). In order to obtain the values of the coefficients, we need the following lemmas.

Lemma 4.1

Assume that \(f\in H=L^2 [0,1]\) is an arbitrary function approximated by the Genocchi series \(\sum _{n=1}^{N}c_n G_n(x)\). Then the unknown coefficients \({\{c_n \}}_{n=1}^N \) can be evaluated from the following form

Proof

For proof, please refer to [49]. \(\square \)

Lemma 4.2

Suppose that \(f(x) \in C^{(n+1)} [0,1]\) and \( U=span\{{G_1 (x),G_2 (x),\ldots ,G_N (x)}\}\) where \(C^T G\) is the best approximation of the function f(x) out of U, then

where \(\left\| \xi _N (f)\right\| =\left\| f(x)-C^T G\right\| \) and \(W=\max \limits _{ x\in [0,1]} |f^{(n+1)} (x)| \) and \(h=x_{i+1}-x_i\) .

Proof

For proof, please refer to [47]. \(\square \)

With the aid of the previous two lemmas, we reach the following theorem.

Theorem 4.1

Suppose that u(x) is a sufficiently smooth function and that \(u_N (x)\) is the truncated Genocchi series solution of u(x). Then the error bound takes the form

where \(\varvec{\aleph }=\frac{\frac{2n+3}{2}}{1-\ell _\mathcal {M}}\) and \(\mathbf {W}=\max \limits _{ x\in [0,1]} |f^{(n+1)} (x)|\).

Proof

The operator form of Eq. (1.1) can be in the following form

where the differential operator \({\mathcal {L}}\) can be defined by

and the inverse operator \({\mathcal {L}}^{-1}\) is the 2r-fold integral operator, which takes the form

Then, by applying the inverse operator \({\mathcal {L}}^{-1}\) defined before on Eq. (1.1) we get

Next, if we approximate both the functions f(x) and u(x) using the truncation of the Genocchi polynomials as \(\chi (x)\) and \({\mathcal {H}}(x,u_N(x))\), respectively, then the approximation for \(u_{N}(x)\) can be defined in the form

Therefore, by subtracting the last two equations we conclude that

where \(\ell _{\mathcal {M}}\) is the Lipschitz constant of the function \({\mathcal {M}}(x,u_N(x))\). Then

Finally, with the aid of Lemma 4.2, we reach the following

where \(\varvec{\aleph }=\frac{1}{1-\ell _m}\) .

From Eq. (4.8) we conclude that the theorem bounds the error of \(u_N (x)\), which approaches the exact solution for sufficiently large values of n. \(\square \)

Residual Error Function

In this subsection, we check the accuracy of the suggested method in terms of the residual error function. Since the truncated Genocchi series in Eq. (2.7) is an approximate solution of Eq. (1.1), substituting \(u_N (x)\) and its derivatives into Eq. (1.1) should satisfy the equation approximately at the collocation points defined as

The residual error function for the approximate solution can be calculated in the form

or

where \(\Re _N (x_i)\) is the residual error function evaluated at the collocation points \(x_i\) and \(\tau _{i}\) is a prescribed positive integer that sets the tolerance for the desired error. The value of N is then increased until the residual error \(\Re _N (x_i)\) at each point becomes smaller than the prescribed tolerance \(10^{-\tau _{i}}\), which indicates that the method converges to the desired solution as the residual error approaches zero. We can also calculate the error function at each of the collocation points to assess the efficiency of the proposed technique; it can be described as

Then, if this error function tends to zero as N becomes sufficiently large, the residual error decreases, which indicates that the proposed method converges correctly.
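The stopping rule described in this subsection can be sketched as follows. To stay short, the sketch uses truncated Taylor polynomials of \(e^{x}\) as stand-in approximants \(u_N\) (they are not Genocchi collocation solutions) for the hypothetical test equation \(u^{(4)}+u=2e^{x}\), and it evaluates the residual on a fine grid rather than only at collocation points. The parameter `tau` plays the role of \(\tau_i\), with tolerance \(10^{-\tau}\); all names are illustrative.

```python
import numpy as np
from math import factorial
from numpy.polynomial import Polynomial


def residual(u_poly, x):
    """Residual of the hypothetical test equation u'''' + u = 2 exp(x)."""
    return u_poly.deriv(4)(x) + u_poly(x) - 2.0 * np.exp(x)


def stand_in_approximant(N):
    """Taylor polynomial of exp(x) with N terms, used here in place of u_N."""
    return Polynomial([1.0 / factorial(j) for j in range(N)])


def smallest_N(tau, N_start=6, N_max=30):
    """Increase N until max |residual| on the grid drops below 10**(-tau)."""
    x = np.linspace(0.0, 1.0, 201)
    for N in range(N_start, N_max + 1):
        err = np.max(np.abs(residual(stand_in_approximant(N), x)))
        if err < 10.0 ** (-tau):
            return N, err
    raise RuntimeError("tolerance not reached; increase N_max")


if __name__ == "__main__":
    for tau in (4, 8):
        N, err = smallest_N(tau)
        print(f"tau={tau}: N={N}, max residual={err:.3e}")
```

In an actual run, `stand_in_approximant` would be replaced by the collocation solution for the current N, so that each pass of the loop re-solves the algebraic system before the residual is checked.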

Numerical Simulation

In this section, we demonstrate the efficiency of the proposed method for solving the class of HBVP through different linear and nonlinear examples. The method is tested and compared with other relevant methods from the literature, including [23, 26, 27, 31, 52,53,54,55,56,57,58,59,60,61,62]. The results were obtained with the aid of Matlab 2015. The performance is assessed by calculating the maximum absolute error from the following equation

Example 5.1

[52,53,54,55] In our first example, we consider a linear form of the BVP with variable c in the form

with boundary conditions

and exact solution

We need first to find the approximate solution \(u_N(x)\) in terms of Genocchi series for \(N=6\) in the form

Then

Using collocation points \(x_i=\frac{i-1}{5},\quad i=1,2,\ldots ,6\), then the augmented matrix can be acquired in the form

Also, the augmented matrix for the boundary conditions can take the forms

Replacing the first two and last two rows with the previous representation of the boundary conditions, the new augmented matrix takes the form

Then, by solving the above system the Genocchi coefficients can be found as

and the approximate solution is

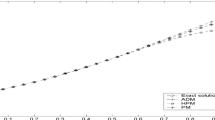

The results for this example are shown in Table 1, which lists the maximum absolute error for \(c=10\) and different values of N, compared with the Bernoulli method in [52], together with the maximum residual error. Table 2 compares the maximum absolute error of the differential transform method [53], reproducing kernel method [54], Haar wavelet method [55], and Genocchi method for different values of c at \(N=18\). These tables show that our method performs better than the other methods mentioned, which can also be seen in Fig. 1, where the exact and approximate solutions are plotted at \(N=18\) and \(c=10\).

Fig. 1 Solution profiles for Example 5.1

Example 5.2

[26, 27, 56] Next, we consider a sixth-order BVP in the special form with a variable coefficient c

with conditions in the form

with exact solution

Table 3 compares the maximum absolute error at \(N=18\) for different values of c \((c=10,100,1000)\), with maximum residual errors (\(6.4429E-11,6.4429E-11,6.4429E-11\)), between the Genocchi method, Variational decomposition method [56], Legendre-Galerkin method [27], and wavelet method [26]. In addition, Fig. 2 illustrates the behavior of the exact and approximate solutions at \(N=18\).

Fig. 2 Comparison between exact and approximate Genocchi solution for Example 5.2

Example 5.3

[31] Next, we consider another form of the linear sixth-order BVP defined on an extended interval of \([-1,1] \) in the form

with boundary conditions

the exact solution of this problem is

In this example, we follow the same steps as for the linear system, changing only the interval of the Genocchi polynomials to \([-1,1]\). The maximum absolute errors for \(N=20, 24\) obtained by our method are compared in Table 4 with the results obtained by the spectral monic Chebyshev approximation [31].

Example 5.4

[59] Consider the nonlinear tenth-order BVP of the form

with boundary conditions

the exact solution of this problem is

The values of the maximum absolute error for different values of N with \( tol=10^{-7}\) are tabulated in Table 5. A comparison of the absolute error obtained by the Genocchi method with that of the new iterative method (NIM) [59] for \(N=14\) is presented in Table 6. The exact and approximate solutions are shown in Fig. 3.

Fig. 3 Comparison between exact and Genocchi solution for Example 5.4

Fig. 4 Comparison between exact and Genocchi solution for Example 5.5

Example 5.5

[23, 61] Consider the following nonlinear twelfth order BVP

with boundary conditions

the exact solution of the problem is

Table 7 presents the approximate solution compared with the exact solution, and the absolute error obtained by our method with \(N=16\) and \(tol=10^{-10}\) compared with the absolute errors obtained by the Optimal Homotopy Asymptotic Method (OHAM) [61] and the variational iteration method [23]. Figure 4 compares the exact and approximate Genocchi solutions.

Conclusion

In this paper, we have developed a collocation technique based on the Genocchi polynomials for solving a wide class of linear and nonlinear higher-order BVPs. The basic definitions of the Genocchi polynomials are introduced, the method is analyzed, and it is then used to solve a general form of the problem. The nonlinear form of the equation is treated with the same technique, and the resulting nonlinear system of algebraic equations is solved using a novel iterative algorithm that produces the unknown coefficients with less computational effort. To verify the technique, several linear and nonlinear examples of different orders are presented, and the results are reported in tables and figures. The results show the superiority of the proposed technique over other techniques from the literature, especially for the nonlinear examples with the new iterative algorithm. The behavior of the solution can be seen in the figures, which supports the claim that the method is fast and effective in providing accurate results. It will be interesting to see how this method performs in solving nonlinear higher-order delay differential equations.

Data Availability

All data generated or analyzed during this study are included in this article.

References

Howard, L.N., Gupta, A.S.: On the hydrodynamic and hydromagnetic stability of swirling flows. J. Fluid Mech. 14, 463–476 (1962)

Chandrasekhar, S.: Hydrodynamic and Hydromagnetic Stability. Clarendon Press, Oxford (1961)

Bishop, D., Cannon, S., Miao, S.: On coupled bending and torsional vibration of uniform beams. J. Sound Vib. 131, 457–464 (1989)

Davies, A., Karageorghis, A.: Spectral Galerkin methods for the primary two-point boundary value problem in modelling viscoelastic flows. Int. J. Numer. Methods Eng. 26, 647–652 (1988)

Boutayeb, A., Twizell, E.: Numerical methods for the solution of special sixth-order boundary value problems. Int. J. Comput. Math. 45, 207–233 (1992)

Siddiqi, S., Twizell, E.: Spline solutions of linear sixth-order boundary value problems. Int. J. Comput. Math. 60, 295–307 (1996)

Liang, A., Jeffrey, A.: An efficient analytical approach for solving fourth-order boundary value problems. Comput. Phys. Commun. 180, 2034–2040 (2009)

Ascher, U., Mattheij, R., Russell, R.: Numerical Solution of Boundary Value Problems for Ordinary Differential Equations. Society for Industrial and Applied Mathematics, Philadelphia (1995)

Akram, G., Rehman, H.: Numerical solution of eighth order boundary value problems in reproducing kernel space. Numer. Algor. 62, 527–540 (2013)

El-Gamel, M., Abdrabou, A.: Sinc-Galerkin solution to eighth-order boundary value problems. SeMA J. 76, 249–270 (2019)

Suat, V., Momani, S.: Comparing numerical methods for solving fourth-order boundary value problems. Appl. Math. Comput. 188, 1963–1968 (2007)

Mohyud-Din, S., Noor, M.: Homotopy perturbation method for solving fourth-order boundary value problems. Math. Prob. Eng. 27, 1–15 (2007)

Noor, M., Mohyud-Din, S.: Homotopy perturbation method for solving sixth-order boundary value problems. Comput. Math. Appl. 55, 2953–2973 (2008)

Golbabai, A., Javidi, M.: Application of homotopy perturbation method for solving eighth-order boundary value problems. Appl. Math. Comput. 191, 334–346 (2007)

Lang, F., Xu, X.: An effective method for numerical solution and numerical derivatives for sixth-order two-point boundary value problems. Comput. Math. Math. Phys. 55, 811–822 (2015)

Wazwaz, A.: The numerical solution of special fourth- order boundary value problems by the modified decomposition method. Int. J. Comput. Math. 79, 345–356 (2002)

Wazwaz, A.: The numerical solutions of special eighth-order boundary value problems by the modified decomposition method. Neural Parallel Sci. Comput. 8, 133–146 (2000)

El-Gamel, M., El-Shamy, N.: B-spline and singular higher-order boundary value problems. SeMA J. 73, 287–307 (2016)

Siddiqi, S., Akram, G.: Solution of eighth-order boundary value problems using the non-polynomial spline technique. Int. J. Comput. Math. 84, 347–368 (2007)

El-Gamel, M.: Chebychev polynomial solutions of twelfth-order boundary-value problems. J. Adv. Math. Comput. Sci. 6, 13–23 (2015)

El-Gamel, M., Adel, W.: Numerical investigation of the solution of higher-order boundary value problems via Euler matrix method. SeMA J. 75, 349–364 (2018)

Islam, M., Hossain, M.: Numerical solutions of eighth-order bvp by the Galerkin residual technique with Bernstein and Legendre polynomials. Appl. Math. Comput. 261, 48–59 (2015)

Noor, M., Mohyud-Din, S.: Variational iteration method for solving twelfth-order boundary-value problems using he’s polynomials. Comput. Math. Model. 21, 239–251 (2010)

Mohyud-Din, S., Yildirim, A.: Solution of tenth and ninth-order boundary value problems by homotopy perturbation method. J. Korean Soc. Indus. Appl. Math. 14, 17–27 (2020)

Sadaf, M., Akram, G.: A Legendre-homotopy method for the solutions of higher-order boundary value problems. J. King Saud Uni. Sci. 32, 537–543 (2020)

Haq, F., Ali, A., Hussain, I.: Solution of sixth-order boundary value problems by collocation method using haar wavelets. Int. J. Phys. Sci. 7, 5729–5735 (2012)

El-Gamel, M., El-Azab, M., Fathy, M.: The numerical solution of sixth-order boundary value problems by Legendre-Galerkin method. Acta Univ. Apulensis Math. Inf. 40, 145–165 (2014)

Arora, G., Kumar, R., Kaur, H.: A novel wavelet based hybrid method for finding the solutions of higher-order boundary value problems. Ain Shams Eng. J. 9, 3015–3031 (2018)

Akinola, E., Akinpelu, F., Areo, A., Akanni, J., Oladejo, J.: The mathematical formulation of Laplace series decomposition method for solving nonlinear higher-order boundary value problems in finite domain. Int. J. Innov. Sci. Res. 28, 110–114 (2017)

Lang, F., Xu, X.: Quintic B-spline collocation method for second order mixed boundary value problem. Comput. Phys. Commun. 183, 913–921 (2012)

Abdelhakem, M., Ahmed, A., El-Kady, M.: Spectral monic chebyshev approximation for higher-order differential equations. Math. Sci. Lett. 8, 11–17 (2019)

Ganji, R., Jafari, H., Moshokoa, S., Nkomo, N.: A mathematical model and numerical solution for brain tumor derived using fractional operator. Results Phys. 28, 104671 (2021)

Jafari, H., Ganji, R., Nkomo, N., Lv, Y.: A numerical study of fractional order population dynamics model. Results Phys. 27, 104456 (2021)

Jafari, H., Ganji, R.M., Sayevand, K., Baleanu, D.: A numerical approach for solving fractional optimal control problems with Mittag-Leffler kernel. J. Vib. Control 10775463211016968 (2021)

Tural-Polat S., Turan Dincel A.: Numerical solution method for multi-term variable order fractional differential equations by shifted Chebyshev polynomials of the third kind. Alex. Eng. J. (2021)

Kadkhoda, N., Jafari, H., Ganji, R.: A numerical solution of variable order diffusion and wave equations. Int. J. Nonlinear Anal. Appl. 12, 27–36 (2021)

Mekkaoui, T., Hammouch, Z.: Approximate analytical solutions to the Bagley–Torvik equation by the fractional iteration method. Ann. Univ. Craiova Math. Comput. Sci. Ser. 39, 251–256 (2012)

El-Gamel, M., Abd El-Hady, M.: Numerical solution of the Bagley–Torvik equation by Legendre-collocation method. SeMA J. 74, 371–383 (2017)

Mekkaoui, T., Hammouch, Z., Kumar, D., Singh, J.: A new approximation scheme for solving ordinary differential equation with Gomez-Atangana Caputo fractional derivative. Methods Math. Modell, pp. 51–62. CRC Press, Cambridge (2019)

Khader, M., Saad, K., Hammouch, Z., Baleanu, D.: A spectral collocation method for solving fractional KdV and KdV-Burgers equations with non-singular kernel derivatives. Appl. Numer. Math. 161, 137–146 (2021)

Rashid, S., Hammouch, Z., Aydi, H., Ahmad, A., Alsharif, A.: Novel computations of the time-fractional Fisher’s model via generalized fractional integral operators by means of the Elzaki transform. Fractal Fract 94 (2021)

Ganji, R., Jafari, H., Kgarose, M., Mohammadi, A.: Numerical solutions of time-fractional Klein-Gordon equations by clique polynomials. Alex. Eng. J. 60, 4563–4571 (2021)

Aghdam, Y., Safdari, H., Azari, Y., Jafari, H., Baleanu, D.: Numerical investigation of space fractional order diffusion equation by the Chebyshev collocation method of the fourth kind and compact finite difference scheme. Discrete Continuous Dyn. Syst. S 14, 2025 (2021)

EIlhan, P., Baskonus, H.: Fractional approach for a mathematical model of atmospheric dynamics of CO2 gas with an efficient method. Chaos Solitons Fractals 152, 1–10 (2021)

Sadeghi, S., Jafari, H., Nemati, S.: Operational matrix for Atangana-Baleanu derivative based on Genocchi polynomials for solving FDEs. Chaos Solitons Fractals 135, 109736 (2020)

Kumar, S., Ranbir, K., Osman, M., Samet, B.: A wavelet based numerical scheme for fractional order SEIR epidemic of measles by using Genocchi polynomials. Numer. Methods Partial Differ. Equ. 37, 1250–1268 (2021)

Isah, A., Phang, C.: New operational matrix of derivative for solving non-linear fractional differential equations via Genocchi polynomials. J. King Saud Uni. Sci. 31, 1–7 (2019)

Shiralashetti, S., Kumbinarasaiah, S.: CAS wavelets analytic solution and Genocchi polynomials numerical solutions for the integral and integro-differential equations. J. Interdiscipl. Math. 22, 201–218 (2019)

Isah, A., Phang, C.: Operational matrix based on Genocchi polynomials for solution of delay differential equations. Ain Shams Eng. J. 9, 2123–2128 (2018)

Araci, S.: Novel identities for q-Genocchi numbers and polynomials. J. Funct. Spaces Appl. (2012)

Ozden, H., Simsek, Y., Srivastava, H.: A unified presentation of the generating functions of the generalized Bernoulli, Euler and genocchi polynomials. Comput. Math. Appl. 60, 2779–2787 (2010)

El-Gamel, M., Adel, W., El-Azab, M.: Bernoulli polynomial and the numerical solution of higher-order boundary value problems. Math. Nat. Sci. 4, 45–59 (2019)

Momani, S., Noor, M.: Numerical comparison of methods for solving a special fourth-order boundary value problem. Appl. Math. Comput. 191, 218–224 (2007)

Geng, F.: A new reproducing kernel Hilbert space method for solving nonlinear fourth-order boundary value problems. Appl. Math. Comput. 213, 163–169 (2009)

Ali, A.: Numerical solution of fourth order boundary-value problems using haar wavelets. Appl. Math. Sci 5, 3131–3146 (2011)

Noor, M., Noor, K., Mohyud-Din, S.: Variational iteration method for solving sixth-order boundary value problems. Commun. Nonlinear Sci. Numer. Simul. 14, 2571–2580 (2009)

Inc, M., Evans, D.: An efficient approach to approximate solutions of eighth-order boundary-value problems. Int. J. Comput. Math. 81, 685–692 (2004)

Singh, R., Kumar, J., Nelakanti, G.: Approximate series solution of fourth-order boundary value problems using decomposition method with green’s function. J. Math. Chem. 52, 1099–1118 (2014)

Ullah, I., Khan, H., Rahim, M.: Numerical solutions of higher-order nonlinear boundary value problems by new iterative method. Appl. Math. Sci. 7, 2429–2439 (2013)

Agarwal, P., Attary, M., Maghasedi, M., Kumam, P.: Solving higher-order boundary and initial value problems via chebyshev spectral method: application in elastic foundation. Symmetry 12, 987 (2020)

Naeem, M., Muhammad, S., Hussain, S., Din, Z., Ali, L.: Applying homotopy type techniques to higher-order boundary value problems. J. Math. 51, 127–139 (2019)

Aydinlik S., Kiris A.: A generalized chebyshev finite difference method for higher-order boundary value problems. ArXiv preprint arXiv:1609.04064 (2016)

Acknowledgements

The authors are grateful to the referees for their valuable comments and suggestions on the original manuscript.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

All the authors declare no conflict of interest.

Cite this article

El-Gamel, M., Mohamed, N. & Adel, W. Numerical Study of a Nonlinear High Order Boundary Value Problems Using Genocchi Collocation Technique. Int. J. Appl. Comput. Math 8, 143 (2022). https://doi.org/10.1007/s40819-022-01262-z

DOI: https://doi.org/10.1007/s40819-022-01262-z