Abstract

This paper presents a new approximate method for the fractional Navier–Stokes equation in polar coordinates. The method is based on operational matrices of fractional integration and differentiation, with Legendre scaling functions as the basis. Convergence and error analyses of the proposed method are given, and its numerical stability is demonstrated. Numerical examples show the effectiveness of the method, and the results are compared with existing analytical methods to show its accuracy.


Introduction

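The Navier–Stokes equation (NSE) is the fundamental equation of computational fluid dynamics. It relates the pressure and external forces acting on a fluid to the response of the fluid flow. For an incompressible fluid, the NSE and the continuity equation are

\[
\frac{\partial \underline{u}}{\partial t}+(\underline{u}\cdot \nabla )\underline{u}=-\frac{1}{\rho }\nabla p+\nu \nabla ^{2}\underline{u},\qquad \nabla \cdot \underline{u}=0,
\]

where \(\underline{u}\) is the velocity, \(\nu \) is the kinematic viscosity, p is the pressure, \(\rho \) is the density and t is time.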

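We express the motion in cylindrical polar coordinates \((r,\theta ,z)\), with the z-axis along the axis of the cylinder. Taking \(u_r =u_\theta =0\) and \(u_z =u(r,t)\), the NSE in polar coordinates reduces to

\[
\frac{\partial u}{\partial t}=P+\nu \left( \frac{\partial ^{2}u}{\partial r^{2}}+\frac{1}{r}\frac{\partial u}{\partial r}\right) .
\]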

El-Shahed and Salem [6] and Odibat and Momani [21] generalised the classical NSE by replacing the first-order time derivative with a fractional time derivative of order \(\alpha \), where \(0<\alpha \le 1\). In the present paper we consider the unsteady flow of a viscous fluid in a tube, in which the velocity field depends only on one space coordinate and time. Our aim is to study numerically the following generalised form of the fractional Navier–Stokes equation (FNSE) [6, 21]:

\[
D_t^{\alpha }u=P+\nu \left( \frac{\partial ^{2}u}{\partial r^{2}}+\frac{1}{r}\frac{\partial u}{\partial r}\right) ,\qquad 0<\alpha \le 1, \tag{3}
\]

subject to the initial and boundary conditions:

where \(P=-\frac{\partial p}{\rho \partial z}\) and r is the radial (spatial) variable.

Several analytical methods exist for the fractional Navier–Stokes equation. In [21], Odibat and Momani solved Eq. (3) using the Adomian decomposition method (ADM). Other analytical approaches include the modified Laplace decomposition method [15], the homotopy analysis method [25], the homotopy perturbation method [9] and the homotopy perturbation transform method [16]. In [2], Chaurasia and Kumar used Mittag–Leffler and Bessel functions to obtain an analytical solution of the FNSE in a circular cylinder.

In this paper we use a numerical approach based on operational matrices of fractional integration and differentiation. There is a vast literature on the theory and applications of fractional differential equations in areas such as hydrology [1, 13, 18, 27, 28], physics [7, 8, 12, 19, 23, 32, 35] and finance [10, 24, 26]. For the construction of operational matrices and their application to fractional differential equations, see [14, 17, 31, 33, 34]. In our method we first seek a finite-dimensional approximate solution in a finite-dimensional basis on the r–t plane. This leads to a system of linear algebraic equations in the form of a Sylvester equation, and its solution gives the approximate solution of the FNSE.

In general, no analytical method is available for establishing the existence and uniqueness of solutions of the FNSE, and stability and convergence have not been shown for the methods cited above. In this paper we give a new stable numerical approach for the FNSE, together with error and convergence analyses. Because fractional derivatives are complicated and non-local, it is not practical to look for a strong solution directly. We address this issue by seeking a solution in the sense of association, along the lines developed by Colombeau [3, 4, 22]. This gives a satisfactory notion of solution. Under an additional condition on the approximating sequence, we obtain a strong solution.

The paper is organised as follows. Section 2 describes the basic preliminaries. In Section 3 we construct operational matrices of fractional differentiation and integration using Legendre scaling functions as the basis. Section 4 describes the algorithm for constructing approximate solutions. Section 5 gives the error analysis of the proposed method, and Section 6 establishes its convergence. In Section 7 we discuss the stability of the method in terms of the maximum absolute error and the root mean square error. Section 8 presents numerical experiments and discussion showing the effectiveness of the proposed method.

Preliminaries

There are several definitions of fractional derivatives and integrals, and they are not necessarily equivalent. In this paper, fractional derivatives are taken in the well-known Caputo sense and fractional integrals in the Riemann–Liouville sense [5, 20].
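For reference, the standard definitions assumed here (see [5, 20]) are as follows. Let \(\alpha >0\) and let m be the positive integer with \(m-1<\alpha <m\); for integer \(\alpha =m\) the Caputo derivative is simply \(f^{(m)}\).

\[
I^{\alpha }f(t)=\frac{1}{\Gamma (\alpha )}\int _0^t (t-s)^{\alpha -1}f(s)\,ds,\qquad D^{\alpha }f(t)=I^{m-\alpha }f^{(m)}(t)=\frac{1}{\Gamma (m-\alpha )}\int _0^t (t-s)^{m-\alpha -1}f^{(m)}(s)\,ds.
\]

These definitions give the power rules used when building operational matrices:

\[
I^{\alpha }t^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\beta +1+\alpha )}t^{\beta +\alpha }\ (\beta >-1),\qquad D^{\alpha }t^{k}=0\ (k=0,1,\ldots ,m-1),\qquad D^{\alpha }t^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\beta +1-\alpha )}t^{\beta -\alpha }\ (\beta >m-1).
\]

For \(0<\alpha \le 1\) they also give \(I^{\alpha }D^{\alpha }f(t)=f(t)-f(0)\). This identity lets a Caputo-type equation be rewritten in terms of \(I^{\alpha }\).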

The Legendre scaling functions \(\left\{ {\phi _i (t)} \right\} \) in one dimension are defined by

\[
\phi _i (t)=\sqrt{2i+1}\,P_i (2t-1),\qquad 0\le t\le 1,
\]

where \(P_i (t)\) is the Legendre polynomial of degree i on the interval \([-1,1]\), given explicitly by

\[
P_i (t)=\frac{1}{2^{i}}\sum _{k=0}^{\lfloor i/2\rfloor }(-1)^{k}\frac{(2i-2k)!}{k!\,(i-k)!\,(i-2k)!}\,t^{i-2k}.
\]

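Using the one-dimensional Legendre scaling functions, we construct the two-dimensional Legendre scaling functions \(\phi _{i_1 ,i_2 } \):

\[
\phi _{i_1 ,i_2 } (x,t)=\phi _{i_1 } (x)\,\phi _{i_2 } (t),\qquad i_1 =0,\ldots ,n_1 ,\quad i_2 =0,\ldots ,n_2 .
\]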

An explicit expression for the two-dimensional Legendre scaling functions is

\[
\phi _{i_1 ,i_2 } (x,t)=\sqrt{(2i_1 +1)(2i_2 +1)}\,P_{i_1 } (2x-1)\,P_{i_2 } (2t-1).
\]

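From this formula and the orthogonality of the Legendre polynomials, the two-dimensional Legendre scaling functions are orthonormal:

\[
\int _0^1 \int _0^1 \phi _{i_1 ,i_2 } (x,t)\,\phi _{j_1 ,j_2 } (x,t)\,dx\,dt=\delta _{i_1 j_1 } \delta _{i_2 j_2 } ,
\]

and \(\left( {\phi _{i_1 ,i_2 } } \right) \) form a complete orthonormal basis of \(L^{2}([0,1]\times [0,1])\).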
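The orthonormality can be checked numerically. The sketch below is a minimal illustration under stated assumptions. It assumes \(\phi _i (t)=\sqrt{2i+1}P_i (2t-1)\) on [0, 1] and uses NumPy's Gauss–Legendre rule to build the Gram matrix of the tensor-product basis, then compares that matrix with the identity. The helper names `phi`, `gauss_01` and `gram_2d` are illustrative and are not from the paper.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def phi(i, t):
    """Legendre scaling function phi_i(t) = sqrt(2i+1) * P_i(2t - 1) on [0, 1]."""
    coeffs = np.zeros(i + 1)
    coeffs[i] = 1.0  # selects P_i in the Legendre series
    return np.sqrt(2 * i + 1) * leg.legval(2 * np.asarray(t) - 1, coeffs)


def gauss_01(m):
    """Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1]."""
    x, w = leg.leggauss(m)
    return (x + 1) / 2, w / 2


def gram_2d(n, m=20):
    """Gram matrix of phi_i1(x) phi_i2(t), i1, i2 = 0..n, ordered (0,0), (0,1), ..."""
    t, w = gauss_01(m)  # exact for polynomial integrands of degree <= 2m - 1
    Phi = np.array([phi(i, t) for i in range(n + 1)])  # Phi[i, a] = phi_i(t_a)
    G1 = (Phi * w) @ Phi.T  # 1-D Gram matrix
    # The 2-D basis is a tensor product, so its Gram matrix is a Kronecker product.
    return np.kron(G1, G1)


print(np.allclose(gram_2d(4), np.eye(25)))  # True
```

The Kronecker ordering matches the ordering of the coefficient vector \(C=[c_{0,0},\ldots ,c_{0,n_2},\ldots ]^{T}\) used below.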

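Hence a function \(f(x,t)\in L^{2}([0,1]\times [0,1])\) can be approximated as

\[
f(x,t)\simeq \sum _{i_1 =0}^{n_1 } \sum _{i_2 =0}^{n_2 } c_{i_1 ,i_2 } \,\phi _{i_1 ,i_2 } (x,t), \tag{5}
\]

with coefficient vector \(C=[c_{0,0} ,\ldots ,c_{0,n_2 } ,\ldots ,c_{n_1 ,0} ,\ldots ,c_{n_1 ,n_2 } ]^{T}\).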

The coefficients \(c_{i_1 ,i_2 } \) in the Fourier expansion of f(x, t) are given by

\[
c_{i_1 ,i_2 } =\int _0^1 \int _0^1 f(x,t)\,\phi _{i_1 ,i_2 } (x,t)\,dx\,dt. \tag{6}
\]

In matrix notation, Eq. (5) can be written as

\[
f(x,t)\simeq \phi ^{T}(x)\,C\,\phi (t),
\]

where \(\phi (x)=[\phi _0 (x),\ldots ,\phi _{n_1 } (x)]^{T}\), \(\phi (t)=[\phi _0 (t),\ldots ,\phi _{n_2 } (t)]^{T}\) and \(C=\left( {c_{i_1 ,i_2 } } \right) _{(n_1 +1)\times (n_2 +1)} \).
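The following minimal sketch illustrates Eq. (6) and the matrix form \(\phi ^{T}(x)C\phi (t)\). It assumes \(\phi _i (t)=\sqrt{2i+1}P_i (2t-1)\), computes the coefficient matrix C by tensor-product Gauss quadrature on \([0,1]^2\), and then evaluates the expansion. The test function \(f(x,t)=x^{2}t\) is an arbitrary choice. It lies in the span of the basis for \(n\ge 2\), so it is reproduced up to rounding error. The names `phi_vec`, `coeff_matrix` and `evaluate` are hypothetical helpers.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def phi_vec(n, t):
    """Rows phi_0..phi_n evaluated at the points t; phi_i(t) = sqrt(2i+1) P_i(2t-1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.array([np.sqrt(2 * i + 1) * leg.legval(2 * t - 1, np.eye(n + 1)[i])
                     for i in range(n + 1)])


def coeff_matrix(f, n, m=30):
    """C[i1, i2] = int_0^1 int_0^1 f(x, t) phi_i1(x) phi_i2(t) dx dt via Gauss quadrature."""
    z, w = leg.leggauss(m)
    s, ws = (z + 1) / 2, w / 2            # nodes and weights on [0, 1]
    X, T = np.meshgrid(s, s, indexing="ij")
    F = f(X, T)                           # F[a, b] = f(s_a, s_b)
    Pw = phi_vec(n, s) * ws               # Pw[i, a] = phi_i(s_a) * weight_a
    return Pw @ F @ Pw.T


def evaluate(C, x, t):
    """f_n(x, t) = phi(x)^T C phi(t) at a single point (x, t)."""
    n = C.shape[0] - 1
    return float(phi_vec(n, x)[:, 0] @ C @ phi_vec(n, t)[:, 0])


C = coeff_matrix(lambda x, t: x**2 * t, n=3)
print(round(evaluate(C, 0.3, 0.7), 12))  # 0.063, since x^2 t lies in the span for n >= 2
```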

Operational Matrices

Theorem 3.1

Let \(\phi (x)=[\phi _0 (x),\phi _1 (x),\ldots ,\phi _n (x)]^{T}\) be the Legendre scaling vector and let \(\alpha >0\). Then

\[
I^{\alpha }\phi (x)\simeq I^{(\alpha )}\phi (x),
\]

where \(I^{(\alpha )}=\left( \omega (i,j)\right) \) is the \((n+1)\times (n+1)\) operational matrix of the fractional integral of order \(\alpha \). Its (i, j)th entry is given by

Proof

See [29]. \(\square \)
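The closed-form entries \(\omega (i,j)\) are derived in [29] and are not reproduced here. The sketch below shows one way to obtain such a matrix; the closed form in [29] may be organised differently. It assumes \(\phi _i (x)=\sqrt{2i+1}P_i (2x-1)\) and projects \(I^{\alpha }\phi _i\) onto the basis, so that \(\omega (i,j)=\langle I^{\alpha }\phi _i ,\phi _j \rangle \). It uses the shifted-Legendre monomial expansion, the Riemann–Liouville power rule, and exact moments. As a check, for \(\alpha =1\) the first row must reproduce \(x=\frac{1}{2}\phi _0 +\frac{1}{2\sqrt{3}}\phi _1\). The helpers `shifted_legendre_coeffs` and `integration_matrix` are hypothetical.

```python
import math
import numpy as np


def shifted_legendre_coeffs(i):
    """Monomial coefficients a[k] with P_i(2x - 1) = sum_k a[k] x^k, k = 0..i."""
    return [(-1) ** (i + k) * math.factorial(i + k)
            / (math.factorial(i - k) * math.factorial(k) ** 2) for k in range(i + 1)]


def integration_matrix(n, alpha):
    """(n+1)x(n+1) matrix W with I^alpha phi(x) ~= W phi(x), W[i, j] = <I^alpha phi_i, phi_j>.

    Uses I^alpha x^k = Gamma(k+1)/Gamma(k+1+alpha) x^(k+alpha) and the exact
    moment int_0^1 x^(k+alpha+l) dx = 1/(k+l+alpha+1).
    """
    a = [shifted_legendre_coeffs(i) for i in range(n + 1)]
    W = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for j in range(n + 1):
            s = 0.0
            for k, aik in enumerate(a[i]):
                g = math.gamma(k + 1) / math.gamma(k + 1 + alpha)
                for l, ajl in enumerate(a[j]):
                    s += aik * ajl * g / (k + l + alpha + 1)
            W[i, j] = math.sqrt((2 * i + 1) * (2 * j + 1)) * s
    return W


W1 = integration_matrix(3, 1.0)
print(W1[0, :2])  # approximately [0.5, 0.288675]: I^1 phi_0 = x = phi_0/2 + phi_1/(2 sqrt 3)
```

The last row is only approximate, because \(I^{\alpha }\phi _n\) has a component outside the span of \(\phi _0 ,\ldots ,\phi _n\). The same truncation occurs in any operational matrix of this size.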

Theorem 3.2

Let \(\phi (x)=[\phi _0 (x),\phi _1 (x),\ldots ,\phi _n (x)]^{T}\) be the Legendre scaling vector and let \(\beta >0\). Then

\[
D^{\beta }\phi (x)\simeq D^{(\beta )}\phi (x),
\]

where \(D^{(\beta )}=\left( \eta (i,j)\right) \) is the \((n+1)\times (n+1)\) operational matrix of the Caputo fractional derivative of order \(\beta \). Its (i, j)th entry is given by

Proof

See [30]. \(\square \)
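The following is an analogous projection-based sketch for the Caputo derivative. It is not necessarily the closed form of [30]. It again assumes \(\phi _i (x)=\sqrt{2i+1}P_i (2x-1)\). It uses the Caputo rule: \(D^{\beta }x^{k}=0\) for integer \(k<\lceil \beta \rceil \), and \(D^{\beta }x^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\beta )}x^{k-\beta }\) otherwise. For integer orders the matrix is exact, because derivatives of \(\phi _i\) stay in the span. In particular \(D^{(2)}=D^{(1)}D^{(1)}\), which is how \(D_r^{(2)}\) can be obtained from \(D_r^{(1)}\). For non-integer \(\beta \) the result is a projection and hence approximate. The helper names are hypothetical.

```python
import math
import numpy as np


def shifted_legendre_coeffs(i):
    """Monomial coefficients a[k] with P_i(2x - 1) = sum_k a[k] x^k, k = 0..i."""
    return [(-1) ** (i + k) * math.factorial(i + k)
            / (math.factorial(i - k) * math.factorial(k) ** 2) for k in range(i + 1)]


def caputo_matrix(n, beta):
    """(n+1)x(n+1) matrix D with D^beta phi(x) ~= D phi(x), D[i, j] = <D^beta phi_i, phi_j>.

    Caputo rule: D^beta x^k = 0 for integer k < ceil(beta), otherwise
    Gamma(k+1)/Gamma(k+1-beta) x^(k-beta); moments int_0^1 x^(k-beta+l) dx are exact.
    """
    m = math.ceil(beta)
    a = [shifted_legendre_coeffs(i) for i in range(n + 1)]
    D = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for j in range(n + 1):
            s = 0.0
            for k, aik in enumerate(a[i]):
                if k < m:
                    continue  # the Caputo derivative of a low-degree monomial vanishes
                g = math.gamma(k + 1) / math.gamma(k + 1 - beta)
                for l, ajl in enumerate(a[j]):
                    s += aik * ajl * g / (k - beta + l + 1)
            D[i, j] = math.sqrt((2 * i + 1) * (2 * j + 1)) * s
    return D


D1 = caputo_matrix(3, 1)  # exact for integer order
D2 = caputo_matrix(3, 2)
print(np.allclose(D2, D1 @ D1))  # True
```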

Method of Solution

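In this section we take \(n_1 =n_2 =n\) in all approximations and describe the algorithm for constructing an approximate solution of Eq. (3). If \(D_t^\alpha u=w\), then, since \(0<\alpha \le 1\),

\[
u(r,t)=u(r,0)+I_t^{\alpha }w(r,t), \tag{10}
\]

and substituting Eq. (10) into Eq. (3) gives

\[
w=P+\nu \left( \frac{\partial ^{2}}{\partial r^{2}}+\frac{1}{r}\frac{\partial }{\partial r}\right) \left( u(r,0)+I_t^{\alpha }w\right) . \tag{11}
\]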

Eqs. (3) and (11) are equivalent. Equation (11) can be written as

Let

From Eqs. (12) and (13) we can write,

We approximate

\[
w(r,t)\simeq \phi ^{T}(r)\,C\,\phi (t), \tag{15}
\]

where C is an \((n+1)\times (n+1)\) matrix to be determined.

Using Eq. (15) and operational matrices for the operators \(D_r^2 I_t^\alpha \) and \(D_r^1 I_t^\alpha \) in Eq. (14), we obtain,

where \(I_t^{(\alpha )}\) is the operational matrix of fractional integration of order \(\alpha \), and \(D_r^{(1)}\) and \(D_r^{(2)}\) are the operational matrices of differentiation of orders 1 and 2, respectively.

Further, we use the following approximations:

where the matrices A, B and E are known and can be calculated using Eq. (6).

Now Eq. (20) can be written as

\[
LC+CM+N=0, \tag{21}
\]

where \(L=\nu (D_r^{(2)} )^{T}+E^{-1}(D_r^{(1)} )^{T}\), \(M=-\left( I_t^{(\alpha )} \right) ^{-1}\) and \(N=E^{-1}(A+B)\left( I_t^{(\alpha )} \right) ^{-1}\).

Equation (21) is a Sylvester equation. It can be solved for the matrix C with standard methods, for example the Bartels–Stewart algorithm.

Substituting C into Eq. (15) gives w. Substituting w into Eq. (10) then gives an approximate solution of the time-fractional Navier–Stokes equation.
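Any standard Sylvester solver can handle Eq. (21). SciPy, for instance, provides `scipy.linalg.solve_sylvester(a, b, q)` for \(aX+Xb=q\), which here would be called with \(q=-N\). The dependency-free sketch below takes a different route. It vectorises the equation with the identity \(\mathrm{vec}(LC+CM)=(I\otimes L+M^{T}\otimes I)\,\mathrm{vec}(C)\), which is adequate for the small n used in operational-matrix methods. The matrices L, M and N below are random stand-ins, not the matrices of the paper. A unique solution exists when L and \(-M\) share no eigenvalue.

```python
import numpy as np


def solve_sylvester_kron(L, M, N):
    """Solve L C + C M + N = 0 for C by vectorisation (column-major vec)."""
    p, q = L.shape[0], M.shape[0]
    # vec(L C) = (I_q kron L) vec(C) and vec(C M) = (M^T kron I_p) vec(C).
    K = np.kron(np.eye(q), L) + np.kron(M.T, np.eye(p))
    c = np.linalg.solve(K, -N.reshape(-1, order="F"))
    return c.reshape((p, q), order="F")


rng = np.random.default_rng(0)
n = 4
# Random stand-ins; shifting L and M by 5I keeps the spectra of L and -M apart.
L = rng.standard_normal((n + 1, n + 1)) + 5 * np.eye(n + 1)
M = rng.standard_normal((n + 1, n + 1)) + 5 * np.eye(n + 1)
N = rng.standard_normal((n + 1, n + 1))

C = solve_sylvester_kron(L, M, N)
print(np.linalg.norm(L @ C + C @ M + N))  # of the order of machine precision
```

With C in hand, Eq. (15) gives \(w(r,t)\simeq \phi ^{T}(r)C\phi (t)\) at any point. The Kronecker system has \((n+1)^{2}\) unknowns, so a Bartels–Stewart solver is preferable for larger n.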

Error Analysis

Theorem 5.1

Let \(\frac{\partial ^{\alpha }f(x,t)}{\partial t^{\alpha }}\in L^{2}([0,1]\times [0,1])\), and let \(\frac{\partial ^{\alpha }f_n (x,t)}{\partial t^{\alpha }}\) be its approximation obtained using the \((n+1)^{2}\) two-dimensional Legendre scaling functions. Assuming \(\left| {\frac{\partial ^{\alpha +4}f(x,t)}{\partial x^{2}\partial t^{\alpha +2}}} \right| \le K\), we have the following upper bound for the error:

where,

and \(F_n (z)\) is the polygamma function, defined by

Proof

Let \(\frac{\partial ^{\alpha }f(x,t)}{\partial t^{\alpha }}=\sum _{i_1 =0}^\infty \sum _{i_2 =0}^\infty c_{i_1 ,i_2 } \phi _{i_1 ,i_2 } (x,t)\). Truncating the series at level n, we get \(\frac{\partial ^{\alpha }f_n (x,t)}{\partial t^{\alpha }}=\sum _{i_1 =0}^n \sum _{i_2 =0}^n c_{i_1 ,i_2 } \phi _{i_1 ,i_2 } (x,t)\), and thus

Following a procedure similar to that in [11] and using the assumed bound on the derivative, we get

\(\square \)
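Theorem 5.1 bounds the truncation error of the two-dimensional expansion. The sketch below does not reproduce that bound. It only illustrates the qualitative behaviour for the arbitrary smooth test function \(f(x,t)=e^{x}\sin t\), which is an assumption made for illustration: the \(L^{2}\) error of the truncated Legendre-scaling expansion decreases as n grows. The error is measured with a fine Gauss rule, and `truncation_errors` is a hypothetical helper.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def truncation_errors(f, n_values, m=40):
    """Discrete L2 error of the degree-(n, n) Legendre-scaling projection of f on [0,1]^2."""
    z, w = leg.leggauss(m)
    s, ws = (z + 1) / 2, w / 2
    X, T = np.meshgrid(s, s, indexing="ij")
    F = f(X, T)
    W = np.outer(ws, ws)  # tensor-product quadrature weights
    errors = []
    for n in n_values:
        # P[i, a] = phi_i(s_a) = sqrt(2i+1) P_i(2 s_a - 1)
        P = np.array([np.sqrt(2 * i + 1) * leg.legval(2 * s - 1, np.eye(n + 1)[i])
                      for i in range(n + 1)])
        C = (P * ws) @ F @ (P * ws).T   # coefficients as in Eq. (6)
        Fn = P.T @ C @ P                # phi(x)^T C phi(t) on the grid
        errors.append(float(np.sqrt(np.sum(W * (F - Fn) ** 2))))
    return errors


errs = truncation_errors(lambda x, t: np.exp(x) * np.sin(t), range(1, 7))
for n, e in zip(range(1, 7), errs):
    print(n, f"{e:.2e}")
```

Because the subspaces are nested, the projection error cannot increase with n. For smooth data it falls off rapidly.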

Convergence Analysis

Definition 6.1

A sequence of functions \(u_n\) is said to be a solution in the sense of association of \(L(u)=f\), \(u(0,x)=u_0 (x)\), where L is a linear fractional differential operator involving fractional derivatives and integrals with respect to x and t, if \(\lim _{n\rightarrow \infty } \left\langle L(u_n )-f_n ,\phi \right\rangle =0\) for every \(C^{\infty }\) function \(\phi \) with compact support in \((0,1)\times (0,1)\).

Theorem 6.1

If the constructed approximations \(\{w_n \}\), where \(w_n (r,t)=\phi ^{T}(r)C\phi (t)\), satisfy \(\left\| \bar{L}(w_n )-h_n -G_n \right\| _{L^{2}} <K\) for all n, then \(w_n\) is a solution in the sense of association. Here \(\bar{L}(w)=rw-\nu \left( rD_r^2 (I_t^\alpha (w))+D_r^1 (I_t^\alpha (w))\right) \).

Proof

Observe that \(w_n (r,t)=\phi ^{T}(r)C\phi (t)\) satisfies

Let \(V_n =\mathrm{span}\{\phi _i (r)\phi _j (t): i,j=0,1,\ldots ,n\}\).

Then by linearity,

Since \(\left( {\phi _{i_1 ,i_2 } } \right) \) form a complete orthonormal basis of the Hilbert space \(L^{2}\left( {[0,1]\times [0,1]} \right) \), there exists \(\psi _n \in V_n\) such that

Using our assumption,

in Eq. (28), we can write

Now using Eq. (28), in (31), we get

From Eqs. (29), (30) and (32), it follows that \(\lim _{n\rightarrow \infty } \left\langle \bar{L}(w_n )-h_n -G_n ,\psi (r,t)\right\rangle =0\).

So \(w_n \) is a solution in the sense of association. \(\square \)

Corollary 6.1

In addition to the hypotheses of Theorem 6.1, if \(\bar{L}(w_n )\) converges to \(\bar{L}(w)\) in the \(L^{2}\) norm, then w is a strong solution.

Proof

By Theorem 6.1, \(w_n \) is a solution in the sense of association, so

\(\Rightarrow \bar{L}(w)=0\). Hence w is a strong solution. \(\square \)

Numerical Stability

The accuracy of the proposed method is assessed by computing the absolute error and the average deviation \(\sigma \), also known as the root mean square (RMS) error. They are calculated using the following equations

and

where \(u_e (r_i ,t_j )\) is the exact value of the output function at the point \((r_i ,t_j )\), and \(u_a (r_i ,t_j )\) is the approximate value at the same point.
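The two error measures can be computed as in the minimal sketch below. It assumes that \(\sigma \) is the square root of the mean squared error over all grid points \((r_i ,t_j )\); the paper's exact normalisation is not reproduced here. The approximate solution in the usage lines is a hypothetical perturbation of the Example 2 profile \(u=r^{2}+4t\), used only to exercise the functions.

```python
import numpy as np


def max_abs_error(u_exact, u_approx):
    """E_1: maximum of |u_e(r_i, t_j) - u_a(r_i, t_j)| over all grid points."""
    return float(np.max(np.abs(np.asarray(u_exact) - np.asarray(u_approx))))


def rms_error(u_exact, u_approx):
    """E_2 (sigma): square root of the mean squared error over all grid points (assumed normalisation)."""
    diff = np.asarray(u_exact) - np.asarray(u_approx)
    return float(np.sqrt(np.mean(diff ** 2)))


r = np.linspace(0, 1, 11)
t = np.linspace(0, 1, 11)
R, T = np.meshgrid(r, t, indexing="ij")
u_e = R**2 + 4 * T
u_a = u_e + 1e-3 * np.sin(np.pi * R) * T  # hypothetical "approximate" solution
print(max_abs_error(u_e, u_a), rms_error(u_e, u_a))
```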

From now on, we regard h(r) as the input function. We demonstrate the stability of the proposed method by adding a random noise term to the input function.

In all examples, the exact input function and the input function with noise are denoted by h(r) and \(h^{\delta }(r)\), respectively. Here \(h^{\delta }(r)\) is obtained by adding noise of level \(\delta \) to h(r), so that \(h^{\delta }(r_i )=h(r_i )+\delta \theta _i \), with \(r_i =ih\), \(i=1,2,\ldots ,N\), \(Nh=1\), and \(\theta _i \) a uniform random variable with values in \([-1,1]\) such that

The reconstructed output functions \(u_a^\delta (r,t)\) (with noise level \(\delta \)) and \(u_a^0 (r,t)\) (without noise) are obtained with and without the noise term in the input function h(r), respectively. Using Eqs. (10) and (15), they are given by

where \(C^{\delta }\) and C are known matrices obtained from the following equations:

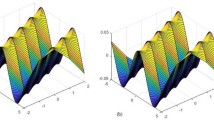

Figures 2–5 (Example 1): approximate solution for \(\alpha =0.5\) (Fig. 2) and \(\alpha =1\) (Fig. 3); absolute error for \(\alpha =1\) (Fig. 4) and \(\alpha =0.9\) (Fig. 5).

\(LC^{\delta }+C^{\delta }M+N^{\delta }=0\) and \(LC+CM+N=0\), where L, M and N are the same as in Eq. (21), and

we approximate \(h^{\delta }(r)\) as

Let

then H(r, t) reflects the noise reduction capability of the method. Its values at various points and its graph are shown in Table 1 and Fig. 1.
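The noise model \(h^{\delta }(r_i )=h(r_i )+\delta \theta _i\) can be reproduced as in the sketch below. The paper uses h both for the input function and for the mesh width; the code renames the mesh width to `step` to avoid the clash. The input function \(h(r)=1-r^{2}\) and the value of \(\delta \) are hypothetical choices for illustration. The paper's specific choice of \(\delta \) in terms of \(\sigma \) is not reproduced. The helper `noisy_input` is also hypothetical.

```python
import numpy as np


def noisy_input(h, N, delta, seed=0):
    """Sample h at r_i = i*step (i = 1..N, N*step = 1) and add uniform noise delta*theta_i."""
    step = 1.0 / N                       # the paper's mesh width h, renamed to avoid a clash with h(r)
    r = step * np.arange(1, N + 1)
    theta = np.random.default_rng(seed).uniform(-1.0, 1.0, size=N)  # theta_i ~ U[-1, 1]
    clean = h(r)
    return r, clean, clean + delta * theta


# Hypothetical input function, chosen only for illustration.
r, h_exact, h_noisy = noisy_input(lambda r: 1 - r**2, N=20, delta=1e-2)
print(np.max(np.abs(h_noisy - h_exact)))  # never exceeds delta
```

The noisy samples would then be projected onto the basis, as for \(h^{\delta }(r)\) above, to form \(N^{\delta }\).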

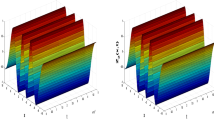

Figures 6–8 (Example 1): behaviour of the solution for different values of \(\alpha \) at \(t=1\) (Fig. 6) and at \(r=1\) (Fig. 7); difference of absolute errors with and without noise (Fig. 8).

Table 1 lists the noise reduction H(r, t) at various points.

In Section 8, two examples are solved with and without noise to illustrate the stability of the proposed method. In both examples we add the noise \(\delta =\sigma _{(N+1)^{2}}\) for two values of N, namely \(N=10\) and \(N=20\). For each N we compute the maximum absolute error \(E_1\) and the root mean square error \(E_2\) for the input function without noise. The corresponding errors for the input function with noise are denoted \(E_1^{*}\) and \(E_2^{*}\). Table 3 lists \(E_1 ,E_2 ,E_1^*\) and \(E_2^{*}\) for \(N=10,20\). The table shows that adding noise to the input function changes the errors only slightly, which indicates that the method is stable.

Figures 8 and 14 plot the difference of absolute errors with and without noise for Examples 1 and 2, respectively. This difference is very small, which again indicates that the method is stable.

Numerical Results and Discussion

Example 1

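Consider the following time-fractional Navier–Stokes equation [9, 15, 16, 21, 25]:

\[
D_t^{\alpha }u=P+\frac{\partial ^{2}u}{\partial r^{2}}+\frac{1}{r}\frac{\partial u}{\partial r}, \tag{42}
\]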

with initial-boundary conditions \(u(r,0)=1-r^{2}, \)

and exact solution \(u(r,t)=1-r^{2}+(P-4)\frac{t^{\alpha }}{\Gamma (\alpha +1)}\). For simplicity we take \(P=1\). Figures 2 and 3 show the approximate solution of Eq. (42) for \(\alpha =0.5\) and \(\alpha =1\), respectively. Figures 4 and 5 show the absolute error for Eq. (42) for \(\alpha =1\) and \(\alpha =0.9\), respectively.
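The closed-form solution of this test problem can be checked directly. We assume that Eq. (42) has the form \(D_t^{\alpha }u=P+u_{rr}+u_r /r\) (that is, \(\nu =1\)) and that the solution is \(u=1-r^{2}+(P-4)t^{\alpha }/\Gamma (\alpha +1)\). The Caputo rules \(D_t^{\alpha }t^{\alpha }=\Gamma (\alpha +1)\) and \(D_t^{\alpha }c=0\) then give

\[
D_t^{\alpha }u=\frac{P-4}{\Gamma (\alpha +1)}\,D_t^{\alpha }t^{\alpha }=P-4,\qquad P+\frac{\partial ^{2}u}{\partial r^{2}}+\frac{1}{r}\frac{\partial u}{\partial r}=P-2+\frac{1}{r}(-2r)=P-4.
\]

Both sides agree, and \(u(r,0)=1-r^{2}\) matches the initial condition. With \(P=1\) the solution is \(u(r,t)=1-r^{2}-3t^{\alpha }/\Gamma (\alpha +1)\), which reduces to \(1-r^{2}-3t\) for \(\alpha =1\).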


Figures 6 and 7 show the behaviour of the approximate solution of Eq. (42) for \(\alpha =0.7,0.8,0.9\) and 1, at fixed \(t=1\) and fixed \(r=1\), respectively. They show that the solution varies continuously with \(\alpha \) and approaches the solution of the integer-order NSE monotonically as \(\alpha \rightarrow 1\).

In Table 2, we compare the results obtained by our numerical algorithm with those of existing analytical methods [9, 16, 25].

Example 2

Consider the following time-fractional Navier–Stokes equation:

with initial-boundary conditions \(u(r,0)=r^{2}\), \(u(0,t)=4t\), \(u(1,t)=1+4t\), and exact solution \(u(r,t)=r^{2}+4t\) for \(\alpha =1\).

Figures 9 and 10 show the approximate solution of Eq. (43) for \(\alpha =0.5\) and \(\alpha =1\), respectively. Figure 11 shows the absolute error for Eq. (43) for \(\alpha =1\).

Figures 9–11 (Example 2): approximate solution for \(\alpha =0.5\) (Fig. 9) and \(\alpha =1\) (Fig. 10); absolute error for \(\alpha =1\) (Fig. 11).

Figures 12 and 13 show the behaviour of the approximate solution of Eq. (43) for \(\alpha =0.7,0.8,0.9\) and 1, at fixed \(t=1\) and fixed \(r=1\), respectively. They show that the solution varies continuously with \(\alpha \) and approaches the solution of the integer-order NSE monotonically as \(\alpha \rightarrow 1\).

Figures 12–14 (Example 2): behaviour of the solution for different values of \(\alpha \) at \(t=1\) (Fig. 12) and at \(r=1\) (Fig. 13); difference of absolute errors with and without noise (Fig. 14).

In Table 3, we list the errors \(E_1 ,E_2 ,E_1^*\) and \(E_2^{*}\) to show the stability of our method.

Conclusions and Future Work

The approximate solutions are obtained by solving a system of algebraic equations, which makes the method convenient for computation. Few numerical methods exist for the FNSE, and none of them establishes stability and convergence. Our method is stable with respect to the data, and its accuracy remains good even at high noise levels. Convergence and error analyses are also given. The numerical examples show that the solution varies continuously with \(\alpha \), and for \(\alpha =1\) the solution of the standard NSE is recovered. In future work, other orthonormal polynomials could be used to achieve better accuracy.

References

Baeumer, B., Meerschaert, M.M., Benson, D.A., Wheatcraft, S.W.: Subordinate advection–dispersion equation for contaminant transport. Water Resour. Res. 37, 1543–1550 (2001)

Chaurasia, V.B.L., Kumar, D.: Solution of the time fractional Navier–Stokes equation. Gen. Math. Notes 4(2), 49–59 (2011)

Colombeau, J.F.: New Generalized Functions and Multiplication of Distributions. North Holland, Amsterdam (1984)

Colombeau, J.F.: New generalized functions and multiplication of distributions: a graduate course, application to theoretical and numerical solutions of partial differential equations. (Lyon) (1993)

Diethelm, K., Ford, N.J., Freed, A.D., Luchko, Y.: Algorithms for fractional calculus: a selection of numerical methods. Comput. Methods. Appl. Mech. Eng. 194, 743–773 (2005)

El-Shahed, M., Salem, A.: On the generalised Navier–Stokes equations. Appl. Math. Comput. 156(1), 287–293 (2005)

Fu, Z.-J., Chen, W., Yang, H.-T.: Boundary particle method for Laplace transformed time fractional diffusion equations. J. Comput. Phys. 235(15), 52–66 (2013)

Fu, Z.-J., Chen, W., Ling, L.: Method of approximate particular solutions for constant- and variable-order fractional diffusion models. Eng. Anal. Bound. Elem. 57, 37–46 (2015)

Ganji, Z.Z., Ganji, D.D., Ganji, A.D., Rostamian, M.: Analytical solution of time-fractional Navier–Stokes equation in polar coordinate by homotopy perturbation method (2008). doi:10.1002/num.20420

Gorenflo, R., Mainardi, F., Scalas, E., Raberto, M.: Fractional calculus and continuous-time finance III: the diffusion limit. In: Kohlmann M., Tang S. (eds.) Mathematical Finance. Trends in Mathematics, pp. 171–180. Birkhäuser, Basel (2001)

Heydari, M.H., Hooshmandasl, M.R., Maalek Ghaini, F.M., Fereidouni, F.: Two-dimensional Legendre wavelets for solving fractional Poisson equation with Dirichlet boundary conditions. Eng. Anal. Bound. Elem. 37, 1331–1338 (2013)

Hilfer, R.: Application of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Huang, F., Liu, F.: The fundamental solution of the space–time fractional advection–dispersion equation. J. Appl. Math. Comput. 18, 339–350 (2005)

Kazem, S., Abbasbandy, S., Kumar, S.: Fractional order Legendre functions for solving fractional-order differential equations. Appl. Math. Model. 37, 5498–5510 (2013)

Kumar, S., Kumar, D., Abbasbandy, S., Rashidi, M.M.: Analytical solution of fractional Navier–Stokes equation by using modified Laplace decomposition method. Ain Shams Eng. J. 5, 569–574 (2014)

Kumar, D., Singh, J., Kumar, S.: A fractional model of Navier–Stokes equation arising in unsteady flow of a viscous fluid. J. Assoc. Arab Univ. Basic Appl. Sci. 17, 14–19 (2015)

Lakestani, M., Dehghan, M., Pakchin, S.I.: The construction of operational matrix of fractional derivatives using B-spline functions. Commun. Nonlinear Sci. Numer. Simul. 17, 1149–1162 (2012)

Lin, Y., Jiang, W.: Approximate solution of the fractional advection–dispersion equation. Comput. Phys. Commun. 181, 557–561 (2010)

Meerschaert, M., Benson, D.A., Scheffler, H.P., Baeumer, B.: Stochastic solution of space–time fractional diffusion equation. Phys. Rev. E 65, 1103–1106 (2002)

Miller, K., Ross, B.: An Introduction to Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Momani, S., Odibat, Z.: Analytical solution of a time-fractional Navier–Stokes equation by Adomian decomposition method. Appl. Math. Comput. 177, 488–494 (2006)

Oberguggenberger, M.: Multiplication of Distributions and Applications to PDEs. Pitman Research Notes in Mathematics, 259. Longman, Harlow (1992)

Pang, G., Chen, W., Fu, Z.: Space-fractional advection–dispersion equations by the Kansa method. J. Comput. Phys. 293, 280–296 (2015)

Raberto, M., Scalas, E., Mainardi, F.: Waiting-times and returns in high frequency financial data: an empirical study. Phys. A 314, 749–755 (2002)

Ragab, A.A., Hemida, K.M., Mohamed, M.S., Abd El Salam, M.A.: Solution of time-fractional Navier–Stokes equation by using homotopy analysis method. Gen. Math. Notes 13, 13–21 (2012)

Sabatelli, L., Keating, S., Dudley, J., Richmond, P.: Waiting time distributions in financial markets. Eur. Phys. J. B 27, 273–275 (2002)

Schumer, R., Benson, D.A., Meerschaert, M.M., Baeumer, B., Wheatcraft, S.W.: Eulerian derivation of the fractional-dispersion equation. J. Contam. Hydrol. 48, 69–88 (2001)

Schumer, R., Benson, D.A., Meerschaert, M.M., Baeumer, B.: Multi scaling fractional advection–dispersion equation and their solutions. Water Resour. Res. 39, 1022–1032 (2003)

Singh, H.: A new numerical algorithm for fractional model of Bloch equation in nuclear magnetic resonance. Alex. Eng. J. (2016). doi:10.1016/j.aej.2016.06.032

Singh, C., Singh, H., Singh, V.K., Singh, O.P.: Fractional order operational matrix methods for fractional singular integro-differential equation. Appl. Math. Model. (2016). doi:10.1016/j.apm.2016.08.011

Wang, L., Ma, Y., Meng, Z.: Haar wavelet method for solving fractional partial differential equations numerically. Appl. Math. Comput. 227, 66–76 (2014)

West, B., Bologna, M., Grigolini, P.: Physics of Fractal Operators. Springer, New York (2003)

Wu, J.L.: A wavelet operational method for solving fractional partial differential equations numerically. Appl. Math. Comput. 214, 31–40 (2009)

Yousefi, S.A., Behroozifar, M., Dehghan, M.: The operational matrices of Bernstein polynomials for solving the parabolic equation subject to the specification of the mass. J. Comput. Appl. Math. 235, 5272–5283 (2011)

Zaslavsky, G.: Hamiltonian Chaos and Fractional Dynamics. Oxford University Press, Oxford (2005)

Acknowledgements

The author is very grateful to the referees for their constructive comments and suggestions for the improvement of the paper.


Cite this article

Singh, H. A New Stable Algorithm for Fractional Navier–Stokes Equation in Polar Coordinate. Int. J. Appl. Comput. Math 3, 3705–3722 (2017). https://doi.org/10.1007/s40819-017-0323-7
