Abstract

Atanassov intuitionistic fuzzy set (AIFS)-based C-means algorithms are successful in clustering uncertain or vague real-world datasets. The AIFS-based clustering algorithms are classified into adaptive class and non-adaptive class. An algorithm from the adaptive class computes its feature weight distribution with the help of the given dataset. On the other side, the algorithm belonging to the non-adaptive class mostly computes the feature weight distribution by employing an equally likely approach. The guarantee to reach up to the mark clustering performance is missing within this approach. Simultaneously, the performance gets deteriorated if the datasets showcase noises/irrelevant features. The irrelevant features in the datasets add to the computational cost. So, a feature reduction-equipped clustering algorithm called uni-weighted intuitionistic fuzzy C-means (uW-IFCM) is introduced in the paper. Moreover, the probabilistic weights-based adaptive clustering algorithm, namely bi-weighted probabilistic intuitionistic fuzzy C-means (bW-PIFCM) is proposed under the AIFS environment. The parametric analysis for uW-IFCM is provided to comprehend and compare its performance with bW-PIFCM, PIFCM, IFCM, and FCM algorithms. Here, an intuitionistic data fuzzification technique transforms the real-valued dataset into AIFS dataset, therefore bW-PIFCM and uW-IFCM algorithms cluster the real-valued datasets. The research proposal of Yang and Nataliani in [IEEE Transactions on Fuzzy Systems, 26(2), 817–835] motivates us to introduce a feature reduction-equipped uW-IFCM algorithm. We have considered synthetic datasets and some UCI machine learning datasets for the experimental study of uW-IFCM and bW-PIFCM. The efficacy and the precision of proposed algorithms are tested in terms of some popular benchmark indexes as well.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the paper, we explore multi-dimensional/multi-featured datasets with the help of clustering. Some of the well-known procedures of clustering are subspace clustering (see [1, 2]), density-based clustering [3], fuzzy clustering [4], and hierarchical clustering [5]. Fuzzy C-means (FCM) [6] is an example of a fuzzy partitional clustering technique, and its functioning has been widely observed over multiple types of real-world multi-dimensional datasets. The variants of FCM obtained in the purview of other fuzzy sets are known as intuitionistic fuzzy C-means (IFCM) [7], interval type-2 fuzzy C-means [8, 9], rough fuzzy C-means [10, 11], and hesitant fuzzy k-means [12]. The FCM algorithm uses Euclidean distance measure. Researchers exploited measures like Mahalanobis distance in place of the Euclidean distance measure to obtain clusters that are not spherical (see [13]). Further such distance-based variants of FCM are found less sensitive towards noises and outliers (see [14]). The weighted fuzzy C-mean clustering algorithms are successful in satisfactory clustering of the multi-dimensional/multi-featured datasets (see [15,16,17,18]). The weighted FCM assigns an appropriate role to each feature in a multi-featured dataset during its clustering. It involves a feature weight learning technique that decides the role of each feature (see [19,20,21,22,23]). A feature weight learning technique is proposed in [20] based on the gradient descent procedure. For an efficient clustering of the multi-dimensional datasets, an entropy-based weight learning technique is proposed by the [21]. Some of the recent studies on feature learning are [24, 25, 17, 26,27,28,29], and [30].

The AIFS proposed by [31] is an effective tool to model the ambiguity and uncertainty present within real-world phenomena. The ambiguity and uncertainty present in the multi-dimensional datasets motivate us to cluster them under the AIFS environment using the hesitancy component of the AIFS. An AIFS-based clustering algorithm efficiently solves the problems of various fields discussed by [32,33,34,35,36,37,38]. [34] proposes a clustering technique for image representations, whereas medical image segmentation is done by an entropy-based technique (see [32]). [33] extends the clustering technique of [32] to cope with the issues of noise/outliers by selecting neighborhood pixels from different regions of the magnetic resonance imaging for its tuning. The natural extension of the FCM under the AIFS environment is the IFCM algorithm [7], which is further studied by [38]. It is improvised for the noisy medical image segmentation while using the rough set theory (see [39]).

During the clustering of a multi-dimensional/featured dataset, FCM and IFCM assign equal importance to each dimension/feature. Moreover, the derivation of FCM/IFCM or their variants involves calculus-based optimization. It is observed that many of these variants are using weighted Euclidean distance measure. Here, we categorize these variants on the basis of an equally likely approach as follows: (1) In the IFCM variants (see [32,33,34, 40, 36, 37]), an equally likely approach is used, that is, all the components of Euclidean distance are assigned same weight. (2) There are some variants (see [41, 39]) in which unequal weights are allocated to the components of Euclidean distance in contrast to the equally likely approach. Moreover, the variants of the FCM employing an equally likely approach traverse their distance along a circular path, whereas the IFCM counterparts compute distances along spherical paths. A single weighing exponent is sufficient to control those distance measures which track along a circular or spherical/hyper-spherical path. Now, if variants of FCM/IFCM do not employ an equally likely approach, then elliptical/ellipsoidal path-based distances are measured. To monitor an ellipsoidal path, three independent weighing exponents are exploited, here, the same path is monitored using a weighing exponent containing two variables. We show that the improvisation of the clustering algorithm depends on the computation of an accurate feature weight distribution. The clustering algorithms discussed in the paper are either uni-parametric, bi-parametric, tri-parametric, or quad-parametric (see Table 2). Let us discuss the major contributions of the paper:

-

An equally likely approach often fails in the dealing of a multi-dimensional/featured dataset because all features of the dataset are not equally relevant. To address this problem, we have proposed two clustering techniques: (1) a singly weighted data-driven algorithm called uni-weighted intuitionistic fuzzy C-means (uW-IFCM), (2) two variables-based weight triplets introduce bi-weighted probabilistic intuitionistic fuzzy C-means algorithm (bW-PIFCM) (see Sect. 2.2 and 2.3).

-

The initialization of uW-IFCM and bW-PIFCM algorithms requires a transformation of the real-valued multi-dimensional/featured dataset to an AIFS dataset. For this, we have utilized two parameters \((\alpha ~\text{ and }~\beta )\)-based novel data fuzzification technique (see Sect. 3).

-

In uW-IFCM algorithm, the weighing exponent \(\eta\) and fuzzy factor m are used as parameters. We further tune the feature weights using the exponent \(\eta\), which gives better feature weight distribution for uW-IFCM in comparison to bW-PIFCM. A study about the optimal choices of \(\eta\) and m at which uW-IFCM delivers its best clustering has been carried out in Sect. 4. Here, we perform both experimental analysis and convergence analysis of the uW-IFCM and bW-PIFCM algorithms.

-

The uW-IFCM detects irrelevant features in a multi-dimensional/featured dataset during its clustering. We have shown that uW-IFCM is a mechanized feature reduction technique (see Sect. 5.1), whereas an automatized feature reduction technique called FRT-equipped uW-IFCM algorithm is also proposed for nullification of irrelevant features (see Sect. 5.2).

The remaining paper is organized into five sections. In Section 2, we provide a mathematical solution for a fuzzy clustering problem. Section 3 discusses the procedural detailing of proposed uW-IFCM and bW-PIFCM algorithms. In Sect. 4, an experimental analysis of synthetic and some UCI machine learning datasets is carried out. We provide two uW-IFCM-based feature reduction techniques in Sect. 5. Finally, the conclusion is stated in Sect. 6.

2 Some Important Mathematical Results for C-Means Clustering Algorithms

We have divided this section into three subsections.

2.1 Description of Clustering Problem I

Intuitionistic fuzzy C-means (IFCM) is a well-known clustering algorithm that uses Euclidean distance measure to cluster the datasets.

Clustering problem I: Let \(\{\hat{x}_1, \hat{x}_2, \dots ,\) \(\hat{x}_P \}\) be a set of multi-valued AIFS data items. Here, the dimension of each data item is D, that is, \(\hat{x}_i = (\tilde{x}_{id})_{d=1}^{D}\), and \(\tilde{x}_{id} = (\mu _{id},\nu _{id},\pi _{id})\). We have to group \(\{\hat{x}_1, \hat{x}_2, \dots ,\) \(\hat{x}_P \}\) into C clusters. Let \(V = \{\hat{v}_1, \hat{v}_2, \dots , \hat{v}_C: \hat{v}_l = (\bar{\mu }_{ld},\bar{\nu }_{ld},\bar{\pi }_{ld})_{d=1}^{D}, 1 \le l \le C\}\) be a set of initial cluster centroids.

IFCM-based solution procedure: The criterion function \(J_m\) is defined as

such that

The membership value \(u_{il}\) of ith data item in lth cluster and the centroid \((\mu _{\bar{v}_{ld}},\nu _{\bar{v}_{ld}},\pi _{\bar{v}_{ld}})_{d=1}^{D}\) are updated using Eqs. (4) and (5).

In IFCM algorithm, all the features of a multi-dimensional/ featured dataset are equally emphasized during clustering. The IFCM transforms into weighted-IFCM, if \(D_1^2(\tilde{x}_i,\tilde{v}_l)\) is replaced with \(D_w^2(\tilde{x}_i,\tilde{v}_l)=\sum _{d=1}^{D}w_{d}((\mu _{id}-\bar{\mu }_{ld})^2 + (\nu _{id}-\bar{\nu }_{ld})^2 + (\pi _{id}-\bar{\pi }_{ld})^2)\). In weighted-IFCM, we randomly select its weights (i.e., \(w_{d},1\le d\le D\)) such that \(\sum _{d=1}^{D}w_{d}=1\).

2.2 A Proposal of Novel Probabilistic Intuitionistic Fuzzy C-Means Clustering Algorithm

Let us solve the Clustering problem I probabilistically. So, we propose bi-Weighted Probabilistic Intuitionistic Fuzzy C-Means algorithm (bW-PIFCM), and this variant of PIFCM uses two variable-based weight triplets. The proposed algorithm computes data-driven feature weights such that it results to an optimal feature weight distribution at the convergence. It means that the proposed algorithm is adaptive in nature. The weights portray the importance of features in a multi-dimensional/featured dataset. Here, a membership matrix \(M = {(u_{il})_{P \times C}}\) of order \({P \times C}\) is obtained. The mathematical results which correspond to bW-PIFCM are given in the form of four theorems.

Theorem 2.1

Let S be a collection that contains AIFS. If \(I_{1}=[p',p'']\) and \(I_{2}=[q',q'']\) be the weight intervals assigned to membership and non-membership components of an AIFS, which is an element of S. The weight interval I assigned to the hesitancy component of the AIFS is

Proof

Let \(A=(\mu ,\nu ,\pi )\) be an AIFS belonging to S. As,

Therefore, from (9) and (10), we get,

\(\square\)

Theorem 2.2

Let an AIFS, say \(A_1\) belonging to S has been assigned weight intervals \(I_{1}=[p',p'']\), \(I_{2}=[q',q'']\) , and I to their membership, non-membership, and hesitancy components, respectively. Here, \(I_{1}=[p',p'']\), \(I_{2}=[q',q'']\) are independent of \(A_1\), but the interval I depends on \(A_1\). Let \(d:S\times S\rightarrow \mathbb R\) be a mapping, such that,

The mean of the intervals \(I_{1},I_{2}, I_{3}=I\cap I^{'}\) gives the coefficients \(p_{12},q_{12}\), \(r_{12}\), respectively. Here, the weight interval associated with the hesitancy component of \(A_2\) is \(I^{'}\). The mapping d is a distance measure.

Proof

We show that d satisfies all the properties of distance measure:

1. It is trivial to see \(0\le d(A_1,A_2)\le 1\), where \(A_1\) and \(A_2\) are any two AIFS of S.

2. Let, \(d(A_1,A_2)=0\)

Equation (12) implies

3. It can be easily shown that \(d(A_1,A_2)=d(A_2,A_1).\)

4. To establish this condition, it is equivalent to prove the property of transitivity (see [42, 43] and [41]). To do so, let \(A_1, A_2, A_3\) be the three AIFSs. Now,

Using Minkowski’s inequality, we get

\(~~~~~~~~~~~~~~~\Rightarrow d(A_1,A_3)\le d(A_1,A_2)+d(A_2,A_3)\)

Thus, \(d(A_1,A_2)\) is a distance measure. \(\square\)

Theorem 2.3

Let \(\theta _1:\mathbb {M}_{si}\times \mathbb {V}_{sd}\rightarrow \mathbb {R}\) be a mapping, such that \(\theta _1(U,V) = J_{m}({U},{V})\), where cluster matrix, \(V\in \mathbb {V}_{sd}\) and membership matrix, \(U\in \mathbb {M}_{si}\). Then \(U^*\) is a strict local minima if \(U^*\) is calculated from Eq. (23). The coefficients \(p_{12}, q_{12}, r_{12}\) are known (see Theorem 2.2).

Proof

The criterion function and constraint are given as

such that

The Lagrangian G(U, V) is constructed based on the criterion function and constraint as follows:

In Eq. (17), we have used Lagrange’s multipliers \(\lambda _i,~1 \le i \le P\). Here, the fuzzy factor \(m \in (0,1) \cup (1,\infty )\) operates over the elements of the membership matrix, U. The element \(u_{li} \in [0,1]\) denotes the membership grade of the ith element of the universe of discourse within the lth cluster. It is a minimization problem, so for its solution, we differentiate Eq. (17) with respect to Lagrange multipliers \(\lambda _i\), and set them equal to zero as follows:

Similarly, derivatives of the Lagrangian condition (17) are set equal to zero with respect to membership parameter \(u_{si}\), where \(1\le i\le P\),\(1 \le s \le C\) as

Solving Eq. (19), we get

To compute the iterative formula for \(u_{si}\), we combine Eqs. (18) and (20) to yield

Therefore, with the division of Eq. (20) by Eq. (22), the iterative formula for membership value \(u_{si}\) is obtained as follows:

\(\square\)

Theorem 2.4

The optimal minima \(U^*\) is obtained at a point \(V=V^*\).

Proof

We define the Lagrangian G(U, V) as

To find the cluster center matrix V, we equate derivatives of the Lagrangian (see Eq. (24)) equal to zero with respect to cluster center \(\bar{\mu }_{sd},\bar{\nu }_{sd},\bar{\pi }_{sd}\), where \(1 \le s \le C, 1 \le d \le D\). It yields,

Solving Eq. (25), we get

Similarly, we obtain the following solutions from Eqs. (26) and (27), respectively,

Here, eqns. (29), (30), and (31) derive the point \(V=V^*\). \(\square\)

2.3 A Proposal of Novel Intuitionistic Fuzzy C-Means Clustering Algorithm

This section establishes some mathematical results to propose the uni-weighted intuitionistic fuzzy C-means clustering (uW-IFCM) algorithm. A feature weight matrix (namely, \(W = (w_{d})_{1 \times D}\))-dependent distance measure \({D}_2^2(\hat{x_i},\hat{v_l})\) is used here. We have

The set containing C cluster centroids against dth feature is \(V = \{{v}_1, {v}_2, \dots , {v}_C; {v}_l = (\bar{\mu }_{ld},\bar{\nu }_{ld},\bar{\pi }_{ld}), 1 \le l \le C\}\). Here, the theorems derive membership matrix U, cluster centroids \((\bar{\mu }_{ld},\bar{\nu }_{ld},\bar{\pi }_{ld})\) , and weight matrix W.

Theorem 2.5

Let \(\theta _1:\mathbb {M}_{li}\rightarrow \mathbb {R}\) be a mapping, such that \(\theta _1(U) = J({U},{\textbf {V}},{\textbf {W}})\), where cluster matrix \({\textbf {V}}\in \mathbb {V}_{ld}\) and weight matrix \({\textbf {W}}\in \mathbb {W}_{ld}\) are fixed. Then \(U^*\) is a strict local minima if \(U^*\) is calculated from Eq. (41).

Proof

As \({{\textbf {V}}}\) and \({{\textbf {W}}}\) are kept fixed, they are marked bold. Let us take cluster center matrix as \({\textbf {V}}=[{\textbf {v}}_1,{\textbf {v}}_2,...,{\textbf {v}}_C]^T\), where \({\textbf {v}}_{l}=(\bar{\mu }_{lj},\bar{\nu }_{lj},\bar{\pi }_{lj})_{j=1}^{D}\). The weight matrix \({\textbf {W}}\) is of order \({1\times D}\), and \(\sum _{d=1}^{D}w_{d}=1,~\text{ for } \text{ all }~1 \le i \le P\). Here, \(m,\eta \in (0,1) \cup (1,\infty )\). Now, we define the criterion function:

the following constraint is used for defining Lagrangian:

Equations (33) and (34) define the Lagrangian:

The derivatives of the Lagrangian condition (35) are set equal to zero with respect to Lagrange’s multipliers \(\lambda _i\):

Similarly, derivatives of the Lagrangian condition (35) are set equal to zero with respect to membership parameter \(u_{si}\), where \(1\le i\le P\),\(1 \le s \le C\). So,

Solving Eq. (37), we get

Equations (36) and (38) together yield:

Dividing Eq. (38) by Eq. (40) results in an iterative formula for membership value \(u_{si}\) as follows:

\(\square\)

Theorem 2.6

Let \(\theta _2:\mathbb {W}_{D}\rightarrow \mathbb {R}\) be a mapping such that \(\theta _2(W) = J({\textbf {U}},{\textbf {V}},W)\), where membership matrix \({\textbf {U}}\in \mathbb {U}_{li}\) and cluster matrix \({\textbf {V}}\in \mathbb {V}_{ld}\) remain constant. Then \(W^*\) is a strict local minima if Eq. (50) calculates \(W^*\).

Proof

The membership matrix \({\textbf {U}}\) and cluster center matrix \({\textbf {V}}\) are kept constant and we mark them bold. The Lagrangian \(G({\textbf {U}},{\textbf {V}},W)\) is defined using a weight matrix \(W = (w_{d}^\eta )_{1 \times D}\) as follows:

The derivatives of the Lagrangian (see Eq. (42)) with respect to Lagrange multiplier \(\lambda _l\) are set equal to zero. Hence,

Similarly, the derivatives of the Lagrangian (see Eq. (42)) with respect to weight parameter \(w_{a}\), \(1 \le a \le D\) is set equal to zero. Hence,

We simplify Eq. (44) and it derives feature weight \(w_{a}\) as follows:

For \(\eta \ne 1\):

Equations (46) and (47) are solved, so we have

Let us divide Eq. (47) by Eq. (49), and it results in the iterative formula for weight \(w_{a}\) as follows:

\(\square\)

Corollary 2.1

Equations. (55), (56), and (57) give the iterative formula for cluster center matrix V.

For proof, we set derivatives of the Lagrangian (see Eq. (42)) with respect to cluster center \(\bar{\mu }_{sa},\bar{\nu }_{sa},\bar{\pi }_{sa}\), where \(1 \le s \le C, 1 \le a \le D\) equal to zero is as follows:

From Eq. (51), we have

Similarly, from Eqns. (52) and (53), we get

\(\square\)

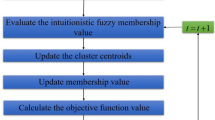

3 Procedural Details of uW-IFCM and bW-PIFCM

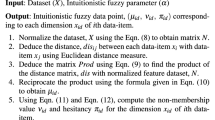

The implementation of the uW-IFCM algorithm involves seven steps. In the first step, intuitionistic data fuzzification technique transforms the given dataset into an AIFS dataset. Here, the functions \(\mu _{id}\), \(\nu _{id}\), and \(\pi _{id}\) calculate membership, non-membership, and hesitancy values, respectively. The optimized iterative values of membership matrix U, centroid matrix V, weight matrix W, and convergence criteria are determined from step 2 to step 7. A brief procedure to implement uW-IFCM and bW-PIFCM has been given in Algorithm III and Algorithm IV.

Step 1. Intuitionistic data fuzzification technique: Let us consider a real-valued multi-dimensional/featured dataset \(T=\{ x^{'}_{1}, x^{'}_{2}, \dots , x^{'}_{P} \}\), where \(x^{'}_{i}=(x_{id})_{d=1}^{D}\) and \(x_{id}\in R\) for all \(1\le d\le D\). The bi-parametric formula (a and b are the parameters) normalizes T as follows:

The symbols \(x^i_{\underset{d}{\min }}\) and \(x^i_{\underset{d}{\max }}\) denote the minima and maxima of the set \((x_{id})_{d=1}^{D}\). Here, \(a = 0\) and \(b = 1\).

1. Membership function, \(\mu _{id}\): Equation. (58) transforms T into a normalized data matrix N. The norm function of MATLAB computes Euclidean distance between each pair \((x^{'}_{i},x^{'}_{j})\), and its resultant is a distance matrix \([dis_{ij}]\). Let us multiply N and \([dis_{ij}]\), and then we obtain the matrix \([P_{id}]\). The feature weight allocation to \(x_{id}\) is done with a factor \(M_{id}\) computed as follows:

We have calculated \(\sum _{i=1}^{P}\sum _{d=1}^{D} (x_{id}+M_{id})\), and it results to a new matrix called \(normalized\text{- }(x_{id}+M_{id})\) \(=\frac{x_{id}+M_{id}}{\sum _{i=1}^{n}\sum _{d=1}^{D} (x_{id}+M_{id})}\). Now, a data-centric membership function is computed as follows:

The tuning parameter \(\beta \in (0,10)\) tunes the membership values as per the requirement of the dataset T.

2. Non-membership function, \(\nu _{id}\): The generalized intuitionistic fuzzy generator given by [40] derives the non-membership function \(\nu _{id}\) as

The domain of the tuning parameter \(\alpha\) is (0, 1].

3. Hesitancy function, \(\pi _{id}\): The mathematical formulation of the hesitancy function is given as follows:

Equations. (60), (61), and (62) together transform \({x}_{id}\) into an AIFS \(\tilde{x}_{id} = (\mu _{id},\nu _{id},\pi _{id})\). This novel data fuzzification technique is implemented with the help of Algorithm I.

Now, we discuss the outlines of Algorithm II:

Step 2. Initialization of centroid matrix V: To initialize uW-IFCM, and bW-PIFCM algorithms, it is necessary to fix the prior number of cluster centroids. We randomly select initial cluster centroids, \(\hat{v}_l\), \(1 \le l \le C\) with the help of rand function of MATLAB.

Step 3. Initialization of weight matrix, W: The feature information provided in the multi-dimensional dataset is used to compute initial feature weights in uW-IFCM (see Eqn 50), whereas bW-PIFCM algorithm uses data-driven weight triplets for its initialization (see Theorem 2.2).

Step 4. Computation of membership matrix, U: The membership matrix U contains the membership value of each data point in every cluster. The higher the membership value, the more the associativity of the data points in the particular cluster. Here, the matrix \(U = [u_{kl}]\) is of order \(P\times C\), where P is the number of data points and C is the number of clusters. Eq. (41) and Eq. (23) provide mathematical formulas with which we calculate the membership matrix corresponding to uW-IFCM and bW-PIFCM algorithms, respectively.

Step 5. Updation of cluster centroid matrix, V: We have arbitrarily selected C number of initial cluster centroids \((\hat{v}_l=(\mu _{ld},\nu _{ld},\pi _{ld})_{d=1}^{D}, 1\le l\le C)\) in step 2. The same weightage has been given to each dimension d of \(\hat{s}_l\) (lth cluster centroid). The uW-IFCM uses Eqns. (55-57) to update V, whereas Eqns. (29-31) update the cluster centroids of bW-PIFCM. In some algorithms, we see an explicit role of hesitancy during the updation of centroid matrix [32]).

Step 6. Updation of weight matrix, W: To update W, the updated membership matrix U and the updated cluster centroids V of uW-IFCM are utilized. We observe that after each iteration, there is an increase in the weights of relevant features and a decrease in the weights of irrelevant features or noisy features. The data-specific appropriate selection of the exponent \(\eta\) and fuzzy factor m delivers an optimal feature weight distribution. The formula (see Eq. (50)) works for the weight matrix updation. In the case of bW-PIFCM, we update the probability weight intervals \(I_1,I_2\) and I with the help of Algorithm III.

Step 7. Convergence criterion: The convergence of the uW-IFCM and bW-PIFCM algorithms are decided with an error margin \(\epsilon\) equal to \(10^{-6}\). The convergence is met for uW-IFCM if the termination criterion \({\sum \limits _{d=1}^{D}} \frac{{d_2}({W}_d(t),{W}_d(t+1))}{D} < \epsilon\) is reached else the algorithm repeats themselves from Step 4. The termination criterion used for bW-PIFCM is \({\sum \limits _{l=1}^{C}} \frac{{d_2}({V}_l(t),{V}_l(t+1))}{C} < \epsilon\) till the convergence is reached.

4 An Experimental Study of Synthetic and UCI Machine Learning Datasets

This section is divided into four subsections as follows:

4.1 Study of Synthetic Dataset I Using IFCM and Weighted-IFCM

Description of Synthetic Dataset I: For the experimentation, we use a Gaussian distribution function-based five-dimensional Synthetic Dataset I of sample size 300 (see [44]). Here, we denote the mean and the standard deviation with \(\theta\) and \(\sigma\), respectively. The mean value of the jth cluster along lth dimension (feature) is given as \(\theta _{jl}\) with standard deviation \(\sigma _{jl}\) and distribution \(\sum _{j=1}^{3}\sum _{l=1}^{5}N(\theta _{jl},\sigma _{jl})\). The Synthetic Dataset I consists of three well-separated clusters and each cluster has 100 data items (see Fig. 1). In Table 3, the mean values and standard deviations, which we use for the three clusters are provided.

Claim: The features relevant to clustering are \(d_1, d_2, d_3\) , whereas \(d_4, d_5\) are irrelevant features in the given dataset.

Strategy: This weighted-IFCM-based data analysis is bifurcated on the basis of the evaluation process of feature weight distribution as follows: (1) Firstly, we arbitrarily use four fixed feature weight distributions \(W_i = \{w_{ik}\}_{k=1}^5\), \(1 \le i \le 4\) (see Table 4). Here, \(w_{ik}\) is assigned to feature \(d_k\), where \(1 \le k \le 5\). (2) Secondly, we use an equally likely approach to calculate the feature weight distributions (see Table 5).

Experimental analysis:

Case 1. The collections \(W_1,W_2\), \(W_3\), and \(W_4\) contain weights that are assigned to features \(d_1, d_2, d_3, d_4\) and \(d_5\) (see Table 4). The benchmark measuring indexes validating the clustering performance of weighted-IFCM are clustering accuracy CA and rand index \(R_I\) (for their details see Sect. 4.4). Using a random set of initial cluster centroids and the two parameters m, \(\alpha\) (see Table 4), we initialize the weighted-IFCM algorithm. Here, 30 values of m are selected from interval [1, 4], and 20 values of \(\alpha\) are selected out of the interval (0, 1) which results in 600 pairs of \((m,\alpha )\) for the algorithm. The use of \(W_1\) in the weighted-IFCM yields better clustering in comparison to \(W_2,W_3,W_4\), in this case, CA and \(R_I\) are obtained as 0.9933 and 0.9911, respectively (see Table 4). In \(W_1\), the emphasis is given to features \(d_1,d_2,d_3\) , whereas very less importance is assigned to \(d_4\) and \(d_5\). It is observed that a considerable weightage is given to \(d_4\) or \(d_5\) in \(W_2,W_3,W_4\), so the weighted-IFCM does not perform well under these distributions (see Table 4). We further explore the role of features \(d_4\) and \(d_5\) in Case 2.

Case 2. The collection computed using an equally likely approach is \(W_5\) = (1/5,1/5,1/5,1/5,1/5). We nullify features one by one, then the weight distributions obtained by applying this approach over four feature-based sets, three feature-based sets, two feature-based set, and single feature-based set are \(W_6\) = (1/4,1/4,1/4,1/4,0), \(W_7\) = (1/3,1/3,1/3,0,0), \(W_8\) = (1/2,1/2,0,0,0), and \(W_9\) = (1,0,0,0,0), respectively (see Table 5). The algorithm delivers its worst clustering performance at \(W_5\) (CA = 0.3333 and RI = 0.3311), and delivers its best clustering performance at \(W_7\) (CA = 0.9966 and RI = 0.9911). We have discarded features \(d_4\) and \(d_5\) in \(W_7\). Both cases verify that \(d_4\) and \(d_5\) are irrelevant features. Please refer to Table 4 and Table 5 for all the results obtained using weighted-IFCM.

Through the experiment, we observe a marginal gap between the performance of weighted-IFCM with random allocation of weights and relevancy-based weights.

4.2 Study of Synthetic Dataset I Using bW-PIFCM

The weight distributions used in weighted-IFCM algorithm are randomly selected. In this algorithm, each feature is allocated a single weight, whereas the algorithms like PIFCM (see [41]) employ a weight triplet to cluster the multi-dimensional dataset. Here, we analyze Synthetic Dataset I with the bi-weighted variant of PIFCM called bW-PIFCM. The bW-PIFCM algorithm converges after seven iterations (see Fig. 3d). The bW-PIFCM performs well over synthetic datasets (see Table 6). It verifies that algorithm is adaptive in nature. Here, none of the features were neglected. Therefore, it cannot be used as a feature reduction technique.

4.3 Study of Synthetic Dataset I Using uW-IFCM

The research work of [21] motivates us to propose uW-IFCM clustering algorithm. The mathematical study of convergence of uW-IFCM becomes a trivial exercise due to the results established by [21]. So, the focus of the paper is on the experimental study of uW-IFCM algorithm. Our study on Synthetic Dataset I shows that uW-IFCM and IFCM take five and ten iterations, respectively, to converge (see Fig. 3a, b). The criterion value of uW-IFCM differs from that of wIFCM (see Table 2) by a large margin (3c). In the figure, the number of iterations is kept along the horizontal axis, whereas the criterion function values are placed on the vertical axis.

Role of weighting exponent, \(\eta\): The weight \(w_d\) is iteratively calculated in the proposed uW-IFCM algorithm (see Eq. (50) in Theorem 2.6). Then, the algorithm assigns a weight \(w_d^\eta\) to the feature \(\hat{x}_{id}\), where \(1\le d\le D\). Here, the feature obtaining the minimum sum of cluster distances is allocated a larger weight in comparison to other features. This weight assignment approach involves a weighing exponent \(\eta\), so it distinguishes between relevant and irrelevant features. The weight \(w_d^\eta\) helps in the selection of relevant features. The set of positive real numbers (\(\eta > 0\)) is the domain of weighing exponent \(\eta\).

a Depicting five random initial weights for the five features of Synthetic Dataset I. b The five final weights coinciding to same values when \(\eta =2\). c Five initial weights using the equally likely approach for Synthetic Dataset I (d) Graphical representation of variation in the distribution of final weights for Synthetic Dataset I with varying weighing exponent \(\eta\) in uW-IFCM

We pictorially describe and analyze each feature of Synthetic Dataset I independently in Fig. 1. In Sect. 4.1, we have already discussed about relevant features \(d_1, d_2\), \(d_3\), and irrelevant/noisy features \(d_4\), \(d_5\). The inseparable clusters are obtained in the presence of noisy features \(d_4\) and \(d_5\) (see Fig. 1). Here, we interrelate an appropriate selection of \(\eta\) and fuzzy factor m with good clustering. Let us study the domain of \(\eta\) in two parts, \(\eta \in (0,1)\) and \(\eta >1\). Total of eight values of \(\eta\) are used for the experimentation. It is reasonable to select the domain equal to [1, 4] for m (see [45]). The experimental analysis is done in two parts:

1. A case study showing impact of weighing exponent \(\eta\) on feature weight distribution:

We implement uW-IFCM algorithm over Synthetic Dataset I for the study. The clustering depends on parameters \(\eta\) and m (see Fig. 2). Here, uW-IFCM has initialized under the consideration that all features are equally likely. If \(\eta = 0.1\), then \(w_d^\eta\) allocates a weight distribution to the first three features that are very different to the distribution assigned to the last two features, provided m is suitably chosen. If \(m<1.8\), then \(w_{d}^{0.1}\) assigns high weightages to \(d_4\) and \(d_5\), that is, \(w_{d_4}^{0.1},w_{d_5}^{0.1}>>0.2\). Moreover, \(w_{d}^{0.1}\) assigns weightages to the features \(d_1, d_2\), \(d_3\) of value lesser than 0.2 (see Fig. 2a). Now, if \(m \ge 1.8\), then \(w_d^\eta\) assigns higher weights to relevant features \(d_1, d_2\) and \(d_3\) in comparison to noisy features \(d_4\) and \(d_5\). Here, we do not observe many changes in the weight distribution on changing \(\eta\), where \(\eta \in \{0.5,0.7,0.9\}\). From this discussion, we conclude that \(m \ge 1.8\) and \(0<\eta <1\) is the domain of uW-IFCM algorithm.

In Table 7, the initial centroids and five randomly generated weight distributions initializing the algorithm are given. We have \(\eta \in \{0.1, 0.3, 0.5, 0.7, 0.9, 1.5, 2.0, 2.5, 5.0, 8.0, 10\}\), now the best performance of the algorithm is adjudged after experimenting upon all the values of \(\eta\). Let \(\eta = 1.1\) and \(m<1.8\). In this domain, \(w_d^\eta\) assigns high weights to \(d_1, d_2\), \(d_3\) in comparison to noisy features \(d_4\) and \(d_5\), here a good weight distribution operationalizes uW-IFCM. We vary \(\eta\) over the set \(\{1.5,2,2.5\}\) while fixing \(m<1.8\), the distributions obtained slightly differ with the distribution at \(\eta = 1.1\). If \(m \ge 1.8\) and \(\eta >1\), then noisy features \(d_4\) and \(d_5\) are assigned high weights due to virtue of \(w_d^\eta\). If \(\eta >2\), then the second feature \(d_2\) is assigned a very high weightage in comparison to the remaining features. Here, corresponding to each \(\eta\), we select an optimal m and the algorithm results in efficient clustering using the optimal \((\eta ,m)\) (see Table 8). It is concluded that for \(\eta <1\), we have \(m\ge 1.8\), and \(\eta >1\) implies \(m<1.8\). The complete performance details of the uW-IFCM are provided in Table 7. Here, the two benchmark indexes CA and rand index \(R_I\) verify the performance of the uW-IFCM algorithm. The large deviations in the relevant feature weights and irrelevant feature weights are observed at \(\eta =2\). So, there are bright chances that the algorithm delivers an efficient performance at \(\eta =2\), and this case is analyzed separately in detail.

2. A case study showing connection between the weighing exponent \(\eta =2\) and optimal feature weight distribution:

Here, the role of \(\eta =2\) in obtaining efficient clustering is discussed. We randomly select five initial cluster centroids (see Table 7) and five initial weight distributions (see \(w_o\) rows in Table 9) for the experimentation. Here, all the \(w_o\) rows are initializing uW-IFCM algorithm one by one, still, the output remains the same because in all the five instances, the point of convergence is not changing (see Fig. 7b). Let us deal with the Synthetic Dataset I using the equally likely approach in uW-IFCM. The multiple convergence patterns are observed for feature weights on varying \(\eta\), where \(\eta \in \{0.3, 1.5, 2.0, 8.0,10\}\) (see Fig. 7d). In Table 10, the performance of \(\eta = 2\) based uW-IFCM is compared with the IFCM and weighted-IFCM. An optimal feature weight distribution appears at \(\eta =2\), and we see very different distributions at \(\eta = 0.5\) and \(\eta = 5\) (see Fig. 6a–d). In the case of \(\eta =5\), a high feature weight is allocated to \(d_1\) in comparison to \(d_2\) and \(d_3\) (see Fig. 6a). In Fig. 6b, the weight allocated to feature \(d_2\) is very high, whereas \(d_2\) and \(d_3\) are assigned very low weights. In other words, the algorithm singles out feature \(d_2\) for the clustering at \(\eta = 5\). An optimal weight distribution is obtained using \(\eta =2\), therefore \(w_d^2\) delivers the best clustering accuracy and rand index value also.

4.4 A Comparative Analysis of the Proposed Algorithms with some C-Means Algorithms Over UCI Machine Learning Datasets

Here, we explore the functioning of proposed uW-IFCM and bW-PIFCM algorithms on some real-valued UCI machine learning datasets. In addition, the clustering performance of uW-IFCM is compared with bW-PIFCM, PIFCM, IFCM, and FCM clustering algorithms.

1. UCI Machine Learning Datasets

The seven machine learning datasets, namely, IRIS, thyroid, Bupa, zoo, heart, WDBC, and Ecoli are considered for experimentation (see [47]). Out of these seven datasets, five are disease-based datasets concerning to thyroid, liver, heart, breast, and cancer. IRIS is a plant dataset and zoo belongs to the category of the animal dataset. The description of the datasets is provided in Table 15. MATLAB version 8.1 running on a PC with 3.40 GHz frequency and RAM 16 GB is used for the computational tasks.

2. Some popular benchmark measuring indexes:

Here, the five benchmark measuring indexes, namely, clustering accuracy (CA), rand index (\(R_I\)), partition coefficient (PC), partition index (PI), and Dunn index (DI) are used to compare the performances of the algorithms. Now, we define the indexes as follows:

(1) Clustering accuracy (CA) [48]: It is the ratio of the number of correctly classified elements \(p_c\) and a total number of elements p, that is, \(CA=\frac{p_c}{p}\). It is a popular index that works over labeled datasets.

(2) Rand index (\(R_I\)) [44]: Rand index is also used for labeled datasets. Let \(\mathbb {C}\) be the set of clusters being provided in the original dataset. The cluster set resulting after the clustering of the dataset is \(\mathbb {C'}\). The elements in \(\mathbb {C}\times \mathbb {C'}\) are categorized as follows: (1) SD, (2) DS, (3) SS, (4) DD (here S stands for same cluster and D stands for different cluster). Mathematically, we have,

, where \(t_1\) = number of SS, \(t_2\) = number of DS, \(t_3\) = number of SD and \(t_4\) = number of DD with \(R = a+b+c+d\).

(3) Partition coefficient (PC) [49]: It is the mean of the summation of the square of membership value of ith element in jth cluster, \(1\le j\le C\). Mathematically, \(PC=\frac{1}{P}\sum _{i=1}^{P}\sum _{j=1}^{C}u_{ij}^2\), where P is the number of elements in the dataset. It informs about the trade-off between the clusters. If PC limits to unity, then the number of elements appearing in common clusters is very few. Therefore, higher the value of \(PC\in [\frac{1}{C},1]\) (where C is the number of clusters) results in better clustering performance.

(4) Partition index (SC) [50]: The ratio between the compactness of a cluster and its separation from other clusters calculates the partition index. Mathematically,

A lower value of SC means better clustering.

(5) Dunn index (DI) [51]: It is a well-established hard cluster validity index. Dunn defines the clusters to be “compact, separate (CS)” relative to the metric iff the following condition is satisfied: for all p, q, r, where \(p \ne q\), any pair of elements \(u, v \in A_r\) are closer together (with respect to metric), then any pair of elements \(x \in A_p\) and \(y \in A_q\). Let \(A_1, A_2,\dots , A_C\) be C-partition of A and let U be the partition matrix. Dunn index is defined as follows:

3. Experimental analysis of UCI machine learning datasets: In Table 17, we record three types of values for CA, \(R_I\), PC, SC, DI, namely, worst, average, and best. The circular/spherical path-based algorithms, such as uW-IFCM, wIFCM, IFCM, wFCM, FCM, and elliptic/ellipsoidal path-based data-driven bW-PIFCM, PIFCM algorithms are implemented over the seven datasets (see Table 11-Table 14). In IFCM and FCM algorithms, the equally likely approach computes the weight distributions. The weight distributions in fixed-weighted data-driven algorithms, namely wIFCM and wFCM are calculated using Eq. (50). The uW-IFCM algorithm further tunes the weight distribution of wIFCM with the help of its weighing exponent \(\eta\). Here, the values that frequently repeat themselves during 100 random initializations of the algorithms are termed as average values. The results of Table 17 are used for the following experimental analysis:

-

(1)

The best CA obtained by uW-IFCM differs from its worst with a minimal value of 0.033 over the IRIS dataset, whereas the maximal deviation equal to 0.118 is observed on the zoo dataset. Hence, we find a CA-deviation interval [0.033, 0.118] for uW-IFCM. The CA-deviation intervals [0.032, 0.212], [0.007, 0.393], [0.033, 0.196], [0.031, 0.253] are obtained using wIFCM, IFCM, FCM, and wFCM, respectively. Since, in IFCM, the underlined requirements of the problem and weights are not correlated, therefore IFCM has the largest deviation interval. In uW-IFCM, \(\eta\) acts as a controlling parameter, so it deduces highly stable weight distributions (in other words, the deviation interval is least).

-

(2)

In a similar fashion, the \(R_I\)-deviation intervals [0.007, 0.124], [0.002, 0.195], [0.008, 0.166], [0.004, 0.322], and [0.005, 0.317] are obtained using uW-IFCM, wIFCM, IFCM, FCM, and wFCM, respectively. Since, in FCM also the weights and underlined requirement of the problem are not correlated, therefore FCM yields the largest deviation interval. The uW-IFCM uses a controlling parameter \(\eta\)-based weight distribution, and hence highly stable weights are obtained (in other words, the deviation interval is least). For all the algorithms, we have shown the patterns of \(R_I\)-deviations in Fig. 8.

-

(3)

Similar type of analysis can be done for the PC, SC, and DI indexes.

-

(4)

Here, every algorithm shows its best and worst performances on IRIS and Bupa datasets, respectively.

-

(5)

PIFCM is an adaptive algorithm (see [41]), and its clustering is improvised by using data-driven bW-PIFCM. Further, bW-PIFCM yields clustering better than wIFCM, IFCM, wFCM, FCM algorithms except for uW-IFCM. Hence, bW-PIFCM is also an adaptive algorithm.

-

(6)

The worst, average, and best column of every benchmark measuring indexes clearly indicate that uW-IFCM outperforms bW-PIFCM, PIFCM, wIFCM, IFCM, wFCM, and FCM algorithms (see Table 17).

-

(7)

The wFCM and wIFCM are data-driven non-adaptive fixed-weighted algorithms. These fixed weights are derived by Eq. (50). The weighing parameter tunes the weight provided in Eq. (50) during the implementation of uW-IFCM.

5 Feature Reduction Technique

We carry out this study in two sections. In the first section, the uW-IFCM algorithm is introduced as a mechanized feature reduction technique. The next section proposes automatized feature reduction technique called FRT-equipped uW-IFCM algorithm. Here, we try to establish a connection between computational cost and feature reduction.

5.1 A discussion of uW-IFCM clustering algorithm as a feature reduction technique:

Here, we analyze IRIS dataset deeply with the help of the uW-IFCM algorithm. The dataset consists of four features \(\{d_1, d_2, d_3, d_4\}\), namely, sepal length, sepal width, petal length, and petal width, respectively. The 150 instances present in the dataset are divided into three classes: setosa, virginica, versicolor, and each class contains 50 instances. We use twenty feature weight distributions to initialize the algorithm. Here, the first ten feature weight distributions are calculated using the equally likely approach, whereas the next ten feature weight distributions are randomly generated with the help of \(\texttt {rand}\) function of MATLAB (see Table 19). The initial cluster centroids given in Sect. 4.3 are used here also. The importance of weighing exponent \(\eta = 2\) in uW-IFCM is highlighted in Sect. 4.4. Let us use \(\eta = 2\), \(m = 1.7\), and equally likely approach-based initial weights (see rows 1–10 of Table 19) to initialize the uW-IFCM, the algorithm assigns higher weights to the third feature and fourth feature in comparison to other two features. Further, a good clustering accuracy \(CA=0.9867\), rand index \(RI=0.9875\), \(PC=0.9205\) are obtained with \(SC=0.00007\) and \(DI=0.05123\). These values validate that initial weight distribution converges to optimal feature weight distribution. Now, we initialize the uW-IFCM algorithm using initial weight distribution (0.3119, 0.2371, 0.3183, 0.1327), \(\eta = 2\), and \(m = 1.7\), its result is an optimal weight distribution (0.1622, 0.1661, 0.3527, 0.3191) (See Table 19). The experimental findings confirm that uW-IFCM yields optimal weight distribution over the IRIS dataset at \(\eta =2\) (see Fig. 10a, e, f). The algorithm assigns higher weights to the third and fourth features in comparison to the first two features, while using \(\eta =2\) and \(m=1.1\). A graphical comparison of feature weight distribution obtained over the IRIS dataset is also presented (see Fig. 10). At \(\eta =0.5\) and \(\eta = 1.1\), uW-IFCM gives almost equal weightage to all the four features (see Fig. 10b, c). The clustering completely depends on the third feature whenever \(\eta > 2.5\) is explored (see Fig. 10g–j). Here, convergence is achieved at the ninth iteration. Further, if \(\eta >2\), the clustering performance of uW-IFCM deteriorates which can be improvised by tuning \(\eta\) with m. The domain of m depends on \(\eta\) (see Sect. 4.4). Therefore, from an \(\eta\)-dependent domain of m, we have selected some values for the experimentation (see Table 19). The clustering results obtained using different \(\eta >2\) and \(m=1.1\) are recorded in Table 19, we exploit \(m=1.1\) as it suitably tunes the \(\eta\). During the clustering of the IRIS dataset, we obtain higher relevancy for the third and fourth features in comparison to the remaining features. In Table 20, the relevancy of the features of the IRIS dataset is given.

Summary of this discussion: During the clustering of the IRIS dataset, the uW-IFCM allocates more weightage to features \(d_3, d_4\) in comparison to \(d_1, d_2\). Here, due to the absence of proper feature nullification criteria, the less relevant features are mechanically neglected. So, uW-IFCM mechanically observes that \(d_1, d_2\) are irrelevant features. This algorithm can be used as a feature reduction technique. The uW-IFCM results in an efficient clustering of two featured versions of the IRIS dataset also. The algorithm converges in nine iterations while clustering the IRIS dataset, whereas the two featured versions of the IRIS dataset are clustered in five iterations (see Fig. 11). The total computational time taken by uW-IFCM over IRIS dataset and its two featured versions is 0.1485 and 0.1118, respectively.

5.2 Performance of the FRT-equipped uW-IFCM algorithm

Here, a feature reduction technique (FRT) is incorporated in the uW-IFCM algorithm for the automatic elimination of irrelevant features. The computational cost of the algorithm is low. In the FRT-equipped uW-IFCM, a collective threshold is decided for all features such that the weights equal and above the threshold value are accepted and weights having values lower than the threshold gets rejected. The dataset contains D numbers of features/dimensions, so for the removal of irrelevant features the threshold value equal to \(1/(\sqrt{D})^{\chi },\chi >0\) is selected for computational work. The features having weight values lower than \(1/(\sqrt{D})^{\chi }\) are not useful for clustering. Higher the number of dimensions/features, the lower the value of the threshold and vice-versa. If D is large, then \(1/(\sqrt{D})^{\chi }\) becomes small, and in this case, the underlying iterative process of the algorithm converges after discarding low relevancy features. The small value of D results in high threshold value, and hence in this situation the features are rapidly eliminated. This threshold selection works well for all finitely valued D. In this section, we worked on two synthetic datasets of two dimensions and one six-dimensional synthetic dataset. Please refer to Table 16 for synthetic datasets used in the following examples.

Algorithm V: | FRT-equipped uW-IFCM algorithm |

|---|---|

Input: | Dataset (X), number of centroids (C), weighing exponent (\(\eta\)), fuzzy factor (m), intuitionistic fuzzy parameter (\(\alpha\)), tuning parameter for membership function (\(\beta\)), tolerance level (\(\epsilon\)) |

Output: | Fuzzy partition U, centroids \(\{(\bar{\mu }_{ld}, \bar{\nu }_{ld}, \bar{\pi }_{ld})\}_{d=1}^{D}\), Weight matrix W |

Procedure: | 1. 1. Data fuzzification using Algorithm I. |

2. 2. Initialize centroid \(\hat{V}\), U at \(t=0\). | |

3. 3. Initialize weight matrix W with \(\frac{1}{D}\)’s. | |

Repeat | |

4. Update \((U=u_{il})^{t+1}\) by calculating the fuzzy partition using Eq. (41). | |

5. Update centroid \(\{(\bar{\mu }_{ld}, \bar{\nu }_{ld}, \bar{\pi }_{ld})\}_{d=1}^{D}\) using Eqns. (55-57). | |

6. Update weight matrix, W using Eq. (50). | |

7. 7. Optimize weight matrix with threshold value \(1/(\sqrt{D})^{\chi }\). | |

Until | |

8. \(\sum _{i=1}^{C}\frac{d_2(W_i(t),W_i(t+1))}{D} < \epsilon\) is satisfied. | |

Return | \(U^{{t+1}}\), (\(\bar{\mu }_{l}^{t+1}, ~\bar{\nu }_{l}^{t+1}\),\(\bar{\pi }_{l}^{t+1}\)) and \(W^{t+1}\). |

Example 1

We generate a two-dimensional Synthetic Dataset II of sample size 1000 using a Gaussian distribution function, \(\sum _{j=1}^{3}\sum _{l=1}^{2}q_k N(\theta _{jl},\sigma _{jl})\). Here, we denote the mean and the standard deviation with \(\theta\) and \(\sigma\), respectively. The mean value of the jth cluster along lth dimension/feature is given as \(\theta _{jl}\) with standard deviation \(\sigma _{jl}\). Synthetic Dataset II consists of three well-separated clusters. Here, the parameter \(q_k = 1/3, \forall 1 \le k \le 3\). The values of the mean and standard deviation of the three clusters are given as \(\theta _{j1} = (0~2)^T, \theta _{j2} = (4~7)^T, \theta _{j3} = (8~12)^T\) and \(\sigma _{j1} = \sigma _{j2} = \begin{pmatrix} 10&{}0.01\\ 0.01&{}0.01 \end{pmatrix}\) and \(\sigma _{j3} = \begin{pmatrix} 4&{}0\\ 0&{}1 \end{pmatrix}\).

The threshold limit \(1/(\sqrt{D})^{\chi }\) of FRT-equipped uW-IFCM algorithm nullifies the first feature because the relevancy of the first feature is quite less in comparison to the second feature. Now, uW-IFCM computes the CA and \(R_I\) values using only the second feature. The clustering accuracy \(CA=0.9950\) and rand index \(R_I=0.9960\) are calculated over Synthetic Dataset II. Here, with reduced computational cost, we obtain similar results. These criteria easily eliminate irrelevant features from the dataset.

Example 2

We generate a two-dimensional/featured Synthetic Dataset III of sample size 1000 using a Gaussian distribution function, \(\sum _{j=1}^{4}\sum _{l=1}^{2}q_k N(\theta _{jl},\sigma _{jl})\). Here, we denote the mean and the standard deviation with \(\theta\) and \(\sigma\), respectively. The mean value of the jth cluster along lth dimension/feature is given as \(\theta _{jl}\) with standard deviation \(\sigma _{jl}\). The Synthetic Dataset III consists of four well-separated clusters. Here, the parameter \(q_k = 1/4, \forall 1 \le k \le 4\). The values of the mean and standard deviation of the four clusters are given as \(\theta _{j1} = (10~5)^T, \theta _{j2} = (10~10)^T, \theta _{j3} = (10~15)^T, \theta _{j4} = (10~20)^T\) and \(\sigma _{jk} = \begin{pmatrix} 1&{}0\\ 0&{}1 \end{pmatrix}\), \(\forall 1 \le k \le 4\).

We have transformed two-dimensional/featured dataset into a three-dimensional/featured dataset using the mapping \(\chi (x, y) = (x \cos x, y, x\sin x)\). A two-dimensional cross-sectional view of the clustering is shown in Fig. 14, and in this case, we obtain clustering accuracy \(CA=0.5888\) and rand index \(R_I=0.4999\). The threshold limit of the dataset is given by \(1/(\sqrt{3})^{\chi }\). The incorporation of feature reduction criteria (\(1/(\sqrt{3})^{\chi }\)) in uW-IFCM excludes the first feature during the clustering, and thus improved \(CA= 0.9954\) and \(R_I=0.9960\) are obtained.

Example 3

Here, we use a two-cluster six-dimensional dataset named Synthetic Dataset IV. The dataset contains two types of data points. Each data point has six features. The Gaussian distribution selects the 1st, 3rd, and 5th feature values of the first 100 data points. This distribution has two parameters, mean = 3 and variance = 0.5. Using uniform distribution, the 2nd, 4th, and 6th feature values are assigned in the interval [0,10]. In a similar fashion, the 2nd, 4th, and 5th feature values of the next 100 data points are selected with the help of Gaussian distribution having mean = 7 and variance = 0.5. Uniform distribution assigns values to the remaining features, i.e., 1st, 3rd, and 6th in [0,10].

The dataset is constructed such that the 1st, 3rd, and 5th dimensions/features reside in the first cluster, whereas the 2nd, 4th, and 6th dimensions reside in the second cluster. In such datasets, subspace clustering gives effective results. A detailed subspace view of the dataset is presented (see Fig. 12). The importance of the 5th feature in separating two clusters is clearly shown in Fig. 12c and h. The uW-IFCM algorithm also verifies that 5th feature is most important as it calculates weight distribution, \(W = [0.1215,0.1102,0.0934,\) \(0.0956,{\textbf {0.5252}},0.0539]\). The CA and \(R_I\) are being recorded as 0.9989 and 0.9954, respectively.

The weight distributions obtained using the uW-IFCM algorithm over different datasets are compared in Fig. 15. The figure clearly shows the differences in the weights of relevant and irrelevant features. Once the number of features is reduced, the running time also decreases (see Table 22). A detailed analysis of the parameter \(\chi\) is provided in Table 21.

6 Conclusion

In this paper, we have introduced a spherical path-based clustering algorithm called uW-IFCM and an ellipsoidal path-based algorithm known as bW-PIFCM. The uW-IFCM algorithm has assigned a single weight to each feature, whereas bW-PIFCM is an adaptive algorithm that allocates data-driven weight triplet to each feature. The feature weights in uW-IFCM are further tuned with the help of a weighing parameter \(\eta\). Here, the real-valued dataset has been transformed into an AIFS dataset using a novel intuitionistic fuzzification technique. The technique does not extract membership and non-membership functions from the dataset. This lacking of the extraction process leads to uncertainty for which the two parameters \(\alpha\) and \(\beta\) have been introduced. The uW-IFCM is not an adaptive algorithm, so it is computationally expensive. In order to reduce the cost of the uW-IFCM algorithm, we have introduced the FRT-equipped uW-IFCM algorithm. The feature reduction technique eliminates irrelevant features from the dataset. The proposed uW-IFCM algorithm has been compared with the introduced bW-PIFCM algorithm, PIFCM, IFCM, wIFCM, wFCM, and FCM algorithms over UCI machine learning datasets and some synthetic datasets. The feature weight distribution used in uW-IFCM is appropriate, so it has effectively handled irrelevant and noisy features in comparison to other algorithms. The uW-IFCM has shrunk the search space of fuzzy factor m besides eliminating irrelevant features, so the running time of the algorithm is reduced.

In the future, the aim is to study the outliers and noises possessing real-world datasets using uW-IFCM. We will try to formulate an AIFS extraction technique involving a multi-valued function. The final objective is to suggest exponent \(\eta\)-based improvisation for the proposed bW-PIFCM and to tune the weight triplets with \(\eta\). The FRT-equipped uW-IFCM algorithm deduces a threshold-dependent weight distribution, and thereby relevant features get separated from irrelevant features. In the future, we will try to introduce the FRT-equipped bW-PIFCM algorithm. Here, the paper has established bW-PIFCM as an adaptive algorithm.

The future work of this paper is motivated by the limitations of our proposed approach, which involve implementing the algorithm on real-valued datasets in a computationally efficient manner.

References

Agrawal, R., Gehrke, J., Gunopulos, D., Raghavan, P.: Automatic subspace clustering of high dimensional data for data mining applications. In: Proceedings of the 1998 ACM SIGMOD International Conference on Management of Data, pp. 94–105 (1998)

Vidal, R.: Subspace clustering. IEEE Signal Process. Mag. 28(2), 52–68 (2011)

Sander, J., Ester, M., Kriegel, H.-P., Xiaowei, X.: Density-based clustering in spatial databases: the algorithm gdbscan and its applications. Data Min. Knowl. Discov. 2(2), 169–194 (1998)

Valente, D.O.J., Witold, P.: Advances in Fuzzy Clustering and Its Applications. Wiley, New York (2007)

Cohen-Addad, V., Kanade, V., Mallmann-Trenn, F., Mathieu, C.: Hierarchical clustering: objective functions and algorithms. J. ACM 66(4), 1–42 (2019)

Bezdek, J.C., Ehrlich, R., Full, W.: Fcm: the fuzzy c-means clustering algorithm. Comput. Geosci. 10(2–3), 191–203 (1984)

Zeshui, X., Junjie, W.: Intuitionistic fuzzy c-means clustering algorithms. J. Syst. Eng. Electron. 21(4), 580–590 (2010)

Qiu, C., Xiao, J., Yu, L., Han, L., Iqbal, M.N.: A modified interval type-2 fuzzy c-means algorithm with application in mr image segmentation. Pattern Recogn. Lett. 34(12), 1329–1338 (2013)

Chengmao, W., Xiaokang, G.: A novel interval-valued data driven type-2 possibilistic local information c-means clustering for land cover classification. Int. J. Approx. Reason. 148, 80–116 (2022)

Ji, Z., Sun, Q., Xia, Y., Chen, Q., Xia, D., Feng, D.: Generalized rough fuzzy c-means algorithm for brain mr image segmentation. Comput. Methods Programs Biomed. 108(2), 644–655 (2012)

Li, F., Ye, M., Chen, X.: An extension to rough c-means clustering based on decision-theoretic rough sets model. Int. J. Approx. Reason. 55(1), 116–129 (2014)

Chen, N., Ze-shui, X., Xia, M.: Hierarchical hesitant fuzzy k-means clustering algorithm. Appl. Math. A 29(1), 1–17 (2014)

Gustafson,D.E., Kessel, W.C.: Fuzzy clustering with a fuzzy covariance matrix. In: 1978 IEEE Conference on Decision and Control Including the 17th Symposium on Adaptive Processes, pp. 761–766. IEEE (1979)

Kuo-Lung, W., Yang, M.-S.: Alternative c-means clustering algorithms. Pattern Recognit. 35(10), 2267–2278 (2002)

Lee, J.Y., Kim, D., Mun, J.Y., Kang, S., Son, S.H., Shin, S.: Texture weighted fuzzy c-means algorithm for 3d brain mri segmentation. In: Proceedings of the 2018 Conference on Research in Adaptive and Convergent Systems, pp. 291–295 (2018)

Liu, X., Li, X., Zhang, Y., Yang, C., Xu, W., Li, M., Luo, H.: Remote sensing image classification based on dot density function weighted fcm clustering algorithm. In: 2007 IEEE International Geoscience and Remote Sensing Symposium, pp. 2010–2013. IEEE (2007)

Miin-Shen, Y., Sinaga Kristina, P.: Collaborative feature-weighted multi-view fuzzy c-means clustering. Pattern Recognit. 119, 108064 (2021)

Wenyuan, Z., Tianyu, H., Jun, C.: A robust bias-correction fuzzy weighted c-ordered-means clustering algorithm. Math. Probl. Eng. (2019). https://doi.org/10.1155/2019/5984649

Li, J., Gao, X., Ji, H.: A feature weighted FCM clustering algorithm based on evolutionary strategy. In: Proceedings of the 4th World Congress on Intelligent Control and Automation (Cat. No. 02EX527), Vol. 2, pp. 1549–1553. IEEE (2002)

Wang, X., Wang, Y., Wang, L.: Improving fuzzy c-means clustering based on feature-weight learning. Pattern Recognit. Lett. 25(10), 1123–1132 (2004)

Yang, M.-S., Nataliani, Y.: A feature-reduction fuzzy clustering algorithm based on feature-weighted entropy. IEEE Trans. Fuzzy Syst. 26(2), 817–835 (2017)

Stephan, T., Sharma, K., Shankar, A., Punitha, S., Varadarajan, V., Liu, P.: Fuzzy-logic-inspired zone-based clustering algorithm for wireless sensor networks. Int. J. Fuzzy Syst. 23, 506–517 (2021)

Wang, G., Wang, J.-S., Wang, H.-Y.: Fuzzy c-means clustering validity function based on multiple clustering performance evaluation components. Int. J. Fuzzy Syst. 24(4), 1859–1887 (2022)

Sinaga, K.P., Hussain, I., Yang, M.-S.: Entropy k-means clustering with feature reduction under unknown number of clusters. IEEE Access 9, 67736–67751 (2021)

D’Urso, P., Leski, J.M.: Fuzzy clustering of fuzzy data based on robust loss functions and ordered weighted averaging. Fuzzy Sets Syst. 389, 1–28 (2020)

He Yu-Lin, O., Gui-Liang, P.F.-V., Huang, J.Z., Suganthan, P.N.: A novel dependency-oriented mixed-attribute data classification method. Expert Syst. Appl. 199, 116782 (2022)

Sun, L., Zhang, J., Ding, W., Jiucheng, X.: Feature reduction for imbalanced data classification using similarity-based feature clustering with adaptive weighted k-nearest neighbors. Inf. Sci. 593, 591–613 (2022)

Nha, V.P., The, P.L., Pedrycz, W., Ngo, L.T.: Feature-reduction fuzzy co-clustering approach for hyper-spectral image analysis. Knowl. Based Syst. 216, 106549 (2021)

Siminski, K.: An outlier-robust neuro-fuzzy system for classification and regression. Int. J. Appl. Math. Comput. Sci. 31(2), 303–319 (2021)

Khan, M.A., Arshad, H., Nisar, W., Javed, M.Y., Sharif, M.: An integrated design of fuzzy c-means and nca-based multi-properties feature reduction for brain tumor recognition. In: Signal and Image Processing Techniques for the Development of Intelligent Healthcare Systems, pp. 1–28. Springer, New York (2021)

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20(1), 87–96 (1986)

Chaira, T.: A novel intuitionistic fuzzy c means clustering algorithm and its application to medical images. Appl. Soft Comput. 11(2), 1711–1717 (2011)

Huang, C.-W., Lin, K.-P., Ming-Chang, W., Hung, K.-C., Liu, G.-S., Jen, C.-H.: Intuitionisti fuzzy c-means clustering algorithm with neighborhood attration in segmenting medial image. Soft Comput. 19(2), 459–470 (2015)

Iakovidis, D.K., Pelekis, N., Kotsifakos, E., Kopanakis, I.: Intuitionistic fuzzy clustering with applications in computer vision. In: International Conference on Advanced Concepts for Intelligent Vision Systems, pp. 764–774. Springer, New York (2008)

Lin, K.-P.: A novel evolutionary kernel intuitionistic fuzzy \(c\)-means clustering algorithm. IEEE Trans. Fuzzy Syst. 22(5), 1074–1087 (2013)

Hanuman, V., Agrawal, R.K., Aditi, S.: An improved intuitionistic fuzzy c-means clustering algorithm incorporating local information for brain image segmentation. Appl. Soft Comput. 46, 543–557 (2016)

Zhao, F., Chen, Y., Liu, H., Fan, J.: Alternate pso-based adaptive interval type-2 intuitionistic fuzzy c-means clustering algorithm for color image segmentation. IEEE Access 7, 64028–64039 (2019)

Zhou, X., Zhao, R., Fengquan, Yu., Tian, H.: Intuitionistic fuzzy entropy clustering algorithm for infrared image segmentation. J. Intell. Fuzzy Syst. 30(3), 1831–1840 (2016)

Namburu, A., Samayamantula, S.K., Edara, S.R.: Generalised rough intuitionistic fuzzy c-means for magnetic resonance brain image segmentation. IET Image Process. 11(9), 777–785 (2017)

Kaushal, M., Solanki, R., Danish Lohani, Q.M., Muhuri Pranab, K.: A novel intuitionistic fuzzy set generator with application to clustering. In: 2018 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), pp. 1–8. IEEE (2018)

Danish Lohani, Q.M., Solanki, R., Muhuri, P.K.: Novel adaptive clustering algorithms based on a probabilistic similarity measure over atanassov intuitionistic fuzzy set. IEEE Trans. Fuzzy Syst. 26(6), 3715–3729 (2018)

Szmidt, E., Kacprzyk, J.: Geometric similarity measures for the intuitionistic fuzzy sets. In: EUSFLAT Conference (2013)

Szmidt, E., Kacprzyk, J.: Distances between intuitionistic fuzzy sets. Fuzzy Sets Syst. 114(3), 505–518 (2000)

Zhexue, H.J., Ng Michael, K., Hongqiang, R., Zichen, L.: Automated variable weighting in k-means type clustering. IEEE Trans. Pattern Anal. Mach. Intell. 27(5), 657–668 (2005)

Danish Lohani, Q.M., Solanki, R., Muhuri, P.K.: A convergence theorem and an experimental study of intuitionistic fuzzy c-mean algorithm over machine learning dataset. Appl. Soft Comput. 71, 1176–1188 (2018)

Siminski, K.: Fuzzy weighted c-ordered means clustering algorithm. Fuzzy Sets Syst. 318, 1–33 (2017)

Asuncion, A., Newman, D.: Uci machine learning repository (2007)

Graves, D., Pedrycz, W.: Kernel-based fuzzy clustering and fuzzy clustering: a comparative experimental study. Fuzzy Sets Syst. 161(4), 522–543 (2010)

Pal, N.R., Bezdek, J.C.: On cluster validity for the fuzzy c-means model. IEEE Trans. Fuzzy Syst. 3(3), 370–379 (1995)

Bensaid Amine, M., Hall Lawrence, O., Bezdek James, C., Clarke Laurence, P., Silbiger Martin, L., Arrington John, A., Murtagh, R.F.: Validity-guided (re) clustering with applications to image segmentation. IEEE Trans. Fuzzy Syst. 4(2), 112–123 (1996)

Bezdek, J.C., Pal, N.R.: Cluster validation with generalized dunn’s indices. In: Proceedings 1995 Second New Zealand International Two-Stream Conference on Artificial Neural Networks and Expert Systems, pp. 190–190. IEEE Computer Society (1995)

Funding

The research work of the first author is funded by University Grants Commission (UGC), New Delhi, India under the grant number 19/06/2016(i)EU-V.

Author information

Authors and Affiliations

Contributions

All the authors have equally contributed in the paper.

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Ethical Approval

This manuscript does not contain any studies with human participants performed by any of the authors.

Informed Consent

We do not have any associated data used in our manuscript.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kaushal, M., Danish Lohani, Q.M. & Castillo, O. Weighted Intuitionistic Fuzzy C-Means Clustering Algorithms. Int. J. Fuzzy Syst. 26, 943–977 (2024). https://doi.org/10.1007/s40815-023-01644-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40815-023-01644-5