Abstract

Dual hesitant fuzzy sets (DHFSs) are powerful and efficient tools for expressing hesitant preferred and non-preferred information simultaneously. This paper focuses on similarity measures for DHFSs. To this end, it first analyzes the limitations of previous similarity measures for DHFSs. Then, several new dual hesitant fuzzy similarity measures that avoid the issues of the previous ones are defined. To discriminate the importance of decision-making criteria, several weighted similarity measures are further defined from the viewpoints of additive and 2-additive measures. When the weighting information is not exactly known, optimization methods for determining additive and 2-additive measures are built, respectively. Furthermore, a method for multi-criteria decision-making based on the new weighted similarity measures is developed. Finally, two numerical examples are provided to show the application of the new method and to compare it with previous methods.


1 Introduction

To cope with uncertain and fuzzy decision-making information, fuzzy decision-making theory has become a hot research topic. With the development of decision-making theory, scholars noted that Zadeh’s fuzzy sets [1] cannot express non-preferred or hesitant decision-making information. To address this issue, Atanassov [2] introduced the concept of Atanassov’s intuitionistic fuzzy sets (AIFSs), which employ two real values in [0, 1] to express the preferred and non-preferred judgments, respectively. To further denote the uncertain judgments of decision-makers (DMs), Atanassov and Gargov [3] defined interval-valued intuitionistic fuzzy sets (IVIFSs), which are composed of two intervals in [0, 1] that separately denote the uncertain preferred and non-preferred judgments of DMs. On the other hand, Torra [4] presented the concept of hesitant fuzzy sets to capture the hesitancy of DMs. Taking advantage of these two types of fuzzy sets, many extended forms have been proposed, such as intuitionistic multiplicative sets (IMSs) [5], interval-valued intuitionistic multiplicative sets (IVIMSs) [6], linguistic intuitionistic fuzzy sets (LIFVSs) [7], multiplicative linguistic intuitionistic fuzzy sets (MLIFSs) [8], hesitant multiplicative fuzzy sets (HMFSs) [9], interval-valued hesitant fuzzy sets (IVHFSs) [10, 11], hesitant fuzzy linguistic term sets (HFLTSs) [12], multiplicative hesitant fuzzy linguistic sets (MHFLSs) [13], and interval linguistic hesitant fuzzy sets (ILHFSs) [14]. At present, the theory and application of decision-making with such types of fuzzy information have achieved great success.

Although intuitionistic fuzzy sets and hesitant fuzzy sets give DMs more flexibility and greatly extend the theory and application of decision-making, some scholars noted that they still have limitations in expressing the judgments of DMs in some complex situations. For example, neither of them can express the hesitant preferred and non-preferred judgments of DMs simultaneously. Therefore, Zhu et al. [15] introduced the concept of dual hesitant fuzzy sets (DHFSs), which can be seen as a combination of intuitionistic fuzzy sets and hesitant fuzzy sets. The authors then studied some basic operations and defined the score and accuracy functions for DHFSs. After the pioneering work of Zhu et al. [15], many decision-making methods with dual hesitant fuzzy information have been proposed. For example, Wang et al. [16] extended intuitionistic fuzzy aggregation operators to define two types of dual hesitant fuzzy aggregation operators: the dual hesitant fuzzy hybrid average (DHFHA) operator and the dual hesitant fuzzy hybrid geometric (DHFHG) operator. Using these two operators, the authors offered a method for multi-attribute decision-making with dual hesitant fuzzy information. Based on the Einstein t-conorm and t-norm, Zhao et al. [17] proposed the Einstein dual hesitant fuzzy (ordered) weighted averaging operator and the Einstein dual hesitant fuzzy (ordered) weighted geometric mean operator to calculate the comprehensive attribute values of objects with dual hesitant fuzzy information. Based on the Archimedean t-conorm and t-norm, Wang et al. [18] defined several dual hesitant fuzzy power aggregation operators, including the weighted generalized dual hesitant fuzzy power average (WGDHFPA) operator, the weighted generalized dual hesitant fuzzy power geometric (WGDHFPG) operator, the dual hesitant fuzzy power ordered weighted average (DHFPOWA) operator and the dual hesitant fuzzy power ordered weighted geometric (DHFPOWG) operator.
Considering the interactive characteristics among attributes, Ren et al. [19] used the λ-measure to develop a dual hesitant fuzzy VIKOR method for multi-criteria group decision-making. Furthermore, dual hesitant fuzzy Choquet operators were studied by Ju et al. [20], and dual hesitant fuzzy Shapley operators were studied by Qu et al. [21]. Considering the correlations among DHFEs, Yu et al. [22] defined the dual hesitant fuzzy Heronian mean (DHFHM) operator and the dual hesitant fuzzy geometric Heronian mean (DHFGHM) operator and then studied their application in supplier selection. Ren and Wei [23] used a correctional score function to define the dice similarity measure of DHFSs and then gave an approach to multi-attribute decision-making. Zhang et al. [24] extended the cosine similarity measure to DHFSs and defined a weighted dual hesitant fuzzy cosine similarity measure. Furthermore, similarity measures for DHFSs based on distance measures are studied in [12, 25, 26], and dual hesitant fuzzy correlation coefficients are discussed in [27,28,29]. It is noteworthy that most previous research on dual hesitant fuzzy similarity measures and correlation coefficients requires the DHFSs to have membership degree sets of the same length and non-membership degree sets of the same length. Otherwise, extra values must be added to the shorter membership and non-membership degree sets. However, this procedure changes the original DHFSs offered by the DMs [30]. Moreover, different rankings may be obtained by subjectively adding different values to DHFSs, so the rationality of this procedure needs further study. In addition, all the above dual hesitant fuzzy similarity measures assume that the criteria are independent of one another. However, this assumption may not hold, since there may be interactions among the criteria [19,20,21].

This paper continues the study of decision-making with dual hesitant fuzzy information and defines several new similarity measures for DHFSs that allow the membership and non-membership degree sets to have different lengths. Then, a method for decision-making with dual hesitant fuzzy information is developed that can handle situations where the weighting information, which has interactive characteristics, is not exactly known. The main contributions of this paper are as follows: (1) new similarity measures for DHFSs are defined that do not depend on the lengths of DHFSs and avoid the limitations of previous ones; (2) mathematical programming models for determining the weighting information are offered; (3) the new method can address interactive characteristics among criteria by means of the 2-additive measure [31] and the Shapley value [32]; (4) two numerical examples are offered to show the application of the new method, and a comparative analysis is made.

This paper is organized as follows. Section 2 first reviews the concept of DHFSs and the order relationships between DHFEs. Then, it recalls several similarity measures for DHFSs and analyzes their limitations in some cases. Section 3 defines several new similarity measures for DHFSs from different viewpoints, which do not require DHFSs to have the same length of their membership and non-membership degree sets. Considering both the differing importance of the criteria and their interactions, several 2-additive measure-based Shapley weighted similarity measures are further proposed. Section 4 builds several models for determining the weighting information on the criteria set and then offers an algorithm for multi-criteria decision-making with DHFSs. Section 5 offers two examples to show the application of the new method and compares it with several previous similarity measure-based methods. Conclusions and future research directions are given in Sect. 6.

2 Basic Concepts

This section first reviews some basic concepts about DHFSs. Then, it lists and analyzes previous similarity measures for DHFSs.

In this paper, let X = {x1, x2, …, xn} be the set of compared objects. To express the hesitant preferred and non-preferred information, Zhu et al. [15] introduced DHFSs that employ several values in [0, 1] to express the possible membership and non-membership degrees of a judgment.

Definition 1

[15] A DHFS D on X is defined as \( D = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}(x_{i} ),\tilde{g}(x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \), where \( \tilde{h}(x_{i} ) \) and \( \tilde{g}(x_{i} ) \) are two sets of values in [0, 1] that represent the possible membership and non-membership degrees of the element \( x_{i} \in X \) to the set D, respectively. For each \( x_{i} \in X \), it is required that \( \mathop {\hbox{max} }\limits_{{\gamma \in \tilde{h}(x_{i} )}} \gamma + \mathop {\hbox{max} }\limits_{{\eta \in \tilde{g}(x_{i} )}} \eta \le 1 \). Furthermore, each element \( d(x_{i} ) = \left\langle {\tilde{h}(x_{i} ),\tilde{g}(x_{i} )} \right\rangle \) in D is called a dual hesitant fuzzy element (DHFE), denoted by \( d = \left\langle {\tilde{h},\tilde{g}} \right\rangle \) with \( \mathop {\hbox{max} }\limits_{{\gamma \in \tilde{h}}} \gamma + \mathop {\hbox{max} }\limits_{{\eta \in \tilde{g}}} \eta \le 1 \).
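To make Definition 1 concrete, a DHFE can be represented as a small validated data type. The Python sketch below is illustrative only: the class name `DHFE` and its fields `h` and `g` are assumptions, not notation from [15], and it additionally assumes that both degree sets are non-empty, since the maxima in Definition 1 are otherwise undefined. It only checks the constraints; it does not deduplicate or sort the values.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DHFE:
    """Dual hesitant fuzzy element <h, g> (hypothetical helper for Definition 1).

    h holds the possible membership degrees, g the possible non-membership degrees.
    """
    h: Tuple[float, ...]
    g: Tuple[float, ...]

    def __post_init__(self) -> None:
        # Assumption: both sets are non-empty, otherwise max() below is undefined.
        if not self.h or not self.g:
            raise ValueError("h and g must both be non-empty")
        if any(not 0.0 <= v <= 1.0 for v in self.h + self.g):
            raise ValueError("all degrees must lie in [0, 1]")
        # Definition 1: max(h) + max(g) <= 1; the 1e-12 slack absorbs float rounding.
        if max(self.h) + max(self.g) > 1.0 + 1e-12:
            raise ValueError("max(h) + max(g) must not exceed 1")


# A DHFS on X = {x1, x2} can then be held as a mapping from elements to DHFEs.
D = {
    "x1": DHFE((0.3, 0.4, 0.5), (0.2,)),
    "x2": DHFE((0.4, 0.5), (0.2, 0.3)),
}
```

Constructing, say, `DHFE((0.7,), (0.4,))` raises a `ValueError`, because 0.7 + 0.4 exceeds 1.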

For example, let X = {x1, x2, x3}. Then, a DHFS D on X may be defined as:

Considering the order relationship between DHFEs, Zhu et al. [15] offered the following ranking based on the score and accuracy functions.

Definition 2

[15] Let \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle \) be any two DHFEs. Then, their order relationship is defined as:

(1) If \( s(d_{1} ) > s(d_{2} ) \), then \( d_{1} \succ d_{2} \);

(2) If \( s(d_{1} ) = s(d_{2} ) \), then \( \left\{ \begin{aligned} a(d_{1} ) > a(d_{2} ),d_{1} \succ d_{2} \hfill \\ a(d_{1} ) = a(d_{2} ),d_{1} \sim d_{2} \hfill \\ \end{aligned} \right. \),

where \( s( \cdot ) \) is the score function and \( a( \cdot ) \) is the accuracy function, defined by \( s(d) = \frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} \gamma - \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta \) and \( a(d) = \frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} \gamma + \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta \) for any DHFE \( d = \left\langle {\tilde{h},\tilde{g}} \right\rangle \), and \( |\tilde{h}| \) and \( |\tilde{g}| \) are the cardinalities of \( \tilde{h} \) and \( \tilde{g} \), respectively.

For example, let \( d_{1} = \left\langle {\{ 0.3,0.4,0.5\} ,\{ 0.2\} } \right\rangle \) and \( d_{2} = \left\langle {\{ 0.4,0.5\} ,\{ 0.2,0.3\} } \right\rangle \). Then, their scores are \( s(d_{1} ) = s(d_{2} ) = 0.2 \), and their accuracies are \( a(d_{1} ) = 0.6 \) and \( a(d_{2} ) = 0.7 \). According to Definition 2, we derive \( d_{1} \prec d_{2} \).
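The score function, the accuracy function and the ranking rule of Definition 2 translate directly into code. The Python sketch below represents a DHFE simply as a pair `(h, g)` of sequences. The function names and the tolerance `tol`, which treats floating-point near-ties as ties, are assumptions of this sketch and not part of [15]. It reproduces the worked example above.

```python
from statistics import mean


def score(h, g):
    """Score function s(d): mean membership minus mean non-membership."""
    return mean(h) - mean(g)


def accuracy(h, g):
    """Accuracy function a(d): mean membership plus mean non-membership."""
    return mean(h) + mean(g)


def compare_def2(d1, d2, tol=1e-9):
    """Rank two DHFEs by Definition 2: 1 if d1 > d2, -1 if d1 < d2, 0 if d1 ~ d2."""
    s1, s2 = score(*d1), score(*d2)
    if abs(s1 - s2) > tol:          # rule (1): compare scores first
        return 1 if s1 > s2 else -1
    a1, a2 = accuracy(*d1), accuracy(*d2)
    if abs(a1 - a2) > tol:          # rule (2): equal scores, compare accuracies
        return 1 if a1 > a2 else -1
    return 0


d1 = ((0.3, 0.4, 0.5), (0.2,))
d2 = ((0.4, 0.5), (0.2, 0.3))
print(compare_def2(d1, d2))  # -1, i.e. d1 ≺ d2 as in the worked example
```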

Zhao et al. [33] noted that there are situations in which the ranking method in Definition 2 cannot distinguish two DHFEs. Thus, Zhao et al. [33] further introduced an improved ranking method.

Definition 3

[33] Let \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle \) be any two DHFEs. Then, their order relationship is defined as:

(1) If \( s(d_{1} ) > s(d_{2} ) \), then \( d_{1} \succ d_{2} \);

(2) If \( s(d_{1} ) = s(d_{2} ) \) and \( a(d_{1} ) > a(d_{2} ) \), then \( d_{1} \succ d_{2} \);

(3) If \( s(d_{1} ) = s(d_{2} ) \) and \( a(d_{1} ) = a(d_{2} ) \), then \( \left\{ \begin{aligned} p(d_{1} ) < p(d_{2} ),d_{1} \succ d_{2} \hfill \\ p(d_{1} ) = p(d_{2} ),d_{1} \sim d_{2} \hfill \\ \end{aligned} \right. \),

where \( p( \cdot ) \) is the variance degree, defined by \( p(d) = \frac{1}{{|\tilde{h}|}}\sqrt {\sum\limits_{{\gamma_{i} ,\gamma_{j} \in \tilde{h}}} {\left( {\gamma_{i} - \gamma_{j} } \right)^{2} } } + \frac{1}{{|\tilde{g}|}}\sqrt {\sum\limits_{{\eta_{i} ,\eta_{j} \in \tilde{g}}} {\left( {\eta_{i} - \eta_{j} } \right)^{2} } } \) for any DHFE \( d = \left\langle {\tilde{h},\tilde{g}} \right\rangle \), and \( |\tilde{h}| \) and \( |\tilde{g}| \) are the cardinalities of \( \tilde{h} \) and \( \tilde{g} \), respectively.

For example, let \( d_{1} = \left\langle {\{ 0.3,0.5\} ,\{ 0.2,0.4\} } \right\rangle \) and \( d_{2} = \left\langle {\{ 0.3,0.4,0.5\} ,\{ 0.3\} } \right\rangle \). Then, we have \( s(d_{1} ) = s(d_{2} ) = 0.1 \) and \( a(d_{1} ) = a(d_{2} ) = 0.7 \), so Definition 2 cannot distinguish \( d_{1} \) and \( d_{2} \). Following Definition 3, we obtain \( p(d_{1} ) = 0.2 \) and \( p(d_{2} ) = 0.0816 \), and hence \( d_{1} \prec d_{2} \).
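The variance degree and the three-level ranking of Definition 3 can be sketched in Python as follows, again with a DHFE as a pair `(h, g)`. One interpretive assumption is needed: the double sum in \( p(d) \) is read as running over unordered pairs \( i < j \). This reading reproduces the values \( p(d_{1} ) = 0.2 \) and \( p(d_{2} ) = 0.0816 \) in the example above, whereas summing over ordered pairs would not. The function names and the tolerance `tol` are illustrative.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def variance_degree(h, g):
    """Variance degree p(d) from Definition 3.

    The double sum is read as running over unordered pairs (i < j); this
    reading reproduces p(d1) = 0.2 and p(d2) = 0.0816 in the worked example.
    """
    def part(values):
        spread = sum((a - b) ** 2 for a, b in combinations(values, 2))
        return sqrt(spread) / len(values)
    return part(h) + part(g)


def compare_def3(d1, d2, tol=1e-9):
    """Rank two DHFEs (h, g) by Definition 3: 1 if d1 > d2, -1 if d1 < d2, 0 if tied."""
    (h1, g1), (h2, g2) = d1, d2
    s1, s2 = mean(h1) - mean(g1), mean(h2) - mean(g2)  # scores
    if abs(s1 - s2) > tol:
        return 1 if s1 > s2 else -1
    a1, a2 = mean(h1) + mean(g1), mean(h2) + mean(g2)  # accuracies
    if abs(a1 - a2) > tol:
        return 1 if a1 > a2 else -1
    p1, p2 = variance_degree(h1, g1), variance_degree(h2, g2)
    if abs(p1 - p2) > tol:
        return 1 if p1 < p2 else -1                    # smaller variance ranks higher
    return 0


d1 = ((0.3, 0.5), (0.2, 0.4))
d2 = ((0.3, 0.4, 0.5), (0.3,))
print(round(variance_degree(*d1), 4), round(variance_degree(*d2), 4))  # 0.2 0.0816
print(compare_def3(d1, d2))  # -1, i.e. d1 ≺ d2
```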

To measure the similarity between DHFSs, Singh [25] presented the following two similarity measures.

Definition 4

[25, 26] Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. Then, the similarity measures are defined as follows:

and

where

is the generalized dual hesitant normalized distance between D1 and D2, and

is the generalized dual hesitant normalized Hausdorff distance between D1 and D2. Furthermore, \( \lambda > 0 \), \( l_{{x_{i} }} = \hbox{max} \left\{ {|\tilde{h}_{1} (x_{i} )|,|\tilde{h}_{2} (x_{i} )|} \right\} \) and \( m_{{x_{i} }} = \hbox{max} \left\{ {|\tilde{g}_{1} (x_{i} )|,|\tilde{g}_{2} (x_{i} )|} \right\} \) for each \( x_{i} \in X \), and \( \sigma ( \cdot ) \) is the permutation such that \( \left\{ \begin{aligned} \gamma_{1}^{\sigma (j)} (x_{i} ) \le \gamma_{1}^{\sigma (j + 1)} (x_{i} ) \hfill \\ \gamma_{2}^{\sigma (j)} (x_{i} ) \le \gamma_{2}^{\sigma (j + 1)} (x_{i} ) \hfill \\ \end{aligned} \right. \) for all \( j = 1,2, \ldots ,l_{{x_{i} }} - 1 \) and all \( x_{i} \in X \), and \( \left\{ \begin{aligned} \eta_{1}^{\sigma (k)} (x_{i} ) \le \eta_{1}^{\sigma (k + 1)} (x_{i} ) \hfill \\ \eta_{2}^{\sigma (k)} (x_{i} ) \le \eta_{2}^{\sigma (k + 1)} (x_{i} ) \hfill \\ \end{aligned} \right. \) for all \( k = 1,2, \ldots ,m_{{x_{i} }} - 1 \) and all \( x_{i} \in X \).

Based on the Dα operator for Atanassov’s intuitionistic fuzzy sets [2] and the formula for determining the value of α [34], Ren and Wei [23] presented a correctional score function for DHFEs, with which they defined the dice similarity measure of DHFSs.

Definition 5

[23] Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. Then, the weighted dice similarity measure of DHFSs is defined as follows:

where \( w_{i} \) is the weight of the object xi such that \( \sum\nolimits_{i = 1}^{n} {w_{i} } = 1 \) and \( w_{i} \ge 0 \) for all i = 1, 2, …, n; \( d_{1} (x_{i} ) \in D_{1} \) and \( d_{2} (x_{i} ) \in D_{2} \) are the DHFEs of \( x_{i} \in X \) in the DHFSs D1 and D2, respectively; and \( S_{{D_{\alpha } }} \) is the correctional score function for DHFEs, defined by \( S_{{D_{\alpha } }} = \frac{{1 + S_{{\tilde{h}}} (\tilde{h}) - S_{{\tilde{g}}} (\tilde{g})}}{2} \) for any DHFE \( d = \left\langle {\tilde{h},\tilde{g}} \right\rangle \) such that \( S_{{\tilde{h}}} (\tilde{h}) = \frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} \gamma + \alpha \pi \), \( S_{{\tilde{g}}} (\tilde{g}) = \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta + (1 - \alpha )\pi \), \( \pi = 1 - \frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} \gamma - \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta \) and \( \alpha = \frac{1}{2} + \frac{{\frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} {\gamma - } \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta }}{2} + \frac{{\frac{1}{{|\tilde{h}|}}\sum\nolimits_{{\gamma \in \tilde{h}}} {\gamma - } \frac{1}{{|\tilde{g}|}}\sum\nolimits_{{\eta \in \tilde{g}}} \eta }}{2}\pi \).
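The correctional score \( S_{{D_{\alpha } }} \) can be transcribed into Python directly from the formulas above. The sketch follows the expression for α exactly as written in Definition 5, and the function name `correctional_score` is illustrative. Because every term depends on \( \tilde{h} \) and \( \tilde{g} \) only through their means, any two DHFEs with equal mean membership and equal mean non-membership receive the same score. This is the source of the problem raised in Remark 2, illustrated here with the DHFEs of Example 2.

```python
from statistics import mean


def correctional_score(h, g):
    """Correctional score S_{D_alpha} of the DHFE <h, g>, as transcribed from Definition 5."""
    mh, mg = mean(h), mean(g)
    pi = 1 - mh - mg                                  # hesitancy degree
    alpha = 0.5 + (mh - mg) / 2 + (mh - mg) / 2 * pi  # alpha as written in Definition 5
    s_h = mh + alpha * pi
    s_g = mg + (1 - alpha) * pi
    return (1 + s_h - s_g) / 2


# The two DHFEs of Example 2 share the mean values 0.3 and 0.4, so their scores coincide.
s1 = correctional_score((0.2, 0.3, 0.4), (0.3, 0.4, 0.5))
s2 = correctional_score((0.3,), (0.4,))
print(round(s1, 4), round(s2, 4))  # 0.4305 0.4305
```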

Furthermore, Singh [12] introduced another similarity measure based on the distance measure between DHFSs.

Definition 6

[12] Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. Then, their similarity measure is defined as follows:

where \( 0 < \tau < + \infty \), \( \phi_{{\tilde{h}}} \left( {x_{i} } \right) = \frac{1}{{2l_{{x_{i} }} }}\sum\limits_{j = 1}^{{l_{{x_{i} }} }} {\left| {\gamma_{1}^{\sigma (j)} (x_{i} ) - \gamma_{2}^{\sigma (j)} (x_{i} )} \right|} \), \( \phi_{{\tilde{g}}} \left( {x_{i} } \right) = \frac{1}{{2m_{{x_{i} }} }}\sum\limits_{k = 1}^{{m_{{x_{i} }} }} {\left| {\eta_{1}^{\sigma (k)} (x_{i} ) - \eta_{2}^{\sigma (k)} (x_{i} )} \right|} \), and \( l_{{x_{i} }} \) and \( m_{{x_{i} }} \) are as defined in Definition 4.
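The quantities \( \phi_{{\tilde{h}}} \) and \( \phi_{{\tilde{g}}} \) above pair the j-th smallest value of one set with the j-th smallest value of the other, so both sets must already have the same length. The minimal Python sketch below, with a hypothetical function name `phi`, makes this explicit. It sorts both sets in ascending order (the permutation σ) and rejects unequal lengths rather than padding them. Applied to the two paddings of \( \tilde{h}_{1} = \left\{ {0.15,0.4} \right\} \) discussed in Example 1, it already yields different values of \( \phi_{{\tilde{h}}} \).

```python
def phi(values1, values2):
    """phi from Definition 6 for one element x_i.

    Computes (1 / (2L)) * sum_j |v1_sigma(j) - v2_sigma(j)| after sorting both
    sets in ascending order, where L is their common length.
    """
    if len(values1) != len(values2):
        # Definition 6 presupposes equal lengths; padding is left to the caller.
        raise ValueError("sets must have equal length")
    a, b = sorted(values1), sorted(values2)
    return sum(abs(x - y) for x, y in zip(a, b)) / (2 * len(a))


h2 = (0.5, 0.6, 0.7)
# Padding h1 = {0.15, 0.4} with 0.15 or with 0.4, as in Example 1:
print(round(phi((0.15, 0.15, 0.4), h2), 4))  # 0.1833
print(round(phi((0.15, 0.4, 0.4), h2), 4))   # 0.1417
```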

Unlike the above dual hesitant fuzzy similarity measures, Zhang et al. [24] proposed the following weighted dual hesitant fuzzy cosine similarity measure:

Definition 7

[24] Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. Then, their weighted cosine similarity measure is defined as follows:

where \( w_{i} \) is the weight of the object xi such that \( \sum\nolimits_{i = 1}^{n} {w_{i} } = 1 \) and \( w_{i} \ge 0 \) for all i = 1, 2, …, n, and \( l_{{x_{i} }} \) and \( m_{{x_{i} }} \) are as defined in Definition 4.

Remark 1

The similarity measures in Definitions 4, 6 and 7 require the considered DHFSs to have membership degree sets of the same length and non-membership degree sets of the same length. Otherwise, extra values must be added to the shorter membership and non-membership degree sets. However, this procedure changes the original information offered by the DMs; namely, the adjusted DHFSs differ from the original ones. The following example illustrates this issue.

Example 1

Let \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle = \left\langle {\left\{ {0.15,0.4} \right\},\left\{ {0.3,0.4,0.5,0.6} \right\}} \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle = \left\langle {\left\{ {0.5,0.6,0.7} \right\},\left\{ {0.25,0.3} \right\}} \right\rangle \). When we measure their similarity by Eqs. (1), (2), or (6), one extra value must be added to \( \tilde{h}_{1} = \left\{ {0.15,0.4} \right\} \), and two extra values must be added to \( \tilde{g}_{2} = \left\{ {0.25,0.3} \right\} \). If 0.15 is added to \( \tilde{h}_{1} \), we derive \( \tilde{h}^{\prime}_{1} = \left\{ {0.15,0.15,0.4} \right\} \). However, one can check that \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle \ne d^{\prime}_{1} = \left\langle {\tilde{h}^{\prime}_{1} ,\tilde{g}_{1} } \right\rangle \) because \( s(d_{1} ) = - 0.175 \ne - 0.217 = s(d^{\prime}_{1} ) \); in fact, \( d_{1} \succ d^{\prime}_{1} \). If 0.4 is added to \( \tilde{h}_{1} \), we derive \( \tilde{h}^{\prime\prime}_{1} = \left\{ {0.15,0.4,0.4} \right\} \). Again, \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle \ne d^{\prime\prime}_{1} = \left\langle {\tilde{h}^{\prime\prime}_{1} ,\tilde{g}_{1} } \right\rangle \) because \( s(d_{1} ) = - 0.175 \ne - 0.133 = s(d^{\prime\prime}_{1} ) \); in fact, \( d_{1} \prec d^{\prime\prime}_{1} \).

Furthermore, if we add the value 0.15 to \( \tilde{h}_{1} \) and the value 0.25 (twice) to \( \tilde{g}_{2} \), then the similarity measures between d1 and d2 based on Eqs. (1), (2), and (6) are \( GD_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.573 \), \( GHD_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.55 \), and \( S_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.723 \), respectively, where λ = τ = 2. On the other hand, if we add the value 0.4 to \( \tilde{h}_{1} \) and the value 0.3 (twice) to \( \tilde{g}_{2} \), then the similarity measures between d1 and d2 based on Eqs. (1), (2), and (6) are \( GD_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.654 \), \( GHD_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.65 \), and \( S_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.777 \), respectively, where λ = τ = 2. Thus, different added values lead to different results. In these two cases, the corresponding cosine similarity measures between d1 and d2 by Eq. (7) are \( CS_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.76 \) and \( CS_{dhfs} \left( {d_{1} ,d_{2} } \right) = 0.85 \), respectively.
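The score values quoted in Example 1 are easy to reproduce. The Python sketch below (variable names are illustrative) pads \( \tilde{h}_{1} \) once with its smallest and once with its largest element and recomputes the score function of Definition 2. It shows that either padding changes the DHFE, and in opposite directions.

```python
from statistics import mean


def score(h, g):
    """Score function s(d) from Definition 2."""
    return mean(h) - mean(g)


h1, g1 = (0.15, 0.4), (0.3, 0.4, 0.5, 0.6)
h1_pad_min = h1 + (min(h1),)  # {0.15, 0.15, 0.4}
h1_pad_max = h1 + (max(h1),)  # {0.15, 0.4, 0.4}

for label, h in [("original", h1), ("add 0.15", h1_pad_min), ("add 0.4", h1_pad_max)]:
    print(label, round(score(h, g1), 3))
# Prints one line each: original -0.175, add 0.15 -0.217, add 0.4 -0.133
```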

Based on the above discussion, we conclude that the similarity measures given in Definitions 4, 6 and 7 have two limitations: (1) they change the original DHFSs; (2) different results may be derived for different added values.

Remark 2

The issue with the dice similarity measure in Definition 5 is that the dice similarity measure of two different DHFSs may be equal to 1. For example, the dice similarity measure of any two DHFSs whose possible membership degrees have the same mean values and whose possible non-membership degrees have the same mean values is equal to 1. Therefore, its discriminability is insufficient.

Example 2

Let \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle = \left\langle {\left\{ {0.2,0.3,0.4} \right\},\left\{ {0.3,0.4,0.5} \right\}} \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle = \left\langle {\left\{ {0.3} \right\},\left\{ {0.4} \right\}} \right\rangle \). Then, \( DS_{dhfs} \left( {d_{1} ,d_{2} } \right) = 1 \). However, \( d_{1} \) and \( d_{2} \) are obviously different; in fact, \( d_{1} \prec d_{2} \) according to the ranking method in Definition 3.

In the calculation of the dice similarity measure of d1 and d2, one can check that only one value from each of the membership and non-membership degree sets of d1 is effectively used, namely their mean values 0.3 and 0.4. This reveals another issue with this similarity measure: it may use only part of the information in DHFSs and thus cause information loss.

3 New Similarity Measures for DHFSs

This section contains two parts. The first part defines several new similarity measures for DHFSs based on the additive measure, and the second part introduces several new similarity measures for DHFSs based on the Shapley value and the 2-additive measure.

3.1 New Similarity Measures for DHFSs Based on Additive Measure

To avoid the issues of previous similarity measures for DHFSs, this part introduces several new similarity measures. First, we define the distance measure between a point and a set as follows.

Definition 8

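Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. For any \( \gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} ) \) and any \( x_{i} \in X \), the distance measure between \( \gamma_{1} (x_{i} ) \) and \( \tilde{h}_{2} (x_{i} ) \) is defined as:

\( \rho \left( {\gamma_{1} (x_{i} ),\tilde{h}_{2} (x_{i} )} \right) = \mathop {\hbox{min} }\limits_{{\gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} )}} |\gamma_{1} (x_{i} ) - \gamma_{2} (x_{i} )| \) (8)

and, for any \( \eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} ) \) and any \( x_{i} \in X \), the distance measure between \( \eta_{1} (x_{i} ) \) and \( \tilde{g}_{2} (x_{i} ) \) is defined as:

\( \rho \left( {\eta_{1} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right) = \mathop {\hbox{min} }\limits_{{\eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} )}} |\eta_{1} (x_{i} ) - \eta_{2} (x_{i} )| \) (9)

Furthermore, for any \( \gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} ) \) and any \( x_{i} \in X \), the distance measure between \( \gamma_{2} (x_{i} ) \) and \( \tilde{h}_{1} (x_{i} ) \) is defined as:

\( \rho \left( {\gamma_{2} (x_{i} ),\tilde{h}_{1} (x_{i} )} \right) = \mathop {\hbox{min} }\limits_{{\gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} )}} |\gamma_{2} (x_{i} ) - \gamma_{1} (x_{i} )| \) (10)

and, for any \( \eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} ) \) and any \( x_{i} \in X \), the distance measure between \( \eta_{2} (x_{i} ) \) and \( \tilde{g}_{1} (x_{i} ) \) is defined as:

\( \rho \left( {\eta_{2} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right) = \mathop {\hbox{min} }\limits_{{\eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} )}} |\eta_{2} (x_{i} ) - \eta_{1} (x_{i} )| \) (11)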

According to Definition 8, one can check that \( \rho \left( {\gamma_{1} (x_{i} ),\tilde{h}_{2} (x_{i} )} \right) = \rho \left( {\eta_{1} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right) = \rho \left( {\gamma_{2} (x_{i} ),\tilde{h}_{1} (x_{i} )} \right) = \rho \left( {\eta_{2} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right) = 0 \) if and only if \( \gamma_{1} (x_{i} ) \in \tilde{h}_{2} (x_{i} ),\eta_{1} (x_{i} ) \in \tilde{g}_{2} (x_{i} ),\gamma_{2} (x_{i} ) \in \tilde{h}_{1} (x_{i} ),\eta_{2} (x_{i} ) \in \tilde{g}_{1} (x_{i} ) \).

More than one value may attain the minimum in Eqs. (8), (9), (10) or (11). In this case, we adopt the smallest such value and introduce the following notation to facilitate the discussion:

For any \( \gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} ) \) and any \( x_{i} \in X \), let \( \gamma_{{_{2} }}^{{\gamma_{1} (x_{i} )}} (x_{i} ) = \hbox{min} \left\{ {\gamma_{2} (x_{i} )\left| {|\gamma_{1} (x_{i} ) - \gamma_{2} (x_{i} )| = \rho \left( {\gamma_{1} (x_{i} ),\tilde{h}_{2} (x_{i} )} \right),\gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} )} \right.} \right\} \).

For any \( \eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} ) \) and any \( x_{i} \in X \), let \( \eta_{{_{2} }}^{{\eta_{1} (x_{i} )}} (x_{i} ) = \hbox{min} \left\{ {\eta_{2} (x_{i} )\left| {|\eta_{1} (x_{i} ) - \eta_{2} (x_{i} )| = \rho \left( {\eta_{1} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right),\eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} )} \right.} \right\} \).

For any \( \gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} ) \) and any \( x_{i} \in X \), let \( \gamma_{{_{1} }}^{{\gamma_{2} (x_{i} )}} (x_{i} ) = \hbox{min} \left\{ {\gamma_{1} (x_{i} )\left| {|\gamma_{1} (x_{i} ) - \gamma_{2} (x_{i} )| = \rho \left( {\gamma_{2} (x_{i} ),\tilde{h}_{1} (x_{i} )} \right),\gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} )} \right.} \right\} \).

For any \( \eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} ) \) and any \( x_{i} \in X \), let \( \eta_{{_{1} }}^{{\eta_{2} (x_{i} )}} (x_{i} ) = \hbox{min} \left\{ {\eta_{1} (x_{i} )\left| {|\eta_{1} (x_{i} ) - \eta_{2} (x_{i} )| = \rho \left( {\eta_{2} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right),\eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} )} \right.} \right\} \).

To show the concrete use of the above distance measures and notation, we take the DHFEs in Example 1 as an instance, where \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle = \left\langle {\left\{ {0.15,0.4} \right\},\left\{ {0.3,0.4,0.5,0.6} \right\}} \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle = \left\langle {\left\{ {0.5,0.6,0.7} \right\},\left\{ {0.25,0.3} \right\}} \right\rangle \).

Based on Eq. (8), we get \( \left\{ \begin{aligned} \rho \left( {0.15,\tilde{h}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\gamma_{2} \in \tilde{h}_{2} }} |0.15 - \gamma_{2} | = |0.15 - 0.5| = 0.35{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.15 \in \tilde{h}_{1} \hfill \\ \rho \left( {0.4,\tilde{h}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\gamma_{2} \in \tilde{h}_{2} }} |0.4 - \gamma_{2} | = |0.4 - 0.5| = 0.1{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.4 \in \tilde{h}_{1} \hfill \\ \end{aligned} \right. \).

Based on Eq. (9), we have \( \left\{ \begin{array}{ll} \rho \left( {0.3,\tilde{g}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{2} \in \tilde{g}_{2} }} |0.3 - \eta_{2} | = |0.3 - 0.3| = 0{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.3 \in \tilde{g}_{1} \hfill \\ \rho \left( {0.4,\tilde{g}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{2} \in \tilde{g}_{2} }} |0.4 - \eta_{2} | = |0.4 - 0.3| = 0.1{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.4 \in \tilde{g}_{1} \hfill \\ \rho \left( {0.5,\tilde{g}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{2} \in \tilde{g}_{2} }} |0.5 - \eta_{2} | = |0.5 - 0.3| = 0.2{\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.5 \in \tilde{g}_{1} \hfill \\ \rho \left( {0.6,\tilde{g}_{2} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{2} \in \tilde{g}_{2} }} |0.6 - \eta_{2} | = |0.6 - 0.3| = 0.3{\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.6 \in \tilde{g}_{1} \hfill \\ \end{array} \right. \).

Based on Eq. (10), we obtain \( \left\{ \begin{aligned} \rho \left( {0.5,\tilde{h}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\gamma_{1} \in \tilde{h}_{1} }} |0.5 - \gamma_{1} | = |0.5 - 0.4| = 0.1{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.5 \in \tilde{h}_{2} \hfill \\ \rho \left( {0.6,\tilde{h}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\gamma_{1} \in \tilde{h}_{1} }} |0.6 - \gamma_{1} | = |0.6 - 0.4| = 0.2{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.6 \in \tilde{h}_{2} \hfill \\ \rho \left( {0.7,\tilde{h}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\gamma_{1} \in \tilde{h}_{1} }} |0.7 - \gamma_{1} | = |0.7 - 0.4| = 0.3{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.7 \in \tilde{h}_{2} \hfill \\ \end{aligned} \right. \).

Based on Eq. (11), we derive \( \left\{ \begin{array}{ll} \rho \left( {0.25,\tilde{g}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{1} \in \tilde{g}_{1} }} |0.25 - \eta_{1} | = |0.25 - 0.3| = 0.05{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.25 \in \tilde{g}_{2} \hfill \\ \rho \left( {0.3,\tilde{g}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{1} \in \tilde{g}_{1} }} |0.3 - \eta_{1} | = |0.3 - 0.3| = 0{\kern 1pt} {\kern 1pt} {\kern 1pt} {\text{for}}{\kern 1pt} {\kern 1pt} 0.3 \in \tilde{g}_{2} \hfill \\ \end{array} \right. \).

If we replace 0.3 in \( \tilde{g}_{2} \) with 0.35, then we obtain \( \rho \left( {0.35,\tilde{g}_{1} } \right) = \mathop {\hbox{min} }\limits_{{\eta_{1} \in \tilde{g}_{1} }} |0.35 - \eta_{1} | = |0.35 - 0.3| = |0.35 - 0.4| = 0.05 \). Since both 0.3 and 0.4 attain the minimum, we take the smaller one, that is, \( \eta_{{_{1} }}^{{\eta_{2} = 0.35}} = 0.3 \).
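The point-to-set distances of Eqs. (8) to (11) and the tie-breaking rule in the notation above can be sketched in Python. The helper names `rho` and `nearest` and the tolerance `tol` are assumptions of this sketch. The tolerance is needed because floating-point arithmetic can make mathematically equal distances, such as \( |0.35 - 0.3| \) and \( |0.35 - 0.4| \), differ in the last bits. The usage lines reproduce the computations for the DHFEs of Example 1.

```python
def rho(v, target):
    """Distance between a single degree v and a set of degrees, as in Eqs. (8)-(11)."""
    return min(abs(v - t) for t in target)


def nearest(v, target, tol=1e-12):
    """Element of target attaining rho(v, target); ties go to the smallest one."""
    r = rho(v, target)
    return min(t for t in target if abs(abs(v - t) - r) <= tol)


# DHFEs of Example 1.
h1, g1 = (0.15, 0.4), (0.3, 0.4, 0.5, 0.6)
h2, g2 = (0.5, 0.6, 0.7), (0.25, 0.3)

print([round(rho(v, h2), 2) for v in h1])  # Eq. (8):  [0.35, 0.1]
print([round(rho(v, g2), 2) for v in g1])  # Eq. (9):  [0.0, 0.1, 0.2, 0.3]
print([round(rho(v, h1), 2) for v in h2])  # Eq. (10): [0.1, 0.2, 0.3]
print([round(rho(v, g1), 2) for v in g2])  # Eq. (11): [0.05, 0.0]
print(nearest(0.35, g1))                   # 0.3 (tie between 0.3 and 0.4)
```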

According to Definition 8, we can further obtain the following generalized normal distance measure between DHFSs.

Definition 9

Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs in X. Then, the generalized normal distance measure between \( \tilde{h}_{1} (x_{i} ) \) and \( \tilde{h}_{2} (x_{i} ) \) is defined as:

Meanwhile, the generalized normal distance measure between \( \tilde{g}_{1} (x_{i} ) \) and \( \tilde{g}_{2} (x_{i} ) \) is defined as:

Furthermore, the generalized normal distance measure between D1 and D2 is defined as:

Example 3

Let X = {x1, x2, x3}. The DHFSs D1 and D2 in X are defined as follows:

Based on Eq. (14), the normal distance measures between D1 and D2 for different values of λ are \( \rho^{\lambda = 0.2} \left( {D_{1} ,D_{2} } \right) = 0.5775 \), \( \rho^{\lambda = 0.5} \left( {D_{1} ,D_{2} } \right) = 0.3290 \), \( \rho^{\lambda = 1} \left( {D_{1} ,D_{2} } \right) = 0.1333 \), \( \rho^{\lambda = 2} \left( {D_{1} ,D_{2} } \right) = 0.0247 \), and \( \rho^{\lambda = 5} \left( {D_{1} ,D_{2} } \right) = 0.0003 \).
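The distances listed for Example 3 can be reproduced with the Python sketch below. Because Eqs. (12) to (14) are not reproduced in this text, the sketch assumes the following form, which matches all five reported values: the distance between two hesitant sets is half the sum of the two directed mean \( \lambda \)-th power nearest-element deviations (with no outer \( 1/\lambda \) root), and \( \rho^{\lambda}(D_{1}, D_{2}) \) averages the 2n membership and non-membership distances. The helper names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# A DHFS on X: element -> (membership degree set, non-membership degree set).
DHFS = Dict[str, Tuple[List[float], List[float]]]


def directed_mean(a: Sequence[float], b: Sequence[float], lam: float) -> float:
    """Mean over a of |x - (nearest element of b)|**lam."""
    return sum(min(abs(x - y) for y in b) ** lam for x in a) / len(a)


def set_distance(a: Sequence[float], b: Sequence[float], lam: float) -> float:
    """Assumed set distance: half the sum of both directed means (no 1/lam root)."""
    return 0.5 * (directed_mean(a, b, lam) + directed_mean(b, a, lam))


def dhfs_distance(d1: DHFS, d2: DHFS, lam: float) -> float:
    """Assumed rho^lam(D1, D2): average of the 2n membership/non-membership distances."""
    total = sum(
        set_distance(d1[x][0], d2[x][0], lam) + set_distance(d1[x][1], d2[x][1], lam)
        for x in d1
    )
    return total / (2 * len(d1))


D1: DHFS = {"x1": ([0.2, 0.3], [0.5, 0.7]), "x2": ([0.6, 0.8], [0.1]), "x3": ([0.5], [0.2, 0.3, 0.4])}
D2: DHFS = {"x1": ([0.4, 0.5], [0.4]), "x2": ([0.5, 0.6], [0.2, 0.3]), "x3": ([0.7, 0.8], [0.1, 0.2])}

for lam in (0.2, 0.5, 1, 2, 5):
    print(f"lambda = {lam}: rho = {dhfs_distance(D1, D2, lam):.4f}")
```

Subtracting these distances from 1 gives the similarity values \( S_{1}^{\lambda} \) reported for Example 3 after Definition 10.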

To show the basic properties of the generalized normal distance measure, we present the following property.

Property 1

Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \), \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \), and \( D_{3} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{3} (x_{i} ),\tilde{g}_{3} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any three DHFSs in X. Then, the generalized normal distance measure \( \rho^{\lambda } \) offered in Definition 9 satisfies:

(i) \( 0 \le \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) \le 1 \);

(ii) \( \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) = 0 \) if and only if \( \tilde{h}_{1} (x_{i} ) = \tilde{h}_{2} (x_{i} ) \) and \( \tilde{g}_{1} (x_{i} ) = \tilde{g}_{2} (x_{i} ) \) for any \( x_{i} \in X \);

(iii) \( \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) = \rho^{\lambda } \left( {D_{2} ,D_{1} } \right) \).

Proof

Following Eq. (14), one can easily check that conditions (i) and (iii) hold. As for (ii), for any given \( x_{i} \in X \), when \( \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) = 0 \), every term in Eq. (14) is nonnegative and therefore zero, so from Eqs. (12) and (13) each nearest-element deviation between \( \tilde{h}_{1} (x_{i} ) \) and \( \tilde{h}_{2} (x_{i} ) \), and between \( \tilde{g}_{1} (x_{i} ) \) and \( \tilde{g}_{2} (x_{i} ) \), must be zero. Hence, for every \( \gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} ) \) there is \( \gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} ) \) with \( \gamma_{2} (x_{i} ) = \gamma_{1} (x_{i} ) \), and for every \( \gamma_{2} (x_{i} ) \in \tilde{h}_{2} (x_{i} ) \) there is \( \gamma_{1} (x_{i} ) \in \tilde{h}_{1} (x_{i} ) \) with \( \gamma_{1} (x_{i} ) = \gamma_{2} (x_{i} ) \); likewise, for every \( \eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} ) \) there is \( \eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} ) \) with \( \eta_{2} (x_{i} ) = \eta_{1} (x_{i} ) \), and for every \( \eta_{2} (x_{i} ) \in \tilde{g}_{2} (x_{i} ) \) there is \( \eta_{1} (x_{i} ) \in \tilde{g}_{1} (x_{i} ) \) with \( \eta_{1} (x_{i} ) = \eta_{2} (x_{i} ) \). Thus, \( \tilde{h}_{1} (x_{i} ) = \tilde{h}_{2} (x_{i} ) \) and \( \tilde{g}_{1} (x_{i} ) = \tilde{g}_{2} (x_{i} ) \). Conversely, if these sets are equal for every \( x_{i} \in X \), each element is its own nearest element, so every deviation, and hence \( \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) \), is zero.

The main feature of the generalized normal distance measure in Definition 9 is that it allows the membership degree sets, and likewise the non-membership degree sets, of the two DHFSs to have different numbers of elements. Based on this distance measure, we further define the following generalized similarity measure.

Definition 10

Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs in X. Then, the generalized similarity measure \( S_{1}^{\lambda } \) between them is defined as follows:

In Example 3, the similarity measures between the DHFSs D1 and D2 for different values of λ are \( S_{1}^{\lambda = 0.2} \left( {D_{1} ,D_{2} } \right) = 0.4225 \), \( S_{1}^{\lambda = 0.5} \left( {D_{1} ,D_{2} } \right) = 0.6710 \), \( S_{1}^{\lambda = 1} \left( {D_{1} ,D_{2} } \right) = 0.8667 \), \( S_{1}^{\lambda = 2} \left( {D_{1} ,D_{2} } \right) = 0.9753 \), and \( S_{1}^{\lambda = 5} \left( {D_{1} ,D_{2} } \right) = 0.9997 \).

Based on Property 1 for the generalized normal distance measure, one can easily derive the following property for the generalized similarity measure \( S_{1}^{\lambda } \) as shown in Definition 10.

Property 2

Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \), \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \), and \( D_{3} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{3} (x_{i} ),\tilde{g}_{3} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any three DHFSs in X. Then, the generalized similarity measure \( S_{1}^{\lambda } \) offered in Definition 10 satisfies:

(i) \( 0 \le S_{1}^{\lambda } \left( {D_{1} ,D_{2} } \right) \le 1 \);

(ii) \( S_{1}^{\lambda } \left( {D_{1} ,D_{2} } \right) = 1 \) if and only if \( \tilde{h}_{1} (x_{i} ) = \tilde{h}_{2} (x_{i} ) \) and \( \tilde{g}_{1} (x_{i} ) = \tilde{g}_{2} (x_{i} ) \) for any \( x_{i} \in X \);

(iii) \( S_{1}^{\lambda } \left( {D_{1} ,D_{2} } \right) = S_{1}^{\lambda } \left( {D_{2} ,D_{1} } \right) \).

Proof

Following Eq. (15), one can easily check that the conditions (i) and (iii) are true. As for (ii), for any given \( x_{i} \in X \), when \( S_{1}^{\lambda } \left( {D_{1} ,D_{2} } \right) = 1 \), we get \( \rho^{\lambda } \left( {D_{1} ,D_{2} } \right) = 0 \). According to Property 1, we know that the condition (ii) is true.

Remark 3

The main features of the generalized similarity measure in Definition 10 are: (i) it does not require adding extra values, which would change the original information; (ii) it avoids the situation where different results are derived for different added values; (iii) it does not require the similarity measure to be calculated by pairing the corresponding ordered values; (iv) the second condition in Property 1 ensures that it avoids the issue of the dice similarity measure shown in Definition 5.

In addition to the generalized similarity measure in Definition 10, which is based on the generalized normal distance measure, we define the following similarity measures.

Definition 11

Let \( D_{1} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{1} (x_{i} ),\tilde{g}_{1} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) and \( D_{2} = \left\{ {\left. {\left\langle {x_{i} ,\tilde{h}_{2} (x_{i} ),\tilde{g}_{2} (x_{i} )} \right\rangle } \right|x_{i} \in X} \right\} \) be any two DHFSs. Then, the Hausdorff normal similarity measure is defined as follows:

The correlation coefficient-based normal similarity measure is defined as follows:

The minimax normal similarity measure is defined as follows:

In Example 3, using the similarity measures in Definition 11, we have \( S_{H} \left( {D_{1} ,D_{2} } \right) = 0.7547 \), \( S_{CC} \left( {D_{1} ,D_{2} } \right) = 0.4672 \) and \( S_{MM} \left( {D_{1} ,D_{2} } \right) = 0.7167 \), where \( D_{1} = \{ \langle x_{1}, \{0.2, 0.3\}, \{0.5, 0.7\} \rangle, \langle x_{2}, \{0.6, 0.8\}, \{0.1\} \rangle, \langle x_{3}, \{0.5\}, \{0.2, 0.3, 0.4\} \rangle \} \) and \( D_{2} = \{ \langle x_{1}, \{0.4, 0.5\}, \{0.4\} \rangle, \langle x_{2}, \{0.5, 0.6\}, \{0.2, 0.3\} \rangle, \langle x_{3}, \{0.7, 0.8\}, \{0.1, 0.2\} \rangle \} \).

Remark 4

Based on Eqs. (16), (17), and (18), one can easily check that the Hausdorff normal similarity measure, the correlation coefficient-based normal similarity measure and the minimax normal similarity measure satisfy the conditions in Property 2. They also have the characteristics listed in Remark 3. The similarity measures in Definition 11 serve two purposes: (i) they give the DMs more choices, and (ii) in practical decision-making problems, applying several similarity measures helps check the stability of the ranking results.

To account for the different weights of criteria, we further define the associated weighted similarity measures as follows:

The weighted generalized normal similarity measure is

The weighted Hausdorff normal similarity measure is

The weighted correlation coefficient-based normal similarity measure is

The weighted minimax normal similarity measure is

where \( w = \left( {w_{1} ,w_{2} , \ldots ,w_{n} } \right) \) is a weight vector such that \( \sum\nolimits_{i = 1}^{n} {w_{i} } = 1 \) and \( w_{i} \ge 0 \) for all \( i = 1,2, \ldots ,n \), and the other notation is as in Definitions 10 and 11.

3.2 New Similarity Measures for DHFSs Based on the Shapley Value and 2-Additive Measure

As some scholars have noted, criteria are interactive rather than independent in some situations. In such cases, the weighted similarity measures based on an additive measure are unreasonable. To cope with this issue, the Shapley value with respect to a fuzzy measure is a good tool: the fuzzy measure assigns an importance to every combination of criteria, and the Shapley value gives the overall importance of each criterion while accounting for these combinations. Nevertheless, a fuzzy measure is not easy to determine because it is defined on the power set. The 2-additive measure [31] is a good choice, as it reflects the interaction between each pair of criteria and reduces the complexity of determining a fuzzy measure. Based on these facts, we next define Shapley weighted similarity measures based on the 2-additive measure. First, we review the 2-additive measure and its Shapley value.

Definition 12

[31] Let X = {x1, x2, …, xn} be the set of compared objects. \( \mu \) is called a 2-additive measure if, for any \( R \subseteq X \) with \( |R| \ge 2 \), we have

$$ \mu (R) = \sum\nolimits_{\{ x_{i} ,x_{j} \} \subseteq R} {\mu (x_{i} ,x_{j} )} - \left( {|R| - 2} \right)\sum\nolimits_{x_{i} \in R} {\mu (x_{i} )} \tag{23} $$

where \( \mu (x_{i} ) \) is the importance of the object xi, and \( \mu (x_{i} ,x_{j} ) \) is the joint importance of the objects xi and xj for all i, j = 1, 2, …, n with \( i \ne j \).

The main feature of the 2-additive measure is that it needs only \( \frac{n(n + 1)}{2} \) values (n singleton values and \( \frac{n(n - 1)}{2} \) pair values) to determine a fuzzy measure, whereas \( 2^{n} - 2 \) values are needed in the general case.

For example, let X = {x1, x2, x3, x4}. Suppose that the 2-additive measure μ on X is defined as: \( \mu (x_{1} ) = 0.4 \), \( \mu (x_{2} ) = 0.3 \), \( \mu (x_{3} ) = 0.4 \), \( \mu (x_{4} ) = 0.2 \), \( \mu (x_{1} ,x_{2} ) = 0.6 \), \( \mu (x_{1} ,x_{3} ) = 0.7 \), \( \mu (x_{1} ,x_{4} ) = 0.6 \), \( \mu (x_{2} ,x_{3} ) = 0.5 \), \( \mu (x_{2} ,x_{4} ) = 0.6 \), and \( \mu (x_{3} ,x_{4} ) = 0.6 \). According to Eq. (23), we have \( \mu (x_{1} ,x_{2} ,x_{3} ) = 0.7 \), \( \mu (x_{1} ,x_{2} ,x_{4} ) = 0.9 \), \( \mu (x_{1} ,x_{3} ,x_{4} ) = 0.9 \), \( \mu (x_{2} ,x_{3} ,x_{4} ) = 0.8 \), and \( \mu (X) = 1 \).
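The extension of the 2-additive measure from pairs to larger subsets can be checked numerically. The Python sketch below assumes that Eq. (23) is the standard 2-additive formula \( \mu(R) = \sum_{\{x_i,x_j\} \subseteq R} \mu(x_i,x_j) - (|R|-2)\sum_{x_i \in R} \mu(x_i) \); with the values above it reproduces the four three-element values and \( \mu(X) = 1 \). Objects are indexed 1 to 4, and the function name is hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Iterable

# Singleton and pair values of the example 2-additive measure on X = {x1, x2, x3, x4}.
singles: Dict[int, float] = {1: 0.4, 2: 0.3, 3: 0.4, 4: 0.2}
pairs: Dict[FrozenSet[int], float] = {
    frozenset({1, 2}): 0.6, frozenset({1, 3}): 0.7, frozenset({1, 4}): 0.6,
    frozenset({2, 3}): 0.5, frozenset({2, 4}): 0.6, frozenset({3, 4}): 0.6,
}


def two_additive_value(subset: Iterable[int], singles: Dict[int, float],
                       pairs: Dict[FrozenSet[int], float]) -> float:
    """Assumed Eq. (23): sum of pair values in R minus (|R| - 2) times the singleton values in R."""
    members = sorted(set(subset))
    if len(members) < 2:
        raise ValueError("Eq. (23) applies to subsets with at least two objects")
    pair_sum = sum(pairs[frozenset(p)] for p in combinations(members, 2))
    return pair_sum - (len(members) - 2) * sum(singles[i] for i in members)


for subset in ([1, 2, 3], [1, 2, 4], [1, 3, 4], [2, 3, 4], [1, 2, 3, 4]):
    print(subset, round(two_additive_value(subset, singles, pairs), 4))
```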

Definition 13

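[35] Let \( \mu \) be a 2-additive measure on X. Then, the Shapley value for \( \mu \) on X is expressed as:

$$ Sh_{x_{i}} (\mu ,X) = \frac{3 - n}{2}\mu (x_{i} ) + \frac{1}{2}\sum\nolimits_{x_{j} \in X\backslash \{ x_{i} \} } {\left( {\mu (x_{i} ,x_{j} ) - \mu (x_{j} )} \right)} \tag{24} $$

for all i = 1, 2, …, n.

With respect to the above example, following Eq. (24), the Shapley values of xi, i = 1, 2, 3, 4, are \( Sh_{x_{1}} (\mu ,X) = 0.3 \), \( Sh_{x_{2}} (\mu ,X) = 0.2 \) and \( Sh_{x_{3}} (\mu ,X) = Sh_{x_{4}} (\mu ,X) = 0.25 \). Taking the Shapley value of x1 as an example, we have

$$ Sh_{x_{1}} (\mu ,X) = \frac{3 - 4}{2} \times 0.4 + \frac{1}{2}\left[ {(0.6 - 0.3) + (0.7 - 0.4) + (0.6 - 0.2)} \right] = - 0.2 + 0.5 = 0.3. $$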
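A Python sketch of the Shapley value of a 2-additive measure, assuming Eq. (24) takes the form \( Sh_{x_i}(\mu,X) = \frac{3-n}{2}\mu(x_i) + \frac{1}{2}\sum_{x_j \ne x_i}(\mu(x_i,x_j) - \mu(x_j)) \). This is equivalent to the singleton value plus half of its pairwise interaction indices \( \mu(x_i,x_j) - \mu(x_i) - \mu(x_j) \), and it reproduces 0.3, 0.2, 0.25 and 0.25 for the example. The function name is hypothetical.

```python
from typing import Dict, FrozenSet

# Singleton and pair values of the example 2-additive measure on X = {x1, x2, x3, x4}.
singles: Dict[int, float] = {1: 0.4, 2: 0.3, 3: 0.4, 4: 0.2}
pairs: Dict[FrozenSet[int], float] = {
    frozenset({1, 2}): 0.6, frozenset({1, 3}): 0.7, frozenset({1, 4}): 0.6,
    frozenset({2, 3}): 0.5, frozenset({2, 4}): 0.6, frozenset({3, 4}): 0.6,
}


def shapley_2additive(i: int, singles: Dict[int, float],
                      pairs: Dict[FrozenSet[int], float]) -> float:
    """Assumed Eq. (24): (3 - n)/2 * mu(x_i) + 1/2 * sum_{j != i} (mu(x_i, x_j) - mu(x_j))."""
    n = len(singles)
    return (3 - n) / 2 * singles[i] + 0.5 * sum(
        pairs[frozenset({i, j})] - singles[j] for j in singles if j != i
    )


sh = {i: shapley_2additive(i, singles, pairs) for i in singles}
print({i: round(v, 4) for i, v in sh.items()})
print(round(sum(sh.values()), 4))  # efficiency: the Shapley values sum to mu(X)
```

The efficiency check is a convenient sanity test: for any 2-additive measure, the Shapley values add up to \( \mu(X) = 1 \).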

To reflect the interactions and importance of criteria, the 2-additive measure-based Shapley weighted similarity measures are defined as follows:

The 2-additive measure-based Shapley weighted generalized normal similarity measure is

The 2-additive measure-based Shapley weighted Hausdorff normal similarity measure is

The correlation coefficient and 2-additive measure-based Shapley weighted normal similarity measure is

The 2-additive measure-based Shapley weighted minimax normal similarity measure is

where \( Sh_{{x_{i} }} (\mu ,X) \) is the Shapley value of the criterion xi for the 2-additive measure \( \mu \) as shown in Eq. (24) for all i = 1, 2, …, n.

Following the above similarity measures for DHFSs, we can obtain their associated similarity measures for DHFEs. For example, for any two DHFEs \( d_{1} = \left\langle {\tilde{h}_{1} ,\tilde{g}_{1} } \right\rangle \) and \( d_{2} = \left\langle {\tilde{h}_{2} ,\tilde{g}_{2} } \right\rangle \), the generalized normal similarity measure is

where \( \lambda > 0 \), \( \rho^{\lambda}(\tilde{h}_{1},\tilde{h}_{2}) = \frac{1}{2}\left( \frac{1}{|\tilde{h}_{1}|}\sum\nolimits_{\gamma_{1} \in \tilde{h}_{1}} |\gamma_{1} - \gamma_{2}^{\gamma_{1}}|^{\lambda} + \frac{1}{|\tilde{h}_{2}|}\sum\nolimits_{\gamma_{2} \in \tilde{h}_{2}} |\gamma_{2} - \gamma_{1}^{\gamma_{2}}|^{\lambda} \right)^{1/\lambda} \), and \( \rho^{\lambda}(\tilde{g}_{1},\tilde{g}_{2}) = \frac{1}{2}\left( \frac{1}{|\tilde{g}_{1}|}\sum\nolimits_{\eta_{1} \in \tilde{g}_{1}} |\eta_{1} - \eta_{2}^{\eta_{1}}|^{\lambda} + \frac{1}{|\tilde{g}_{2}|}\sum\nolimits_{\eta_{2} \in \tilde{g}_{2}} |\eta_{2} - \eta_{1}^{\eta_{2}}|^{\lambda} \right)^{1/\lambda} \), with \( \gamma_{2}^{\gamma_{1}} \) denoting the element of \( \tilde{h}_{2} \) nearest to \( \gamma_{1} \), and similarly for the other terms.

4 An Approach for Multi-criteria Decision-Making with DHFSs

This section introduces a new multi-criteria decision-making method with DHFSs based on the defined similarity measures. The method requires the weights of criteria; when these are incompletely known, they must be determined first.

4.1 Optimization Models for Ascertaining the Weighting Information

This subsection builds optimization models for ascertaining the weighting information based on the defined similarity measures. Without loss of generality, suppose that there are n alternatives and m criteria, denoted by X = {x1, x2, …, xn} and C = {c1, c2, …, cm}, respectively. Let \( D = \left( {d_{ij} } \right)_{n \times m} \) be the dual hesitant fuzzy decision matrix (DHFDM) offered by the experts, where \( d_{ij} = \left\langle {\tilde{h}_{ij} ,\tilde{g}_{ij} } \right\rangle \) is the DHFE with \( \tilde{h}_{ij} \) and \( \tilde{g}_{ij} \) being the hesitant fuzzy preferred and non-preferred judgements of the alternative xi for the criterion cj given by the experts for all i = 1, 2, …, n, and all j = 1, 2, …, m.

For each criterion cj, we calculate the similarity measure \( S(d_{ij}, d_{kj}) \) between the DHFEs \( d_{ij} \) and \( d_{kj} \), which can be \( S_{1}^{\lambda}(d_{ij}, d_{kj}) \), \( S_{H}(d_{ij}, d_{kj}) \), \( S_{CC}(d_{ij}, d_{kj}) \) or \( S_{MM}(d_{ij}, d_{kj}) \) from Eqs. (15), (16), (17) or (18), where i, k = 1, 2, …, n with \( i \ne k \). Then, the sum of the similarity measures for the criterion cj is \( S_{c_{j}} = \sum\nolimits_{i = 1}^{n - 1} \sum\nolimits_{k = i + 1}^{n} S(d_{ij}, d_{kj}) \). The larger \( S_{c_{j}} \) is, the more alike the alternatives are under cj, and the less cj discriminates among them when ranking; such a criterion should therefore receive relatively small importance. Based on this analysis, we build the following model to determine the 2-additive measure \( \left\{ \begin{aligned} & \mu(c_{j}),\ j = 1, 2, \ldots, m \\ & \mu(c_{k}, c_{j}),\ k, j = 1, 2, \ldots, m \text{ with } k \ne j \end{aligned} \right. \) on the criteria set C:

where the first constraint is Eq. (24), the second to fourth constraints are the necessary and sufficient conditions for a 2-additive measure [31], and \( W_{c_{j}} \) is the known weight range of the criterion cj.
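The aggregated similarity \( S_{c_{j}} \) that drives the model can be sketched in Python as follows. The pairwise DHFE similarity here is a hypothetical stand-in: it assumes \( S = 1 - \frac{1}{2}\left(\rho^{\lambda}(\tilde{h}_{1},\tilde{h}_{2}) + \rho^{\lambda}(\tilde{g}_{1},\tilde{g}_{2})\right) \) with the root-free set distance that reproduces Example 3, and any of the measures in Eqs. (15) to (18) could be passed in instead. The column values in the usage lines are illustrative, not taken from the paper.

```python
from itertools import combinations
from typing import Callable, List, Sequence, Tuple

DHFE = Tuple[List[float], List[float]]  # (membership set, non-membership set)


def set_distance(a: Sequence[float], b: Sequence[float], lam: float = 1.0) -> float:
    """Half the sum of both directed mean lam-th power nearest-element deviations."""
    def directed(p: Sequence[float], q: Sequence[float]) -> float:
        return sum(min(abs(x - y) for y in q) ** lam for x in p) / len(p)
    return 0.5 * (directed(a, b) + directed(b, a))


def dhfe_similarity(d1: DHFE, d2: DHFE, lam: float = 1.0) -> float:
    """Assumed DHFE similarity: 1 minus the mean of the two set distances."""
    return 1.0 - 0.5 * (set_distance(d1[0], d2[0], lam) + set_distance(d1[1], d2[1], lam))


def criterion_similarity_sum(column: Sequence[DHFE],
                             similarity: Callable[[DHFE, DHFE], float] = dhfe_similarity) -> float:
    """S_cj: sum of S(d_ij, d_kj) over all alternative pairs i < k in one criterion column."""
    return sum(similarity(a, b) for a, b in combinations(column, 2))


# Illustrative column for one criterion (three alternatives, made-up values).
column = [([0.5, 0.6], [0.3]), ([0.4], [0.4, 0.5]), ([0.7], [0.2])]
print(round(criterion_similarity_sum(column), 4))
```

Passing the similarity as a parameter keeps the aggregation independent of which of the four measures is chosen.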

Model (M-1) can be further equivalently transformed into the following model:

Solving model (M-2), we obtain the optimal 2-additive measure on the criteria set C, namely \( \mu^{ * } (c_{j} ) \) and \( \mu^{ * } (c_{k} ,c_{j} ) \) for all k, j = 1, 2, …, m with \( k \ne j \). It is noteworthy that when the criteria are independent, model (M-2) reduces to the following model for determining the additive measure \( w = \left( {w_{{c_{1} }} ,w_{{c_{2} }} , \ldots ,w_{{c_{m} }} } \right) \) on the criteria set C:

Once the 2-additive measure or additive measure on the criteria set C is derived by solving model (M-2) or model (M-3), we can apply the defined (Shapley) weighted similarity measures to derive the ranking of alternatives.

4.2 A New Algorithm for Dual Hesitant Fuzzy Multi-criteria Decision-Making

Adopting the defined similarity measures and the models for determining weighting information, we offer the following algorithm for multi-criteria decision-making with dual hesitant fuzzy information, in view of the TOPSIS method [36].

Step 1: Let \( D = \left( {d_{ij} } \right)_{n \times m} \) be the DHFDM, where \( d_{ij} = \left\langle {\tilde{h}_{ij} ,\tilde{g}_{ij} } \right\rangle \) is the DHFE with \( \tilde{h}_{ij} \) and \( \tilde{g}_{ij} \) being the hesitant fuzzy preferred and non-preferred judgement sets of the alternative xi for the criterion cj for all i = 1, 2, …, n, and all j = 1, 2, …, m;

Step 2: If the weighting information on the criteria set C = {c1, c2, …, cm} is exactly known, skip to Step 3. Otherwise, employ model (M-2) or (M-3) to determine the optimal 2-additive measure \( \left\{ \begin{aligned} & \mu^{*}(c_{j}),\ j = 1, 2, \ldots, m \\ & \mu^{*}(c_{k}, c_{j}),\ k, j = 1, 2, \ldots, m \text{ with } k \ne j \end{aligned} \right. \) or the additive measure \( w^{ * } = \left( {w_{{c_{1} }}^{ * } ,w_{{c_{2} }}^{ * } , \ldots ,w_{{c_{m} }}^{ * } } \right) \) on the criteria set C;

Step 3: Use the (Shapley) weighted normal similarity measures to calculate, for each alternative xi, the similarity measure between the DHFS \( d_{i} = \left\{ {d_{i1} ,d_{i2} , \ldots ,d_{im} } \right\} \) and the positive ideal DHFS \( d^{ + } = \left\{ {d_{1}^{ + } ,d_{2}^{ + } , \ldots ,d_{m}^{ + } } \right\} \), and between \( d_{i} \) and the negative ideal DHFS \( d^{ - } = \left\{ {d_{1}^{ - } ,d_{2}^{ - } , \ldots ,d_{m}^{ - } } \right\} \), denoted by \( S\left( {d_{i} ,d^{ + } } \right) \) and \( S\left( {d_{i} ,d^{ - } } \right) \), where \( d_{j}^{ + } = \left\langle {\{ 1\} ,\{ 0\} } \right\rangle \) and \( d_{j}^{ - } = \left\langle {\{ 0\} ,\{ 1\} } \right\rangle \) for all j = 1, 2, …, m;

Step 4: Based on the similarity measures \( S\left( {d_{i} ,d^{ + } } \right) \) and \( S\left( {d_{i} ,d^{ - } } \right) \), calculate the ranking value of each alternative, \( R(x_{i} ) = \frac{{S\left( {d_{i} ,d^{ + } } \right)}}{{S\left( {d_{i} ,d^{ - } } \right) + S\left( {d_{i} ,d^{ + } } \right)}} \) for all i = 1, 2, …, n, and rank the alternatives in descending order of \( R(x_{i} ) \);

Step 5: End.
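Steps 3 and 4 can be sketched in Python as below. The weighted similarity is an assumption, since the weighted measures are not reproduced in this text: the sketch uses \( S(d_{i}, d) = 1 - \sum_{j} w_{j} \cdot \frac{1}{2}\left(\rho^{\lambda}(\tilde{h}_{ij}, \tilde{h}_{j}) + \rho^{\lambda}(\tilde{g}_{ij}, \tilde{g}_{j})\right) \) with the root-free set distance that reproduces Example 3, where the weights may be the additive measure \( w^{*} \) or, for the Shapley variant, the Shapley values of \( \mu^{*} \). All names and the small decision matrix are hypothetical.

```python
from typing import List, Sequence, Tuple

DHFE = Tuple[List[float], List[float]]  # (membership set, non-membership set)

POSITIVE_IDEAL: DHFE = ([1.0], [0.0])  # d_j^+ = <{1}, {0}>
NEGATIVE_IDEAL: DHFE = ([0.0], [1.0])  # d_j^- = <{0}, {1}>


def set_distance(a: Sequence[float], b: Sequence[float], lam: float = 1.0) -> float:
    """Half the sum of both directed mean lam-th power nearest-element deviations."""
    def directed(p: Sequence[float], q: Sequence[float]) -> float:
        return sum(min(abs(x - y) for y in q) ** lam for x in p) / len(p)
    return 0.5 * (directed(a, b) + directed(b, a))


def weighted_similarity(row: Sequence[DHFE], ideal: DHFE,
                        weights: Sequence[float], lam: float = 1.0) -> float:
    """Assumed weighted form: 1 - sum_j w_j * (rho_h + rho_g) / 2 against a constant ideal DHFE."""
    return 1.0 - sum(
        w * 0.5 * (set_distance(d[0], ideal[0], lam) + set_distance(d[1], ideal[1], lam))
        for d, w in zip(row, weights)
    )


def ranking_value(row: Sequence[DHFE], weights: Sequence[float], lam: float = 1.0) -> float:
    """Step 4: R(x_i) = S(d_i, d+) / (S(d_i, d-) + S(d_i, d+))."""
    s_pos = weighted_similarity(row, POSITIVE_IDEAL, weights, lam)
    s_neg = weighted_similarity(row, NEGATIVE_IDEAL, weights, lam)
    return s_pos / (s_neg + s_pos)


# Hypothetical DHFDM with two alternatives and two criteria, weights (0.6, 0.4).
matrix = {"x1": [([0.6, 0.7], [0.2]), ([0.5], [0.3, 0.4])],
          "x2": [([0.4], [0.5]), ([0.6, 0.8], [0.1])]}
weights = [0.6, 0.4]
scores = {x: ranking_value(row, weights) for x, row in matrix.items()}
print(sorted(scores, key=scores.get, reverse=True))
```

An alternative that coincides with the positive ideal gets \( R = 1 \), one that coincides with the negative ideal gets \( R = 0 \), which is the behaviour expected from a TOPSIS-style closeness value.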

5 Two Illustrative Examples

To show the concrete application of the new method and compare it with previous similarity measure-based methods, this section offers two examples.

Example 4

With rising living standards, the car has become an essential means of travel. According to the latest statistics, the number of motor vehicles in China is 325 million, which has greatly changed people’s lifestyles. However, automobiles are the main cause of urban traffic congestion. To ease congestion, China’s major cities continue to improve and build subways. As is well known, metro construction is complex and expensive, so the choice of construction enterprise is very important. After preliminary screening, four construction enterprises are chosen as alternatives X = {x1, x2, x3, x4}. To select the best construction enterprise, an expert team is invited to offer their judgments on four main criteria: c1: construction quality, c2: construction progress, c3: construction cost, and c4: enterprise reputation. If the experts fail to reach an agreement, more than one value is permitted for a judgement. Furthermore, the experts can offer their preferred and non-preferred membership degrees to fully express their judgements. Considering these aspects, DHFEs are a suitable tool. Suppose that the DHFEs of the construction enterprises for the considered criteria are as shown in Table 1. Furthermore, the weights of criteria are incompletely known, where \( W_{{c_{1} }} = [0.2,0.4] \), \( W_{{c_{2} }} = [0.15,0.25] \), \( W_{{c_{3} }} = [0.1,0.3] \) and \( W_{{c_{4} }} = [0.25,0.35] \).

To obtain the ranking of alternatives, the following procedure is needed:

Step 1: Since the DHFDM D is given in Table 1, go to Step 2;

Step 2: Taking Eq. (15) with λ = 1 as an example, the optimal 2-additive measure \( \mu^{ * } \) on the criteria set C obtained from model (M-2) is shown in Table 2.

Table 2 The optimal 2-additive measure \( \mu^{ * } \) on the criteria set C

Based on Eq. (24), the Shapley values of the criteria are

$$ Sh_{{c_{1} }} (\mu ,C) = 0.0583,\;Sh_{{c_{2} }} (\mu ,C) = 0.0583,\;Sh_{{c_{3} }} (\mu ,C) = 0.325,\;Sh_{{c_{4} }} (\mu ,C) = 0.5584; $$

Step 3: Using Eq. (25) with λ = 1, the 2-additive measure-based Shapley weighted normal similarity measures are

$$ \left\{ \begin{aligned} S\left( {d_{1} ,d^{ + } } \right) = 0.8366 \hfill \\ S\left( {d_{2} ,d^{ + } } \right) = 0.7896 \hfill \\ S\left( {d_{3} ,d^{ + } } \right) = 0.8126 \hfill \\ S\left( {d_{4} ,d^{ + } } \right) = 0.7757 \hfill \\ \end{aligned} \right.\;\;{\text{and}}\;\;\left\{ \begin{aligned} S\left( {d_{1} ,d^{ - } } \right) = 0.7182 \hfill \\ S\left( {d_{2} ,d^{ - } } \right) = 0.7592 \hfill \\ S\left( {d_{3} ,d^{ - } } \right) = 0.7297 \hfill \\ S\left( {d_{4} ,d^{ - } } \right) = 0.7438 \hfill \\ \end{aligned} \right.; $$

Step 4: Based on the weighted similarity measures obtained in Step 3, the ranking values of the alternatives are

$$ R(x_{1} ) = 0.5381,\;R(x_{2} ) = 0.5098,\;R(x_{3} ) = 0.5269,\;R(x_{4} ) = 0.5105 $$

Thus, the ranking is \( x_{1} \succ x_{3} \succ x_{4} \succ x_{2} \).
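Step 4 of Example 4 is plain arithmetic on the Step 3 values. The Python lines below recompute the ranking values and the ranking from those reported similarities; no other data are assumed, and the variable names are illustrative.

```python
# Similarities to the positive and negative ideal DHFSs reported in Step 3 of Example 4.
s_pos = {"x1": 0.8366, "x2": 0.7896, "x3": 0.8126, "x4": 0.7757}
s_neg = {"x1": 0.7182, "x2": 0.7592, "x3": 0.7297, "x4": 0.7438}

# Step 4: R(x_i) = S(d_i, d+) / (S(d_i, d-) + S(d_i, d+)).
R = {x: s_pos[x] / (s_neg[x] + s_pos[x]) for x in s_pos}

# A larger R(x_i) means x_i is relatively closer to the positive ideal.
ranking = sorted(R, key=R.get, reverse=True)
for x in ranking:
    print(f"R({x}) = {R[x]:.4f}")
print(" > ".join(ranking))
```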

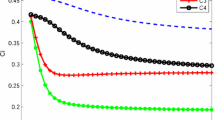

The above ranking is derived from the 2-additive measure-based Shapley weighted normal similarity measure with λ = 1. When different Shapley weighted normal similarity measures are employed, the ranking values and orders are as shown in Table 3.

Table 3 shows that different Shapley weighted normal similarity measures yield different ranking values but the same ranking.

If we instead assume that there is no interaction among the criteria in this example, then, following a procedure similar to the above, the ranking values and orders for different weighted normal similarity measures are obtained as shown in Table 4.

Table 4 shows that different weighted normal similarity measures yield different ranking values and orders. Furthermore, all rankings based on the weighted normal similarity measures differ from that derived from the Shapley weighted normal similarity measures.

Furthermore, when the previous weighted similarity measures are employed with the weights obtained by our method, the results are as shown in Table 5.

Remark 5

Tables 3, 4 and 5 show that the alternative x1 should be selected as the best choice based on the Shapley weighted similarity measures, whereas the alternative x2 should be viewed as the best choice based on the weighted similarity measures. The purpose of evaluating the construction enterprises with the weighted similarity measures is to show the necessity of determining the weights of criteria with their interactions taken into account; otherwise, we may derive an unreasonable ranking and make an incorrect choice. Because the criteria in Example 4 are dependent, we suggest that the DMs use the Shapley weighted similarity measures. For example, there is a complementary interaction between c1: construction quality and c4: enterprise reputation, while there is a redundant interaction between c1: construction quality and c3: construction cost. However, if there is a clear justification for ignoring the interactions among criteria, then the alternative x2 should be viewed as the best choice in most cases.

Besides multi-criteria decision-making, the new method can also be applied to pattern recognition. To illustrate this, we take the example in [26].

Example 5

[26] Suppose that a new metal material B is to be recognized according to four criteria. The criteria values of the new metal material are denoted as:

Furthermore, there are five types of minerals Ai, i = 1, 2, 3, 4, 5, where the criteria values are expressed using DHFEs, listed in Table 6.

Furthermore, assume that the weights of the criteria are incompletely known, where \( W_{{c_{1} }} = [0.3,0.5] \), \( W_{{c_{2} }} = [0.12,0.32] \), \( W_{{c_{3} }} = [0.08,0.28] \) and \( W_{{c_{4} }} = [0.1,0.3] \). To determine which type of mineral the metal material B belongs to, the following procedure is needed.

Step 1: Similar to the analysis for multi-criteria decision-making, we first calculate the similarity measure \( S\left( {D_{i} ,D_{j} } \right) \) between the criteria ci and cj, where Di is the ith column of Table 6, i = 1, 2, 3, 4. For example, using Eq. (15) with λ = 1, the similarity measures between each pair of criteria are \( S\left( {D_{1} ,D_{2} } \right) = 0.8 \), \( S\left( {D_{1} ,D_{3} } \right) = 0.8 \), \( S\left( {D_{1} ,D_{4} } \right) = 0.7775 \), \( S\left( {D_{2} ,D_{3} } \right) = 0.8175 \), \( S\left( {D_{2} ,D_{4} } \right) = 0.8133 \) and \( S\left( {D_{3} ,D_{4} } \right) = 0.84 \). Therefore, the sums of the similarity measures between each criterion and the other criteria are \( S_{{c_{1} }} = 2.3775 \), \( S_{{c_{2} }} = 2.4308 \), \( S_{{c_{3} }} = 2.4575 \) and \( S_{{c_{4} }} = 2.4308 \), respectively;
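The criterion-level sums in Step 1 follow directly from the six pairwise similarities; the Python lines below recompute them. As in the text, \( S_{c_{j}} \) here adds the similarities between \( c_{j} \) and each other criterion column of Table 6; the dictionary layout is only an illustrative choice.

```python
# Pairwise similarities between criterion columns reported in Step 1 (Eq. (15), lambda = 1).
pair_similarity = {
    (1, 2): 0.8, (1, 3): 0.8, (1, 4): 0.7775,
    (2, 3): 0.8175, (2, 4): 0.8133, (3, 4): 0.84,
}
criteria = [1, 2, 3, 4]

# S_cj = sum of the similarities between c_j and every other criterion.
S = {c: sum(v for pair, v in pair_similarity.items() if c in pair) for c in criteria}
for c in criteria:
    print(f"S_c{c} = {S[c]:.4f}")
```

A larger \( S_{c_{j}} \) marks a less discriminating criterion, so c1 (smallest sum) is the most discriminating and c3 (largest sum) the least.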

Step 2: The larger \( S_{{c_{j} }} \) is, the less the criterion cj discriminates, so such a criterion is relatively less important for recognizing the metal material. Using model (M-2), the optimal 2-additive measure \( \mu^{ * } \) on the criteria set C is shown in Table 7.

Table 7 The optimal 2-additive measure \( \mu^{ * } \) on the criteria set C

According to Eq. (24), the Shapley values of criteria are

Step 3: Using Eq. (25) with λ = 1 to calculate the similarity measure between each type of mineral and the recognized material, we derive

$$ S_{1}^{\lambda = 1} (A_{1} ,B) = 0.8491,\;S_{1}^{\lambda = 1} (A_{2} ,B) = 0.7716,\;S_{1}^{\lambda = 1} (A_{3} ,B) = 0.8740,\;S_{1}^{\lambda = 1} (A_{4} ,B) = 0.7195,\;S_{1}^{\lambda = 1} (A_{5} ,B) = 0.8113 $$

Since \( S_{1}^{\lambda = 1} (A_{3} ,B) = \max_{1 \le i \le 5} S_{1}^{\lambda = 1} (A_{i} ,B) \), the recognized material B belongs to the third type of mineral, A3.

When different Shapley weighted normal similarity measures are employed, similarity measures are derived as shown in Table 8.

Similar to Example 4, when we suppose that there is no interaction among criteria, following model (M-3) and the similarity measure for each criterion derived in Step 1, the weight vector on the criteria set is w = (0.5, 0.08, 0.12, 0.3). Using the weighted similarity measures, the similarity measure between each type of mineral and the recognized material is obtained as shown in Table 9.

Tables 8 and 9 all show that the recognized material belongs to the third type of material A3 except for one case.

In this example, when the reviewed similarity measures are employed, the similarity measure between each type of mineral and the recognized material is shown in Table 10.

Remark 6

Tables 8, 9 and 10 indicate that the recognized material belongs to the third type of material A3 based on the Shapley weighted similarity measures. However, based on the weighted similarity measures, the recognized material may belong to the second, third or fourth type. This example also shows that the Shapley weighted similarity measures and the weighted similarity measures may yield different ranking orders and best choices. As in the analysis in Remark 5 for Example 4, since there is no specific justification for assuming that the criteria are independent, it is more reasonable to use the Shapley weighted similarity measures. With this in mind, the material should be recognized as the third type of material A3.

Table 11 summarizes the differences between the new method and previous methods based on similarity measures for DHFSs.

6 Conclusions

Considering the advantages of DHFSs and the limitations of previous research on similarity measures for DHFSs, this paper introduced several new similarity measures that avoid the limitations of previous ones. To reflect the different importance of criteria, two types of weighted similarity measures were defined: one is based on an additive measure, and the other follows the 2-additive measure-based Shapley value. Then, a new method based on similarity measures was proposed. The main contributions are: (1) it offers the first type of similarity measures for DHFSs that does not change the original DHFSs and considers all information offered by the decision-makers; (2) it is the first type of similarity measures for DHFSs that can deal with interactions among criteria; (3) it is the first similarity measure-based method that can deal with the situation where the weighting information with interactive characteristics is incompletely known. Compared with previous dual hesitant fuzzy similarity measures, the new ones may need more computation time; however, with computer support this extra cost is minor, and we think it is worthwhile for deriving more objective and stable results. To show the application of the new algorithm, a multi-criteria decision-making problem and a pattern recognition problem were provided, together with a comparison analysis. The algorithm in Subsection 4.2 is not tailored to a special type of decision-making problem: as long as the DHFDM of a decision-making problem is available, the new method can be used to address it.

It is noteworthy that this paper focuses on similarity measures for DHFSs; other measures for DHFSs, such as distance measures and entropy, can be studied similarly. Furthermore, we will continue to research other methods for DHFSs, including the dual hesitant fuzzy PROMETHEE, ELECTRE, MULTIMOORA and MACBETH methods. We shall also study their applications in practice [37,38,39].

References

Zadeh, L.A.: Fuzzy sets. Inform. Control 8(3), 338–353 (1965)

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20(1), 87–96 (1986)

Atanassov, K.T., Gargov, G.: Interval valued intuitionistic fuzzy sets. Fuzzy Sets Syst. 31(3), 343–349 (1989)

Torra, V.: Hesitant fuzzy sets. Int. J. Intell. Syst. 25(6), 529–539 (2010)

Xia, M.M., Xu, Z.S., Liao, H.C.: Preference relations based on intuitionistic multiplicative information. IEEE Trans. Fuzzy Syst. 21(1), 113–133 (2013)

Jiang, Y., Xu, Z.S., Xu, J.P.: Interval-valued intuitionistic multiplicative sets. Int. J. Uncertain. Fuzz. 22(3), 385–406 (2014)

Chen, Z.C., Liu, P.H., Pei, Z.: An approach to multiple attribute group decision making based on linguistic intuitionistic fuzzy numbers. Int. J. Comput. Intell. Syst. 8(4), 747–760 (2015)

Tang, J., Meng, F.Y., Zhang, Y.L.: Programming model-based group decision making with multiplicative linguistic intuitionistic fuzzy preference relations. Comput. Ind. Eng. 136, 212–224 (2019)

Zhu, B., Xu, Z.: Analytic hierarchy process-hesitant group decision making. Eur. J. Oper. Res. 239(3), 794–801 (2014)

Chen, N., Xu, Z.S., Xia, M.M.: Interval-valued hesitant preference relations and their applications to group decision making. Knowl. Based Syst. 37, 528–540 (2013)

Zhang, Y.N., Tang, J., Meng, F.Y.: Programming model-based method for ranking objects from group decision making with interval-valued hesitant fuzzy preference relations. Appl. Intell. 49(3), 837–857 (2019)

Singh, P.: A new method for solving dual hesitant fuzzy assignment problems with restrictions based on similarity measure. Appl. Soft Comput. 24, 559–571 (2014)

Tang, J., Meng, F.Y.: Decision making with multiplicative hesitant fuzzy linguistic preference relations. Neural Comput. Appl. 31(7), 2749–2761 (2017)

Tang, J., Meng, F.Y., Zhang, S.L., An, Q.: Group decision making with interval linguistic hesitant fuzzy preference relations. Expert Syst. Appl. 119, 231–246 (2019)

Zhu, B., Xu, Z., Xia, M.M.: Dual hesitant fuzzy sets. J. Appl. Math. 2012, 1–13 (2012)

Wang, H.J., Zhao, X.F., Wei, G.W.: Dual hesitant fuzzy aggregation operators in multiple attribute decision making. J. Intell. Fuzzy Syst. 26(5), 2281–2290 (2014)

Zhao, H., Xu, Z.S., Liu, S.S.: Dual hesitant fuzzy information aggregation with Einstein t-conorm and t-norm. J. Syst. Sci. Syst. Eng. 26(2), 240–264 (2017)

Wang, L., Shen, Q.G., Zhu, L.: Dual hesitant fuzzy power aggregation operators based on Archimedean t-conorm and t-norm and their application to multiple attribute group decision making. Appl. Soft Comput. 38, 23–50 (2016)

Ren, Z.L., Xu, Z.S., Wang, H.: Dual hesitant fuzzy VIKOR method for multi-criteria group decision making based on fuzzy measure and new comparison method. Inform. Sci. 388–389, 1–16 (2017)

Ju, Y.B., Yang, S.H., Liu, X.Y.: Some new dual hesitant fuzzy aggregation operators based on Choquet integral and their applications to multiple attribute decision making. J. Intell. Fuzzy Syst. 27(6), 2857–2868 (2014)

Qu, G.H., Zhang, H.P., Qu, W., Zhang, Z.H.: Induced generalized dual hesitant fuzzy Shapley hybrid operators and their application in multi-attributes decision making. J. Intell. Fuzzy Syst. 31(1), 633–650 (2016)

Yu, D.J., Li, D.F., Merigo, J.M.: Dual hesitant fuzzy group decision making method and its application to supplier selection. Int. J. Mach. Learn. Cyber. 7(5), 819–831 (2016)

Ren, Z.L., Wei, C.P.: A multi-attribute decision-making method with prioritization relationship and dual hesitant fuzzy decision information. Int. J. Mach. Learn. Cyber. 8(3), 755–763 (2017)

Zhang, Y.T., Wang, L., Yu, X.H., Yao, C.H.: A new concept of cosine similarity measures based on dual hesitant fuzzy sets and its possible applications. Cluster Comput. 22(6), S15483–S15492 (2019)

Singh, P.: Distance and similarity measures for multiple-attribute decision making with dual hesitant fuzzy sets. Comput. Appl. Math. 36(1), 111–126 (2017)

Su, Z., Xu, Z.S., Liu, H.F., Liu, S.S.: Distance and similarity measures for dual hesitant fuzzy sets and their applications in pattern recognition. J. Intell. Fuzzy Syst. 29(2), 731–745 (2015)

Chen, Y.F., Peng, X.D., Guan, G.H., Jiang, H.D.: Approaches to multiple attribute decision making based on the correlation coefficient with dual hesitant fuzzy information. J. Intell. Fuzzy Syst. 26(5), 2547–2556 (2014)

Tyagi, S.K.: Correlation coefficient of dual hesitant fuzzy sets and its applications. Appl. Math. Modell. 39(22), 7082–7092 (2015)

Ye, J.: Correlation coefficient of dual hesitant fuzzy sets and its application to multiple attribute decision making. Appl. Math. Modell. 38(2), 659–666 (2014)

Meng, F.Y., Wang, C., Chen, X.H., Zhang, Q.: Correlation coefficients of interval-valued hesitant fuzzy sets and their application based on Shapley function. Int. J. Intell. Syst. 3(1), 43 (2016)

Grabisch, M.: k-order additive discrete fuzzy measures and their representation. Fuzzy Sets Syst. 92(2), 167–189 (1997)

Shapley, L.S.: A value for n-person games. Princeton University Press, Princeton (1953)

Zhao, N., Xu, Z.S., Liu, F.J.: Group decision making with dual hesitant fuzzy preference relations. Cogn. Comput. 8(6), 1119–1143 (2016)

Huang, M.J., Li, K.W.: A novel approach to characterizing hesitations in intuitionistic fuzzy numbers. J. Syst. Sci. Syst. Eng. 22(3), 283–294 (2013)

Meng, F.Y., Tang, J.: Interval-valued intuitionistic fuzzy multi-criteria group decision making based on cross entropy and Choquet integral. Int. J. Intell. Syst. 28(12), 1172–1195 (2013)

Hwang, C.L., Yoon, K.: Multiple attribute decision making: methods and applications. Springer, New York (1981)

Zhang, Y.L., Zhang, X.L., Li, M., Liu, Z.M.: Research on heat transfer enhancement and flow characteristic of heat exchange surface in cosine style runner. Heat Mass Transf. 55, 3117–3131 (2019)

Zhang, Y.L., Huang, P.: Influence of mine shallow roadway on airflow temperature. Arab. J. Geosci. (2020). https://doi.org/10.1007/s12517-019-4934-7

Zhang, X.L., Zhang, Y.L., Liu, Z.M., Liu, J.: Analysis of heat transfer and flow characteristics in typical cambered ducts. Int. J. Therm. Sci. 150, 106226 (2020)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 71571192), the Beijing Intelligent Logistics System Collaborative Innovation Center (No. 2019KF-09), and the Major Project for National Natural Science Foundation of China (No. 71790615).

Cite this article

Yuan, R., Meng, F. New Similarity Measures for Dual Hesitant Fuzzy Sets and Their Application. Int. J. Fuzzy Syst. 22, 1851–1867 (2020). https://doi.org/10.1007/s40815-020-00910-0
