Abstract

In light of the remarkable diversity of data, an interesting and challenging problem arises: how to describe and concisely interpret them. The description pursued in this study is set in the framework of information granules. The study develops a general scheme composed of two functional phases: (i) clustering of data and of features, which forms segments of the original data and delivers a meaningful partition of data, and (ii) development of information granules. In both phases, we discuss a suite of performance indexes quantifying the quality of the segments of data and of the resulting information granules. Along this line, collections of information granules and their mutual relationships are discussed. A series of publicly available data sets is used in the experiments: their granular signature is quantified, and the quality of these findings is analyzed.


1 Introduction

Data analysis and data analytics, in general, are inherently aimed at revealing and describing interpretable and stable relationships among variables, as well as at quantifying their changes over time and space. The large volumes of data and their diversity bring a genuine need for a flexible, user-centric and computationally efficient environment that produces meaningful results.

The key research hypothesis is that, in realizing the above agenda of data analytics, a multiview perspective [1,2,3,4] of data built with information granules plays a pivotal role at both the methodological and the algorithmic level of the ensuing constructs. Forming and engaging a multiview organization of data processing contributes tangibly to solving a spectrum of data analysis tasks efficiently; in particular, it facilitates a thorough user-centric interpretation of results and produces readable yet fully legitimate outcomes supported by the available experimental evidence. The varying (adjustable) perspective delivered by information granules helps establish a sound tradeoff between the representation capabilities of the various views of the data and the efficiency of fundamental categories of data science tasks such as association analysis, classification, prediction, link analysis and others.

Another important research hypothesis is that by engaging multiple views of the same data, we establish a coherent and holistic view of the data and of the ensuing models. Data are represented through a collection of information granules. The diversity of the constructed granules stems from the fact that they are built on subsets of data and subsets of features, and the quality of each granule is assessed.

The term multiview data analysis has been used in the literature before, though with a different meaning. The study reported in [5] offers an interesting view focused on feature selection. In our case, the multiview character of information granules concerns the perspective established by the mutual organization of sections (segments) of the data and subsets of features. Furthermore, the multiview is formed in the conceptually appealing and computationally sound setting of information granules.

A number of well-focused research aims of this study are presented (subsequently leading to the formulation of a coherent and comprehensive methodological framework of the investigation):

i. A multiview formation of data subspaces leading to dimensionality reduction, enhanced readability (interpretability) of the data and increased efficiency of the ensuing analysis (e.g., prediction, association analysis, or classification). The varying (adjustable) levels of detail captured by the individual views (perspectives) help reduce the computing overhead of the individual optimization tasks.

ii. The multiview facets built for the data also involve granulation of the feature space, leading to so-called meta-features (viz. collections of features that exhibit some semantics and offer a view of the data at a higher level of abstraction). Both categories of views outlined in (i)–(ii) give rise to information granules of meta-features and to information granules established in the joint data-feature space. Each view (perspective) yields its own focused perception of the same data and of the results produced in this setting.

iii. Formation of optimization criteria quantifying the quality and practical relevance of the multiview perspective of the data. The essential criteria concern the representation capabilities of the data (commonly linked to the inevitable compression error) and the relevance of the established cognitive perspective in solving the main categories of data analysis problems. An important and intriguing problem is how to balance these two requirements and cope with their conflicting nature (higher representation capabilities do not directly translate into more efficient and computationally sound data analysis).

iv. While numeric prototypes are sound initial descriptors of segments of data and features, they are elevated to granular counterparts, which offer more abstract and holistic descriptors of the data.

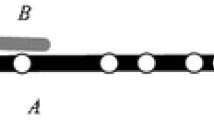

The ultimate objective is to derive structural information [6] in the data and feature (attribute) space and to construct information granules on combinations of subsets of data and features. Their quality is evaluated with respect to various criteria, depending on the further use of the information granules in system modeling (classification and prediction) and data representation. The constructed information granules are ranked with respect to the pertinent performance criteria (reconstruction-based, prediction-oriented, or classification-based). The overall processing scheme that moves from data to information granules is displayed in Fig. 1, where the main phases are highlighted along with the numeric and granular descriptors. To the best of our knowledge, the comprehensive concept and its algorithmic environment have not been investigated before, which constitutes the originality of the scheme.

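The data-feature segmentation can be concisely captured in the following way:

\[ X \rightarrow \{(D_{i}, F_{j})\}, \quad i = 1, 2, \ldots, c; \; j = 1, 2, \ldots, r, \]

where the data and feature sets are exhaustive and mutually exclusive, namely

\[ \bigcup_{i=1}^{c} D_{i} = X, \quad D_{i} \cap D_{k} = \emptyset \; (i \neq k); \qquad \bigcup_{j=1}^{r} F_{j} = F, \quad F_{j} \cap F_{l} = \emptyset \; (j \neq l), \]

where X denotes the whole data set, F the set of all features, and c and r are the numbers of segments in the data space and the feature space, respectively.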

The study offers some original insights into the problem of data description that have not been studied in the past: (i) the development of information granules in the data and feature space delivers a new focused view of the essence of the overall data set; (ii) the ensuing information granules built on the basis of numeric prototypes establish a so-called granular blueprint of the data and help focus on the essence of the relationships present there; and (iii) classifiers and predictors are constructed at the granular level by engaging information granules as the backbone of such constructs.

To systematically organize the presentation of the concepts and their construction, the paper is structured as follows. Section 2 elaborates on the development of subsets of data and features (data views) with the use of fuzzy clustering, more specifically Fuzzy C-Means (FCM) [7]. Subsequently, in Sect. 3, these views are characterized through several performance indexes, namely the reconstruction error, the classification content and the prediction content of the numeric representatives of data views. Section 4 is devoted to the construction of information granules through the principle of justifiable granularity. Information granules form a blueprint of classifiers and predictors; these topics are covered in Sect. 5. Experiments using publicly available data are presented in Sect. 6. Conclusions and directions of future research are included in Sect. 7.

2 Development of Subsets of Data (Clusters) Through Clustering Completed in Data Space and Feature Space

Information granules are commonly constructed with the help of clustering techniques [8], regarded as a prerequisite design vehicle and a sound departure point for further constructs. Here, we consider the Fuzzy C-Means (FCM) algorithm as a representative clustering vehicle. While the FCM is commonly used to cluster data, it can also be used to cluster features, viz. to build collections of features. In what follows, we consider patterns (data) x1, x2,…, xN expressed in the n-dimensional space of real numbers Rn. Recall that clustering realized by the FCM algorithm returns a collection of prototypes and a partition matrix. The number of clusters in the data space is set to c, and the number of clusters in the feature space is set to r. Next, we recall the essence of building data segments and feature segments.

2.1 Clustering in the Data Space

The FCM is guided by the following well-known objective function:

\[ Q = \sum_{i=1}^{c} \sum_{k=1}^{N} u_{ik}^{m} \, ||x_{k} - v_{i}||^{2}, \]

where c stands for the number of clusters, m is a fuzzification coefficient (m > 1) and ||.|| is a weighted Euclidean distance [9, 10], namely \(||\varvec{a} - \varvec{b}||^{2} = \sum\nolimits_{j = 1}^{n} {\frac{{(a_{j} - b_{j} )^{2} }}{{\sigma_{j}^{2} }}}\), dim(a) = n, where σj is the standard deviation of the jth variable (so each squared difference is weighted by \(1/\sigma_{j}^{2}\)). The partition matrix U = [uik] satisfies \(\sum\nolimits_{i = 1}^{c} u_{ik} = 1\) for each k. The optimization (viz. partitioning the data) carried out in the data space is realized iteratively: one starts with a randomly initialized partition matrix U and then updates the parameters to be optimized, viz. the partition matrix and the collection of the prototypes v1, v2,…, vc.
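For reference, minimizing the objective function above (denoted Q in the Appendix) subject to \(\sum\nolimits_{i = 1}^{c} u_{ik} = 1\) leads to the standard FCM alternating updates [7]. With the diagonal (variance-based) weighting used here, the prototype update remains a membership-weighted mean, since each coordinate of vi can be optimized separately:

\[ v_{i} = \frac{\sum_{k=1}^{N} u_{ik}^{m} x_{k}}{\sum_{k=1}^{N} u_{ik}^{m}}, \qquad u_{ik} = \left[ \sum_{j=1}^{c} \left( \frac{||x_{k} - v_{i}||}{||x_{k} - v_{j}||} \right)^{2/(m-1)} \right]^{-1}, \]

i = 1, 2, …, c; k = 1, 2, …, N. The two updates are repeated until the changes of U fall below a small threshold.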

Note that the partition matrix is fuzzy, viz. its entries assume values between 0 and 1. In other words, fuzzy sets formed by the FCM embrace almost all data, but with different degrees of membership [11]. To form the subsets of data, we make them Boolean (two-valued) by admitting to the ith subset those data that belong to the ith cluster to the highest extent, viz. \(D_{i} = \{x_{k} \mid u_{ik} = \max_{j = 1, 2, \ldots, c} u_{jk}\}\).

In conclusion, one can regard the prototypes vi as the concise numeric descriptors of Di. The prototypes are just a manifestation of the data composing the clusters and as such are the most meaningful outcomes of fuzzy clustering to be used in further investigations.
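A minimal NumPy sketch of the data-space step follows. It assumes the standard FCM alternating updates, the variance-weighted distance of this section and a random initialization of U; the names `fcm` and `crisp_segments` are hypothetical and this is not the authors' implementation. It returns the prototypes, the fuzzy partition matrix, and the Boolean subsets Di obtained with the maximum-membership rule (cluster indices are 0-based).

```python
import numpy as np


def fcm(X, c, m=2.0, n_iter=200, tol=1e-6, seed=0):
    """Fuzzy C-Means with the variance-weighted Euclidean distance.

    X: (N, n) data matrix. Returns prototypes V (c, n) and partition matrix U (c, N).
    Assumes no feature is constant (sigma_j > 0).
    """
    rng = np.random.default_rng(seed)
    w = 1.0 / X.var(axis=0)                          # weights 1 / sigma_j^2
    U = rng.random((c, X.shape[0]))
    U /= U.sum(axis=0, keepdims=True)                # each column sums to 1
    for _ in range(n_iter):
        Um = U ** m
        V = (Um @ X) / Um.sum(axis=1, keepdims=True)     # prototype update
        # d2[i, k] = ||x_k - v_i||^2 with the weighted norm
        d2 = (((X[None, :, :] - V[:, None, :]) ** 2) * w).sum(axis=2)
        d2 = np.fmax(d2, 1e-12)                      # guard against x_k == v_i
        inv = d2 ** (-1.0 / (m - 1.0))
        U_new = inv / inv.sum(axis=0, keepdims=True)     # membership update
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    Um = U ** m
    V = (Um @ X) / Um.sum(axis=1, keepdims=True)     # prototypes consistent with final U
    return V, U


def crisp_segments(U):
    """Boolean subsets D_i: each x_k goes to the cluster of its highest membership."""
    labels = U.argmax(axis=0)
    return labels, [np.flatnonzero(labels == i) for i in range(U.shape[0])]
```

For example, `V, U = fcm(X, c=7)` followed by `labels, D = crisp_segments(U)` yields the index sets of the data segments; ties in membership are broken by taking the lowest cluster index.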

2.2 Clustering in the Feature Space

When it comes to revealing structure in the feature space, we reformulate the problem by changing the objects subject to clustering: now the features themselves are clustered. Let us organize the original data into vectors positioned in RN, namely z1, z2,…, zn, where zj = [x1j x2j … xNj], j = 1, 2,…, n, collects the values of the jth feature over all N data.

The objective function guiding the process of clustering of features (thus building subsets of features) is expressed as follows:

Here, the distance is expressed as follows:

The partition matrix G = [gij] conveys crucial information about the subsets of features forming so-called meta-features. The features belonging to the jth cluster to the highest extent are denoted by Fj. Since each feature is assigned to the cluster of its highest membership in the partition matrix G, the subsets Fj, Fl, etc., are mutually disjoint.

In summary, clustering completed in the data and feature spaces produces data sets and feature sets. We form all possible combinations of the subsets produced in the data space and the feature space. For instance, the subset (Di, Fj) describes the data belonging to Di restricted to the features belonging to Fj. Having c and r clusters in the data and feature space, respectively, we obtain cr subsets (segments) in the Cartesian product of these two spaces. In what follows, we evaluate the quality of such subsets; the pertinent measures allow one to order the subsets and examine their distribution.
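The feature-space step and the formation of the cr segments can be sketched in the same spirit. The distance used for feature clustering is not reproduced in this text, so this sketch assumes that each feature vector zj is z-scored and compared with the plain Euclidean distance, with a compact FCM loop run for a fixed number of iterations. The names `cluster_features` and `form_segments` are hypothetical.

```python
import numpy as np


def cluster_features(X, r, m=2.0, n_iter=200, seed=0):
    """Cluster the n features of X (N, n) into r groups; returns feature labels.

    Each feature becomes an object z_j in R^N (a column of X). Assumption: the
    z_j are z-scored so that features are grouped by their pattern over the data
    (no feature may be constant).
    """
    Z = X.T                                          # (n, N): row j is z_j
    Z = (Z - Z.mean(axis=1, keepdims=True)) / Z.std(axis=1, keepdims=True)
    rng = np.random.default_rng(seed)
    G = rng.random((r, Z.shape[0]))
    G /= G.sum(axis=0, keepdims=True)                # partition matrix G (r, n)
    for _ in range(n_iter):
        Gm = G ** m
        P = (Gm @ Z) / Gm.sum(axis=1, keepdims=True)     # feature-cluster prototypes
        d2 = np.fmax(((Z[None, :, :] - P[:, None, :]) ** 2).sum(axis=2), 1e-12)
        inv = d2 ** (-1.0 / (m - 1.0))
        G = inv / inv.sum(axis=0, keepdims=True)
    return G.argmax(axis=0)                          # F_j via the maximum-membership rule


def form_segments(data_labels, feature_labels, c, r):
    """All c*r segments (D_i, F_j) as (row indices, column indices)."""
    return {(i, j): (np.flatnonzero(data_labels == i),
                     np.flatnonzero(feature_labels == j))
            for i in range(c) for j in range(r)}
```

Each dictionary entry can be used directly as `X[np.ix_(rows, cols)]` to extract the data of one segment.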

3 Characterization of Data Views (Di, Fj)

The performance of each cluster (data view) can be evaluated in various ways. Depending upon applications, there are several main indexes to be considered: (i) reconstruction error, (ii) classification content, and (iii) prediction capabilities.

First, we elaborate on the reconstruction criterion. In total there are cr information granules (clusters), each associated with its reconstruction error. The results obtained for the corresponding clusters are arranged in matrix form, organizing the results for all combinations of Di and Fj.

3.1 Reconstruction Error

Denote the reconstruction error produced for (Di, Fj) by Vij, i = 1, 2, …, c; j = 1, 2, …, r.

This error expresses the representation capabilities of the prototype vij associated with (Di, Fj) by computing the following expression Vij:

As in the clustering algorithm, the distance ||.|| is the weighted Euclidean distance involving the standard deviations of the variables; let us emphasize that the calculations are completed only for the features forming Fj. The prototype vij standing in (6) is computed as follows:

\[ v_{ij,l} = \frac{1}{N_{i}} \sum_{x_{k} \in D_{i}} x_{kl}, \]

where Ni is the number of data in Di and l runs through the indexes of the features forming Fj. Obviously, the coordinates of vij correspond to those variables that form Fj. We organize the values of the reconstruction error into a c by r matrix containing the values of Vij. Furthermore, the values of Vij can be arranged in increasing order, ranking the numeric descriptors from the most relevant ones (viz. those with the smallest values of this criterion).
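A sketch of the reconstruction matrix and its ranking follows. It assumes that (6) averages the weighted squared distances over the Ni data in Di (the normalization of (6) is not reproduced here; a plain sum would rescale each row by Ni) and that the weights are the global variances used in clustering. `reconstruction_matrix` and `rank_segments` are hypothetical helpers.

```python
import numpy as np


def reconstruction_matrix(X, data_labels, feature_labels, c, r):
    """c x r matrix of reconstruction errors V_ij and the prototypes v_ij.

    Assumes every D_i is non-empty and no feature of X is constant.
    """
    sigma2 = X.var(axis=0)                           # sigma_l^2 over the whole data set
    V = np.zeros((c, r))
    prototypes = {}
    for i in range(c):
        rows = X[data_labels == i]                   # data in D_i
        for j in range(r):
            cols = np.flatnonzero(feature_labels == j)    # features in F_j
            seg = rows[:, cols]
            v_ij = seg.mean(axis=0)                  # prototype: mean over D_i on F_j
            prototypes[(i, j)] = v_ij
            d2 = ((seg - v_ij) ** 2 / sigma2[cols]).sum(axis=1)
            V[i, j] = d2.mean()                      # assumed normalization: average over D_i
    return V, prototypes


def rank_segments(V):
    """Segments (i, j) ordered from the smallest (best) to the largest V_ij."""
    order = np.argsort(V, axis=None, kind="stable")
    return [tuple(int(a) for a in np.unravel_index(k, V.shape)) for k in order]
```

The stable sort keeps ties in row-major order, so segments with equal errors are listed in the order (D1, F1), (D1, F2), … .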

Furthermore, depending on the nature of the data under consideration, the quality of information granules can be assessed by viewing their discriminatory and predictive content (abilities).

3.2 Classification Content of Information Granules

When dealing with a classification problem, one determines the class content of an information granule. Consider that in the classification problem we encounter t classes ω1, ω2,…, ωt. The quality of the information granule (cluster) formed by (Di, Fj) is assessed by looking at the distribution of the data in (Di, Fj) across the classes. Since this distribution depends only on the data in Di and not on the features in Fj, in the matrix of segments of data and features the result is the same across all columns of a given row.

Then we calculate the probabilities of the classes present in this information granule, pi = [pi1 pi2 … pit], i = 1, 2,…, c. The less homogeneous the information granule is, the higher its vagueness becomes. The quantification is realized by means of the entropy [12] measure defined as follows:

The vagueness of the ith granule is expressed as follows:
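A sketch of the class content follows, assuming integer class labels 0, …, t−1 and taking the Shannon entropy in bits of pi as the vagueness Ci of the ith granule (the exact forms of the entropy and vagueness measures are not reproduced in this text). All function names are hypothetical.

```python
import numpy as np


def class_probabilities(y, data_labels, c, t):
    """Row i holds p_i = [p_i1, ..., p_it] with p_il = n_il / N_i (classes 0..t-1)."""
    P = np.zeros((c, t))
    for i in range(c):
        counts = np.bincount(y[data_labels == i], minlength=t)    # n_i1, ..., n_it
        P[i] = counts / counts.sum()                               # assumes N_i > 0
    return P


def entropy(p, base=2.0):
    """Shannon entropy of a probability vector, using the convention 0 log 0 = 0."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-(p * np.log(p)).sum() / np.log(base))


def vagueness(P):
    """Assumed vagueness C_i of each data cluster: the entropy of its class distribution."""
    return np.array([entropy(row) for row in P])
```

A pure granule (all data from one class) has zero vagueness, while a granule split evenly between two classes reaches 1 bit; sorting `vagueness(P)` in increasing order reproduces the kind of ranking used for the classification data sets.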

3.3 Predictive Content of Information Granules

For prediction problems, the performance index is formed by looking at the diversity of the output data falling within the bounds of the information granules. The diversity is quantified by means of the variance of the output variable of the data falling within the bounds of (Di, Fj). In more detail, recall that the data come in the form (xk, yk), where yk is the output variable. We calculate the variance

where

and

i = 1, 2,…,c.
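The predictive content can be sketched as follows, assuming the population normalization 1/Ni for the variance (the exact normalization of (10) is not reproduced here). Since yk does not depend on the features, the value is shared by all segments (Di, Fj) in row i. `output_variance` is a hypothetical helper.

```python
import numpy as np


def output_variance(y, data_labels, c):
    """Mean and variance of the output y over each data cluster D_i (assumes N_i > 0)."""
    means = np.zeros(c)
    variances = np.zeros(c)
    for i in range(c):
        y_i = y[data_labels == i]
        means[i] = y_i.mean()                                 # average output in D_i
        variances[i] = ((y_i - means[i]) ** 2).mean()         # 1/N_i normalization assumed
    return means, variances
```

Ordering the clusters by `np.argsort(variances)` lists the most homogeneous (most predictive) granules first.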

4 Construction of Information Granules

The data subsets (segments) (Di, Fj) embracing some data and formed over a certain collection of features give rise to information granules. The granules are built with the use of the principle of justifiable granularity [13,14,15,16].

In a nutshell, this principle produces an information granule in such a way that it meets the requirements of coverage and specificity, whose product is maximized; see Fig. 2.

The design of an information granule is realized in such a way that the granule is (i) experimentally justifiable and (ii) semantically sound. Experimental justification means that enough data are embraced (contained) in the constructed granule to make its existence legitimate in terms of the experimental data. Semantic soundness means that the granule has to exhibit some interpretation capabilities and its precision needs to be sufficiently high. The coverage is expressed in the following way:

The interpretation of the coverage criterion requires some attention. This criterion quantifies the amount of experimental evidence behind the constructed information granule. In more detail, we count the data whose distance computed over all features present in Fj, say \(||x_{k} - v_{ij} ||_{{F_{j} }} = \sum\nolimits_{l = 1}^{{n_{j} }} {\frac{{(x_{kl} - v_{ij,l} )^{2} }}{{\sigma_{il}^{2} }}}\) with \(\sigma_{il}\) being the standard deviation of the lth feature computed over the data residing within the corresponding segment, is equal to or smaller than \(n_{j} \rho_{ij}^{2}\), the threshold implied by the radius \(\rho_{ij}\) of the constructed information granule; here \(n_{j}\) is the number of features in Fj.

The specificity, regarded as a measure of precision, is given as follows:

Note that the highest specificity is achieved for the radius set to zero. However, in this case, the coverage is practically equal to zero. On the other hand, the highest coverage implies a zero value of specificity. An increase of coverage implies a decrease of specificity and vice versa. As these criteria are in conflict, one has to proceed with a bi-criteria optimization or formulate the problem as a scalar optimization by taking an aggregate of the criteria. The product of coverage and specificity serves as a viable aggregate here.

An information granule Gij associated with (Di, Fj) is the pair (vij, ρij), where the radius ρij is determined by solving the optimization problem

\[ \rho_{ij} = \arg\max_{\rho \geq 0} \left[ \text{cov}(\rho) \cdot \text{sp}(\rho) \right]. \]
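The principle of justifiable granularity can be sketched as a one-dimensional search over the radius. Coverage follows the description above and is assumed here to be the fraction of the data of the segment whose distance over Fj does not exceed nj ρ². The specificity formula is not reproduced in this text, so this sketch assumes sp(ρ) = 1 − ρ/ρmax, with ρmax the smallest radius covering all data of the segment. Under that assumption the product is maximized at one of the per-point critical radii, because between consecutive critical radii coverage is constant while specificity decreases. `justifiable_radius` is a hypothetical helper.

```python
import numpy as np


def justifiable_radius(seg, v, sigma):
    """Optimal radius of the granule built for one segment (D_i, F_j).

    seg: (N_i, n_j) data of D_i restricted to F_j; v: prototype v_ij (n_j,);
    sigma: per-feature standard deviations within the segment (n_j,).
    Returns (rho, coverage, specificity) maximizing coverage * specificity.
    """
    n_j = seg.shape[1]
    sigma = np.where(sigma > 0, sigma, 1.0)          # guard constant features
    d = (((seg - v) / sigma) ** 2).sum(axis=1)       # ||x_k - v_ij||_{F_j}
    s = np.sqrt(d / n_j)                             # x_k covered iff d_k <= n_j * rho^2, i.e. s_k <= rho
    rho_max = s.max()
    if rho_max == 0.0:                               # all data coincide with v
        return 0.0, 1.0, 1.0
    best, best_score = (0.0, 0.0, 0.0), -1.0
    # Coverage is a step function jumping at the s_k while the assumed
    # specificity decreases in rho, so the optimum sits at one of the s_k.
    for rho in np.unique(s):
        cov = float(np.mean(s <= rho))
        sp = float(1.0 - rho / rho_max)              # assumed specificity
        if cov * sp > best_score:
            best, best_score = (float(rho), cov, sp), cov * sp
    return best
```

In practice `v` and `sigma` would be the segment mean and standard deviation, e.g. `seg.mean(axis=0)` and `seg.std(axis=0)`; the returned coverage and specificity are the coordinates of the granule in the coverage-specificity plane.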

The higher the value of the optimized product of coverage and specificity, the more suitable (relevant) the constructed information granule becomes. Proceeding with the constructed information granules (after maximization of (13)), we can conveniently display them in the coverage-specificity plane; see Fig. 3. The location of information granules helps identify the best of them in terms of the specificity and coverage criteria.

5 Granular Predictors and Classifiers

The collection of information granules Gij = (vij, ρij), i = 1, 2,…, c; j = 1, 2,…, r, forming the concise description of data is regarded as a set of building modules (a blueprint) that can give rise to granular predictors and classifiers. We briefly outline the essence of the underlying architecture, noting the role of the granules as the skeleton of the construct.

5.1 Predictors

Let us consider that for each information granule there is a numeric representative of the output variable. Any input x is matched against the individual information granules, giving rise to the corresponding activation (matching) levels u1, u2, …, ucr:

The prediction result is computed by taking a linear combination of the numeric representatives of the individual information granules and their radii, namely

where

Thus, the prediction result arises as information granule \(\hat{Y} = (\hat{y},\hat{\rho }).\)
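A sketch of the granular predictor follows. The activation formula and the normalization following "where" are not reproduced in this text, so this sketch assumes FCM-style activations computed from squared distances averaged over the features of each granule (so that granules built over different numbers of features are comparable) and normalized to sum to one. The numeric output representative of each granule (for instance, the mean output in Di) is taken as given. `granular_predict` and the granule tuple layout are hypothetical.

```python
import numpy as np


def granular_predict(x, granules, sigma, m=2.0):
    """Granular prediction (y_hat, rho_hat) for one input x.

    granules: list of (feature_indices, prototype, radius, y_representative);
    sigma: per-feature standard deviations used to scale distances.
    """
    d2 = np.array([np.mean(((x[f] - v) / sigma[f]) ** 2) for f, v, _, _ in granules])
    d2 = np.fmax(d2, 1e-12)                    # input on a prototype -> dominant activation
    inv = d2 ** (-1.0 / (m - 1.0))
    u = inv / inv.sum()                        # activation levels u_1, ..., u_cr (sum to 1)
    y_rep = np.array([g[3] for g in granules])
    radii = np.array([g[2] for g in granules])
    return float(u @ y_rep), float(u @ radii)  # linear combinations of outputs and radii
```

An input halfway between two granules activates both equally, so its prediction is the average of their output representatives and radii.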

5.2 Classifiers

As presented so far, each information granule comes with a vector of class probabilities pi = [pi1 pi2 … pit], i = 1, 2,…, c, obtained by counting the patterns belonging to the individual classes [17]. More specifically, denoting by Ni the number of data contained in Di and by ni1, ni2,…, nit the numbers of these data belonging to the corresponding classes, the vector pi is composed of the ratios

\[ p_{il} = \frac{n_{il}}{N_{i}}, \quad l = 1, 2, \ldots, t. \]

The process of class assignment proceeds in a similar way as discussed in case of predictors. The final class membership p is computed in the following way:

Using the maximum rule, one selects the class \(i_{0}\) for which the coordinate of p attains the highest value, viz. \(i_{0} = \arg \max_{l = 1, 2, \ldots, t} p_{l}\); in other words, i0 is the index of the largest coordinate of the vector p.
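The classifier can be sketched in the same way. The forms of (18) and of the final class membership are not reproduced in this text; this sketch assumes that the activation of data cluster Di is the sum of the activations of its granules Gi1, …, Gir and that p is the activation-weighted combination of the class vectors pi. `granular_classify` is a hypothetical helper, and class indices are 0-based.

```python
import numpy as np


def granular_classify(u, P):
    """Class membership vector p and winning class index i0.

    u: (c, r) activation levels of the granules G_ij for one input (summing to 1);
    P: (c, t) class probability vectors p_i of the data clusters D_i.
    """
    u_bar = u.sum(axis=1)            # assumed aggregation over feature clusters
    p = u_bar @ P                    # weighted combination of the p_i
    return p, int(np.argmax(p))      # maximum rule
```

Because the activations sum to one and each pi is a probability vector, p is itself a probability vector under these assumptions.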

5.3 Illustrative Example

In this example, we consider a synthetic data set of six data points described by four features. The first three data points are drawn from one normal distribution (class 1), and the next three from another normal distribution (class 2).

The data matrix X is clustered into c = 2 data clusters and r = 2 feature clusters (Fig. 4).

Accordingly, we have four information granules: (D1, F1), (D1, F2), (D2, F1), and (D2, F2). The prototype of each information granule is computed by averaging its data points over the features of the granule. For example, the computation of v11 is illustrated in Fig. 5.

Now, using Eq. (16), we compute the membership matrix uij. Figure 6 illustrates the semantics of uij.

Using Eq. (18), we compute the memberships of the data clusters, \(\bar{u}_{i}\); their computation is shown in Fig. 7.

Using Eq. (19), we compute pi, the ratio of each class in each data cluster Di. Then, using Eq. (20), the class assigned to a given data point is computed as shown in Fig. 8.

6 Experimental Studies

In this section, we elaborate on the development of information granules and their quality. Both classification and regression types of data are considered; see Table 1.

We run the clustering algorithm in the data space and in the feature space as described in Sect. 2, with c clusters in the data space and r clusters for the features. The clustering results are converted into Boolean (crisp) segments. The numbers of segments in the data and feature spaces are selected on the basis of the changes of the performance indexes (objective functions) regarded as functions of c and r; see Fig. 9. These indexes tend to stabilize when moving towards higher values of c and r. We first demonstrate the scheme on one classification data set (Gender Voice data set) and one regression data set (Concrete data set), and then follow the same procedure for the remaining data sets.
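One simple way to operationalize selecting c (or r) where the objective function stabilizes is a relative-decrease rule. This is an assumption for illustration, not necessarily the rule used for Fig. 9; the function name and the tolerance are hypothetical. The objective values would come from running the clustering for each candidate number of clusters.

```python
def pick_by_stabilization(ks, objective, tol=0.1):
    """Smallest k after which the objective decreases by less than tol (relative).

    ks: increasing candidate numbers of clusters; objective: matching values Q(k).
    """
    for k, q, q_next in zip(ks[:-1], objective[:-1], objective[1:]):
        if q > 0 and (q - q_next) / q < tol:
            return k
    return ks[-1]                    # no stabilization observed within the range
```

A smaller `tol` demands a flatter curve and therefore tends to select larger numbers of clusters.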

Since we have cr information granules, their quality is reported by means of the reconstruction index (6). The values of Vij computed with the use of (6) for the individual granules are presented in Tables 2 and 3. These tables and Fig. 9 show that when the number of data clusters reaches 7 or more and the number of feature clusters reaches 4 or more, the reconstruction error becomes low. This can be verified by computing the average reconstruction error.

Figures 10 and 11 (bar plots) display the values of Vij starting from the best information granules (viz. those with the lowest value of Vij). In general, the error is low for all information granules when the values of both c and r are relatively high (4 or higher for r, and 7 or higher for c).

In the case of classification data, the quality of information granules is evaluated with the aid of entropy (9). The obtained values of entropy are shown in increasing order, starting from the lowest value; see Fig. 12. For the regression data, the quality of information granules is evaluated with the aid of the variance (10), likewise shown in increasing order (see Fig. 13). When the number of information granules increases, the average variance and the average vagueness decrease.

Proceeding with the characterization of information granules built on the basis of the numeric prototypes, we display the optimal values of coverage and specificity (viz. the values obtained when the product of coverage and specificity attains its highest value). Some selected results are displayed in Figs. 14 and 15. It can be noted that when the total number of information granules is high, the average product of coverage and specificity becomes low.

Proceeding with the remaining data sets, we report the results in a similar manner in Tables 4, 5 and Figs. 16, 17, 18, 19, 20 and 21. These tables and figures support all conclusions we have reached by inspecting the above two data sets.

7 Conclusions

The study was devoted to the concise description of data by constructing their numeric representatives (prototypes), followed by the augmentation of the prototypes into information granules. The developed optimization environment helps quantify the quality of information granules (in terms of entropy and diversity) and of numeric prototypes (evaluated by means of the reconstruction error). Information granules form a blueprint of data and constitute an initial setting for a variety of constructs such as classifiers, predictors and association networks. It is worth stressing that information granules are functional building modules used as generic components in the development of a plethora of models, including predictors and classifiers. Equally important is the fact that the study delivered a way to quantify the quality of information granules with regard to their classification or prediction capabilities. Likewise, the multiview perspective of experimental data is essential for coping with massive data, as it helps construct the essential information granules that facilitate an efficient way of building classifiers and predictors.

While Sect. 5 elaborates on the fundamentals of the modeling constructs (which, owing to the use of information granules, can be referred to as granular predictors, granular classifiers, etc.), more detailed studies could follow that focus on detailed architectures and the associated learning schemes.

References

1. Sun, S., Shawe-Taylor, J., Mao, L.: PAC-Bayes analysis of multi-view learning. Inf. Fusion 35, 117–131 (2017)

2. Jiang, B., Qiu, F., Wang, L.: Multi-view clustering via simultaneous weighting on views and features. Appl. Soft Comput. 47, 304–315 (2016)

3. Pontes, B., Giráldez, R., Aguilar-Ruiz, J.S.: Biclustering on expression data: a review. J. Biomed. Inform. 57, 163–180 (2015)

4. Zong, L., Zhang, X., Yu, H., Zhao, Q., Ding, F.: Local linear neighbor reconstruction for multi-view data. Pattern Recognit. Lett. 84, 56–62 (2016)

5. Yin, J., Sun, S.: Multiview uncorrelated locality preserving projection. IEEE Trans. Neural Netw. Learn. Syst. (2019). https://doi.org/10.1109/TNNLS.2019.2944664

6. Henriques, R., Antunes, C., Madeira, S.C.: A structured view on pattern mining-based biclustering. Pattern Recognit. 48, 3941–3958 (2015)

7. Bezdek, J.C., Ehrlich, R., Full, W.: FCM: the fuzzy c-means clustering algorithm. Comput. Geosci. 10, 191–203 (1984)

8. Lunwen, W.: Study of granular analysis in clustering. Comput. Eng. Appl. 5, 29–31 (2006)

9. Liberti, L., Lavor, C., Maculan, N., Mucherino, A.: Euclidean distance geometry and applications. SIAM Rev. 56, 3–69 (2014)

10. Dattorro, J.: Convex Optimization & Euclidean Distance Geometry. Lulu.com (2010)

11. Zadeh, L.A.: Toward a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic. Fuzzy Sets Syst. 90, 111–127 (1997)

12. de Sa, J., Silva, L., Santos, J., Alexandre, L.: Minimum error entropy classification. In: Studies in Computational Intelligence. Springer (2013)

13. Wang, X., Pedrycz, W., Gacek, A., Liu, X.: From numeric data to information granules: a design through clustering and the principle of justifiable granularity. Knowl. Based Syst. 101, 100–113 (2016)

14. Pedrycz, W., Homenda, W.: Building the fundamentals of granular computing: a principle of justifiable granularity. Appl. Soft Comput. 13, 4209–4218 (2013)

15. Pedrycz, W., Al-Hmouz, R., Morfeq, A., Balamash, A.: The design of free structure granular mappings: the use of the principle of justifiable granularity. IEEE Trans. Cybern. 43, 2105–2113 (2013)

16. Pedrycz, W.: The principle of justifiable granularity and an optimization of information granularity allocation as fundamentals of granular computing. J. Inf. Process. Syst. 7, 397–412 (2011)

17. Balamash, A., Pedrycz, W., Al-Hmouz, R., Morfeq, A.: Granular classifiers and their design through refinement of information granules. Soft Comput. 21, 2745–2759 (2017)

18. Machine Learning Data (MLData). https://www.mldata.io/dataset-details/gender_voice/. Accessed 1 Sept 2019

19. UCI Machine Learning Repository. https://archive.ics.uci.edu/ml/index.php. Accessed 1 Sept 2019

Acknowledgements

This project was funded by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, Saudi Arabia, under Grant No. (KEP-5-135-39). The authors, therefore, acknowledge with thanks DSR technical and financial support.


Appendix A

Symbols used

| Symbol | Description |
|---|---|
| Di | Data cluster i |
| Fj | Feature cluster j |
| r | Number of feature clusters |
| c | Number of data clusters |
| Q | FCM objective function |
| xk | Data point k |
| zj | Feature j |
| N | Total number of data points |
| n | Total number of features |
| vi | Prototype of data cluster i |
| m | Fuzzification coefficient |
| uik | The membership value of a data point xk to the data cluster i |
| gij | The membership value of a feature zj to the feature cluster i |
| Vij | Reconstruction error produced for (Di, Fj) |
| \(\left\| \cdot \right\|_{{F_{j} }}\) | Distance computed over the features forming Fj |
| pij | The probability of class j in information granule i |
| vij | Prototype of data cluster i computed by averaging the cluster data points over the features forming Fj |
| h | Entropy |
| Ci | Vagueness of the ith information granule |
| σiy | The variance of the output values of data cluster i |
| \(\bar{y}_{i}\) | The average of the output values of data cluster i |
| Ri | |
| Nij | Number of data points in information granule Gij ≡ (Di, Fj) |
| cov | Coverage |
| sp | Specificity |
| \(\hat{y}\) | Predicted y value |
| p | Final class membership vector (its largest coordinate gives the predicted class) |


Cite this article

Balamash, A., Pedrycz, W., Al-Hmouz, R. et al. Data Description Through Information Granules: A Multiview Perspective. Int. J. Fuzzy Syst. 22, 1731–1747 (2020). https://doi.org/10.1007/s40815-020-00903-z


DOI: https://doi.org/10.1007/s40815-020-00903-z