Abstract

A new optimal robust control is proposed for mechanical systems with fuzzy uncertainty. Fuzzy set theory is used to describe the uncertainty in the mechanical system. The desired system performance is both deterministic (assuring the bottom line) and fuzzy (enhancing the cost consideration). The proposed control is deterministic and is not of the usual if–then rule-based type. The resulting controlled system is uniformly bounded and uniformly ultimately bounded, as proved via the Lyapunov minimax approach. A performance index (the combined cost, which includes average fuzzy system performance and control effort) is proposed based on the fuzzy information. The optimal design problem associated with the control can then be solved by minimizing the performance index. The unique closed-form optimal gain and the cost are explicitly shown. The resulting control design is systematic and guarantees the deterministic performance while minimizing the cost. Finally, an example is given for demonstration.


1 Introduction

A dynamic model captures the prominent features of a mechanical system, but some aspects of the real system always remain unnoticed or unknown. Research on mechanical system control has always been very active, especially on handling uncertainties in the system. Exploring the uncertainty and determining what is known and what is unknown about it is very important. Once the bound information of the uncertainty is clearly identified, we can use this known bound information to develop deterministic control approaches. The well-known \( H_{2}/H_{\infty} \) control [17, 20], the Lyapunov-based control [6, 19], the sliding mode control [24], and so on contribute to this deterministic approach. When the known portion cannot be completely isolated from the unknown, one may take the stochastic control approach. The classic Linear–Quadratic–Gaussian control [23] belongs to this domain.

The stochastic dynamical system merges probability theory with system theory and has been prominent since the 1950s. Kalman initiated the effort of looking into the estimation problem and control problem [11, 12] in the state space framework when a system is under stochastic noise. Although the stochastic approach is quite self-contained and has attracted an impressive community of practitioners, concerns about the validity of probability theory in describing the real world do exist. That is to say, the link between the stochastic mathematical tool and the physical world might be loose. Kalman, among others, despite his early devotion to stochastic system theory, now contends that probability theory might not be all that suitable to describe the majority of randomness [13].

The uncertainty in engineering is often acquired via observed data and then analyzed by the practitioner. However, the observed data are, by nature, always limited and the uncertainty is unlikely to be exactly repeated many times. Earthquake data are one example [22]. Hence, any interpretation via the frequency of occurrence, which requires a large number of repetitions, may lack a sufficient basis. As a result, the fuzzy view via the degree of occurrence may be considered as an alternative in certain applications. More discussion on the relative advantages of fuzziness versus probability can be found in Bezdek [2].

Fuzzy theory was initially introduced to describe information (for example, linguistic information) that lacks a sharp boundary with its environment [26]. Most interest in fuzzy logic theory has been directed to fuzzy reasoning for control, estimation, decision-making, etc. As a result, the merger of fuzzy theory and system theory (the fuzzy dynamical system) has received less attention. Past efforts on fuzzy dynamical systems can be found in Hanss [9], Bede et al. [1], Lee et al. [15], Huang et al. [10], and Xu et al. [25]. We stress that these are different from the very popular Takagi–Sugeno model or other fuzzy if–then rule-based models. In this paper, from a different angle, we employ fuzzy theory to describe the uncertainty in the mechanical system and then propose an optimal robust control design for fuzzy mechanical systems.

The main contributions are threefold. First, we not only guarantee the deterministic performance (including uniform boundedness and uniform ultimate boundedness), but also explore a fuzzy description of system performance should the fuzzy information of the uncertainty be provided. Second, we propose a robust control which is deterministic and is not of the usual if–then rule-based type. The resulting controlled system is uniformly bounded and uniformly ultimately bounded, as proved via the Lyapunov minimax approach. Third, a performance index (the combined cost, which includes average fuzzy system performance and control effort) is proposed based on the fuzzy information. The optimal design problem associated with the control can then be solved by minimizing the performance index. The unique closed-form solution of the optimal gain and the cost are explicitly presented. The resulting control design is systematic and guarantees the deterministic performance while minimizing the cost.

2 Fuzzy mechanical systems

Consider the following uncertain mechanical system:

\[ M(q(t),\sigma (t),t)\,\ddot{q}(t) + V(q(t),\dot{q}(t),\sigma (t),t) + G(q(t),\sigma (t),t) + T(q(t),\dot{q}(t),\sigma (t),t) = \tau (t). \tag{2.1} \]

Here \( t \in R \) is the time (i.e., the independent variable), \( q \in R^{n} \) is the coordinate, \( \dot{q} \in R^{n} \) is the velocity, \( {\ddot{q}} \in R^{n} \) is the acceleration, \( \sigma \in R^{p} \) is the uncertain parameter, and \( \tau \in R^{n} \) is the control input. \( M(q,\sigma ,t) \in R^{n \times n} \) is the inertia matrix, \( V(q,\dot{q},\sigma ,t) \in R^{n} \) is the Coriolis/centrifugal force vector, \( G(q,\sigma ,t) \in R^{n} \) is the gravitational force vector, and \( T(q,\dot{q},\sigma ,t) \in R^{n} \) is the friction force and external disturbance or force (we omit arguments of functions where no confusion may arise).

Assumption 1

The functions M(·), V(·), G(·), and T(·) are continuous (Lebesgue measurable in t). Furthermore, the bounding set Σ is known and compact.

Assumption 2

(i) For each entry of \( q_{0} \), namely \( q_{0i} \), i = 1, 2,…, n, there exists a fuzzy set \( Q_{0i} \) in a universe of discourse \( \varXi_{i} \subset R \) characterized by a membership function \( \mu_{\varXi_{i}} :\varXi_{i} \to \left[ {0,1} \right] \). That is,

\[ Q_{0i} = \left\{ \left( q_{0i}, \mu_{\varXi_{i}} (q_{0i}) \right) \mid q_{0i} \in \varXi_{i} \right\}. \tag{2.2} \]

Here \( \varXi_{i} \) is known and compact. (ii) For each entry of the vector σ(t), namely \( \sigma_{i} \left( t \right) \), i = 1, 2,…, p, the function \( \sigma_{i} \left( \cdot \right) \) is Lebesgue measurable. (iii) For each \( \sigma_{i} \left( t \right), \) there exists a fuzzy set \( S_{i} \) in a universe of discourse \( \varSigma_{i} \subset R \) characterized by a membership function \( \mu_{i} :\varSigma_{i} \to \left[ {0,1} \right] \). That is,

\[ S_{i} = \left\{ \left( \sigma_{i}, \mu_{i} (\sigma_{i}) \right) \mid \sigma_{i} \in \varSigma_{i} \right\}. \tag{2.3} \]

Here \( \varSigma_{i} \) is known and compact.

Remark

Assumption 2 imposes a fuzzy restriction on the uncertainties \( q_{0} \) and \( \sigma \left( t \right) \). We employ the fuzzy description of the uncertainties in the mechanical system. This fuzzy description has an advantage over the probability avenue, which often requires a large number of repetitions to acquire the observed data (always limited by nature).

Assumption 3

The inertia matrix \( M\left( {q,\sigma ,t} \right) \) in mechanical systems is uniformly positive definite, that is, there exists a scalar constant \( \underline{\gamma} > 0 \) such that

\[ M(q,\sigma ,t) \ge \underline{\gamma} I \tag{2.4} \]

for all \( q \in R^{n} . \)

Remark

We emphasize that this is an assumption, not a fact. There are cases in which the inertia matrix is only positive semi-definite (hence, \( \underline{\gamma} = 0 \)). One example is documented in McKerrow [18] where the generalized inertia matrix

Thus \( det\left[ M \right] = 0 \) if \( \theta_{2} = \, \left( {2n \, + \, 1} \right)\frac{\pi }{2},\quad n = 0, \pm 1, \pm 2, \ldots \). That is to say, the generalized inertia matrix M is singular. When \( \theta_{2} = \, \left( {2n \, + \, 1} \right)\frac{\pi }{2},\quad n = 0, \pm 1, \pm 2, \ldots \), the kinetic energy \( \frac{1}{2}\dot{q}{}^{T}M\dot{q} = 0,\quad \forall \,\dot{\theta }_{1} \) which means the rotation does not bring up kinetic energy.

Assumption 4

There is a constant \( \overline{\gamma} \) such that for all \( \left( {q,t} \right) \in R^{n} \times R \), \( \sigma \in \varSigma \), the inertia matrix \( M\left( {q,\sigma ,t} \right) \) is bounded as

\[ \left\| M(q,\sigma ,t) \right\| \le \overline{\gamma}. \tag{2.6} \]

Unless otherwise stated, \( \left\| {\; \cdot \;} \right\| \) always denotes the Euclidean norm (i.e., \( \left\| {\; \cdot \;} \right\|_{2} \)). The \( l_{1} \)-norm is sometimes used and is indicated by the subscript 1.

Theorem 1

There always exists the factorization

\[ V(q,\dot{q},\sigma ,t) = C(q,\dot{q},\sigma ,t)\,\dot{q} \tag{2.7} \]

such that \( \dot{M}\left( {q,\dot{q},\sigma ,t} \right) - 2C\left( {q,\dot{q},\sigma ,t} \right) \) is skew symmetric [21]. Here \( \dot{M}\left( {q,\dot{q},\sigma ,t} \right) \) is the time derivative of \( M\left( {q,\sigma ,t} \right) \).

Remark

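The matrix C satisfying \( V = C\dot{q} \) may not be unique. If, in addition, \( \dot{M} - 2C \) is required to be skew symmetric, then the particular choice of C is defined as

\[ c_{ij} = \frac{1}{2}\sum\limits_{k = 1}^{n} \left( \frac{\partial m_{ij} }{\partial q_{k} } + \frac{\partial m_{ik} }{\partial q_{j} } - \frac{\partial m_{kj} }{\partial q_{i} } \right)\dot{q}_{k} \tag{2.8} \]

where \( c_{ij} \) is the ij-element of matrix C and \( m_{ij} \) is the ij-element of matrix M.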
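The skew symmetry in Theorem 1 can be checked numerically. The following is a minimal sketch, not taken from the paper: it assumes the standard Christoffel-symbol construction of C and a hypothetical point-mass two-link arm whose inertia matrix `inertia` is chosen here only for illustration (the masses and lengths mirror the values used later in the application, but the matrix form itself is an assumption). Partial derivatives of M are approximated by central differences.

```python
import math

def inertia(q, m1=10.0, m2=10.0, l1=1.0, l2=1.0):
    """Inertia matrix of an ASSUMED point-mass two-link planar arm (illustrative only)."""
    c2 = math.cos(q[1])
    m11 = (m1 + m2) * l1**2 + m2 * l2**2 + 2.0 * m2 * l1 * l2 * c2
    m12 = m2 * l2**2 + m2 * l1 * l2 * c2
    m22 = m2 * l2**2
    return [[m11, m12], [m12, m22]]

def inertia_partials(q, h=1e-6):
    """dM[k][i][j] approximates d m_ij / d q_k by central differences."""
    n = len(q)
    dM = []
    for k in range(n):
        qp, qm = list(q), list(q)
        qp[k] += h
        qm[k] -= h
        Mp, Mm = inertia(qp), inertia(qm)
        dM.append([[(Mp[i][j] - Mm[i][j]) / (2.0 * h) for j in range(n)]
                   for i in range(n)])
    return dM

def christoffel_C(q, qdot):
    """c_ij = 1/2 * sum_k (dm_ij/dq_k + dm_ik/dq_j - dm_kj/dq_i) * qdot_k."""
    n = len(q)
    dM = inertia_partials(q)
    return [[0.5 * sum((dM[k][i][j] + dM[j][i][k] - dM[i][k][j]) * qdot[k]
                       for k in range(n))
             for j in range(n)] for i in range(n)]

def skew_residual(q, qdot):
    """Largest entry of N + N^T, where N = dM/dt - 2C; zero means N is skew symmetric."""
    n = len(q)
    dM = inertia_partials(q)
    C = christoffel_C(q, qdot)
    N = [[sum(dM[k][i][j] * qdot[k] for k in range(n)) - 2.0 * C[i][j]
          for j in range(n)] for i in range(n)]
    return max(abs(N[i][j] + N[j][i]) for i in range(n) for j in range(n))
```

Calling `skew_residual(q, qdot)` at any configuration should return a value at rounding-error level, because the symmetric part of N cancels term by term whenever M is symmetric.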

3 Robust control design of fuzzy mechanical systems

We wish the mechanical system to follow a desired trajectory \( q^{d} \left( t \right),t \in \left[ {t_{0} ,t_{1} } \right] \), with the desired velocity \( \dot{q}^{d} \left( t \right) \). Assume \( q^{d} (\cdot) \, : \, [t_{0} ,\infty ) \to R^{n} \) is of class \( C^{2} \) and \( q^{d} \left( t \right) \), \( \dot{q}^{d} \left( t \right) \), and \( {\ddot{q}}^{d} \left( t \right) \) are uniformly bounded. Let

\[ e(t) := q(t) - q^{d} (t), \tag{3.1} \]

and hence \( \dot{e}\left( t \right) = \dot{q}\left( t \right) - \dot{q}^{d} \left( t \right),\;{\ddot{e}}\left( t \right) = {\ddot{q}}\left( t \right) - {\ddot{q}}^{d} \left( t \right) \). The system (2.1) can be rewritten as

\[ M(e + q^{d} ,\sigma ,t)({\ddot{e}} + {\ddot{q}}^{d} ) + C(e + q^{d} ,\dot{e} + \dot{q}^{d} ,\sigma ,t)(\dot{e} + \dot{q}^{d} ) + G(e + q^{d} ,\sigma ,t) + T(e + q^{d} ,\dot{e} + \dot{q}^{d} ,\sigma ,t) = \tau . \tag{3.2} \]

The functions M(·), C(·), G(·), and T(·) can be decomposed as

\[ M = \overline{M} + \Delta M,\quad C = \overline{C} + \Delta C,\quad G = \overline{G} + \Delta G,\quad T = \overline{T} + \Delta T, \tag{3.3} \]

where \( \overline{M},\overline{C},\overline{G}, \) and \( \overline{T} \) are the nominal terms of the corresponding matrices/vectors and \( \Delta M,\Delta C,\Delta G, \) and \( \Delta T \) are the uncertain terms, which depend on \( \sigma \). We now define a vector

where \( S = \mathrm{diag}\left[ {s_{i} } \right]_{n \times n} \), \( s_{i} > 0 \) is a constant, i = 1, 2,…, n. Obviously \( \varPhi \equiv \, 0 \) if all uncertain terms vanish.

Assumption 5

There are fuzzy numbers \( \zeta_{k} \left( {\dot{q}^{d} ,{\ddot{q}}^{d} ,e,\dot{e},\sigma ,t} \right) \)’s and scalars \( \rho_{k} \left( {\dot{q}^{d} ,{\ddot{q}}^{d} ,e,\dot{e},\sigma ,t} \right) \)’s, k = 1, 2,…, r, such that

From (3.5), we have

Remark

One can employ fuzzy arithmetic and decomposition theorem (see the Appendix) to calculate the fuzzy number ζ based on the fuzzy description of \( \sigma_{i} \)’s (Assumption 2).

We introduce the following desirable deterministic dynamical system performance.

Definition 1

Consider a dynamical system

\[ \dot{\xi }(t) = f(\xi (t),t),\quad \xi (t_{0} ) = \xi_{0} . \tag{3.7} \]

The solution of the system (suppose it exists and can be continued over \( [t_{0} ,\infty ) \)) is uniformly bounded if for any \( r > 0 \) with \( \left\| {\xi_{0} } \right\| \le r \), there is \( d\left( r \right) > 0 \) with \( \left\| {\xi (t)} \right\| \le d\left( r \right) \) for all \( t \ge t_{0} \). It is uniformly ultimately bounded if for \( \left\| {\xi_{0} } \right\| \le r \) there are \( \overline{d}\left( r \right) > 0 \) and \( T(\overline{d}\left( r \right),r) \ge 0 \) such that \( \left\| {\xi (t)} \right\| \le \overline{d}\left( r \right) \) for all \( t \ge t_{0} + \, T(\overline{d}\left( r \right),r) \).

Let

\[ \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} := \left[ e^{T} \;\; \dot{e}^{T} \right]^{T} . \tag{3.8} \]

The control design is to render the tracking error vector e(t) to be sufficiently small. We propose the control as

where P and D are positive definite diagonal matrices and the scalar \( \overline{\gamma }: = \, \gamma^{r} > 0. \) The scalar \( \gamma \) is a constant design parameter.

Theorem 2

Subject to Assumptions 1–5, the control (3.9) renders \( {\text{e}}\left( t \right) \) of the system (3.2) to be uniformly bounded and uniformly ultimately bounded. In addition, the size of the ultimate boundedness ball can be made arbitrarily small by suitable choices of the design parameters.

Remark

The control \( \tau (t) \) is based on the nominal system, the tracking error e(t), the bound of uncertainty, and the design parameters. Therefore, this proposed control is deterministic and is not if–then rules-based.

4 Proof of Theorem 2

The mechanical system with the proposed control is proved to be stable in this section. The chosen Lyapunov function candidate is shown to be legitimate and then the proof of stability follows via Lyapunov minimax approach [7, 16].

The Lyapunov function candidate is chosen as

To prove \( V \, \) is a legitimate Lyapunov function candidate, we shall prove that \( V \, \) is (globally) positive definite and decrescent. By (2.4), we have

where \( s_{i} \), \( p_{i} \)’s, and \( d_{i} \)’s are from (3.4) and (3.9), \( e_{i} \) and \( \dot{e}_{i} \) are the i-th components of e and \( \dot{e} \), respectively, and

It can be easily verified that \( \varPsi_{i} > 0\quad \forall \;i \). Thus, by letting \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{\lambda } = min\left( {\frac{1}{2}\lambda_{m} \left( {\varPsi_{1} } \right),\cdot\cdot\cdot \, ,\frac{1}{2}\lambda_{m} \left( {\varPsi_{n} } \right)} \right) \) (hence λ > 0), V is shown to be positive definite:

By Assumption 4, we have

For the first term on the right-hand side,

For the second term on the right-hand side, by Rayleigh’s principle,

With (4.6) and (4.7) into (4.5), we have

where \( \overline{\lambda } = \overline{\gamma }\overline{s} \, + \, \lambda_{M} \left( {P \, + \, SD} \right). \) Note that \( \overline{\lambda } \) in (4.8) is a strictly positive constant, which implies that V is decrescent. From (4.4) and (4.8), V is a legitimate Lyapunov function candidate.

Now, we prove the stability of the mechanical system with the proposed control. For any admissible \( \xi \left( \cdot \right) \), the time derivative of V along the trajectory of the controlled mechanical system of (3.2) is given by

By applying \( {\ddot{e}} = {\ddot{q}} - {\ddot{q}}^{d} \) and Eq. (2.1), the first two terms become

With Theorem 1, (3.3) and (3.9), we can get

Since

we have

Substituting (4.14) into (4.9), we get

where \( \lambda = \min \left\{ {\lambda_{\min } \left( {PS} \right), \, \lambda_{\min } \left( D \right)} \right\}. \) This in turn means that \( \dot{V} \) is negative definite for all \( \left\| {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} } \right\| \) such that

where \( \delta = \frac{{\zeta^{2} }}{4} \). Since all universes of discourse \( \varSigma_{i} \)’s are compact (hence closed and bounded), δ is bounded. In addition, both \( \gamma \) and \( \lambda \) are crisp. Thus, \( \dot{V} \) is negative definite for sufficiently large \( \parallel e\parallel \). The uniform boundedness performance follows [6]. That is, given any r > 0 with \( \parallel e\left( {t_{0} } \right)\parallel \le r, \) where \( t_{0} \) is the initial time, there is a d(r) given by

such that \( \parallel \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} \left( t \right)\parallel \le d\left( r \right) \) for all \( t \ge t_{0} . \) Uniform ultimate boundedness also follows. That is, given any d with

we have \( \parallel \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} \left( t \right)\parallel \le d,\forall t \ge t_{0} + \, T\left( {d,r} \right) \), with

The stability of the mechanical system is guaranteed and tracking error \( \parallel \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} \parallel \) can be made arbitrarily small by choosing large λ and/or γ. □

Remark

We have shown that the fundamental properties explored in Sect. 3 are quite useful in constructing the legitimate Lyapunov function. The first five terms of the control scheme (3.9) are only for the nominal system (i.e., the system without uncertainty) while the last term compensates for the uncertainty. In the last term, the magnitude γ is still free, and its choice determines the size of the ultimate boundedness region. The larger the value of γ, the smaller the size. This represents a trade-off between the system performance and the cost, which suggests an interesting optimal quest for the control design. We pursue the optimal design in the following section.

5 Optimal gain design

Sections 3 and 4 show that a system performance can be guaranteed by a deterministic control scheme. By the analysis, the size of the uniform ultimate boundedness region decreases as γ increases. As γ approaches infinity, the size approaches 0. This rather strong performance is accompanied by a (possibly) large control effort, which is reflected by γ (assuming r has been chosen). From the practical design point of view, the designer may be interested in seeking an optimal choice of γ for a compromise among various conflicting criteria. This is associated with the minimization of a performance index.

We first explore more on the deterministic performance of the uncertain mechanical system. Define

where \( \overline{\lambda } \) is from (4.8) and λ is from (4.15) and κ > 0. Then by (4.8) and (4.15), we get

with \( V_{0} = V\left( {t_{0} } \right) = V\left( {e\left( {t_{0} } \right)} \right) \). This is a differential inequality [8] whose analysis can be made according to Chen [4] as follows.

Definition 2

If \( w\left( {\psi ,t} \right) \) is a scalar function of the scalars ψ, t in some open connected set D, we say a function \( \psi \left( t \right), \, t_{0} \le t \le \overline{t},\overline{t} > t_{0} \) is a solution of the differential inequality [8]

\[ \dot{\psi }(t) \le w(\psi (t),t) \tag{5.3} \]

on \( \left[ {t_{0} ,\overline{t}} \right) \) if ψ(t) is continuous on \( \left[ {t_{0} ,\overline{t}} \right) \) and its derivative on \( \left[ {t_{0} ,\overline{t}} \right) \) satisfies (5.3).

Theorem 3

Let w(φ(t), t) be continuous on an open connected set \( D \subset R^{2} \) and such that the initial value problem for the scalar equation [8]

\[ \dot{\varphi }(t) = w(\varphi (t),t),\quad \varphi (t_{0} ) = \varphi_{0} \tag{5.4} \]

has a unique solution. If φ(t) is a solution of (5.4) on \( t_{0} \le t \le \overline{t} \) and ψ(t) is a solution of (5.3) on \( t_{0} \le t \le \overline{t} \) with \( \psi \left( {t_{0} } \right) \le \varphi \left( {t_{0} } \right) \), then \( \psi \left( t \right) \le \varphi \left( t \right) \) for \( t_{0} \le t \le \overline{t} \).

Instead of exploring the solution of the differential inequality, which is often non-unique and not available, the above theorem suggests that it may be feasible to study the upper bound of the solution. This approach, however, relies on the solution of (5.4) being unique.

Theorem 4

Consider the differential inequality (5.3) and the differential Eq. (5.4). Suppose that for some constant L > 0, the function w(·) satisfies the Lipschitz condition [4]

\[ \left| w(v_{1} ,t) - w(v_{2} ,t) \right| \le L\left| v_{1} - v_{2} \right| \tag{5.5} \]

for all points \( \left( {v_{1} ,t} \right),\left( {v_{2} ,t} \right) \in D. \) Then any function ψ(t) that satisfies the differential inequality (5.3) for \( t_{0} \le t \le \overline{t} \) satisfies also the inequality

\[ \psi (t) \le \varphi (t) \tag{5.6} \]

for \( t_{0} \le t \le \overline{t} \).

We consider the differential equation

The right-hand side satisfies the global Lipschitz condition with L = 1/κ. We proceed with solving the differential Eq. (5.7). This results in

Therefore,

or

for all \( t \ge t_{0} \). By the same argument, we also have, for any \( t_{\rm s} \) and any \( \tau \ge t_{\rm s} , \)

where \( V_{\text{s}} = V\left( {t_{\text{s}} } \right) = V\left( {e\left( {t_{\text{s}} } \right)} \right) \). The time \( t_{\text{s}} \) is when the control scheme (3.9) starts to be executed. It does not need to be \( t_{0} \).

By (4.4) \( V\left( {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} } \right) \ge \lambda \left\| e \right\|^{2} \), the right-hand side of (5.11) provides an upper bound of \( \lambda \left\| e \right\|^{2} \). This in turn leads to an upper bound of \( \left\| e \right\|^{2} \). For each \( \tau \ge t_{\text{s}} , \) let

Notice that for each \( \delta ,\gamma ,t_{\rm s} ,\eta (\delta ,\gamma ,\tau ,t_{\rm s} ) \to 0 \) as \( \, \tau \to \infty . \)

One may relate \( \eta (\delta ,\gamma ,t,t_{0} ) \) to the transient performance and \( \eta_{\infty } (\delta ,\gamma ) \) the steady-state performance. Since there is no knowledge of the exact value of uncertainty, it is only realistic to refer to \( \eta (\delta ,\gamma ,t,t_{0} ) \) and \( \eta_{\infty } (\delta ,\gamma ) \) while analyzing the system performance. We also notice that both \( \eta (\delta ,\gamma ,t,t_{0} ) \) and \( \eta_{\infty } (\delta ,\gamma ) \) are dependent on \( \delta \). The value of \( \delta \) is not known except that it is characterized by a membership function.
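To make the transient and steady-state bounds concrete, the sketch below evaluates a comparison bound numerically. It rests on assumptions inferred from the surrounding text rather than quoted from it: that the differential inequality has the form \( \dot{V} \le -V/\kappa + \delta /\gamma \) (consistent with the Lipschitz constant L = 1/κ), so that the comparison solution is \( (V_{\text{s}} - \kappa \delta /\gamma )e^{ - (t - t_{\text{s}} )/\kappa } + \kappa \delta /\gamma \). The function names and the numerical values are illustrative only.

```python
import math

def comparison_bound(t, t_s, V_s, kappa, delta, gamma):
    """Closed-form solution of the ASSUMED comparison equation
    phi' = -phi/kappa + delta/gamma with phi(t_s) = V_s."""
    v_inf = kappa * delta / gamma          # steady-state level
    return (V_s - v_inf) * math.exp(-(t - t_s) / kappa) + v_inf

def euler_comparison(t_end, t_s, V_s, kappa, delta, gamma, steps=20000):
    """Forward-Euler integration of the same equation, as an independent check."""
    dt = (t_end - t_s) / steps
    phi = V_s
    for _ in range(steps):
        phi += dt * (-phi / kappa + delta / gamma)
    return phi
```

With this form, the decaying term plays the role of the transient part and the constant `kappa * delta / gamma` plays the role of the steady-state part; increasing `gamma` lowers the steady-state level.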

Definition 3

Consider a fuzzy set [5]

For any function f: N → R, the D-operation \( D[f(\nu )] \) is given by

Remark

In a sense, the D-operation \( D\left[ {f\left( \nu \right)} \right] \) takes an average value of f(ν) over \( \mu_{N} \left( \nu \right) \). In the special case that \( f\left( \nu \right) = \nu , \) this is reduced to the well-known center-of-gravity defuzzification method [14]. Particularly, if N is crisp (i.e., \( \mu_{N} (\nu ) \, = \, 1 \) for all \( \nu \in N \)), \( D[f(\nu )] \, = \, f(\nu ). \)
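A numerical sketch of the D-operation may help. It assumes the membership-weighted average \( D[f(\nu )] = \int_{N} f(\nu )\mu_{N} (\nu )\,d\nu / \int_{N} \mu_{N} (\nu )\,d\nu \), which is the form that reduces to center-of-gravity defuzzification for \( f(\nu ) = \nu \). The triangular membership function and the helper names are hypothetical and chosen only for illustration.

```python
def triangular(center, half_width):
    """Hypothetical triangular membership function peaking at `center`."""
    def mu(v):
        return max(0.0, 1.0 - abs(v - center) / half_width)
    return mu

def d_operation(f, mu, lo, hi, n=20000):
    """Midpoint-rule approximation of (integral of f*mu) / (integral of mu) on [lo, hi]."""
    h = (hi - lo) / n
    num = 0.0
    den = 0.0
    for i in range(n):
        v = lo + (i + 0.5) * h
        w = mu(v)
        num += f(v) * w
        den += w
    return num / den

# Example: a fuzzy quantity "close to 0.5" with support [0.3, 0.7].
mu_heavy = triangular(0.5, 0.2)
centroid = d_operation(lambda v: v, mu_heavy, 0.3, 0.7)
second_moment = d_operation(lambda v: v * v, mu_heavy, 0.3, 0.7)
```

Because the operation is a ratio of integrals that is linear in f, a crisp constant factor can be pulled outside, which is the property used later when the cost is expanded.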

Lemma 1

For any crisp constant a ∈ R,

We now propose the following performance index: For any \( t_{\rm s} \), let

where α, β > 0 are scalars. The performance index consists of three parts. The first part \( J_{1} \left( {\gamma ,t_{\text{s}} } \right) \) may be interpreted as the average (via the D-operation) of the overall transient performance (via the integration) from time \( t_{\text{s}} \). The second part \( J_{2} \left( \gamma \right) \) may be interpreted as the average (via the D-operation) of the steady-state performance. The third part \( J_{3} \left( \gamma \right) \) is due to the control cost. Both α and β are weighting factors. The weighting of \( J_{1} \) is normalized to be unity. Our optimal design problem is to choose γ > 0 such that the performance index \( J\left( {\gamma ,t_{\rm s} } \right) \) is minimized.

Remark

A standard LQG (i.e., linear-quadratic-Gaussian) problem in stochastic control is to minimize a performance index, which is the average (via the expectation value operation in probability) of the overall state and control accumulation. The proposed new control design approach may be viewed, loosely speaking, as a parallel problem, though not equivalent, in fuzzy mechanical systems. However, one cannot be too careful in distinguishing the differences. For example, the Gaussian probability distribution implies that the uncertainty is unbounded (although a higher bound is predicted by a lower probability). In the current consideration, the uncertainty bound is always finite. Also, LQG does not take parameter uncertainty into account.

One can show that

Taking the D-operation yields

The last equality is due to Lemma 1. Next, we analyze the cost \( {\text{J}}_{ 2} \left( \gamma \right). \) Again by Lemma 1, we have

With (5.19) and (5.20) into (5.17), we also have

where \( \kappa_{1} = \, \left( {\kappa /2} \right)D\left[ {V_{\rm s}^{2} } \right],\quad \kappa_{2} = \, \kappa^{2} D\left[ {V_{\rm s} \delta } \right],\quad \kappa_{3} = \left( {\kappa^{3} /2} \right)D\left[ {\delta^{2} } \right],\quad \kappa_{4} = \kappa^{2} D\left[ {\delta^{2} } \right] \).
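The constants \( \kappa_{1} , \ldots ,\kappa_{4} \) can be traced through a short worked derivation. The block below is a sketch under assumptions inferred from the definitions of the constants and from the proof of Theorem 6, not a quotation: it takes \( \eta = (V_{\text{s}} - \kappa \delta /\gamma )e^{ - (\tau - t_{\text{s}} )/\kappa } \), \( \eta_{\infty } = \kappa \delta /\gamma \), \( J_{1} = D[\int_{t_{\text{s}} }^{\infty } \eta^{2} d\tau ] \), \( J_{2} = D[\eta_{\infty }^{2} ] \), and \( J_{3} = \gamma^{2} \), and it uses the linearity of the D-operation for crisp factors.

```latex
\begin{aligned}
\int_{t_{\rm s}}^{\infty} \eta^{2}\, d\tau
  &= \Big(V_{\rm s} - \frac{\kappa\delta}{\gamma}\Big)^{2}
     \int_{t_{\rm s}}^{\infty} e^{-2(\tau - t_{\rm s})/\kappa}\, d\tau
   = \frac{\kappa}{2}\Big(V_{\rm s} - \frac{\kappa\delta}{\gamma}\Big)^{2}, \\
J_{1}(\gamma, t_{\rm s})
  &= \frac{\kappa}{2} D[V_{\rm s}^{2}]
     - \frac{\kappa^{2}}{\gamma} D[V_{\rm s}\delta]
     + \frac{\kappa^{3}}{2\gamma^{2}} D[\delta^{2}]
   = \kappa_{1} - \frac{\kappa_{2}}{\gamma} + \frac{\kappa_{3}}{\gamma^{2}}, \\
J_{2}(\gamma)
  &= D\Big[\frac{\kappa^{2}\delta^{2}}{\gamma^{2}}\Big]
   = \frac{\kappa_{4}}{\gamma^{2}}, \\
J(\gamma, t_{\rm s})
  &= J_{1} + \alpha J_{2} + \beta J_{3}
   = \kappa_{1} - \frac{\kappa_{2}}{\gamma}
     + \frac{\kappa_{3} + \alpha\kappa_{4}}{\gamma^{2}} + \beta\gamma^{2}.
\end{aligned}
```

Under these assumptions the four constants match the stated definitions term by term, and the ratio \( \kappa_{3} /\kappa_{4} = \kappa /2 \) follows directly.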

The optimal design problem is then equivalent to the following constrained optimization problem: For any \( t_{\text{s}} \),

By using the performance index in (5.21), we will then pursue the optimal solution in the next section.

6 The closed-form solution of optimal gain

For any \( t_{\text{s}} \), taking the first-order derivative of J with respect to γ

That

leads to

or

Equation (6.4) is a quartic equation.

Theorem 6

Suppose \( {\text{D}}\left[ \delta \right] \ne 0 \) . For given \( \kappa_{ 1} ,\kappa_{ 2} ,\kappa_{ 3} ,\kappa_{ 4} , \) the solution γ > 0 to (6.4) always exists and is unique, which globally minimizes the performance index (5.21).

Proof

Let \( \theta \left( \gamma \right) \, : = \, \kappa_{ 2} \gamma + 2\beta \gamma^{ 4} . \) Then \( \theta \left( 0 \right) \, = \, 0 \) and \( \theta \left( \cdot \right) \) is continuous in γ. In addition, since \( \kappa_{ 2} \ge 0 \) and \( \beta > 0,\theta ( \cdot ) \) is strictly increasing in γ. Since \( {\text{D}}\left[ \delta \right] \ne 0 \), we have \( {\text{D}}\left[ \delta \right] > 0,\;{\text{D}}\left[ {\delta^{2} } \right] > 0,\;\kappa_{3} ,\kappa_{4} > 0, \) and therefore \( 2\left( {\kappa_{ 3} + \, \alpha \kappa_{ 4} } \right) > 0 \, \) (notice that α, κ > 0). As a result, the solution γ > 0 to (6.4) always exists and is unique. For the unique solution γ > 0 that solves (6.4),

Therefore, the positive solution γ > 0 of the quartic Eq. (6.4) solves the constrained minimization problem (5.22).□
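The existence and uniqueness argument translates directly into a bracketing root search. The sketch below assumes that (6.4) has the form \( \kappa_{2} \gamma + 2\beta \gamma^{4} = 2(\kappa_{3} + \alpha \kappa_{4} ) \), as read off from the function θ(γ) in the proof, and that the cost is \( J = \kappa_{1} - \kappa_{2} /\gamma + (\kappa_{3} + \alpha \kappa_{4} )/\gamma^{2} + \beta \gamma^{2} \). It uses bisection instead of the closed-form quartic formula, and the function names are hypothetical.

```python
def stationarity(gamma, k2, k3, k4, alpha, beta):
    """theta(gamma) - 2*(k3 + alpha*k4), with theta = k2*gamma + 2*beta*gamma**4 (assumed form)."""
    return k2 * gamma + 2.0 * beta * gamma**4 - 2.0 * (k3 + alpha * k4)

def optimal_gain(k2, k3, k4, alpha, beta, tol=1e-12):
    """Bisection for the unique positive root; theta is strictly increasing from theta(0) = 0."""
    lo, hi = 0.0, 1.0
    while stationarity(hi, k2, k3, k4, alpha, beta) < 0.0:
        hi *= 2.0                          # expand until the root is bracketed
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if stationarity(mid, k2, k3, k4, alpha, beta) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def cost(gamma, k1, k2, k3, k4, alpha, beta):
    """Assumed performance index J(gamma) for given constants."""
    return k1 - k2 / gamma + (k3 + alpha * k4) / gamma**2 + beta * gamma**2
```

Since the derivative of the assumed cost equals the stationarity function divided by \( \gamma^{3} \), it is negative below the root and positive above it, so the root returned by `optimal_gain` is the global minimizer on γ > 0.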

Remark

In the special case that the fuzzy sets are crisp, \( D[\delta ] = \delta \) and \( D[\delta^{2} ] = \delta^{2} \). The current setting still applies. The optimal design can be found by solving (6.4). The solutions of the quartic Eq. (6.4) depend on the cubic resolvent [3]

where

Let \( p_{1} : = - 4r_{1} ,p_{2} : = - r_{2}^{2} \). The discriminant H of the cubic resolvent is given by

Since r < 0, H > 0. The solutions of the cubic resolvent are given by

where

The cubic resolvent possesses one real solution and two complex conjugate solutions. This in turn implies that the quartic equation has two real solutions and one pair of complex conjugate solutions. The maximum real solution, which is positive and is therefore the optimal solution to the constrained optimization problem, of the quartic equation is given by

By using (6.4), the cost J in (5.21) can be rewritten as

With (6.15), the minimum cost is given by

Remark

Combining the results of Sects. 4, 5, 6, and 7, the robust control scheme (3.9) using the optimal design of γ > 0 renders the tracking error e of the closed-loop mechanical system uniformly ultimately bounded (with the initial state \( e(t_{\text{s}} ) \)). In addition, the performance index \( {\text{J}} \) in (5.21) is globally minimized.

The optimal design procedure is summarized as follows:

- Step 1 For a given inertia matrix M, obtain \( \underline{\gamma} \) and \( \overline{\gamma } \).

- Step 2 According to \( \left\| \varPhi \right\| \) in (3.4), obtain ζ and ρ in (3.6).

- Step 3 Based on the \( V\left( {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} } \right) \) in (4.1), solve for the \( \overline{\lambda } \) in (4.8). For given S, P, and D, solve for the λ in (4.15). Thus, κ is given in (5.1).

- Step 4 Using the ζ obtained in Step 2 and the \( V_{\rm s} \) in (5.11), calculate \( \kappa_{ 1} ,\kappa_{ 2} ,\kappa_{ 3} ,\kappa_{ 4} \) in (5.21) based on the D-operation.

- Step 5 For given α and β, solve for the \( \gamma_{\text{opt}} \) in (6.15) and the minimum cost given in (6.17).

- Step 6 The optimal robust control scheme is given in (3.9).

7 Some limiting performance

As was shown earlier, the tracking error e of the controlled system enters the uniform ultimate boundedness region after a finite time and stays within the region thereafter. Thus, it is interesting to consider, in the limiting case, only the cost associated with this portion of performance.

In the limiting case, the transient performance cost \( J_{1} \left( {\gamma ,t_{\text{s}} } \right) \) is not considered. The cost is then dictated by that of the steady-state performance and the control gain:

The quartic Eq. (6.4) is reduced to

Its positive solution is given by

Using (7.3), the minimum cost is then

If the weighting \( \alpha \to \infty , \) then in the quartic equation, the positive solution \( \gamma \to \infty . \) This simply means that the relative cost of the ultimate boundedness region (as is given by \( \alpha J_{ 2} \left( \gamma \right)) \) is high and the control gain, which is relatively cheap, is turned high.

If \( \beta \to \infty , \) then in the quartic equation, the positive solution \( \gamma \to 0, \) this shows the other extreme case when the control is very expensive.
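The limiting case can be illustrated with a few lines of code. The sketch assumes the limiting cost \( \alpha \kappa_{4} /\gamma^{2} + \beta \gamma^{2} \) (steady-state term plus control cost, with the same assumed forms \( J_{2} = \kappa_{4} /\gamma^{2} \) and \( J_{3} = \gamma^{2} \)), whose stationary point is \( \gamma = (\alpha \kappa_{4} /\beta )^{1/4} \) with cost \( 2\sqrt {\alpha \beta \kappa_{4} } \). The function names are hypothetical, and the value of \( \kappa_{4} \) used in the usage lines is the one reported for the heavy load in the application.

```python
import math

def limiting_gain(k4, alpha, beta):
    """Positive stationary point of alpha*k4/gamma**2 + beta*gamma**2 (assumed limiting cost)."""
    return (alpha * k4 / beta) ** 0.25

def limiting_cost(gamma, k4, alpha, beta):
    """Assumed limiting cost: steady-state performance plus control cost."""
    return alpha * k4 / gamma**2 + beta * gamma**2

def limiting_min_cost(k4, alpha, beta):
    """Value of the assumed limiting cost at limiting_gain."""
    return 2.0 * math.sqrt(alpha * beta * k4)

# Usage with kappa_4 = 29.46 (heavy load) and equal weights.
g_lim = limiting_gain(29.46, 1.0, 1.0)
j_lim = limiting_cost(g_lim, 29.46, 1.0, 1.0)
```

The formula for `limiting_gain` grows without bound as α increases and shrinks toward zero as β increases, which agrees with the two extreme cases described above.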

8 Application

Consider the two-link planar manipulator on a vertical plane as shown in Fig. 1. This manipulator is used to convey the load to a designated place. The load may be parts in the factory, solid waste after an earthquake, or packages in the storehouse. Considering the cost, humans first sort the items into light, medium, and heavy based on their own fuzzy judgment. Therefore, the load is uncertain and should be described in a fuzzy way.

Friction is not considered. The masses of links one and two are \( m_{1} \) and \( m_{2} \), respectively. The lengths are \( l_{1} \) and \( l_{2} \). The load mg is uncertain. External torques \( \tau = [\mu_{1} \mu_{2} ]^{T} \) (the control) are imposed on the joints.

We choose two generalized coordinates \( q: = [q_{1} ,q_{2} ]^{T} = [\theta_{1} ,\theta_{2} ]^{T} \) to describe the system. The two coordinates are independent of each other. The equation of motion can be written in matrix form using Lagrange’s equation as

where

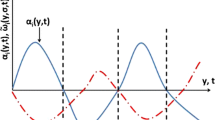

The desired trajectory \( q^{d} (t) \), the desired velocity and acceleration \( \dot{q}^{d} (t) \), \( {\ddot{q}}^{d} (t) \) are given by

By using \( q = e + q^{d} ,\dot{q} = \dot{e} + \dot{q}^{d} ,{\ddot{q}} = {\ddot{e}} + {\ddot{q}}^{d} \), Eq. (8.1) can be rewritten as

where

The masses \( m_{1} \),\( m_{2} \) are known. Therefore, \( M = \overline{M},C = \overline{C},G = \overline{G} \) and \( \Delta M =\Delta C =\Delta G = 0 \). The mass \( m \) is uncertain with \( m = \overline{m} + \Delta m(t) \), where \( \overline{m} \) is the constant nominal value and \( \Delta m \) is the uncertainty. The nominal matrix \( \overline{T} \) and the uncertainty matrix ΔT are given by

We choose S to be a 2 × 2 identity matrix. Therefore, we can get

where

and

For the system, we choose \( m_{1} = m_{2} = 10,\;m = 1,\;l_{1} = l_{2} = 1,\;g = 10 \) and assume the uncertainty \( \Delta m \) is “close to 0.5,” or “close to 0.3” or “close to 0.1” corresponding to “heavy,” or “medium” or “light” and governed by three corresponding membership functions

Set the design parameters P and D to be the identity matrix \( I_{2 \times 2} \). Following the design procedure, we have \( \overline{\lambda } = 60.68 \), \( \lambda = 1 \), and \( \kappa = 60.68 \). If the load is “heavy,” by using the fuzzy arithmetic and decomposition theorem, we obtain \( \kappa_{1} = 1.7386 \times 10^{5} \), \( \kappa_{2} = 3.4842 \times 10^{4} \), \( \kappa_{3} = 893.71 \), \( \kappa_{4} = 29.46 \). By selecting five sets of weighting α and β, the optimal gain \( \gamma_{\text{opt}} \) and the corresponding minimum cost \( J_{\hbox{min} } \) are summarized in Table 1. If the load is “medium,” we obtain \( \kappa_{1} = 1.7386 \times 10^{5} \), \( \kappa_{2} = 2.0914 \times 10^{4} \), \( \kappa_{3} = 145.23 \), \( \kappa_{4} = 4.79 \). By selecting five sets of weighting α and β, the optimal gain \( \gamma_{\text{opt}} \) and the corresponding minimum cost \( J_{\hbox{min} } \) are summarized in Table 2. If the load is “light,” we obtain \( \kappa_{1} = 1.7386 \times 10^{5} \), \( \kappa_{2} = 6.700 \times 10^{3} \), \( \kappa_{3} = 1.4348 \), \( \kappa_{4} = 0.0474 \). By selecting five sets of weighting α and β, the optimal gain \( \gamma_{\text{opt}} \) and the corresponding minimum cost \( J_{\hbox{min} } \) are summarized in Table 3.
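The reported constants admit a quick internal consistency check. From the definitions \( \kappa_{3} = (\kappa^{3} /2)D[\delta^{2} ] \) and \( \kappa_{4} = \kappa^{2} D[\delta^{2} ] \), the ratio \( \kappa_{3} /\kappa_{4} \) should equal \( \kappa /2 \) for every load class, and \( D[\delta^{2} ] \) can be recovered as \( \kappa_{4} /\kappa^{2} \). The sketch below only rearranges numbers already stated in the text; the dictionary layout and function names are hypothetical.

```python
KAPPA = 60.68  # kappa reported for the example

# kappa_3 and kappa_4 as reported for each load class
LOADS = {
    "heavy": {"k3": 893.71, "k4": 29.46},
    "medium": {"k3": 145.23, "k4": 4.79},
    "light": {"k3": 1.4348, "k4": 0.0474},
}

def ratio_check(kappa, k3, k4):
    """(k3/k4) divided by (kappa/2); equals 1 when the definitions are consistent."""
    return (k3 / k4) / (kappa / 2.0)

def implied_second_moment(kappa, k4):
    """D[delta^2] implied by kappa_4 = kappa^2 * D[delta^2]."""
    return k4 / kappa**2

ratios = {name: ratio_check(KAPPA, v["k3"], v["k4"]) for name, v in LOADS.items()}
moments = {name: implied_second_moment(KAPPA, v["k4"]) for name, v in LOADS.items()}
```

All three ratios come out close to one, with small deviations attributable to the rounding of the reported \( \kappa_{4} \) values.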

For numerical simulation, we choose \( t_{\text{s}} = 0 \) and the initial condition \( \underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{e} (0) = [1\;1\;1\;1]^{T} \). We choose the uncertainty as \( \Delta m = 0.5 + \cos (10t) \) for the heavy load, \( \Delta m = 0.3 + \cos (10t) \) for the medium load, and \( \Delta m = 0.1 + \cos (10t) \) for the light load.

Figure 2 compares the trajectory of the tracking error norm \( \left\| \underline{e} \right\| \) under the proposed control (for the heavy load, \( \gamma_{\text{opt}} = 29.6119 \) when \( \alpha = \beta = 1 \)) with that under the nominal PD control (that is, without the part of the control that handles the uncertainty, \( \gamma = 0 \)). With the proposed control, \( \left\| \underline{e} \right\| \) enters, after some time, a much smaller region around 0 (hence it is ultimately bounded) than it does with the nominal PD control alone. For the medium and light loads, the figures are similar and likewise show that \( \left\| \underline{e} \right\| \) with the proposed control is ultimately bounded, so they are not included here.
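One way to read ultimate boundedness off a simulated trajectory is to find the time after which the error norm never leaves a ball of given radius. The sketch below assumes the trajectory is available as sampled times and norms; the function names and the sample data in the usage lines are hypothetical and are not results from the paper.

```python
import math


def norm(e):
    """Euclidean norm of an error vector."""
    return math.sqrt(sum(x * x for x in e))


def entry_time(times, norms, radius):
    """First sample time from which every later norm stays <= radius.

    Returns None if the last sample is still outside the ball
    (or if no samples are given).
    """
    last_outside = -1
    for i, n in enumerate(norms):
        if n > radius:
            last_outside = i
    if last_outside == len(norms) - 1:
        return None
    return times[last_outside + 1]


if __name__ == "__main__":
    # Illustrative samples only, not simulation output from the paper.
    times = [0.0, 1.0, 2.0, 3.0, 4.0]
    norms = [norm([1.0, 1.0, 1.0, 1.0]), 0.8, 1.2, 0.4, 0.3]
    print(entry_time(times, norms, 1.0))
```

Comparing the entry times and radii obtained with \( \gamma = \gamma_{\text{opt}} \) and with \( \gamma = 0 \) gives a numerical counterpart to the visual comparison in Fig. 2.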

9 Conclusions

A fuzzy description of the uncertainty in mechanical systems is employed, and both fuzzy uncertainty and fuzzy performance are incorporated into the control design. A new robust control scheme is proposed to guarantee the deterministic performance (uniform boundedness and uniform ultimate boundedness). The control is deterministic and is not if–then rules-based. The stability of the resulting controlled system is proved via the Lyapunov minimax approach. A performance index is proposed, and the optimal design problem associated with the control is solved by minimizing this index. The optimal gain is unique and is obtained in closed form. The resulting control design is systematic: it guarantees the deterministic performance while minimizing the cost.

References

Bede, B., Rudas, I.J., Fodor, J.: Friction Model by using Fuzzy Differential Equations. Foundations of Fuzzy Logic and Soft Computing, pp. 338–353. Springer, Berlin (2007)

Bezdek, J.C.: Special issue on fuzziness vs. probability—the N-th round. IEEE Trans. Fuzzy Syst. 2, 1–42 (1994)

Bronshtein, I.N., Semendyayev, K.A.: Handbook of Mathematics. Van Nostrand Reinhold, New York (1985)

Chen, Y.H.: Performance analysis of controlled uncertain systems. Dyn. Control 6, 131–142 (1996)

Chen, Y.H.: Fuzzy dynamical system approach to the observer design of uncertain systems. J. Syst. Control Eng. 224, 509–520 (2010)

Chen, Y.H., Leitmann, G.: Robustness of uncertain systems in the absence of matching assumptions. Int. J. Control 45, 1527–1542 (1987)

Corless, M.: Control of uncertain nonlinear systems. J. Dyn. Syst. Meas. Control 115, 362–372 (1993)

Hale, J.K.: Ordinary Differential Equations. Wiley, New York (1969)

Hanss, M.: Applied Fuzzy Arithmetic: An Introduction with Engineering Applications. Springer, Berlin (2005)

Huang, J., Chen, Y.H., Cheng, A.: Robust control for fuzzy dynamical systems: uniform ultimate boundedness and optimality. IEEE Trans. Fuzzy Syst. 20(6), 1022–1031 (2012)

Kalman, R.E.: A new approach to linear filtering and prediction problems. Trans. ASME, J. Basic Eng. 82D, 35 (1960)

Kalman, R.E.: Contributions to the theory of optimal control. Boletin de la Sociedad Matematica Mexicana 5, 102–119 (1960)

Kalman, R.E.: Randomness reexamined. Model. Identif. Control 15, 141–151 (1994)

Klir, G.J., Yuan, B.: Fuzzy Sets and Fuzzy Logic: Theory and Applications. Prentice-Hall, Englewood Cliffs (1995)

Lee, T.S., Chen, Y.H., Chuang, J.: Robust control design of fuzzy dynamical systems. Appl. Math. Comput. 164(2), 555–572 (2005)

Leitmann, G.: On one approach to the control of uncertain systems. J. Dyn. Syst. Meas. Control 115, 373–380 (1993)

Li, X.P., Chang, B.C., Banda, S.S.: Robust control system design using H∞ optimization theory. J. Guid. Control Dyn. 2, 1975–1980 (1992)

McKerrow, P.J.: Introduction to Robotics. Addison-Wesley, Sydney (1991)

Schoenwald, D.A., Özgüner, Ü.: Robust stabilization of nonlinear systems with parametric uncertainty. IEEE Trans. Autom. Control 39, 1751–1755 (1994)

Shen, T., Tamura, K.: Robust H∞ control of uncertain nonlinear system via state feedback. IEEE Trans. Autom. Control 40, 766–768 (1995)

Slotine, J.E., Li, W.: Applied Nonlinear Control. Prentice Hall, Englewood Cliffs (1991)

Soong, T.T.: Active Structural Control: Theory and Practice. Longman Scientific and Technical, Essex, England (1990)

Stengel, R.F.: Optimal Control and Estimation. Dover Publications, Mineola (1994)

Utkin, V.: Sliding mode control in mechanical systems. In: 20th International Conference on Industrial Electronics, Control and Instrumentation, vol. 3, pp. 1429–1431 (1994)

Xu, J., Chen, Y.H., Guo, H.: Fractional robust control design for fuzzy dynamical systems: an optimal approach. J. Intell. Fuzzy Syst. (2014). doi:10.3233/IFS-141316

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–353 (1965)

Acknowledgments

The authors sincerely thank the National Natural Science Foundation of China (No. 51275147) for supporting this research work.

Appendix: fuzzy mathematics

We briefly review some preliminaries regarding fuzzy numbers and their operations [14]:

1.1 Fuzzy number

Let G be a fuzzy set in R, the set of real numbers. G is called a fuzzy number if (i) G is normal, (ii) G is convex, (iii) the support of G is bounded, and (iv) all α-cuts are closed intervals in R.

Throughout, we shall always assume the universe of discourse of a fuzzy number to be its 0-cut.
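To make the definition concrete, the sketch below uses a triangular fuzzy number, which satisfies conditions (i) to (iv). The triangular shape and the names `tri_membership` and `tri_alpha_cut` are assumptions made for illustration; the membership functions used in the example section may differ.

```python
def tri_membership(x, a, b, c):
    """Membership grade of x for a triangular fuzzy number.

    Assumes a < b < c: the support is [a, c] and the peak (grade 1) is at b.
    """
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def tri_alpha_cut(alpha, a, b, c):
    """Closed interval of points with grade >= alpha, for 0 < alpha <= 1.

    For alpha = 0 the formula returns [a, c], taken here as the 0-cut
    (the universe of discourse).
    """
    return (a + alpha * (b - a), c - alpha * (c - b))


if __name__ == "__main__":
    print(tri_alpha_cut(0.5, 0.0, 1.0, 3.0))
    print(tri_membership(2.0, 0.0, 1.0, 3.0))
```

The endpoints of each α-cut are exactly the points where the membership grade equals α, and the cuts shrink to the single peak value as α approaches 1.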

1.2 Fuzzy arithmetic

Let G and H be two fuzzy numbers, and let \( G_{\alpha } = [g_{\alpha }^{ - } ,g_{\alpha }^{ + } ] \) and \( H_{\alpha } = [h_{\alpha }^{ - } ,h_{\alpha }^{ + } ] \) be their α-cuts, \( \alpha \in [0,1] \). The addition, subtraction, multiplication, and division of G and H are defined level by level through these α-cuts.
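A minimal sketch of the α-cut operations follows, assuming the standard interval-arithmetic forms: endpoint sums for addition, and the minimum and maximum over the four endpoint products or quotients for multiplication and division. Subtraction is taken here as the standard interval difference \( [g_{\alpha }^{ - } - h_{\alpha }^{ + } ,\,g_{\alpha }^{ + } - h_{\alpha }^{ - } ] \); this is an assumption, since some references define it differently. Each cut is represented as a `(lower, upper)` tuple, and the function names are hypothetical.

```python
def cut_add(g, h):
    """(G + H)_alpha: add lower endpoints and upper endpoints."""
    return (g[0] + h[0], g[1] + h[1])


def cut_sub(g, h):
    """(G - H)_alpha as the standard interval difference (assumed form)."""
    return (g[0] - h[1], g[1] - h[0])


def cut_mul(g, h):
    """(G * H)_alpha: min and max over the four endpoint products."""
    products = [g[0] * h[0], g[0] * h[1], g[1] * h[0], g[1] * h[1]]
    return (min(products), max(products))


def cut_div(g, h):
    """(G / H)_alpha: min and max over the four endpoint quotients.

    Only defined when the divisor cut does not contain 0.
    """
    if h[0] <= 0.0 <= h[1]:
        raise ZeroDivisionError("alpha-cut of the divisor contains 0")
    quotients = [g[0] / h[0], g[0] / h[1], g[1] / h[0], g[1] / h[1]]
    return (min(quotients), max(quotients))


if __name__ == "__main__":
    G, H = (1.0, 2.0), (4.0, 8.0)
    print(cut_add(G, H), cut_sub(G, H), cut_mul(G, H), cut_div(G, H))
```

Applying these functions at each level α yields the α-cuts of the resulting fuzzy number.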

1.3 Decomposition theorem

Define a fuzzy set \( \tilde{V}_{\alpha } \) in U with the membership function \( \mu_{\tilde{V}_{\alpha }} (x) = \alpha I_{V_{\alpha }} (x) \), where \( V_{\alpha } \) is the α-cut of the fuzzy set V, \( I_{V_{\alpha }} (x) = 1 \) if \( x \in V_{\alpha } \), and \( I_{V_{\alpha }} (x) = 0 \) if \( x \in U - V_{\alpha } \). Then the fuzzy set V is obtained as

\[ V = \bigcup_{\alpha \in [0,1]} \tilde{V}_{\alpha } , \]

where \( \cup \) is the union of the fuzzy sets (that is, sup over \( \alpha \in [0,1] \)).

Based on these, after the operation of two fuzzy numbers via their α-cuts, one may apply the decomposition theorem to build the membership function of the resulting fuzzy number.
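The procedure just described can be sketched numerically: compute the α-cuts of the result on a finite grid of levels, then take, for each point x, the largest level whose cut contains x. The grid of five levels, the two triangular fuzzy numbers, and all function names below are assumptions made for illustration; a finite grid only approximates the supremum over \( \alpha \in [0,1] \).

```python
def tri_cut(alpha, a, b, c):
    """Alpha-cut of a triangular fuzzy number with support [a, c] and peak b."""
    return (a + alpha * (b - a), c - alpha * (c - b))


def cut_add(g, h):
    """Alpha-cut of the sum: add lower endpoints and upper endpoints."""
    return (g[0] + h[0], g[1] + h[1])


def membership_from_cuts(x, cuts):
    """Largest level alpha whose cut contains x; 0.0 if no cut contains x.

    `cuts` maps each level alpha to its interval (lower, upper).
    """
    return max((alpha for alpha, (lo, hi) in cuts.items() if lo <= x <= hi),
               default=0.0)


# Levels 0, 0.25, 0.5, 0.75, 1 (a coarse grid, chosen for readability).
LEVELS = [k / 4 for k in range(5)]

# Sum of two triangular fuzzy numbers with peaks at 1 and 2.
SUM_CUTS = {
    alpha: cut_add(tri_cut(alpha, 0.0, 1.0, 2.0), tri_cut(alpha, 1.0, 2.0, 3.0))
    for alpha in LEVELS
}

if __name__ == "__main__":
    for x in (0.0, 2.0, 3.0, 4.5):
        print(x, membership_from_cuts(x, SUM_CUTS))
```

A finer grid of levels gives a closer approximation to the membership function of the resulting fuzzy number.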

Cite this article

Zhang, K., Han, J., Xia, L. et al. Theory and Application of a Novel Optimal Robust Control: A Fuzzy Approach. Int. J. Fuzzy Syst. 17, 181–192 (2015). https://doi.org/10.1007/s40815-015-0026-3

DOI: https://doi.org/10.1007/s40815-015-0026-3