Abstract

In this paper, a method using a stereo vision device and fuzzy control to guide a robot arm to grasp a target object is proposed. The robot arm has five degrees of freedom including a gripper and four joints. The stereo vision device located beside the arm captures images of the target and the gripper. Image processing techniques such as color space transformation, morphologic operation, and 3-D position measurement are used to identify the target object and the gripper from the captured images and estimate their relative positions. Based on the estimated positions of the gripper and the target, the gripper can approach and grasp the target using inverse kinematics. However, since the robot arm’s accuracy of movement may be affected by gearbox backlash or hardware uncertainty, the gripper might not approach the desired position with precision using only inverse kinematics. Therefore, a fuzzy compensation method is added to correct any position errors between the gripper and target such that the gripper can grasp the target. Using the proposed method, the stereo vision device can not only locate the target object but also trace the position of the robot arm until the target object is grasped. Finally, some experiments are conducted to demonstrate successful implementation of the proposed method on the robot arm control.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

To control a robot arm, it is necessary that the positions of this controlled robot arm should be known at all times. Some external sensors such as the accelerometer and the resolver are needed in order to estimate the positions of the robot arm and its gripper. These sensors may be installed on every actuator to measure the angles of the degrees of freedom (DOFs) as feedback signals. From the feedback signals of the robot arm, the pose of the robot arm and the position of the gripper can be estimated. However, if the robot arm has many DOFs, the number of sensors should be equivalent to or even more than the number of DOFs. In other words, if the robot arm has many DOFs, the cost for installing those external sensors will increase accordingly. On the other hand, many studies regarding robot arm platforms use cameras to identify their targets and to monitor their workspaces. The paper [1] used a depth image sensor called the Kinect to identify target objects and control a humanoid robot arm to grasp the target objects. The paper [2] identified an object using a SIFT algorithm and a monocular vision device mounted on the gripper. The paper [3] identified elevator buttons and made a robot arm to operate an elevator. Supposing that the monitoring camera can recognize the position of the gripper and track it, we will not need to install sensors on the robot arm, thus reducing the total cost of the robot arm platform. Considering this cost reduction benefit, this study proposes a method which uses a stereo vision device to identify and locate the gripper and the target object, and estimate the pose of the robot arm.

There have been many studies on the subject of robot arm control using many different methods, the paper [4] used interactive teaching to approximate a space of the knowledge-based grasp. The paper [5] presented an off-line trajectory generation algorithm to solve the contouring problem of industrial robot arms. Using the Mitsubishi PA-10 robot arm platform, the paper [6] proposed a harmonic drive transmission model to investigate the gravity and material influence on the robot arm. Then, the robot arm can be controlled to track a desired trajectory and the motion error can be further analyzed. The paper [7] applied a self-configuration fuzzy system to find the inverse kinematics solutions for a robot arm. The paper [8] employed the inverse-kinematics-based two-nested control-loop scheme to control the tip position using joint position and tip acceleration feedback. The paper [9] proposed an analytical methodology of inverse kinematics computation for a seven-DOF redundant robot arm with joint limits. Using the inverse kinematics technique, the robot arm in [10] was designed to push the buttons of an elevator. On the other hand, the studies relating to position measurement and use of vision therein are described as follows. The paper [11] combined a 2-D vision camera and an ultrasonic range sensor to estimate the position of the target object for the robot gripper. The paper [12] used two cameras and one laser to identify the elevator door and to determine its depth distance. The papers [13–15] proposed photogrammetric methods to measure the distances of objects using their features. The paper [16] effectively utilized color images to achieve 3-D measurement using an RGB color histogram. The paper [17] proposed an image-based 3-D measuring system to measure distance and area using a CCD camera and two laser projectors. The paper [18] adopted two cameras and a laser projector to measure the edge of an object regardless of its position.

In this paper, we propose an object-grasping method using a stereo vision device and fuzzy control so that a robot arm can accomplish an object-grasping task. The robot arm has no sensors installed on it, however, the stereo vision device is set up beside the robot arm. Here, the stereo vision device plays an important role in perceiving the position of the robot arm from the feedback signals. Firstly, the stereo vision device is applied to identify the position of the target object. Subsequently, the stereo vision device traces the position of the robot arm and estimates the angle of each joint on the robot arm using the inverse kinematic method. However, because the design and assembly of the robot arm is not that precise, there is visible backlash in the mechanism. This means that position errors caused by backlash or hardware uncertainty should be considered when the robot arm moves. Herein, fuzzy compensation method is used to deal with position errors. The concept of the fuzzy compensation method is to adjust the amount of robot arm movement using fuzzy logic. When the robot arm is close to the target, the compensation value is low, on the contrary, when the robot arm is far from the target, the compensation value is high. Fusing the position value of the robot arm and compensation values, we can obtain the new angle of each joint of the robot arm by the inverse kinematic and drive the motors to the calculated angle. Hence, using the stereo vision to estimate the configuration of robot arm and the fuzzy compensation method to reduce the position errors, the robot arm can accurately take its target object.

This paper is organized as follows. Section 2 introduces the experimental platform of this study. The principal stereo vision techniques, robot arm inverse kinematics analysis, and fuzzy compensation are explained in Sects. 3, 4, and 5, respectively. The results of practical experiment and discussion are given in Sect. 6. Finally, the conclusion is given in Sect. 7.

2 Description of Experimental Platform

In this paper, in order to implement an object-grasping task a platform for a robot arm with a stereo vision device is designed which contains a PC (laptop computer), a robot arm, a stereo vision device, and batteries, as shown in Fig. 1. The utilized devices and their purposes are as described below.

2.1 The Robot Arm

The robot arm consists of the main body (four DOFs) and a gripper (one DOF) as shown in Fig. 2. Its main components are four SmartMotors, a planetary gearbox, three harmonic drivers, two AX-12 motors, and some metal components. The specifications of the SmartMotor and the AX-12 are shown in Tables 1 and 2, respectively. The SmartMotors and the AX-12 motors communicate with PC through the use of the serial ports (RS-232). The biggest torque motor, SM3416D_PLUS, and a planetary gearbox are installed at axis 1 to provide larger output torque, thus enabling the robot arm to lift not only itself but also the target object. Based on many experiments, the robot arm can lift objects weighing up to about 2 kg. Since the load of axes 2–4 is much smaller than that of axis 1, each remaining axis is composed of a SM2315D motor and a harmonic driver. The gripper is composed of two AX-12 motors as shown in Fig. 3. The PC receives the digitized feedback values of the AX-12 motors which include position, speed, and torque through the serial ports (RS-232). Therefore, we can use those status values to ascertain whether the gripper can successfully grasp an object or not. For instance, when the robot arm recognizes the target object and the gripper starts to close, the M L motor’s torque value is positive and the M R motor’s torque value is negative. If the values are inversed, it means the target object has been grasped. To prevent objects from falling out of the gripper, two pieces of non-slip materials are pasted inside the grippers. A green mark is placed on each of the two sides of the gripper in order to ease the identification of the gripper using the stereo vision device.

2.2 The Stereo Vision Device

This stereo vision device consists of two Logitech QuickCam Pro webcams as shown in Fig. 4. It is used to capture stereo images with a resolution of 320 × 240 pixels at a rate of capture of 30 frames per second. The captured images are transmitted to the laptop computer via USB ports. The images are then used to identify, and calculate the positions of, the object and the gripper in 3-D space.

2.3 The Laptop Computer and Software

In this study, a PC (laptop computer) is used as the control center. The CPU is an Intel Core 2 Dual P8600 which runs at 2.4 GHz with 2 GB RAM. Borland C++ Builder is chosen as the development software and is applied to implement the robot arm control and stereo vision recognition algorithms. After the stereo vision device captures the images, the software processes and binarizes the images to identify the target object. Subsequently, the center point of target object is designated as the gripper’s desired position. Then, the green marks on the gripper are identified from the captured stereo vision images and a motion plan is calculated to ensure that the robot arm can successfully move the gripper to the goal position. Finally, the gripper grasps the target object.

2.4 The Batteries

The batteries consist of a Li-ion battery pack and two lead–acid batteries. The lead–acid batteries provide the 24 V power for the SmartMotors by cascading two lead–acid batteries (a single lead–acid battery can provide 12 V of output power). The output voltage of the Li-ion battery pack is 7.4 V, and it provides the power for the AX-12 motors.

3 Stereo Vision Method for Object Position Measurement

The two webcams, W R and W L, respectively, capture several pairs of images (see Fig. 5) as the input images of the stereo vision. Based on those images, two tasks should be achieved; one is the target object identification and the other is the object position measurement. The target object (or gripper) identification method consists of some image-processing techniques such as color space transformation, binarization, morphologic operations, and connected components, and is used to find the target object in the captured images. Next, the image geometry is used to calculate the position of the target object (or the gripper) in 3-D space. The identification and positioning of the target object (or the gripper) are described as follows.

3.1 Target Object Identification

The target object identification is based on the color of object. In other words, a region is distinguished whether its color is the same with object’s color. If the region’s color is same with the object’s color, the region can be identified as the target object in the image. It is noted that the captured images use the RGB color model, but the particular color region is not easily to be distinguished in this color space. Hence, a color model transformation (1)–(3) is needed in which the RGB color model is transformed into the HSV (HSV means hue, saturation, and value) color model [19].

According to the color of the target object, the two images of the HSV color space are binarized into two binary images by setting suitable interval values of HSV. Then, morphologic operators [20] such as erosion, dilation, and connected component are used to filter the noise as shown in Fig. 6. To mark the target object, the connected component is applied to find the center of gravity of the target object and its coordinate value in those images, namely, (x L, y L) and (x R, y R). The identification result of a target object is shown in Fig. 7, in which the red points indicate the center of gravity of the target object. Those two coordinate values for the center of gravity of the target object will be used to measure the target object’s position in 3-D space.

The binarized and filtered images of Fig. 5

3.2 Target Object Position Measurement

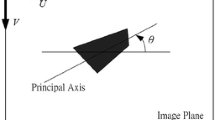

In order to measure the target object’s position in 3-D space, we first need to define the coordinates of the robot arm upon which the object-grasping system is constructed. We define the coordinates of this system as shown in Fig. 8, in which C R and C L are the positions in 3-D space of the two webcams W R and W L, respectively, and O w is the origin point of this system which is the center point between the two webcams. O t is the center point of the object plane. O L and O R are the center pixels of the W L image and the center pixel of the W R image, respectively. L indicates the distance between the two webcams. The hardware parameters are f L and f R which indicate the two webcam’s focal lengths. T point (x t, y t, z t) is the position of the target object and its 3-D coordinates. Once this system is defined, we can use the image geometry [21] to measure the position of the target object from the W L and W R images as shown in Eqs. (4)–(6). The obtained position T(x t, y t, z t) can be considered the goal point of the robot arm.

Then, the vision origin O w is assigned as the world origin O which means O w = O, in which the world coordinate is defined in Cartesian coordinate. However, due to the fact that the vision device is capable of rotation, there exists a three-phase difference in angle between the vision and the world coordinates, which can be divided into a pitch angle ψ x , a yaw angle ψ y , and a roll angle ψ z as shown in Fig. 9.

Hence, the three-phase rotation matrices are used to transform the stereo vision coordinate into the world coordinates as in Eq. (7) [22].

After the transformation, the positions (x t, y t, z t) of the stereo vision coordinate can be transformed into the positions (x, y, z) of the world coordinate. In addition, since there are no sensors such as resolvers or encoders on the robot arm with which to measure the rotation angles of all axes and estimate the position of the gripper, a similar method of object position measurement is applied to find the position of the gripper.

4 Robot Arm Inverse Kinematics Analysis

To make the robot arm and its gripper move to a desired position, we must first perform the inverse kinematics analysis. Figure 10 shows the linking structure of the robot arm, in which the shoulder joint is O r and is the origin point of the inverse kinematics coordinate. Q is the elbow joint, and G r(x r, y r, z r) is the position of gripper. \( \overline{{O_{\text{r}} Q}} \) and \( \overline{{QG_{\text{r}} }} \) indicate links 1 and 2 of the robot arm, respectively, the lengths of which are d 1 and d 2, respectively. In addition, there are three rotation angles; θ 1, θ 2, and θ 3, on each of the O r and Q points. For further geometric derivations, links 1 and 2 are projected on the Y r–Z r plane (see the green line on Fig. 10), where R and S denote that the projective points of G r and Q, respectively. The axis W is a referenced axis which extends from the projected link 1 on the Y r–Z r plane.

Let us provide three figures to introduce the deviations of the kinematics of the robot arm. Figure 11a shows a two-link arm plane, where \( L = \sqrt {x_{\text{r}}^{2} + y_{\text{r}}^{2} + z_{\text{r}}^{2} } \) is the distance between the gripper G r and O r. The elbow joint angle θ 3 is obtained as follows.

where

On the X r–W plane as shown in Fig. 11b, the lifting movement joint angle θ 2 on O r point can be derived as

Furthermore, Fig. 11c depicts the projected arm on the Y r–Z r plane, where \( L^{\prime} = \sqrt {y_{\text{r}}^{2} + z_{\text{r}}^{2} } . \) Consequently, the shoulder rotation joint angle θ 1 is obtained.

where

and

The gripper’s grasping angle is controlled by a rotation angle θ 4, so that θ 4 must be adjusted to accord with that the two pincers are perpendicular to the X r–Y r plane as shown in Fig. 12. θ 4 can be obtained from θ 2. Since the position of the gripper G r is the terminal point of robot arm’s motion, when axis 2 of the robot arm rotates to angle θ 2, the gripper should rotate to an inverse angle to cancel out the dependent rotation. Therefore, we can get the θ 4 as shown in Eq. (14). It is noted that the dual pincer gripper will fail to grasp any object if the two pincers are not perpendicular to the X–Y plane.

Since the stereo vision and the robot arm are not at the same coordinate, to successfully grasp a target object, the coordinates of the stereo vision are transformed into the coordinate of the robot arm as follows.

where G gr is the position of the gripper in the world coordinate, (O r − O) is the distance from the world origin to robot arm origin.

5 Fuzzy Control for Position Error Compensation

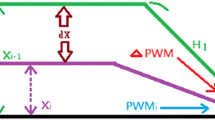

Practically, the motor backlash problems and the hardware uncertainties may affect the 3-D position accuracy of the inverse kinematics technique, i.e., the gripper can not accurately approach a desired position using only the inverse kinematics method as shown in Fig. 13. In Fig. 13, the G act point indicates the actual position of the gripper which has had its movement guided by the stereo vision device and the inverse kinematics method, and the G gr point indicates the desired position of the gripper. There is a position error between G gr and G act. Hence, this section presents a fuzzy control technique to compensate for position errors when using the stereo vision device. When the gripper is moved to a predicted position, the stereo vision device measures the gripper’s actual position as feedback and then calculates the position error. Subsequently, the fuzzy controller is used to reduce the position error. The fuzzy controller is described as follows.

To compensate for errors in the position, the deviation vector between G act and G gr is calculated as shown in Eq. (16), where D is the deviation and, e x , e y , and e z indicate deviation on the x-, y-, and z-axis, respectively.

Then, let us describe the design of the fuzzy control for error compensation using the deviation on the x-axis first. To compensate for the x-axis error, the fuzzy control rule table is designed as Table 3, in which e x is the deviation value on the x-axis and denotes the premise part; σ x is the compensation factor and denotes the consequent part. Both e x and σ x are decomposed into five fuzzy sets, including negative big (NB), negative small (NS), zero (ZO), positive small (PS), and positive big (PB). Figure 14 shows the membership functions of the premise part and the consequent part.

In Fig. 14, \( a_{x}^{i} \) (i = 1, 2,…,5) and \( u_{x}^{i} \) (i = 1, 2,…,5) are the parameters of the two membership functions. Considering the structure of the robot arm in this study, the parameters of the premise parts and consequent parts are assigned as Tables 4 and 5, respectively, to deal with the object-grasping task. Due to the structure defect of the real robot arm, its backlash is about 5° which cause the position error of the gripper will be around ±5.5 cm. Furthermore, it is hard to have exact mathematical equations to express the model of the robot arm. Therefore, those parameters in the premise part are set according to the experience of a lot of experiments. Since the output of the fuzzy control is the compensation factors, the consequent parts should be very small. Therefore, after many experiments to adjust those parameters of the consequent fuzzy sets, we have Table 5.

After the parameters are assigned, the center average defuzzification method is used to obtain the position compensation factor σ x as follows.

where \( u_{x}^{i} \) is the center value of the consequent part and B i(e x ) is the membership degree of the premise part. For the same reason, the compensation factors σ y and σ z can be found in a similar manner. Finally, the compensation factors σ i (i = x, y, z) are used to adjust the error as follows.

where u i are the compensation values which are used to compensate for the position error as Eq. (19).

where G com is the new position of the gripper for approaching to the desired position.

Overall, the error compensation for the robot arm with fuzzy controller is shown in Fig. 15. The stereo vision device captures the actual position of robot arm (G act) and calculates the deviation values (D). These deviation values are as the input into the fuzzy controller to obtain the compensation values for the robot arm (σ x , σ y , and σ z ). Subsequently, the compensation values are used to get the new position of the robot arm (G com) by inverse kinematics and then the gripper moves to the new position. The compensation process will be terminated until |e i | ≤ ρ i (i = x, y, z) are satisfied, where ρ i is an acceptable error threshold of the gripper position.

6 Experimental Results and Discussion

This section demonstrates a target object-grasping task which drives the robot arm to grasp and raise a thermos. To implement the experiment, some hardware parameters should be assigned as follows. The lengths of two links are d 1 = 17 cm and d 2 = 26 cm (see Fig. 10). The target object to be grasped in the experiment is a blue thermos. The distance between the webcams W L and W R is 3 cm and the focal lengths correspond to f L = f R = 265 pixels. The rotation between the world coordinate and the stereo vision coordinate is (ψ x , ψ y , ψ z ) = (27°, 0°, 60°). The error thresholds are assigned as ρ x = 2.5 cm, ρ y = 1.5 cm, and ρ z = 1.5 cm in this experiment. Since the thermos’s diameter of 7 cm is much smaller than the open range of the gripper, the error thresholds are acceptable. Figure 16 shows the whole process of the robot arm grasping the thermos. Table 6 shows the gripper position and its position errors. Firstly, the stereo vision system measures the position of the thermos which is T VD (11.52, −8.5, 41.98 cm) as shown in Fig. 16a. Then, the robot arm positions its gripper around the thermos as shown in Fig. 16b, and the iteration count i is 0 as shown in Table 6. Subsequently, Figure 16c, d shows that the robot arm compensates for its position errors, and the iteration counts i are 1 and 2 as in Table 6. When the gripper moves to a new position, the stereo vision device will measure the actual position of gripper and the fuzzy control is used to compensate for the position errors until the position errors satisfy the accuracy conditions (see Fig. 16c, d). The iteration count i starts from 0 and it can be considered a count of the instances of position compensation. In other words, when i = 0, the robot arm moves to the desired position using only inverse kinematics method without fuzzy position error compensation. i = 1 indicates that the robot arm uses fuzzy control to compensate the position error once. In this experiment, the robot arm compensates the position errors twice (i = 2). In Table 6, it is seen that the position error is reduced when using fuzzy control to compensate the gripper’s position error. As a result of the compensation, the gripper is then in the proper grasping location (12.99, −9.13, 40.52 cm). Figure 17 shows that the position errors e x , e y , and e z are reduced to within the error thresholds ρ x , ρ y , and ρ z in the iteration i = 2. Finally, the robot arm successfully grasps the thermos and raises it as shown in Fig. 16e, f.

In order to examine the robot’s grasping ability, we placed the thermos in five different locations (A–E) in the workspace as shown in the first row of Tables 7, 8, 9, 10, and 11. The following rows of Tables 7, 8, 9, 10, and 11 show the gripper position errors for each iteration count. Figures 18, 19, 20, 21, and 22 illustrate the position errors for each iteration count. As can be seen, the experiment results reveal that the robot arm can successfully approach the thermos, since all position errors are smaller than the given threshold values (ρ x = 2.5 cm, ρ y = 1.5 cm and ρ z = 1.5 cm) in the final iteration. After the robot arm reaches and grasps the thermos, the robot arm raises the thermos approximately 10 cm to ensure that the thermos is assuredly caught and then the grasping task is finished. However, the error compensation processing may increase the value of each position error of the gripper. Comparing the position errors between the first and second iteration, the first position errors in the first iteration are smaller than that of second iteration. Because of the recoil from the gearbox and the inertia produced by the movement of the robot arm, the adjustment exceeds the compensation values. Similar situations are shown in Fig. 19 when the iteration count is 4 and in Fig. 21 when the iteration is 3. But, in these instances there is still a decrease in the position errors on the following iteration when compared to the previous iteration. In brief, the position errors tend to decrease regardless of the gearbox recoil or the inertia produced by the robot arm moving. Hence, the proposed method can reliably control the robot arm so as to effectively grasp an object.

In Tables 6, 7, 8, 9, and 10, it has to be emphasized that when i = 0, the position error is achieved only using inverse kinematics without fuzzy control, but after i = 1, the position error is reduced continuously because of the aids of fuzzy control compensation.

Herein, we have some discussion to compare the proposed method with the papers [23–25]. The paper [23] proposed a PID control algorithm and implemented a ball-grasping task for robot arm. Since it used the encoder’s signal as the feedback, the accumulated error may occur after a sequence of movement. The paper [24] adopted extended Kalman filtering algorithm to identify the geometric parameters of the model of the robot arm, and then used the artificial neural network to compensate the position errors. In the proposed method, we do not need the mathematic model of the robot arm and any expensive sensor to measure the position of the robot. All we need are the 3-D position measurement by the stereo version and inverse kinematics with fuzzy control for error compensation. The paper [25] also used stereo cameras to calculate the correction vector by the difference between the robot arm’s end-effector from inverse kinematics and vision. Then, the robot arm compensates its position error according to the correction vector. However, their compensation value is obtained by some calculation; it still may have a little error because of the real mechanism uncertainty such as backlash or friction. In our method, the robot arm can adjust the compensation values by fuzzy control method all the way.

Furthermore, the proposed method has two advantages which are low cost and high efficiency. Low cost is that only a stereo vision device is needed as the sensor. High efficiency is that using fuzzy control to compensate the error at final step. Therefore, any uncertainty and backslash problems are easy to be dealt with for the movement of the robot arm.

7 Conclusion

This study has described a method to achieve an object-grasping task using only a stereo vision device to trace and guide the motion of the robot arm. The stereo vision device identifies the position of the target object and gripper using color space transformation, morphologic operation, and 3-D position measurement. After obtaining those positions, there is usually a position error between the gripper and target, due to recoil from the gearbox and inertia produced by the movement of the robot arm. In order to compensate for these errors, the fuzzy compensation method is proposed to generate compensation values for each axis. The method is designed according to a principle that when the error in position is small, the movement required to compensate for that error is also relatively small. Then, fuzzy compensation method integrates the compensation values and inverse kinematics to estimate and drive the gripper to a new compensative position.

Several experiments are given to demonstrate the implementation of the proposed object-grasping method. The fuzzy error compensation regulates the position of the gripper until the position error satisfies acceptable error thresholds. Therefore, the robot arm can successfully approach the target object and raise it under the guidance of the stereo vision device in all the experiments.

The benefit of using the proposed method is that the robot arm does not need external sensors such as accelerometers or resolvers to measure the degree of rotation on each axis. Thus, the cost for building the robot arm platform can be reduced.

References

Song, K.-T., Tsai, S.-C.: Vision-based adaptive grasping of a humanoid robot arm. In: Proceedings of the IEEE International Conference on Automation and Logistics, Zhengzhou, China, August 2012, pp. 155–160

Yang, Y., Cao, Q.-X.: Monocular vision based 6D object localization for service robot’s intelligent grasping. Comput. Math. Appl. 64(5), 1235–1241 (2012)

Kim, H.-H., Kim, D.-J., Park, K.-H.: Robust elevator button recognition in the presence of partial occlusion and clutter by specular reflections. IEEE Trans. Ind. Electron. 59(3), 1597–1611 (2012)

Aleotti, J., Caselli, S.: Interactive teaching of task-oriented robot grasps. Robot. Auton. Syst. 58(5), 539–550 (2010)

Munashnghe, S.R., Nakamura, M., Goto, S., Kyura, N.: Optimum contouring of industrial robot arms under assigned velocity and torque constraints. IEEE Trans. Syst. Man Cybern. C 31(2), 159–167 (2001)

Kennedy, C.W., Desai, J.P.: Modeling and control of the Mitsubishi PA-10 robot arm harmonic drive system. IEEE ASME Trans. Mechatron. 10(3), 263–274 (2005)

Shen, W., Gu, J., Milios, E.E.: Self-configuration fuzzy system for inverse kinematics of robot manipulators. In: Proceedings of the Annual Meeting of the North American Fuzzy Information Processing Society, Montreal, QC, Canada, June 2006, pp. 41–45

Feliu, V., Somolinos, J.A., Garcia, A.: Inverse dynamics based control system for a three-degree-freedom flexible arm. IEEE Trans. Robot. Autom. 19(6), 1007–1014 (2003)

Shimizu, M., Kakuya, H., Yoon, W.-K., Kitagaki, K., Kosuge, K.: Analytical inverse kinematics computation for 7-DOF redundant manipulators with joint limits and its application to redundancy resolution. IEEE Trans. Robot. 24(5), 1131–1142 (2008)

Wang, W.-J., Huang, C.-H., Lai, I.-H., Chen, H.-C.: A robot arm for pushing elevator buttons. In: Proceedings of SICE Annual Conference, Taipei, Taiwan, August 2010, pp. 1844–1848

Nilsson, A., Holmberg, P.: Combining a stable 2-D vision camera and an ultrasonic range detector for 3-D position estimation. IEEE Trans. Instrum. Meas. 43(2), 272–276 (1994)

Baek, J.-Y., Lee, M.-C.: A study on detecting elevator entrance door using stereo vision in multi floor environment. In: Proceedings of ICROS-SICE International Joint Conference, Fukuoka, Japan, August 2009, pp. 1370–1373

Fraser, C.S., Cronk, S.: A hybrid measurement approach for close-range photogrammetry. ISPRS J. Photogramm. Remote Sens. 64(3), 328–333 (2009)

van den Heuvel, F.A.: 3D reconstruction from a single image using geometric constraints. ISPRS J. Photogramm. Remote Sens. 53(6), 354–368 (1998)

Zhang, D.-H., Liang, J., Guo, C.: Photogrammetric 3D measurement method applying to automobile panel. In: Proceedings of the 2nd International Conference on Computer and Automation Engineering (ICCAE), Singapore, February 2010, pp. 70–74

Egami, T., Oe, S., Terada, K., Kashiwagi, T.: Three dimensional measurement using color image and movable CCD system. In: Proceedings of the 27th Annual Conference on IEEE Industrial Electronics Society, Denver, Colorado, USA, November 2001, pp. 1932–1936

Hsu, C.-C., Lu, M.-C., Wang, W.-Y., Lu, Y.-Y.: Three-dimensional measurement of distant objects based on laser-projected CCD images. IET Sci. Meas. Technol. 3(3), 197–207 (2009)

Aguilar, J.J., Torres, F., Lope, M.A.: Stereo vision for 3D measurement: accuracy analysis, calibration and industrial applications. Measurement 18(4), 193–200 (1996)

Feng, L., Xiaoyu, L., Yi, C.: An efficient detection method for rare colored capsule based on RGB and HSV color space. In: IEEE International Conference on Granular Computing, Noboribetsu, Japan, , October 2014, pp. 175–175

Laganière, R.: OpenCV2 Computer Vision Application Programming Cookbook. Packt Publishing, Birmingham (2011)

Jain, R., Kasturi, R., Schunk, B.G.: Machine Vision. McGraw-Hill, New York (1995)

Kim, B.S., Lee, S.H., Cho, N.I.: Real-time panorama canvas of natural images. IEEE Trans. Consum. Electron. 57(4), 1961–1968 (2011)

Su, J., Zhang, Y.: Integration of a plug-and-play desktop robotic system. Robotica 27, 403–409 (2009)

Nguyen, H.-N., Zhou, J., Kang, H.-J.: A calibration method for enhancing robot accuracy through integration of an extended Kalman filter algorithm and an artificial neural network. Neurocomputing 151, 996–1005 (2015)

DiCicco, M., Bajracharya, M., Nickels, K., Backes, P.: The EPEC algorithm for vision guided manipulation: analysis and validation. In: Proceedings of the IEEE Aerospace Conference, Big Sky, Montana, March 2007, pp. 1–11

Acknowledgments

The authors like to thank the Ministry of Science and Technology of Taiwan for its support under Contracts MOST 103-2221-E-008-001-.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chang, JW., Wang, RJ., Wang, WJ. et al. Implementation of an Object-Grasping Robot Arm Using Stereo Vision Measurement and Fuzzy Control. Int. J. Fuzzy Syst. 17, 193–205 (2015). https://doi.org/10.1007/s40815-015-0019-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40815-015-0019-2