Abstract

A new class of distributions with increasing, decreasing, bathtub-shaped and unimodal hazard rate forms, called the generalized quadratic hazard rate-power series distribution, is proposed. The new distribution is obtained by compounding the generalized quadratic hazard rate and power series distributions. This class contains several important distributions that have appeared in the literature, such as the generalized quadratic hazard rate-geometric, -Poisson, -logarithmic, -binomial and -negative binomial distributions, as special cases. We provide comprehensive mathematical properties of the new distribution. We obtain closed-form expressions for the density function, cumulative distribution function, survival and hazard rate functions, moments, mean residual life, mean past lifetime, order statistics and moments of order statistics; certain characterizations of the proposed distribution are presented as well. The special distributions are studied in some detail. The maximum likelihood method is used to estimate the unknown parameters, and we propose to use the EM algorithm to compute the maximum likelihood estimators. It is observed that the proposed EM algorithm can be implemented very easily in practice. One data set is analyzed for illustrative purposes. It is observed that the proposed model and the EM algorithm work quite well in practice.

1 Introduction

The modeling and analysis of lifetime phenomena play a prominent role in a wide variety of scientific and technological fields. Recently, several new lifetime distributions have been proposed. Mudholkar and Srivastava [35] and Mudholkar et al. [36] introduced a generalization of the Weibull distribution called the generalized Weibull (GW) distribution. The generalized exponential (GE) distribution was presented by [19]. Nadarajah and Kotz [37] introduced four generalized (exponentiated) type distributions: the exponentiated gamma, exponentiated Weibull, exponentiated Gumbel and exponentiated Fréchet distributions. Sarhan and Kundu [48] proposed the generalized linear failure rate (GLFR) distribution and explained that it can have increasing, decreasing and bathtub-shaped hazard rate functions, which are quite desirable for data analysis purposes. Recently, Sarhan [47] proposed the generalized quadratic hazard rate (GQHR) distribution. This distribution is more general than several well-known distributions such as the GE, GLFR and generalized Rayleigh (GR) distributions. In addition, the GQHR distribution can have increasing, bathtub-shaped, unimodal and inverted bathtub-shaped hazard rate functions.

A new generalization of the quadratic hazard rate distribution, called the Kumaraswamy quadratic hazard rate (KQHR) distribution, was introduced by [15]. Another distribution, called the beta QHR distribution, was investigated by [33]. Okasha et al. [40] introduced the QHR-geometric distribution.

Several distributions have been proposed in the literature to model lifetime data by compounding some useful lifetime distributions. Adamidis and Loukas [1] introduced a two-parameter distribution known as the exponential-geometric (EG) distribution by compounding an exponential distribution with a geometric distribution. Kundu and Raqab [27] introduced the generalized Rayleigh distribution. Kuş [29] and Tahmasbi and Rezaei [51] introduced the exponential-Poisson (EP) and exponential-logarithmic (EL) distributions, respectively. Recently, Chahkandi and Ganjali [12] proposed the exponential power series (EPS) class of distributions, which contains these distributions as special cases. Some other recent works are: exponentiated exponential-Poisson (EEP) by [7]; Weibull-geometric (WG) by [8]; Weibull-power series (WPS) by [34]; complementary exponential power series by [16]; extended Weibull-power series (EWPS) distribution by [49]; double bounded Kumaraswamy power series distribution by [9]; the Burr-XII power series distribution by [50]; the complementary Weibull geometric distribution by [52]; the Birnbaum-Saunders power series distribution by [11]; the complementary extended Weibull power series distribution by [14]; the Burr-XII negative binomial distribution by [42]; the generalized linear failure rate-power series (GLFRPS) distribution by [20]; the bivariate exponentiated extended Weibull family of distributions by [43]; the quadratic hazard rate power series distribution by [45]; the generalized modified Weibull power series distribution by [4]; and the power series skew normal class of distributions by [44]. For compounding continuous distributions with a discrete distribution, Nadarajah et al. [38] introduced the package Compounding in the R software (R Development Core Team, 2014).

We are motivated to introduce our new distribution by (i) the wide usage of the general class of quadratic hazard rate distributions; (ii) the fact that the proposed model has some interesting physical interpretations, so that it may be more flexible than the existing models and gives the practitioner one more option when choosing a model for analyzing data; and (iii) the stochastic representation \(Y=\min {(X_1, \ldots , X_n)}\), which arises in series systems with identical components in many industrial applications and biological organisms.

The main purpose of this paper is to introduce the GQHRPS distribution and to study some of its properties. This new model has the following distributions as special cases: (i) the QHR, (ii) the generalized linear failure rate power series (GLFRPS), (iii) the generalized Rayleigh power series and (iv) the generalized exponential power series (GEPS) distributions.

First we derive the GQHRPS distribution by compounding the GQHR distribution with the power series class of distributions. We note that the GQHR distribution has several interesting properties. We obtain these properties and also provide the marginal and conditional distributions of the GQHRPS distribution. The proposed class has five unknown parameters. The maximum likelihood estimators (MLEs) cannot be obtained in closed form; they must be obtained by solving five non-linear equations simultaneously. The standard Newton–Raphson algorithm may be used for this purpose, but it requires a very good choice of initial guesses for the five parameters. Otherwise, it has the standard problem of converging to a local maximum rather than the global maximum. We therefore propose to use the EM algorithm, whose implementation is observed to be very simple in practice. We have analyzed one data set for illustrative purposes, and the performance is quite satisfactory.

The rest of the paper is organized as follows. In Sect. 2 we introduce the new class of generalized quadratic hazard rate power series distributions. The density, survival, failure rate and moment generating functions, as well as the moments, mean residual life, mean past lifetime, quantiles, the stress–strength parameter and order statistics, are given in Sect. 3. In Sect. 4 we discuss some special cases and study some of their distributional properties in detail. Certain characterizations of the GQHRPS distribution are presented in Sect. 5. Estimation of the parameters is discussed in Sect. 6. The standard errors of the estimates and a simulation study are given in Sects. 7 and 8, respectively. An application is considered in Sect. 9 to show the flexibility and potential of the new distribution. Finally, some concluding remarks are presented in Sect. 10.

2 The GQHRPS Distribution

The four-parameter distribution known as the generalized quadratic hazard rate (GQHR) distribution was introduced by [47]. The cumulative distribution function (cdf) of the GQHR distribution is given by

\[ G(x)=\left( 1-\exp (-v_{x}) \right) ^{\gamma }, \quad x>0, \tag{1} \]

where \(\alpha \ge 0\), \(\beta \ge 0\), \(\gamma >0\) and \(\lambda \ge -2\sqrt{\alpha \beta }\) are parameters and \(v_{x}=\alpha x+ (\lambda /2 )x^2 + (\beta /3) x^3\).

Given N, let \({X_1, \ldots , X_N}\) be independent and identically distributed (i.i.d.) random variables following a GQHR distribution with cdf (1). Here N is independent of the \(X_{i}\)'s and is a member of the family of power series distributions, truncated at zero, with probability mass function (pmf)

\[ P(N=n)=\frac{a_{n}\theta ^{n}}{A(\theta )}, \quad n=1,2,\ldots , \tag{2} \]

where \(a_{n} \ge 0\) depends only on n, \(A(\theta )=\sum _{n=1}^{\infty }a_{n}{\theta }^n\), and \({\theta }\in (0,s)\) (s can be \(+\infty \)) is such that \(A(\theta )\) is finite and its first, second and third derivatives exist and are denoted by \(A^{\prime }(.), A^{\prime \prime }(.)\) and \(A^{\prime \prime \prime }(.)\). Table 1 shows some power series distributions (truncated at zero) such as the Poisson, geometric, logarithmic, binomial and negative binomial (with m being the number of replicates) distributions.

Note that probability distributions of the form (2) have been considered in [10, 41]. For more properties of this class of distributions, see [39].

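Now, let \(X_{(1)}=\min \{ X_1, X_2, \ldots , X_N\}\). The conditional cdf of \(X_{(1)}|N=n\) is given by

\[ F_{X_{(1)}|N=n}(x)=1-\left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] ^{n}, \quad x>0. \tag{3} \]

Observe that \(X_{(1)}|N=n\) follows a KQHR distribution with parameters \(\alpha , \lambda , \beta , \gamma \), and n, which was defined by [15] and is denoted by \(KQHR(\alpha , \lambda , \beta , \gamma , n)\). The marginal cdf of \(X_{(1)}\),

\[ F(x)=\sum _{n=1}^{\infty }\frac{a_{n}\theta ^{n}}{A(\theta )}\left\{ 1-\left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] ^{n}\right\} =1-\frac{A\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }{A(\theta )}, \quad x>0, \tag{4} \]

defines the cdf of the GQHRPS distribution. We denote a random variable X following the GQHRPS distribution with parameters \(\alpha , \lambda , \beta , \gamma \), and \(\theta \) by \( X \sim GQHRPS(\alpha , \lambda , \beta , \gamma , \theta )\).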

The proposed class of distributions includes the lifetime distributions presented by [47] (generalized quadratic hazard rate distribution), [31] (generalized exponential power series distribution), Flores et al. [16] (complementary exponential power series distribution), Harandi and Alamatsaz [20] (generalized linear failure rate power series distribution), and Okasha et al. [40] (quadratic hazard rate-geometric distribution), among others.
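As a minimal numerical sketch of the construction above, the marginal cdf of the minimum can be evaluated as one minus \(A(\theta S(x))/A(\theta )\), where \(S(x)\) is the GQHR survival function. The function names, the parameter values and the two series functions \(A\) (geometric and Poisson, in their usual zero-truncated power series forms) are illustrative assumptions, not taken from the paper.

```python
import math

def v(x, alpha, lam, beta):
    """Cumulative hazard v_x = alpha*x + (lam/2)*x^2 + (beta/3)*x^3."""
    return alpha * x + 0.5 * lam * x**2 + beta * x**3 / 3.0

def gqhr_cdf(x, alpha, lam, beta, gamma):
    """GQHR cdf (1 - exp(-v_x))^gamma for x > 0, and 0 otherwise."""
    if x <= 0:
        return 0.0
    return (1.0 - math.exp(-v(x, alpha, lam, beta))) ** gamma

def gqhrps_cdf(x, alpha, lam, beta, gamma, theta, A):
    """Marginal cdf of the minimum: 1 - A(theta * S(x)) / A(theta),
    where S(x) = 1 - G(x) is the GQHR survival function."""
    s = 1.0 - gqhr_cdf(x, alpha, lam, beta, gamma)
    return 1.0 - A(theta * s) / A(theta)

# Assumed series functions for two zero-truncated power series laws.
A_geometric = lambda t: t / (1.0 - t)      # a_n = 1, theta in (0, 1)
A_poisson = lambda t: math.exp(t) - 1.0    # a_n = 1/n!, theta > 0

# Hypothetical parameter values, chosen only for illustration.
params = dict(alpha=1.0, lam=0.5, beta=0.2, gamma=1.5)
values = [gqhrps_cdf(x, theta=0.6, A=A_geometric, **params)
          for x in (0.0, 0.5, 1.0, 5.0)]
print(values)
```

Passing `A` as a function keeps the sketch generic: any other member of the power series family can be tried by supplying its own series function.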

3 Statistical and Reliability Properties

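In this section we derive the probability density function (pdf), survival function, hazard rate function, reversed hazard rate function, mean residual life (MRL) and mean past lifetime (MPL) functions. The pdf of a random variable \(X \sim GQHRPS(\alpha , \lambda , \beta , \gamma , \theta )\) is given by

\[ f(x)=\theta \gamma \, v^{\prime }(x) \exp (-v_{x}) \left( 1-\exp (-v_{x}) \right) ^{\gamma -1}\, \frac{A^{\prime }\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }{A(\theta )}, \quad x>0, \tag{5} \]

where \(v^{\prime }(x)=\alpha +\lambda x+\beta x^{2}\).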

Proposition 3.1

The KQHR distribution with parameters \(\alpha , \lambda , \beta , \gamma \), and c is a limiting distribution of the GQHRPS distribution when \( \theta \rightarrow 0^{+}\), where \(c=\min \{ n \in \mathbf{N }: a_n > 0 \}\).

Proof

\(\square \)
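The limiting argument can be sketched as follows, assuming the power series may be handled term by term near \(\theta =0\); the shorthand \(S(x)=1-\left( 1-\exp (-v_{x}) \right) ^{\gamma }\) is introduced here for convenience and is not the paper's notation. Since \(a_n=0\) for \(n<c\), dividing numerator and denominator by \(\theta ^{c}\) gives

\[ \lim _{\theta \rightarrow 0^{+}} F(x)=1-\lim _{\theta \rightarrow 0^{+}}\frac{a_{c}S(x)^{c}+\sum _{n=c+1}^{\infty }a_{n}\theta ^{n-c}S(x)^{n}}{a_{c}+\sum _{n=c+1}^{\infty }a_{n}\theta ^{n-c}}=1-S(x)^{c}, \]

which is the cdf of the \(KQHR(\alpha , \lambda , \beta , \gamma , c)\) distribution.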

Proposition 3.2

The pdf of GQHRPS distribution can be written as a mixture of the density function of KQHR distribution with parameters \(\alpha , \lambda , \beta , \gamma \), and n.

Proof

We know that \(A^{\prime }(\theta )=\sum _{n=1}^{\infty } n a_n \theta ^{n-1}\). Therefore by Eq. (5), we have

where \(g(x;\alpha , \lambda , \beta , \gamma , n)\) is the density function of \(KQHR(\alpha , \lambda , \beta , \gamma , n)\) distribution. \(\square \)
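The mixture representation can be checked numerically. The sketch below compares the closed-form density \(\theta g(x)A^{\prime }(\theta S(x))/A(\theta )\) with a truncated mixture of KQHR densities \(n\, g(x) S(x)^{n-1}\) weighted by \(a_n\theta ^n/A(\theta )\), for the Poisson case \(a_n=1/n!\). Here \(g\) and \(S\) denote the GQHR density and survival function; the function names, the truncation point and the parameter values are assumptions made for illustration.

```python
import math

def gqhr_parts(x, alpha, lam, beta, gamma):
    """Return (g, S): the GQHR density and survival function at x > 0."""
    vx = alpha * x + 0.5 * lam * x**2 + beta * x**3 / 3.0
    dv = alpha + lam * x + beta * x**2          # derivative of v_x
    u = 1.0 - math.exp(-vx)
    g = gamma * dv * math.exp(-vx) * u ** (gamma - 1.0)
    return g, 1.0 - u ** gamma

def pdf_closed_form(x, theta, **p):
    """Poisson case: A(t) = exp(t) - 1, so A'(t) = exp(t)."""
    g, s = gqhr_parts(x, **p)
    return theta * g * math.exp(theta * s) / (math.exp(theta) - 1.0)

def pdf_mixture(x, theta, n_terms=60, **p):
    """Truncated mixture of KQHR(..., n) densities with power series weights."""
    g, s = gqhr_parts(x, **p)
    total = 0.0
    for n in range(1, n_terms + 1):
        weight = theta**n / math.factorial(n) / (math.exp(theta) - 1.0)
        total += weight * n * g * s ** (n - 1)
    return total

# Hypothetical parameter values, chosen only for illustration.
p = dict(alpha=1.0, lam=0.5, beta=0.2, gamma=1.5)
print(pdf_closed_form(0.7, theta=2.0, **p), pdf_mixture(0.7, theta=2.0, **p))
```

The two printed values should agree up to floating-point error, because the Poisson weights decay factorially and the truncated tail is negligible.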

It is well-known that an important measure of aging is the hazard rate function, defined as

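The survival and hazard rate functions of the GQHRPS distribution are given by

\[ {\overline{F}}(x)=\frac{A\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }{A(\theta )} \]

and

\[ h(x)=\theta \gamma \, v^{\prime }(x) \exp (-v_{x}) \left( 1-\exp (-v_{x}) \right) ^{\gamma -1}\, \frac{A^{\prime }\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }{A\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }, \]

respectively, with \(v^{\prime }(x)\) the derivative of \(v_{x}\) with respect to x.

Similarly, the reversed hazard rate function of the GQHRPS distribution is given by

\[ r(x)=\theta \gamma \, v^{\prime }(x) \exp (-v_{x}) \left( 1-\exp (-v_{x}) \right) ^{\gamma -1}\, \frac{A^{\prime }\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }{A(\theta )-A\left( \theta \left[ 1-\left( 1-\exp (-v_{x}) \right) ^{\gamma } \right] \right) }. \]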
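To explore hazard shapes numerically, a small sketch for the geometric case \(A(t)=t/(1-t)\) is given below. With \(g\) and \(S\) the GQHR density and survival function, the survival function of the compound model reduces to \(S(1-\theta )/(1-\theta S)\) and the hazard to \(g/\{S(1-\theta S)\}\); these simplifications, the function names and the parameter values are worked out here as assumptions rather than quoted from the paper.

```python
import math

def gqhr_parts(x, alpha, lam, beta, gamma):
    """GQHR density g(x) and survival function S(x) for x > 0."""
    vx = alpha * x + 0.5 * lam * x**2 + beta * x**3 / 3.0
    dv = alpha + lam * x + beta * x**2
    u = 1.0 - math.exp(-vx)
    return gamma * dv * math.exp(-vx) * u ** (gamma - 1.0), 1.0 - u ** gamma

def survival_gqhrg(x, theta, **p):
    """A(theta*S)/A(theta) with A(t) = t/(1-t)."""
    _, s = gqhr_parts(x, **p)
    return s * (1.0 - theta) / (1.0 - theta * s)

def hazard_gqhrg(x, theta, **p):
    """theta*g*A'(theta*S)/A(theta*S) with A'(t)/A(t) = 1/(t(1-t))."""
    g, s = gqhr_parts(x, **p)
    return g / (s * (1.0 - theta * s))

# Hypothetical parameters; lam = -0.4 satisfies lam >= -2*sqrt(alpha*beta).
p = dict(alpha=0.5, lam=-0.4, beta=0.3, gamma=2.0)
grid = [0.25 * k for k in range(1, 13)]
hazards = [hazard_gqhrg(x, theta=0.5, **p) for x in grid]
print(list(zip(grid, hazards)))
```

Evaluating the hazard on a grid like this is a quick way to see which of the increasing, decreasing, bathtub or unimodal shapes a given parameter choice produces.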

An alternative aging measure, widely used in applications, is the mean residual life (MRL) function, defined as

where E is the expectation operator.

Proposition 3.3

The MRL function of GQHRPS distribution is given by

where \(\varGamma (a,x)=\int _{x}^{\infty } e^{-t} t^{a-1} dt\) and

Proof

See the “Appendix”. \(\square \)

The MPL function or mean waiting time function is a well-known reliability measure that has applications in many disciplines such as reliability theory and actuarial studies. The MPL function of a random variable X is defined by

As mentioned in [40], the MPL is a dual property of the MRL. The MPL function could be of interest for describing different maintenance strategies. Chandra and Roy [13] showed that the MPL function cannot decrease on (0, 1). Other interpretations, properties and applications of the MPL function can be found in [2, 3, 22, 25, 26] and the references therein. The next proposition provides the MPL of the GQHRPS distribution.

Proposition 3.4

The MPL of GQHRPS distribution is given by

where \(\gamma (a,x)=\int _{0}^{x} e^{-t} t^{a-1} dt\).

Proof

See the “Appendix”. \(\square \)

3.1 Moments and Moment Generating Function

Let Y be a random variable following the KQHR distribution with parameters \(\alpha , \lambda , \beta , \gamma \) and n. Elbatal and Butt [15] obtained the moment generating function (mgf) of the random variable Y as follows

where

Combining Eq. (6) and Proposition (3.2) yields the mgf of the GQHRPS distribution

The r-th moment of the KQHR distribution with parameters \(\alpha , \lambda , \beta , \gamma \) and n is given by

(see [15]). Thus, the r-th moment of the GQHRPS distribution is given by

Based on Eq. (7), the measures of variation, skewness and kurtosis of GQHRPS distribution can be obtained via the following relations:
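As a hedged illustration, the standard relations between the first four raw moments and the variance, skewness and kurtosis can be coded as below. The function name is hypothetical, and the raw moments are assumed to be supplied by the caller, for example by numerical evaluation of Eq. (7).

```python
def moment_summaries(m1, m2, m3, m4):
    """Variance, skewness and kurtosis from raw moments m_r = E(X^r)."""
    var = m2 - m1**2
    # Third and fourth central moments expressed through raw moments.
    mu3 = m3 - 3.0 * m1 * m2 + 2.0 * m1**3
    mu4 = m4 - 4.0 * m1 * m3 + 6.0 * m1**2 * m2 - 3.0 * m1**4
    skewness = mu3 / var**1.5
    kurtosis = mu4 / var**2
    return var, skewness, kurtosis

# Sanity check with the unit exponential, whose raw moments are r!.
print(moment_summaries(1.0, 2.0, 6.0, 24.0))
```

For the unit exponential distribution the function returns variance 1, skewness 2 and kurtosis 9, which are the known values for that law.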

3.2 Order Statistics

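Let \(X_1, \ldots , X_n\) be a random sample from a GQHRPS distribution and let \(X_{i:n}\), \(i=1,2,\ldots , n\), denote its i-th order statistic. The pdf of \(X_{i:n}\) is given by

\[ f_{i:n}(x)=\frac{n!}{(i-1)!\,(n-i)!}\, f(x)\, F(x)^{i-1}\, {\overline{F}}(x)^{n-i}, \tag{8} \]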

where f, F, and \({\overline{F}}\) are the pdf, cdf, and survival function of GQHRPS distribution, respectively. Equation (8) can be written in the following forms

or

In view of the fact that

the cdf corresponding to \(f_{i:n}(x)\), denoted by \(F_{i:n}(x)\), becomes

An alternative expression for \(F_{i:n}(x)\), using Equation (10), is

The Gauss hypergeometric function \(_2F_1(a,b;c;z)\) is a particular case of the generalized hypergeometric function and is defined by

\[ _2F_1(a,b;c;z)=\sum _{m=0}^{\infty }\frac{(a)_m (b)_m}{(c)_m}\frac{z^{m}}{m!}, \]

where \((a)_m=\dfrac{\varGamma (a+m)}{\varGamma (a)}\) is the Pochhammer symbol with the convention that \((0)_0=1\). The next proposition states \(F_{i:n}(x)\) in terms of the Gauss hypergeometric function.

Proposition 3.5

For all \(1\le i \le n\) and \(x \ge 0\),

is a polynomial in B, where

Moreover, \(F_{n:n}(x)=B^n\)

Proof

See the “Appendix”. \(\square \)

Expressions for moments of the ith order statistics \(X_{i:n}\), \(i=1,2,\ldots , n\), with cdf \(F_{i:n}(x)\) can be obtained using a result of [6] as follows:

for \(r=1,2, \ldots \) and \(i=1,2, \ldots , n\). One application of the first moment of order statistics is the calculation of L-moments, which are linear combinations of expected order statistics; see [21] for details.

By inserting the pdf and cdf of GQHRG distribution into Eq. (10), we obtain the pdf of the i-th order statistics of GQHRG distribution as follows:

where B and A are defined as above. By definition of density function of KQHR distribution in [15], the pdf of the i-th order statistics of GQHRG distribution can be written as

where \(f_{KQHR}\) is the density function of the KQHR distribution. As we see, the pdf of the order statistics of the GQHRG distribution can be expressed as a linear combination of pdfs of the KQHR distribution. Therefore, some properties of the i-th order statistic, such as the mgf and moments, can be obtained directly from those of the KQHR distribution. For example, the moments of the i-th order statistic of the GQHRG distribution are given by

for \(r=1,2,\ldots \), where

3.3 Stress–Strength Parameter of the QHRG Distribution

The stress–strength parameter \(R = P(X >Y)\) is a measure of component reliability and its estimation problem when X and Y are independent and follow a specified distribution has been discussed widely in the literature. Let X be the random variable of the strength of a component which is subjected to a random stress Y. The component fails whenever \(X <Y\) and there is no failure when \(X >Y\). Here, we obtain an expression for the stress–strength parameter of the QHRG distribution.

Let \(X\sim QHRG(\alpha , \lambda , \beta , \theta _1)\) and \(Y \sim QHRG(\alpha , \lambda , \beta , \theta _2)\) be independent random variables. The stress–strength parameter is defined as

Changing the variable \(u=\exp (- v_x) \), we obtain

where \(E=\dfrac{\theta _2^2 (\theta _2-1)}{\theta _2^2-2\theta _1^2+\theta _1}\), \(F=\dfrac{\theta _2^2-2\theta _1^2- \theta _1\theta _2+2\theta _1}{\theta _2^2-2\theta _1^2+\theta _1}\), and \(G=\dfrac{\theta _1\theta _2-\theta _1}{\theta _2^2-2\theta _1^2+\theta _1}\).

4 Special Cases of the GQHRPS Distribution

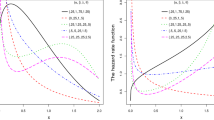

In this section, we study basic distributional properties of the generalized quadratic hazard rate-geometric (GQHRG), generalized quadratic hazard rate-Poisson (GQHRP), generalized quadratic hazard rate-logarithmic (GQHRL), generalized quadratic hazard rate-binomial (GQHRB) and generalized quadratic hazard rate-negative binomial (GQHRNB) distributions as special cases of GQHRPS distribution. In addition, expressions for the pdf and moments of order statistics as well as the stress–strength parameter of the QHRG distribution are obtained. First, to illustrate the flexibility of the distributions, plots of the density and hazard rate functions are presented in Figs. 1 and 2 for some selected values of parameters.

Using Table 1 and some equations in Sect. 2, basic distributional properties of the five special distributions of GQHRPS model are immediately obtained. Table 2 contains the survival function, pdf, hazard rate, MRL, and MPL functions of the GQHRG, GQHRP, GQHRL, GQHRB, and GQHRNB distributions.

where \(A=\left[ 1- \left( 1-\exp {(-v_{x})} \right) ^{\gamma } \right] ,\) \(B=\gamma v^{\prime }(x) \exp {(-v_{x})} \left( 1-\exp {(-v_{x})} \right) ^{\gamma -1},\)

and

5 Characterizations

This section is devoted to certain characterizations of GQHRPS distribution. These characterizations are based on: (i) a simple relation between two truncated moments and (ii) the hazard function. One of the advantages of the characterization (i) is that the cdf is not required to have a closed form.

We present our characterizations (i)–(ii) in two subsections.

5.1 Characterizations in Terms of the Ratio of Two Truncated Moments

In this subsection we present characterizations of GQHRPS distribution in terms of a simple relationship between two truncated moments. This characterization result employs a theorem due to [17], see Theorem 1 of “Appendix A”. Note that the result holds also when the interval H is not closed. Moreover, as mentioned above, it could also be applied when the cdf F does not have a closed form. As shown in [18], this characterization is stable in the sense of weak convergence.

Proposition 5.1

Let \(X:\varOmega \rightarrow \left( 0,\infty \right) \) be a continuous random variable and let \(q_{1}\left( x\right) =\left\{ A^{\prime }\left( \theta \left[ 1-\left( 1-\exp \left( -\upsilon _{x}\right) \right) ^{\gamma }\right] \right) \right\} ^{-1}\) and \(q_{2}\left( x\right) =q_{1}\left( x\right) \left( 1-\exp \left( -\upsilon _{x}\right) \right) ^{\gamma }\) for \(x>0.\) The random variable X has pdf (5) if and only if the function \(\eta \) defined in Theorem 1 has the form

Proof

Let X be a random variable with pdf (5). Then

and

and finally

Conversely, if \(\eta \) is given as above, then

and hence

Now, in view of Theorem 1, X has density (5). \(\square \)

Corollary 5.1

Let \(X:\varOmega \rightarrow \left( 0,\infty \right) \) be a continuous random variable and let \(q_{1}\left( x\right) \) be as in Proposition 5.1. The pdf of X is (5) if and only if there exist functions \(q_{2}\) and \(\eta \) defined in Theorem 1 satisfying the differential equation

The general solution of the differential equation in Corollary 5.1 is

where D is a constant. Note that a set of functions satisfying the above differential equation is given in Proposition 5.1 with \(D=\frac{1}{2}.\) However, it should also be noted that there are other triplets \(( q_{1},q_{2},\eta ) \) satisfying the conditions of Theorem 1.

5.2 Characterization Based on Hazard Function

It is known that the hazard function, \(h_{F}\), of a twice differentiable distribution function, F, with density f satisfies the first order differential equation

\[ \frac{f^{\prime }(x)}{f(x)}=\frac{h_{F}^{\prime }(x)}{h_{F}(x)}-h_{F}(x). \]

For many univariate continuous distributions, this is the only characterization available in terms of the hazard function. The following proposition establishes a non-trivial characterization of the GQHRPS distribution which is not of the above trivial form.

Proposition 5.2

Let \(X:\varOmega \rightarrow \left( 0,\infty \right) \) be a continuous random variable. The pdf of X is (5) if and only if its hazard function \(h_{F}\left( x\right) \) satisfies the differential equation

\(x>0.\)

Proof

If X has pdf (5), then clearly the above differential equation holds. Conversely, if this differential equation holds, then

\(x>0\) , from which, we obtain

which is the hazard function of GQHRPS distribution. \(\square \)

Remark 5.1

For \(\gamma =1\) , we have a simpler differential equation in terms of the hazard function

6 Parameters Estimation via the EM Algorithm and Simulation Studies

In this section, we compute the maximum likelihood estimates (MLEs) of the parameters via the EM algorithm. The EM algorithm is a method for computing MLEs when some of the variables have missing values. It is an iterative procedure consisting of two steps. In the E step, we compute the expectation of the complete-data log-likelihood, which involves both observed and unobserved data, given the observed data. In the M step, the parameter estimates are updated by maximizing the function obtained in the E step. This iteration is repeated until convergence.

In this paper, we assume that N is a missing variable and denote it by Z and we define a hypothetical complete data distribution with a joint probability density function in the form

where \(\varTheta =(\alpha ,\lambda ,\beta ,\gamma ,\theta )\) denotes the set of parameters. In the \((t+1)\)th iteration of the EM algorithm, \(E(Z|X,\varTheta )\) is evaluated at \(\varTheta ^{(t)}\) where \(\varTheta ^{(t)}\) denotes the value of \(\varTheta \) obtained in the t-th iteration of the EM algorithm. So, we need to obtain the conditional distribution of Z given X. We have

So,

since \(A'(\theta )+\theta A''(\theta )=\sum _{z=1}^{\infty } z^2 a_z\theta ^{z-1}\).
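The identity above implies that, with \(t=\theta S(x)\) and \(S(x)=1-\left( 1-\exp (-v_{x})\right) ^{\gamma }\), the E-step quantity can be written as \(E(Z|x)=1+tA''(t)/A'(t)\). The sketch below checks this for the geometric case \(A(t)=t/(1-t)\) against a direct summation over the conditional pmf \(z a_z t^{z-1}/A'(t)\). The closed form, the function names and the numerical values are assumptions derived here, not expressions quoted from the paper.

```python
def e_step_geometric(s, theta):
    """E(Z | x) = 1 + t*A''(t)/A'(t) with t = theta*s, for A(t) = t/(1-t)."""
    t = theta * s
    a1 = 1.0 / (1.0 - t) ** 2      # A'(t)
    a2 = 2.0 / (1.0 - t) ** 3      # A''(t)
    return 1.0 + t * a2 / a1

def e_step_by_summation(s, theta, n_terms=2000):
    """Direct sum of z * P(Z = z | x), where P(z | x) = z * t^(z-1) / A'(t)
    because a_z = 1 in the geometric case."""
    t = theta * s
    a1 = 1.0 / (1.0 - t) ** 2
    return sum(z * z * t ** (z - 1) for z in range(1, n_terms + 1)) / a1

# s stands for the GQHR survival function evaluated at an observation x_i.
print(e_step_geometric(0.4, 0.7), e_step_by_summation(0.4, 0.7))
```

In an EM implementation this expectation would be evaluated once per observation at the current parameter values before the M step.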

The conditional expectation of the complete data log-likelihood given observed data is

In the M step, differentiating \(E(\ln L(\varTheta ;x,z|x))\) with respect to \(\theta \), we obtain the following recursive equation

Differentiating with respect to \(\gamma \), we have \({\gamma }=h(\gamma )\) where

We solve this equation with a simple iteration method, as in Kundu and Gupta [28]. Starting with an initial guess \(\gamma ^{(0)}\), we set \(\gamma ^{(1)}=h(\gamma ^{(0)})\), then \(\gamma ^{(2)}=h(\gamma ^{(1)})\), and so on. The process is continued until convergence is obtained.
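The simple iteration scheme can be sketched generically as below. The map `h` of the paper is not reproduced here; the toy map \(h(\gamma )=\sqrt{\gamma +2}\), whose fixed point is 2, together with the tolerance and the iteration cap, are purely illustrative assumptions.

```python
import math

def fixed_point(h, x0, tol=1e-10, max_iter=1000):
    """Iterate x <- h(x) until successive values differ by less than tol."""
    x = x0
    for _ in range(max_iter):
        x_new = h(x)
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("fixed-point iteration did not converge")

# Toy stand-in for the update map gamma = h(gamma); not the paper's h.
root = fixed_point(lambda g: math.sqrt(g + 2.0), x0=1.0)
print(root)
```

Convergence of such a scheme is only guaranteed when the map is a contraction near the solution, so a cap on the number of iterations is a sensible safeguard.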

\(\alpha \) is the solution of the following equation

where \(A_i^{(1)}=(a_i-1)\gamma \exp (-\nu _{x_{i}})\times \frac{(1-\exp (-\nu _{x_{i}}))^{\gamma -1}}{1-(1-\exp (-\nu _{x_{i}}))^{\gamma }}\).

Unfortunately, there is no closed form solution. We have employed the Newton–Raphson method to compute the solution. Using this method, the following recursive equation can be found

where

where \(A_i^{(2)}=(a_i-1)\gamma \exp (-\nu _{x_{i}})(1-\exp (-\nu _{x_{i}}))^{\gamma -2} \frac{\Big [(1-\exp (-\nu _{x_{i}}))^{\gamma }+\gamma \exp (-\nu _{x_{i}})-1\Big ]}{(1-(1-\exp (-\nu _{x_{i}}))^{\gamma })^2}\).

Similarly, the \(\lambda ^{(t+1)}\) is obtained by the following equations

and

Analogously to the previous paragraph, the following relationships can be obtained for computing \(\beta ^{(t+1)}\)

and

It is worth noting that, in all of the above equalities, in the \((t+1)\)-th iteration of the EM algorithm every parameter other than the one being updated is fixed at its estimate from the t-th iteration.

7 Standard Errors of the Estimates

The inverse of the observed information matrix \(\text {I}({\hat{\varTheta }};{\mathbf{x}})\) can be used to approximate the asymptotic covariance matrix of the ML estimator. Several methods for computing \(\text {I}({\hat{\varTheta }};x)\) when the EM algorithm is used have been proposed in the literature, including those of Louis [30], Meng and Rubin [32], Baker [5] and Jamshidian and Jennrich [23, 24]. Here, we use the supplemented EM (SEM) algorithm introduced by Meng and Rubin [32]. The most important feature of the SEM method is that the required calculations can be readily done by using only the EM code. Meng and Rubin [32] show that

where \(\varDelta \mathbf{V}=(\text {I}_d-\hbox {J}({\hat{\varTheta }}))^{-1} \hbox {J}({\hat{\varTheta }})\text {I}^{-1}_{com}({\hat{\varTheta }};x)\), \(\text {I}_{com}({\hat{\varTheta }};x)=-E(\frac{\partial ^2}{\partial {\varTheta }\partial {\varTheta '}} \log L({\varTheta };x, z)|x)\), \(\text {I}_d\) denotes the \(d\times d\) identity matrix and \(\hbox {J}({\hat{\varTheta }})=\frac{\partial }{\partial \varTheta } \hbox {M}({\hat{\varTheta }})\) where \(\hbox {M}({\varTheta })\) is the EM map defined as \({\varTheta }^{(t+1)}=\hbox {M}({\varTheta }^{(t)})\) and \(\hbox {J}({\hat{\varTheta }})\) is referred as the matrix rate of convergence. They showed that \(\hbox {J}({\hat{\varTheta }})\) can be approximated by using the EM code.

In our model, the (i, j)th element of the symmetric matrix \(\text {I}_{com}({\hat{\varTheta }};x)\), \(i,j=1,2 \), is denoted by \(\text {I}_{com}({\hat{\theta }}_i;{\hat{\theta }}_j)\) and can be obtained as follows

where \(A_i^{(3)}=\frac{\exp {(-\nu _{x_{i}})} (1-\exp {(-\nu _{x_{i}})})^{\gamma -1}}{\Big (1-(1-\exp {(-\nu _{x_{i}})})^{\gamma }\Big )^2}\Big [ \ln (1-\exp {(-\nu _{x_{i}})})^\gamma +1-(1-\exp {(-\nu _{x_{i}})})^\gamma \Big ]\).

\(A_i^{(2)}\), \(A_i^{(3)}\) and all of the parameters in the above equalities are computed in \({\hat{\varTheta }}\).

8 Simulation Study

To illustrate the performance and accuracy of the EM algorithm in estimating the model parameters, we performed a simulation study. To investigate the effect of the sample size, we consider \(n=35, 50\) and 100. In each replication, a random sample of size n is drawn from the GQHRP, GQHRL and GQHRG distributions. The true values of the parameters used in the simulation are given in Table 3. For each n, we simulate 500 random data sets from the proposed model. For each data set, the model parameters are estimated via the EM algorithm. We calculate the means and standard deviations of the estimates obtained from the 500 replications; these are given in Table 3. The results show that the differences between the average estimates and the true values are small, and that the standard deviations of the estimates decrease as the sample size increases. Overall, the results are satisfactory.
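One possible way to draw the random samples needed for such a study is inversion of the cdf. The sketch below does this for the geometric case \(A(t)=t/(1-t)\): it solves \(A(\theta S)=(1-u)A(\theta )\) for the GQHR survival level \(S\), converts it to a target value of \(v_x\), and finds x by bisection (\(v_x\) is non-decreasing under the stated parameter restrictions). The function names, the bisection settings and the parameter values are assumptions; the paper does not state which sampling method was used.

```python
import math
import random

def v(x, alpha, lam, beta):
    return alpha * x + 0.5 * lam * x**2 + beta * x**3 / 3.0

def gqhrg_cdf(x, alpha, lam, beta, gamma, theta):
    """cdf 1 - A(theta*S)/A(theta) with A(t) = t/(1-t)."""
    s = 1.0 - (1.0 - math.exp(-v(x, alpha, lam, beta))) ** gamma
    return 1.0 - s * (1.0 - theta) / (1.0 - theta * s)

def gqhrg_quantile(u, alpha, lam, beta, gamma, theta):
    """Invert the cdf at u in (0, 1)."""
    y = (1.0 - u) * theta / (1.0 - theta)      # target value of A(theta*S)
    s = y / (1.0 + y) / theta                  # GQHR survival level
    target = -math.log(1.0 - (1.0 - s) ** (1.0 / gamma))  # required v_x
    lo, hi = 0.0, 1.0
    while v(hi, alpha, lam, beta) < target:    # bracket the root
        hi *= 2.0
    for _ in range(200):                       # bisection on v(x) = target
        mid = 0.5 * (lo + hi)
        if v(mid, alpha, lam, beta) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical parameter values and seed, chosen only for illustration.
p = dict(alpha=1.0, lam=0.5, beta=0.2, gamma=1.5, theta=0.6)
random.seed(1)
sample = [gqhrg_quantile(random.random(), **p) for _ in range(35)]
print(min(sample), max(sample))
```

An alternative that follows the stochastic representation directly would draw N from the zero-truncated geometric law and return the minimum of N GQHR variates; inversion avoids the inner loop.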

9 Real Data Analysis

The data set given by [46] represents the times between successive failures (in thousands of hours) of secondary reactor pumps. The data are presented in Table 4. Table 5 gives some summary statistics for these data, which indicate that the empirical distribution is skewed to the left and platykurtic. The MLEs of the parameters, along with the standard errors (SE) of the estimates and the AIC (Akaike information criterion), BIC (Bayesian information criterion) and AICc values, are displayed in Table 6 for this data set, both for the proposed distributions and for two competing distributions (BGG and TGG). The beta generalized gamma (BGG) distribution is given by the pdf

where

and

Here p is not zero and the other parameters are positive. The Transmuted Generalized Gamma (TGG) distribution is given by the PDF

for \( p>0 \), \( \mid \lambda \mid \le 1 \), \( a>0 \) and \( \nu >0 \). The MLEs and the values of the Akaike information criterion (AIC), the Bayesian information criterion (BIC) and the second-order AIC (AICc), used to assess goodness of fit, are reported in Table 6 for each of the fitted distributions.
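For reference, the three criteria can be computed from a maximized log-likelihood with the usual definitions, as in the sketch below. The function name and the numerical inputs are hypothetical and are not values from the paper's tables.

```python
import math

def information_criteria(loglik, k, n):
    """AIC, BIC and AICc for a model with k parameters fitted to n observations."""
    aic = 2.0 * k - 2.0 * loglik
    bic = k * math.log(n) - 2.0 * loglik
    aicc = aic + 2.0 * k * (k + 1) / (n - k - 1)   # small-sample correction
    return aic, bic, aicc

# Hypothetical log-likelihood, parameter count and sample size.
aic, bic, aicc = information_criteria(loglik=-30.0, k=5, n=23)
print(aic, bic, aicc)
```

Smaller values indicate a better trade-off between fit and complexity, and the AICc correction requires \(n>k+1\).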

Based on the AIC, BIC and AICc statistics, we see that four of the fitted generalized quadratic hazard rate power series distributions perform better than the generalized quadratic hazard rate binomial distribution. The generalized quadratic hazard rate logarithmic distribution gives the best fit, with the smallest values of AIC, BIC and AICc and p value = 0.9989. The generalized quadratic hazard rate geometric distribution, with p value = 1, gives the second smallest values of AIC, BIC and AICc. The generalized quadratic hazard rate Poisson distribution, with p value = 1, gives the third smallest values. The generalized quadratic hazard rate negative binomial distribution with \(m=3\) and p value = 0.9985 gives the fourth smallest values. The transmuted generalized gamma distribution gives the worst fit, with the largest values of AIC, BIC and AICc, and the beta generalized gamma distribution gives the second worst fit, with the second largest values.

10 Concluding Remarks

In this paper we have introduced a new class of lifetime distributions. It contains a number of known special submodels such as the generalized exponential power series, generalized linear failure rate power series and quadratic hazard rate geometric distributions, among others. We think the formulas derived are manageable using modern computer resources with analytic and numerical capabilities. The proposed model is flexible enough to be used quite effectively for modelling lifetime data. The generation of random samples from the proposed distribution is very simple, and therefore Monte Carlo simulation can be performed very easily for different statistical inference purposes. Maximum likelihood estimation for the new model is discussed. The analysis of one real data set indicates the good performance and usefulness of the new model.

References

Adamidis K, Loukas S (1998) A lifetime distribution with decreasing failure rate. Stat Probab Lett 39(1):35–42

Ahmad IA, Kayid M, Pellerey F (2005) Further results involving the MIT order and the IMIT class. Probab Eng Inf Sci 19(03):377–395

Ahmad IA, Kayid M (2005) Characterizations of the RHR and MIT orderings and the DRHR and IMIT classes of life distributions. Probab Eng Inf Sci 19(04):447–461

Bagheri SF, Samani EB, Ganjali M (2016) The generalized modified Weibull power series distribution: theory and applications. Comput Stat Data Anal 94:136–160

Baker SG (1992) A simple method for computing the observed information matrix when using the EM algorithm with categorical data. J Comput Graph Stat 1(1):63–76

Barakat HM, Abdelkader YH (2004) Computing the moments of order statistics from nonidentical random variables. Stat Methods Appl 13(1):15–26

Barreto-Souza W, Cribari-Neto F (2009) A generalization of the exponential-Poisson distribution. Stat Probab Lett 79(24):2493–2500

Barreto-Souza W, de Morais AL, Cordeiro GM (2011) The Weibull-geometric distribution. J Stat Comput Simul 81(5):645–657

Bidram H, Nekoukhou V (2013) Double bounded Kumaraswamy-power series class of distributions. SORT-Stat Oper Res Trans 1(2):211–230

Boehme TK, Powell RE (1968) Positive linear operators generated by analytic functions. SIAM J Appl Math 16(3):510–519

Bourguignon M, Silva RB, Cordeiro GM (2014) A new class of fatigue life distributions. J Stat Comput Simul 84(12):2619–2635

Chahkandi M, Ganjali M (2009) On some lifetime distributions with decreasing failure rate. Comput Stat Data Anal 53(12):4433–4440

Chandra NK, Roy D (2001) Some results on reversed hazard rate. Probab Eng Inf Sci 15(01):95–102

Cordeiro GM, Silva RB (2014) The complementary extended Weibull power series class of distributions. Cienc Nat 36:1–13

Elbatal I, Butt NS (2014) A new generalization of quadratic hazard rate distribution. Pak J Stat Oper Res 9(4):343–361

Flores J, Borges P, Cancho VG, Louzada F (2013) The complementary exponential power series distribution. Braz J Probab Stat 27(4):565–584

Glanzel W (1987) A characterization theorem based on truncated moments and its application to some distribution families. In: Mathematical statistics and probability theory (Bad Tatzmannsdorf, 1986), vol B. Reidel, Dordrecht, pp 75–84

Glanzel W (1990) Some consequences of a characterization theorem based on truncated moments. Stat J Theor Appl Stat 21(4):613–618

Gupta RD, Kundu D (1999) Theory and methods: generalized exponential distributions. Aust N Z J Stat 41(2):173–188

Harandi SS, Alamatsaz MH (2015) Generalized linear failure rate power series distribution. Commun Stat Theory Methods 45(8):2204–2227

Hosking JRM (1990) L-moments: analysis and estimation of distributions using linear combinations of order statistics. J R Stat Soc Ser B (Methodol) 52(1):105–124

Izadkhah S, Kayid M (2013) Reliability analysis of the harmonic mean inactivity time order. IEEE Trans Reliab 62(2):329–337

Jamshidian M, Jennrich RI (1997) Acceleration of the EM algorithm by using quasi-Newton methods. J R Stat Soc Ser B (Stat Methodol) 59(3):569–587

Jamshidian M, Jennrich RI (2000) Standard errors for EM estimation. J R Stat Soc Ser B (Stat Methodol) 62(2):257–270

Kayid M, Ahmad IA (2004) On the mean inactivity time ordering with reliability applications. Probab Eng Inf Sci 18(03):395–409

Kayid M, Izadkhah S (2014) Mean inactivity time function, associated orderings, and classes of life distributions. IEEE Trans Reliab 63(2):593–602

Kundu D, Raqab MZ (2005) Generalized Rayleigh distribution: different methods of estimations. Comput Stat Data Anal 49(1):187–200

Kundu D, Gupta RD (2006) Estimation of \(P (Y< X)\) for Weibull distributions. IEEE Trans Reliab 55(2):270–280

Kuş C (2007) A new lifetime distribution. Comput Stat Data Anal 51(9):4497–4509

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. J R Stat Soc Ser B (Methodol) 44(2):226–233

Mahmoudi E, Jafari AA (2012) Generalized exponential-power series distributions. Comput Stat Data Anal 56(12):4047–4066

Meng XL, Rubin DB (1991) Using EM to obtain asymptotic variance-covariance matrices: the SEM algorithm. J Am Stat Assoc 86(416):899–909

Merovci F, Elbatal I (2015) The beta quadratic hazard rate distribution. Pak J Stat 31(5):427–446

Morais AL, Barreto-Souza W (2011) A compound class of Weibull and power series distributions. Comput Stat Data Anal 55(3):1410–1425

Mudholkar GS, Srivastava DK (1993) Exponentiated Weibull family for analyzing bathtub failure-rate data. IEEE Trans Reliab 42(2):299–302

Mudholkar GS, Srivastava DK, Freimer M (1995) The exponentiated Weibull family: a reanalysis of the bus-motor-failure data. Technometrics 37(4):436–445

Nadarajah S, Kotz S (2006) The beta exponential distribution. Reliab Eng Syst Saf 91(6):689–697

Nadarajah S, Popovic BV, Ristic MM (2013) Compounding: an R package for computing continuous distributions obtained by compounding a continuous and a discrete distribution. Comput Stat 28(3):977–992

Noack A (1950) A class of random variables with discrete distributions. Ann Math Stat 21(1):127–132

Okasha HM, Kayid M, Abouammoh MA, Elbatal I (2015) A new family of quadratic hazard rate-geometric distributions with reliability applications. J Test Eval 44(5):1937–1948

Ostrovska S (2007) Positive linear operators generated by analytic functions. Proc Math Sci 117(4):485–493

Ramos MWA, Percontini A, Cordeiro GM, da Silva RV (2015) The Burr XII negative binomial distribution with applications to lifetime data. Int J Stat Probab 4(1):109–125

Roozegar R, Jafari AA (2016) On bivariate exponentiated extended Weibull family of distributions. Cienc Nat 38(2):564–576

Roozegar R, Nadarajah S (2016a) The power series skew normal class of distributions. Commun Stat Theory Methods 46(22):11404–11423

Roozegar R, Nadarajah S (2016b) The quadratic hazard rate power series distribution. J Test Eval 45(3):1058–1072

Salman SM, Prayoto S (1999) Total time on test plot analysis for mechanical components of the RSG-GAS reactor. Atom Indones 25(2):81–90

Sarhan AM (2009) Generalized quadratic hazard rate distribution. Int J Appl Math Stat 14(S09):94–106

Sarhan AM, Kundu D (2009) Generalized linear failure rate distribution. Commun Stat Theory Methods 38(5):642–660

Silva RB, Bourguignon M, Dias CR, Cordeiro GM (2013) The compound class of extended Weibull power series distributions. Comput Stat Data Anal 58:352–367

Silva RB, Cordeiro GM (2015) The Burr XII power series distributions: a new compounding family. Braz J Probab Stat 29(3):565–589

Tahmasbi R, Rezaei S (2008) A two-parameter lifetime distribution with decreasing failure rate. Comput Stat Data Anal 52(8):3889–3901

Tojeiro C, Louzada F, Roman M, Borges P (2014) The complementary Weibull geometric distribution. J Stat Comput Simul 84(6):1345–1362

Acknowledgements

We are thankful to the Editor-in-Chief, Associate Editor and the anonymous reviewers for their careful reading of our manuscript and their many insightful comments and suggestions.

Appendix A

Theorem 1

Let \(\left( \varOmega ,{\mathscr {F}},{\mathbf {P}}\right) \) be a given probability space and let \(H=\left[ a,b\right] \) be an interval for some \(a<b\) \(\left( a=-\infty ,\text { }b=\infty \text { might as well be allowed}\right) .\) Let \(X:\varOmega \rightarrow H\) be a continuous random variable with the distribution function F and let \(q_{1}\) and \(q_{2}\) be two real functions defined on H such that

\[ {\mathbf {E}}\left[ q_{2}\left( X\right) \mid X\ge x\right] ={\mathbf {E}}\left[ q_{1}\left( X\right) \mid X\ge x\right] \eta \left( x\right) ,\quad x\in H, \]

is defined with some real function \(\eta \). Assume that \(q_{1},q_{2}\in C^{1}\left( H\right) \), \(\eta \in C^{2}\left( H\right) \) and F is a twice continuously differentiable and strictly monotone function on the set H. Finally, assume that the equation \(\eta q_{1}=q_{2}\) has no real solution in the interior of H. Then F is uniquely determined by the functions \(q_{1},q_{2}\) and \(\eta \), particularly

\[ F\left( x\right) =\int _{a}^{x}C\left| \frac{\eta ^{\prime }\left( u\right) }{\eta \left( u\right) q_{1}\left( u\right) -q_{2}\left( u\right) }\right| \exp \left( -s\left( u\right) \right) du, \]

where the function s is a solution of the differential equation \(s^{\prime }=\frac{\eta ^{\prime }\text { }q_{1}}{\eta q_{1}-q_{2}}\) and C is the normalization constant, such that \(\int _{H}dF=1\).

Proof of Proposition 3.3

From the definition of the MRL and Eq. (3), we have

where \(v_t=\alpha t+ (\lambda /2 )t^2 + (\beta /3) t^3\). Using the series expansion

it follows that

Proof of Proposition 3.4

The MPL of a random variable X with GQHRPS distribution is given by

where \(K=\dfrac{A(\theta )}{A(\theta )-A\left( \theta \left[ 1- \left( 1-\exp {(-v_{x})} \right) ^{\gamma } \right] \right) }\).

Proof of Proposition 3.5

Follows by noting that

The Gauss hypergeometric function reduces to 1 when \(i=n\), therefore \(F_{n:n}(x)=B^n\).

Cite this article

Roozegar, R., Hamedani, G.G., Amiri, L. et al. A New Family of Lifetime Distributions: Theory, Application and Characterizations. Ann. Data. Sci. 7, 109–138 (2020). https://doi.org/10.1007/s40745-019-00216-5

DOI: https://doi.org/10.1007/s40745-019-00216-5

Keywords

- Generalized quadratic hazard rate distribution

- Order statistics

- Power series distribution

- Characterizations