Abstract

In three experiments, we explore human and simulated participants’ potential for deriving and merging analogous forms of stimulus relations. In the first experiment, five human participants were exposed to compound stimuli (stimulus pairs) by way of an automated yes–no protocol. Participants received discrimination training focusing on four three-member stimulus classes, where only two of the four classes were correctly related algebraic expressions. Training was intended to establish generalized identification of novel correct stimulus pairs and generalized identification of novel incorrect stimulus pairs. In Experiment 2, we employed a three-layer connectionist model (CM) of a yes–no protocol aimed at training and testing an analogous set of stimulus relations. Our procedures were aimed at assessing a neural network’s ability to simulate derived stimulus relations consistent with the human performances observed in Experiment 1. In Experiment 3, we employed a four-layer CM to compute the number of training epochs required to attain mastery. As with our human participants, our neural network required specific training procedures to become proficient in identifying stimuli as being members or nonmembers of specific classes. Outcomes from Experiment 3 suggest that the number of training epochs required to attain mastery for our simulated participants corresponded closely with the number of training trials required of our human participants during Experiment 1. Moreover, generalization tests revealed that human and simulated participants exhibited analogous response patterns. We discuss the evolving potential for CMs to emulate and predict human training requirements for deriving and merging complex stimulus relations during generalization tests.

In the behavior analytic literature, it is not unusual for applied and basic researchers to describe derived relational responding as a behavioral phenomenon confined to humans with some minimal level of verbal capability (e.g., Iversen, 1993, 1997; Iversen, Sidman, & Carrigan, 1986; Hayes et al., 2001); however, there are several studies suggesting that non-verbal organisms may be capable of deriving stimulus relations in a manner that is analogous to outcomes seen in human performances (e.g., Frank & Wasserman, 2005; Urcuioli, Lionello-DeNolf, Michalek, & Vasconcelos, 2006; Urcuioli & Swisher, 2015). Investigations in this area frequently employ matching-to-sample (MTS) protocols, exposing participants to an array of conditional discriminations using arbitrary sample and comparison stimuli (e.g., nonsense syllables or abstract shapes). In more didactic, academically oriented applied studies, training and test stimuli are selected on the basis of the participants being unfamiliar with particular academic symbols or concepts. Irrespective of the type of stimuli employed within a given experimental preparation, participants trained by way of conventional MTS protocols select a correct comparison, B1, in the presence of A1. In a similar way, C1 is trained as the correct comparison response in the presence of A1. Following sufficient training, symmetric relations are confirmed when participants exhibit an increased likelihood of selecting A1 when B1 and C1 are presented. Transitivity is established when B1 is selected given C1, and equivalence is confirmed when C1 is selected given B1 (Sidman & Cresson, 1973; Sidman & Tailby, 1982). Although it is often the case that MTS protocols include training and subsequent testing of symmetric relations before assessing transitive or equivalence relations, it has been demonstrated that establishing symmetry may not be essential to the emergence of equivalence (e.g., Arntzen & Holth, 1997).

Unlike the MTS protocol, the yes–no training method displays stimulus pairs (often referred to as compound stimuli or stimulus–stimulus relations). During each trial, participants are exposed to two adjacent stimuli and attempt to select compound stimuli that are members of the same class. For example (given that A1B1 and A1C1 are members of the same class), during randomized presentations of onscreen compound stimuli, participants click one of two buttons labeled YES and NO. Within the training condition, A1B1 are displayed adjacent to one another on the computer screen as compound stimuli, and clicking the “yes” button produces onscreen reinforcement. Likewise, A1C1 are displayed side-by-side onscreen as compound stimuli, and clicking the “yes” button produces onscreen reinforcement. Subsequent to training, during the assessment of derived relations, untrained stimulus pairs (B1C1) are displayed, and in the absence of programmed reinforcement, the participant identifies these stimuli as belonging (or not belonging) to the same class (cf. Fields, Reeve, Varelas, Rosen, & Belanich, 1997).

Independent of training format, innovative explorations of derived stimulus relations have been a growing source of laboratory and field investigation throughout behavior analysis. Most of the current studies have employed humans as experimental participants. However, several researchers have explored derived stimulus relations using artificial neural network (NN) models, often referred to as connectionist models (CMs), to simulate and forecast task performances observed among human participants. Briefly stated, CMs are data-driven algorithms that detect patterns in complex datasets and provide a computational representation of elusive linear and nonlinear relationships among variables of interest (Hagan, Demuth, Beale, & De Jesús, 2002). This feature often allows them to reproduce or predict many of the performance patterns observed among human participants (see Barnes & Hampson, 1993; Lyddy & Barnes-Holmes, 2007; Ninness, Ninness, Rumph, & Lawson, 2018; Tovar & Torres-Chavez, 2012; Tovar & Westermann, 2017).

From some behavioral perspectives, neural network research is not congenial with the physiological components of human learning. As mentioned by Burgos (2007), “. . . the models are not informed by neuroscientific knowledge about the biological structure and functioning of brains” (p. 218). However, several investigations, such as those conducted by Tovar and Westermann (2017), suggest that network learning corresponds closely with synaptic adjustments that occur during the biological acquisition of equivalence relations. Subsequent to developing a training model based on Hebbian learning principles, Tovar and Westermann conducted neural network simulations of three classic equivalence experiments based on outcomes from the existing literature (Sidman & Tailby, 1982; Devany et al., 1985; Spencer & Chase, 1996). In all three simulations, the models’ connection weights were analyzed by comparing them to the published records of human participants’ speed and accuracy during training and testing (cf. Lew & Zanutto, 2011). This approach is particularly robust in the sense that, given access to published investigations that contain sufficient records of training and test outcomes, this model provides a strategy for predicting human performances in the acquisition of equivalence relations. Independent of theoretical orientation, the current growth of neural network applications throughout academia makes it likely that this technology will play a critically important role in the experimental analysis of human behavior (see Greene, Morgan, & Foxall, 2017, for a general discussion).

As will be described in greater detail within Experiment 2 and Experiment 3, for a CM to learn the relations among stimuli, it must undergo a process of supervised learning. As an overview, the neurons (nodes) within each layer of the CM must be fully connected such that they are able to continually update values as they pass back and forth across all layers of the CM network. The connections among neurons within each of the layers in the CM are weighted, and these weights are continually modified as they are passed forward (feedforward) and backward (backpropagation) through the layers of the network until the best set of weights can be computed. In supervised learning, the connections between layers are modified in an “attempt” to decrease discrepancies between the known (target) values and the CM’s calculated values. If training is successful, the CM should be able to analyze new input values in the absence of supervision. That is to say, a well-trained CM should be able to model similarly structured but previously unseen input values and generate accurate outcomes—provided that these new values are representative of the data employed during training.
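The supervised learning cycle outlined above can be illustrated with a minimal sketch. It is written in Python for brevity and is not the authors' software. The multi-hot encoding of each stimulus pair, the four hidden units, the learning rate, the number of epochs, and the random seed are all illustrative assumptions; the networks actually used are described in Experiments 2 and 3.

```python
import math
import random

# Assumed encoding: one input unit per stimulus; a pair switches on two units.
STIMULI = ["A1", "B1", "C1", "A2", "B2", "C2", "B3", "C3", "B4", "C4"]
TRAINED = [  # target 1.0 = "yes" (same class), 0.0 = "no"
    (("A1", "B1"), 1.0), (("A1", "C1"), 1.0), (("A2", "B2"), 1.0),
    (("A2", "C2"), 1.0), (("A1", "A2"), 1.0),
    (("A1", "B3"), 0.0), (("A1", "C3"), 0.0),
    (("A2", "B4"), 0.0), (("A2", "C4"), 0.0),
]

def encode(pair):
    return [1.0 if s in pair else 0.0 for s in STIMULI]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w_hidden, w_out):
    """Feedforward pass; the trailing 1.0 in each layer is a bias input."""
    xb = x + [1.0]
    hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_hidden]
    output = sigmoid(sum(w * v for w, v in zip(w_out, hidden + [1.0])))
    return hidden, output

def mean_squared_error(data, w_hidden, w_out):
    return sum((t - forward(x, w_hidden, w_out)[1]) ** 2 for x, t in data) / len(data)

def train(data, n_hidden=4, epochs=3000, rate=0.5, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    initial_error = mean_squared_error(data, w_hidden, w_out)
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, w_out)
            # Backpropagation: discrepancy between target and calculated value,
            # scaled by the sigmoid derivative, passed back to the hidden layer.
            delta_out = (target - output) * output * (1.0 - output)
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            for j, h in enumerate(hidden + [1.0]):
                w_out[j] += rate * delta_out * h
            for j in range(n_hidden):
                for i, v in enumerate(x + [1.0]):
                    w_hidden[j][i] += rate * delta_hidden[j] * v
    return w_hidden, w_out, initial_error

data = [(encode(pair), target) for pair, target in TRAINED]
w_hidden, w_out, initial_error = train(data)
final_error = mean_squared_error(data, w_hidden, w_out)
```

Each pass through the nine pairs counts as one epoch here. Comparing `final_error` with `initial_error` shows how far the weight adjustments have reduced the discrepancy between target and calculated values.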

While simulating human behavior by way of computer algorithms might have been viewed as fodder for an interesting science fiction script fewer than two decades ago (e.g., Spielberg, 2001), the culture is now awash in neural network applications aimed at modeling a wide range of human behaviors. To mention only a few from a continually expanding number and variety of examples, neural networks have been applied successfully to predicting congressional voting patterns (Ninness et al., 2012) and consumer behaviors (Greene, Morgan, & Foxall, 2017). Recently, deep neural network (DNN) algorithms have gone beyond forecasting human performances to generating reliable explanations for specific behaviors in the area of health social networks (Phan, Dou, Piniewski, & Kil, 2016).

With the rapid evolution of artificial intelligence and computational modeling systems throughout the academic and scientific communities, it is inevitable that neural network technology will play a pivotal role in the experimental analysis of complex stimulus relations. Indeed, there have been several profound behavior analytic investigations using neural network procedures, and several of these studies have focused on identifying the extent to which CMs are able to derive complex stimulus relations consistent with those seen in human performances. Barnes and Hampson (1993a, b) were among the first behavior analytic researchers to explore and demonstrate a CM’s potential for simulating derived stimulus relations seen during investigations of human participants as originally conducted by Steele and Hayes (1991) and Wulfert and Hayes (1988). These findings were followed by those of Cullinan, Barnes, Hampson, and Lyddy (1994), who developed a CM capable of simulating the emergence of equivalence in combination with a transfer of sequence function consistent with the performances demonstrated by eight college students. Subsequently, Lyddy, Barnes-Holmes, and Hampson (2001) designed a CM capable of simulating unique and syntactically correct response sequences consistent with active and passive voice. Later, in an attempt to contrast linear versus one-to-many MTS training procedures, Lyddy and Barnes-Holmes (2007) confirmed that the one-to-many method required approximately half the training time of the linear method; these findings were commensurate with human response patterns observed under similar training conditions (e.g., Arntzen & Holth, 1997).

Along similar lines, Tovar and Torres-Chavez (2012) conducted combined human and CM experiments. Using an automated yes–no procedure, they employed arbitrary stimuli (diversified shapes) to examine the formation of equivalence classes by human participants. They then conducted an analog CM experiment of equivalence class formation by way of six computer simulations. Five of these simulations exhibited class formations consistent with human participants within the same investigation. Vernucio and Debert (2016) conducted a systematic replication of the Tovar and Torres-Chavez study using the same input values; however, rather than a yes–no or MTS protocol, their CM analysis was conducted by way of a go/no-go preparation. That is, the CM only made “yes” responses when presented with related stimuli. Similar to the outcomes obtained by Tovar and Torres-Chavez, their findings confirmed that four of six simulated participants formed stimulus relations consistent with equivalence.

Advancing this line of research, our rapidly evolving behavioral and computer sciences invite a comprehensive, rigorous, and unrestricted experimental analysis of behavior. Even the early research by Bullinaria (1997) illustrates several neural network strategies that permit a more intensive and intrusive experimental analysis than is possible when employing human participants. For instance, if we disrupt the CM software connections that are responsible for the ability to articulate printed material, the CM’s subsequent speech impediments are consistent with the compromised vocalizations uttered by humans suffering cerebral insult or dyslexia. As described by Bullinaria (1997, p. 281), “The obvious forms of damage we should consider are the removal of random connections, the removal of random hidden units, and the addition of random noise to all the weights.” Obviously, damaging or even interfering with human learners by removing or adding internal components is not an acceptable experimental manipulation; however, such strategies are commonly employed during CM simulations of human behavior. Additionally, environmental artifacts commonly interfere with our best efforts to conduct arduous experimental procedures. Lyddy and Barnes-Holmes (2007, p. 15) suggest that “considering such restrictions on a full understanding of the development of stimulus equivalence, other sources of evidence regarding the effect might warrant consideration. One such source might be afforded by means of computational modeling.”

Recently, we (Ninness et al., 2018) developed and described a working prototype of a CM neural network dubbed Emergent Virtual Analytics (EVA). This article reviewed the quickly evolving technology that allows researchers to employ neural networks to simulate and predict human behaviors. Based on our review of the existing CM technology, one area that appears not to have been sufficiently explored is that of training and deriving stimulus relations pertaining to academic material. This is particularly apparent when training academic stimulus relations that entail rule-following. In other words, during conventional MTS or yes–no protocols, participants often are exposed to a series of training trials in which arbitrary (nonsense) sample stimuli are presented in conjunction with comparison stimuli. Here, training usually is conducted in the absence of rule-following procedures. For example, during training, participants attempt to select a correct arbitrary stimulus from a display of comparison stimuli while obtaining accuracy feedback in the form of putative reinforcement or punishment. Although this is a valuable strategy for training arbitrary stimulus relations within the realm of basic research, it is not a functional or realistic strategy for teaching basic or advanced mathematical or other academic relations. As noted by Ninness et al. (2005, p. 5),

. . . practically speaking, mathematics instructors (or designers of computer-assisted mathematics software) would find it untenable to ask their students or software users to become skilled at complex mathematical operations without introducing them to precise rules for solving the problems in question.

From an instructional perspective, for many human participants, there exists a large body of mathematical symbols and expressions (as well as other abstract academic content) for which verbally able participants are experimentally naïve. If academic material rather than arbitrary stimuli is employed in the training of stimulus relations, pretesting can be conducted in such a way as to demonstrate that the participant is not familiar with the experimental stimuli and has no preconceived notions regarding their meanings.

When training and testing complex academic material by way of stimulus relations, class merger is an especially valuable outcome during tests of generalization (e.g., Fienup, Covey, & Critchfield, 2010); however, to date, there have been no published investigations exploring the common processes required for generating class merger by human and simulated participants. In this article, we attempt to identify some of the analogous processes by which human and simulated participants can form larger and more complex combinations of derived stimulus relations.

General Method

Overview

In three experiments, we conducted analogous preparations using compound stimuli within yes–no procedures. All experiments were designed as single-subject studies employing five human participants in Experiment 1 and five simulated participants in Experiments 2 and 3. Expanding on studies conducted by Fienup and Critchfield (2011) and Mackay, Wilkinson, Farrell, and Serna (2011), our first two experiments explored strategies for generating a class merger among human and simulated participants. Experiment 3 went beyond CM replication of human task performances and permitted the exploration and prediction of one of the central requirements for emulating human behavior: assessing the number of training epochs required for simulated participants to attain mastery and comparing these outcomes with the number of trials required by human participants to attain mastery.

We argue that for many basic and applied research designs, it is reasonable to employ mathematical or other forms of academic stimuli that have no prior associations in the participants’ learning history. In addition, we discuss how such research designs can be facilitated with the help of artificial neural networks. The analysis of human skills in Experiment 1 brings us closer to an understanding of the operations needed to conduct computer simulations of analogous training and test procedures. In Experiments 2 and 3, we draw together various components of Experiment 1 and move toward simulating derived stimulus relations within connectionist models. Our focus is on exploring the ways in which computer models are capable of accurately and efficiently emulating, vetting, and predicting derived stimulus relations as observed among human participants.

Experiment 1

Following pretesting, five human participants were exposed to a series of mathematical compound stimuli by way of a computer-interactive yes–no procedure. Based on pretest outcomes, participants who were unfamiliar with exponential algebraic relations received conditional discrimination training aimed at differentiating four three-member stimulus classes, where only two of the four classes were correctly related algebraic exponential expressions. The two other classes were composed of algebraic expressions that were unrelated, and the expressions within these two classes served as incorrectly related compound stimuli. During generalization tests, participants were assessed on 22 novel algebraic relations. This assessment included deriving 10 accurate algebraic relations and 12 inaccurate algebraic stimulus relations.

Method

Participants

Five hospital staff members, ages 22 to 45 years, served as participants in this investigation. All participants signed informed consent forms indicating that they would be financially compensated for their service (roughly 1.5 times their hourly salary) immediately on completion of the study, and that they were free to terminate the experiment at any time and receive partial compensation consistent with the amount of time they had engaged in the study. Participants were informed that they could earn additional monetary reinforcement (up to US$25.00) based on their level of accuracy during the generalization test of novel relations. They were likewise informed that they would be debriefed regarding their individual performances as well as on the underlying theme of the experiment at its conclusion. As verified by self-report and hospital administration records, participants met the following criteria: (a) were employed as hospital staff during the time of the experiment, (b) described themselves as being unfamiliar with algebraic operations, and (c) had no form of sensory or physical impairment that might interfere with their abilities to interact with the automated training system (i.e., keyboard, mouse, and computer screen). During the pretest, participants responded to a series of stimulus pairs in the form of exponential expressions. The pretest consisted of 30 computer-interactive items similar to those employed during training; however, the pretest items were composed of expressions containing entirely different fractional exponents. A participant who performed at or above 60% correct was not permitted to participate in the study. Note that at the time of the study, participants were unaware that performing well on the pretest precluded their eligibility for participation in the study. None of the participants (or pilot participants) performed above 50% correct on the pretest.

Setting, apparatus, and experimental stimuli

Training was conducted in a large therapy room within a regional medical center where the first author had a prior professional affiliation. Participants were positioned so as to allow comfortable interaction with the 15.6-inch monitor and a mouse connected to a Lenovo laptop computer (Windows 8.5 operating system—2.50 GHz processor). As a matter of contextual detail only, most participants completed training in approximately 60 min (range: 47–73 min). This training was followed by a generalization test of novel relations completed by all participants in approximately 45 min (range: 39–52 min).

Design and procedure

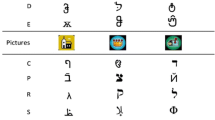

Following informed consent and the pretest identifying participants’ potential familiarity with algebraic operations and expressions as they pertain to fractional exponents, individuals who demonstrated any acquaintance with fractional exponents were excused from the experiment. Figure 1 shows four stimulus classes of algebraic expressions with fractional exponents. The expressions in Class 1 and Class 2 are “algebraically equivalent” (Larson & Hostetler, 2001; Sullivan, 2002). That is to say, given a particular numerical value for “a,” all the expressions within Class 1 and Class 2 have a common solution. Conversely, within Class 3 and Class 4, the B and C expressions are inconsistent as their solutions are not mathematically equivalent to the A expression, and these B and C expressions do not produce the same solutions as those within any of the expressions within Classes 1 and 2.
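The notion of algebraic equivalence used here can be checked numerically. The sketch below relies on the standard identity that a raised to the power m/n equals the nth root of a raised to the power m. The function names and the values of a, m, and n are hypothetical and are not the stimuli shown in Fig. 1.

```python
import math

def fractional_power(a, m, n):
    """a raised to the fractional exponent m/n."""
    return a ** (m / n)

def radical_form(a, m, n):
    """Radical with index n applied to a raised to the integer exponent m."""
    return (a ** m) ** (1.0 / n)

# Hypothetical values chosen for illustration only.
a, m, n = 16.0, 3, 4

# Same numerator/denominator and exponent/index: a common solution.
same_solution = math.isclose(fractional_power(a, m, n), radical_form(a, m, n))

# Index and integer exponent swapped: the solutions no longer agree.
swapped_solution = math.isclose(fractional_power(a, m, n), radical_form(a, n, m))
```

With these values, `same_solution` is true and `swapped_solution` is false. This mirrors the distinction between expressions that share a common solution for a given value of a and expressions that do not.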

Automated training and test protocol

Training and test software developed by the first author in the C# programming language allowed participants to interact with visually displayed algebraic compound stimuli while the program recorded all participant responses. Our automated training procedures were somewhat similar to those used by Tovar and Torres-Chavez (2012) as well as those of Lyddy and Barnes-Holmes (2007); however, instead of employing arbitrary symbols as training and test stimuli, we employed algebraic expressions. Also, as previously described, rather than separating training into a series of separate stages and phases, all training and testing procedures were conducted within one hour and forty-five minutes.

Incorporating the Fisher-Yates shuffle algorithm within our automated training protocol, we exposed participants to a series of randomized algebraic compound stimuli. Note that the Fisher-Yates Shuffle (Fisher & Yates, 1948) resequences all items within an array (see Durstenfeld, 1964). Using this algorithm, trained relations were presented in a completely random sequence at the beginning of training and each time a participant was reexposed to training. In the center of the screen, a cumulative point counter increased by one following a participant’s correct response. Participants were informed that these points were not directly exchangeable for any type of financial compensation; rather, the onscreen points served as an indicator of the participants’ cumulative progress during the training condition. Figure 2 illustrates five stimulus–stimulus relations (solid lines) corresponding to the trained correct responses. As noted by Debert et al. (2009) and forwarded by Tovar and Torres-Chavez (2012), compound stimuli within a yes–no training protocol are not the same as traditional MTS conditional discriminations. Notwithstanding, the experimental contingencies are analogous to MTS response requirements since the onscreen presentation of compound stimuli can be regarded as a sample stimulus while the two response options can be regarded as comparison stimuli. The acquisition of these five trained relations created the potential for the emergence of 10 additional untrained stimulus relations (illustrated with dashed lines in Fig. 2). Figure 3 illustrates four incorrect algebraic relations (hashed lines). That is, whereas B3 and C3 produce common solutions, the expression A3 is inconsistent with B3 and C3. Likewise, whereas B4 and C4 provide common solutions, the expression A4 is inconsistent with B4 and C4.

Illustration of incorrect algebraic relations among stimuli (severed lines) where A3 is inconsistent with B3 and C3. Likewise, A4 is inconsistent with B4 and C4, and all four of these expressions (B3, C3, B4, and C4) are inconsistent with all of the expressions in Fig. 2
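The randomization step described in this section can be sketched as follows. This is a Python illustration of the Durstenfeld form of the Fisher-Yates shuffle, not the authors' C# implementation. The function name, the list of pair labels, and the seed are assumptions made for the example.

```python
import random

def fisher_yates_shuffle(items, rng=random):
    """Return a copy of items in uniformly random order (Durstenfeld variant)."""
    shuffled = list(items)
    # Walk from the last position down, swapping each element with a
    # randomly chosen element at or before it.
    for i in range(len(shuffled) - 1, 0, -1):
        j = rng.randint(0, i)  # randint is inclusive at both ends
        shuffled[i], shuffled[j] = shuffled[j], shuffled[i]
    return shuffled

# The nine trained relations, labeled as in the text.
TRAINED_PAIRS = ["A1B1", "A1C1", "A2B2", "A2C2", "A1A2",
                 "A1B3", "A1C3", "A2B4", "A2C4"]

# A seeded generator is used here only to make the example repeatable.
order = fisher_yates_shuffle(TRAINED_PAIRS, random.Random(1))
```

Each call produces a new sequence containing every trained relation exactly once, which corresponds to a fresh randomization at each start of training.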

Training

Following a brief (2 min) pretraining PowerPoint presentation that illustrated the onscreen locations of the yes–no buttons and compound stimuli and directed the participants’ attention to the locations of the terms power, exponent, numerator, and denominator as shown in Fig. 4, computer-interactive training was initiated. At the beginning of the training session, the computer screen displayed brief instructions. Participants were asked to read the instructions aloud in the presence of a researcher. In addition to the instructions below, each individual participant was shown how to direct the mouse in order to select “yes” or “no” on the computer screen. Provided that the participant was amenable to continuing with the experiment, the mouse–screen demonstration was followed by an opportunity to take the pretest.

Instructions to participants

Participation in the entire study requires approximately one hour and thirty minutes, and the results are strictly anonymous. No form of deception is involved in any part of this investigation, and the study entails no level of risk. The study is not an intelligence test and will not evaluate any aspect of your intellectual abilities. At the end of the study, you will receive a complete explanation of your results. No one else will have access to your particular outcomes. A researcher will remain inside the room in order to help you in the event that a technical problem arises, but he (or she) will not provide you with assistance to answer items on the test.

After a brief demonstration, the entire study will be conducted by way of interacting with a computer screen and mouse. At the center of the screen, two math expressions will be displayed side by side. Your task will be to choose “yes” by clicking the YES button when you believe that the two expressions have the same meaning, and you will click the NO button when you believe that the two expressions do not have the same meaning. All results will be treated as confidential, and in no case will responses from individual participants be identified. For publication purposes, participant results will be presented in the form of arbitrary/non-sequential numbers.

We anticipate that participation in this study will be somewhat interesting, and no adverse reactions are expected from this experience. It is important for you to remember, however, that you are free to terminate your participation at any time during the course of this experiment, and if you choose to do so, you will be compensated in accordance with the amount of time you have invested in the study.

On completion of these instructions, the researcher inquired as to whether participants had any questions regarding the computer-interactive requirements of the experiment. In addition, participants were reminded that they were free to terminate at any point during the experiment, and if they did so, they would be compensated in accordance with the amount of time they had invested in the study.

Participants began training by clicking a start button and were exposed to a 20 s display of two rules regarding fractional exponents as shown in Fig. 4. Note that the numerical values employed in this illustration of rules governing fractional exponents did not use the same numerical values as those employed during the actual training or generalization test. The rules displayed in Fig. 4 address the relations among A1B1, A1C1, A2B2, and A2C2; however, these rules do not address the relation of sameness pertaining to A1A2. These two rules were shown for 20 s each time a participant was returned to the beginning of training (see Ninness et al., 2009, for a discussion of rules and training stimulus relations).

Following the 20-s display of the two rules pertaining to fractional exponents, the automated program presented a randomized series of compound stimuli in the form of two exponential expressions in conjunction with “yes” and “no” buttons located at the bottom of each screen. Within the context of training algebraic expressions by way of a yes–no protocol, it was deemed appropriate to convey the concept of sameness by placing an equal sign between the onscreen stimulus pairs. Each correct response in the form of a button click resulted in a new presentation of a stimulus pair together with a display of the current number of correct responses up to that point in training.

With each new display of compound stimuli, participants clicked the “yes” or “no” button, indicating whether the two stimuli did or did not yield the same algebraic solution. Clicking the “yes” button in the presence of compound stimuli that were members of the same class or clicking the “no” button in the presence of compound stimuli that were not members of the same class produced putative reinforcers in the form of a brief (1 s) oscillating display of the words “Well Done!!,” increasing the number of correct responses displayed in the center of the screen as the program advanced to the next trial. Conversely, clicking the “yes” button in the presence of compound stimuli that were not members of the same class or clicking the “no” button in the presence of compound stimuli that were members of the same class produced mild punishment in the form of a brief (1 s) oscillating display of the words “Try Again!!,” returning the displayed accuracy count to 0 and returning the participant to the start of training. Figure 5 illustrates an example of correct and incorrect exponential relations. In the upper panel, a correct response produced points contingent on the participant clicking “yes” in the presence of a display of accurate compound stimuli. In the lower panel, an incorrect response generated the words “Try Again!!” when the participant clicked “yes” in the presence of inaccurate compound stimuli. Each new start of the automated training procedure generated the display of the algebraic rules shown in Fig. 4 (cf. Ninness et al., 2006, 2009), and a newly randomized presentation of all training compound stimuli began. To eliminate redundancy in Table 1, the training stimuli are illustrated in alphanumeric order; however, during the training condition, the displays of compound stimuli were presented randomly. Likewise, the onscreen left–right positions of stimuli alternated randomly.

In the unlikely event that no errors were emitted by a given participant, the training session ended when that participant responded correctly to four randomized exposures to the nine trained relations shown in Table 1. However, all participants produced incorrect responses during training, and each error resulted in the participant being returned to the beginning of a newly randomized training sequence (see Table 2). Here, the participant was again exposed to the same two rules governing fractional exponential expressions displayed in Fig. 4; the number of correct responses was reset to 0, and the participant was reexposed to a newly randomized series of training stimuli.
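The reset contingency described above can be sketched as a simple loop. The Python below is an illustration under stated assumptions, not the authors' program. It assumes that "four randomized exposures to the nine trained relations" means four separately shuffled blocks of nine trials (36 consecutive correct responses). The `respond` callback and the `make_learner` helper are hypothetical stand-ins for a participant.

```python
import random

# Correct answer for each trained pair: True = "yes", False = "no".
TARGETS = {"A1B1": True, "A1C1": True, "A2B2": True, "A2C2": True, "A1A2": True,
           "A1B3": False, "A1C3": False, "A2B4": False, "A2C4": False}
EXPOSURES_TO_MASTERY = 4  # assumed: four shuffled blocks of the nine pairs

def run_training(respond, rng, max_trials=100_000):
    """Return (total_trials, restarts) once an error-free run is completed."""
    total_trials = restarts = 0
    while total_trials < max_trials:
        # Each start: rules would be redisplayed and the counter reset to 0.
        sequence = []
        for _ in range(EXPOSURES_TO_MASTERY):
            block = list(TARGETS)
            rng.shuffle(block)
            sequence.extend(block)
        for pair in sequence:
            total_trials += 1
            if respond(pair) != TARGETS[pair]:
                restarts += 1  # any error returns the learner to the start
                break
        else:
            return total_trials, restarts  # no error in the whole sequence
    raise RuntimeError("mastery not reached within max_trials")

def make_learner(errors_before_mastery):
    """Hypothetical participant: answers wrongly a fixed number of times."""
    remaining = [errors_before_mastery]
    def respond(pair):
        if remaining[0] > 0:
            remaining[0] -= 1
            return not TARGETS[pair]
        return TARGETS[pair]
    return respond

perfect = run_training(make_learner(0), random.Random(0))
two_errors = run_training(make_learner(2), random.Random(0))
```

Under these assumptions, an error-free learner finishes in 36 trials. A learner who errs on the first trial of each of the first two attempts needs 38 trials and two restarts.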

Mastery criteria and correction procedures

Mastery entailed correct identification of all accurate (A1B1, A1C1, A2B2, A2C2, A1A2) and inaccurate (A1B3, A1C3, A2B4, A2C4) stimulus–stimulus relations. Examination of these mathematical expressions illustrates the subtle relations among all stimuli in the experiment. As shown in Figs. 2 and 3, only the values in the numerator and denominator of the fractional exponents or the index of the radicals with integer exponents governed class memberships. Thus, acquisition of these algebraic relations required multiple exposures to the training stimuli. As mentioned earlier, when a stimulus pair was incorrectly identified as belonging to or not belonging to a particular algebraic class, the participant was returned to the beginning of training whereupon the program randomized all items prior to initiating retraining.
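The mastery criterion and restart contingency described above can be sketched as a simple loop. This is a minimal illustration rather than the original training software: `run_training` and `respond` are hypothetical names, and we assume that the four randomized exposures to the nine trained relations form a single shuffled sequence of 36 trials that must be completed without error.

```python
import random

# The nine trained relations listed above: five accurate, four inaccurate.
TRAINED_PAIRS = {
    "A1B1": True, "A1C1": True, "A2B2": True, "A2C2": True, "A1A2": True,
    "A1B3": False, "A1C3": False, "A2B4": False, "A2C4": False,
}
EXPOSURES_PER_PAIR = 4  # four randomized exposures to each trained relation


def run_training(respond, rng, max_trials=10_000):
    """Return the total number of trials needed to reach mastery.

    `respond(pair)` is a hypothetical stand-in for the participant and
    returns True for a "yes" click and False for a "no" click.
    """
    total_trials = 0
    while total_trials < max_trials:
        # Every (re)start draws a newly randomized sequence of all items.
        sequence = list(TRAINED_PAIRS) * EXPOSURES_PER_PAIR
        rng.shuffle(sequence)
        correct_count = 0
        for pair in sequence:
            total_trials += 1
            if respond(pair) == TRAINED_PAIRS[pair]:
                correct_count += 1  # correct: the displayed counter advances
            else:
                break               # error: counter resets, training restarts
        if correct_count == len(sequence):
            return total_trials
    raise RuntimeError("mastery not reached within max_trials")


# A responder that never errs reaches mastery in the minimum 36 trials.
perfect_trials = run_training(lambda pair: TRAINED_PAIRS[pair], random.Random(0))
```

Under these assumptions, every error adds the trials completed in the aborted sequence to the total, which is why total exposures to mastery exceed 36 for any participant who makes at least one error.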

Generalization test

Upon completing training, participants were assessed over 22 novel stimulus–stimulus relations. We refer to this condition as a generalization test because the assessments go beyond testing participants for class formation. As shown in Table 3, participants were tested for the identification of 22 accurate and inaccurate stimulus relations. This included the identification of 10 accurate stimulus pairs and the identification of 12 inaccurate stimulus pairs. In order to preclude presentation order as an artifact relating to participant accuracy, the test items were displayed on the participants’ computer screens in a series of 22 randomized presentations. Each stimulus pair was presented over the course of 20 randomized trials for a total of 440 tests of novel stimulus–stimulus relations for each participant.

For each participant, the proportion of correct responses for each stimulus–stimulus relation was obtained by dividing the total number of accurate responses by the total number of presentations. As discussed earlier, no feedback was given during the generalization test of novel stimulus relations; however, participants were informed prior to training that during this generalization test condition, the program would monitor correct and incorrect responses and that they would be compensated in accordance with how well they performed during both the training and test conditions.

Results

All five participants required several reexposures to the training protocol before attaining mastery. Table 2 displays negligible scatter of inaccurately selected stimulus–stimulus relations. Participants 102 and 103 required the fewest reexposures to training whereas participants 104, 105, and 101 required more training, respectively.

Test of novel relations

Note that a perfect score for a participant who correctly identified all compound stimuli that are members of the same class would be 1.0, and a perfect score for identifying compound stimuli that are not members of the same class would be 0. Before initiating the experimental conditions, two pilot participants demonstrated an ability to perform within a 0.15 accuracy level subsequent to training. For these pilot participants, the computer-interactive protocol and all other aspects of training and testing were identical to those employed with the actual participants during Experiment 1. Thus, the lower 0.15 and upper 0.85 thresholds were based on pilot participants’ task performance levels when attempting to identify accurate and inaccurate stimulus relations.

As a matter of experimental precedent, the 0.15 and 0.85 mastery levels were employed by Tovar and Torres-Chavez (2012) when comparing six individual human performances to those generated by six runs of a connectionist model. Likewise, Vernucio and Debert (2016) conducted a replication of Tovar and Torres-Chavez (2012) using a 0.15 and 0.85 mastery threshold by way of a go/no-go CM preparation. However, because the Tovar and Torres-Chavez study employed arbitrary sample and comparison stimuli (rather than mathematical expressions) and their participants were not given access to rules as part of their training protocol, we could not be certain that the 0.15 and 0.85 thresholds were appropriate for the participants in our study.

Figure 6 shows the distribution of errors and accuracy thresholds that were employed during the generalization test. When test items displayed stimulus pairs consistent with class membership, the correct response required clicking the “yes” button, and a perfect series of responses to the 10 stimulus pairs would be 1.0 correct class formation. As previously noted, during the generalization test, particular stimulus pairs were not members of the same class, and the correct response required clicking the “no” button. Thus, a perfect series of responses to the 12 tests of unrelated stimuli would be 0.0 class formation. The dashed lines in Fig. 6 demarcate the two thresholds for accurate “yes” and “no” responses. The upper line demarcates the 0.85 threshold for participants correctly identifying stimuli as being Related (i.e., members of the same class). The lower line demarcates the 0.15 threshold for selection of “no” responses when participants were presented with novel compound stimuli that were Unrelated (i.e., not members of the same class). While Fig. 6 reveals variations across participants, all five participants performed as well as or better than our hypothesized accuracy threshold.
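The two thresholds can be expressed as a short scoring rule. The sketch below assumes that each plotted score is the proportion of “yes” responses across the 20 presentations of a stimulus pair, which is consistent with the 1.0 and 0.0 end points described above; the function names are illustrative only.

```python
RELATED_THRESHOLD = 0.85    # upper dashed line
UNRELATED_THRESHOLD = 0.15  # lower dashed line


def proportion_yes(responses):
    """Proportion of "yes" responses (True) across presentations of one pair."""
    return sum(responses) / len(responses)


def meets_threshold(score, related):
    """Related pairs must score at or above 0.85, unrelated at or below 0.15."""
    if related:
        return score >= RELATED_THRESHOLD
    return score <= UNRELATED_THRESHOLD


# Hypothetical response records for 20 presentations of two test pairs.
related_score = proportion_yes([True] * 18 + [False] * 2)    # 18 of 20 "yes"
unrelated_score = proportion_yes([True] * 2 + [False] * 18)  # 2 of 20 "yes"
related_ok = meets_threshold(related_score, related=True)
unrelated_ok = meets_threshold(unrelated_score, related=False)
```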

The dashed lines illustrate the hypothesized 0.15 and 0.85 accuracy thresholds for the 22 generalization tests of novel relations. Human participants 101 through 105 are shown in conjunction with the number of trials needed before being exposed to these generalization tests. From left to right, B2C3 through A1C4 represent human participants’ performance levels for between-class (Unrelated) compound stimuli, and B1C1 through A2C1 represent performance levels for within-class (Related) compound stimuli.

Discussion

As previously noted, the relations among the algebraic expressions employed as stimuli in this experiment inevitably appear ambiguous to individuals who have not been exposed to these relations, and these expressions are frequently revisited in the opening chapters of calculus texts (e.g., Larson & Edwards, 2010; Stewart, 2008). If any of our participants knew the relations among the algebraic stimuli prior to training, it was not apparent from an inspection of their pretest performances, nor was it apparent from an examination of their accuracy levels during training. Debriefing our participants suggested that their abilities to derive algebraic relations were functions of their attempts to apply the rules displayed during training and coming into contact with consequences while attempting to do so (cf. Santos, Ma, & Miguel, 2015). Indeed, during debriefing all five participants were able to express the rules pertaining to the purposes of the numerator and the denominator within fractional exponents as they relate to the same purposes of the index of the radicals and integer exponents. Here, our participants’ ability to verbalize rules as they affect particular mathematical concepts was consistent with our previous research in training mathematical stimulus relations (e.g., Ninness et al., 2006, 2009). Although the outcomes from this experiment did not include tests for symmetry or reflexivity (Sidman & Tailby, 1982), it seems reasonable to assume that the patterns of accurately identified stimulus relations during the generalization test represent the emergence of a similar type of derived relational responding.

Experiment 2

In the second experiment, our procedures were aimed at assessing a CM neural network’s ability to simulate derived stimulus relations consistent with the human performances observed in Experiment 1. As in Experiment 1, our generalization tests did not include the assessment of reflexive or symmetric relations. And, as in the acquisition of derived relations by human participants, our CM required specific training procedures in order to become proficient in identifying stimuli as being members or non-members of a given class. As noted by Ninness et al. (2013, p. 52), “. . . neural networks must undergo a series of training sessions with training values in conjunction with expected output patterns before they are capable of providing solutions to problems.”

As described earlier, during a series of training trials referred to as epochs, the feedforward backpropagation algorithm (Rumelhart, Hinton, & Williams, 1986) learns to derive the relations among stimuli by training neurons to process the correct weights and bias values that correspond with known target values (McCaffrey, 2014). With some oscillation, during each epoch, the CM computes progressively accurate estimates of the known target values. Hence, throughout the training process, the CM is gradually acquiring the relationships among the training values and the known target values.

Stopping rules

During each training epoch, the mean square error (MSE) is calculated as a function of updating the mean of the squared deviations between the calculated and the target values (McCaffrey, 2015). The CM algorithm continues to shrink the MSE until the training error becomes so small that continued training is unnecessary and training is automatically stopped. As an alternative, a CM may be designed so that training ends when a specific number of epochs are completed. Indeed, many researchers employ “stopping rules” that incorporate both strategies concurrently; that is, the CM stops when the MSE shrinks to a small preset value or when a specific number of epochs have been completed. Irrespective of how a particular CM determines that sufficient training has been conducted, a well-trained CM should be prepared to make new conditional discriminations independently. That is, upon completing the training procedure, the CM should have acquired sufficient experience with a representative set of conditional discriminations to allow it to accurately respond to new stimulus–stimulus relations for which it has had no previous exposure. See Ninness et al. (2018) for a list of terms and meanings commonly used when describing CM algorithms. During supervised training, the objective is to reduce the differences between the CM’s calculated values and the target output values. As will be described in more detail, the delta rule accomplishes this by updating weight and bias values as the data moves forward and backward across the layers of the network, updating the connection weights with each forward and backward pass (Donahoe & Palmer, 1989). Because the discrepancies are passed backward to the connection weights between each layer, the delta rule is characterized as “backpropagating” the discrepancies between the CM’s calculated and target output values (Rumelhart et al., 1986).
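The MSE calculation described above can be illustrated with a few lines of code. This is a sketch under a stated assumption: the squared deviations are averaged over every output neuron in every training row, which is one common convention; the exact averaging used by the authors’ software is not specified in the text.

```python
def mean_squared_error(calculated, targets):
    """Mean of squared deviations between calculated and target output values.

    Both arguments are lists of rows; each row holds one value per output
    neuron (two per row in the networks described here).
    """
    squared = [
        (c - t) ** 2
        for calc_row, target_row in zip(calculated, targets)
        for c, t in zip(calc_row, target_row)
    ]
    return sum(squared) / len(squared)


# Hypothetical outputs for two training rows compared against their targets.
example_mse = mean_squared_error(
    calculated=[[0.9, 0.1], [0.2, 0.8]],
    targets=[[1, 0], [0, 1]],
)
```

As the calculated values approach the targets over successive epochs, this quantity shrinks toward 0, which is what makes it usable as a stopping criterion.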

Although the MSE oscillates throughout training, the network weights are continually updated in an “attempt” to better approximate the target values. One stopping rule for ending training is to exit when the MSE descends to some preset level (e.g., 0.05). An alternative strategy identifies the number of epochs required to allow the network to perform reasonably well with pilot data, and this is the tactic we have adopted in the current experiment. As mentioned above, prior to initiating the actual experimental conditions, two pilot participants demonstrated a capability of performing within a 0.15 accuracy level with respect to identifying class membership and nonmembership. Thus, the 0.15 and the 0.85 thresholds are based on two pilot participants performing within this level. Given our pilot participant outcomes, we set our neural network at 500 epochs where pilot testing of our CM appeared to closely approximate the performances demonstrated by our two human pilot participants.

CM Network Design and Procedures

Expanding on an architecture employed during an earlier investigation (Ninness et al., 2018), we developed an enhanced CM analog of the yes–no protocol aimed at training and testing a wider range of stimulus relations. The feedforward backpropagation algorithm that operates within the current version of the CM network was developed and described originally by McCaffrey (2017; refer also to Haykin, 2008, for a related discussion). Previous investigations (e.g., Lyddy & Barnes-Holmes, 2007) have demonstrated that, given analogous input values, CMs are capable of mirroring (and predicting) intricate response patterns consistent with human performances. For example, a row of input values (record) might be arranged as a distinctively sequenced set of 12 digits, all but two of which are set to 0 (inactive) whereas two of these digits are uniquely positioned and set to 1 (active). Each of the 1s within a given row represents a particular stimulus (mirroring the training sequence of human counterparts) whereas the 0s are simply place holders, allowing each row to be sequenced as a distinctive pattern, a salient pattern unlike any of the other rows of stimuli employed in training the neural network.
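The binary input format described above can be sketched as follows. The column order assigned to the 12 stimuli is an assumption made for illustration (four classes with three members each); the ordering used in the original input files is not given in the text, and `encode_pair` is a hypothetical helper.

```python
# Assumed ordering of the 12 stimuli: A1, B1, C1, A2, B2, C2, ..., C4.
STIMULI = [f"{member}{cls}" for cls in range(1, 5) for member in "ABC"]


def encode_pair(first, second):
    """Return a 12-element row with 1s at the positions of the two stimuli."""
    row = [0] * len(STIMULI)
    row[STIMULI.index(first)] = 1
    row[STIMULI.index(second)] = 1
    return row


a1b1 = encode_pair("A1", "B1")  # a trained, accurate relation
a1b3 = encode_pair("A1", "B3")  # a trained, inaccurate relation
```

Because each stimulus pair activates a different pair of positions, every row is a distinct pattern, which is the only property the network requires of its inputs.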

Abstract symbols (arbitrary shapes or mathematical expressions) employed in the training of human participants clearly bear no physical resemblance to diversified patterns of 1s and 0s; however, for the purpose of training a CM neural network, the actual appearance of the training stimuli is irrelevant. As described by Ninness et al. (2018, p. 8),

. . . For neural network training purposes, it is enough that a precisely sequenced row of binary units function in place of a specific abstract stimulus. For every abstract symbol that might be used in training human participants, there is a pattern of binary units that can represent the symbol for a simulated human participant.

Three-Layer CM Architecture

Figure 7 illustrates the basic architecture (network design) of our CM employed in training and testing stimulus relations in Experiment 2. As described earlier, neural networks learn by processing and reprocessing training values forward and backward across layers of neurons within the network. That is, each layer is composed of a set of neurons that perform mathematical operations on the training values as they traverse the layers during the training process. The connections among neurons within each of the layers in the network are “weighted,” and these weights are updated each time the training values are sent forward (feedforward) and backward (backpropagation) across the layers of the network until the best set of weights are obtained for a given number of epochs. Again, the CM uses the delta rule to update the weights within the network during each epoch. Note that unlike our earlier version of this CM, the current version of EVA allows interested researchers to access the weights that were generated during the training condition. (See Appendix in Ninness et al., 2018, for related details.)
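A single weight update of the kind described above can be sketched as gradient descent with a momentum term. The form shown is the standard textbook update and is offered as an assumption; the precise update used inside EVA is not given in the text. The default values are the Learning Rate and Momentum reported for Experiment 2.

```python
def update_weight(weight, gradient, previous_delta,
                  learning_rate=0.50, momentum=0.10):
    """Return (new_weight, delta) for one update of a single connection weight.

    `gradient` is the derivative of the error with respect to this weight,
    obtained during the backward pass; `previous_delta` is the change applied
    to the same weight on the preceding update.
    """
    delta = -learning_rate * gradient + momentum * previous_delta
    return weight + delta, delta


# Two consecutive updates with the same gradient: the second step is slightly
# larger because the momentum term carries part of the first step forward.
w1, d1 = update_weight(1.0, gradient=0.2, previous_delta=0.0)
w2, d2 = update_weight(w1, gradient=0.2, previous_delta=d1)
```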

To summarize with a few technical terms, training values go through a series of mathematical functions beginning at their point of initial contact with the network’s input layer. Here, the values are multiplied by randomized weights. Next, the weighted values are summed and forwarded to the hidden layer for additional processing (e.g., using the hyperbolic tangent function) and continue the forward pass, arriving at the output layer. At this point, the values undergo further processing (e.g., using the Softmax function), and the training values are compared against the known target values. During the backward pass, these training values return to the hidden layer for further processing and then pass back to the input layer, completing the first of many epochs. Over a series of training epochs, this process allows the network to become increasingly proficient at correctly identifying stimulus relations (see Hagan et al., 2002, for a discussion).
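The forward pass summarized above can be written out for a small network. This is a minimal sketch and not the authors’ implementation: the layer sizes (12 input, 2 hidden, and 2 output neurons) follow the Experiment 2 settings, whereas the weight initialization range and all function names are assumptions.

```python
import math
import random


def softmax(values):
    """Convert raw output sums into positive values that sum to 1."""
    peak = max(values)  # subtracting the maximum improves numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def layer_sums(inputs, weights, biases):
    """Weighted sum of the inputs plus a bias for each neuron in the next layer.

    `weights[j]` holds the incoming weights of neuron j.
    """
    return [
        sum(x * w for x, w in zip(inputs, incoming)) + b
        for incoming, b in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """One forward pass: input -> tanh hidden layer -> Softmax output layer."""
    hidden = [math.tanh(s) for s in layer_sums(inputs, hidden_w, hidden_b)]
    return softmax(layer_sums(hidden, output_w, output_b))


# Small randomized starting weights (the range is an assumption).
rng = random.Random(1)
hidden_w = [[rng.uniform(-0.1, 0.1) for _ in range(12)] for _ in range(2)]
hidden_b = [0.0, 0.0]
output_w = [[rng.uniform(-0.1, 0.1) for _ in range(2)] for _ in range(2)]
output_b = [0.0, 0.0]

row = [1, 1] + [0] * 10  # a 12-digit input row with two active units
outputs = forward(row, hidden_w, hidden_b, output_w, output_b)
```

Before any training, the two outputs sit close to 0.5 each; it is the repeated backward passes that push them toward the target values.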

As noted earlier, the processing of training values stops when the MSE shrinks to some preset value or when a preset number of epochs have been completed. Independent of the “stopping rules” employed by a particular CM, when training is stopped, a well-trained CM has been exposed to sufficient training such that it is now able to accurately derive stimulus relations when exposed to stimuli not previously encountered. As described by Ninness et al. (2018, p. 10), “CMs learn by coming into contact with multiple exemplars as do their human counterparts; a child learns to recognize particular spoken and written words by interacting with language from a broader population of verbal exemplars. . .” In a roughly similar way, a CM learns to identify patterns of novel stimulus relations by interacting with representative digital stimuli from a broader population of numerical exemplars.

Training Simulated Participants

As with the training of human participants, each new simulated participant will follow a slightly different learning path throughout the course of training. Much the way human participants demonstrate diversified levels of speed and accuracy during the training and testing of stimulus relations, each new simulated participant is likely to learn the relations among stimuli at a rate and accuracy level that is unlike any of the previous or subsequent simulated participants. This is because each time the simulation number is advanced, all preceding CM memory is deleted, and the new simulated participant will function at a baseline level. Notwithstanding, each new simulated participant’s pattern of correct and incorrect responses should approximate those of other simulated participants. More important, by the end of training, the response patterns exhibited by simulated participants are likely to closely approximate those exhibited by their human counterparts. Thus, the CM becomes a good candidate for modeling and predicting derived stimulus relations as seen in human learning (e.g., Lyddy & Barnes-Holmes, 2007).

Input settings

In order for the CM to learn the complex relationships among stimulus pairs that do and do not share class membership, the network must go through some minimal number of training epochs. As noted above, in this experiment, Emergent Virtual Analytics (EVA) was configured to run 500 epochs. The Simulation Number was set to 1, and as previously mentioned, this number must be advanced each time a new simulated participant is trained. We set the number of Total Columns at 14 because there are a total of 14 columns of input variables; this includes two target variables, 0 and 1. The number of Training Rows was set to nine because there are nine rows representing an input pattern for each input presentation to the network. In this experiment, we set the number of Input Neurons to 12, indicating the number of independent variables. Momentum was set to 0.10, and the Learning Rate was set to 0.50. The number of Hidden Neurons was 2; the number of Test Rows was set at 22; and the number of Output Neurons was set at 2. Most of the above input settings are required because they match the parameters associated with the input file. Namely, the number of Columns, Rows, Input Neurons (independent variables), and Output Neurons (target variables) must be consistent with those in the training and test values. We provide an illustration of a comparable Windows form within Experiment 3.
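The consistency requirement among these settings can be made explicit with a small check. The `Settings` class below is a hypothetical container written for illustration and is not part of EVA; the values are those reported above, and the data passed to `validate` are placeholders rather than the actual training and test values.

```python
from dataclasses import dataclass


@dataclass
class Settings:
    epochs: int
    total_columns: int
    training_rows: int
    test_rows: int
    input_neurons: int
    hidden_neurons: int
    output_neurons: int
    momentum: float
    learning_rate: float

    def validate(self, training_data, test_data):
        """Raise ValueError if the settings disagree with the data dimensions."""
        if self.total_columns != self.input_neurons + self.output_neurons:
            raise ValueError("Total Columns must equal Input + Output Neurons")
        if len(training_data) != self.training_rows:
            raise ValueError("unexpected number of training rows")
        if len(test_data) != self.test_rows:
            raise ValueError("unexpected number of test rows")
        for row in training_data:
            if len(row) != self.total_columns:
                raise ValueError("row width does not match Total Columns")


experiment2 = Settings(
    epochs=500, total_columns=14, training_rows=9, test_rows=22,
    input_neurons=12, hidden_neurons=2, output_neurons=2,
    momentum=0.10, learning_rate=0.50,
)

# Placeholder data with the right shape: 9 training rows of 14 columns
# (12 inputs plus 2 targets) and 22 test rows.
experiment2.validate([[0] * 14] * 9, [[0] * 14] * 22)
```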

Results

Outcomes revealed that all simulated participants performed at near perfect accuracy levels during the training of stimulus relations where the MSE ranged between 0.0002339 and 0.0002391 for all five simulations. As mentioned above, our CM architecture for this experiment included only one hidden layer (see Fig. 7), and this feature dictated that simulated participants were exposed to a relatively large number of training epochs (i.e., 500 epochs for all simulations). Thus, in this experiment, it was not possible to compare the training requirements for simulated and human participants (i.e., epochs versus trials); however, it was possible to compare the test of novel relations for simulated participants to those of human participants.

Test of novel relations

Figure 8 displays five simulated participants’ response patterns in the assessment of class membership. As in the generalization test of novel relations by human participants in Experiment 1, when stimulus pairs were consistent with class membership, the correct CM response required identifying these stimuli as members of the same class, and a perfect set of CM responses to the 10 stimulus pairs would be 1.0 correct class formation. And, as in the generalization test of novel relations by human participants, specific stimulus pairs were not members of the same class, and the correct CM response required identifying these stimuli as unrelated. Here, a perfect series of responses to the 12 tests of incorrect stimulus relations would be 0.0 class formation.

The dashed lines illustrate the hypothesized 0.15 and 0.85 accuracy thresholds for the 22 generalization tests of novel relations. From left to right, B2C3 through A1C4 represent simulated participants’ performance levels for between-class (Unrelated) compound stimuli, and B1C1 through A2C1 represent performance levels for within-class (Related) compound stimuli.

As previously noted, Fig. 8 illustrates the simulated participants’ outcomes in the formation of derived stimulus relations when the simulated participants were exposed to novel compound stimuli (in binary format). As in the illustration of human performances in Experiment 1, the dashed lines designate the demarcation between accurate “yes” and “no” responses. Consistent with the five illustrations of human performances, beginning at B2C3, the bars for correctly identified unrelated stimuli fall at or below the lower dashed line within each of the five bar graphs shown in Fig. 8. That is, the lower dashed line (where all bars are at or below 0.15) demarcates the threshold for identification of unrelated stimuli (cf. Vernucio & Debert, 2016). Beginning at B1C1, the upper dashed line (at or above 0.85) demarcates the threshold for the simulated participants’ correct identification of analogous stimuli as members of the same class (i.e., related).

Discussion

In this experiment, we employed a CM yes–no protocol with the ambition of training and testing stimulus relations analogous to those used during Experiment 1. Subsequent to training, generalization tests revealed that simulated participants exhibited response patterns nearly identical to those exhibited by our human participants during the first experiment. Here, the simulated participants' performances suggest the formation of equivalence classes among untrained stimulus relations. An even more provocative finding is that the simulated and human participants merged stimulus relations between Class 1 and Class 2, suggesting that the training procedures in both experiments allowed human and simulated participants to correctly identify and merge new presentations of compound stimuli between equivalence classes.

Experiment 3

During Experiment 2, the number of epochs was set at a constant 500 for all simulated participants. This particular number of training epochs was employed because it allowed our simulated participants to perform within a 0.15 error threshold commensurate with the task performance levels demonstrated by our human pilot participants. During Experiment 2, we did not attempt to determine if particular simulated participants might have been able to perform well on the generalization tests with fewer than 500 training epochs. In Experiment 3, we restructured and employed a four-layer CM to identify the number of training epochs required to attain mastery during training (see McCaffrey, 2017).

Stopping rules

A CM may be constructed as in Experiment 2 so that training stops when a specified number of epochs are completed. Alternatively, a CM algorithm may be developed such that training is terminated when the MSE shrinks to some preset value. As mentioned earlier, during each training epoch, the MSE is calculated by updating the mean of the squared deviations between the calculated and the target values. During training, the algorithm “attempts” to reduce the MSE until it becomes so small that continued training is unnecessary. As a practical matter, many researchers employ “stopping rules” that incorporate both strategies concurrently; that is, the CM stops when the MSE shrinks to a small preset value or when a specific number of epochs has been completed. This is the approach we employed during Experiment 3. Specifically, if training exceeded 100 epochs, training was terminated. At the same time, if during training the MSE fell below 0.0006, training ended. The stopping threshold of 0.0006 was selected because when employing a four-layer CM, it provided sufficient training to allow simulated participants to attain a mastery level that closely approximated the mastery level required of our human participants; however, as with our human participants, attaining mastery during training offered no guarantee that simulated participants would perform well during generalization tests.
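The combined stopping rule can be sketched as a loop that ends on whichever criterion is met first. In this illustration, `run_epoch` is a hypothetical callable that performs one forward and backward pass over the training rows and returns that epoch’s MSE; the two constants are the values reported above.

```python
MAX_EPOCHS = 100        # epoch cap used in Experiment 3
MSE_THRESHOLD = 0.0006  # MSE stopping threshold used in Experiment 3


def train_until_stopped(run_epoch, max_epochs=MAX_EPOCHS,
                        mse_threshold=MSE_THRESHOLD):
    """Run epochs until the MSE falls below the threshold or the cap is hit.

    Returns (epochs_completed, final_mse, reached_mastery).
    """
    mse = float("inf")
    for epoch in range(1, max_epochs + 1):
        mse = run_epoch()
        if mse < mse_threshold:
            return epoch, mse, True
    return max_epochs, mse, False


# Stand-in for real training: an MSE that halves on every epoch.
fake_errors = iter(0.25 * 0.5 ** n for n in range(200))
result = train_until_stopped(lambda: next(fake_errors))

# Stand-in for a network that never improves: the epoch cap ends training.
capped = train_until_stopped(lambda: 0.01)
```

Returning the epoch count alongside the final MSE mirrors the way the number of epochs to mastery is later compared with the number of human training trials.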

CM Design and Procedures

Expanding on the foundation of the three-layer architecture used during Experiment 2, we employed a four-layer CM analog of the yes–no protocol aimed at training and testing stimulus relations (see McCaffrey, 2017, on developing deep neural network algorithms). This CM is formatted so that the number of training epochs required to attain mastery is consistent with the range of training trials required by our human participants during Experiment 1. As with the CM employed during Experiment 2, our four-layer algorithm receives a target set of input values that have known accurate outputs. The algorithm (EVA 2.50) calculates optimal weights and bias values such that the differences between calculated outputs and known outputs are sufficiently small to allow the CM to generalize to new input values, that is, inputs that are representative of the type of problem to be solved by the model.

Architecture

Figure 9 illustrates the basic architecture of our four-layer CM employed in training stimulus relations during Experiment 3. As with our three-layer CM employed during Experiment 2, each layer is composed of a set of neurons that perform mathematical operations on the training values as they traverse the layers during the training process; however, the CM developed for this experiment has two hidden layers rather than one. Thus, this architecture is fully connected and composed of an input layer, two hidden layers, and an output layer. Each hidden layer contains four neurons. As with our three-layer CM, the connections among neurons within all layers of the network are weighted, and these weights are recalculated each time the training data are sent forward and backward across the layers of the CM network. This process continues until the MSE falls below 0.0006.

Training a four-layer CM

As with the training of human participants during Experiment 1 and simulated participants during Experiment 2, each simulated participant exhibits a different learning rate (i.e., number of epochs to attain mastery). When employing a four-layer CM, training is likely to require fewer epochs for each simulation than when employing a three-layer network (Hagan et al., 2002; Haykin, 2008). However, as with our three-layer CM, each new simulated participant learns the relations among stimuli at a performance level that is unlike any of the previous or subsequent simulated participants. And, as with our three-layer CM, each time the simulation number is advanced to initiate the training of a new participant, all CM memory is deleted, and the new simulated participant will function at a baseline level. When the MSE falls below 0.0006, the final epoch is completed, and the total number of training epochs, the training outcomes, and the MSE are posted to the user.

Input settings

In this experiment, we set the number of “Total Columns” at 14 as there are a total of 14 columns of input variables, including two target variables, 0 and 1. The number of Training Rows is set to nine because there are nine rows representing an input pattern for each input presentation to the network. As in Experiment 2, our four-layer CM requires supervised training to derive the complex relations among stimulus pairs that do and do not share class membership; however, the number of training epochs required by our four-layer network (with four nodes in each of the two hidden layers) is smaller than the number required by our three-layer network (Haykin, 2008). Figure 10 illustrates our four-layer CM Windows form requiring the number of epochs to cumulate until the MSE falls below 0.0006.

EVA 2.50 windows form permitting interactive training and testing of simulated participants. Training values are shown under the “Input Values” heading. Training Accuracy and Error levels are shown in the center under “Training Outcomes,” and generalization test outcomes are shown under “Test Outcomes.” Momentum is set at 0.38, and the Learning Rate is set to 0.90. The number of Hidden Layers is 2, and the number of neurons within each of these two hidden layers is 4

With the above architecture, we were able to obtain very small error values for each of our five simulated participants (see Table 4). We might have made the MSE requirement even smaller and in doing so, further reduced the error proportions obtained when training stimulus–stimulus relations. However, employing an exceedingly small MSE as a stopping requirement (e.g., an MSE set at 0.0000000001) involves a risk of “overtraining” the model. Overtraining any type of neural network frequently produces a side effect referred to as “overfitting,” a condition in which the CM performs at near perfection during training; however, this extreme level of training precision increases the likelihood of the network being unable to generalize during tests of novel relations (see Haykin, 2008, for a detailed discussion).

As in Experiment 2, the number of Columns, Rows, Input Neurons, and Output Neurons must match those of the training and test input values. Also, as in Experiment 2, the number of Test Rows is 22, and the number of Output Neurons is two. The number of Input Neurons is set to 12, consistent with the number of independent variables employed. It is important to note that the most functional Learning Rate, Momentum, number of hidden layers, and number of neurons within each of the hidden layers can only be determined by systematically exploring the outcomes obtained at various settings. For this experiment, Momentum was set at 0.38, and the Learning Rate was increased to 0.90. The number of Hidden Layers was increased to two, and the number of neurons within each of these two hidden layers was set to four. Output from this first simulation (i.e., Sim No 101) reveals that this particular run required 41 epochs. Training accuracy is displayed at 100%, indicating that the final epoch for this run produced an MSE value below 0.0006 (i.e., 0.000558). Test Accuracy is displayed at 100%, indicating that all output values for this simulation fell within the 0.15 projected error threshold (i.e., below 0.15 or above 0.85). The lower center box in Fig. 10 displays the number of weights and bias values employed as 82. The specific weights and bias values for any given run can be obtained by clicking the Save Training Outcomes as CSV button (see Ninness et al., 2018, for details on saving and reusing these values).
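The count of 82 weights and bias values is what one would expect for a fully connected network with 12 input neurons, two hidden layers of four neurons each, and two output neurons, assuming one bias value per hidden and output neuron. The short calculation below illustrates the arithmetic; `count_parameters` is a hypothetical helper, and the counting convention is an assumption that happens to reproduce the reported figure.

```python
def count_parameters(layer_sizes):
    """Number of weights plus biases in a fully connected feedforward network."""
    # One weight for every connection between adjacent layers.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # One bias for every neuron outside the input layer.
    biases = sum(layer_sizes[1:])
    return weights + biases


four_layer = count_parameters([12, 4, 4, 2])  # Experiment 3 architecture
three_layer = count_parameters([12, 2, 2])    # Experiment 2 architecture
```

For the four-layer network, this gives 12 × 4 + 4 × 4 + 4 × 2 = 72 weights and 4 + 4 + 2 = 10 biases, for a total of 82. The same convention gives 32 for the three-layer network of Experiment 2.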

Results

Examination of our human and simulated participants’ training outcomes reveals the resemblances between the required number of human trials and simulated epochs needed to attain mastery. By necessity, Tables 2 and 4 illustrate two different forms of errors obtained during the training of stimulus relations to human and simulated participants. These error values are the results of two different types of error calculations required for two different types of participants; however, they are both practical and analogous strategies for identifying the amount of error that occurs during the training of stimulus relations. Table 2 displays the number of errors that occurred during the training of nine compound stimuli for each human participant. Here, the total number of trials/exposures needed to attain mastery ranges between 41 and 74 and is shown in the far right column. The errors displayed in Table 2 are a function of the human participants’ failures to correctly identify compound stimuli belonging to the same class. Likewise, the decimal error values displayed in Table 4 (and within Fig. 10 under the heading of Training Accuracy and Error) are measures of the CM’s computational inaccuracies that were obtained when attempting to identify the nine compound stimuli during the training of five simulated participants.

Ideally, human and simulated training errors would be calculated and displayed in the same metric; however, the respective error values are a function of different learning processes. When human participants fail to identify compound stimuli correctly, errors are calculated and identified as the number of repeated trials (number of exposures) required for attaining mastery. When the CM’s simulated participants fail to identify compound stimuli correctly, errors are computed and identified as the proportion of error existing between the exact and computed values of compound stimuli. Thus, the small decimal values within Table 4 represent the differences between the actual values and the obtained values for each of the five simulated participants across all nine compound stimuli.

In other words, unlike human participant errors that are a function of the number of repeated exposures needed to attain mastery as displayed in Table 2, the simulated participants’ errors (shown as small decimal values within Table 4) represent the proportion of differences between known and calculated values when identifying compound stimuli that are members or nonmembers of the same class. Irrespective of the manner in which error values are calculated for human and simulated participants, the total numbers of human participant exposures and simulated participant epochs needed to attain mastery, shown in the final columns of Tables 2 and 4, are nearly identical (i.e., 41 to 74 and 41 to 73, respectively).
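The simulated participants' error metric can be illustrated with a short sketch. It computes a mean squared error (MSE) between target and computed values for nine training stimuli and compares it against the 0.0006 mastery criterion. The output values are hypothetical and chosen for illustration only. The sketch also assumes a single output value per stimulus, whereas the CM described here uses two output neurons, and how the software aggregates error across them is not specified in this section.

```python
MASTERY_MSE = 0.0006  # mastery criterion reported for the simulated participants


def mean_squared_error(targets, outputs):
    """Average squared difference between target and computed values."""
    if len(targets) != len(outputs):
        raise ValueError("targets and outputs must have the same length")
    return sum((t - o) ** 2 for t, o in zip(targets, outputs)) / len(targets)


# Nine training stimuli: five related (target 1.0) and four unrelated (target 0.0).
targets = [1.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]

# Hypothetical computed values at the end of an epoch (not data from the study).
outputs = [0.98, 0.97, 0.99, 0.98, 0.97, 0.02, 0.03, 0.01, 0.02]

mse = mean_squared_error(targets, outputs)
mastered = mse < MASTERY_MSE
print(round(mse, 6), mastered)
```

With these illustrative values the MSE is approximately 0.0005, so training would stop at this epoch. The human metric, by contrast, is simply a count of exposures, which is why the two tables cannot share one scale.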

Visual inspection of these two tables shows the diversified but negligible array of errors for both types of participants and both types of errors. Although the “range” of required exposures and epochs is similar, there is as much error diversity among human participants as there is among simulated participants. This is as expected. As in the training of human participants, in which one individual’s learning does not reliably forecast another’s skill acquisition even under duplicate training conditions, simulated participants usually produce slightly different task performance levels during consecutive simulations. Such diversity is a function of memory deletion and reacquisition of learning each time the CM’s simulation number is changed. Analogous to the diversified task performance levels demonstrated by separate humans within the same experimental preparation, advancing the CM’s simulation number will generate some new level of variability for each simulated participant. As noted by Ninness et al. (2018, p. 132), “. . . from the perspective of a researcher who wants to simulate human learning, neural networks demonstrate human-like variations across simulated participants. . . .” The outcomes shown in Tables 2 and 4 (as well as Figs. 6 and 11) illustrate the human training trials and the CM training epochs. While there is no point-to-point correspondence for simulated and human participants’ training errors, visual inspection of the respective tables and figures in each experiment shows a diverse but negligible pattern of errors for human and simulated participants during training. More importantly, training outcomes obtained in Experiment 3 speak to the resemblances in the number of training epochs required of simulated participants and the number of training exposures required of human participants in Experiment 1.

The dashed lines illustrate the hypothesized 0.15 and 0.85 accuracy thresholds for the 22 generalization tests of novel relations. Simulations 101 through 105 are shown in conjunction with the number of Epochs needed before being exposed to these generalization tests. From left to right, B2C3 through A1C4 represent simulated participants’ performance levels for between-class (Unrelated) compound stimuli, and B1C1 through A2C1 represent performance levels for within-class (Related) compound stimuli.

Test of novel relations

Figure 11 shows our five simulated participants’ outcomes in the formation of derived stimulus relations when exposed to novel compound stimuli during the generalization test. As in the generalization tests of novel relations with human participants in Experiment 1 and simulated participants in Experiment 2, when compound stimuli were members of the same class, the correct CM response required recognizing these stimuli as sharing class membership. As with the human performances in Experiment 1 and the simulated performances in Experiment 2, the dashed lines demarcate the accuracy thresholds for the “yes” and “no” responses. As with our human participants during Experiment 1, all simulated participants performed within our hypothesized error threshold during the generalization test.

SOM Analysis of Experiments 1 and 3

Looking at the generalization Tests of Novel Relations from Experiment 1 and Experiment 3, it is possible to conduct several types of data analyses. The Kohonen Self-Organizing Map (SOM) is widely acknowledged as a precise and robust unsupervised neural network that is particularly useful in pattern recognition when applied to diversified datasets (Ultsch, 2007). The SOM’s ability to recognize and classify linear and nonlinear data patterns makes it an especially valuable procedure in the analysis of small-N datasets, and this distinguishes it from conventional univariate and multivariate statistical procedures. As described by Ninness et al. (2012, 2013), rather than conducting supervised training in which weights are modified by decreasing the discrepancies between target and calculated values, the SOM systematically decreases the Euclidean distance among all input records, recognizing cohesive data patterns that would be difficult to isolate and classify by way of traditional statistical methodologies. Essentially, the SOM is used to cluster large or small datasets that have common patterns. Records with similar patterns are identified with unique labels, and records that do not belong to the same pattern are identified as belonging to different groups and labeled as such (see Kohonen, 2001, for a complete discussion).
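The general mechanism described above can be illustrated with a very small self-organizing map. This is a minimal sketch, not the SOM software used in the study. It assumes a one-dimensional map with two nodes, a Gaussian neighborhood, linearly decaying learning rate and neighborhood width, and fixed starting weights chosen for reproducibility. All record values and source tags are hypothetical.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_matching_unit(nodes, record):
    """Index of the node whose weight vector lies closest to the record."""
    return min(range(len(nodes)), key=lambda j: squared_distance(nodes[j], record))


def train_som(records, nodes, epochs=20, lr0=0.5, sigma0=1.0):
    """Unsupervised training: pull the winning node (and its neighbors) toward each record."""
    nodes = [list(n) for n in nodes]  # copy so the caller's list is untouched
    for epoch in range(epochs):
        decay = 1.0 - epoch / epochs
        lr = lr0 * decay                  # learning rate shrinks over time
        sigma = max(0.1, sigma0 * decay)  # neighborhood width shrinks over time
        for record in records:
            bmu = best_matching_unit(nodes, record)
            for j, node in enumerate(nodes):
                grid_dist = abs(j - bmu)  # distance on the 1-D map
                influence = math.exp(-(grid_dist ** 2) / (2 * sigma ** 2))
                for k in range(len(node)):
                    node[k] += lr * influence * (record[k] - node[k])
    return nodes


# Hypothetical records: (source tag, response values). The tag is never used in training.
records = [
    ("human", [0.05, 0.08, 0.04]),
    ("simulated", [0.07, 0.03, 0.06]),
    ("human", [0.04, 0.06, 0.09]),
    ("simulated", [0.95, 0.92, 0.96]),
    ("human", [0.93, 0.97, 0.94]),
    ("simulated", [0.96, 0.94, 0.91]),
]
values = [v for _, v in records]

trained = train_som(values, nodes=[[0.4] * 3, [0.6] * 3])
labels = [best_matching_unit(trained, v) + 1 for v in values]
for (source, _), label in zip(records, labels):
    print(source, "-> Pattern", label)
```

In this sketch the pattern labels follow the response values rather than the source tag, so human and simulated records with similar values receive the same label.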

In order to provide a more rigorous examination of our findings from Experiments 1 and 3, we conducted a SOM analysis of the tests of novel relations by combining the generalization test data from both of these experiments. Note that data from Experiment 2 were not included in our SOM analysis. Rather, we assessed the generalization outcomes from Experiment 1 (Fig. 6) combined with Experiment 3 (Fig. 11) using the SOM to recognize corresponding data patterns across these two experiments. In other words, we combined the generalization outcomes from human and simulated participants into one dataset in an effort to identify the related patterns for both human and simulated participants as they pertain to compound stimuli that did or did not share class membership. Figure 12 shows that the SOM recognized two distinct patterns. Pattern 1 is composed of the combined human and simulated participants’ task performances identifying compound stimuli that are “not members of the same class,” and Pattern 2 illustrates the combined human and simulated participants’ compound stimuli that “are members of the same class.” Thus, the SOM did not differentiate between human and simulated participants, only between task performances identifying compound stimuli that were, or were not, members of the same class.

Footnote 1. The SOM neural network (and sample datasets) can be downloaded from www.chrisninness.com. As an alternative, SPSS Modeler 15.00 contains a series of SOM strategies under the general heading of Kohonen Node (see IBM Knowledge Center, 2017).

Illustration of outcomes from the SOM analysis of pattern recognition. Pattern 1 shows the human and simulated participants’ performances identifying compound stimuli that were not members of the same class, and Pattern 2 shows the combined human and simulated participants’ performances that were members of the same class.

Discussion

As previously described, the numbers of CM training epochs necessary to achieve mastery in Experiment 3 closely approximate the numbers of training trials required of our human participants in Experiment 1. The training threshold for human participants entailed correct identification of all accurate (A1B1, A1C1, A2B2, A2C2, A1A2) and inaccurate (A1B3, A1C3, A2B4, A2C4) stimulus–stimulus relations, whereas our simulated participants’ mastery required that training continue until the MSE fell below 0.0006. More importantly, an examination of the respective generalization tests (i.e., Figs. 6 and 11) shows that our simulated participants exhibited accurate performance levels that closely approximated those of our human participants during Experiment 1. As in Experiment 2, the simulated and human participants merged stimulus relations between Classes 1 and 2, demonstrating that our training procedures allowed human and simulated participants to identify the novel presentations of compound stimuli within and between equivalence classes. Although the training outcomes of our simulated participants were measured with a different error metric than those of our human participants, the performance levels of our simulated participants are consistent with those of our human participants. As a practical matter, outcomes displayed in Fig. 12 reveal that our four-layer CM allowed us to obtain comparable performance levels for human and simulated participants.

General Discussion

Visual inspection of the generalization test findings from our three experiments (as shown in Figs. 6, 11, and 12) illustrates the corresponding levels of correct and incorrect performances in tests of accurate and inaccurate stimulus relations by human and simulated participants. Importantly, simulated and human participants demonstrated class merger of derived stimulus relations for Classes 1 and 2, suggesting that the training procedures in all three experiments allowed human and simulated participants to merge novel complex presentations of compound stimuli between equivalence classes. Our human and CM findings are consistent with early research in the area of class merger (e.g., Sidman, Kirk, & Willson-Morris, 1985); however, such outcomes had not previously been demonstrated by simulated participants. These findings extend our understanding of the corresponding conditions for humans and CMs in the formation of larger and more complex combinations of derived stimulus relations.

The findings from our three experiments are consistent with previous research by Fienup and Critchfield (2011) in which equivalence-based instructional strategies were effective in establishing complex concepts in the area of inferential statistics within and between equivalence classes (cf. Mackay et al., 2011). From a behavior analytic perspective (e.g., Walker, Rehfeldt, & Ninness, 2010; Fienup et al., 2010), verbally able humans may acquire complex academic repertoires as a function of rule following combined with coming into contact with consequences for acting in accordance with rules. Analogously, a CM’s simulated participants may acquire the ability to derive complex relations among stimuli by coming into contact with consequences for acting in accordance with the delta rule. We are not suggesting that the delta rule operates in a manner that coincides with human rule-governed behavior; we are only noting that the delta rule effectuates the supervision of simulated participants.
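For readers unfamiliar with the delta rule, a single supervised update can be sketched as follows. This is an illustrative example for one output unit only. It assumes a sigmoid activation and the common momentum form of the weight update, neither of which is confirmed here as the exact formulation used by the CM software, and it does not reproduce the multilayer training of the four-layer network. The Learning Rate (0.90) and Momentum (0.38) are the settings reported for Experiment 3. The inputs and target are hypothetical.

```python
import math

LEARNING_RATE = 0.90  # setting reported for Experiment 3
MOMENTUM = 0.38       # setting reported for Experiment 3


def sigmoid(x):
    """Logistic activation (assumed here; not confirmed as the CM's activation)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(weights, bias, inputs):
    """Output of a single unit for the given inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def delta_rule_step(weights, bias, inputs, target, prev_dw, prev_db):
    """One delta-rule update with momentum for a single sigmoid unit."""
    output = forward(weights, bias, inputs)
    # Error signal: discrepancy between target and output, scaled by the sigmoid slope.
    delta = (target - output) * output * (1.0 - output)
    dw = [LEARNING_RATE * delta * x + MOMENTUM * p for x, p in zip(inputs, prev_dw)]
    db = LEARNING_RATE * delta + MOMENTUM * prev_db
    new_weights = [w + d for w, d in zip(weights, dw)]
    return new_weights, bias + db, dw, db


# Hypothetical training example: three inputs, target response "yes" (1.0).
inputs = [1.0, 0.0, 1.0]
target = 1.0
weights, bias = [0.0, 0.0, 0.0], 0.0

before = forward(weights, bias, inputs)
weights, bias, dw, db = delta_rule_step(weights, bias, inputs, target, [0.0] * 3, 0.0)
after = forward(weights, bias, inputs)
print(before, after)  # the output moves from 0.5 toward the target of 1.0
```

The "consequence" in this analogy is the error term: the larger the discrepancy between the target and the computed output, the larger the adjustment to the weights.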

Another way to conceptualize this process is that the CM algorithm provides a form of supervised training that allows simulated participants to systematically acquire accurate stimulus relations based on a sample set of training values. Much like their human counterparts, simulated participants are often capable, subsequent to training, of deriving relationships among new and complex stimuli to which they have had no previous exposure. As previously mentioned, a particularly provocative finding in this study is the extent to which CMs appear to be able to derive relations between classes. As with human participants, we see the acquisition of within-class relations during training. During generalization tests, we see the emergence of untrained stimulus relations between classes as well as the identification of incorrect stimulus relations.

With the current exponential advances in computer modeling across academic disciplines, the extent to which CM algorithms may be capable of emulating and predicting human behaviors remains an inevitable and increasingly dynamic area of scientific exploration. As mentioned at the beginning of this article, only a few decades ago, simulating human behavior by way of a computer algorithm might have been viewed as a potentially interesting theme for a science fiction script, but now the culture is awash in software engineering advances, and researchers across academic disciplines are quickly developing computer algorithms with increasingly powerful applications aimed at modeling and predicting a variety of human behaviors (e.g., see Greene et al., 2017, regarding neural networks and consumer behaviors).