Abstract

Raw palm oil mill effluent (POME) is classified as a highly polluting effluent, which needs to be treated to an acceptable level before being discharged into water bodies. Currently, chemical coagulants are widely used in treating POME, but their hazardous nature has caused several health and environmental problems. Therefore, this research presents the use of natural materials such fenugreek and okra as bio-coagulants and bio-flocculants, respectively, for the treatment of POME. Artificial neural network (ANN) modelling technique was used for the estimation of predicted results of the coagulation-flocculation process. The responses of the process were the percentage removal of total suspended solid (TSS), turbidity (TUR) and chemical oxygen demand (COD), while the inputs were fenugreek dosages, okra dosages, pH and mixing speed. The ANN model was developed using 12 different training algorithms. Scaled conjugate algorithm (SCG) proved to be the best training algorithm with lowest mean-squared error (MSE), mean absolute error (MAE), mean absolute percentage error (MAPE) values for all the three outputs. The MSE, MAE and MAPE values were: 16.64, 2.10, 0.03 for the TSS response; 5.05, 1.03, 0.01 for the TUR response; and 54.59, 3.82, 0.07 for the COD response, respectively. The ANN model with a regression R of 0.8629 proved to be the best for the prediction of all the responses in this study. The results proved that ANN can be applied to predict TSS, TUR and COD of POME.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Palm oil mill effluent (POME) is a liquid waste with an unpleasant odour, acidic properties, and high oxygen demand. These characteristics make it a highly polluting wastewater, hence cannot be directly released into water bodies (Sethu et al. 2019). The general characteristics of raw POME are given in Table 1. The values were obtained from a local palm oil mill. There are several methods used to treat POME, and the most popular one is the ponding method, via anaerobic, aerobic and facultative treatment ponds. Though efficient, this process takes a very long time, up to about 6 months to treat and release or reuse the water. Besides that, this treatment method is not very attractive these days as greenhouse gases are emitted directly into the environment (Yacob et al. 2006). Thus, a much faster and more efficient method that is economically and environmentally friendly is required. One such method, which is becoming popular these days, is coagulation and flocculation with natural materials.

Coagulation and flocculation are widely used in treating wastewater and these coagulants can be either organic or inorganic in form. Ab Kadir et al. (2004) concluded that chemical coagulants are the fastest way to treat POME, and proper selection of coagulant and its optimum dosage succeed in reducing about 60% of BOD and COD content along with 90% of suspended solids content. Aluminium sulphate (alum) is widely used as coagulant in wastewater treatment. However, recent studies reported that using alum generates voluminous sludge and it is linked with health-related issues such as Alzheimer’s disease and senile dementia (Ugwu et al. 2017). Therefore, natural coagulants are sought by researchers as a viable alternative in overcoming the drawbacks of using coagulants.

The application of natural coagulants in wastewater treatment has been extensively studied since natural coagulants produce promising results (Yin 2010; Asad et al. 2020). The advantages of using natural coagulants are that they are eco-friendly, cost-effective, generate a minimal amount of sludge and have a higher nutritional value which could be used as fertilizers and as an energy source (Sethu et al. 2019). Bhatia et al. (2007) reported that 99% removal of suspended solid in POME treatment was achieved using Moringa oleifera seeds extract as coagulant at optimum pH 5, settling time 114 min, 3469 mg/L of M. oleifera dosage and 6736 mg/L of flocculant dosage. Freitas et al. (2015) reported that the addition of okra mucilage as coagulant into textile wastewater treatment was able to increase the COD removal by 35.74% and reduce the chemical coagulant dosage by 72.5%. Chung et al. (2018) reported that when peanut coagulant and okra flocculant were used to treat POME, the highest observed removal efficiencies of TUR, TSS and COD were 92.5%, 86.6% and 34.8%, respectively. The optimum pH, and coagulant and flocculant dosages were pH 11.6, and 1000.1 mg/L and 135.5 mg/L, respectively. They also reported that for wheat germ coagulant and okra flocculant the removal efficiencies were 86.6%, 87.5% and 43.6% for TUR, TSS and COD, respectively. The optimum parameters were pH 12, coagulant dosage 1170.5 mg/L and flocculant dosage 100 mg/L. Choong et al. (2018) utilised chickpea as the natural coagulant and flocculant for the treatment of POME. They reported that the optimum condition was established at pH of 6.69, chickpea dosage of 2.6 g/L, and rapid mixing speed of 140 rpm. At the optimum condition, the removal percentages of TUR, COD and TSS were 86%, 56% and 87%, respectively. Thus, from all the above studies, it is clear that natural coagulants perform far superior than their chemical counterparts, hence, the need of the current research. The present work was carried out to test the efficacy of a natural and abundantly available bio-coagulant (fenugreek), and a natural bio-flocculant (okra mucilage), to be used for the treatment of POME. Results obtained were modelled with artificial neural networks (ANN).

Artificial neural network is a simulation technique used for predicting responses of input data and identify relationships between input and output data. Several researchers have utilized ANN for modelling various non-linear systems. Ahmad Tajuddin et al. (2015) developed an ANN model to predict the percent removals of COD, TSS and TDS through five input variables, namely flocculant dosage, fenugreek dosage, rapid mixing speed, rapid mixing time and pH. Selvanathan et al. (2017) used ANN modelling for the investigation of biochar derived from rambutan peel via slow pyrolysis as potential adsorbent for the removal of copper ion Cu (II) from aqueous Haghiri et al. (2018) used ANN to determine the optimum coagulant dosage for water treatment process by developing two models, i.e., the first to predict the water quality parameter, and the second to predict the optimal coagulant dosage. In a separate study, a multilayer feed-forward neural network (FANN) model was trained with an error back-propagation algorithm to predict char yield from reaction time and temperature input (Arumugasamy and Selvarajoo 2015). Selvarajoo et al. (2019) focused on modelling the weight loss due to the effect of temperature and time of banana peels during pyrolysis thermal degradation. An ANN model was developed based on single layer hidden neurons. The model was trained based on the backpropagation technique using the Levenberg–Marquardt (LM) optimization algorithm.

Pakalapati et al. (2019a) used ANN for the prediction of polycaprolactone (PCL polymer) conversion. They used five training algorithms of ANN to carry out the modelling. Among the five, LM proved to be the most appropriate training algorithm. Pakalapati et al. (2019b) utilized ANN to predict the biopolymer (PCL) polydispersity index from the enzymatic polymerization process with four different training algorithms, out of which, the LM training algorithm proved to be most suitable for their work. Wong et al. (2018) modelled the enzymatic polymerization of e-caprolactone using 11 training algorithms of ANN, and found LM to be the best algorithm. Pilkington et al. (2014) used ANN to predict the percentage recovery of artemisinin through the extraction of A. annua. They used extraction temperature, duration and solvent (petroleum ether) as inputs and leaf proportions on the recovery of artemisinin from leaf steeped in solvent as output. In the work of Karri and Sahu (2018), a low-cost adsorbent produced from palm oil kernel shell based agricultural waste was examined for its efficiency to remove Zn(II) ions from the aqueous effluent. They made use of ANN for the study of optimal values of independent process variables to achieve maximum removal efficiency. The inputs to the ANN model were the factors (initial solution concentration, pH, adsorbent dosage, residence time and process temperature) and the percentage removal of Zn(II) ions was chosen as a response. They had the metaheuristic differential evolution optimization strategy embedded into the ANN architecture which results in a hybrid of both methods (termed as ANN-DE). Yogeswari et al. (2019) made use of ANN for the estimation of hydrogen production from confectionery wastewater. Their input parameters were time, influent COD, effluent pH and volatile fatty acids (VFA). They concluded that maximum COD removal efficiency of 99% and hydrogen production rate of 6570 mL/d was achieved at 7.00 kg COD/m3d and 24 h of hydraulic retention time.

In this research, ANN has been used to evaluate and predict the TSS, TUR and COD removal efficiency of the coagulation-flocculation process using fenugreek-okra in treating POME. The objective of this research paper was to determine the performance comparison for the coagulation-flocculant process in efficiency removal of TSS, TUR and COD under 4 operating conditions, namely fenugreek dosage, okra dosage, pH and mixing speed. ANN models of the coagulation-flocculation process were developed using Matlab™. ANN was used to predict TSS, TUR and COD results through regression value (R), mean squared error (MSE), mean absolute error (MAE) and mean absolute percentage error (MAPE). The novelty of this research is in terms of modelling, where 12 training algorithms of ANN have been used instead of only using LM training algorithms which is the most popular training algorithm and usually found in most of the literature. This comparison of training algorithms provides the conclusion of the most suitable training algorithm for the obtained data.

2 Materials and Methods

2.1 Preparation of Samples

2.1.1 POME Sample

POME samples were obtained from Jugra Palm Oil, Banting, Selangor, Malaysia. The samples were collected at a temperature range of 70˚C to 80˚C from the first anaerobic pond. The samples were cooled down to room temperature and stored in an airtight container, away from sunlight to avoid decomposition due to microbial reaction. The characteristics of the POME are given in Table 1.

2.1.2 Fenugreek Coagulant

Fenugreek was purchased from a local supermarket in Semenyih, Malaysia. The fenugreek was dried in an oven (Memmert GmbH) at 60˚C for 24 h. The dried fenugreek was ground into fine powder using a domestic blender. The fine powder was sieved to a size range of 200 µm to 500 µm through a sieve shaker and was stored in a sealed beaker and kept in a dry place. The fenugreek stock solution was prepared by dissolving 10 g of fenugreek powder in 500 ml of distilled water.

2.1.3 Okra Flocculant

Fresh okra was bought from a local supermarket in Semenyih, Malaysia. The okra was washed to remove impurities before it was cut into small pieces. The pieces were then immersed in a container filled with distilled water for 12 h to extract the mucilage. The mucilage was squeezed using a muslin cloth and stored in an airtight beaker and kept in a cool and dry place.

2.2 Jar Test Experiments

Jar test experiments were performed in batches of six, using the Lovibond flocculator. The POME samples were homogenized by inverting the plastic container several times before it was poured into the beaker. Each jar test was carried out using 500 mL of POME and initial readings, namely pH, TSS, TUR and COD were measured for each jar test. The jar test was conducted by following the combination of the four manipulating variables that have been generated by the Design Expert™ software. Each manipulating variable, namely pH, mixing speed, fenugreek, and okra dosage were adjusted for each jar test as shown in Table 2.

The pH value of the POME sample was altered to the desired pH value within the range 3 to 8 by adding either 0.1 M of sodium hydroxide (NaOH) or 0.1 M of sulphuric acid (H2SO4). The coagulation and flocculation process were carried out by adding the fenugreek coagulants at the beginning, at dosages from 4 to 8 g/L, with rapid mixing for 2 min. The mixing speed values used were based on the ones given in Table 2. After rapid mixing, the okra flocculants were added with dosages between 20 and 100 mL, and slow mixing was carried out at 60 rpm for 30 min. The rapid agitation favours mixing of reagents and destabilization of particles, while the slow agitation was for stimulating collision of particles to promote agglomeration of particles. The POME samples were then allowed to settle for 4 h.

2.3 Experimental Analyses

TSS was measured using the Hach DR 3900 spectrophotometer, and turbidity was measured using the Martini Mi415 turbidity meter. As for COD, 2 mL of the treated POME samples were mixed with high-range Hach COD vials separately and the samples were digested at 150 oC for 2 h. After that it was cooled to room temperature and the COD reading was taken using the Hach DR 3900 spectrophotometer. Each experiment was carried out in triplicates to increase accuracy. Values obtained were averaged and reported. The removal efficiencies of TSS, turbidity and COD were calculated using Eq. (1):

Zeta potential analysis was conducted on the fenugreek solution and raw POME using the Malvern Zetasizer. Its main aim was to measure the magnitude of the electrostatic charges of particles, which is vital to explain the attractive or repulsion forces that exist between particles during POME treatment.

2.4 Modelling with ANN

An artificial neural network (ANN) is a computational model inspired by the concept of human brain consisted of units called neuron. In the present study, modelling was carried out using MATLAB 2016a software. Generally, a neural network consists of 3 layers namely input, hidden and output layers. Each layer is connected through neurons via weight matrices. Figure 1 shows a single layer network where an input vector P is connected to neuron input through weight w that is usually associated with vectors and nodes. The input is multiplied by weight to form a product wp. The sum of weighted input and bias b form a transfer function of net input which determines the activation of neurons, and finally, forming an output after processing the transfer function f. Activation function or transfer function usually used is the sigmoid, which is logistic sigmoid (logsig) and hyperbolic tangent sigmoid (tansig) function.

There are several types of neural network techniques, namely feedforward, recurrent, recursive, convolution. Feedforward backpropagation neural network (FFNN) is the most commonly used among all multilayer perception (MLP) networks because in this network the information moves only from the input layer directly through the hidden layers to the output layer without any cycles or loops.

2.4.1 ANN Structure

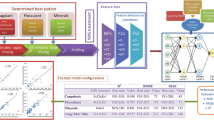

The ANN is composed of 3 layers which are the input layer, the hidden layer, and the output layer. The input layer contained four neurons since four parameters were manipulated in this study, namely pH, mixing speed (MX), fenugreek dosages (FD) and okra dosages (OD), whereas the output layer had three neurons as three parameters were controlled, namely TSS, TUR and COD removal percentages. There are a total of three transfer functions that were used in this study, namely tansig, logsig and purelin. Through trial and run method, it was found that the ‘tansig-purelin’ combination function results in the best regression, R value and lowest MSE value for all the three outputs. Thus, the neurons in the hidden layer used the ‘tan sigmoid’ function, whereas the output neurons used the ‘purelin’ function. The proposed network topology is 4-N-3, as illustrated in Fig. 2. The number of nodes in the input layer is four, and N is the number of neurons in the hidden layers, and three is the number of neurons in the output layer.

2.4.2 Data Division and Collection

Twenty five trial runs were obtained from Design Expert™ software, as presented in Table 2. The network was trained using these 25 runs. All the data needed to be normalized, and once the input and target data were normalized, it was divided into three sets of data which were training, validation and testing data through the ‘divideblock’ function. 50% of the data were used for training, 25% used for testing and 25% used for validation. The training dataset was used to train the model for adjusting the weights and biases, whereas the validation set was used to minimize overfitting. The data for the testing set was used to evaluate the prediction performance of the network.

2.4.3 Data Normalization

Input and output were normalized so that all data were in the uniform range for each parameter. This was to enhance the network performance and stability, since each variable, either input, output or target, was composed of varied ranges. The data was normalized in uniform range within an interval of -1 to 1 using the min-max normalization approach as shown in Eq. (2):

where x = actual data; xmin= minimum value of actual data; xmax= maximum value of actual data; y= minimum value in normalized data (default=-1); ymax= maximum value in normalized data (default=1)

2.4.4 Hidden Neuron Selection

The number of hidden neurons and hidden layers play a significant role in the performance of the network, especially for the MSE, MAE and MAPE values. Therefore, the network was trained using a trial-and-error method with hidden neurons varying from 1 to 25, in order to determine the optimum hidden neurons with the least error. Excessive number of neurons may cause overfitting of the network, whereas insufficient number of neurons may cause inaccuracy of performance.

2.4.5 Training Algorithm

Training of data is essential as it determines the optimal weights and bias points of the network to minimize the error between the output and target data. Backpropagation was used generally to calculate derivatives of performance through weight and bias variable; it functions by calculating the output layer first then propagate it backward.

Backpropagation algorithms can be categorized into six classes which are: (1) Adaptive momentum; (2) Self-adaptive learning rate; (3) Resilient backpropagation; (4) Conjugate gradient; (5) Quasi-Newton; and (6) Bayesian regularization. A further explanation of these algorithms is given in Sect. 2.3.5.1 till 2.3.5.7.

Adaptive Momentum (Training Function: GD, GDm)

Gradient descent backpropagation (GD): GD is the batch steepest descent training algorithm in which the weights and biases are updated in the negative gradient direction. The algorithm used seven training parameters namely epochs, show, goal, time, lr, min_grad and max_fail.

Self-adaptive Learning Rate (Training Function: GDx, GDa)

Gradient descent with momentum and adaptive learning rate backpropagation (GDx): ‘GDx’ states that the algorithm converges much slower than the other training algorithms, therefore, it is recommended to use this algorithm in training networks with early stopping since it will let the network to converge much slower to minimize the error.

Resilient Backpropagation (Training Function: trainrp)

The resilient backpropagation (RP) is used generally to eliminate harmful effects of partial derivatives magnitudes. The advantage of this algorithm is that the algorithm is much faster than the standard descent algorithm.

Conjugate Gradient (Training Function: traincgf, traincgp, traincgb, trainscg)

All of the conjugate gradient algorithms are required to determine the steepest descent direction which is the negative of the gradient on the 1st iteration since all the algorithms are based on conjugate directions. The steepest descent gradient can be calculated using Eq. (3). Then, the optimal distance was calculated using the line search method through Eq. (4). And Eq. (5) is the general equation that combines new steepest decent direction and previous search direction to determine a new search direction. The conjugate gradient has moderate memory requirements.

Conjugate Gradient Algorithms

-

(i)

Polak-Ribiere Update (CGP): For CGP, the search iteration was determined using Eq. (5) with computing new constant of βk (Eq. 6) in Eq. (5). It is stated that the algorithm has performance similar to CGF. The storage requirement of CGP (4 vectors) is slightly larger than CGF (3 vectors):

-

(ii)

Powell-Beale Restarts (CGB): It is stated that the CGB algorithm has better performance than the CGP algorithm and the storage requirement for CGB (6 is larger than CGP (4 vectors).

-

(iii)

Scaled Conjugate Gradient (SCG): The SCG algorithm is used to avoid time-consuming The algorithm combines LM and conjugate gradient approaches.

Quasi-Newton (Training function: trainbfg, trainoss, trainlm)

-

(i)

BFGS algorithm (BFG): The Newton’s method is considered as an alternative to the conjugate gradient since it provides fast optimization and convergence. The Newton’s method is based on the use of Eq. (7):

where Ak−1 is a Hessian matrix (second derivatives). However, it is not preferable to compute the matrix in the feedforward network due to its complexity and high cost. BFGS is recommended to be applied for smaller networks as it requires large storage since it needs more computation in each iteration.

-

(ii)

One step secant (OSS): OSS method is an alternative to BFGS algorithm as OSS requires smaller storage and does not involve second derivatives calculation and does not save the matrix since the algorithm assumes that the Hessian matrix is an identity matrix for each of the iterations.

-

(iii)

Levenberg-Marquardt (LM): The LM algorithm is commonly used in training a neural network mainly moderate-sized feedforward network, since LM appears to be the fastest method. This is presumably due to the efficiency of the matrix shown in Eq. (8), which reduces the performance function in each of the iteration. The advantage of this algorithm is that it can also be used for second-order training without computing the Hessian matrix:

Bayesian Regularization (Training function: trainbr)

Bayesian regularization backpropagation (BR) is a function used to minimize a combination of squared error and weight. It adjusts the weight and bias values according to LM optimization through calculating backpropagation by calculating the Jacobian performance X. This function is given in Eq. (9):

2.4.6 Optimization of ANN

ANN modelling is developed based on a trial-and-error method with different numbers of neurons. This technique is used for different training algorithms. Each training method results in a regression, R which indicates the relationship among the variables, thus, the R value should be near 1. Any training with R value of 0.8 to 1.0 is considered as a satisfactory result. The training process of the network will generate training, validation and testing errors. The error is calculated as the difference between target and output data. The selection of number of neurons and training algorithm is based on fitting of the graph with least value of Mean Squared Error (MSE), Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) whose formulae are given by following Eqs. (10) to (13):

where n is the experiment number; EV is the experimental value of ith experiment; PV is the predicted value of ith experiment.

However, it was found that the network still could not reach regression R higher than 0.8 after conducting several trial-and-run methods. Thus, ‘repmat’ function was added into the coding in order to multiply the input by 3 which results in total of 75 input data. This is presumably due to the fact that the network was a multi-input and multi-output (MIMO) model with limited input data.

3 Results and Discussion

3.1 Interaction between Process Variables and Responses

3.1.1 Effect of Coagulant Dosage

Figure 3 shows the influence of fenugreek dosage on the removal efficiencies of TSS, TUR and COD on POME treatment. It can be observed that the increase in coagulant dosage was able to increase the percent removal of TSS, TUR and COD. Highest percent removal of TSS, TUR and COD obtained at the above conditions are on average 90%, 80% and 70%, respectively.

This is probably due to the fact that fenugreek powder has rough and highly porous surfaces, which increases the overall surface area, thus increasing the adsorption sites (Chung et al. 2018). Sethu et al. (2015) stated that the fenugreek seed is an anionic polyelectrolyte and the coagulation process involves adsorption and inter-bridging mechanisms due to the fact that the seeds have protein that composes amide group. This statement is further verified by analysing the fenugreek solution with zeta potential that results in negative net charge of -39.6 mV. The reduction of removal efficiencies in certain dosages is probably due to that the negatively charged fenugreek replaces the anionic groups on POME colloidal particles (Ab Kadir et al. 2004).

3.1.2 Effect of Flocculant Dosage

Figure 4 shows that the TSS, TUR and COD removal increased with increasing okra dosage. Maximum removal percentage of TSS, TUR and COD were on average 95%, 95% and 70%, respectively.

The high removal may be due to the protein content in okra that act as active agent in flocculation process (Fahmi et al. 2013). It was reported that okra that was extracted with distilled water is an anionic polyelectrolyte composed of galactose, rhamnose and galacturonic acid which make the okra having acidic property with pH of 5.8. Okra was reported to mainly flocculate through bridging since the mucilage has similar composition to cactus (Sethu et al. 2019). This can be explained as both okra and cactus have galacturonic acid, which acts as an active component that contributes to the formation of bridging for the particles to adsorb onto (Yin 2010; Freitas et al. 2015). Therefore, higher dosage of okra should result in achieving higher removals of TSS, TUR and COD. The okra tends to replace the ionic group on the colloidal particle of POME which allows hydrogen bonding to occur between the colloids and okra. The reduction in removal efficiencies may be due to the re-stabilization of particles since the okra was negatively charged (Sethu et al. 2015).

3.1.3 Effect of pH

The pH was altered within the range of 3 to 8 to determine the effect of pH towards the removal efficiencies of TSS, TUR and COD. Based on Fig. 5, the removal efficiency slightly decreased with increasing pH.

This phenomenon can be explained as follows: pH changes usually do not affect the efficiency of bio-coagulants, thus any change in percent removals of TSS, TUR and COD must be due to its effect on the constituents of POME (Mishra et al. 2004). In addition, the reduction in removal efficiencies was due to an increase in concentration of OH− ions. At high pH, the OH− ions tend to compete with organic molecules for the vacant adsorption site on the coagulant (Ab Kadir et al. 2004). Under alkaline conditions, any positive charged reagent will tend to be negatively charged due to increasing of OH− ions, which can be illustrated in Table 3. Based on Table 3, maximum removals of TSS, TUR and COD achieved at pH 3 are probably due to occurring charged neutralization since raw POME is positively charged while the fenugreek solution is negatively charged at pH 3.

3.1.4 Effect of Rapid Mixing Speed

Rapid mixing speed plays a significant role in the coagulation-flocculation process, since it is during the mixing stage when the destabilization reaction and formation of floc particles occur. Figure 6 shows that the removal efficiency of TSS, TUR and COD increased as the mixing speed increased from 150 to 200 rpm.

Higher mixing speed causes increasing collision of particles and attachment which then promotes agglomeration of particles to form larger flocs. Besides, increasing rapid mixing speed during coagulation causes increasing of shear stress applied on the flocs which then lead to formation of denser and compact flocs (Ahmad Tajuddin et al. 2015; Choong et al. 2018). This mainly affected the removal of TSS and TUR, and not very much the removal of COD. From Fig. 6, it can be seen that the maximum percent removals of TSS, TUR and COD were found to be on average at 98%, 98% and 85% at a mixing speed of 200 rpm.

3.2 Artificial Neural Network Modelling

The experimental and predicted value from ANN modelling for TSS, TUR and COD with SCG training algorithm is given in Table 4.

3.2.1 TSS Response

Based on Tables 5, 6 and 7, the lowest MSE, MAE, and MAPE values for TSS response, or the output from the model, can be determined. Table 5 shows the MSE values for the TSS response for hidden neurons varying from 1 to 25. Table 5 provides the MSE values for 12 different training algorithms that were used in the study. The lowest MSE of 16.64 (bold font, Table 5) is obtained for SCG for 25 hidden neurons (HN). The largest MSE is 325.69 (bold font, Table 5) for GDX when HN is 12. Overall GD, LM and BR have shown lower MSE values for HN from 1 to 25 consistently. BR has been consistently having MSE values lower than 20 for HN 4 through 25. On the other hand, GD, GDA and GDX have shown some very high values of MSE of 177.97 for HN = 4, 124.33 for HN = 20, 135.95 for HN = 2, respectively.

Table 6 presents the MAE values for TSS response. MAE values for the hidden neurons from 1 to 25 are given in Table 6 for the 12 training algorithms. Lowest MAE values of 1.98 is obtained for LM with HN = 15. Highest MAE of 14.26 is obtained for GDX with HN = 9. LM and BR have shown good performance in terms of MAE whereas GD, GDA, and GDX are the worst performers with GDA showing very high MAE values of 13.84 and 12.46.

Table 7 shows the MAPE values for the TSS response. Lowest MAPE value of 0.03 and highest MAPE value of 0.16 is obtained for GDX with HN = 12. As an overall, the LM, BR and CGF have shown lower MAPE values, whereas GDA and GDX have shown higher values of MAPE.

3.2.2 TUR Response

Based on Tables 8, 9 and 10, lowest and highest MSE, MAE, MAPE values are determined for the TUR response for the 12 training algorithms. Table 8 shows the MSE values for the 12 training algorithms. BR and LM show better performance than other training algorithms. GDX is the worst performer of all. SCG shows the lowest MSE value of 5.05 for HN = 25 and highest MSE value of 73.68 for GD with HN = 4. Table 9 shows the MAE values for HN 1 through 25. LM and BR show good performance whereas GDA and GDX show poor performance. Lowest MSE value of 1 is obtained for LM with HN = 15. Highest MSE of 6.89 is for GDX with HN = 9. Table 10 shows the MAPE values for HN 1 through 25. LM shows good results compared to other training algorithms with multiple training algorithms showing lowest MAPE value of 0.01. The highest MAPE value of 0.07 is obtained for GDX HN = 9.

3.2.3 COD Response

Tables 11, 12 and 13 present MSE, MAE and MAPE values, respectively, for the COD response. Table 11 shows the MSE values for HN 1 through 25. Lowest MSE value of 54.59 was obtained for SCG HN = 25. Highest MSE value of 586.36 is obtained for GDX for HN = 23. BR has shown multiple lowest values of MSE for HN 6 through 25. GDX has shown multiple high values of MSE. Table 12 shows the MAE values with the lowest being 3.62 for LM with HN = 16. Highest MAE of 19.58 is for GDX with HN = 12. LM and BR have shown good performance where as GDX is worst performer with very high values of MAE for many HN’s. Table 13 shows the MAPE values with lowest being 0.06 for LM with HN = 17. Highest MAPE of 0.34 is obtained for GDX with HN = 12. Best MAPE performance has been seen in BR and LM and worst MAPE is for GDX with multiple high values of MAPE for various HN’s.

Based on the response of all the 3 outputs from Tables 3, 4, 5, 6, 7, 8, 9, 10 and 11, it can be concluded that the 25 hidden neurons were the optimal number of neurons in the hidden layer since most of the training algorithms for TSS response results in lowest value of MSE, MAE and MAPE value for 25 hidden neurons. The same holds for TUR and COD response. Through the 3 outputs, the selection of the training function is mostly based on COD response since the minimal MSE value that can be reached was 54.71 compared to TSS (16.64) and TUR (5.06) responses. Then, the best training algorithms for COD response is then further sorted by comparing the chosen training algorithm in TSS and TUR response which also gives the least value of MSE, MAE and MAPE values.

Four best training algorithms were identified namely Levenberg-Marquardt (LM), Bayesian regularization (BR), one step secant (OSS) and scaled conjugate gradient (SCG) training function. These four best algorithms were further sorted based on training algorithm that results in the least value of MSE, MAE and MAPE for each data sets namely the training (TR) data set, the testing (TS) data set and the validation (VL) data set for all the 3 outputs.

3.3 Optimization and Validation of the Experimental Results

In order to determine the best training algorithm among the four selected training functions, the MSE, MAE, and MAPE values were analysed. Based on Figs. 7 and 8, as well as Tables 14, 15 and 16, the scaled conjugate gradient (SCG) training algorithm proved to be the best training algorithm among all, since it has the most optimal MSE, MAE and MAPE values for the training, testing and validation data for all the 3 responses. For the TSS output, SCG results in MAE, MAE and MAPE values of 18.18, 2.26, 0.03 for training data set, 8.44, 1.24, 0.03 for testing data set and 21.53, 1.24, 0.03 for validation data set. In terms of the TUR output, SCG results in MAE, MAE and MAPE values of 6.18, 1.20, 0.01 for training data set, 0.73, 0.35, 0.004 for testing data set and 6.94, 1.34, 0.02 for validation data set.

For the COD output, SCG results in MAE, MAE and MAPE values of 64.87, 4.37, 0.08 for training data set, 13.91, 1.71, 0.03 for testing data set and 73.02, 4.75, 0.09 for validation data set. Besides, it is stated that SCG algorithm is capable in performing well in diverse problem (Wong et al. 2018). For instance, the convergence performance of SCG was nearly similar to LM on solving approximation problems, and the same goes for the solving of pattern recognition problems, which result in faster convergence as RP. Figs. 7 and 8 show the comparison of target and output data for each response using the SCG training algorithm.

3.3.1 TSS Response

3.3.2 TUR Response

3.3.3 COD Response

3.4 Evaluation on the Performance of ANN

The rule of thumb is that regression, R should approach 1, as the R value measures the correlation between actual and predicted value.

Figure 9 compare experimental TSS, TUR and COD with predicted values. The plot indicates that the training algorithm SCG has been successful in predicting the TSS, TUR and COD values as the graphs of predicted results match well with the experimental values. These plots give the best results of the study carried out.

Figure 10 depicts the R values obtained for the SCG training algorithm, with training, testing and validation data showing acceptable R values. This indicates the good fitting of the experimental data in the ANN model

4 Conclusions

Performance of coagulation-flocculation process using fenugreek-okra in treating POME was studied to evaluate the removal efficiency of TSS, TUR and COD. The results were further optimized using ANN modelling technique. ANN was used to evaluate the accuracy of the predicted results. The process involved four parameter inputs namely pH, stirring speed, fenugreek dosage and okra dosage, and three parameter outputs namely TSS, TUR and COD removal percentages. Optimum parameters determined were pH 3.2, 4.09 g/L of fenugreek dosage, 58 mL/500 mL POME of okra dosage and stirring speed of 197 rpm in order to yield maximum TSS, TUR and COD removal efficiencies of 92.7%, 94.97%, and 63.11%. An FFNN backpropagation model was developed using tangent-purelin transfer function and trained using 12 different training algorithms and 1 to 25 number of hidden neurons. It was observed that the SCG algorithm was the best training function for the coagulant-flocculant process with optimal MSE, MAE and MAPE values for all three outputs. From the present study, it was found that the ANN model has been successfully used to predict the TSS, TUR and COD with an R value of 0.8629.

References

Ab Kadir M, Nik Norulaini NA, Ahmad Zuhairi A, Muhamad Hakimi I (2004) Chemical coagulation of settleable solid-free palm oil mill effluent (POME) for organic load reduction. J Ind Technol 10(1):55–72. https://doi.org/10.11113/jt.v40.424

Ahmad Tajuddin H, Chuah Abdullah L, S Y (2015) Modelling of chitosan-treating palm oil effluent (POME) by artificial neural network (ANN). Int J Sci Res 4(4):3360–3365 (https://pdfs.semanticscholar.org/c7d8/066ff07f3c06c7be0943b73753f4651cb589.pdf?_ga=2.206608016.2017122194.1582259401-585789982.1571195784)

Arumugasamy S, Selvarajoo A (2015) Feedforward neural network modeling of biomass pyrolysis process for biochar production. Chem Eng Trans 45:1681–1686. https://doi.org/10.3303/CET1545281

Asad MT, Sethu V, Arumugasamy S, Selvarajoo A (2020) Rambutan and fenugreek seeds for the treatment of palm oil mill effluent (POME) and its feedforward artificial neural network (FANN) modelling. Res Commun Eng Sci Technol 4:1–14

Bhatia S, Othman Z, Ahmad AL (2007) Coagulation–flocculation process for POME treatment using Moringa oleifera seeds extract: Optimization studies. Chem Eng J 133(1–3):205–212. https://doi.org/10.1016/j.cej.2007.01.034

Choong BLL, Peter AP, Hwang KQC, Ragu P, Sethu V, Selvarajoo A, Arumugasamy S (2018) Treatment of palm oil mill effluent (POME) using chickpea (Cicer arietinum) as a natural coagulant and flocculant: Evaluation, process optimization and characterization of chickpea powder. J Environ Chem Eng 6(5):6243–6255. https://doi.org/10.1016/j.jece.2018.09.038

Chung CY, Selvarajoo A, Sethu V, Koyande AK, Arputhan A, Lim ZC (2018) Treatment of palm oil mill effluent (POME) by coagulation flocculation process using peanut–okra and wheat germ–okra. Clean Technol Environ Policy 20(9):1951–1970. https://doi.org/10.1007/s10098-018-1619-y

Fahmi MR, Hamidin N, Abidin CZA, Fazara MAUF, Hatim MDI (2013) Performance evaluation of okra (Abelmoschus esculentus) as coagulant for turbidity removal in water treatment. Key Eng Mater 594-595:226–230. https://doi.org/10.4028/www.scientific.net/KEM.594-595.226

Freitas T, Oliveira V, de Souza M, Geraldino H, Almeida V, Fávaro S, Garcia J (2015) Optimization of coagulation-flocculation process for treatment of industrial textile wastewater using okra (A. esculentus) mucilage as natural coagulant. Ind Crops Prod 76:538–544. https://doi.org/10.1016/j.indcrop.2015.06.027

Haghiri S, Daghighi A, Moharramzadeh S (2018) Optimum coagulant forecasting by modelling jar test experiments using ANNs. Drink Water Eng Sci 11(1):1–8. https://doi.org/10.5194/dwes-11-1-2018

Karri RR, Sahu JN (2018) Process optimization and adsorption modelling using activated carbon derived from palm oil kernel shell for Zn (II) disposal from the aqueous environment using differential evolution embedded neural network. J Mol Liq 265:592–602. https://doi.org/10.1016/j.molliq.2018.06.040

Mishra A, Yadav A, Agarwal M, Bajpai M (2004) Fenugreek mucilage for solid removal from tannery effluent. React Funct Polym 59(1):99–104. https://doi.org/10.1016/j.reactfunctpolym.2003.08.008

Pakalapati H, Arumugasamy S, Khalid M (2019a) Comparison of response surface methodology and feedforward neural network modelling for polycaprolactone synthesis using enzymatic polymerization. Biocatal Agric Biotechnol 18:101046. https://doi.org/10.1016/j.bcab.2019.101046

Pakalapati H, Tariq MA, Arumugasamy S (2019) Optimization and modelling of enzymatic polymerization of ε-caprolactone to polycaprolactone using Candida Antartica Lipase B with response surface methodology and artificial neural network. Enzym Microb Technol 122:7–18. https://doi.org/10.1016/j.enzmictec.2018.12.001

Pilkington JL, Preston C, Gomes RL (2014) Comparison of response surface methodology (RSM) and artificial neural networks (ANN) towards efficient extraction of artemisinin from Artemisia annua. Ind Crops Prod 58:15–24. https://doi.org/10.1016/j.indcrop.2014.03.016

Selvanathan M, Yann K, Chung CY, Selvarajoo A, Arumugasamy SK, Sethu V (2017) adsorption of copper (II) ion from aqueous solution using biochar derived from rambutan (Nephelium lappaceum) Peel: Feedforward Neural Network Modelling Study. Water Air Soil Pollut 228(8):229. https://doi.org/10.1007/s11270-017-3472-8

Selvarajoo A, Muhammad D, Arumugasamy SK (2019) An experimental and modelling approach to produce biochar from banana peels through pyrolysis as potential renewable energy resources. Model Earth Syst Environ 6:115–128. https://doi.org/10.1007/s40808-019-00663-2

Sethu V, Mendis A, Rajiv R, Chimbayo S, Vejayan S (2015) Fenugreek seeds for the treatment of Palm Oil Mill Effluent (POME). Int J Chem Environ Eng 6(2):1–5

Sethu V, Selvarajoo A, Lee CW, Pavitren G, Goh SL, Mok XY (2019) Opuntia cactus as a novel bio-coagulant for the treatment of palm oil mill effluent. Prog Energy Environ 9:11–26

Ugwu SN, Umuokoro AF, Echiegu EA, Ugwuishiwu BO, Enweremadu CC (2017) Comparative study of the use of natural and artificial coagulants for the treatment of sullage (domestic wastewater). Cogent Eng 4:1. https://doi.org/10.1080/23311916.2017.1365676

Wong Y, Arumugasamy S, Jewaratnam J (2018) Performance comparison of feedforward neural network training algorithms in modelling for synthesis of polycaprolactone via biopolymerization. Clean Technol Environ Policy 20(9):1971–1986. https://doi.org/10.1007/s10098-018-1577-4

Yacob S, Ali Hassan M, Shirai Y, Wakisaka M, Subash S (2006) Baseline study of methane emission from anaerobic ponds of palm oil mill effluent treatment. Sci Total Environ 366:187–196. https://doi.org/10.1016/j.scitotenv.2005.07.003

Yin C (2010) Emerging usage of plant-based coagulants for water and wastewater treatment. Process Biochem 45(9):1437–1444. https://doi.org/10.1016/j.procbio.2010.05.030

Yogeswari MK, Dharmalingam K, Mullai P (2019) Implementation of artificial neural network model for continuous hydrogen production using confectionery wastewater. J Environ Manag 252:109684. https://doi.org/10.1016/j.jenvman.2019.109684

Acknowledgements

The author would like to acknowledge Jugra Palm Oil Sdn. Bhd. (Selangor, Malaysia) for providing the POME samples throughout the studies.

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

About this article

Cite this article

Mohd Najib, N.A., Sethu, V., Arumugasamy, S.K. et al. Artificial Neural Network (ANN) Modelling of Palm Oil Mill Effluent (POME) Treatment with Natural Bio-coagulants. Environ. Process. 7, 509–535 (2020). https://doi.org/10.1007/s40710-020-00431-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40710-020-00431-w