Abstract

Peer feedback can be described as the act of one learner evaluating the performance of another learner. It has been shown to positively impact student learning and achievement in language learning contexts. It is a skill that can be trained, and there have been calls for research on peer feedback training. Mobile microlearning is a type of technology-enhanced learning which is notable for its short duration and flexibility in the time and place of learning. This study aims to evaluate how an asynchronous microlearning app might improve students’ skills for providing peer feedback on spoken content in the context of English as a foreign language (EFL) education. This study used convenience sampling and a quasi-experimental single-group pre-/post- research design. Japanese university students (n = 87) in an EFL course used the Pebasco asynchronous microlearning app to practice peer feedback skills. The students’ app usage data were used to identify five behavioral profiles. The pattern of profile migration over the course of using Pebasco indicates that many participants improved or maintained desirable patterns of behavior and outcomes, suggesting a positive impact on the quality of peer feedback skills and second-language (L2) skills, as well as the ability to detect L2 errors. The findings also suggest improvements that can be made in future design iterations. This research is novel because there is currently little research on the use of no-code technology to develop educational apps, particularly in the context of microlearning for improving peer feedback skills in EFL.

Introduction

Peer feedback can be described as the act of one learner evaluating the performance of another learner. It has been shown to positively impact student learning and achievement (Hattie, 2009, 2012; Hattie & Clarke, 2019; Kerr, 2020). It also has been found to improve students’ self-reflection/internal feedback skills (To & Panadero, 2019). In the context of language learning, the practice of peer feedback (both provision and reception) can improve learners’ second/foreign language (L2) skills (El Mortaji, 2022; Patri, 2002; Rodríguez-González & Castañeda, 2016); and peer feedback practice can improve learners’ ability to detect L2 errors (Fujii et al., 2016). Peer feedback is a skill that can be trained (Sluijsmans et al., 2004; van Zundert et al., 2010).

There have been calls for research examining how training can enhance the quality of peer feedback (Dao & Iwashita, 2021) and for more use of experimental and quasi-experimental methodologies in peer feedback research (Topping, 2013). In the Dual Model Theory of peer corrective feedback (PCF), it is theorized that there are educational benefits to both the provision and reception of PCF, and there is a call for research to “experimentally tease apart the two sides” (Sato, 2017, p. 30). The current study addresses this call for research by isolating just the provisioning side of peer feedback.

Mobile microlearning is a type of technology-enhanced learning which is notable for its short duration and flexibility in the time and place of learning (Hug et al., 2006). Although several studies have explored novel uses of microlearning to improve L2 vocabulary skills (e.g., Arakawa et al., 2022; Dingler et al., 2017; Inie & Lungu, 2021; Kovacs, 2015), little research has investigated asynchronous mobile microlearning for training peer feedback skills on spoken content in foreign language learning. The current study aims to fill this gap.

The opportunity to address these gaps in the literature arose due to an unexpected change in a teaching context. In response to the ongoing COVID-19 pandemic, the city of Tokyo was placed under a declared state of emergency multiple times in 2021 (The Japan Times, 2021). As a result, the university where the current study was conducted decided that some classes would be held in person while others would be taught using an online asynchronous modality. The present study examines the continued development and use of the Pebasco app to support the adaptation of a mandatory Communicative Language Teaching (CLT)-based English as a foreign language (EFL) class to this asynchronous modality.

The first version of Pebasco was a prototype learning analytics system used in synchronous online classes held in a Japanese university in the first year of the COVID-19 pandemic (Gorham & Ogata, 2020). It was designed to improve students’ peer feedback skills on spoken content in a CLT-based EFL class context. Following an iterative design process, the second version of Pebasco was built as a standalone asynchronous microlearning app using the no-code development platform Bubble (Bubble, 2022).

The second version of Pebasco allows for the collection of learning analytics data. Based on the system affordances and learner interactions with the system, this paper first defines the learner behavior profiles of the participants (n = 87) for this language microlearning task. Using these learner profiles, this paper attempts to answer the following research question by tracking the migration of learner profiles over the course of using the Pebasco system:

How and to what extent can the asynchronous microlearning app Pebasco improve students’ skills for providing peer feedback on spoken content in CLT-based English as a foreign language (EFL) education?

Literature review

Peer feedback

Peer feedback is sometimes considered a variant of peer evaluation that includes providing more detailed qualitative comments (see Alqassab et al., 2018; Liu & Carless, 2006). However, for this literature review, we will follow Panadero and Alqassab’s (2019) lead and treat peer assessment and peer feedback as synonymous. We consider peer feedback as the act of one learner evaluating the performance of another learner. We also include peer corrective feedback (PCF) as a synonym, which is differentiated from corrective feedback provided by a teacher.

Theoretical perspectives on peer feedback

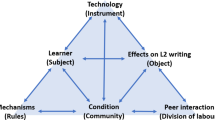

Multiple theoretical lenses can be used to frame peer feedback (Sato, 2017). It fits well within the ICAP framework (Chi et al., 2018). The ICAP framework addresses active learning through the lens of cognitive engagement as observed through overt behaviors. The levels of the framework listed in descending order of cognitive engagement are “interactive,” “constructive,” “active,” and “passive.” Peer feedback activities tend to require more cognitive engagement than passive activities, because learners must generate their own judgments about a peer’s performance rather than simply receive information. In the context of second-language learning, Sato and Lyster (2012) draw upon skill acquisition theory to explain that some of the benefits of PCF result from the multiple repetitions of practice that it requires.

Nicol et al. (2014) demonstrated that the act of providing peer feedback can encourage students to think more deeply and critically about their own work. This may be because providing peer feedback harnesses the brain’s natural inclination to engage in comparison (Nicol, 2020). The act of comparison is a process at the heart of much of human cognition (Gentner et al., 2001; Goldstone et al., 2010; Hofstadter & Sander, 2013). Nicol (2020) theorizes that engaging in peer feedback contributes to the development of “internal feedback” skills, in which learners reflect on and regulate their own learning processes. In the context of second-language learning, Levelt’s perceptual theory of monitoring has been used to explain how a learner can improve their internal feedback skills by providing peer feedback (Sato, 2017; Sato & Lyster, 2012). The dual model of PCF expands on this by theorizing that there are benefits to both providing and receiving peer feedback. There has been a call for future research that can investigate these two sides independently (Sato, 2017).

Efficacy of peer feedback

Peer feedback has been shown to positively impact student learning and achievement (Hattie, 2009, 2012; Hattie & Clarke, 2019; Kerr, 2020). These findings have also held true in online learning environments such as MOOCs (Kasch et al., 2021). It has been found to improve students’ self-reflection/internal feedback skills (To & Panadero, 2019).

In the context of language learning, peer feedback can improve learners’ L2 language skills and public speaking presentation skills (El Mortaji, 2022; Patri, 2002). Rodríguez-González and Castañeda (2016) found that peer feedback can improve L2 speaking skills and feelings of self-confidence and self-efficacy. Peer feedback practice can improve learners’ ability to detect language errors (Fujii et al., 2016), and PCF has been found to be a “feasible option for helping learners to attend to form in effective ways during peer interaction” (Sato & Lyster, 2012, p. 618). Conversely, Adams et al. (2011) found that peer feedback had a negative effect on L2 learning. This may point to the importance of improving the quality of peer feedback through training.

Peer feedback training

In the context of a teacher training course, Sluijsmans et al. (2004) concluded that “peer assessment is a skill that can be trained” (p. 74). In a survey of 27 studies conducted at the university level, van Zundert et al. (2010) found evidence that training can improve peer assessment skills and the attitude that students have toward peer assessment.

Similarly, in the context of language learning, Sato (2013) found that peer feedback training could improve the attitude that learners have toward peer feedback while also improving the learners’ ability to notice grammar (i.e., form) mistakes in both their peers’ speaking and in their own (i.e., internal feedback). Training was also found to improve the amount of peer feedback given and its quality in studies with Japanese adult English language learners and children in Canadian French immersion programs (Sato & Ballinger, 2016).

One challenge in implementing peer feedback in language learning contexts is that “learners need a threshold level of the target language” (Lyster et al., 2013, p. 29) for them to offer accurate feedback. Toth (2008) identified this same problem of students’ peer feedback accuracy and suggested the need for training. Foreign language students have reported a reluctance to give peer feedback because of a lack of L2 proficiency (Philp et al., 2010). It is hypothesized that students will improve their peer feedback skills and L2 skills using the Pebasco app.

Microlearning and peer feedback apps

The concept of microlearning has been around for nearly 20 years, and the practice has been evolving along with the technologies that enable it (Hug et al., 2006). To best understand microlearning, it is worthwhile to consider some of its inherent design principles. Jahnke et al. (2020) identified the design principles of mobile microlearning (i.e., microlearning that is accessed on mobile devices), including the following key ideas: (a) the learning activities should be practical and interactive; (b) the activities should be “snackable,” meaning students should be able to complete activities at their convenience in just a few minutes; (c) microlearning activities should include multimedia content; (d) the activities should provide immediate formative feedback; and (e) finally, microlearning apps should be available across multiple types of devices and operating systems. Lee et al. (2021) suggest that mobile microlearning is better suited for training lower-order thinking skills.

Microlearning is inherently flexible and can be connected with “multiple learning theories and approaches” (Garshasbi et al., 2021, p. 241). For example, Khong and Kabilan (2022) developed a theoretical model of microlearning for second-language instruction. Their theory fuses multiple existing theories related to cognitive science, motivation, and multimedia technology. One interesting element of their theory is the incorporation of Cognitive Load Theory (Sweller, 1994, 2020). This suggests that one of the advantages of the brief duration of microlearning activities is that the effort required does not exceed the cognitive capacity that a learner has available at the time to allocate to a particular activity.

While microlearning is often used in business and industry contexts, Drakidou and Panagiotidis (2018) cite examples of microlearning in foreign language learning. A significant amount of research has focused on using microlearning to improve L2 vocabulary. For example, Dingler et al. (2017) created a mobile microlearning app called “QuickLearn,” which offered L2 vocabulary practice with flashcards and multiple-choice questions that could be pushed by mobile device notifications. They found that their study’s participants used the app more while in transit between two locations.

Schneegass et al. (2022) investigated the integration of L2 vocabulary practice into common mobile device interactions, such as unlocking the device through an authentication action and responding to notifications. Inie and Lungu (2021) developed an internet browser extension that requires the user to spend a specified amount of time engaged in language learning activities such as (but not limited to) L2 vocabulary study before allowing them to access a designated “time-wasting” website. They reported a slight improvement in the post-tests following the use of the system, but not all the participants reported enjoying using it in its current implementation.

L2 vocabulary microlearning has also been investigated with an app that leverages user location data (Edge et al., 2011); with passive exposure to vocabulary and collocated phrases via an automatically updated wallpaper screen on the user’s mobile device (Dearman & Truong, 2012); with an Internet browser extension that overlays L2 words on first language website content (Trusty & Truong, 2011); with a system that inserts interactive vocabulary quizzes into the user’s social media feed (Kovacs, 2015); and with machine learning (natural language processing) to offer vocabulary practice that is embedded into the learner’s daily life (Arakawa et al., 2022). L2 vocabulary microlearning has been inserted in the time wasted while people are waiting for an elevator, an instant message reply, or a wi-fi connection (Cai et al., 2015, 2017). Zhao et al. (2018) even suggested using smartwatches for L2 vocabulary learning.

Despite this considerable focus on L2 vocabulary learning, there is a lack of research on using microlearning to train peer feedback skills. However, there is a relatively long history of using technology to support the provision of peer feedback. van den Bogaard and Saunders-Smits (2007) describe the PeEv system from the Delft University of Technology and the SPARK (Self and Peer Assessment Resource Kit) from the University of Technology Sydney, which date back to the turn of the twenty-first century (Sridharan et al., 2019). Two more recent tools that are used for providing peer feedback include the University of Technology Sydney’s updated SPARKPLUS system (Knight et al., 2019) and the Comprehensive Assessment of Team Member Effectiveness (CATME) system (Layton et al., 2012; Loughry et al., 2014; Ohland et al., 2012).

Both systems have some functionality for training students to provide peer feedback. CATME’s calibration function allows students to practice on fictional student content using the rating scale for a particular activity. Similarly, SPARKPLUS has a “benchmarking” feature where students can check to see if their peer feedback matches the instructor’s. While these systems are examples of technology-assisted peer feedback training, a search of the literature did not return any research investigating the use of the SPARKPLUS benchmarking function or the CATME rater calibration feature for training peer feedback skills in the context of spoken content in foreign language learning.

Çelik et al. (2018) describe a digital tool used in teacher training that allows users to tag videos of their peers’ teaching practice with feedback comments. In the context of foreign language learning, PeerEval is a mobile app for students to provide real-time peer feedback during classmates’ L2 presentations (Wu & Miller, 2020). While both tools provide a platform for providing peer feedback on spoken content, neither one offers asynchronous microlearning training of peer feedback skills. In fact, Wu and Miller (2020) cite the need for training students how to confidently provide feedback as the main challenge with using the PeerEval app.

Little research has investigated asynchronous mobile microlearning for training peer feedback skills on spoken content in foreign language learning. The development of the second version of the Pebasco app aims to fill this gap.

Scaffolding learning with hints

One of the challenges of designing asynchronous learning experiences is that teachers cannot directly give their students scaffolding support as they might in a traditional face-to-face class. For this reason, research on primarily asynchronous massive open online courses (MOOCs) can guide possible approaches, because much of it has investigated ways to provide students with feedback and support (Kasch et al., 2021; Meek et al., 2017). Heffernan and Heffernan (2014) describe the ASSISTments platform, which allows researchers to investigate the effects of different types of hints and scaffolding in online educational contexts such as MOOCs. Zhou et al. (2021) used the ASSISTments platform together with the edX MOOC Big Data and Education. They found that adult learners with lower prior knowledge of the material performed worse when they were required to complete an entire sequence of scaffolding support, regardless of how relevant it was to them at the time. They suggested that adults who prefer more learner autonomy may prefer to select only the hints that they need. Another option provided to the participants in that study was to request that the system reveal the correct answers to items. This option was also described by Moreno-Marcos et al. (2019). In the second version of Pebasco, students can choose what level of support they receive from the system, including requesting that the correct answers be revealed for each item. This design choice aligns with the theoretical perspective of Cognitive Load Theory as described earlier.

Design of Pebasco v. 2.0

Overview of Pebasco

The name Pebasco is formed from letters in the phrase “peer feedback on spoken content” (PEer feedBAck on Spoken COntent). It has been used in the context of mandatory Communicative Language Teaching-focused English as a foreign language classes at a Japanese university.

Building with no-code technology

The second version of Pebasco was built as a standalone asynchronous microlearning app using the no-code development platform Bubble (Bubble, 2022). No-code technology enables people with limited programming experience to develop websites and interactive apps using visual programming languages and more intuitive, human-readable syntax. No-code technology is rapidly gaining adoption in business and industry; Chris Wanstrath, the former CEO of the major software development platform GitHub, has said that in the future, all coding will be done by some form of no-code (Johannessen & Davenport, 2021). This is an important element of this paper’s novelty because a literature search reveals a lack of research on the use of no-code technology to develop educational apps, learning analytics apps, or apps for computer-assisted language learning (CALL).

Bubble is a multi-functional no-code platform. It can serve as an editing tool for creating the user interface and the underlying workflows of an app. It can also host the app on the web and maintain the dynamic database of all of the app’s data. Figure 1 displays some of the collapsed workflows that underlie one of the pages in the Pebasco app; each of the rectangles can be clicked to expand and display the step-by-step breakdown of the actions that comprise that particular workflow.

Just because Bubble does not require a user to know how to program in a particular computer coding language does not mean that it is necessarily easy to learn. Bubble offers more flexibility in the range of websites and apps that can be built than many other no-code tools do. However, that results in more complexity and a steeper learning curve. As none of the authors of this paper had used a no-code tool to build an app before, it was essential to reach out to the no-code community for support. This support included an official Bubble Bootcamp offered from the Bubble homepage (Bubble, 2022) and no-code design and educational groups such as ProNoCoders (2022) and Buildcamp (2022).

The Pebasco student interface

The purpose of Pebasco is to help students improve their peer feedback skills and internal feedback skills by comparing the feedback that they give to the expert feedback from a teacher. In brief, students listen to audio clips that were recorded by students enrolled in the same course. For each audio clip, the teacher has made timestamped comments, each indicating a mistake within the audio. Each audio has between one and seven teacher comments. Each teacher comment indicates a grammar/vocabulary mistake, a pronunciation mistake, or an unnecessarily long pause in the audio. The goal is for the student to listen to an audio and try to predict the type and timestamp of each of the teacher’s comments. If a student successfully predicts all the teacher’s comments in an audio, they can earn up to three stars for that audio. Students can make an unlimited number of attempts per audio.

After a student logs in to Pebasco, they first see the unit list page (see Fig. 2). There are currently four units with 15 audios per unit. Students were assigned one unit per week over four weeks. The student then selects an audio by clicking the “New Attempt” button. This takes the student to the attempt page (see Fig. 3).

On the attempt page, students have a simple audio player which allows them to play, pause, and skip forward or backward by 5 s. If a student identifies a section of the audio where they think the teacher may have left a comment, they will pause the audio, which then displays the current timestamp. The student can then annotate the audio at that timestamp by clicking on the red “Feedback” button. Next, they select the type of teacher comment (e.g., a pronunciation mistake) from a dropdown list. Then they indicate how confident they feel that their feedback annotation matches with the teacher comment. The system then announces if the student annotation matches with a teacher comment near the same timestamp.
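The matching step can be pictured as a nearest-timestamp check. The paper does not specify how near an annotation must be or whether its type must also agree, so the sketch below assumes a hypothetical ±3-second window (`TOLERANCE_S`) and a required type match; the class and function names are likewise illustrative, not Pebasco’s own (the app was built in Bubble, not in Python).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tolerance window; the paper says only "near the same timestamp".
TOLERANCE_S = 3.0


@dataclass(frozen=True)
class TeacherComment:
    timestamp_s: float
    kind: str  # "grammar/vocabulary", "pronunciation", or "pause"


@dataclass(frozen=True)
class Annotation:
    timestamp_s: float  # where the student paused the player
    kind: str           # type chosen from the dropdown list
    confidence: int     # self-reported confidence; not used for matching here


def match_annotation(annotation: Annotation,
                     comments: list[TeacherComment],
                     already_matched: set[int]) -> Optional[int]:
    """Return the index of the closest not-yet-matched teacher comment of the
    same type within TOLERANCE_S seconds, or None for a false positive."""
    best_index: Optional[int] = None
    best_gap = float("inf")
    for i, comment in enumerate(comments):
        if i in already_matched or comment.kind != annotation.kind:
            continue
        gap = abs(comment.timestamp_s - annotation.timestamp_s)
        if gap <= TOLERANCE_S and gap < best_gap:
            best_index, best_gap = i, gap
    return best_index


comments = [TeacherComment(12.0, "pronunciation"), TeacherComment(40.5, "pause")]
print(match_annotation(Annotation(13.5, "pronunciation", 3), comments, set()))  # 0
print(match_annotation(Annotation(25.0, "pause", 2), comments, set()))  # None
```

Tracking `already_matched` keeps one teacher comment from being credited twice; whether Pebasco enforces the same rule is not stated in the paper.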

Hints and stars

If a student successfully matches their peer feedback annotations to all of the teacher comments in an audio, the system makes a congratulatory announcement and awards them three stars for that audio. In the current version of Pebasco, users are not penalized for making “false positive” annotations (i.e., an annotation that does not match a teacher comment). This design decision was made to not discourage students from attempting annotations even if they did not have high confidence that they were correct.

The Pebasco app also has a hint engine to scaffold students in this activity. Below the red “Feedback” button on each audio’s attempt page is a “Hint” icon (see Fig. 3). Students can click on that icon up to three times to access increasing levels of support from the system. With the first hint, the system tells the student how many teacher comments are in the current audio. With the second hint, the system provides the timestamp of each teacher comment, along with links that jump the audio player to a few seconds before each timestamp and begin playing the audio. The links can be clicked multiple times. The third hint is a request to reveal the answers, similar to the functionality used in prior studies (Moreno-Marcos et al., 2019; Zhou et al., 2021). When the third hint is used, the following information is shared with the user: the number of teacher comments; the timestamps and links to each timestamp in the audio for each teacher comment; the type of each teacher comment; the corrected text of the mistake (in the case of a grammar/vocabulary mistake) or a link to audio examples of British or North American pronunciation of words (in the case of a pronunciation mistake); and a full transcript of the audio clip (see Fig. 4).
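The hint engine reveals information cumulatively, with each level adding to what the previous one showed. The sketch below models that as a function returning what a student sees after a given number of hint clicks. The names (`AnswerKeyEntry`, `hint_payload`) and the 3-second jump-back offset (`JUMP_BACK_S`, standing in for the text’s “a few seconds”) are assumptions; the real app links pronunciation examples as audio, which this sketch reduces to a text field.

```python
from dataclasses import dataclass

# Hypothetical lead-in for the jump links ("a few seconds" before each comment).
JUMP_BACK_S = 3.0


@dataclass(frozen=True)
class AnswerKeyEntry:
    timestamp_s: float
    kind: str        # grammar/vocabulary, pronunciation, or pause
    correction: str  # corrected text, or a pronunciation-example link


def hint_payload(level: int, key: list[AnswerKeyEntry], transcript: str) -> dict:
    """Information shown after `level` hint clicks (0-3); levels are cumulative."""
    if not 0 <= level <= 3:
        raise ValueError("at most three hints are available per attempt")
    shown: dict = {}
    if level >= 1:
        shown["comment_count"] = len(key)
    if level >= 2:
        shown["timestamps"] = [e.timestamp_s for e in key]
        # Jump targets start a little before each comment, never before 0:00.
        shown["jump_to"] = [max(0.0, e.timestamp_s - JUMP_BACK_S) for e in key]
    if level == 3:  # the "reveal the answers" request
        shown["types"] = [e.kind for e in key]
        shown["corrections"] = [e.correction for e in key]
        shown["transcript"] = transcript
    return shown


key = [AnswerKeyEntry(2.0, "pause", ""),
       AnswerKeyEntry(10.0, "grammar/vocabulary", "I went there")]
print(hint_payload(1, key, "(transcript)"))  # {'comment_count': 2}
```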

The number of hints used in an attempt determines the number of stars awarded when a student matches all the teacher comments. If a student uses zero hints, they are awarded three stars. If they use one hint, they get two stars. If they use two hints, they get one star. Finally, if they use all three hints (i.e., request to reveal the answers), they get zero stars. Whenever a student makes a new attempt at an audio, the number of hints used for that attempt is reset to zero.
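The star rule reduces to a one-line calculation. A minimal sketch, with `stars_for_attempt` as a hypothetical name (Pebasco itself was built in Bubble, so this is an illustration of the rule, not its actual logic):

```python
def stars_for_attempt(hints_used: int, matched_all: bool) -> int:
    """Stars for one attempt: 3 minus the hints used, awarded only when every
    teacher comment in the audio was matched."""
    if not 0 <= hints_used <= 3:
        raise ValueError("an attempt can use between 0 and 3 hints")
    return 3 - hints_used if matched_all else 0


# Each new attempt starts with zero hints, so a retry can still earn 3 stars.
print([stars_for_attempt(h, True) for h in range(4)])  # [3, 2, 1, 0]
```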

The number of attempts made and stars earned are dynamically reflected on the unit list page. The “New Attempt” button changes color from red (zero stars), to orange (one star), to yellow (two stars), to green (three stars), reflecting the maximum number of stars earned across all attempts made on an audio. For example, in Fig. 2, in the second audio of unit 2 (i.e., Audio 2.02), the student had made one attempt, using two hints while matching all of the teacher comments, to earn one star. In the fifth audio of unit 2 (i.e., Audio 2.05), the student did not earn any stars on their first attempt (either because they did not match all of the teacher comments or because they used all three hints and requested the reveal of the correct answers), but they were able to earn three stars on their second attempt. Note that the screenshot in Fig. 2 was taken early in the student’s engagement with Pebasco’s unit 2; the student could still make unlimited future attempts to earn three stars on each audio.
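The button logic described above amounts to taking the best result across attempts and mapping it to a color. In this sketch the names are hypothetical, and showing red for an audio with no attempts yet is an assumption, since the paper describes the colors only in terms of stars earned.

```python
# Color of the "New Attempt" button for each best-star value, as described in the text.
BUTTON_COLORS = {0: "red", 1: "orange", 2: "yellow", 3: "green"}


def best_stars(attempt_stars: list[int]) -> int:
    """Maximum stars earned across all attempts on one audio (0 if none)."""
    return max(attempt_stars, default=0)


def button_color(attempt_stars: list[int]) -> str:
    """Assumption: an unattempted audio shows the zero-star color."""
    return BUTTON_COLORS[best_stars(attempt_stars)]


# Audio 2.02 in Fig. 2: one attempt, two hints, all comments matched -> 1 star.
print(button_color([1]))     # orange
# Audio 2.05: no stars on the first attempt, three on the second.
print(button_color([0, 3]))  # green
```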

Methods

Research design and context

Research design

This study used convenience sampling and a quasi-experimental single-group pre-/post- research design. Participants used the Pebasco system over the course of four units. The first unit was treated as a tutorial for the students to get familiarized with the system’s functionality. The participants’ usage patterns with the system and their end-of-activity performance in the second (pre) and fourth (post) units were compared. The usage patterns and performance data were used to create profiles. Based on work by Majumdar and Iyer (2016), each profile is considered a stratum, and the transition pattern is measured across the two phases (i.e., unit 2 and unit 4). The migration in profile types from the second to fourth units was described and further compared by appropriate statistics to evaluate if there was an improvement in the group of participants’ peer feedback skills over the course of using the Pebasco system.

Course goals

The context for this study is an asynchronous introductory CLT-focused EFL course that was mandatory for all first-year students in the Faculty of Letters at a university in Tokyo, Japan. The primary goal of the course is to improve students’ basic English communicative skills. One of the sub-goals of the course is to improve the students’ peer feedback and internal feedback skills for spoken content.

Participants

The participants in this study were first-year university students enrolled in the course described in the previous section. They were selected using convenience sampling. A total of 131 students were enrolled in the course. However, only students who provided informed consent and who had at least tried the second and last (fourth) units in Pebasco were included in this study (n = 87).

Data collected and analysis method

Data collected from Pebasco

The Pebasco app collects learning analytics data from user interactions with the system. Table 1 shows the number of attempts that all the students made in each unit of Pebasco. For each record of a student’s attempt, the system collected additional data, including the number of stars earned on the attempt, the number of hints used, the number of false positives (annotations that did not match with a teacher comment), the number of seconds that the audio was played during the attempt, the level of confidence a student reported for each annotation, and other clickstream data created when navigating the audio player.

The highest level of analysis provided by the Pebasco system is at the student outcome level. It shows how many stars an individual earned for each unit. Each unit has 15 audios with an opportunity to earn three stars per audio, resulting in the potential to earn a maximum of 45 stars per unit. The students are told when they are assigned a Pebasco unit that their score for the activity is based on the number of stars they earn in the unit, regardless of the number of attempts they make. The score breakdown is 10 points (45 stars), 9 points (40–44 stars), 8 points (35–39 stars), 7 points (30–34 stars), 6 points (25–29 stars), 5 points (20–24 stars), 4 points (15–19 stars), 3 points (10–14 stars), 2 points (5–9 stars), 1 point (1–4 stars), and 0 points (0 stars).
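The score bands listed above follow a regular pattern: apart from a total of zero, every five stars moves the score up one point. The sketch below restates the bands as a single expression. This is our derivation from the listed bands rather than a formula given by the authors, and `unit_points` is a hypothetical name.

```python
MAX_UNIT_STARS = 15 * 3  # 15 audios per unit, up to 3 stars each


def unit_points(unit_stars: int) -> int:
    """Convert a unit's star total (0-45) to the 0-10 point score.

    Reproduces the published bands: 0 stars -> 0 points, 1-4 -> 1,
    5-9 -> 2, ..., 40-44 -> 9, 45 -> 10.
    """
    if not 0 <= unit_stars <= MAX_UNIT_STARS:
        raise ValueError("a unit allows between 0 and 45 stars")
    return 0 if unit_stars == 0 else unit_stars // 5 + 1


print(unit_points(29), unit_points(30))  # 6 7
```

With this mapping, 29 stars yields six points and 30 stars yields seven, which is why the seven-point cut-off for the “High Stars” category corresponds to 30 stars.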

For the present study, unit 1 of Pebasco was used for onboarding and training purposes. The students’ outcomes and behaviors in unit 2 and unit 4 were compared for this study. Figure 5 shows the distribution of points (which reflect the sum of the maximum number of stars students earned for each audio in a unit) earned by students in unit 2 compared to unit 4. Students who earned seven points or more (i.e., 30 stars or more) were placed in the “High Stars” category, while the remaining students were placed in the “Low Stars” category. Figure 6 shows the migration of students between the two categories from unit 2 to unit 4 (Flourish, 2022).

Data analysis method

To answer the research question posed by this study, it is necessary to examine the data collected from Pebasco at a finer level of detail than shown by the student outcomes in Fig. 6. The additional behavioral data can be mined and combined with the outcome data to identify student profiles in the context of Pebasco use.

A collaborative no-code visual interactive data analytics platform called Einblick (Zgraggen et al., 2017) was used to identify student usage patterns within the learning analytics data generated by the Pebasco app. Einblick was used to explore and manipulate the data and identify behavioral patterns of interest within the data. Einblick (2022) offers a video of one example session using this platform to explore the Pebasco data used in this study. Through this data exploration and manipulation, two behavioral patterns of interest emerged.

The first behavioral pattern is when students request the correct answers by using all three hints during an attempt. The second behavioral pattern is when a student’s behavior while completing an audio conforms to multiple success criteria that the authors identified and combined into an aggregate behavioral pattern called “Top audio.” Students displaying this pattern of behavior demonstrate higher levels of peer feedback skills. Table 2 shows the elements and thresholds of the collected learning analytics data used to create the “Top audio” category. For example, a student in the “Top audio” category would have made only one attempt, in which they matched all of the teacher comments and earned three stars without using any hints. Up to two false positives (student feedback annotations that did not match a teacher comment) were allowed because the usage data showed that many students took advantage of the system not penalizing false positives instead of using one hint to check the number of teacher comments in an audio. Finally, the system recorded the average number of seconds a student had played an audio per annotation they made. If this value was too low, it might indicate a “spamming” technique in which the student quickly makes annotations without adequately listening to the audio, thereby abusing the lack of a penalty for false positives. At the other end of the spectrum, if the value was too high, it might indicate that the student did not understand the audio well and was hesitating or indecisive.
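The “Top audio” pattern is essentially a conjunction of per-audio checks. The actual thresholds are given in Table 2, which is not reproduced here, so the seconds-per-annotation bounds below are placeholder assumptions; the two-false-positive allowance and the one-attempt, three-star, zero-hint conditions come from the text. The `Attempt` record and `is_top_audio` function are hypothetical names.

```python
from dataclasses import dataclass

# Placeholder bounds (assumptions): Table 2 of the paper defines the real values.
MIN_SECONDS_PER_ANNOTATION = 5.0
MAX_SECONDS_PER_ANNOTATION = 60.0
MAX_FALSE_POSITIVES = 2  # from the text


@dataclass(frozen=True)
class Attempt:
    stars: int
    hints_used: int
    matched_all: bool
    false_positives: int
    seconds_played: float
    annotations: int


def is_top_audio(attempts: list[Attempt]) -> bool:
    """True if a student's attempts on one audio fit the 'Top audio' pattern."""
    if len(attempts) != 1:  # solved on the first and only attempt
        return False
    a = attempts[0]
    if not (a.matched_all and a.stars == 3 and a.hints_used == 0):
        return False
    if a.false_positives > MAX_FALSE_POSITIVES or a.annotations == 0:
        return False
    per_annotation = a.seconds_played / a.annotations
    # Too fast suggests "spamming"; too slow suggests hesitation.
    return MIN_SECONDS_PER_ANNOTATION <= per_annotation <= MAX_SECONDS_PER_ANNOTATION


good = Attempt(stars=3, hints_used=0, matched_all=True,
               false_positives=1, seconds_played=60.0, annotations=4)
print(is_top_audio([good]))        # True
print(is_top_audio([good, good]))  # False
```

Keeping the thresholds as named constants makes it straightforward to substitute the values from Table 2.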

The behavioral patterns of “correct answer request” and “Top audio” were combined with the outcome data (the number of stars earned) to identify learner profiles for each unit (see Fig. 7). Note that in Fig. 7, the two rectangles on the far left and right sides have only one output because no participants fell into the other possible output. The “1_Desired” profile is a student who performed well across all of the success criteria: they earned more than 30 stars in a unit, more than 50% of their audios in the unit met the “Top audio” criteria, and they requested the correct answers for fewer than 50% of the audios in the unit. The “2_Successful with Support” profile is a student who earned more than 30 stars in a unit, but fewer than 50% of their audios met the “Top audio” criteria and fewer than 50% of their audios involved correct answer requests. These students may have used other supports in Pebasco, such as the ability to make multiple attempts and to seek hints that do not reveal the correct answers. The “3_Successful with Answers” profile is a student who frequently relied on the system’s ability to reveal the correct answers to earn more than 30 stars in a unit. The “4_Unsuccessful with Answers” profile is a student who requested correct answers for more than 50% of the audios in the unit but still did not manage to earn 30 stars or more. Only one student in one unit fell into this unusual category. Finally, the “5_Disengaged” profile is a student who made at least one attempt at a unit but disengaged from the system without earning 30 stars or more and without displaying either the “correct answer request” or the “Top audio” behavioral pattern in more than 50% of the audios in the unit.
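Read together, the profile definitions behave like a small decision tree over three per-unit quantities. The sketch below is one possible encoding under stated assumptions: the function name and its share-based inputs are hypothetical, the branch order is a reading of the prose rather than a copy of Fig. 7, the more-than-50% cut-off for “3_Successful with Answers” is inferred from the parallel “4_Unsuccessful with Answers” definition, and the handling of values exactly at 30 stars or exactly at 50% is assumed, because the text mixes “more than 30 stars” with “30 stars or more.”

```python
STAR_THRESHOLD = 30    # assumed: "more than 30 stars" is treated as stars > 30
SHARE_THRESHOLD = 0.5  # assumed: "more than 50%" is treated as share > 0.5


def assign_profile(stars: int, top_audio_share: float, answer_request_share: float) -> str:
    """Map one student's unit-level data to a profile label (illustrative only).

    stars: stars earned in the unit
    top_audio_share: fraction of the unit's audios meeting the "Top audio" criteria
    answer_request_share: fraction of the unit's audios with a correct answer request
    Assumes the student made at least one attempt in the unit.
    """
    if stars > STAR_THRESHOLD:
        if answer_request_share > SHARE_THRESHOLD:
            return "3_Successful with Answers"
        if top_audio_share > SHARE_THRESHOLD:
            return "1_Desired"
        return "2_Successful with Support"
    if answer_request_share > SHARE_THRESHOLD:
        return "4_Unsuccessful with Answers"
    return "5_Disengaged"
```

Because a “Top audio” requires that no hints were used, an audio cannot be both a “Top audio” and a correct answer request, so the two shares cannot both exceed 50%; checking answer requests first therefore does not change which students reach “1_Desired.”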

Results and interpretation

Figure 8 shows the migration of the student profiles (n = 87) within Pebasco from unit 2 to unit 4 (Flourish, 2022). Following Majumdar and Iyer (2016), each profile is treated as a stratum, and the transition pattern is measured across the two phases (i.e., unit 2 and unit 4). Of the 36 students who started in the “1_Desired” profile in unit 2, 32 remained in that profile, two migrated to the “2_Successful with Support” profile, and two migrated to the “3_Successful with Answers” profile. Of the 18 students who started in the “2_Successful with Support” profile in unit 2, only two remained in that profile, 15 migrated to the “1_Desired” profile, and one migrated to the “4_Unsuccessful with Answers” profile. Of the 14 students who started in the “3_Successful with Answers” profile, 13 remained and one migrated to the “2_Successful with Support” profile. Of the 19 students who started in the “5_Disengaged” profile, 12 remained, three migrated to the “2_Successful with Support” profile, and four migrated to the “1_Desired” profile. No student started in the “4_Unsuccessful with Answers” profile in unit 2.
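The transitions reported above can be tabulated as a migration matrix keyed by (unit 2 profile, unit 4 profile). The Python sketch below uses a hypothetical `MIGRATIONS` dictionary transcribed from the counts in this paragraph (profiles numbered 1 to 5 as in their labels) and recovers the profile sizes in each unit as row and column totals, which is a quick way to check that the counts sum to 87 and to obtain the unit 4 distribution.

```python
from collections import Counter

# (unit 2 profile, unit 4 profile) -> number of students, transcribed from the text.
MIGRATIONS = {
    (1, 1): 32, (1, 2): 2, (1, 3): 2,
    (2, 1): 15, (2, 2): 2, (2, 4): 1,
    (3, 2): 1, (3, 3): 13,
    (5, 1): 4, (5, 2): 3, (5, 5): 12,
}


def marginals(migrations):
    """Return (unit 2 counts, unit 4 counts) per profile."""
    unit2, unit4 = Counter(), Counter()
    for (src, dst), n in migrations.items():
        unit2[src] += n  # row total: where students started
        unit4[dst] += n  # column total: where students ended up
    return unit2, unit4


unit2, unit4 = marginals(MIGRATIONS)
total = sum(unit2.values())
for profile in range(1, 6):
    print(f"profile {profile}: unit 2 = {unit2[profile]:2d} "
          f"({100 * unit2[profile] / total:.0f}%), "
          f"unit 4 = {unit4[profile]:2d} ({100 * unit4[profile] / total:.0f}%)")
```

Run as written, it reports 36 students (41%) in “1_Desired” in unit 2 and 51 (59%) in unit 4, and shows that the unit 4 column contains 8, 15, 1, and 12 students in profiles 2 to 5.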

Addressing the research question

This study sought to answer the following research question: How and to what extent can the asynchronous microlearning app Pebasco improve students’ skills for providing peer feedback on spoken content in English as a foreign language (EFL) education? By mining behavioral data from the Pebasco learning analytics system and combining them with the outcome data (i.e., the number of stars earned per unit), it became possible to identify five profiles of the students using the app. The profile migrations that students made over the course of using Pebasco indicate changes in their level of peer feedback skills.

The “1_Desired” profile is for students with the highest level of peer feedback skills that can be demonstrated within the app. As Fig. 8 shows, in unit 2 only 41% (n = 36) of the students fit the “1_Desired” profile, whereas by unit 4, 59% (n = 51) did. This unit 4 total includes the 32 students who remained in the profile, 15 students who migrated up from the “2_Successful with Support” profile, and four students who migrated up from the “5_Disengaged” profile. This migration suggests that using the Pebasco microlearning app can positively affect students’ peer feedback skills in the context of English as a foreign language (EFL) education.

It is also worth noting two groups of students who remained static in their profiles from unit 2 to unit 4. The first group is 13 out of 14 students who started in the “3_Successful with Answers” profile and remained there. This may be because they felt they could get a good grade on the Pebasco assignments without needing to engage deeply with the content in the app. The second group is the 12 out of 19 students who started in the “5_Disengaged” profile and remained there. This might indicate a problem with student motivation. Alternatively, perhaps they had difficulty understanding how to use the app.

To conduct a statistical analysis of the profile changes from unit 2 to unit 4, the profiles were treated as an ordinal variable running from 1 (i.e., “1_Desired”) to 5 (i.e., “5_Disengaged”), with lower numbers indicating a better-performing profile. Unit 2 (M = 2.402, SD = 1.551, n = 87) was compared with unit 4 (M = 2.023, SD = 1.438, n = 87). The Shapiro–Wilk test of normality was statistically significant, indicating a deviation from normality, so a one-tailed Wilcoxon signed-rank test (the non-parametric alternative to the paired-samples t-test) was used (see Table 3). It was hypothesized that the unit 4 profile “rank” would be a lower number (because lower numbers indicate higher performance/better behavior) than that of unit 2. The results showed a statistically significant difference (p = 0.002), indicating an improvement from unit 2 to unit 4 as measured by profile migration.
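Because the migration counts fully determine the paired ordinal ranks, the reported statistics can be checked independently. The Python sketch below expands the counts into 87 (unit 2, unit 4) pairs and computes the means, standard deviations, and a one-tailed Wilcoxon signed-rank test. The paper does not state which software or test variant was used, so this is an assumed variant: zero differences are dropped, tied ranks are averaged, and the normal approximation is used with a tie correction and without a continuity correction. `MIGRATIONS`, `expand`, and `wilcoxon_one_tailed` are hypothetical names.

```python
import math
from statistics import mean, stdev

# (unit 2 profile, unit 4 profile) -> number of students, transcribed from the text.
MIGRATIONS = {
    (1, 1): 32, (1, 2): 2, (1, 3): 2,
    (2, 1): 15, (2, 2): 2, (2, 4): 1,
    (3, 2): 1, (3, 3): 13,
    (5, 1): 4, (5, 2): 3, (5, 5): 12,
}


def expand(migrations):
    """Turn migration counts into parallel lists of unit 2 and unit 4 ranks."""
    unit2, unit4 = [], []
    for (src, dst), n in migrations.items():
        unit2.extend([src] * n)
        unit4.extend([dst] * n)
    return unit2, unit4


def wilcoxon_one_tailed(before, after):
    """One-tailed Wilcoxon signed-rank test of H1: `after` tends to be lower.

    Normal approximation; zero differences dropped; tied |differences|
    receive average ranks, with the matching variance correction.
    Returns (W+, z, p).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        # Find the run of equal |differences| starting at sorted position i.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)  # ranks of worsened pairs
    mu = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mu) / math.sqrt(var)
    p = 0.5 * math.erfc(-z / math.sqrt(2))  # lower-tail P(Z <= z)
    return w_plus, z, p


u2, u4 = expand(MIGRATIONS)
print(f"unit 2: M = {mean(u2):.3f}, SD = {stdev(u2):.3f}")
print(f"unit 4: M = {mean(u4):.3f}, SD = {stdev(u4):.3f}")
w_plus, z, p = wilcoxon_one_tailed(u2, u4)
print(f"W+ = {w_plus:.1f}, z = {z:.3f}, one-tailed p = {p:.4f}")
```

Under these assumptions the sketch reproduces M = 2.402 and SD = 1.551 for unit 2 and M = 2.023 and SD = 1.438 for unit 4, and it gives W+ = 79 over 28 non-zero differences, z ≈ −2.92, and a one-tailed p ≈ 0.0018, which rounds to the reported 0.002. Implementations that apply a continuity correction or a different treatment of zeros may differ slightly in later decimal places.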

Discussion and conclusions

Contrast with previous work

The present research first relates to previous work by responding to multiple calls for research. There have been calls for research examining how training can enhance the quality of peer feedback (Dao & Iwashita, 2021) and for more use of experimental and quasi-experimental methodologies in peer feedback research (Topping, 2013). The Dual Model Theory of peer corrective feedback (PCF) posits that there are educational benefits to both the provision and the reception of PCF, and Sato (2017) calls for research to “experimentally tease apart the two sides” (p. 30). The present study addresses these calls by using a quasi-experimental research methodology to investigate the effect of practicing only the provision of peer feedback on the quality of the feedback provided.

Jahnke et al. (2020) proposed a set of mobile microlearning design principles, described earlier in this paper. The design of the second version of the Pebasco app, which was used in the present study, adheres to these principles: (a) the L2 listening and peer feedback practice are practical and interactive; (b) the Pebasco activities can be completed in only a few minutes; (c) multimedia audio clips are integral to the user experience; (d) the system provides immediate formative feedback in the form of text notifications, animations, and icon color changes; and (e) Pebasco is accessible across multiple devices and operating systems. Although several studies have investigated novel uses of microlearning to improve L2 vocabulary skills (e.g., Arakawa et al., 2022; Dingler et al., 2017; Inie & Lungu, 2021; Kovacs, 2015), there is a gap in the literature regarding the use of asynchronous mobile microlearning for peer feedback training on spoken content in foreign language learning. The current study aimed to fill this gap. Its results show that the use of an asynchronous mobile microlearning system was accompanied by a statistically significant shift in user profiles over time. This expands on the previous literature by demonstrating the use of mobile microlearning to improve peer feedback skills on spoken content in foreign language learning.

The Pebasco app builds on previous research showing that peer assessment skills can be trained (Sluijsmans et al., 2004; van Zundert et al., 2010); that the practice of peer feedback (both provision and reception) can improve learners’ L2 skills (El Mortaji, 2022; Patri, 2002; Rodríguez-González & Castañeda, 2016); and that peer feedback practice can improve learners’ ability to detect L2 errors (Fujii et al., 2016). The results of this study show a pattern of statistically significant profile migration over the course of using Pebasco, indicating that many participants improved or maintained desirable patterns of behavior and outcomes. This suggests a positive impact on the quality of peer feedback skills and L2 skills, as well as on the ability to detect L2 errors. Improvement in L2 skills is important because a lack of L2 proficiency can hamper the effectiveness of peer feedback in language learning (Lyster et al., 2013; Philp et al., 2010; Toth, 2008). The current study adds to this body of literature by suggesting that mobile microlearning can be an effective means of training peer feedback skills and L2 skills.

One possible explanation for this improvement is the repeated practice that participants had with both their L2 skills and their peer feedback skills, as described in Skill Acquisition Theory (Sato & Lyster, 2012). However, the performance of the students who remained in the “3_Successful with Answers” profile deserves closer consideration, as it may reflect a difficulty in balancing two theoretical approaches. On the one hand, the design decision to include scaffolding hints and the ability to request the correct answers was informed by Cognitive Load Theory (Khong & Kabilan, 2022; Sweller, 1994, 2020) and the desire not to overwhelm users, regardless of their cognitive capacity at the time. On the other hand, the option to view the correct answers seems to have allowed this particular group of students to demonstrate lower cognitive engagement, as described by the ICAP framework (Chi et al., 2018), while still achieving the desired outcome (i.e., receiving a high number of stars).

Limitations and future work

In this study, the context was limited to a Japanese university; future studies could expand to different contexts. Likewise, this study used the no-code tools Bubble and Einblick, and other researchers may wish to recreate an app similar to Pebasco using different tools. Finally, the purpose of Pebasco is to provide microlearning opportunities for students to improve their peer feedback skills on spoken content in a CLT context while collecting learning analytics data; researchers may want to conduct similar studies in other domains.

A further limitation of this study is that the pre-/post- data were limited to the internal performance behavior data generated by the students using Pebasco. From a learning-evaluation perspective, this study focused on learning behaviors while using the application, and the profiles incorporated evaluations based on task performance. While such rank indicators helped to show how students improved while using the application repeatedly over multiple similar tasks, the absence of a control group means that a causal relationship between the intervention and the observed improvement cannot be established. Hence, further studies with independent, appropriate pre-/post-test measures of performance would help to establish the learning impact of the Pebasco app.

Implications for instructors

The findings of this study demonstrate that a teacher or researcher with little or no knowledge of computer programming can use no-code tools to build an asynchronous microlearning app that serves as a learning analytics platform while supporting peer feedback skills on spoken content in a CLT-based EFL context. Hopefully, this will inspire others to try to build learning analytics apps and microlearning apps that fit their contexts and teaching/learning needs.

As described earlier in this paper, no-code tools sometimes have a learning curve and may require training and support, particularly when one tries to build a complex app. No-code novices should start with a simple plan for their app and then consider all of the elements, such as the data and workflows, that their finished app will need. Novices are strongly encouraged to seek support from the no-code community; many freely available resources, such as online videos, training boot camps, and educational communities, can help improve a novice’s skill set quickly (see Buildcamp, 2022; ProNoCoders, 2022).

Conclusions

This study examined Pebasco, an asynchronous mobile microlearning app that supports the development of students’ peer feedback skills on spoken content while simultaneously collecting usage data as a learning analytics platform. The system has a hint engine that offers a range of scaffolded support for students. The findings in this study indicate that the Pebasco app can help students improve their peer feedback skills on spoken content in the context of CLT-based EFL. The findings also suggest some areas of improvement for the system that can be made in future design iterations. This research is novel because of a current lack of research on the use of no-code technology to develop educational apps, learning analytics apps, or apps for computer-assisted language learning (CALL), particularly in the context of microlearning for the improvement of peer feedback skills in CLT-based EFL. Furthermore, this research addresses the call for further research in training students’ peer feedback skills (Kasch et al., 2021) and the provision of peer feedback in particular (Sato, 2017).

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CLT: Communicative language teaching
- EFL: English as a foreign language

References

Adams, R., Nuevo, A. M., & Egi, T. (2011). Explicit and implicit feedback, modified output, and SLA: Does explicit and implicit feedback promote learning and learner-learner interactions? The Modern Language Journal, 95(s1), 42–63. https://doi.org/10.1111/j.1540-4781.2011.01242.x

Alqassab, M., Strijbos, J.-W., & Ufer, S. (2018). Training peer-feedback skills on geometric construction tasks: Role of domain knowledge and peer-feedback levels. European Journal of Psychology of Education, 33(1), 11–30. https://doi.org/10.1007/s10212-017-0342-0

Arakawa, R., Yakura, H., & Kobayashi, S. (2022). VocabEncounter: NMT-powered vocabulary learning by presenting computer-generated usages of foreign words into users’ daily lives. In Proceedings of the 2022 CHI conference on human factors in computing systems. https://doi.org/10.1145/3491102.3501839

Bubble. (2022). Bubble. Bubble Group, Inc. Retrieved from https://bubble.io

Buildcamp. (2022). Buildcamp. Buildcamp. Retrieved from https://buildcamp.io/

Cai, C. J., Guo, P. J., Glass, J. R., & Miller, R. C. (2015). Wait-learning: Leveraging wait time for second language education. In Proceedings of the 33rd annual ACM conference on human factors in computing systems. https://doi.org/10.1145/2702123.2702267

Cai, C. J., Ren, A., & Miller, R. C. (2017). WaitSuite: Productive use of diverse waiting moments. ACM Transactions on Computer-Human Interaction, 24(1), 7. https://doi.org/10.1145/3044534

Çelik, S., Baran, E., & Sert, O. (2018). The affordances of mobile-app supported teacher observations for peer feedback. International Journal of Mobile and Blended Learning (IJMBL), 10(2), 36–49. https://doi.org/10.4018/IJMBL.2018040104

Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C., Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon, K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian, D. L. (2018). Translating the ICAP theory of cognitive engagement into practice. Cognitive Science, 42(6), 1777–1832. https://doi.org/10.1111/cogs.12626

Dao, P., & Iwashita, N. (2021). Peer feedback in L2 oral interaction. In H. Nassaji & E. Kartchava (Eds.), The Cambridge handbook of corrective feedback in second language learning and teaching (pp. 275–300). Cambridge University Press.

Dearman, D., & Truong, K. (2012). Evaluating the implicit acquisition of second language vocabulary using a live wallpaper. In Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/2207676.2208598

Dingler, T., Weber, D., Pielot, M., Cooper, J., Chang, C.-C., & Henze, N. (2017). Language learning on-the-go: opportune moments and design of mobile microlearning sessions. In Proceedings of the 19th international conference on human-computer interaction with mobile devices and services. https://doi.org/10.1145/3098279.3098565

Drakidou, C., & Panagiotidis, P. (2018). The use of micro-learning for the acquisition of language skills in mLLL context.

Edge, D., Searle, E., Chiu, K., Zhao, J., & Landay, J. A. (2011). MicroMandarin: mobile language learning in context. In Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/1978942.1979413

Einblick. (2022). BYOD: Bring your own data with Thomas Gorham, Assistant Professor at Rissho University, Tokyo, Japan [Video]. YouTube. https://www.youtube.com/watch?v=MIs0ser6HWg&ab_channel=Einblick

El Mortaji, L. (2022). Public speaking and online peer feedback in a blended learning EFL course environment: Students’ perceptions. English Language Teaching, 15(2), 31–49. https://doi.org/10.5539/elt.v15n2p31

Flourish. (2022). Flourish. Retrieved from https://flourish.studio/

Fujii, A., Ziegler, N., & Mackey, A. (2016). Peer interaction and metacognitive instruction in the EFL classroom. In M. Sato & S. Ballinger (Eds.), Peer interaction and second language learning: Pedagogical potential and research agenda (Vol. 45, pp. 63–90). John Benjamins.

Garshasbi, S., Yecies, B., & Shen, J. (2021). Microlearning and computer-supported collaborative learning: An agenda towards a comprehensive online learning system. STEM Education, 1(4), 225–255. https://doi.org/10.3934/steme.2021016

Gentner, D., Holyoak, K. J., & Kokinov, B. N. (Eds.). (2001). The analogical mind: Perspectives from cognitive science. The MIT Press.

Goldstone, R. L., Day, S., & Son, J. Y. (2010). Comparison. In B. Glatzeder, V. Goel, & A. Müller (Eds.), Towards a theory of thinking: Building blocks for a conceptual framework (pp. 103–121). Springer. https://doi.org/10.1007/978-3-642-03129-8_7

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge.

Hattie, J. (2012). Feedback in schools. In R. Sutton, M. J. Hornsey, & K. M. Douglas (Eds.), Feedback: The communication of praise, criticism, and advice (pp. 265–278). Peter Lang Publishing.

Hattie, J., & Clarke, S. (2019). Visible learning: Feedback. Routledge.

Heffernan, N. T., & Heffernan, C. L. (2014). The ASSISTments ecosystem: Building a platform that brings scientists and teachers together for minimally invasive research on human learning and teaching. International Journal of Artificial Intelligence in Education, 24(4), 470–497. https://doi.org/10.1007/s40593-014-0024-x

Hofstadter, D. R., & Sander, E. (2013). Surfaces and essences: Analogy as the fuel and fire of thinking. Basic Books.

Hug, T., Lindner, M., & Bruck, P. (Eds.). (2006). Microlearning: Emerging concepts, practices and technologies after e-Learning. Innsbruck University Press. Retrieved from https://www.researchgate.net/publication/246822097_Microlearning_Emerging_Concepts_Practices_and_Technologies_after_e-Learning

Inie, N., & Lungu, M. F. (2021). Aiki—turning online procrastination into microlearning. In Proceedings of the 2021 CHI conference on human factors in computing systems. https://doi.org/10.1145/3411764.3445202

Jahnke, I., Lee, Y.-M., Pham, M., He, H., & Austin, L. (2020). Unpacking the inherent design principles of mobile microlearning. Technology, Knowledge and Learning, 25(3), 585–619. https://doi.org/10.1007/s10758-019-09413-w

Johannessen, C., & Davenport, T. (2021). When low-code/no-code development works—and when it doesn’t. Harvard Business Review. Retrieved from https://hbr.org/2021/06/when-low-code-no-code-development-works-and-when-it-doesnt

Kasch, J., van Rosmalen, P., Löhr, A., Klemke, R., Antonaci, A., & Kalz, M. (2021). Students’ perceptions of the peer-feedback experience in MOOCs. Distance Education, 42(1), 145–163. https://doi.org/10.1080/01587919.2020.1869522

Kerr, P. (2020). Giving feedback to language learners [PDF]. Part of the Cambridge papers in ELT series. Cambridge University Press. Retrieved from https://www.cambridge.org/gb/files/4415/8594/0876/Giving_Feedback_minipaper_ONLINE.pdf

Khong, H. K., & Kabilan, M. K. (2022). A theoretical model of micro-learning for second language instruction. Computer Assisted Language Learning, 35(7), 1483–1506. https://doi.org/10.1080/09588221.2020.1818786

Knight, S., Leigh, A., Davila, Y. C., Martin, L. J., & Krix, D. W. (2019). Calibrating assessment literacy through benchmarking tasks. Assessment & Evaluation in Higher Education, 44(8), 1121–1132. https://doi.org/10.1080/02602938.2019.1570483

Kovacs, G. (2015). FeedLearn: Using Facebook feeds for microlearning. In Proceedings of the 33rd annual ACM conference extended abstracts on human factors in computing systems. https://doi.org/10.1145/2702613.2732775

Layton, R. A., Loughry, M. L., Ohland, M. W., & Pomeranz, H. (2012). Workshop: Training students to become better raters: Raising the quality of self- and peer-evaluations using a new feature of the CATME system. 2012 Frontiers in Education Conference Proceedings.

Lee, Y.-M., Jahnke, I., & Austin, L. (2021). Mobile microlearning design and effects on learning efficacy and learner experience. Educational Technology Research and Development, 69(2), 885–915. https://doi.org/10.1007/s11423-020-09931-w

Liu, N.-F., & Carless, D. (2006). Peer feedback: The learning element of peer assessment. Teaching in Higher Education, 11(3), 279–290. https://doi.org/10.1080/13562510600680582

Loughry, M. L., Ohland, M. W., & Woehr, D. J. (2014). Assessing teamwork skills for assurance of learning using CATME team tools. Journal of Marketing Education, 36(1), 5–19. https://doi.org/10.1177/0273475313499023

Lyster, R., Saito, K., & Sato, M. (2013). Oral corrective feedback in second language classrooms. Language Teaching, 46(1), 1–40. https://doi.org/10.1017/S0261444812000365

Majumdar, R., & Iyer, S. (2016). iSAT: A visual learning analytics tool for instructors. Research and Practice in Technology Enhanced Learning, 11(1), 16. https://doi.org/10.1186/s41039-016-0043-3

Meek, S. E. M., Blakemore, L., & Marks, L. (2017). Is peer review an appropriate form of assessment in a MOOC? Student participation and performance in formative peer review. Assessment & Evaluation in Higher Education, 42(6), 1000–1013. https://doi.org/10.1080/02602938.2016.1221052

Moreno-Marcos, P. M., Alario-Hoyos, C., Muñoz-Merino, P. J., & Kloos, C. D. (2019). Prediction in MOOCs: A review and future research directions. IEEE Transactions on Learning Technologies, 12(3), 384–401. https://doi.org/10.1109/TLT.2018.2856808

Nicol, D. (2020). The power of internal feedback: Exploiting natural comparison processes. Assessment & Evaluation in Higher Education. https://doi.org/10.1080/02602938.2020.1823314

Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: A peer review perspective. Assessment & Evaluation in Higher Education, 39(1), 102–122. https://doi.org/10.1080/02602938.2013.795518

Ohland, M. W., Loughry, M. L., Woehr, D. J., Bullard, L. G., Felder, R. M., Finelli, C. J., Layton, R. A., Pomeranz, H. R., & Schmucker, D. G. (2012). The comprehensive assessment of team member effectiveness: Development of a behaviorally anchored rating scale for self- and peer evaluation. Academy of Management Learning & Education, 11(4), 609–630. https://doi.org/10.5465/amle.2010.0177

Panadero, E., & Alqassab, M. (2019). An empirical review of anonymity effects in peer assessment, peer feedback, peer review, peer evaluation and peer grading. Assessment & Evaluation in Higher Education, 44(8), 1253–1278. https://doi.org/10.1080/02602938.2019.1600186

Patri, M. (2002). The influence of peer feedback on self- and peer-assessment of oral skills. Language Testing, 19(2), 109–131. https://doi.org/10.1191/0265532202lt224oa

Philp, J., Walter, S., & Basturkmen, H. (2010). Peer interaction in the foreign language classroom: What factors foster a focus on form? Language Awareness, 19(4), 261–279. https://doi.org/10.1080/09658416.2010.516831

ProNoCoders. (2022). Pro NoCoders. Pro NoCoders. Retrieved from https://pronocoders.com/

Rodríguez-González, E., & Castañeda, M. E. (2016). The effects and perceptions of trained peer feedback in L2 speaking: Impact on revision and speaking quality. Innovation in Language Learning and Teaching, 12(2), 120–136. https://doi.org/10.1080/17501229.2015.1108978

Sato, M. (2013). Beliefs about peer interaction and peer corrective feedback: Efficacy of classroom intervention. The Modern Language Journal, 97(3), 611–633. https://doi.org/10.1111/j.1540-4781.2013.12035.x

Sato, M. (2017). Oral peer corrective feedback: multiple theoretical perspectives. In H. Nassaji & E. Kartchava (Eds.), Corrective feedback in second language teaching and learning: Research, theory, applications, implications (pp. 19–34). Routledge. https://doi.org/10.4324/9781315621432

Sato, M., & Ballinger, S. (2016). Understanding peer interaction: research synthesis and directions. In M. Sato & S. Ballinger (Eds.), Peer interaction and second language learning: Pedagogical potential and research agenda (Vol. 45, pp. 1–30). John Benjamins.

Sato, M., & Lyster, R. (2012). Peer interaction and corrective feedback for accuracy and fluency development: Monitoring, practice, and proceduralization. Studies in Second Language Acquisition, 34(4), 591–626. https://doi.org/10.1017/S0272263112000356

Schneegass, C., Sigethy, S., Mitrevska, T., Eiband, M., & Buschek, D. (2022). UnlockLearning—Investigating the integration of vocabulary learning tasks into the smartphone authentication process. i-com, 21(1), 157–174. https://doi.org/10.1515/icom-2021-0037

Sluijsmans, D. M. A., Brand-Gruwel, S., van Merriënboer, J. J. G., & Martens, R. L. (2004). Training teachers in peer-assessment skills: Effects on performance and perceptions. Innovations in Education and Teaching International, 41(1), 59–78. https://doi.org/10.1080/1470329032000172720

Sridharan, B., Tai, J., & Boud, D. (2019). Does the use of summative peer assessment in collaborative group work inhibit good judgement? Higher Education, 77(5), 853–870. https://doi.org/10.1007/s10734-018-0305-7

Sweller, J. (1994). Cognitive load theory, learning difficulty, and instructional design. Learning and Instruction, 4(4), 295–312. https://doi.org/10.1016/0959-4752(94)90003-5

Sweller, J. (2020). Cognitive load theory and educational technology. Educational Technology Research and Development, 68(1), 1–16. https://doi.org/10.1007/s11423-019-09701-3

To, J., & Panadero, E. (2019). Peer assessment effects on the self-assessment process of first-year undergraduates. Assessment & Evaluation in Higher Education, 44(6), 920–932. https://doi.org/10.1080/02602938.2018.1548559

The Japan Times. (2021). Tokyo enters fourth COVID-19 state of emergency as Olympics loom. The Japan Times. Retrieved December 1, 2021, from https://www.japantimes.co.jp/news/2021/07/12/national/fourth-coronavirus-emergency-tokyo/

Topping, K. J. (2013). Peers as a source of formative and summative assessment. In SAGE handbook of research on classroom assessment (pp. 395–412). Sage Publications.

Toth, P. D. (2008). Teacher- and learner-led discourse in task-based grammar instruction: Providing procedural assistance for L2 morphosyntactic development. Language Learning, 58(2), 237–283. https://doi.org/10.1111/j.1467-9922.2008.00441.x

Trusty, A., & Truong, K. N. (2011). Augmenting the web for second language vocabulary learning. In Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/1978942.1979414

van den Bogaard, M. E. D., & Saunders-Smits, G. N. (2007). Peer & self evaluations as means to improve the assessment of project based learning. In 2007 37th annual frontiers in education conference—global engineering: Knowledge without borders, opportunities without passports.

van Zundert, M., Sluijsmans, D., & van Merriënboer, J. (2010). Effective peer assessment processes: Research findings and future directions. Learning and Instruction, 20(4), 270–279. https://doi.org/10.1016/j.learninstruc.2009.08.004

Wu, J. G., & Miller, L. (2020). Improving English learners’ speaking through mobile-assisted peer feedback. RELC Journal, 51(1), 168–178. https://doi.org/10.1177/0033688219895335

Gorham, T., & Ogata, H. (2020). Improving skills for peer feedback on spoken content using an asynchronous learning analytics app. In 28th international conference on computers in education conference proceedings (Vol. 2, pp. 782–785). https://ci.nii.ac.jp/naid/120006940277/en/

Zgraggen, E., Galakatos, A., Crotty, A., Fekete, J., & Kraska, T. (2017). How progressive visualizations affect exploratory analysis. IEEE Transactions on Visualization and Computer Graphics, 23(8), 1977–1987. https://doi.org/10.1109/TVCG.2016.2607714

Zhao, H., Liu, J., Wu, J., Yao, K., & Huang, J. (2018). Watch-learning: Using the smartwatch for secondary language vocabulary learning. In Proceedings of the sixth international symposium of Chinese CHI. https://doi.org/10.1145/3202667.3204037

Zhou, Y., Andres-Bray, J. M. L., Hutt, S., Ostrow, K., & Baker, R. S. (2021). A comparison of hints vs. scaffolding in a MOOC with adult learners. In Artificial intelligence in education: 22nd international conference, AIED 2021, Utrecht, The Netherlands, June 14–18, 2021, Proceedings, Part II (pp. 427–432). https://doi.org/10.1007/978-3-030-78270-2_76

Author information

Contributions

T.G. contributed to all aspects of the study. R.M. contributed to the analysis of the data, presentation of the results, and editing of the manuscript. H.O. contributed to the design of the study and editing of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Ethical statement

This research was approved by the Research Ethics Committee of Rissho University.

Conflict of Interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gorham, T., Majumdar, R. & Ogata, H. Analyzing learner profiles in a microlearning app for training language learning peer feedback skills. J. Comput. Educ. 10, 549–574 (2023). https://doi.org/10.1007/s40692-023-00264-0

DOI: https://doi.org/10.1007/s40692-023-00264-0