Abstract

The number of mobile microlearning platforms has increased over the years. The literature shows that these platforms use specific instructions or media, such as videos or multiformat materials (e.g., text, audio, quizzes, hands-on exercises). However, few studies investigate whether or how specific design principles used on these platforms contribute to learning efficacy. A mobile microlearning course for journalism education was developed using the design principles and instructional flow reported in the literature. The goal of this formative research was to study the mobile microcourse’s learning efficacy, defined as effectiveness, efficiency, and appeal. Learners’ knowledge before and after the mobile microcourse was analyzed using semistructured questionnaires as well as pretests and posttests to measure differences. The results indicate that learners of this mobile microcourse had an increase in knowledge, more certainty in decisions about practical applications, and an increase in confidence in performing skills. However, the findings on automated feedback, timed gamified exercises, and interactive real-world content indicate room for improvement to enhance effective learning.


Introduction

Mobile microlearning (MML), first mentioned in 2012, is evolving as an emerging practice in corporate training and workplace learning (Callisen 2016; Clark et al. 2018). Mobile microlearning (mobile micro-learning or micro learning) offers a new way to learn on the small screens of portable devices with bite-size steps and small units of information (Giurgiu 2017). Different from traditional online learning environments, such as Canvas or Moodle, mobile microlearning focuses on the learning process by chunking the learning material into smaller units, each teaching a single concept, and each learning unit lasting no longer than 5 min (Khurgin 2015; Nikou and Economides 2018a).

Mobile microlearning targets the mobility and flexibility of learners who use the small screen of portable technologies (e.g., smartphones) to learn anytime and anywhere in an informal manner, such as while waiting in a line for coffee or while riding the bus (Grant 2019). As Berge and Muilenburg (2013) argue, the learners themselves are mobile. Learners have the flexibility of using portable devices to reach out to the world and seek information of their choice when needed, in other words, “just-in-time, just enough, and just-for-me” (Traxler 2005, p. 14). While this is somewhat true for all cases of mobile learning, recent technologies specifically made for mobile microlearning offer potential for supporting the learning process in new ways. These features bring new challenges to both learning technology and instructional methods. The main challenge is how to design significant learning content and assignments for the small screens of mobile devices while still providing meaningful learning. Different guidelines for designing mobile microcourses and microcontents have been proposed in the literature (Jahnke et al. 2019). However, whether a specific design of mobile microlearning can support the learning process has not been sufficiently studied.

Mobile microlearning is a mix of two designs: digital technology and instructional method. There is a codependency between them in that the design of technology and its affordances affect the instructional method and vice versa. As Jonassen et al. (1994) address, debating the separation of media and methods, as Clark (1994) and Kozma (1994) do, is the wrong debate. Rather, learning takes place as “situated learning” (Brown et al. 1989), meaning that learning is situated and constructed through the learner’s activities embedded into a certain context in which new knowledge or skills will be used. MML is part of the learner’s context or environment; the learner does not learn from it but with it when constructing new knowledge. Kozma (2000) acknowledged later that learning experiences exist in a complex mess of media and instructional methods. He writes, “Understanding the relationship between media, design, and learning should be the unique contribution of our field to knowledge in education” (p. 12, emphasis added). It is critical that researchers embed themselves into the learners’ contexts and deeply understand the relationship among the media (digital technology) learners use, the learning materials they engage with, and their real learning situations. Researchers will then be able to develop a better learning solution. Hence, Kozma advises the use of formative research, such as design-based or educational design research. This viewpoint implies a shift from asking the question of “what works” in general to more socially responsible questions, in particular “What is the problem, how can we solve it, and what new knowledge can be derived from the solution?” (Reeves and Lin 2020, p. 8).

The mobile microlearning approach is especially interesting for learners outside traditional office environments who often use smartphones, for example, journalists working in the field to cover breaking news or employees at work who need quick solutions to problems (Wenger et al. 2014). As Wenger et al. (2014) show, journalists should know “how to gather news with mobile devices, use them to interact with the social media audience, and how to format content appropriately for the medium” (p. 138). Whether outside or in offices, they should know how to document information or edit a real-time video quickly so as to effectively present breaking news. In journalism, the 5 Cs of Writing News for Mobile Audiences refers to specific guidelines for writing news for a social media or digital news audience: Be Conversational, Be Considerate, Be Concise, Be Contextual, and Be Chunky (Baehr et al. 2010; Montgomery 2007). However, few education research studies have focused on how and in what ways a mobile microlearning approach supports journalists’ professional development in digital skills (Umair 2016).

Hence, this study aims to investigate how and in what ways a mobile microcourse can help journalists achieve certain learning objectives (e.g., understanding and applying the 5 Cs) and examine how the mobile microlearning course supports the learning process and affects the learner experience and learning efficacy including effectiveness, efficiency, and appeal (Honebein and Honebein 2015; Reigeluth and Carr-Chellman 2009).

Literature review

Literature shows that mobile microlearning approaches have increased over the past few years (Emerson and Berge 2018; Nikou and Economides 2018b). It has become a new teaching approach across disciplines, such as nursing education (Hui 2014), medical training and health professions (Simons et al. 2015), language training (Fang 2018), engineering (Zheng et al. 2019), science education (Brom et al. 2015), and programming skills (Skalka and Drlík 2018).

MML can improve learner motivation, engagement, and performance (Dingler et al. 2017; Jing-Wen 2016; Kovacs 2015; Liao 2015; Sirwan Mohammed et al. 2018; Zheng 2015). For example, the study by Nikou and Economides (2018b) reveals that microcontents given as homework activities in science learning can improve high school students’ motivation and performance. In addition, MML has become a promising learning approach that personalizes learning materials on small screens and portable devices to the learners’ needs (Cairnes 2017). New teaching strategies, such as interactive microcontent, go beyond short videos by incorporating elements such as gamified learning activities (Aitchanov et al. 2018; Dai et al. 2018; Göschlberger and Bruck 2017). Because of limited time for learning in the workplace, mobile microlearning may have the advantage of flexibly and quickly conveying factual knowledge related to job skills (Decker et al. 2017).

However, issues with mobile microlearning include a lack of awareness and understanding about what microlearning can and cannot do (Baek and Touati 2017; Clark et al. 2018). Working on smartphones, learners can become distracted from their learning when writing text messages instead of completing lessons (Andoniou 2017). Additional research points to issues of accessibility, such as the need to provide offline versions of lessons for those with limited internet and to control bias related to gender, race, and age (Bursztyn et al. 2017). Also, streaming videos can result in relatively high costs, which can make the learning unaffordable for users. Another set of studies points to the problems of designing for small screens, including having too much information to fit and making it hard for users to search learning materials (Kabir and Kadage 2017).

Current research is in agreement that designing a mobile microcourse is challenging. One of the major elements that researchers have studied in past years is gamification (using gamified activities) (Ahmad 2018) and its connection to mobile microassessment, that is, using formative gamified activities to assess learners’ knowledge (Nikou and Economides 2018b). Furthermore, several studies point to specific principles for designing microlearning as outlined below (Cates et al. 2017; Nickerson et al. 2017; Park and Kim 2018; Sun et al. 2017; Yang et al. 2018).

- It creates content that fits the small screens of mobile devices.
- It addresses learners when they need the knowledge at that moment in time. For example, journalists in the field reporting breaking news need immediate knowledge on how to write for social media audiences. That means the lessons are short, no longer than 5 min.
- It follows a specific instructional flow: (a) an aha moment that helps the learner understand the importance of the topic, (b) interactive content, (c) short exercises, and (d) instant automated feedback.
- It requires the learner to interact with the content using practical gamified activities (e.g., drag and drop, fill in the blank, and rearrange words in the correct order).

As Jahnke et al. (2019) note, most of the underlying design principles behind mobile microlearning platforms are behavioristic, focusing on the learners’ click behavior. However, whether a specific design of mobile microlearning effectively supports learning, and how the learners experience the learning process with mobile microlearning, has not been sufficiently studied. Therefore, we investigated how a mobile microlearning course built on Jahnke et al.’s (2019) four instructional flow design principles of MML affects learners’ knowledge gain, skills, and confidence. The four principles are demonstrated in detail with examples in the next section (“Design of mobile microlearning”). We chose learners in the field of journalism who were learning to write news for mobile audiences, which is a critical skill for journalists in their profession.

The research questions (RQs) of this study are as follows.

RQ1: To what extent does a specific design of a mobile microcourse increase learners’ knowledge and skills?

RQ2: To what extent does a specific design of a mobile microcourse affect the confidence of the learners in their professional skills to write news headlines and news stories for mobile audiences?

RQ3: What is the learner experience when interacting with the mobile microcourse?

To study these questions, this study applied a formative research approach (McKenney and Reeves 2018) that is, generally speaking, an iterative model of designing, testing (or evaluating), and researching. “The data are analyzed for ways to improve the course, and generalizations are hypothesized for improving the theory” (Reigeluth and Frick 1999, p. 5). This study adopted the framework of Honebein and Honebein (2015) who differentiate the three learning outcome values of instructional methods as effectiveness, efficiency, and appeal. According to them, “effectiveness is a measure of student achievement, efficiency is a measure of student time and/or cost, and appeal is a measure of continued student participation, which in other words means did students like the instruction” (p. 939). Aligned with this framework, the study examined learner experiences with a mobile microlearning course.

Design of mobile microlearning (MML)

This study investigated a mobile microlearning course, The 5 Cs of Writing News for Mobile Audiences, that applied the four specific design principles (Jahnke et al. 2019). The 5 Cs design process and the microlessons’ design are described in the following sections.

Overall design and development procedure

The mobile microcourse was designed for the small screens of mobile devices, namely smartphones. An iterative process of course design, development, and modification was conducted in a three-stage evaluation. In the first stage, an expert journalist of the research team created the first draft of the microlessons on the selected mobile microlearning platform, EdApp, based on literature review and mobile microlearning design principles as proposed by Jahnke et al. (2019). EdApp offers many templates with informational and interactive slides adaptable to different subjects.

In the second stage, two researchers in the study and two external experts conducted the first review of the draft of the microlessons and provided recommendations. The main adjustments in the second stage focused on wording, learning content, presentation formats, and interactive functionalities in the mobile application.

In the third evaluation stage, a pilot test was conducted to gather feedback from real users and assess whether the revised microcourse content was understandable and reliable. Five volunteers, who were students enrolled in a digital media design course, were recruited as the pilot testers. They were asked to go through the entire mobile microcourse, as well as the pre- and postsurveys and pre- and posttests. The testers’ recommendations and feedback were applied to modify and improve the course. The main revisions in the third stage focused on revising content length, modifying the difficulty levels of exercises and activities, and adjusting overall instructional flow. Consequently, the final version of the mobile microlearning course for this study was confirmed and titled The 5 Cs of Writing News for Mobile Audiences. The course content included five topics (5 Cs): (a) be conversational, (b) be considerate, (c) be concise, (d) be contextual, and (e) be chunky. The course targets journalism students and professional journalists who want to learn how to write effective news headlines and news stories for mobile audiences.

Overview of the microlessons, design, content, and sequence of activities

The five microlessons addressed the learning goal of how to effectively write journalistic news for mobile audiences. In response to the growing consumption of mobile news on smartphones, schools of journalism have recently added instruction in writing for this audience. Senior journalists, however, may not have received this training. In detail, the mobile microcourse’s learning goal is that after course completion, learners will be able to apply the 5 Cs, meaning they will be able to write a news headline or a news story for mobile audiences by using the 5 Cs. Each of the five lessons had the same four-step instructional flow, which was an adapted version of Gagne et al.’s (1992) nine events of instruction. Because lessons in mobile microlearning should be short (no more than 5 min), the literature suggests that the design of MML should be based on the following four learner activities in this sequence (Jahnke et al. 2019).

(1) Learners understand the relevance of the topic (an aha moment). (Gagne’s #1: Gain attention of the students).

(2) They read and engage with interactive content. (Gagne’s #4: Present the content).

(3) They apply the learned content in short exercises. (Gagne’s #6: Elicit performance, meaning students practice).

(4) They receive immediate automated feedback on performance. (Gagne’s #7: Provide feedback and #8: Assess performance).

Gagne’s event #2 (inform students of the learning objectives) is inherent in the introduction to the microcourse, before the learner actually starts the course. Gagne’s #3 (stimulate recall of prior learning), #5 (provide learning guidance), and #9 (enhance retention and job transfer) are all incorporated through the human–computer interaction design of the MML digital application with gamified activities, drag and drop exercises, short questions, filling in missing words, and so forth.

Based on these four activities for learners, the following sections provide specific examples and screenshots of the microcourse studied.

(1) Students understand the relevance of the topic (an aha moment).

Before students started the microcourse, they read a short paragraph that offered a brief introduction to the course and to each of the 5 Cs. Then, each of the five lessons started with an aha moment to help learners understand the relevance of the topic.

For example, in the lesson Be Considerate (see Fig. 1), learners were asked to put themselves in the shoes of mobile readers. In addition to building empathy with the mobile audience, the sequence was designed to lead to an aha moment for learners about how their audience consumes news on a mobile phone. In applying the design principle of a sequenced and engaging instructional flow, the aha moment was followed by the learning objective (Jahnke et al. 2019).

(2) Reading and engaging with interactive content.

After understanding the topic’s relevance, students read or engaged with interactive content. The microcourse did not just display learning materials, but learners interacted with the learning materials in multiple ways. It differed from the one-way traditional textbook or e-book, in which learners can only read materials.

For example, in the Be Concise microlesson (see Fig. 2), learners used their fingers to swipe through and eliminate words to improve the sentence by making it more concise. This microlesson applied the design principle of interactive microcontent for closing practical skill gaps (Jahnke et al. 2019). A mobile microlearning lesson has interactive elements in which learners can practice and apply what they have learned (e.g., drag and drop, quizzes, and simulations).

(3) Applying learned content in short exercises (gamified activities).

After students engaged with and, ideally, learned the content, short exercises were offered, many of which were gamified activities.

An example of a short exercise is shown in Fig. 3. It is from the Be Contextual microlesson. Learners had 10 s to earn up to five stars by swiping true statements to the right and false statements to the left. The final slide in Fig. 3 summarizes the learner’s performance on this gamified quiz and offers a chance to play again. The chosen platform provides a way for learners to trade in their stars for prizes if the administrator decides to activate it. The microlesson applied the design principle of short exercises (Jahnke et al. 2019). The purpose was to engage users by requiring action when using the content.

(4) Receiving instant automated feedback on performance.

The final step of the microlesson included feedback for students. They received immediate automated feedback on their performance from the applied exercises just described. An example is shown in Fig. 4. By tapping the arrows, the learner chose the correct answer from multiple options. Immediate feedback enabled users to correct performance on the spot and provided direction on what they need to work on.

The learning content, materials, activities, and exercises in the 5 Cs microcourse were designed in a bite-size manner; each lesson takes about 5 min. The flow of the 5 Cs mobile microcourse is shown in the Appendix Table 9.

Methods

This formative study of educational design research (McKenney and Reeves 2018) was conducted from September to November 2018 with 35 users. Participants downloaded the application (app) to their personal mobile phones. The original plan included 8 days to complete the course, but some participants took longer (see “Results” section). Participants could use the app whenever they had 5 min to complete a microlesson, e.g., while sitting on a bus or waiting in a line to get coffee.

The actual study process consisted of three steps. (Details are shown in the Appendix Table 9.) First, participants logged in and completed a precourse survey and a pretest. The precourse survey contained eight questions about basic demographic information (e.g., gender), position in the professional field, years of work experience, and perceptions regarding personal skills and existing knowledge about writing news for mobile audiences. The pretest measured the initial knowledge of learners before the microcourse. Second, participants completed the microlessons step by step, then answered a survey regarding their learning perceptions. In each of the five postlesson surveys, five questions were included regarding participant experience, perception, and concerns. Third, after completing the course, participants took a postcourse survey and a posttest. The postcourse questionnaire included 11 questions regarding participant skills, existing knowledge, and perceptions focused on the topic. For example, “In a few words, tell us up to three things you should remember when writing news for mobile audiences” (Q1). “I learned new things about how to write for mobile audiences” (Q2). “I am confident in my skills to write news stories for mobile audiences” (Q6). The posttest measured the gained knowledge of learners after completing the course.

Our hypothesis for the pretests and posttests was that the knowledge level would be higher after the course than before it. The pre- and posttests measured individual participants’ learning growth (La Barge 2007). Both the pre- and posttests had the same ten multiple-choice questions. The correct answers were created by an expert journalist. Questions #1 to #9 asked the learners to select the best headline from three choices. For each of those nine questions, the correct answer was the headline that led to at least a doubling in readers of a story on a major metropolitan newspaper’s website. One of the incorrect choices was the headline it replaced in an effort to improve reader traffic, and the third choice was a distractor. Question #10, “Which technique can be applied to chunk news stories?” was also a multiple-choice question.

All surveys, online questions, and tests were included in the mobile application delivering the mobile microlearning course.

Data analysis

Qualitative data, such as responses to open-ended questions in the surveys, were analyzed with a thematic analysis approach (Boyatzis 1998). Quantitative data included the total course-completion time, the average completion time of each microlesson, Likert-scale responses to certain questions in the pre- and postcourse surveys, and pre- and posttest scores. These data were analyzed with three statistical methods: a paired sample t-test, a one-way ANOVA, and a Pearson correlation coefficient comparison (Benesty et al. 2009; Park 2009).

For the pre- and posttests, the study used the same ten questions. The test scores were analyzed in two ways: a traditional scoring method of correct/incorrect answers (Shadiev et al. 2018) and a qualifier scoring method (La Barge 2007). For the traditional scoring method, learners who correctly answered one question could obtain 10 points, so that the maximum score for the test was 100 points. For the qualifier method, participants were given two qualifier options after each test question: “I knew the answer” and “I was guessing.” Examples are in Fig. 5. This method gave additional information on whether the answer was a lucky guess or whether learners were applying knowledge. Questions in which the learners indicated that they were guessing were counted as incorrect in the qualifier method for determining the number of correct responses (La Barge 2007).
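The two scoring methods can be illustrated with a minimal sketch. The `Response` record and the example answers are hypothetical and are not the study's data; scaling the qualifier method to the same 10 points per question is also an assumption made here so the two scores are comparable.

```python
from dataclasses import dataclass

POINTS_PER_QUESTION = 10  # ten questions, 100 points maximum (from the text)


@dataclass
class Response:
    """One answered test question (hypothetical record layout)."""
    correct: bool  # did the learner pick the keyed answer?
    guessed: bool  # True if the learner chose "I was guessing"


def traditional_score(responses):
    """Award 10 points for every correct answer."""
    return POINTS_PER_QUESTION * sum(r.correct for r in responses)


def qualifier_score(responses):
    """Count a correct answer only if it was marked "I knew the answer"."""
    return POINTS_PER_QUESTION * sum(
        r.correct and not r.guessed for r in responses
    )


# Made-up example: 7 correct answers, 2 of which were lucky guesses.
example = (
    [Response(correct=True, guessed=False)] * 5
    + [Response(correct=True, guessed=True)] * 2
    + [Response(correct=False, guessed=True)] * 3
)
print(traditional_score(example))  # 70
print(qualifier_score(example))    # 50
```

The gap between the two scores (here 20 points) is the part of the traditional score that the learner attributed to guessing.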

The study investigated the users’ learning growth (i.e., gained score) by the comparison of pre- and posttest scores. Adopted from the Missouri Department of Education’s Setting Growth Targets for Student Learning Criteria (2015), five-tiered growth targets were applied as measurement criteria for effective learning.

Tier One (Beginning): Pretest scores ranged from 0 to 40 out of 100 points. Learners in Tier One should reach a minimum expected target score of 60 points on the posttest to indicate effective learning.

Tier Two (Far but Likely): Pretest scores ranged from 41 to 60 points. Learners in Tier Two should reach a minimum expected target score of 70 points on the posttest to indicate effective learning.

Tier Three (Close to Proficient): Pretest scores ranged from 61 to 75 points. Learners in Tier Three should reach a minimum expected target score of 80 on the posttest to indicate effective learning.

Tier Four (Proficient): Pretest scores ranged from 76 to 85 points. Learners in Tier Four should reach a minimum expected target score of 90 on the posttest to indicate effective learning.

Tier Five (Proficient and Expert): Pretest scores ranged from 86 to 100 points. Learners in Tier Five should reach a minimum expected target score of 95 on the posttest to indicate effective learning.

We applied the three methods (traditional-score analysis, qualifier-score analysis, and learning-growth target score analysis) and have defined effective learning as follows. First, at least 80% of the learners obtain higher scores in the posttest after completing the mobile microcourse. Second, the average score (mean score) of learners in the posttest should be higher than in the pretest. Third, at least 65% of learners achieve the growth target postscore for their tier-level group (Fiore et al. 2017; PowerSchool 2016).
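As a rough sketch, the tier targets and the three criteria can be combined into a single check. The function names and the sample score pairs are hypothetical; the thresholds (80%, higher mean, 65%) and the tier boundaries are the ones stated above.

```python
# (upper bound of the pretest range, minimum posttest target) for Tiers One to Five
TIERS = [(40, 60), (60, 70), (75, 80), (85, 90), (100, 95)]


def growth_target(pretest):
    """Return the minimum expected posttest score for a pretest score."""
    if not 0 <= pretest <= 100:
        raise ValueError("pretest score must be between 0 and 100")
    for upper, target in TIERS:
        if pretest <= upper:
            return target


def is_effective(pairs):
    """Apply the three criteria to a list of (pretest, posttest) scores."""
    n = len(pairs)
    share_improved = sum(post > pre for pre, post in pairs) / n
    mean_pre = sum(pre for pre, _ in pairs) / n
    mean_post = sum(post for _, post in pairs) / n
    share_on_target = sum(
        post >= growth_target(pre) for pre, post in pairs
    ) / n
    return (
        share_improved >= 0.80        # criterion 1
        and mean_post > mean_pre      # criterion 2
        and share_on_target >= 0.65   # criterion 3
    )


# Made-up scores for five learners, one per tier (NOT the study's data).
pairs = [(30, 60), (50, 70), (70, 80), (80, 90), (90, 90)]
print(is_effective(pairs))  # True
```

In this made-up sample the Tier Five learner neither improves nor reaches the target of 95, yet the group as a whole still meets all three thresholds.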

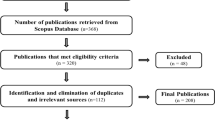

Participant recruitment

Thirty-five participants were recruited for the study, including 28 women and 7 men. The required sample size was estimated according to Eng (2003), who offers a sample size calculation method for comparative research studies (Eq. (1) in Eng 2003, p. 310). According to this equation, the sample size calculated for the study was 31.4. In the calculation, the estimated standard deviation (SD) and the estimated minimum expected difference (D) between the pre- and posttests’ mean scores were each set to 10 points, the single unit score for each question item. The significance criterion was .05 (z = 1.96), and the statistical power was .80 (z = .842) (Eng 2003, Table 1 and Table 2, p. 311). Accordingly, the study met the minimum expected sample size (n = 31.4) by recruiting 35 participants.
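The reported value can be reproduced with a short calculation. The sketch below assumes that Eq. (1) has the common form N = 4·SD²·(z_crit + z_pwr)² / D² for comparing two means; the function and parameter names are ours.

```python
def total_sample_size(sd, min_difference, z_crit=1.96, z_power=0.842):
    """Sample size for comparing two means (assumed form of Eng's Eq. 1).

    z_crit  = 1.96  corresponds to a significance criterion of .05
    z_power = 0.842 corresponds to a statistical power of .80
    """
    return 4 * sd**2 * (z_crit + z_power) ** 2 / min_difference**2


n = total_sample_size(sd=10, min_difference=10)
print(round(n, 1))  # 31.4
```

Because SD and D are both 10 points, they cancel, and the result depends only on the two z values.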

Participants had to be journalism students, journalism educators, or journalists to qualify for the study. At the time of the study, 14 participants reported up to 5 years of work experience in journalism; five had worked 6–10 years; nine had worked 11–19 years, and seven had worked 20 years or more.

The sampling process was conducted with a journalism fellow. A list of 98 potential users was given to the research team. Those on the list were clustered into four groups based on their years of experience in journalism: 37 individuals with 0–5 years of experience, 15 individuals with 6–10 years of experience, 23 individuals with 11–19 years of experience, and 23 individuals with 20 or more years of experience. The research team randomly selected 35 users. Whenever a participant dropped out, the next user on the list was invited, and so on, until 35 users had completed the microcourse, surveys, and tests. Participants were asked to use their personal smartphones. As an incentive, each participant who went through the entire course and completed the tests and online questionnaires received a $50 gift card.

Results

In this section, the study results are described and organized by the efficiency, effectiveness, and appeal of the mobile microlearning course design.

Efficiency

Time to complete the mobile microcourse and each lesson

The time to complete the course was expected to be 8 days or less. The actual time that participants took to complete the course varied with 13 participants taking 1–3 days, four participants taking 4–5 days, five participants taking 6–8 days, and 13 participants taking more than 8 days. The average time to complete the course for all 35 participants was 8.8 days.

Learning duration of each lesson

The participants’ average time spent on each lesson ranged from 4.4 to 4.9 min, with an average of 4.7 min. The average time spent on each lesson is listed below from the longest to the shortest time.

- Be Conversational: 4.9 min
- Be Considerate: 4.9 min
- Be Chunky: 4.7 min
- Be Contextual: 4.5 min
- Be Concise: 4.4 min

In response to the question of whether the lesson was (a) too long (coded point 3), (b) about right (coded point 2), or (c) too short (coded point 1), the mean score was 1.86 (SD = .36), and most of the participants (86%) said that each microlesson’s length was about right.

Effectiveness

Participants’ perception of difficulty levels about the course

Participants were asked about the difficulty level of each lesson by responding to whether the lesson was (a) too hard (coded point 3), (b) about right (coded point 2), or (c) too easy (coded point 1). The mean score was 2.06 (SD = .34), and most of the participants (88%) said the difficulty level in each lesson was about right.

Participants’ comfort level in using mobile technology

Participants’ comfort level in using mobile technology was assessed based on their response to Q7 in the precourse survey: “I am comfortable using mobile technology.” Their average comfort level in using mobile devices was 4.31 points (on a 5-point Likert scale, 5 = strongly agree). About half of the participants (49%) strongly agreed, and 40% agreed that they felt comfortable using mobile devices. Eight percent expressed a neutral opinion, and 3% strongly disagreed that using mobile technology was comfortable for them. A one-way ANOVA showed significant differences (F(3, 31) = 2.968, p < .001) among the four experience groups in their comfort level in using mobile technology. All statistical tests in the study used a 95% confidence level (see Table 1).

Moreover, Table 2 shows the results of Fisher’s Least Significant Difference post hoc test (a pairwise comparison of each group with every other group). The groups were A (0–5 years of experience), B (6–10 years), C (11–19 years), and D (20 or more years). Group C (M = 3.88, SD = 1.36) was significantly different from Group A (M = 4.47, SD = .51, p = .009) as well as Group B (M = 4.04, SD = .55, p = .045). This means that participants in Group C (11–19 years of job experience) reported a lower comfort level in using mobile technologies than participants with 10 or fewer years of job experience. The difference between Group C and Group D (M = 4.29, SD = .76, p = .135) was not statistically significant. Likewise, comparing Group D with Group A (M = 4.47, SD = .51, p = .342) and Group B (M = 4.04, SD = .55, p = .507) showed no significant differences in comfort levels in using mobile technologies.

Participants’ confidence in writing news headlines and stories for mobile audiences

Participants were asked about their confidence in writing news headlines for mobile audiences in Q6 of the precourse survey and Q5 of the postcourse survey. They rated the statement, “I am confident in my skills to write news headlines for mobile audiences,” on a 5-point Likert scale (5 = strongly agree). A paired sample t-test indicated that participants’ confidence level in writing news headlines was significantly higher after they completed the course (M = 3.86, SD = .81) than before the course (M = 3.06, SD = .97), t(34) = −5.253, p < .001 (see Table 3).

Participants were also asked about their confidence in writing news stories for mobile audiences in Q8 of the precourse survey and Q6 of the postcourse survey by rating their agreement with the statement, “I am confident in my skills to write news stories for mobile audiences,” on a 5-point Likert scale (5 = strongly agree). A paired-sample t-test indicated that participants’ confidence level in writing news stories was significantly higher after the course (M = 4.23, SD = .81) than before the course (M = 3.60, SD = 1.09), t(34) = −3.421, p = .002 (see Table 3).
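The two paired-sample results can also be read as standardized effect sizes. The following sketch is not part of the study's analysis: it assumes the common conversion for paired designs, d_z = |t| / sqrt(n), and uses only the t values and the sample size (n = 35, implied by 34 degrees of freedom) reported above. The function name `cohens_dz` is hypothetical.

```python
import math


def cohens_dz(t_stat: float, n: int) -> float:
    """Effect size for a paired design, d_z = |t| / sqrt(n) (assumed conversion)."""
    return abs(t_stat) / math.sqrt(n)


N_PARTICIPANTS = 35  # t(34) implies 35 paired observations

# t values as reported in the text for the two confidence items
reported_t = {"headlines": -5.253, "stories": -3.421}

for skill, t_stat in reported_t.items():
    print(f"{skill}: d_z = {cohens_dz(t_stat, N_PARTICIPANTS):.2f}")
# headlines: d_z = 0.89
# stories: d_z = 0.58
```

Under this conversion, the gain in headline-writing confidence is the larger of the two effects, which agrees with the larger mean difference reported for that item.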

Participants’ confidence in writing mobile news after each of the five microlessons

Participants were asked about their confidence in writing mobile news after completing each microlesson. Q1 in each postlesson survey was, “I feel more confident in writing mobile news after completing this lesson” (on a 5-point Likert scale, 5 = strongly agree). Figure 6 shows that the majority of participants agreed that they had more confidence in writing after completing each lesson.

Among the five lessons, Be Contextual had the lowest agreement at 63% (20% strongly agree and 43% agree), so we looked into the 37% of responses rated as neutral or disagree. These participants (P) said in the open-ended survey that the Be Contextual lesson was too short and that it would be better to include more examples and practice for this topic. For example, P5 mentioned, “This lesson could have been a little longer.” P15 mentioned, “[It] need[s] more examples,” and P25 said, “[It] could have used more practicing.” Accordingly, compared to the other microlessons, the lower confidence level in writing mobile news contextually (63%) appears to indicate that the lesson was too short and needs more examples and practice for learners to master the skills of being contextual and, consequently, to have more confidence in applying them.

Participants’ knowledge of how to write news for mobile audiences

Q6 of the precourse survey and Q1 of the postcourse survey asked, “In a few words, tell us up to three things you should remember when writing news for mobile audiences.” The purpose of this question was to assess whether the learners’ cognitive knowledge of how to write news for mobile audiences changed after the 5 Cs course. Table 4 shows that the 35 learners’ cognitive knowledge was impacted by the microlessons covering the 5 Cs: Be Conversational, Be Contextual, Be Concise, Be Considerate, and Be Chunky. Before the course, participants’ responses could be clustered into 14 themes (see Table 4). After the course, participants’ responses were clustered into two themes. The first theme focused on the 5 Cs of writing conversationally, considerately, concisely, contextually, and in a chunky way (see Table 4). After the course, 32 of the 35 participants (91.4%) were able to list the concepts and relevant knowledge about the 5 Cs and 3 of the 35 participants (8.6%) provided other comments in addition to comments related to the 5 Cs.

Pretest and posttest results

The pretest and posttest results were analyzed by two methods including the traditional scoring method and the qualifier scoring method (see the “Methods” section).

Figure 7 shows the traditional-analysis test scores before (gray line) and after (black line) the course. Results indicate that 80% of participants (28 of 35) obtained higher test scores after completing the course, as shown by the black line in Fig. 7. Three of 35 participants (P29, P30, P31) received the same scores before and after the course, as shown by the black line and the gray line overlapping at numbers 29, 30, and 31 on the horizontal axis in Fig. 7. Four of 35 participants got lower scores after completing the lessons, resulting in the black line (posttest score) being lower than the gray line (pretest score) for P32–P35.

The posttest scores (M = 73.14, SD = 13.88) are statistically significantly higher than the pretest scores (M = 56.00, SD = 15.18) according to the paired-sample t-test, t(34) = − 5.823, p = .000. The microcourse had a statistically significant effect on participant performance; the average score increased 17 points in the posttest. After the course, participants had gained knowledge about how to apply the 5 Cs in news writing. However, there were individual differences. For example, P1 gained 60 points while P27 gained only 10 points.

Furthermore, results of the pre- and postcourse guessing rate show that the guessing rates of 86% (30 of 35 individuals) of participants decreased after the course. In Fig. 8, the gray line indicates the precourse guessing rate, and the black line represents the postcourse guessing rate. Three people had the same guessing rate before and after. Two people had higher guessing rates after completing the course.

A Pearson correlation coefficient was computed to assess the relationship between the test performance and guessing/knowing ratio (Benesty et al. 2009). There was no significant correlation between the learners’ pretest performance and guessing/knowing rates (guessing rate: r = .16, n = 35, p = .33; knowing rate: r = − .03, n = 35, p = .86). The pretest guessing/knowing rates are scattered among the 35 learners. However, in the posttest, there was a significant correlation between the learners’ posttest performance and guessing/knowing rates (guessing rate: r = − .34, n = 35, p = .04; knowing rate: r = .34, n = 35, p = .04). After the course, the learners’ guessing rate significantly decreased, and the knowing rate significantly increased. Tables 5 and 6 summarize the results.
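The significance of a Pearson coefficient is commonly checked by converting r to a t statistic with n − 2 degrees of freedom, t = r · sqrt(n − 2) / sqrt(1 − r²). The sketch below applies that conversion to the coefficients reported above. It is an illustration, not the authors' procedure; the helper name `r_to_t` is hypothetical, and the critical value is taken from a standard t table for df = 33.

```python
import math


def r_to_t(r: float, n: int) -> float:
    """t statistic for testing H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


N = 35
T_CRIT_DF33 = 2.0345  # two-tailed .05 critical value for df = 33 (tabulated)

# Coefficients as reported in the text
reported_r = {
    "pretest guessing": 0.16,
    "pretest knowing": -0.03,
    "posttest guessing": -0.34,
    "posttest knowing": 0.34,
}

for label, r in reported_r.items():
    t = r_to_t(r, N)
    verdict = "significant" if abs(t) > T_CRIT_DF33 else "not significant"
    print(f"{label}: t(33) = {t:.2f} ({verdict} at .05)")
```

With n = 35, |r| = .34 gives |t| of about 2.08, just above the critical value, while the pretest coefficients fall well below it. This matches the pattern of reported p values.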

Applying the Tier One to Tier Five learning growth criteria (introduced in the “Data analysis” section), the results of pre- and posttest scores analysis are shown in Table 7. In Tier One, 100% of the learners (n = 10 in pretest; n = 10 in posttest) achieved the minimum target score of 60 points on their posttest. In Tier Two, 71.42% of the learners (n = 14 in pretest; n = 10 in posttest) achieved the minimum target score of 70 points on their posttest. In Tier Three, 66.66% of the learners (n = 6 in pretest; n = 4 in posttest) achieved the minimum target score of 80 points on their posttest. In Tier Four, 40% of the learners (n = 5 in pretest; n = 2 in posttest) achieved the minimum target score of 90 points on their posttest. There was no participant in Tier Five (pretest, n = 0). Overall, 69.52% (n = 26) of learners achieved their learning-growth target scores and 30.48% (n = 9) of learners did not meet the minimum learning-growth target scores, including two learners whose scores increased but did not achieve the minimum target growth, three learners who had equal scores in the pre- and posttests, and four learners who got lower scores in their posttest (see Table 7).
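The tier-level arithmetic can be reproduced from the counts given above. The sketch below is a hedged illustration using only those counts; the function name `attainment_summary` is hypothetical. It computes two overall summaries: the unweighted mean of the four tier percentages and the pooled share of all learners who met their target. The text does not state which was used for the overall figure, so both are shown.

```python
# (tier, learners in the tier at pretest, learners who met the posttest target)
# Counts are those reported in the text; Tier Five had no learners and is omitted.
TIERS = [
    ("Tier One", 10, 10),
    ("Tier Two", 14, 10),
    ("Tier Three", 6, 4),
    ("Tier Four", 5, 2),
]


def attainment_summary(tiers):
    """Return per-tier percentages plus two overall summaries."""
    per_tier = {name: 100.0 * met / total for name, total, met in tiers}
    total_learners = sum(total for _, total, _ in tiers)
    total_met = sum(met for _, _, met in tiers)
    unweighted_mean = sum(per_tier.values()) / len(per_tier)
    pooled = 100.0 * total_met / total_learners
    return per_tier, total_met, total_learners, unweighted_mean, pooled


per_tier, met, total, mean_pct, pooled = attainment_summary(TIERS)
for name, pct in per_tier.items():
    print(f"{name}: {pct:.2f}%")
print(f"Learners meeting target: {met} of {total}")
print(f"Mean of tier percentages: {mean_pct:.2f}%")
print(f"Pooled share of learners: {pooled:.2f}%")
```

The unweighted mean of the tier percentages comes to 69.52%, which matches the overall figure reported above, whereas 26 of 35 learners is 74.29% when pooled. Rounded to two decimals, Tier Two and Tier Three come out as 71.43% and 66.67%; the reported 71.42% and 66.66% appear to be truncated rather than rounded.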

For the qualifier scoring results, Table 8 presents the percentage of the 35 participants who had the correct answers (using traditional scoring) on the pre- and posttests (see % Correct in Table 8) and the percentage of participants who obtained qualified correct answers (using qualifier scoring). A score was qualified when the participant got the correct answer and selected “I knew the answer” on the follow-up question (see % Select I Know in Table 8).

Under the traditional-scoring calculation, results indicate that, on average, 55% of participants correctly selected the right answer on the pretest and 72% of participants correctly selected the right answer on the posttest (see Table 8), an increase of 17 points.

For the qualifier-scoring calculation, the analysis was based on whether the participant selected “I knew the answer” or “I was guessing” on the follow-up question after each test item. If the participant answered correctly and also selected “I knew,” the item was counted as a correct answer. If the participant answered correctly and also selected “I was guessing,” the item was counted as an incorrect answer. Accordingly, results show that the actual knowledge gained by participants in the course increased by 55 points, to 82% correct after the course from 27% before the course. Moreover, participants’ guessing rate decreased by 55 points, from 73% on the pretest to 18% on the posttest.
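The difference between the two scoring rules can be sketched in a few lines. This is an illustration only: the `Response` class, the function names, and the five-item answer sheet are hypothetical and are not study data. The sketch assumes that an incorrect answer counts as incorrect under both rules regardless of the follow-up selection, which the text implies but does not state.

```python
from dataclasses import dataclass


@dataclass
class Response:
    is_correct: bool  # answer matched the key
    said_knew: bool   # True for "I knew the answer", False for "I was guessing"


def traditional_score(responses):
    """Percent of items answered correctly, ignoring the follow-up question."""
    return 100.0 * sum(r.is_correct for r in responses) / len(responses)


def qualifier_score(responses):
    """Percent of items answered correctly AND marked 'I knew the answer'."""
    qualified = sum(r.is_correct and r.said_knew for r in responses)
    return 100.0 * qualified / len(responses)


# Hypothetical five-item answer sheet, for illustration only (not study data)
sheet = [
    Response(True, True),
    Response(True, False),   # correct guess: credited by traditional scoring only
    Response(False, False),
    Response(True, True),
    Response(False, True),   # wrong but confident: incorrect under both rules
]

print(traditional_score(sheet))  # 60.0
print(qualifier_score(sheet))    # 40.0
```

In this toy sheet the qualifier score is lower than the traditional score because the one correct guess is not credited.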

Lastly, there was no significant difference among the four groups (based on years of job experience) in their pretest or posttest guessing or knowing rates. The one-way ANOVA result for the four groups of the pretest guessing rate: F (3, 31) = .476, p = .701 > .05. The one-way ANOVA result for the four groups of the posttest: F (3, 31) = 1.498, p = .237 > .05.

Appeal

Participants’ perception of convenience in fitting microlessons into their daily routine

Participants indicated how convenient it was to fit the microlessons into their daily routine on item #4 of the postcourse survey by rating their agreement with the statement, “Fitting the short lessons into my daily routine was convenient,” on a 5-point Likert scale (5 = strongly agree). The average score was 4.29. Most participants (46% strongly agree and 40% agree) said that it was convenient to fit the short lessons into their daily routine. Among the four groups classified by work experience, all ranked similarly with averages between 4.00 and 4.43.

Participants’ perceptions about the course

The postcourse survey collected data regarding effectiveness and recommendations. In item #2, participants rated their agreement as to whether they learned new things about how to write for mobile audiences on a 5-point Likert scale (5 = strongly agree). The average response was 4.31, with 49% strongly agreeing, 40% agreeing, 8% reporting a neutral opinion, and 3% strongly disagreeing. Using the same Likert scale, participants indicated whether exercises in the course were fun on item #3. The average score was 4.17, with 31% strongly agreeing, 54% agreeing, 15% reporting a neutral opinion, and no participants disagreeing or strongly disagreeing.

Recommendability

All 35 participants agreed that they would recommend the course. Many of the participants mentioned the course was interesting, short, helpful, convenient, and easy to use. Participants were asked to highlight one activity in the course they found helpful. In response, many of the participants (P) mentioned things such as “the interactive exercises such as writing their own headlines” (P6), “visual quizzes” (P28), “multiple choice questions in the review section” (P13), and “real-life examples” (P27).

They also proposed having more practical examples and explanations to aid in understanding the course content. Some participants (P) shared positive comments. P15 said, “I liked the ability to strike the words from the sentences in the beginning. That was a pleasant interactive experience.” P10 explained, “Seeing different examples of how two different headlines on the same story performed helped me understand how writing more conversational headlines can drive engagement and interest among readers.”

Participants also shared concerns. P3 said, “I did not like the true/false game at the end. The statements were too long to read in the short amount of time and I found myself struggling.” P33 stated, “The timed event made me, at times, worry more about the timer than what I was reviewing. Is it possible to make the allowed time longer?”

In addition, participants wanted to have clear navigation guidance before starting a game or an exercise and to receive personalized feedback on their responses. For example, P5 said, “How to choose an answer was sometimes confusing or not clear,” referring to the swipe feature of some exercises. P17 concluded, “I thought the lesson worked well. However, when you submit your own headline, there’s no way of knowing if it’s a good headline or not.”

Participants expressed concern about the timed true or false gamified quizzes and suggested extending the time so that they would be able to read the game instructions and answer the quiz. The quizzes whose time limit was considered too short were in the lessons Be Considerate, Be Contextual, and Be Chunky. The time to answer each true or false question was 10 s. After each timed quiz, a replay button allowed participants to play the game again as often as they wished. The rationale for the timed games was to stimulate the engagement that occurs in playing games. However, the data show that the learners did not prefer the timed element.

Discussion

The study shows that the mobile microlearning course positively supports learning effectiveness, efficiency, and appeal. However, compared to the effect of efficiency and appeal, the design for learning effectiveness has room for improvement as the course was not equally effective for all learners.

Research question 1: To what extent does the mobile microcourse increase the learners’ knowledge and skills?

Pre- and posttest results reveal the effectiveness of the microformat, with this course having significant positive effects on learning performance.

The results show that this MML course supports the learning process. The data show that 80% (n = 28) of learners obtained higher scores in their posttest, meeting the effective-learning hypothesis that at least 80% of the learners would obtain higher scores in the posttest. The learners’ average posttest score was higher than in the pretest, achieving the goal of a statistically significant increase in posttest scores (see “Pretest and posttest results” section). In addition, in the qualifier-scoring analysis (guessing vs. knowing), learners’ guessing rate in the posttest dropped by 55 points to 18%, compared to their pretest guessing rate of 73% (Table 8). This drop in the guessing rate indicates a decrease in learners’ uncertainties (Burton and Miller 1999). In the relative learning-growth analysis, 69.52% (n = 26) of learners achieved their minimum learning-growth target scores (Table 7).

However, 30.48% (n = 9) of learners did not meet the minimum learning-growth target scores. Either their posttest scores were higher than their pretest scores but did not achieve the minimum target criteria, or their posttest scores were equal to or lower than their pretest scores. These learners’ performance indicates opportunities for improving the course.

Research question 2: To what extent does the mobile microcourse affect the confidence of the learners in their skills to write headlines and stories for mobile audiences?

Participants’ confidence in writing a news headline and in writing a news story for mobile audiences increased significantly after the MML course.

According to Bandura’s (1977, 2010) social cognitive theory, a person’s self-perceptions of confidence level in their capabilities to accomplish a specific performance can be defined as an individual’s self-efficacy. The greater confidence people have that they can complete a task, the more self-efficacy they possess to achieve the task. In keeping with this concept, the study found that the mobile microcourse increased learners’ confidence level, an indicator for self-efficacy. However, the increased confidence in writing news content for mobile audiences is related to the MML experience in its entirety. We have no data to indicate whether one element of the MML experience supported confidence more than other elements. Further studies are needed.

Research question 3: What is the learner experience when interacting with mobile microlessons?

All the participants would recommend the mobile microlearning course to other journalists who want to learn how to write news for mobile audiences. Also, most of the participants agreed that they had learned new things (89%) and had enjoyed learning (76%). Participants described the course as “fun,” “interesting,” and “short and helpful.” One noted the course “provided excellent tips and insights for writing for mobile in a fun and non-time-consuming format.” They specifically mentioned “the interactive quizzes, games, and exercises such as writing their own headlines,” “multiple-choice questions in the final review section,” and “concrete, different, and real-world examples” as useful parts of the course. Adapting Honebein and Honebein (2015), we argue that appeal means students like the entire MML experience; the positive perceptions in this study indicate that the mobile microlearning course was appealing to the learners. However, some (n = 9) said that even more practical examples and explanations of the learning concepts would aid in understanding the course content. Additionally, eight participants said that they had had difficulty completing the timed exercises. Overall, the participants’ responses confirmed that interactive microcontent, exercises, and instant automated feedback can be valuable design elements for mobile microlessons.

In summary, the results show that the MML course met its goals for learning effectiveness and increased learners’ confidence in applying the skills it taught, and that the MML course is efficient and appealing to learners. However, room remains to improve its learning effectiveness for some learners.

Recommended improvements for MML course design

This formative study offers new insights into how to design MML courses to increase their efficiency, effectiveness, and appeal.

The first insight refers to our applied MML design principle #4: automated instant feedback. The theory was that automated feedback delivered instantly is useful for learners. In this MML course, the feedback system gave the correct answers to questions in quizzes and exercises; it also provided an explanation for why a specific answer was correct. The automated system worked well when there was only one correct answer. However, for example, when learners were given an open-ended item, such as writing a news headline, the feedback system could offer only one correct headline and explain why it was correct. It could not comment on that learner’s particular headline and explain how it could be better. Learners indicated that they wanted personalized feedback so that they were able to understand the gap between what they already knew or learned and where to improve. Accordingly, automated feedback should include more personalized, meaningful, and differentiated feedback, instead of generalized automated feedback, which can be a key point for designing a better feedback system in MML. One option would be to use a chatbot, in which the automated feedback system can be trained through machine learning to give personalized feedback (Smutny and Schreiberova 2020). Other options might include a mix of automated generalized feedback and feedback by an instructor. To keep the self-paced nature of MML, one-time personalized feedback from an instructor could be integrated, allowing learners to request further feedback. Further research is needed.

The second insight refers to MML design principle #3: apply learned content in short exercises. Our course applied different types of short exercises in each lesson, such as dragging and dropping to add missing words, tapping quote bubbles to chunk a story, or evaluating true or false flash cards. Several of these tasks had a time limit. However, the study indicates that the time allowed was too short. When there is not sufficient time to understand the instruction or the gamified task, learners may feel frustration, which is counterproductive to learning effectiveness. Future research is needed to explore the time restriction and the learning context to determine how much time is effective for each context. Learners from journalism may have different needs than learners from computer science.

The third insight refers to MML design principle #2: interactive content. This study shows the relevance of combining real-world examples with interactive learning content to increase the appeal of the MML course. Real-world examples are authentic for the learner, are age appropriate, and connect the learner’s interests and knowledge with the real world. For example, when learning how to write concisely, best practice is to use an example of wordy versus concise that focuses on things the learner likes and finds interesting. In other words, a real-world example from a relevant news article or social media is more effective than an example from Shakespeare. Through the real-world examples, learners can easily connect their conceptual knowledge to the new situation. Interactive real-world content can be supported with real-life images and a selection of text in which the user applies drag and drop to indicate which image correctly corresponds to which text.

Lastly, MML is meant for the small screens of smartphones, and text that is too long can frustrate the learner. Clear, concise sentences matter. MML does not support the same amount of verbiage that is used in learning management systems designed for desktop or laptop use. Further research is needed to investigate how much content to include and how to present it for efficient learning. In general, while the participants’ requests for more information might be logical from their perspective, more examples or longer lessons might be counterproductive to the idea of MML. Further research is needed on the optimal length and level of difficulty for MML lessons. In addition, as Reeves and Lin (2020) say, technology-enhanced learning is always embedded into certain contexts, and studies of MML have to understand the different contexts to foster the learning experiences.

Implications

Our study shows that the 5 Cs mobile microlearning course positively affects learning efficacy by increasing learners’ knowledge about writing news for mobile audiences. For MML lessons, which should take no more than 5 min, the study points to the importance of a four-step sequence. These steps are (a) an aha moment, (b) interactive content, (c) short gamified exercises, and (d) instant automated feedback. This study shows that such a microcourse designed in this way can efficiently support learning.

Does this research on mobile microlearning show that MML should be applied in all of higher education as a substitute for other online courses? No, this is not the message. The message is that for certain topics, which are rather small and can be chunked into nugget-size units (e.g., video editing, computer programming, business marketing, etc.), mobile microlearning is useful when applying the four specific instructional flow design principles. However, using MML to convey deeper learning concepts, such as meaningful learning with technologies or other more complex topics, is challenging. According to Howland et al. (2013), meaningful learning supports the higher order thinking of analyzing and creating. For such concepts, cooperative learning is one important element. Meaningful learning goes beyond the lower order thinking skills of recalling facts or understanding. The applied design principles of MML proposed in this article certainly helped learners gain lower order thinking skills, such as remembering the 5 Cs concepts and understanding when and how to apply the concepts to write a mobile headline or a story. However, MML has limitations in supporting learners with collaborative learning or higher order thinking as it does not offer learners the chance to collaborate with others to expand and solidify their learning in a broader context. Learners do not get the option to analyze, synthesize, or create new products in order to develop higher order thinking skills.

Instead, MML fosters learners’ click activities that support a quick scanning of information rather than constructing deeper knowledge. The issues discussed here contribute to the broader discourse of automating human activities in the digital age (e.g., creating artifacts that think for humans) versus enriching and empowering teachers and students with technologies (e.g., creating artifacts to think with). MML tends to focus on the automation of learning and supports a learning approach where the answer is known, but it may not be useful for learning when the answer is not known. Further research is required.

Despite these limitations, mobile microlearning is not devoid of usefulness. However, it is crucial to remember that it is not the only way of learning. Our world provides complex questions where the answer is not yet known (e.g., challenges of environmental issues), and MML cannot help there, at least not in its current design. We, therefore, encourage mobile microlearning designers and developers to offer different points of entry into learning. For example, one can be the mobile microlearning way (e.g., to make people curious) and another might be a deeper or more meaningful approach. One can build on the other to encourage learners to get into the details. In addition, as shown by Major and Calandrino (2018), a revised mobile microlearning design can go beyond chunking. Their study illustrates how microlearning can be used for deeper learning. In it, students were encouraged to use mobile devices to connect the subject matter with their everyday lives as well as the world around them. Learners uploaded photos or short videos of what they had applied or created, and coaches gave feedback in a timely manner.

Limitations

The results reflect a specific population: journalists seeking to improve professional skills. Moreover, this study was of a quantitative–exploratory nature. Including more participants at a future date to increase the reliability of the results is recommended because the sample size of the current study was only 35 participants. Attempting a similar study across different teaching fields might also be useful. This study did not follow up to check the participants’ retained knowledge after weeks or months.

In the study, participants were allowed to access the mobile microcourse whenever they found time in order to mirror the real-world scenario of journalists in the field with a need to know. All participants took the pretest, course, and posttest at their own pace instead of having them all do the pretest on the same day, then take the course at their own pace, and later take the posttest on the same day. Further research is needed to understand how this may or may not affect the study results.

The study adopted the Missouri Department of Education Setting Growth Targets Criteria (2015) and used the 5-Tier pre–post metrics to assess participants’ learning growth. Learners in the study were college students and journalism professionals (e.g., journalists and journalism educators), while the original target learners of the Missouri Department of Education criteria were K-12 learners. Future research is needed on the reliability and validity of these pre–post metrics in postsecondary settings.

This course was made for the small screens of smartphones, so the content cannot be long without scrolling. This limitation may impede the ability to follow widely accepted instructional design principles, for example, how to formulate learning goals and objectives, as the length of text would not fit on the screen. Future research is needed to explore how to design meaningful goals and learning objectives for small screens (cf. Mager 1988).

Conclusion

The study provides evidence that mobile microlearning for journalism education is effective at increasing journalists’ skills in writing news for mobile readers. This MML course incorporated several critical design principles of mobile microlearning. The five microlessons, no more than 5 min each, followed a specific sequence of (a) an aha moment that helps the learner grasp the relevance of the topic, (b) interactive content, (c) short exercises, and (d) instant automated feedback. The course supported the learning of the participants, increased their knowledge and skills, and increased their confidence in writing news for mobile audiences.

Results show that mobile microlearning can be effective and efficient in supporting learning and appealing to learners. However, the course was not equally effective for all learners, leaving room for improvement. Future research on mobile microlearning could consider the specific design principles proposed in this study as applied to a broader audience or other professional fields.

References

Ahmad, N. (2018). Effects of gamification as a micro learning tool on instruction. E-Leader International Journal, 13(1), 1–9.

Aitchanov, B., Zhaparov, M., & Ibragimov, M. (2018). The research and development of the information system on mobile devices for micro-learning in Educational Institutes. Proceedings of 2018 14th International Conference on Electronics Computer and Computation (ICECCO) (pp. 1–4). https://doi.org/10.1109/ICECCO.2018.8634653.

Andoniou, C. (2017). Technoliterati: Digital explorations of diverse micro-learning experiences (7). Resource document. https://dspace.adu.ac.ae/handle/1/1141. Accessed 7 November 2017.

Baehr, C. M., Baehr, C., & Schaller, B. (2010). Writing for the Internet: A guide to real communication in virtual space. ABC-CLIO. Santa Barbara, California: Greenwood Press.

Baek, Y., & Touati, A. (2017). Exploring how individual traits influence enjoyment in a mobile learning game. Computers in Human Behavior, 69, 347–357.

Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215.

Bandura, A. (2010). Self-efficacy. The Corsini encyclopedia of psychology. Hoboken, NJ: Wiley.

Benesty, J., Chen, J., Huang, Y., & Cohen, I. (2009). Pearson correlation coefficient. In Noise reduction in speech processing. Berlin: Springer. https://doi.org/10.1007/978-3-642-00296-0_5.

Berge, Z. L., & Muilenburg, L. (Eds.). (2013). Handbook of mobile learning. New York: Routledge.

Boyatzis, R. E. (1998). Transforming qualitative information: Thematic analysis and code development. Thousand Oaks, CA: Sage.

Brom, C., Levčík, D., Buchtová, M., & Klement, D. (2015). Playing educational micro-games at high schools: Individually or collectively? Computers in Human Behavior, 48, 682–694.

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32–41.

Bursztyn, N., Shelton, B., Walker, A., & Pederson, J. (2017). Increasing undergraduate interest to learn geoscience with GPS-based augmented reality field trips on students’ own smartphones. GSA Today, 27(6), 4–10.

Burton, R. F., & Miller, D. J. (1999). Statistical modelling of multiple-choice and true/false tests: Ways of considering, and of reducing, the uncertainties attributable to guessing. Assessment & Evaluation in Higher Education, 24(4), 399–411.

Cairnes, R. (2017). Beyond the hype of micro learning: 4 steps to holistic learning. Resource document. https://www.insidehr.com.au/hype-micro-learning-4-steps-holistic-learning/. Accessed 14 October 2017.

Callisen, L. (2016). Why micro learning is the future of training in the workplace. Resource document. https://elearningindustry.com/micro-learning-future-of-training-workplace. Accessed 14 October 2017.

Cates, S., Barron, D., & Ruddiman, P. (2017). MobiLearn go: Mobile microlearning as an active, location-aware game. Proceedings of the 19th International Conference on Human–Computer Interaction with Mobile Devices and Services (pp. 1–7). https://doi.org/10.1145/3098279.3122146.

Clark, R. E. (1994). Media will never influence learning. Educational Technology Research and Development, 42(2), 21–29.

Clark, H., Jassal, P. K., Van Noy, M., & Paek, P. L. (2018). A new work-and-learn framework. In D. Ifenthaler (Ed.), Digital workplace learning (pp. 23–41). New York: Springer.

Dai, H., Tao, Y., & Shi, T. W. (2018). Research on mobile learning and micro course in the big data environment. Proceedings of the 2nd International Conference on E-Education, E-Business and E-Technology (pp. 48–51).

Decker, J., Hauschild, A. L., Meinecke, N., Redler, M., & Schumann, M. (2017). Adoption of micro and mobile learning in German enterprises: A quantitative study. Proceedings of European Conference on e-Learning (pp. 132–141).

Dingler, T., Weber, D., Pielot, M., Cooper, J., Chang, C.-C., & Henze, N. (2017). Language learning on-the-go: Opportune moments and design of mobile microlearning sessions. Proceedings of the 19th International Conference on Human–Computer Interaction with Mobile Devices and Services (pp. 1–12). https://doi.org/10.1145/3098279.3098565.

Emerson, L. C., & Berge, Z. L. (2018). Microlearning: Knowledge management applications and competency-based training in the workplace. Knowledge Management & E-Learning: An International Journal, 10(2), 125–132.

Eng, J. (2003). Sample size estimation: How many individuals should be studied? Radiology, 227(2), 309–313.

Fang, Q. (2018). A study of college English teaching mode in the context of micro-learning. Proceedings of the 2018 International Conference on Management and Education, Humanities and Social Sciences (MEHSS 2018) (pp. 235–239). https://doi.org/10.2991/mehss-18.2018.50.

Fiore, S. M., Graesser, A., Greiff, S., Griffin, P., Gong, B., Kyllonen, P., et al. (2017). Collaborative problem solving: Considerations for the national assessment of educational progress. Washington, D.C.: National Center for Education Statistics.

Gagne, R. M., Briggs, L. J., & Wager, W. W. (1992). Principles of instructional design (4th ed.). Fort Worth, TX: Harcourt Brace Jovanovich College Publishers.

Giurgiu, L. (2017). Microlearning an evolving elearning trend. Scientific Bulletin, 22(1), 18–23.

Göschlberger, B., & Bruck, P. A. (2017). Gamification in mobile and workplace integrated microLearning. Proceedings of iiWAS ’17: The 19th International Conference on Information Integration and Web-based Applications & Services (pp. 545–552). https://doi.org/10.1145/3151759.3151795.

Grant, M. M. (2019). Difficulties in defining mobile learning: Analysis, design characteristics, and implications. Educational Technology Research and Development, 67(2), 361–388.

Honebein, P. C., & Honebein, C. H. (2015). Effectiveness, efficiency, and appeal: Pick any two? The influence of learning domains and learning outcomes on designer judgments of useful instructional methods. Educational Technology Research and Development, 63(6), 937–955.

Howland, J. L., Jonassen, D. H., & Marra, R. M. (2013). Meaningful learning with technology: Pearson new international edition. New York: Pearson Higher Ed.

Hui, B. I. A. N. (2014). Application of micro-learning in physiology teaching for adult nursing specialty students. Journal of Qiqihar University of Medicine, 21(61), 3219–3220.

Jahnke, I., Lee, Y. M., Pham, M., He, H., & Austin, L. (2019). Unpacking the inherent design principles of mobile microlearning. Technology, Knowledge and Learning, 25(3), 585–619.

Jing-Wen, M. (2016). A design and teaching practice of micro mobile learning assisting college English teaching mode base on WeChat public platform. Conference Proceedings of 2016 2nd International Conference on Modern Education and Social Science (pp. 250–255). https://doi.org/10.12783/dtssehs/mess2016/9595.

Jonassen, D. H., Campbell, J. P., & Davidson, M. E. (1994). Learning with media: Restructuring the debate. Educational Technology Research and Development, 42(2), 31–39.

Kabir, F. S., & Kadage, A. T. (2017). ICTs and educational development: The utilization of mobile phones in distance education in Nigeria. Turkish Online Journal of Distance Education, 18(1), 63–76.

Khurgin, A. (2015). Will the real microlearning please stand up? Association for Talent Development. Resource document. https://www.td.org/insights/will-the-real-microlearning-please-stand-up. Accessed 21 January 2018.

Kovacs, G. (2015). FeedLearn: Using Facebook feeds for microlearning. Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (pp. 1461–1466). https://doi.org/10.1145/2702613.2732775.

Kozma, R. B. (1994). Will media influence learning? Reframing the debate. Educational Technology Research and Development, 42(2), 7–19.

Kozma, R. (2000). Reflections on the state of educational technology research and development. Educational Technology Research and Development, 48(1), 5–15.

La Barge, G. (2007). Pre-and post-testing with more impact. Journal of Extension, 45(6). Resource document. https://www.joe.org/joe/2007december/iw1.php. Accessed 11 December 2018.

Liao, K. (2015). PressToPronounce: An output-oriented approach to mobile language learning. (Doctoral dissertation, Massachusetts Institute of Technology). Retrieved from http://hdl.handle.net/1721.1/100628.

Mager, R. (1988). Making instruction work. Belmont, CA: Lake Publishing Co.

Major, A., & Calandrino, T. (2018). Beyond chunking: Micro-learning secrets for effective online design. FDLA Journal, 3(1), Article 13, 1–5.

McKenney, S., & Reeves, T. (2018). Conducting educational design research. New York: Routledge.

Missouri Department of Elementary & Secondary Education. (2015). Setting growth targets for student learning objectives: Methods and considerations. Resource document. https://dese.mo.gov/sites/default/files/Methods-and-Considerations.pdf. Accessed 29 March 2020.

Montgomery, M. (2007). The discourse of broadcast news: A linguistic approach. New York: Routledge.

Nickerson, C., Rapanta, C., & Goby, V. P. (2017). Mobile or not? Assessing the instructional value of mobile learning. Business and Professional Communication Quarterly, 80(2), 137–153.

Nikou, S. A., & Economides, A. A. (2018a). Mobile-based micro-learning and assessment: Impact on learning performance and motivation of high school students. Journal of Computer Assisted Learning, 34(3), 269–278.

Nikou, S. A., & Economides, A. A. (2018b). Mobile-based assessment: A literature review of publications in major referred journals from 2009 to 2018. Computers & Education, 125, 101–119.

Park, M. H. (2009). Comparing group means: t-tests and one-way ANOVA using Stata, SAS, R, and SPSS. Working paper, Centre for Statistical and Mathematical Computing, Indiana University. Resource document. https://scholarworks.iu.edu/dspace/handle/2022/19735. Accessed 25 October 2017.

Park, Y., & Kim, Y. (2018). A design and development of micro-learning content in e-learning system. International Journal on Advanced Science, Engineering and Information Technology, 8(1), 56–61.

PowerSchool. (2016). There’s one thing you need to do now to show student growth. Resource document. https://www.powerschool.com/resources/blog/theres-one-thing-need-now-show-student-growth/. Accessed 20 April 2020.

Reigeluth, C. M., & Carr-Chellman, A. A. (Eds.). (2009). Instructional-design theories and models, volume III: Building a common knowledge base (Vol. 3). New York: Routledge.

Reigeluth, C. M., & Frick, T. W. (1999). Formative research: A methodology for creating and improving design theories. In C. Reigeluth (Ed.), Instructional-design theories and models. A new paradigm of instructional theory (Vol. II, pp. 633–651). Mahwah, NJ: Lawrence Erlbaum Associates.

Reeves, T. C., & Lin, L. (2020). The research we have is not the research we need. Educational Technology Research and Development, 68(4), 1991–2001. https://doi.org/10.1007/s11423-020-09811-3.

Shadiev, R., Hwang, W. Y., & Liu, T. Y. (2018). Investigating the effectiveness of a learning activity supported by a mobile multimedia learning system to enhance autonomous EFL learning in authentic contexts. Educational Technology Research and Development, 66(4), 893–912.

Simons, L. P., Foerster, F., Bruck, P. A., Motiwalla, L., & Jonker, C. M. (2015). Microlearning mApp raises health competence: Hybrid service design. Health and Technology, 5(1), 35–43.

Sirwan Mohammed, G., Wakil, K., & Sirwan Nawroly, S. (2018). The effectiveness of microlearning to improve students’ learning ability. International Journal of Educational Research Review, 3(3), 32–38.

Skalka, J., & Drlík, M. (2018). Educational model for improving programming skills based on conceptual microlearning framework. Proceedings of International Conference on Interactive Collaborative Learning (pp. 923–934). https://doi.org/10.1007/978-3-030-11932-4_85.

Smutny, P., & Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education. https://doi.org/10.1016/j.compedu.2020.103862.

Sun, G., Cui, T., Beydoun, G., Chen, S., Dong, F., Xu, D., & Shen, J. (2017). Towards massive data and sparse data in adaptive micro open educational resource recommendation: A study on semantic knowledge base construction and cold start problem. Sustainability, 9(6), 1–21.

Traxler, J. (2005). Defining mobile learning. Proceedings of the IADIS International Conference Mobile Learning (pp. 261–266). Online: IADIS Press.

Umair, S. (2016). Mobile reporting and journalism for media trends, news transmission and its authenticity. Journal of Mass Communication and Journalism, 6, 323–328.

Wenger, D., Owens, L., & Thompson, P. (2014). Help wanted: Mobile journalism skills required by top US news companies. Electronic News, 8(2), 138–149.

Yang, L., Zheng, R., Zhu, J., Zhang, M., Liu, R., & Wu, Q. (2018). Green city: An efficient task joint execution strategy for mobile micro-learning. International Journal of Distributed Sensor Networks, 14(6), 1–14. https://doi.org/10.1177/1550147718780933.

Zheng, W. (2015). Design of mobile micro-English vocabulary system based on the Ebbinghaus forgetting theory. Proceedings of Internet Computing for Science and Engineering (ICICSE), 2015 Eighth International Conference (pp. 241–246). https://doi.org/10.1109/ICICSE.2015.51.

Zheng, R., Zhu, J., Zhang, M., Liu, R., Wu, Q., & Yang, L. (2019). A novel resource deployment approach to mobile microlearning: From energy-saving perspective. Wireless Communications and Mobile Computing, 2019, 1–15. https://doi.org/10.1155/2019/7430860.

Acknowledgements

We are very grateful to the study participants who took the time to complete the course. We also thank the research assistants of the Information Experience Lab (ielab.missouri.edu), in particular Minh Pham, Nathan Riedel, and Michele Kroll, who helped with data collection. Finally, we thank the Donald W. Reynolds Journalism Institute, School of Journalism at the University of Missouri-Columbia, for supporting this project.

Funding

This study was funded by the Donald W. Reynolds Journalism Institute (RJI), University of Missouri–Columbia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

See Table 9.

Cite this article

Lee, YM., Jahnke, I. & Austin, L. Mobile microlearning design and effects on learning efficacy and learner experience. Education Tech Research Dev 69, 885–915 (2021). https://doi.org/10.1007/s11423-020-09931-w

DOI: https://doi.org/10.1007/s11423-020-09931-w