Abstract

Reports show that only 40% of 4th-grade students are proficient in math and that American students are failing to demonstrate proficiency in key areas of mathematics (National Center for Educational Statistics 2011). Improving students’ fluency with basic multiplication facts has been recommended as a way to increase math proficiency (Psychology in the Schools, 47:342–353, 2010). Incremental rehearsal is one promising strategy for improving multiplication skills. In this study, three 5th-grade students with disabilities who had Individual Education Plan math goals received one-to-one multiplication instruction using an incremental rehearsal strategy twice per week for 7 weeks. Data indicated immediate and large effects with intervention and growth rates for each student that exceeded expectations. Implications are discussed, including the importance of considering both statistically significant and instructionally meaningful results in interpretation.

Students require basic math skills to experience academic success in formal schooling and success later in life. Proficiency in mathematics has been linked to admission and graduation from college, successful employment, and higher income upon employment (Adelman 2006; Ketterlin-Geller et al. 2008; National Mathematics Advisory Panel 2008; U.S. Department of Education 1997). For instance, individuals who successfully complete Algebra II in high school are twice as likely to graduate from college as individuals who fail to complete Algebra II (National Mathematics Advisory Panel 2008). There is also concern that American students fail to demonstrate proficiency in a variety of key mathematics skills when compared to students from other industrialized countries (National Mathematics Advisory Panel 2008). This sentiment continues to be reflected in annual standardized test scores, as evidenced in 2011 when only 40% of 4th-grade students scored at or above proficient on the National Assessment of Educational Progress in mathematics (National Center for Educational Statistics 2011).

To improve students’ proficiency in mathematics, a variety of areas (e.g., curriculum, instruction, assessment) need to be addressed, including students’ ability to recall math facts quickly, effortlessly, and accurately (Poncy et al. 2010). Developing automaticity with basic math facts may improve students’ ability to learn more advanced mathematics concepts (Shapiro 2011). Without automaticity, students use excessive cognitive resources (e.g., memory, processing, encoded content and context, retrieval) to solve basic math facts, and thus do not have enough cognitive mechanisms available to learn and apply skills associated with math reasoning and problem solving (Poncy et al. 2010; Varma and Schleisman 2014). Moreover, the inability to recall basic math facts quickly is a characteristic often associated with mathematics disabilities, which tend to persist into adulthood without direct intervention (Burns 2005).

Incremental Rehearsal

It has been hypothesized that U.S. students have difficulty solving single-digit calculations as quickly and accurately as students from other countries because they do not have as many opportunities to practice basic math facts (Codding et al. 2010). One simple way to increase the number of opportunities to practice basic math facts is to increase a student’s number of opportunities to respond (OTR). The automaticity of math facts is linked to the number of opportunities to respond to each math fact (Skinner et al. 1997, 2005). Although a variety of drill and practice interventions have been shown to increase a student’s OTR, research suggests the most robust intervention is incremental rehearsal (Burns 2005; Burns et al. 2004; MacQuarrie et al. 2002).

Incremental rehearsal (IR) is an explicit strategy for learning new information presented in isolated units. IR is a flashcard intervention approach shown to have robust empirical support for children with and without disabilities who have severe difficulties mastering basic skills in letter sounds, words, math facts, and vocabulary words (Burns et al. 2012). Although IR can be applied across a range of discrete academic skills, the basic premise regardless of content is to present the student with a high proportion of known items (usually eight or nine) for each unknown item using a systematic method (see Fig. 1). The goal is to maintain an overall ratio of 90% known to 10% unknown items. The frequent repetition of the unknown item(s) increases a student’s OTR and opportunities to receive corrective feedback, with the high success rates on known items supporting a student’s persistence to learn (Figs. 1, 2, 3, 4, 5, and 6). For students with disabilities, IR has been effective in academic pre-teaching interventions (Burns et al. 2004) and considerably more effective than traditional drill and practice interventions for learning discrete academic facts (Burns 2004; Burns and Boice 2009; MacQuarrie et al. 2002).

Much of the research on IR has examined the acquisition and retention of word reading (Burns 2007; Burns and Boice 2009; Joseph 2006; Joseph et al. 2012; Kupzyk et al. 2011; Nist and Joseph 2008; Szadokierski and Burns 2008), vocabulary (Burns et al. 2004, 2011; Petersen-Brown and Burns 2011), letter sounds (DuBois et al. 2014; Rahn et al. 2015; Volpe et al. 2011), and spelling (Garcia et al. 2014). Compared to the broad academic area of reading, considerably less research has been conducted on IR in the area of mathematics. Pertinent to the current study, three published peer-reviewed studies have used IR to improve multiplication fact fluency of students diagnosed with and without mathematical learning disabilities (Burns 2005; Codding et al. 2010; Zaslofsky et al. 2016).

Research on Incremental Rehearsal with Multiplication

Burns (2005) examined the use of IR with multiplication facts in 3rd-grade students who were identified with a learning disability in mathematics. During each week of the study, each student completed a unique, 2-min multiplication probe with 35 single-digit facts written vertically. Facts were scored as digits correct per minute (dc/m) and used to monitor progress. Researchers delivered IR interventions to participating students individually twice per week for 8 weeks. A student with accurate completion of 20.0 dc/m on the multiplication probe was defined as operating at “mastery level.” “Instructional level” was defined as a rate of 10.0 to 19.0 dc/m, and “frustration level” was defined as a rate of ≤ 9.0 dc/m. These criteria were suggested initially by Deno and Mirkin (1977), and were used given the lack of other mathematics fluency criteria (Burns 2005).

During baseline of the multiple baseline design, the median dc/m score for each of the three students was 3.0, 8.0, and 11.0, respectively (Burns 2005). Two of these scores were at frustration level and one score was at instructional level. After 8 weeks of IR intervention, median dc/m scores were 15.0, 25.0, and 27.0 dc/m, respectively (Burns 2005). At the conclusion of IR intervention, one student was demonstrating dc/m at instructional level and two students were at mastery level. Based on the change in scores and the criteria provided, the IR intervention was considered effective during this study. In addition, effect sizes, reported using Cohen’s d, were very large: 17.0 for student 1, 3.4 for student 2, and 4.8 for student 3 (Burns 2005). The researcher noted limitations included the time intensiveness of the IR intervention delivery and the one-to-one format.

In 2010, Codding, Archer, and Connell extended Burns’ 2005 study by investigating whether multiplication facts learned using IR could be maintained and generalized to similar stimulus conditions (e.g., fractions, word problems) in a multiple probe across problem sets design (Barlow and Hersen 1984). One 7th-grade student experiencing difficulty learning multiplication facts participated with three sets of problems (A, B, C) twice per week for 12 weeks. A student with accurate completion of 50.0 dc/m in single-digit multiplication problems was defined as operating at “mastery level” (Burns et al. 2006). “Instructional level” was defined at a rate of 24.0 to 49.0 dc/m, and “frustration level” was defined at a rate of 23.0 or fewer dc/m (Burns et al. 2006). Unlike Deno and Mirkin’s (1977) criteria, which were used in the Burns (2005) study, these criteria were derived empirically in a study by Burns et al. (2006).

The student’s median baseline dc/m for problem sets A, B, and C were 3.8, 21.1, and 22.8, respectively (Codding et al. 2010). All of these scores were at frustration level. At the end of the IR intervention for each problem set, the student’s dc/m were 50.0, 75.0, and 53.0, respectively, and all at mastery level (Codding et al. 2010). Based on the change in scores and the criteria for learning level (i.e., frustration, instructional, mastery), the treatment was considered effective during this study. Maintenance and generalization probes administered over a 3-week period after the conclusion of the IR intervention showed that the performance level reached by the end of the IR intervention was maintained and the facts learned during intervention generalized to other conditions requiring multiplication skills, including fractions and word problems (Codding et al. 2010).

Most recently, Zaslofsky et al. (2016) examined difficult two-digit-by-two-digit multiplication combinations in four modified IR conditions administered to students in 4th and 5th grade. Conditions were developed specifically to manipulate both high and low levels of opportunities to respond and response generation demands and evaluated using a two-way ANOVA. Across conditions traditional IR procedures were modified by varying the number of rehearsals and sometimes displaying the answer on the flashcard. Because of these methodological details, this IR study was least informative to the design of the current study.

The Current Study

Both Burns (2005) and Codding et al. (2010) demonstrated IR can improve fluency in multiplication facts. Although Burns (2005) investigated score changes with a focus on fluency with 3rd-grade students with math learning disabilities and Codding et al. (2010) investigated generalization of multiplication skills with one 7th-grade student experiencing math difficulties, neither researcher explicitly considered growth rate by examining slope, although Burns (2005) did report effect sizes. Examining growth rate allows us to determine if the intervention enabled students to make gains at a rate quicker than expected (Hosp et al. 2016). Moreover, with such a limited literature base on the effects of using IR to improve multiplication fluency, expansion of the literature base is warranted.

The purpose of the current study was to contribute to the research literature on using IR to improve the rate of multiplication fact fluency, with a specific focus on growth rate, for students with learning disabilities in mathematics. It was hypothesized that using IR to teach single-digit multiplication facts would produce growth rates significantly greater than those observed before intervention.

Method

Participants and Setting

This study took place in an elementary school in a suburban district in a Midwest state that uses a non-categorical model for special education eligibility and service delivery. That is, students are identified as eligible individuals (EI) rather than by a specific disability category. The school served 354 students who were predominantly white and Hispanic. Students in this district were identified for special education services using a response-to-intervention (RTI) process. Three 5th-grade students from one special education math class participated in this study. Each of these students qualified for special education and had an active Individual Education Plan (IEP) with math goals. Students were selected for participation in the study based on teacher nomination. Specifically, the teacher, who was female and had 30 years of experience as a special educator, selected students who could benefit from additional instruction on multiplication facts. For the duration of the study, all three students continued to receive core math instruction in general education, which was Everyday Math (McGraw-Hill Education 2007), but they did not participate in any additional math intervention. For this report, student names have been replaced with pseudonyms.

David

David became eligible for special education services when he was in preschool. His first IEP included only a goal for speech, but he demonstrated difficulties with math early on. By the end of kindergarten, David was expected to be able to count to 100, name all numbers 0 through 20, and write all numbers 0 through 20; in reality, David was able to count to only 39 (although he regularly skipped 14), name the numbers 1 through 11, 14, and 18, and write the numbers 1 through 7. In the spring of 1st grade, David answered 40% of problems correctly on the single-digit subtraction fact benchmark assessment. David’s 2nd-grade report card indicated he needed improvement in the areas of solving subtraction facts up to 18, adding and subtracting with regrouping, and solving multi-step problems. In 3rd grade, David struggled with multiplication facts despite receiving small group and one-to-one support for math. At this time, a math goal was added to David’s IEP. In the spring of 4th grade, David earned a score of 187 on his Measures of Academic Progress (MAP; Northwest Evaluation Association 2014) math assessment, which was 36 points below the expected proficient score (40th percentile), and a score of 181 (18th percentile) on his Iowa Assessments (Iowa Testing Programs 2012) math, which was 8 points lower than the expected proficient score (40th percentile).

At the time of the study, David’s math goal was “when given a 5th-grade math concepts and application probe and no time limit (his only accommodation), David will score 14 digits correct per minute (dc/m).” This goal represents a modification for David given that a score of 30 dc/m is considered to be grade level for David. At the same time, according to teacher-collected data used to monitor progress toward his IEP goal, David had four consecutive data points above the aim line, which showed progress toward his goal. Despite this progress, the teacher felt David needed additional math instruction on multiplication facts based on (a) data from assignments and math unit tests, and (b) the need to support David’s progress toward the grade-level expectation.

Courtney

Courtney first became eligible for special education services in 1st grade in the areas of reading and math, but she demonstrated weaknesses in math from the beginning of formal schooling. By the end of kindergarten, Courtney could not write or recognize any numbers, but she could count to 100. Through 1st and 2nd grade, Courtney had IEP goals related to reading and writing numbers, solving addition and subtraction up to 18, understanding digits and place value, and adding and subtracting with regrouping. In 3rd grade, Courtney earned a grade equivalency on the Star Math (Renaissance Learning 2014) assessment of 1.6. In the spring of 4th grade, Courtney earned a score of 190 on the MAP math assessment (23 points lower than expected proficiency for her grade), and a score of 177 on the Iowa Assessments math (14th percentile; 22 points below the expected proficiency score).

At the time of the study, Courtney was earning a B in 5th-grade math with a modified curriculum including accommodations specified in her IEP: cues (i.e., rulers, number line), a calculator to help with calculations, and visual models of problems when working on a worksheet. Courtney’s goal at that time was “when given a 5th-grade math concepts and application probe with no time limit, having the probe read to her, and access to a multiplication chart, Courtney will score 12 dc/m.” This goal shows a modification for Courtney given that a score of 30 dc/m is considered to be grade level for her. According to teacher-collected progress monitoring data, Courtney was making some progress toward her IEP goal, but she had not yet met it; she had demonstrated two consecutive points on or above the aim line.

Gabby

Gabby qualified for special education services in 1st grade in the areas of reading, writing, and math, and she had received English Learning (EL) services since kindergarten, with Spanish as her primary language. Gabby was behind her peers in reaching mathematical milestones. By the end of kindergarten, she needed improvement with writing and naming numbers 0 through 20. In 1st through 3rd grade, her math IEP goal focused on number concepts and operations. In 4th grade, her IEP goal focused on math concepts and applications, with specific attention to place value, time, and money. In the spring of 4th grade, Gabby’s MAP math score was 197 (26 points below expected proficiency) and her Iowa Assessments math score was 162 (2nd percentile), which was 27 points lower than expected proficiency.

At the time of the study, Gabby received several accommodations in math, including use of a calculator, math assignments half the length of those given to students without an IEP, and one extra day to complete math assignments. Gabby’s goal was “when given a 5th-grade math concepts and application probe, with no time limit and other accommodations, Gabby will score 13 dc/m.” This goal reflects a modification for Gabby given that a score of 30 dc/m is considered to be grade level for her. At the time of the study, the teacher reported all of Gabby’s data points had been below the aim line, indicating she was not making adequate progress toward her IEP goal.

Measures

CBM-Math Multiplication

Twenty-one different CBM-Math (CBM-M) probes of single-digit multiplication problems (including all combinations 0 through 9) were used in this study. Each probe included 35 problems organized in 7 rows of 5 problems. The probes were obtained from the Intervention Central website (http://www.interventioncentral.org). The school psychologist at the participating students’ school administered probes on three consecutive days prior to the implementation of the intervention to establish pre-intervention performance levels. During intervention, probes were administered by the first author or the school psychologist immediately following every intervention session. A pencil and a stopwatch were used to administer the probes. Each student was given 2 min to complete each probe. When scoring the probe, the number of digits correct was counted and then converted to digits correct per minute (dc/m) by dividing the score by 2. According to district guidelines for 5th graders, participating students were expected to earn a score of 30 dc/m on the multiplication fact fluency probes in order to be considered at grade level instructionally. More specifically, a 5th-grade student earning a score of fewer than 24 dc/m on the fluency probe was at the frustration level; a student earning a score of 24 to 49 dc/m was at the instructional level; and a student with a score higher than 49 dc/m was at the mastery level. Two scorers independently scored each probe. Interscorer agreement was calculated using the point-by-point agreement ratio (Kazdin 2011). Then, an average across all probes was calculated, which was 100%.
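The scoring rules in this paragraph can be illustrated with a minimal Python sketch. The function names are hypothetical and do not come from the study; the cut points are the 5th-grade values stated above, and treating each digit as the unit of comparison for point-by-point agreement is an assumption.

```python
def digits_correct_per_minute(digits_correct: int, minutes: float = 2.0) -> float:
    """Convert a raw digits-correct count from a timed probe to dc/m."""
    return digits_correct / minutes


def fluency_level(dcpm: float) -> str:
    """Classify a dc/m score using the 5th-grade cut points described in the text."""
    if dcpm < 24:
        return "frustration"
    if dcpm <= 49:
        return "instructional"
    return "mastery"


def point_by_point_agreement(scorer_a: list, scorer_b: list) -> float:
    """Percentage of scored units on which two scorers made the same call.

    Assumption: each list holds one True/False (correct/incorrect) judgment
    per digit, in the same order for both scorers.
    """
    if len(scorer_a) != len(scorer_b) or not scorer_a:
        raise ValueError("both scorers must rate the same non-empty set of units")
    agreements = sum(a == b for a, b in zip(scorer_a, scorer_b))
    return 100.0 * agreements / len(scorer_a)
```

For example, 49 digits correct on a 2-min probe converts to 24.5 dc/m, which falls in the instructional range under these cut points.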

Treatment Integrity

A treatment integrity checklist was developed for this study (see Fig. 2). The checklist consisted of the seven steps required to complete IR. Next to each written step was a “Y” to indicate “yes, this step was completed” and an “N” for “no, this step was not completed.” During training, the first author used the checklist to ensure the school psychologist and a trained research assistant (RA) implemented each step of the intervention (see “Training Details and Data” section). The checklist also was used every 3 weeks during the intervention to evaluate implementation integrity. Responses were given values of Yes (Y) = 1 and No (N) = 0. Treatment integrity was calculated by dividing the total “Y” score by the total possible score and multiplying by 100, yielding the percentage of steps successfully completed.
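As a small illustration of the integrity calculation, the sketch below scores one observed session. The function name is hypothetical, and representing the checklist as a list of Y/N strings is an assumption made for the example.

```python
def treatment_integrity(checklist: list) -> float:
    """Percentage of checklist steps marked 'Y' (Y = 1, N = 0)."""
    if not checklist:
        raise ValueError("checklist must contain at least one step")
    completed = sum(1 for mark in checklist if mark == "Y")
    return 100.0 * completed / len(checklist)


# A session in which all seven IR steps were completed.
all_steps = ["Y"] * 7
print(treatment_integrity(all_steps))
```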

Social Validity

To measure social validity of intervention goals, procedures, and outcomes, the Intervention Acceptability Rating Form (IARF) was administered to the school psychologist (see Fig. 6). The IARF is a 10-item rating scale with a 6-point Likert-type scale (1 = strongly disagree to 6 = strongly agree) adapted from the Assessment Rating Profile-Revised (ARP-R; Eckert et al. 1999). The ARP-R is a 12-item rating scale used to “measure the acceptability of assessment measures” (Eckert et al. 1999, p. 32). Internal consistency ratings for the ARP-R have been reported between 0.94 and 0.99 (Eckert et al. 1999). Test–retest reliability coefficients obtained at 1-, 3-, 6-, and 12-month intervals ranged from 0.82 to 0.85 (Eckert et al. 1999).

To create the IARF, the ARP-R was modified in two ways. First, the wording of each item was changed to reflect the acceptability of the intervention, instead of an assessment. This was accomplished by replacing the word “assessment” with “intervention,” replacing the word “child” with “student,” and replacing the word “problems” with “difficulties with multiplication.” Second, items 5 and 12 were removed because they were not relevant to this study. The IARF was scored by finding the sum of all the responses, with higher scores indicating higher favorability.

Materials

One single-digit multiplication fact was hand-written horizontally on each of 100 3″ × 5″ index cards. The answer to the problem was not written anywhere on the card. All facts from 0 × 0 to 9 × 9 were included once (Burns 2005).

Procedures

Training Details and Data

All student data were collected by the first author, the RA, and the school psychologist. Before any data were collected, the first author trained the RA and the school psychologist at the participating students’ school. Although the school psychologist did not implement the intervention, she received the training so she could collect data on implementation integrity. The training lasted approximately 30 min. The first author taught the school psychologist and RA the IR intervention strategy by explaining and then demonstrating each step on the treatment integrity checklist. The school psychologist and the RA were then given time to practice implementing the intervention and ask questions. Finally, the school psychologist and the RA each implemented the intervention while being assessed using the treatment integrity checklist. Both the school psychologist and the RA implemented 100% of the steps during training.

Intervention Implementation

The special education team determined that 8 weeks would be allocated for intervention implementation prior to examining data critically. Thus, data were collected for a total of 8 weeks, including 1 week to collect pre-intervention data and 7 weeks of intervention. The intervention was implemented one-to-one at a table in an unoccupied classroom next to the student’s assigned classroom. Intervention sessions were scheduled on Tuesday and Thursday during the students’ math class, and each session lasted about 15 min. The first author and RA administered the IR intervention, with each adult working 1 day per week. Due to a scheduling conflict, the students received the intervention only once per week for the final 2 weeks of the intervention.

Implementation Integrity

Implementation was evaluated using a treatment integrity checklist (see Fig. 2) developed for this study. The school psychologist evaluated the first author’s and the RA’s implementation; this was done because all three people were rarely at the school site on the same day. Implementation integrity was evaluated with direct observation during weeks 1, 4, and 7 of intervention. Both the RA and the first author implemented 100% of the steps during sessions in which they were observed.

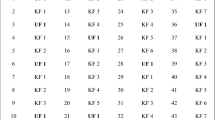

Intervention

In order to compare growth rates, three data points were collected prior to intervention by administering a 2-min CBM-M multiplication probe once a day until three scores were obtained for each student. Each student’s digits correct per minute was calculated, recorded, and graphed. All three students began the intervention during the same week. IR was implemented twice per week for 5 weeks and once per week for 2 weeks. Each session lasted about 15 min. Students continued to receive all regular math instruction with the exception of the 15 min they were pulled out for intervention. Before beginning each intervention session, the first author determined which facts were known and unknown by presenting every flash card to each student. Facts the student answered correctly within 2 s were considered known. Facts the student answered incorrectly or not at all after 2 s were considered unknown. These procedures followed those of Burns (2005) and Codding et al. (2010).
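The pre-session sort into known and unknown facts can be sketched as follows. This is only an illustration under stated assumptions: the function name and the `(correct, latency_seconds)` record format are hypothetical, and "within 2 s" is read as a latency of at most 2.0 s.

```python
def sort_facts(responses: dict, limit_s: float = 2.0):
    """Split facts into known and unknown piles.

    responses maps a fact label (e.g. "7 x 8") to a tuple
    (correct, latency_seconds); latency is None when no answer was given.
    """
    known, unknown = [], []
    for fact, (correct, latency) in responses.items():
        answered_in_time = latency is not None and latency <= limit_s
        if correct and answered_in_time:
            known.append(fact)
        else:
            unknown.append(fact)
    return known, unknown


# Illustrative responses, not data from the study.
sample = {
    "2 x 3": (True, 1.1),   # correct and fast: known
    "7 x 8": (True, 3.4),   # correct but slow: unknown
    "6 x 9": (False, 1.5),  # incorrect: unknown
    "8 x 8": (False, None), # no response: unknown
}
known, unknown = sort_facts(sample)
```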

For intervention, nine known fact cards were selected randomly and placed in a pile. The first unknown multiplication fact was presented to the student and the adult provided the answer orally (see Fig. 1). The student was asked to restate the unknown fact aloud and provide the correct answer. Then, the first known fact was presented to the student and s/he was asked to provide the answer orally. Next, the first unknown fact was presented to the student again who answered orally. This was followed by the first and second known facts being presented to the student. After the student answered these correctly, the first unknown fact was presented to the student again who answered orally. Then, first, second, and third known facts were presented to the student who answered orally. This sequence continued until all nine known facts were presented after the first unknown math fact (see also Burns 2005; Codding et al. 2010).

Once the fact had been rehearsed, or demonstrated as known, the unknown fact was treated as the first known fact and the ninth known fact (the last one presented) was removed. The process was repeated with the next unknown multiplication fact. The session ended when the student made three errors while rehearsing one unknown fact (Burns 2005).
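The presentation order described above can be written out as a short Python sketch. The function names are hypothetical, the fact labels are placeholders, and the stopping rule (three errors on one unknown fact) is not modeled.

```python
def rehearsal_sequence(unknown: str, knowns: list) -> list:
    """Order of card presentations for one unknown fact.

    The unknown fact is shown, then one known fact; the unknown again,
    then the first two known facts; and so on until all known facts
    have followed the unknown fact.
    """
    sequence = []
    for n in range(1, len(knowns) + 1):
        sequence.append(unknown)
        sequence.extend(knowns[:n])
    return sequence


def fold_in(unknown: str, knowns: list) -> list:
    """After rehearsal, the unknown fact becomes the first known fact
    and the last known fact is removed from the pile."""
    return [unknown] + knowns[:-1]


knowns = [f"K{i}" for i in range(1, 10)]   # nine known facts, K1..K9
sequence = rehearsal_sequence("U1", knowns)
next_knowns = fold_in("U1", knowns)
```

With nine known facts, the sequence begins U1, K1, U1, K1, K2, U1, K1, K2, K3 and presents the unknown fact nine times in total, which is the source of the additional opportunities to respond.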

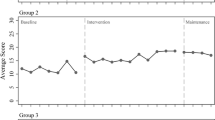

Study Design and Data Analysis

Because the dependent variable was an academic skill rather than a reversible behavior, withdrawing intervention was not appropriate. For this reason, and because the purpose of the study was to compare growth rates from baseline to intervention, a simple AB (A = baseline, B = intervention) design was used to determine the effects of intervention on students’ math performance (Kennedy 2005). Although a multiple baseline design would have allowed the opportunity to demonstrate experimental control, we decided to start IR at the same time for all students because teachers indicated there was an immediate need for intervention. Thus, withholding intervention for students for an extended period of time (which may occur in multiple baseline designs) was not a tenable option (Kennedy 2005). In this case, baseline represented students’ performance level during core instruction (i.e., Everyday Math) only and intervention consisted of IR during core instruction. Consistent with typical analysis of academic progress, growth rates were used to determine the effects of intervention on students’ math performance. According to Shapiro (2011), 5th-grade students are expected to gain 25 dc/m in a 40-week school year. By dividing 25 dc/m by 40 weeks, we can expect students to gain approximately 0.625 dc/m per week. Similarly, by dividing 0.625 dc/m per week by 5, we can expect students to gain 0.125 dc/m per day. Thus, without intervention the expected slope was 0.125 dc/m per day. Secondary to examining growth rates, we also calculated the Percentage of Data Points Exceeding the Median (PEM; Ma 2006). We used PEM because it accounts for cases in which there is an unstable baseline or outliers in baseline (Ma 2006), as may have been the case for David. PEM scores of 0.9 to 1 indicate a highly effective treatment, scores of 0.7 to 0.9 indicate moderate effects, and scores less than 0.7 indicate an ineffective treatment.
Consistent with previous research (e.g., Burns 2005), effect size was also calculated for each student. Using Cohen’s d, a small effect size was ≤ 0.2, a medium effect size was 0.5, and a large effect size was ≥ 0.8 (Burns 2005; Cohen 1988).
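The arithmetic behind the expected growth rate, PEM, and effect size can be sketched in Python. This is an illustration, not the authors' analysis code: the function names are hypothetical, the intervention scores passed to `pem` are made up for the example, and the source does not state which standard deviation served as the denominator for Cohen’s d. The sketch assumes the root mean square of the two phase SDs, which is one common choice.

```python
from statistics import median

WEEKLY_GAIN = 25 / 40          # expected gain: 0.625 dc/m per week
DAILY_GAIN = WEEKLY_GAIN / 5   # expected slope: 0.125 dc/m per school day


def expected_score(baseline_median: float, weeks: float) -> float:
    """Score expected after `weeks` of typical growth without intervention."""
    return baseline_median + WEEKLY_GAIN * weeks


def pem(baseline: list, intervention: list) -> float:
    """Proportion of intervention points above the baseline median."""
    base_median = median(baseline)
    above = sum(score > base_median for score in intervention)
    return above / len(intervention)


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Standardized mean difference between phases A and B.

    Assumption: the denominator is sqrt((sd_a**2 + sd_b**2) / 2).
    """
    pooled = ((sd_a ** 2 + sd_b ** 2) / 2) ** 0.5
    return (mean_b - mean_a) / pooled


# Baseline median of 11.5 dc/m and 7 weeks of expected growth.
target = expected_score(11.5, 7)
# Baseline scores of 10, 11.5, and 17.5 with illustrative intervention scores.
example_pem = pem([10.0, 11.5, 17.5], [14.0, 18.0, 20.5, 25.0])
# Phase means and SDs of 13.0 (4.0) and 18.5 (4.1).
example_d = cohens_d(13.0, 4.0, 18.5, 4.1)
```

Here `target` is 15.875 dc/m, which rounds to the 15.9 dc/m cited for a baseline median of 11.5, and `example_d` rounds to 1.4 under the stated denominator assumption.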

Results

David

Prior to intervention, across three CBM-M multiplication probes, David’s median score was 11.5 dc/m, or 18.5 less than the expected score and at frustration level in terms of 5th-grade instruction (Fig. 3; Shapiro 2011). David’s last pre-intervention score of 17.5 dc/m was much higher than his first two pre-intervention scores of 10 dc/m and 11.5 dc/m. Although David’s pre-intervention scores exhibited high variability and an upward trend, his performance was still far below the grade level target. Intervention began so that necessary targeted instruction was not delayed. Based on the expected level of growth from David’s pre-intervention median of 11.5 dc/m on CBM-M, David was expected to gain 4.4 dc/m in 7 weeks or a score of at least 15.9 dc/m. During intervention, David’s CBM-M scores ranged from 14.0 to 25.0 dc/m (see Fig. 3). The median point of his last three CBM-M scores was 24.5 dc/m, which represents an increase of 13.0 dc/m compared to pre-intervention scores and growth 8.6 dc/m greater than expected. Additionally, his score of 24.5 dc/m was indicative of 5th-grade instructional level.

David was expected to gain 0.1 dc/m per day during intervention, and his actual slope was 0.2 dc/m per day. David demonstrated a growth rate considerably faster than expected. The mean of the scores prior to intervention was 13.0 dc/m (SD = 4.0 dc/m). The mean of the scores during the intervention phase was 18.5 dc/m (SD = 4.1 dc/m). The effect size was d = 1.4. PEM was 1.0.

Courtney

Prior to intervention, Courtney’s median score across three CBM-M multiplication probes was 0 dc/m, or 30 less than the expected score and at frustration level for 5th grade (Fig. 4; Shapiro 2011). Like David, Courtney was expected to gain 4.4 dc/m in 7 weeks, or a final score of at least 4.4 dc/m on her CBM-M probes. During intervention, Courtney’s scores ranged from 5.0 to 15.0 dc/m (see Fig. 4). The median point of her last three CBM-M scores was 13.0 dc/m, which was an increase of 13.0 dc/m and was 8.6 dc/m greater than her expected growth. Despite this growth, a score of 13.0 dc/m indicated Courtney was still at frustration level for 5th-grade instruction.

Courtney’s intervention trend line was expected to have a slope of 0.1. The slope of Courtney’s actual trend line was 0.2, which was higher than expected, representing an average daily gain of 0.2 dc/m. Based on pre-intervention (M = 0.3 dc/m; SD = 0.6 dc/m) and intervention (M = 10.2 dc/m; SD = 2.9 dc/m) data, the intervention effect size was d = 4.7. PEM was 1.0.

Gabby

Gabby’s median score across three CBM-M multiplication probes was 4.0 dc/m, which was 26.0 dc/m below the expected score and indicative of frustration level (Fig. 5; Shapiro 2011). Gabby was expected to gain 4.4 dc/m in 7 weeks, with an end-of-intervention score of at least 8.4 dc/m on her CBM-M probes. During intervention, Gabby’s scores ranged from 4.5 to 12.0 dc/m (see Fig. 5). The median point of her last three CBM-M scores was 10 dc/m, which was an increase of 6.0 dc/m and 1.6 dc/m greater than expected. A score of 10 dc/m was 20.0 dc/m below the expected score for 5th grade and still indicative of frustration level. Gabby was expected to produce CBM-M scores during intervention with a slope of 0.1. Gabby’s actual growth was 0.1 dc/m per day, which was consistent with her expected rate of growth.

Gabby’s mean score prior to intervention was 3.3 dc/m (SD = 1.15 dc/m). The mean during the intervention phase was 9.2 dc/m (SD = 2.4 dc/m). Thus, the effect size was d = 3.0. PEM was 1.0.
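For readers who want to see how effect sizes of this magnitude arise from the reported means and standard deviations, the following sketch computes a standardized mean difference. It assumes an equal-weight pooled standard deviation as the standardizer; this section does not state which formula the authors used, so the function is one common convention and not a reconstruction of their computation.

```python
import math


def cohens_d(mean_pre, sd_pre, mean_post, sd_post):
    """Standardized mean difference using an equal-weight pooled SD.

    Assumption: the standardizer is not specified in the text; this is
    one common convention, not necessarily the one the authors applied.
    """
    pooled_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd


# (pre mean, pre SD, intervention mean, intervention SD) in dc/m,
# as reported in the text for each student.
reported = {
    "David": (13.0, 4.0, 18.5, 4.1),
    "Courtney": (0.3, 0.6, 10.2, 2.9),
    "Gabby": (3.3, 1.15, 9.2, 2.4),
}

effect_sizes = {name: cohens_d(*stats) for name, stats in reported.items()}
for name, d in effect_sizes.items():
    print(f"{name}: d = {d:.2f}")
```

Under this convention the values come out at roughly 1.36, 4.73, and 3.14, which is close to the reported 1.4, 4.7, and 3.0. The small difference for Gabby may reflect unrounded scores or a different standardizer.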

Social Validity

The school psychologist completed the IARF as a measure of the acceptability of the intervention. The authors were interested in the school psychologist’s perception of the intervention because she regularly worked with teachers to develop interventions for students who were struggling. Her total raw score across 10 items was 57 out of 60 possible points (range = 5–6 across items), indicating strong agreement with statements about intervention acceptability.

Discussion

Research has shown that one simple way to improve basic math fact fluency and accuracy is to increase a student’s number of opportunities to respond (OTR; Skinner et al. 2005). In the area of multiplication fact fluency and generalization, increasing OTRs may be done through IR. Researchers have demonstrated the effectiveness of IR with 3rd-grade students with math learning disabilities (Burns 2005) and with one 7th-grade student experiencing difficulty with generalization of multiplication facts to applied contexts (Codding et al. 2010). Moreover, manipulating OTRs in order to learn complex multiplication problems has been shown to influence information retention in 5th graders, with increased OTRs resulting in increased learning (Zaslofsky et al. 2016). The purpose of the current study was to extend the research on IR for teaching multiplication facts to 5th-grade students with IEP math goals and to evaluate participants’ growth rates.
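How IR raises OTRs can be seen by writing out the order in which facts are presented. The sketch below assumes the commonly described IR sequence, in which one unknown fact is re-presented after progressively longer runs of known facts. The function name, the example facts, and the use of only three known facts are illustrative assumptions, and the code is not a restatement of the exact protocol used in this study.

```python
def ir_sequence(unknown, knowns):
    """Presentation order for one unknown fact folded into known facts.

    Assumed pattern: U, K1, U, K1, K2, U, K1, K2, K3, ...
    """
    order = []
    for run_length in range(1, len(knowns) + 1):
        order.append(unknown)              # present the unknown fact again
        order.extend(knowns[:run_length])  # then a longer run of known facts
    return order


knowns = ["2x3", "5x5", "10x4"]  # hypothetical known facts
sequence = ir_sequence("7x8", knowns)

# Opportunities to respond to the unknown fact within one sequence.
otr_unknown = sequence.count("7x8")
print(sequence)
print(otr_unknown)
```

With three known facts the unknown fact is presented three times in a nine-item sequence. Passing nine known facts to the same function yields nine presentations of the unknown fact within 54 items, which illustrates why the approach is both OTR-rich and time-intensive.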

Intervention Effects and Implications

In the current study, each of the three 5th-grade participants, all of whom were receiving special education services for math, had a history of struggling with multiplication facts. Pre-intervention data confirmed that all three students were discrepant from their peers in the area of multiplication fact fluency. Moreover, prior to intervention, students were functioning at a level indicative of frustration with math learning in their classrooms (Shapiro 2011), bringing into question the effectiveness of general math instruction and learning overall. In short, participating students were in need of targeted support for multiplication fact learning.

The IR multiplication intervention was shown to be effective for all three students. In comparison to pre-intervention scores, intervention effects were immediate, and students gained skills beyond the growth expected based on guidelines from Shapiro (2011). Effect sizes calculated from pre-intervention and intervention data ranged from d = 1.4 to 4.7, which are considered to be quite large (Cohen 1988). Findings for two out of three participants were comparable to Burns (2005) in terms of effect size. Additionally, PEM was 1.0 for all participants, thus indicating an effective treatment (Ma 2006). However, PEM should be interpreted with caution and treated as supplementary to growth rates, because it does not account for slope in baseline or for variability and trend during treatment. Statistically, the results are impressive based on score and rate changes from pre-intervention to intervention; however, the end-of-intervention scores for two of the participating students (Courtney and Gabby) were still at frustration level. It is plausible that Courtney and Gabby, who both started with a substantially lower dc/m rate at baseline than David, needed more exposure to the intervention because they had a greater initial gap between the frustration and instructional levels. This realistic understanding tempers enthusiastic interpretations of large effect sizes. David’s end-of-intervention performance of 24.5 dc/m reflected accelerated growth and moved his performance just into the instructional level. David’s pre-intervention scores also showed an accelerating trend mixed with variable performance, which was reported as a pattern by David’s teacher and contributed to her concern about his multiplication skill and performance. Given that district guidelines define grade-level performance as 30 dc/m and instructional level as 24 to 49 dc/m, David’s strong progress warrants further monitoring given his long history of math difficulty and need for support. In short, more work needs to be done to bring the performance of these students to grade level, so that they might independently and confidently access general multiplication curriculum. It would be inappropriate to simply herald the demonstrated effect sizes without considering the actual learning contexts and experiences of the students.
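The caution about PEM noted above can be made concrete with a small example. The sketch below computes PEM as the proportion of intervention-phase points that exceed the pre-intervention median, counting ties as not exceeding. All scores are hypothetical and are not data from this study; the function name is likewise illustrative.

```python
from statistics import median


def pem(baseline, treatment):
    """Proportion of treatment-phase points above the baseline median."""
    base_median = median(baseline)
    above = sum(1 for score in treatment if score > base_median)
    return above / len(treatment)


# Hypothetical scores in dc/m, not data from this study.
# Case A: flat baseline followed by a clear jump in level.
flat_baseline = [4.0, 3.0, 4.0]
jump_treatment = [9.0, 10.0, 12.0, 11.0]

# Case B: baseline already rising by 2 dc/m per probe; the treatment
# phase simply continues that trend with no change in slope.
rising_baseline = [8.0, 10.0, 12.0]
same_trend_treatment = [14.0, 16.0, 18.0, 20.0]

pem_a = pem(flat_baseline, jump_treatment)
pem_b = pem(rising_baseline, same_trend_treatment)
print(pem_a, pem_b)
```

Both cases return a PEM of 1.0, even though only the first reflects a change that coincides with the start of intervention. This is why PEM is best read alongside slope, particularly when baseline scores are already trending upward.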

Results showed that data collectors were trained to 100% fidelity and then implemented the intervention with 100% integrity. Moreover, a quick measure of social validity suggested a high level of acceptability of the intervention by the school psychologist. In short, IR appears to be a straightforward intervention to learn and deliver with integrity; further, educators report that it is a practical, feasible, and effective intervention. Perhaps one of the reasons IR was well received is that it is aligned closely with classroom instructional strategies. Educators may be likely to interpret the drill-based foundation of IR as appropriate given the close link to many instructional and curriculum components. Moreover, research supports the use of intervention approaches that align closely with instructional approaches (Hosp and Ardoin 2008; Shapiro 2011).

Limitations and Future Research

Although findings are promising, limitations of the current study and related recommendations for future research should be considered. First, IR is time-intensive, an observation also made by Burns (2005). The effectiveness of IR is gaining empirical support, but delivery is one-to-one, which requires time away from general instruction and can be expensive if delivered by salaried educators. For these logistical reasons, in this study the special education team requested intervention delivery during core math instruction. Although we understand best practices dictate supplementary instruction outside of core instruction time (McDougal et al. 2010), the constraints of authentic educational settings do not always allow for such delivery. Data also suggest that several sessions are required to meet levels indicative of mastery, which should be the expectation given the basic skill nature of the intervention content. Basic skills must be mastered in order to support accuracy and fluency with trained items, and with generalization (Poncy et al. 2010; Shapiro 2011). This presents two problems to be addressed in future research: students need access to IR frequently in order to master facts (increasing OTR) and to increase accessibility to general curriculum content, and intervention resources need to be minimized to reduce overall cost. One potential solution is a computer-adaptive program that embeds IR strategies and allows students to practice independently (e.g., FASTT Math™; Tom Snyder Productions 2011). Moreover, a computer-based program could be used at home to increase OTR. Alternatively, parents, caregivers, or siblings could be trained to deliver intervention at home; similarly, a peer may be able to deliver intervention at school (Burns 2005).

A second limitation to the study is related to lack of experimental control given the use of concurrent AB designs rather than a multiple baseline design. This study demonstrates the difficulty of balancing the need for methodological rigor with the constraints of working in applied settings in which the immediate needs of students and teachers may supersede the experimental design. That is, due to deficits in multiplication fact fluency exhibited by all three students, the school team felt it was important to start the intervention as soon as possible. Further, contrary to best practice recommendations that the length of time in intervention be dictated by data, intervention length in this case was set by the school calendar and other curricular obligations designated by the teachers, which forced an immediate start to intervention (versus waiting for stable baselines, as is typical in single-case design). To allow for an opportunity to demonstrate experimental control, and thus state with certainty an intervention’s effectiveness, future research should allow for adequate time to conduct multiple baseline designs.

A third limitation to the study is lack of maintenance data, which would have strengthened findings (Kratochwill 1992). Data from the current study represent a few months of time from the fall of the students’ 5th-grade year. Each participating student demonstrated a long history of difficulty with math multiplication, and results from the IR intervention suggested these students improved significantly. However, long-term performance data were not collected. If research on IR for multiplication is to progress, especially given the positive short-term effects, maintenance data will be necessary to inform expectations and to plan for any ongoing intervention supports. These data also can help solidify IR as an effective intervention approach.

Last, social validity data could be expanded to include perceptions of other stakeholders. In this study, social validity data represented only the school psychologist’s perspective. No data were collected on the perceptions of the participants’ general or special education teachers, parents/caregivers, or the participants themselves. Given that IR interventions could be implemented across classroom and home contexts, and that multi-context administration may have bearing on growth rates, it could be very important to know more about feasibility and implementation perspectives from a range of stakeholders.

Conclusion

Students are identified for academic intervention because they struggle with core instruction in general education; while students gain basic skills during intervention, general instruction moves forward. This is a timeless conundrum for meeting student needs. Yet, we must become more sensitive and adept at ensuring that all students are accessing grade-level curriculum even if they are also working on remediation. Given that IR is a time- and labor-intensive intervention and has been critiqued accordingly (Burns 2005), it is important to consider (a) the resources necessary to plan and deliver intervention sessions, and (b) the relation between student skill level and general classroom core mathematics instruction. Results from this study showed large intervention effects for all three participants who all exceeded expected growth rates, and yet, two students were still at a frustration level of instruction. Further work is needed to determine how to scale IR in such a way to bring students to an instructional and then mastery level.

References

Adelman, C. (2006). The toolbox revisited: Paths to degree completion from high school through college. Washington, DC: U.S. Department of Education.

Barlow, D. H., & Hersen, M. (1984). Single case experimental designs: Strategies for studying behavior change (2nd ed.). Boston: Allyn & Bacon.

Burns, M. K. (2004). Empirical analysis of drill ratio research: Refining the instructional level for drill tasks. Remedial and Special Education, 25(3), 167–175.

Burns, M. K. (2005). Using incremental rehearsal to increase fluency of single-digit multiplication facts with children identified as learning disabled in mathematics computation. Education and Treatment of Children, 28(3), 237–249.

Burns, M. K. (2007). Reading at the instructional level with children identified as learning disabled: Potential implications for response-to-intervention. School Psychology Quarterly, 22, 250–313.

Burns, M. K., & Boice, C. H. (2009). Comparison of the relationship between words retained and intelligence for three instructional strategies among students with low IQ. School Psychology Review, 38, 284–292.

Burns, M. K., Dean, V. J., & Foley, S. (2004). Preteaching unknown key words with incremental rehearsal to improve reading fluency and comprehension with children identified as reading disabled. Journal of School Psychology, 42(4), 303–314.

Burns, M. K., VanDerHeyden, A. M., & Jiban, C. (2006). Assessing the instructional level for mathematics: A comparison of methods. School Psychology Review, 35, 401–418.

Burns, M. K., Hodgson, J., Parker, D. C., & Fremont, K. (2011). Comparison of the effectiveness and efficiency of text previewing and pre-teaching keywords as small-group reading comprehension strategies with middle school students. Literacy Research and Instruction, 50, 241–252.

Burns, M. K., Zaslofsky, A. F., Kanive, R., & Parker, D. C. (2012). Meta-analysis of incremental rehearsal: Using phi coefficients to compare single-case and group designs. Journal of Behavioral Education, 21, 185–202.

Codding, R. S., Archer, J., & Connell, J. (2010). A systematic replication and extension of using incremental rehearsal to improve multiplication skills: An investigation of generalization. Journal of Behavioral Education, 19(1), 93–105.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. New York: Academic Press.

Deno, S. L., & Mirkin, P. K. (1977). Data-based program modification: A manual. Reston: Council for Exceptional Children.

DuBois, M. R., Volpe, R. J., & Hemphill, E. M. (2014). A randomized trial of a computer-assisted tutoring program targeting letter sound expression via incremental rehearsal. School Psychology Review, 43, 210–221.

Eckert, T. L., Hintze, J. M., & Shapiro, E. S. (1999). Development and refinement of a measure for assessing the acceptability of assessment methods: The assessment rating profile-revised. Canadian Journal of School Psychology, 15(1), 21–42.

Garcia, D., Joseph, L. M., Alber-Morgan, S., & Konrad, M. (2014). Efficiency of oral incremental rehearsal versus written incremental rehearsal on students’ rate, retention, and generalization of spelling words. School Psychology Forum: Research in Practice, 8(2), 113–129.

Hosp, J. L., & Ardoin, S. P. (2008). Assessment for instructional planning. Assessment for Effective Intervention, 33(2), 69–77.

Hosp, M. K., Hosp, J. L., & Howell, K. W. (2016). The ABCs of CBM: A practical guide to curriculum-based measurement (2nd ed.). New York: Guilford.

Iowa Testing Programs. (2012). Iowa assessments. Rolling Meadows: Riverside.

Joseph, L. M. (2006). Incremental rehearsal: A flashcard drill technique for increasing retention of reading words. The Reading Teacher, 59(8), 803–807.

Joseph, L., Eveleigh, E., Konrad, M., Neef, N., & Volpe, R. (2012). Comparison of the efficiency of two flashcard drill methods on children’s reading performance. Journal of Applied School Psychology, 28(4), 317–337.

Kazdin, A. E. (2011). Single-case research designs: Methods for clinical and applied settings (2nd ed.). New York: Oxford University Press.

Kennedy, C. H. (2005). Single-case designs for educational research. Boston: Allyn & Bacon.

Ketterlin-Geller, L. R., Chard, D. J., & Fien, H. (2008). Making connections in mathematics: Conceptual mathematics intervention for low-performing students. Remedial and Special Education, 29, 33–45.

Kratochwill, T. R. (1992). Single-case research design and analysis: An overview. In T. R. Kratochwill & J. R. Levin (Eds.), Single-case research design and analysis: New directions for psychology and education (pp. 1–14). Hillsdale, NJ: Erlbaum.

Kupzyk, S., Daly, E. J., & Andersen, M. N. (2011). A comparison of two flash-card methods for improving sight-word reading. Journal of Applied Behavior Analysis, 44(4), 781–792.

Ma, H. H. (2006). An alternative method for quantitative synthesis of single-subject research: Percentage of data points exceeding the median. Behavior Modification, 30, 598–617.

MacQuarrie, L. L., Tucker, J. A., Burns, M. K., & Hartman, B. (2002). Comparison of retention rates using traditional, drill sandwich, and incremental rehearsal flashcard methods. School Psychology Review, 31, 584–595.

McDougal, J. L., Graney, S. B., Wright, J. A., & Ardoin, S. P. (2010). RTI in practice: A practical guide to implementing effective evidence-based interventions in your school. Hoboken: John Wiley & Sons, Inc.

McGraw-Hill Education. (2007). Everyday math. New York: Author.

National Center for Education Statistics. (2011). The nation’s report card: Mathematics 2011. Washington, DC: National Center for Education Statistics Press.

National Mathematics Advisory Panel. (2008). Foundations for success: The final report of the National Mathematics Advisory Panel. Washington: U.S. Department of Education.

Nist, L., & Joseph, L. M. (2008). Effectiveness and efficiency of flashcard drill instructional methods on urban first-graders’ word recognition, acquisition, maintenance, and generalization. School Psychology Review, 37, 294–308.

Northwest Evaluation Association. (2014). Measures of academic progress. Portland: Author.

Petersen-Brown, S., & Burns, M. K. (2011). Adding a vocabulary component to incremental rehearsal to enhance retention and generalization. School Psychology Quarterly, 26(3), 245–255.

Poncy, B. C., Skinner, C. H., & Axtell, P. K. (2010). An investigation of detect, practice, and repair to remedy math fact deficits in a group of third grade students. Psychology in the Schools, 47(4), 342–353.

Rahn, N. L., Wilson, J., Egan, A., Brandes, D., Kunkel, A., Peterson, M., & McComas, J. (2015). Using incremental rehearsal to teach letter sounds to English language learners. Education and Treatment of Children, 38(1), 71–91.

Renaissance Learning. (2014). STAR math: Technical manual. Wisconsin Rapids: Author.

Shapiro, E. S. (2011). Academic skills problems: Direct assessment and intervention (4th ed.). New York: Guilford.

Skinner, C. H., Belfiore, P. J., Mace, H. W., Williams-Wilson, S., & Johns, G. A. (1997). Altering response topography to increase response efficiency and learning rates. School Psychology Quarterly, 12(1), 54–64.

Skinner, C. H., Pappas, D. N., & Davis, K. A. (2005). Enhancing academic engagement: Providing opportunities for responding and influencing students to choose to respond. Psychology in the Schools, 42(4), 389–403.

Szadokierski, I., & Burns, M. K. (2008). Analogue evaluation of the effects of opportunities to respond and ratios of known items within drill rehearsal of Esperanto words. Journal of School Psychology, 46, 593–609.

Tom Snyder Productions. (2011). FASTT math. New York: Scholastic.

United States Department of Education. (1997). Mathematics equal opportunity: White paper prepared for US Secretary of Education Richard Riley. Washington, DC: Author.

Varma, S., & Schleisman, K. B. (2014). The cognitive underpinnings of incremental rehearsal. School Psychology Review, 43, 222–228.

Volpe, R. J., Burns, M. K., DuBois, M., & Zaslofsky, A. F. (2011). Computer-assisted tutoring: Teaching letter sounds to kindergarten students using incremental rehearsal. Psychology in the Schools, 48, 332–342.

Zaslofsky, A. F., Scholin, S. E., Burns, M. K., & Varma, S. (2016). Comparison of opportunities to respond and generation effect as potential causal mechanisms for incremental rehearsal with multiplication combinations. Journal of School Psychology, 55, 71–78.

Ethics declarations

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

All data were collected according to APA’s ethical guidelines. Data were collected by the first author, who was working in the schools and delivering the intervention. Because of this and because the data represent standard educational practice which IRB does not define as research, informed consent was not collected formally outside the IEP process.

Conflict of Interest

The authors declare that they have no competing interests.

Cite this article

McVancel, S.M., Missall, K.N. & Bruhn, A.L. Examining Incremental Rehearsal: Multiplication Fluency with Fifth-Grade Students with Math IEP Goals. Contemp School Psychol 22, 220–232 (2018). https://doi.org/10.1007/s40688-018-0178-x

DOI: https://doi.org/10.1007/s40688-018-0178-x