Abstract

Factor analysis is widely used to identify latent factors underlying observed variables. This paper presents a comprehensive comparative study of two widely used methods for determining the optimal number of factors, the K1 rule and parallel analysis, along with a more recently developed approach, the bass-ackward method. We review these techniques in depth, discussing their historical development, advantages, and limitations. Using a series of Monte Carlo simulations, we assess how accurately each method recovers the appropriate number of factors. Specifically, we examine two termination criteria within the bass-ackward framework: BA-maxLoading and BA-cutoff. Our findings offer nuanced insights into the performance of these methods under various conditions, illuminating their respective advantages and potential pitfalls. To enhance accessibility, we developed an online visualization tool tailored to the factor structures generated by the bass-ackward method. This research enriches the understanding of factor analysis methodology, assists researchers in method selection, and facilitates comprehensive interpretation of latent factor structures.


1 Introduction

One primary goal of exploratory factor analysis (EFA) is to determine the number of latent factors (Costello and Osborne 2005; Zwick and Velicer 1986). By reducing a large number of observed variables to a smaller set of latent variables, social scientists can uncover the underlying, interpretable factors that explain the observed data (Suhr 2005; Yang 2005). For datasets with many variables, identifying latent factors makes the data more manageable and better suited for further analysis.

Properly determining the optimal number of factors is a crucial step in factor analysis, enabling more precise and insightful data interpretation (Fabrigar et al. 1999). It clarifies the fundamental patterns within the data and deepens understanding of the subject. It also supports model stability and reliability, which in turn leads to better predictions and better-informed decisions. Finally, it keeps model complexity appropriate, preventing errors and inconsistencies that could compromise the integrity of the research findings (Zwick and Velicer 1986).

While identifying factors is beneficial in data analysis, extracting the wrong number of factors can cause problems such as reduced interpretability of the model and computational errors. One might overfactor by extracting too many factors or underfactor by extracting too few, compared with the true number present in a study. Overfactoring yields meaningless factors and increases the chance of Heywood cases (De Winter and Dodou 2012), whereas underfactoring can lead to overly conservative results, omitting genuine factors and introducing substantial errors into the loadings of the retained factors (Wood et al. 1996).

Numerous methods have been developed to identify the correct number of factors in EFA. Most traditional methods are based on the eigendecomposition of the correlation matrix of the observed variables. For example, the eigenvalue-greater-than-one rule, also known as the K1 rule, retains only factors with eigenvalues larger than one (Kaiser 1960). Parallel analysis, another popular procedure, retains factors whose eigenvalues exceed a chosen summary (such as the mean or a high percentile) of the eigenvalues from simulated parallel datasets (Horn 1965). Another family of correlation-based methods assesses the interrelations among observed variables to determine how many factors adequately capture the underlying data structure. For example, the minimum average partial (MAP) method (Velicer 1976) selects the number of factors that minimizes the average squared partial correlation among the variables. In addition, machine learning approaches such as random forests (Breiman 2001) have been used to frame the determination of the number of factors as a classification problem (Goretzko and Bühner 2020).
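
The MAP procedure is only mentioned in passing here, but it is compact enough to sketch. The Python code below is a minimal illustration of Velicer's (1976) idea as we understand it, not an implementation used in this study: for each \(m = 0, 1, \ldots, p-1\) it partials the first \(m\) principal components out of the correlation matrix, averages the squared off-diagonal partial correlations, and returns the \(m\) with the smallest average. The function name velicer_map and the simulated data are hypothetical.

```python
import numpy as np

def velicer_map(R):
    """Hypothetical sketch of Velicer's MAP test on a p x p correlation matrix R.

    Returns the suggested number of components and the average squared
    off-diagonal partial correlation for m = 0, 1, ..., p - 1.
    """
    p = R.shape[0]
    vals, vecs = np.linalg.eigh(R)
    order = np.argsort(vals)[::-1]                       # largest eigenvalues first
    vals, vecs = vals[order], vecs[:, order]
    loadings = vecs * np.sqrt(np.clip(vals, 0.0, None))  # principal component loadings
    off_diag = ~np.eye(p, dtype=bool)
    avg_sq = []
    for m in range(p):
        A = loadings[:, :m]
        C = R - A @ A.T                  # covariance left after partialling m components
        d = np.sqrt(np.diag(C))
        P = C / np.outer(d, d)           # partial correlation matrix
        avg_sq.append(float(np.mean(P[off_diag] ** 2)))
    return int(np.argmin(avg_sq)), avg_sq

# Hypothetical data: two uncorrelated factors, four indicators each, loading 0.8
rng = np.random.default_rng(1)
n = 2000
F = rng.standard_normal((n, 2))
Lam = np.kron(np.eye(2), np.full((4, 1), 0.8))      # 8 x 2 simple-structure loadings
X = F @ Lam.T + 0.6 * rng.standard_normal((n, 8))   # unique sd = sqrt(1 - 0.8**2)
n_factors, avg_sq = velicer_map(np.corrcoef(X, rowvar=False))
print(n_factors)
```

Because the simulated variables form two clean blocks of four, the average squared partial correlation should be smallest after partialling out two components, so the sketch suggests two factors.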

Some EFA methods are dedicated to explaining relationships among factors. A representative example is the bass-ackward method (Goldberg 2006), which builds a hierarchical tree structure from the top down based on the correlations among factors. The factors progress from abstract to specific as the tree expands from top to bottom, and the correlations between factors at adjacent levels serve as the edge weights. The expansion stops when no new factor emerges. Compared with traditional bottom-up factor analysis, this method offers a more transparent perspective that allows researchers to choose a suitable number of factors while also exploring the relationships among them.

The bass-ackward approach has been applied in a variety of psychological research areas (Bagby et al. 2014; Gerritsen et al. 2018; Kirby and Finch 2010). However, although the approach is well suited to constructing a hierarchical structure from the top down, whether it terminates with the correct number of factors has not been thoroughly examined. Because the final extraction corresponds to the last level of the tree, terminating either too early (underfactoring) or too late (overfactoring) can yield misleading results. It is therefore important to investigate how well the bass-ackward approach chooses the number of factors, especially under its two termination criteria (i.e., BA-maxLoading and BA-cutoff).

This study aims to comprehensively compare three representative methods for determining the optimal number of factors in factor analysis: the K1 rule, parallel analysis, and the bass-ackward method. The rest of the paper is organized as follows. First, we provide an in-depth overview of factor analysis and the three methods for choosing the number of factors. Then, we conduct a simulation study to (1) assess the efficacy of the bass-ackward approach in identifying the correct number of factors and (2) investigate the impact of various conditions on the three methods’ performance (see Footnote 1). Next, we introduce an online application that we developed to implement the three methods and visualize the factor structures produced by the bass-ackward method. Finally, we conclude the paper with recommendations on the use of these three methods.

2 Method

2.1 Exploratory Factor Analysis (EFA)

Within the framework of EFA, the common factor model represents observed variables as functions of model parameters and latent factors (Preacher et al. 2013). In matrix notation, an EFA model can be expressed as

\[{\textbf{Y}} = \varvec{\Lambda }{\textbf{F}} + {\textbf{E}},\]

where \({\textbf{Y}}\) is a \(p \times n\) matrix of data from \(n\) participants on \(p\) observed variables, items, or indicators, \(\varvec{\Lambda }\) is a \(p \times q\) factor loading matrix, \({\textbf{F}}\) is a \(q \times n\) matrix of factor scores, and \({\textbf{E}}\) is a \(p \times n\) matrix of unique factor scores. In EFA, the common factors are assumed to explain the shared variance among the observed variables, while the unique factors account for the variance specific to each variable and for measurement error. The unique factors are further assumed to be uncorrelated with the common factors and with one another.

Therefore, under the factor model, the covariance matrix of the \(p\) variables is represented as

\[\varvec{\Sigma } = \varvec{\Lambda }\varvec{\Phi }\varvec{\Lambda }^{\top } + \varvec{\Psi },\]

where \(\varvec{\Sigma }\) is a \(p \times p\) covariance matrix of the observed variables, \(\varvec{\Phi }\) is a \(q \times q\) matrix of factor correlations, and \(\varvec{\Psi }\) is the \(p \times p\) diagonal matrix of variances of the unique factors.
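
As a worked instance of this covariance structure (an illustration we add, not a result from the study), consider a hypothetical two-factor model in which variable 1 loads \(l\) on factor 1 and \(c\) on factor 2, variable 4 loads \(c\) on factor 1 and \(l\) on factor 2, and the two factors correlate \(\phi \). Writing \(\varvec{\lambda }_i^{\top }\) for the \(i\)th row of \(\varvec{\Lambda }\), the model implies

\[\sigma _{11} = \varvec{\lambda }_1^{\top }\varvec{\Phi }\varvec{\lambda }_1 + \psi _{11} = l^2 + 2\phi lc + c^2 + \psi _{11}, \qquad \sigma _{14} = \varvec{\lambda }_1^{\top }\varvec{\Phi }\varvec{\lambda }_4 = 2lc + \phi (l^2 + c^2).\]

For standardized variables (\(\sigma _{11} = 1\)), the unique variance is therefore \(\psi _{11} = 1 - (l^2 + 2\phi lc + c^2)\); when \(c\) and \(\phi \) are nonnegative, both cross-loadings and factor correlations raise the communality and shrink the unique variance.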

One assumption in EFA concerns the distribution of observed variables, which are typically presumed to follow a multivariate normal distribution. This assumption underpins the use of maximum likelihood estimation (MLE) methods for factor extraction and influences the interpretation and validity of the analysis (Jöreskog 1967). However, it is widely acknowledged that in practical applications, this assumption may not hold (MacCallum et al. 2007), potentially influencing factor loading estimates and the conclusions drawn from the model. To address these challenges, robust techniques have been developed, offering more flexibility by accommodating deviations from normality, thus extending EFA’s utility across various research contexts (Yuan et al. 2000).

A critical step in EFA is to determine the number of factors \(q\). Many methods are available for such a task. In the following sections, we review three methods: the K1 rule, parallel analysis, and the bass-ackward method.

2.2 The K1 Rule

The K1 rule, also known as the Kaiser rule, the Kaiser–Guttman rule, or the eigenvalue-greater-than-one rule, is one of the most popular methods for identifying the number of factors in many research fields (Warne et al. 2012). The method starts by calculating the eigenvalues of the correlation matrix of the observed variables. Under the K1 rule, factors with eigenvalues greater than one are retained; the rationale is that such a factor accounts for more variance than a single standardized observed variable, whose variance is one. The idea behind the K1 rule was first developed by Kaiser (1960), inspired by Guttman’s (1954) discussion of lower bounds for component retention in image analysis. Since then, numerous studies have further explored and extended this method (Braeken and Van Assen 2017; Kaiser 1970; Wood et al. 1996).

The K1 rule is computationally convenient and easy to implement, making it a popular choice in many research contexts (Velicer et al. 2000). However, it has been criticized for inaccurately estimating the number of factors to retain. Several studies (Browne 1968; Cattell and Jaspers 1967; Fava and Velicer 1992; Linn 1965) suggest that the K1 rule often retains too many factors, especially when the study involves many variables (e.g., more than 50; Zwick and Velicer 1986) and when it is applied to a sample rather than the entire population (Cliff 1988). In addition, because the threshold is a hard cutoff, the K1 rule can make arbitrary distinctions when eigenvalues are very close to one, e.g., retaining a factor with an eigenvalue of 1.01 but discarding one with an eigenvalue of 0.99 (Turner 1998).
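
A minimal Python sketch of the K1 rule follows; it is our illustration, and k1_rule is a hypothetical helper rather than a library function. It counts the eigenvalues of a correlation matrix that exceed one. The toy correlation matrix is made up: two uncorrelated blocks of three variables with a within-block correlation of 0.5, whose eigenvalues are 2, 2, and four values of 0.5.

```python
import numpy as np

def k1_rule(R):
    """Hypothetical helper: count eigenvalues of the correlation matrix R above one."""
    eigenvalues = np.sort(np.linalg.eigvalsh(R))[::-1]   # R is symmetric; largest first
    return int(np.sum(eigenvalues > 1.0)), eigenvalues

# Made-up correlation matrix: two uncorrelated blocks of three variables, r = 0.5
block = np.full((3, 3), 0.5) + 0.5 * np.eye(3)           # ones on the diagonal
zeros = np.zeros((3, 3))
R = np.block([[block, zeros], [zeros, block]])
n_factors, eigenvalues = k1_rule(R)
print(n_factors)  # 2
```

Because the comparison is a strict inequality against exactly one, an eigenvalue of 1.01 counts while 0.99 does not, which is the sensitivity noted above.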

2.3 Parallel Analysis

Another prevalent method for determining the number of factors is parallel analysis (PA; Horn 1965). This approach compares the eigenvalues of the observed data with those of generated parallel data, which have the same dimensions as the original data but uncorrelated variables. Specifically, one generates a large number of such random datasets, extracts the eigenvalues of each, and compares them with the eigenvalues from the original data. Because the variables in the parallel datasets are uncorrelated, their eigenvalues differ from one only through sampling error and therefore form a relatively flat pattern. Consequently, eigenvalues from the original dataset that exceed a predetermined threshold derived from the parallel eigenvalues (such as their mean or a chosen percentile) are considered substantive, and the corresponding factors are retained.

Since its introduction by Horn (1965), PA has undergone several improvements. In the original work, only a single normally distributed parallel dataset was generated. Taking advantage of increased computing power, Humphreys and Montanelli Jr (1975) modified Horn’s method to generate multiple random datasets instead of just one. More recently, researchers have suggested retaining factors whose observed eigenvalues exceed the 95th percentile of the corresponding eigenvalues from the random datasets (Cota et al. 1993). Alternative ways of creating parallel datasets have also been introduced, such as permuting the original data (Buja and Eyuboglu 1992).
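
The procedure can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the implementation used in this study: parallel_analysis is a hypothetical helper; parallel data are drawn either as independent standard normal variables or by shuffling each column of the observed data independently; the threshold is either the mean or a chosen percentile of the random eigenvalues; and factors are counted up to the first observed eigenvalue that fails to exceed its threshold, which is one common convention.

```python
import numpy as np

def sorted_eigenvalues(X):
    """Eigenvalues of the correlation matrix of X (n x p), largest first."""
    return np.sort(np.linalg.eigvalsh(np.corrcoef(X, rowvar=False)))[::-1]

def parallel_analysis(X, n_sets=1000, percentile=95.0, permute=False, seed=0):
    """Hypothetical sketch of parallel analysis.

    percentile=None compares against the mean random eigenvalues; a number
    such as 95 uses that percentile instead. permute=True shuffles every
    column of X independently instead of drawing standard normal data.
    """
    rng = np.random.default_rng(seed)
    n, p = X.shape
    observed = sorted_eigenvalues(X)
    random_eigs = np.empty((n_sets, p))
    for i in range(n_sets):
        Xr = rng.permuted(X, axis=0) if permute else rng.standard_normal((n, p))
        random_eigs[i] = sorted_eigenvalues(Xr)
    if percentile is None:
        threshold = random_eigs.mean(axis=0)
    else:
        threshold = np.percentile(random_eigs, percentile, axis=0)
    above = observed > threshold
    # Count factors up to the first observed eigenvalue that fails the comparison
    return p if above.all() else int(np.argmin(above))

# Hypothetical data: two uncorrelated factors, three indicators each, loading 0.9
rng = np.random.default_rng(42)
n = 1000
F = rng.standard_normal((n, 2))
Lam = np.zeros((6, 2))
Lam[:3, 0] = 0.9
Lam[3:, 1] = 0.9
X = F @ Lam.T + np.sqrt(1 - 0.9 ** 2) * rng.standard_normal((n, 6))
print(parallel_analysis(X, n_sets=200))                                  # 95th percentile
print(parallel_analysis(X, n_sets=200, percentile=None, permute=True))   # mean, permuted data
```

With two strong, uncorrelated factors and 1000 cases, the observed third eigenvalue lies far below its random counterpart, so both variants should report two factors. Each random dataset requires its own eigendecomposition, which is why the cost grows linearly with n_sets.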

Parallel analysis has become very popular because of its accuracy (Warne et al. 2012). Several studies described PA as one of the best procedures for estimating the number of factors (Hubbard and Allen 1987; Thompson and Daniel 1996; Weiner 2003), although generating \(N\) parallel datasets and performing an eigendecomposition for each requires roughly \(N\) times the computing time of the K1 rule. Another concern with PA is its tendency to underfactor, as pointed out by previous research (Turner 1998).

2.4 The Bass-Ackward Method

The bass-ackward (BA) method, introduced by Goldberg (2006), facilitates the analysis of the hierarchical structure within a set of variables based on factor scores. The factors progress from abstract to specific as the hierarchical tree expands from top to bottom. The correlations between factors at adjacent levels serve as the weights of the corresponding edges, or paths, offering researchers nuanced insight into the relationships between the factors. Compared with traditional bottom-up factor analysis, the BA method makes these relationships explicit by showing how each broad factor decomposes into more detailed sub-factors. This approach is particularly helpful for researchers who want to trace how broad constructs differentiate into narrower ones.

Over time, the BA method has undergone several enhancements and adaptations. An early study by Waller (2007) introduced a simplified procedure for replicating hierarchical structures. This work demonstrated that correlations between factor scores across different levels could be computed without actual factor scores, enabling BA’s application to any dataset with an available correlation matrix. Subsequently, the BA method has been applied and adapted in diverse applications, particularly in psychopathology and personality research (Kotov et al. 2017; Tackett et al. 2008; Van den Broeck 2013). For example, Ringwald et al. (2023) utilized the BA method to explore connections between psychopathology dimensions within the HiTOP (Hierarchical Taxonomy of Psychopathology) framework. Due to the absence of individual-level data, the study calculated congruence coefficients for factors across levels, offering a comparable estimate of the cross-level factor associations. Kim and Eaton (2015) and Forbush et al. (2023) adopted a modified version of Goldberg’s method, incorporating exploratory structural equation modeling to extract latent factors. A recent advancement by Forbes (2023) further refined the original BA method by enhancing the analysis of associations across hierarchical levels. This refinement introduced techniques to maintain essential factors and strong correlations, thereby facilitating a more distinct and clear-cut hierarchical structure.
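
Waller's observation follows from a general property of linear composites: if standardized data \({\textbf{Z}}\) have correlation matrix \({\textbf{R}}\) and scores are formed as \({\textbf{Z}}{\textbf{W}}_a\) and \({\textbf{Z}}{\textbf{W}}_b\), their covariance is \({\textbf{W}}_a^{\top }{\textbf{R}}{\textbf{W}}_b\), so cross-level correlations require only \({\textbf{R}}\) and the score weights (the symbols \({\textbf{Z}}\), \({\textbf{R}}\), and \({\textbf{W}}\) are our notation). The Python sketch below illustrates this under our own assumptions. Each level uses principal components with a plain varimax rotation (no Kaiser normalization), which may differ from the extraction and rotation used by any particular BA implementation, and varimax, level_weights, and cross_level_corr are hypothetical helpers. The final lines check the formula against correlations computed from actual component scores.

```python
import numpy as np

def varimax(A, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation matrix T for loadings A (no Kaiser normalization)."""
    p, k = A.shape
    T = np.eye(k)
    if k < 2:
        return T
    crit = 0.0
    for _ in range(max_iter):
        L = A @ T
        grad = A.T @ (L ** 3 - L @ np.diag((L ** 2).sum(axis=0)) / p)
        u, s, vt = np.linalg.svd(grad)
        T = u @ vt
        new_crit = s.sum()
        if new_crit < crit * (1 + tol):
            break
        crit = new_crit
    return T

def level_weights(R, k):
    """Score weights W (p x k) for k varimax-rotated principal components of R."""
    vals, vecs = np.linalg.eigh(R)
    order = np.argsort(vals)[::-1][:k]
    vals, vecs = vals[order], vecs[:, order]
    T = varimax(vecs * np.sqrt(vals))            # rotate the unrotated loadings
    return vecs @ np.diag(1 / np.sqrt(vals)) @ T

def cross_level_corr(R, Wa, Wb):
    """Correlations between scores Z @ Wa and Z @ Wb, computed from R alone."""
    sd_a = np.sqrt(np.diag(Wa.T @ R @ Wa))
    sd_b = np.sqrt(np.diag(Wb.T @ R @ Wb))
    return (Wa.T @ R @ Wb) / np.outer(sd_a, sd_b)

# Hypothetical correlated data used only to check the formula
rng = np.random.default_rng(0)
X = rng.standard_normal((300, 8)) @ rng.standard_normal((8, 8))
R = np.corrcoef(X, rowvar=False)
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
W2, W3 = level_weights(R, 2), level_weights(R, 3)
C23 = cross_level_corr(R, W2, W3)                # 2 x 3 level-2 to level-3 correlations
scores = np.hstack([Z @ W2, Z @ W3])
C23_from_scores = np.corrcoef(scores, rowvar=False)[:2, 2:]
print(np.allclose(C23, C23_from_scores))         # True
```

The check holds for any weight matrices, because the sample covariance of the standardized data equals \({\textbf{R}}\) exactly; only the choice of extraction and rotation determines which cross-level correlations come out.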

2.4.1 Termination Criteria

The hierarchical structure can have at most as many levels as there are observed variables, but the top-down procedure should always terminate before reaching this maximum. The factors at the bottom level when the expansion stops are the ones retained, so the number of factors depends on when the top-down expansion is terminated.

Existing literature, including the foundational work of Goldberg (2006) and more recent advancements by Forbes (2023), has highlighted the necessity of defining termination criteria. However, the performance of these criteria, and how they compare with traditional approaches such as the K1 rule and PA, remain unexplored. In this section, we introduce two fundamental termination criteria that underpin most existing methods for simplifying hierarchical structures, either independently or in combination.

(1) BA-maxLoading Criterion Following Goldberg’s recommendation, the expansion should stop when a factor emerges on which no variable has its highest loading. This criterion rests on the principle that a factor is redundant if it is not the primary explanation for any observed variable. Upon each expansion from the \(k\)th level to the \((k+1)\)th level, the factor loading matrix is computed to identify the primary factor for each variable at the \((k+1)\)th level. The process terminates if any factor at this level is not the primary factor for any variable, resulting in the retention of \(k\) factors.

(2) BA-cutoff Criterion This criterion sets a predetermined cutoff value for the inter-level correlations and uses it to decide when to terminate the expansion. It draws inspiration from common graph pruning techniques (Jain and Dubes 1988; Newman 2004; Quinlan 1986), in which unimportant edges (e.g., those with low weights) are removed to simplify the structure. In the BA method, these “edges” are the correlations between factors at adjacent hierarchical levels. At each expansion stage, the \(k(k+1)\) inter-level correlations between the \(k\)th and \((k+1)\)th levels are computed, and those falling below the cutoff value are discarded. The process stops if any factor at the \((k+1)\)th level has no remaining correlation with any factor at the \(k\)th level, yielding a total of \(k\) factors.

We refer to the first criterion as BA-maxLoading and the second criterion as BA-cutoff in this paper.
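
The two stopping rules can be sketched in Python as below. This is a hypothetical illustration written from the descriptions above, not the code behind the study or any package. Each function receives per-level matrices that have already been computed, however they were extracted; loadings and correlations are compared in absolute value because the signs of extracted factors are arbitrary, which is our assumption. All example matrices are made up.

```python
import numpy as np

def ba_max_loading(loadings):
    """Hypothetical BA-maxLoading sketch: loadings[k - 1] is the p x k loading matrix at level k."""
    for L in loadings[1:]:
        primary = np.argmax(np.abs(L), axis=1)   # each variable's primary factor
        if np.unique(primary).size < L.shape[1]:
            return L.shape[1] - 1                # some new-level factor is no variable's primary
    return loadings[-1].shape[1]                 # no stop within the levels supplied

def ba_cutoff(cross_corrs, cutoff):
    """Hypothetical BA-cutoff sketch: cross_corrs[k - 1] is the k x (k + 1) matrix of
    correlations between the factors at levels k and k + 1."""
    for k, C in enumerate(cross_corrs, start=1):
        kept = np.abs(C) >= cutoff               # edges that survive pruning
        if not kept.any(axis=0).all():           # a level-(k + 1) factor lost all its edges
            return k
    return len(cross_corrs) + 1                  # no stop within the levels supplied

# Made-up loadings for four variables at levels 1-3
L1 = np.array([[0.7], [0.6], [0.6], [0.5]])
L2 = np.array([[0.8, 0.1], [0.7, 0.2], [0.1, 0.7], [0.2, 0.6]])
L3 = np.array([[0.8, 0.1, 0.3], [0.7, 0.2, 0.2], [0.1, 0.7, 0.3], [0.2, 0.6, 0.4]])
print(ba_max_loading([L1, L2, L3]))   # 2: no variable loads highest on the third factor

# Made-up inter-level correlations between levels 1-2 and 2-3
C12 = np.array([[0.85, 0.83]])
C23 = np.array([[0.95, 0.30, 0.60],
                [0.10, 0.92, 0.70]])
for cutoff in (0.5, 0.8, 0.9):
    print(cutoff, ba_cutoff([C12, C23], cutoff))   # 3, 2, and 1 factors, respectively
```

Raising the cutoff prunes more edges and stops the expansion earlier, which mirrors the more conservative behavior of larger cutoffs discussed later in this paper.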

2.5 An Example

To illustrate the three methods, we applied them to a real dataset from the study by Holzinger and Swineford (1939). In that study, seventh- and eighth-grade students from two schools, the Grant-White School (\(n = 145\)) and the Pasteur School (\(n = 156\)), completed 26 tests designed to measure a general factor and five specific factors. In this example, we used the data of the 145 Grant-White students on 19 of the tests, which assess four domain factors: spatial ability, verbal ability, speed, and memory.

By conducting eigendecomposition, the eigenvalues of the observed data correlation matrix were obtained as: 6.30, 1.95, 1.53, 1.49, 0.94, 0.87, 0.76, 0.66, 0.65, 0.57, 0.55, 0.46, 0.44, 0.41, 0.37, 0.32, 0.31, 0.23, and 0.20. Out of these, four eigenvalues are greater than 1. Thus, according to the K1 rule, one can extract four factors for the 19 variables.

In PA, we generated 1000 parallel datasets of uncorrelated variables with the same dimensions as the real data (145 participants on 19 variables) and obtained the eigenvalues of the correlation matrix of each. Figure 1 shows the eigenvalues of the original data together with the 95th percentile and the mean of the eigenvalues from the 1000 generated datasets. These two summaries serve as threshold lines in parallel analysis for determining the number of factors to retain. In this example, the first two original eigenvalues were above these thresholds. Therefore, parallel analysis suggests retaining two factors.

Finally, the hierarchical factor structure generated by the BA method is presented in Fig. 2. The single factor at the first level (top row), \(F11\), was retained for the one-factor solution; that is, the solution was based on the assumption that all variables were associated solely with one factor. The factors \(F21\) and \(F22\), located at the second level, were retained for the two-factor solution. Generally speaking, the \(k\) factors at the kth level, denoted as \(Fk1, Fk2, \ldots , Fkk\), were retained for the \(k\)-factor solution. Since the maximum number of possible factors is smaller than the number of variables, the inequality \(1 \le k < p\) holds, where \(p\) is the total number of observed variables. The edges or lines depicted in the figure represent the correlation between two factors across adjacent levels. A large correlation indicates that the factor at the lower level is likely to be a successor of the factor at the higher level. For example, the correlation between \(F31\) and \(F41\) was 1, suggesting that \(F41\) was inherited from \(F31\).

The number of levels retained in Fig. 2 depends on the termination criterion. Under BA-maxLoading, the top-down expansion stops at level 6, because at level 7 no variable has its largest loading on factor \(F77\). Under the BA-cutoff criterion, the stopping level depends on the cutoff value. For example, with a cutoff of 0.8, the correlations between \(F21\) and \(F33\) (0.77), \(F21\) and \(F31\) (0.48), and \(F22\) and \(F32\) (0.52) are all below the cutoff, so the process terminates at level 2 (i.e., two factors are retained). With a cutoff of 0.9, the correlations between \(F11\) and \(F21\) (0.82) and between \(F11\) and \(F22\) (0.81) both fall below the cutoff, so the process terminates at level 1 (i.e., a single factor is retained). According to our simulation, the best cutoff usually lies between 0 and 0.4, which would retain 6 to 7 factors in this example dataset.

3 Simulation Study

The Monte Carlo simulation study had two goals. First, it sought to provide the first assessment of how effectively the BA method determines the number of factors. Second, it aimed to compare the performance of the three methods discussed above under various conditions.

3.1 Simulation Design

In the simulation study, we investigated various conditions that might influence the performance of the methods: sample size (\(n\)), number of factors (\(q\)), number of variables per factor (\(p/q\)), size of factor loading (\(\lambda \)), size of cross-loading (\(\lambda '\)), and size of factor correlations (\(\phi \)).

Sample Size The sample sizes were set to \(n\) = 100, 200, 300, 400, and 500, ranging from “poor” to “very good” according to Comrey and Lee (1992). Small samples contain less information and make latent factors harder to identify. Conversely, large samples usually lead to more stable and accurate results, at the cost of greater data collection effort. The median sample size in our design (300) is close to the median of 267 reported for empirical studies by Henson and Roberts (2006).

Number of Factors We generated datasets with the number of factors \(q\) = 3, 4, 5, and 6, which covers a wide range of real cases in previous psychological research (DiStefano and Hess 2005; Henson and Roberts 2006).

Number of Variables per Factor The variable-to-factor ratio is a critical determinant of model stability (Guadagnoli and Velicer 1988). We examined cases where one latent factor explains 2, 4, 6, 8, or 10 observed variables, in line with typical research settings (DiStefano and Hess 2005). The corresponding total number of variables \(p\) ranges from 6 (2 \(\times \) 3) to 60 (6 \(\times \) 10).

Size of Factor Loadings and Cross-Loadings The magnitude of factor loadings has the greatest effect on model performance (Guadagnoli and Velicer 1988). We selected three levels of factor loadings, \(\lambda = 0.5\), 0.6, and 0.7, ranging from moderate to strong (Warne et al. 2012). We also examined the effect of cross-loadings at two levels, \(\lambda '= 0\) and 0.1; when \(\lambda '= 0\), the loading matrix has simple structure. Hence, there were \(2 \times 3 = 6\) factor loading conditions in our simulation.

Size of Factor Correlations Factors extracted from psychological datasets often correlate with each other. Our simulations used factor correlation \(\phi =0\), 0.1, 0.2, and 0.3. When \(\phi \)=0, the factors are orthogonal. When \(\phi = 0.1\)–0.2, the factors are slightly correlated. When \(\phi = 0.3\), the factors are moderately correlated.

For the BA-cutoff criterion, we set three cutoff values: 0.20, 0.25, and 0.30, as these were optimal in most cases based on our preliminary investigation.

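To better illustrate the settings, the path diagram for the condition with \(q=2\) and \(p/q=3\) is given in Fig. 3. The factor loading matrix and factor correlation matrix are

\[\varvec{\Lambda } = \begin{pmatrix} l & c \\ l & c \\ l & c \\ c & l \\ c & l \\ c & l \end{pmatrix}, \qquad \varvec{\Phi } = \begin{pmatrix} 1 & \phi \\ \phi & 1 \end{pmatrix}.\]
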
In the path diagram, solid lines represent salient loadings \(l\), and dotted lines represent cross-loadings \(c\). In this example, there are 2 factors and 6 variables, and each factor is mainly associated with 3 variables. As shown in Fig. 3, the first 3 variables are mainly associated with factor 1 through a loading of \(l\) and slightly associated with factor 2 through a cross-loading of \(c\). The correlation between factor 1 and factor 2 is \(\phi \).

To make the data more realistic, we introduced variability into both the factor loading and factor correlation matrices. For a given level of factor loading \(\lambda > 0\), each \(l\) was drawn from a uniform distribution on [\(\lambda - 0.05\), \(\lambda +0.05\)]; similarly, each factor correlation was drawn from a uniform distribution on [\(\phi -0.05\), \(\phi + 0.05\)]. For simulation results based on data generated with fixed \(l\) and \(\phi \), see “Appendix 1.”
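
A minimal Python sketch of this data-generating process is given below; simulate_efa_data is a hypothetical name, and several details are our assumptions rather than specifications from the text. The text does not state how cross-loadings are arranged when \(q > 2\), so the sketch hypothetically places each variable's cross-loading on the next factor (cyclically), which reproduces the two-factor layout of Fig. 3. Jitter is applied to cross-loadings only when \(\lambda ' > 0\) and to factor correlations only when \(\phi > 0\). Unique variances are set so that every observed variable has unit variance, and data are drawn from a multivariate normal distribution.

```python
import numpy as np

def simulate_efa_data(n, q, per_factor, lam, cross, phi, seed=None):
    """Hypothetical sketch: draw n cases from a q-factor model with jittered parameters."""
    rng = np.random.default_rng(seed)
    p = q * per_factor
    Lam = np.zeros((p, q))
    for j in range(q):
        rows = slice(j * per_factor, (j + 1) * per_factor)
        Lam[rows, j] = rng.uniform(lam - 0.05, lam + 0.05, per_factor)   # salient loadings l
        if cross > 0:
            # Assumed layout: cross-load on the next factor, cyclically
            Lam[rows, (j + 1) % q] = rng.uniform(cross - 0.05, cross + 0.05, per_factor)
    Phi = np.eye(q)
    if phi > 0:
        upper = np.triu_indices(q, k=1)
        Phi[upper] = rng.uniform(phi - 0.05, phi + 0.05, upper[0].size)
        Phi = Phi + np.triu(Phi, k=1).T                                    # mirror to lower triangle
    common = Lam @ Phi @ Lam.T                                             # Lambda Phi Lambda^T
    psi = 1.0 - np.diag(common)                                            # unique variances
    if np.any(psi <= 0):
        raise ValueError("loadings imply a communality >= 1 (Heywood case)")
    Sigma = common + np.diag(psi)                                          # unit diagonal
    X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
    return X, Lam, Phi, Sigma

X, Lam, Phi, Sigma = simulate_efa_data(n=200, q=2, per_factor=3, lam=0.6,
                                       cross=0.1, phi=0.2, seed=1)
print(X.shape)  # (200, 6)
```

Setting the unique variances from the diagonal of \(\varvec{\Lambda }\varvec{\Phi }\varvec{\Lambda }^{\top }\) keeps every observed variable standardized, and the explicit check guards against parameter combinations that would imply a communality of one or more.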

3.2 Evaluation Criteria

There are a total of \(5 \times 4 \times 5 \times 6 \times 4 = 2400\) combinations of conditions in the simulation study. For each condition, we generated 500 datasets and applied the three methods introduced earlier to determine the number of factors. To compare the performance of these methods, we used several evaluation metrics validated by previous research (Auerswald and Moshagen 2019; Warne et al. 2012).

The accuracy of a method is defined as the proportion of correct results across all replications. We also calculated the standard deviation of the 500 estimates of the number of factors to measure the method’s stability.

If a method fails to retain the correct number of factors in a replication, the result is considered biased. If the true number of factors under a given condition is \(q\), retaining \(q-1\) or fewer factors indicates underfactoring, while retaining \(q+1\) or more indicates overfactoring; both are biased outcomes. The mean bias is the mean absolute difference between the estimated and true numbers of factors across all replications, and the ratio of underfactoring (or overfactoring) is the proportion of replications with underextraction (or overextraction). Correspondingly, the mean bias of underfactoring (or overfactoring) is the mean absolute difference between the estimated and true numbers of factors across all underfactoring (or overfactoring) cases.
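
These metrics can be computed per condition with a short Python sketch such as the one below; extraction_metrics is a hypothetical helper, not the authors' code. We assume the sample standard deviation (ddof=1), since the text does not say which denominator was used, and return NaN for the underfactoring or overfactoring bias when no such cases occur.

```python
import numpy as np

def extraction_metrics(estimates, q):
    """Hypothetical helper: summarize estimated numbers of factors against the true q."""
    est = np.asarray(estimates, dtype=float)
    diff = est - q
    under, over = diff < 0, diff > 0

    def mean_abs(mask):
        # Mean absolute deviation within a subset; NaN if the subset is empty
        return float(np.abs(diff[mask]).mean()) if mask.any() else float("nan")

    return {
        "accuracy": float(np.mean(diff == 0)),
        "sd": float(est.std(ddof=1)),         # assumed sample SD
        "mean_bias": float(np.abs(diff).mean()),
        "under_ratio": float(under.mean()),
        "over_ratio": float(over.mean()),
        "under_bias": mean_abs(under),
        "over_bias": mean_abs(over),
    }

res = extraction_metrics([3, 3, 2, 4, 5], q=3)   # five made-up replications
print(res["accuracy"], res["mean_bias"], res["under_bias"], res["over_bias"])
```

For these five made-up replications with \(q = 3\), the accuracy is 0.4, the mean bias is 0.8, one case (20%) underfactors by 1, and two cases (40%) overfactor by 1.5 on average.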

3.3 Results

3.3.1 Overall Comparisons

Table 1 summarizes the overall performance of the three methods. Overall, PA performed best, with the lowest average mean bias (0.76). BA-maxLoading had an average mean bias of 1.12, followed by K1 with 1.49. BA-cutoff had a mean bias ranging from 1.80 to 2.12, making it the most biased method.

The direction and extent of bias varied among methods. BA-maxLoading had the lowest ratio of underfactoring (5%), while K1 had the lowest mean bias of underfactoring (0.38). However, both tended to overextract, retaining on average 1.45 and 1.99 more factors than the true number in 32% and 36% of cases, respectively. PA, in contrast, underestimated the number of factors by an average of 1.03 in 29% of cases and rarely overestimated it. BA-cutoff also tended to overfactor, with large biases in both under- and overfactoring cases. Accuracy followed a similar pattern: PA identified the correct number of factors in 71% of cases, followed by BA-maxLoading (58%) and K1 (56%).

In terms of stability, PA and K1 produced stable results with an average standard deviation of 0.25 and 0.39, respectively. In contrast, BA-maxLoading and BA-cutoff exhibited relatively large standard deviations. Note that as the cutoff value increased, the standard deviation of BA-cutoff decreased, leading to more conservative and stable estimates across replications.

We now illustrate how individual conditions, such as sample size and the presence of cross-loadings, influence the performance of the different methods.

3.3.2 Sample Size

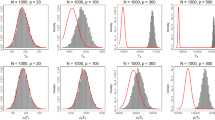

Figure 4 shows the average accuracy (left) and average mean bias (right) of factor extraction as a function of sample size \(n\). As anticipated, increasing the sample size improved accuracy and reduced mean bias for all methods, consistent with the principle that a larger dataset contains more information and makes the correct number of factors easier to identify.

Among the evaluated methods, PA consistently performed best. BA-maxLoading ranked second when \(n < 400\), suggesting that it may be well suited to small and moderate samples. BA-cutoff was generally less accurate, although its accuracy across the different cutoff values tended to converge at larger sample sizes. Overall, the mean bias of K1 and BA-maxLoading appears more sensitive to sample size than that of the other two methods.

3.3.3 Number of Factors

Figure 5 displays the average accuracy and mean bias of factor extraction with different numbers of factors \(q\). As the number of factors increased, PA and K1 were less likely to identify the number of factors correctly, while BA-maxLoading and BA-cutoff became more accurate. Specifically, BA-maxLoading outperformed K1 when \(q \ge 5\) and exceeded PA when \(q \ge 6\). Therefore, BA-maxLoading seems to be more suitable for conditions with a large number of potential factors, while K1 and PA might be applied when the number of potential factors is small.

3.3.4 Number of Variables Per Factor

Figure 6 shows the effect of the number of variables per factor \(p/q\) on factor extraction. As shown in the left plot, all methods except K1 became more accurate as \(p/q\) increased, whereas the accuracy of K1 decreased dramatically when \(p/q > 4\). A similar trend appears in the right plot: K1 had an average mean bias of around 1.1 when \(p/q = 2\) but more than 3 when \(p/q=10\). Therefore, researchers should avoid using K1 when there are many variables relative to the number of potential factors. The average mean bias of both BA-maxLoading and BA-cutoff peaked at \(p/q=4\) and then declined. Thus, the bass-ackward method might be preferable in situations with a higher variable-to-factor ratio.

3.3.5 Size of Factor Loadings and Cross-Loadings

Figure 7 illustrates the performance of factor extraction methods according to the size of the factor loadings \(\lambda \). All methods performed better as \(\lambda \) increased, which is expected since larger factor loadings make the factors easier to identify.

Table 2 presents the performance of factor extraction methods with and without cross-loadings. Comparing the two tables, we found that PA was the best method without cross-loading, achieving the highest accuracy of 88%. However, its performance deteriorated with small cross-loadings, where its accuracy dropped to 54%. Meanwhile, the other three methods performed better under the conditions with cross-loadings, with BA-maxLoading becoming the best method with an accuracy of 60%. Hence, PA is most suitable for conditions without cross-loadings, and BA-maxLoading is preferable when cross-loadings exist.

3.3.6 Factor Correlations

Figure 8 shows the performance of factor extraction methods when the factor correlation \(\phi \) varies. As illustrated in the left plot, when \(\phi \) increased, PA became less accurate, and was outperformed by BA-maxLoading when \(\phi = 0.3\). The reason might be that higher correlations between factors increase the complexity of the real data, making the comparison between real data and parallel data less effective. In contrast, BA-cutoff became more accurate with larger factor correlations, likely because it takes inter-level factor correlations into account.

4 Software

As demonstrated in our simulation study, the bass-ackward method has advantages in identifying the number of factors under certain conditions. BA can be conducted using the function bassAckward() in the R package psych (Revelle and Revelle 2015). To provide a user-friendly tool for researchers who are not familiar with R, we developed a web application that computes and visualizes the hierarchical factor structure of a dataset. The application was built with PHP (Bakken et al. 2000) and R (R Core Team 2021).

As shown in Fig. 9, the online tool supports several data formats (SPSS, SAS, Excel, and CSV). Users may select a subset of variables to analyze by entering the corresponding column numbers, and may mark specific values as missing data by entering those values. For the BA method, both the BA-cutoff and BA-maxLoading criteria are supported; users who choose BA-cutoff must specify their own cutoff value.

Once the form is submitted via the “calculate” button, the web application returns the EFA results within a few seconds (Figs. 10 and 11). All three methods discussed in this paper are applied to determine the number of factors from the given variables. In addition, a factor tree based on the selected BA criterion appears at the top of the page. In this hierarchical tree, nodes with the same color represent factors inherited from the top down, and the edges labeled with decimal values denote factor correlations between adjacent factor levels. Detailed BA output is also provided, including the factor correlation and factor loading matrices.

The hierarchical factor structure is computed with the R package psych (Revelle and Revelle 2015), with two major enhancements. First, the application suggests the number of factors to retain based on the BA-maxLoading criterion. Second, it automatically determines the inheritance relationships within the factor structure and displays each line of inheritance, from the top down, in a different color (Fig. 2). The output is rendered with the R package DiagrammeR (Iannone and Iannone 2022). The web application can be accessed at https://websem.psychstat.org/apps/bass/.
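The tree structure can be illustrated with a minimal sketch of the bass-ackward idea. It assumes Goldberg's (2006) original choice of principal components with varimax rotation at each level, and it obtains cross-level score correlations from the correlation matrix and the loadings, in the spirit of Waller (2007), rather than from computed scores. It is not a reimplementation of psych's bassAckward() or of the web application; in particular, assigning each lower-level component to the upper-level component with the largest absolute correlation is an assumed rule for "inheritance", and the helper names `varimax`, `pca_loadings`, and `bass_ackward` are hypothetical.

```python
import numpy as np


def varimax(loadings, gamma=1.0, max_iter=500, tol=1e-10):
    """Kaiser's varimax: orthogonally rotate loadings toward simple structure."""
    p, k = loadings.shape
    rotation = np.eye(k)
    objective = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - (gamma / p) * rotated @ np.diag(np.sum(rotated ** 2, axis=0))
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_objective = np.sum(s)
        if new_objective < objective * (1 + tol):
            break
        objective = new_objective
    return loadings @ rotation


def pca_loadings(corr, k):
    """Unrotated loadings of the first k principal components of `corr`."""
    eigvals, eigvecs = np.linalg.eigh(corr)
    order = np.argsort(eigvals)[::-1][:k]
    return eigvecs[:, order] * np.sqrt(eigvals[order])


def bass_ackward(corr, max_levels):
    """Hypothetical helper: one rotated component solution per level, plus the
    score correlations between adjacent levels and each component's parent."""
    corr_inv = np.linalg.inv(corr)
    levels = []
    for k in range(1, max_levels + 1):
        loadings = pca_loadings(corr, k)
        if k > 1:
            loadings = varimax(loadings)
        # Reflect components so that their loadings sum to a positive value.
        signs = np.where(loadings.sum(axis=0) < 0, -1.0, 1.0)
        levels.append(loadings * signs)
    links = []
    for upper, lower in zip(levels[:-1], levels[1:]):
        # With score weights W = R^{-1} A, cov(upper scores, lower scores) is
        # A_upper' R^{-1} A_lower, and each component score has unit variance.
        cross = upper.T @ corr_inv @ lower
        parents = np.argmax(np.abs(cross), axis=0)  # assumed inheritance rule
        links.append((cross, parents))
    return levels, links


# Hypothetical example: F1 and F2 correlate .5; F3 is nearly independent.
lam = np.kron(np.eye(3), np.full((3, 1), 0.8))  # 9 x 3 loading pattern
phi = np.array([[1.0, 0.5, 0.1], [0.5, 1.0, 0.1], [0.1, 0.1, 1.0]])
corr = lam @ phi @ lam.T
np.fill_diagonal(corr, 1.0)
levels, links = bass_ackward(corr, max_levels=3)
for depth, (cross, parents) in enumerate(links, start=2):
    print(f"level {depth} parents:", parents)
    print(np.round(cross, 2))
```

In this example the two-component level separates the correlated pair (F1, F2) from F3, and at the three-component level the F1 and F2 components share a parent, which is the kind of inheritance the colored tree conveys.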

5 Discussion and Conclusion

In this paper, we investigated three methods for identifying the correct number of factors: the K1 rule, parallel analysis, and the bass-ackward method. We began by briefly introducing their historical development, advantages, and limitations. Subsequently, a Monte Carlo simulation study was conducted to evaluate and compare the performance of these three approaches for retaining the correct number of factors.

Based on the simulation results, PA is generally the best method. It produced the most accurate estimates with the smallest mean bias under most conditions, even when the sample size was small. In particular, its accuracy approached 100% when there were many variables and few latent factors. However, its efficacy diminished as cross-loadings and factor correlations increased, and it was outperformed by BA-maxLoading under some circumstances. Another concern is that PA underfactored in 29% of conditions, and underfactoring can have more deleterious effects than overfactoring (Montoya and Edwards 2021). This finding is consistent with previous research by Turner (1998).

K1 performed poorly under most conditions. It was sensitive to changes in a variety of design parameters and worked well only with large sample sizes, large factor loadings, and few variables per factor. In contrast to PA's underfactoring, K1 overfactored in 32% of conditions. K1 was intended to provide an upper bound rather than the exact number of factors (Hayton et al. 2004). Therefore, we recommend not using this rule to retain factors, except perhaps as a supplementary check under certain circumstances, such as when cross-loadings exist.
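The K1 rule itself is a one-line computation: count the eigenvalues of the correlation matrix that exceed 1 (Kaiser 1960). The sketch below uses a hypothetical one-factor example to contrast the population correlation matrix with a sample drawn from it, illustrating one source of K1's overfactoring: with many indicators per factor, the residual eigenvalues sit just below 1 in the population (0.84 here), and sampling error spreads them so that several exceed 1 in a finite sample. The function name `kaiser_k1` and the example values are illustrative assumptions.

```python
import numpy as np


def kaiser_k1(corr):
    """K1 rule: number of eigenvalues of a correlation matrix greater than 1."""
    return int(np.sum(np.linalg.eigvalsh(corr) > 1.0))


# Hypothetical one-factor model: 20 indicators, loading 0.4, n = 100.
p, loading, n = 20, 0.4, 100
pop_corr = np.full((p, p), loading ** 2)
np.fill_diagonal(pop_corr, 1.0)
# Population eigenvalues: 1 + 19 * 0.16 = 4.04 once, and 0.84 nineteen times.
print(kaiser_k1(pop_corr))  # 1

rng = np.random.default_rng(2024)
factor = rng.standard_normal((n, 1))
data = loading * factor + np.sqrt(1 - loading ** 2) * rng.standard_normal((n, p))
sample_corr = np.corrcoef(data, rowvar=False)
print(kaiser_k1(sample_corr))  # typically well above 1 for this n and p
```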

One of the primary purposes of this paper was to evaluate how accurately the bass-ackward approach identifies the correct number of factors across different conditions. We examined the BA method under two termination criteria, BA-maxLoading and BA-cutoff. BA-maxLoading stood out for its robust performance, particularly in complex scenarios with high inter-factor correlations or cross-loadings, which are common in real-world datasets. It is also recommended for conditions with a moderate sample size, a large number of potential factors, and large factor loadings. Additional advantages include lower computational demands and enhanced interpretability due to its hierarchical output. However, in contrast to PA, which tends to underfactor, BA-maxLoading tends to overestimate the number of factors. Convergence problems further reduce its reliability, and Heywood cases occurred when it attempted to extract a relatively large number of factors. Therefore, we recommend using PA together with BA-maxLoading when deciding the number of levels in the final factor structure.

BA-cutoff showed unsatisfactory accuracy and is therefore not recommended as a stand-alone approach for factor retention. However, it could be integrated with BA-maxLoading or other techniques to improve outcomes (Forbes 2023); for example, a conservative cutoff threshold could help reduce the risk of overfactoring. In addition, given the interpretable hierarchical structure produced by the bass-ackward approach, both BA termination criteria can be fine-tuned to suit specific research contexts. Future research could explore combinations of these methods to achieve more accurate and robust results.

To summarize the simulation results and help researchers select an appropriate method in specific contexts, we offer the following recommendations based on typical scenarios encountered in factor analysis. For datasets with a large number of variables and low to moderate factor correlations, PA is the most reliable choice because of its high accuracy and low mean bias. In contrast, for complex data structures with high inter-factor correlations, cross-loadings, or a large number of potential factors, BA-maxLoading is preferable for its robust performance and lower computational demands. For smaller samples, where underfactoring is a major concern, combining PA with BA-maxLoading can provide a balanced approach that leverages the strengths of both methods. Overall, practitioners should weigh the specific characteristics of their data against the strengths and weaknesses of each method to make an informed decision.

It should be noted that the K1 rule and PA rely on eigenvalues, whereas the BA method takes a tree-based approach. Although eigenvalue-based methods generally withstand non-normality better than methods based on maximum likelihood estimation (MLE), they are still vulnerable to non-normal distributions. PA has shown superior performance and acceptable results under moderate non-normality (Li et al. 2020). Conversely, the BA method makes no specific assumptions about the distribution of the variables, but its behavior under non-normality has yet to be examined.

For future directions, researchers may evaluate the bass-ackward approach on more complex datasets, particularly under conditions such as non-normal data distributions, which could yield valuable insights. Additionally, case studies demonstrating the application of this method could further elucidate its practical utility and limitations. Finally, better termination criteria with higher accuracy and time efficiency could be developed to make the bass-ackward method a more powerful approach for factor analysis in psychological research.

Notes

The simulation code is available at https://osf.io/vzufs/.

References

Auerswald, M., and M. Moshagen. 2019. How to determine the number of factors to retain in exploratory factor analysis: A comparison of extraction methods under realistic conditions. Psychological Methods 24 (4): 468. https://doi.org/10.1037/met0000200.

Bagby, R.M., M. Sellbom, L.E. Ayearst, M.S. Chmielewski, J.L. Anderson, and L.C. Quilty. 2014. Exploring the hierarchical structure of the MMPI-2-RF personality psychopathology five in psychiatric patient and university student samples. Journal of Personality Assessment 96 (2): 166–172. https://doi.org/10.1080/00223891.2013.825623.

Bakken, S.S., Z. Suraski, and E. Schmid. 2000. PHP manual, vol. 1. Bloomington: iUniverse Incorporated.

Braeken, J., and M.A. Van Assen. 2017. An empirical Kaiser criterion. Psychological Methods 22 (3): 450.

Breiman, L. 2001. Random forests. Machine Learning 45: 5–32.

Browne, M.W. 1968. A note on lower bounds for the number of common factors. Psychometrika 33 (2): 233–236.

Buja, A., and N. Eyuboglu. 1992. Remarks on parallel analysis. Multivariate Behavioral Research 27 (4): 509–540.

Cattell, R., and J. Jaspers. 1967. A general plasmode for factor analytic exercises and research (no. 30-10-52). MBR Monographs 3: 67.

Cliff, N. 1988. The eigenvalues-greater-than-one rule and the reliability of components. Psychological Bulletin 103 (2): 276. https://doi.org/10.1037/0033-2909.103.2.276.

Comrey, A., and H. Lee. 1992. Interpretation and application of factor analytic results. In A first course in factor analysis, 2nd ed., ed. A.L. Comrey and H.B. Lee. London: Psychology Press. https://doi.org/10.4324/9781315827506.

Costello, A.B., and J. Osborne. 2005. Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research, and Evaluation 10 (1): 7. https://doi.org/10.7275/jyj1-4868.

Cota, A.A., R.S. Longman, R.R. Holden, G.C. Fekken, and S. Xinaris. 1993. Interpolating 95th percentile eigenvalues from random data: An empirical example. Educational and Psychological Measurement 53 (3): 585–596. https://doi.org/10.1177/0013164493053003001.

De Winter, J.C., and D. Dodou. 2012. Factor recovery by principal axis factoring and maximum likelihood factor analysis as a function of factor pattern and sample size. Journal of Applied Statistics 39 (4): 695–710. https://doi.org/10.1080/02664763.2011.610445.

DiStefano, C., and B. Hess. 2005. Using confirmatory factor analysis for construct validation: An empirical review. Journal of Psychoeducational Assessment 23 (3): 225–241. https://doi.org/10.1177/073428290502300303.

Fabrigar, L.R., D.T. Wegener, R.C. MacCallum, and E.J. Strahan. 1999. Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods 4 (3): 272.

Fava, J.L., and W.F. Velicer. 1992. The effects of overextraction on factor and component analysis. Multivariate Behavioral Research 27 (3): 387–415. https://doi.org/10.1207/s15327906mbr2703_5.

Forbes, M.K. 2023. Improving hierarchical models of individual differences: An extension of Goldberg’s bass-ackward method. Psychological Methods. https://doi.org/10.1037/met0000546.

Forbush, K.T., Y. Chen, P.Y. Chen, B.K. Bohrer, K.E. Hagan, D.A. Chapa, K.A. Christensen Pacella, V. Perko, B.N. Richson, S.N. Johnson Munguia, and M. Thomeczek. 2023. Integrating “lumpers’’ versus “splitters’’ perspectives: Toward a hierarchical dimensional taxonomy of eating disorders from clinician ratings. Clinical Psychological Science. https://doi.org/10.1177/21677026231186803.

Gerritsen, C.J., M. Chmielewski, K. Zakzanis, and R.M. Bagby. 2018. Examining the dimensions of Schizotypy from the top down: A hierarchical comparison of item-level factor solutions. Personality Disorders: Theory, Research, and Treatment 9 (5): 467. https://doi.org/10.1037/per0000283.

Goldberg, L.R. 2006. Doing it all bass-ackwards: The development of hierarchical factor structures from the top down. Journal of Research in Personality 40 (4): 347–358. https://doi.org/10.1016/j.jrp.2006.01.001.

Goretzko, D., and M. Bühner. 2020. One model to rule them all? Using machine learning algorithms to determine the number of factors in exploratory factor analysis. Psychological Methods 25 (6): 776. https://doi.org/10.1037/met0000262.

Guadagnoli, E., and W.F. Velicer. 1988. Relation of sample size to the stability of component patterns. Psychological Bulletin 103 (2): 265. https://doi.org/10.1037/0033-2909.103.2.265.

Guttman, L. 1954. Some necessary conditions for common-factor analysis. Psychometrika 19 (2): 149–161.

Hayton, J.C., D.G. Allen, and V. Scarpello. 2004. Factor retention decisions in exploratory factor analysis: A tutorial on parallel analysis. Organizational Research Methods 7 (2): 191–205. https://doi.org/10.1177/1094428104263675.

Henson, R.K., and J.K. Roberts. 2006. Use of exploratory factor analysis in published research: Common errors and some comment on improved practice. Educational and Psychological Measurement 66 (3): 393–416. https://doi.org/10.1177/0013164405282485.

Holzinger, K.J., and F. Swineford. 1939. A study in factor analysis: The stability of a bi-factor solution. Supplementary educational monographs. Chicago: University of Chicago.

Horn, J.L. 1965. A rationale and test for the number of factors in factor analysis. Psychometrika 30 (2): 179–185. https://doi.org/10.1007/BF02289447.

Hubbard, R., and S.J. Allen. 1987. An empirical comparison of alternative methods for principal component extraction. Journal of Business Research 15 (2): 173–190.

Humphreys, L.G., and R.G. Montanelli Jr. 1975. An investigation of the parallel analysis criterion for determining the number of common factors. Multivariate Behavioral Research 10 (2): 193–205.

Iannone, R., and M.R. Iannone. 2022. Package ‘DiagrammeR’.

Jain, A.K., and R.C. Dubes. 1988. Algorithms for clustering data. Hoboken: Prentice-Hall Inc.

Jöreskog, K.G. 1967. Some contributions to maximum likelihood factor analysis. Psychometrika 32 (4): 443–482.

Kaiser, H.F. 1960. The application of electronic computers to factor analysis. Educational and Psychological Measurement 20 (1): 141–151. https://doi.org/10.1177/001316446002000116.

Kaiser, H.F. 1970. A second generation little jiffy. Psychometrika 35: 401–415.

Kim, H., and N.R. Eaton. 2015. The hierarchical structure of common mental disorders: Connecting multiple levels of comorbidity, bifactor models, and predictive validity. Journal of Abnormal Psychology 124 (4): 1064.

Kirby, K.N., and J.C. Finch. 2010. The hierarchical structure of self-reported impulsivity. Personality and Individual Differences 48 (6): 704–713. https://doi.org/10.1016/j.paid.2010.01.019.

Kotov, R., R.F. Krueger, D. Watson, T.M. Achenbach, R.R. Althoff, R.M. Bagby, et al. 2017. The hierarchical taxonomy of psychopathology (HiTOP): A dimensional alternative to traditional nosologies. Journal of Abnormal Psychology 126 (4): 454.

Li, Y., Z. Wen, K.-T. Hau, K.-H. Yuan, and Y. Peng. 2020. Effects of cross-loadings on determining the number of factors to retain. Structural Equation Modeling: A Multidisciplinary Journal 27 (6): 841–863.

Linn, R.L. 1965. A Monte Carlo approach to the number of factors problem. Champaign: University of Illinois at Urbana-Champaign.

MacCallum, R.C., M.W. Browne, and L. Cai. 2007. Factor analysis models as approximations. Factor Analysis 100: 153–175.

Montoya, A.K., and M.C. Edwards. 2021. The poor fit of model fit for selecting number of factors in exploratory factor analysis for scale evaluation. Educational and Psychological Measurement 81 (3): 413–440. https://doi.org/10.1177/0013164420942899.

Newman, M.E. 2004. Analysis of weighted networks. Physical Review E 70 (5): 056131.

Preacher, K.J., G. Zhang, C. Kim, and G. Mels. 2013. Choosing the optimal number of factors in exploratory factor analysis: A model selection perspective. Multivariate Behavioral Research 48 (1): 28–56.

Quinlan, J.R. 1986. Induction of decision trees. Machine Learning 1: 81–106.

R Core Team. 2021. R: A language and environment for statistical computing [Computer software manual]. Vienna. Retrieved from https://www.R-project.org/.

Revelle, W., and M.W. Revelle. 2015. Package ‘psych’. The Comprehensive R Archive Network 337: 338.

Ringwald, W.R., M.K. Forbes, and A.G. Wright. 2023. Meta-analysis of structural evidence for the hierarchical taxonomy of psychopathology (HiTOP) model. Psychological Medicine 53 (2): 533–546.

Suhr, D.D. 2005. Principal component analysis vs. exploratory factor analysis. SUGI 30 Proceedings 203 (230): 1–11.

Tackett, J.L., R.F. Krueger, W.G. Iacono, and M. McGue. 2008. Personality in middle childhood: A hierarchical structure and longitudinal connections with personality in late adolescence. Journal of Research in Personality 42 (6): 1456–1462.

Thompson, B., and L.G. Daniel. 1996. Factor analytic evidence for the construct validity of scores: A historical overview and some guidelines, vol. 56(2). Sage: Thousand Oaks.

Turner, N.E. 1998. The effect of common variance and structure pattern on random data eigenvalues: Implications for the accuracy of parallel analysis. Educational and Psychological Measurement 58 (4): 541–568.

Van den Broeck, J. 2013. A trait-based perspective on the assessment of personality and personality pathology in older adults. Unpublished doctoral dissertation. Elsene: Faculty of Psychology and Educational Science, Vrije Universiteit Brussel.

Velicer, W.F. 1976. Determining the number of components from the matrix of partial correlations. Psychometrika 41 (3): 321–327. https://doi.org/10.1007/BF02293557.

Velicer, W.F., C.A. Eaton, and J.L. Fava. 2000. Construct explication through factor or component analysis: A review and evaluation of alternative procedures for determining the number of factors or components. Problems and Solutions in Human Assessment. https://doi.org/10.1007/978-1-4615-4397-8_3.

Waller, N. 2007. A general method for computing hierarchical component structures by Goldberg’s bass-ackwards method. Journal of Research in Personality 41 (4): 745–752. https://doi.org/10.1016/j.jrp.2006.08.005.

Warne, R.T., M. Lazo, T. Ramos, and N. Ritter. 2012. Statistical methods used in gifted education journals, 2006–2010. Gifted Child Quarterly 56 (3): 134–149. https://doi.org/10.1177/0016986212444122.

Weiner, I.B. 2003. Handbook of psychology, history of psychology, vol. 1. Hoboken: Wiley.

Wood, J.M., D.J. Tataryn, and R.L. Gorsuch. 1996. Effects of under-and overextraction on principal axis factor analysis with varimax rotation. Psychological Methods 1 (4): 354.

Yang, B. 2005. Factor analysis methods. In Research in organizations: Foundations and methods of inquiry, ed. R.A. Swanson and E.F. Holton, 181–199. Oakland: Berrett-Koehler Publishers.

Yuan, K.-H., W. Chan, and P.M. Bentler. 2000. Robust transformation with applications to structural equation modelling. British Journal of Mathematical and Statistical Psychology 53 (1): 31–50.

Zwick, W.R., and W.F. Velicer. 1986. Comparison of five rules for determining the number of components to retain. Psychological Bulletin 99 (3): 432. https://doi.org/10.1037/0033-2909.99.3.432.

Funding

This work was supported by grants from the Department of Education (R305D140037; R305D210023). However, the contents do not necessarily represent the policy of the Department of Education, and you should not assume endorsement by the Federal Government. It was also supported by the Lucy Family Institute for Data and Society and Notre Dame International at the University of Notre Dame.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix 1: Simulation Results on Data Without Noise

See Figs. 12, 13, 14, 15, and 16; Tables 3 and 4.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tong, L., Qu, W. & Zhang, Z. Comparison of the K1 Rule, Parallel Analysis, and the Bass-Ackward Method on Identifying the Number of Factors in Factor Analysis. Fudan J. Hum. Soc. Sci. (2024). https://doi.org/10.1007/s40647-024-00423-2

DOI: https://doi.org/10.1007/s40647-024-00423-2