Abstract

Purpose of Review

Selenium, a trace element, is ubiquitous in the environment. The main source of human exposure is diet. Despite its nutritional benefits, it is one of the most toxic naturally occurring elements. Selenium deficiency and overexposure have been associated with adverse health effects. Its level of toxicity may depend on its chemical form, as inorganic and organic species have distinct biological properties.

Recent Findings

Nonexperimental and experimental studies have generated insufficient evidence for a role of selenium deficiency in human disease, with the exception of Keshan disease, a cardiomyopathy. Conversely, recent randomized trials have indicated that selenium overexposure is positively associated with type 2 diabetes and high-grade prostate cancer. In addition, a natural experiment has suggested an association between overexposure to inorganic hexavalent selenium and two neurodegenerative diseases, amyotrophic lateral sclerosis and Parkinson’s disease.

Summary

Risk assessments should be revised to incorporate the results of studies demonstrating toxic effects of selenium. Additional observational studies and secondary analyses of completed randomized trials are needed to address the uncertainties regarding the health risks of selenium exposure.



Introduction

Controversies are common and unavoidable in scientific research, and they represent a major impetus for additional research. However, there are probably few areas as controversial as the health effects of exposure to selenium, a metalloid of toxicological and nutritional interest for many living organisms [1, 2, 3•, 4•, 5]. Exposure to this trace element occurs mainly through diet, particularly through intake of fish, seafood, and meat [6], and generally amounts to a few dozen micrograms per day. Additional sources of selenium exposure are cigarette smoking [7], traffic-related air pollution [8, 9], coal combustion [10,11,12], occupational exposures [13], and dietary supplements [14,15,16].

The possibility that this trace element can either improve or harm human health depending on the dose has been suggested by a large number of studies [17,18,19,20,21]. However, despite hundreds of epidemiologic investigations on this topic, evidence about the amounts of exposure and the specific health outcomes affected by selenium is limited [5, 22•]. At present, most selenium experts would agree on the need to avoid both too low and too high intakes of this element. However, there is a lack of consensus regarding the safe range of exposure, and disagreement as to the veracity of some of the purported associations between selenium exposure and health outcomes such as cancer [3•, 5, 23,24,25,26]. Well-conducted environmental epidemiologic studies, experimental studies (such as randomized controlled trials), and more in-depth insights from laboratory studies have improved our knowledge of the health effects of environmental selenium, of the tools for monitoring its exposure, and of the need to regulate human exposure to this element [1, 17, 20, 27,28,29,30,31]. While the first period of investigation concerned the potential for harm of this element, and the second its possible beneficial effects, accumulating evidence from recent studies has again highlighted the potential toxicity of selenium overexposure [5, 26]. These recent studies have suggested that overexposure may occur at much lower levels than previously believed. They have also implicated excess selenium intake in new diseases, as well as in diseases once thought to arise only with selenium deficiency. These diseases include diabetes; hypertension; neurodegenerative diseases such as amyotrophic lateral sclerosis, Parkinson’s disease, and Alzheimer’s dementia; and cancer [3•, 22•, 32, 33, 34•, 35, 36]. In this review, we summarize the most recent lines of evidence concerning the human health effects of environmental selenium, highlighting key issues currently at the forefront of this research.

Environmental Studies

A PubMed search on the human health effects of environmental selenium exposure shows that such observational studies can be split into two subgroups. One subgroup comprises investigations carried out in environmental contexts characterized by markedly deficient or excessive exposure to selenium. The other comprises studies carried out in non-seleniferous geographic areas, generally Western countries, where investigators have examined the association between environmental selenium and health endpoints. These investigators typically address hypotheses of either beneficial or adverse effects of selenium at the roughly “intermediate” exposure levels that characterize these study populations. For a detailed assessment of these studies, we refer to previous reviews [3•, 5, 26, 37].

Concerning the environmental studies, pioneering studies on naturally occurring selenium overexposure were performed in North and South America and were later followed by studies in China and other parts of the world [26, 38] (Supplemental Fig. S1). The key details of these studies are reported in Table 1. More recently, new studies have been carried out in other seleniferous areas, such as the Brazilian Amazon [50], among the Inuit population of Canada [47, 66], and in a seleniferous area of Punjab [64], while Chinese investigations continue to provide relevant information on this issue [67, 68]. Environmental exposure to selenium in its inorganic hexavalent form has also occurred in a Northern Italian community, and this has been the only investigation of chronic disease risk using a longitudinal study design [53, 54•]. Overall, these studies have identified selenium toxicity to a large number of organs and body systems, such as the liver, the skin, the endocrine system, and the nervous system. Despite the nonexperimental nature of most studies, the lack of replication of results for some endpoints, and other methodological limitations, the overall results provide evidence of toxicity of naturally occurring selenium at high levels of exposure worldwide (Table 1). However, these studies have been of limited use in establishing the exact amounts of exposure that are harmful, generally because of inadequate data on biomarkers of selenium intake [38]. An exception to this pattern has been the observation of an inverse association between selenium exposure and triiodothyronine levels in children living in a seleniferous area of Venezuela, starting at a selenium intake of around 350 μg/day [48]. This finding is of interest in light of the recent results of a Danish trial, in which selenium supplementation of 100 to 300 μg/day adversely affected thyroid function in a dose-dependent manner, decreasing serum TSH and FT4 concentrations [69].
In that study, mean participant age was 66.1 years, baseline plasma selenium levels amounted to 87.3 μg/L, and the supplemental selenium is expected to have been almost entirely organic, since it was administered as selenium-enriched yeast. In contrast, no effect of supplementation was reported in a UK trial among the elderly [70] following administration of 100, 200, or 300 μg/day of the element as selenium-enriched yeast, despite similar background selenium status (plasma levels of 91.3 μg/L).

In addition to selenium overexposure, selenium deficiency has been suggested to play a major role in the etiology of Keshan disease, a rare but severe cardiomyopathy described in Chinese populations [59, 71]. The major pieces of evidence linking the etiology of Keshan disease to selenium deficiency have been its higher frequency in regions low in selenium and the beneficial effect of selenium, administered in its inorganic tetravalent form, on the incidence of Keshan disease in community trials [59, 71,72,73]. However, these community-based trials may have been prone to bias, as they lacked a double-blind design, as well as randomization and allocation concealment at the individual level [59, 71, 74]. In addition, some epidemiologic features are not consistent with a causal association with selenium, such as the seasonal occurrence of Keshan disease, which is more compatible with an infectious etiology [75, 76], the decrease in the disease over time independently of major changes in selenium supply, and the lack of eradication of Keshan disease despite increased selenium intake [72, 77, 78]. Furthermore, the well-known antiviral effects of inorganic selenium may explain the decreasing incidence of Keshan disease after selenite administration [79]. Even though an association between selenium deficiency and Keshan disease has not been firmly established, recent studies linking this disease to selenium deficiency have prompted WHO/FAO to recommend avoiding selenium intakes below 13–19 μg/day in adults (i.e., the thresholds above which Keshan disease has not occurred) [80].

Another selenium-responsive disease is Kashin-Beck disease, a chronic degenerative disorder of the peripheral joints and spine [80]. This osteoarthropathy has been described in some areas of China in both children and adults, and is also considered a disease of multifactorial etiology, mainly related to inadequate nutritional status [81]. Selenium deficiency may play a role, considering ecologic data showing low selenium intake in disease-affected areas and the effectiveness of selenium supplementation in reducing the incidence of Kashin-Beck disease [80, 82, 83].

In addition to the limitations of exposure assessment in environmental observational studies investigating selenium deficiency and excess, it must be noted that little if any emphasis has been given to the specific chemical forms of selenium involved in such settings. In fact, the selenium found in foods, drinking water, and other environmental matrices such as soil and ambient air may exist in several inorganic and organic chemical forms, comprising different selenium compounds [3•, 84,85,86]. The toxicity of selenium species and compounds may differ markedly, and it is generally much higher for the inorganic species (such as selenate and selenite) and for some organic forms (such as selenomethionine) [3•, 36, 87, 88]. Little is known about selenium speciation in most environmental matrices, and the same is true for human tissues and compartments. In addition, the various chemical forms of selenium may differ in their metabolism, distribution in body tissues, and excretion rates, with the inorganic forms being excreted faster. Unfortunately, selenium speciation in both environmental and biological matrices is analytically complex and resource-intensive, which may explain the paucity of data in this field [3•, 89, 90].

A second key limitation of environmental studies has been the little attention given to neurological diseases and disorders [91], with the exception of amyotrophic lateral sclerosis and Parkinson’s disease following low-dose overexposure to inorganic hexavalent selenium [53, 92, 93] and of neurological abnormalities in a high-selenium environment [58]. This contrasts with the growing evidence from both clinical and laboratory studies that selenium exposure, and particularly overexposure, may induce neurotoxic effects [4•, 5, 33, 36, 53, 88, 91, 93,94,95,96,97,98,99,100]. Selenium exposure might also affect cognitive function in both adults and children, though positive, null, and inverse associations have been reported [101,102,103,104,105,106].

The possible occurrence of adverse health effects at selenium exposures in the “average” or intermediate range typically found in Western countries is also an issue of strong interest [25]. This is especially true considering claims that several Western populations might suffer from selenium deficiency and low selenium status [23, 107, 108], as well as claims of a beneficial effect of selenium in cancer prevention issued in the early 2000s [24, 109]. Conversely, recent observational studies have suggested that the exposure levels found in countries definable as neither “selenium deficient” nor “seleniferous” might be associated with adverse effects attributed to selenium overexposure, such as an excess risk of childhood leukemia [8], cardiovascular disease [9], esophageal dysphagia [110], Alzheimer’s dementia [36], hypertension [32, 111, 112], and type 2 diabetes [113•]. Despite the potential weaknesses of these studies due to their nonexperimental design (apart from those on diabetes risk), their results suggest that previous research driven by claims of beneficial health effects of selenium has obscured the detection of adverse effects due to overexposure, and that these adverse effects might occur at much lower exposure levels than previously believed.

Randomized Controlled Trials

Unlike all other toxic elements and most trace elements of nutritional relevance, selenium has been investigated in experimental studies, generally in the form of randomized, controlled, double-blind trials (RCTs) [5, 22•]. The key advantage of this study design over nonexperimental studies is better control of both measured and unmeasured confounding. Unfortunately, RCTs have rarely been implemented in areas known or suspected to have low selenium exposure, with the exception of those aimed at preventing Keshan disease or Kashin-Beck disease [5]. Most RCTs have been conducted in Western populations (mainly in North America), where increased nutritional availability of selenium, even in the absence of overt nutritional deficiency, was envisaged to protect against chronic diseases, particularly cancer, and more rarely other health disturbances such as metabolic abnormalities or thyroid diseases [5]. The key details and locations of the RCTs designed to test the ability of selenium to prevent cancer are shown in Table 2 and Supplemental Fig. S2.

Compared with nonexperimental studies, experimental studies are better able to control for confounding and to reduce the potential for exposure misclassification [129]. However, experimental studies may still be hampered by limitations related to the variability and range of selenium exposure, the specific population considered (in some cases affected by a disease), and the selenium species administered [22•, 26]. In addition, some difficulties arise when attempting to compare the results of experimental studies with those of nonexperimental “environmental” studies, because of differences in both the specific outcomes investigated and the amounts of exposure.

While experimental studies in regions affected by selenium deficiency sought to assess the beneficial effects of the element on Keshan and Kashin-Beck diseases, studies in Western populations typically sought to assess the risk of cancer, particularly prostate cancer, as reviewed elsewhere [22•, 130]. Other endpoints, such as cardiovascular diseases [131, 132], have frequently been included. Unfortunately, high-quality trials on cancer and cardiovascular disease have not been conducted in geographic areas characterized by very low selenium intake, and in the few instances in which low exposure was studied, the methodological quality of the trial was low [22•]. Less frequently, RCTs based on the selective administration of selenium in Western populations have been designed to assess additional health outcomes such as acute illness and septic shock [133, 134], dementia [135•], blood cholesterol levels [136], thyroid function [69, 137], immunity [138], and HIV infection [139,140,141]. Almost all RCTs assessing the risk of cancer, and occasionally cardiovascular disease, have been carried out in the USA [22•, 130], even though US selenium levels tend to be much higher than those in other Western countries, particularly European ones. In fact, NHANES data showed median serum selenium levels in the US population of about 134 μg/L and 193 μg/L in the 2003–2004 and 2011–2012 surveys, respectively [142, 143], while average serum/plasma selenium levels in European populations were generally below 100 μg/L, within the 50–120 μg/L range [18, 127, 144]. The greater interest in performing randomized trials of selenium in the USA, despite the higher average selenium exposure, was likely due to the enthusiasm generated by the promising interim results of the NPC trial [118, 145]. That trial has likely influenced the marked increase in selenium and multivitamin supplementation in the USA [146], despite the lack of clear evidence of a beneficial effect [147, 148].

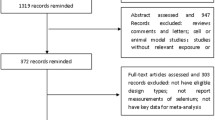

Overall, an evaluation of the RCT results shows that almost all RCTs found no beneficial effect of selenium supplementation on cancer or cardiovascular disease, particularly among the high-quality studies [22•]. One additional small RCT [108] has been published since a recent Cochrane review of selenium supplementation and subsequent cancer incidence [22•], but its results [108] did not change the previously published summary rate ratio (RR). Fig. 1 reports summary RRs for cancer mortality and incidence, including the newly published trial [108], based on all RCTs and on RCTs at low risk of bias.
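Summary RRs of this kind are obtained by pooling trial-level estimates on the log scale. The sketch below shows one common approach, fixed-effect inverse-variance pooling, purely as an illustration; it does not claim to reproduce the model used in the Cochrane review [22•], which may differ (for example, a random-effects model). The function name `pooled_rr` and the example inputs are hypothetical and are not the trial data summarized in Fig. 1.

```python
import math


def pooled_rr(trials):
    """Fixed-effect inverse-variance pooling of rate ratios (illustrative sketch).

    trials: iterable of (rr, ci_low, ci_high) tuples with 95% confidence limits.
    Returns (summary_rr, ci_low, ci_high).
    """
    z = 1.96  # two-sided 95% normal quantile
    sum_w = 0.0
    sum_w_log = 0.0
    for rr, lo, hi in trials:
        # Recover the standard error of log(RR) from the width of the 95% CI.
        se = (math.log(hi) - math.log(lo)) / (2 * z)
        w = 1.0 / se ** 2  # inverse-variance weight
        sum_w += w
        sum_w_log += w * math.log(rr)
    log_rr = sum_w_log / sum_w
    se_pooled = math.sqrt(1.0 / sum_w)
    return (math.exp(log_rr),
            math.exp(log_rr - z * se_pooled),
            math.exp(log_rr + z * se_pooled))


# Hypothetical inputs for illustration only.
example = [(0.95, 0.80, 1.13), (1.02, 0.90, 1.16), (1.10, 0.70, 1.73)]
print(pooled_rr(example))
```

Because each trial is weighted by the inverse of its variance, a small trial with a wide confidence interval receives little weight, which is consistent with one additional small RCT leaving the summary RR essentially unchanged.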

No clear dose-response association between selenium intake and cancer risk has emerged from these trials [22•]. This led not only to the dismissal of claims about any cancer-preventive effect of selenium, but also to concern following the detection of unexpected and, in some cases, serious adverse health effects among selenium-supplemented individuals. Adverse effects ranged from dermatological side effects to diabetes and cancer, namely high-grade prostate cancer [34•, 130, 149]. These adverse effects occurred at exposures much lower than expected, suggesting that the selenium standards and upper limits established to date are inadequate [22•, 26]. The excess risk of high-grade prostate cancer in selenium-supplemented US individuals with the highest background selenium exposure is of particular concern, given growing evidence that some selenium species and selenoproteins are associated with increased cancer risk in laboratory models [150,151,152,153,154,155, 156•, 157, 158] and in some recent cohort studies [159, 160]. The excess risk of diabetes is also of considerable interest, as it has consistently been associated with selenium in both experimental and nonexperimental studies, and at low levels of exposure. In the SELECT trial, the most informative RCT of selenium and cancer, the excess diabetes incidence in the selenium arm influenced the decision to terminate the trial [125, 161].

The adverse health effects of selenium observed in RCTs have raised safety issues for the remaining ongoing RCTs and, more generally, for the safety of selenium exposure and supplementation in humans, leading to warnings about the side effects of supplementation in the absence of selenium deficiency [35, 53, 162,163,164]. It should be noted that the selenium levels associated with adverse effects in these RCTs are within the range of exposure of the general population in several Western countries [22•].

Biomarkers of Exposure

The search for biomarkers of selenium exposure has long attracted investigators seeking indicators of short-term and particularly long-term intake, as well as indicators of a range of exposures permitting the evaluation of harmful and beneficial effects of this element [18, 161, 165, 166].

The most commonly used biomarker of selenium exposure has been serum or plasma selenium levels, and far less frequently whole blood selenium or erythrocyte selenium content [18, 22•, 161]. Whole blood selenium levels, however, can be more difficult to interpret, as whole blood comprises both cellular and non-cellular constituents with specific and non-specific components, and few studies have investigated inter-individual heterogeneity in whole blood selenium [166]. In addition, cellular (erythrocyte-bound) selenium levels appear to be less responsive than plasma/serum selenium to changes in dietary intake of the element, making it more difficult to compare whole blood selenium levels across individuals [18]. Plasma/serum selenium tends to reflect exposure over the previous few days to weeks, and also has the advantage of allowing speciation analysis, an approach that is becoming much more common and relevant. As previously mentioned, this follows the growing awareness of the distinct biological properties of the various selenium species [36, 79, 90, 100, 167]. A recent study indicates that total selenium content in serum correlates with the levels of only three selenium species (serum albumin-bound selenium, selenocysteine, and glutathione-peroxidase-bound selenium), whereas no such correlation exists for the other chemical forms of the element [90]. Therefore, the most commonly used biomarker of selenium exposure in epidemiologic studies, total plasma/serum selenium content, may be inadequate to assess circulating levels of some species of the metalloid. In addition, serum selenium species vary according to diet composition [6], because of either differences in the selenium species contained in different foods or metabolic factors [168, 169]. Several other indicators have been proposed and adopted in both nonexperimental and experimental studies, in particular nail and hair selenium levels [18, 38].
These biomarkers have the substantial advantage of reflecting longer-term exposure than plasma/serum selenium levels, and they are considerably more suitable for epidemiologic research and clinical screening, since their collection is less invasive and better tolerated by study participants. However, the ability of hair and nail measures to reflect actual exposure to selenium has been challenged on the basis of their low correlation with both blood selenium levels and dietary selenium intake in some studies, even though these measures appear to indicate substantially stable selenium exposure over time [22•, 56, 170]. This might also be due to a tendency of some tissues to preferentially accumulate some selenium species, generally the organic ones, over other chemical forms, also depending on exposure to other factors such as methionine and heavy metals [3•, 161, 171,172,173,174]. However, although there is some evidence of differential storage of selenium species in the nails and other body tissues and compartments (such as hair and urine), data on these relevant issues remain limited [90, 161, 170, 172, 175]. Nails and hair also appear to be unsuitable for speciation analysis, both because of difficulties in the extraction procedures and because some selenium species (such as the inorganic ones) may be less likely to be incorporated into these tissues than other selenium forms [170].

Urinary selenium levels have also been proposed as a suitable marker of selenium exposure, but their reliability as a biomarker has not been well studied [165]. In addition, urinary selenium levels appear to be an indicator of recent intake of the metalloid rather than of long-term exposure [165, 176]. Overall, these findings confirm that exposure misclassification is a major issue in human selenium research, regardless of the biomarker adopted to assess selenium status [161, 177], particularly when speciation analysis is not included in the assessment. This greatly hampers exposure assessment in living organisms and represents a source of bias in epidemiologic studies.
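One consequence of exposure misclassification can be illustrated with a short simulation. The sketch below assumes classical, nondifferential measurement error in a continuous biomarker (the measured value equals true exposure plus independent random noise), a simplifying assumption that will not hold for every selenium biomarker. Under this assumption the estimated exposure-outcome slope is attenuated toward zero by the factor var(X) / (var(X) + var(U)); here both variances are set to 1, so the observed slope should be roughly half the true one. All values and names are hypothetical.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


random.seed(1)
n = 20000
true_beta = 0.5  # hypothetical effect per unit of true exposure

# True exposure with var(X) = 1 and an outcome that depends on it.
true_exposure = [random.gauss(0.0, 1.0) for _ in range(n)]
outcome = [true_beta * x + random.gauss(0.0, 1.0) for x in true_exposure]

# Measured biomarker = true exposure + independent error with var(U) = 1.
biomarker = [x + random.gauss(0.0, 1.0) for x in true_exposure]

slope_true = ols_slope(true_exposure, outcome)
slope_obs = ols_slope(biomarker, outcome)
# slope_true should be close to 0.5; slope_obs close to 0.5 * 1 / (1 + 1) = 0.25.
print(round(slope_true, 3), round(slope_obs, 3))
```

Differential error, for example when the error in the biomarker depends on disease status, can bias estimates in either direction, so attenuation toward the null is only the simplest case.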

More recently, a growing number of studies have used an additional, highly specific biomarker of selenium exposure, cerebrospinal fluid (CSF) selenium levels, though this indicator is clearly unsuitable for population-based studies [36, 98, 100, 167]. This indicator is unique in allowing in vivo biomonitoring of selenium levels in the central nervous system, which may be relevant given the potential involvement of selenium in neurological disease [4•, 36, 97]. In addition, it allows speciation analysis [36•, 89, 96,97,98, 100, 167, 178]. However, blood and CSF levels of some selenium species are uncorrelated, so peripheral indicators of selenium exposure, such as blood levels, are not ideal for assessing the corresponding exposure in the central nervous system [89, 100, 167, 179].

Proteomic analysis based on measuring the induction of selenoprotein synthesis is another widely used approach to assess selenium exposure [3•, 18, 144, 165, 168]. Selenoproteins are proteins that contain at least one residue of the amino acid selenocysteine and generally serve oxidoreductase functions, though their exact pathophysiological roles are still partially unclear and debated [27, 180,181,182,183]. The maximal expression of selenoproteins, such as plasma levels of selenium-dependent glutathione peroxidase GPX1 and of selenoprotein P, has generally been considered an indicator of adequate selenium intake through diet and other sources [3•, 18]. This is based on the hypothesis that lower levels of selenoproteins reflect insufficient bioavailability of selenium due to inadequate intake. Therefore, most agencies have based their dietary reference values for selenium on the intake needed to maximize selenoprotein expression [3•, 26], with values ranging from 55 to 70 μg/day (Fig. 2) [25, 26]. However, this approach to assessing selenium dietary requirements has been challenged [3•, 26], since it has been suggested that the selenium-induced maximization of antioxidant enzyme synthesis, including but not limited to selenoproteins, may derive from the pro-oxidant properties of selenium species [99, 188,189,190,191,192], as long recognized [193]. Accordingly, even in the absence of any change in selenium supply, the induction of oxidative stress by several environmental stressors may increase selenoprotein synthesis. These observations suggest that basal selenoprotein levels are not a direct sign of inadequate selenium availability, since their levels can be induced as part of the physiological response to stress. Therefore, “low” levels of these selenoproteins should not be confused with selenium deficiency per se [194], since the higher levels used as a reference may themselves be attributable to the pro-oxidant properties of the element [3•].
Phylogenetic analysis of selenium utilization in mammals and lower animals also raises questions about the need to maximize selenoprotein expression [195]. In addition, environmental studies have shown that changes in selenium exposure are unrelated to changes in selenium-containing glutathione peroxidase levels [43, 46]. Finally, the available epidemiologic evidence provides little if any demonstration of adverse health effects attributable to inadequate selenoprotein synthesis [26, 80]. Therefore, the approach taken by WHO/FAO in setting dietary reference values for selenium, i.e., 26 μg/day for females and 34 μg/day for males, may be reasonable, since it is not aimed at maximizing selenoprotein expression.

Fig. 2 Selenium standards issued by different countries and authorities worldwide, including the acceptable daily intakes/recommended dietary allowances and upper limits, and the lowest-observed-effect levels from environmental and experimental human studies, to which uncertainty factors of 3 and 10 are applied. Abbreviations: D-A-CH, German, Austrian and Swiss Nutrition Societies; EFSA, European Food Safety Authority; JAPAN, Japanese National Institute of Health and Nutrition; NORDIC, Nordic Nutrition Recommendations; SINU, Italian Nutrition Society; IOM, Institute of Medicine; WHO, World Health Organization. Adapted from references [25, 34•, 48, 108, 114, 115, 125, 126, 184,185,186,187]

Overall, proteomic indicators such as selenoprotein expression may be inappropriate for assessing the adequacy of selenium exposure [26, 80]. This is also true for the use of selenoproteins to assess selenium overexposure: the highest levels of these proteins may already reflect overexposure to selenium, but they cannot reflect any further increase in exposure, because these proteins reach a plateau in serum or plasma at selenium intakes of around 70 μg/day, depending on the specific selenoproteins (and selenium species) involved. Selenoprotein expression therefore appears to be an inadequate tool to assess and monitor selenium exposure, in cases of both deficient and excess exposure, and to identify the chemical forms of the element to which humans have been exposed. It should also be noted that proteins other than selenoproteins have been shown to be affected (i.e., upregulated or downregulated) by selenium exposure [196,197,198,199,200]. However, the pathophysiological mechanisms underlying this relation, and the suitability and specificity of these proteins for monitoring exposure to selenium compounds, have not been elucidated.
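The plateau argument can be made concrete with a deliberately simplified model. The sketch below assumes, hypothetically, that selenoprotein expression rises linearly with intake and saturates at about 70 μg/day, the approximate plateau mentioned above; real dose-response curves are smoother and vary by selenoprotein and selenium species. The function name and the linear shape are illustrative assumptions, not a published model.

```python
def selenoprotein_fraction(intake_ug_per_day, plateau_intake=70.0):
    """Fraction of maximal selenoprotein expression under a hypothetical
    piecewise-linear saturation model (intake in μg/day)."""
    if intake_ug_per_day < 0:
        raise ValueError("intake must be non-negative")
    # Linear rise below the plateau intake, flat (saturated) above it.
    return min(intake_ug_per_day / plateau_intake, 1.0)


for intake in (20, 55, 70, 100, 300):
    print(intake, round(selenoprotein_fraction(intake), 2))
```

Under this model, intakes of 100 and 300 μg/day yield the same biomarker value as 70 μg/day, so the marker cannot distinguish among them, even though dose-dependent thyroid effects were reported across supplemental doses of 100 to 300 μg/day in the Danish trial discussed earlier.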

To overcome some of the inherent limitations of biomarkers in assessing selenium intake, assessment of the selenium content of the usual diet (e.g., via a semi-quantitative food frequency questionnaire) and of other relevant sources of exposure, such as ambient air, has been proposed. However, the validity and reliability of dietary assessment methods have also long been debated, with most studies supporting the validity of this approach but others contradicting it [18, 22•, 161, 165]. The main advantage of assessing the dietary content of the metalloid is the possibility of estimating intake of selenium species independently of their subsequent metabolism and excretion in the body, which are known to be influenced by individual characteristics and other factors. Conversely, this approach is limited by the variability of selenium content in foodstuffs over space and time [18, 22•, 144, 169], by the difficulties in assessing dietary habits, and by limited knowledge of selenium species and their bioavailability in foodstuffs [6, 168, 201]. Assessment of selenium exposure due to ambient air pollution would also be an attractive approach, but evidence for its feasibility and reliability is still very limited, though tobacco smoking and outdoor air pollution from motorized traffic or coal combustion appear to be sources of selenium exposure, the latter potentially linked to adverse health effects [7,8,9].
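A food frequency questionnaire estimate is typically computed by multiplying, for each food item, the reported consumption frequency by a standard portion size and by the item's selenium concentration from a food composition table, then summing over items. The sketch below assumes this simple multiplicative model; the `FoodItem` class, the food items, portion sizes, and concentrations are all hypothetical placeholders, not measured composition data.

```python
from dataclasses import dataclass


@dataclass
class FoodItem:
    name: str
    servings_per_day: float  # derived from the questionnaire frequency response
    portion_g: float         # standard portion size assigned to the item
    se_ug_per_g: float       # selenium concentration from a composition table


def daily_selenium_intake(items):
    """Estimated usual selenium intake (μg/day): the sum over items of
    frequency x portion size x selenium concentration."""
    return sum(i.servings_per_day * i.portion_g * i.se_ug_per_g for i in items)


# Placeholder values for illustration only.
diet = [
    FoodItem("fish", servings_per_day=2 / 7, portion_g=150, se_ug_per_g=0.40),
    FoodItem("meat", servings_per_day=5 / 7, portion_g=120, se_ug_per_g=0.15),
    FoodItem("bread", servings_per_day=3.0, portion_g=50, se_ug_per_g=0.05),
]
print(round(daily_selenium_intake(diet), 1))
```

Using a single fixed concentration per item is precisely where the spatial and temporal variability of selenium in foodstuffs enters as error, and the model says nothing about which selenium species are consumed unless species-specific composition data are available.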

Risk Assessment of Selenium: Facts, Uncertainties, and Challenges

The aforementioned uncertainties about the health effects and suitable biomarkers of selenium exposure explain why standards for selenium exposure, with reference to both adequate daily intakes and upper limits of intake, are inconsistent across countries and agencies. A summary of recommendations from various authorities is given in Figs. 2 and 3. Figure 2 reports a comparative analysis of the environmental standards and nutritional recommendations, in terms of both recommended dietary intakes and LOELs (lowest observed effect levels). In addition, it shows the levels at which human studies, both nonexperimental (environmental) and experimental, have found adverse effects, and applies an uncertainty factor of either 3 or 10 to these levels to derive safe upper limits of selenium intake.
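The derivation of safe upper limits described for Fig. 2 amounts to dividing a LOEL by an uncertainty factor. The sketch below shows only this arithmetic; the LOEL value is hypothetical and is not taken from any of the cited studies. Whether a LOEL refers to the supplemental dose alone or to total intake (background diet plus supplement) is a modeling choice the sketch leaves to the caller.

```python
def safe_upper_limit(loel_ug_per_day, uncertainty_factor):
    """Safe upper intake limit (μg/day) = LOEL / uncertainty factor."""
    if uncertainty_factor <= 0:
        raise ValueError("uncertainty factor must be positive")
    return loel_ug_per_day / uncertainty_factor


hypothetical_loel = 300.0  # μg/day; illustrative value only
for uf in (3, 10):
    limit = safe_upper_limit(hypothetical_loel, uf)
    print(f"UF = {uf}: upper limit = {limit:.0f} μg/day")
```

The tenfold factor yields a limit more than three times lower than the threefold factor, so the choice of uncertainty factor can matter as much as the LOEL itself when comparing derived limits with existing upper limits.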

The overall picture that emerges from these figures is the variability of current standards for recommended dietary intakes, with consequent implications for assessing the safety of selenium exposure, with regard to both deficiency and excess of this element, in a substantial part of the world population. In addition, the comparison between the safe upper limits of selenium exposure suggested by the most recent epidemiologic studies (obtained by applying an uncertainty factor to the LOELs) and the upper limits set in even the most recent assessments reveals inconsistencies that call for their reassessment. This further highlights the potential pitfalls of using a proteomic approach based on selenium-driven selenoprotein upregulation to assess selenium adequacy.

In addition to the conflicting results and uncertainties arising from the aforementioned patterns, Fig. 3 shows the different drinking water standards adopted worldwide [26, 57]. These standards vary widely, by a factor of 50 between the lowest (1 μg/L, applied in Russia) and the highest (50 μg/L, issued by the US EPA). The European Union and French ANSES standards (10 μg/L) lie at the lower end of the distribution. However, most of these standards have been based on a clearly inadequate assessment of human data, and there is concern about the health effects of selenium exposure at around 10 μg/L and above [37, 57, 202, 203]. Although unusually high levels of selenium in groundwater and drinking water may occur throughout the world and are being increasingly detected [57, 68, 203,204,205,206,207,208,209], the number of individuals exposed to high selenium levels through drinking water is unknown, even in the USA and European countries. This mainly reflects the still limited information on the distribution of selenium levels in groundwater and tap water, which may be uneven across different wells and locations even within small areas [57, 93]. Such situations deserve further investigation, both to gain insight into the disease risks of selenium in drinking water and to protect individuals at risk of selenium overexposure through this source.
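The spread of drinking water standards quoted above can be checked directly, and it can also be expressed as the daily intake each standard would permit from water alone. The Python sketch below uses the concentrations given in the text; the 2 L/day adult consumption figure is an assumption (a common default in drinking water risk assessment, not a value from this review), and the function names are hypothetical.

```python
# Drinking water selenium standards quoted in the text (μg/L).
STANDARDS_UG_PER_L = {
    "Russia": 1.0,
    "European Union": 10.0,
    "France (ANSES)": 10.0,
    "US EPA": 50.0,
}

# Assumption: 2 L/day adult drinking water consumption (not stated in the text).
DAILY_WATER_L = 2.0


def fold_range(standards: dict[str, float]) -> float:
    """Ratio between the most permissive and the most stringent standard."""
    values = list(standards.values())
    return max(values) / min(values)


def implied_intake_ug_per_day(conc_ug_per_l: float, litres_per_day: float = DAILY_WATER_L) -> float:
    """Selenium intake from water alone if water is drunk at the given concentration."""
    return conc_ug_per_l * litres_per_day


if __name__ == "__main__":
    print(f"fold range: {fold_range(STANDARDS_UG_PER_L):g}")
    for name, conc in STANDARDS_UG_PER_L.items():
        print(f"{name}: {conc:g} μg/L -> {implied_intake_ug_per_day(conc):g} μg/day")
```

Under this assumption, water at the US EPA limit alone would supply an amount comparable to or larger than typical dietary intakes of a few dozen micrograms per day, whereas water at the Russian limit would contribute only marginally.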

More generally, as far as selenium exposure limits and recommendations for both diet and drinking water are concerned, the available evidence suggests that more conservative standards should be considered [26, 57]. Finally, we believe that an in-depth assessment of the underlying scientific evidence is required, one that takes into account the different selenium species and their potential effects on human health.

Conclusions

Based on epidemiologic studies, and particularly on the high-quality human data recently generated by the trials, we recommend a comprehensive and updated assessment of the safety of both deficient and toxic exposure to selenium species, supported by an in-depth review of the biochemical and toxicological literature. Such an assessment should take into account recent literature emphasizing the toxic and pro-oxidant properties of the various chemical forms of selenium [31, 190, 191], which raises questions about using selenoprotein upregulation to assess the adequacy of selenium intake [3•, 26]. Particular attention should be given to the recent epidemiologic evidence indicating adverse effects of low-dose selenium overexposure [26, 34•, 35, 54•]. A comprehensive assessment of the health effects of deficient and excess selenium exposure should also address neurological disease, alongside other diseases, taking into account the most recent epidemiologic and laboratory studies and the potential involvement of genetic factors [4•, 33, 36, 53, 54•, 58, 91, 97, 98, 101, 167•, 210,211,212,213,214].

Overall, such an assessment may advance our knowledge of the human health effects of selenium, improve the risk assessment of selenium exposure, and help improve and harmonize the conflicting standards and reference values recommended worldwide. This may allow scientists and public health professionals to identify even subtle conditions of deficient and excess exposure, thereby helping to ensure the safety of human exposure to selenium compounds globally.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance

Brigelius-Flohe R, Flohe L. Selenium and redox signaling. Arch Biochem Biophys. 2017;617:48–59.

Hatfield D, Carlson BA, Tsuji P, Tobe R, Gladyshev VN. Selenium and cancer. In: Collins JF, editor. Molecular, genetic, and nutritional aspects of major and trace minerals. Elsevier: Academic Press; 2016.

• Jablonska E, Vinceti M. Selenium and human health: witnessing a Copernican revolution? J Environ Sci Health C Environ Carcinog Ecotoxicol Rev. 2015;33:328–68. This paper summarizes the most recent advances in epidemiologic and biochemical research on selenium and how they relate to each other.

• Oliveira CS, Piccoli BC, Aschner M, Rocha JBT. Chemical speciation of selenium and mercury as determinant of their neurotoxicity. Adv Neurobiol. 2017;18:53–83. This paper provides an overview of the relevance of speciation analysis in selenium and mercury research.

Vinceti M, Burlingame B, Filippini T, Naska A, Bargellini A, Borella P. The epidemiology of selenium and human health. In: Hatfield D, Schweizer U, Gladyshev VN, editors. Selenium: its molecular biology and role in human health. 4th edition. New York: Springer Science+Business Media; 2016. p. 365–76.

Filippini T, Michalke B, Wise LA, Malagoli C, Malavolti M, Vescovi L, et al. Diet composition and serum levels of selenium species: a cross-sectional study. Food Chem Toxicol. 2018;115:482–90.

Bogden JD, Kemp FW, Buse M, Thind IS, Louria DB, Forgacs J, et al. Composition of tobaccos from countries with high or low incidence of lung cancer. I. Selenium, polonium-210, Alternaria, tar, and nicotine. J Natl Cancer Inst. 1981;66:27–31.

Heck JE, Park AS, Qiu J, Cockburn M, Ritz B. Risk of leukemia in relation to exposure to ambient air toxics in pregnancy and early childhood. Int J Hyg Environ Health. 2014;217:662–8.

Ito K, Mathes R, Ross Z, Nadas A, Thurston G, Matte T. Fine particulate matter constituents associated with cardiovascular hospitalizations and mortality in New York City. Environ Health Perspect. 2011;119:467–73.

Hu J, Sun Q, He H. Thermal effects from the release of selenium from a coal combustion during high-temperature processing: a review. Environ Sci Pollut Res Int. 2018;25:13470–8.

Santos MD, Flores Soares MC, Martins Baisch PR, Muccillo Baisch AL, Rodrigues da Silva Junior FM. Biomonitoring of trace elements in urine samples of children from a coal-mining region. Chemosphere. 2018;197:622–6.

Zeng X, Liu Y, You S, Zeng G, Tan X, Hu X, et al. Spatial distribution, health risk assessment and statistical source identification of the trace elements in surface water from the Xiangjiang River, China. Environ Sci Pollut Res Int. 2015;22:9400–12.

Goen T, Schaller B, Jager T, Brau-Dumler C, Schaller KH, Drexler H. Biological monitoring of exposure and effects in workers employed in a selenium-processing plant. Int Arch Occup Environ Health. 2015;88:623–30.

Andrews KW, Roseland JM, Gusev PA, Palachuvattil J, Dang PT, Savarala S, et al. Analytical ingredient content and variability of adult multivitamin/mineral products: National estimates for the Dietary Supplement Ingredient database. Am J Clin Nutr. 2017;105:526–39.

Berendsen AAM, van Lieshout L, van den Heuvel E, Matthys C, Peter S, de Groot L. Conventional foods, followed by dietary supplements and fortified foods, are the key sources of vitamin D, vitamin B6, and selenium intake in Dutch participants of the NU-AGE study. Nutr Res. 2016;36:1171–81.

Kubachka KM, Hanley T, Mantha M, Wilson RA, Falconer TM, Kassa Z, et al. Evaluation of selenium in dietary supplements using elemental speciation. Food Chem. 2017;218:313–20.

Brozmanova J, Manikova D, Vlckova V, Chovanec M. Selenium: a double-edged sword for defense and offence in cancer. Arch Toxicol. 2010;84:919–38.

Fairweather-Tait SJ, Bao Y, Broadley MR, Collings R, Ford D, Hesketh JE, et al. Selenium in human health and disease. Antioxid Redox Signal. 2011;14:1337–83.

Fordyce F. Selenium geochemistry and health. Ambio. 2007;36:94–7.

Lee KH, Jeong D. Bimodal actions of selenium essential for antioxidant and toxic pro-oxidant activities: the selenium paradox (review). Mol Med Rep. 2012;5:299–304.

Navarro-Alarcon M, Cabrera-Vique C. Selenium in food and the human body: a review. Sci Total Environ. 2008;400:115–41.

• Vinceti M, Filippini T, Del Giovane C, Dennert G, Zwahlen M, Brinkman M, et al. Selenium for preventing cancer. Cochrane Database Syst Rev. 2018;1:CD005195. This review summarizes the effect of selenium on human cancer risk through a systematic review and meta-analysis of experimental and nonexperimental human studies with longitudinal design.

Jones GD, Droz B, Greve P, Gottschalk P, Poffet D, McGrath SP, et al. Selenium deficiency risk predicted to increase under future climate change. Proc Natl Acad Sci U S A. 2017;114:2848–53.

Rayman MP. The argument for increasing selenium intake. Proc Nutr Soc. 2002;61:203–15.

Vinceti M, Maraldi T, Bergomi M, Malagoli C. Risk of chronic low-dose selenium overexposure in humans: insights from epidemiology and biochemistry. Rev Environ Health. 2009;24:231–48.

Vinceti M, Filippini T, Cilloni S, Bargellini A, Vergoni AV, Tsatsakis A, et al. Health risk assessment of environmental selenium: emerging evidence and challenges. Mol Med Rep. 2017;15:3323–35.

Hatfield DL, Tsuji PA, Carlson BA, Gladyshev VN. Selenium and selenocysteine: roles in cancer, health, and development. Trends Biochem Sci. 2014;39:112–20.

Laporte M, Pavey SA, Rougeux C, Pierron F, Lauzent M, Budzinski H, et al. RAD-sequencing reveals within-generation polygenic selection in response to anthropogenic organic and metal contamination in North Atlantic eels. Mol Ecol. 2016;25:219–37.

Letavayova L, Vlckova V, Brozmanova J. Selenium: from cancer prevention to DNA damage. Toxicology. 2006;227:1–14.

Mazokopakis EE, Liontiris MI. Commentary: Health concerns of Brazil nut consumption. J Altern Complement Med. 2018;24:3–6.

Misra S, Boylan M, Selvam A, Spallholz JE, Bjornstedt M. Redox-active selenium compounds—from toxicity and cell death to cancer treatment. Nutrients. 2015;7:3536–56.

Berthold HK, Michalke B, Krone W, Guallar E, Gouni-Berthold I. Influence of serum selenium concentrations on hypertension: the lipid analytic Cologne cross-sectional study. J Hypertens. 2012;30:1328–35.

Filippini T, Michalke B, Mandrioli J, Tsatsakis AM, Weuve J, Vinceti M. Selenium neurotoxicity and amyotrophic lateral sclerosis: an epidemiologic perspective. In: Michalke B, editor. Selenium. Molecular and integrative toxicology. Springer International Publishing; 2018.

• Kristal AR, Darke AK, Morris JS, Tangen CM, Goodman PJ, Thompson IM, et al. Baseline selenium status and effects of selenium and vitamin E supplementation on prostate cancer risk. J Natl Cancer Inst. 2014;106:djt456. This paper reports the excess high-grade prostate cancer risk experienced by selenium-supplemented participants in the SELECT trial, the most powerful selenium trial.

Stranges S, Marshall JR, Natarajan R, Donahue RP, Trevisan M, Combs GF, et al. Effects of long-term selenium supplementation on the incidence of type 2 diabetes: a randomized trial. Ann Intern Med. 2007;147:217–23.

Vinceti M, Chiari A, Eichmuller M, Rothman KJ, Filippini T, Malagoli C, et al. A selenium species in cerebrospinal fluid predicts conversion to Alzheimer’s dementia in persons with mild cognitive impairment. Alzheimers Res Ther. 2017;9:100.

Cary L, Naveau A, Migeot V, Rabouan S, Charlet L, Foray N, et al. From water-rock interactions to the DNA: a review of selenium issues. Procedia Earth Planet Sci. 2017;17:698–701.

Vinceti M, Wei ET, Malagoli C, Bergomi M, Vivoli G. Adverse health effects of selenium in humans. Rev Environ Health. 2001;16:233–51.

Smith MI, Franke KW, Westfall BB. The selenium problem in relation to public health: a preliminary survey to determine the possibility of selenium intoxication in the rural population living on seleniferous soil. Public Health Rep. 1936;51:1496–505.

Smith MI, Westfall BB. Further field studies on the selenium problem in relation to public health. Public Health Rep. 1937;52:1375–84.

Tsongas TA, Ferguson SW. Human health effects of selenium in a rural Colorado drinking water supply. In: Hemphill DD (ed) Proceedings of “Trace substances in environmental health-XI. A symposium”. University of Missouri, Columbia, pp 30–35. 1977.

Tsongas TA, Ferguson SW. Selenium concentrations in human urine and drinking water. In: Kirchgessner N (ed) Proceedings of "Trace Elements in Man and Animal-3". pp 320–321. 1978.

Valentine JL, Kang HK, Dang PM, Schluchter M. Selenium concentrations and glutathione peroxidase activities in a population exposed to selenium via drinking water. J Toxicol Environ Health. 1980;6:731–6.

Longnecker MP, Taylor PR, Levander OA, Howe M, Veillon C, McAdam PA, et al. Selenium in diet, blood, and toenails in relation to human health in a seleniferous area. Am J Clin Nutr. 1991;53:1288–94.

Valentine JL, S. RL, Kang HK, Schluchter M. Effects on human health of exposure to selenium in drinking water. In: Combs GF, Levander OA, Spallholz JE, Oldfield JE (eds) Proceedings of "Selenium in Biology and Medicine - Part B". Van Nostrand Reinhold Co, New York, pp 675–687. 1987.

Valentine JL, Faraji B, Kang HK. Human glutathione peroxidase activity in cases of high selenium exposures. Environ Res. 1988;45:16–27.

Saint-Amour D, Roy MS, Bastien C, Ayotte P, Dewailly E, Despres C, et al. Alterations of visual evoked potentials in preschool Inuit children exposed to methylmercury and polychlorinated biphenyls from a marine diet. Neurotoxicology. 2006;27:567–78.

Brätter P, Negretti de Brätter VE. Influence of high dietary intake on the thyroid hormone level in human serum. J Trace Elem Med Biol. 1996;10:163–6.

Jaffe WG, Ruphael M, Mondragon MC, Cuevas MA. Estudio clinico y bioquimico en ninos escolares de una zona selenifera. Arch Latinoam Nutr. 1972;22:595–611.

Lemire M, Philibert A, Fillion M, Passos CJ, Guimaraes JR, Barbosa F Jr, et al. No evidence of selenosis from a selenium-rich diet in the Brazilian Amazon. Environ Int. 2012;40:128–36.

Lemire M, Fillion M, Frenette B, Passos CJ, Guimaraes JR, Barbosa F Jr, et al. Selenium from dietary sources and motor functions in the Brazilian Amazon. Neurotoxicology. 2011;32:944–53.

Martens IB, Cardoso BR, Hare DJ, Niedzwiecki MM, Lajolo FM, Martens A, et al. Selenium status in preschool children receiving a Brazil nut-enriched diet. Nutrition. 2015;31:1339–43.

Vinceti M, Ballotari P, Steinmaus C, Malagoli C, Luberto F, Malavolti M, et al. Long-term mortality patterns in a residential cohort exposed to inorganic selenium in drinking water. Environ Res. 2016;150:348–56.

• Vinceti M, Vicentini M, Wise LA, Sacchettini C, Malagoli C, Ballotari P, et al. Cancer incidence following long-term consumption of drinking water with high inorganic selenium content. Sci Total Environ. 2018;635:390–6. This cohort study reports the long-term effects on cancer risk of a naturally occurring exposure to inorganic selenium through drinking water.

Vinceti M, Cann CI, Calzolari E, Vivoli R, Garavelli L, Bergomi M. Reproductive outcomes in a population exposed long-term to inorganic selenium via drinking water. Sci Total Environ. 2000;250:1–7.

Vinceti M, Crespi CM, Malagoli C, Bottecchi I, Ferrari A, Sieri S, et al. A case-control study of the risk of cutaneous melanoma associated with three selenium exposure indicators. Tumori. 2012;98:287–95.

Vinceti M, Crespi CM, Bonvicini F, Malagoli C, Ferrante M, Marmiroli S, et al. The need for a reassessment of the safe upper limit of selenium in drinking water. Sci Total Environ. 2013;443:633–42.

Yang GQ, Wang SZ, Zhou RH, Sun SZ. Endemic selenium intoxication of humans in China. Am J Clin Nutr. 1983;37:872–81.

Keshan Disease Research Group of the Chinese Academy of Medical Sciences. Observations on effect of sodium selenite in prevention of Keshan disease. Chin Med J. 1979;92:471–6.

Johnson CC, Ge X, Green KA, Liu X. Selenium distribution in the local environment of selected villages of the Keshan disease belt, Zhangjiakou District, Hebei Province, People’s Republic of China. Appl Geochem. 2000;15:385–401.

Fordyce FM, Zhan G, Green K, Liu X. Soil, grain and water chemistry in relation to human selenium-responsive diseases in Enshi District, China. Appl Geochem. 2000;15:117–32.

Qin HB, Zhu JM, Liang L, Wang MS, Su H. The bioavailability of selenium and risk assessment for human selenium poisoning in high-Se areas, China. Environ Int. 2013;52:66–74.

Hira CK, Partal K, Dhillon KS. Dietary selenium intake by men and women in high and low selenium areas of Punjab. Public Health Nutr. 2004;7:39–43.

Chawla R, Loomba R, Chaudhary RJ, Singh S, Dhillon KS. Impact of high selenium exposure on organ function and biochemical profile of the rural population living in seleniferous soils in Punjab, India. In: Banuelos GS, Lin Z-Q, Ferreira Moraes M, Guimaraes Guilherme LR, Rodrigues dos Reis A (eds) Global advances in selenium research from theory to application. CRC Press. 2016.

Fordyce FM, Johnson CC, Navaratna UR, Appleton JD, Dissanayake CB. Selenium and iodine in soil, rice and drinking water in relation to endemic goitre in Sri Lanka. Sci Total Environ. 2000;263:127–41.

Hu XF, Eccles KM, Chan HM. High selenium exposure lowers the odds ratios for hypertension, stroke, and myocardial infarction associated with mercury exposure among Inuit in Canada. Environ Int. 2017;102:200–6.

Dinh QT, Cui Z, Huang J, Tran TAT, Wang D, Yang W, et al. Selenium distribution in the Chinese environment and its relationship with human health: a review. Environ Int. 2018;112:294–309.

Du Y, Luo K, Ni R, Hussain R. Selenium and hazardous elements distribution in plant-soil-water system and human health risk assessment of Lower Cambrian, Southern Shaanxi, China. Environ Geochem Health. 2018 (in press).

Winther KH, Bonnema SJ, Cold F, Debrabant B, Nybo M, Cold S, et al. Does selenium supplementation affect thyroid function? Results from a randomized, controlled, double-blinded trial in a Danish population. Eur J Endocrinol. 2015;172:657–67.

Rayman MP, Thompson AJ, Bekaert B, Catterick J, Galassini R, Hall E, et al. Randomized controlled trial of the effect of selenium supplementation on thyroid function in the elderly in the United Kingdom. Am J Clin Nutr. 2008;87:370–8.

Keshan Disease Research Group of the Chinese Academy of Medical Sciences. Epidemiologic studies on the etiologic relationship of selenium and Keshan disease. Chin Med J. 1979;92:477–82.

Cheng YY, Qian PC. The effect of selenium-fortified table salt in the prevention of Keshan disease on a population of 1.05 million. Biomed Environ Sci. 1990;3:422–8.

Zhou H, Wang T, Li Q, Li D. Prevention of Keshan disease by selenium supplementation: a systematic review and meta-analysis. Biol Trace Elem Res. 2018 (in press).

Yang G, Chen J, Wen Z, Ge K, Zhu L, Chen X, et al. The role of selenium in Keshan disease. Adv Nutr Res. 1984;6:203–31.

Wang T, Li Q. Interpretation of selenium deficiency and Keshan disease with causal inference of modern epidemiology. In: The International Selenium Seminar 2015, Moscow, 2015. Organizing Committee of the International Selenium Seminar 2015.

Beck MA, Levander OA, Handy J. Selenium deficiency and viral infection. J Nutr. 2003;133:1463S–7S.

Lei C, Niu X, Ma X, Wei J. Is selenium deficiency really the cause of Keshan disease? Environ Geochem Health. 2011;33:183–8.

Xu GL, Wang SC, Gu BQ, Yang YX, Song HB, Xue WL, et al. Further investigation on the role of selenium deficiency in the aetiology and pathogenesis of Keshan disease. Biomed Environ Sci. 1997;10:316–26.

Cermelli C, Vinceti M, Scaltriti E, Bazzani E, Beretti F, Vivoli G, et al. Selenite inhibition of Coxsackie virus B5 replication: implications on the etiology of Keshan disease. J Trace Elem Med Biol. 2002;16:41–6.

Joint FAO/WHO Expert Consultation on Human Vitamin and Mineral Requirements. Vitamin and mineral requirements in human nutrition. Second ed. Geneva: World Health Organization and Food and Agriculture Organization of the United Nations; 2004.

Ning Y, Wang X, Zhang P, Anatoly SV, Prakash NT, Li C, et al. Imbalance of dietary nutrients and the associated differentially expressed genes and pathways may play important roles in juvenile Kashin-Beck disease. J Trace Elem Med Biol. 2018;50:441–60.

Shi Z, Pan P, Feng Y, Kan Z, Li Z, Wei F. Environmental water chemistry and possible correlation with Kaschin-Beck disease (KBD) in northwestern Sichuan, China. Environ Int. 2017;99:282–92.

Xie D, Liao Y, Yue J, Zhang C, Wang Y, Deng C, et al. Effects of five types of selenium supplementation for treatment of Kashin-Beck disease in children: a systematic review and network meta-analysis. BMJ Open. 2018;8:e017883.

Fan A, Vinceti M. Selenium and its compounds. In: Hamilton & Hardy’s industrial toxicology. 6th ed. Hoboken, NJ: John Wiley & Sons; 2015.

Schiavon M, Pilon-Smits EA. The fascinating facets of plant selenium accumulation—biochemistry, physiology, evolution and ecology. New Phytol. 2016.

Weekley CM, Harris HH. Which form is that? The importance of selenium speciation and metabolism in the prevention and treatment of disease. Chem Soc Rev. 2013;42:8870–94.

Dolgova NV, Hackett MJ, MacDonald TC, Nehzati S, James AK, Krone PH, et al. Distribution of selenium in zebrafish larvae after exposure to organic and inorganic selenium forms. Metallomics. 2016;8:305–12.

Maraldi T, Riccio M, Zambonin L, Vinceti M, De Pol A, Hakim G. Low levels of selenium compounds are selectively toxic for a human neuron cell line through ROS/RNS increase and apoptotic process activation. Neurotoxicology. 2011;32:180–7.

Solovyev N, Berthele A, Michalke B. Selenium speciation in paired serum and cerebrospinal fluid samples. Anal Bioanal Chem. 2013;405:1875–84.

Vinceti M, Grill P, Malagoli C, Filippini T, Storani S, Malavolti M, et al. Selenium speciation in human serum and its implications for epidemiologic research: a cross-sectional study. J Trace Elem Med Biol. 2015;31:1–10.

Vinceti M, Mandrioli J, Borella P, Michalke B, Tsatsakis A, Finkelstein Y. Selenium neurotoxicity in humans: bridging laboratory and epidemiologic studies. Toxicol Lett. 2014;230:295–303.

Vinceti M, Guidetti D, Pinotti M, Rovesti S, Merlin M, Vescovi L, et al. Amyotrophic lateral sclerosis after long-term exposure to drinking water with high selenium content. Epidemiology. 1996;7:529–32.

Vinceti M, Bonvicini F, Rothman KJ, Vescovi L, Wang F. The relation between amyotrophic lateral sclerosis and inorganic selenium in drinking water: a population-based case-control study. Environ Health. 2010;9:77.

Gobi N, Vaseeharan B, Rekha R, Vijayakumar S, Faggio C. Bioaccumulation, cytotoxicity and oxidative stress of the acute exposure selenium in Oreochromis mossambicus. Ecotoxicol Environ Saf. 2018;162:147–59.

Kim JH, Kang JC. Oxidative stress, neurotoxicity, and non-specific immune responses in juvenile red sea bream, Pagrus major, exposed to different waterborne selenium concentrations. Chemosphere. 2015;135:46–52.

Maass F, Lingor P. Bioelemental patterns in the cerebrospinal fluid as potential biomarkers for neurodegenerative disorders. Neural Regen Res. 2018;13:1356–7.

Maass F, Michalke B, Leha A, Boerger M, Zerr I, Koch JC, et al. Elemental fingerprint as a cerebrospinal fluid biomarker for the diagnosis of Parkinson’s disease. J Neurochem. 2018;145:342–51.

Mandrioli J, Michalke B, Solovyev N, Grill P, Violi F, Lunetta C, et al. Elevated levels of selenium species in cerebrospinal fluid of amyotrophic lateral sclerosis patients with disease-associated gene mutations. Neurodegener Dis. 2017;17:171–80.

Naderi M, Salahinejad A, Ferrari MCO, Niyogi S, Chivers DP. Dopaminergic dysregulation and impaired associative learning behavior in zebrafish during chronic dietary exposure to selenium. Environ Pollut. 2018;237:174–85.

Vinceti M, Solovyev N, Mandrioli J, Crespi CM, Bonvicini F, Arcolin E, et al. Cerebrospinal fluid of newly diagnosed amyotrophic lateral sclerosis patients exhibits abnormal levels of selenium species including elevated selenite. Neurotoxicology. 2013;38:25–32.

Amoros R, Murcia M, Ballester F, Broberg K, Iniguez C, Rebagliato M, et al. Selenium status during pregnancy: influential factors and effects on neuropsychological development among Spanish infants. Sci Total Environ. 2018;610-611:741–9.

Cardoso BR, Roberts BR, Bush AI, Hare DJ. Selenium, selenoproteins and neurodegenerative diseases. Metallomics. 2015;7:1213–28.

Oken E, Rifas-Shiman SL, Amarasiriwardena C, Jayawardene I, Bellinger DC, Hibbeln JR, et al. Maternal prenatal fish consumption and cognition in mid childhood: mercury, fatty acids, and selenium. Neurotoxicol Teratol. 2016;57:71–8.

Skroder H, Kippler M, Tofail F, Vahter M. Early-life selenium status and cognitive function at 5 and 10 years of age in Bangladeshi children. Environ Health Perspect. 2017;125:117003.

Wasserman GA, Liu X, Parvez F, Chen Y, Factor-Litvak P, LoIacono NJ, et al. A cross-sectional study of water arsenic exposure and intellectual function in adolescence in Araihazar, Bangladesh. Environ Int. 2018;118:304–13.

Yang X, Yu X, Fu H, Li L, Ren T. Different levels of prenatal zinc and selenium had different effects on neonatal neurobehavioral development. Neurotoxicology. 2013;37:35–9.

Hughes DJ, Duarte-Salles T, Hybsier S, Trichopoulou A, Stepien M, Aleksandrova K, et al. Prediagnostic selenium status and hepatobiliary cancer risk in the European Prospective Investigation into Cancer and Nutrition cohort. Am J Clin Nutr. 2016;104:406–14.

Rayman MP, Winther KH, Pastor-Barriuso R, Cold F, Thvilum M, Stranges S, et al. Effect of long-term selenium supplementation on mortality: results from a multiple-dose, randomised controlled trial. Free Radic Biol Med. 2018 (in press).

Combs GF Jr. Selenium in global food systems. Br J Nutr. 2001;85:517–47.

Pritchett NR, Burgert SL, Murphy GA, Brockman JD, White RE, Lando J, et al. Cross sectional study of serum selenium concentration and esophageal squamous dysplasia in western Kenya. BMC Cancer. 2017;17:835.

Su L, Jin Y, Unverzagt FW, Liang C, Cheng Y, Hake AM, et al. Longitudinal association between selenium levels and hypertension in a rural elderly Chinese cohort. J Nutr Health Aging. 2016;20:983–8.

Wu G, Li Z, Ju W, Yang X, Fu X, Gao X. Cross-sectional study: relationship between serum selenium and hypertension in the Shandong Province of China. Biol Trace Elem Res. 2018;185:295–301.

• Vinceti M, Filippini T, Rothman KJ. Selenium exposure and the risk of type 2 diabetes: a systematic review and meta-analysis. Eur J Epidemiol. 2018;33:789–810. This review provides an updated and comprehensive dose-response assessment of experimental and nonexperimental studies about selenium and type 2 diabetes risk.

Duffield-Lillico AJ, Reid ME, Turnbull BW, Combs GF Jr, Slate EH, Fischbach LA, et al. Baseline characteristics and the effect of selenium supplementation on cancer incidence in a randomized clinical trial: a summary report of the Nutritional Prevention of Cancer Trial. Cancer Epidemiol Biomark Prev. 2002;11:630–9.

Duffield-Lillico AJ, Slate EH, Reid ME, Turnbull BW, Wilkins PA, Combs GF Jr, et al. Selenium supplementation and secondary prevention of nonmelanoma skin cancer in a randomized trial. J Natl Cancer Inst. 2003;95:1477–81.

Reid ME, Duffield-Lillico AJ, Slate E, Natarajan N, Turnbull B, Jacobs E, et al. The nutritional prevention of cancer: 400 mcg per day selenium treatment. Nutr Cancer. 2008;60:155–63.

Dreno B, Euvrard S, Frances C, Moyse D, Nandeuil A. Effect of selenium intake on the prevention of cutaneous epithelial lesions in organ transplant recipients. Eur J Dermatol. 2007;17:140–5.

Lippman SM, Klein EA, Goodman PJ, Lucia MS, Thompson IM, Ford LG, et al. Effect of selenium and vitamin E on risk of prostate cancer and other cancers: the Selenium and Vitamin E Cancer Prevention Trial (SELECT). JAMA. 2009;301:39–51.

Klein EA, Thompson IM Jr, Tangen CM, Crowley JJ, Lucia MS, Goodman PJ, et al. Vitamin E and the risk of prostate cancer: the Selenium and Vitamin E Cancer Prevention Trial (SELECT). JAMA. 2011;306:1549–56.

Lance P, Alberts DS, Thompson PA, Fales L, Wang F, San Jose J, et al. Colorectal adenomas in participants of the SELECT randomized trial of selenium and vitamin E for prostate cancer prevention. Cancer Prev Res (Phila). 2017;10:45–54.

Lotan Y, Goodman PJ, Youssef RF, Svatek RS, Shariat SF, Tangen CM, et al. Evaluation of vitamin E and selenium supplementation for the prevention of bladder cancer in SWOG coordinated SELECT. J Urol. 2012;187:2005–10.

Lubinski J, Jaworska K, Durda K, Jakubowska A, Huzarski T, Byrski T, et al. Selenium and the risk of cancer in BRCA1 carriers. Hered Cancer Clin Pract. 2011;9(Suppl 2):A5.

Marshall JR, Tangen CM, Sakr WA, Wood DP Jr, Berry DL, Klein EA, et al. Phase III trial of selenium to prevent prostate cancer in men with high-grade prostatic intraepithelial neoplasia: SWOG S9917. Cancer Prev Res (Phila). 2011;4:1761–9.

Algotar AM, Stratton MS, Ahmann FR, Ranger-Moore J, Nagle RB, Thompson PA, et al. Phase 3 clinical trial investigating the effect of selenium supplementation in men at high-risk for prostate cancer. Prostate. 2013;73:328–35.

Karp DD, Lee SJ, Keller SM, Wright GS, Aisner S, Belinsky SA, et al. Randomized, double-blind, placebo-controlled, phase III chemoprevention trial of selenium supplementation in patients with resected stage I non-small-cell lung cancer: ECOG 5597. J Clin Oncol. 2013;31:4179–87.

Thompson PA, Ashbeck EL, Roe DJ, Fales L, Buckmeier J, Wang F, et al. Selenium supplementation for prevention of colorectal adenomas and risk of associated type 2 diabetes. J Natl Cancer Inst. 2016;108:djw152.

Haldimann M, Venner TY, Zimmerli B. Determination of selenium in the serum of healthy Swiss adults and correlation to dietary intake. J Trace Elem Med Biol. 1996;10:31–45.

Higgins JP, Green S. Cochrane handbook for systematic reviews of interventions 5.1.0 [updated March 2011]. Cochrane Collaboration; 2011.

Rothman KJ. Six persistent research misconceptions. J Gen Intern Med. 2014;29:1060–4.

Vinceti M, Filippini T, Cilloni S, Crespi CM. The epidemiology of selenium and human cancer. Adv Cancer Res. 2017;136:1–48.

Brigo F, Storti M, Lochner P, Tezzon F, Nardone R. Selenium supplementation for primary prevention of cardiovascular disease: proof of no effectiveness. Nutr Metab Cardiovasc Dis. 2014;24:e2–3.

Rees K, Hartley L, Day C, Flowers N, Clarke A, Stranges S. Selenium supplementation for the primary prevention of cardiovascular disease. Cochrane Database Syst Rev. 2013;1:CD009671.

Kong Z, Wang F, Ji S, Deng X, Xia Z. Selenium supplementation for sepsis: a meta-analysis of randomized controlled trials. Am J Emerg Med. 2013;31:1170–5.

Manzanares W, Lemieux M, Elke G, Langlois PL, Bloos F, Heyland DK. High-dose intravenous selenium does not improve clinical outcomes in the critically ill: a systematic review and meta-analysis. Crit Care. 2016;20:356.

• Kryscio RJ, Abner EL, Caban-Holt A, Lovell M, Goodman P, Darke AK, et al. Association of antioxidant supplement use and dementia in the prevention of Alzheimer’s disease by vitamin E and selenium trial (PREADViSE). JAMA Neurol. 2017;74:567–73. This paper reports the lack of effects of selenium supplementation in the prevention of Alzheimer’s dementia in the large SELECT trial.

Cold F, Winther KH, Pastor-Barriuso R, Rayman MP, Guallar E, Nybo M, et al. Randomised controlled trial of the effect of long-term selenium supplementation on plasma cholesterol in an elderly Danish population. Br J Nutr. 2015;114:1807–18.

Mao J, Pop VJ, Bath SC, Vader HL, Redman CW, Rayman MP. Effect of low-dose selenium on thyroid autoimmunity and thyroid function in UK pregnant women with mild-to-moderate iodine deficiency. Eur J Nutr. 2016;55:55–61.

Ivory K, Prieto E, Spinks C, Armah CN, Goldson AJ, Dainty JR, et al. Selenium supplementation has beneficial and detrimental effects on immunity to influenza vaccine in older adults. Clin Nutr. 2017;36:407–15.

Baum MK, Campa A, Lai S, Sales Martinez S, Tsalaile L, Burns P, et al. Effect of micronutrient supplementation on disease progression in asymptomatic, antiretroviral-naive, HIV-infected adults in Botswana: a randomized clinical trial. JAMA. 2013;310:2154–63.

Sudfeld CR, Aboud S, Kupka R, Mugusi FM, Fawzi WW. Effect of selenium supplementation on HIV-1 RNA detection in breast milk of Tanzanian women. Nutrition. 2014;30:1081–4.

Kamwesiga J, Mutabazi V, Kayumba J, Tayari JC, Uwimbabazi JC, Batanage G, et al. Effect of selenium supplementation on CD4+ T-cell recovery, viral suppression and morbidity of HIV-infected patients in Rwanda: a randomized controlled trial. AIDS. 2015;29:1045–52.

Christensen K, Werner M, Malecki K. Serum selenium and lipid levels: associations observed in the National Health and Nutrition Examination Survey (NHANES) 2011–2012. Environ Res. 2015;140:76–84.

Laclaustra M, Stranges S, Navas-Acien A, Ordovas JM, Guallar E. Serum selenium and serum lipids in US adults: National Health and Nutrition Examination Survey (NHANES) 2003–2004. Atherosclerosis. 2010;210:643–8.

EFSA NDA Panel. Scientific opinion on dietary reference values for selenium. EFSA J. 2014;12:3846.

Clark LC, Combs GF Jr, Turnbull BW, Slate EH, Chalker DK, Chow J, et al. Effects of selenium supplementation for cancer prevention in patients with carcinoma of the skin. A randomized controlled trial. Nutritional Prevention of Cancer Study Group. JAMA. 1996;276:1957–63.

Bailey RL, Gahche JJ, Lentino CV, Dwyer JT, Engel JS, Thomas PR, et al. Dietary supplement use in the United States, 2003–2006. J Nutr. 2011;141:261–6.

Bjelakovic G, Nikolova D, Gluud C. Antioxidant supplements and mortality. Curr Opin Clin Nutr Metab Care. 2014;17:40–4.

Moyer VA; U.S. Preventive Services Task Force. Vitamin, mineral, and multivitamin supplements for the primary prevention of cardiovascular disease and cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160:558–64.

Vinceti M, Filippini T, Del Giovane C, Crespi CM. Exploring inconsistencies between observational and experimental studies of selenium and diabetes risk. Cochrane Database Syst Rev. 2015;Suppl:181.

Birt DF, Julius AD, Runice CE, White LT, Lawson T, Pour PM. Enhancement of BOP-induced pancreatic carcinogenesis in selenium-fed Syrian golden hamsters under specific dietary conditions. Nutr Cancer. 1988;11:21–33.

Chen X, Mikhail SS, Ding YW, Yang G, Bondoc F, Yang CS. Effects of vitamin E and selenium supplementation on esophageal adenocarcinogenesis in a surgical model with rats. Carcinogenesis. 2000;21:1531–6.

Kandas NO, Randolph C, Bosland MC. Differential effects of selenium on benign and malignant prostate epithelial cells: stimulation of LNCaP cell growth by noncytotoxic, low selenite concentrations. Nutr Cancer. 2009;61:251–64.

Kasaikina MV, Turanov AA, Avanesov A, Schweizer U, Seeher S, Bronson RT, et al. Contrasting roles of dietary selenium and selenoproteins in chemically induced hepatocarcinogenesis. Carcinogenesis. 2013;34:1089–95.

National Toxicology Program. Selenium sulfide. Rep Carcinog. 2011;12:376–7.

Novoselov SV, Calvisi DF, Labunskyy VM, Factor VM, Carlson BA, Fomenko DE, et al. Selenoprotein deficiency and high levels of selenium compounds can effectively inhibit hepatocarcinogenesis in transgenic mice. Oncogene. 2005;24:8003–11.

• Peters KM, Carlson BA, Gladyshev VN, Tsuji PA. Selenoproteins in colon cancer. Free Radic Biol Med. 2018 (in press). This paper provides an updated overview of the complex role of selenoproteins in human cancer and of their potential as targets for future clinical interventions.

Rose AH, Bertino P, Hoffmann FW, Gaudino G, Carbone M, Hoffmann PR. Increasing dietary selenium elevates reducing capacity and ERK activation associated with accelerated progression of select mesothelioma tumors. Am J Pathol. 2014;184:1041–9.

Su YP, Tang JM, Tang Y, Gao HY. Histological and ultrastructural changes induced by selenium in early experimental gastric carcinogenesis. World J Gastroenterol. 2005;11:4457–60.

Takata Y, Kristal AR, Santella RM, King IB, Duggan DJ, Lampe JW, et al. Selenium, selenoenzymes, oxidative stress and risk of neoplastic progression from Barrett’s esophagus: results from biomarkers and genetic variants. PLoS One. 2012;7:e38612.

Takata Y, Xiang YB, Burk RF, Li H, Hill KE, Cai H, et al. Plasma selenoprotein P concentration and lung cancer risk: results from a case-control study nested within the Shanghai Men’s Health Study. Carcinogenesis. 2018 (in press).

Vinceti M, Crespi CM, Malagoli C, Del Giovane C, Krogh V. Friend or foe? The current epidemiologic evidence on selenium and human cancer risk. J Environ Sci Health C Environ Carcinog Ecotoxicol Rev. 2013;31:305–41.

Bleys J, Navas-Acien A, Guallar E. Selenium and diabetes: more bad news for supplements. Ann Intern Med. 2007;147:271–2.

Bruhn RL, Stamer WD, Herrygers LA, Levine JM, Noecker RJ. Relationship between glaucoma and selenium levels in plasma and aqueous humour. Br J Ophthalmol. 2009;93:1155–8.

Moyad MA. Heart healthy = prostate healthy: SELECT, the symbolic end of preventing prostate cancer via heart unhealthy and over anti-oxidation mechanisms? Asian J Androl. 2012;14:243–4.

Ashton K, Hooper L, Harvey LJ, Hurst R, Casgrain A, Fairweather-Tait SJ. Methods of assessment of selenium status in humans: a systematic review. Am J Clin Nutr. 2009;89:2025S–39S.

Combs GF Jr. Biomarkers of selenium status. Nutrients. 2015;7:2209–36.

• Michalke B, Willkommen D, Drobyshev E, Solovyev N. The importance of speciation analysis in neurodegeneration research. Trends Anal Chem. 2018;104:160–70. This review illustrates selenium speciation issues with particular reference to neurodegenerative diseases, with emphasis on selenium measurements in cerebrospinal fluid and brain.

Fairweather-Tait SJ, Collings R, Hurst R. Selenium bioavailability: current knowledge and future research requirements. Am J Clin Nutr. 2010;91:1484S–91S.

Filippini T, Cilloni S, Malavolti M, Violi F, Malagoli C, Tesauro M, et al. Dietary intake of cadmium, chromium, copper, manganese, selenium and zinc in a northern Italy community. J Trace Elem Med Biol. 2018;50:508–17.

Filippini T, Ferrari A, Michalke B, Grill P, Vescovi L, Salvia C, et al. Toenail selenium as an indicator of environmental exposure: a cross-sectional study. Mol Med Rep. 2017;15:3405–12.

Behne D, Kyriakopoulos A, Scheid S, Gessner H. Effects of chemical form and dosage on the incorporation of selenium into tissue proteins in rats. J Nutr. 1991;121:806–14.

Behne D, Gessner H, Kyriakopoulos A. Information on the selenium status of several body compartments of rats from the selenium concentrations in blood fractions, hair and nails. J Trace Elem Med Biol. 1996;10:174–9.

Salbe AD, Levander OA. Effect of various dietary factors on the deposition of selenium in the hair and nails of rats. J Nutr. 1990;120:200–6.

Salbe AD, Levander OA. Comparative toxicity and tissue retention of selenium in methionine-deficient rats fed sodium selenate or L-selenomethionine. J Nutr. 1990;120:207–12.

Shiobara Y, Yoshida T, Suzuki KT. Effects of dietary selenium species on Se concentrations in hair, blood, and urine. Toxicol Appl Pharmacol. 1998;152:309–14.

Hawkes WC, Richter BD, Alkan Z, Souza EC, Derricote M, Mackey BE, et al. Response of selenium status indicators to supplementation of healthy North American men with high-selenium yeast. Biol Trace Elem Res. 2008;122:107–21.

Vinceti M, Rovesti S, Bergomi M, Vivoli G. The epidemiology of selenium and human cancer. Tumori. 2000;86:105–18.

Solovyev N, Vinceti M, Grill P, Mandrioli J, Michalke B. Redox speciation of iron, manganese, and copper in cerebrospinal fluid by strong cation exchange chromatography—sector field inductively coupled plasma mass spectrometry. Anal Chim Acta. 2017;973:25–33.

Michalke B, Solovyev N, Vinceti M. Se-speciation investigations at neural barrier (NB). In: 11th International Symposium on Selenium in Biology and Medicine, Stockholm, 2017.

Labunskyy VM, Lee BC, Handy DE, Loscalzo J, Hatfield DL, Gladyshev VN. Both maximal expression of selenoproteins and selenoprotein deficiency can promote development of type 2 diabetes-like phenotype in mice. Antioxid Redox Signal. 2011;14:2327–36.

Labunskyy VM, Hatfield DL, Gladyshev VN. Selenoproteins: molecular pathways and physiological roles. Physiol Rev. 2014;94:739–77.

Mita Y, Nakayama K, Inari S, Nishito Y, Yoshioka Y, Sakai N, et al. Selenoprotein P-neutralizing antibodies improve insulin secretion and glucose sensitivity in type 2 diabetes mouse models. Nat Commun. 2017;8:1658.

Tsuji PA, Carlson BA, Yoo MH, Naranjo-Suarez S, Xu XM, He Y, et al. The 15kDa selenoprotein and thioredoxin reductase 1 promote colon cancer by different pathways. PLoS One. 2015;10:e0124487.

Chandra RK, Hambraeus L, Puri S, Au B, Kutty KM. Immune response of healthy volunteers given supplements of zinc or selenium. FASEB J. 1993;A23.

Galan-Chilet I, Tellez-Plaza M, Guallar E, De Marco G, Lopez-Izquierdo R, Gonzalez-Manzano I, et al. Plasma selenium levels and oxidative stress biomarkers: a gene-environment interaction population-based study. Free Radic Biol Med. 2014;74:229–36.

Ravn-Haren G, Krath BN, Overvad K, Cold S, Moesgaard S, Larsen EH, et al. Effect of long-term selenium yeast intervention on activity and gene expression of antioxidant and xenobiotic metabolising enzymes in healthy elderly volunteers from the Danish Prevention of Cancer by Intervention by Selenium (PRECISE) pilot study. Br J Nutr. 2008;99:1190–8.

Stranges S, Sieri S, Vinceti M, Grioni S, Guallar E, Laclaustra M, et al. A prospective study of dietary selenium intake and risk of type 2 diabetes. BMC Public Health. 2010;10:564.

Ferlemi AV, Mermigki PG, Makri OE, Anagnostopoulos D, Koulakiotis NS, Margarity M, et al. Cerebral area differential redox response of neonatal rats to selenite-induced oxidative stress and to concurrent administration of highbush blueberry leaf polyphenols. Neurochem Res. 2015;40:2280–92.

Naderi M, Salahinejad A, Jamwal A, Chivers DP, Niyogi S. Chronic dietary selenomethionine exposure induces oxidative stress, dopaminergic dysfunction, and cognitive impairment in adult zebrafish (Danio rerio). Environ Sci Technol. 2017;51:12879–88.

Pettem CM, Briens JM, Janz DM, Weber LP. Cardiometabolic response of juvenile rainbow trout exposed to dietary selenomethionine. Aquat Toxicol. 2018;198:175–89.

Plateau P, Saveanu C, Lestini R, Dauplais M, Decourty L, Jacquier A, et al. Exposure to selenomethionine causes selenocysteine misincorporation and protein aggregation in Saccharomyces cerevisiae. Sci Rep. 2017;7:44761.

Van Hoewyk D. Defects in endoplasmic reticulum-associated degradation (ERAD) increase selenate sensitivity in Arabidopsis. Plant Signal Behav. 2018;13:e1171451.

Hafeman DG, Sunde RA, Hoekstra WG. Effect of dietary selenium on erythrocyte and liver glutathione peroxidase in the rat. J Nutr. 1974;104:580–7.

Macallan DC, Sedgwick P. Selenium supplementation and selenoenzyme activity. Clin Sci. 2000;99:579–81.

Lobanov AV, Hatfield DL, Gladyshev VN. Reduced reliance on the trace element selenium during evolution of mammals. Genome Biol. 2008;9:R62.

Algotar AM, Behnejad R, Singh P, Thompson PA, Hsu CH, Stratton SP. Effect of selenium supplementation on proteomic serum biomarkers in elderly men. J Frailty Aging. 2015;4:107–10.

Naderi M, Keyvanshokooh S, Salati AP, Ghaedi A. Proteomic analysis of liver tissue from rainbow trout (Oncorhynchus mykiss) under high rearing density after administration of dietary vitamin E and selenium nanoparticles. Comp Biochem Physiol Part D Genomics Proteomics. 2017;22:10–9.

Sinha R, Sinha I, Facompre N, Russell S, Somiari RI, Richie JP Jr, et al. Selenium-responsive proteins in the sera of selenium-enriched yeast-supplemented healthy African American and Caucasian men. Cancer Epidemiol Biomark Prev. 2010;19:2332–40.

Sun H, Dai H, Wang X, Wang G. Physiological and proteomic analysis of selenium-mediated tolerance to Cd stress in cucumber (Cucumis sativus L.). Ecotoxicol Environ Saf. 2016;133:114–26.

Usami M, Mitsunaga K, Nakazawa K, Doi O. Proteomic analysis of selenium embryotoxicity in cultured postimplantation rat embryos. Birth Defects Res B Dev Reprod Toxicol. 2008;83:80–96.

Rayman MP, Infante HG, Sargent M. Food-chain selenium and human health: spotlight on speciation. Br J Nutr. 2008;100:238–53.

Frisbie SH, Mitchell EJ, Sarkar B. Urgent need to reevaluate the latest World Health Organization guidelines for toxic inorganic substances in drinking water. Environ Health. 2015;14:63.

Genthe B, Kapwata T, Le Roux W, Chamier J, Wright CY. The reach of human health risks associated with metals/metalloids in water and vegetables along a contaminated river catchment: South Africa and Mozambique. Chemosphere. 2018;199:1–9.

Aakre I, Henjum S, Folven Gjengedal EL, Risa Haugstad C, Vollset M, Moubarak K, et al. Trace element concentrations in drinking water and urine among Saharawi women and young children. Toxics. 2018;6:E40.

Cui Z, Huang J, Peng Q, Yu D, Wang S, Liang D. Risk assessment for human health in a seleniferous area, Shuang’an, China. Environ Sci Pollut Res Int. 2017;24:17701–10.

Golubkina N, Erdenetsogt E, Tarmaeva I, Brown O, Tsegmed S. Selenium and drinking water quality indicators in Mongolia. Environ Sci Pollut Res Int. 2018;25:28619–27.

Hoover JH, Coker E, Barney Y, Shuey C, Lewis J. Spatial clustering of metal and metalloid mixtures in unregulated water sources on the Navajo Nation—Arizona, New Mexico, and Utah, USA. Sci Total Environ. 2018;633:1667–78.

Munyangane P, Mouri H, Kramers J. Assessment of some potential harmful trace elements (PHTEs) in the borehole water of Greater Giyani, Limpopo Province, South Africa: possible implications for human health. Environ Geochem Health. 2017;39:1201–19.

Stillings LL. Selenium. In: Schulz KJ, De Young JH Jr, Seal RR II, Bradley DC, editors. Critical mineral resources of the United States: economic and environmental geology and prospects for future supply. US Geological Survey Professional Paper 1802. Reston: US Geological Survey; 2017. p. 797.

De Benedetti S, Lucchini G, Del Bo C, Deon V, Marocchi A, Penco S, et al. Blood trace metals in a sporadic amyotrophic lateral sclerosis geographical cluster. Biometals. 2017;30:355–65.

Ellwanger JH, Franke SI, Bordin DL, Pra D, Henriques JA. Biological functions of selenium and its potential influence on Parkinson’s disease. An Acad Bras Cienc. 2016;88:1655–74.

Morris JS, Crane SB. Selenium toxicity from a misformulated dietary supplement, adverse health effects, and the temporal response in the nail biologic monitor. Nutrients. 2013;5:1024–57.